Abstract

Small and medium enterprises (SMEs) represent a large segment of the global economy. As such, SMEs face many of the same ethical and regulatory considerations around Artificial Intelligence (AI) as other businesses. However, due to their limited resources and personnel, SMEs are often at a disadvantage when it comes to understanding and addressing these issues. This literature review discusses the status of ethical AI guidelines released by different organisations. We analyse the academic papers that address the private sector, in addition to the guidelines released directly by the private sector, to better understand responsible AI guidelines within the private sector. With this review we aim to provide a comprehensive analysis of the current state of ethical AI guideline development and adoption, and to identify gaps in knowledge and best practices. By synthesizing existing research and insights, such a review could provide a road map for SMEs to adopt ethical AI guidelines and develop the necessary readiness for responsible AI implementation. Additionally, the review could inform policy and regulatory frameworks that promote ethical AI development and adoption, thereby creating a supportive ecosystem for SMEs to thrive in the AI landscape. Our findings reveal a need to support SMEs in embracing responsible and ethical AI adoption by (1) building more tailored guidelines that suit different sectors instead of one-size-fits-all guidelines; (2) building a trusted accreditation system for organisations; (3) giving up-to-date training to employees and managers about AI ethics; (4) increasing awareness of explainable AI systems; and (5) promoting risk-based assessments rather than principle-based assessments.

1 Introduction

The increasing complexity of AI-powered systems has reduced the need for human intervention in their design and deployment, impacting critical domains such as medicine, law, and defense (Barredo Arrieta et al., 2020). Consequently, it is crucial to understand automated decisions and establish guidelines for human interaction with AI systems. This has led to the emergence of numerous regulations, frameworks, and guidelines aimed at promoting trustworthy AI (Floridi, 2019; Hagendorff, 2020; Morley et al., 2019).

Our background research on AI ethics examines ethical guidelines, their adoption by different companies and sectors, and the role of small and medium-sized enterprises (SMEs) in addressing AI ethics. We have identified two key observations. The first is the close relationship between “Ethical AI” or “AI ethics” and “Trustworthy AI” or “Responsible AI”. The second is the limited presence of SMEs in AI ethics discussions. To address both observations, we posed the following research questions: What recommendations exist for responsible, ethical, trustworthy AI that governments and the private sector can use? What are the guidelines the private sector is following on developing or adopting AI ethics? And what AI guidelines and/or recommendations exist for SMEs to build ethical AI? To answer the research questions, we conducted a review to identify the recommendations and guidelines for trustworthy, ethical or responsible AI within the private sector. In addition, we evaluate the position of SMEs in these guidelines and recommendations. The remainder of the paper consists of four main sections. Section 2 summarises our background research, in which we discuss international, regional and institutional guidelines. Section 3 presents the review process. Section 4 presents the results of the review. Finally, Sect. 5 discusses the recommendations to the private and public sectors for adopting ethical and responsible AI systems.

2 Background

There is still a need for clearer definitions and standardized terminology in the AI ethics field. Many of the terms are used interchangeably; hence, we briefly recall the basic meanings of the most used concepts. According to the Cambridge Dictionary, a “principle” means “a basic idea or rule that explains or controls how something happens or works” or, from a moral perspective, “a moral rule or standard of good behavior or fair dealing”. A “guideline” means “information intended to advise people on how something should be done or what something should be”, and a “recommendation” is a “statement that someone or something would be good or suitable for a particular job or purpose, or the act of making such a statement”. In this review, by AI ethics guidelines, AI recommendations or AI principles we mean any information that advises on the design and outcomes of artificial intelligence systems and is intended to ensure that AI systems behave in a way that is ethical, responsible, transparent, and trustworthy. An illustrative example pertaining to the subject matter of this paper is the EU AI Act, which was proposed in 2021 and subsequently approved in 2023. In terms of guidelines, a notable example is the Frequently Asked Questions (FAQs) document on the EU AI Act, which serves as a comprehensive guide produced by the AI and Data Working Group of the Confederation of European Data Protection Organizations.

Earlier, in its Communications of 25 April 2018 and 7 December 2018, the European Commission set out its vision for AI, which supports ethical, secure and cutting-edge AI made in Europe. To support the implementation of this vision, the Commission established the High-Level Expert Group on Artificial Intelligence (AI-HLEG) (AI-HLEG, 2019, p. 4). In 2019, the AI-HLEG published the ethics guidelines for trustworthy AI. According to the guidelines (AI-HLEG, 2019, p. 2):

Trustworthy AI has three components, which should be met throughout the system’s entire life cycle: (1) it should be lawful, complying with all applicable laws and regulations (2) it should be ethical, ensuring adherence to ethical principles and values and (3) it should be robust, both from a technical and social perspective since, even with good intentions, AI systems can cause unintentional harm. Each component is necessary, but more is needed to achieve Trustworthy AI. Ideally, all three components work in harmony and overlap in their operation. If, in practice, tensions arise between these components, society should endeavour to align them.

In April 2021, the European Commission proposed new actions following a risk-based approach to position the EU as a global hub for trustworthy AI (European Commission, 2021). Under the proposed risk-based approach, the Commission classified AI systems into unacceptable, high, limited, and minimal risk, as shown in Fig. 1.

By April 2020, 173 guidelines from different sectors had been submitted to the AI Ethics Guidelines Global Inventory (Algorithmic Watch, 2020). As shown in Fig. 2, 167 guidelines are classified in the inventory according to their sectors. Figure 2 reflects the active role of the governmental, civil society and private sectors in building and publishing guidelines, compared to the academic sector.

Between October 2019 and December 2019, Deloitte Insights examined the gap between AI adopters’ concerns and how they prepare to face those concerns by surveying 2,737 IT and business executives from nine countries: Australia, Canada, China, France, Germany, Japan, the Netherlands, the United Kingdom and the United States (Deloitte, 2020). According to the report, regardless of how experienced the adopting companies are, only around a third of them are working to manage the risks of AI implementation or are aware of AI ethics (Deloitte, 2020). The gap between AI adopters’ concerns and their activities to address AI risks is expected to widen due to the increase in investment in AI companies (Razon, 2021). On the other hand, the fewer ethical AI models we develop, the lower the AI adoption and the lower the confidence of investors and research funders in AI projects (Morley et al., 2019). To take a closer look at the status of AI ethics efforts, in the following section we briefly discuss examples of the work taking place at the international level, which is helping to lay the groundwork for responsible AI adoption.

2.1 International AI Ethics Guidelines, Recommendations and Tools

According to Floridi (2019), establishing clear and publicly accepted ethical guidelines might help private organisations subscribe to those guidelines instead of cooking up their own ethics. The World Government Summit (2019) discussed whether a global AI ethics framework could be a solution for maintaining responsible AI models. The summit highlighted the importance of AI awareness at the institutional level, which is currently insufficient. In addition, the summit stressed designing AI systems that are transparent, explainable, designed on human-first and common-sense principles, interpretable, auditable, accountable, and built on unbiased data (World Government Summit, 2019).

At the international level, there are different guidelines and tools to support the AI industry, such as the Open Data Barometer. The barometer measures how governments publish and use open data for accountability, innovation, and social impact. The barometer is based on the Open Data Charter, a collaboration between more than 150 governments and organisations to promote policies that enable governments to collect, share, and use well-governed data. Another tool targeting governments is the AI Readiness Index, first released in 2017 by Oxford Insights. The index started with the question: How ready is a government to implement AI to deliver public services to its citizens? The index sets dimensions for the responsible use of AI. The 2020 AI Readiness Index shows that Nordic and Baltic countries currently lead in the responsible use of AI, with Estonia, Norway, Finland and Sweden all in the top five. The US and the UK, both world leaders in government AI readiness, score noticeably lower in the responsible use of AI. Meanwhile, India, Russia and China all score near the bottom of the Sub-Index (Oxford Insights, 2020).

An example of an active government focusing on responsible AI practices is Canada. In 2017, the Government of Canada developed a Pan-Canadian Artificial Intelligence Strategy, the world’s first national AI strategy (CIFAR, 2022). The government has built an Algorithmic Impact Assessment (AIA) tool, a mandatory risk assessment tool that has been co-created by different stakeholders and made available to the public. The tool is a questionnaire that determines the impact level of an automated decision system. The assessment scoring covers many factors, including systems design, algorithm, decision type, impact and data (Government of Canada, 2022).

Another critical international player is the International Telecommunication Union (ITU). ITU has launched AI for Good, an active platform where AI stakeholders, innovators and decision-makers connect to build AI solutions that advance the United Nations Sustainable Development Goals (UN SDGs). ITU is also co-leading another initiative with UNESCO to combine the UN’s ethical and technological pillars (UNSCEB, 2022). The World Economic Forum (WEF) is another player in AI promotion and readiness. To help governments harness the potential of AI while addressing its risks, WEF has launched an actionable toolbox, AI Procurement in a Box. Organisations from the UK, Bahrain and the UAE have already used the toolbox (World Economic Forum, 2020).

2.2 Regulations, Guidance and Standards

The European Union’s General Data Protection Regulation (GDPR) specifies a right to obtain meaningful information about the logic involved in an automated decision, commonly interpreted as a right to an explanation for users affected by such a decision (Parliament and Council of the European Union, 2016). In 2020, the ICO and the Alan Turing Institute published joint guidance on explaining decisions. The guidance provides practical advice on the explainability expected by the UK data protection regulator and tackles issues such as accountability and legal matters under the GDPR. Moreover, the guidance advises organisations that use automated or AI-assisted decision systems to involve product managers, developers, those who use the AI systems, compliance teams, and senior management in every part of the decision-making pipeline. At the same time, the guidance recommends setting policies and procedures for all employees who deal with AI systems, written so that both technical and non-technical teams can understand them.

Standardization, according to the EU AI Act, should play a crucial role in providing technical solutions to providers to ensure compliance with the Regulation (EU Commission, 2021). In that context, the Joint Research Centre (JRC), the European Commission’s science and knowledge service, conducted a study and analysis mapping AI standards onto the requirements introduced by the proposed AI Act. The study provides a comprehensive survey and analysis of international AI standardization initiatives relevant to high-risk applications. It assessed and mapped the current standards from ISO/IEC, ETSI, ITU-T, and IEEE in relation to the requirements of the proposed EU Artificial Intelligence Act.

The mapped requirements, as shown in Fig. 3, are data and governance; technical documentation; record keeping; transparency and provision of information to users; human oversight; accuracy, robustness and cyber security; risk management system; and quality management system. According to Nativi and De Nigris (2021), data and governance means that the data used to train the models of high-risk AI systems shall meet a set of quality criteria. Technical documentation for high-risk AI must be created before use and kept updated. Record keeping requires high-risk AI systems to have automatic event-logging abilities, and these logs must follow common standards while the system operates. Transparency means that users can understand the outputs of the systems and use them properly. Human oversight requires that AI systems have user-friendly interfaces for effective human oversight during their use, and that proper training be given to anyone interacting with the system. AI systems should achieve a specific level of accuracy, robustness and cyber security throughout their lifecycle. A risk management system must be established and maintained for high-risk AI systems. A quality management system requires conducting an assessment procedure, documenting relevant information, and implementing a strong post-market monitoring system. It is worth highlighting that, while existing standards go some way towards addressing certain requirements such as data governance, model documentation and robustness, there are still gaps to be filled (Nativi & De Nigris, 2021).

Fig. 3 Overall representation of the AI standards mapped to the EU AI Act requirements, adapted from Nativi and De Nigris (2021)

While international standards offer some level of coverage for the requirements outlined in the legal text for high-risk AI systems, it is important to note that this coverage is only partial. On the other hand, there are significant areas where international efforts do not fully align with the provisions of the AI Act, particularly concerning the types of risks considered, and there are instances where the coverage provided by international standards is inadequate (Soler Garrido et al., 2023).

Access to standards and best practices would help SMEs ensure that their AI systems meet requirements in a compliant and cost-effective way, reducing the need for SMEs to develop their own solutions from scratch. However, a downside of the majority of the guidelines is the low involvement of SMEs (Crockett et al., 2021). Clark (2022) estimates that there were over 300 million SMEs globally in 2021. Given the lack of attention paid to SMEs in academic publications and their fundamental role in the economy, we decided to conduct a systematic review of the academic publications and of the guidelines published by the private sector to determine the practical recommendations for ethical, responsible, or trustworthy AI within the private sector, especially SMEs.

3 Literature Review

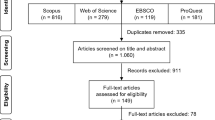

The research work we present is based on a literature review (LR) method widely used in medical sciences and adapted to software engineering by Kitchenham and Charters (2007). The review allows us to find the best available evidence by using methods to identify, evaluate and synthesise relevant studies on a research topic (Zapata, 2015). Moreover, reviews present “a comprehensive summary of research-based knowledge that can aid practitioners and policymakers in decision-making” (Brettle, 2009, p. 43). With knowledge production in the AI ethics field accelerating rapidly, it has become hard to keep up with state-of-the-art research, develop well-researched guidelines, and assess the collective evidence in the field. For this reason, the literature review as a research methodology is the most relevant to the purpose of this study (Snyder, 2019). We started our review by deriving the research questions using the PICOC criterion introduced by Petticrew and Roberts and discussed by Kitchenham and Charters (2007). According to the Centre of Evidence-Based Management, PICOC is a method used to describe the five elements of a searchable question. “PICOC” is an acronym for Population, Intervention, Comparison, Outcome and Context. Table 1 shows the results obtained by applying the PICOC criterion in our research.

The aim of the paper is to evaluate how the private sector, especially SMEs, is addressing AI ethics. As a result, we came up with the following research questions:

- Q1: What recommendations exist for responsible, ethical, trustworthy AI that governments and the private sector can use?
- Q2: What are the guidelines the private sector is following on developing or adopting AI ethics?
- Q3: What AI guidelines and/or recommendations exist for SMEs to build ethical AI?

3.1 Search Terms

Based on the background research and the research questions, search terms were defined, and the following search string was derived: (Interpretability OR trust OR trustworthy OR trustworthiness OR transparency OR ethics OR responsible OR explainable OR explainability OR explicability) AND (AI OR “artificial intelligence” OR XAI) AND (company OR companies OR organisation OR organisation OR SME OR SMEs OR private sector OR enterprise OR industry).
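To make the structure of the search string explicit, the following minimal sketch assembles it from its three term groups: terms within a group are joined with OR, and the groups are joined with AND. The function names `or_group` and `build_query` are illustrative and not part of the review protocol. Quoting every multi-word term (including "private sector", which is unquoted in the string above) is an assumption about how a database would expect phrases, and the repeated term is listed once. Each database has its own field syntax, so the output would likely need adapting before use.

```python
# Term groups copied from the search string reported in the text.
QUALITY_TERMS = [
    "Interpretability", "trust", "trustworthy", "trustworthiness",
    "transparency", "ethics", "responsible", "explainable",
    "explainability", "explicability",
]
AI_TERMS = ["AI", "artificial intelligence", "XAI"]
ORGANISATION_TERMS = [
    "company", "companies", "organisation", "SME", "SMEs",
    "private sector", "enterprise", "industry",
]


def or_group(terms):
    """Join one group of terms with OR and wrap it in parentheses.

    Assumption: multi-word terms are wrapped in double quotes so that a
    database treats them as a phrase.
    """
    rendered = [f'"{term}"' if " " in term else term for term in terms]
    return "(" + " OR ".join(rendered) + ")"


def build_query(*groups):
    """Join the OR-groups with AND, mirroring the structure in the text."""
    return " AND ".join(or_group(group) for group in groups)


query = build_query(QUALITY_TERMS, AI_TERMS, ORGANISATION_TERMS)
print(query)
```

Keeping the groups as separate lists makes it easy to add a synonym to one group without touching the others, and to document exactly which terms were used in each database.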

3.2 Search Process

Table 2, adapted from Kitchenham and Charters (2007), presents the search process documentation. According to Kitchenham and Charters (2007), researchers can undertake initial searches for primary studies using digital libraries, but a full review needs more. Hence, other sources of evidence must also be searched, sometimes manually, to avoid publication bias. We applied the search string in three databases: Web of Science, IEEE and Scopus. We included grey literature by adding the guidelines submitted by the private sector to the AI Ethics Guidelines Global Inventory. As a result, we obtained 184 research documents from the three databases and 41 documents submitted by the private sector to the Global Inventory.

The titles and abstracts of the 184 research documents were reviewed to select the documents relevant to the study. The selection was done by the main author under the supervision of the second author. After removing the duplicates, we were left with 106 documents that underwent the entire reviewing process. All 41 guidelines submitted to the Global Inventory were thoroughly screened and reduced to 25 guidelines that serve the purpose of this study.

4 Results

In total, we screened and reviewed 131 documents published by industry and academia. After the screening process, the 106 academic publications were reduced to 41 documents that were considered relevant to the research questions.

We included in this stage research articles that relate to an industry or at least propose practical responsible AI methodologies that an industry can adopt. We reduced the guidelines submitted by the private sector in the Global Inventory to 25, after removing broken links and entities providing a very generic code of ethics. For the 25 guidelines in the Global Inventory, we present distribution of countries and sectors in Figs. 4 and 5 respectively. Figures 6 and 7 show the distribution according to countries and sectors of the academic papers which we considered for full reviewing.
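The document counts reported for the search and screening stages can be tallied with a short script. This is a minimal sketch for checking the arithmetic, not part of the review protocol: the `Stage` class and the stage labels are illustrative names, and the totals of 225 identified and 66 included documents are sums derived here from the reported figures rather than numbers stated in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """Document counts at one stage of the review (illustrative structure)."""
    label: str
    academic: int    # documents from Web of Science, IEEE and Scopus
    guidelines: int  # private-sector guidelines from the Global Inventory

    @property
    def total(self) -> int:
        return self.academic + self.guidelines


# Counts as reported in the text.
STAGES = [
    Stage("identified", 184, 41),
    Stage("screened and reviewed", 106, 25),
    Stage("included", 41, 25),
]


def check_funnel(stages):
    """Raise ValueError if any count grows from one stage to the next."""
    for earlier, later in zip(stages, stages[1:]):
        if later.academic > earlier.academic or later.guidelines > earlier.guidelines:
            raise ValueError(f"counts increase between {earlier.label} and {later.label}")


check_funnel(STAGES)
for stage in STAGES:
    print(f"{stage.label:<22} academic={stage.academic:>3} "
          f"guidelines={stage.guidelines:>2} total={stage.total:>3}")
```

The second stage reproduces the 131 screened and reviewed documents mentioned above (106 academic publications plus 25 guidelines).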

In Figs. 4 and 5 we included three entities that are not companies but support the private sector and submitted guidelines to the Inventory. The entities are the working group “Vernetzte Anwendungen und Plattformen für die digitale Gesellschaft” from Germany, “Partnership on AI”, which is a global organisation, and “AI for Latin America”. Table 3 shows the classification of the companies’ guidelines as submitted to the Global Inventory, with their countries. It is worth mentioning that the companies presented in Table 3 are large companies with more than 250 employees. We did not find any submissions from SMEs. The countries column in Table 3 follows the companies’ submissions in the Global Inventory. Table 4 shows the classification of the academic publications according to the research questions. Tables 5 and 6 show the classification of the academic publications according to countries and sectors respectively.

We considered the classification according to countries and sectors important for future research work and for practitioners. The classification of the papers by industrial topic reflects which industrial sectors are missing from the AI ethics discussion and which are receiving more attention. For example, in academic publications the health and financial sectors are gaining more attention than the transportation sector.

Around 16.7% of the academic papers are not related to a specific industry, but propose responsible AI guidelines that can be developed and adopted by the private sector in general. Around half of the publications do not relate to a specific geographical area. Those general publications are a good base for researchers and the academic sector to build a comprehensive set of guidelines for SMEs.

By showing the classification of guidelines according to countries, we aim to spark a more focused AI ethics discussion among researchers and practitioners interested in a certain geographical area. It is worth noting that the guidelines submitted to the Global Inventory answer the second research question. The insights we gained from answering the research questions are discussed further in the next section. The answer to the first question provides a set of suggestions that can support any organisation looking to adopt responsible AI practices.

In answering the second question, we covered the guidelines provided by big companies, with the aim of presenting working models of responsible AI practices to organisations, especially SMEs. The answer to the third question serves different stakeholders, including researchers, SMEs and agencies that serve SMEs, as we summarise and highlight the key findings from the published academic work featuring SMEs.

5 Discussion

Who should be responsible in case of damage or harm caused by an AI system? Is it fair to hold the developers of an AI system responsible for that system? Are the current AI guidelines assuring the development of ethical practices? Or are ethics simply serving the purpose of calming critical voices (Hagendorff, 2020)? In other words, there are concerns not only about the algorithms but also about the guidelines and regulations governing the ethical process associated with the algorithms (Morley et al., 2019). Hagendorff (2020) highlighted a group of concerns associated with the current guidelines: most of the guidelines lack diversity in the background of the research team, as the majority are written solely by researchers with backgrounds in computer science; the current guidelines hardly discuss the possibility of political abuse, such as automated fake news, deep fakes, election fraud and the like; and they rarely discuss public-private partnerships and how funding institutions affect the research outcomes of the guidelines.

As with any human partnership, “how to trust AI?” or, alternatively, “should we trust AI?” have become urgent questions (Bryson, 2018; Hengstler et al., 2016; Ryan, 2020). Although different organisations have released guidelines taking the position that AI can be trusted (Ryan, 2020), some scholars argue that we should be cautious in attaching the notion of trust to AI, as trusting AI might be misplaced and can mislead individuals into downplaying the responsibilities of the companies behind those technologies (Bryson, 2018; Hagendorff, 2020; Ryan, 2020).

To summarise the current situation, there are many guidelines. However, very few systems are in place to check for compliance with these guidelines (Morley et al., 2019), which has created space for those producing, purchasing, or using AI systems to engage in (1) ethics shopping; (2) ethics blue-washing; (3) ethics lobbying; (4) ethics dumping; and (5) ethics shirking (Floridi, 2019). How the current guidelines can be harmonised with corporate law or put into action remains an open question (Hickman & Petrin, 2020; Vică et al., 2021). Issues such as fairness, bias, and accountability are already fairly well discussed in the literature and in general guidelines for building ethical, trustworthy AI systems. The health and financial sectors are the two most represented sectors in the academic work, as presented in Fig. 5 and Table 6.

Some sectors need more attention, such as education and investment. Table 3 and Fig. 4 show how the guidelines are led by big companies, with SMEs absent from the responsible AI discussions. Another important observation from the results section is the lack of presence of the Global South. To avoid a geographical divide in the future, governments and researchers need to give more attention to the Global South. The group of papers classified as general does not target a specific industry; however, those papers form a good base for industry researchers who are interested in developing AI ethics guidelines for SMEs.

We refer to the unclassified articles as General. By general, we mean the proposed guidelines and recommendations that can benefit any organisation regardless of sector, as in Clarke (2019), Shneiderman (2020), and Zhang and Gao (2019). In the articles classified according to sectors, researchers focused on a specific field. For example, Li et al. (2021) focused on the petrochemical sector in China. Sharma et al. (2022) focused on implementing concepts such as fairness and accountability in the hospitality industry. Pillai and Matus (2020) identified social, financial and legal risks and challenges associated with AI adoption in the construction industry. Wasilow and Thorpe (2019) focused on the defence sector from the Canadian perspective. Alami et al. (2020), Larson et al. (2020), and Roski et al. (2021) focused on the health sector.

Interestingly few studies focused on the end-user; Degas et al. (2022) highlighted the importance of focusing on the end user. Daudt et al. (2021) compared the different types of explainability methods, concluding that few works seriously address the problem of measuring the understanding of the explanations and the quality of the explanations for AI system users. Qin et al. (2020) is one of the few works that focused on what it means to trust AI in education systems from the users’ perspective either educators, parents or students.

Generally, most of the researchers tried to give practical recommendations; however, those recommendations are scattered across papers. In this section, by answering the research questions, we gather and group the scattered recommendations suggested by other researchers, with the aim of giving organisations and practitioners a base of executable recommendations and guidelines for adopting responsible AI practices. We also analyse and reflect on the position of the private sector and SMEs with respect to responsible AI guidelines and practices.

5.1 RQ1: What Recommendations Exist for Responsible, Ethical, Trustworthy AI That Governments and the Private Sector Can Use?

By answering this research question we present in the following subsections a list of recommendations that can catalyse the readiness of different organisations including SMEs for a responsible and ethical AI adoption. Those recommendations are extracted and grouped from various academic publications and generally adopted by the private sector. This list is recommended for SMEs.

5.1.1 Accreditation and Certification

One of the general recommendations when building trustworthy AI is trusting the institutions behind the technology itself (Hengstler et al., 2016). To achieve that level of institutional trust, one possible solution is establishing a responsible AI accreditation process and certification issued by independent, unbiased organisations (Roski et al., 2021; Shneiderman, 2020).

We believe that involving governments, academia, and SMEs in the development of accreditation and certification processes is important. This collaboration ensures that the processes are effective and meet the needs of all parties. By working together, we can use the expertise and resources of all parties to create reliable systems for accreditation and certification.

5.1.2 Up-to-date Training

Another recommendation is giving up-to-date training to the employees and stakeholders in contact with AI systems in different sectors (Alami et al., 2020; Fulmer et al., 2021; Ibáñez & Olmeda, 2021; Li et al., 2021), including investors (Minkkinen et al., 2022), and addressing the gap between academic research and practitioners’ needs (Rakova et al., 2021). The need for constant training is further supported by the fact that 79% of tech workers report that they would like more practical, down-to-earth instructions on how to deal with ethical issues within the AI development and adoption cycle (Vică et al., 2021). Lapińska et al. (2021) examined trust in AI from the employees’ perspective and confirmed the importance of updating managers through training. The study surveyed 428 employees from the energy and chemical sectors in Poland and found that managers play a vital role in building a culture of trust in AI within the company and among the employees.

Hence, it is crucial to keep managers updated with the necessary guidelines on how to build internal capacities, and to establish collaborative programmes between academia and SMEs. Our rationale for collaboration between academia and SMEs on training content, design, and methods is to ensure that the approach is well informed and effective. This sort of collaboration can leverage the expertise and resources of all parties involved to achieve the best possible outcomes.

5.1.3 Risk-Based Assessments

Despite the consensus on the importance of AI ethics, the current guidelines focus on ethical analysis, which does not deliver what organisations need (Clarke, 2019). Moreover, the extra focus of researchers on ethics opens the doors not only for ethics washing but also for technology firms and governments to use ethics as a locus of power (Vică et al., 2021). Hence, a more practical solution suggested by Clarke (2019) when assessing an AI system is to use a risk-based assessment instead of an ethical one. Giving importance to the risks in assessing an AI system was seconded by Fulmer et al. (2021) and Golbin et al. (2020). Adams and Hagras (2020) also focused on risk assessment. They proposed a risk management framework for implementing AI in banking with consideration of explainability and outlined the implementation requirements to enable AI to achieve positive outcomes for financial institutions and the customers, markets and societies they serve.

We propose that a toolbox for assessing risks be developed through collaboration between academia and SMEs, to ensure that the toolbox is well informed and effective in addressing the needs of all parties involved. This collaborative approach would give SMEs a comprehensive and reliable tool for risk assessment.

5.1.4 Building Explainable and Interpretable Models

Embedding explainable and interpretable models in AI systems has been widely discussed and recommended in the literature for different industries. Nicotine (2020) discussed and recommended explainable and interpretable models for the French railway industry. Ferreyra et al. (2019) focused on constructing an explainable AI framework to assist workforce allocation in the telecommunications industry. Milosevic (2021) discussed explainable AI for the healthcare industry. Ahmed et al. (2022) focused on explainable AI-based approaches and their importance in Industry 4.0. To develop an explainable model, Engers and Vries (2019) highlighted the importance of interaction between the explainer and the explainee and how good explanations might increase the user’s interaction.

Balasubramaniam et al. (2022) analysed the ethical guidelines of AI published by 16 organisations and proposed a model of explainability which addresses four critical questions to develop the quality of explanations: (1) to whom to explain, (2) what to explain, (3) in what kind of situation to explain, and (4) who explains. As for the technologies companies could use to develop interpretable and explainable AI models, Golbin et al. (2020) recommended the AI Fairness 360 Toolkit, Fairlearn, and InterpretML.
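As a rough illustration of the kind of check that fairness toolkits automate, the following minimal sketch computes per-group selection rates and the gap between them (often called the demographic parity difference) in plain Python. It does not use or reproduce the API of AI Fairness 360, Fairlearn, or InterpretML; the function names, the toy decisions, and the group labels are all hypothetical and serve only to make the idea concrete.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions, groups):
    """Return the gap between the highest and lowest group selection rate."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = application approved, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_difference(decisions, groups)
print(rates, gap)
```

A gap near zero means that the groups receive positive decisions at similar rates. What counts as an acceptable gap is a policy decision that a sketch of this kind cannot settle.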

5.1.5 Checklists, Frameworks and Guides

Golbin et al. (2020) recommended strategy and design guides to improve the adoption of responsible AI tools such as The AI Canvas, the Data Ethics Canvas, the Ethical Operating System, the Ethos design for trustworthy AI, and AI ethics cards. Checklists also offer a mechanism for organisations to assess their practices across several dimensions like bias, fairness, privacy and others related to responsible AI adoption practices (Golbin et al., 2020; Koster et al., 2021). Koster et al. (2021) proposed a checklist to assess and help assure transparency and explainability for AI applications in a realistic environment. This checklist extends an existing checklist of the standardisation institute for digital cooperation and innovation in Dutch insurance.

Frameworks that address the different stages of the AI life cycle are essential (Golbin et al., 2020). Degas et al. (2022) proposed a conceptual framework for explainable AI named the Descriptive, Predictive, and Prescriptive (DPP) model after analysing how explainable AI works in general Air Traffic Management (ATM), where and why it is needed, and what its limitations are. Another notable practical framework is the Explainable Multiview Game Cheating Detection framework (EMGCD), proposed by Tao et al. (2020) and tried on NetEase games. EMGCD uses XAI for cheating detection in online games. The framework combines cheating explainers with cheating classifiers, generates individual, local and global explanations, and has received positive feedback from the game operator teams.

5.2 RQ2: What Are the Guidelines the Private Sector Is Following on Developing or Adopting AI Ethics?

By answering this question, we aim to inspire SMEs that are not yet adopting responsible AI to learn more about the guidelines other companies are adopting. We took the grey literature into account by examining the guidelines published by the private sector. As part of the analysis to answer the second research question, we considered the 25 guidelines published in the Global Inventory. This provided insights into how companies perceive AI ethics. For instance, some companies submitted a blog page or a project focusing on AI ethics to the Global Inventory as guidelines, as we explain in this section. It is important to note that the classification used in this analysis reflects how private companies represented their relationship with AI ethics in the inventory. One important observation is that there are no submissions of guidelines by SMEs in the Global Inventory. However, companies such as Microsoft (Footnote 8), Google (Footnote 9), Sage (Footnote 10) and IBM (Footnote 11) provide services that support other companies and SMEs with tools to adopt AI ethics. Most companies fall in the ICT and software development sectors, and companies in the US submitted around 44% of the guidelines. The guidelines submitted to the inventory are not all on the same level; some companies, such as IBM, Google and Microsoft, have multiple different submissions, which reflects the highly active efforts of those companies and their research teams regarding AI ethics. There is a consensus among the companies’ guidelines on AI ethics principles such as fairness, transparency, accountability, and avoiding bias. However, each company has its own approach. For instance, Accenture (Footnote 12) has set up an AI Advisory Body to consider ethical issues, foster discussion forums and publish resulting guidance to the industry and regulators. Accenture participated actively in the international development of ethics codes, such as the “Asilomar AI Principles” and the “Partnership on AI” codes. Aptiv (Footnote 13), Audi, BMW, Daimler and other automotive companies proposed detailed technical standards for developing Automated Driving.

Google has multiple submissions in the inventory. One of them is the People and AI partnership Guidebook, a set of methods, workshops, guidelines and case studies for designing with AI based on insights and data from industry experts and academic researchers inside and outside Google. Another submission from Google is “Responsible AI Practices”, a set of general principles, including ethical claims by the company, such as avoiding technologies that cause harm. However, under these guidelines Google might proceed with technologies that carry a material risk of harm if it believes that the benefits outweigh the risks. That includes weapons and technologies that gather information for surveillance. Google also established the Advanced Technology External Advisory Council (ATEAC) to help implement the AI ethics principles set by Google and help the company overcome facial recognition and fairness challenges.

IBM also has multiple submissions in the inventory. IBM stresses transparency and explainability as a precondition for going to market. The first submission is the company’s principles for trust and transparency, which explain the company’s mission of achieving responsible AI. Another submission from IBM is about its Trusted AI research teams. These include a team for explainable AI that works on training highly optimised, directly interpretable models, on explanations of black-box models, and on visualisations of neural network information flows. The submission includes the AI Explainability 360 toolkit, an open source toolkit that can help the user understand how machine learning models predict labels. One notable publication by IBM is “Everyday ethics for Artificial Intelligence”, which works through an example of a virtual assistant in a hotel and discusses the principles of explainability, accountability, bias, and fairness.

Intel submitted a white paper to the inventory tackling AI privacy that presents five observations on how autonomous decisions can potentially affect citizens (Intel, 2018). Kakao Corp, a South Korean company, announced on its website eight algorithm ethics principles with which the company aims to create a healthy digital culture involving technology and people. The algorithm ethics code declared by Kakao highlights the need to eliminate bias and adhere to social ethical norms when collecting and managing data for algorithm learning, as well as to provide explanations to strengthen user trust and ensure that algorithms are not manipulated internally or externally. Additionally, priority should be given to protecting children and youth from inappropriate and threatening content, and a strong effort must be made to protect user privacy during the design and operation of algorithm-driven services and technologies. Microsoft stressed the importance of giving up-to-date training to its employees (Microsoft, 2018a). Microsoft has multiple submissions in the inventory. The first submission is a set of ten guidelines for developers working on conversational AI bots. The guidelines cover the concepts of privacy, fairness and accountability (Microsoft, 2018b).

The second submission is a blog article discussing principles and laws related to facial recognition (Microsoft, 2018c). The third submission is a Microsoft platform presenting how the company supports responsible AI practices, with case studies of how Microsoft empowered Telefonica, State Farm and TD Bank Group. The platform (Footnote 14) also includes an AI impact assessment template designed by the company. OP Financial Group (Footnote 15) in Finland published five principles on its website as ethical guidelines. The principles cover putting people first, transparency, privacy, impact and ownership. In 2020, Philips in the Netherlands published five principles for the responsible use of AI in healthcare. The five principles cover fairness, robustness, transparency, oversight and well-being (Philips, 2020). In 2019, PriceWaterhouseCoopers in the UK published a practical guide for responsible AI to help companies focus on five key dimensions when designing AI systems. The five dimensions are governance, robustness, bias and fairness, ethics and explainability (Price Water House Coopers, 2019). In 2017, Sage announced five core principles for designing AI solutions serving businesses. These principles cover diversity, accountability, and rewarding AI systems for showing good work, and hold that AI systems should create new opportunities and provide solutions to disadvantaged groups (Sage, 2017). SAP in Germany, Sony in Japan, and UX studio in Hungary announced seven guiding principles that govern the design of their solutions (SAP, 2018; Sony, 2021; Pásztor, 2018). Telia in Sweden, Unity in the US and Vodafone Group in the UK published further sets of principles reflecting the AI guidelines that govern the design of their solutions (Telia, 2019; Unity Technologies, 2018; Vodafone Group, 2019).

Last but not least, there is a submission to the Global Inventory by Partnership on AI, which has 100 partners around the world, including Microsoft, Google, IBM, Sony and Intel. The Partnership on AI platform (Footnote 16) includes various resources and initiatives around responsible AI. The board of directors of Partnership on AI includes people from IBM, Microsoft, Apple and Amazon. Partnership on AI is not itself a company, although various companies contribute to it.

Despite the efforts of the private sector to promise a bright world, the AI Incident Database (Footnote 17), a platform sponsored by Partnership on AI, has recorded more than 1600 reports of AI harm. Academic literature has also identified cases highlighting the importance of being aware of AI systems’ impact. For example, Koster et al. (2021) criticized an algorithmic fraud risk scoring system (SyRI) used by the Dutch government and an AI-driven credit risk system by Apple. SyRI violated privacy and lacked transparency, and the Dutch court deemed the system illegal. In the other incident, the AI-driven credit risk system by Apple was gender biased, as the system classified men as more creditworthy than women. Lui and Lamb (2018) mentioned another sensitive case in a brief overview of the status of AI in the banking sector and a case study about Barclays UK. One remarkable aspect of the Barclays case concerns a customer contacting the bank following the death of a family member to organise the finances of the deceased. In such sensitive situations, Barclays prefers a human rather than a chatbot to handle the matter.

After surveying more than 150 companies from the US, Finland and other countries, Vakkuri et al. (2020) found that companies treat AI as a feature of ethics. In other words, most of the measures taken in AI projects are the same measures taken in any software project. Many respondents noted that they do not have, or do not know whether they have, backup plans for unexpected AI system behaviour, and about half of the companies believed that their system could not be misused. Of the respondents, 36% considered that their company is responsible for meeting the mandatory regulatory standards and that anything that might happen beyond those specified guidelines is the end user’s responsibility. Engaging employees and end users is essential, especially in the healthcare sector. For example, Martinho et al. (2021), after surveying medical doctors from the Netherlands, Portugal and the US, observed that medical doctors are more concerned about the role large companies play in the healthcare sector than about issues such as fairness, bias and health inequalities. Such findings reveal the importance of engaging medical doctors in the AI systems design process in addition to industry stakeholders and policymakers.

5.3 RQ3: What AI Guidelines and or Recommendations Exist for SMEs to Build Ethical AI?

We found very few articles addressing or featuring SMEs. In general, AI ethical discussions rarely address SMEs. The studies we found addressed or featured SMEs from different angles. For example, Bussmann et al. (2020) analysed 15,000 SMEs and proposed an explainable AI model for Fintech risk management, particularly for measuring the risks that arise when clients borrow credit. The study is the only one involving such a large number of SMEs. Another study is by Crockett et al. (2021), who analysed 77 toolkits covering the different aspects of the Machine Learning and AI lifecycle and ethical principles. The study aimed to find which toolkits SMEs can use. It concluded that there is currently no toolkit that meets SMEs’ needs and that SMEs struggle with long, wordy and technical documents. Moreover, 83% of the current toolkits do not provide training, while SMEs look for case studies, training, cost information, and instruction manuals. The study proposed producing an online tool to help SMEs select the best toolkits to implement or inform practice based on coverage and ease of implementation.

A third study comes from Beckert (2021). The study concluded that ethical AI projects are found mainly in research contexts and in a few companies. It suggested that the reasons behind the rarity of concrete ethical AI examples inside companies might be time-to-market considerations, the lack of access to expertise, and the difference between the mindsets of software engineers and social scientists. The study proposed two solutions to support companies implementing Ethical, Trustworthy AI systems. The first solution is to break down the AI guidelines according to the needs of computer scientists, managers and software engineers. The second solution is to embed social scientists in the implementation process. The study highlighted a need for SMEs to be actively involved in ethical AI discussions and noted that startups and SMEs seem to follow a wait-and-see approach compared to some large companies that have already taken concrete steps to reach Ethical, Trustworthy AI systems.

The fourth is from Jobin et al. (2019), who analysed 84 documents containing ethical principles and guidelines for AI. The study found that private companies, governmental agencies, and academic and research institutions released most of the documents. The last study is from Ibáñez and Olmeda (2021). The study selected 22 companies to gain insights into how companies apply AI ethical principles, including explainability. It focused on Spanish companies and interviewed top or senior-level managers with an average of over 16 years of experience in AI. One of the recommendations suggested by the study is developing sector-specific regulations and providing specific training on ethical issues.

In conclusion, SMEs need more attention, and more research is needed to understand SMEs’ needs. Generally, SMEs lack the expertise and resources needed to adopt responsible AI practices at the same level as large companies. Therefore, building cooperation models between SMEs, the academic sector, civil society and governmental agencies is necessary to mend the responsible AI adoption gap between SMEs and large companies.

6 Conclusion

AI is increasingly being used in various industries, from healthcare to retail. As a result, the ethical implications of AI in these industries have become important. Companies must consider the implications of AI on the rights, safety, and security of stakeholders, such as their customers, employees, and shareholders. They must also consider how AI will affect the environment and other members of society, as well as ethical issues such as data privacy. The companies should ensure that AI technology is developed and used in a responsible manner, taking into consideration not just the bottom line, but also the ethical implications of the technology. This means that companies must put in place robust ethical principles, a strong governance structure, and the necessary resources and processes to ensure that ethical concerns are addressed.

The current literature is a first step towards supporting SMEs in learning more about AI ethics and establishing responsible AI practices. More effort is still needed to study the situation of SMEs together with academia, accelerator centers, business innovation ecosystems, adult education, and lifelong learning hubs, in order to support findings and collaboratively create suitable, practical guidelines, tools and training for SMEs. Our research revealed a focus on AI ethics and responsible practices from the academic sector, governments and the private sector, the latter represented by big firms. The following is a brief list of recommendations that support SMEs in becoming ready for ethical and responsible AI adoption:

- There is a need to evaluate the current training materials recommended by private and public organisations.
- There is a need for tailored, customised and practical guidelines and tools that take into consideration the differences between organisations.
- There is a demand to find solutions to scaffold and help SMEs to adopt AI ethics.
- Organisations, especially SMEs, wish for a trusted accreditation system for their AI ethical practices, up-to-date training for their employees and managers, regular updates on the latest frameworks and guidelines, and support for developers in building explainable systems and applying risk assessments.

As a starting point, SMEs could benefit from international standards, as the governance structures and definitions outlined in standards help SMEs establish proper risk management, quality control, and oversight measures in a harmonized manner. Moreover, standards featuring human factors, interface design, and roles and responsibilities help SMEs address legal duties and responsibilities for human oversight and control.

One of the limitations of this study is the time gap between the review and analysis of the references presented here and the time of publication. The reference-gathering stage ended in mid-April 2022. The second stage, covering the screening, evaluation and analysis of the references, ended in November 2022. The aim of the paper was to evaluate how the private sector is addressing AI ethics. However, the paper did not address why the reviewed AI guidelines cannot be implemented in their current form by SMEs. To bridge this gap, our research team is currently conducting interviews, meetings and workshops with representatives from SMEs for a future publication. Our goal in this future publication is to determine the perceived drivers of, and inhibitors to, SMEs’ participation in the writing of ethical guidelines that better meet their needs, and to present a model for a training tool that supports SMEs in adopting AI ethics.

This research does not examine how the guidelines and the academic literature address the environmental impact of AI systems. A potential direction for future research is to study how the guidelines and academic work treat the environmental impact that AI systems create. Considering the environmental impact and carbon footprint, and studying how responsible AI practices could benefit the environment and save resources, might encourage more companies to adopt responsible practices.

In conclusion, this research highlighted the gap between SMEs and big firms in adopting ethical AI practices. As a result, developing and studying cooperation models between SMEs, the academic sector, civil society and governmental agencies is necessary to mend the responsible AI adoption gap between SMEs and large companies.

Data Availability

All data generated or analysed during this study are included in this published article [and its supplementary information files].

Code Availability

Not applicable.

Notes

For discussion of the amendment success of the EU AI Act see Haataja, M., & Bryson, J. J. (2023). The European parliament’s AI regulation: Should we call it progress? Amicus Curiae, 4(3), 707–718.

AI Standardisation Landscape: state of play and link to the EC proposal for an AI regulatory framework. https://publications.jrc.ec.europa.eu/repository/handle/JRC125952.

Microsoft. (n.d.). Responsible AI principles from Microsoft. Retrieved 25 May 2022, from https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1%3Aprimaryr6.

Google. Responsible AI Practices. Retrieved 25 May 2022, from https://ai.google/responsibilities/review-process/.

Sage (2017). Business builders ethics of code - sage US. Retrieved 26 May 2022, from https://www.sage.com//media/group/files/business-builders/business-builders-ethics-of-code.pdf?la=en.

IBM. (n.d.). Trusted AI research teams. Retrieved 25 May 2022, from https://aix360.mybluemix.net/?ga=2.175851307.842973985.16511542241153023123.1651154224.

Accenture. (n.d.). An ethical framework for responsible AI and robotics. Retrieved 25 May 2022, from https://www.accenture.com/gb-en/company-responsible-ai-robotics.

Aptiv, Audi, BMW, Daimler, & others. (2019). Safety first for automated driving. Retrieved 25 May 2022, from https://www.heise.de/downloads/18/2/7/0/8/1/7/0/safety-first-for-automated-driving.pdf.

Microsoft. (n.d.). Responsible AI principles from Microsoft. Retrieved 25 May 2022, from https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1%3Aprimaryr6.

OP Financial Group. (n.d.). Commitments and principles - responsibilities - op group. Retrieved 25 May 2022, from https://www.op.fi/op-financial-group/corporate-social-responsibility/commitments-and-principles.

Tenets Partnership on AI. Retrieved 25 May, 2022, from https://partnershiponai.org/.

References

Accenture. An ethical framework for responsible AI and robotics. Retrieved 25 May, 2022, from https://www.accenture.com/gb-en/company-responsible-ai-robotics

Adams, J., & Hagras, H. (2020). A type-2 fuzzy logic approach to explainable AI for regulatory compliance, fair customer outcomes and market stability in the global financial sector. In 2020 IEEE international conference on fuzzy systems (FUZZ-IEEE). https://doi.org/10.1109/fuzz48607.2020.9177542

Ahmed, I., Jeon, G., & Piccialli, F. (2022). From artificial intelligence to explainable artificial intelligence in industry 4.0: A survey on what, how, and where. IEEE Transactions on Industrial Informatics, 18(8), 5031–5042. https://doi.org/10.1109/tii.2022.3146552

AI for Good. (2022, 12 May). AI for good. Retrieved 24 May, 2022, from https://aiforgood.itu.int/

AI for Latin America. (2021, 4 January). Ética IA LATAM. Retrieved 25 May, 2022, from https://ia-latam.com/etica-ia-latam/

AI-HLEG. (2019, 8 April). Ethics guidelines for trustworthy AI. Retrieved 13 June, 2021, from https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai

Alami, H., Rivard, L., Lehoux, P., Hoffman, S. J., Cadeddu, S. B., Savoldelli, M., Samri, M. A., Ag Ahmed, M. A., Fleet, R., & Fortin, J. P. (2020). Artificial intelligence in health care: Laying the foundation for responsible, sustainable, and inclusive innovation in low- and middle-income countries. Globalisation and Health, 16(1). https://doi.org/10.1186/s12992-020-00584-1

Algorithmic Watch. (2020, April). Retrieved 4 April, 2022, from https://inventory.algorithmwatch.org/about

Balasubramaniam, N., Kauppinen, M., Hiekkanen, K., & Kujala, S. (2022). Transparency and explainability of AI systems: Ethical guidelines in practice. Requirements Engineering: Foundation for Software Quality, 3–18. https://doi.org/10.1007/978-3-030-98464-9

Barredo Arrieta, A., Díaz-Rodríguez, N., Del Ser, J., Bennetot, A., Tabik, S., Barbado, A., Garcia, S., Gil-Lopez, S., Molina, D., Benjamins, R., Chatila, R., & Herrera, F. (2020). Explainable artificial intelligence (XAI): concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion, 58, 82–115. https://doi.org/10.1016/j.inffus.2019.12.012

Beckert, B. (2021). The European way of doing artificial intelligence: The state of play implementing trustworthy AI. In 2021 60th FITCE communication days congress for ICT professionals: Industrial data – cloud, low latency and privacy (FITCE). https://doi.org/10.1109/fitce53297.2021.9588560

Bejger, S., & Elster, S. (2020). Artificial intelligence in economic decision making: How to assure a trust? Ekonomia I Prawo, 19(3), 411. https://doi.org/10.12775/eip.2020.028

Brettle, A. (2009). Systematic reviews and evidence based library and information practice. Evidence Based Library and Information Practice, 4(1), 43. https://doi.org/10.18438/b8n613

Bryson, J. (2018, 13 November). AI & global governance: No one should trust AI. Retrieved 4 April, 2022, from https://cpr.unu.edu/publications/articles/ai-global-governance-no-one-should-trust-ai.html

Bussmann, N., Giudici, P., Marinelli, D., & Papenbrock, J. (2020). Explainable AI in fintech risk management. Frontiers in Artificial Intelligence, 3. https://doi.org/10.3389/frai.2020.00026

CIFAR. (2022, 8 February). Pan-Canadian AI strategy. Retrieved 24 May, 2022, from https://cifar.ca/ai/

Clark, D. (2022, 15 August). Global SMEs 2021. Estimated number of small and medium sized enterprises (SMEs) worldwide from 2000 to 2021. Retrieved 3 November, 2022, from https://www.statista.com/statistics/1261592/global-smes/:%98:text=There%20were%20estimated%20to%20be,when%20there%20were%20328.5%20million

Clarke, R. (2019). Principles and business processes for responsible AI. Computer Law & Security Review, 35(4), 410–422. https://doi.org/10.1016/j.clsr.2019.04.007

Crockett, K. A., Gerber, L., Latham, A., & Colyer, E. (2021). Building trustworthy AI solutions: A case for practical solutions for small businesses. IEEE Transactions on Artificial Intelligence, 1–1. https://doi.org/10.1109/tai.2021.3137091

Dağlarli, E. (2020). Explainable artificial intelligence (XAI) approaches and deep meta-learning models. In M. A. Aceves-Fernandez (Ed.), Advances and applications in deep learning. IntechOpen. https://doi.org/10.5772/intechopen.92172

Daudt, F., Cinalli, D., & Garcia, A. C. (2021). Research on explainable artificial intelligence techniques: An user perspective. In 2021 IEEE 24th international conference on computer supported cooperative work in design (CSCWD). https://doi.org/10.1109/cscwd49262.2021.9437820

Degas, A., Islam, M. R., Hurter, C., Barua, S., Rahman, H., Poudel, M., Ruscio, D., Ahmed, M. U., Begum, S., Rahman, M. A., Bonelli, S., Cartocci, G., Di Flumeri, G., Borghini, G., Babiloni, F., & Aricó, P. (2022). A survey on artificial intelligence (AI) and explainable AI in air traffic management: Current trends and development with future research trajectory. Applied Sciences, 12(3), 1295. https://doi.org/10.3390/app12031295

Deloitte. (2020, 14 July). Thriving in the era of pervasive AI. Retrieved 10 May, 2021, from https://www2.deloitte.com/us/en/insights/focus/cognitive-technologies/state-of-ai-and-intelligent-automation-in-business-sur-vey.html?id=us%3A2el%3A3pr%3A4di6462%3A5awa%3A6di%3AMMD-DYY%3A%26

Engers, T. M. V., & Vries, D. M. (2019). Governmental transparency in the era of artificial intelligence. In M. Araszkiewicz & V. Rodríguez-Doncel (Eds.), Legal knowledge and information systems, Ser. Frontiers in Artificial Intelligence and Applications (Vol. 322, pp. 33–42). IOS Press. https://doi.org/10.3233/FAIA190304

EU Commission. (2021, 21 April). Europe fit for the digital age: Commission proposes new rules and actions for excellence and trust in artificial intelligence. Retrieved 10 May, 2021, from https://ec.europa.eu/commission/presscorner/detail/en/ip211682

Ferretti, T. (2021). An institutionalist approach to AI ethics: Justifying the priority of government regulation over self-regulation. Moral Philosophy and Politics. https://doi.org/10.1515/mopp-2020-0056

Ferreyra, E., Hagras, H., Kern, M., & Owusu, G. (2019). Depicting decision-making: A type-2 fuzzy logic based explainable artificial intelligence system for goal-driven simulation in the workforce allocation domain. In 2019 IEEE international conference on fuzzy systems (FUZZ-IEEE). https://doi.org/10.1109/fuzz-ieee.2019.8858933

Floridi, L. (2019). Translating principles into practices of digital ethics: Five risks of being unethical. Philosophy & Technology, 32(2), 185–193. https://doi.org/10.1007/s13347-019-00354-x

Fulmer, R., Davis, T., Costello, C., & Joerin, A. (2021). The ethics of psychological artificial intelligence: Clinical considerations. Counseling and Values, 66(2), 131–144. https://doi.org/10.1002/cvj.12153

General Data Protection Regulation (GDPR). Compliance guidelines. Retrieved 12 June, 2021, from https://gdpr.eu/

Golbin, I., Rao, A. S., Hadjarian, A., & Krittman, D. (2020). Responsible AI: A primer for the legal community. In 2020 IEEE international conference on big data (big data). https://doi.org/10.1109/bigdata50022.2020.9377738

Google. People and AI partnership guidebook. Retrieved 25 May, 2022, from https://pair.withgoogle.com/

Google. Responsible AI practices. Retrieved 25 May, 2022, from https://ai.google/responsibilities/review-process/

Government of Canada. Algorithmic impact assessment tool. Canada.ca. Retrieved 3 November, 2022, from https://www.canada.ca/en/government/system/digital-government/digital-government-innovations/responsible-use-ai/algorithmic-impact-assessment.html

Hagendorff, T. (2020). The ethics of AI ethics: An evaluation of guidelines. Minds and Machines, 30(1), 99–120. https://doi.org/10.1007/s11023-020-09517-8

Hengstler, M., Enkel, E., & Duelli, S. (2016). Applied artificial intelligence and trust—the case of autonomous vehicles and medical assistance devices. Technological Forecasting and Social Change, 105, 105–120. https://doi.org/10.1016/j.techfore.2015.12.014

Hickman, E., & Petrin, M. (2020). Trustworthy AI and corporate governance – The EU’s ethics guidelines for trustworthy artificial intelligence from a company law perspective. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3607225

Ibáñez, J. C., & Olmeda, M. V. (2021). Operationalising AI ethics: How are companies bridging the gap between Practice and principles? An exploratory study. AI & Society. https://doi.org/10.1007/s00146-021-01267-0

IBM. (2019, 11 December). IBM’s principles for data trust and transparency. Retrieved 25 May, 2022, from https://www.ibm.com/blogs/policy/trust-principles/

IBM. Everyday ethics for artificial intelligence. IBM. Retrieved 25 May, 2022, from https://www.ibm.com/watson/assets/duo/pdf/everydayethics.pdf

IBM. Trusted AI research teams. Retrieved 25 May, 2022, from https://aix360.mybluemix.net/?ga=2.175851307.842973985.1651154224-1153023123.1651154224

Intel. (2018, 22 October). Intel’s AI privacy policy white paper. Retrieved 25 May, 2022, from https://www.intel.com/content/dam/www/public/us/en/ai/documents/Intels-AI-Privacy-Policy-White-Paper-2018.pdf

Jobin, A., Ienca, M., & Vayena, E. (2019). The global landscape of AI ethics guidelines. Nature Machine Intelligence, 1(9), 389–399. https://doi.org/10.1038/s42256-019-0088-2

Kakao Corp. (n.d.). Algorithm ethics charter. Retrieved 25 May, 2022, from https://www.kakaocorp.com/page/responsible/detail/algorithm

Kitchenham, B. A., & Charters, S. (2007). Guidelines for performing systematic literature reviews in software engineering (EBSE 2007-001). Keele University and Durham University Joint Report.

Koster, O., Kosman, R., & Visser, J. (2021). A checklist for Explainable AI in the insurance domain. Communications in Computer and Information Science, 446–456. https://doi.org/10.1007/978-3-030-85347-1_32

Lapińska, J., Escher, I., Górka, J., Sudolska, A., & Brzustewicz, P. (2021). Employees’ trust in artificial intelligence in companies: The case of energy and chemical industries in Poland. Energies, 14(7), 1942. https://doi.org/10.3390/en14071942

Larson, D. B., Magnus, D. C., Lungren, M. P., Shah, N. H., & Langlotz, C. P. (2020). Ethics of using and sharing clinical imaging data for Artificial Intelligence: A proposed framework. Radiology, 295(3), 675–682. https://doi.org/10.1148/radiol.2020192536

Li, J., Zhou, Y., Yao, J., & Liu, X. (2021). An empirical investigation of trust in AI in a Chinese petrochemical enterprise based on institutional theory. Scientific Reports, 11(1). https://doi.org/10.1038/s41598-021-92904-7

Lui, A., & Lamb, G. W. (2018). Artificial intelligence and augmented intelligence collaboration: Regaining trust and confidence in the financial sector. Information & Communications Technology Law, 27(3), 267–283. https://doi.org/10.1080/13600834.2018.1488659

Martinho, A., Kroesen, M., & Chorus, C. (2021). A healthy debate: Exploring the views of medical doctors on the ethics of artificial intelligence. Artificial Intelligence in Medicine, 121, 102190. https://doi.org/10.1016/j.artmed.2021.102190

McStay, A., & Rosner, G. (2021). Emotional artificial intelligence in children’s toys and devices: Ethics, governance and practical remedies. Big Data & Society, 8(1), 205395172199487. https://doi.org/10.1177/2053951721994877

Microsoft. (2018a). The future computed: Artificial intelligence and its role in society. Microsoft News Center Network site. Retrieved 4 November, 2022, from https://news.microsoft.com/futurecomputed/

Microsoft. (2018b). Responsible bots: 10 guidelines for developers of conversational AI. Retrieved 25 May, 2022, from https://www.microsoft.com/en-us/research/uploads/prod/2018/11/BotGuidelinesNov2018.pdf

Microsoft. (2018c, 6 December). Facial recognition: It’s time for action. Retrieved 25 May, 2022, from https://blogs.microsoft.com/on-the-issues/2018/12/06/facial-recognition-its-time-for-action/

Microsoft. Responsible AI principles from Microsoft. Retrieved 25 May, 2022, from https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1%3Aprimaryr6

Milosevic, Z. (2021). Enabling scalable AI for digital health: Interoperability, consent and ethics support. In 2021 IEEE 25th international enterprise distributed object computing workshop (EDOCW). https://doi.org/10.1109/edocw52865.2021.00028

Minkkinen, M., Niukkanen, A., & Mäntymäki, M. (2022). What about investors? ESG analyses as tools for ethics-based AI auditing. AI & Society. https://doi.org/10.1007/s00146-022-01415-0

Morley, J., Floridi, L., Kinsey, L., & Elhalal, A. (2019). From what to how: An initial review of publicly available AI ethics tools, methods and research to translate principles into practices. Science and Engineering Ethics, 26(4), 2141–2168. https://doi.org/10.1007/s11948-019-00165-5

Nativi, S., & De Nigris, S. (2021). AI Watch, AI standardisation landscape state of play and link to the EC proposal for an AI regulatory framework. Publications Office of the EU. https://op.europa.eu/en/publication-detail/-/publication/36c46b8e-e518-11eb-a1a5-01aa75ed71a1/language-en/format-PDF

Nicodeme, C. (2020). Build confidence and acceptance of AI-based decision support systems: Explainable and liable AI. In 13th international conference on human system interaction (HSI). https://doi.org/10.1109/hsi49210.2020.9142668

OECD. (n.d.). Retrieved 28 April, 2022, from https://stats.oecd.org/index.aspx?queryid=81354

Open Data Charter. (2015). Principles. Retrieved 24 May, 2022, from https://opendatacharter.net/principles/

Oxford Insights. (2020). Government AI readiness index 2020. Retrieved 4 April, 2022, from https://www.oxfordinsights.com/government-ai-readiness-index-2020

Parliament and Council of the European Union. (2016). General data protection regulation.

Pásztor, D. (2018, 17 April). AI UX: 7 principles of designing good AI products. Retrieved 26 May, 2022, from https://uxstudioteam.com/ux-blog/ai-ux/

Philips. (2020, 21 January). Five guiding principles for responsible use of AI in healthcare and healthy living. Retrieved 26 May, 2022, from https://www.philips.com/a-w/about/news/archive/blogs/innovation-matters/2020/20200121-five-guiding-principles-for-responsible-use-of-ai-in-healthcare-and-healthy-living.html

Pillai, V. S., & Matus, K. J. (2020). Towards a responsible integration of artificial intelligence technology in the construction sector. Science and Public Policy, 47(5), 689–704. https://doi.org/10.1093/scipol/scaa073

PricewaterhouseCoopers. (2019). Responsible AI toolkit. Retrieved 26 May, 2022, from https://www.pwc.com/gx/en/issues/data-and-analytics/artificial-intelligence/what-is-responsible-ai.html

Qin, F., Li, K., & Yan, J. (2020). Understanding user trust in artificial intelligence-based educational systems: Evidence from China. British Journal of Educational Technology, 51(5), 1693–1710. https://doi.org/10.1111/bjet.12994

Rakova, B., Yang, J., Cramer, H., & Chowdhury, R. (2021). Where responsible AI meets reality. Proceedings of the ACM on Human-Computer Interaction, 5(CSCW1), 1–23. https://doi.org/10.1145/3449081

Razon, O. (2021, 12 January). Council post: The state of AI in production in 2021. Retrieved 10 May, 2021, from https://www.forbes.com/sites/forbestechcouncil/2021/01/13/the-state-of-ai-in-production-in-2021/?sh=130d6cfd1669

Rodríguez Oconitrillo, L. R., Vargas, J. J., Camacho, A., Burgos, Á., & Corchado, J. M. (2021). Ryel: An experimental study in the behavioral response of judges using a novel technique for acquiring higher-order thinking based on explainable artificial intelligence and case-based reasoning. Electronics, 10(12), 1500. https://doi.org/10.3390/electronics10121500

Roski, J., Maier, E. J., Vigilante, K., Kane, E. A., & Matheny, M. E. (2021). Enhancing trust in AI through industry self-governance. Journal of the American Medical Informatics Association, 28(7), 1582–1590. https://doi.org/10.1093/jamia/ocab065

Ryan, M. (2020). In AI we trust: Ethics, artificial intelligence, and reliability. Science and Engineering Ethics, 26(5), 2749–2767. https://doi.org/10.1007/s11948-020-00228-y

Sage. (2017). Business builders ethics of code - Sage US. Retrieved 26 May, 2022, from https://www.sage.com//media/group/files/business-builders/business-builders-ethics-of-code.pdf?la=en

SAP. (2018, 18 September). SAP’s guiding principles for artificial intelligence. Retrieved 26 May, 2022, from https://news.sap.com/2018/09/sap-guiding-principles-for-artificial-intelligence/

Sharma, S., Rawal, Y. S., Pal, S., & Dani, R. (2022). Fairness, accountability, sustainability, transparency (FAST) of artificial intelligence in terms of hospitality industry. ICT Analysis and Applications, 495–504. https://doi.org/10.1007/978-981-16-5655-2_48

Shneiderman, B. (2020). Bridging the gap between ethics and practice. ACM Transactions on Interactive Intelligent Systems, 10(4), 1–31. https://doi.org/10.1145/3419764

Snyder, H. (2019). Literature review as a research methodology: An overview and guidelines. Journal of Business Research, 104, 333–339. https://doi.org/10.1016/j.jbusres.2019.07.039

Soler Garrido, J., Fano Yela, D., Panigutti, C., Junklewitz, H., Hamon, R., Evas, T., … Scalzo, S. (2023). Analysis of the preliminary AI standardisation work plan in support of the AI Act. Publications Office of the EU. https://publications.jrc.ec.europa.eu/repository/handle/JRC132833

Sony Group. (2021, 1 April). AI engagement within Sony Group. Retrieved 26 May, 2022, from https://www.sony.com/en/SonyInfo/csr_report/humanrights/AI_Engagement_within_Sony_Group.pdf

Statistics explained. Retrieved 28 April, 2022, from https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Statistics_on_small_and_medium-sized_enterprises

Tao, J., Xiong, Y., Zhao, S., Xu, Y., Lin, J., Wu, R., & Fan, C. (2020). Xai-driven explainable multi-view game cheating detection. In 2020 IEEE conference on games (CoG). https://doi.org/10.1109/cog47356.2020.9231843

Telia. (2019). Guiding principles on trusted AI ethics. Telia Company. Retrieved 26 May, 2022, from https://www.teliacompany.com/globalassets/telia-company/documents/about-telia-company/public-policy/2018/guiding-principles-on-trusted-ai-ethics.pdf

The Alan Turing Institute & ICO. (2020, 20 May). ICO: Explaining decisions made with AI. Retrieved 10 May, 2021, from https://iapp.org/resources/article/ico-explaining-decisions-made-with-ai/

Unity Technologies. (2018, 28 November). Introducing unity’s guiding principles for ethical AI. Retrieved 26 May, 2022, from https://blog.unity.com/technology/introducing-unitys-guiding-principles-for-ethical-ai

UNSCEB. Inter-agency working group on artificial intelligence. Retrieved 24 May, 2022, from https://unsceb.org/inter-agency-working-group-artificial-intelligence

Vakkuri, V., Kemell, K.-K., Kultanen, J., & Abrahamsson, P. (2020). The current state of industrial practice in artificial intelligence ethics. IEEE Software, 37(4), 50–57. https://doi.org/10.1109/ms.2020.2985621

Vică, C., Voinea, C., & Uszkai, R. (2021). The emperor is naked. Információs Társadalom, 21(2), 83. https://doi.org/10.22503/inftars.xxi.2021.2.6

Vodafone Group. (2019). Artificial intelligence framework. Retrieved 26 May, 2022, from https://www.vodafone.com/about-vodafone/how-we-operate/public-policy/policy-positions/artificial-intelligence-framework

Wasilow, S., & Thorpe, J. B. (2019). Artificial intelligence, robotics, ethics, and the military: A Canadian perspective. AI Magazine, 40(1), 37–48. https://doi.org/10.1609/aimag.v40i1.2848

World Economic Forum. (2020, 11 June). AI procurement in a box. Retrieved 24 May, 2022, from https://www.weforum.org/reports/ai-procurement-in-a-box

World Government Summit. (2019). AI ethics: The next big thing in government. Retrieved 10 May, 2021, from https://www.worldgovernmentsummit.org/observer/reports/2019/detail/ai

Zapata, C. (2015). Integration of usability and agile methodologies: A systematic review. Design, user experience, and usability. Design Discourse, 368–378. https://doi.org/10.1007/978-3-319-20886-2_35

Zhang, H., & Gao, L. (2019). Shaping the governance framework towards the artificial intelligence from the responsible research and innovation. In 2019 IEEE international conference on advanced robotics and its social impacts (ARSO). https://doi.org/10.1109/arso4640

Funding

The authors did not receive support from any organization for the submitted work.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Marwa Soudi. The first draft of the manuscript was written by Marwa Soudi and supervised by Prof. Merja Bauters. All authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethical Approval

Not applicable.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Competing Interests

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Soudi, M., Bauters, M. AI Guidelines and Ethical Readiness Inside SMEs: A Review and Recommendations. DISO 3, 3 (2024). https://doi.org/10.1007/s44206-024-00087-1
