Abstract

With the rapid and continuing development of AI, the study of human-AI interaction is increasingly relevant. In this sense, we propose a reference framework to explore a model development in the context of social science to try to extract valuable information to the AI context. The model we choose was the Prisoner Dilemma using the Markov chain approach to study the evolution of memory-one strategies used in the Prisoner’s Dilemma in different agent-based simulation contexts using genetic algorithms programmed on the NetLogo environment. We developed the Multiplayer Prisoner’s Dilemma simulation from deterministic and probabilistic conditions, manipulating not only the probability of communication errors (noise) but also the probability of finding again the same agent. Our results suggest that the best strategies depend on the context of the game, following the rule: the lower the probability of finding the same agent again, the greater the chance of defect. Therefore, in environments with a low probability of interaction, the best strategies were the ‘Always Defect’ ones. But as the number of interactions increases, a different strategy emerges that is able to win Always Defect strategies, such as the Spiteful/grim. In addition, our results also highlight strategies that emerge in situations in which Spiteful/grim and Always Defect were banned. These are memory-one strategies with better performance than both TFT and PAVLOV under all conditions showing behaviors that are particularly deceiving but successful. The previously memory-one strategies for the Prisoner Dilemma represent a set of extensively tested strategies in contexts with different probability of encountering each other again and provide a framework for programming algorithms that interact with humans in PD-like trusted contexts.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The common basis of human interaction is trust, whenever we meet someone we decide whether we trust them or not and, as we gather more information, this initial judgment is either reinforced or challenged. This dynamic is not exclusive to human interactions; it also extends to other realms, such as the social dynamics of non-human organisms and, more recently, interactions between humans and artificial intelligence (AI). Notably, there are some examples of experiments in human-AI interaction where trust has been disrupted. Take, for instance, the case of hitchBOT, a hitchhiking robot who travels across Canada, Germany and Netherlands asking to be carried to those who found it, because it was unable to walk, but when it started to travel across United State, it met a tragic end when it was stripped and decapitated in Philadelphia, Pennsylvania [23]. Another pertinent example involves the interaction between humans and autonomous vehicles (AVs), where instances of vandalism against these machines have been observed [17].

Those examples of aggressive behaviors towards AI organisms highlight the importance of the study of trust among humans and AI, in special when due to the advance of Artificial Intelligence (AI) and the development of Large Language Models (LLMs) such as ChatGPT, the interaction between humans and AI (human-AI) is becoming more and more common, and their study is a critical point in the modern technology landscape [14]. Many different approaches have been used to address this issue, generally focusing on algorithm trust or distrust [1]. Here, we want to explore human-AI interaction from another point of view, using models that have been useful in social psychology, specifically, in the study of social dilemmas, such as The Prisoner’s Dilemma (PD).

The PD is one of the social dilemmas studied in game theory [4, 11]. It was originally proposed by Merrill Flood and Melvin Dresher, and formalized by Albert W. Tucker [21]. Social dilemmas, such as the PD, are situations in which two or more individuals/organisms interact and have to decide whether to maximize personal or collective interest, but if they try to maximize personal interests the results will be worse than if they try to maximize collective interests [9]. The PD represents a situation in which two robbers are arrested and imprisoned, but evidence for conviction is weak and the prosecutor tries to induce a confession. The prisoners are put in separate rooms and informed that the best one can do is betray the other because the other partner has already betrayed him/her.

The prisoners have the option of betraying or not, and the prosecutor explains a set of outcomes: if each betray the other, each will serve 2 years in prison. If one of them betrays but the other remains silent, the one that betrays is set free and the other serves 3 years in prison. Finally, if both remain silent, they will serve one year in prison. The possible outcomes receive a name that describes the situation from the point of view of each prisoner. When both decide to remain silent, the situation is called Reward (R). When one of them decides to betray but the other remains silent, the one that betrays is in a Temptation (T) situation, and the partner in a Sucker (S) situation. Finally, if both decide to betray, they are in a Punishment (P) situation.

The relationship between the outcomes obtained in each of the four possible situations is described mathematically in (1) [3, 19],

PD as a social dilemma depends on those relationships. If Punishment were greater than Reward, both prisoners would opt to betray without doubt [19]. Similarly, if Reward were greater than Temptation, the best option would certainly be for both to remain silent.

The definition of possible outcomes such as R, S, T, and P [19] also allows us to approach the PD mathematically and computationally, as the decision processes of each prisoner could be considered a system that changes from one state to another following a specific probability. This decisions of this system are controlled by a matrix of conditional probabilities (pR, pT, pS, pP), following Nowak and Sigmund, [19], which define the probability to cooperate given the respectively outcome in the last trial. From this point of view, the probability of change from one state to another representing a specific strategy, and for this reason, this representation could be consider the PD as a Markov process, for which only the last outcome will be define the odds of cooperation in the next trial.

The approach of PD as a Markov chain process was introduced originally by [19] and formally developed by Press and Dyson [22], who also proposed describing seminal PD strategies, such as Tit-for-tat (TFT) and Win-stay, lose-switch (also known as PAVLOV [12]), using the probability matrix of state change (see Table 1).

Considering the prisoners' actions as a Markov chain system implies that their decisions depend only on the result of the last interaction (a condition commonly called memory-one), but not all the well-known PD strategies fit this criterion such as TIT FOR TWO TATS (tf2t) or soft-majo [3] among others. In the tf2t, the player cooperate the two first moves, and only defect when the opponent has defected twice in a row. In the soft-majo, the player begins and continues to cooperate as long as the number of times his opponent has cooperated is greater than the number of times he has defected. These strategies are interesting because they involve a number of elements that are not included in memory-one strategies, however, the inclusion of more information in the decision-making process does not necessarily improve players’ performance. Li and Kendall [15] showed that longer memory strategies underperformed when competing against memory-one strategies. Considering the above, we will focus in the memory-one strategies that can be describe as a Markov chain system. As can be seen in Table 1, beyond the seven combinations previously described in the literature [13] presented under the name designated in Table 1, there are nine other possibilities of zeros and ones combinations that could represent other memory-one strategies for PD, representing both deterministic and probabilistic environments.

Each of these possible strategies has a performance that depends not only on the probability matrix, but also on some other factors that could be introduced in the game, such as more than two players playing more than once [a variant of the PD called the Iterated Multiplayer Prisoner’s Dilemma (IMPD)] and an environment in which the players interact [8]. The manipulation of the environment, for instance changing the probability of two prisoners to interact, allows the introduction of variations on the IMPD expanding the possibilities of application and interpretation [28]. This approximates the IMPD to situations in which the interactions happen sporadically, and a player does not have to worry about a retaliation from another player due to the low probability of finding him or her again, a situation very similar to car traffic.

When both factors (the probabilistic definition of strategies and different probabilities of interaction in an IMPD environment) are combined, an efficient scenario to evaluate the effectiveness of all possible Markov chain PD strategies can be obtained. In the present work, we evaluated if there is a Markov chain PD strategy that would be considered ideal in an iterated PD, in which the prisoner has to play more than once, with more than one prisoner (PD N-Person Iterated). Similar works have been done [5, 16] but with a different strategy definition and without altering the probability of interactions.

We worked in an evolutionary and a tournament contexts. The evolutionary context is a simulated situation of a PD N-Person Iterated game in which each simulated prisoner (also called agent) is created with a random strategy and has to play with other agents. After a set of interactions, the best strategies (depending on their accumulated outcomes) are selected to compound the next generation. In addition to the best strategies, a set of new agents with random strategies is introduced generation after generation, increasing the probability of finding a good strategy, following the methodology of genetic algorithm [7, 10].

Otherwise, the tournament context is a group of computational agents competing in a PD N-Person Iterated game using from two up to five different strategies in deterministic and probabilistic environments. We compared these two environments with the objective of providing information on the performance of memory-one strategies in an environment with a low probability of meeting again, when compared to an environment in which there is always a high probability of playing again with any player, that the context used in previously works [5, 16]. In this sense, the fact that our players have a history of each interaction with each one of the other players will provide information regarding the specific performance of each memory-one strategies in this kind of context.

We strongly believe that an evolutionary evaluation of the quality of a PD strategy would provide different information than a traditional tournament in which the strategies are compared exhaustively. In addition, both the tournament and evolutionary evaluations will provide a wide vision of the PD strategies based on Markov chains, and that would be and interesting framework to the study of human-AI interactions, in special, to design elements to build trusted interactions.

2 Methods

We adapted two models available in the NetLogo library [26] to develop two experiments. In the first, our focus was to identify the best memory-one strategies in an evolutionary context, and in the second, the objective was to identify which strategies were the best in a championship context.

NetLogo is an agent-based modeling software [26] and we chose it because it is an open source, multi-platform, and intuitive program. NetLogo is relatively easy to use and was designed for teaching audiences with little programming background. The software comes with a series of models from different domains, such as psychology, economics, biology, and physics, in a built-in library.

Our model was created over a series of customizations of previously existing models of the PD (NetLogo: PD N-Person Iterated) and genetic algorithms (NetLogo: Simple Genetic Algorithm). PD N-Person Iterated is a multiplayer version of the iterated PD [27], and the Simple Genetic Algorithm is a genetic algorithm that solves the “ALL-ONES” problem [24].

A series of alterations were made, beginning with the implementation of a Markov chain system in the NetLogo PD N-Person Iterated (PDNI) in order to create stochastic memory-one strategies. These strategies were represented as a [R S T P] matrix as cited in the Introduction. The PDNI consists of a multiplayer version of PDI. This model explores the implications of strategic decisions on a PDI environment. It allows different strategies such as RANDOM, COOPERATE, DEFECT, TIT-FOR-TAT, PAVLOV, among others. Based on the interactions, an AVERAGE-PAYOFF parameter is calculated, following Table 2, for each interaction and works as an indicator of how well a strategy of an agent is doing given its last decision. Additionally, the outcomes given to each agent in this model, following Press and Dyson [22], were fixed on [3 0 5 1] for [R S T P] situations, respectively, as shown in Table 2. The set of agents is located in a bidimensional space in which they can walk, meet, and interact. Each player recorded the status of the last interaction with each player, using that information to decide whether to cooperate or defect in the event of meeting that player again (See more programming details in Figshare repository https://figshare.com/articles/software/NetLogo_code_for_the_High_Interaction_Probability_HIP_and_the_Low_Interaction_Probability_LIP_environments/24480493).

We translated the previous mentioned strategies (RANDOM, COOPERATE, DEFECT, TIT-FOR-TAT, GRIM, STUBBORN, FICKLE) to the Markov chain system model and implemented the possibility of defining more strategies on demand. We also allowed changing the outcomes through the GUI interface while the simulation was running.

2.1 Experiment 1

For the first experiment, we prepared two different environments by changing the probability of interaction. In the High Interaction Probability (HIP) environment, all of the agents interacted with all others in each round (representing 100 interactions by round for each agent). In the Low Interaction Probability (LIP) environment, the simulation started with the agents distributed randomly in the space, and each step could be taken in any direction trying to find a partner, although not necessarily interacting with another agent. Every round took 320 steps, which is approximately the number of steps that an agent takes to make 100 interactions. In the HIP environment, a round takes one tick (step), in contrast to the LIP environment where a round takes 320 ticks.

Both models, HIP and LIP, were used to evaluate the generation of new strategies using an adaptation of the NetLogo’s Simple Genetic Algorithm (SGA). The SGA works by generating a random population of solutions to a problem and then evaluating those solutions. This model has a list of 0’s and 1’s called BITS which composes the chromosome that evolves over generations. In our implementation, the BITS parameter was composed of four numbers, each representing the values of conditional probabilities matrix that controlled the probability of cooperating on the next trial. The BITS parameter is originally set randomly and then, in each generation, new versions of BITS’s are created by cloning (keeping the BITS unaltered in the next generation), crossover or mutation.

The crossover was performed by selecting two sets of BITS and a cross-over point, a random number between 1 and 4 that defines the recombination point. For example, if that number was 1, the new set of BITS must be composed by including the first parameter (pR) of one of the BITS and the next three parameters (pT, pS, pP) of the other. For the mutation, a random number between 1 and 4 was drawn and used to define the mutation point, and the BITS number in that position was replaced by a new randomly generated number. At the end of each interaction, the fitness of each agent was estimated as a function of its gains using the payoff matrix shown on Table 2.

We adapted the SGA for solving an N-person PDNI problem, introducing the two previously mentioned environments into the SGA model and maintaining their structure. The processes of mutation, crossover, and cloning were adapted for a population of PDI agents. Our population consisted of agents with random strategies formed by matrices of four numbers 0 or 1. The agents interacted over a series of TICKs and evolved into new agents with strategies based on the fitness of the previous agents. The individual fitness was the sum of all agent payoffs during all interactions over a generation (a round). For every tick, graphs were plotted showing the evolution of each strategy over the simulation. These data were saved in external files for posterior analysis with Python scripts.

The initial population in both environments was 100 agents, all created with random Markovian strategies. The “chromosome” was its strategy matrix, based on conditional probabilities of cooperation depending on the result of the last interaction. Each generation played around 50,000 ticks and then, based on their fitness, some died (50 agents) and were replaced by new agents with random strategies, others crossovered (40 agents) generating new agents, and others (10 agents) cloned their chromosome to the next generation. The mutation-rate was set to 0.1. This number, among with the crossover and elitism rates, was setting following values described in the literature for a similar size population [20].

The simulation stopped when dominant strategies appeared and were maintained over time. After that, new simulations were performed, banning the dominant strategies previously found. This process was repeated until the best ten strategies were found.

The last modification was prepared to extend the number of ticks of the LIP (LIP2) environment from 320 up to 50,000 in an attempt to offer a sufficient number of interactions and guarantee that each agent had interacted with each other, in other words, that the dominant strategy that emerges has been in contact with all other strategies at least once.

2.2 Experiment 2

To assess the performance of the new strategies, a series of competitions was set up to compare them against well-known strategies, including COOPERATE, Always Defect, TIT-FOR-TAT, and PAVLOV. Each strategy under evaluation participated in individual matchups and group competitions with one, two, three, and four of the aforementioned strategies. In each round, 45 agents per strategy competed over 50,000 ticks, and the average score was calculated to determine the strategy's overall performance. This process was repeated until each strategy was tested against all combinations of two, three, and four well-known strategies. The outcomes were then compiled to identify the number of victories for each strategy, providing a measure of its quality.

In contrast with the first experiment, in the second experiment we introduce a noise condition, taking advantage of the Markov chain system, allowing numbers from 0 to 1 in the Markov chain matrices, making the agent decision probabilistic. Therefore, if the noise was set to 1, the strategy of a TFT agent was [1 0 1 0], whereas a noise of 0.8 gave a TFT strategy matrix of [0.8 0.2 0.8 0.2].

3 Results

Our model was able to reproduce the dynamics of the PD, and confirm the thesis by Axelrod [3] and Nowak and Sigmund [19] that determines that TFT is the best strategy among the other common strategies in a deterministic environment (PAVLOV, Always Defect, Always Cooperate) in a two-player world (see Fig. 1a). Results of the PAVLOV Strategy [19] were also observed and confirmed, which won against TFT on a probabilistic environment (see Fig. 1b) reproducing the expected results of an IPD model.

Regarding the first experiment and the genetic algorithm model, the performance of the best 10 memory-one strategies were ranked (see Table 3) based on the Markovian model for the three different environments. For HPI and LIP2, the same strategies in the same order were found. The best strategies for all simulations were Spiteful/grim [1 0 0 0] and Always Defect [0 0 0 0], where Spiteful/grim always emerged when the agents had a large number of interactions, contrary to Always Defect, which was selected when the agents had a low number of interactions. These strategies consistently appeared in all replications, and all of the elite agents used it when they were present in the “genetic pool”.

In the case of the HIP and LIP2, when Spiteful/grim was banned from the simulation, the second and third best strategies were Always Defect ([0 0 0 0]) and [0 1 0 0], which appeared together among the genetic pool of the elite agents. In fourth place, after banning the previous ones, [0 0 0 1] appeared as the predominant strategy of the elite agents.

The Pavlov and TFT strategies appeared only in fifth and sixth position, respectively, as dominant strategies. After them, four different strategies ([0 0 1 0], [1 0 1 1], [0 1 0 1] and [0 1 1 0]) dominated together the genetic pool of the elite agents.

On the other hand, for the LIP environment, once the Always Defect strategy was banned, the second best strategy was [0 1 0 0] (third strategy for HIP and LIP2) followed by [0 0 1 0] and [0 1 1 0] (fourth and ninth strategy for HIP and LIP2). The Pavlov and TFT strategies appeared in the ninth and tenth positions.

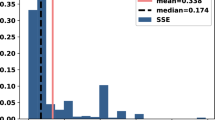

For the second experiment, when comparing the new strategies (the ones in bold in Table 3) with the traditional ones (TFT, Pavlov, Always Defect, and Always Cooperate) in a tournament environment, the interference of noise on the performance of the different strategies could be observed.

In the deterministic environment, two strategies stood out: [1 0 0 0] and [1 0 1 1] (see Fig. 2), which earned the best results against the four traditional strategies, in all cases these strategies started cooperating.

In a noise condition (see Fig. 3), there was a greater variety of winners, depending not only on the strategy but also on the number of opponents. For one and two opponents, the best strategies were [0 0 0 1] and [0 1 0 0] and for three or more, the best was Always Defect (see Fig. 3). Another strategy that stood out in a noise environment was [1 0 0 0], which had a tie with [0 0 0 1] and [0 1 0 0] in a tournament with 3 opponents.

4 Conclusions

This paper presents two computational approaches to understand memory-one strategies in the PDI game, and explore how those strategies behave in different environments, such as with the presence of other strategies, with different probabilities of interactions, and with or without noise interference in player’s decisions, in order to understand and describe possible strategies to could used in human-AI interaction.

Four memory-one strategies were highlighted, which topped the well-known TFT, PAVLOV, Always Defect, and Always Cooperate PD strategies. One of them (grim, [1 0 0 0])) appeared as a dominant strategy in an evolutionary environment (first experiment) and defeated all of the four traditional strategies in tournament conditions (second experiment) without noise in interactions. This strategy was named Spiteful/grim [16] probably because the agents that used it only cooperated with the ones that had previously cooperated with them, otherwise they would use Always Defect, and for this it is an optimal candidate to emerge in contexts full of defectors to encourage cooperation [6]. The second strategy was also highlighted in the evolutionary environments HIP and LIP 2 and in the noise tournament condition, this strategy is very similar to Always Defect with a low probability of remaining silent: [0 0 0 1]. It can be considered as a “when both lose, I change” strategy, which avoids mutual defection. The thirst strategy standouts only in the evolutionary environment and it was also very similar than the previously with a low probability of remaining silent: [0 1 0 0], and it could be considered a “stubborn loser”, because it insists on remaining silent when the opponent defected. These last two strategies cannot defeat Always Defect, but because they are deceitful strategies, they can defeat all others, always defecting after remaining silent.

The fourth strategy was only outstanding in the deterministic environment (second experiment) and it was a mix between TFT and PAVLOV: [1 0 1 1] defecting only after being in the Sucker (S) situation. This characteristic can be interpreted as a TFT that avoids mutual defecting, because after mutual defecting, the agent would remain silent in the next interaction, preventing the defecting loop in which the TFT can fall. At the same time, the strategy can be understood as a gentle or forgiving PAVLOV, because after being in the Punishment situation (P) the agent would still remain silent in the next interaction. The first and the last of these strategies share the four characteristics of the effective strategies in the PD: being nice, forgiving, provocable and clear [2], in contrast, the second and third are deceitful strategies, and share only the provocable characteristic.

Another interesting aspect that stands out in our results is the way in which the probability of interactions affects the importance of a strategy in the first experiment. For instance, in LPI environments, the strategy Always Defect won over all the others, and the best strategy found in HPI (Spiteful/grim) was not among the top ten best strategies in the low probability environment. Also, the TFT and PAVLOV strategies were ranked worst in LPI than HPI, and strategies that were high-ranked in HPI were low-ranked in LPI and vice-versa. This pattern suggests that the dynamics of the PD depends on the probability of interactions, similar to the dynamics of interactions in traffic, in which the low probability of meeting again other drivers makes strategies such as Always Defect dominate drivers decisions, in a similar way as describe by Nakata et al. [18], Tanimoto and Nakamura [25] and Yamauchi et al. [29] who affirm that traffic flow involves social dilemma structures, such as that in the Chicken game or PD game. The above corroborates the importance of social dilemmas in the context of human-AI interactions, in particular with regard to AV programming, for which the incorporation of this type of rules would be useful for models that estimate the probability of a certain behavior by another vehicle.

On the other hand, there was no strategy that overcame the strategy of Always Defect in noisy environments when there were more than two other strategies in the tournament environment. The All Defeat was defeated by [0 0 0 1], [0 1 0 0] and [0 1 1 1] but only when no other strategy was available. The above contrasts sharply with the results of the championship without noise, for which the strategy Spiteful/grim ([1 0 0 0]) were dominant. That points out the weakness of traditional strategies, such as TFT, Pavlov and Always Cooperate, in noisy contexts that can be easily exploited by deceitful strategies. In contrast, the Spiteful/grim showed a reasonable performance even against Always Defect in noisy environments. This points out the strength of Spiteful/grim strategy, which stood out as the best strategy among the different environments and conditions studied in the two experiments presented here.

Here we presented two approaches to evaluate memory-one strategies in the PD game. In this process, a group of strategies stood out, some of them can be considered deceitful strategies, such as [0 0 0 1] and [0 1 0 0], but others, like Spiteful/grim or [1 0 1 1], can be considered as nice strategies showing excellent performance in LPI or noisy environments and that outperform traditional TFT and PAVLOV strategies. The latter strategies are good examples of forgiven and clear strategies that can be use to programming human/AI interactions, they will not inhibit aggressive behaviors against the AI, but will help in building human/IA trusting interactions.

Data availability

The data that support the findings of this study as well as the analysis scripts in Python are openly available in figshare https://figshare.com/articles/dataset/Data_generated_by_the_simulations_file_resultados_csv_and_bash_pythons_scripts_to_process_this_data/24535306

Code availability

The NetLogo code for all models presented are openly avaiable in figshare https://figshare.com/projects/New_memory-one_strategies_of_the_Iterated_Prisoner_s_Dilemma_a_new_framework_to_programmed_human-AI_interaction/184795

References

Amershi S, Weld D, Vorvoreanu M, Fourney A, Nushi B, Collisson P, Horvitz E. Guidelines for human-AI interaction. In: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems. New York: Association for Computing Machinery. 2019. pp. 1–13. https://doi.org/10.1145/3290605.3300233.

Axelrod R. More effective choice in the Prisoner’s Dilemma. J Confl Resolut. 1980;24(3):379–403.

Axelrod R. The evolution of cooperation. New York: Basic Books; 1984.

Axelrod R. Advancing the art of simulation in the social sciences. In: Conte R, Hegselmann R, Terna P, editors. Simulating Social phenomena. Berlin, Heidelberg: Springer; 1997. p. 21–40.

Cimini G, Sanchez A. How evolutionary dynamics affects network reciprocity in Prisoner’s Dilemma. J Artif Soc Soc Simul. 2015;18(2):22. https://doi.org/10.18564/jasss.2726.

Dragicevic AZ. Conditional rehabilitation of cooperation under strategic uncertainty. J Math Biol. 2019;79(5):1973–2003. https://doi.org/10.1007/s00285-019-01417-5.

Golbeck J. Evolving strategies for the Prisoner’s Dilemma. Advances in intelligent systems, fuzzy systems, and evolutionary computation. 2002. pp. 299–306.

Harrald PG, Fogel DB. Evolving continuous behaviors in the Iterated Prisoner’s Dilemma. Biosystems. 1996;37(1):135–45. https://doi.org/10.1016/0303-2647(95)01550-7.

Kelley HH. An atlas of interpersonal situations. Cambridge: Cambridge University Press; 2003.

Kim Y-G. Evolutionarily stable strategies in the repeated prisoner’s dilemma. Math Soc Sci. 1994;28(3):167–97. https://doi.org/10.1016/0165-4896(94)90002-7.

Komorita SS, Parks CD. Social dilemmas. Boulder: Westview Press; 1996.

Kraines D, Kraines V. Pavlov and the prisoner’s dilemma. Theor Decis. 1989;26(1):47–79. https://doi.org/10.1007/BF00134056.

Kraines DP, Kraines VY. Natural selection of memory-one strategies for the Iterated Prisoner’s Dilemma. J Theor Biol. 2000;203(4):335–55. https://doi.org/10.1006/jtbi.2000.1089.

Krishna R, Lee D, Fei-Fei L, Bernstein MS. Socially situated artificial intelligence enables learning from human interaction. Proce Natl Acad Sci USA. 2022;119(39): e2115730119. https://doi.org/10.1073/pnas.2115730119.

Li J, Kendall G. The effect of memory size on the evolutionary stability of strategies in Iterated Prisoner’s Dilemma. IEEE Trans Evol Comput. 2014;18(6):819–26. https://doi.org/10.1109/TEVC.2013.2286492.

Mathieu P, Delahaye J-P. New winning strategies for the iterated prisoner’s dilemma. J Artif Soc Soc Simul. 2017;20(4):12. https://doi.org/10.18564/jasss.3517.

Moore D, Currano R, Shanks M, Sirkin D. Defense against the dark cars: design principles for Griefing of autonomous vehicles. In: Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction. New York: Association for Computing Machinery. 2020. pp. 201–9. https://doi.org/10.1145/3319502.3374796.

Nakata M, Yamauchi A, Tanimoto J, Hagishima A. Dilemma game structure hidden in traffic flow at a bottleneck due to a 2 into 1 lane junction. Phys A. 2010;389(23):5353–61. https://doi.org/10.1016/j.physa.2010.08.005.

Nowak M, Sigmund K. A strategy of win-stay, lose-shift that outperforms tit-for-tat in the Prisoner’s Dilemma game. Nature. 1993;364(6432):56–8. https://doi.org/10.1038/364056a0.

Patil V, Pawar D. The optimal crossover or mutation rates in genetic algorithm: a review. Int J Appl Eng Technol. 2015;5(3):38–41.

Poundstone W. Prisoner’s Dilemma: John von Neumann, game theory, and the puzzle of the bomb. New York: Anchor; 1993.

Press WH, Dyson FJ. Iterated Prisoner’s Dilemma contains strategies that dominate any evolutionary opponent. Proc Natl Acad Sci. 2012;109(26):10409–13. https://doi.org/10.1073/pnas.1206569109.

Smith DH, Zeller F. The death and lives of hitchBOT: the design and implementation of a hitchhiking robot. Leonardo. 2017;50(1):77–8. https://doi.org/10.1162/LEONa01354.

Stonedahl F, Wilensky U. Netlogo simple genetic algorithm model. Evanston: Center for Connected Learning and Computer-Based Modeling, North-Western University. 2008. http://ccl.northwestern.edu/netlogo/models/simplegeneticalgorithm. https://ccl.northwestern.edu/netlogo/faq.html Accessed 28 July 2016.

Tanimoto J, Nakamura K. Social dilemma structure hidden behind traffic flow with route selection. Phys A. 2016;459:92–9. https://doi.org/10.1016/j.physa.2016.04.023.

Wilensky U. NetLogo center for connected learning and computer-based modeling, northwestern university, Evanston. 1999. https://ccl.northwestern.edu/netlogo/faq.html. Accessed 28 July 2016.

Wilensky U Netlogo pd n-person iterated model. Evanston: Center for Connected Learning and Computer-Based Modeling, Northwestern University. 2002. http://ccl.northwestern.edu/netlogo/models/pdn-personiterated. https://ccl.northwestern.edu/netlogo/faq.html. Accessed 28 July 2016.

Wu J, Axelrod R. How to cope with noise in the Iterated Prisoner’s Dilemma. J Confl Resolut. 1995;39(1):183–9. https://doi.org/10.1177/0022002795039001008.

Yamauchi A, Tanimoto J, Hagishima A, Sagara H. Dilemma game structure observed in traffic flow at a 2-to-1 lane junction. Phys Rev E. 2009;79(3):036104. https://doi.org/10.1103/PhysRevE.79.036104.

Funding

KPP was the recipient of a Fellowship for scientific initiation from CNPq.

Author information

Authors and Affiliations

Contributions

All authors contributed equally to this work.

Corresponding author

Ethics declarations

Competing interests

The authors declare the absence of any financial, personal, or professional interests that could potentially bias their work.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

de Paulo, K.P., Estombelo-Montesco, C.A. & Tejada, J. New memory-one strategies of the Iterated Prisoner’s Dilemma: a new framework to programmed human-AI interaction. Discov Psychol 4, 20 (2024). https://doi.org/10.1007/s44202-024-00133-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s44202-024-00133-6