Abstract

In this manuscript, we implement a spectral collocation method to approximate the solution of the reaction–diffusion equation subject to initial and boundary conditions. We approximate the solution by a two-dimensional interpolating polynomial based on the Legendre–Gauss–Lobatto collocation points. We prove that the approximate solutions converge to the exact solution as the number of collocation points increases. We demonstrate the capability and efficiency of the method with four numerical examples and compare the results with those of other available methods.


1 Introduction

Reaction–diffusion equations (RDEs) are a special class of partial differential equations (PDEs) that have recently attracted the attention of many researchers [1, 20, 28, 32, 33]. RDEs are mathematical models of physical and chemical phenomena. Most commonly, they describe how the concentration of one or more chemical substances changes in space and time under two processes: chemical reactions, in which the substances are converted into each other, and diffusion, which causes the substances to spread out in space. RDEs also arise in other sciences, such as biology [14], geology [15], ecology [20] and physics [23].

The RDEs considered in this paper have the general form

\[ u_{t}(t,x)=K\,u_{xx}(t,x)+g\big(t,x,u(t,x)\big),\qquad (t,x)\in [0,T]\times [0,L], \tag{1} \]

subject to the initial and boundary conditions

\[ u(t,0)=\varphi _{1}(t),\quad u(t,L)=\varphi _{2}(t),\quad t\in [0,T],\qquad u(0,x)=\varphi _{3}(x),\quad x\in [0,L], \tag{2} \]

where K is the diffusion coefficient, g(t, x, u) is the reaction term, and \(\varphi _{1}:[0,T]\rightarrow {{\mathbb {R}}} \), \(\varphi _{2}:[0,T]\rightarrow {{\mathbb {R}}} \) and \(\varphi _{3}:[0,L]\rightarrow {{\mathbb {R}}} \) are given sufficiently smooth functions. The aim of this manuscript is to present an effective numerical method for solving the RDE (1) with conditions (2) and to analyze its convergence.

Several methods have been proposed for this class of PDEs, such as traveling wave methods [19], finite elements [6], fixed-node finite-difference schemes [7] and spectral methods [4]. Reitz [22] studied several numerical methods for RDEs; his methods have good numerical stability and can be used in multidimensional cases. Sharifi and Rashidinia [24] applied an explicit finite difference scheme combined with an extended cubic B-spline collocation method to RDEs. Wang et al. [27] used the compact boundary value method (CBVM), which combines a compact fourth-order differential method (CFODM) with a P-order boundary value method (POBVM). This method is locally stable, has a unique solution, and is fourth-order accurate in space and of order P in time. Wu et al. [29] applied the variational iteration method (VIM), which recasts the RDE as an integral equation by means of Lagrange multipliers and evaluates it with a discrete numerical integration formula; the VIM was first proposed by He [11].

Biazar and Mehrlatifan [3] solved RDEs with a compact finite difference method. Macías-Díaz and Puri [8] applied an explicit positivity-preserving finite-difference method. Lee et al. [17] obtained exact solutions of a derivative reaction–diffusion system and, via the Hirota bilinearization method, exact solutions of the derivative nonlinear Schrödinger (DNLS) equation. Gaeta and Mancinelli [9] analyzed the asymptotic scaling properties of a class of anomalous RDEs; their numerical results showed well-defined scaling properties for large t. Xiao et al. [30] presented a lifted local Galerkin method for RDEs on implicit surfaces. Yi and Chen [31] introduced a method based on iterating traveling wave maps. Toubaei et al. [26] considered one of the most widely used applications of RDEs in chemistry and biology, a model of spatial pattern formation in the embryo, and solved it by collocation and finite difference methods. Koto [16] applied implicit-explicit (IMEX) Runge–Kutta methods to RDEs. Macías-Díaz [7] studied a logarithmic numerical scheme, analyzed the monotonicity, boundedness and positivity of its approximations, and showed for the first time that such logarithmic schemes are stable and convergent. Hashemi and Nucci [10] used the method of nonclassical symmetries to solve a class of reaction–diffusion equations. An et al. [2] proposed local discontinuous Galerkin (LDG) methods that approximate both the solution and its gradient, whereas many other methods approximate only the solution. Moreover, their method computes the solution element by element instead of solving the whole global system, which can reduce the computational cost.

Despite the numerical methods mentioned above, a convergent numerical method with a simple structure and high accuracy for solving RDEs is still needed. Hence, we extend a spectral collocation method to approximate the solution of RDEs. Spectral methods are among the most powerful methods for solving ordinary and partial differential equations [5, 25]. We use a two-dimensional Lagrange interpolating polynomial to approximate the solution of the RDE. Taking the Legendre–Gauss–Lobatto (LGL) nodes as interpolation (collocation) points, we convert the RDE together with its initial and boundary conditions into a system of algebraic equations, whose solution gives the coefficients of the interpolating polynomial. We prove that the approximate solutions converge to the exact solution as the number of collocation points tends to infinity. Spectral collocation methods are highly accurate and, for smooth solutions, converge exponentially; many researchers have used them to solve continuous-time problems involving ordinary and partial differential equations [12, 13, 18].

The paper is structured as follows. In Sect. 2, we implement the spectral collocation method for approximating the solution of the RDE. In Sect. 3, we study the convergence of the approximations to the exact solution of the RDE. In Sect. 4, four numerical examples are given to show the efficiency and accuracy of the method in comparison with other methods. Finally, conclusions and suggestions are presented in Sect. 5.

2 Approximating the Solution by Spectral Collocation Method

We approximate the solution of system (1)–(2) by

\[ u(t,x)\simeq u^{N}(t,x)=\sum _{m=0}^{N}\sum _{n=0}^{N}{\tilde{u}}_{mn}\,L_{m}(t)\,L_{n}(x), \tag{3} \]

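where \(L_{r}(t)\) and \(L_{r}(x)\) are the Lagrange polynomials, defined as

\[ L_{r}(t)=\prod _{\substack{i=0\\ i\ne r}}^{N}\frac{t-t_{i}}{t_{r}-t_{i}},\qquad L_{r}(x)=\prod _{\substack{i=0\\ i\ne r}}^{N}\frac{x-x_{i}}{x_{r}-x_{i}},\qquad r=0,1,\ldots ,N, \]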

where \( \{x_{n}\}_{n=0}^{N} \) and \( \{t_{m}\}_{m=0}^{N} \) are the shifted LGL points [25] in the intervals [0, L] and [0, T], respectively, defined by

\[ x_{n}=\frac{L}{2}\big(x^{1}_{n}+1\big),\qquad t_{m}=\frac{T}{2}\big(t^{1}_{m}+1\big),\qquad m,n=0,1,\ldots ,N, \]

where \( \{x^{1}_{n}\}_{n=0}^{N} \) and \( \{t^{1}_{m}\}_{m=0}^{N} \) are the roots of the polynomial

\[ (1-z^{2})\,W'_{N}(z),\qquad z\in [-1,1], \]

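where \( W_{N}(.) \) is the Legendre polynomial of degree N [5], defined by the recurrence

\[ W_{0}(z)=1,\qquad W_{1}(z)=z,\qquad W_{k+1}(z)=\frac{(2k+1)\,z\,W_{k}(z)-k\,W_{k-1}(z)}{k+1},\quad k=1,2,\ldots \]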
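As an illustration of the node construction above, the following Python sketch (not the authors' MATLAB code; the function names `legendre`, `lgl_nodes` and `shifted_lgl` are hypothetical) evaluates \(W_N\) by the three-term recurrence and finds the LGL points by Newton's method applied to \(zW_N(z)-W_{N-1}(z)\). This function has the same roots as \((1-z^{2})W'_N(z)\), because \((1-z^{2})W'_N(z)=N\big(W_{N-1}(z)-zW_N(z)\big)\), and its derivative simplifies to \((N+1)W_N(z)\). The Chebyshev–Gauss–Lobatto points \(\cos (\pi k/N)\) are used as initial guesses, which is a common choice rather than something prescribed by the method.

```python
import math


def legendre(N, z):
    """Return (W_N(z), W_{N-1}(z)) from W_0 = 1, W_1 = z and
    W_{k+1}(z) = ((2k + 1) z W_k(z) - k W_{k-1}(z)) / (k + 1)."""
    if N == 0:
        return 1.0, 0.0  # W_{-1} is taken as 0 by convention
    w_prev, w = 1.0, z
    for k in range(1, N):
        w_prev, w = w, ((2 * k + 1) * z * w - k * w_prev) / (k + 1)
    return w, w_prev


def lgl_nodes(N, tol=1e-14, max_iter=100):
    """The N + 1 roots of (1 - z^2) W_N'(z) on [-1, 1], in ascending order (N >= 1)."""
    z = [math.cos(math.pi * k / N) for k in range(N + 1)]  # initial guesses
    for _ in range(max_iter):
        z_new = []
        for zk in z:
            wN, wNm1 = legendre(N, zk)
            # Newton step for f(z) = z W_N(z) - W_{N-1}(z), with f'(z) = (N + 1) W_N(z).
            z_new.append(zk - (zk * wN - wNm1) / ((N + 1) * wN))
        converged = max(abs(a - b) for a, b in zip(z, z_new)) < tol
        z = z_new
        if converged:
            break
    return sorted(z)


def shifted_lgl(N, length):
    """Shifted LGL points on [0, length]: s_k = (length / 2) (z_k + 1)."""
    return [length / 2 * (zk + 1) for zk in lgl_nodes(N)]


print(lgl_nodes(4))         # -1, -sqrt(3/7), 0, sqrt(3/7), 1 up to rounding
print(shifted_lgl(4, 1.0))  # the same points mapped to [0, 1]
```

For N = 4 the interior LGL points on [-1, 1] are \(\pm \sqrt{3/7}\) and 0, which gives a quick sanity check of the iteration.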

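According to approximation (3), we have

\[ u^{N}_{t}(t,x)=\sum _{m=0}^{N}\sum _{n=0}^{N}{\tilde{u}}_{mn}\,L'_{m}(t)\,L_{n}(x),\qquad u^{N}_{xx}(t,x)=\sum _{m=0}^{N}\sum _{n=0}^{N}{\tilde{u}}_{mn}\,L_{m}(t)\,L''_{n}(x). \]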

The Lagrange polynomials satisfy

\[ L_{r}(t_{i})=L_{r}(x_{i})=\delta _{ri}=\begin{cases}1, & r=i,\\ 0, & r\ne i.\end{cases} \]

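Hence, at the collocation points \((t_{m},x_{n})\), \(m,n=0,1,\ldots ,N\), we get

\[ u^{N}(t_{m},x_{n})={\tilde{u}}_{mn}, \tag{9} \]

\[ u^{N}_{t}(t_{m},x_{n})=\sum _{i=0}^{N}D_{mi}\,{\tilde{u}}_{in}, \tag{10} \]

\[ u^{N}_{xx}(t_{m},x_{n})=\sum _{j=0}^{N}D^{(2)}_{nj}\,{\tilde{u}}_{mj}, \tag{11} \]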

where \( D_{mi} \) and \( D^{(2)}_{nj} \) are defined as follows

\[ D_{mi}=L'_{i}(t_{m}),\qquad m,i=0,1,\ldots ,N, \]

and

\[ D^{(2)}_{nj}=L''_{j}(x_{n}),\qquad n,j=0,1,\ldots ,N. \]
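The entries \(D_{mi}\) and \(D^{(2)}_{nj}\) can be computed without differentiating the products defining \(L_r\). The Python sketch below (our illustration; `diff_matrices` is a hypothetical name) uses the barycentric formula for the first-derivative matrix and obtains the second-derivative matrix as its square. With nodes \(t_m\) it returns D[m][i] \(=L'_i(t_m)=D_{mi}\); with nodes \(x_n\) its second output is D2[n][j] \(=L''_j(x_n)=D^{(2)}_{nj}\).

```python
import math


def diff_matrices(nodes):
    """Return (D, D2) with D[i][j] = L_j'(s_i) and D2[i][j] = L_j''(s_i).

    Off-diagonal entries use the barycentric formula
    D[i][j] = (w_j / w_i) / (s_i - s_j), w_j = 1 / prod_{k != j} (s_j - s_k);
    diagonal entries make each row sum to zero (derivative of a constant).
    D2 = D D is exact because each L_j' has degree N - 1 and is reproduced
    by degree-N interpolation on the same nodes."""
    n = len(nodes)
    w = [1.0 / math.prod(nodes[j] - nodes[k] for k in range(n) if k != j) for j in range(n)]
    D = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                D[i][j] = (w[j] / w[i]) / (nodes[i] - nodes[j])
        D[i][i] = -sum(D[i][j] for j in range(n) if j != i)
    D2 = [[sum(D[i][k] * D[k][j] for k in range(n)) for j in range(n)] for i in range(n)]
    return D, D2


# Shifted LGL points for N = 4 on [0, 1]: 0, (1 - sqrt(3/7))/2, 1/2, (1 + sqrt(3/7))/2, 1.
s = math.sqrt(3 / 7)
x = [0.0, (1 - s) / 2, 0.5, (1 + s) / 2, 1.0]
D, D2 = diff_matrices(x)
p = [xi ** 3 for xi in x]  # a cubic is reproduced exactly by degree-4 interpolation
dp = [sum(D[i][j] * p[j] for j in range(5)) for i in range(5)]    # should equal 3 x^2
d2p = [sum(D2[i][j] * p[j] for j in range(5)) for i in range(5)]  # should equal 6 x
print(max(abs(a - 3 * xi ** 2) for a, xi in zip(dp, x)),
      max(abs(a - 6 * xi) for a, xi in zip(d2p, x)))
```

Squaring D is one design choice among several; differentiating the barycentric form twice gives the same matrix in exact arithmetic.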

By substituting relations (9), (10) and (11) into (1), we get

where \( {\tilde{u}}_{mn}\) for \( m,n= 0,1,\ldots ,N\) are the unknowns. By solving the algebraic system (14), we obtain the pointwise approximate solutions \( {\tilde{u}}_{mn}\ (m,n=0,1,\ldots ,N) \) and the continuous approximate solution \( u^N(.,.) \) defined by (3).
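The whole procedure can be sketched in Python. This is a minimal sketch under stated assumptions, not the authors' MATLAB implementation. System (14) is not reproduced in this text, so the equation set used below is our assumption: the collocated PDE at \(m\ge 1\) and interior \(n\), the boundary values at \(n=0,N\), and the initial values at \(m=0\), which gives one equation per unknown \({\tilde{u}}_{mn}\). We also assume the reaction term is linear in u, \(g(t,x,u)=c\,u+f(t,x)\), so a direct linear solve replaces MATLAB's fsolve. All function names are hypothetical.

```python
import math


def lgl_nodes(N, tol=1e-14, max_iter=100):
    """LGL points on [-1, 1]: Newton's method for z W_N(z) - W_{N-1}(z)."""
    z = [math.cos(math.pi * k / N) for k in range(N + 1)]
    for _ in range(max_iter):
        z_new = []
        for zk in z:
            w_prev, w = 1.0, zk  # W_0, W_1; the loop advances to W_{N-1}, W_N
            for k in range(1, N):
                w_prev, w = w, ((2 * k + 1) * zk * w - k * w_prev) / (k + 1)
            z_new.append(zk - (zk * w - w_prev) / ((N + 1) * w))
        converged = max(abs(a - b) for a, b in zip(z, z_new)) < tol
        z = z_new
        if converged:
            break
    return sorted(z)


def diff_matrices(nodes):
    """D[i][j] = L_j'(s_i) (barycentric formula) and D2 = D D, i.e. D2[i][j] = L_j''(s_i)."""
    n = len(nodes)
    w = [1.0 / math.prod(nodes[j] - nodes[k] for k in range(n) if k != j) for j in range(n)]
    D = [[(w[j] / w[i]) / (nodes[i] - nodes[j]) if i != j else 0.0 for j in range(n)] for i in range(n)]
    for i in range(n):
        D[i][i] = -sum(D[i])  # rows of D annihilate constants
    D2 = [[sum(D[i][k] * D[k][j] for k in range(n)) for j in range(n)] for i in range(n)]
    return D, D2


def solve_dense(A, b):
    """Gaussian elimination with partial pivoting on a dense list-of-lists system."""
    n = len(b)
    M = [A[i][:] + [b[i]] for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            if f:
                for k in range(c, n + 1):
                    M[r][k] -= f * M[c][k]
    u = [0.0] * n
    for r in range(n - 1, -1, -1):
        u[r] = (M[r][n] - sum(M[r][k] * u[k] for k in range(r + 1, n))) / M[r][r]
    return u


def collocate(N, T, L, K, c, f, phi1, phi2, phi3):
    """Collocation for u_t = K u_xx + c*u + f(t, x) (assumed linear reaction term).

    Assumed equation set (one row per unknown u_mn):
      n = 0 or n = N    -> boundary values phi1(t_m), phi2(t_m)
      m = 0, 0 < n < N  -> initial values phi3(x_n)
      m >= 1, 0 < n < N -> sum_i D_mi u_in - K sum_j D2_nj u_mj - c u_mn = f(t_m, x_n)
    """
    z = lgl_nodes(N)
    t = [T / 2 * (zk + 1) for zk in z]  # shifted LGL points in [0, T]
    x = [L / 2 * (zk + 1) for zk in z]  # shifted LGL points in [0, L]
    Dt, _ = diff_matrices(t)
    _, Dxx = diff_matrices(x)
    idx = lambda m, n: m * (N + 1) + n  # flatten (m, n) into one index
    size = (N + 1) ** 2
    A = [[0.0] * size for _ in range(size)]
    b = [0.0] * size
    for m in range(N + 1):
        for n in range(N + 1):
            r = idx(m, n)
            if n in (0, N):
                A[r][r], b[r] = 1.0, (phi1(t[m]) if n == 0 else phi2(t[m]))
            elif m == 0:
                A[r][r], b[r] = 1.0, phi3(x[n])
            else:
                for i in range(N + 1):
                    A[r][idx(i, n)] += Dt[m][i]
                for j in range(N + 1):
                    A[r][idx(m, j)] -= K * Dxx[n][j]
                A[r][r] -= c
                b[r] = f(t[m], x[n])
    sol = solve_dense(A, b)
    return t, x, [[sol[idx(m, n)] for n in range(N + 1)] for m in range(N + 1)]


# Example 4.1: g = u + e^t sin x, K = 1 on [0, 1]^2; conditions taken from the exact solution.
t, x, U = collocate(8, 1.0, 1.0, 1.0, 1.0,
                    lambda t, x: math.exp(t) * math.sin(x),
                    lambda t: 0.0, lambda t: math.exp(t) * math.sin(1.0), math.sin)
err = max(abs(U[m][n] - math.exp(t[m]) * math.sin(x[n])) for m in range(9) for n in range(9))
print(f"max nodal error for N = 8: {err:.2e}")
```

For a nonlinear g, the same rows define a nonlinear system that would be passed to a Newton-type solver, which is the role fsolve plays in Sect. 4.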

3 Convergence Analysis

In this section, we analyze the convergence of the proposed method. Let \( \Lambda =[0,T]\times [0,L] \) and let \( C^{k}(\Lambda ) \) denote the set of all k times continuously differentiable functions on \( \Lambda \). We begin with the following definition.

Definition 3.1

A continuous function \(F:{{\mathbb {R}}}^{+}\rightarrow {{\mathbb {R}}}^{+}\) with the following properties is called a modulus of continuity [21]:

1. F is increasing;

2. \(F(y)\rightarrow 0\) as \(y\rightarrow 0\);

3. \( F(y_{1}+y_{2})\le F(y_{1})+F(y_{2}) \) for any \( y_{1},y_{2}\in {{\mathbb {R}}}^{+} \);

4. there exists \( a>0 \) such that \( y\le aF(y) \) for all \( 0<y\le 2 \).
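Definition 3.1 is easy to check for the Hölder-type function \(F(y)=y^{\alpha }\) with \(0<\alpha \le 1\); this is our own illustration and need not coincide with the special case the authors single out. A short verification of properties 1–4:

\[
\begin{aligned}
&1.\ F'(y)=\alpha y^{\alpha -1}>0 \text{ for } y>0, \text{ so } F \text{ is increasing};\\
&2.\ \lim _{y\rightarrow 0^{+}}y^{\alpha }=0;\\
&3.\ F \text{ is concave with } F(0)=0, \text{ hence subadditive: } (y_{1}+y_{2})^{\alpha }\le y_{1}^{\alpha }+y_{2}^{\alpha } \text{ for } y_{1},y_{2}\ge 0;\\
&4.\ y=y^{1-\alpha }\,y^{\alpha }\le 2^{1-\alpha }\,y^{\alpha } \text{ for } 0<y\le 2, \text{ so } a=2^{1-\alpha } \text{ works}.
\end{aligned}
\]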

A special case of a modulus of continuity is

Here \(O ^{2} \) denotes the unit circle in \( {{\mathbb {R}}}^{2} \). A continuous function f on \( \Lambda \) admits F(.) as a modulus of continuity if the following quantity is finite

where

We use \( C^{1}_{F}(O^{2}) \) to denote the set of continuously differentiable functions on the unit circle \( O ^{2}\), equipped with the following norm

Now we define

Accordingly, if for some maps \( \Gamma _{1}, \ldots , \Gamma _{n} \)

then \( f(.,.)\in C^{1}_{F}(\Lambda )\) if and only if \( f\circ \Gamma _{i}(.,.)\in C_{F}(O ^{2}) \) for each \( i=1,\ldots ,n \). Furthermore, \( C^{1}_{F}(\Lambda ) \) is a Banach space with the norm

We define \( P(N,N,\Lambda ) \), the space of all polynomials on \( \Lambda \) of degree at most N in t and at most N in x, as

Lemma 3.1

For any \( f(.,.)\in C_{F}^{1}(\Lambda ) \), there exists a polynomial \( \rho (.,.)\in P(N,N,\Lambda ) \) such that

where \(\alpha _{1}=\Vert f(.,.)\Vert _{1,F} \) and the constant \( \alpha _{0} \) is independent of N.

Proof

The result follows from Theorem 2.1 in Ragozin [21]. \(\square \)

To address the existence of a solution, we relax system (14) into the following system

where N is sufficiently large and F(.) is a function satisfying Definition 3.1. Since \( \lim _{N\rightarrow \infty }\frac{\sqrt{N}}{2N-1}F\Big(\frac{1}{2N-1}\Big)=0\), any solution \( {\tilde{u}}_{mn}\ (m,n=0,1,\ldots ,N) \) of system (21) satisfies system (14) in the limit \( N\rightarrow \infty \). We now define

In the following, we show that system (21) is feasible.
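The limit used above holds for every modulus of continuity: since F is increasing and \(1/(2N-1)\le 1\), the quantity \(\frac{\sqrt{N}}{2N-1}F\big(\frac{1}{2N-1}\big)\) is at most \(F(1)\frac{\sqrt{N}}{2N-1}\), which behaves like \(F(1)/(2\sqrt{N})\). The short Python sketch below tabulates it for two example moduli, \(F(y)=y\) and \(F(y)=\sqrt{y}\); these choices and the name `relaxation_tolerance` are ours, not the paper's.

```python
import math


def relaxation_tolerance(N, F):
    """sqrt(N) / (2N - 1) * F(1 / (2N - 1)) for a modulus of continuity F."""
    h = 1.0 / (2 * N - 1)
    return math.sqrt(N) * h * F(h)


moduli = {"F(y) = y": lambda y: y, "F(y) = sqrt(y)": math.sqrt}
for name, F in moduli.items():
    values = [relaxation_tolerance(N, F) for N in (10, 100, 1000, 10000)]
    print(name, ["%.1e" % v for v in values])
```

A faster-vanishing F makes the relaxed system (21) closer to (14) for a given N, but the limit itself does not depend on that choice.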

Theorem 3.1

Suppose \( u(.,.)\in C_{F}^{1}(\Lambda ) \) is a solution of system (1)–(2). Then there exists \( {\bar{N}}>0 \) such that, for any \( N\ge {\bar{N}} \), the relaxed system (21) has a solution

that satisfies

where the constant \( \delta >0 \) is independent of N.

Proof

Let \( \rho (.,.)\in P(N-1,N,\Lambda ) \) be the best approximation of \( u_{t}(.,.)\). By Lemma 3.1,

where the positive constant \(\kappa \) is independent of N. Define

and

We now prove that \( {\tilde{u}}_{N} =({\tilde{u}}_{mn};\ m,n=0,1,\ldots ,N)\) satisfies system (21). By (24), (25) and (26), for \( (t,x)\in \Lambda \) we have

By definition (25), for each \( x\in [0,L]\) the function \( {\tilde{u}}(.,x) \) is a polynomial of degree at most N. Hence,

Therefore by (27) we have

where M is the Lipschitz constant of the function \(\phi (.,.,.,.)\) with respect to its third argument. Also, for the boundary conditions we have, for \( m=0,\ldots , N \),

where \(u_{mn}=u(t_m,x_n)\) for all \(m,n=0,1,\ldots ,N.\) Moreover, for \( n=0,1,\ldots ,N \) we have

Now we can choose \( {\bar{N}} \) such that

for all \( N\geqslant {\bar{N}}\), which completes the proof.

We now state the convergence theorem.

Theorem 3.2

Suppose \( \{({\tilde{u}}_{mn};\ m,n=0,1,\ldots ,N)\}_{N={\bar{N}}}^{\infty } \) is the sequence of solutions of system (21) and \( \{u^{N}(.,.)\}_{N={\bar{N}}}^{\infty } \) is the sequence of polynomials defined in (3). Assume that, for any \( x\in [0,L] \), the sequence \( \{(u^{N}(0,x),u^{N}_{t}(.,.))\}_{N={\bar{N}}}^{\infty } \) has a subsequence \(\{(u^{N_{i}}(0,x),u_{t}^{N_{i}}(.,.))\}_{i=0}^{\infty }\) that converges uniformly to \( (\psi ^{\infty }(x),p(.,.)) \), where \( p(.,.)\in C^{2}(\Lambda ) \), \( \psi ^{\infty }(.)\in C^{2}([0,L]) \) and \( \lim _{i\rightarrow \infty } N_{i}=\infty \). Then

Proof

Define

We show that \( {\tilde{u}}(.,.) \) satisfies system (1)–(2). First, suppose that \( {\tilde{u}}(.,.) \) does not satisfy (1). Then there exists \((\tau ,y)\in \Lambda \) such that

It is known that, as \( N\rightarrow \infty \), the shifted LGL points \(\{t_m\}_{m=0}^N\) and \(\{x_n\}_{n=0}^N\) become dense in [0, T] and [0, L], respectively. So there exist subsequences \( \{t_{m_{N_{i}}}\}_{i=1}^{\infty } \) and \( \{x_{n_{N_{i}}}\}_{i=1}^{\infty } \) such that \( 0<m_{N_{i}}<N_{i}, 0<n_{N_{i}}<N_{i}\), \( \lim _{i\rightarrow \infty }t_{m_{N_{i}}}=\tau , \; \lim _{i\rightarrow \infty }x_{n_{N_{i}}}=y \) and \(\lim _{i\rightarrow \infty }N_i=\infty \). Hence, by (35), we get

On the other hand, since \(\lim _{i\rightarrow \infty }\frac{\sqrt{N_{i}}}{2N_{i}-1}F\Big(\frac{1}{2N_{i}-1}\Big)=0\), by (21) we get

which contradicts relation (36). Hence \( {\tilde{u}}(.,.) \) satisfies Eq. (1). In a similar way, one can show that \( {\tilde{u}}(.,.) \) satisfies the initial and boundary conditions (2), which completes the proof. \(\square \)
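The density of the shifted LGL points used in the proof can be illustrated numerically: if the largest gap between consecutive points in [0, a] tends to zero, every point of [0, a] is a limit of nodes. The Python sketch below (our illustration; `lgl_nodes` and `max_gap` are hypothetical names, and the nodes are computed by Newton's method on \(zW_N(z)-W_{N-1}(z)\), whose roots are the LGL points) prints the largest gap for increasing N; it shrinks roughly like 1/N.

```python
import math


def lgl_nodes(N, tol=1e-14, max_iter=100):
    """LGL points on [-1, 1]: Newton's method for z W_N(z) - W_{N-1}(z), N >= 1."""
    z = [math.cos(math.pi * k / N) for k in range(N + 1)]
    for _ in range(max_iter):
        z_new = []
        for zk in z:
            w_prev, w = 1.0, zk  # W_0, W_1; the loop advances to W_{N-1}, W_N
            for k in range(1, N):
                w_prev, w = w, ((2 * k + 1) * zk * w - k * w_prev) / (k + 1)
            z_new.append(zk - (zk * w - w_prev) / ((N + 1) * w))
        converged = max(abs(a - b) for a, b in zip(z, z_new)) < tol
        z = z_new
        if converged:
            break
    return sorted(z)


def max_gap(N, length=1.0):
    """Largest distance between consecutive shifted LGL points on [0, length]."""
    s = [length / 2 * (zk + 1) for zk in lgl_nodes(N)]
    return max(b - a for a, b in zip(s, s[1:]))


for N in (8, 16, 32, 64, 128):
    print(N, round(max_gap(N), 4), round(N * max_gap(N), 3))
```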

4 Examples

In this section, we present four examples to illustrate the efficiency of the method in solving RDEs. The first example was constructed by the authors to test the method; the other three compare the proposed method with existing methods. We solve the corresponding system (14) with the fsolve command in MATLAB. The absolute error of the approximate solution \( u^{N}(.,.) \) is defined by

We also calculate the \(L_{2}\) and \(L_{\infty }\) errors of the approximations by the following relations

Example 4.1

Consider the RDE (1)–(2) with \( g(t,x,u)=u+e^{t}\sin x \), \( K=1 \) and the following conditions

The exact solution is \( u(t,x)=e^{t}\sin x,\ (t,x)\in [0,1]^2\). We solve this problem for N = 10 using the proposed method. Figure 1 shows the approximate solution and its absolute error, and Fig. 2 shows that the \(L_{2}\) and \(L_{\infty }\) errors decrease as N increases, which indicates that the method is accurate and behaves stably.
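Computing the errors shown in Fig. 2 requires evaluating \(u^{N}(t,x)\) from (3) away from the nodes. The explicit formulas for \(E^{N}\), \(E_{2}^{N}\) and \(E_{\infty }^{N}\) are not reproduced in this text, so the Python sketch below assumes discrete versions on a uniform \((M+1)\times (M+1)\) grid: \(E_{\infty }^{N}=\max |u^{N}-u|\) and \(E_{2}^{N}=\sqrt{\Delta t\,\Delta x\sum |u^{N}-u|^{2}}\). For brevity it uses the nodal values of the exact solution \(e^{t}\sin x\) of Example 4.1 as stand-ins for the computed \({\tilde{u}}_{mn}\) (so it measures interpolation error only), with N = 4. The names `lagrange`, `u_N` and `errors` are hypothetical.

```python
import math


def lagrange(nodes, r, s):
    """Lagrange basis polynomial L_r on `nodes`, evaluated at s."""
    val = 1.0
    for i, si in enumerate(nodes):
        if i != r:
            val *= (s - si) / (nodes[r] - si)
    return val


def u_N(U, t_nodes, x_nodes, t, x):
    """u^N(t, x) = sum_m sum_n U[m][n] L_m(t) L_n(x), cf. approximation (3)."""
    Lt = [lagrange(t_nodes, m, t) for m in range(len(t_nodes))]
    Lx = [lagrange(x_nodes, n, x) for n in range(len(x_nodes))]
    return sum(U[m][n] * Lt[m] * Lx[n] for m in range(len(t_nodes)) for n in range(len(x_nodes)))


def errors(U, t_nodes, x_nodes, exact, T=1.0, L=1.0, M=40):
    """Assumed discrete (E_2, E_inf) on a uniform (M+1) x (M+1) grid of [0, T] x [0, L]."""
    dt, dx = T / M, L / M
    e = [abs(u_N(U, t_nodes, x_nodes, i * dt, j * dx) - exact(i * dt, j * dx))
         for i in range(M + 1) for j in range(M + 1)]
    return math.sqrt(dt * dx * sum(v * v for v in e)), max(e)


# Shifted LGL points for N = 4 on [0, 1].
s = math.sqrt(3 / 7)
nodes = [0.0, (1 - s) / 2, 0.5, (1 + s) / 2, 1.0]


def exact(t, x):
    return math.exp(t) * math.sin(x)  # exact solution of Example 4.1


U = [[exact(tm, xn) for xn in nodes] for tm in nodes]  # stand-in for the computed u_mn
E2, Einf = errors(U, nodes, nodes, exact)
print(f"N = 4: E_2 = {E2:.2e}, E_inf = {Einf:.2e}")
```

Replacing U by the solution of the collocation system gives the errors of the method itself rather than of interpolation alone.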

Example 4.2

Consider the RDE (1)–(2) with \( g(t,x,u)=u-u^{2}+3e^{t-x}\cos (t+x)+e^{2(t-x)}\sin ^{2}(t+x) \), \( K=1 \) and the following conditions

The exact solution of this example is \( u(t,x)=e^{t-x}\sin (t+x),\ (t,x)\in [0,1]^2\). Figure 3 shows the approximate solution and its absolute error for N = 10, and Fig. 4 presents the \( E_{2}^{N}\) and \( E_{\infty }^{N}\) errors, which decrease as N increases, indicating that the method is stable. We also compare the proposed method with the IMEX Runge–Kutta method [16] in Table 1. The results show that the \( E_{2}^{N} \) error of the proposed method is smaller than that of the method in [16].
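A finite-difference check is a quick way to confirm that the exact solution of Example 4.2 satisfies (1) with K = 1. By direct computation, \(u_{t}-u_{xx}=u+3e^{t-x}\cos (t+x)\), so on the exact solution the terms \(-u^{2}\) and \(e^{2(t-x)}\sin ^{2}(t+x)=u^{2}\) in g must cancel. The Python sketch below (our illustration; the grid, the step h and the function names are arbitrary choices) evaluates the residual \(u_{t}-Ku_{xx}-g(t,x,u)\) at interior points for both signs in the exponent of the last term of g.

```python
import math


def u(t, x):
    """Exact solution of Example 4.2."""
    return math.exp(t - x) * math.sin(t + x)


def g(t, x, v, sign=-1):
    """Reaction term; the last term is e^{2(t + sign*x)} sin^2(t + x)."""
    return (v - v ** 2 + 3 * math.exp(t - x) * math.cos(t + x)
            + math.exp(2 * (t + sign * x)) * math.sin(t + x) ** 2)


def max_residual(sign, K=1.0, h=1e-4):
    """max |u_t - K u_xx - g(t, x, u)| over a 9 x 9 grid, using central differences."""
    worst = 0.0
    for i in range(1, 10):
        for j in range(1, 10):
            t, x = i / 10, j / 10
            ut = (u(t + h, x) - u(t - h, x)) / (2 * h)
            uxx = (u(t, x + h) - 2 * u(t, x) + u(t, x - h)) / h ** 2
            worst = max(worst, abs(ut - K * uxx - g(t, x, u(t, x), sign)))
    return worst


print("e^{2(t-x)}:", max_residual(-1))  # at the level of the finite-difference error
print("e^{2(t+x)}:", max_residual(+1))  # O(1): the stated u would not be a solution
```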

Example 4.3

Consider the RDE (1)–(2) with \( g(t,x,u)=6u(1-u), \;K=1\) and the following conditions

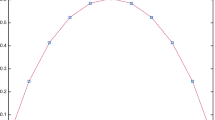

For this example, the exact solution is \( u(t,x)=\frac{1}{(1+e^{x-5t})^{2}},\ (t,x)\in [0,1]^2 \). We solve this problem for N = 20 using our approach. Figure 5 shows the approximate solution and its absolute error, and Fig. 6 shows that the \( E_{2}^{N}\) and \( E_{\infty }^{N}\) errors decrease as N increases, confirming the accuracy of the method. A comparison with the VIM [29] is given in Table 2.
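The exact solution of Example 4.3 can be checked by hand against (1) with K = 1 and \(g=6u(1-u)\). Writing \(E=e^{x-5t}\), so that \(u=(1+E)^{-2}\), \(E_{t}=-5E\) and \(E_{x}=E\), a direct computation gives

\[
\begin{aligned}
u_{t}&=10E(1+E)^{-3},\qquad u_{x}=-2E(1+E)^{-3},\qquad u_{xx}=(4E^{2}-2E)(1+E)^{-4},\\
6u(1-u)&=6(1+E)^{-4}\big((1+E)^{2}-1\big)=(6E^{2}+12E)(1+E)^{-4},\\
u_{xx}+6u(1-u)&=(10E^{2}+10E)(1+E)^{-4}=10E(1+E)^{-3}=u_{t}.
\end{aligned}
\]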

Example 4.4

Consider the RDE (1)–(2) with \( g(t,x,u)=-0.5u \), \(K=0.1\) and the following conditions

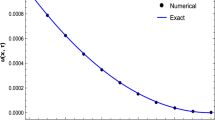

The exact solution is \( u(t,x)=e^{(-0.5-0.1\pi ^{2})t}\sin (\pi x),\ (t,x)\in [0,1]^{2} \). Figure 7 shows the results obtained for N = 9, and Fig. 8 presents the \( E_{2}^{N}\) and \( E_{\infty }^{N} \) errors, which decrease as N increases. Table 3 compares the absolute error of the proposed method with those of the compact finite difference method [3], the explicit finite difference method [8] and the collocation method [24]. The results show that the error of the proposed method is smaller than those of the other methods.

Fig. 1. The approximate solution \(u^N(.,.)\) and the logarithm of \( E^{N}(.,.) \) with \( N=10 \) for Example 4.1

Fig. 3. The approximate solution \(u^N(.,.)\) and the logarithm of \( E^{N}(.,.) \) with \( N=10 \) for Example 4.2

Fig. 4. The logarithm of \( E_{2}^{N}\) and \( E_{\infty }^{N} \) for Example 4.2

Fig. 5. The approximate solution \(u^N(.,.)\) and the logarithm of \( E^{N}(.,.) \) with \( N=20 \) for Example 4.3

Fig. 6. The logarithm of \( E_{2}^{N} \) and \( E_{\infty }^{N} \) for Example 4.3

Fig. 7. The approximate solution \(u^N(.,.)\) and the logarithm of \( E^{N}(.,.) \) with \( N=9 \) for Example 4.4

Fig. 8. The logarithm of \( E_{2}^{N}\) and \(E_{\infty }^{N}\) for Example 4.4

5 Conclusions and Suggestions

In this paper, we showed that a spectral collocation method with a simple structure can be used to approximate the solution of RDEs. We analyzed the convergence of the approximate solutions to the exact solution using the theory of moduli of continuity and a normed space of polynomials. We presented two main theorems, on the feasibility of the relaxed collocation system and on the convergence of its solutions. We solved several numerical examples that illustrate the capability of the method. In future work, we will apply this method and its convergence analysis to other types of PDEs, such as those involving delays and fractional derivatives.

Availability of Data and Materials

All data and materials are contained in the article.

Abbreviations

- RD: Reaction diffusion
- RDE: Reaction diffusion equation
- PDE: Partial differential equations
- CBVM: Compact boundary value method
- CFODM: Compact fourth-order differential method
- POBVM: P-order boundary value method
- LGL: Legendre–Gauss–Lobatto

References

[1] Ambrosio, B., Ducrot, A., Ruan, S.: Generalized traveling waves for time-dependent reaction–diffusion systems. Math. Ann. (2020)

[2] An, N., Huang, C., Yu, X.: LDG methods for reaction–diffusion systems with application of Krylov implicit integration factor methods. Taiwan. J. Math. 23(3), 727–749 (2019)

[3] Biazar, J., Mehrlatifan, M.B.: A compact finite difference scheme for reaction–convection–diffusion equation. Chiang Mai J. Sci. 45(3), 1559–1568 (2018)

[4] Bueno-Orovio, A., Pérez-García, V.M., Fenton, F.H.: Spectral methods for partial differential equations in irregular domains: the spectral smoothed boundary method. SIAM J. Sci. Comput. 28(3), 886–900 (2006)

[5] Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A., Jr.: Spectral methods in fluid dynamics. Springer Science and Business Media, Berlin (2012)

[6] Chernyshenko, A.Y., Olshanskii, M.A.: An adaptive octree finite element method for PDEs posed on surfaces. Comput. Methods Appl. Mech. Eng. (2015)

[7] Macías-Díaz, J.E.: On the numerical and structural properties of a logarithmic scheme for diffusion–reaction equations. Appl. Numer. Math. 140, 104–114 (2019)

[8] Macías-Díaz, J.E., Puri, A.: An explicit positivity-preserving finite-difference scheme for the classical Fisher–Kolmogorov–Petrovsky–Piscounov equation. Appl. Math. Comput. 218(9), 5829–5837 (2012)

[9] Gaeta, G., Mancinelli, R.: Asymptotic scaling in a model class of anomalous reaction–diffusion equations. J. Nonlinear Math. Phys. 12(4), 550–566 (2005)

[10] Hashemi, M.S., Nucci, M.C.: Nonclassical symmetries for a class of reaction–diffusion equations: the method of heir-equations. J. Nonlinear Math. Phys. 20(1), 44–60 (2013)

[11] He, J.H.: Approximate analytical solution for seepage flow with fractional derivatives in porous media. Comput. Methods Appl. Mech. Eng. 167(1–2), 57–68 (1998)

[12] Huang, Y., Noori Skandari, M.H., Mohammadizadeh, F., Tehrani, H.A., Georgiev, S.G., Tohidi, E., Shateyi, S.: Space-time spectral collocation method for solving Burgers equations with the convergence analysis. Symmetry 11(12), 1439 (2019)

[13] Huang, Y., Mohammadi Zadeh, F., Noori Skandari, M.H., Ahsani Tehrani, H., Tohidi, E.: Space-time Chebyshev spectral collocation method for nonlinear time-fractional Burgers equations based on efficient basis functions. Math. Methods Appl. Sci.

[14] Kolmogorov, A.N., Petrovskii, I.G., Piskunov, N.S.: Study of a diffusion equation that is related to the growth of a quantity of matter, and its application to a biological problem. Byul. Mosk. Gos. Univ. Ser. A Mat. Mekh 1(1), 26 (1937)

[15] Kuttler, C.: Reaction–diffusion equations and their application on bacterial communication. In: Handbook of Statistics, vol. 37, pp. 55–91. Elsevier, Amsterdam (2017)

[16] Koto, T.: IMEX Runge–Kutta schemes for reaction–diffusion equations. J. Comput. Appl. Math. 215(1), 182–195 (2008)

[17] Lee, J.H., Lee, Y.C., Lin, C.C.: Exact solutions of DNLS and derivative reaction–diffusion systems. J. Nonlinear Math. Phys. 9(sup1), 87–97 (2002)

[18] Mahmoudi, M., Ghovatmand, M., Noori Skandari, M.H.: A novel numerical method and its convergence for nonlinear delay Volterra integro-differential equations. Math. Methods Appl. Sci. 43(5), 2357–2368 (2020)

[19] Mañosa, V.: Periodic travelling waves in nonlinear reaction–diffusion equations via multiple Hopf bifurcation. Chaos Solitons Fractals 18(2), 241–257 (2003)

[20] Rani, R.U., Rajendran, L.: Taylor’s series method for solving the nonlinear reaction–diffusion equation in the electroactive polymer film. Chem. Phys. Lett. 137573 (2020)

[21] Ragozin, D.L.: Polynomial approximation on compact manifolds and homogeneous spaces. Trans. Am. Math. Soc. 150(1), 41–53 (1970)

[22] Reitz, R.D.: A study of numerical methods for reaction–diffusion equations. SIAM J. Sci. Stat. Comput. 2(1), 95–106 (1981)

[23] Schenk, C.P., Or-Guil, M., Bode, M., Purwins, H.G.: Interacting pulses in three-component reaction–diffusion systems on two-dimensional domains. Phys. Rev. Lett. 78(19), 3781 (1997)

[24] Sharifi, S., Rashidinia, J.: Collocation method for convection–reaction–diffusion equation. J. King Saud Univ.-Sci. 31(4), 1115–1121 (2019)

[25] Shen, J., Tang, T., Wang, L.L.: Spectral Methods: Algorithms, Analysis and Applications, vol. 41. Springer Science and Business Media, Berlin (2011)

[26] Toubaei, S., Garshasbi, M., Jalalvand, M.: A numerical treatment of a reaction–diffusion model of spatial pattern in the embryo. Comput. Methods Differ. Equ. 4(2), 116–127 (2016)

[27] Wang, H., Zhang, C., Zhou, Y.: A class of compact boundary value methods applied to semi-linear reaction–diffusion equations. Appl. Math. Comput. 325, 69–81 (2018)

[28] Wang, W., Ma, W., Feng, Z.: Dynamics of reaction–diffusion equations for modeling CD4+ T cells decline with general infection mechanism and distinct dispersal rates. Nonlinear Anal. Real World Appl. 51, 102976 (2020)

[29] Wu, G., Lee, E.W.M., Li, G.: Numerical solutions of the reaction–diffusion equation. Int. J. Numer. Methods Heat Fluid Flow (2015)

[30] Xiao, X., Wang, K., Feng, X.: A lifted local Galerkin method for solving the reaction–diffusion equations on implicit surfaces. Comput. Phys. Commun. 231, 107–113 (2018)

[31] Yi, T., Chen, Y.: Study on monostable and bistable reaction–diffusion equations by iteration of travelling wave maps. J. Differ. Equ. 263(11), 7287–7308 (2017)

[32] Yu, F., Guo, Z., Lowengrub, J.: Higher-order accurate diffuse-domain methods for partial differential equations with Dirichlet boundary conditions in complex, evolving geometries. J. Comput. Phys. 406, 109174 (2020)

[33] De Zan, C., Soravia, P.: Singular limits of reaction diffusion equations and geometric flows with discontinuous velocity. Nonlinear Anal. 200, 111989 (2020)

Funding

There are no funders to report for this submission.

Author information

Contributions

MH carried out the research, study, methodology and writing. MG contributed to the methodology and supervised the work. MHNS contributed to the MATLAB program and the methodology. DB contributed to validation and acted as advisor.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Consent to participate

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Heidari, M., Ghovatmand, M., Skandari, M.H.N. et al. Numerical Solution of Reaction–Diffusion Equations with Convergence Analysis. J Nonlinear Math Phys 30, 384–399 (2023). https://doi.org/10.1007/s44198-022-00086-1

DOI: https://doi.org/10.1007/s44198-022-00086-1