Abstract

To address the low detection accuracy, poor stability, and slow detection speed of current intelligent vehicle violation detection systems, this article applies human–computer interaction and computer vision technology. First, the image data required for the experiment is collected from the BIT-Vehicle dataset and preprocessed with computer vision techniques. Then, Kalman filtering is used to track vehicles and better predict their trajectories within the area to be monitored. Finally, human–computer interaction technology is used to build the interactive interface of the system and improve its operability. The violation detection system based on computer vision technology achieves an accuracy of more than 96.86% on each of the eight types of violations studied, with an average detection accuracy of 98%. Using computer vision, the system can detect and identify vehicle violations accurately and in real time, improving the efficiency and safety of traffic management. The system also pays particular attention to human–computer interaction design, providing an intuitive, easy-to-use interface that lets traffic managers monitor and manage traffic conditions easily. This intelligent vehicle violation detection system is expected to support the future development of traffic management technology.

1 Introduction

In recent years, living standards have continued to improve and car ownership has become common among families. More and more people use private cars, which have become the most important means of transportation. As the number of cars has grown, so has the number of violations. In particular, the use of stolen or forged license plates to evade police investigation after traffic accidents is becoming increasingly serious. Traditional methods and systems for detecting violations consume police manpower and money and are very inefficient. At present, most traffic violation detection is performed manually, and existing vehicle violation detection systems have obvious shortcomings in accuracy and speed [1]. Therefore, this article uses human–computer interaction and computer vision technology to study the violation detection system. Computer vision technology can analyze and interpret the collected image data promptly and accurately, and so discover violation information quickly. Through human–computer interaction technology, information from multiple sources can be fused in the intelligent vehicle violation detection system. This helps to improve the accuracy, reliability, and fault tolerance of the detection system and reduces problems caused by the failure or misjudgment of a single sensor.

Vehicle violations are one of the main causes of traffic accidents and pose a serious threat to people’s lives and property. Through human–computer interaction and computer vision, an intelligent vehicle violation detection system can detect violations in time for them to be corrected, reduce the probability of traffic accidents, and improve road safety. Violations also lead to traffic congestion and delays, affecting travel efficiency and comfort. An intelligent vehicle violation detection system can reduce the occurrence of violations, smooth traffic flow, and alleviate urban congestion.

Nowadays, the car is the main means of transportation. Cars bring many conveniences to people’s lives, but they also increase the probability of traffic accidents and violations. Vehicle detection systems can help address this problem [2, 3], and many researchers have studied them. Maha Vishnu, V. C. proposed a mechanism for detecting violations from traffic videos, detecting accidents through dynamic traffic signal control, and classifying vehicles using flow gradient feature histograms [4]. Zhang, Rusheng proposed a new reinforcement learning algorithm for intelligent traffic signal control systems and studied the performance of the system under different traffic flows and road network types. Although the system can effectively reduce the average waiting time of vehicles at intersections, its accuracy in checking violations needs to be improved [5]. Liu Shuo used the YOLOv3 algorithm to detect vehicles in traffic intersection images, improving the model’s ability to detect small targets such as license plates and faces [6]. Alagarsamy, Saravanan designed a system to detect and analyze driver violations of traffic rules. The proposed system tracks driver activities and stores violations in a database [7]. Bhat, Akhilalakshmi T described a system in which the machine performs all tasks, including automatic vehicle detection and violation detection. Traffic footage is collected from closed-circuit television recordings and then checked by the system for violations [8]. Charran, R. Shree proposed a system that automatically detects car violations and ultimately processes tickets by recording violations and the corresponding vehicle numbers in a database [9]. Ozkul, Mukremin proposed a traffic violation detection and reporting system that does not rely on expensive infrastructure or the presence of law enforcement personnel. It observes changes in the behavior of nearby vehicles and determines whether these changes comply with the traffic rules encoded in the system [10]. In summary, research on vehicle violation detection has achieved some results, but there are still shortcomings in detection accuracy. Therefore, this article uses human–computer interaction and computer vision technology to study the problem in order to improve detection accuracy.

Table 1 compares this work with the literature reviewed above.

Computer vision typically requires extracting and reconstructing three-dimensional information from two-dimensional data so that computers can understand the environment and respond to it. It uses computers to simulate biological vision, preprocessing images and analyzing the characteristics of a target to determine its state, thereby achieving target detection and planning functions [11, 12]. Yang Zi noted that computer vision technology is able to detect vehicles on the road. Vehicle detection was studied in different environments, and it was found that, even with the variability of the road driving environment, computer vision technology effectively improved the accuracy of monitoring illegal vehicle behavior [13]. Kumar, Aman proposed an intelligent traffic violation detection system that detects violations in different scenarios and issues alerts according to the type of violation [14]. Mahmud Yusuf Tanrikulu discussed ensuring sound arrangements when organizing and promoting vaccines for people with dementia, and establishing support mechanisms where public health measures designed to control the spread of the virus have profound and often tragic consequences for people with dementia, their families, and caregivers [15]. Tutsoy, Onder established constrained multidimensional mathematical and meta-heuristic algorithms based on graph theory to learn the unknown parameters of a large-scale epidemiological model, with the specified parameters and the coupling parameters of the optimization problem. The results obtained under equal conditions show that the mathematical optimization algorithm CM-RLS performs better than the MA algorithm [16]. Arabi, Saeed provided a practical and comprehensive engineering vehicle detection solution based on deep learning and computer vision [17]. Overall, using computer vision technology can effectively improve the accuracy of detection.

Among the accidents that occur every year, a very high proportion are caused by violations, which may directly cause financial losses of billions of yuan. To strengthen vehicle monitoring and avoid traffic accidents, legal education for drivers must be reinforced, and a system for detecting illegal vehicles is also needed to help correct driver violations. This article uses human–computer interaction and computer vision technology to construct an intelligent vehicle violation detection system. First, the image dataset required for the experiment is collected from self-taken photos and public databases. Then, computer vision technology is used to preprocess a portion of the images, mainly through noise filtering and image enhancement, and Kalman filtering is used for vehicle tracking. Finally, the system interface is designed based on human–computer interaction technology. Together, these steps improve the performance of the violation detection system, including the accuracy and speed of detection.

The novelty of the intelligent vehicle violation detection system based on human–computer interaction and computer vision is mainly reflected in the following aspects:

1. Comprehensive use of human–computer interaction and computer vision technology: the system combines the two technologies, so that it can not only detect and identify illegal behavior automatically but also provide traffic managers with an intuitive monitoring interface, realizing efficient human–machine cooperation.
2. Focus on user-friendly design: compared with traditional monitoring systems, the system pays more attention to user-friendly design. Through human–computer interaction technology, users can view information about illegal vehicles intuitively and respond quickly, which greatly improves the efficiency and convenience of traffic management.
3. Dynamic adjustment and adaptive learning ability: the system can automatically adjust its working mode according to different environments and conditions to ensure detection accuracy, and it can improve its ability to detect violations through continuous learning and training.

2 Vehicle Violation Image Data Based on Computer Vision-Related Technology

Computer vision is a general term for any computation involving visual content [18, 19]. It studies how to help computers gain a higher-level understanding of digital images or videos and extract valuable information from the real world for decision-making. To better detect intelligent vehicle violations and improve the accuracy and real-time performance of detection, the images must be preprocessed before detection.

2.1 Data Collection

Data reliability mainly refers to the accuracy and completeness of the data. For the vehicle violation detection system, the accuracy of the data is very important, because the wrong detection results may lead to misjudgment or missed judgment. During data acquisition, there may be outliers, duplicate data, or incomplete data, requiring data cleaning to remove these invalid or low-quality data. For the data in the training set, annotation is required, that is, each sample is classified or marked by professionals. The annotation process needs to follow uniform rules and standards to ensure the accuracy and consistency of the data.

There are two sources of data for this article: public online datasets and photos taken with a mobile phone, which are divided into four parts by size. The public datasets used are the BIT-Vehicle dataset from Beijing Institute of Technology [20] and the ApolloScape dataset [21]. From them, 49,223 images of size 1920 × 1080 or 1600 × 1200, taken by cameras at intersections, were extracted. Part of the image data is shown in Fig. 1.

As shown in Fig. 1, a portion of the data was randomly selected from the dataset, and each image has different characteristics. Some of the vehicles in the pictures are committing violations, while others are not. In total, the data used in this study, drawn from mobile phone photos and public databases, comprises 49,521 images and 56,372 annotation boxes.

2.2 Image Preprocessing

2.2.1 Image Denoising

During recording and transmission, digital vehicle images are affected by the imaging equipment itself and by external interference, which introduces noise. Pixels or pixel blocks that appear abrupt in an image can be understood as noise. Noise seriously degrades the quality of vehicle images and makes them unclear, so the images must be filtered. Because the grayscale value of a noisy pixel differs from those of nearby normal pixels, mean filtering replaces the grayscale value of the center pixel with the average of all pixel grayscale values in its neighborhood. This reduces the impact of image noise and achieves the filtering effect [22, 23]. The mathematical expression is

\[ {h}_{\left(a,b\right)}=\frac{1}{N}\sum_{\left(i,j\right)\in k}{f}_{\left(i,j\right)} \]

In the above formula, \(k\) represents the set of all pixels in the neighborhood determined by the point \(\left(a,b\right)\), and \(N\) represents the number of pixels in that neighborhood. \({f}_{\left(i,j\right)}\) represents the grayscale value of the original image at the point \(\left(i,j\right)\), and \({h}_{\left(a,b\right)}\) represents the grayscale value at \(\left(a,b\right)\) after mean filtering. The comparison of the original image and the mean-filtered image is shown in Fig. 2.
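The mean filter described above can be sketched in a few lines of plain Python. This is only an illustration, not the article's implementation: the function name `mean_filter`, the square neighborhood of radius 1 (a 3 × 3 window), and the choice to skip neighbors that fall outside the image are all assumptions made here.

```python
def mean_filter(image, radius=1):
    """Replace each pixel with the mean of its square neighborhood.

    `image` is a list of rows of grayscale values. The window size and the
    border handling (out-of-image neighbors are skipped, so N shrinks at the
    edges) are assumptions of this sketch.
    """
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for a in range(rows):
        for b in range(cols):
            total, n = 0.0, 0
            for i in range(max(0, a - radius), min(rows, a + radius + 1)):
                for j in range(max(0, b - radius), min(cols, b + radius + 1)):
                    total += image[i][j]
                    n += 1
            out[a][b] = total / n  # h(a,b) = (1/N) * sum of f over the neighborhood
    return out


# A single bright noise pixel in a flat region is pulled toward its neighbors.
noisy = [
    [10, 10, 10],
    [10, 100, 10],
    [10, 10, 10],
]
smoothed = mean_filter(noisy)
print(smoothed[1][1])
```

The same averaging also blurs genuine edges, which is the usual trade-off of mean filtering.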

2.2.2 Image Contrast Enhancement

The cameras used to collect vehicle images operate in different environments, which greatly affects the photos taken. Improving the contrast of vehicle violation images is therefore very important. Within one image, some areas have low pixel values, which makes their features unclear. This can be improved by equalizing the histogram of the target image, which benefits subsequent processing. Histogram equalization is simple and effective and has been widely used in image enhancement; this article also uses it to improve image contrast [24, 25]. The principle of histogram equalization is to stretch the grayscale levels that contain many pixels and merge the grayscale levels that contain few pixels, thereby improving the contrast of the image and making it clearer [26]. The images before and after histogram equalization and the corresponding histograms are shown in Fig. 3.
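As a rough illustration of the principle, the following sketch equalizes a small grayscale image using the cumulative histogram. It assumes 8-bit integer gray levels and uses the common mapping based on the cumulative distribution function; the function name `equalize_histogram` is hypothetical, and the article does not state which exact variant it used.

```python
def equalize_histogram(image, levels=256):
    """Map gray levels through the normalized cumulative histogram.

    Assumes integer gray values in [0, levels). This is the common CDF-based
    variant, chosen here for illustration.
    """
    total = len(image) * len(image[0])
    hist = [0] * levels
    for row in image:
        for v in row:
            hist[v] += 1

    # Cumulative count of pixels at or below each gray level.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:  # constant image: nothing to stretch
        return [row[:] for row in image]

    mapping = [
        round((c - cdf_min) / (total - cdf_min) * (levels - 1)) if c > 0 else 0
        for c in cdf
    ]
    return [[mapping[v] for v in row] for row in image]


# A dark, low-contrast patch occupying only gray levels 50 to 53.
dark = [
    [50, 50, 51, 52],
    [50, 51, 52, 53],
]
equalized = equalize_histogram(dark)
print(equalized)
```

After equalization the four closely spaced gray levels are spread over the full 0 to 255 range, which is the contrast improvement the text describes.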

Parameter updates, or learning rules, are a key part of machine learning: they describe how a model adjusts its internal parameters to improve its performance on new data. In supervised learning, this often involves minimizing a loss function that measures the difference between the model predictions and the actual labels. For the vehicle violation detection system, these labels indicate whether there is any violation in the image and what type of violation it is. Professional annotators or teams mark the collected vehicle images one by one. The labeling process follows unified labeling criteria to ensure the accuracy of each sample label, and annotated data can be spot-checked to detect and correct possible errors.

3 Vehicle Tracking Based on Kalman Filter

The detection and tracking of moving objects is a research hotspot in digital image processing and recognition as well as in computer vision, and it has many applications in daily life. Computer vision technology has also made great progress in vehicle tracking. The first task of a computer-vision-based violation detection system is to detect and recognize each moving object in the image and then analyze its behavior against the relevant standards to determine whether further processing is needed. Therefore, this article uses Kalman filtering for vehicle tracking. Based on estimation theory, Kalman filtering introduces the concept of state equations and establishes a system state model [27, 28].

The Kalman filter is described by a state equation and an observation equation. A discrete dynamic system can be considered to consist of two parts, a state system and an observation system. The state system is expressed as follows:

Here, \({\text{X}}\) is the transition matrix that maps the state of the system at time \({\text{h}}-1\) to its state at time \({\text{h}}\), and \({{\text{M}}}_{{\text{h}}-1}\) is a zero-mean noise term representing the state model error. The observation system can be expressed by the following observation equation:

In this equation, \(H\) is the observation matrix and \({V}_{h}\) represents the observation noise.
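For reference, the state and observation equations described above are commonly written in the following textbook form. This is a sketch of the standard linear Kalman model and not a reproduction of the article's own equations: the state vector \(s_h\) and the observation vector \(z_h\) are names assumed here, the observation matrix is written \(H\), and \(V_h\) is taken to be the observation noise.

\[
s_h = {\text{X}}\, s_{h-1} + {\text{M}}_{h-1}, \qquad z_h = H\, s_h + V_h
\]

Under this model the filter alternates between predicting \(s_h\) from \(s_{h-1}\) with the first equation and correcting that prediction with the measurement \(z_h\) through the second.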

The principle of Kalman filtering is linear minimum mean square error estimation. Because the noise is assumed to be Gaussian white noise, the filter places strict requirements on the motion model of the target [29, 30]. The simulation results of the Kalman filtering trajectory for uniform linear motion are shown in Fig. 4.

To narrow the search range for the target vehicle in a new image, an algorithm is first used to estimate the approximate position of the target vehicle in the new frame and determine its matching range. The Kalman filter tracking model continuously predicts, corrects, and adjusts the estimated state of the target through calculation [31]. Because the time interval between adjacent frames is small, the speed and direction of the target’s motion do not change abruptly. Based on this characteristic, the matching range of the target vehicle is determined as follows:

\((\overline{{a }_{i}},\overline{{b }_{i}})\) is the predicted centroid coordinate of the target vehicle in the continuous image sequence; \(Q\) is the radius of the target vehicle search area in the next image. The circular area with the predicted centroid as its center and \(Q\) as its radius serves as the matching range of the target vehicle in the next frame, which avoids searching and matching over the entire image. This not only increases the accuracy of tracking but also improves its real-time performance. That is, when target matching is performed, the vehicle to be matched must meet the following condition:

\((\overline{{a }_{j}},\overline{{b }_{j}})\) is the centroid coordinate of the vehicle to be matched; \({\text{d}}\) is the distance between the centroid of the vehicle to be matched and the predicted centroid of the target vehicle. For target vehicles that have just entered the image frame and have not yet created a tracking sequence, due to the lack of specific information, this method cannot narrow the search range. Instead, feature sets can be directly used to match within the entire image range. The Kalman filter tracking effect is shown in Fig. 5.
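The search-area test can be illustrated with a short sketch: a candidate is accepted only if the distance \(d\) between its centroid and the predicted centroid does not exceed the radius \(Q\). The function name `match_in_search_area`, the use of Euclidean distance, and the rule of picking the nearest candidate when several fall inside the circle are assumptions made for this example.

```python
import math


def match_in_search_area(predicted, candidates, radius):
    """Return the index of the nearest candidate centroid with d <= radius.

    `predicted` is the predicted centroid (a_i, b_i); `candidates` is a list
    of detected centroids (a_j, b_j). Returns None if no candidate lies inside
    the circular search area. Nearest-candidate tie-breaking is an assumption.
    """
    best_index, best_d = None, None
    for j, (a_j, b_j) in enumerate(candidates):
        d = math.hypot(a_j - predicted[0], b_j - predicted[1])
        if d <= radius and (best_d is None or d < best_d):
            best_index, best_d = j, d
    return best_index


predicted_centroid = (100.0, 50.0)
detections = [(140.0, 50.0), (103.0, 54.0), (90.0, 80.0)]
match = match_in_search_area(predicted_centroid, detections, radius=20.0)
print(match)
```

When the function returns `None`, a tracker would fall back to matching over the whole image, as the text describes for vehicles without a tracking sequence.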

As shown in Fig. 5, Kalman filtering can still effectively track the target vehicles even when traffic flow is high. Based on the object detection results, this article draws a bounding box around each foreground blob in the figure. The red rectangle is the smallest box that can enclose the foreground region, and its centroid coordinates can be calculated:

Among them, \(M\) and \({M}_{i}\), respectively, represent the size and average grayscale value of the vehicle to be matched; \(x\), \(\gamma\), \(\beta\) are the weighting coefficients; \(t\) is the threshold, and \(dif\) is the degree of dissimilarity. The lower the dissimilarity (that is, the higher the similarity), the better the match between the two targets and the greater the likelihood that they are the same vehicle.
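Because the matching formula itself is not reproduced here, the sketch below shows only one plausible form of such a measure: a weighted sum of centroid distance, size difference, and mean grayscale difference, compared against a threshold. The specific combination, the weights, the threshold value, and the dictionary keys are all assumptions for illustration, not the article's definition.

```python
import math


def dissimilarity(track, candidate, w_dist=1.0, w_size=0.01, w_gray=0.5):
    """Weighted dissimilarity between a tracked vehicle and a candidate.

    Each vehicle is a dict with a 'centroid' (a, b), a 'size' in pixels and a
    mean 'gray' value. The weighted-sum form and the weights are hypothetical.
    """
    d = math.hypot(candidate["centroid"][0] - track["centroid"][0],
                   candidate["centroid"][1] - track["centroid"][1])
    size_diff = abs(candidate["size"] - track["size"])
    gray_diff = abs(candidate["gray"] - track["gray"])
    return w_dist * d + w_size * size_diff + w_gray * gray_diff


def is_same_vehicle(track, candidate, threshold=20.0):
    """Accept the match when the dissimilarity is below the threshold."""
    return dissimilarity(track, candidate) < threshold


track = {"centroid": (100.0, 50.0), "size": 1200, "gray": 90.0}
similar = {"centroid": (103.0, 54.0), "size": 1250, "gray": 92.0}
different = {"centroid": (103.0, 54.0), "size": 3000, "gray": 160.0}
print(dissimilarity(track, similar), dissimilarity(track, different))
```

Two detections at the same position can thus still be told apart when their size or brightness differs strongly from the tracked vehicle.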

Given the speed and direction of the vehicle and the elapsed time, the new position of the vehicle can be estimated. The new speed can likewise be estimated from the speed and direction in the previous state and the elapsed time. The actual observed location is obtained from sensor measurements of the vehicle’s position. In some cases, control inputs such as acceleration or braking commands may be introduced to account for changes in the vehicle’s speed and direction; these serve as additional inputs to the Kalman filter. The Kalman filter uses noise models to describe both the measurement noise and the process noise, and these models can be tuned using historical data and experience to optimize filter performance.
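The predict and correct cycle described above can be sketched for the simplest case, a vehicle moving along one axis at roughly constant velocity. This is a minimal illustration under stated assumptions rather than the article's tracker: the state is `[position, velocity]`, only position is measured, there is no control input, and the noise variances `q` and `r` are arbitrary illustrative values.

```python
def kalman_step(x, P, z, dt=1.0, q=1e-3, r=1.0):
    """One predict/update cycle of a 1-D constant-velocity Kalman filter.

    x = [position, velocity], P = 2x2 covariance, z = measured position.
    q and r are assumed process and measurement noise variances.
    """
    # Predict: x' = F x and P' = F P F^T + Q, with F = [[1, dt], [0, 1]].
    px = x[0] + dt * x[1]
    pv = x[1]
    p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1] + q
    p01 = P[0][1] + dt * P[1][1]
    p10 = P[1][0] + dt * P[1][1]
    p11 = P[1][1] + q

    # Update with observation matrix [1, 0]: only position is observed.
    s = p00 + r                # innovation variance
    k0, k1 = p00 / s, p10 / s  # Kalman gain
    y = z - px                 # innovation (measurement minus prediction)
    x_new = [px + k0 * y, pv + k1 * y]
    P_new = [
        [(1 - k0) * p00, (1 - k0) * p01],
        [p10 - k1 * p00, p11 - k1 * p01],
    ]
    return x_new, P_new


# A vehicle advancing 2 units per frame, starting from an uninformed estimate.
x, P = [0.0, 0.0], [[100.0, 0.0], [0.0, 100.0]]
for frame in range(1, 31):
    x, P = kalman_step(x, P, z=2.0 * frame)
print(x)
```

Although velocity is never measured directly, the filter recovers it from successive position measurements, which is what allows the position in the next frame to be predicted.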

When conducting vehicle tracking, the first step is to determine the operating parameter values and initial values of the vehicle through multiple on-site tests. Then, this article calculates the feature results based on the vehicle detection results, establishes a centroid position estimation model, and performs search matching. This article selects a video for vehicle tracking, recording images at a frequency of 6 frames per second. This article marks the vehicles entering the tracking sequence and identifies the same vehicle with the same number. The effect of tracking processing is shown in Fig. 6.

Figure 6 shows the result of the vehicle tracking process based on the Kalman filter prediction tracking method. Figure 6(a–d) shows the motion of two moving vehicles, numbered 4 and 5. It can be seen from Fig. 6(a–d) that the apparent area of each car changes as it moves away from the camera along the lane, and the tracking window of the Kalman-filter-based tracking algorithm changes accordingly. This ensures that the size of the tracking window and the size of the moving vehicle remain basically consistent at each moment. In addition, the tracking windows in the figure show that the upper and lower boundaries of the window are located relatively accurately.

4 Design of Intelligent Vehicle Violation Detection System

4.1 System Requirements and Architecture

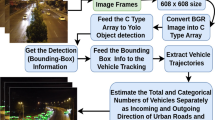

As society and technology develop, the number of vehicles continues to increase, leading to more and more traffic problems, and illegal driving is one of the main causes of traffic accidents. It is therefore very important to establish an inspection system that can detect illegal vehicles in a timely manner [32, 33]. In an era of advanced technology, traffic management is unlikely to succeed if it relies on large numbers of police officers as before. To detect road violations efficiently, an intelligent vehicle violation detection system is needed. Such a system typically uses computer vision and image processing technology to identify and detect vehicle violations, saving police manpower and time [34, 35]. It is also stable and can work for long periods. The detailed information is shown in Fig. 7.

As shown in Fig. 7, the system deploys cameras in areas or intersections that need to be monitored. It can take photos of vehicles based on photo triggering patterns, and then send the captured photos to the central processing system. It utilizes communication networks to collect images from the automotive data storage system for transmission and storage.

The violation detection module detects the vehicle’s speed and violation behavior based on the tracking results [36, 37]. It can use computer vision technology to locate and recognize vehicle information, and then calculate the vehicle’s speed based on driver’s license information. If the speed of a car exceeds the threshold or is different from the speed of surrounding cars, it is determined that the car has violated regulations. The main characteristic parameter settings of the corresponding control are shown in Table 2:
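The decision rule in this paragraph can be sketched as follows. The function name `is_speed_violation`, the use of the mean speed of surrounding vehicles, and the `max_deviation` tolerance are assumptions made for illustration; the article does not specify how large a difference from surrounding traffic counts as a violation.

```python
def is_speed_violation(speed, limit, neighbor_speeds, max_deviation=20.0):
    """Flag a vehicle whose speed exceeds the limit or deviates from traffic.

    All speeds share one unit (for example km/h). `max_deviation` is a
    hypothetical tolerance around the mean speed of surrounding vehicles.
    """
    if speed > limit:
        return True
    if neighbor_speeds:
        mean_neighbor = sum(neighbor_speeds) / len(neighbor_speeds)
        if abs(speed - mean_neighbor) > max_deviation:
            return True
    return False


print(is_speed_violation(75.0, 60.0, [58.0, 61.0]))        # above the limit
print(is_speed_violation(55.0, 60.0, [54.0, 57.0, 56.0]))  # normal driving
print(is_speed_violation(20.0, 60.0, [55.0, 58.0, 57.0]))  # far below traffic
```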

4.2 Illegal Parking of Vehicles

At present, there is no unified and effective detection system for illegal parking, and research on it is still ongoing. This article compares the background pixel values before and after a vehicle enters the region of interest and uses computer vision technology to construct an intelligent vehicle violation detection system. When a vehicle enters the region of interest and parks illegally, it stays for a period of time, so the problem becomes one of detecting a stationary target. Usually, the pixel value of each point in the background image of the region of interest remains stable over long periods, although the background can fluctuate over short periods due to environmental influences. When an object moves into the region, the pixel values change significantly, and this change is greater than the fluctuation of the background pixels themselves [38, 39]. Therefore, when significant changes are detected in pixels within the region of interest, it can be determined that a target has entered the region. When the pixel values change rapidly and significantly and then return to the initial background values within a short period, a moving target has passed through the region of interest without stopping. When the pixel values change rapidly and then remain unchanged for a period of time, a moving object has entered and stopped, and a parking violation may have occurred.
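The three cases described above (no vehicle, a vehicle passing through, a vehicle stopping) can be sketched as a small classifier over per-frame measurements. The sketch assumes the region of interest has already been reduced to one mean pixel value per frame; the function name `classify_roi` and the thresholds `change_threshold`, `stable_tolerance`, and `stop_frames` are hypothetical values chosen for illustration.

```python
def classify_roi(values, background, change_threshold=30.0,
                 stable_tolerance=5.0, stop_frames=5):
    """Classify a sequence of mean ROI pixel values.

    Returns 'stopped' if the ROI differs from the background and stays nearly
    constant for `stop_frames` consecutive frames, 'passed' if it changed but
    never settled, and 'empty' otherwise. All thresholds are assumptions.
    """
    entered = False
    still_run = 0    # consecutive occupied frames with a nearly constant value
    previous = None  # value of the previous occupied frame, if any
    for v in values:
        occupied = abs(v - background) > change_threshold
        if occupied:
            entered = True
            if previous is not None and abs(v - previous) <= stable_tolerance:
                still_run += 1
            else:
                still_run = 1
            if still_run >= stop_frames:
                return "stopped"
        else:
            still_run = 0
        previous = v if occupied else None
    return "passed" if entered else "empty"


background_value = 100.0
print(classify_roi([100, 101, 160, 150, 102, 100, 99], background_value))
print(classify_roi([100, 165, 170, 171, 170, 169, 170, 171], background_value))
print(classify_roi([100, 103, 98, 101], background_value))
```

In a deployed system `stop_frames` would be derived from the frame rate and the parking duration that counts as a violation.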

4.3 System Interface Under Human–Computer Interaction

Every system is an implementation of human–computer interaction. The user interface is the platform through which users and computers send and exchange information. This platform should meet the needs of work-related information exchange, and its information processing speed should also meet the requirements. The intelligent vehicle violation detection system based on human–computer interaction and computer vision technology recognizes speeding in a way similar to traffic police speed measurement. Intersections equipped with detection equipment are grouped in pairs. Based on the driving distance between the two intersections and the required speed, a threshold time can be set for the same vehicle to travel from one intersection to the other. The system compares the measured time difference between the vehicle’s passage through the two intersections with this threshold time. If the measured value is greater than the threshold time, the vehicle is considered to be driving normally. If the measured value is less than the threshold time, the vehicle is considered to be speeding, and an alarm is sent over the network to the traffic police team closest to the intersection the vehicle passed through. At the same time, the photos taken at the two intersections are stored as law enforcement evidence. The software interface for processing speeding vehicles is shown in Fig. 8.
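The interval-based speeding check amounts to a small calculation: the threshold time is the distance between the two intersections divided by the speed limit, and a vehicle that covers the distance in less time must have exceeded the limit on average. The function names, the units (meters, km/h, seconds), and the example figures below are assumptions for illustration.

```python
def min_travel_time(distance_m, speed_limit_kmh):
    """Shortest legal travel time in seconds between two intersections."""
    return distance_m / (speed_limit_kmh / 3.6)  # convert km/h to m/s


def is_speeding(t_first, t_second, distance_m, speed_limit_kmh):
    """Compare the measured passage time difference with the threshold time."""
    measured = t_second - t_first
    return measured < min_travel_time(distance_m, speed_limit_kmh)


# Two intersections 1000 m apart with a 60 km/h limit: threshold is 60 s.
print(min_travel_time(1000.0, 60.0))
print(is_speeding(0.0, 45.0, 1000.0, 60.0))  # 45 s implies 80 km/h on average
print(is_speeding(0.0, 72.0, 1000.0, 60.0))  # 72 s implies 50 km/h on average
```

This measures average speed over the segment, so brief speeding followed by slow driving would not be detected by the check alone.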

5 Performance of Intelligent Vehicle Violation Detection System

For intelligent vehicle violation detection systems, a well-equipped computer helps ensure successful training and accurate results, and training places demands on both the memory and the computing power of the graphics card. The training was therefore run on a laboratory server. The hardware configuration, operating system, and related software environment of the laboratory server are shown in Table 3.

This article uses computer vision technology to process vehicle violation images and then combines it with human–computer interaction to construct a system that outperforms traditional detection technologies. To capture illegal vehicles, cameras are installed beside or above the road to collect video, and a converter on the computer platform converts the video into images, which are stored in computer memory. Computer vision technology is then used to process the collected images and determine whether a vehicle has committed a violation, and any violation discovered must be handled promptly. To further demonstrate the performance of the system studied in this article, it is compared with intelligent vehicle violation detection systems based on Video Image Processing (VIP) technology, Magnetic Induction Coil (MIC) technology, and Digital Image Processing (DIP) technology. The specific performance comparison is shown in Table 4.

As shown in Table 4, the vehicle violation detection system constructed in this article using human–computer interaction and computer vision technology shows better overall performance than the other vehicle violation detection systems, with significant advantages. It has a simple operating interface and complete functions, such as recognition and tracking of various vehicle violations. Users do not need to spend much time familiarizing themselves with it, and it is easy to get started. Its detection accuracy, stability, and warning accuracy for illegal vehicles are all higher than those of the other systems. It can operate in all weather conditions and has excellent scalability.

The training set is the dataset used to train and optimize the vehicle violation detection system. It should contain all the types of violations so that the system can identify and classify each of them. The data in the training set should be annotated: each sample should have a label indicating whether it shows a violation. The validation set is used to tune the hyperparameters and select the best model: by evaluating the model on the validation set, the best hyperparameter combination and the best model can be found. The test set is used to evaluate the vehicle violation detection system, that is, to measure its performance on unseen data. These datasets should be updated regularly to reflect changes in the data. This study focuses on training accuracy.
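A three-way split of this kind can be sketched as follows. The article does not state its split ratios, so the 70/15/15 proportions, the fixed random seed, and the function name `split_dataset` are assumptions for illustration only.

```python
import random


def split_dataset(samples, train=0.7, val=0.15, seed=0):
    """Shuffle samples and split them into training, validation and test sets.

    The ratios are hypothetical; whatever remains after the training and
    validation portions becomes the test set.
    """
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


image_ids = list(range(100))
train_set, val_set, test_set = split_dataset(image_ids)
print(len(train_set), len(val_set), len(test_set))
```

Keeping the three sets disjoint is what makes the test-set accuracy a measure of performance on unseen data.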

There are many types of vehicle violations, such as wrong-way driving, crossing the yellow line, illegal parking, obscuring license plates, running red lights, speeding, cutting into a lane, and failing to yield to pedestrians. It is very important to detect these violations. This article uses Computer Vision (CV) technology to construct an intelligent vehicle violation detection system that detects these types of violations with high accuracy. The research data come from the image databases described above, and the different captured photos are tested. The experimental data for the 8 violations are shown in Table 5.

As shown in Table 5, a total of 8 violations are selected and numbered from 1 to 8. A different number of images is extracted for each violation, totaling 49,521 images. Among them, there are 9687 images of failing to yield to pedestrians. Illegal parking has the fewest images, with only 2681.

The system studied in this article is used to detect the 8 types of violations, and 100 experiments are conducted. The final result is the average accuracy over the 100 experiments. The results are compared with those of violation detection systems based on VIP, MIC, and DIP, and the specific comparison results are shown in Fig. 9.

In Fig. 9, the x-axis represents the violation number (1 to 8), while the y-axis represents the detection accuracy. The vehicle violation detection system in this study detects the different types of violations with much higher accuracy than the other violation detection systems. The CV-based violation detection system has an accuracy of over 96.86% for each of the 8 types of violations extracted, whereas the accuracy of the systems based on VIP, MIC, and DIP is below 91.92%, 87.27%, and 92.35%, respectively. For running red lights, the accuracy of the system studied in this article is 99.69%, approaching 100%, which is 8.81%, 14.51%, and 10.4% higher than the systems based on VIP, MIC, and DIP, respectively. The average accuracy of the CV-based system over the 8 types of violations is 98%, which is 7.55%, 12.49%, and 7.87% higher than the systems based on VIP, MIC, and DIP, respectively.
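As a quick consistency check on these figures, the stated gaps can be subtracted from the CV-based accuracies to recover approximate baseline values. The sketch below assumes the gaps are percentage-point differences, which the text does not state explicitly; the function name `implied_baselines` is hypothetical.

```python
# Accuracies of the CV-based system quoted in the text (percent).
CV_RED_LIGHT = 99.69
CV_AVERAGE = 98.0

# Stated advantages over each baseline, read here as percentage points
# (an assumption; the article does not say whether they are relative).
GAPS_RED_LIGHT = {"VIP": 8.81, "MIC": 14.51, "DIP": 10.4}
GAPS_AVERAGE = {"VIP": 7.55, "MIC": 12.49, "DIP": 7.87}

# Upper bounds the text gives for each baseline's accuracy (percent).
STATED_UPPER_BOUNDS = {"VIP": 91.92, "MIC": 87.27, "DIP": 92.35}


def implied_baselines(cv_accuracy, gaps):
    """Return the baseline accuracy implied by each stated gap."""
    return {name: round(cv_accuracy - gap, 2) for name, gap in gaps.items()}


red_light = implied_baselines(CV_RED_LIGHT, GAPS_RED_LIGHT)
average = implied_baselines(CV_AVERAGE, GAPS_AVERAGE)
for name in STATED_UPPER_BOUNDS:
    within = red_light[name] < STATED_UPPER_BOUNDS[name]
    print(name, red_light[name], average[name], within)
```

Under that reading, the implied red-light accuracies are about 90.88%, 85.18%, and 89.29% for VIP, MIC, and DIP, all of which fall below the upper bounds quoted for those systems.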

With the continuous increase in the number of cars, more and more image data are generated. Quickly finding evidence of vehicle violations in massive image data is crucial for identifying and punishing driver violations and for reducing accident rates. The vehicle violation detection system studied in this article detects images faster, with a shorter detection time. To further highlight its advantage in image detection speed, this article compares it with the violation detection systems based on VIP, MIC, and DIP. The specific comparison results are shown in Fig. 10.

In Fig. 10, the x-axis represents the number of images to be detected, while the y-axis represents the time required for detection. As shown in Fig. 10, the system studied in this article takes much less time to detect the extracted images than the other violation detection systems. The MIC-based detection system takes much longer than the other three. When the number of detected images is 150 or less, the VIP-based system takes longer than the CV-based system but less time than the MIC-based and DIP-based systems. When the number of detected images is 160 or more, the VIP-based system takes longer than the CV-based and DIP-based systems but less time than the MIC-based system. When the number of detected images is 10, the system studied in this article needs 5.54 ms, which is 1.58 ms, 4.24 ms, and 3.05 ms less than the systems based on VIP, MIC, and DIP, respectively. When the number of detected images is 200, it needs 68.39 ms, which is 48.03 ms, 55.17 ms, and 28.73 ms less than the systems based on VIP, MIC, and DIP, respectively.
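The timing figures above can be cross-checked with a few lines of arithmetic. The sketch below adds each stated saving back to the CV-based time to recover the implied totals for the other systems, ranks the systems at each batch size, and computes the per-image cost. The helper names `implied_totals` and `per_image_ms` are hypothetical, and the per-image figure assumes that the reported time covers the whole batch.

```python
# Total detection time of the CV-based system quoted in the text (ms).
CV_TOTAL_MS = {10: 5.54, 200: 68.39}

# Stated time savings of the CV-based system over each baseline (ms).
SAVINGS_MS = {
    10: {"VIP": 1.58, "MIC": 4.24, "DIP": 3.05},
    200: {"VIP": 48.03, "MIC": 55.17, "DIP": 28.73},
}


def implied_totals(n_images):
    """Total time per system, adding each stated saving back to the CV time."""
    cv_ms = CV_TOTAL_MS[n_images]
    totals = {"CV": cv_ms}
    for name, saving in SAVINGS_MS[n_images].items():
        totals[name] = round(cv_ms + saving, 2)
    return totals


def per_image_ms(total_ms, n_images):
    """Average time per image, assuming total_ms covers the whole batch."""
    return total_ms / n_images


for n in (10, 200):
    totals = implied_totals(n)
    fastest_first = sorted(totals, key=totals.get)
    print(n, totals, fastest_first, round(per_image_ms(totals["CV"], n), 3))
```

The implied ordering matches the description of Fig. 10: at 10 images the VIP-based system is faster than the DIP-based and MIC-based systems, while at 200 images the DIP-based system overtakes it. Under the batch-time assumption, the per-image cost of the CV-based system falls from about 0.55 ms at 10 images to about 0.34 ms at 200 images.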

6 Conclusions

The number of deaths caused by traffic accidents in China each year is in the hundreds of thousands, ranking among the highest in the world, and most of them are caused by driver violations. Therefore, using computer vision technology to detect, record, and punish vehicle violations is of great significance for reducing the law enforcement workload, managing the traffic environment, preventing accidents, and ensuring pedestrian safety. The intelligent vehicle violation detection system based on human–computer interaction and computer vision detects and records vehicle violations by combining these two technologies. The system studied in this article used computer vision technology to preprocess the extracted images and then used Kalman filtering to track vehicles. It then used the intelligent interactive interface built with human–computer interaction technology to detect, record, and count vehicle violations, and presented the final recorded results to the administrator. The system can therefore recognize traffic signs, detect vehicle violations, and provide useful information and warnings to drivers through human–computer interaction. This can effectively improve traffic safety, reduce manual patrol and monitoring costs, and improve the accuracy and efficiency of violation detection.

Research on intelligent vehicle violation detection systems based on human–computer interaction and computer vision may encounter several difficulties. Two possible challenges and the corresponding solutions are as follows. First, computer vision systems need a large amount of annotated data for model training. If the available annotated data are limited, the dataset can be expanded with techniques such as data augmentation, and semi-supervised or unsupervised learning can be considered to make effective use of unannotated data. Second, to make the system easy to use and understand, an efficient and intuitive human–machine interface needs to be designed. The optimal design scheme can be found through user research and design thinking.
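To make the tracking step mentioned above concrete, the following is a minimal sketch of a Kalman filter for one image axis. It is not the implementation used in this study: the constant-velocity motion model, the noise variances, the frame interval `dt`, and the class name `ConstantVelocityKalman1D` are all assumptions chosen for illustration. In practice one such filter per axis (or a joint four-state filter) would track a vehicle's bounding-box centre.

```python
class ConstantVelocityKalman1D:
    """Kalman filter with state [position, velocity] along one image axis.

    Hypothetical illustration: the motion model and noise values are
    assumptions, not parameters reported in the article.
    """

    def __init__(self, position, velocity=0.0, dt=1.0,
                 process_var=1e-2, meas_var=1.0):
        self.p = position          # estimated position (pixels)
        self.v = velocity          # estimated velocity (pixels per frame)
        self.dt = dt               # time between frames
        self.q = process_var       # process noise added to each state variance
        self.r = meas_var          # measurement noise variance
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # state covariance

    def predict(self):
        """Propagate the state one frame ahead: P <- F P F^T + Q."""
        dt = self.dt
        (a, b), (c, d) = self.P
        self.p += dt * self.v
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]
        return self.p

    def update(self, measured_position):
        """Correct the prediction with a measured position (H = [1, 0])."""
        (a, b), (c, d) = self.P
        s = a + self.r                 # innovation variance
        k0, k1 = a / s, c / s          # Kalman gain for position and velocity
        residual = measured_position - self.p
        self.p += k0 * residual
        self.v += k1 * residual
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]
        return self.p


# Synthetic track: a vehicle moving 2 pixels per frame along one axis.
tracker = ConstantVelocityKalman1D(position=0.0)
for frame in range(1, 51):
    tracker.predict()
    tracker.update(2.0 * frame)
print(round(tracker.p, 2), round(tracker.v, 2))
```

Calling `predict()` without a following `update()` gives the expected position in the next frame, which is how a tracker of this kind can bridge short detection gaps and anticipate where a vehicle will appear in the monitored area.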

Data Availability

Data are available upon reasonable request.

Funding

This work was supported by City University of Seattle.

Ethics declarations

Conflict of Interest

The author declares no potential competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ren, Y. Intelligent Vehicle Violation Detection System Under Human–Computer Interaction and Computer Vision. Int J Comput Intell Syst 17, 40 (2024). https://doi.org/10.1007/s44196-024-00427-6

DOI: https://doi.org/10.1007/s44196-024-00427-6