Abstract

Picture fuzzy sets (PFSs) can intuitively express the answers “yes”, “neutral”, “no” and “reject”, which gives them a strong advantage in handling uncertain information. The similarity measure is an effective tool for determining the relationship between two PFSs. In this paper, we propose a hybrid picture fuzzy (PF) similarity measure that combines the Hamming distance and the transformed tetrahedral centroid distance, and we verify that it satisfies the four properties of a similarity measure. The proposed and existing PF similarity measures are compared through numerical examples and several pattern recognition applications. The results show that the proposed similarity measure produces no unreasonable results and overcomes the shortcomings of the existing similarity measures. Furthermore, we develop an improved VIKOR method based on the proposed PF similarity measure. Finally, several multi-attribute decision-making (MADM) methods are compared on an example to illustrate the effectiveness and practicability of the improved VIKOR method.


1 Introduction

Zadeh [1] introduced the concept of a fuzzy set (FS) in 1965; a fuzzy set is characterized by its membership degree function. Fuzzy sets make it possible to handle the uncertain information that arises in the real world. The theory of fuzzy integro-differential equations has been developed to model various phenomena under uncertainty and thereby to solve more complex problems in engineering and applied science [2,3,4,5]. Scholars have extended and developed fuzzy set theory; among these extensions, the intuitionistic fuzzy set (IFS) proposed by Atanassov [6] in 1986 is an effective one. An intuitionistic fuzzy set is composed of membership and non-membership degree functions and overcomes some disadvantages of fuzzy sets. Because of their effectiveness, intuitionistic fuzzy sets have been widely used in cluster analysis [7,8,9,10,11], pattern recognition [12,13,14], group decision-making [11, 15, 16] and other fields. Although intuitionistic fuzzy sets express uncertain information better than fuzzy sets, some real-life situations, such as voting and medical diagnosis, still cannot be described by them. In a voting model, a voter has four possible responses to a candidate: “vote for”, “abstain”, “vote against” and “refuse to vote”. In medical diagnosis, symptoms such as cough and headache may have little effect on chest and stomach illnesses; likewise, chest and stomach pain may have a neutral effect on disorders of the head and lungs. Both the neutral attitude of an abstaining voter and the neutral influence of some symptoms on specific diseases show that the degree of neutrality plays a significant role in decision making, and intuitionistic fuzzy sets cannot capture it.
To address this type of problem, Cuong and Kreinovich [17] proposed a new generalization of fuzzy sets and intuitionistic fuzzy sets in 2013, called picture fuzzy sets. A picture fuzzy set consists of three functions: the membership degree function, the neutral degree function and the non-membership degree function. In [17, 18], Cuong and Kreinovich gave some basic operations and relations of picture fuzzy sets.

When picture fuzzy sets are used to solve practical problems such as pattern recognition, cluster analysis, information retrieval, image processing, medical diagnosis and decision-making, it is necessary to measure the connection and difference between two picture fuzzy sets. Similarity measures and distance measures are effective tools for determining the degree of connection and difference between two sets or objects. Cuong [17] first gave the Hamming distance and the Euclidean distance of picture fuzzy sets in 2013. Wei [19] proposed eight picture fuzzy similarity measures based on the cosine function and demonstrated their effectiveness and practicability on an optimal-production selection example. Wei [20] proposed cosine, weighted cosine, set-theoretic, weighted set-theoretic, gray and weighted gray similarity measures in the picture fuzzy environment and applied them effectively to building material identification and mineral field identification. Wei and Gao [21] proposed the picture fuzzy generalized Dice similarity measure and demonstrated its effectiveness in pattern recognition through the selection of building materials. In [22], Singh et al. gave a geometric interpretation of picture fuzzy sets and proposed several distance and similarity measures for them, which were applied to flood disaster risk analysis. Luo et al. [23] proposed a picture fuzzy similarity measure composed of three functions and applied it to medical diagnosis. Ganie and Singh [24] studied a picture fuzzy similarity measure based on the upper and lower bounds of the membership, non-membership, neutral and refusal degrees, and proposed a new picture fuzzy inferior ratio method for multi-attribute decision-making.
Singh and Ganie [25] further studied similarity measures that can distinguish highly similar picture fuzzy sets and developed a maximum spanning tree clustering method for picture fuzzy sets. Khan et al. [26] proposed bi-parametric picture fuzzy similarity and distance measures based on the tetrahedral centroid and applied them to medical diagnosis and pattern recognition. Many other studies and applications of similarity and distance measures for picture fuzzy sets can be found in [27,28,29,30,31,32,33].

Multi-attribute decision-making problems set in a picture fuzzy environment are of great significance. Wang et al. [34] applied the picture fuzzy geometric operators they developed to multi-attribute decision-making problems and verified the practicability of the method through an example. In [35], the authors built a projection model to measure the similarity between each alternative and the picture fuzzy ideal point, ranked the alternatives according to this model, and selected the best one. The advantages of the VIKOR method proposed by Opricovic [36] have attracted many scholars to study it and apply it to decision-making problems. Wang, Zhang et al. [37] proposed the picture fuzzy normalized projection (PFNP) model, which overcomes the limitations of [35], and combined it with the VIKOR method to construct a PFNP-based VIKOR method for multiple attribute decision-making problems. Yue [38] applied a picture fuzzy normalized projection and an extended VIKOR method to software reliability evaluation. Tian et al. [33] proposed a sustainability evaluation method for WET-PPP projects based on a picture fuzzy similarity-based VIKOR method. In [39], operators on picture fuzzy numbers (PFNs) are combined with the VIKOR method to evaluate citizens’ satisfaction with municipal services.

Ganie and Singh [24] studied the existing PF similarity measures [19,20,21,22] and found that they produce counterintuitive results in some cases. First, we found that some PF similarity measures proposed in the past two years [23,24,25,26, 33] cannot distinguish between different PFSs in some cases, and that they often fail to classify samples in pattern recognition problems. Second, Khan et al. [26] transformed a PFS into a tetrahedron model and constructed a bi-parametric PF similarity measure based on the distance between the centroids of two tetrahedrons. However, their final similarity formula changes the signs of the terms in two directions, so the terms in those two directions no longer represent the distance between the centroids. To overcome these problems, we propose a PF similarity measure that combines the Hamming distance and the transformed tetrahedral centroid distance. In addition, the extended VIKOR method based on picture fuzzy similarity by Tian et al. [33] considers only the group utility and individual regret with respect to the positive ideal, so its VIKOR values measure closeness to the positive ideal only. Therefore, based on the proposed PF similarity measure and the extended VIKOR method, we develop an improved VIKOR method that computes VIKOR values with respect to both the positive ideal and the negative ideal. Finally, the TOPSIS method is used to calculate the relative closeness coefficients of the candidate alternatives and rank them.

The main contributions of this paper are as follows:

1. We propose a hybrid picture fuzzy similarity measure that combines the Hamming distance and the transformed tetrahedral centroid distance, and we verify that it satisfies the four properties of a similarity measure.

2. Numerical examples illustrate the rationality of the proposed picture fuzzy similarity measure, which overcomes the counterintuitive results of existing picture fuzzy similarity measures.

3. The superiority of the new picture fuzzy similarity measure is further verified through pattern recognition.

4. We develop an improved VIKOR method based on the proposed picture fuzzy similarity measure and illustrate its practicability and effectiveness through a comparative analysis of examples.

The remainder of this article is structured as follows. Section 2 reviews some basic concepts and properties of picture fuzzy sets and lists some existing PF similarity measures. Section 3 proposes and verifies a new PF similarity measure and a weighted PF similarity measure. Section 4 applies the proposed and existing PF similarity measures to numerical examples and pattern recognition, compares the results and illustrates the advantages of the proposed similarity measure. Section 5 gives the algorithm steps of the improved VIKOR method and applies it to the examples in [34] for comparative analysis. Section 6 summarizes the paper and discusses future work.

2 Preliminaries

In this section, we briefly recall the basic definitions and operations of picture fuzzy sets and some important PF similarity measures.

2.1 Basic Definitions

Definition 1

[17] Let \(X = \left\{ {{x_1},{x_2}, \ldots ,{x_n}} \right\} \) be a universe of discourse. A PFS B in X is defined as \(B = \left\{ {\left\langle {{x_k},{m_B}\left( {{x_k}} \right) ,{\eta _B}\left( {{x_k}} \right) ,{v_B}\left( {{x_k}} \right) } \right\rangle \left| {{x_k} \in X} \right. } \right\} \), where \({m_B}\left( {{x_k}} \right) \), \({\eta _B}\left( {{x_k}} \right) \) and \({v_B}\left( {{x_k}} \right) \) represent the membership degree, neutral degree and non-membership degree, respectively, of the element \({x_k} \in X\) in the set B, such that \({m_B}\left( {{x_k}} \right), {\eta _B}\left( {{x_k}} \right), {v_B}\left( {{x_k}} \right) \in \left[ {0,1} \right] \) and \(0 \le {m_B}\left( {{x_k}} \right) + {\eta _B}\left( {{x_k}} \right) + {v_B}\left( {{x_k}} \right) \le 1\). The refusal degree of the element \({x_k}\) in the PFS B is \({\rho _B}\left( {{x_k}} \right) = 1 - {m_B}\left( {{x_k}} \right) - {\eta _B}\left( {{x_k}} \right) - {v_B}\left( {{x_k}} \right) \). For simplicity, the triple \(\left( {{m_B}\left( {{x_k}} \right) ,{\eta _B}\left( {{x_k}} \right) ,{v_B}\left( {{x_k}} \right) } \right) \) is called a picture fuzzy value.
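As a minimal sketch (illustrative, not code from the paper), a picture fuzzy value can be stored as an immutable record that enforces the constraints of Definition 1 and derives the refusal degree on demand. The class name `PFV` and the choice to represent a PFS on \(X = \{x_1, \ldots, x_n\}\) as a list of n such values are assumptions made here for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PFV:
    """Picture fuzzy value (m, eta, v) of one element x_k, per Definition 1."""
    m: float    # membership degree
    eta: float  # neutral degree
    v: float    # non-membership degree

    def __post_init__(self) -> None:
        # Each degree must lie in [0, 1] ...
        for name in ("m", "eta", "v"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name}={value} is outside [0, 1]")
        # ... and the three degrees together must not exceed 1
        # (a small tolerance absorbs floating-point rounding).
        if self.m + self.eta + self.v > 1.0 + 1e-12:
            raise ValueError("m + eta + v must not exceed 1")

    @property
    def rho(self) -> float:
        """Refusal degree rho = 1 - m - eta - v."""
        return 1.0 - self.m - self.eta - self.v


# A PFS on X = {x_1, ..., x_n} is represented here as a list of n PFVs.
B = [PFV(0.1, 0.1, 0.4)]
print(round(B[0].rho, 10))  # 0.4
```

Constructing a value such as `PFV(0.6, 0.3, 0.2)` raises `ValueError`, because its degrees sum to more than 1.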

Definition 2

[18] For any two PFSs B and C in X, the operations of complement, equality, inclusion, union, and intersection are defined as:

(1) \({\left( B \right) ^C} = \left\{ {\left\langle {{x_k},{v_B}\left( {{x_k}} \right) ,{\eta _B}\left( {{x_k}} \right) ,{m_B}\left( {{x_k}} \right) } \right\rangle \left| {{x_k} \in X,k = 1,2, \ldots ,n} \right. } \right\} \)

(2) \(B=C\) if and only if \({m_B}\left( {{x_k}} \right) = {m_C}\left( {{x_k}} \right) ,{\eta _B}\left( {{x_k}} \right) = {\eta _C}\left( {{x_k}} \right) ,{v_B}\left( {{x_k}} \right) = {v_C}\left( {{x_k}} \right) ,\) \(\forall {x_k} \in X.\)

(3) \(B \subseteq C\) if and only if \({m_B}\left( {{x_k}} \right) \le {m_C}\left( {{x_k}} \right) ,{\eta _B}\left( {{x_k}} \right) \le {\eta _C}\left( {{x_k}} \right) ,{v_B}\left( {{x_k}} \right) \ge {v_C}\left( {{x_k}} \right) ,\) \(\forall {x_k} \in X.\)

(4) \(B\cup C=\{\langle x_k,\max(m_B(x_k),m_C(x_k)),\min(\eta _B(x_k),\eta _C(x_k)),\min(v_B(x_k), v_C(x_k))\rangle \mid x_k\in X\}.\)

(5) \(B\cap C=\{\langle x_k,\min(m_B(x_k),m_C(x_k)),\min(\eta _B(x_k),\eta _C(x_k)),\max(v_B(x_k), v_C(x_k))\rangle \mid x_k\in X\}.\)
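The operations of Definition 2 act element by element, which the following sketch makes explicit. It assumes a PFS is a Python list of \((m, \eta, v)\) tuples in the order \(x_1, \ldots, x_n\); the function names are illustrative. Equality uses exact float comparison, which suits hand-entered example values but not values produced by arithmetic.

```python
from typing import List, Tuple

PFV = Tuple[float, float, float]  # (m, eta, v) of one element x_k
PFS = List[PFV]                   # one PFV per element of X, in a fixed order


def complement(B: PFS) -> PFS:
    """Definition 2(1): swap the membership and non-membership degrees."""
    return [(v, eta, m) for (m, eta, v) in B]


def equal(B: PFS, C: PFS) -> bool:
    """Definition 2(2): all three degrees agree at every x_k (exact comparison)."""
    return len(B) == len(C) and all(b == c for b, c in zip(B, C))


def is_subset(B: PFS, C: PFS) -> bool:
    """Definition 2(3): m_B <= m_C, eta_B <= eta_C and v_B >= v_C at every x_k."""
    return len(B) == len(C) and all(
        mb <= mc and eb <= ec and vb >= vc
        for (mb, eb, vb), (mc, ec, vc) in zip(B, C))


def union(B: PFS, C: PFS) -> PFS:
    """Definition 2(4): max of m, min of eta, min of v."""
    return [(max(mb, mc), min(eb, ec), min(vb, vc))
            for (mb, eb, vb), (mc, ec, vc) in zip(B, C)]


def intersection(B: PFS, C: PFS) -> PFS:
    """Definition 2(5): min of m, min of eta, max of v."""
    return [(min(mb, mc), min(eb, ec), max(vb, vc))
            for (mb, eb, vb), (mc, ec, vc) in zip(B, C)]


# The one-element PFSs of Example 1 in Section 4.1.
B, C, D = [(0.1, 0.1, 0.4)], [(0.1, 0.6, 0.3)], [(0.1, 0.6, 0.2)]
print(is_subset(B, C), is_subset(C, D))  # True True
print(union(B, D), intersection(B, D))   # [(0.1, 0.1, 0.2)] [(0.1, 0.1, 0.4)]
```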

Definition 3

[20] Let B, C and D be PFSs defined on the universe of discourse X. A function \(S\left( {B,C} \right) \) is called a PF similarity measure if it satisfies:

(1) \(0 \le S\left( {B,C} \right) \le 1\);

(2) \(S\left( {B,C} \right) = S\left( {C,B} \right) \);

(3) \(S\left( {B,C} \right) = 1\), if and only if \(B=C\);

(4) If \(B \subseteq C \subseteq D\), then \(S\left( {B,D} \right) \le S\left( {B,C} \right) \) and \(S\left( {B,D} \right) \le S\left( {C,D} \right) \).
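The properties of Definition 3 can be spot-checked numerically for any candidate measure. The sketch below is illustrative only: the helper `check_definition_3` and the simple measure `hamming_similarity` (one minus the normalized Hamming distance over \((m, \eta, v)\)) are assumptions introduced here, not measures taken from the cited papers. A numerical check can expose a violation but cannot prove that a measure satisfies Definition 3, and only the "if" direction of property (3), \(S(B,B) = 1\), is tested.

```python
from typing import Callable, List, Tuple

PFS = List[Tuple[float, float, float]]  # one (m, eta, v) triple per element x_k


def check_definition_3(S: Callable[[PFS, PFS], float],
                       B: PFS, C: PFS, D: PFS, tol: float = 1e-12) -> dict:
    """Spot-check Definition 3 on one chain B ⊆ C ⊆ D supplied by the caller."""
    pairs = [(B, C), (B, D), (C, D)]
    return {
        "bounded": all(-tol <= S(X, Y) <= 1 + tol for X, Y in pairs),    # property (1)
        "symmetric": all(abs(S(X, Y) - S(Y, X)) <= tol for X, Y in pairs),  # (2)
        "reflexive": all(abs(S(X, X) - 1) <= tol for X in (B, C, D)),    # (3), one direction
        "monotone": S(B, D) <= S(B, C) + tol and S(B, D) <= S(C, D) + tol,  # (4)
    }


def hamming_similarity(B: PFS, C: PFS) -> float:
    """Hypothetical demo measure: 1 - normalized Hamming distance over (m, eta, v)."""
    n = len(B)
    return 1 - sum(abs(b[0] - c[0]) + abs(b[1] - c[1]) + abs(b[2] - c[2])
                   for b, c in zip(B, C)) / (2 * n)


B = [(0.1, 0.1, 0.4)]
C = [(0.1, 0.6, 0.3)]
D = [(0.1, 0.6, 0.2)]
print(check_definition_3(hamming_similarity, B, C, D))  # all four checks are True
```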

2.2 The Existing PF Similarity Measures

Next, we list the existing PF similarity measures from [19,20,21,22,23,24,25,26, 33]. Some of their shortcomings and limitations are illustrated by the comparative analysis in Section 4.

PF similarity measures \({S_{W1}},{S_{W2}},{S_{W3}},{S_{W4}}\) [19]:

PF similarity measure \({S_{W5}}\) [20]:

PF similarity measures \({S_{WG1}},{S_{WG2}}\) [21]:

PF similarity measures \({S_{SMK1}},{S_{SMK2}},{S_{SMK3}}\) [22]:

PF similarity measure \({S_{GS1}}\) [24]:

PF similarity measures \({S_{GS2}},{S_{GS3}},{S_{GS4}},{S_{GS5}}\) [25]:

PF similarity measure \({S_{LZ}}\) [23]:

PF similarity measure \({S_{KK}}\) [26]:

Where \(t = 3,4, \cdots \) and \(p = 1,2,3, \cdots \).

PF similarity measure \({S_{TP}}\) [33]:

Where \(t = 2,3,4, \cdots \) and \(p = 1,2,3, \cdots \).

3 A Hybrid Similarity Measures for PFSs

3.1 Background

Let B and C be two PFSs in universe of discourse X, where \(B = \left\{ {\left\langle {{x_k},{m_B}\left( {{x_k}} \right) ,{\eta _B}\left( {{x_k}} \right) ,{v_B}\left( {{x_k}} \right) } \right\rangle } \right\} \) and \(C = \left\{ {\left\langle {{x_k},{m_C}\left( {{x_k}} \right) ,{\eta _C}\left( {{x_k}} \right) ,{v_C}\left( {{x_k}} \right) } \right\rangle } \right\} \).

Since \(0 \le {m_B}\left( {{x_k}} \right) + {\eta _B}\left( {{x_k}} \right) + {v_B}\left( {{x_k}} \right) \le 1\) and \({\rho _B}\left( {{x_k}} \right) = 1 - {m_B}\left( {{x_k}} \right) - {\eta _B}\left( {{x_k}} \right) - {v_B}\left( {{x_k}} \right) \), the membership degree \({m_B}\left( {{x_k}} \right) \) can be as large as \({m_B}\left( {{x_k}} \right) + {\rho _B}\left( {{x_k}} \right) \) if the whole refusal degree is assigned to it. In the same way, the neutral degree \({\eta _B}\left( {{x_k}} \right) \) can be as large as \({\eta _B}\left( {{x_k}} \right) + {\rho _B}\left( {{x_k}} \right) \), and the non-membership degree \({v_B}\left( {{x_k}} \right) \) can be as large as \({v_B}\left( {{x_k}} \right) + {\rho _B}\left( {{x_k}} \right) \). We establish a spatial Cartesian coordinate system whose three axes are the membership, neutral and non-membership degrees. The points \(B_1(m_B(x_k),\eta _B(x_k),v_B(x_k))\), \(B_2(m_B(x_k)+\rho _B(x_k),\eta _B(x_k),v_B(x_k))\), \(B_3(m_B(x_k),\eta _B(x_k)+\rho _B(x_k),v_B(x_k))\) and \(B_4(m_B(x_k),\eta _B(x_k),v_B(x_k)+\rho _B(x_k))\) are the vertices of a tetrahedron that covers the whole range of values of PFS B at \(x_k\). Since the centroid of the tetrahedron is taken to carry the largest amount of information, we use it as the representative point; as the mean of the four vertices, it is \(\left( m_B(x_k) + \frac{\rho_B(x_k)}{4},\ \eta_B(x_k) + \frac{\rho_B(x_k)}{4},\ v_B(x_k) + \frac{\rho_B(x_k)}{4} \right)\).
Since \(\rho_B(x_k) = 1 - m_B(x_k) - \eta_B(x_k) - v_B(x_k)\), the centroid can be expressed as \(\left( \frac{1 + 3m_B(x_k) - \eta_B(x_k) - v_B(x_k)}{4}, \frac{1 + 3\eta_B(x_k) - m_B(x_k) - v_B(x_k)}{4}, \frac{1 + 3v_B(x_k) - m_B(x_k) - \eta_B(x_k)}{4} \right)\). Similarly, the centroid of the tetrahedron formed by the membership, neutral and non-membership degrees of PFS C is \(\left( \frac{1 + 3m_C(x_k) - \eta_C(x_k) - v_C(x_k)}{4}, \frac{1 + 3\eta_C(x_k) - m_C(x_k) - v_C(x_k)}{4}, \frac{1 + 3v_C(x_k) - m_C(x_k) - \eta_C(x_k)}{4} \right)\).
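As a quick numerical check of the centroid formula, the sketch below builds the four vertices \(B_1, \ldots, B_4\) for one picture fuzzy value and compares their mean with the closed form above. The function names are hypothetical. When \(\rho_B(x_k) = 0\), all four vertices coincide and the centroid is simply \((m_B(x_k), \eta_B(x_k), v_B(x_k))\).

```python
def tetrahedron_vertices(m, eta, v):
    """Vertices B1..B4: the refusal degree is added to one coordinate at a time."""
    rho = 1 - m - eta - v
    return [(m, eta, v), (m + rho, eta, v), (m, eta + rho, v), (m, eta, v + rho)]


def centroid(m, eta, v):
    """Closed form ((1+3m-eta-v)/4, (1+3eta-m-v)/4, (1+3v-m-eta)/4)."""
    return ((1 + 3 * m - eta - v) / 4,
            (1 + 3 * eta - m - v) / 4,
            (1 + 3 * v - m - eta) / 4)


# The centroid of a tetrahedron is the mean of its four vertices.
verts = tetrahedron_vertices(0.1, 0.1, 0.4)
mean = tuple(sum(p[i] for p in verts) / 4 for i in range(3))
closed = centroid(0.1, 0.1, 0.4)
print([round(x, 6) for x in mean], [round(x, 6) for x in closed])
# [0.2, 0.2, 0.5] [0.2, 0.2, 0.5]
```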

The distance between the two centroids in the membership degree direction is \( \left| {\frac{{1 + 3m_{B} \left( {x_{k} } \right) - \eta _{B} \left( {x_{k} } \right) - v_{B} \left( {x_{k} } \right)}}{4} - \frac{{1 + 3m_{C} \left( {x_{k} } \right) - \eta _{C} \left( {x_{k} } \right) - v_{C} \left( {x_{k} } \right)}}{4}} \right| = \frac{{\left| {3\left( {m_{B} \left( {x_{k} } \right) - m_{C} \left( {x_{k} } \right)} \right) - \left( {\eta _{B} \left( {x_{k} } \right) - \eta _{C} \left( {x_{k} } \right)} \right) - \left( {v_{B} \left( {x_{k} } \right) - v_{C} \left( {x_{k} } \right)} \right)} \right|}}{4} \). The distance between the two centroids in the neutral degree direction is \(\left| {\frac{{1 + 3{\eta _B}\left( {{x_k}} \right) - {m_B}\left( {{x_k}} \right) - {v_B}\left( {{x_k}} \right) }}{4} - \frac{{1 + 3{\eta _C}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) - {v_C}\left( {{x_k}} \right) }}{4}} \right| = \frac{{\left| {3\left( {{\eta _B}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right) - \left( {{m_B}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right) - \left( {{v_B}\left( {{x_k}} \right) - {v_C}\left( {{x_k}} \right) } \right) } \right| }}{4}\). The distance between the two centroids in the non-membership degree direction is \( \left| {\frac{{1 + 3v_{B} \left( {x_{k} } \right) - m_{B} \left( {x_{k} } \right) - \eta _{B} \left( {x_{k} } \right)}}{4} - \frac{{1 + 3v_{C} \left( {x_{k} } \right) - m_{C} \left( {x_{k} } \right) - \eta _{C} \left( {x_{k} } \right)}}{4}} \right| = \frac{{\left| {3\left( {v_{B} \left( {x_{k} } \right) - v_{C} \left( {x_{k} } \right)} \right) - \left( {m_{B} \left( {x_{k} } \right) - m_{C} \left( {x_{k} } \right)} \right) - \left( {\eta _{B} \left( {x_{k} } \right) - \eta _{C} \left( {x_{k} } \right)} \right)} \right|}}{4} \).
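The three directional distances depend only on the differences of the degrees, which the following sketch makes explicit. It also checks them against the coordinate-wise differences of the two centroids for \(B = (0.1, 0.1, 0.4)\) and \(C = (0.1, 0.6, 0.3)\), the values used later in Example 1. The function names are illustrative.

```python
def centroid(p):
    """Closed-form tetrahedral centroid of a picture fuzzy value p = (m, eta, v)."""
    m, eta, v = p
    return ((1 + 3 * m - eta - v) / 4,
            (1 + 3 * eta - m - v) / 4,
            (1 + 3 * v - m - eta) / 4)


def axis_distances(b, c):
    """Centroid distances along the membership, neutral and non-membership axes,
    written in terms of the degree differences as in the text."""
    dm, deta, dv = b[0] - c[0], b[1] - c[1], b[2] - c[2]
    return (abs(3 * dm - deta - dv) / 4,   # membership direction
            abs(3 * deta - dm - dv) / 4,   # neutral direction
            abs(3 * dv - dm - deta) / 4)   # non-membership direction


b, c = (0.1, 0.1, 0.4), (0.1, 0.6, 0.3)
direct = tuple(abs(x - y) for x, y in zip(centroid(b), centroid(c)))
print([round(d, 6) for d in axis_distances(b, c)])  # [0.1, 0.4, 0.2]
```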

Khan et al. [26] constructed a similarity measure \({S_{KK}}\) for PFSs based on the distance between the two tetrahedral centroids. However, their final formula (17) changes the signs of the terms in the neutral and non-membership directions, so these two terms no longer represent the distances between the centroids.

When Ganie and Singh [24] studied the PF similarity measures based on the Hamming distance [22], they found that these measures can produce unreasonable results. In addition, they can yield unclassifiable results in pattern recognition problems. Because of the shortcomings of the PF similarity measure based on the tetrahedral centroid distance proposed by Khan et al. [26] and of the one based on the Hamming distance proposed by Singh et al. [22], we next consider a hybrid PF similarity measure that combines the Hamming distance and the transformed tetrahedral centroid distance.

3.2 PF Similarity Measure Combines the Hamming Distance and the Transformed Tetrahedral Centroid Distance

In this section, we define a new PF similarity measure and verify that it satisfies Definition 3.

Let B and C be two PFSs in the universe of discourse X, where \(B = \left\{ {\left\langle {{x_k},{m_B}\left( {{x_k}} \right) ,{\eta _B}\left( {{x_k}} \right) ,{v_B}\left( {{x_k}} \right) } \right\rangle } \right\} \) and \(C = \left\{ {\left\langle {{x_k},{m_C}\left( {{x_k}} \right) ,{\eta _C}\left( {{x_k}} \right) ,{v_C}\left( {{x_k}} \right) } \right\rangle } \right\} \). We introduce a hybrid PF similarity measure between the PFSs B and C that combines the Hamming distance and the transformed tetrahedral centroid distance:

\[ S_L(B,C) = 1 - \frac{1}{n}\sum_{k=1}^{n}\left[ \frac{\left|m_B(x_k) - m_C(x_k)\right| + \left|\eta_B(x_k) - \eta_C(x_k)\right| + \left|v_B(x_k) - v_C(x_k)\right|}{2}\left(1 - \frac{\rho_B(x_k) + \rho_C(x_k)}{2}\right) + \frac{\left|3\left(v_B(x_k) - v_C(x_k)\right) - \left(m_B(x_k) - m_C(x_k)\right) - \left(\eta_B(x_k) - \eta_C(x_k)\right)\right|}{4}\cdot\frac{\rho_B(x_k) + \rho_C(x_k)}{2} \right] \qquad (19) \]

where \(\frac{{\left| {3\left( {{v_B}\left( {{x_k}} \right) - {v_C}\left( {{x_k}} \right) } \right) - \left( {{m_B}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right) - \left( {{\eta _B}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right) } \right| }}{4}\) denotes the distance between the two centroids in the non-membership degree direction, and \(\frac{{\left| {{m_B}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right| + \left| {{\eta _B}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right| + \left| {{v_B}\left( {{x_k}} \right) - {v_C}\left( {{x_k}} \right) } \right| }}{2}\) denotes the Hamming distance based on the membership, neutral and non-membership degrees. The arithmetic mean of the refusal degrees, \(\frac{{{\rho _B}\left( {{x_k}} \right) + {\rho _C}\left( {{x_k}} \right) }}{2}\), and its complement \(\left( {1 - \frac{{{\rho _B}\left( {{x_k}} \right) + {\rho _C}\left( {{x_k}} \right) }}{2}} \right) \) serve as the weights of these two terms.
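The following Python sketch computes \(S_L\) from the ingredients described above: the Hamming term weighted by one minus the mean refusal degree, plus the non-membership centroid distance weighted by the mean refusal degree, averaged over the n elements. It is an illustrative reading of formula (19), not the authors' code, and the function name `s_l` is hypothetical. With this reading, the PFSs of Example 1 in Section 4.1 give 0.72 and 0.66875, in line with the reported values 0.72 and 0.669.

```python
from typing import Sequence, Tuple

PFV = Tuple[float, float, float]  # (m, eta, v) of one element x_k


def s_l(B: Sequence[PFV], C: Sequence[PFV]) -> float:
    """Hybrid PF similarity S_L(B, C), read from the description of formula (19)."""
    if not B or len(B) != len(C):
        raise ValueError("B and C must be non-empty PFSs on the same universe")
    total = 0.0
    for (mb, eb, vb), (mc, ec, vc) in zip(B, C):
        # Arithmetic mean of the two refusal degrees, used as the mixing weight.
        rho_bar = ((1 - mb - eb - vb) + (1 - mc - ec - vc)) / 2
        # Hamming term over membership, neutral and non-membership degrees.
        hamming = (abs(mb - mc) + abs(eb - ec) + abs(vb - vc)) / 2
        # Centroid distance in the non-membership direction.
        centroid_v = abs(3 * (vb - vc) - (mb - mc) - (eb - ec)) / 4
        total += hamming * (1 - rho_bar) + centroid_v * rho_bar
    return 1 - total / len(B)


# The one-element PFSs of Example 1 (Section 4.1).
B = [(0.1, 0.1, 0.4)]
C = [(0.1, 0.6, 0.3)]
D = [(0.1, 0.6, 0.2)]
print(round(s_l(B, C), 5), round(s_l(B, D), 5))  # 0.72 0.66875
```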

Theorem 1

\({S_L}\left( {B,C} \right) \) is a PF similarity measure between the two PFSs B and C, since it satisfies the four properties of Definition 3.

Proof

(1) \(0 \le {S_L}\left( {B,C} \right) \le 1\)

We bound the two terms in the bracket of formula (19) separately. From Definition 1, we have

\(0 \le {m_B}\left( {{x_k}} \right) \le 1,0 \le {\eta _B}\left( {{x_k}} \right) \le 1, 0 \le {v_B}\left( {{x_k}} \right) \le 1, 0 \le {m_C}\left( {{x_k}} \right) \le 1, 0 \le {\eta _C}\left( {{x_k}} \right) \le 1, 0 \le {v_C}\left( {{x_k}} \right) \le 1\), \(0 \le {m_B}\left( {{x_k}} \right) + {\eta _B}\left( {{x_k}} \right) + {v_B}\left( {{x_k}} \right) \le 1, 0 \le {m_C}\left( {{x_k}} \right) + {\eta _C}\left( {{x_k}} \right) + {v_C}\left( {{x_k}} \right) \le 1\).

Therefore, we have the following inequalities:

\(\begin{array}{l} - 1 \le 3{v_B}\left( {{x_k}} \right) - {m_B}\left( {{x_k}} \right) - {\eta _B}\left( {{x_k}} \right) \le 3;\\ - 3 \le - \left( {3{v_C}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right) \le 1 \end{array}\);

\(0 \le \left| {{m_B}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right| + \left| {{\eta _B}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right| + \left| {{v_B}\left( {{x_k}} \right) - {v_C}\left( {{x_k}} \right) } \right| \le 2\),

then we have

\(- 4 \le \left( {3{v_B}\left( {{x_k}} \right) - {m_B}\left( {{x_k}} \right) - {\eta _B}\left( {{x_k}} \right) } \right) - \left( {3{v_C}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right) \le 4\).

So, we have the following inequalities:

\(0 \le \frac{{\left| {{m_B}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right| + \left| {{\eta _B}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right| + \left| {{v_B}\left( {{x_k}} \right) - {v_C}\left( {{x_k}} \right) } \right| }}{2} \le 1\);

\(0 \le \frac{{\left| {3\left( {{v_B}\left( {{x_k}} \right) - {v_C}\left( {{x_k}} \right) } \right) - \left( {{m_B}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right) - \left( {{\eta _B}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right) } \right| }}{4} \le 1\).

Since \({\rho _B}\left( {{x_k}} \right) = 1 - {m_B}\left( {{x_k}} \right) - {\eta _B}\left( {{x_k}} \right) - {v_B}\left( {{x_k}} \right) \) and \({\rho _C}\left( {{x_k}} \right) = 1 - {m_C}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) - {v_C}\left( {{x_k}} \right) \), we obtain \(0 \le \frac{{{\rho _B}\left( {{x_k}} \right) + {\rho _C}\left( {{x_k}} \right) }}{2} \le 1\); furthermore, we have:

\(0 \le \frac{{\left| {{m_B}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right| + \left| {{\eta _B}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right| + \left| {{v_B}\left( {{x_k}} \right) - {v_C}\left( {{x_k}} \right) } \right| }}{2} \times \left( {1 - \frac{{{\rho _B}\left( {{x_k}} \right) + {\rho _C}\left( {{x_k}} \right) }}{2}} \right) \le 1 - \frac{{{\rho _B}\left( {{x_k}} \right) + {\rho _C}\left( {{x_k}} \right) }}{2}\);

\(0 \le \frac{{\left| {3\left( {{v_B}\left( {{x_k}} \right) - {v_C}\left( {{x_k}} \right) } \right) - \left( {{m_B}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right) - \left( {{\eta _B}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right) } \right| }}{4} \times \frac{{{\rho _B}\left( {{x_k}} \right) + {\rho _C}\left( {{x_k}} \right) }}{2} \le \frac{{{\rho _B}\left( {{x_k}} \right) + {\rho _C}\left( {{x_k}} \right) }}{2}\).

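Adding the last two inequalities shows that, for every \(x_k\), the bracketed term in formula (19) lies between 0 and \(\left( 1 - \frac{\rho_B(x_k) + \rho_C(x_k)}{2} \right) + \frac{\rho_B(x_k) + \rho_C(x_k)}{2} = 1\), so its average over \(k = 1, 2, \ldots, n\) also lies in \([0,1]\).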

Finally, \(0 \le {S_L}\left( {B,C} \right) \le 1\).

(2) \({S_L}\left( {B,C} \right) ={S_L}\left( {C,B} \right) \)

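Since \(|a - b| = |b - a|\), interchanging B and C leaves \(\left|m_B(x_k) - m_C(x_k)\right| + \left|\eta_B(x_k) - \eta_C(x_k)\right| + \left|v_B(x_k) - v_C(x_k)\right|\) and \(\left|3(v_B(x_k) - v_C(x_k)) - (m_B(x_k) - m_C(x_k)) - (\eta_B(x_k) - \eta_C(x_k))\right|\) unchanged, and \(\rho_B(x_k) + \rho_C(x_k) = \rho_C(x_k) + \rho_B(x_k)\). Hence every summand of formula (19) is symmetric in B and C, and \({S_L}\left( {C,B} \right) = {S_L}\left( {B,C} \right)\).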

(3) \({S_L}\left( {B,C} \right) = 1\), if and only if \(B=C\).

“\(\Rightarrow \)” If \({S_L}\left( {B,C} \right) = 1\), then by formula (19) the average of the nonnegative bracketed terms is zero, so for every \(x_k\) both nonnegative products vanish:

\(\frac{{\left| {{m_B}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right| + \left| {{\eta _B}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right| + \left| {{v_B}\left( {{x_k}} \right) - {v_C}\left( {{x_k}} \right) } \right| }}{2} \times \left( {1 - \frac{{{\rho _B}\left( {{x_k}} \right) + {\rho _C}\left( {{x_k}} \right) }}{2}} \right) = 0\) and \(\frac{{\left| {3\left( {{v_B}\left( {{x_k}} \right) - {v_C}\left( {{x_k}} \right) } \right) - \left( {{m_B}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right) - \left( {{\eta _B}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right) } \right| }}{4} \times \frac{{{\rho _B}\left( {{x_k}} \right) + {\rho _C}\left( {{x_k}} \right) }}{2} = 0\).

If \(\frac{\left|m_B(x_k) - m_C(x_k)\right| + \left|\eta_B(x_k) - \eta_C(x_k)\right| + \left|v_B(x_k) - v_C(x_k)\right|}{2} \times \left(1 - \frac{\rho_B(x_k) + \rho_C(x_k)}{2}\right) = 0\), then either \(m_B(x_k) = m_C(x_k)\), \(\eta_B(x_k) = \eta_C(x_k)\) and \(v_B(x_k) = v_C(x_k)\), which means that B and C coincide at \(x_k\), or \(\frac{\rho_B(x_k) + \rho_C(x_k)}{2} = 1\).

If \(\frac{\left|3(v_B(x_k) - v_C(x_k)) - (m_B(x_k) - m_C(x_k)) - (\eta_B(x_k) - \eta_C(x_k))\right|}{4} \times \frac{\rho_B(x_k) + \rho_C(x_k)}{2} = 0\), then either \(3v_B(x_k) - m_B(x_k) - \eta_B(x_k) = 3v_C(x_k) - m_C(x_k) - \eta_C(x_k)\) or \(\frac{\rho_B(x_k) + \rho_C(x_k)}{2} = 0\).

Since \(\frac{\rho_B(x_k) + \rho_C(x_k)}{2} = 1\) and \(\frac{\rho_B(x_k) + \rho_C(x_k)}{2} = 0\) cannot hold simultaneously, if B and C did not coincide at \(x_k\), the only remaining case would be \(3v_B(x_k) - m_B(x_k) - \eta_B(x_k) = 3v_C(x_k) - m_C(x_k) - \eta_C(x_k)\) together with \(\frac{\rho_B(x_k) + \rho_C(x_k)}{2} = 1\). Then \(\rho_B(x_k) = \rho_C(x_k) = 1\), which means that \(v_B(x_k) = m_B(x_k) = \eta_B(x_k) = v_C(x_k) = m_C(x_k) = \eta_C(x_k) = 0\), so B and C coincide at \(x_k\) after all. Therefore, B and C coincide at every \(x_k\), that is, \(B=C\).

Accordingly, we have proved that if \({S_L}\left( {B,C} \right) = 1\) then \(B=C\).

“ \(\Leftarrow \)” If \(B=C\), according to definition 2, we have: \({m_B}\left( {{x_k}} \right) = {m_C}\left( {{x_k}} \right) ,{\eta _B}\left( {{x_k}} \right) = {\eta _C}\left( {{x_k}} \right) ,{v_B}\left( {{x_k}} \right) = {v_C}\left( {{x_k}} \right) ,{\rho _B}\left( {{x_k}} \right) = {\rho _C}\left( {{x_k}} \right) \). According to formula (19), we have \({S_L}\left( {B,C} \right) = 1\).

(4) If \(B \subseteq C \subseteq D\), then \({S_L}\left( {B,C} \right) \ge {S_L}\left( {B,D} \right) \) and \({S_L}\left( {C,D} \right) \ge {S_L}\left( {B,D} \right) \).

Under this assumption, we first remove the absolute values in formula (19).

If \(B \subseteq C \subseteq D\), according to definition 2, we have: \(0 \le {m_B}\left( {{x_k}} \right) \le {m_C}\left( {{x_k}} \right) \le {m_D}\left( {{x_k}} \right) \le 1,0 \le {\eta _B}\left( {{x_k}} \right) \le {\eta _C}\left( {{x_k}} \right) \le {\eta _D}\left( {{x_k}} \right) \le 1\) and \(1 \ge {v_B}\left( {{x_k}} \right) \ge {v_C}\left( {{x_k}} \right) \ge {v_D}\left( {{x_k}} \right) \ge 0\). Therefore, we have the following inequalities:

\(3{v_B}\left( {{x_k}} \right) - {m_B}\left( {{x_k}} \right) - {\eta _B}\left( {{x_k}} \right) \ge 3{v_C}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) \ge 3{v_D}\left( {{x_k}} \right) - {m_D}\left( {{x_k}} \right) - {\eta _D}\left( {{x_k}} \right) \).

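Hence, all absolute values in formula (19) can be removed. Moreover, \(\rho_B(x_k) - \rho_C(x_k) = (m_C(x_k) - m_B(x_k)) + (\eta_C(x_k) - \eta_B(x_k)) - (v_B(x_k) - v_C(x_k))\), so the Hamming term minus the centroid term equals \(\frac{\rho_B(x_k) - \rho_C(x_k)}{4}\), and the bracketed term equals the Hamming term minus \(\frac{\rho_B(x_k) + \rho_C(x_k)}{2}\cdot\frac{\rho_B(x_k) - \rho_C(x_k)}{4}\). Therefore, we have

\[ S_L(B,C) = 1 - \frac{1}{n}\sum_{k=1}^{n}\left[ \frac{(m_C(x_k) - m_B(x_k)) + (\eta_C(x_k) - \eta_B(x_k)) + (v_B(x_k) - v_C(x_k))}{2} - \frac{\rho_B(x_k)^2 - \rho_C(x_k)^2}{8} \right]. \]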

Similarly, we can obtain:

\({S_L}\left( {B,D} \right) = 1 - \frac{1}{n}\sum \limits _{k = 1}^n {\left[ {\frac{{\left( {{m_D}\left( {{x_k}} \right) - {m_B}\left( {{x_k}} \right) } \right) + \left( {{\eta _D}\left( {{x_k}} \right) - {\eta _B}\left( {{x_k}} \right) } \right) + \left( {{v_B}\left( {{x_k}} \right) - {v_D}\left( {{x_k}} \right) } \right) }}{2} - \frac{{{\rho _B}{{\left( {{x_k}} \right) }^2} - {\rho _D}{{\left( {{x_k}} \right) }^2}}}{8}} \right] } \).

Accordingly, subtracting the two expressions, we have \({S_L}\left( {B,C} \right) - {S_L}\left( {B,D} \right) \)

\( = \frac{1}{n}\sum \limits _{k = 1}^n {\left[ \begin{array}{l} \frac{{4\left[ {\left( {{m_D}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right) + \left( {{\eta _D}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right) + \left( {{v_C}\left( {{x_k}} \right) - {v_D}\left( {{x_k}} \right) } \right) } \right] }}{8}\\ + \frac{{\left( {{\rho _D}\left( {{x_k}} \right) - {\rho _C}\left( {{x_k}} \right) } \right) \left( {{\rho _D}\left( {{x_k}} \right) + {\rho _C}\left( {{x_k}} \right) } \right) }}{8} \end{array} \right] } \)

\(= \frac{1}{{8n}}\sum \limits _{k = 1}^n {\left\{ \begin{array}{l} 4\left[ {\left( {{m_D}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right) + \left( {{\eta _D}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right) + \left( {{v_C}\left( {{x_k}} \right) - {v_D}\left( {{x_k}} \right) } \right) } \right] + \\ \left[ {\left( {{v_C}\left( {{x_k}} \right) - {v_D}\left( {{x_k}} \right) } \right) - \left( {{m_D}\left( {{x_k}} \right) - {m_C}\left( {{x_k}} \right) } \right) - \left( {{\eta _D}\left( {{x_k}} \right) - {\eta _C}\left( {{x_k}} \right) } \right) } \right] \times \left( {{\rho _D}\left( {{x_k}} \right) + {\rho _C}\left( {{x_k}} \right) } \right) \end{array} \right\} }\)

Because \(m_C(x_k) \le m_D(x_k)\), \(\eta_C(x_k) \le \eta_D(x_k)\), \(v_C(x_k) \ge v_D(x_k)\) and \(0 \le \rho_C(x_k) + \rho_D(x_k) \le 2\), each summand can be rewritten as \(\left(4 - \rho_C(x_k) - \rho_D(x_k)\right)\left[(m_D(x_k) - m_C(x_k)) + (\eta_D(x_k) - \eta_C(x_k))\right] + \left(4 + \rho_C(x_k) + \rho_D(x_k)\right)(v_C(x_k) - v_D(x_k))\), which is nonnegative. Hence \({S_L}\left( {B,C} \right) - {S_L}\left( {B,D} \right) \ge 0\), so \({S_L}\left( {B,C} \right) \ge {S_L}\left( {B,D} \right) \).

Similarly, we can prove \({S_L}\left( {C,D} \right) \ge {S_L}\left( {B,D} \right) \).
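The inequality in property (4) can also be spot-checked numerically. The Python sketch below assumes single-element PFSs (n = 1) and uses only the expression for \(S_L\) from the start of this proof, which applies when the first argument is contained in the second; the general formula (19) is not restated here. The helper names `s_l_included`, `shrink`, `random_chain` and `property_4_holds` are hypothetical, and the random check illustrates rather than replaces the proof.

```python
import random


def s_l_included(b, d):
    """S_L for single-element PFSs b = (m, eta, v) and d, assuming b is contained in d.

    Uses the expression from the proof; rho = 1 - m - eta - v is the refusal degree.
    """
    mb, eb, vb = b
    md, ed, vd = d
    rho_b = 1 - mb - eb - vb
    rho_d = 1 - md - ed - vd
    hamming_part = ((md - mb) + (ed - eb) + (vb - vd)) / 2
    rho_part = (rho_b ** 2 - rho_d ** 2) / 8
    return 1 - (hamming_part - rho_part)


def shrink(p, rng):
    """Return a valid PFS value q contained in p (smaller m and eta, larger v)."""
    m, eta, v = p
    m_q = m * rng.random()
    eta_q = eta * rng.random()
    # v may grow only into the slack left by m_q + eta_q, so m + eta + v <= 1 still holds
    v_q = v + (1 - m_q - eta_q - v) * rng.random()
    return (m_q, eta_q, v_q)


def random_chain(rng):
    """Draw single-element PFSs with B contained in C contained in D."""
    # Spacings of three sorted uniforms give a uniform point with m + eta + v <= 1
    c0, c1, c2 = sorted(rng.random() for _ in range(3))
    d = (c0, c1 - c0, c2 - c1)
    c = shrink(d, rng)
    b = shrink(c, rng)
    return b, c, d


def property_4_holds(trials=10_000, seed=0, tol=1e-12):
    """Check S_L(B,C) >= S_L(B,D) and S_L(C,D) >= S_L(B,D) on random chains."""
    rng = random.Random(seed)
    for _ in range(trials):
        b, c, d = random_chain(rng)
        s_bd = s_l_included(b, d)
        if s_l_included(b, c) < s_bd - tol or s_l_included(c, d) < s_bd - tol:
            return False
    return True


print(property_4_holds())  # True
```

The small tolerance only absorbs floating-point rounding when a chain is nearly degenerate (C almost equal to B or D).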

If each element \(x_k\) is assigned a weight \(w_k \in [0,1]\) with \(\sum_{k=1}^n w_k = 1\), then the PF weighted similarity measure between the PFSs B and C can be defined as

Theorem 2

\({S_{WL}}\left( {B,C} \right) \) is a PF similarity measure between the two PFSs B and C.

Proof

The result follows directly from the preceding proof and Definition 3.

4 Experiments and Analysis

In this section, to verify the rationality and advantages of the proposed PF similarity measure in practical cases, we compare the existing PF similarity measures (1)–(18) with the proposed measure (19) through numerical examples and pattern recognition problems.

4.1 Numerical Comparisons

The PF similarity measure proposed in the previous section combines the Hamming distance and the transformed tetrahedral centroid distance. We first use an example to illustrate the counter-intuitive behavior of the PF similarity measure of Khan et al. [26], which is based on the transformed tetrahedral centroid distance, and the insufficient discrimination of the PF similarity measure of Singh et al. [22], which is based on the Hamming distance.

Example 1

Consider the following three PFSs in the universe of discourse \(X = \{x\}\): \(B = \{\langle 0.1,0.1,0.4\rangle\}\), \(C = \{\langle 0.1,0.6,0.3\rangle\}\) and \(D = \{\langle 0.1,0.6,0.2\rangle\}\). By Definition 2(3), \(B \subseteq C \subseteq D\), so Definition 3(4) requires \(S(B,C) \ge S(B,D)\). The Hamming-distance-based PF similarity measure (8) gives \(S_{SMK1}(B,C) = 0.75\) and \(S_{SMK1}(B,D) = 0.75\). The PF similarity measure (17), based on the transformed tetrahedral centroid distance, gives \(S_{KK}(B,C) = 0.833\) and \(S_{KK}(B,D) = 0.858\). Our proposed measure (19), which combines the Hamming distance and the transformed tetrahedral centroid distance, gives \(S_L(B,C) = 0.72\) and \(S_L(B,D) = 0.669\).

These results show that \(S_{KK}\), based on the transformed tetrahedral centroid distance, violates Definition 3(4), since \(S_{KK}(B,C) < S_{KK}(B,D)\), which is clearly counter-intuitive. Although \(S_{SMK1}\), based on the Hamming distance, does not violate the definition, it gives both pairs the same value, so in a pattern recognition problem it would be difficult to attribute B to C or to D. In contrast, our proposed measure \(S_L\) produces no unreasonable result and clearly indicates that B is more similar to C than to D. Because \(S_L\) combines the Hamming distance and the transformed tetrahedral centroid distance, it retains the advantages of both distances while overcoming their shortcomings.
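Both pairs in Example 1 satisfy the inclusion used in the proof of property (4) (\(B \subseteq C\) and \(B \subseteq D\)), so the reported \(S_L\) values can be reproduced from that expression. A minimal Python sketch, where `s_l_included` is a hypothetical helper valid only under this inclusion (it is not the general formula (19)):

```python
def s_l_included(b, d):
    """S_L for single-element PFSs b = (m, eta, v) and d, assuming b is contained in d."""
    mb, eb, vb = b
    md, ed, vd = d
    rho_b = 1 - mb - eb - vb  # refusal degree of b
    rho_d = 1 - md - ed - vd  # refusal degree of d
    return 1 - (((md - mb) + (ed - eb) + (vb - vd)) / 2
                - (rho_b ** 2 - rho_d ** 2) / 8)


B = (0.1, 0.1, 0.4)
C = (0.1, 0.6, 0.3)
D = (0.1, 0.6, 0.2)

s_bc = s_l_included(B, C)  # 1 - (0.6 / 2 - 0.16 / 8) = 0.72
s_bd = s_l_included(B, D)  # 1 - (0.7 / 2 - 0.15 / 8) = 0.66875
print(round(s_bc, 3), round(s_bd, 3))  # 0.72 0.669
```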

The next two examples further illustrate some of the advantages of our proposed PF similarity measure through comparative analysis with existing PF similarity measures.

Example 2

Consider the following three PFSs in the universe of discourse \(X = \{x\}\): \(B = \{\langle 0.0,0.5,0.0\rangle\}\), \(C = \{\langle 0.0,0.0,1.0\rangle\}\) and \(D = \{\langle 0.0,0.0,0.0\rangle\}\). Intuitively, D is closer to B than to C, so the similarity between B and D should be higher than that between C and D, that is, \(S(B,D) > S(C,D)\). Table 1 compares the similarity values computed with formulas (1)–(19).

Table 1 shows that the PF similarity measures \(S_{W2}\), \(S_{W3}\), \(S_{W4}\), \(S_{W5}\), \(S_{WG1}\), \(S_{WG2}\), \(S_{SMK1}\), \(S_{SMK2}\), \(S_{SMK3}\), \(S_{GS3}\), \(S_{GS4}\) and \(S_{GS5}\) yield the unreasonable result \(S(B,D) = S(C,D)\). This contradicts the analysis above: these measures cannot tell whether D is more similar to B or to C. In contrast, neither our proposed measure \(S_L\) nor the existing measures \(S_{W1}\), \(S_{GS1}\), \(S_{GS2}\), \(S_{LZ}\), \(S_{KK}\) and \(S_{TP}\) produces an unreasonable result, which shows that the proposed measure is effective in this example.

Example 3

Consider the following three PFSs in the universe of discourse \(X = \{x\}\): \(B = \{\langle 0.4,0.1,0.2\rangle\}\), \(C = \{\langle 0.5,0.1,0.2\rangle\}\) and \(D = \{\langle 0.5,0.1,0.1\rangle\}\). By Definition 2(3), \(B \subseteq C \subseteq D\), so by Definition 3(4) the similarity measures among these PFSs should satisfy \(S(B,C) \ge S(B,D)\) and \(S(C,D) \ge S(B,D)\). Table 2 compares the similarity values computed with formulas (1)–(19).

Table 2 shows that the PF similarity measures \(S_{W5}\) and \(S_{GS2}\) give the counter-intuitive result \(S(B,C) < S(B,D)\), which violates property (4) of Definition 3. The measures \(S_{W1}\), \(S_{W2}\), \(S_{W3}\), \(S_{W4}\), \(S_{SMK1}\), \(S_{SMK2}\), \(S_{SMK3}\), \(S_{GS2}\), \(S_{GS4}\) and \(S_{GS5}\) all give \(S(B,C) = S(C,D) = S(B,D)\), so none of them can distinguish the degrees of similarity among the three PFSs. The measures \(S_{GS3}\), \(S_{KK}\) and \(S_{TP}\) give \(S(B,C) = S(C,D)\), so none of them can tell whether C is more similar to B or to D. All thirteen of these measures produce only weak (non-strict) orderings; applied to decision-making problems, they may fail to identify the best alternative. In contrast, our proposed measure \(S_L\) and the existing measures \(S_{WG1}\), \(S_{GS1}\) and \(S_{LZ}\) not only satisfy Definition 3(4) but also produce strict rankings. The ranking given by \(S_L\) coincides with those of \(S_{GS1}\) and \(S_{LZ}\), namely \(S(B,C) > S(C,D) > S(B,D)\). This shows that the proposed measure discriminates better than most existing measures, as required by the ranking problems that arise in practical decision-making.
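Every pair in Example 3 also satisfies the inclusion assumed in the proof of property (4) (\(B \subseteq C\), \(C \subseteq D\), \(B \subseteq D\)), so the strict ordering stated for \(S_L\) can be checked with the same expression. The Python sketch below is illustrative only: the helper name is hypothetical, and the values in the comments are computed from that expression rather than copied from Table 2.

```python
def s_l_included(b, d):
    """S_L for single-element PFSs b = (m, eta, v) and d, assuming b is contained in d."""
    mb, eb, vb = b
    md, ed, vd = d
    rho_b, rho_d = 1 - mb - eb - vb, 1 - md - ed - vd  # refusal degrees
    return 1 - (((md - mb) + (ed - eb) + (vb - vd)) / 2
                - (rho_b ** 2 - rho_d ** 2) / 8)


B = (0.4, 0.1, 0.2)
C = (0.5, 0.1, 0.2)
D = (0.5, 0.1, 0.1)

values = {
    "S(B,C)": s_l_included(B, C),  # 0.95625
    "S(C,D)": s_l_included(C, D),  # 0.94375
    "S(B,D)": s_l_included(B, D),  # 0.9
}
# Sort the pair labels by decreasing similarity to expose the ranking
ranking = sorted(values, key=values.get, reverse=True)
print(ranking)  # ['S(B,C)', 'S(C,D)', 'S(B,D)']
```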

The comparative analysis of the first three examples shows that many existing PF similarity measures give counter-intuitive or unreasonable results, or fail to produce a strict ranking. Only a few PF similarity measures, including our proposed measure \(S_L\), handle all of these cases satisfactorily. Next, we apply formulas (1)–(19) to pattern recognition problems to test the practicability of \(S_L\).

4.2 Application in Pattern Recognition

Pattern recognition is the problem of assigning an unknown pattern to one of several known patterns. Because of its practicality, it has been applied to problems such as medical diagnosis and corporate decision-making. In the PF environment, many measures can be used to classify unknown patterns, such as distance, accuracy, divergence, similarity and correlation measures. Here we use similarity measures as the classification tool: we apply the proposed PF similarity measure (19) and the existing measures (1)–(18) to pattern recognition problems and compare their strengths and weaknesses.

In the PF environment, a pattern recognition problem is solved with a similarity measure as follows:

Let \({B_i}\left( {i = 1,2, \cdots ,n} \right) \) be some known patterns and C be an unknown pattern, where \({B_i}\) and C are given in the form of PFSs as follows:

\({B_i} = \left\{ {\left\langle {{x_k},{m_{{B_i}}}\left( {{x_k}} \right) ,{\eta _{{B_i}}}\left( {{x_k}} \right) ,{v_{{B_i}}}\left( {{x_k}} \right) } \right\rangle \left| {{x_k} \in X,k = 1,2, \ldots ,n} \right. } \right\} \);

\(C = \left\{ {\left\langle {{x_k},{m_C}\left( {{x_k}} \right) ,{\eta _C}\left( {{x_k}} \right) ,{v_C}\left( {{x_k}} \right) } \right\rangle \left| {{x_k} \in X} \right. } \right\} \).

To classify the unknown pattern C into one of the known patterns \(B_i\) \((i = 1,2,\ldots,n)\), a similarity measure from formulas (1)–(19) is used to calculate the similarity \(S(B_i, C)\) between C and each known pattern. C is then assigned to the pattern \(B_{i^*}\) with the largest similarity, where \(i^* = \arg\max_{1 \le i \le n} S(B_i, C)\).
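The arg max rule can be written as a small Python function. In this sketch a tie for the maximum is reported explicitly rather than broken arbitrarily, since equal maxima leave the rule undecided (the situation reported for several measures in Example 6). The function name, the tolerance `tol` and the similarity values in the usage lines are hypothetical.

```python
from typing import Optional, Sequence


def classify(similarities: Sequence[float], tol: float = 1e-9) -> Optional[int]:
    """Return the 0-based index i* of the known pattern most similar to C.

    similarities[i] holds S(B_{i+1}, C). If two or more patterns share the
    maximum within `tol`, the rule cannot decide and None is returned.
    """
    best = max(similarities)
    winners = [i for i, s in enumerate(similarities) if best - s <= tol]
    return winners[0] if len(winners) == 1 else None


print(classify([0.82, 0.71, 0.64]))  # 0, i.e. C is assigned to B_1
print(classify([0.80, 0.80, 0.64]))  # None: B_1 and B_2 tie
```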

Example 4

Consider the following four PFSs in the universe of discourse \(X = \{x_1,x_2,x_3,x_4,x_5\}\), taken from [40]: \(B_1 = \{\langle 0.4,0.1,0.3\rangle, \langle 0.5,0.2,0.3\rangle, \langle 0.4,0.0,0.3\rangle, \langle 0.7,0.2,0.0\rangle, \langle 0.6,0.1,0.1\rangle\}\), \(B_2 = \{\langle 0.7,0.1,0.1\rangle, \langle 0.2,0.4,0.3\rangle, \langle 0.2,0.5,0.1\rangle, \langle 0.1,0.2,0.5\rangle, \langle 0.3,0.3,0.3\rangle\}\), \(B_3 = \{\langle 0.1,0.4,0.3\rangle, \langle 0.4,0.1,0.3\rangle, \langle 0.3,0.2,0.4\rangle, \langle 0.2,0.3,0.5\rangle, \langle 0.5,0.1,0.3\rangle\}\) and \(C = \{\langle 0.6,0.1,0.2\rangle, \langle 0.3,0.2,0.4\rangle, \langle 0.4,0.2,0.3\rangle, \langle 0.7,0.0,0.1\rangle, \langle 0.4,0.2,0.2\rangle\}\). The aim is to classify the unknown pattern C into one of the known patterns \(B_1\), \(B_2\), \(B_3\) by calculating the similarity between C and each known pattern; C is assigned to the pattern with the largest similarity. Table 3 lists the classification results of the different similarity measures.

Table 3 shows that all the existing measures and our proposed measure classify C into the known pattern \(B_1\), except \(S_{SMK3}\), which gives a different result. This shows that \(S_L\) is effective on this pattern recognition problem.

Example 5

Consider the following three PFSs in the universe of discourse \(X = \{x\}\): \(B_1 = \{\langle 0.2,0.0,0.3\rangle\}\), \(B_2 = \{\langle 0.1,0.0,0.4\rangle\}\) and \(C = \{\langle 0.0,1.0,0.0\rangle\}\). The aim is to classify the unknown pattern C into one of the known patterns \(B_1\), \(B_2\) by calculating the similarity between C and each known pattern; C is assigned to the pattern with the largest similarity. Table 4 lists the classification results of the different similarity measures.

Table 4 shows that the PF similarity measures \(S_{W1}\), \(S_{W2}\), \(S_{W3}\), \(S_{W4}\), \(S_{W5}\), \(S_{WG1}\), \(S_{WG2}\), \(S_{SMK1}\), \(S_{SMK2}\), \(S_{GS1}\), \(S_{GS2}\), \(S_{GS3}\), \(S_{GS4}\), \(S_{GS5}\) and \(S_{LZ}\) all fail to classify C. The measures \(S_{SMK3}\), \(S_{KK}\), \(S_{TP}\) and \(S_L\) all classify C successfully, and most of them assign C to \(B_1\). Thus our proposed measure not only classifies C successfully but also agrees with the majority of the successful measures, indicating that \(S_L\) performs well on this pattern recognition problem.

Example 6

Consider the following four PFSs in the universe of discourse \(X = \{x_1,x_2,x_3\}\): \(B_1 = \{\langle 0.5,0.1,0.1\rangle, \langle 0.3,0.1,0.3\rangle, \langle 0.3,0.1,0.4\rangle\}\), \(B_2 = \{\langle 0.4,0.3,0.2\rangle, \langle 0.3,0.2,0.5\rangle, \langle 0.4,0.1,0.3\rangle\}\), \(B_3 = \{\langle 0.2,0.4,0.3\rangle, \langle 0.1,0.4,0.4\rangle, \langle 0.0,0.0,0.0\rangle\}\) and \(C = \{\langle 0.3,0.2,0.3\rangle, \langle 0.4,0.1,0.3\rangle, \langle 0.4,0.2,0.3\rangle\}\). The aim is to classify the unknown pattern C into one of the known patterns \(B_1\), \(B_2\), \(B_3\) by calculating the similarity between C and each known pattern; C is assigned to the pattern with the largest similarity. Table 5 lists the classification results of the different similarity measures.

Table 5 shows that our proposed measure \(S_L\) classifies the unknown pattern C into \(B_2\). The existing measures \(S_{W5}\), \(S_{WG1}\), \(S_{WG2}\), \(S_{GS1}\), \(S_{GS3}\), \(S_{LZ}\), \(S_{KK}\) and \(S_{TP}\) give the same classification as \(S_L\). The measures \(S_{W1}\), \(S_{W2}\), \(S_{W3}\), \(S_{W4}\), \(S_{SMK1}\), \(S_{SMK2}\), \(S_{SMK3}\), \(S_{GS2}\), \(S_{GS4}\) and \(S_{GS5}\) assign the same similarity to the pairs \((B_1, C)\) and \((B_2, C)\), so they cannot classify C. Therefore, \(S_L\) classifies the unknown pattern consistently with the majority of the discriminating measures and resolves a case that several existing measures cannot classify.

The results in Tables 3, 4 and 5 show that many existing PF similarity measures fail to classify the unknown pattern in at least one example. Only \(S_{TP}\) and \(S_L\) avoid these failures and also agree with the classification given by most existing similarity measures. Hence the proposed measure is more effective than most existing PF similarity measures at identifying the best-matching pattern in pattern recognition problems.

The six examples above, which apply the existing PF similarity measures (1)–(18) and the proposed measure (19), show that \(S_L\) has the following advantages:

1. Our proposed PF similarity measure combines the Hamming distance and the transformed tetrahedral centroid distance. It retains the advantages of both distances while overcoming the shortcomings of the PF similarity measures based on each distance alone.

2. Many existing PF similarity measures cannot distinguish the similarity between different pairs of PFSs. Our proposed measure can, and its results are more reasonable than those of the few existing measures that also can.

3. Some PFSs are highly similar but different and are related by inclusion. Most PF similarity measures cannot distinguish the degree of similarity between such PFSs, and some even violate property (4) of Definition 3. Our proposed PF similarity measure handles these cases, indicating better compatibility.

4. In the pattern recognition examples taken from the literature, our proposed PF similarity measure gives the same classification as most PF similarity measures, indicating good consistency.

5. In the pattern recognition examples, many existing PF similarity measures fail to classify the unknown pattern, whereas our proposed measure classifies it in every case, which shows a clear advantage of \(S_L\) in pattern recognition.

6. Across the six examples, the existing PF similarity measures produce some unreasonable or even wrong results, whereas our proposed measure handles all cases correctly, indicating better stability.

Based on the advantages of the PF similarity measure \({S_{L}}\), we next apply it to multi-attribute decision-making problems and propose an improved VIKOR method.

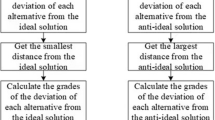

5 An Improved VIKOR Method Based on the Proposed Similarity Measure of PFS

In the PF environment, we propose an improved VIKOR method based on the similarity measure \(S_L\) to solve the MADM problem. In [33], Tian et al. proposed a VIKOR method for the PF environment that computes group utility and individual regret only with respect to the positive ideal solution, so its VIKOR values reflect only closeness to the positive ideal. We extend the method of Tian et al. [33] so that the optimal alternative is chosen by considering both closeness to the positive ideal solution and distance from the negative ideal solution. The proposed similarity measure \(S_L\) is used to calculate the group utility and individual regret values with respect to both the positive and the negative ideal, from which two VIKOR indices are obtained for each alternative. Finally, following the idea of the TOPSIS method, a relative closeness coefficient is calculated for each alternative; the smaller the coefficient, the higher the alternative is ranked.

To verify the effectiveness and practicability of the improved VIKOR method, we apply it to the MADM problem of [34] and compare the resulting ranking of the alternatives with those of the decision methods in [33,34,35, 37].

The specific steps and the flowchart of the improved VIKOR method are given below.

5.1 Algorithmic Steps of the Improved VIKOR Method

Suppose an expert evaluates m alternatives \(A_1, A_2, \ldots, A_m\) with respect to n attributes \(C_1, C_2, \ldots, C_n\). Let \(B = (b_{ij})_{m \times n}\) \((1 \le i \le m, 1 \le j \le n)\) be the PF decision matrix obtained from the expert's evaluations:

\(B = {\left( {{b_{ij}}} \right) _{m \times n}} = \left[ {\begin{array}{*{20}{c}} {{b_{11}}}&{}{{b_{12}}}&{} \cdots &{}{{b_{1n}}}\\ {{b_{21}}}&{}{{b_{22}}}&{} \cdots &{}{{b_{2n}}}\\ \vdots &{} \vdots &{} \ddots &{} \vdots \\ {{b_{m1}}}&{}{{b_{m2}}}&{} \cdots &{}{{b_{mn}}} \end{array}} \right] \),

where \(b_{ij} = (m_{ij}, \eta_{ij}, v_{ij})\) is a picture fuzzy value, and \(m_{ij}\), \(\eta_{ij}\) and \(v_{ij}\) are the membership, neutral and non-membership degrees to which alternative \(A_i\) satisfies attribute \(C_j\). The rows of B correspond to alternatives and the columns to attributes.

Step1: Constructing normalized picture fuzzy decision matrix.

The decision matrix B may contain two types of attributes, namely benefit attributes and cost attributes. To simplify the subsequent calculations, we transform each cost attribute into a benefit attribute according to Definition 2(1). The normalized picture fuzzy decision matrix \(D = (d_{ij})_{m \times n}\) is then as follows, where the rows represent alternatives and the columns represent attributes:
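Formula (21) is not reproduced in this section. The Python sketch below assumes that Definition 2(1) is the standard picture fuzzy complement \((m, \eta, v) \mapsto (v, \eta, m)\) of Cuong and Kreinovich; the function name, the `cost_columns` argument and the matrix entries are hypothetical. Benefit columns are copied unchanged, so D = B whenever every attribute is a benefit attribute.

```python
from typing import List, Set, Tuple

PFV = Tuple[float, float, float]  # picture fuzzy value (m, eta, v)


def normalize(matrix: List[List[PFV]], cost_columns: Set[int]) -> List[List[PFV]]:
    """Turn every cost attribute into a benefit attribute via the assumed PF complement."""
    return [
        [(v, eta, m) if j in cost_columns else (m, eta, v)
         for j, (m, eta, v) in enumerate(row)]
        for row in matrix
    ]


B = [[(0.5, 0.1, 0.3), (0.2, 0.3, 0.4)],   # hypothetical 2 x 2 decision matrix
     [(0.4, 0.2, 0.1), (0.6, 0.1, 0.2)]]
print(normalize(B, cost_columns={1}))
# [[(0.5, 0.1, 0.3), (0.4, 0.3, 0.2)], [(0.4, 0.2, 0.1), (0.2, 0.1, 0.6)]]
```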

Step2: Calculate the weights of attributes.

In an MADM problem, if the evaluation values of the alternatives differ little under an attribute, that attribute is regarded as unimportant; conversely, if they differ greatly, the attribute is regarded as more important. It is therefore necessary to calculate a weight expressing the importance of each attribute; here we use the picture fuzzy entropy [37] to calculate the attribute weights. The weight \(w_j\) of attribute \(C_j\) is given by the following formula:

Here \(E_j\) stands for picture fuzzy entropy [37] of attribute \(C_j\):
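Formulas (22) and (23) are not reproduced in this section. As a hedged sketch, the Python code below uses the common entropy-weight form \(w_j = (1 - E_j)/\sum_{k=1}^n (1 - E_k)\), which gives larger weights to attributes with lower entropy, that is, to attributes whose values differ more across alternatives. Whether formula (22) has exactly this form is an assumption, and the picture fuzzy entropy \(E_j\) of [37] is left as an input; the entropy values in the usage line are hypothetical.

```python
from typing import List, Sequence


def entropy_weights(entropies: Sequence[float]) -> List[float]:
    """Weights w_j = (1 - E_j) / sum_k (1 - E_k) from entropies E_j in [0, 1]."""
    divergence = [1 - e for e in entropies]  # how strongly each attribute discriminates
    total = sum(divergence)
    if total == 0:
        raise ValueError("all entropies equal 1; weights are undefined")
    return [dj / total for dj in divergence]


w = entropy_weights([0.9, 0.7, 0.8])  # hypothetical entropies E_1, E_2, E_3
print([round(x, 4) for x in w])  # [0.1667, 0.5, 0.3333]
```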

Step3: Determine the positive and negative ideal vectors for each attribute.

According to Definitions 2(4) and 2(5), construct the positive ideal picture fuzzy vector \(F^+\) and the negative ideal picture fuzzy vector \(F^-\), respectively, as follows:
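Formula (24) is not reproduced in this section. The Python sketch below assumes that Definitions 2(4) and 2(5) are the picture fuzzy union and intersection of Cuong and Kreinovich, so that, attribute by attribute, \(F^+\) takes (max m, min η, min v) and \(F^-\) takes (min m, min η, max v) over the alternatives. The function name and the matrix entries are hypothetical.

```python
from typing import List, Tuple

PFV = Tuple[float, float, float]  # (m, eta, v)


def ideal_vectors(matrix: List[List[PFV]]) -> Tuple[List[PFV], List[PFV]]:
    """Column-wise PF union (F+) and PF intersection (F-) of a decision matrix."""
    f_pos, f_neg = [], []
    for column in zip(*matrix):  # one column per attribute
        ms, etas, vs = zip(*column)
        f_pos.append((max(ms), min(etas), min(vs)))
        f_neg.append((min(ms), min(etas), max(vs)))
    return f_pos, f_neg


D = [[(0.5, 0.1, 0.3), (0.2, 0.3, 0.4)],   # hypothetical normalized matrix
     [(0.4, 0.2, 0.1), (0.6, 0.1, 0.2)]]
f_pos, f_neg = ideal_vectors(D)
print(f_pos)  # [(0.5, 0.1, 0.1), (0.6, 0.1, 0.2)]
print(f_neg)  # [(0.4, 0.1, 0.3), (0.2, 0.1, 0.4)]
```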

Step4: Obtain the positive ideal similarity measure matrix \(H^+\) and the negative ideal similarity measure matrix \(H^-\).

Using the similarity measure (19), calculate, under each attribute, the similarity between \(F^+\) and the entries of D and between \(F^-\) and the entries of D, and obtain the positive ideal similarity measure matrix \(H^+\) and the negative ideal similarity measure matrix \(H^-\).

Step5: Determine the similarity measure vectors \(P^+\) and \(P^-\) closest to and farthest from the positive ideal for all attributes; determine the similarity measure vectors \(K^+\) and \(K^-\) closest to and farthest from the negative ideal for all attributes.
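Steps 4 and 5 need only a similarity function and column-wise extrema. In the Python sketch below, `sim` can be any PF similarity measure; because formula (19) is not restated in this section, the usage lines plug in a simple Hamming-type stand-in, \(1 - (|\Delta m| + |\Delta\eta| + |\Delta v|)/2\), which is not \(S_L\). Reading formula (26) as taking, for each attribute, the largest entry of \(H^+\) as \(P^+\) and the smallest as \(P^-\) (and likewise \(K^+\) and \(K^-\) from \(H^-\)) is also an assumption. All names and data are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

PFV = Tuple[float, float, float]  # (m, eta, v)
Similarity = Callable[[PFV, PFV], float]


def similarity_matrix(matrix: List[List[PFV]], ideal: Sequence[PFV],
                      sim: Similarity) -> List[List[float]]:
    """h_ij = sim(F_j, d_ij): similarity of each entry to its attribute's ideal value."""
    return [[sim(ideal[j], d_ij) for j, d_ij in enumerate(row)] for row in matrix]


def column_extrema(h: List[List[float]]) -> Tuple[List[float], List[float]]:
    """Per-attribute maximum and minimum of a similarity matrix (e.g. P+ and P- from H+)."""
    columns = list(zip(*h))
    return [max(c) for c in columns], [min(c) for c in columns]


def hamming_standin(a: PFV, b: PFV) -> float:
    """Hypothetical stand-in similarity for illustration only (not S_L)."""
    return 1 - sum(abs(x - y) for x, y in zip(a, b)) / 2


D = [[(0.5, 0.1, 0.3), (0.2, 0.3, 0.4)],
     [(0.4, 0.2, 0.1), (0.6, 0.1, 0.2)]]
F_pos = [(0.5, 0.1, 0.1), (0.6, 0.1, 0.2)]
H_pos = similarity_matrix(D, F_pos, hamming_standin)
P_pos, P_neg = column_extrema(H_pos)
print([[round(x, 2) for x in row] for row in H_pos])  # [[0.9, 0.6], [0.9, 1.0]]
```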

Step6: Calculate the normalized closest positive ideal group utility value \({PS_i}\) and the normalized closest positive ideal individual regret value \({PR_i}\) of alternative \(A_i\), respectively, shown as follows:

Calculate the normalized closest negative ideal group utility value \({KS_i}\) and the normalized closest negative ideal individual regret value \({KR_i}\) of alternative \(A_i\), respectively, shown as follows:

Step7: Compute the closest positive ideal VIKOR index \(Q_i^ +\) and the closest negative ideal VIKOR index \(Q_i^ -\) of alternative \(A_i\), respectively, shown as follows:

Here \(\lambda\) and \(1 - \lambda\) are the weights of the strategy of “the majority of attributes” and of “the individual regret”, respectively; in general, \(\lambda = 0.5\) is adopted.
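Formulas (27)–(29) are not reproduced in this section. Assuming they follow the classical VIKOR scheme, with the similarity \(h^+_{ij}\) as the criterion value, \(P^+_j\) as the best value and \(P^-_j\) as the worst, the positive-side quantities are \(S_i = \sum_j w_j (P^+_j - h^+_{ij})/(P^+_j - P^-_j)\), \(R_i = \max_j w_j (P^+_j - h^+_{ij})/(P^+_j - P^-_j)\) and \(Q_i = \lambda (S_i - \min S)/(\max S - \min S) + (1 - \lambda)(R_i - \min R)/(\max R - \min R)\). The Python sketch below implements only this assumed positive side (formula (28) for the negative side is not sketched); all names and numbers are hypothetical.

```python
from typing import List, Sequence, Tuple


def _rescale(x: float, lo: float, hi: float) -> float:
    """(x - lo) / (hi - lo), taken as 0 when all values coincide."""
    return 0.0 if hi == lo else (x - lo) / (hi - lo)


def vikor_indices(h: List[List[float]], best: Sequence[float], worst: Sequence[float],
                  weights: Sequence[float], lam: float = 0.5
                  ) -> Tuple[List[float], List[float], List[float]]:
    """Classical VIKOR group utility S_i, individual regret R_i and index Q_i."""
    s, r = [], []
    for row in h:
        # Weighted gap to the best value of each attribute, scaled by its best-worst range
        gaps = [w * (b - x) / (b - lo) if b != lo else 0.0
                for x, b, lo, w in zip(row, best, worst, weights)]
        s.append(sum(gaps))  # group utility S_i
        r.append(max(gaps))  # individual regret R_i
    s_lo, s_hi, r_lo, r_hi = min(s), max(s), min(r), max(r)
    q = [lam * _rescale(si, s_lo, s_hi) + (1 - lam) * _rescale(ri, r_lo, r_hi)
         for si, ri in zip(s, r)]
    return s, r, q


h_pos = [[0.9, 0.6], [0.7, 1.0], [0.8, 0.8]]  # hypothetical H+ (3 alternatives, 2 attributes)
S, R, Q = vikor_indices(h_pos, best=[0.9, 1.0], worst=[0.7, 0.6], weights=[0.6, 0.4])
print([round(v, 4) for v in Q])  # [0.1667, 1.0, 0.25]
```

Under the classical convention a smaller \(Q_i\) marks an alternative closer to the ideal, so the first hypothetical alternative would rank first here.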

Step8: Calculate the relative closeness coefficient \({DQ_i}\) of alternative \(A_i\), shown as follows:

The smaller the relative closeness coefficient \(DQ_i\), the higher alternative \(A_i\) is ranked, where \(1 \le i \le m\).

The flowchart of the improved VIKOR method described above is shown in Figure 1.

5.2 Experimental Results and Comparison

Next, to demonstrate the effectiveness of the improved VIKOR method, we apply it to the numerical example in [34] and obtain the preference order of the alternatives.

Example 7

Suppose an expert evaluates five alternatives \(A_1, A_2, A_3, A_4, A_5\) with respect to four attributes \(C_1, C_2, C_3, C_4\). The PF decision matrix \(B = (b_{ij})_{5 \times 4}\) of the expert's evaluations, taken from [34], is as follows:

where the rows represent alternatives and the columns represent attributes.

Step1: All attributes in [34] are benefit attributes, so formula (21) gives the normalized picture fuzzy decision matrix \(D = B\).

Step2: Using formulas (22) and (23), the attribute weights are calculated as \({w_1} = 0.2027,{w_2} = 0.3108,{w_3} = 0.2568\) and \({w_4} = 0.2297\).

Step3: Using formula (24), write the positive and negative ideal vectors, respectively, as follows:

Step4: Using our proposed similarity measure (19), calculate the similarity between \(F^+\) and D and between \(F^-\) and D under each attribute. Using formula (25), obtain the positive ideal similarity measure matrix \(H^+\) and the negative ideal similarity measure matrix \(H^-\) as follows:

Step5: Using formula (26), write the similarity measure vectors \(P^+\) and \(P^-\) closest to and farthest from the positive ideal for all attributes, respectively, as follows:

Using formula (26), write the similarity measure vectors \(K^+\) and \(K^-\) closest to and farthest from the negative ideal for all attributes, respectively, as follows:

Step6: Using formula (27), calculate the normalized closest positive ideal group utility value \({PS_i}\) and the normalized closest positive ideal individual regret value \({PR_i}\) of alternative \(A_i\), respectively, shown as follows:

Using formula (28), calculate the normalized closest negative ideal group utility value \({KS_i}\) and the normalized closest negative ideal individual regret value \({KR_i}\) of alternative \(A_i\), respectively, shown as follows:

Step7: Using formula (29), compute the closest positive ideal VIKOR index \(Q_i^ +\) and the closest negative ideal VIKOR index \(Q_i^ -\) of alternative \(A_i\), respectively, shown as follows:

Step8: Using formula (30), calculate the degree of relative closeness \({DQ_i}\) of alternative \(A_i\), shown as follows:

Because \(D{Q_4}< D{Q_3}< D{Q_1}< D{Q_5} < D{Q_2}\) , the preference order of the alternatives \(A_1\), \(A_2\), \(A_3\), \(A_4\) and \(A_5\) is: \({A_4}> {A_3}> {A_1}> {A_5} > {A_2}\).

Tian et al. [33] combined their own similarity measure (18) with the traditional VIKOR method, which is widely used for MADM problems in PF environments. In [34], Wang et al. applied the picture fuzzy weighted geometric (PFWG) operator they developed to MADM. Wei et al. [35] ranked the given options according to an extended projection model of picture fuzzy sets and then selected the most desirable option. In [37], a picture fuzzy normalized projection (PFNP) model was proposed and combined with the VIKOR method, yielding a PFNP-based VIKOR method for MADM. Applying these methods to Example 7 gives the rankings of the alternatives in Table 6.

Table 6 shows that all five MADM methods select the same best alternative. The improved VIKOR method, the PFWG operator method and the PFNP-VIKOR method produce identical rankings. The extended projection model method ranks only the second and third alternatives differently and agrees with the other methods on the remaining alternatives. The PF VIKOR method of Tian et al. [33] agrees with the other methods only on the best alternative; if the two best alternatives were to be selected in a practical decision-making problem, it would make a different choice from the other methods. Therefore, the improved VIKOR method based on the PF similarity measure \(S_L\) is effective for MADM problems and, being consistent with the other methods, performs better than the PF VIKOR method of Tian et al. [33].

6 Conclusion

This paper proposes a hybrid PF similarity measure combining the Hamming distance and the transformed tetrahedral centroid distance, and proves that it satisfies the properties of a similarity measure. The hybrid measure combines the advantages of the two distances while overcoming the shortcomings of the PF similarity measures based on each distance alone. The existing and proposed PF similarity measures are applied to numerical examples and pattern recognition problems, and the experimental results show that the proposed measure has advantages over the existing ones. Based on the hybrid PF similarity measure, we develop an improved VIKOR method for the PF environment and compare it with other MADM methods through an example; the results verify its practicability and effectiveness. A limitation of this work is that we do not give a geometric interpretation of the hybrid PF similarity measure, which would make it more convincing. Future research will be carried out in the following areas:

1. Applying the hybrid PF similarity measure to medical diagnosis, cluster analysis and image processing.

2. Following Rong et al. [41, 42], applying the proposed hybrid PF similarity measure to the MARCOS method for multi-criteria group decision-making.

3. Extending the improved VIKOR method to interval-valued picture fuzzy sets, generalized picture fuzzy soft sets and other settings.

Availability of Data and Materials

Not applicable.

Abbreviations

- FS: Fuzzy set
- IFS: Intuitionistic fuzzy set
- PF: Picture fuzzy
- PFS: Picture fuzzy set
- PFSs: Picture fuzzy sets
- MADM: Multi-attribute decision-making
- PFWG: Picture fuzzy weighted geometric
- PFNP: Picture fuzzy normalized projection

References

Zadeh, L.A.: Fuzzy sets. Inf. Control 8, 338–353 (1965)

Abu Arqub, O.: Adaptation of reproducing kernel algorithm for solving fuzzy Fredholm-Volterra integrodifferential equations. Neural Comput. Appl. 28(7), 1591–1610 (2017)

Alshammari, M., Al-Smadi, M., Abu Arqub, O., et al.: Residual series representation algorithm for solving fuzzy Duffing oscillator equations. Symmetry 12(4), 572 (2020)

Abu Arqub, O., Singh, J., Alhodaly, M.: Adaptation of kernel functions-based approach with Atangana-Baleanu-Caputo distributed order derivative for solutions of fuzzy fractional Volterra and Fredholm integrodifferential equations. Math. Methods Appl. Sci. 1–28 (2021). https://doi.org/10.1002/mma.7228

Abu Arqub, O., Singh, J., Maayah, B., et al.: Reproducing kernel approach for numerical solutions of fuzzy fractional initial value problems under the Mittag-Leffler kernel differential operator. Math. Methods Appl. Sci. (2021). https://doi.org/10.1002/mma.7305

Atanassov, K.: Intuitionistic fuzzy sets. Fuzzy Sets Syst. 20, 87–96 (1986)

Chaira, T.: A novel intuitionistic fuzzy C means clustering algorithm and its application to medical images. Appl. Soft Comput. 11(2), 1711–1717 (2011)

Wang, Z., Xu, Z., Liu, S., Tang, J.: A netting clustering analysis method under intuitionistic fuzzy environment. Appl. Soft Comput. 11(8), 5558–5564 (2011)

Xu, D., Xu, Z., Liu, S., Zhao, H.: A spectral clustering algorithm based on intuitionistic fuzzy information. Knowl.-Based Syst. 53, 20–26 (2013)

Wang, Z., Xu, Z., Liu, S., Yao, Z.: Direct clustering analysis based on intuitionistic fuzzy implication. Appl. Soft Comput. 23, 1–8 (2014)

Singh, S., Sharma, S., Lalotra, S.: Generalized correlation coefficients of intuitionistic fuzzy sets with application to MAGDM and clustering analysis. Int. J. Fuzzy Syst. 22, 1582–1595 (2020)

Chen, S.M., Cheng, S.H., Lan, T.C.: A novel similarity measure between intuitionistic fuzzy sets based on the centroid points of transformed fuzzy numbers with applications to pattern recognition. Inf. Sci. 343, 15–40 (2016)

Xiao, F.: A distance measure for intuitionistic fuzzy sets and its application to pattern classification problems. IEEE Trans. Syst. Man Cybern. Syst. 51(6), 3980–3992 (2019)

Chen, Z., Liu, P.: Intuitionistic fuzzy value similarity measures for intuitionistic fuzzy sets. Comput. Appl. Math. 41(1), 1–20 (2022)

Chen, S.M., Cheng, S.H., Chiou, C.H.: Fuzzy multiattribute group decision making based on intuitionistic fuzzy sets and evidential reasoning methodology. Inform. Fusion 27, 215–227 (2016)

Cali, S., Balaman, S.Y.: A novel outranking based multi criteria group decision making methodology integrating ELECTRE and VIKOR under intuitionistic fuzzy environment. Expert Syst. Appl. 119, 36–50 (2019)

Cuong, B.C., Kreinovich, V.: Picture fuzzy sets: a new concept for computational intelligence problems. In: 2013 Third World Congress on Information and Communication Technologies (WICT 2013), pp. 1–6. IEEE (2013)

Cuong, B.C., Kreinovich, V.: Picture fuzzy sets. J. Comput. Sci. Cybern. 30(4), 409–420 (2014)

Wei, G.: Some cosine similarity measures for picture fuzzy sets and their applications to strategic decision making. Informatica 28(3), 547–564 (2017)

Wei, G.: Some similarity measures for picture fuzzy sets and their applications. Iran. J. Fuzzy Syst. 15(1), 77–89 (2018)

Wei, G., Gao, H.: The generalized Dice similarity measures for picture fuzzy sets and their applications. Informatica 29(1), 107–124 (2018)

Singh, P., Mishra, N.K., Kumar, M., et al.: Risk analysis of flood disaster based on similarity measures in picture fuzzy environment. Afr. Mat. 29(7), 1019–1038 (2018)

Luo, M., Zhang, Y.: A new similarity measure between picture fuzzy sets and its application. Eng. Appl. Artif. Intell. 96, 103956 (2020)

Ganie, A.H., Singh, S.: A picture fuzzy similarity measure based on direct operations and novel multi-attribute decision-making method. Neural Comput. Appl. 33(15), 9199–9219 (2021)

Singh, S., Ganie, A.H.: Applications of picture fuzzy similarity measures in pattern recognition, clustering, and MADM. Expert Syst. Appl. 168, 114264 (2021)

Khan, M.J., Kumam, P., Deebani, W., et al.: Bi-parametric distance and similarity measures of picture fuzzy sets and their applications in medical diagnosis. Egypt. Inform. J. 22(2), 201–212 (2021)

Dutta, P.: Medical diagnosis via distance measures on picture fuzzy sets. AMSE J. AMSE IIETA Publ. Ser. Adv. A 54(2), 657–672 (2017)

Van Dinh, N., Thao, N.X.: Some measures of picture fuzzy sets and their application in multi-attribute decision making. Int. J. Math. Sci. Comput. (IJMSC) 4(3), 23–41 (2018)

Thao, N.X.: Similarity measures of picture fuzzy sets based on entropy and their application in MCDM. Pattern Anal. Appl. 23(3), 1203–1213 (2020)

Riaz, M., Garg, H., Farid, H.M.A., et al.: Multi-criteria decision making based on bipolar picture fuzzy operators and new distance measures. Comput. Model. Eng. Sci. 127(2), 771–800 (2021)

Pinar, A., Boran, F.E.: A novel distance measure on q-rung picture fuzzy sets and its application to decision making and classification problems. Artif. Intell. Rev. 55(2), 1–34 (2021)

Chau, N.M., Lan, N.T., Thao, N.X.: A new similarity measure of picture fuzzy sets and application in pattern recognition. Am. J. Bus. Oper. Res. 1(1), 5–18 (2021)

Tian, C., Peng, J., Zhang, S., et al.: A sustainability evaluation framework for WET-PPP projects based on a picture fuzzy similarity-based VIKOR method. J. Clean. Prod. 289, 125130 (2021)

Wang, C., Zhou, X., Tu, H., et al.: Some geometric aggregation operators based on picture fuzzy sets and their application in multiple attribute decision making. Ital. J. Pure Appl. Math. 37, 477–492 (2017)

Wei, G., Alsaadi, F.E., Hayat, T., et al.: Projection models for multiple attribute decision making with picture fuzzy information. Int. J. Mach. Learn. Cybern. 9(4), 713–719 (2018)

Opricovic, S., Tzeng, G.H.: Compromise solution by MCDM methods: A comparative analysis of VIKOR and TOPSIS. Eur. J. Oper. Res. 156(2), 445–455 (2004)

Wang, L., Zhang, H., Wang, J., et al.: Picture fuzzy normalized projection-based VIKOR method for the risk evaluation of construction project. Appl. Soft Comput. 64, 216–226 (2018)

Yue, C.: Picture fuzzy normalized projection and extended VIKOR approach to software reliability assessment. Appl. Soft Comput. 88, 106056 (2020)

Yildirim, B.F., Yildirim, S.K.: Evaluating the satisfaction level of citizens in municipality services by using picture fuzzy VIKOR method: 2014–2019 period analysis. Decis. Mak. Appl. Manage. Eng. 5(1), 50–66 (2022)

Ganie, A.H., Singh, S., Bhatia, P.K.: Some new correlation coefficients of picture fuzzy sets with applications. Neural Comput. Appl. 32(16), 12609–12625 (2020)

Rong, Y., Niu, W., Garg, H., et al.: A hybrid group decision approach based on MARCOS and regret theory for pharmaceutical enterprises assessment under a single-valued neutrosophic scenario. Systems 10(4), 106 (2022)

Rong, Y., Yu, L., Niu, W., et al.: MARCOS approach based upon cubic Fermatean fuzzy set and its application in evaluation and selecting cold chain logistics distribution center. Eng. Appl. Artif. Intell. 116, 105401 (2022)

Funding

No funding was received.

Author information

Contributions

ZC conceived the study and helped review the manuscript. LL drafted the manuscript, studied the picture fuzzy similarity measure based on the Hamming distance and the transformed tetrahedral centroid distance, and implemented its applications. XJ and LL proved the theorems and properties in the manuscript and surveyed the relevant literature and the current state of research in China and abroad. All authors read and approved the final manuscript.

Ethics declarations

Conflict of Interest

The authors have no conflict of interest to declare.

Ethical Approval and Consent to Participate

Not applicable.

Consent for Publication

I would like to declare on behalf of my co-authors that the work described is original research that has not been published previously and is not under consideration for publication elsewhere, in whole or in part. All listed authors have approved the enclosed manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, L., Chen, Z. & Jiang, X. A Hybrid Picture Fuzzy Similarity Measure and Improved VIKOR Method. Int J Comput Intell Syst 15, 113 (2022). https://doi.org/10.1007/s44196-022-00165-7

DOI: https://doi.org/10.1007/s44196-022-00165-7