Abstract

In this study, we propose a framework that enhances breast cancer classification accuracy by preserving spatial features and leveraging in situ cooling support. The framework uses real-time thermography video streaming and Deep Learning models for early breast cancer detection. Three Deep Convolutional Neural Network models (Inception v3, Inception v4, and a modified Inception Mv4) were developed in MATLAB 2019 and evaluated. A FLIR One Pro thermal camera connected to a mobile phone was used to capture images of the breast area, which were then classified as normal or abnormal. The training dataset comprised 1000 thermal images: 700 in the normal breast thermography class and 300 in the abnormal class. Our results demonstrate that Inception Mv4, with real-time video streaming, efficiently detects even the slightest temperature contrast in breast tissue sequences, achieving 99.748% accuracy compared with 99.712% and 96.8% for Inception v4 and v3, respectively. The use of in situ cooling gel further enhances image acquisition efficiency and detection accuracy. Interestingly, increasing the tumor surface temperature by 0.1% leads to an average 7% improvement in detection and classification accuracy. Our findings support the effectiveness of Inception Mv4 for real-time breast cancer detection, especially when combined with in situ cooling gel and varying tumor temperatures. Future research should focus on incorporating thermal video clips into the thermal image database, using high-quality thermal cameras, and exploring alternative Deep Learning models for improved breast cancer detection.


1 Introduction

Breast cancer is a collective term for diseases characterized by abnormal cell growth in the breast. The effectiveness of therapy and a patient's chances of survival depend on the stage at which the disease is diagnosed, so early detection improves treatment options and increases the chances of survival. Regular screening remains a highly effective public health strategy for reducing breast cancer's impact on health and mortality. Breast thermography can identify heat features associated with breast tumors that are less likely to be obscured by thick breast tissue [1]. Thermography is a non-invasive, radiation-free imaging technique that can detect subtle temperature changes associated with breast tissue abnormalities, which makes it particularly suitable for frequent monitoring. Our research aims to leverage deep learning to improve the accuracy and efficiency of thermographic imaging for breast cancer detection. Our deep learning model analyzes thermographic images with high sensitivity, allowing the early detection of potential abnormalities that may indicate early-stage breast cancer. By integrating deep learning algorithms into the analysis of thermographic images, our method improves the accuracy of breast cancer screening and risk assessment. This enhanced screening capability enables healthcare providers to identify at-risk individuals earlier, leading to timely interventions and preventive measures. Early detection through thermography and deep learning also translates into cost savings by reducing the need for invasive diagnostic procedures and the intensive treatments typically required at advanced stages of breast cancer. Moreover, our approach supports personalized medicine strategies, optimizing resource allocation and treatment plans for improved patient outcomes. 
This proactive approach not only saves lives but also reduces healthcare costs associated with late-stage treatments. In thermal images, the term "healthy" refers to body regions with optimal thermal patterns that reflect favourable physiological processes. These regions are characterized by a uniform temperature distribution without obvious anomalies or discontinuities. Conversely, "tumor" in thermal images refers to any apparent disruption of, or deviation from, the optimal thermal pattern. Thermal images reveal a tumor as a localized region of increased or decreased temperature adjacent to healthy tissue, depending on the actual tumor behaviour. Tumors affect blood flow and metabolism, producing temperature differences that can be captured by a thermal camera. This paper first emphasizes the importance of early detection in clinical settings, which calls for advanced mobile technologies with considerable computational power. It then turns to the shortcomings of current methods, namely insufficient usability, lack of real-time detection, and privacy concerns, and briefly reviews related work to position the gap targeted by the proposed research. External factors such as ambient temperature, patient positioning, and menstrual cycle variations can influence thermographic results, potentially affecting accuracy and reproducibility. Applying creams or lotions to the breasts before a thermographic scan can alter surface temperatures and affect image interpretation. Exercising, drinking alcohol, or consuming hot drinks within two hours of a thermography session can also alter breast temperature patterns, leading to inaccurate readings. These limitations were discussed in [2].

The related works are summarized as follows. Recent studies have applied a range of deep learning techniques and datasets to enhance breast cancer detection in thermal imaging. Study [3] used a multi-convolutional neural network with batch normalization and dropout layers, combining thermal images and patient data, and achieved an accuracy above 97% and an AUC of 0.99. Similarly, study [4] combined spatial pyramid pooling with a redesigned U-Net structure and a residual network model to reach 96.13% accuracy on thermography images. Study [5] applied deep convolutional neural networks to multiple datasets and obtained up to 96% accuracy with ResNet50. Study [6] applied CNN, SVM, and Random Forest classifiers to a Kaggle dataset, with the CNN classifier reaching 99.65%. Using Inception-v3 to classify thermal images, study [7] reached 80% accuracy with preprocessing techniques including contrast limited adaptive histogram equalization. Study [8] evaluated various CNN models on the DMR-IR dataset and obtained 92% accuracy with data augmentation. Study [9] used Mask R-CNN with ResNet-50 and achieved 97.1% accuracy in breast tumor detection. Study [10] employed threshold-based asymmetry analysis to detect abnormal asymmetric regions, reaching an accuracy of 96.08%. Study [11] proposed an approach combining 4D U-Net segmentation, Glowworm Swarm Optimization, and a Binarized Spiking Neural Network, and reported significant improvements. Study [12] developed a medical IoT-based diagnostic system that achieved 98.5% accuracy with a CNN and 99.2% with an ANN. Study [13] introduced a smartphone-based approach that stored data in the cloud and achieved high accuracy with minimal loss of image quality. Study [14] optimized the placement of near-infrared sources and detectors, improving diagnostic accuracy by 21%.
Study [15] combined CNNs with Bayesian Networks and achieved accuracy of up to 93%. Focusing on small-sized cancer cells, study [16] proposed a hybrid dilation-based model that achieved an AUC of 96.15%. Study [17] presented Intelligence-based Thermography for real-time temperature monitoring during focused ultrasound therapy. Finally, study [18] designed a CAD system with an AI classifier for thermography that achieved 94.4% accuracy in classifying healthy and unhealthy thermograms.

1.1 Objectives formulation

The motivation behind the developed real-time breast cancer detection system is the need for earlier and more accurate diagnosis, which can lead to better treatment outcomes and higher survival rates. Traditional screening methods such as mammography can miss up to 20% of breast cancers, and some studies have shown that real-time detection methods can improve detection rates [19]. The novelty of this work is real-time breast cancer detection using Inception v3, Inception v4, and a modified Inception Mv4 deep convolutional neural network, trained on datasets of thermal images to detect subtle changes that may indicate the presence of cancer. Combining real-time thermographic imaging with deep learning techniques provides real-time feedback on tissue properties that may be indicative of cancer.

1.2 Research gap

The objective of the study is to design a real-time breast cancer detection system using thermography and deep learning. The contributions of this article are threefold. First, a real-time thermographic image acquisition procedure using a thermal camera is proposed and used to acquire thermal images of patients' breasts. Second, Inception v3, Inception v4, and modified Inception Mv4 deep convolutional neural networks were implemented and evaluated in conjunction with real-time imaging to detect early breast cancer. Third, a comprehensive performance evaluation was carried out to assess the potential of such a detection system, with the modified Inception v4 achieving a high accuracy rate of x% compared with the other models studied.

Limitations of Previous Studies: As we have seen from related works, a thorough examination of current literature highlights several significant limitations, emphasizing the necessity for our proposed approach. Firstly, many previous studies have predominantly relied on static or prerecorded datasets, neglecting the dynamic nature of breast tissue. This static methodology may not fully capture the intricate changes in tissue characteristics in real-time, resulting in a detection system that is less responsive and adaptable. Secondly, the absence of real-time processing hinders the potential for prompt identification of evolving cancerous lesions, a critical aspect for early intervention and enhanced patient outcomes. Moreover, the existing literature frequently overlooks a comprehensive exploration of contrast enhancement techniques tailored specifically for breast cancer detection and improving the contrast of tumor locations to facilitate more accurate localization. By addressing these gaps in the literature, our work seeks to advance breast cancer detection methodologies. We aim to provide a solution that not only considers the dynamic nature of breast tissue but also integrates real-time processing and contrast-enhancement strategies for more robust and timely diagnoses.

In situ cooling in thermal imaging for breast tumor detection aims to improve accuracy by mitigating factors that can influence the observed thermal patterns. It involves maintaining a consistent, controlled temperature in the imaging environment; this stability provides a reliable baseline for temperature measurements and allows accurate comparison of thermal patterns. Fluctuations in ambient temperature can introduce variability that might be erroneously interpreted as abnormal, reducing the accuracy of tumor detection. Moreover, tumors often exhibit temperature differences compared with surrounding healthy tissue, and in situ cooling helps accentuate these differences. It also reduces environmental noise in thermal images: by minimizing temperature fluctuations and external interference, the imaging system can capture clearer thermal patterns. The technique has limitations, however: patients who are uncomfortable with or reluctant to undergo cooling procedures may cooperate less during imaging, and small or subtle tumors may remain difficult to detect even with enhanced contrast.

2 Related works

The study in Sánchez-Cauce et al. [3] employed a thermal camera for breast cancer detection using a multi-convolutional neural network, incorporating batch normalization and a dropout layer with a rate of 0.5 in the classification layers. They used two datasets: one with thermal images and another with patient data. The thermal image database, comprising 171 healthy images and 41 breast cancer images, was split into 70% for training, 15% for validation, and 15% for testing. Thermal images were collected from various angles, and the model achieved 97% accuracy, an area under the curve of 0.99, 100% specificity, and 83% sensitivity.

In Kanimozhi et al. [4], a convolutional neural network was used for thermal feature extraction, achieving reliable results by combining spatial pyramid pooling with a redesigned U-Net structure. On diagnostic thermography images, this hybrid classification technique outperformed methods such as K-Means and fuzzy C-means. The model reached 96.13% accuracy with a residual network model, demonstrating its effectiveness in breast cancer detection. The work in Kanimozhi et al. [5] used thermography from various datasets, converting infrared images to RGB and resizing them to 128 × 128 pixels in MATLAB. They employed a deep convolutional neural network for segmentation and extracted features using Hu Moments and Color Histograms. Classifiers such as Random Forest, SVM, and Gaussian Naive Bayes were used, with ResNet50 achieving 96% accuracy and Gaussian Naive Bayes achieving 83%.

In Lahane et al. [6], a computer-aided diagnosis technique was proposed, classifying thermal images into cancerous, non-cancerous, and healthy categories. The study used CNN, SVM, and Random Forest classifiers on a thermal images database from Kaggle, consisting of images from approximately 150 individuals. CNN achieved an accuracy of 99.65%, significantly outperforming SVM and Random Forest, which achieved 89.84% and 90.55%, respectively. The study in Farooq and Corcoran [7] analyzed thermography from 40 individuals using a dynamic research method, classifying benign and cancerous conditions with an Inception-v3 model. Preprocessing involved sharpening filters and histogram equalization, with the final layers of the model retrained for custom breast cancer classification. The results showed 80% accuracy and 83.33% sensitivity.

In Masry et al. [8], multiple CNN-based studies for breast cancer diagnosis using the DMR-IR dataset were implemented. The image augmentation process included flips, rotations, zoom, and noise normalization. The best CNN framework achieved 92% accuracy, 94% precision, 91% sensitivity, and 92% F1-score. This study introduced modern standards for CNN models using thermal images. The proposal in Civilibal et al. [9] involved using the Mask R-CNN technique on thermal images for breast tumor diagnosis, with ResNet-50 and ResNet-101 architectures achieving high accuracy. ResNet-50 reached 97.1% accuracy, outperforming existing literature on thermal breast image studies. The approach in Dey et al. [10] utilized threshold-based asymmetry analysis with textural features to evaluate contralateral symmetry in breast thermograms. The methodology achieved 96.08% accuracy, 100% sensitivity, and 93.57% specificity. A novel SVD-based technique for tumor detection was briefly introduced and evaluated.

The authors in Gomathi et al. [11] proposed a 4D U-Net segmentation approach for breast cancer diagnosis using thermography images. The process involved preprocessing with the APPDRC method and segmentation using 4D U-Net. The Glowworm Swarm Optimization Algorithm and BSNN were used for early-stage classification, achieving superior performance compared to existing methods. In Ogundokun et al. [12], an IoT-based diagnostic system using ANN and CNN with hyperparameter optimization was proposed for classifying malignant versus benign cases. The system achieved high accuracy with CNN (98.5%) and ANN (99.2%) on the WDBC dataset, emphasizing the importance of hyperparameter optimization and feature selection. In Al Husaini et al. [13], a tool for early-stage breast cancer detection was introduced, combining thermography, deep learning models, smartphone apps, and cloud computing. The system used the DMR-IR database and Inception V4 (MV4) for classification, achieving high accuracy. The app enabled rapid diagnostic processing and result transfer, maintaining image quality.

The research in Noori Shirazi et al. [14] introduced a 3D system using near-infrared light emission for accurate breast tumor diagnosis. Optimal placement of sources and detectors significantly reduced error rates, enhancing diagnosis accuracy by 21%. In Aidossov et al. [15], a smart diagnostic system combining CNNs and Bayesian Networks was proposed for early breast cancer detection. The system achieved high accuracy (91%-93%), precision (91%-95%), sensitivity (91%-92%), and specificity (91%-97%), demonstrating the effectiveness of integrating thermograms and medical history. The study in Aldhyani et al. [16] utilized deep learning models for detecting small-sized cancer cells using the BreCaHAD dataset. The hybrid dilation deep learning model addressed issues related to color divergence and achieved an AUC of 96.15%.

In Sadeghi-Goughari et al. [17], an Intelligence-based Thermography (IT) technique was introduced to monitor focused ultrasound (FUS) therapy for breast cancer treatment. The system combined thermal imaging with AI for real-time monitoring, demonstrating its feasibility and efficiency. The researchers in Chebbah et al. [18] introduced a novel CAD system using AI and thermography for breast cancer detection. A deep learning algorithm (U-net model) was used for automatic segmentation, achieving an accuracy of 94.4% and a precision of 96.2%.

In conclusion, the proposed technique utilizes a video infrared camera for home-based breast cancer detection, offering a cost-effective and accessible diagnostic tool. This approach has the potential to significantly improve breast cancer detection and treatment, contributing to better health outcomes.

3 Materials and methods

The proposed framework is designed to meet the requirements of commonly used techniques and to process high-quality input video in real time. It was modeled in MATLAB 2019 and is compatible with most standard desktop computers with a thermal camera attached. It takes high-quality real-time video as input and outputs video files in which the breast is classified as normal or abnormal. The framework is divided into four blocks: thermal image database, pre-processing, feature extraction, and classification. The concept of "moderately preserving spatial features" refers to our approach to balancing spatial information retention within convolutional neural network (CNN) architectures. Specifically, we investigated techniques to maintain important spatial details while leveraging the benefits of dimensionality reduction for computational efficiency. For a comprehensive exploration of this concept and its impact on model performance, readers are referred to Al Husaini et al. [2], where the methodologies and experimental results demonstrate the effectiveness of our approach. In the current study, we build on this prior research by integrating these insights into the design of our model, aiming to strike a balance between spatial fidelity and computational efficiency.

3.1 Inception v3

The Inception v3 convolutional neural network consists of 22 layers, with increased width and depth. It is one of the most important networks developed by Google. It consists of an input unit, 3 Inception A, Reduction A, 4 Inception B, Reduction B, 2 Inception C, and classification units. These layers use filters of size 5 × 5, 3 × 3, and 1 × 1 with a stride of 3, and different numbers of filters such as 128, 98, 256, and 386, as shown in Fig. 1. The classification unit at the end of Inception v3 contains a fully connected layer, an activation layer, an average pooling layer, and a dropout layer with a rate of 0.7 [20].

Deep convolutional neural network Inception V3 [11]

3.2 Inception v4

Inception v4 belongs to the same family of deep convolutional neural networks as Inception v3 but is deeper, with more Inception A, B, and C modules. It consists of a Stem unit, 4 Inception A, Reduction A, 7 Inception B, Reduction B, 3 Inception C, and a classification unit. All filters are 1 × 1 or 3 × 3, and the number of filters per layer ranges from 32 to 386. The network also contains several average pooling and max pooling layers, as shown in Fig. 2. Finally, the classification unit at the end of Inception v4 contains a fully connected layer, an activation layer, an average pooling layer, and a dropout layer with a rate of 0.8 [21].

3.3 Inception modified mv4

The new Inception Mv4 model belongs to the Inception family of deep convolutional neural networks and is modified from the Inception v4 model. The main features of the modified model are that it keeps the varying numbers of filters and the same filter sizes while reducing the number of layers in each unit, which saves time while maintaining high accuracy. The modified Inception Mv4 deep convolutional neural network consists of 1 × Stem unit, 4 × Inception A, Reduction A, 7 × Inception B, Reduction B, 3 × Inception C, and a classification unit preceded by a fully connected layer. The number of filters per layer ranges from 32 to 386, and the filter sizes range from 1 × 1 to 3 × 3. The classification unit consists of the middle group layer, the SoftMax layer, and a dropout layer with a ratio of 0.8, as shown in Fig. 3 [22].
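To make the block counts in Sections 3.1 to 3.3 easier to compare, the following Python sketch expands them into ordered block lists. It is illustrative only: the helper name `block_sequence` and the dictionary layout are our own, and the sketch encodes only the stated module counts, not the per-layer filter configurations or the layer reductions inside each Inception Mv4 unit.

```python
from collections import Counter

# (entry unit, number of Inception A, B, C modules) as stated in the text.
ARCHITECTURES = {
    "Inception v3": ("Input", 3, 4, 2),
    "Inception v4": ("Stem", 4, 7, 3),
    # Same module counts as v4; Mv4 differs in the layers inside each unit.
    "Inception Mv4": ("Stem", 4, 7, 3),
}


def block_sequence(name: str) -> list:
    """Return the ordered list of top-level blocks for the named network."""
    entry, n_a, n_b, n_c = ARCHITECTURES[name]
    return (
        [entry]
        + ["Inception A"] * n_a
        + ["Reduction A"]
        + ["Inception B"] * n_b
        + ["Reduction B"]
        + ["Inception C"] * n_c
        + ["Classification"]
    )


if __name__ == "__main__":
    for name in ARCHITECTURES:
        seq = block_sequence(name)
        counts = Counter(seq)
        n_inception = sum(v for k, v in counts.items() if k.startswith("Inception"))
        print(f"{name}: {len(seq)} blocks, {n_inception} Inception modules")
```

Listing the blocks this way shows that Inception v4 and Mv4 share the same top-level topology (14 Inception modules versus 9 in v3), so any speed difference between them has to come from the reduced layers within each unit.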

The following modifications were applied to each Inception B group:

Added one convolutional layer with a size of 3 × 3 and 256 filters under the average pooling layer.

Increased the number of filters from 128 to 256 to maintain the number of extracted features.

Added two parallel convolutional layers with 192 filters each, both using a 3 × 3 size and 256 filters.

Removed the remaining layers in Inception B to preserve a total of 1,024 features.

Inception B consists of 7 groups, all modified according to the above settings.

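The spatial output size O of each convolutional or pooling layer is given by

$$O = \frac{W - F + 2P}{S} + 1$$

where: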

W = input size.

F = filter size.

P = padding setting.

S = stride setting.
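The symbols W, F, P, and S match the standard output-size relation for convolution and pooling layers, O = ⌊(W − F + 2P)/S⌋ + 1. The Python sketch below evaluates it. The layer stack in the example (an unpadded 3 × 3 convolution with stride 2, another with stride 1, then a 3 × 3 convolution with padding 1) is an assumption chosen to resemble the start of an Inception v4 stem applied to a 299 × 299 input; it is not a specification of Inception Mv4.

```python
def conv_output_size(w: int, f: int, p: int, s: int) -> int:
    """Spatial output size of a convolution or pooling layer.

    w: input size (W), f: filter size (F), p: padding (P), s: stride (S).
    Assumes square inputs and filters, and floor division as in most frameworks.
    """
    if s <= 0:
        raise ValueError("stride must be positive")
    if w + 2 * p < f:
        raise ValueError("filter is larger than the padded input")
    return (w - f + 2 * p) // s + 1


if __name__ == "__main__":
    size = 299
    # Hypothetical stack: (filter, padding, stride) per layer.
    for f, p, s in [(3, 0, 2), (3, 0, 1), (3, 1, 1)]:
        size = conv_output_size(size, f, p, s)
        print(size)  # prints 149, then 147, then 147
```

Padding of (F − 1)/2 with stride 1 keeps the spatial size unchanged ("same" padding), which is why the last layer leaves 147 × 147 intact.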

We developed classifiers using deep convolutional neural networks based on Inception v3, Inception v4, and a modified version called Inception Mv4 to evaluate the proposal. Mv4 was designed to distribute the computational cost evenly across all layers by dividing the resulting number of features and pixel positions. These deep learning models were applied to the DMR database to classify thermal images of healthy and sick patients. Training used 3 to 30 epochs, learning rates of 1 × 10⁻³, 1 × 10⁻⁴, and 1 × 10⁻⁵, a minibatch size of 10, and different optimization techniques. The training results showed that both Inception v4 and Mv4 achieved excellent prediction accuracy on color images with a learning rate of 1 × 10⁻⁴ and the SGDM optimizer, and this result was reproducible across epoch ranges. In the case of Inception v3, grayscale images produced higher prediction accuracy than for Inception v4 and Mv4, irrespective of the optimizer selected. More specifically, Inception v3 on grayscale images required 20 to 30 epochs to reach the color-image performance of Inception v4 and Mv4. Inception Mv4 delivered a 7% performance improvement over v4, enabling faster classification, lower energy consumption, and smoother GPU operation than Inception v4. This indicates that adding layers does not necessarily improve performance [23].
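The training sweep described above can be organized as a grid of configurations. In this minimal sketch, the learning rates and minibatch size come from the text, whereas the specific epoch values, the optimizers other than SGDM, and the `color_mode` field are illustrative assumptions. Each dictionary would be handed to whatever training routine is in use, for example MATLAB's `trainingOptions`.

```python
from itertools import product

LEARNING_RATES = [1e-3, 1e-4, 1e-5]        # from the text
EPOCHS = [3, 10, 20, 30]                   # assumed sample points in the 3-30 range
OPTIMIZERS = ["sgdm", "adam", "rmsprop"]   # only "sgdm" is named in the text
MINIBATCH_SIZE = 10                        # from the text
COLOR_MODES = ["rgb", "grayscale"]         # color vs grayscale inputs


def training_grid():
    """Yield one configuration dict per hyperparameter combination."""
    for lr, epochs, opt, mode in product(LEARNING_RATES, EPOCHS, OPTIMIZERS, COLOR_MODES):
        yield {
            "learning_rate": lr,
            "max_epochs": epochs,
            "optimizer": opt,
            "minibatch_size": MINIBATCH_SIZE,
            "color_mode": mode,
        }


if __name__ == "__main__":
    configs = list(training_grid())
    print(len(configs))  # 3 * 4 * 3 * 2 = 72
```

Generating the grid up front makes it easy to log which configuration produced each accuracy figure, which is what the comparison between color and grayscale inputs relies on.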

3.4 Database

The FLIR One Pro is a thermography camera designed to connect to a mobile phone, enabling users to capture and analyse thermal images and videos. It combines thermal and visible-light cameras to generate images that emphasize temperature variations in the scene. The camera has a temperature range of −4 °F to 752 °F (−20 °C to 400 °C), a thermal sensitivity of 70 mK, and can detect temperature differences as small as 0.18 °F (0.1 °C). It has a resolution of 160 × 120 pixels, a thermal pixel size of 12 µm, a frame rate of 8.7 Hz, a 55-degree field of view, and adjustable thermal span and level. The FLIR One Pro also uses FLIR's patented MSX technology, which overlays visible-light details onto thermal images for additional context. During experimentation, the infrared camera recorded temperature profiles of a silicone breast every 5 min, with the room temperature maintained between 22 °C and 26 °C and in situ cooling gel applied. The FLIR camera was positioned 1 m from the breast, with a simulated 1 cm tumor placed at varying depths. Recording was conducted in three dimensions (3D) using two methods for both healthy and cancer conditions: first, with and without cooling gel while the lamp was switched off (healthy condition); and second, with and without cooling gel while the lamp was switched on with a gradually increasing power supply [2]. In total, 1000 thermal images (700 normal and 300 abnormal) were taken with the FLIR One Pro camera connected to a mobile phone.
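The camera specifications mix absolute temperatures and temperature differences, which convert differently between Celsius and Fahrenheit: absolute values need the 32-degree offset, while differences scale by 9/5 only. The short Python check below reproduces the quoted figures; the function names are our own.

```python
def c_to_f(temp_c: float) -> float:
    """Convert an absolute temperature from Celsius to Fahrenheit."""
    return temp_c * 9 / 5 + 32


def delta_c_to_f(delta_c: float) -> float:
    """Convert a temperature difference; the +32 offset cancels out."""
    return delta_c * 9 / 5


if __name__ == "__main__":
    print(c_to_f(-20), c_to_f(400))     # -4.0 752.0 (measurement range)
    print(round(delta_c_to_f(0.1), 2))  # 0.18 (smallest detectable difference)
    print(70e-3)                        # 0.07: 70 mK sensitivity as a difference in K or °C
```

Applying `c_to_f` to 0.1 °C would give 32.18 °F, which is why the smallest detectable contrast must be converted as a difference.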

3.5 Preprocessing

Videos are composed of frames, and each frame is an image stored as a three-dimensional array (height × width × color channels). The first step is to extract individual frames from the video. Deep CNNs such as Inception Mv4 require fixed-size input, so resizing frames to a consistent resolution is crucial; Inception Mv4 takes inputs of 299 × 299 pixels. Pixel values were then normalized to the range [−1, 1], which helps the neural network converge faster during training and can improve overall performance. Finally, to increase the diversity of the training dataset and improve the model's generalization, data augmentation was applied; common augmentations for video data include random cropping, flipping, rotation, and changes in brightness and contrast.
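The resizing, normalization, and augmentation steps can be sketched with NumPy alone. This is a hedged illustration rather than the study's MATLAB pipeline: nearest-neighbour resizing stands in for a proper interpolating resize, only a horizontal flip and a brightness shift are shown as augmentations, the ±0.1 shift range is an arbitrary assumption, and frames are assumed to have already been extracted from the video.

```python
from typing import Optional

import numpy as np


def resize_nearest(frame: np.ndarray, out_h: int = 299, out_w: int = 299) -> np.ndarray:
    """Nearest-neighbour resize of an (H, W, C) frame to (out_h, out_w, C)."""
    h, w = frame.shape[:2]
    rows = np.arange(out_h) * h // out_h  # source row for each output row
    cols = np.arange(out_w) * w // out_w  # source column for each output column
    return frame[rows[:, None], cols[None, :]]


def normalize_to_unit_range(frame: np.ndarray) -> np.ndarray:
    """Linearly map 8-bit pixel values [0, 255] onto [-1, 1]."""
    return frame.astype(np.float32) / 127.5 - 1.0


def augment(frame: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Random horizontal flip plus a small brightness shift, clipped to [-1, 1]."""
    if rng.random() < 0.5:
        frame = frame[:, ::-1]  # flip along the width axis
    shift = rng.uniform(-0.1, 0.1)  # assumed brightness range
    return np.clip(frame + shift, -1.0, 1.0)


def preprocess(frame: np.ndarray, rng: Optional[np.random.Generator] = None) -> np.ndarray:
    """Resize to 299 x 299, normalize to [-1, 1], and augment if an RNG is given."""
    x = normalize_to_unit_range(resize_nearest(frame))
    return augment(x, rng) if rng is not None else x


if __name__ == "__main__":
    # A synthetic 160 x 120 RGB frame standing in for one FLIR One Pro frame.
    frame = (np.random.default_rng(0).random((120, 160, 3)) * 255).astype(np.uint8)
    print(preprocess(frame).shape)  # (299, 299, 3)
```

Augmentation is applied only when a random generator is passed, so the same function can serve the training path (augmented) and the test path (deterministic).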

Feature extraction from pre-processed video frames transforms raw pixel data into a representation that captures the information relevant to a given task; one of the most common approaches is to use pre-trained CNNs such as Inception Mv4. The earlier layers of these networks capture low-level features such as edges and textures, while deeper layers represent more complex patterns [23].

3.6 Classification

The classification module in a video analysis system categorizes input video frames into predefined classes, such as "normal" or "abnormal"; here, the convolutional neural network Inception Mv4 was used. The output layer was designed for binary classification (normal/abnormal): although a single output node with a sigmoid activation function could be used, a SoftMax activation was employed. SGDM was selected as the optimizer to minimize the chosen loss function; the optimizer adjusts the model's parameters during training to improve classification performance. The initial learning rate was set to 1 × 10⁻⁴. The learning rate determines the size of parameter updates and influences the convergence of the model. The thermal image database was split into training and validation sets, which helps tune hyperparameters and evaluate final model performance. The feature extraction system estimates color–texture features from the pre-processed video. More precisely, a feature vector was extracted automatically from each frame using Inception v3. The set of features generated for each camera shot was determined by the frame dimensions. Finally, the classification module classifies each input video frame into one of two categories, normal or abnormal, as shown in Fig. 4. The classification unit learns its parameters during training from the available training data, and the test samples were then classified as normal or abnormal.
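Two points in the paragraph above can be checked numerically. First, a two-class softmax output equals a sigmoid applied to the difference of the two logits, so the softmax and single-sigmoid output designs are interchangeable for binary classification. Second, SGDM updates each parameter through a velocity term. The NumPy sketch below assumes the common formulation v ← μv − ηg, w ← w + v with momentum μ = 0.9 (the default for `sgdm` in MATLAB's `trainingOptions`); the logit values are made up for illustration.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sgdm_step(w, v, grad, lr=1e-4, momentum=0.9):
    """One SGD-with-momentum update: v <- momentum*v - lr*grad; w <- w + v."""
    v = momentum * v - lr * grad
    return w + v, v


if __name__ == "__main__":
    logits = np.array([0.3, 1.7])  # hypothetical [normal, abnormal] scores for one frame
    p_softmax = softmax(logits)[1]
    p_sigmoid = sigmoid(logits[1] - logits[0])
    print(np.isclose(p_softmax, p_sigmoid))  # True

    w, v = np.array([1.0]), np.zeros(1)
    w, v = sgdm_step(w, v, grad=np.array([2.0]), lr=0.1)
    print(w, v)  # [0.8] [-0.2]
```

Because the velocity accumulates past gradients, repeated steps in the same direction grow larger, which is what lets SGDM converge faster than plain SGD at a small learning rate such as 1 × 10⁻⁴.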

3.7 Experiment setup

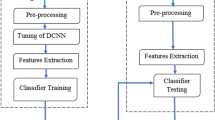

Training and testing were carried out on a desktop computer with 32 GB RAM, an Intel Core i7 processor, an ASUS GeForce GTX 1660 GPU with 6 GB of memory, MATLAB 2019a, and a FLIR One Pro thermal camera. Figure 5 illustrates the training and testing procedure for the deep convolutional neural networks Inception v3, Inception v4, and Inception Mv4. Deep learning is a branch of machine learning in which features are extracted directly from a dataset using deep convolutional neural networks; as a result, it can, in some tasks, exceed human detection accuracy. Training a deep convolutional neural network requires datasets of infrared images of normal and abnormal breasts; here, the 1000 thermal images (700 normal and 300 abnormal) taken with the FLIR One Pro camera connected to a mobile phone were used.

In the first part of the experiment, the FLIR One Pro thermal camera was mounted on a smartphone (Fig. 6e) and placed on a holder parallel to the level of the breast, at a distance of 1 m from the body, as shown in Fig. 6d. Second, the room temperature was measured to be between 22 and 26 °C. Third, a 17 cm long silicone breast was attached to the chest area of a doll. Fourth, a lamp 1 cm in diameter, representing the tumor, was mounted on another holder and placed inside the breast at depths T1 and T9 from the breast surface, as shown in Figs. 7 and 8. Fifth, the lamp (Fig. 6f) was connected to a DC power supply whose voltage was varied from 0.5 V to 9 V (Fig. 6c). Video was recorded in three dimensions (3D) in two ways: first without cooling, and second using a cooling gel (Fig. 6b). In the second part of the experiment, the deep convolutional neural network models Inception v3, Inception v4, and Inception Mv4 were designed in MATLAB 2019 and trained on the thermal image database, with 70% of the images used for training and 30% for testing.

Tumor in different location [19]

We developed a new thermal image database for this study. Tumor size was simulated by adjusting the voltage applied to an LED, which controls its intensity and the heat it generates; 0 V represents a healthy breast, and higher voltages represent varying tumor sizes. Measurements were taken after the sensor readings stabilized, typically after a 1–2 min delay. The LED depth was also varied from 2 to 10 cm to study its impact [2]. The thermal image database includes 1000 thermal images of cancers and 800 thermal images of healthy breasts at a resolution of 160 × 120 pixels (visual resolution), classified into two classes: Cancer and Healthy.
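Deciding when the sensor readings have stabilized can be automated. One simple approach, sketched below under our own assumptions, declares a series stable once the standard deviation over a sliding window of recent readings falls below a tolerance. The window length (12 samples, about 1 min at an assumed 5 s sampling interval) and the 0.05 °C tolerance are hypothetical values, not parameters from the study.

```python
from collections import deque
from statistics import pstdev


def first_stable_index(readings, window=12, tol=0.05):
    """Return the first index at which the last `window` readings have a
    population standard deviation <= tol (°C), or None if never stable.

    `window` and `tol` are hypothetical defaults, not values from the study.
    """
    recent = deque(maxlen=window)  # keeps only the most recent readings
    for i, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window and pstdev(recent) <= tol:
            return i
    return None


if __name__ == "__main__":
    # Synthetic series: warm-up ramp after switching on the LED, then a plateau.
    readings = [30 + 0.5 * k for k in range(10)] + [35.0] * 20
    print(first_stable_index(readings))  # 21
```

A measurement taken at the returned index would then be recorded as the steady-state temperature for that voltage and depth setting.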

To fine-tune the proposed framework further, we varied the training/testing partition of the dataset across 80/20, 70/30, 60/40, 50/50, and 40/60. The Inception MV4 model achieved its best results with 70% of the images used for training and 30% for testing [23], so this partition was adopted. The pre-processing module extracts video segments of a custom quality and size that correspond to a region of interest within the source video clips. It also resizes each video frame before the frame is fed into the deep convolutional neural network Inception v3.
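The crop-and-resize step of the pre-processing module can be sketched as follows. The sketch assumes each frame is a 2-D array of pixel values (for example 120 rows × 160 columns, matching the stated 160 × 120 resolution) and that the region of interest is a rectangle. The 299 × 299 target is the standard input size of Inception v3. The helper names and the `(top, left, height, width)` ROI convention are assumptions. In MATLAB the resize would normally be done with a built-in function such as `imresize`; the pure-Python version only makes the indexing explicit.

```python
def crop(frame, top, left, height, width):
    """Cut a rectangular region of interest out of a frame stored as rows."""
    if (top < 0 or left < 0 or height <= 0 or width <= 0
            or top + height > len(frame) or left + width > len(frame[0])):
        raise ValueError("ROI lies outside the frame")
    return [row[left:left + width] for row in frame[top:top + height]]


def resize_nearest(frame, out_h, out_w):
    """Nearest-neighbor resize: each output pixel copies one input pixel."""
    in_h, in_w = len(frame), len(frame[0])
    return [
        [frame[(y * in_h) // out_h][(x * in_w) // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def preprocess(frame, roi, size=(299, 299)):
    """Crop the breast ROI, then resize it to the network input size.

    299 x 299 is the standard Inception v3 input size; `roi` is a
    hypothetical (top, left, height, width) tuple.
    """
    top, left, height, width = roi
    out_h, out_w = size
    return resize_nearest(crop(frame, top, left, height, width), out_h, out_w)


if __name__ == "__main__":
    # Synthetic 120 x 160 frame (rows x columns) standing in for a thermal frame.
    frame = [[r * 160 + c for c in range(160)] for r in range(120)]
    net_input = preprocess(frame, roi=(10, 20, 100, 120))
    print(len(net_input), len(net_input[0]))  # 299 299
```

Nearest-neighbor sampling keeps the original pixel values unchanged. Bilinear interpolation is a common alternative when smoother resized frames are preferred.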

4 Results and discussion

The advantage of a video stream over a single image lies in its ability to capture temporal information about the tumor, which can enhance the accuracy of tumor detection algorithms, as shown in Fig. 9. By analyzing multiple frames in succession, algorithms can track the tumor over time, improving their ability to differentiate between healthy and unhealthy frames. Moreover, real-time video streams allow quicker responses to changing conditions, for example after in situ cooling is applied, which makes them well suited to applications that require immediate action, such as monitoring the heat generated by healthy cells and tumor cells. The maximum difference in skin temperature between breasts with and without tumors can range from 0.274 °C to 2.58 °C. For a more extensive discussion of the sensitivity thresholds of our thermographic imaging system, the criteria used to set the threshold for detecting temperature contrasts relevant to breast abnormalities, and the quantified effect of in situ cooling gel on accuracy, including the detailed experimental setup, measurement protocols, and statistical analyses, readers are referred to Al Husaini et al. [2]. When the temperature differences between healthy areas and tumor regions were compared, the difference at T1 without in situ cooling was minimal, only 0.2 °C, whereas with in situ cooling it increased to 10.6 °C. In short, while single images offer static snapshots of the heat contrast in the breast, real-time video streams provide dynamic, temporal context that can significantly enhance the accuracy of tumor detection algorithms.
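The paper does not state how information from successive frames is combined, so the following is only one common option, shown as a hypothetical sketch: an exponential moving average over per-frame "abnormal" probabilities from a frame classifier, followed by a threshold. The smoothing factor `alpha` and the 0.5 threshold are assumed values, and the frame classifier itself is not shown.

```python
def smooth_probabilities(frame_probs, alpha=0.2):
    """Exponential moving average of per-frame 'abnormal' probabilities.

    alpha in (0, 1]: larger values react faster to new frames, smaller
    values suppress frame-to-frame flicker more strongly.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed, state = [], None
    for p in frame_probs:
        # The first frame initializes the average; later frames are blended in.
        state = p if state is None else alpha * p + (1.0 - alpha) * state
        smoothed.append(state)
    return smoothed


def frame_decisions(frame_probs, alpha=0.2, threshold=0.5):
    """Label each frame 'abnormal' when its smoothed probability exceeds threshold."""
    return ["abnormal" if s > threshold else "normal"
            for s in smooth_probabilities(frame_probs, alpha)]


if __name__ == "__main__":
    spike = [0.1, 0.1, 0.9, 0.1, 0.1]       # one transient hot frame
    sustained = [0.1, 0.9, 0.9, 0.9, 0.9]   # persistent abnormal signal
    print(frame_decisions(spike))
    print(frame_decisions(sustained))
```

A single hot frame (for example, a momentary reflection) is damped by the average, while a sustained rise in the abnormal probability pushes the smoothed value over the threshold after a few frames.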

Table 1 compares the deep convolutional neural networks Inception v3, Inception V4, and Inception MV4 used for breast cancer detection with thermography. The results in Table 1 motivated the selection of the modified Inception Mv4 model for further evaluation. Three learning rates (1e−3, 1e−4, and 1e−5) and three optimization algorithms (ADAM, SGDM, and RMSPROP) were used to optimize the performance of the models. The best accuracy for the modified Inception Mv4 was achieved with the SGDM optimizer and a learning rate of 1e−4 [23].
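The 3 × 3 search over optimizers and learning rates can be expressed as a small grid. The sketch below only enumerates the nine configurations and picks the one with the highest measured validation accuracy. Training itself is out of scope, and the function names are hypothetical. The lowercase solver names match those accepted by MATLAB's `trainingOptions` (`'adam'`, `'sgdm'`, `'rmsprop'`).

```python
from itertools import product

# Search space described in the text.
OPTIMIZERS = ("adam", "sgdm", "rmsprop")
LEARNING_RATES = (1e-3, 1e-4, 1e-5)


def hyperparameter_grid():
    """All optimizer / learning-rate combinations (3 x 3 = 9 configurations)."""
    return list(product(OPTIMIZERS, LEARNING_RATES))


def best_configuration(results):
    """Return the (optimizer, learning_rate) pair with the highest accuracy.

    `results` maps every grid point to a validation accuracy obtained from
    an actual training run; this helper does no training itself.
    """
    grid = hyperparameter_grid()
    missing = [cfg for cfg in grid if cfg not in results]
    if missing:
        raise ValueError(f"no result for: {missing}")
    return max(grid, key=results.__getitem__)
```

The accuracies passed to `best_configuration` must come from actual training runs. By the study's account, the winning entry for Inception Mv4 would be `("sgdm", 1e-4)` [23].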

We trained and validated the three models on the thermal image database for 100 epochs. For consistency, the number of feature maps was kept equal to the number extracted automatically by each of the three models. The real-time detection results for the set of tumor sizes (voltage values) are summarized in Tables 2, 3, 4, 5, 6 and 7, which show how the validation accuracy varies as the tumor temperature increases. Finally, live video was used in our system to detect breast cancer, and the detection accuracy was recorded with and without breast cooling. The simulation study shows that heat transfer in the breast is influenced by factors such as tumor depth, tumor size, and breast shape. In addition, in situ cooling was employed to increase the temperature contrast and thereby improve breast cancer detection accuracy. This approach is important because advances in finite-element-based infrared modelling could greatly improve the precision of tumor detection using thermography and in situ cooling [2].

Table 2 presents the results of the Inception v3 deep learning model for real-time breast tumor detection. These results show the relationship between tumor size (simulated through voltage values), the thermal videos, and detection accuracy. Viewed from the front, detection accuracy increased from 65% to 79% as the tumor size increased. It was only slightly lower, by about 0.001%, when the breast was viewed from the right and left sides. Table 2 also shows that applying in situ cooling during real-time detection with Inception v3 raises accuracy substantially: at the same location (T1) and the same tumor size (0.5 V), accuracy rose from 65% without in situ cooling to 77.6% with it, a gain of more than 10 percentage points. Detection accuracy nevertheless fluctuates because of thermal variations during video recording and differences in tumor size. Notably, higher temperatures correspond to higher detection accuracy. The thermal recordings from the three sides differ only minimally, with a negligible effect on detection accuracy of about 0.001%.
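Because accuracy changes in this section are reported as differences between percentages, it helps to separate the absolute gain (in percentage points) from the relative gain. The helper below is a small illustrative sketch. Its input values are the Inception v3 figures quoted above for T1 at 0.5 V (65% without and 77.6% with in situ cooling).

```python
def accuracy_gain(before_pct, after_pct):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    if before_pct <= 0:
        raise ValueError("baseline accuracy must be positive")
    points = after_pct - before_pct
    relative = 100.0 * points / before_pct
    return round(points, 2), round(relative, 2)


# Inception v3 at T1, 0.5 V: 65% without vs 77.6% with in situ cooling.
print(accuracy_gain(65.0, 77.6))  # (12.6, 19.38)
```

The same calculation for the smallest, deepest tumor in Table 3 (49% without and 62% with in situ cooling) gives 13 percentage points, or about 26.5% relative.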

Table 3 evaluates the performance of the Inception v3 model in detecting the deeper tumor (T9) with infrared thermography, examining different tumor sizes and the effect of in situ cooling on real-time detection accuracy, with the aim of making the detection process more reliable. The table shows that detection accuracy at this depth improved significantly when in situ cooling was applied in real time. Higher voltages generally corresponded to higher accuracy. The front view consistently gave the highest accuracy, with the right and left views differing only slightly, by around 0.0011%. Detection accuracy increased from 49% to 60% without in situ cooling and from 62% to 72% with it. When the tumor was small and deep, it could not be reliably detected without cooling (accuracy below 50%), but with in situ cooling the accuracy reached 62%, an increase of more than 11%.

Table 4 presents the results of Inception V4 for tumor detection at depth T1 using the thermal video camera, both without and with in situ cooling. Twenty tumor sizes (voltage values) were tested at position T1. The results show that Inception V4 reaches up to 100% accuracy at 9.5 V and 10 V. However, accuracy is very low for voltages from 0.5 to 2 V, not exceeding 59% even with in situ cooling, which indicates a limited detection rate for small tumors. Detection accuracies are closely aligned, with minor differences, whether the thermal video is recorded from the front, right, or left side of the breast. In situ cooling improves tumor detection by 5 to 8%, and it raises the accuracy to 100% for tumor sizes of 7.5, 8, and 8.5 V.

Table 5 compares the effect of tumor size and in situ cooling on real-time tumor detection accuracy from three sides (3D) at depth T9 using Inception v4. Without cooling, Inception v4 fails to detect tumors of size 0.5 to 2.5 V, with detection accuracy ranging from 36.817% to 47.377%. Applying in situ cooling to the breast for two minutes raises the real-time accuracy for tumors of 2 V and 2.5 V from 44.487% to 50.066% and from 47.377% to 52.956%, respectively, and also improves the accuracy from both the right and left sides of the breast. Inception v4 also detects medium and large tumors at depth T9, with accuracy of around 86.547% without in situ cooling and 92.126% with it.

Table 6 shows that Inception MV4 is highly capable of detecting small tumors at depth T1. For a 0.5 V tumor it achieves an accuracy of 88.43%, which increases to 91.808% after in situ cooling is applied for two minutes. Detection accuracy also rises as the tumor size grows, reaching 100% at 7 V without in situ cooling and at 5.5 V with in situ cooling.
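Statements such as "reaching 100% at 7 V without cooling and at 5.5 V with cooling" amount to finding the lowest voltage level at which the accuracy first meets a target. The sketch below does this for a dictionary of per-voltage accuracies and also generates a voltage sweep. The 0.5 V step is an assumption, consistent with the twenty levels and values such as 9.5 V and 10 V reported for Table 4; the helper names are hypothetical.

```python
def voltage_sweep(start=0.5, stop=10.0, step=0.5):
    """Voltage levels start, start + step, ..., stop (20 levels by default).

    The 0.5 V step is an assumption consistent with the 20 levels in Table 4.
    """
    n = round((stop - start) / step) + 1
    return [round(start + i * step, 6) for i in range(n)]


def lowest_voltage_reaching(acc_by_voltage, target=100.0):
    """Smallest voltage (V) whose detection accuracy (%) reaches `target`.

    `acc_by_voltage` maps voltage to accuracy, filled in from measured
    results; voltages are scanned in increasing order. Returns None if the
    target is never reached.
    """
    for volts in sorted(acc_by_voltage):
        if acc_by_voltage[volts] >= target:
            return volts
    return None
```

Scanning in increasing voltage order returns the first level that meets the target, which matches how the tables are described.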

Table 7 shows that Inception MV4 also achieves the highest real-time classification accuracy for tumors at depth T9. For example, for the smallest tumor (0.5 V), detection accuracy without in situ cooling reached 82.073%, 82.063%, and 82.052% from the front, right, and left of the breast, respectively. With in situ cooling, these values increased to 85.451%, 85.491%, and 85.473%, respectively. Detection accuracy again improves with tumor size, reaching 100% at 9 V without cooling and at 8 V with in situ cooling.

The results indicate that, without in situ cooling, Inception MV4 detects small tumors at depth T1 in real time more accurately than Inception v3 and Inception v4, with detection accuracies of 88.43%, 65.25%, and 50.27%, respectively, as shown in Fig. 10. Moreover, Inception v3 outperforms Inception v4 for tumor sizes from 0.5 to 4 V.

Adding in situ cooling to the breast increases real-time detection accuracy for all three models, with Inception MV4 remaining the most accurate, as shown in Figs. 11 and 12. Inception v3, however, improves more than Inception v4, as shown in Fig. 10.

In situ cooling in thermal imaging of the breast aims to improve tumor detection accuracy by mitigating factors that can distort the observed thermal patterns. It maintains a consistent, controlled temperature during imaging, and this stability provides a reliable baseline for temperature measurements, allowing thermal patterns to be compared accurately. Without it, fluctuations in ambient temperature can introduce variability that might be misinterpreted as an abnormality, reducing the accuracy of tumor detection. Moreover, tumors often differ in temperature from the surrounding healthy tissue, and in situ cooling accentuates these differences. By minimizing temperature fluctuations and external interference, it also reduces environmental noise, so the imaging system can capture clearer thermal patterns. The approach has limitations, however: patients who are uncomfortable with or reluctant to undergo cooling may cooperate less during imaging, and small or subtle tumors may remain difficult to detect even with enhanced contrast.
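The temperature contrast that in situ cooling is meant to accentuate can be quantified as the difference between the mean temperature over a tumor region and over a healthy reference region. The sketch assumes each frame is available as a 2-D array of temperatures in °C and that both regions are rectangles. The helper names and the ROI convention are hypothetical, and this is not the measurement procedure described in [2].

```python
from statistics import fmean


def region_mean(frame, top, left, height, width):
    """Mean temperature (deg C) over a rectangular region of a 2-D frame."""
    values = [v for row in frame[top:top + height] for v in row[left:left + width]]
    if not values:
        raise ValueError("empty region")
    return fmean(values)


def thermal_contrast(frame, tumor_roi, healthy_roi):
    """Tumor-minus-healthy difference of mean temperatures, in deg C.

    Each ROI is a hypothetical (top, left, height, width) tuple.
    """
    return region_mean(frame, *tumor_roi) - region_mean(frame, *healthy_roi)


if __name__ == "__main__":
    # Synthetic 4 x 4 frame: a 2 x 2 warm spot at 31 C on a 30 C background.
    frame = [[31.0 if r < 2 and c < 2 else 30.0 for c in range(4)] for r in range(4)]
    print(thermal_contrast(frame, (0, 0, 2, 2), (2, 2, 2, 2)))  # 1.0
```

Comparing this quantity for frames recorded without and with cooling gives a direct measure of how much the contrast has been enhanced.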

The video at the following link demonstrates the breast cancer diagnosis process, in which the deep convolutional neural network Inception MV4 classifies the video in real time. For the first minute the tumor was left inactive, after which the voltage was gradually increased.

https://drive.google.com/file/d/1x7VZ4_cNVtFciS29pYuN3XBqLNawhmq5/view?usp=sharing

Table 8 presents the performance metrics of the three deep learning models, Inception v3, Inception v4, and Inception mv4, evaluated with several criteria. Inception v4 and Inception mv4 achieve notably high accuracies of 99.712% and 99.748%, respectively. Sensitivity, specificity, precision, and negative predictive value are consistently high across all models, indicating that they classify both positive and negative cases effectively. The area under the curve (AUC) values, ranging from 0.967 to 0.997, indicate excellent discriminative ability, as shown in Fig. 12 (see Table 9). Taken together, these metrics demonstrate the robust image-classification performance of the Inception mv4 model.
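The metrics in Table 8 follow standard definitions from the binary confusion matrix, with abnormal taken as the positive class. The sketch below computes them from true/false positive and negative counts, and computes AUC with the rank-based Mann–Whitney formulation, which equals the area under the empirical ROC curve. It is an illustrative implementation, not the evaluation code used in the study.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity, specificity, precision and NPV from counts."""
    def ratio(num, den):
        return num / den if den else float("nan")  # undefined when den == 0

    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": ratio(tp, tp + fn),  # true-positive rate (recall)
        "specificity": ratio(tn, tn + fp),  # true-negative rate
        "precision": ratio(tp, tp + fp),    # positive predictive value
        "npv": ratio(tn, tn + fn),          # negative predictive value
    }


def roc_auc(scores, labels):
    """Area under the ROC curve via the Mann-Whitney U statistic.

    `labels` are 1 for abnormal and 0 for normal. AUC is the probability
    that a random abnormal case scores higher than a random normal case,
    with ties counted as one half.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

The pairwise AUC loop is quadratic in the number of samples, which is fine for a test set of a few hundred images; a sort-based rank computation scales better for larger sets.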

5 Conclusions

Based on our findings, we conclude that Inception Mv4 can be used in real time to detect breast cancer, that it achieves the best efficiency of the three models, and that its performance improves further with in situ cooling. We also observed that increasing the tumor temperature improves detection and classification accuracy. Several factors affect the performance of the neural network, such as the thermal image database, the optimization method, the network model, and the extracted features [24]. Physical factors such as blood perfusion, tumor size and depth, thermal conductivity, and breast size also play a role [2]. Four further factors that affect the accuracy of thermal imaging diagnostics have also been studied: blurry, flipped, tilted, and shaken images [13]. Future work should extend the thermal image database with thermal video clips, use higher-quality thermal cameras, and explore new types of deep convolutional neural networks, such as spiking models.

To replicate the results of this approach to thermal imaging for breast cancer detection with in situ cooling, all aspects of the experimental setup should be documented thoroughly, including the specifications of the thermal imaging equipment and the in situ cooling system. Standardized procedures should be established for in situ cooling, image acquisition, and data processing. The dataset should be representative of diverse clinical scenarios, and experiments should be designed to evaluate the impact of in situ cooling on thermal image quality and detection accuracy, taking into account variations in tumor size, depth, and location within the breast. A controlled environment should be maintained during experiments, with attention to ambient temperature, humidity, and lighting conditions, and the approach should be validated on larger and more diverse datasets to ensure that the results generalize across patient populations and clinical scenarios. Finally, potential limitations and challenges associated with the approach should be identified and addressed.
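The four image degradations mentioned above (blurry, flipped, tilted, and shaken images) [13] can be simulated to test a model's robustness. The implementations below are assumed stand-ins, not the procedures used in [13]: a left-right mirror, an edge-replicating translation, a 3 × 3 mean filter, and a nearest-neighbor rotation about the frame center. Frames are assumed to be 2-D lists of pixel values.

```python
import math


def flip_horizontal(img):
    """Mirror a 2-D frame left to right ('flipped' image)."""
    return [row[::-1] for row in img]


def shake(img, dx, dy):
    """Translate by (dx, dy) pixels, replicating edge pixels ('shaken' image)."""
    h, w = len(img), len(img[0])
    return [[img[min(max(y - dy, 0), h - 1)][min(max(x - dx, 0), w - 1)]
             for x in range(w)] for y in range(h)]


def box_blur(img):
    """3 x 3 mean filter with edge clamping ('blurry' image)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[min(max(y + j, 0), h - 1)][min(max(x + i, 0), w - 1)]
                    for j in (-1, 0, 1) for i in (-1, 0, 1)]
            row.append(sum(vals) / 9.0)
        out.append(row)
    return out


def tilt(img, degrees, fill=0.0):
    """Rotate about the frame center with nearest-neighbor sampling ('tilted' image)."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    a = math.radians(degrees)
    cos_a, sin_a = math.cos(a), math.sin(a)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Inverse mapping: find the source pixel that lands on (x, y).
            xs = cos_a * (x - cx) + sin_a * (y - cy) + cx
            ys = -sin_a * (x - cx) + cos_a * (y - cy) + cy
            xi, yi = round(xs), round(ys)
            row.append(img[yi][xi] if 0 <= yi < h and 0 <= xi < w else fill)
        out.append(row)
    return out
```

Inverse mapping (looping over output pixels and sampling the source) avoids the holes that forward mapping leaves in rotated images; pixels that map outside the source take the `fill` value.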

Data availability

Data sets generated during the current study are available from the corresponding author upon reasonable request. The DMR IR dataset used in this study is available publicly at https://visual.ic.uff.br/dmi/

References

Gonzalez-Hernandez J, Recinella AN, Kandlikar SG, Dabydeen D, Medeiros L, Phatak P. Technology, application and potential of dynamic breast thermography for the detection of breast cancer. Int J Heat Mass Transf. 2019;131:558–73. https://doi.org/10.1016/j.ijheatmasstransfer.2018.11.089.

Al Husaini MAS, Habaebi MH, Suliman FM, Islam MR, Elsheikh EAA, Muhaisen NA. Influence of tissue thermophysical characteristics and situ-cooling on the detection of breast cancer. Appl Sci. 2023;13:8752. https://doi.org/10.3390/app13158752.

Sánchez-Cauce R, Pérez-Martín J, Luque M. Multi-input convolutional neural network for breast cancer detection using thermal images and clinical data. Comput Methods Programs Biomed. 2021. https://doi.org/10.1016/j.cmpb.2021.106045.

Kanimozhi P, Sathiya S, Balasubramanian M, Sivaraj P. Novel segmentation method to diagnose breast cancer in thermography using deep convolutional neural network. Ann Rom Soc Cell Biol. 2021;25(4):6010–25.

Kanimozhi P, Sathiya S, Sivaguru MBP, Sivaraj P. Evaluation of machine learning and deep learning approaches to classify breast cancer using thermography. Psychol Educ J. 2021;58:8796–813.

Lahane SR, Chavan PN, Madankar PM. Classification of thermographic images for breast cancer detection based on deep learning. Ann Rom Soc Cell Biol. 2021;25(6):3459–66.

Farooq MA, Corcoran P. Infrared imaging for human thermography and breast tumor classification using thermal images. 2020.

Al Masry Z, Benaggoune K, Meraghni S, Zerhouni N. A CNN-based methodology for breast cancer diagnosis using thermal images. Comput Methods Biomech Biomed Eng Imaging Vis. 2021;9(2):131–45. https://doi.org/10.1080/21681163.2020.1824685.

Civilibal S, Cevik KK, Bozkurt A. A deep learning approach for automatic detection, segmentation and classification of breast lesions from thermal images. Expert Syst Appl. 2023;212:118774. https://doi.org/10.1016/j.eswa.2022.118774.

Dey A, Ali E, Rajan S. Bilateral symmetry-based abnormality detection in breast thermograms using textural features of hot regions. IEEE Open J Instrum Meas. 2023;2:1–14. https://doi.org/10.1109/ojim.2023.3302908.

Gomathi P, Muniraj C, Periasamy PS. Digital infrared thermal imaging system based breast cancer diagnosis using 4D U-Net segmentation. Biomed Signal Process Control. 2023;85:104792. https://doi.org/10.1016/j.bspc.2023.104792.

Ogundokun RO, Misra S, Douglas M, Damaševičius R, Maskeliūnas R. Medical internet-of-things based breast cancer diagnosis using hyperparameter-optimized neural networks. Future Internet. 2022. https://doi.org/10.3390/fi14050153.

Al Husaini MAS, Habaebi MH, Islam MR, Gunawan TS. Self-detection of early breast cancer application with infrared camera and deep learning. Electronics. 2021. https://doi.org/10.3390/electronics10202538.

Noori Shirazi Y, Esmaeli A, Tavakoli MB, Setoudeh F. Improving three-dimensional near-infrared imaging systems for breast cancer diagnosis. IETE J Res. 2023;69(4):1906–14. https://doi.org/10.1080/03772063.2021.1878064.

Aidossov N, et al. An integrated intelligent system for breast cancer detection at early stages using IR images and machine learning methods with explainability. SN Comput Sci. 2023;4(2):1–16. https://doi.org/10.1007/s42979-022-01536-9.

Aldhyani THH, Nair R, Alzain E, Alkahtani H, Koundal D. Deep learning model for the detection of real time breast cancer images using improved dilation-based method. Diagnostics. 2022. https://doi.org/10.3390/diagnostics12102505.

Sadeghi-Goughari M, Han SW, Kwon HJ. Real-time monitoring of focused ultrasound therapy using intelligence-based thermography: a feasibility study. Ultrasonics. 2023;134:107100. https://doi.org/10.1016/j.ultras.2023.107100.

Chebbah NK, Ouslim M, Benabid S. New computer aided diagnostic system using deep neural network and SVM to detect breast cancer in thermography. Quant Infrared Thermogr J. 2023;20(2):62–77. https://doi.org/10.1080/17686733.2021.2025018.

Redmon J, Divvala S, Girshick R, Farhadi A. You only look once: unified, real-time object detection. 2016. https://doi.org/10.1109/CVPR.2016.91.

Wahed MRBin, Chakrabarty A, Mostakim M. Comparative analysis between Inception-v3 and other learning systems using facial expressions detection. 2016.

Szegedy C, Ioffe S, Vanhoucke V, Alemi AA. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In: Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence (AAAI-17). 2017. p. 4278–84.

Al Husaini MAS, Habaebi MH, Gunawan TS, Islam MR, Hameed SA. Automatic breast cancer detection using Inception v3 in thermography. In: 2021 8th International Conference on Computer and Communication Engineering (ICCCE). 2021. p. 31–4. https://doi.org/10.1109/ICCCE50029.2021.9467231.

Al Husaini MAS, Habaebi MH, Gunawan TS, Islam MR, Elsheikh EAA, Suliman FM. Thermal-based early breast cancer detection using Inception v3, Inception V4 and modified Inception MV4. Neural Comput Appl. 2022;34(1):333–48. https://doi.org/10.1007/s00521-021-06372-1.

Abdulla M, Habaebi MH, Hameed SA, Islam MR, Gunawan TS. A systematic review of breast cancer detection using thermography and neural networks. IEEE Access. 2020;8:208922–37. https://doi.org/10.1109/ACCESS.2020.3038817.

Funding

Not applicable.

Author information


Contributions

MAS, MHH, and MdR each contributed to the paper by conducting the literature review, writing and revising the manuscript, creating figures and tables, interpreting the results, and providing critical feedback and review.


Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Al Husaini, M.A.S., Habaebi, M.H. & Islam, M.R. Real-time thermography for breast cancer detection with deep learning. Discov Artif Intell 4, 57 (2024). https://doi.org/10.1007/s44163-024-00157-w


DOI: https://doi.org/10.1007/s44163-024-00157-w