Abstract

For a complex number \(\alpha \), we consider the sum of the \(\alpha \)th powers of subtree sizes in Galton–Watson trees conditioned to be of size n. Limiting distributions of this functional \(X_n(\alpha )\) have been determined for \({\text {Re}}\alpha \ne 0\), revealing a transition between a complex normal limiting distribution for \({\text {Re}}\alpha < 0\) and a non-normal limiting distribution for \({\text {Re}}\alpha > 0\). In this paper, we complete the picture by proving a normal limiting distribution, along with moment convergence, in the missing case \({\text {Re}}\alpha = 0\). The same results are also established in the case of the so-called shape functional \(X_n'(0)\), which is the sum of the logarithms of all subtree sizes; these results were obtained earlier in special cases. In addition, we prove convergence of all moments in the case \({\text {Re}}\alpha < 0\), where this result was previously missing, and establish new results about the asymptotic mean for real \(\alpha < 1/2\).

A novel feature for \({\text {Re}}\alpha =0\) is that we find joint convergence for several \(\alpha \) to independent limits, in contrast to the cases \({\text {Re}}\alpha \ne 0\), where the limit is known to be a continuous function of \(\alpha \). Another difference from the case \({\text {Re}}\alpha \ne 0\) is that there is a logarithmic factor in the asymptotic variance when \({\text {Re}}\alpha =0\); this holds also for the shape functional.

The proofs are largely based on singularity analysis of generating functions.

1 Introduction and Main Results

This paper is a sequel to [5]. As there, we consider a conditioned Galton–Watson tree \(\mathcal {T}_n\) of size n, and the random variables

\[ X_n(\alpha) := F_\alpha(\mathcal{T}_n) := \sum_{v\in\mathcal{T}_n} |\mathcal{T}_{n,v}|^\alpha, \tag{1.1} \]

where \(\mathcal {T}_{n,v}\) is the fringe subtree of \(\mathcal {T}_n\) rooted at a vertex \(v\in \mathcal {T}_n\), i.e., the subtree consisting of v and all its descendants. This is a special case of what is known as an additive functional: a functional associated with a rooted tree T that can be expressed in the form

\[ F(T) := \sum_{v\in T} f(T_v) \tag{1.2} \]

for a certain toll function f. Thus, \(F_\alpha \) is the additive functional on rooted trees defined by the toll function \(f_\alpha (T):=|T|^\alpha \). As in [5], we allow the parameter \(\alpha \) to be any complex number; this is advantageous, even for the study of real \(\alpha \), since it allows us to use powerful results from the theory of analytic functions in the proofs, and it also yields new phenomena for non-real \(\alpha \), for example, Theorem 1.4 below for purely imaginary \(\alpha \). (See further Sect. 2 for the notation used here and below.)
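
As a concrete illustration of the additive functional \(F_\alpha \), the following minimal Python sketch computes all fringe subtree sizes of a small rooted tree and sums their \(\alpha \)th powers. The tree encoding (a dictionary from a vertex to its list of children), the function names, and the example tree are assumptions made only for this illustration; the toll is taken to depend on the subtree size alone, which is the case for \(f_\alpha \) but not for a general toll function.

```python
from typing import Callable, Dict, List


def subtree_sizes(children: Dict[int, List[int]], root: int) -> Dict[int, int]:
    """Return the fringe subtree size |T_v| for every vertex v (iterative post-order)."""
    sizes: Dict[int, int] = {}
    stack = [(root, False)]
    while stack:
        v, expanded = stack.pop()
        kids = children.get(v, [])
        if expanded:
            # All children have been processed, so their sizes are available.
            sizes[v] = 1 + sum(sizes[c] for c in kids)
        else:
            stack.append((v, True))
            stack.extend((c, False) for c in kids)
    return sizes


def additive_functional(children, root, toll: Callable[[int], complex]) -> complex:
    """F(T) = sum over vertices v of toll(|T_v|), for a toll depending only on size."""
    return sum(toll(s) for s in subtree_sizes(children, root).values())


def X(children, root, alpha: complex) -> complex:
    """Sum of the alpha-th powers of all fringe subtree sizes; alpha may be complex."""
    return additive_functional(children, root, lambda s: s ** alpha)


# Hypothetical example: root 0 has children 1 and 2; vertex 1 has children 3 and 4.
example = {0: [1, 2], 1: [3, 4]}
print(X(example, 0, 1))   # total of all subtree sizes
print(X(example, 0, 1j))  # purely imaginary exponent gives a complex value
```

For \(\alpha =0\) every vertex contributes 1, so the functional reduces to the number of vertices.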

In [5], it is assumed that the conditioned Galton–Watson tree \(\mathcal {T}_n\) is defined by some offspring distribution \(\xi \) with \({\mathbb E{}}\xi =1\) and \(0< \sigma ^2:= {\text {Var}}\xi < \infty \). The main results are limit theorems showing that then the random variables \(X_n(\alpha )\) converge in distribution after suitable normalization. The results differ for the two cases \({\text {Re}}\alpha <0\) and \({\text {Re}}\alpha >0\): Typical results are the following (here somewhat simplified), where

\[ \widetilde{X}_n(\alpha) := X_n(\alpha) - \mathbb{E} X_n(\alpha). \tag{1.3} \]

For further related results, and references to previous work, see [5].

Theorem 1.1

([5, Theorem 1.1]) If \({\text {Re}}\alpha <0\), then

\[ n^{-1/2}\,\widetilde{X}_n(\alpha) \overset{\textrm{d}}{\longrightarrow} \widehat{X}(\alpha), \tag{1.4} \]

where \(\widehat{X}(\alpha )\) is a centered complex normal random variable with distribution depending on the offspring distribution \(\xi \).

Theorem 1.2

([5, Theorem 1.2]) If \({\text {Re}}\alpha >0\), then

\[ n^{-\alpha-\frac{1}{2}}\,\widetilde{X}_n(\alpha) \overset{\textrm{d}}{\longrightarrow} \sigma^{-1}\,\widetilde{Y}(\alpha), \tag{1.5} \]

where \({\widetilde{Y}}(\alpha )\) is a centered random variable with a (non-normal) distribution that depends on \(\alpha \) but does not depend on the offspring distribution \(\xi \).

Note the three differences between the two cases:

(i) the normalization is by different powers of n, with the power constant for \({\text {Re}}\alpha <0\) but not for \({\text {Re}}\alpha >0\);

(ii) the limit is normal for \({\text {Re}}\alpha <0\) but not for \({\text {Re}}\alpha >0\);

(iii) the limit distribution is universal for \({\text {Re}}\alpha >0\) in the sense that it depends on \(\xi \) only by the scale factor \(\sigma ^{-1}\), but for \({\text {Re}}\alpha <0\), the distribution seems to depend on the offspring distribution \(\xi \) in a more complicated way. (In the latter case, the distribution is complex normal, so it is determined by the covariance matrix of \(\bigl ({\text {Re}}\widehat{X}(\alpha ),{\text {Im}}\widehat{X}(\alpha )\bigr )\); a complicated formula for covariances is given in [5, Remark 5.1], but we do not know how to evaluate it for concrete examples, not even when \(\alpha <0\) is real and, thus, \(\widehat{X}(\alpha )\) is a real random variable.)

The results above leave a gap: the case \({\text {Re}}\alpha =0\), and the main purpose of the present paper is to fill this gap, and to compare the results with the cases above. The case \(\alpha =0\) is trivial, since \(X_n(\alpha )=n\) is non-random. (If \(\alpha =0\), then each vertex v of the tree contributes \(|\mathcal {T}_{n,v}|^\alpha = 1\) to (1.1).) However, in this case we instead study the derivative

\[ X_n'(0) = \sum_{v\in\mathcal{T}_n} \log|\mathcal{T}_{n,v}|, \tag{1.6} \]

which is known as the shape functional. This functional was introduced by Fill [3] in the (different) context of binary search trees under the random permutation model, for which he argued that the functional serves as a crude measure of the “shape” of a random tree, and then studied in some special cases of simply generated trees in e.g. [1, 4, 7, 16, 19], see Sect. 3.
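
The relation between \(X_n(\alpha )\) and the shape functional can be checked numerically on a single fixed tree. The sketch below assumes a hypothetical list of fringe subtree sizes (chosen only for illustration) and compares a central difference quotient of \(\alpha \mapsto X_n(\alpha )\) at \(\alpha =0\) with the sum of the logarithms of the subtree sizes.

```python
import math


def X(sizes, alpha):
    """Sum of alpha-th powers of the given subtree sizes."""
    return sum(s ** alpha for s in sizes)


def shape_functional(sizes):
    """Sum of the logarithms of the given subtree sizes."""
    return sum(math.log(s) for s in sizes)


# Hypothetical subtree sizes of a 5-vertex tree (root, one internal vertex, three leaves).
sizes = [5, 3, 1, 1, 1]

h = 1e-6
central_difference = (X(sizes, h) - X(sizes, -h)) / (2 * h)

print(central_difference, shape_functional(sizes))
```

Leaves contribute \(\log 1=0\), so only subtrees with at least two vertices affect the shape functional.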

Another gap in [5] is that moment convergence was proved for \({\text {Re}}\alpha >0\) (Theorem 1.2) but not for \({\text {Re}}\alpha <0\) (Theorem 1.1). We fill that gap too.

For technical convenience, we assume throughout the paper the weak extra moment condition

\[ \mathbb{E}\,\xi^{2+\delta} < \infty \tag{1.7} \]

for some \(\delta >0\); we also continue to assume \({\mathbb E{}}\xi =1\). We let \(\mathcal {T}\) be an unconditioned Galton–Watson tree with offspring distribution \(\xi \), and define, for complex \(\alpha \) with \({\text {Re}}\alpha <\frac{1}{2}\),

\[ \mu(\alpha) := \mathbb{E}|\mathcal{T}|^\alpha = \sum_{n=1}^\infty n^\alpha\,\mathbb{P}(|\mathcal{T}|=n), \tag{1.8} \]

\[ \mu' := \mathbb{E}\log|\mathcal{T}| = \sum_{n=1}^\infty \mathbb{P}(|\mathcal{T}|=n)\log n. \tag{1.9} \]

(The sum (1.8) converges for \({\text {Re}}\alpha <\frac{1}{2}\), since \({\mathbb P{}}(|\mathcal {T}|=n)=O(n^{-3/2})\); see (2.25).)

Our main results are the following. Note that \(X_n'(0)\) is a real random variable, while \(X_n(\textrm{i}t)\) and \(X_n(\alpha )\) for \(\alpha \notin \mathbb R\) are non-real except in trivial cases. As said above, special cases of Theorem 1.3 have been proved by Pittel [19], Fill and Kapur [7], and Caracciolo et al. [1].

Theorem 1.3

Assume (1.7) with \(\delta >0\). Then,

together with convergence of all moments.

Theorem 1.4

Assume (1.7) with \(\delta >0\). Then, for any real \(t\ne 0\),

together with convergence of all moments, where \(\zeta _{\textrm{i}t}\) is a symmetric complex normal variable with variance

Theorem 1.5

Assume (1.7) with \(\delta >0\). Then, for any complex \(\alpha \) with \({\text {Re}}\alpha <0\),

together with convergence of all moments, where \(\widehat{X}(\alpha )\) is a centered complex normal random variable with positive variance and distribution depending on the offspring distribution \(\xi \). Hence, (1.4) holds with convergence of all moments.

Remark 1.6

By “convergence of all moments,” we mean in the case of complex variables, \(Z_n\) say, convergence of all mixed moments of \(Z_n\) and \(\overline{Z_n}\), which is equivalent to convergence of all mixed moments of \({\text {Re}}Z_n\) and \({\text {Im}}Z_n\). Since we have convergence in distribution, this is by a standard argument using uniform integrability also equivalent to convergence of all absolute moments.

Note that, conversely, by the method of moments applied to \(({\text {Re}}Z_n,{\text {Im}}Z_n)\), this implies convergence in distribution of \(Z_n\), provided, as is the case here, the limit distribution is determined by its moments. Thus, our proof of moment convergence provides a new proof of Theorem 1.1, very different from the proof in [5]. \(\square \)

Remark 1.7

Since the statements include convergence of the first moments (to 0), we may in Theorems 1.3–1.5 replace \(\mu 'n\), \(\mu (\textrm{i}t)n\), and \(\mu (\alpha )n\) by the expectations \({\mathbb E{}}X_n'(0)\), \({\mathbb E{}}X_n(\textrm{i}t)\), and \({\mathbb E{}}X_n(\alpha )\), respectively; in particular, this gives the last sentence in Theorem 1.5. More precise estimates of the expectations are given in (3.11), (3.20), (4.14), and (5.10). \(\square \)

Theorems 1.3 and 1.4 combine some of the features found for \({\text {Re}}\alpha <0\) and \({\text {Re}}\alpha >0\) in (i)-(iii) above. First, the variances in Theorems 1.3 and 1.4 are of order \(n\log n\). This might be a surprise since it is not what a naive extrapolation from either \({\text {Re}}\alpha <0\) in Theorem 1.1 or \({\text {Re}}\alpha >0\) in Theorem 1.2 would yield, where the variances are of order n (\({\text {Re}}\alpha <0\)) and \(n^{1+2{\text {Re}}\alpha }\) (\({\text {Re}}\alpha >0\)); however, it is not surprising that a logarithmic factor appears when the two different expressions meet. (Compare for instance the result on the mean of \(X_n(\alpha )\) in [5, Theorem 1.7], or the discussion of the binary search tree recurrence in [9, Example VI.15, pp. 428–429], where the emergence of similar logarithmic factors is observed.) Second, the limits are normal, as heuristically would be expected by “continuity” from the left, see (ii). Third, the limits are universal and depend only on \(\sigma \) as a scale factor, as heuristically would be expected by “continuity” from the right, see (iii).

The proofs in [5] use two different methods, which are combined to yield the full results: (1) methods using complex analysis and the fact that \(X_n(\alpha )\) is an analytic function of \(\alpha \), and (2) analysis of moments for a fixed \(\alpha \) using singularity analysis of generating functions based on results of Fill et al. [4], also presented in [9, Section VI.10]. In the present paper, we will use only the second method. We follow the proofs in [5] with some variations (see also [6] and [7]). However, some new leading terms will appear in the singular expansions of the generating functions, which will dominate the terms that are leading in [5]; this explains both the logarithmic factors in the variance (and in higher moments) in Theorems 1.3 and 1.4, and the fact that these theorems yield normal limits while Theorem 1.2 does not.

After some preliminaries in Sect. 2, we first study the shape functional and prove Theorem 1.3 in Sect. 3; we then study the case of imaginary exponents and prove Theorem 1.4 in Sect. 4; after that, we consider the case \({\text {Re}}\alpha <0\) and prove Theorem 1.5 in Sect. 5. These three sections use the same method (from [7] and [5]) and are, thus, quite similar, but some details differ. The differences arise partly because \(X_n'(0)\) is real, while \(X_n(\textrm{i}t)\) and (in general) \(X_n(\alpha )\) are not; we will also see that the logarithmic factors in the first two cases appear in the moments in somewhat different ways, and that there is a cancellation of some leading terms in our induction for the first and third cases, but not for \(X_n(\textrm{i}t)\). For this reason, we give complete arguments for all three cases, and we encourage the reader to compare them and see both similarities and differences.

In Sect. 6, we show how the centering functions (1.8) and (1.9) can be compared across variation in the offspring distribution when (real) \(\alpha \) satisfies \(\alpha < \frac{1}{2}\).

Remark 1.8

The results in [5] show also joint convergence for different \(\alpha \) in Theorems 1.1 and 1.2, with limits \(\widehat{X}(\alpha )\) and \({\widetilde{Y}}(\alpha )\) that are analytic, and in particular continuous, random functions of the parameter \(\alpha \) in the half-planes \({\text {Re}}\alpha <0\) and \({\text {Re}}\alpha >0\), respectively. This does not extend to the imaginary axis \({\text {Re}}\alpha =0\); we will see in Theorem 4.2 that \(X_n(\alpha )\) for different imaginary \(\alpha \) are asymptotically independent (for \({\text {Im}}\alpha >0\)), and thus, it is not possible to have joint convergence to a continuous random function. \(\square \)

Remark 1.9

Let \(\alpha =s+\textrm{i}t\), where t is real and fixed, and let \(s\searrow 0\). (Thus, \(s>0\) is real.) It is shown in [5, Appendix D] that if \(t\ne 0\), then the limit \({\widetilde{Y}}(s+\textrm{i}t)\) diverges (in probability, say) as \(s\searrow 0\), and that \(s^{1/2}{\widetilde{Y}}(s+\textrm{i}t)\overset{\textrm{d}}{\longrightarrow }\zeta \), where \(\zeta \) is a symmetric complex normal variable with

(However, unfortunately there is a typographical error in [5, (D.2)], see the corrigendum to [5] for a correction.) Similarly, it is shown in [5, Appendix C] that \(s^{-1/2}{\widetilde{Y}}(s)\overset{\textrm{d}}{\longrightarrow }N(0,2(1-\log 2))\) as \(s\searrow 0\); in particular \(s^{-1}{\widetilde{Y}}(s)\) diverges.

These results may be compared to Theorems 1.3–1.4; note that the limits are the same, except that the variances in both cases differ by a factor 1/2 (which of course depends on the chosen normalizations). Both sets of results can be regarded as iterated limits of \({\widetilde{X}}_n(s+\textrm{i}t)\), taking \({n\rightarrow \infty }\) and \(s\searrow 0\) in different orders. The divergence of \({\widetilde{Y}}(s+\textrm{i}t)\) as \(s\searrow 0\) (for fixed \(t\ne 0\)) thus seems to be related to the fact that the asymptotic variance in Theorem 1.4 is of greater order than n, and similarly the divergence as \(s\searrow 0\) of \(s^{-1}{\widetilde{Y}}(s)\) (which loosely might be regarded as an approximation of \(n^{-1/2}{\widetilde{X}}_n'(0)\)) seems related to Theorem 1.3. However, we do not see why the factors \(s^{\pm \frac{1}{2}}\) in these limits should correspond to the factor \((\log n)^{1/2}\) in Theorems 1.3 and 1.4 [or more precisely to the factor \((2 \log n)^{1/2}\), to get exactly the same limit distributions]. \(\square \)

We end with some problems suggested by the results and comments above.

Problem 1.10

Is there a simple explanation of the equality discussed in Remark 1.9 of iterated limits in different orders, but with different normalizations? Is this an instance of some general phenomenon? What happens if \(s\searrow 0\) and \({n\rightarrow \infty }\) simultaneously, i.e., for \({\widetilde{X}}_n(s_n+\textrm{i}t)\) where \(s_n\searrow 0\) at some appropriate rate?

The asymptotic independence of \(X_n(\textrm{i}t)\) for \(t>0\) mentioned in Remark 1.8 suggests informally that the stochastic process \(({\widetilde{X}}_n(\textrm{i}t): t\geqslant 0)\) asymptotically looks something like white noise. This might be investigated further, for example as follows.

Problem 1.11

Consider the integrated process \(\int _0^t {\widetilde{X}}_n(\textrm{i}u)\,\textrm{d}u\). What is the order of its variance? Does this process after normalization converge to a process with paths that are continuous in t?

The moment assumption (1.7) is used repeatedly to control error terms, but it seems convenient rather than necessary.

Problem 1.12

We conjecture that Theorems 1.3–1.5 hold also without the assumption (1.7). Prove (or disprove) this!

2 Notation and Preliminaries

2.1 General Notation

As said above, \(\mathcal {T}\) is a Galton–Watson tree defined by an offspring distribution \(\xi \) with mean \({\mathbb E{}}\xi =1\) and finite non-zero variance \(\sigma ^2:={\text {Var}}\xi <\infty \), and we assume \( {\mathbb E{}}\xi ^{2+\delta }<\infty \) for some \(\delta >0\). Furthermore, the conditioned Galton–Watson tree \(\mathcal {T}_n\) is defined as \(\mathcal {T}\) conditioned on \(|\mathcal {T}|=n\). We assume for simplicity that \(\xi \) has span 1; the general case follows by standard (and minor) modifications. (Recall that the span of an integer-valued random variable \(\xi \), denoted \({\text {span}}(\xi )\), is the largest integer h such that \(\xi \in a+h\mathbb Z\) a.s. for some \(a\in \mathbb Z\); we consider only \(\xi \) with \({\mathbb P{}}(\xi =0)>0\) and then the span is the largest integer h such that \(\xi /h\in \mathbb Z\) a.s., i.e., the greatest common divisor of \(\{n:{\mathbb P{}}(\xi =n)>0\}\).)
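
The description of the span as a greatest common divisor is easy to compute for a finitely supported offspring distribution. The following sketch assumes the distribution is given as a dictionary from values to probabilities (a representation chosen only for this illustration) and that \({\mathbb P{}}(\xi =0)>0\), as in the text.

```python
from fractions import Fraction
from functools import reduce
from math import gcd


def span(pmf):
    """gcd of the support {k : P(xi = k) > 0}; assumes P(xi = 0) > 0."""
    if pmf.get(0, 0) <= 0:
        raise ValueError("this shortcut requires P(xi = 0) > 0")
    return reduce(gcd, (k for k, p in pmf.items() if p > 0), 0)


# Two critical offspring distributions (mean 1), used as illustrations.
binary = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}  # Bi(2, 1/2)
full_binary = {0: Fraction(1, 2), 2: Fraction(1, 2)}                # 0 or 2 children

print(span(binary), span(full_binary))
```

The second example has span 2 and is therefore excluded by the simplifying assumption of span 1; for it, the tree size is always odd.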

In the sequel, \(\Gamma (z)\) denotes the Gamma function, \(\psi (z):=\Gamma '(z)/\Gamma (z)\) is its logarithmic derivative, and \(\gamma =-\psi (1)\) is Euler’s constant.

A random variable \(\zeta \) has a complex normal distribution if it takes values in \(\mathbb C\) and \(({\text {Re}}\zeta ,{\text {Im}}\zeta )\) is a 2-dimensional normal distribution (with arbitrary covariance matrix). In particular, \(\zeta \) is symmetric complex normal if further \({\mathbb E{}}\zeta =0\) and \(({\text {Re}}\zeta ,{\text {Im}}\zeta )\) has covariance matrix \(\left( {\begin{matrix}\varsigma ^2/2&{}0\\ 0&{}\varsigma ^2/2\end{matrix}}\right) \) for some \(\varsigma ^2={\mathbb E{}}|\zeta |^2\), which is called the variance; equivalently, \({\mathbb E{}}\zeta =0\), \({\mathbb E{}}\zeta ^2=0\), and \({\mathbb E{}}|\zeta |^2=\varsigma ^2\). (See e.g. [11, Proposition 1.31].) A symmetric complex normal distribution with variance \(\varsigma ^2\) is determined by the mixed moments of \(\zeta \) and \(\overline{\zeta }\), which are given by (see [11, p. 14])

\[ \mathbb{E}\bigl[\zeta^{\ell}\,\overline{\zeta}^{\,r}\bigr] = \begin{cases} \ell!\,\varsigma^{2\ell}, & \ell = r,\\ 0, & \ell \ne r. \end{cases} \tag{2.1} \]

Unspecified limits are as \({n\rightarrow \infty }\). We let \(\overset{\textrm{d}}{\longrightarrow }\) denote convergence in distribution.

For real x and y, we denote \(\min (x,y)\) by \(x\wedge y\).

The semifactorial \(\ell !!\) is defined for odd integers \(\ell \) (the only case that we use) by

\[ \ell!! := \ell(\ell-2)\cdots 3\cdot 1 = \frac{(\ell+1)!}{2^{(\ell+1)/2}\,\bigl((\ell+1)/2\bigr)!}. \tag{2.2} \]

Note that \((-1)!!=1!!=1\).
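
For readers who want to experiment, here is a minimal sketch of the semifactorial for odd integers \(\ell \geqslant -1\), with the empty product giving the value 1. The function name and the comparison with the factorial quotient \((\ell +1)!/\bigl (2^{(\ell +1)/2}((\ell +1)/2)!\bigr )\), a standard identity, are included only as an illustration.

```python
from math import factorial


def semifactorial(l: int) -> int:
    """Product l * (l - 2) * ... * 3 * 1 for odd l >= -1 (empty product = 1)."""
    if l < -1 or l % 2 == 0:
        raise ValueError("defined here only for odd integers l >= -1")
    result = 1
    for k in range(l, 0, -2):
        result *= k
    return result


def semifactorial_closed_form(l: int) -> int:
    """Same value via (l + 1)! / (2^((l+1)/2) * ((l+1)/2)!)."""
    half = (l + 1) // 2
    return factorial(l + 1) // (2 ** half * factorial(half))


print([semifactorial(l) for l in (-1, 1, 3, 5, 7)])
```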

Throughout, \(\varepsilon \) denotes an arbitrarily small fixed number with \(\varepsilon >0\). (We will tacitly assume that \(\varepsilon \) is sufficiently small when necessary.)

We let C and c denote unimportant positive constants, possibly different each time; these may depend on the parameter \(\alpha \) (or \(\alpha _1,\alpha _2\) below). We sometimes use c with subscripts; these keep the same value within the same section.

2.2 \(\Delta \)-domains and Singularity Analysis

A \(\Delta \)-domain is a complex domain of the type

\[ \Delta(R,\theta) := \{z : |z|<R,\ z\ne 1,\ |\arg (z-1)|>\theta \}, \tag{2.3} \]

where \(R>1\) and \(0<\theta <\pi /2\), see [9, Section VI.3]. A function is \(\Delta \)-analytic if it is analytic in some \(\Delta \)-domain (or can be analytically continued to such a domain).

Our proofs are based on singularity analysis of various generating functions (see [9, Chapter VI]), using estimates as \(z\rightarrow 1\) in a suitable \(\Delta \)-domain; the domain may be different each time. In particular, we use repeatedly [9, Theorem VI.3, p. 390] to estimate error terms when we identify coefficients. All estimates below of analytic functions tacitly are valid in some \(\Delta \)-domains (possibly different ones for different functions), even when that is not said explicitly.
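
The basic mechanism behind singularity analysis is the transfer from a singular term \((1-z)^{-a}\) to the coefficient asymptotics \(n^{a-1}/\Gamma (a)\), valid for \(a\notin \{0,-1,-2,\dots \}\). The sketch below compares the exact coefficients with this first-order approximation for a few real values of a; it is a numerical illustration of the standard scale only, not of the specific estimates used in the proofs.

```python
import math


def coeff_exact(a: float, n: int) -> float:
    """[z^n] (1 - z)^(-a), computed as the product of (a + k - 1) / k for k = 1..n."""
    c = 1.0
    for k in range(1, n + 1):
        c *= (a + k - 1) / k
    return c


def coeff_asymptotic(a: float, n: int) -> float:
    """First-order approximation n^(a - 1) / Gamma(a)."""
    return n ** (a - 1) / math.gamma(a)


n = 1000
for a in (-0.5, 0.5, 1.5):
    exact = coeff_exact(a, n)
    approx = coeff_asymptotic(a, n)
    print(a, exact, approx, exact / approx)
```

The ratio tends to 1 at rate \(O(1/n)\); the case \(a=-\frac{1}{2}\) corresponds to the square-root singularity that is typical for the tree generating function.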

2.3 Polylogarithms

\({\text {Li}}_\alpha (z)\) and \({\text {Li}}_{\alpha ,r}(z)\) denote polylogarithms and generalized polylogarithms, respectively; they are defined for \(\alpha \in \mathbb C\) and \(r=0,1,\dots \) by the power series

\[ \text{Li}_\alpha(z) := \sum_{n=1}^\infty n^{-\alpha} z^n, \tag{2.4} \]

\[ \text{Li}_{\alpha,r}(z) := \sum_{n=1}^\infty (\log n)^r\, n^{-\alpha} z^n, \tag{2.5} \]

for \(|z|<1\), and then extended analytically to \(\mathbb C\setminus [1,\infty )\) (in particular they are \(\Delta \)-analytic); see e.g. [9, Section VI.8]. Note that \({\text {Li}}_{\alpha ,0}(z)={\text {Li}}_\alpha (z)\). We will also use the notation

\[ L(z) := -\log (1-z) = \text{Li}_1(z). \tag{2.6} \]

We will use singular expansions of polylogarithms and generalized polylogarithms into powers of \(1-z\), possibly including powers of L(z). Infinite singular expansions of polylogarithms and generalized polylogarithms are given by Flajolet [8, Theorem 1] (also [9, Theorem VI.7]); we will mainly use only the following simple versions keeping only the main terms.

For any real a, let \(\mathcal {P}_a\) be the set of all polynomials in z of degree \(<a\). In particular, if \(a\leqslant 0\), then \(\mathcal {P}_a=\{0\}\). If \(0\leqslant a\leqslant 1\), then every polynomial in \(\mathcal {P}_a\) is constant. These simple cases are the ones of most interest to us.

We then have, for each \(\alpha \notin \{1,2,\dots \}\),

\[ \text{Li}_\alpha(z) = \Gamma(1-\alpha)(1-z)^{\alpha-1} + P(z) + O\bigl(|1-z|^{\text{Re}\,\alpha}\bigr) \tag{2.7} \]

for some \( P(z)\in \mathcal {P}_{{{\text {Re}}\alpha }}\).
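
A quick numerical sanity check of the polylogarithm series may be helpful. The sketch below evaluates truncated power series \(\sum _{n\geqslant 1} n^{-\alpha }z^n\) at a real point close to 1 and compares them with the closed forms \({\text {Li}}_0(z)=z/(1-z)\) and \({\text {Li}}_1(z)=-\log (1-z)\), and, for \(\alpha =-\frac{1}{2}\), with the leading singular term \(\Gamma (1-\alpha )(1-z)^{\alpha -1}\). The truncation length and the evaluation point are arbitrary choices made for this illustration.

```python
import math


def polylog(alpha, z, terms=5000):
    """Truncated series sum_{n=1}^{terms} n^(-alpha) z^n, for |z| < 1."""
    return sum(n ** (-alpha) * z ** n for n in range(1, terms + 1))


z = 0.99  # close to the singularity at z = 1; z^5000 is negligible

li0 = polylog(0, z)
li1 = polylog(1, z)
print(li0, z / (1 - z))
print(li1, -math.log(1 - z))

alpha = -0.5
leading = math.gamma(1 - alpha) * (1 - z) ** (alpha - 1)
ratio = polylog(alpha, z) / leading
print(ratio)  # approaches 1 as z -> 1
```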

Moreover, in our proofs, we will often go back and forth between expansions in powers of \(1-z\) (including powers of L(z)) and expansions in (generalized) polylogarithms, using the following simple consequence of the singular expansions of generalized polylogarithms, proved in [7]. (Here slightly simplified.)

Lemma 2.1

([7, Lemmas 2.5 and 2.6]) Suppose that \({\text {Re}}\alpha <1\). Then, for each \(r\geqslant 0\), in any fixed \(\Delta \)-domain and for any \(\varepsilon >0\),

for some coefficients \(\rho _{r,j}(\alpha )\) and \(c_r(\alpha )\), with leading coefficient

Conversely,

for some coefficients \(\hat{\rho }_{r,j}(\alpha )\) and \(\hat{c}_r(\alpha )\), with

Remark 2.2

The lemmas in [7] are stated for real \(\alpha \), but the proofs hold also for complex \(\alpha \). Moreover, the results extend to \(\alpha \) with \({\text {Re}}\alpha \geqslant 1\), assuming \(\alpha \notin \{1,2,\dots \}\), provided the error terms \(O\bigl (|1-z|^{{\text {Re}}\alpha -\varepsilon }\bigr )\) are replaced by \(O(|1-z|)\) when \({\text {Re}}\alpha >1\). \(\square \)

2.4 Hadamard Products

Recall that the Hadamard product \(A(z)\odot B(z)\) of two power series \(A(z)=\sum _{n=0}^\infty a_n z^n\) and \(B(z)=\sum _{n=0}^\infty b_n z^n\) is defined by

\[ A(z)\odot B(z) := \sum_{n=0}^\infty a_n b_n z^n. \tag{2.12} \]

(See e.g. [4] or [9].) As a simple example, for any complex \(\alpha \) and \(\beta \),

\[ \text{Li}_\alpha(z)\odot \text{Li}_\beta(z) = \text{Li}_{\alpha+\beta}(z), \tag{2.13} \]

and, more generally, by (2.5),

\[ \text{Li}_{\alpha,r}(z)\odot \text{Li}_{\beta,s}(z) = \text{Li}_{\alpha+\beta,\,r+s}(z). \tag{2.14} \]

We note also, for any constant c and power series \(A(z)=\sum _{n=0}^\infty a_nz^n\), the trivial result

\[ c\odot A(z) = c\,a_0. \tag{2.15} \]

For our error terms, we will use the following lemma; it is part of [5, Lemma 12.2] and taken from [4, Propositions 9 and 10(i)] and [9, Theorem VI.11, p. 423]. (Further related results are given in [4, 9, Section VI.10.2], and [5].)
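
The Hadamard product acts coefficientwise, so the polylogarithm identities above can be checked directly on truncated coefficient lists. The following sketch uses plain Python lists as truncated power series (an assumption of this illustration) and verifies \({\text {Li}}_{0,1}\odot {\text {Li}}_{1,0}={\text {Li}}_{1,1}\) as well as the fact that the Hadamard product of a constant c with A(z) is the constant \(c\,a_0\).

```python
import math


def hadamard(a, b):
    """Coefficientwise product of two truncated power series."""
    return [x * y for x, y in zip(a, b)]


def polylog_coeffs(alpha, r, n_terms):
    """Coefficients of Li_{alpha,r}(z) for z^0, ..., z^(n_terms - 1); the z^0 term is 0."""
    return [0.0] + [math.log(n) ** r * n ** (-alpha) for n in range(1, n_terms)]


N = 20
lhs = hadamard(polylog_coeffs(0, 1, N), polylog_coeffs(1, 0, N))
rhs = polylog_coeffs(1, 1, N)
print(all(math.isclose(x, y, abs_tol=1e-15) for x, y in zip(lhs, rhs)))

# A constant c, viewed as a power series, has only a z^0 coefficient.
c = 3.0
A = [2.0, 5.0, 7.0, 11.0]
constant_series = [c] + [0.0] * (len(A) - 1)
print(hadamard(constant_series, A))
```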

Lemma 2.3

([4, 9]) If g(z) and h(z) are \(\Delta \)-analytic, then \(g(z)\odot h(z)\) is \(\Delta \)-analytic. Moreover, suppose that \(g(z)=O(|1-z|^a)\) and \(h(z)=O(|1-z|^b)\), where a and b are real with \(a+b+1\notin \{0,1,2,\dots \}\); then, as \(z\rightarrow 1\) in a suitable \(\Delta \)-domain,

\[ g(z)\odot h(z) = P(z) + O\bigl(|1-z|^{a+b+1}\bigr), \tag{2.16} \]

for some \( P(z)\in \mathcal {P}_{a+b+1}\).

2.5 Generating Functions for Galton–Watson trees

Let \(p_k:={\mathbb P{}}(\xi =k)\) denote the values of the probability mass function for the offspring distribution \(\xi \), and let \(\Phi \) be its probability generating function:

\[ \Phi(z) := \mathbb{E}\, z^{\xi} = \sum_{k=0}^\infty p_k z^k. \tag{2.17} \]

Similarly, let \(q_n:={\mathbb P{}}(|\mathcal {T}|=n)\), and let y denote the corresponding probability generating function:

\[ y(z) := \mathbb{E}\, z^{|\mathcal{T}|} = \sum_{n=1}^\infty q_n z^n. \tag{2.18} \]

As is well known, then

\[ y(z) = z\,\Phi\bigl(y(z)\bigr). \tag{2.19} \]

Under our assumptions \({\mathbb E{}}\xi =1\) and \(0<{\text {Var}}\xi <\infty \), the generating function y(z) extends analytically to a \(\Delta \)-domain and is, thus, \(\Delta \)-analytic; see [12, Lemma A.2] and [5, §12.1] (and under stronger assumptions [9, Theorem VI.6, p. 404]). Furthermore, see again [12, Lemma A.2], there exists a \(\Delta \)-domain where \(|y(z)|<1\), and thus, \(\Phi (y(z))\) is \(\Delta \)-analytic, as well as \(\Phi ^{(m)}\bigl (y(z)\bigr )\) for every \(m\geqslant 1\).
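
The functional equation \(y(z)=z\Phi (y(z))\) determines the coefficients \(q_n\) recursively, which gives a simple way to compute the size distribution of \(\mathcal {T}\) exactly. The sketch below iterates the equation on truncated power series with rational coefficients, for the example \(\xi \sim {\text {Bi}}(2,\frac{1}{2})\); the function names are hypothetical, and the closed form \(q_n=\frac{1}{n}\binom{2n}{n-1}4^{-n}\) used for comparison follows from Lagrange inversion for this particular example.

```python
from fractions import Fraction
from math import comb


def mul_trunc(a, b, n):
    """Product of two power series (coefficient lists), truncated to degree < n."""
    out = [Fraction(0)] * n
    for i, x in enumerate(a[:n]):
        if x:
            for j, w in enumerate(b[: n - i]):
                out[i + j] += x * w
    return out


def tree_size_pgf(p, n):
    """Coefficients q_0, ..., q_{n-1} of y(z) solving y = z * Phi(y), Phi(w) = sum_k p[k] w^k."""
    y = [Fraction(0)] * n
    for _ in range(n):  # each pass determines at least one more coefficient
        phi = [Fraction(0)] * n
        for pk in reversed(p):  # Horner evaluation of Phi(y) on truncated series
            phi = mul_trunc(phi, y, n)
            phi[0] += pk
        y = [Fraction(0)] + phi[: n - 1]  # multiplication by z
    return y


# Offspring distribution Bi(2, 1/2): p_0 = 1/4, p_1 = 1/2, p_2 = 1/4.
p = [Fraction(1, 4), Fraction(1, 2), Fraction(1, 4)]
q = tree_size_pgf(p, 8)
closed_form = [Fraction(comb(2 * n, n - 1), n * 4 ** n) for n in range(1, 8)]
print(q[1:], closed_form)
```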

We note some useful consequences of our extra moment assumption (1.7); we may without loss of generality assume \(\delta \leqslant 1\). (Compare [5, (12.5), (12.30), and (12.31)] without the assumption (1.7) but with weaker error terms, and [9, Theorem VI.6] with stronger results under stronger assumptions.)

Lemma 2.4

If (1.7) holds with \(0<\delta \leqslant 1\), then, for z in some \(\Delta \)-domain,

In particular, all three functions are \(\Delta \)-analytic.

Proof

That y(z) is \(\Delta \)-analytic was noted above, and the estimate (2.20) was shown in [5, Lemma 12.15]. A differentiation then yields (2.21) in a smaller \(\Delta \)-domain, using Cauchy’s estimates for a disk with radius \(c|1-z|\) centered at z (see [9, Theorem VI.8, p. 419]).

Note that \(zy'(z)/y(z)\) is analytic in any domain where y is defined and analytic with \(|y(z)|<1\), since then (2.19) holds in the domain and implies that \(y(z)\ne 0\) for \(z\ne 0\), and also that z/y(z) is analytic at \(z=0\). Hence, also \(zy'(z)/y(z)\) is \(\Delta \)-analytic. Finally, (2.22) follows from (2.20) and (2.21). \(\square \)

By (2.7), and using \(\Gamma (-1/2)=-2\sqrt{\pi }\), we can rewrite (2.20) as

where (although we do not need it) \(c=1-\zeta (3/2)/\sqrt{2\pi \sigma ^2}\). Furthermore, by (2.4) and singularity analysis [9, Theorem VI.3, p. 390], (2.23) implies

Remark 2.5

It is well known that the asymptotic formula

\[ q_n = \mathbb{P}(|\mathcal{T}|=n) \sim \frac{1}{\sqrt{2\pi \sigma^2}}\, n^{-3/2} \tag{2.25} \]

holds with a weaker error bound than (2.24), assuming only \({\text {Var}}\xi <\infty \) (and \({\mathbb E{}}\xi =1\)); see e.g. [18] (assuming an exponential moment), [15, Lemma 2.1.4], or [13, Theorem 18.11] (with \(\tau =\Phi (\tau )=1\)) and the further references given there. \(\square \)
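
The first-order asymptotics \(q_n\sim (2\pi \sigma ^2)^{-1/2}n^{-3/2}\) can be observed numerically in examples where \(q_n\) has a closed form. The sketch below uses two such examples, taken as known facts for this illustration: \(\xi \sim {\text {Bi}}(2,\frac{1}{2})\) with \(\sigma ^2=\frac{1}{2}\) and \(q_n=\frac{1}{n}\binom{2n}{n-1}4^{-n}\), and \(\xi \sim {\text {Po}}(1)\) with \(\sigma ^2=1\) and \(q_n=e^{-n}n^{n-1}/n!\) (the Borel distribution).

```python
import math


def q_binary(n: int) -> float:
    """P(|T| = n) for offspring distribution Bi(2, 1/2)."""
    return math.comb(2 * n, n - 1) / (n * 4 ** n)


def q_poisson(n: int) -> float:
    """P(|T| = n) for offspring distribution Po(1), evaluated via logarithms."""
    return math.exp((n - 1) * math.log(n) - n - math.lgamma(n + 1))


def limit_constant(sigma2: float) -> float:
    """The constant (2 * pi * sigma^2)^(-1/2) in the asymptotic formula."""
    return 1.0 / math.sqrt(2 * math.pi * sigma2)


n = 2000
ratio_binary = n ** 1.5 * q_binary(n) / limit_constant(0.5)
ratio_poisson = n ** 1.5 * q_poisson(n) / limit_constant(1.0)
print(ratio_binary, ratio_poisson)  # both ratios are close to 1
```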

Lemma 2.6

Assume (1.7) with \(0<\delta \leqslant 1\). Then, for z in some \(\Delta \)-domain,

and, for each fixed \(m\geqslant 3\),

Proof

The assumption (1.7) implies the estimate, see e.g. [5, Lemma 12.14],

By differentiation of (2.30), for the remainder term using Cauchy’s estimates for a disk with radius \((1-|z|)/2\) centered at z, we obtain for all z with \(|z|<1\), and each fixed \(m\geqslant 3\),

For z in a suitable \(\Delta \)-domain we have (2.20), and as a consequence, if \(|1-z|\) is small enough,

The result follows by (2.30)–(2.34). \(\square \)

Remark 2.7

In fact, (2.27) holds without the extra assumption (1.7), assuming only \({\mathbb E{}}\xi ^2<\infty \), because then \(\Phi \) is twice continuously differentiable in the closed unit disk with \(\Phi '(1)=1\), and \(y(z)=1-\sqrt{2}\sigma ^{-1}(1-z)^{1/2}+o(|1-z|^{1/2})\) as is shown in [12, Lemma A.2], see also [5, (12.5)]. \(\square \)

3 The Shape Functional

We consider here the shape functional \(X_n'(0)\). Asymptotics for the mean and variance of this functional were found by Fill [3] in the case of binary search trees under the random permutation model; these are not simply generated trees. Asymptotics for the mean and variance were found by Meir and Moon [16] for simply generated trees under a condition equivalent to our conditioned Galton–Watson trees with \(\xi \) having a finite exponential moment \({\mathbb E{}}e^{r\xi }<\infty \) for some \(r>0\). Pittel [19] showed asymptotic normality in the case of uniform labelled trees [the case \(\xi \sim {\text {Po}}(1)\)] by estimating cumulants. Fill and Kapur [7] considered uniform binary trees [\(\xi \sim {\text {Bi}}(2,\frac{1}{2})\)] and showed asymptotic normality by estimating moments by singularity analysis, see also Fill et al. [4]. Asymptotic normality has recently been shown, by similar methods, also for uniformly random ordered trees [the case \(\xi \sim {\text {Ge}}(\tfrac{1}{2})\)] by Caracciolo et al. [1], who further [personal communication] have extended the results to arbitrary offspring distributions \(\xi \) (with \({\mathbb E{}}\xi =1\) as here), at least provided that \(\xi \) has a finite exponential moment \({\mathbb E{}}e^{r\xi }<\infty \) for some \(r>0\).

We will here extend these results to any offspring distribution \(\xi \) satisfying the standard condition \({\mathbb E{}}\xi =1\) and the weak moment condition (1.7) for some \(\delta >0\). We assume without loss of generality that \(0<\delta \leqslant 1\). We will use singularity analysis to estimate moments, in the same way as [1, 4, 7].

In this section, we define (corresponding to [5, (12.46)])

\[ b_n := \log n - \mu ', \qquad n\geqslant 1, \tag{3.1} \]

where \(\mu '={\mathbb E{}}\log |\mathcal {T}| = \sum _{n=1}^\infty q_n\log n \) as in (1.9), and we let F be the additive functional defined by the toll function \(f(T):=b_{|T|}\). Thus, by (1.6),

\[ F(\mathcal{T}_n) = X_n'(0) - \mu ' n. \tag{3.2} \]

The generating function of \(b_n\) is, by (2.4)–(2.5) and noting \({\text {Li}}_0(z)=z/(1-z)\),

\[ B(z) := \sum_{n=1}^\infty b_n z^n = \text{Li}_{0,1}(z) - \mu '\,\text{Li}_0(z) = \text{Li}_{0,1}(z) - \mu ' z(1-z)^{-1}. \tag{3.3} \]

Hence, by Lemma 2.1 (or [9, Figure VI.11, p. 410] with more terms),

We define the generating functions, for \(\ell \geqslant 1\),

These generating functions can be calculated recursively by the following formula (valid for any sequence \(b_n\)) from [5], where \(A(z)^{\odot \ell }\) denotes the \(\ell ^\textrm{th}\) Hadamard power of a power series A(z).

Lemma 3.1

([5, Lemma 12.4]) For every \(\ell \geqslant 1\),

where \(\mathop {\mathrm {\sum \nolimits ^{**}}}\limits \) is the sum over all \((m+1)\)-tuples \((\ell _0,\dots ,\ell _m)\) of non-negative integers summing to \(\ell \) such that \(1\leqslant \ell _1,\dots ,\ell _m<\ell \).
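
The index set of the sum \(\mathop {\mathrm {\sum \nolimits ^{**}}}\limits \) is easy to enumerate for small \(\ell \), which helps when following the case analysis in the proofs. The sketch below lists, over all \(m\geqslant 0\), the \((m+1)\)-tuples \((\ell _0,\dots ,\ell _m)\) of non-negative integers summing to \(\ell \) with \(1\leqslant \ell _1,\dots ,\ell _m<\ell \); the function names are hypothetical, and the assumption that m ranges over all non-negative integers is ours.

```python
def compositions(total, parts, lo, hi):
    """Yield all tuples of `parts` integers in [lo, hi] that sum to `total`."""
    if parts == 0:
        if total == 0:
            yield ()
        return
    for first in range(lo, min(hi, total) + 1):
        for rest in compositions(total - first, parts - 1, lo, hi):
            yield (first,) + rest


def double_star_tuples(l):
    """All (l_0, ..., l_m), m >= 0, with l_0 >= 0, 1 <= l_i < l for i >= 1, and sum l."""
    for l0 in range(l + 1):
        for m in range(l - l0 + 1):  # each of l_1, ..., l_m is at least 1
            for rest in compositions(l - l0, m, 1, l - 1):
                yield (l0,) + rest


for l in (1, 2, 3):
    print(l, sorted(double_star_tuples(l)))
```

For \(\ell =1\) only the tuple with \(m=0\) and \(\ell _0=1\) remains, and for \(\ell =2\) there are exactly three tuples.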

Note that B(z) is \(\Delta \)-analytic by (3.3); furthermore, \(zy'(z)/y(z)\) and \(\Phi ^{(m)}\bigl (y(z)\bigr )\) are also \(\Delta \)-analytic, see Sect. 2.5. Hence, (3.7) and induction using Lemma 2.3 show that every \(M_\ell (z)\) is \(\Delta \)-analytic.

It will be convenient to denote the sum in (3.7) by \(R_\ell (z)\). Thus,

3.1 The Mean

We begin with the mean \({\mathbb E{}}X'_n(0)\) and the corresponding generating function \(M_1(z)\). The following result includes earlier results for special cases in [1, 3, 4, 7, 16, 19], but our error term is weaker [since we have the weaker moment assumption (1.7)]. Recall that \(\psi (z):=\Gamma '(z)/\Gamma (z)\), and note that

see [17, 5.5.2 and 5.4.13].

Lemma 3.2

Assume (1.7) with \(0<\delta \leqslant 1\). Then, for any \(\varepsilon >0\),

and

Proof

For \(\ell =1\), the sums in (3.7) reduce to a single term with \(m=0\) and \(\ell _0=1\), and thus, as in [5, (12.29)], using (2.19),

By (2.14), (2.15), (3.3), and (2.23), we obtain, using Lemma 2.3 and (3.5) for the error term,

Further, by our choice (1.9) of \(\mu '\),

By (2.7), we have

Moreover, by [9, Theorem VI.7, p. 408] (or [8, Theorem 1]),

Hence, (3.13) and (3.15)–(3.16) yield, using (3.14) to see that the constant terms cancel,

Finally, (3.12), (2.22), and (3.17) yield (3.10).

Since \(L(z)=\sum _{n=1}^\infty z^n/n\), (3.10) yields by standard singularity analysis, recalling the definition (3.6),

Hence, using also (2.24),

and (3.11) follows by (3.2). \(\square \)

Remark 3.3

Under stronger moment conditions on the offspring distribution \(\xi \), we may in the same way obtain an expansion of the mean \({\mathbb E{}}X_n'(0)\) with further terms. For example, if \({\mathbb E{}}\xi ^{3+\delta }<\infty \), then the same argument yields

In the special case of binary trees, this was given in [7, (4.2)]. Note that the coefficient of \(\log n\) in (3.20) vanishes for binary trees, but not in general. \(\square \)

3.2 The Second Moment

Lemma 3.4

Assume (1.7) with \(0<\delta \leqslant 1\). Then, for any \(\varepsilon >0\),

Proof

We use Lemma 3.1 with the notation \(R_\ell (z)\) as in (3.8). For \(\ell =2\), Lemma 3.1 shows using (2.19) that

We consider the three terms separately.

First, by (3.5), (2.20), and Lemma 2.3 (twice), we have

For the remaining two terms, we have to be more careful, since it will turn out that their main terms cancel.

For the second term, we note first that (3.10) implies \(M_1(z)=O\bigl (|1-z|^{-\varepsilon }\bigr )\), and thus, (2.27) yields

Hence, (3.5) and Lemma 2.3 yield

This implies, using (3.5), (3.10), and Lemma 2.3 again, followed by (3.3), and recalling \({\text {Li}}_{0,1}\odot L(z)={\text {Li}}_{0,1}\odot {\text {Li}}_{1,0}(z)={\text {Li}}_{1,1}(z)\),

We use the singular expansion of \({\text {Li}}_{1,1}(z)\):

which follows from [8, p. 380] and is given in [7, p. 96], except that the error term there should be \(O(|(1 - z) L(z)|)\), not \(O(|1 - z|)\). Consequently, (3.26) yields

For the third term in (3.22), we have by (2.28) and (3.10), again using \(M_1(z)=O\bigl (|1-z|^{-\varepsilon }\bigr )\),

Finally, (3.22) yields, by summing (3.23), (3.28) (twice), and (3.29), recalling (3.9),

The result (3.21) now follows by (3.30), (3.8), and (2.22), and replacing \(\varepsilon \) by \(\varepsilon /2\) (as we may because \(\varepsilon \) is arbitrary). \(\square \)

This gives the asymptotics for the second moment of the shape functional. Again, the result includes earlier results for special cases in [1, 3, 7, 16, 19]. Recall from (3.2) that \(F(\mathcal {T}_n)=X_n'(0)-\mu 'n\).

Lemma 3.5

Assume (1.7) with \(\delta >0\). Then

and thus,

Proof

We may assume \(\delta \leqslant 1\). The definition (3.6) and the singular expansion (3.21) yield by standard singularity analysis (using (2.10)–(2.11) or [9, Figure VI.5, p. 388])

Hence, (3.31) follows by (2.24). Finally, (3.32) follows by (3.31) and (3.19). \(\square \)

3.3 Higher Moments

We extend the results above to higher moments, using the method used earlier for special cases in [1, 7]; see also [19] for a different method (in another special case).

We prove the following analogue of [5, Lemma 12.8]. Note that (3.34) is not true for \(\ell =1\), since the leading power of L(z) in that case is \(L(z)^1\) by (3.10). (Also (3.35) fails for \(\ell =1\) in general.)

Lemma 3.6

Assume (1.7) with \(0<\delta \leqslant 1\). Then, for every \(\ell \geqslant 2\), \(M_\ell (z)\) is \(\Delta \)-analytic, and, for any \(\varepsilon >0\),

for some coefficients \(\kappa _{\ell ,j}\) and \(\widehat{\kappa }_{\ell ,j}\). The leading coefficients \(\kappa ^*_{2k}:=\kappa _{2k,k}\) in the case that \(\ell = 2 k\) is even are given by the recursion

Furthermore,

Proof

Note first that (3.34) and (3.35) are equivalent by Lemma 2.1, and that (3.38) follows using (2.11).

We use induction on \(\ell \). The base case \(\ell =2\) (including (3.36)) is Lemma 3.4, so we assume \(\ell \geqslant 3\). We follow the proof of [5, Lemma 12.8], mutatis mutandis.

We first note that \(L(z)=O\bigl (|1-z|^{-\varepsilon }\bigr )\). Hence, for every \(\ell '<\ell \), the induction hypothesis and (for the case \(\ell '=1\)) Lemma 3.2 show that

(Here and in the sequel we replace without further comment, as we may, \(c\varepsilon \) by \(\varepsilon \), for any constant c possibly depending on \(\ell \).) Hence, using Lemma 2.6, for a typical term in (3.7) (with \(m\geqslant 0\)),

Since \(\ell - \ell _0 \geqslant m\), the exponent here is \(<0\). Hence, (3.5) and Lemma 2.3 applied \(\ell _0\) times yield

If \(m=0\), then \(\ell _0=\ell \geqslant 3\), and if \(m=1\), then \(\ell _1<\ell \) and thus, \(\ell _0=\ell -\ell _1\geqslant 1\). Hence, except in the two cases (1) \(m=1\) and \(\ell _0=1\) and (2) \(m=2\) and \(\ell _0=0\), we have \(m+\ell _0\geqslant 3\), and then the exponent in (3.41) is \(\geqslant -\frac{1}{2}\ell +1+\frac{\delta }{2}-\varepsilon \). Consequently, by (3.7)–(3.8),

By (2.27), (3.39), (3.5), and Lemma 2.3, we have, similarly to (3.25),

Hence, using also (2.28) and (again) (3.39), we can simplify (3.42) to

In the remaining estimates, we have to be more careful, in particular since there will be important cancellations. (This is as in the case \(\ell =2\) treated earlier, but somewhat different.)

Consider first the Hadamard product in (3.44) (the case \(m=1\) and \(\ell _0=1\) above). We now use the induction hypothesis in the form (3.35) and obtain by (2.14) and (3.3), using again (3.5) and Lemma 2.3 for the error term, and finally rewriting by (2.8),

where the leading coefficient in the sum is, using (2.9) and (2.11),

The leading term in (3.45) is, thus,

Consider now the terms with \(j=1\) and \(j=\ell -1\) in the sum in (3.44). By Lemma 3.2 and the induction hypothesis, we have

Note that the leading term in (3.48) cancels (3.47). Consequently, (3.45)–(3.48) yield

The remaining terms in (3.44) yield immediately, by the induction hypothesis,

Finally, (3.44) and (3.49)–(3.50) yield

and (3.34) follows by (3.8) and (2.22), which completes the induction step.

It remains only to show the recursion (3.37) for the leading coefficients. If \(\ell =2k\) is even, with \(\ell \geqslant 4\), then (3.49) does not contribute to \(c^{(5)}_{2k,k}\) nor, thus, to \(\kappa _{2k,k}\), and neither do the terms in (3.50) with j odd. Hence, the argument above yields

and, thus, recalling again (2.22),

which is (3.37). \(\square \)

The recursion (3.37) is the same as [5, (C.35)] and, thus, has the same solution [5, (C.40)], i.e.

with, see [5, (C.36)] and (3.36),

This is what we need to complete the proof of the asymptotic normality of \(F(\mathcal {T}_n)\).

Proof of Theorem 1.3

If \(\ell \geqslant 2\), then (3.6), the expansion (3.35), (2.5), and standard singularity analysis yield

Hence, using (2.24),

Consequently,

Furthermore, (3.58) holds also for \(\ell =1\) (with limit 0) by (3.19).

For even \(\ell =2k\), the limit in (3.58) is by (3.38), (3.54), and (3.55), cf. [5, (C.41)],

Consequently, the limits appearing in (3.58) are the moments of a normal distribution \(N\bigl (0,4(1-\log 2)\sigma ^{-2}\bigr )\), and thus, (1.10) follows by the method of moments. (Recall that \(F(\mathcal {T}_n)=X_n'(0)-\mu 'n\) by (3.2).) \(\square \)
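For the final step, recall that a centred normal variable \(N(0,v)\) has moments 0 for odd \(\ell \) and \((\ell -1)!!\,v^{\ell /2}\) for even \(\ell \). The Python sketch below compares this closed form with a numerical integral against the normal density, for \(v=4(1-\log 2)\sigma ^{-2}\). The value `sigma2 = 1.0` is an illustrative assumption (not a value fixed by the paper), and the function names are ours.

```python
import math


def double_factorial(m):
    """m!! for an integer m >= -1, with the convention (-1)!! = 0!! = 1."""
    result = 1
    while m > 1:
        result *= m
        m -= 2
    return result


def normal_moment(ell, variance):
    """E[Z^ell] for Z ~ N(0, variance), by the closed formula."""
    if ell % 2 == 1:
        return 0.0
    return double_factorial(ell - 1) * variance ** (ell // 2)


def normal_moment_numeric(ell, variance, steps=20_000, width=12.0):
    """Trapezoidal approximation of the integral of x^ell against the N(0, variance) density."""
    s = math.sqrt(variance)
    a, b = -width * s, width * s
    h = (b - a) / steps
    total = 0.0
    for i in range(steps + 1):
        x = a + i * h
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * x ** ell * math.exp(-x * x / (2 * variance))
    return total * h / math.sqrt(2 * math.pi * variance)


sigma2 = 1.0  # assumed offspring variance, for illustration only
limit_variance = 4 * (1 - math.log(2)) / sigma2
```

For instance, `normal_moment(4, limit_variance)` and `normal_moment_numeric(4, limit_variance)` should agree to high precision.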

4 Imaginary Powers

In this section, we consider \(X_n(\alpha )\) in (1.1) when the exponent \(\alpha \) is purely imaginary, i.e., \({\text {Re}}\alpha =0\). We exclude the trivial case \(\alpha =0\), when \(X_n(\alpha )=n\) is non-random. We assume throughout the section that \(0<\delta <1\) and that (1.7) holds. As above, \(\varepsilon \) is an arbitrarily small positive number, and we replace \(c\varepsilon \) by \(\varepsilon \) without comment.

We follow rather closely the argument for the case \(0<{\text {Re}}\alpha <1/2\) in [5, §§12.4–6], but we will see new terms appearing that will lead to the dominating terms with logarithmic factors for the moments; this is very similar to the argument in Sect. 3, but we will see some differences. (Notably, there are no cancellations of leading terms like those in Sect. 3.)

As in [5, §12.4], we define

with the following generating function (cf. [5, (12.44)] and (2.7), and note \({\text {Li}}_0(z)=z(1-z)^{-1}\)):

Let now \(F(T)=F_\alpha (T)\) denote the additive functional defined by the toll function \(f_\alpha (T):=b_{|T|}\). Thus,

4.1 The Mean

For the mean, we define the generating function

We then have, as in (3.12) and [5, (12.29)],

Thus, \(M_\alpha (z)\) is \(\Delta \)-analytic. Further, we have by (2.13), (4.2), and (2.23), using (4.4) and Lemma 2.3 for the error term in (2.23), and then using for the second line (2.7) and \(\Gamma (-\frac{1}{2})=-2\sqrt{\pi }\),

Further, similarly to (3.14),

Thus, letting \(z\rightarrow 1\) in (4.8) shows that \(c_2=(B_\alpha \odot y)(1)=0\).

Finally, (4.7), (2.22), and (4.8) yield, using (2.7) again,

Singularity analysis now yields, from (4.6) and (4.11),

and, thus, by (2.24),

Hence, recalling (4.5),

This agrees with [5, Theorem 1.7(ii)] (proved without (1.7), and by different methods), except that the error estimate here is smaller.

4.2 Higher Moments

For higher moments, we need mixed moments for \(\alpha \) and \(\overline{\alpha }=-\alpha \). Thus, somewhat more generally, fix \(\alpha _1\) and \(\alpha _2\) with \({\text {Re}}\alpha _1={\text {Re}}\alpha _2=0\) but \(\alpha _1\ne 0\ne \alpha _2\). We define, for integers \(\ell _1,\ell _2\geqslant 0\), the generating function

Thus, \(M_{1,0}=M_{\alpha _1}\) and \(M_{0,1}=M_{\alpha _2}\) are given by (4.7). The functions \(M_{\ell ,r}\) can then be found by the following recursion, given in [5, (12.75)], for every \(\ell ,r\geqslant 0\) with \(\ell +r\geqslant 1\):

where \(\mathop {\mathrm {\sum \nolimits ^{**}}}\limits \) is the sum over all pairs of \((m+1)\)-tuples \((\ell _0,\dots ,\ell _m)\) and \((r_0,\dots ,r_m)\) of non-negative integers that sum to \(\ell \) and r, respectively, such that \(1\leqslant \ell _i+r_i<\ell + r\) for every \(i\geqslant 1\). (Note that there are two typographical errors in [5]: the lower summation limit should be \(m=0\), and the final qualification “\(i\geqslant 1\)” is missing there.) It follows by induction that every \(M_{\ell ,r}\) is \(\Delta \)-analytic.

We define for convenience \(R_{\ell ,r}(z)\) as the sum in (4.16); thus,

Let us first consider second moments. Taking \(\ell =r=1\) in (4.16) yields, recalling (2.19),

The first term is, by (4.4) and (4.8) (where \(c_2=0\) by (4.9)) together with Lemma 2.3,

For the other terms in (4.18), we first note from (4.10) that \(M_{1,0}(z)=M_{\alpha _1}(z)=O(1)\) and \(M_{0,1}(z)=M_{\alpha _2}(z)=O(1)\). Thus, using also (2.27)–(2.28), (4.4), and Lemma 2.3, we may simplify to

Furthermore, (4.10) yields

We compute the Hadamard products in (4.20) by (2.13), (4.2), and (4.11), using again (4.4) and Lemma 2.3 for the error term. Together with (4.21), this yields from (4.20) a result that we write, using (2.7), as

If \(\alpha _1+\alpha _2\ne 0\), we use (2.7) also on the first term and obtain

On the other hand, if \(\alpha _1+\alpha _2=0\), we recall that \({\text {Li}}_1(z)=L(z)\), and thus, (4.22) yields

We can now obtain \(M_{1,1}(z)\) from (4.23)–(4.24) by (4.17) and (2.22). We do not state the result separately, but proceed immediately to a general formula.

Lemma 4.1

Let \(\alpha \ne 0\) with \({\text {Re}}\alpha =0\), and take \(\alpha _1=\alpha \) and \(\alpha _2=\overline{\alpha }=-\alpha \). Then, for each pair of integers \(\ell ,r\geqslant 0\) with \(\ell +r\geqslant 2\), \(M_{\ell ,r}(z)\) is \(\Delta \)-analytic and we have, for some coefficients \(\varkappa _{\ell ,r;j,k}\) and \(\widehat{\varkappa }_{\ell ,r;j,k}\), and every \(\varepsilon >0\),

where the sums are over integers j and k with \(-\ell \leqslant j\leqslant r\) and \(0\leqslant k\leqslant \ell \wedge r\).

Furthermore, if \(\ell +r=1\), then (4.25) holds (but not (4.26)).

If \(\ell =r\), then the only non-zero coefficients with \(k=\ell =r\) are

where \(\varkappa ^*_\ell \) is given by the recursion

Proof

Note first that for \(\ell +r=1\), (4.25) follows from (4.10). (We see also from (4.11) that (4.26) would hold if we add a constant term; the problem is that \({\text {Li}}_1(z)\) is L(z) and not a constant.)

Assume in the rest of the proof that \(\ell +r\geqslant 2\). Then the expansions (4.25) and (4.26) are equivalent by Lemma 2.1; furthermore, for the leading terms, (4.27) and (4.28) are equivalent by (2.11).

Consider next the case \(\ell +r=2\). If \((\ell ,r)=(2,0)\) or (0, 2), we can obtain the functions \(M_{2,0}(z)\) and \(M_{0,2}(z)\) as special cases of \(M_{1,1}(z)\) where \(\alpha _1 = \alpha _2 = \pm \alpha \) and, thus, use (4.23) with \(\alpha _1=\alpha _2=\pm \alpha \) and obtain (4.25) by (4.17) and (2.22). (Now only terms with \(k=0\) appear.)

If \(\ell =r=1\), we similarly use (4.24), (4.17), and (2.22) and obtain (4.25) including a single term with \(k=1\), viz. \(\varkappa _{1,1;0,1}L(z)(1-z)^{-1/2}\) with \(\varkappa _{1,1;0,1}\) given by (4.27) and (4.29).

For \(\ell +r\geqslant 3\), we use induction on \(\ell +r\). By the induction hypothesis (4.25) (including the case \(\ell +r=1\) just proved by (4.10)), we have for every \((\ell ',r')\) with \(1\leqslant \ell '+r'<\ell +r\),

Consequently, for a typical term in (4.16), as in (3.40) and using again Lemma 2.6,

Again the exponent here is \(<0\), and it follows by (4.4) and Lemma 2.3 that

As in the proof of Lemma 3.6, except in the two cases (1) \(m=1\) and \(\ell _0+r_0=1\) and (2) \(m=2\) and \(\ell _0=r_0=0\) we have \(m+\ell _0+r_0\geqslant 3\), and then the exponent in (4.33) is \(\geqslant -\frac{1}{2}(\ell +r)+1+\frac{\delta }{2}-\varepsilon \). Consequently, by (4.16)–(4.17),

As in (3.42)–(3.44) and (4.18)–(4.20), this can be simplified, using (2.27)–(2.28), (4.31), (4.4), and Lemma 2.3, and we obtain

By the induction hypothesis in the form (4.26) and (4.2), using as always Lemma 2.3 for the error term, we have

summing over \(-(\ell -1)\leqslant j\leqslant r\) and \(0\leqslant k\leqslant (\ell -1)\wedge r\). By (2.14), this can be rearranged as follows:

now summing over \(-\ell \leqslant j\leqslant r\) and \(0\leqslant k\leqslant (\ell -1)\wedge r\). By Lemma 2.1, this can also be written

still summing over \(-\ell \leqslant j\leqslant r\) and \(0\leqslant k\leqslant (\ell -1)\wedge r\).

By symmetry, \(B_{\alpha _2}(z) \odot M_{\ell ,r-1}(z) \) can also be written as (4.38) (with different coefficients \(c^{(2)}_{\ell ,r;j,k}\)), now summing over \(-\ell \leqslant j\leqslant r\) and \(0\leqslant k\leqslant \ell \wedge (r-1)\).

Finally, the double sum in (4.35) can by the induction hypothesis (4.25) also be written as (4.38), summing over \(-\ell \leqslant j\leqslant r\) and \(0\leqslant k\leqslant \ell \wedge r\).

Consequently, (4.35) yields

summing over \(-\ell \leqslant j\leqslant r\) and \(0\leqslant k\leqslant \ell \wedge r\). By (4.17) and (2.22), this implies (4.25), which completes the induction proof of (4.25)–(4.26).

Now consider the case \(\ell =r\geqslant 2\). We see that then the only terms above with \(k=\ell =r\) come from the double sum in (4.35); moreover, they appear only for terms there with \(i=j\), and we obtain by induction that the only non-zero coefficient in (4.39) with \(k=\ell \) is, using (4.27),

Hence, when deriving (4.25) from (4.39) by (4.17) and (2.22), we also find that the only non-zero coefficient with \(k=\ell \) is

This proves (4.27) and (4.30). \(\square \)

The recursion (4.30) is the same as [5, (D.6)], and thus has the same solution [5, (D.10)]

with, by [5, (D.9)] and (4.29),

Proof of Theorem 1.4

We have \(\alpha =\textrm{i}t\). If \(\ell +r\geqslant 2\), then (4.15), (4.26), (2.5), and singularity analysis yield

When \(\ell =r\), we find more precisely

Hence, using (2.24) and (4.28),

Consequently,

Furthermore, (4.47) holds also for \(\ell +r=1\) by (4.13).

For \(\ell =r\), the limit in (4.47) is by (4.42) and (4.43), cf. [5, (D.11)],

Consequently, by (2.1), the limits in (4.47) are the moments of a symmetric complex normal distribution with variance (1.12), and thus, (1.11) follows by the method of moments. (Recall that \(F_\alpha (\mathcal {T}_n)=X_n(\alpha )-\mu (\alpha )n\) by (4.5).)

Finally, the claim in (1.12) that the variance is non-zero follows from the same claim in (1.14) (where the variance is the same up to a factor \(\sigma ^2 / 2\)), which is shown in [5, Theorem D.1, as corrected in the corrigendum]. \(\square \)

4.3 Joint Distributions

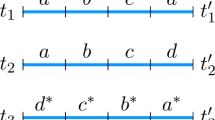

We can extend the arguments above to joint distributions of several \(X_n(\alpha )\) with different imaginary \(\alpha \). Since we have \(X_n(\overline{\alpha })=\overline{X_n(\alpha )}\), it suffices to consider the case \({\text {Im}}\alpha >0\). In this case, different \(X_n(\alpha )\) are asymptotically independent, as is stated more precisely in the following theorem.
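The symmetry \(X_n(\overline{\alpha })=\overline{X_n(\alpha )}\) and the trivial case \(X_n(0)=n\) are easy to check on a concrete tree. The following Python sketch does so for a small rooted tree given by a parent array; the encoding, the example tree, and the function names are our own illustrative choices.

```python
import cmath
import math


def subtree_sizes_from_parents(parents):
    """Sizes of all fringe subtrees of a rooted tree.

    parents[v] is the parent of vertex v; vertex 0 is the root (parents[0] == -1),
    and we assume parents[v] < v for every v >= 1.
    """
    sizes = [1] * len(parents)
    for v in range(len(parents) - 1, 0, -1):
        sizes[parents[v]] += sizes[v]
    return sizes


def power_sum(parents, alpha):
    """X(alpha): the sum of |T_v|^alpha over all vertices v, for complex alpha."""
    return sum(cmath.exp(alpha * math.log(s)) for s in subtree_sizes_from_parents(parents))


# A tree on 6 vertices: the root 0 has children 1 and 2; vertex 1 has children 3 and 4;
# vertex 4 has the single child 5.
example = [-1, 0, 0, 1, 1, 4]
t = 1.7
x_plus = power_sum(example, 1j * t)
x_minus = power_sum(example, -1j * t)
```

Each summand \(|T_v|^{\textrm{i}t}\) has modulus 1, so \(|X_n(\textrm{i}t)|\leqslant n\) always; the randomness lies entirely in the phases.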

Theorem 4.2

For any finite set \(t_1,\dots ,t_r\) of distinct positive numbers, the complex random variables \(\bigl (X_n(\textrm{i}t_k)-\mu (\textrm{i}t_k)n\bigr )/\sqrt{n\log n}\) converge, as \({n\rightarrow \infty }\), jointly in distribution to independent symmetric complex normal variables \(\zeta _{\textrm{i}t_k}\) with variances given by (1.12).

This can be interpreted as joint convergence (in the product topology) of the entire family \(\{X_n(\textrm{i}t):t>0\}\) of random variables, after normalization, to an (uncountable) family of independent symmetric complex normal variables \(\zeta _{\textrm{i}t}\). As said in Remark 1.8, this behavior is strikingly different from the cases \({\text {Re}}\alpha <0\) and \({\text {Re}}\alpha >0\), where we have joint convergence to analytic random functions of \(\alpha \).

Proof

We argue as above, using the method of moments and singularity analysis of generating functions, with mainly notational differences. We give only a sketch, leaving further details to the reader.

For a sequence of arbitrary non-zero imaginary numbers \(\alpha _1,\dots ,\alpha _\ell \) (allowing repetitions), define the generating function

When \(\ell =1\) and 2, these are the same as \(M_{\alpha _1}(z)\) or \(M_{1,1}(z)\) in the notation used above. The recursion (4.16) extends as follows. We write again

Then, by a straightforward extension of the proof of [5, Lemma 12.4], cf. (4.16),

where we sum over all partitions of \([\ell ]:=\{1,\dots ,\ell \}\) into an ordered sequence of \(m+1\) sets \(I_0,\dots ,I_m\) with \(I_1,\dots ,I_m\) neither empty nor equal to the full set \([\ell ]\) (while \(I_0\) may be empty or equal to \([\ell ]\)); here the indices \(i_1,\dots ,i_q\) are defined by \(I_0=\{i_1,\dots ,i_q\}\), and, for \(1\leqslant j\leqslant m\), \(A_j\) is the sequence \((\alpha _i:i\in I_j)\).

As in Lemma 4.1, it follows by induction that for any sequence \(A=(\alpha _1,\dots ,\alpha _\ell )\) of length \(|A|=\ell \geqslant 2\),

where we sum over \(0\leqslant k\leqslant \ell /2\) and all \(\beta \) such that \(-\beta \) equals the sum of some subsequence of A. (The two expansions are equivalent by Lemma 2.1.) The base case \(\ell =2\) follows from (4.23)–(4.24) by (4.17) and (2.22); note also that (4.52) (but not (4.53)) holds for \(\ell =1\) by (4.10). The induction then proceeds for \(\ell \geqslant 3\) as in the proof of Lemma 4.1; note that the notation has changed slightly: \(\ell \) here corresponds to \(\ell +r\) there (so r should now be ignored), and \(q=\ell _0 \). With these and other notational changes, (4.31)–(4.33) still hold, and as there the only significant contributions in (4.51) come from the cases (1) \(m=1\) and \(q=1\) and (2) \(m=2\) and \(q=0\); it follows as in (4.36)–(4.39) that (4.52) and (4.53) hold for all \(\ell \). Moreover, we see from (4.36) that for the terms with \(m=1\) and \(q=1\), and thus, \(|A_1|=\ell -1\), the index (exponent) k is not increased, and thus, by induction, these terms only contribute to \(k\leqslant (\ell -1)/2<\ell /2\). Hence, terms with \(k=\ell /2\) come only from the case \(m=2\) and \(q=0\) in (4.51), when the sequence A (regarded as a multiset) is partitioned into two nonempty parts \(A_1\) and \(A_2\); each such partition yields the contribution \(\frac{1}{2}M_{A_1}(z)M_{A_2}(z)\) + lower order terms to (4.51). Furthermore, it follows that contributions to \(\varkappa _{A;\beta ,k}\) with \(k=\ell /2\) come only from \(\varkappa _{A_j;\beta _j,k_j}\) (with \(j=1,2\)) where \(k_j=|A_j|/2\). This is obviously possible only when both \(\ell _j:=|A_j|\) are even, and an induction shows, again using (4.23)–(4.24) for the base case \(\ell =2\), that the contribution is non-zero only if A is balanced in the sense that it can be partitioned into \(\ell /2\) pairs \(\{\alpha _i,-\alpha _i\}\); moreover, we must have \(\beta =0\). We now write \(\varkappa ^*_A:=\varkappa _{A;0,k}\) if A is balanced with \(|A|=2k\). 
(We let \(\varkappa ^*_A:=0\) if A is not balanced.) For \(|A|\geqslant 4\), we, thus, obtain the recurrence, from the case \(m=2\) and \(q=0\) in (4.51), and recalling (4.50) and (2.22),

summing over all partitions of A into two nonempty sets \(A_1\) and \(A_2\) that both are balanced.

It follows by induction from (4.54) that if \(|A|=2k\geqslant 2\), then \(\varkappa ^*_A\) can be written as a sum

where we sum over full binary trees with k leaves, where each leaf is labelled by a pair \(I_j\) of indices such that \(I_1,\dots ,I_k\) form a partition of [2k], and furthermore the corresponding sets \(A_j\) are balanced, i.e., \(\alpha _i+\alpha _{i'}=0\) if \(I_j=\{i,i'\}\).

Let \(A=(\alpha _1,\dots ,\alpha _{2k})\) consist of the numbers \(\textrm{i}t_j\) and \(-\textrm{i}t_j\) repeated \(k_j\) times each, for \(j=1,\dots ,r\), where \(t_1,\dots ,t_r\) are distinct and positive; thus, \(|A|=2k\) with \(k=\sum _j k_j\). Then there are \(\prod _j k_j!\) ways to partition A into balanced pairs, and for each binary tree with k leaves, these pairs can be assigned to the k leaves in k! ways. Each tree and each assignment of balanced pairs \(A_i\) gives the same contribution to the sum (4.55), and we obtain, since there are \(C_{k-1}=(2k-2)!/(k!(k-1)!)\) full binary trees with k leaves,

Let \(\sigma ^2_{\textrm{i}t}\) be the variance of \(\zeta _{\textrm{i}t}\) in (1.12). For the case \(A=\{\textrm{i}t,-\textrm{i}t\}\), Lemma 4.1 applies and we have by (4.27) and (4.29), in the present notation,

Hence, (4.56) yields

Since \(\widehat{\varkappa }_{A;0,k}=\Gamma (k-\frac{1}{2})^{-1}\varkappa ^*_A\), we finally obtain from (4.53), using (2.24), that

which equals the corresponding mixed moment \({\mathbb E{}}\bigl (\zeta _{\alpha _1}\cdots \zeta _{\alpha _{2k}}\bigr ) =\prod _j{\mathbb E{}}|\zeta _{\textrm{i}t_j}|^{2k_j}\), see (2.1). Similarly, all mixed moments with unbalanced indices converge after normalization to 0. Hence, the result follows by the method of moments. \(\square \)

Note that the combinatorial argument in the final part of the proof (restricted to the case \(r=1\)) yields an alternative proof that the recursion (4.41) is solved by (4.42)–(4.43). Conversely, the argument above without detailed counting of possibilities shows that the left-hand side of (4.59) converges to \(c_k\) times the right-hand side, for some combinatorial constant \(c_k\) not depending on \(k_1,\dots ,k_r\). Since (4.47) shows that the formula is correct for \(r=1\), we must have \(c_k=1\), and thus, (4.59) holds.
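The two counts used in the proof of Theorem 4.2 can be confirmed by brute force for small cases: the number \(C_{k-1}=(2k-2)!/(k!(k-1)!)\) of full binary trees with k leaves, and the number \(\prod _j k_j!\) of ways to partition A into balanced pairs. In the Python sketch below, the numbers \(\pm \textrm{i}t_j\) are encoded by the integers \(\pm j\), which is an encoding of ours; all function names are likewise illustrative.

```python
from functools import lru_cache
from math import factorial, prod


@lru_cache(maxsize=None)
def full_binary_trees(k):
    """Number of full binary (plane) trees with k leaves, by splitting at the root."""
    if k == 1:
        return 1
    return sum(full_binary_trees(i) * full_binary_trees(k - i) for i in range(1, k))


def catalan_formula(k):
    """C_{k-1} = (2k-2)! / (k! (k-1)!)."""
    return factorial(2 * k - 2) // (factorial(k) * factorial(k - 1))


def balanced_pairings(values):
    """Number of partitions of the index set into pairs {i, i'} with values[i] + values[i'] == 0."""
    def rec(remaining):
        if not remaining:
            return 1
        first, rest = remaining[0], remaining[1:]
        total = 0
        for pos, other in enumerate(rest):
            if values[first] + values[other] == 0:
                total += rec(rest[:pos] + rest[pos + 1:])
        return total
    return rec(tuple(range(len(values))))


def balanced_sequence(multiplicities):
    """The sequence A with +j and -j (standing for +i t_j and -i t_j) repeated k_j times each."""
    values = []
    for j, k_j in enumerate(multiplicities, start=1):
        values += [j] * k_j + [-j] * k_j
    return values


ks = (3, 2)
pairings = balanced_pairings(balanced_sequence(ks))
expected = prod(factorial(k_j) for k_j in ks)
```

Always pairing the smallest remaining index first ensures that each partition into pairs is counted exactly once.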

5 Negative Real Part

In this section, we consider the case that \(\alpha \) in (1.1) has negative real part. Applying the same approach as in previous sections, we prove convergence of all moments for the normalized random variable. As before, we assume throughout the section that (1.7) holds with \(0<\delta <1\). Again, we set

with the generating function

In contrast to Sect. 4, the term \(\mu (\alpha ){\text {Li}}_0(z) = \mu (\alpha )z(1-z)^{-1}\) now dominates. For later convenience, we let \(\eta := \min (-{\text {Re}}\alpha ,\,\delta /2)\), and note that \(0<\eta <\frac{1}{2}\) (assuming again \(\delta <1\) as we may). Then (2.7) implies

This is even true for \(\alpha \in \{-1,-2,\dots \}\), where logarithmic terms occur in the asymptotic expansion of \({\text {Li}}_{-\alpha }\), due to the aforementioned fact that \(\eta < \frac{1}{2}\).

Once again, we let \(F(T)=F_\alpha (T)\) denote the additive functional defined by the toll function \(f_\alpha (T):=b_{|T|}\), so that

5.1 The Mean

We use the same notation for the generating function of the mean as in Sect. 4, i.e.,

and note that (4.7) still holds:

Thus, \(M_\alpha (z)\) is still \(\Delta \)-analytic. In analogy with (4.8), we now have

Moreover, (4.9) still holds, so \(c_{2} = 0\). Combining this with (2.22) now yields

Applying singularity analysis and (2.24), we find that

or equivalently

5.2 Higher Moments

As in Sect. 4.2, we consider the mixed moments of \(F_{\alpha _1}(\mathcal {T}_n)\) and \(F_{\alpha _2}(\mathcal {T}_n)\) for two complex numbers \(\alpha _1\) and \(\alpha _2\) that are now both assumed to have negative real part. In particular, this includes the special case that \(\alpha _2 = \overline{\alpha }_1\). We are, thus, interested in the generating function

for integers \(\ell _1,\ell _2 \geqslant 0\), cf. (4.15). In particular, we have \(M_{1,0}=M_{\alpha _1}\) and \(M_{0,1}=M_{\alpha _2}\). Set \(\eta := \min (-{\text {Re}}\alpha _1,-{\text {Re}}\alpha _2,\,\delta /2)\) (again noting that \(\eta < \frac{1}{2}\)). Then by (5.8) we have

In order to deal with higher moments, we make use of the recursion (4.16). Let us start with second-order moments: here, we obtain

In view of (5.8), (2.20), (2.27), and (2.28), the functions y(z), \(zM_{0,1}(z)\Phi '\bigl (y(z)\bigr )\), \(zM_{1,0}(z)\Phi '\bigl (y(z)\bigr )\), and \(z M_{1,0}(z)M_{0,1}(z) \Phi ''\bigl (y(z)\bigr )\) are all of the form \(c + O\bigl (|1-z|^{\eta }\bigr )\), and taking the Hadamard product with \(B_{\alpha _1}(z)\) or \(B_{\alpha _2}(z)\) does not change this property. Combining this with (2.22) we conclude that there is a constant \(\varkappa _{1,1}\) such that

which implies by virtue of singularity analysis and (2.24) that

We can obtain the functions \(M_{2,0}(z)\) and \(M_{0,2}(z)\) as special cases of \(M_{1,1}(z)\) where \(\alpha _1 = \alpha _2\). Hence there are also constants \(\varkappa _{2,0}\) and \(\varkappa _{0,2}\) such that

and

and, thus,

We will use these as the base case of an inductive proof of the following lemma.

Lemma 5.1

Suppose that \({\text {Re}}\alpha _1 < 0\) and \({\text {Re}}\alpha _2 < 0\), and let

be as above. Then, for all non-negative integers \(\ell \) and r with \(s = \ell +r \geqslant 1\), the function \(M_{\ell ,r}(z)\) is \(\Delta \)-analytic and we have

where \(\widehat{\varkappa }_{1,0}=\sigma ^{-2}\mu (\alpha _1)\), \(\widehat{\varkappa }_{0,1}=\sigma ^{-2}\mu (\alpha _2)\), and, for \(s\geqslant 2\),

if s is even, and \(\widehat{\varkappa }_{\ell ,r} = 0\) otherwise.

Proof

We prove the statement by induction on \(s = \ell + r\). Note that (5.12) as well as (5.14), (5.16), and (5.17) are precisely the cases \(s=1\) and \(s = 2\), respectively.

For the induction step, we take \(s \geqslant 3\) and use recursion (4.16). It follows immediately from this recursion that all \(M_{\ell ,r}\) are \(\Delta \)-analytic, so we focus on the asymptotic behavior at 1. Let us first consider the product

where all \(\ell _i\) and \(r_i\) are non-negative integers, \(1 \leqslant \ell _i + r_i < s\) for every \(i \geqslant 1\), \(\ell _0 + \ell _1 + \cdots + \ell _m = \ell \), and \(r_0 + r_1 + \cdots + r_m = r\). By the induction hypothesis, \(M_{\ell _i,r_i}(z) = O\bigl (|1-z|^{(1-\ell _i-r_i)/2}\bigr )\) for all \(i \geqslant 1\), which can be improved to \(M_{\ell _i,r_i}(z) = O\bigl (|1-z|^{(1-\ell _i-r_i)/2 + \eta }\bigr )\) if \(\ell _i + r_i\) is odd and greater than 1. Combining with (2.29), we obtain

for \(m \geqslant 3\). By (5.3) and repeated use of Lemma 2.3, this estimate continues to hold after taking the Hadamard product with \(B_{\alpha _1}(z)^{\odot \ell _0} \odot B_{\alpha _2}(z)^{\odot r_0}\), and the factor \(\frac{z y'(z)}{y(z)}\) in (4.16) contributes \(-\frac{1}{2}\) to the exponent by (2.22). Since \(\ell _0\) and \(r_0\) are non-negative, it follows that the total contribution of all terms with \(m \geqslant 3\) is \(O\bigl (|1-z|^{(1- s)/2 + \eta }\bigr )\) and, thus, negligible. We can, therefore, focus on the cases \(m = 0\), \(m=1\), and \(m=2\). Here, \(\Phi ^{(m)}\bigl (y(z)\bigr )\) is O(1) in all cases by (2.26)–(2.28), and we obtain

Terms with \(m + \ell _0 + r_0 \geqslant 3\) are negligible for the same reason as before. Likewise, terms with \(m + \ell _0 + r_0 = 2\) are negligible if at least one of the sums \(\ell _i + r_i\) with \(i \geqslant 1\) is odd and greater than 1, as we can then improve the bound on \(M_{\ell _i,r_i}(z)\). Let us determine all remaining possibilities:

- \(m = 0\) implies \(m + \ell _0 + r_0 = \ell + r = s \geqslant 3\), so we have already accounted for this negligible case.

- \(m = 1\) gives us \(\ell _0 + \ell _1 = \ell \) and \(r_0 + r_1 = r\) with \(1 \leqslant \ell _1 + r_1 < \ell + r\), thus, \(\ell _0 + r_0 \geqslant 1\). So we have \((\ell _0,\ell _1,r_0,r_1) = (1,\ell -1,0,r)\) and \((\ell _0,\ell _1,r_0,r_1) = (0,\ell ,1,r-1)\) as the only two relevant possibilities in this case.

- Finally, if \(m = 2\), we must have \(\ell _0 = r_0 = 0\) and \(\ell _1 + \ell _2 = \ell \) and \(r_1 + r_2 = r\).

Now we divide the argument into two subcases, according as \(s = \ell + r\) is even or odd.

Odd \(s \geqslant 3\). If \(m = 2\), \(\ell _0 = r_0 = 0\), and \(\ell _1 + \ell _2 + r_1 + r_2 = \ell + r = s\), then either \(\ell _1 + r_1\) or \(\ell _2 + r_2\) is odd. Thus, the corresponding term is asymptotically negligible unless \(\ell _1 + r_1 = 1\) or \(\ell _2 + r_2 = 1\). So in this case, there are only four terms that might be asymptotically relevant:

In addition, \(m = 1\) contributes with two terms as mentioned above. Thus, we obtain

By the induction hypothesis, \(M_{\ell -1,r}(z) = \widehat{\varkappa }_{\ell -1,r} (1-z)^{1-\frac{s}{2}} + O\bigl (|1-z|^{1-\frac{s}{2}+\eta }\bigr )\). Consequently, using (5.8), (2.27), and (2.28), we get

Recall from (5.2) that \(B_{\alpha _1}(z) = {\text {Li}}_{-\alpha _1}(z)-\mu (\alpha _1){\text {Li}}_0(z)\). Applying the Hadamard product gives us, using (2.7), (2.13), and Lemma 2.3,

so the first and third terms in (5.26) effectively cancel, and the same argument applies to the second and fourth terms. Hence we have proven the desired statement in the case that s is odd.

Even \(s \geqslant 4\). In this case, we can neglect the terms with \(m = 1\) and \(\ell _1 + r_1 = \ell + r -1 = s-1\), since \(s-1\) is odd and greater than 1. Thus, only terms with \(m = 2\) and \(\ell _0 = r_0 = 0\) matter. For the same reason, we can ignore all terms where \(\ell _1+r_1\) and \(\ell _2+r_2\) are odd: at least one of them has to be greater than 1, making all such terms asymptotically negligible. Hence we obtain

Let us write \(\mathop {\mathrm {\sum \nolimits ^{\circ }}}\limits \) for the sum in (5.30). Plugging in (2.22), (2.28), and the induction hypothesis, we obtain

Thus, we have completed the induction for (5.20) with

In order to verify the formula (5.21) for \(\widehat{\varkappa }_{\ell ,r}\) given in the statement of the lemma, in light of (5.14), (5.16), and (5.17) we need only show that \(\widehat{\varkappa }_{\ell ,r}\) as defined in (5.21) satisfies the recursion (5.32). This is easy to achieve by means of generating functions, as follows. Set

Setting \(\ell -j = 2a\) and \(r-j = 2b\), this can be rewritten as

The recursion (5.32) now follows by comparing coefficients of \(x^{\ell }y^r\) in the identity

This completes the proof of the lemma. \(\square \)

So the functions \(M_{\ell ,r}(z)\) are amenable to singularity analysis, and we obtain the following theorem as an immediate application.

Theorem 5.2

Suppose that \({\text {Re}}\alpha _1 < 0\) and \({\text {Re}}\alpha _2 < 0\). Then there exist constants \(\varkappa _{2,0}\), \(\varkappa _{1,1}\), and \(\varkappa _{0,2}\) such that, for all non-negative integers \(\ell \) and r,

as \(n \rightarrow \infty \) if \(\ell +r\) is even, and \(\frac{{\mathbb E{}}[F_{\alpha _1}(\mathcal {T}_n)^{\ell }F_{\alpha _2}(\mathcal {T}_n)^{r}]}{n^{(\ell +r)/2}} \rightarrow 0\) otherwise.

Proof

In view of Lemma 5.1, singularity analysis gives us

for \(s = \ell + r \geqslant 2\), so, using (2.24),

Since \(\Gamma ((s-1)/2) =2^{1-(s/2)}\sqrt{\pi }(s-3)!!\) for even s (recall (2.2)), the statement follows immediately from the formula for \(\widehat{\varkappa }_{\ell ,r}\) in Lemma 5.1 for all \(s \geqslant 2\) and from (5.9) for \(s = 1\). \(\square \)
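The Gamma-function identity \(\Gamma ((s-1)/2)=2^{1-(s/2)}\sqrt{\pi }\,(s-3)!!\) for even s is easily checked numerically. The short Python sketch below does this for small even s; it assumes the convention \((-1)!!=1\), and the function names are ours.

```python
import math


def double_factorial(m):
    """m!! for an integer m >= -1, with the convention (-1)!! = 0!! = 1."""
    result = 1
    while m > 1:
        result *= m
        m -= 2
    return result


def gamma_identity_sides(s):
    """Both sides of Gamma((s-1)/2) = 2^(1 - s/2) sqrt(pi) (s-3)!! for an even integer s >= 2."""
    lhs = math.gamma((s - 1) / 2)
    rhs = 2 ** (1 - s / 2) * math.sqrt(math.pi) * double_factorial(s - 3)
    return lhs, rhs


checks = {s: gamma_identity_sides(s) for s in range(2, 21, 2)}
```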

The following lemma will be used in the proof of Theorem 1.5 to establish that the limiting variance is positive. Recall the notation (1.1) and \(q_k = {\mathbb P{}}(|\mathcal {T}| = k)\).

Lemma 5.3

Consider any complex \(\alpha \) with \({\text {Re}}\alpha \ne 0\). Then there exists k such that \(q_k > 0\) and \(F_{\alpha }(\mathcal {T}_k)\) is not deterministic.

Proof

We know that \(p_0 > 0\) and that \(p_j > 0\) for some \(j \geqslant 2\). Fix such a value j. Let \(k = 3 j + 1 \geqslant 7\). Consider two realizations of the random tree \(\mathcal {T}_k\), each of which has positive probability. Tree 1 has j children of the root, and precisely two of those j children have j children each; the other \(j - 2\) have no children. Tree 2 also has j children of the root; precisely one of those j children (call it child 1) has j children, while the other \(j - 1\) have no children; precisely one of the children of child 1 has j children, while the others have no children.

Then the values of \(F_{\alpha }\) for Tree 1 and Tree 2 are, respectively,

and

These values cannot be equal, because otherwise we would have \((j + 1)^{\alpha } = (2 j + 1)^{\alpha }\); but the two numbers here have unequal absolute values. \(\square \)
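The two trees in the proof can be built explicitly, and one can confirm that their subtree sizes differ in exactly one entry: Tree 1 has two fringe subtrees of size \(j+1\), while Tree 2 has one of size \(j+1\) and one of size \(2j+1\). Since both trees have the same size \(k=3j+1\), the centring term \(\mu (\alpha )k\) cancels in the difference, which therefore equals \((j+1)^{\alpha }-(2j+1)^{\alpha }\). The Python sketch below uses a nested-list encoding of rooted trees; the encoding and all function names are illustrative assumptions of ours.

```python
def leaf():
    """A vertex without children."""
    return []


def star(j_children):
    """A vertex with j_children children, all of them leaves."""
    return [leaf() for _ in range(j_children)]


def tree1(j_children):
    """Root with j children; exactly two of them have j (leaf) children each."""
    return [star(j_children), star(j_children)] + [leaf() for _ in range(j_children - 2)]


def tree2(j_children):
    """Root with j children; child 1 has j children, exactly one of which has j (leaf) children."""
    child1 = [star(j_children)] + [leaf() for _ in range(j_children - 1)]
    return [child1] + [leaf() for _ in range(j_children - 1)]


def subtree_sizes(tree):
    """List of the sizes of all fringe subtrees (in postorder, root last)."""
    sizes = []

    def visit(node):
        size = 1 + sum(visit(child) for child in node)
        sizes.append(size)
        return size

    visit(tree)
    return sizes


def power_sum(tree, alpha):
    """Sum of the alpha-th powers of all subtree sizes, for complex alpha."""
    return sum(complex(size) ** alpha for size in subtree_sizes(tree))


j_children = 3
alpha = complex(-0.5, 2.0)  # any alpha with nonzero real part
difference = power_sum(tree1(j_children), alpha) - power_sum(tree2(j_children), alpha)
```

Since \(|x^{\alpha }|=x^{{\text {Re}}\alpha }\) for \(x>0\), the two powers have different moduli whenever \({\text {Re}}\alpha \ne 0\), so `difference` is non-zero.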

Proof of Theorem 1.5

The limit in (5.36) equals the mixed moment \({\mathbb E{}}\bigl [\zeta _1^\ell \zeta _2^r\bigr ]\), where \(\zeta _1\) and \(\zeta _2\) have a joint complex normal distribution and \({\mathbb E{}}\zeta _1^2=\varkappa _{2,0}\), \({\mathbb E{}}\zeta _1\zeta _2=\varkappa _{1,1}\), and \({\mathbb E{}}\zeta _2^2=\varkappa _{0,2}\); this follows by Wick’s theorem [11, Theorem 1.28 or Theorem 1.36] by noting that the factor \(\left( {\begin{array}{c}\ell \\ j\end{array}}\right) \left( {\begin{array}{c}r\\ j\end{array}}\right) j!\, (\ell -j-1)!!\,(r-j-1)!!\) in (5.36) is the number of perfect matchings of \(\ell \) (labelled) copies of \(\zeta _1\) and r copies of \(\zeta _2\) such that there are j pairs \((\zeta _1,\zeta _2)\).

Hence, Theorem 1.5, except for the assertion of positive variance addressed next, follows by the method of moments, taking \(\alpha _1:=\alpha \) and \(\alpha _2:=\overline{\alpha }\), cf. Remark 1.6.
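The matching count used above to identify the limit in (5.36) with a Gaussian mixed moment can be checked by brute force for small \(\ell \) and r. The sketch below enumerates all perfect matchings of \(\ell \) labelled copies of \(\zeta _1\) and r labelled copies of \(\zeta _2\) and tallies them by the number j of mixed pairs; the function names are hypothetical, and the convention \((-1)!! = 1\) is assumed.

```python
from math import comb, factorial

def odd_double_factorial(n):
    """n!! for odd n >= -1, with the convention (-1)!! = 1."""
    result = 1
    while n > 1:
        result *= n
        n -= 2
    return result

def formula(l, r, j):
    """Claimed number of perfect matchings with exactly j mixed pairs."""
    if j > min(l, r) or (l - j) % 2 or (r - j) % 2:
        return 0
    return (comb(l, j) * comb(r, j) * factorial(j)
            * odd_double_factorial(l - j - 1)
            * odd_double_factorial(r - j - 1))

def perfect_matchings(items):
    """Yield every perfect matching of the list `items` as a list of pairs."""
    if not items:
        yield []
        return
    first = items[0]
    for i in range(1, len(items)):
        rest = items[1:i] + items[i + 1:]
        for matching in perfect_matchings(rest):
            yield [(first, items[i])] + matching

def brute_force(l, r):
    """Map j -> number of perfect matchings with exactly j mixed pairs."""
    items = [(1, a) for a in range(l)] + [(2, b) for b in range(r)]
    counts = {}
    for matching in perfect_matchings(items):
        j = sum(1 for u, v in matching if u[0] != v[0])
        counts[j] = counts.get(j, 0) + 1
    return counts
```

For instance, with \(\ell = r = 4\) the formula gives 9, 72 and 24 matchings for \(j = 0, 2, 4\), which sum to \(7!! = 105\), the total number of perfect matchings of eight labelled items.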

We already know from Theorem 5.2 that \({\text {Var}}F_{\alpha }(\mathcal {T}_n) = \gamma ^2\,n + o(n)\) for some \(\gamma \geqslant 0\); we need only show that \(\gamma > 0\). Fix k as in Lemma 5.3. Write \(v_k > 0\) for the variance of \(F_{\alpha }(\mathcal {T}_k)\). Let \(N_{n, k}\) denote the number of fringe subtrees of size k in \(\mathcal {T}_n\). It follows from [14, Theorem 1.5(i)] that

as \(n \rightarrow \infty \). If for \(\mathcal {T}_n\) we condition on \(N_{n, k} = m\) and all of \(\mathcal {T}_n\) except for fringe subtrees of size k, then the conditional variance of \(F_{\alpha }(\mathcal {T}_n)\) is the variance of the sum of m independent copies of \(F_{\alpha }(\mathcal {T}_k)\), namely, \(m v_k\). Thus,

so the constant \(\gamma ^2\) mentioned at the start of this paragraph satisfies \(\gamma ^2 \geqslant v_k q_k > 0\). \(\square \)
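The conditioning step at the end of the proof can be sketched as follows. This is a reading of the argument under two assumptions: that the limit cited from [14, Theorem 1.5(i)] is used in the form \({\mathbb E{}}N_{n,k} = q_k n + o(n)\), and that \(\mathcal {G}\) (a label introduced here) denotes the \(\sigma \)-field generated by \(N_{n,k}\) and by all of \(\mathcal {T}_n\) except its fringe subtrees of size k. By the law of total variance,

$$\begin{aligned} {\text {Var}}F_{\alpha }(\mathcal {T}_n) \geqslant {\mathbb E{}}\bigl [{\text {Var}}\bigl (F_{\alpha }(\mathcal {T}_n) \mid \mathcal {G}\bigr )\bigr ] = v_k\, {\mathbb E{}}N_{n,k} = v_k q_k\, n + o(n). \end{aligned}$$

Comparing this with \({\text {Var}}F_{\alpha }(\mathcal {T}_n) = \gamma ^2\,n + o(n)\) and letting \(n \rightarrow \infty \) gives \(\gamma ^2 \geqslant v_k q_k\).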

Remark 5.4

The same idea used at the end of the proof of Theorem 1.5 can be used to give an answer to a question raised in [14, Remark 1.7], in the special case that the toll function f depends only on tree size. Indeed, the same proof shows that (with no other conditions on f) if F is not deterministic for all fixed tree sizes—more precisely, if \(q_k > 0\) and \(v_k:= {\text {Var}}F(\mathcal {T}_k) > 0\) for some k, then

\(\square \)

Remark 5.5

(a) It is by no means immediately clear that the constant \(\varkappa _{1,1}\) appearing in (5.14)–(5.15) agrees with the value produced in [5, Remark 5.1]. Appendix D provides a reconciliation.

(b) Using the results of Appendix D, in Appendix E we discuss for real \(\alpha < 0\) calculation of the variance in Theorem 1.5. \(\square \)

Remark 5.6

We recall that asymptotic normality of \(X_n(\alpha )\), or equivalently of \(F_{\alpha }(\mathcal {T}_n)\), is already proven in [5, Theorem 1.1]. Furthermore, [5, Section 5] shows joint asymptotic normality for several \(\alpha \) with \({\text {Re}}\alpha <0\), which for the case of two values \(\alpha _1\) and \(\alpha _2\) is consistent with (5.36) (by the argument in the proof of Theorem 1.5 above). It would certainly be possible to generalize the moment convergence results in this section to convergence of mixed moments for combinations of several \(\alpha _i\), similarly to Sect. 4.3, including also the possibility \({\text {Re}}\alpha _i\geqslant 0\) for some values of i. However, this would require a lengthy case distinction (depending on the signs of the values \({\text {Re}}\alpha _i\)), so we did not perform these calculations explicitly. Instead we just note that if we consider only the case \({\text {Re}}\alpha _i<0\), then convergence of all mixed moments follows from the joint convergence in (1.4) for several \(\alpha _i\) shown in [5, Section 5] together with the uniform integrability of \(|n^{-1/2}[X_n(\alpha ) - \mu (\alpha )n]|^r\) for arbitrary \(r>0\) that follows from Theorem 1.5 (see Remark 1.6). \(\square \)

6 Fractional Moments (Mainly of Negative Order) of Tree Size: Comparisons Across Offspring Distributions

Recall from [5, Theorem 1.7] that the \(\alpha \)th moment \(\mu (\alpha ) = {\mathbb E{}}|\mathcal {T}|^{\alpha }\) of tree size defined at (1.8) is the slope in the leading-order linear approximation \(\mu (\alpha ) n\) of \({\mathbb E{}}X_n(\alpha )\) whenever \({\text {Re}}\alpha < \tfrac{1}{2}\); and from Theorem 1.5 that this linear approximation suffices as a centering for \(X_n(\alpha )\) in order to obtain a normal limit distribution when \({\text {Re}}\alpha < 0\). (See also Remark 1.7.) It is, therefore, of interest to compute \(\mu (\alpha )\) and, similarly, the constant \(\mu ' = {\mathbb E{}}\log |\mathcal {T}|\) defined at (1.9), which serves as the centering slope in Theorem 1.3.

In [5, Appendix A] it is noted that although \(\mu (\alpha )\) can be evaluated numerically, no exact values for important examples of Galton–Watson trees are known in any simple form except in the case that \(\alpha \) is a negative integer. This section is motivated by our having noticed that for all such values (for small k) reported for four examples in that appendix, \(\mu (-k)\) is smallest for binary trees [5, Example A.3], second smallest for labelled trees [5, Example A.1], second largest for full binary trees [5, Example A.4], and largest for ordered trees [5, Example A.2]. We wanted to understand why this ordering occurs and whether any such ordering could be predicted for the values \(\mu '\) defined at (1.9).

In Sect. 6.1 we give a sufficient condition ((6.25) in Theorem 6.8) for such (strict) orderings that is fairly easy to check. In Sect. 6.2 we give a class of examples extending the four in [5, Appendix A] where this condition is met. In Sect. 6.3 we discuss numerical computation of \(\mu '\), which we carry out for the four examples in [5, Appendix A] and some additional examples.

The results of Sect. 6 do not require (1.7).

6.1 Comparison Theory

The main results of this section are in Theorem 6.8. Working toward those results, we begin by recalling from [5, (A.6)] (where y is called “g” and (1.7) is not required) that for \({\text {Re}}\alpha < \tfrac{1}{2}\) we have

To utilize (6.1) directly, even merely to obtain inequalities across models for real \(\alpha \), one needs to compute the derivative of the tree size probability generating function y, or at least to compare the functions \(y'\) for the compared models. This is nontrivial, since explicit computation of \(y'\) (or y) is difficult or even infeasible in examples such as m-ary trees and full m-ary trees when \(m > 2\). Fortunately, according to (6.3) (and similarly (6.4) in regard to \(\mu '\)) to follow, one need only treat the simpler offspring probability generating function(s) \(\Phi \).

Before proceeding to our main results, we present a simple lemma, a recasting of (6.1), and a definition.

Lemma 6.1

The function \(t \mapsto t / \Phi (t)\) is the inverse function of \(y:[0,1]\rightarrow [0,1]\), and it increases strictly from 0 to 1 for \(t \in [0, 1]\).

Proof

It is obvious from (2.18) that y(z) is continuous and strictly increasing for \(z\in [0,1]\) with \(y(0)=0\) and \(y(1)=1\). Hence its inverse is also strictly increasing from 0 to 1 on \([0,1]\). Finally, (2.19) shows that the inverse is \(t \mapsto t / \Phi (t)\). \(\square \)
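Lemma 6.1 can be illustrated numerically for ordered trees, \(\xi \sim {\text {Ge}}(\tfrac{1}{2})\), where \(\Phi (t) = 1/(2 - t)\). The sketch below assumes that (2.19) is the usual functional equation \(y(z) = z \Phi (y(z))\), whose solution in this case is \(y(z) = 1 - \sqrt{1 - z}\); the function names and the grid are choices made here.

```python
import math

def Phi(t):
    """Offspring pgf of Ge(1/2), i.e. p_j = 2^{-(j+1)} for j >= 0."""
    return 1.0 / (2.0 - t)

def y(z):
    """Tree-size pgf for ordered trees; solves y = z * Phi(y) on [0, 1]."""
    return 1.0 - math.sqrt(1.0 - z)

def y_inverse(t):
    """The candidate inverse t / Phi(t) from Lemma 6.1."""
    return t / Phi(t)

grid = [i / 1000 for i in range(1001)]

# y(t / Phi(t)) should return t for every t in [0, 1].
max_error = max(abs(y(y_inverse(t)) - t) for t in grid)

# t / Phi(t) should increase strictly from 0 to 1.
values = [y_inverse(t) for t in grid]
is_strictly_increasing = all(a < b for a, b in zip(values, values[1:]))
```

In this example \(t / \Phi (t) = t (2 - t) = 1 - (1 - t)^2\), so both properties can also be read off by hand.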

We will henceforth write

$$\begin{aligned} R(\eta ):= \frac{\Phi (\eta )}{\eta }; \end{aligned}$$(6.2)

this strictly decreasing function R will appear on several occasions in the sequel, especially in Appendix A.2.

It follows from (6.1), Lemma 6.1, a change of variables from t to \(\eta = y(t)\), and (2.19) that

Further, differentiation with respect to \(\alpha \) at \(\alpha = 0\) gives

For the remainder of Sect. 6 we focus on real \(\alpha \) and utilize the following notation.

Definition 6.2

For two real-valued functions \(g_1\) and \(g_2\) defined on (0, 1), write \(g_1 \leqslant g_2\) to mean that \(g_1(t) \leqslant g_2(t)\) for all \(t \in (0, 1)\); write \(g_1 < g_2\) to mean that \(g_1 \leqslant g_2\) but \(g_2 \not \leqslant g_1\) (equivalently, that \(g_1(t) \leqslant g_2(t)\) for all \(t \in (0, 1)\), with strict inequality for at least one value of t); and write \(g_1 \prec g_2\) to mean that \(g_1(t) < g_2(t)\) for all \(t \in (0, 1)\).

Consider two Galton–Watson trees, \(\mathcal {T}^{(1)}\) and \(\mathcal {T}^{(2)}\), with respective offspring distributions \(\xi _1\) and \(\xi _2\). Denote the trees’ respective \(\Phi \)-functions by \(\Phi _1\) and \(\Phi _2\), and use similarly subscripted notation for other functions associated with the trees.

We note in passing that, as a simple consequence of Lemma 6.1 whose proof is left to the reader,

$$\begin{aligned} \Phi _1 \leqslant \Phi _2 \iff y_1 \leqslant y_2, \end{aligned}$$(6.5)

and hence also

The result (6.5) is perhaps of some independent interest but is used in the sequel mainly in the proof of Theorem 6.5.

Theorem 6.3

Consider two Galton–Watson trees, \(\mathcal {T}^{(1)}\) and \(\mathcal {T}^{(2)}\). Suppose

$$\begin{aligned} \Phi _1 \leqslant \Phi _2. \end{aligned}$$(6.7)

(i) If \(\alpha < 0\), then

$$\begin{aligned} \mu _1(\alpha ) \leqslant \mu _2(\alpha ). \end{aligned}$$(6.8)

(ii) If \(0< \alpha < \frac{1}{2}\), then

$$\begin{aligned} \mu _1(\alpha ) \geqslant \mu _2(\alpha ). \end{aligned}$$(6.9)

(iii) The centering constants for the corresponding shape functionals satisfy

$$\begin{aligned} \mu _1' \geqslant \mu _2'. \end{aligned}$$(6.10)

Proof

This is immediate from (6.3) and (6.4). \(\square \)

Note that, by considering difference quotients, each of (i) and (ii) in Theorem 6.3 implies (iii) there; one does not need the stronger hypothesis (6.7) for this conclusion.
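The ordering in Theorem 6.3(i) can be observed numerically for the four examples from [5, Appendix A]. The sketch below rests on assumptions: that (6.3) reads \(\mu (\alpha ) = \Gamma (1 - \alpha )^{-1} \int _0^1 [\log R(\eta )]^{-\alpha } \,\textrm{d}\eta \) with \(R(\eta ) = \Phi (\eta ) / \eta \), as the change of variables described before (6.3) suggests, and that the four offspring laws are parametrized as in the code comments. The midpoint rule, the dictionary `MODELS`, and the function `mu` are choices made for this illustration, which is restricted to real \(\alpha < 0\).

```python
import math

# Offspring pgfs Phi for the four models (parametrizations assumed here).
MODELS = {
    "binary": lambda t: ((1.0 + t) / 2.0) ** 2,      # xi ~ Bi(2, 1/2)
    "labelled": lambda t: math.exp(t - 1.0),         # xi ~ Po(1)
    "full binary": lambda t: (1.0 + t * t) / 2.0,    # xi ~ 2 Bi(1, 1/2)
    "ordered": lambda t: 1.0 / (2.0 - t),            # xi ~ Ge(1/2)
}

def mu(alpha, Phi, n=100_000):
    """Midpoint-rule value of the assumed form of (6.3), for real alpha < 0."""
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        eta = (i + 0.5) * h
        # R(eta) = Phi(eta) / eta >= 1 on (0, 1]; clamp guards against rounding.
        log_R = max(math.log(Phi(eta) / eta), 0.0)
        total += log_R ** (-alpha)
    return total * h / math.gamma(1.0 - alpha)

# Pointwise ordering of the pgfs on a grid in (0, 1).
names = ["binary", "labelled", "full binary", "ordered"]
grid = [i / 200 for i in range(1, 200)]
pgfs_ordered = all(
    MODELS[a](t) <= MODELS[b](t)
    for a, b in zip(names, names[1:])
    for t in grid
)

mu_minus_1 = {name: mu(-1.0, MODELS[name]) for name in names}
```

For \(\alpha = -1\) the integral can be evaluated by hand in all four cases, giving \(2 \log 2 - 1\), \(\tfrac{1}{2}\), \(\tfrac{\pi }{2} - 1\), and \(2 - 2 \log 2\), respectively; the numerical values should agree with these up to the quadrature error, and they increase in the order listed.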

Remark 6.4

The conclusions in Theorem 6.3 do not always extend from \(\mu (\alpha )\) to \({\mathbb E{}}X_n(\alpha )\) for finite n. A counterexample with \(n = 3\) is provided by taking \(\xi _1 \sim 2 {\text {Bi}}(1, \tfrac{1}{2})\) (corresponding to uniform full binary trees, with \(X_3(\alpha )\) concentrated at \(2 + 3^{\alpha }\)) and \(\xi _2 \sim {\text {Ge}}(\tfrac{1}{2})\) (corresponding to ordered trees, with \({\mathbb E{}}X_3(\alpha ) = \frac{3}{2} + \tfrac{1}{2}2^{\alpha } + 3^{\alpha }\)). As shown in Lemma 6.11, we have \(\Phi _1 \leqslant \Phi _2\), but Theorem 6.3(i)–(ii) with \({\mathbb E{}}X_3(\alpha )\) in place of \(\mu (\alpha )\) fails for every value of \(\alpha \), as does (6.10). \(\square \)
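The failure in Remark 6.4 can be read off from the difference of the two means stated there. Writing \(X_3^{(1)}\) and \(X_3^{(2)}\) (notation introduced here) for the functional under \(\xi _1\) and \(\xi _2\), respectively, we have

$$\begin{aligned} {\mathbb E{}}X_3^{(2)}(\alpha ) - {\mathbb E{}}X_3^{(1)}(\alpha ) = \bigl (\tfrac{3}{2} + \tfrac{1}{2} 2^{\alpha } + 3^{\alpha }\bigr ) - \bigl (2 + 3^{\alpha }\bigr ) = \tfrac{1}{2} \bigl (2^{\alpha } - 1\bigr ). \end{aligned}$$

This difference is negative for \(\alpha < 0\) and positive for \(\alpha > 0\), the opposite of the inequalities (6.8) and (6.9). Its derivative at \(\alpha = 0\) is \(\tfrac{1}{2} \log 2 > 0\), the opposite of (6.10).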

The converse to Theorem 6.3 fails. That is, the conclusions of Theorem 6.3(i)–(ii) do not imply (6.7). A counterexample is provided in Appendix A.2. However, as the next theorem shows, (6.7) has for \(\alpha < 0\) a stronger consequence than Theorem 6.3(i), and this stronger consequence yields a converse result:

Theorem 6.5

We have

if and only if (6.7) holds, in which case the inequality in (6.11) also holds for all real \(\alpha < 0\) and all \(t \in (0, \infty )\).

Proof

Setting \(\alpha = -k\) in (6.3) and summing over positive integers k, for complex z in the open unit disk let us define the function H as at [5, (A.7)]:

Changing variables (back) from \(\eta \) to \(t = y^{-1}(\eta ) = 1 / R(\eta )\), we then find

with the last equality, resulting from integration by parts, as noted at [5, (A.9)]; thus,

Since both the first and third expressions in (6.14) are analytic for all z with \({\text {Re}}z < 1\), they are equal in this halfplane. Changing variables, we then find, for \({\text {Re}}z > -1\), that

In particular, if (6.7) holds, then (recalling (6.5))

for real \(t > 0\).

But more is true. Let \(\Delta (z):= e^z [y_2(e^{-z}) - y_1(e^{-z})]\). Then for \(t > 0\) we have that

is the Laplace transform of the bounded continuous function \(\Delta \) on \((0, \infty )\). It follows from the Bernstein–Widder theorem (e.g., [2, Theorem XIII.4.1a]) that h satisfies the (weak) complete monotonicity inequalities (6.11), i.e.,

if and only if \(\Delta (x) \geqslant 0\) for a.e. \(x > 0\), which in turn is true if and only if \(y_1 \leqslant y_2\), or (by (6.5)) equivalently (6.7), holds.

Next, if (6.7) holds, then for real \(\alpha < 0\) and \(t \in (0, 1]\) we have

where the inequality holds by Theorem 6.3(i).

Finally, if (6.7) holds, then for real \(\alpha < 0\) and \(t > 1\), Theorem B.1 in Appendix B implies that for \(j \in \{1, 2\}\) we have

where \(c_j\) is the incomplete gamma function value

and from (6.20) it is evident that \({\mathbb E{}}(|\mathcal {T}_1| - 1 + t)^{\alpha } \leqslant {\mathbb E{}}(|\mathcal {T}_2| - 1 + t)^{\alpha }\). \(\square \)

Remark 6.6

This remark concerns sufficient conditions for (6.7) (equivalently, by (6.5), for \(y_1 \leqslant y_2\)).

(a) The condition

is stronger than \(y_1 \leqslant y_2\) and is of course equivalent to the condition that

for every non-negative nondecreasing function g defined on the positive integers. In particular, (6.22) implies the conclusions of Theorem 6.3 and (6.11) in Theorem 6.5.

Note, however, that (6.22) is strictly stronger than \(y_1 \leqslant y_2\). While the stronger condition (6.22) holds for some of the comparisons in Sect. 6.2 (for example, binary trees vs. labelled trees, for which there is monotone likelihood ratio (MLR); and full binary trees vs. ordered trees, for which there is no MLR but still stochastic ordering), an example satisfying (6.7) (see Lemma 6.11 for a proof) but not (6.22) is \(\xi _1 \sim {\text {Po}}(1)\) (labelled trees) and \(\xi _2 \sim 2 {\text {Bi}}(1, \tfrac{1}{2})\) (full binary trees), because \({\mathbb P{}}(|\mathcal {T}^{(1)}| \leqslant 2) = e^{-1} + e^{-2} > \frac{1}{2} = {\mathbb P{}}(|\mathcal {T}^{(2)}| \leqslant 2)\).
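The example of labelled versus full binary trees can be checked directly from the tree-size distributions. The sketch below uses two standard closed forms, stated here as assumptions about the setup: for \(\xi \sim {\text {Po}}(1)\) the tree size has the Borel distribution \(q_k = e^{-k} k^{k-1} / k!\), and for \(\xi \sim 2 {\text {Bi}}(1, \tfrac{1}{2})\) one has \(q_{2m+1} = C_m / 2^{2m+1}\) with \(C_m\) the mth Catalan number. The helper names and the truncation at 400 terms are choices made for this illustration.

```python
import math

def q_labelled(k):
    """P(|T| = k) for xi ~ Po(1): Borel distribution, computed via logarithms."""
    return math.exp(-k + (k - 1) * math.log(k) - math.lgamma(k + 1))

def q_full_binary(k):
    """P(|T| = k) for xi ~ 2 Bi(1, 1/2): Catalan(m) / 2^(2m+1) when k = 2m + 1."""
    if k % 2 == 0:
        return 0.0
    m = (k - 1) // 2
    return math.comb(2 * m, m) / (m + 1) / 2.0 ** (2 * m + 1)

def cdf(q, k):
    """P(|T| <= k) for the size distribution q."""
    return sum(q(i) for i in range(1, k + 1))

def pgf(q, t, terms=400):
    """Truncated series for y(t) = E t^{|T|}; adequate for t well below 1."""
    return sum(q(k) * t ** k for k in range(1, terms + 1))

# The distribution functions cross, so the tree sizes are not stochastically ordered.
crosses = (cdf(q_labelled, 1) < cdf(q_full_binary, 1)
           and cdf(q_labelled, 2) > cdf(q_full_binary, 2))

# The generating functions are nevertheless ordered at these sample points.
sample_points = [0.1, 0.3, 0.5, 0.7, 0.9]
y_ordered = all(pgf(q_labelled, t) <= pgf(q_full_binary, t) for t in sample_points)
```

The crossing occurs between sizes 1 and 2: \(e^{-1} < \tfrac{1}{2}\), while \(e^{-1} + e^{-2} > \tfrac{1}{2}\).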

(b) Similarly, the condition

is stronger than (6.7); indeed, it’s even stronger than (6.22). But this stochastic ordering of offspring distributions can only hold if \(\xi _1\) and \(\xi _2\) have the same distribution, because \({\mathbb E{}}\xi _1 = {\mathbb E{}}\xi _2 = 1\). \(\square \)

Remark 6.7

This remark concerns necessary conditions for (6.7).

(a) If (6.7) holds, then by a Taylor expansion near \(t = 1\) [12, (A.6)] (or, alternatively, recalling (6.5), by \(y_1 \leqslant y_2\) and [12, (A.5)]), \(\sigma _1^2 \leqslant \sigma _2^2\). (This does not require the assumption (1.7); when (1.7) holds, we can also use [5, Lemma 12.14] or (2.20).)