Abstract

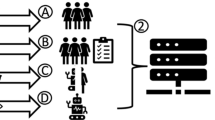

Algorithmic decision-making is now widespread, ranging from health care allocation to more common actions such as recommendation or information ranking. The drive to audit these algorithms has grown alongside. In this article, we focus on external audits, which are conducted by interacting with the user side of the target algorithm, the latter hence being treated as a black box. Yet the legal framework in which these audits take place is mostly ambiguous to the researchers developing them: on the one hand, the legal value of the audit outcome is uncertain; on the other hand, the auditors’ rights and obligations are unclear. The contribution of this article is to relate two canonical audit forms to the law, in order to shed light on these aspects: 1) the first audit form (which we coin the Bobby audit form) checks a predicate against the algorithm, while the second (Sherlock) is looser and opens up to multiple investigations. We find that Bobby audits are more amenable to prosecution, yet are delicate because they operate on real user data, which can lead to rejection by a court (notion of admissibility). Sherlock audits craft data for their operation, most notably to build surrogates of the audited algorithm. They are mostly used for acts of whistleblowing since, even if accepted as proof, their evidential value will be low in practice. 2) Both forms require prior respect of a proper right to audit, granted by law or by the platform being audited; otherwise, the auditor will also be exposed to prosecution regardless of the audit outcome. This article thus highlights the relation of current audits to the law, in order to structure the growing field of algorithm auditing.
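To make the distinction between the two audit forms concrete, the following is a minimal sketch under stated assumptions, not the authors' implementation. The function names (`bobby_audit`, `sherlock_surrogate`), the toy target `toy_target`, and the one-dimensional threshold surrogate are all hypothetical and chosen only for illustration: a Bobby audit checks a fixed predicate on the outputs observed for existing inputs, whereas a Sherlock audit crafts its own queries and builds a surrogate of the black box.

```python
from typing import Callable, Iterable

# A black box maps an input score to a binary decision (assumption for this sketch).
BlackBox = Callable[[float], int]


def bobby_audit(
    black_box: BlackBox,
    observed_inputs: Iterable[float],
    predicate: Callable[[float, int], bool],
) -> bool:
    """Bobby form: check one predicate on every (input, output) pair."""
    return all(predicate(x, black_box(x)) for x in observed_inputs)


def sherlock_surrogate(
    black_box: BlackBox, crafted_inputs: Iterable[float]
) -> BlackBox:
    """Sherlock form: query crafted inputs and fit a simple threshold surrogate."""
    accepted = [x for x in crafted_inputs if black_box(x) == 1]
    # The surrogate accepts anything at or above the smallest accepted crafted input.
    threshold = min(accepted) if accepted else float("inf")
    return lambda x: int(x >= threshold)


def toy_target(score: float) -> int:
    """Hypothetical audited algorithm: accepts scores of 0.5 or more."""
    return int(score >= 0.5)


# Bobby: "every input above 0.8 is accepted", checked on observed inputs.
bobby_ok = bobby_audit(toy_target, [0.2, 0.85, 0.9], lambda x, y: x <= 0.8 or y == 1)

# Sherlock: probe a crafted grid of inputs, then study the surrogate offline.
surrogate = sherlock_surrogate(toy_target, [i / 10 for i in range(11)])
```

In this sketch the Bobby audit returns a single yes/no answer about one claim, while the Sherlock audit returns a model that can then be investigated in several ways, which mirrors the looser, exploratory nature of that form.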

Notes

Proposal for a REGULATION OF THE EUROPEAN PARLIAMENT AND OF THE COUNCIL on a Single Market For Digital Services (Digital Services Act) and amending Directive 2000/31/EC COM/2020/825 final.

Proposal for a REGULATION OF THE EUROPEAN PARLIAMENT AND OF THE COUNCIL LAYING DOWN HARMONISED RULES ON ARTIFICIAL INTELLIGENCE (ARTIFICIAL INTELLIGENCE ACT).

Regulation (EU) 2016/679 (General Data Protection Regulation, GDPR).

COMPAS stands for “Correctional Offender Management Profiling for Alternative Sanctions”. On the 2016 analysis, see https://www.propublica.org/article/how-we-analyzed-the-compas-recidivism-algorithm

Legal requirement in article 31 of the French code of civil procedure.

The GDPR defines personal data as “any information relating to an identified or identifiable natural person”, which is an extremely wide definition.

Article 4 of the GDPR defines processing as “any operation or set of operations which is performed on personal data”, e.g., collection, recording, consultation, alteration, use, etc.

Violation of the GDPR can lead to administrative fines of up to 20 million euros or 4% of the total worldwide annual turnover.

Article 9 of the French code of civil procedure: “Each party has the burden of proving in accordance with the law the facts necessary for the success of its claim.”

On the sanctions for fraudulent access to an IT system, see art. 323-1 of the French penal code.

These notions of necessity and proportionality of legal evidence were first recognised by the European Court of Justice. For more information, see J. Van Compernolle, “Les exigences du procès équitable et l’administration des preuves dans le procès civil”, RTDH 2012. 429.

G. Lardeux, “Le droit à la preuve: tentative de systématisation”, RTD civ. 2017. 1.

On the importance of DNA in a paternity test, see, among others: Cour de cassation, civile, Chambre civile 1, 25 September 2013, 12-24.588, Inédit, 2013.

Because DNA does not provide all the elements necessary to establish guilt, its usefulness and utilization are actually limited. See Julie Leonhard, “La place de l’ADN dans le procès pénal”, Cahiers Droit, Sciences & Technologies, 9, 2019, 45–56.

In France, legal protection was granted in 2016 through a law on transparency and against corruption.

Article 4 (“Personal scope”) of the European directive.

Evaluation report on Recommendation CM/Rec(2014)7 on the protection of whistle-blowers: https://www.coe.int/en/web/cdcj/activities/protecting-whistleblowers

Guidelines of the Committee of Ministers of the Council of Europe on public ethics (2020), E.h Section.

Term defined by article 25 of the DSA as “online platforms which provide their services to a number of average monthly active recipients of the service in the Union equal or higher than 45 million [...].”

Article 38 of the Digital Services Act.

Rights from articles 7, 11, 21, and 24 of the Charter of Fundamental Rights of the European Union.

Ethics declarations

Conflict of interest

The authors declare there are no competing interests with this work.

About this article

Cite this article

Le Merrer, E., Pons, R. & Tredan, G. Algorithmic audits of algorithms, and the law. AI Ethics (2023). https://doi.org/10.1007/s43681-023-00343-z

DOI: https://doi.org/10.1007/s43681-023-00343-z