Abstract

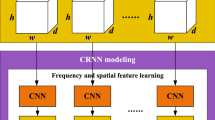

Depression is one of the most common mental disorders, and rates of depression increase each year. Traditional diagnosis relies primarily on professional judgment, which is prone to individual bias, so an effective and robust method for automated depression detection is needed. Current artificial intelligence approaches are limited in their ability to extract features from long sentences, and current models are less robust when the input dimensionality is large. To address these limitations, we developed a multimodal fusion model that combines text, audio, and video for both depression detection and depression assessment. In the text modality, a pre-trained sentence embedding model extracts semantic representations, and a bidirectional long short-term memory (BiLSTM) network predicts depression from them. In the audio modality, principal component analysis (PCA) reduces the dimensionality of the input feature space, and a support vector machine (SVM) predicts depression. In the video modality, extreme gradient boosting (XGBoost) performs both feature selection and depression detection. The final prediction is obtained by combining the outputs of the three modalities with an ensemble voting algorithm. In experiments on the Distress analysis interview corpus wizard-of-Oz (DAIC-WOZ) dataset, the model achieved a weighted F1 score of 0.85, a root mean square error (RMSE) of 5.57, and a mean absolute error (MAE) of 4.48. The proposed model outperforms the baseline on both the detection and assessment tasks and performs better than other existing state-of-the-art depression detection methods.
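
The fusion and evaluation steps named in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not state the voting rule, so a hard majority vote over the three modality outputs is assumed here, and the function names (`majority_vote`, `weighted_f1`, `rmse`, `mae`) and all toy values are hypothetical. The metrics follow their standard definitions.

```python
from collections import Counter
from math import sqrt


def majority_vote(*modality_preds):
    """Fuse per-modality binary predictions by hard majority vote (assumed rule)."""
    fused = []
    for votes in zip(*modality_preds):
        # With three binary voters a tie is impossible, so the most
        # common label is always well defined.
        fused.append(Counter(votes).most_common(1)[0][0])
    return fused


def weighted_f1(y_true, y_pred):
    """Per-class F1, averaged with weights equal to each class's support."""
    total = 0.0
    for label in set(y_true):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        total += f1 * y_true.count(label)
    return total / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error between true and predicted severity scores."""
    return sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error between true and predicted severity scores."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    # Toy, made-up predictions (1 = depressed, 0 = not depressed).
    text_pred = [1, 0, 1, 0, 0, 1]
    audio_pred = [1, 0, 0, 0, 1, 1]
    video_pred = [0, 0, 1, 1, 0, 1]
    labels = [1, 0, 1, 0, 0, 0]

    fused = majority_vote(text_pred, audio_pred, video_pred)
    print("fused predictions:", fused)
    print("weighted F1:", round(weighted_f1(labels, fused), 3))

    # Toy, made-up severity scores for the assessment (regression) task.
    true_scores = [10, 4, 18]
    pred_scores = [7, 4, 14]
    print("RMSE:", round(rmse(true_scores, pred_scores), 3))
    print("MAE:", round(mae(true_scores, pred_scores), 3))
```

A hard vote needs only the class label from each modality, so heterogeneous models such as a BiLSTM, an SVM, and XGBoost can be combined without calibrating their scores against one another. A soft vote over predicted probabilities would be an alternative under different assumptions.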

Data Availability

The data sets used in the current study are available from https://dcapswoz.ict.usc.edu/.

Abbreviations

- BiLSTM: Bidirectional long short-term memory
- PCA: Principal component analysis
- SVM: Support vector machine
- XGBoost: Extreme gradient boosting
- DAIC-WOZ: Distress analysis interview corpus wizard-of-Oz
- RMSE: Root mean square error
- MAE: Mean absolute error
- PHQ-8: Patient health questionnaire-8
- BDI: Beck's depression inventory
- AI: Artificial intelligence
- ML: Machine learning
- GloVe: Global vectors
- CNN: Convolutional neural network
- MFCC: Mel-frequency cepstral coefficient
- COVAREP: Cooperative voice analysis repository
- MHI: Motion history image
- AVEC: Audio/visual emotion challenge
- LSTM: Long short-term memory
- COVID-19: Coronavirus disease 2019
- BERT: Bidirectional encoder representations from transformers
- USE: Universal sentence encoder
- MSE: Mean squared error
- BCE: Binary cross entropy
- VUV: Voiced/unvoiced
- F0: Fundamental frequency
- NAQ: Normalized amplitude quotient
- QOQ: Quasi-open quotient
- H1H2: First two harmonics of the differentiated glottal source spectrum
- PSP: Parabolic spectral parameter
- MDQ: Maxima dispersion quotient
- MCEP: Mel cepstral coefficient
- HMPDM: Harmonic model and phase distortion mean
- HMPDD: Harmonic model and phase distortion deviation
- FAU: Facial action unit
- KNN: K-nearest neighbors

Acknowledgements

We would like to acknowledge the funding support from MITACS, Canada. The authors would also like to thank Ryan Corpuz for proofreading the manuscript.

Funding

China Scholarship Council, 201606280044, Wei Zhang.

Author information

Contributions

WZ: Conceptual and experimental design, data analysis, manuscript preparation. KM: Data analysis. JC: Conceptual design, project supervision, obtaining funding, manuscript preparation.

Ethics declarations

Conflict of Interest

The authors declare that there is no conflict of interest. Jie Chen is an Editorial Board member of Phenomics and was not involved in reviewing this paper.

Ethical Approval

All the methods were performed in accordance with the relevant guidelines and regulations.

Consent to Participate

All volunteers provided written informed consent.

Consent for Publication

Not applicable.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, W., Mao, K. & Chen, J. A Multimodal Approach for Detection and Assessment of Depression Using Text, Audio and Video. Phenomics (2024). https://doi.org/10.1007/s43657-023-00152-8

DOI: https://doi.org/10.1007/s43657-023-00152-8