Abstract

There is a global competitive demand for graduates with soft skills, and higher education institutions are tasked with reducing the employee skill gap. We therefore investigated students' perceptions of peer assessment in facilitating engagement in soft-skill development through group work activities. Using group work to measure the effectiveness of feedback on students' assessment, we posit that students perceive self-assessment in group work as a tool that represents fairness. Focusing on learning in a peer-assisted learning environment, the study compares observations from two periods on the effectiveness and validity of peer assessment practice. We applied a group learning model over two academic sessions to investigate whether students can self-evaluate accurately in a peer-learning environment. We employed both qualitative and quantitative analysis. Our findings differ from those of previous studies reporting that students cannot self-assess accurately. Empirically, there was no significant difference between the peer marks and the tutor marks. The study also found that peer learning improves the quality of students' assessment as they reflect on their work better.

Introduction

In learning, students adopt different approaches based on their prior experiences and the environments in which they find themselves. Investigating how students adopt these approaches can lead to inconsistent and unclear outcomes. Students' learning experience is a function of their present learning environment as well as their prior one. Modern teaching methods follow a student-centred approach, a philosophy that has gained prominence in UK higher education. Active learning, which is based on constructivism, is one aspect of the student-centred approach adopted for teaching. In this approach, teachers are concerned with how students learn rather than with what they learn. However, the literature on active learning in higher education is limited (Godoy et al. 2015). To contribute to this literature, our study focuses on group work, students' perceptions of peer assessment, the accuracy of self-assessment, and effective feedback.

Feedback is effective when students are able to receive the information and adjust their behaviour accordingly. Achieving effective feedback involves being non-critical, non-forceful, honest, and specific about how students can build on their strengths to achieve a good outcome (Jeffery 2020). Peer assessment is an assessment strategy that encourages students to critique and provide feedback on their peers' work. Self-assessment is the strategy of engaging students in making judgements about the outcomes of their own learning. Effective use of peer and self-assessment can improve student learning and student satisfaction with the learning experience (Wride 2017). Improving student satisfaction can improve overall scores in the National Student Survey (NSS), which is part of the action plans of most universities in the United Kingdom (UK). The NSS is a valid measure of perceived academic teaching quality (Martins et al. 2019; Richardson et al. 2007). Quality assurance in teaching and learning in UK higher education, as in most developed countries, has been a topic of continuous reflection, debate, study and interest over the past decade (Barrie et al. 2006; Duhs and Guest 2003; Harvey and Knight 1996; Woodhouse 1999).

Group work is challenging for teachers in terms of design, facilitation, and implementation. Presenting is a desirable skill to acquire, yet students find making a group presentation a demanding task. Some students dislike group assessment because their marks depend on other group members' contributions or performance (Tenorio et al. 2016). Group work can create dysfunctional teaching sessions, leading to student apathy and free riding (Williams and Kane 2009). Following Homberg and Takeda (2013), we introduced changes to the content and delivery of teaching to improve the students' learning environment, enhance engagement, and develop employability skills. We use active learning as the proposed teaching style.

Self-evaluation is a collaborative, reflective process of learning that involves engaging students in marking their own work. Guest and Riegler (2017) found that students could not accurately self-assess. Their study suggested that brighter students may understand and act upon the tutor's feedback better than weaker students. Studies have found that a carefully designed evaluation system aligned with a professional learning and development framework contributes to the desired improvements in teaching quality and increases student achievement (Sadler 1989; Looney 2011).

Educational literature usually investigates the effectiveness of teaching and feedback from the perspective of one actor, either students or teachers. Le et al. (2018) demonstrated that teaching effectiveness can be studied from both perspectives simultaneously. Their study found that collaborative learning increased teaching and learning skills. The case study of McConlogue (2015) found that effective feedback can support students' progression, and proposed that the quality of peer assessors' comments develops only over time.

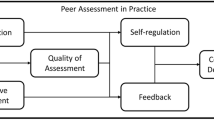

It is not uncommon for group assessment to include an element of peer assessment as well as self-assessment. Successful implementation of peer assessment serves student-centred pedagogy through its essential outcomes, such as developing students' judgement and assessment skills, motivating them, and improving their interaction and participation. This eventually helps to create a culture of engagement (Tenorio et al. 2016). Peer assessment enables students to be actively involved in assessing their peers' performance (Van Gennip et al. 2010). Willey and Gardner (2010) found that, when engaged in group assessments, students focus on overcoming free-rider problems rather than on learning.

Brokaw et al. (2017) found that peer and self-assessment serve as a model for providing feedback to professionals. In the real world, peers are often in the best position to assess each other's professionalism. Advocates of peer and self-assessment claim that it makes students more familiar with the assessment culture and consequently gives them a better understanding of the assessment tasks, encourages them to absorb the assessment criteria deeply, and allows them to learn from each other's weaknesses and successes (Race 2007).

There is a demand to reduce the employee skill gap by increasing university graduates' skills so that they can compete in a global and competitive workplace (Nealy 2005). However, tutors may not welcome the idea of giving up traditional learning models. Nonetheless, one cannot deny that soft skills, often referred to as interpersonal skills, are essential in the twenty-first-century workplace. Soft skills include listening, team problem-solving, communicating, and understanding cross-cultural relationships. Coffman (2011) argued that students' culture could affect their engagement and overall success. Using a group learning model, Crockett and Peter (2002) found that integrated collaborative learning enhances soft skills for future success. We follow the intersubjective view of social reality (Cunliffe 2008) and assume that students' sense of the social world emerges as they interact with others. This body of literature has influenced our pedagogical practice and led us to develop a novel study. Thus, in this study, we apply a group-learning model that integrates learning and incentivises engagement.

We aim to investigate students' perceptions of peer assessment and to facilitate student engagement in developing soft skills through group work activities. To achieve this, we design a group-learning model that offers a flexible way of facilitating a peer-learning environment for students. We do this by integrating pedagogic research on group work, assessed presentations, and soft skill development. We conduct a series of formative assessment sessions that include group work before the summative assessments. We then observe students' responses following effective feedback.

Based on the above, we present a case study that describes the changes following sessions of the enhanced learning procedure. The outcome is presented as assessment marks. The “Materials and methodology” section explains the material design and the methodology, and discusses the learning activities and the assessment process we conducted in a peer-learning environment. The “Findings” section presents a series of quantitative analyses measuring the accuracy of self-evaluation. We discuss the findings and the qualitative data analysis in the “Discussions” section. Finally, we make concluding remarks based on our results and discussions in the “Conclusion” section.

Materials and methodology

We designed a group-learning model that offers a flexible way of facilitating students' learning and achieving our research aim. The study has three objectives. First, to develop a group-learning model that builds group cohesion while ensuring individual contributions and accountability. Second, to create an enabling environment in which to measure the effectiveness of feedback and students' ability to self-assess. Finally, to test the validity of peer and self-assessment in the context of an active learning environment.

Reflecting on McConlogue (2015), we designed a two-session project to allow the tutors to develop expertise in the project and to support students' feedback composition. Following Johnson and Bradbury (2015), we organised the two cohorts into small groups to apply social constructivist theory. As tutors, we focused on facilitating and stimulating conversations (Kalina and Powell 2009). We took the time to explain the marking criteria to the students, who could challenge us with critical questions about them. In the end, the method provides a transparent process that is inclusive of everyone.

Activities and assessment process

We conducted a two-stage assessment plan on two cohorts of students over two semesters. In both cohorts, a formative assessment served as the first stage, preparing the students for the second stage, the summative assessment. The formative assessment ran over the academic session and comprised about twenty sessions, each with a 10-min presentation by selected groups. For the summative assessment, students were grouped by ability and engagement level in line with an inclusive curriculum. The assessment task was a group-based 30-min oral presentation, prepared and delivered collaboratively to the tutor and the other groups. For peer assessment, each attending group, having been involved in developing the marking criteria, was given a marking scheme with which to provide feedback and grade their peers alongside the tutors. The marks and feedback provided by peers and tutors applied to the whole group.

Successful implementation of peer assessment requires clear marking criteria and involving students in developing them (Orsmond et al. 2002; Rubin and Tuner 2012). To facilitate the development of the marking criteria, the tutors followed a series of steps based on Race (2007).Footnote 1 The process gave the students a deep understanding of what was expected of them in the peer-assessed summative assessment. It also produced a set of assessment criteriaFootnote 2 that were clearly defined, visible to all student groups, and aligned with the module's learning outcomes. Student self-assessment took place after feedback, in order to evaluate students' understanding and measure the effectiveness of the feedback. It is rewarding for any teacher to see students become more interested and eager to learn and develop skills (Heppner and Johnston 1994).

The learning process requires students to reflect on their strengths and weaknesses, and students tend to be more critical of their own presentations. At the same time, as this study finds, comparison with peer assessments encourages better performance. Confidence to self-assess demonstrates independence and competence (Sadler 2013). On reflection, the tutors of the second cohort aimed to create an active learning environment with more involvement and engagement. Thus, students were asked to self-assess their performance before receiving the tutors' and peers' marks and feedback.

Sample size

With an epistemological research philosophy, our focus is on the macro level of social constructivism. We follow an inductive approach to test theories such as constructivism and active learning, and to test the accuracy of self-evaluation. We adopt a case study research methodology. We used a convenience sampling technique and targeted the entire cohort to reduce sampling bias.

Two separate cohorts of second-year students participated in this study, cohort 1 and cohort 2. Cohort 1 had a total of twenty-four students, while cohort 2 had a total of 37 students. We were only able to sample six groups, as those were the students available for the assessment during that term. For the summative assessments, there were fourteen groups in total. Cohort 1 had eight groups of three students each. Cohort 2 had six groups of four or five students each. Taking a macro-level focus (Cunliffe 2008), we study the student cohort rather than the behaviour of a particular student group within it. We also analysed the data for each student cohort independently.

For the questionnaire, a total of 20 students from cohort 1 responded independently. Their responses provided additional insight and reflection, which we used for cohort 2. Participation in data collection was voluntary, and all professional and ethical procedures were followed.

The study collected data from two cohorts, which were divided into small study groups. The first cohort had twenty-four students, and the second cohort had 37 students. The presentation accounts for 20% of the module's assessment. Each group's peer mark is weighted at 30% of the presentation mark, while the tutor's mark is weighted at 70%. Each student's actual mark is the weighted average of the peer mark and the tutor's mark. The actual mark provided an incentive for students to participate and become more competitive.
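As a minimal sketch of the weighting described above, the Python code below computes a group's actual mark as 0.3 × peer mark + 0.7 × tutor mark, and the presentation's contribution to the module total. It assumes marks on a 0-100 scale; the function names and example marks are hypothetical illustrations, not the study's marking procedure or data.

```python
# Weights taken from the text: peer mark 30%, tutor mark 70%, and the
# presentation worth 20% of the module. The 0-100 scale is an assumption.
PEER_WEIGHT = 0.30
TUTOR_WEIGHT = 0.70
PRESENTATION_SHARE = 0.20


def actual_mark(peer_mark: float, tutor_mark: float) -> float:
    """Weighted average of a group's peer mark and tutor mark (hypothetical helper)."""
    for mark in (peer_mark, tutor_mark):
        if not 0 <= mark <= 100:
            raise ValueError(f"mark outside 0-100: {mark}")
    return PEER_WEIGHT * peer_mark + TUTOR_WEIGHT * tutor_mark


def module_points(peer_mark: float, tutor_mark: float) -> float:
    """Points the presentation contributes towards a 100-point module total."""
    return PRESENTATION_SHARE * actual_mark(peer_mark, tutor_mark)


if __name__ == "__main__":
    # Illustrative marks only: 0.3 * 65 + 0.7 * 58 = 19.5 + 40.6 = 60.1
    print(round(actual_mark(65, 58), 1))
    # 0.2 * 60.1 = 12.02
    print(round(module_points(65, 58), 2))
```

Because the tutor weight dominates, a 10-point disagreement between peers and tutor moves the actual mark by only 3 points in either direction.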

Methods

We conducted the study over two academic sessions to improve the validity of our findings and to reflect on improving our teaching methods. Modifications were applied to the second cohort after reflecting on the first. In the first cohort, the summative assessment was conducted by asking each group to present in front of us (the tutors) and their colleagues. Both the tutors and the attending groups completed the marking scheme, which required grading and providing feedback on each presenting group. All groups were given a copy of the tutors' and peers' assessment sheets after all the presentations had been completed.

For the formative assessment, students were asked to prepare a group-based oral presentation and deliver it in front of the tutor and their peers, so that each student attempted at least one formative assessment before the summative assessment. Students switched groupsFootnote 3 almost every session. Students also allowed themselves to be challenged without any pressure, and received formative feedback not only from tutors but also from fellow students. Seminar classes averaged around sixteen students, who were asked to form random groups of three each week. We also set up an online Doodle poll for grouping and timing. Traditionally, students share their answers to the formative assessment questions, while other students discuss whether they agree or differ. These traditional methods result either in haphazard answers from the few students who attempted the questions briefly without much thought, or in responses from only the brighter and more hard-working students.

In some cases, a specific group of students is asked to provide a set of answers, and we found that those students either responded or decided not to turn up at all. Additionally, in most cases, the other students do not prepare for the seminar session. Students reported that the burden of the formative assessment had been lifted, as the group obliged to present that week took responsibility for it. To improve engagement, we provided e-learning resources and asked the students to use electronic devices, such as laptops and phones, during discussions. The flexibility of accessing learning resources allowed active learning to be implemented.

For the second cohort, comprehensive feedback was given to each group after the summative assessment activity. The feedback was verbal, and students were allowed to take notes and ask further critical questions. The innovation here was to introduce an informal but effective method of feedback, which allowed students to reflect on their performance and comment on other groups as a means of comparison. After students had reflected on the feedback, we asked them to self-assess their performance as a group and finally collected data by administering questionnaires. We also provided end-of-term prizes as an incentive for engagement, awarded to the top three performers based on league table marks. Evidence (e.g. Coffman 2011) shows that students engage if there is compensation. Performers were rated on attendance, engagement, and communication with the audience.

We employ both qualitative and quantitative methods in our study. Following the phenomenological approach,Footnote 4 the qualitative method involves administering questionnairesFootnote 5 to groups (Appendix C). The questionnaire included both open-ended questions and questions requiring direct answers. We used open-ended questions to capture students' personal views and to encourage participatory responses. Students were asked what they learnt and what they did best, to reveal their strengths and weaknesses. We analysed the group-learning model using interpretative phenomenological analysis.

The quantitative method uses the marks from current and past summative assessments to assess the accuracy of peer assessment. Data were collected when students were asked to peer-assess (first cohort) and self-assess (second cohort). These observations were then compared with the actual mark and the peer assessment mark. We conducted a descriptive analysis of the data and ran a test of association to find the correlation between the actual and expected marks.

We evaluated the peer assessment practice by comparing each presenting group's peer mark with the tutor mark to measure the validity of peer marking. We also used the questionnaire to gauge students' satisfaction with, and feedback about, the peer assessment process.

Findings

In both cohorts, some students initially did not like the idea of peer assessment and group work, which is consistent with previous studies recently reviewed by Tenorio et al. (2016). Students suggested that their "marks should not depend on other students' abilities." This suggestion came from a few of the brightest students and regular seminar attendees. In response, we ran an online poll in the second cohort asking students whether or not they supported peer assessment. Table 1 shows the responses, with 45% in agreement and 30% indifferent. Thus, the poll reassured students that working with peers is fair, and deterring free riding was expected to give the brighter students and regular attendees confidence.

The poll was based on the understanding that peer assessment allows students to reflect on and give feedback on learning (Willey and Gardner 2010). After participating in the first presentation, students provided feedback on how the first group could have performed better, and this feedback sparked much enthusiasm across the groups. After the assessment, students were also asked whether peer assessment would be a good idea in future, and 95% thought it would be.

Students' improvement from the previous year

To assess whether students can accurately self-assess and assess their groups, we examined the second cohort's first-year marks as an indication of their abilities when analysing their responses. We analyse these marks based on the key principle of social constructivism that students require background knowledge of a subject to develop further (Kalina and Powell 2009). Table 2 shows the performance of our sample students in their first year of study.

The average first-year mark for the second cohort is below the School's average of 54%. The lower average is due to many factors unrelated to this study, such as students' entry qualifications and their prior knowledge of the modules they study. A higher mark indicates greater learning ability. The minimum mark is recorded from the first assessment attempt; a student who failed at the first attempt was reassessed to ensure they passed the module with an average mark of 40%. The maximum mark shows that the sample includes very good students with high abilities. The spread between the maximum and minimum marks is reflected in the standard deviation. The performance of this sample is one motivation for introducing innovative ways of teaching.
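The descriptive statistics discussed here (mean, minimum, maximum and standard deviation) can be sketched in a few lines of Python. The sketch assumes the sample standard deviation (n - 1 denominator), which is the default in most statistics packages, and that failed students are represented by their first-attempt marks, as described above; the function name and example marks are hypothetical.

```python
from statistics import mean, stdev


def describe(marks):
    """n, mean, sample standard deviation, minimum, maximum and range of marks.

    Assumes the sample (n - 1) standard deviation; this is an assumption
    about how summary tables of this kind are usually produced.
    """
    if len(marks) < 2:
        raise ValueError("need at least two marks for a sample standard deviation")
    return {
        "n": len(marks),
        "mean": mean(marks),
        "sd": stdev(marks),
        "min": min(marks),
        "max": max(marks),
        "range": max(marks) - min(marks),
    }


if __name__ == "__main__":
    # Hypothetical first-attempt marks, NOT the cohort's actual results.
    marks = [38, 45, 52, 47, 61, 72, 49, 55]
    summary = describe(marks)
    print({key: round(value, 2) for key, value in summary.items()})
```

A wide gap between the minimum and maximum marks shows up directly as a larger standard deviation, which is the relationship the paragraph above describes.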

Table 3 compares the statistics across the cohorts. The data include the peer mark given by other groups and the tutor's mark. The weighted average of the peer (30%) and tutor (70%) marks gives the actual mark. For the second cohort, a self-evaluation mark for each group, referred to as the expected mark, was collected to demonstrate the value of feedback and lessons learnt.

Although the standard deviations are within the sector average of 10% (Bachan 2015), that of cohort 2 is lower. There is also wider variance in cohort 1, as some students seemed to comprehend the instructions while others did not. Accordingly, the peer mark, given before feedback, has the highest standard deviation. For cohort 2, as seen in Fig. 1, there is little variation across the marks because of the lessons learnt by tutors and the students' feedback. The marks suggest that the group work techniques with peer and self-assessment introduced in this study have helped improve the students' achieved marks. Greater involvement and engagement, and students' better comprehension of the marking criteria, are among the factors behind this outcome.

Students' evaluation of peer assessment

This section summarises the descriptive analysis of the data collected on students' perceptions of peer assessment practice. The results in Table 4 show that more than three-quarters of the students (80%) were at least highly satisfied with having their peers involved in assessing and commenting on their performance. Similar rates were found for students' understanding of how peer assessment was implemented. We also examined whether the peer assessment and feedback process helped to clarify the assessment requirements. Table 4 shows that 80% of the participants agree that peer assessment and feedback are good learning tools that help them better understand the assessment criteria, leading to higher levels of learning and engagement.

Furthermore, 70% of the students reported that the feedback provided by their peers was very helpful for further assignments. This result is consistent with Suñol et al. (2016), who reported similar findings.

Overall, the analysis of data collected from the two open questions (Appendix D) shows that students' comments agree with the literature in that peer assessment and feedback practice helps students to gain new ideas, pay more attention to presentation, engage more in the assessment process, improve communication with group members, and enhance confidence. A few points against the learning activity were also raised, such as feeling under pressure to perform well, which made students more nervous, and feeling uncomfortable being assessed by peers.

To test the idea that students' understanding of the peer assessment and feedback process (as measured in Q2, Appendix C) improves their satisfaction with the practice, we tested the association between the two factors. We used Spearman's rank correlation coefficient, a non-parametric measure, to assess the strength and direction of the association between the two variables.
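A minimal sketch of how Spearman's coefficient can be computed: each variable is converted to ranks (tied Likert responses share the average of the positions they occupy), and the Pearson correlation of the two rank vectors is taken. The helper names and example responses are hypothetical, and the sketch does not compute the significance value reported in Table 5, which statistical packages usually obtain from an approximation.

```python
from math import sqrt


def average_ranks(values):
    """Rank values 1..n; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next sorted value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1  # convert 0-based positions to ranks
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho, computed as the Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least two items")
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    # Hypothetical 5-point Likert responses, NOT the study's questionnaire data.
    understanding = [5, 4, 4, 3, 5, 2, 4, 3]
    satisfaction = [5, 4, 5, 3, 4, 2, 4, 3]
    print(round(spearman_rho(understanding, satisfaction), 3))
```

Working on ranks rather than raw scores is what makes the measure suitable for ordinal questionnaire data: any monotonic relabelling of the Likert scale leaves rho unchanged.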

Table 5 shows the Spearman correlation test: the two-tailed significance value is 0.018 < 0.05, which indicates a significant association between the extent of students' understanding of how peer assessment and feedback are applied and their level of satisfaction. Therefore, the link between students' understanding and their perception of peer assessment is evident. Overall, students who grasped the application steps of peer assessment were highly satisfied with its implementation.

Peer marking analysis

We tested the validity of the peer marks by comparing their average with the tutors' moderated marks, treating the two as dependent samples. We used the WilcoxonFootnote 6 signed-rank test as we have only one subject (the oral presentation), testing whether there is a significant difference between the two marking methods, peer marks and tutor marks.

Table 6 shows the number of negative ranks (groups for which the peer mark was greater than the tutor mark) and the number of positive ranks (groups for which the tutor mark was greater than the peer mark). The Wilcoxon signed-rank test shows that the observed difference between the two marks is not significant (mean rank of 4.40 vs mean rank of 4.67). Thus, we do not reject the null hypothesis that there is no difference between the two marks, and we may assume that the marks awarded by peers are valid. Interestingly, there are no ties, i.e. no group was given the same mark by peers and tutors. The result confirms some previous studies (e.g. Freeman 1995; De Grez et al. 2012). Conversely, it is inconsistent with studies that found a significant difference between tutor and peer marks (e.g. Cheng and Warren 1999; Suñol et al. 2016).
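The signed-rank logic can be sketched in Python as follows, assuming paired peer and tutor marks for each group. Differences are taken as tutor mark minus peer mark, so a negative rank corresponds to a group whose peer mark exceeded the tutor mark, matching the convention described for Table 6; zero differences are dropped and tied absolute differences receive average ranks. The exact two-sided p-value enumerates all 2^n sign assignments, which is feasible only for a small number of groups; statistical packages may use a normal approximation instead. Function names and example marks are hypothetical.

```python
from itertools import product


def average_ranks(values):
    """Rank values 1..n; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def _nonzero_diffs(peer, tutor):
    """Differences tutor - peer, dropping groups where the two marks are equal."""
    if len(peer) != len(tutor):
        raise ValueError("peer and tutor marks must be paired")
    return [t - p for p, t in zip(peer, tutor) if t != p]


def signed_rank_sums(peer, tutor):
    """Return (W_minus, W_plus, n_used); a negative rank means peer > tutor."""
    diffs = _nonzero_diffs(peer, tutor)
    ranks = average_ranks([abs(d) for d in diffs])
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    return w_minus, w_plus, len(diffs)


def exact_two_sided_p(peer, tutor):
    """Exact two-sided p-value by enumerating every +/- sign assignment of the ranks."""
    diffs = _nonzero_diffs(peer, tutor)
    ranks = average_ranks([abs(d) for d in diffs])
    total = sum(ranks)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    observed = min(w_plus, total - w_plus)
    as_extreme = 0
    for signs in product((False, True), repeat=len(ranks)):
        w = sum(r for r, positive in zip(ranks, signs) if positive)
        # Count assignments at least as extreme as the observed statistic.
        if min(w, total - w) <= observed + 1e-9:
            as_extreme += 1
    return as_extreme / 2 ** len(ranks)


if __name__ == "__main__":
    # Hypothetical marks for eight presenting groups, NOT the study's data.
    peer = [62, 58, 71, 66, 55, 69, 60, 64]
    tutor = [61, 60, 68, 70, 60, 63, 67, 72]
    w_minus, w_plus, n = signed_rank_sums(peer, tutor)
    print(w_minus, w_plus, n, round(exact_two_sided_p(peer, tutor), 3))
```

A mean rank of the kind reported in Table 6 is the corresponding rank sum divided by the number of negative or positive ranks, and the two rank sums always add up to n(n + 1)/2 for the n non-zero differences.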

Students were also asked what they thought a fair mark would be after receiving feedback. The feedback lowered students' expectations, but, as the evidence in Table 5 shows, their satisfaction remained high owing to the transparent nature of the marking criteria. Unlike Guest and Riegler (2017), who collected data as students handed in their coursework, we collected our data following verbal feedback. Students were grouped with inclusiveness in mind, given our experience at the formative assessment level.

To gauge the accuracy of self-evaluation, taking into account the positive impact of students' academic ability, we conducted a test of association to evaluate the relationship between the actual mark and the expected mark.

Table 7 shows a significant relationship between the expected mark and the actual mark, with a correlation coefficient as high as 0.98. This does not mean that students' expectations cause their marks, but, given the strength of the relationship, one can infer that students' mark expectations were met. We conclude that there is no significant difference between students' actual and expected marks. Thus, students can self-assess accurately within our proposed active learning activity, group work.
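Because a correlation coefficient measures association rather than agreement, a check of self-assessment accuracy can also look at the differences between actual and expected marks. The Python sketch below reports both; the function names and example marks are hypothetical and are chosen to show that a perfect correlation can coexist with a constant gap between the two marks.

```python
from math import sqrt
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least two items")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def self_assessment_summary(expected, actual):
    """Association (r) plus the mean and SD of the actual - expected differences."""
    diffs = [a - e for e, a in zip(expected, actual)]
    return {
        "r": pearson_r(expected, actual),
        "mean_difference": mean(diffs),
        "sd_difference": stdev(diffs),
    }


if __name__ == "__main__":
    # Hypothetical group marks: every actual mark is exactly 5 points above the
    # expected mark, so r is (up to rounding) 1 even though the two never agree.
    expected = [55, 60, 62, 68, 70, 74]
    actual = [60, 65, 67, 73, 75, 79]
    print(self_assessment_summary(expected, actual))
```

A mean difference close to zero, alongside a high r, is what would indicate that expected and actual marks agree rather than merely move together.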

Discussions

For some students, the hardest part of the presentation was linking theory to practice. Such problems are not uncommon in the second year of study. The transition from the first year, as students move from understanding and comprehending theories to applying and analysing them, can be challenging. Group work, assessed presentations, and soft skill development are pedagogical ways of enhancing students' thinking skills. Students are given various topics and must be creative in linking their discussions to a context.

The effect of developing soft skills was evident. Students could listen, communicate their ideas, ask questions, relate to team demands, and understand cross-cultural relationships. Consistent with Nealy (2005), we found mature students taking the initiative and responding to the group's initial demands. A few respondents mentioned that working in the group was difficult because of unclear direction, coordinating everyone's efforts, and getting everyone together to run through discussions and arguments. These responses show that some of the objectives stated above were met: building group cohesion and ensuring individual contributions and accountability. Other students reported difficulty in communicating effectively and getting nervous in front of colleagues. These issues were overcome, as the following year's individual presentations showed improvement.

Our sample demonstrated positive active learning, showing that students learnt while engaging in the group activity. Prince (2004) sees group work as the approach that best promotes achievement. We also support the idea of challenging traditional learning assumptions, such as working independently and competing, as these do not promote students' best achievement. Students understood that becoming experts and developing skills is critical. Some responses mentioned that they had learnt the importance of adding theory and using concepts, rather than writing without the appropriate terminology. Some mentioned learning about international economies such as Saudi Arabia. Most respondents agreed that they had learnt how to apply models and the importance of being organised when delivering a presentation. The objective of supporting the learning of needed concepts has therefore also been achieved. Godoy et al. (2015) argue that active learning is a more effective method of instruction than a traditional approach. They found that learning gains are most likely a result of the tutor's active-learning style of instruction rather than of how the tutor participated in the teaching. In our study, tutors participated as facilitators, although we designed the instructions with flexibility, such as switching groups and increasing the number of students in the second cohort's groups.

We asked our sample to pick a specific achievement in the activity. Initially, as presented in Table 1, some students were reluctant to participate in group work, for fear of free riding or because they preferred individual assessment (Tenorio et al. 2016). We found the responses to be diverse and positive. These responses met one of our objectives: teaching students the positive value of working in groups. Some responses described how students learnt to prepare a presentation for an audience, collect and construct data, and then analyse it. As the evidence shows, students develop their analytical and critical thinking skills through problem-based learning. For comparison, we asked the students about their performance in the presentation. The presentation is one of the intra-peer assessment points, as students could look critically at both themselves and their group. In line with Suñol et al. (2016), over 70% of the students (see Table 4) agreed that the group assessment process is a useful method of learning and engagement. Sadler (2013) argued that a precise and dispassionate judgement of one's own work requires looking at it from the outside. Hence, group work can allow students to analyse their group critically after receiving their grades. The responses concerned how the presentation could have been improved: better application, not over-complicating the model, and making it more interesting for the audience. Students' responses show that they can self-assess their own work and that of their cohort. They can identify specific concepts, such as application, descriptive data, evaluation and creativity. The literature (e.g. Kuhn and Rundle-Thiele 2009) supports our conclusion that this teaching innovation helps students meet their learning outcomes.

We created a group-based learning environment by introducing a substantial end-of-term prize for the groups' contributions to the formative assessment. Coffman (2011) found that students anticipate a reward for their work, and we observed a high rate of engagement. This is consistent with the view that students' sense of the social world emerges continually as they interact with others (Cunliffe 2008). The evidence from student responses (Table 4) also suggests that good academic preparation improves cognitive abilities such as application. Likewise, a good social network plays a crucial role in adjusting to a new learning environment and in developing cognitive abilities. Kalina and Powell (2009) find that interactions with tutors, peers, and family are the primary way of learning for students. Consistent with the literature, we found our students creating social media accounts for discussions, which added flexibility in accessing learning resources.

In contrast to Guest and Riegler (2017), we find that students' self-assessment is accurate when teachers combine active learning with useful feedback. Self-assessment also gives students the confidence to perceive their assessments as fair. Within a student-centred learning approach, these activities were designed to enhance student learning through more physical engagement and to move away from dependence on the tutor as the central learning resource. To this end, we found that students were keen to improve their group work skills by discussing peers' work and learning from each other.

Conclusion

The study aimed to investigate students' perceptions of peer assessment in facilitating engagement in soft-skill development through group work activities. We augmented the teaching delivery methods with group work to improve the students' learning environment and meet their expectations. The study introduced a group-learning model, based on group presentations, in a group-oriented, student-centred learning environment. We recognised that the learning experience is a function of both students' present learning environment and their prior experience. The model allowed students to form diverse bonds across the cohort, and it contributed to the learning and development of soft skills. Empirical evidence shows student satisfaction with the learning technique. Qualitative evidence shows a clear improvement, and students' responses are encouraging. Students took the opportunity to engage actively in teaching sessions without pressure.

From the teachers' side, the pressure arising from students' lack of engagement, and from teachers having to do most of the formative assessment work (which otherwise turns into another lecture session), has been curbed, resulting in higher satisfaction. The evaluation of the teaching innovation tested the validity of peer assessment by measuring the accuracy of self-assessment after providing effective feedback. Overall, our results imply that students can gain a better learning experience and develop employability skills, and that teachers can apply the improved version of group learning with fewer challenges from students to the process. On average, our empirical results show that students can self-assess accurately. However, because the study's focus is to evaluate the application of the suggested teaching and learning approaches, we cannot establish causation.
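Checking self-assessment accuracy amounts to comparing two dependent samples: the peer (self-assessed) mark and the tutor mark for the same work. The following is a minimal sketch, not the authors' analysis code, of how such paired marks could be compared with a Wilcoxon signed-rank test using only the Python standard library. It assumes one peer mark and one tutor mark per student, drops zero differences, averages tied ranks, and uses the normal approximation with a tie correction. The function name `wilcoxon_signed_rank` and the sample marks are hypothetical.

```python
import math
from statistics import NormalDist


def wilcoxon_signed_rank(peer, tutor):
    """Compare paired peer and tutor marks (hypothetical helper).

    Returns (w_plus, w_minus, z, p_two_sided) using the normal
    approximation with a correction for tied ranks.
    """
    if len(peer) != len(tutor):
        raise ValueError("peer and tutor marks must be paired")

    # Paired differences; zero differences carry no sign and are dropped.
    diffs = [p - t for p, t in zip(peer, tutor) if p != t]
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 0.0, 1.0

    # Rank the absolute differences (1-based), averaging ranks within ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0  # accumulates sum(t^3 - t) over groups of tied values
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1

    # Rank sums for positive and negative differences.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)

    # Under the null hypothesis, W+ has mean n(n+1)/4; ties reduce the variance.
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return w_plus, w_minus, z, p


# Hypothetical marks for six students (not data from this study).
peer_marks = [62, 58, 70, 65, 55, 68]
tutor_marks = [60, 60, 70, 63, 58, 66]
print(wilcoxon_signed_rank(peer_marks, tutor_marks))  # (7.5, 7.5, 0.0, 1.0)
```

A large p-value, as in this toy example, would be consistent with no significant difference between peer and tutor marks. For small cohorts, an exact test (for example, SciPy's `scipy.stats.wilcoxon`) is generally preferable to the normal approximation used here.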

Students can confidently view group work self-assessment as a tool that represents fairness. Because the programme size was outside our control, the cohorts were small, and the results cannot yet be generalised. However, from the perspective of social constructivist theory, the small cohort size favoured our practice. Based on our observations and reflection, we will continue to use this teaching technique for a considerable time and will keep collecting data to evaluate students' development. To test the consistency of these findings, further studies could apply the model to a larger cohort over an entire degree programme. This would allow the accuracy of self-assessment to be observed as students develop expertise in feedback and in the subject matter.

Data availability

Data are available on request and must not be used for commercial purposes.

Code availability

Not applicable.

Notes

See Appendix A.

See Appendix B.

We define a group as three or more students.

The phenomenological approach aims to describe a phenomenon by examining it from the subjective experience of people who have experienced it (Saunders et al. 2009).

In the questionnaire, the authors asked, for example, about the extent of satisfaction with peer assessment; understanding of the suggested assessment process; whether peer assessment helped students better understand the assessment requirements; whether it helped them discover new ideas and/or avoid probable mistakes; and the benefits of peers' feedback. They also asked whether students would be happy to practise peer assessment and feedback again.

It tests the null hypothesis that the average signed rank of the differences between two dependent samples is zero.

References

Bachan R (2015) Grade inflation in UK higher education. Stud High Educ 42(8):1580–1600

Barrie S, Ginns P, Prosser M (2006) Early impact and outcomes of an institutionally aligned, student-focused learning perspective on teaching quality assurance. Assess Eval High Educ 30(6):641–656

Brokaw JJ, Frankel RM, Hoffman LA, Shew RL, Vu TR (2017) The association between peer and self-assessments and professionalism lapses among medical students. Eval Health Prof 40(2):219–243

Cheng W, Warren M (1999) Peer and teacher assessment of the oral and written tasks of a group project. Assess Eval High Educ 24(3):301–314

Coffman S (2011) A social constructionist view of issues confronting first-generation college students. New Dir Teach Learn. https://doi.org/10.1002/tl.459

Crockett G, Peter V (2002) Peer assessment and group work as a professional skill in a second year economic unit. Teaching Learning Forum, published refereed proceedings, Western Australia

Cunliffe AL (2008) Orientations to social constructionism: relationally responsive social constructionism and its implications for knowledge and learning. Manag Learn 39(2):123–139

De Grez L, Valcke M, Roozen I (2012) How effective are self- and peer assessment of oral presentation skills compared with teachers’ assessments? Act Learn High Educ 13(2):129–142. https://doi.org/10.1177/1469787412441284

Duhs A, Guest R (2003) Quality assurance and the quality of university teaching. Aust J Educ 47:40–57

Freeman M (1995) Peer assessment by groups of group work. Assess Eval High Educ 20(3):289–301. https://doi.org/10.1080/0260293950200305

Godoy PM, Jensen JL, Kummer TA (2015) Improvements from a flipped classroom may simply be the fruits of active learning. CBE Life Sci Educ 14:1

Guest J, Riegler R (2017) Learning by doing: do economics students self-evaluation skills improve? Int Rev Econ Educ 24:50–64

Harvey L, Knight P (1996) Transforming higher education. Society for Research into Higher Education. Open University Press, Buckingham

Heppner PP, Johnston JA (1994) Peer consultation: faculty and students working together to improve teaching. J Couns Dev 72(5):492–500

Homberg S, Takeda F (2013) The effects of gender on group work process and achievement: an analysis through self- and peer-assessment. Br Edu Res J 40(2):373–396. https://doi.org/10.1002/berj.3088

Jeffery S (2020) How to give effective feedback. https://scottjeffrey.com/effective-feedback/#Read_More_Self-Development_Guides. Accessed 30 Oct 2020

Johnson MD, Bradbury TN (2015) Contributions of social learning theory to the promotion of healthy relationships: asset or liability? J Family Theory Rev 7:13–27. https://doi.org/10.1111/jftr.12057

Kalina CJ, Powell K (2009) Cognitive and social constructivism: developing tools for an effective classroom. Education 130(2):241–250

Kuhn KAL, Rundle-Thiele S (2009) Curriculum alignment: student perception of learning achievement measures. Int J Teach Learn High Educ 21(3):351–361

Le H, Janssen J, Wubbels T (2018) Collaborative learning practices: teacher and student perceived obstacles to effective student collaboration. Camb J Educ 48(1):103–122. https://doi.org/10.1080/0305764X.2016.1259389

Looney J (2011) Developing high-quality teachers: teacher evaluation for improvement. Eur J Educ 46:440–455. https://doi.org/10.1111/j.1465-3435.2011.01492.x

Martins MJ et al (2019) The national student survey: validation in Portuguese medical students. Assess Eval High Educ 44(1):66–79. https://doi.org/10.1080/02602938.2018.1475547

McConlogue T (2015) Making judgements: Investigating the process of composing and receiving peer feedback. Stud High Educ 40(9):1495–1506. https://doi.org/10.1080/03075079.2013.868878

Nealy C (2005) Integrating soft skills through active learning in the management classroom. J Coll Teach Learn. https://doi.org/10.19030/tlc.v2i4.1805

Orsmond P, Merry S, Reiling K (2002) The use of exemplars and formative feedback when using student derived scoring criteria in peer and self-assessment. Assess Eval High Educ 27(4):309–323. https://doi.org/10.1080/0260293022000001337

Prince M (2004) Does active learning work? A review of the research. J Eng Educ 93:223–231. https://doi.org/10.1002/j.2168-9830.2004.tb00809.x

Race P (2007) The Lecturer’s Toolkit: a practical guide to assessment, learning and teaching, 3rd edn. Routledge

Richardson JTE, Slater JB, Wilson J (2007) The national student survey: development, findings and implications. Stud High Educ 32(5):557–580. https://doi.org/10.1080/03075070701573757

Rubin RF, Turner T (2012) Student performance on and attitudes toward peer assessments on advanced pharmacy practice experience assignments. Curr Pharm Teach Learn 4(2):113–121. https://doi.org/10.1016/j.cptl.2012.01.011

Sadler DR (1989) Formative assessment and the design of instructional systems. Instr Sci 18:119–144

Sadler DR (2013) Opening up feedback: teaching learners to see. In: Merry S, Price M, Carless D, Taras M (eds) Reconceptualising feedback in higher education: developing dialogue with students. Routledge, London

Saunders M, Lewis P, Thornhill A (2009) Research methods for business students. Pearson Education Limited, England

Suñol JJ, Arbat G, Pujol J, Feliu L, Fraguell RM, Planas-Lladó A (2016) Peer and self-assessment applied to oral presentations from a multidisciplinary perspective. Assess Eval High Educ 41(4):622–637. https://doi.org/10.1080/02602938.2015.1037720

Tenorio T, Bittencourt II, Isotani S, Silva AP (2016) Does peer assessment in online learning environments work? A systematic review of the literature. Comput Human Behav 64:94–107

Van Gennip NAE, Segers MSR, Tillema HH (2010) Peer assessment as a collaborative learning activity: the role of interpersonal variables and conceptions. Learn Instr 20(4):280–290. https://doi.org/10.1016/j.learninstruc.2009.08.010

Willey K, Gardner A (2010) Investigating the capacity of self and peer assessment activities to engage students and promote learning. Eur J Eng Educ 35(4):429–443

Williams J, Kane D (2009) Assessment and feedback: institutional experiences of student feedback, 1996 to 2007. High Educ Q 63:264–286

Woodhouse D (1999) Quality and quality assurance. In: Knight J, de Wit H (eds) Quality and internationalisation in higher education. OECD, Paris

Wride M (2017) Guide to peer assessment. Trinity College, Dublin. https://www.academia.edu/32066589/Guide_to_Self-Assessment. Accessed 31 Oct 2020

Funding

Not applicable. No funding was received.

Author information


Contributions

Not applicable.


Ethics declarations

Conflicts of interest

On behalf of all authors, the corresponding author states no conflict of interest.

Additional information

This paper was originally written for the Quality Enhancement Directorate Seminar Series 2018. Since then, a version of this paper has been accepted for the Advance HE Conference 2020 and the Advances in Management and Innovation Conference at Cardiff Metropolitan University in 2020, under the title 'Measuring Effective Feedback and Self-evaluation in a Peer-learning Environment'. We have also based several seminars on various parts of this material. We are grateful for the helpful comments of David Brooksbank and Sue Tangey, among many others.

Supplementary Information

Electronic supplementary material is available with the online version of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aminu, N., Hamdan, M. & Russell, C. Accuracy of self-evaluation in a peer-learning environment: an analysis of a group learning model. SN Soc Sci 1, 185 (2021). https://doi.org/10.1007/s43545-021-00152-3


DOI: https://doi.org/10.1007/s43545-021-00152-3