Abstract

Worldwide, scholars and public institutions are embracing behavioural insights to improve public policy. Multiple frameworks exist to describe the integration of behavioural insights into policy, and behavioural insights teams (BITs) have specialised in this. Yet, it often remains unclear how these frameworks can be applied and by whom. Here, we describe and discuss a comprehensive framework that describes who does what and when to integrate behavioural insights into policy. The framework is informed by relevant literature, theorising, and experience with one BIT, the Behavioural Insights Group Rotterdam. We discuss how the framework helps to overcome some challenges associated with integrating behavioural insights into policy (an overreliance on randomised control trials, a limited understanding of context, threats to good scientific practice, and bounded rationality of individuals applying behavioural insights).

Worldwide, scholars and public institutions are acknowledging that a better understanding of human behaviour can improve public policy (e.g., Executive Order No. 13707, 2015; Netherlands Scientific Council for Government Policy, 2014; World Bank, 2015). Drawing from research in the behavioural sciences, scholars and public institutions aim to understand why humans behave as they do and use these behavioural insights (BIs) to develop, test, and implement behavioural solutions for policy issues. Here, BIs refer to an understanding of behavioural determinants as well as how behaviour may be changed. The publication of the book Nudge (Thaler & Sunstein, 2008) can be seen as a renewed starting point and catalyst for the worldwide interest in the integration of BIs into public policy (Whitehead et al., 2018). This integration often includes the use of nudges: small and seemingly irrelevant changes in choice contexts that exploit behavioural automatisms such as biases, habits, and heuristics to programme behaviour (Thaler & Sunstein, 2008). To illustrate, BIs have been used to increase the rate of organ donors by changing from opt-in to opt-out defaults (Johnson & Goldstein, 2003), and to improve tax compliance by communicating social norms (“nine out of ten people pay their tax on time”; Hallsworth et al., 2017, p. 16).

Public institutions often employ so-called behavioural insights teams (BITs) as a centralised way of integrating BIs into public policy (as “public sector innovation labs”; McGann et al., 2018). These teams typically combine behavioural research and policy expertise to address policy issues with a behavioural dimension on a case-by-case basis (John & Stoker, 2019; Strassheim et al., 2015). BITs are often modelled after the first BIT which was set up within the United Kingdom Cabinet Office in 2010 (John, 2014). By now, several hundred BITs exist around the globe at various institutions (Afif et al., 2018; Manning et al., 2020; OECD, 2017). So far, little is known about the effectiveness of those teams, but evidence shows that interventions designed by BITs can result in effective policy changes (Benartzi et al., 2017; DellaVigna & Linos, 2020; Hummel & Maedche, 2019; Szaszi et al., 2018). Applying BIs in practice often goes hand in hand with a strong commitment to evidence-based practices of policy making and employing scientific methods to trial interventions (Einfeld, 2019; Haynes et al., 2012; John, 2014).

Multiple frameworks describe the integration of BIs into public policy. They have in common that they inform readers about what should be done for the successful integration of BIs and when it needs to be done. Illustrative and popular examples are the “easy, attractive, social, and timely” (EAST) formula developed by the BIT (2014) in the United Kingdom and the “behaviour, analysis, strategy, intervention, and change” (BASIC) step-by-step process developed by the Organisation for Economic Co-operation and Development (OECD, 2019). EAST answers the what-question by summarising the notion that to promote a target behaviour, interventions should make the behaviour easy to perform, appear attractive, be encouraged by the social environment, and stimulated in a timely manner. BASIC answers the when-question by prescribing a sequence of steps that lead to the integration of BIs: identification of a target behaviour, analysis of this behaviour, identification of behaviour change strategies, testing of interventions, and implementation of effective interventions to achieve change. Yet, these existing frameworks fall short of answering who needs to be involved in the integration and how it is all brought together. Relatedly, there is little research on how BITs operate and use those frameworks (Ball & Head, 2021). As a consequence, practitioners are often left wondering who should do what and when for the successful integration of BIs into policy. This paper aims to overcome this shortcoming by introducing a more comprehensive framework.

This framework relates to implementation science (e.g., Nilsen, 2015; Ogden & Fixsen, 2014) because it relies on evidence-based practices and their uptake in policy practice. Specifically, it can be interpreted as a determinant framework as well as a process model which outlines implementation steps. To the best of our knowledge, this is the first attempt to bridge implementation science and the emerging field of behavioural public policy.

We believe this study to be relevant for BIs practitioners, as well as scholars interested in behavioural public policy and behavioural public administration because the framework tackles some common challenges associated with the integration of BIs into policy that we summarise below.

BIs Challenges

Based on the literature we identify four challenges associated with the integration of BIs into policy: (1) an overreliance on randomised control trials; (2) a limited understanding of context; (3) threats to good scientific practice; and (4) bounded rationality of professionals applying BIs. Strategies for overcoming the challenges are described when presenting the framework and in the Discussion.

Overreliance on Randomised Control Trials

From early on, the integration of BIs into policy within BITs has been coupled with the use of randomised control trials (RCTs) to build up knowledge about what works (Einfeld, 2019; Halpern & Mason, 2015; John, 2014). The coupling implied for some BITs that they would only conduct projects where an RCT was possible (Ball & Head, 2021). However, there is increasing recognition that integrating BIs into policy is not conditioned on RCTs and that the scope of integrating BIs increases once BITs also embrace other evaluative methods (Ball & Head, 2021; Einfeld, 2019; Ewert & Loer, 2021). Relatedly, an overreliance on RCTs has been associated with an insufficient appreciation of local and contextual factors affecting the effectiveness of interventions (Pearce & Raman, 2014), which in turn limits the capacity of BITs to learn about working mechanisms and tailor interventions.

Limited Understanding of Context

Behaviour is embedded in and contingent on various levels of context reaching from the immediate material and social context to larger social structures, as well as historic and developmental dynamics (e.g., Bronfenbrenner, 1977). However, current practices of integrating BIs into policy tend to simplify or neglect contextual features that are more remote in space and time (e.g., early childhood, institutional contexts) for the analysis of behaviour, efforts to change it, and evaluations of interventions. Based on over 100 interviews with BIs experts, Whitehead and colleagues (2017, 2018) conclude that the neglect stems from an overreliance on knowledge and methods from behavioural economics instead of other relevant disciplines embracing broader understandings of context, such as sociology and geography. In a similar vein, Muramatsu and Barbieri (2017) argue that interventions should not be based on overgeneralisations but be made on a case-specific basis, integrating for instance knowledge from practice. In addition, multiple authors have highlighted the importance of diagnostic research to develop interventions that are tailored to their contexts (e.g., Hauser et al., 2018; Meder et al., 2018; Strassheim, 2019; Weaver, 2015).

Threats to Good Scientific Practice

Bolton and Newell (2017) have outlined five threats to good scientific practice for BITs. These threats stem from the culture of public institutions and include the following: (1) the pressure to develop effective solutions at low cost; (2) the pressure to generate quick results and meet deadlines; (3) inappropriate power assertions corrupting public policy or science; (4) difficulties in meeting open science standards; and (5) limitations related to adequate operationalisations and good research designs. The last two threats have also been highlighted by the BIT in the United Kingdom (Sanders et al., 2018). In addition, several authors have highlighted the poor quality of reporting of trials executed by BITs (e.g., Cotterill et al., 2021; Osman et al., 2018).

Bounded Rationality of BIs Professionals

Integrating BIs into policy is often presented as an effort to stimulate rational behaviour that compensates for the bounded rationality of individuals (i.e., the notion that decision-making is impaired by limited cognitive capacities; Thaler & Sunstein, 2008). Yet, this has provoked scepticism concerning the capacity to steer rational behaviour when those steering it are not fully rational themselves (Lodge & Wegrich, 2016; Thomas, 2019). Indeed, individual personality traits, cue-taking, fast and intuitive thinking, and emotions were said to limit the potential for rational policy design (Nørgaard, 2018). In addition, the straightforward and rational design processes propagated by BITs were found to be adapted and subjected to organisational logics when introduced to policy practice (Feitsma, 2020), further feeding the scepticism concerning the capacity of BITs to act rationally.

Comprehensive Integration Framework

Our comprehensive framework for the integration of BIs into policy making and policy implementation aims to overcome the challenges. The framework describes the ingredients required for the integration and the procedure for combining these ingredients. Following the presentation of the framework, we illustrate how it was put to practice within one BIT, the Behavioural Insights Group Rotterdam.

Development of the Framework

The framework was developed in an iterative process of consulting relevant literature, theorising, and practical experience. Initially, the then available literature about BIs and BITs (e.g., Ames & Hiscox, 2016; BIT, 2014; Congdon & Shankar, 2015; Halpern, 2015; Halpern & Sanders, 2016; Haynes et al., 2012; Thaler & Sunstein, 2008) was used eclectically to theorise a preliminary framework. Thaler and Sunstein (2008) can be seen as a groundwork highlighting the importance of avoiding misconceptions about behaviour when formulating or implementing policy. To overcome such misconceptions, the idea of using a linear and rational procedure for integrating BIs into policy was based on the procedures of other BITs (Ames & Hiscox, 2016; BIT, 2014). A focus on generating evidence and conducting trials was taken from other BITs as well (Congdon & Shankar, 2015; Haynes et al., 2012); however, it was complemented with a pragmatic perspective employing different evaluative methods (Morgan, 2007, 2014). The framework then guided more than three years of practical experience within one BIT, the Behavioural Insights Group Rotterdam (BIG’R), during which the framework was regularly discussed with BIG’R members and updated.

Ingredients

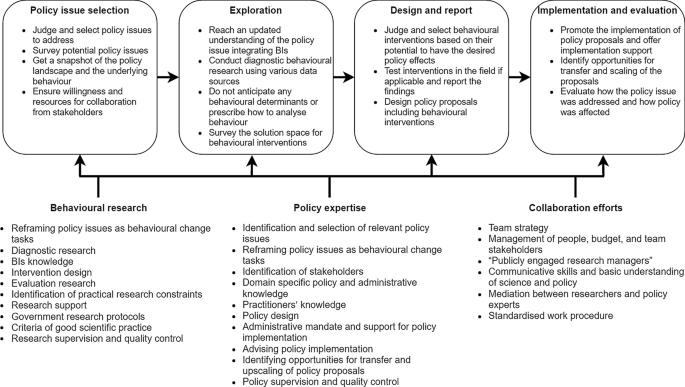

We differentiate between the ingredients behavioural research, policy expertise, and collaboration efforts. Competences needed for behavioural research and policy expertise are associated with different professions and individuals, making collaboration efforts to stimulate interaction and coordination between them a necessity. Integrating knowledge from behavioural research and policy expertise helps to overcome a limited understanding of context. The ingredients are displayed in Fig. 1.

Behavioural Research

To address policy issues using BIs, such issues need to be translated and reframed as behaviour change tasks (e.g., “too much energy is produced” to “individuals shower too long and hot”). This requires an understanding of what constitutes behaviour, the identification of target behaviours that underlie policy issues, and behavioural terminology (Olejniczak et al., 2020). Inherently linked to this is the conduct of diagnostic research to better understand the target behaviour, its determinants, and its context utilising local knowledge. Bringing about behaviour change also requires knowledge about robust behaviour change techniques that likely work in the respective context and can guide the design of interventions. Some of these techniques are popularised in frameworks like EAST (BIT, 2014). However, these frameworks rarely explain which technique works when, making expert behavioural knowledge a necessity to choose or design interventions on a case-by-case basis.

Similar to interventions, the evaluation of interventions needs to be adjusted to their context and take into account practical research constraints (e.g., limited resources, access to participants). As practical constraints can determine what kind of evaluation research is feasible, a pragmatic approach to research (Morgan, 2007, 2014) using multiple methods allows one to adjust the research to its context and overcome the overreliance on RCTs to evaluate interventions. In the context of BITs, research is often contingent on public servants for its support and assistance (e.g., entry to the field and assistance with data collection). Whatever research is conducted, it should adhere to criteria for good scientific practice and research protocols of the public institution employing the BIT. Adherence to these criteria and protocols requires scientific training and expert knowledge (i.e., professional researchers) as well as independence of researchers, peer supervision, and quality control of the conducted research. Therefore, BITs need to have an organisational mandate to enforce and ensure adherence to these criteria and protocols.

Policy Expertise

Integrating BIs into policy requires expertise from policy for various reasons. Specifically, policy expertise is required to identify and select policy issues with a behavioural dimension of societal and administrative relevance. These issues need to be associated with distinct target behaviours to reframe the issues as a behavioural change task. Once issues have been selected, policy expertise (e.g., from front-line workers, policy advisors) helps to identify relevant stakeholders of issues to ensure their adequate involvement. Policy expertise and stakeholder input together help to better understand the context of target behaviours to tailor interventions, and take into account the local policy and administrative landscape when conducting research and testing interventions. Moreover, practitioners’ knowledge often helps to avoid common mistakes and learn from earlier attempts to change the behaviour (Halpern & Sanders, 2016).

Ultimately, results from intervention evaluations need to feed into the design of actionable policy proposals. For this, behavioural research needs to be translated and embedded in the local administrative and policy landscape (Feitsma, 2019). Only thereafter can effective interventions be implemented using adequate policy tools to enable and maintain behaviour change (Michie et al., 2011). For this, policy expertise is required to secure an administrative mandate and support (e.g., budget) and to provide advice throughout the implementation process. The former includes advocating for the integration of BIs in general and the implementation of policy proposals in particular, both using strategies of policy entrepreneurs (e.g., selling the effectiveness of behavioural interventions, identifying policy issues of political importance; Huitema & Meijerink, 2010). To maximise the impact of policy proposals, policy expertise can identify opportunities for transfer as well as upscaling of proposals. Similar to the behavioural research ingredient, policy expertise requires supervision and quality control of outputs (e.g., policy proposals).

Collaboration Efforts

As a third ingredient, collaboration efforts are needed to combine behavioural research and policy expertise (Halpern & Sanders, 2016). This ingredient encompasses strategic direction, management, communicative skills and mutual understanding, mediation, and a standardised working method. Concerning the first, strategic direction establishes goals and how to achieve them. For instance, in its early days the BIT in the United Kingdom focussed on “quick wins” where minor changes promised to produce obvious revenues or savings to get political buy-in and support (Halpern, 2015; Sanders et al., 2018). Such a strategy ensures that different team members work towards a shared goal. Second, the team, its people, budget, and stakeholders need to be managed actively. This task combines the delivery of research projects with a focus on public management and requires “publicly engaged research managers”, with important skills such as “diplomacy and negotiation” (Dunleavy et al., 2019).

Information does not flow between scientists and public servants in a linear and straightforward manner. It first needs to be translated and contextualised (Feitsma, 2019). Consequently, as a third aspect of collaboration efforts, members of BITs are required to possess strong communication skills and a basic understanding of both science and policy. In case of disagreements and conflicts (e.g., concerning timeframes), it may even require mediation between researchers and policy experts, or a shared authority that can be consulted. At the BIT of the Australian Government for instance, there was a dual management with a Research Director and a Managing Director more embedded in the policy context as a shared authority (Ball et al., 2017). Involving both behavioural research and policy expertise assures that experiments are “co-produced” (Voß & Simons, 2018) aiming to solve local policy problems rather than translating abstract knowledge to fit generalised problem definitions (Gibbons et al., 1994). Finally, a standardised procedure or working method that instructs researchers and policy experts can ease collaboration. Such a procedure is outlined in the following section. It also helps to overcome the bounded rationality of BIs professionals because it provides a systematic approach to first explore a policy issue before proceeding to solutions.

Procedure

The procedure specifies how the ingredients from the framework are to be combined. It encompasses four separate phases that together form a logical and temporal sequence where the results from earlier phases feed into later ones (see Fig. 1). To be more precise, it prescribes a sequence of (1) policy issue selection; (2) efforts to understand the underlying behaviour; (3) design of policy proposals, and (4) implementation and evaluation.

Policy Issue Selection

The objective of the policy issue selection phase is to select adequate policy issues. As mentioned above, a BIT’s strategy may have a significant impact on which policy issues are selected. For the selection, a snapshot of the policy landscape related to a policy issue is needed to judge its relevance. Moreover, an initial reflection on the behaviour, its plausible determinants, and its context is required to judge the potential to effectively change the behaviour. In most cases, there will be multiple behaviours underlying a policy issue and BITs will need to focus on a small number of them given available resources. Furman (2016) has argued that starting with a policy issue (rather than starting with BIs and then looking for a suitable policy issue) holds the potential for BIs to play a more important role in public policy.

The use of the framework presumes the willingness of public servants to collaborate. The Australian BIT ensured this willingness through co-funding from project partners, which “encouraged a stronger sense of engagement” (Ball et al., 2017). Ensuring buy-in from public servants and their support (e.g., financial) is thus of high importance, especially since structural long-term partnerships, and equality and reciprocity between partners, were found to ease implementation (e.g., Ansell et al., 2017; Torfing, 2019).

Exploration

The objective of the exploration phase is to reach an updated understanding of the policy issue by integrating BIs into this understanding. Research has shown that public servants sometimes use incorrect assumptions when making inferences about behaviour (Hall et al., 2014; Howlett, 2018) and, in addition, that problem definitions based on singular perspectives increase the likelihood of solving wrong problems or non-problems (Kilman & Mitroff, 1979). During this phase, diagnostic behavioural research is hence employed, making use of various data sources (e.g., observations, interviews) to better understand the target behaviour. To integrate findings from this diagnostic research with policy expertise, findings are validated collaboratively. This step helps to overcome the bounded rationality of BITs using collective scrutiny.

The diagnostic purpose of this phase overlaps largely with, for instance, the Diagnosis step from the guidelines of the Australian BIT (Ames & Hiscox, 2016) and the Analysis step from BASIC (OECD, 2019). In contrast to both, the framework does not anticipate any behavioural determinants or prescribe how to analyse behaviour. This way it can accommodate various behavioural determinants (e.g., choice related, sociological) opening up a large solution space that targets various determinants. To survey this solution space, BITs can use an analytical approach addressing relevant determinants or a designerly approach using participative and creative techniques to generate novel intervention ideas (for a discussion of both approaches see Einfeld & Blomkamp, forthcoming).

Design and Report

The objective of the design and report phase is to design policy proposals that include behavioural interventions and to report research findings. Note that the first objective is located in the policy sphere, implying a responsibility of the BIT that goes beyond the delivery of behavioural interventions alone (Congdon & Shankar, 2015; Hansen, 2018). Rather, interventions need to be translated into policy proposals which are embedded in the institution’s policy and administrative landscape. The literature on knowledge utilisation has identified abstractness as an important barrier for successful utilisation (Rich, 1997). As such, translating interventions to actionable policy proposals is thought to increase the likelihood that decision-makers adopt such proposals compared to a situation where technical evaluations of interventions would be shared (Mead, 2015).

To translate interventions into policy proposals, the best intervention ideas from the exploration phase must be selected. To address the policy issue, the selected interventions should have the potential to not only affect the target behaviour (i.e., statistical significance) but also have practically meaningful effects on policy outcomes (i.e., clinical significance). In addition, BITs may use other relevant criteria such as affordability, practicability, cost-effectiveness, acceptance, side-effects, and equity (Michie et al., 2011).

With many BITs committed to a scientific agenda and evidence-based practices, they often trial some of their interventions in the field (Haynes et al., 2012). As a consequence, a differentiation can be made between policy proposals that are informed by such field trials (“behaviourally-tested”) and those that are informed by a review of pre-existing evidence (“behaviourally-informed”; Lourenço et al., 2016, pp. 15–16). For a proper reporting of trials, BITs can publish in peer-reviewed academic journals and/or use standardised reporting checklists (Cotterill et al., 2021). For this, it is important that publishing decisions and methodological choices are untouched by unqualified interests (e.g., political) and that the conduct of the research is supervised by independent researchers.

Implementation and Evaluation

The objective of the implementation and evaluation phase is twofold. The first objective is to achieve implementation of policy proposals produced during the design and report phase. Acknowledging that implementation is not a one-time event (e.g., sharing the policy proposal), BITs need to support and stimulate an implementation process (Ogden & Fixsen, 2014). For instance, they may need to compete for the attention of decision-makers or advise on adaptations of the proposal. As mentioned earlier, implementation can be improved by collaborating with stakeholders from early on and relying on the strategies of policy entrepreneurs. Moreover, BITs may actively look for transfer and scaling opportunities. It is beneficial for implementation when the same individuals continue to be involved during this phase, because they tend to have developed an understanding of the negotiables and non-negotiables for successful scaling (Al-Ubaydli et al., 2021).

The second objective of this phase is to evaluate how the policy issue was addressed (i.e., a process evaluation) and how policy was affected (i.e., an outcome evaluation). Conducting such evaluations in a formative way (i.e., informing development) can help BITs to learn and adapt over time. This is important since many new BITs are likely to initially follow popular role models of integrating BIs into policy which, however, often need adjustments when introduced to practice (Feitsma, 2019, 2020). Hence, evaluation and active learning can stimulate a process of diversification where BITs adapt to their contexts.

Illustration of the Framework

As mentioned before, the framework originates in part from the authors’ experience with BIG’R. BIG’R was a collaboration between the Erasmus University Rotterdam and the municipality of Rotterdam with both institutions acting as equal partners (i.e., a “boundary organisation”; Guston, 2001). All authors were BIG’R members for at least one year as university researchers or the academic head. In the following, we illustrate how the framework was used within BIG’R. Specifically, we discuss how different job functions provided the ingredients for the framework and how the BIG’R working method relates to the four phases of the procedure for combining the ingredients. In addition, we refer the reader to the supplementary material for a description of how the framework was applied to one policy issue.

Ingredients: The BIG’R Job Functions

The BIG’R job functions that were relevant for the framework were the BIG’R management, case proposers, municipality and university researchers, and policy domain advisors. To address policy issues on a case-by-case basis, representatives from these functions except the BIG’R management collaborated in so-called policy case teams. Each team consisted of at least two professionals contributing policy expertise (the case proposer and the policy domain advisor) and two researchers who provided checks and balances that could help neutralise the threats from inappropriate power assertions corrupting public policy or science.

BIG’R Management

The BIG’R management consisted of the municipal project leader and the academic head. The former was an experienced project leader from the municipality and the latter a behavioural science professor experienced with field research. This way, the management was able to combine the technical and conceptual knowledge (Katz, 1955) from science and policy that was needed for the selection of policy issues, supervision of BIG’R members, and quality control. The BIG’R management also served as a shared authority for all BIG’R members. By managing the budget, interaction with stakeholders (e.g., municipality aldermen), and operations, the management contributed collaboration efforts, especially since it made many decisions employing a participatory rather than a top-down approach. In practice, this meant that daily operations were discussed and coordinated during a weekly meeting with representatives from all BIG’R functions.

The management also determined the strategy of BIG’R. Specifically, for the first two years it emphasised innovation and setting up BIG’R, that is, learning how to integrate BIs into policy; for the following two years it adopted a working mode focussed more on production and implementation. Importantly, the academic head as part of the management had the authority to create a proper research environment so that threats to good scientific practice could be overcome.

Case Proposer

Any Rotterdam public servant could become a case proposer by submitting a policy issue with a behavioural dimension that was approved by the BIG’R management. To facilitate later implementation, BIG’R ensured at the start that proposers had the mandate and resources for addressing the policy issue (e.g., budget). The collaboration with BIG’R was free for case proposers, however. Because case proposers themselves sensed a problem and initiated the policy case, their willingness to collaborate was ensured.

Case proposers were important members of policy case teams attending meetings and contributing their experience and knowledge about the policy issue, including knowledge about relevant stakeholders. Typically, case proposers had several years of experience with their position and hence the policy issue. This way, the close collaboration with case proposers helped to overcome the challenge of a limited understanding of context. Indeed, from our experience regular interactions were a necessity for the successful completion of policy cases. If the policy case team conducted field research, case proposers identified practical research constraints (e.g., sample size limitations) and provided research support (e.g., obtaining informed consent).

Researchers

BIG’R employed university researchers as well as researchers seconded from the municipality’s research department. All researchers had at least a university degree in a social and behavioural science discipline (e.g., psychology, public health, economics, pedagogy) and were trained in qualitative and quantitative methods. Both types of researchers collaborated on shared research tasks (i.e., diagnostic research, intervention design, intervention evaluation) providing checks and balances. Municipality researchers were in addition responsible for writing policy proposals and other tasks more in the interest of the municipality, particularly contributing knowledge from municipality research and ensuring adherence to municipality research protocols. University researchers were responsible for scientific tasks, particularly contributing scientific knowledge and expertise, meeting the criteria of good scientific practice, and publishing findings in peer-reviewed journals. In an attempt to overcome threats to good scientific practice, their professional responsibilities were with the university only. They were regular members of their department and subjected to the departmental hierarchy and regulations. However, as they regularly worked at and with the municipality, their research can be described as “embedded research” (Ward et al., 2021).

Policy Domain Advisor

The municipality administration encompassed seven subdivisions focussing on relevant aspects of Dutch local governments (e.g., work and income, urban development). In acknowledging the scattered nature of knowledge (Muramatsu & Barbieri, 2017), BIG’R recruited one public servant from each of the seven subdivisions to become a part-time policy domain advisor (PDA) for BIG’R providing specialised policy expertise. At the same time, all PDAs continued with their work (e.g., as legal consultant) within their subdivisions to maintain their network and information channels. From there, PDAs recruited case proposers and identified relevant policy issues that could be addressed by BIG’R.

PDAs stood between case proposers and researchers, mediating between them to integrate needs from research and policy. They can be described as knowledge brokers (Feitsma, 2019) with a strong focus on bridging, translating, and facilitating. In this way, they helped to contextualise both the research and the policy proposals in the current policy and administrative landscape whilst also being responsible for writing policy proposals. The focus of PDAs was more process-oriented and aimed at ensuring the progress of policy cases. This required project management competencies (e.g., coordination and planning). In addition, PDAs identified practical research constraints and provided research support when needed.

PDAs can be described as policy entrepreneurs aiming to achieve policy change within their policy domains. Specifically, they advocated the implementation of BIG’R policy proposals and the application of BIs in general. For instance, they gave presentations at municipality fairs or informed the senior management about BIG’R. Importantly, describing PDAs as policy entrepreneurs adds the notion of a promotional self-interest to the rather neutral image of the knowledge broker. We believe this aspect to be important for a BIT to achieve change (for an illustration see John, 2014).

Procedure: The BIG’R Working Method

The BIG’R working method equipped the four phases of the framework with distinct steps to be carried out and embedded the framework in the administrative context of the municipality.

After a Rotterdam public servant had proposed a policy issue, a BIG’R researcher and the PDA, whose subdivision the issue concerned, interviewed the case proposer and conducted desk research to fill in a submission form (see supplementary material) that provided a snapshot of the behaviour, its plausible determinants, its context, and its relevance. The form served as input for the BIG’R management to select policy cases based on their societal, administrative, and scientific relevance. The latter aspect was important because BIG’R aimed to publish its findings in academic journals.

Starting with a policy issue rather than a specific BI allowed BIG’R to address a diverse set of policy issues from various stages of the policy cycle (e.g., ex-ante policy appraisal, policy design, policy implementation) and with differing scopes (e.g., one-off behaviours, culture change, intergroup behaviours). Some of them were underexplored by the behavioural sciences, such as individuals disposing of fat and oil in the sewage system, causing expensive blockages (e.g., Mattsson et al., 2014). Yet, requiring case proposers as a starting point meant that most policy issues related to the micro level of proposers’ individual responsibilities (e.g., improving a specific letter) rather than the macro level (e.g., improving general municipal communication).

At the start of the exploration phase, what was known about the target behaviour was largely based on the case proposer as an informant. Diagnostic research was therefore often necessary to reach an updated understanding of the policy issue that integrated BIs. For this, BIG’R reviewed the related literature and often conducted field observations and/or interviewed various stakeholders. To summarise the updated understanding, the policy case teams used different visualisations and descriptions on a case-by-case basis (e.g., user journeys and fuzzy cognitive maps; Kosko, 1986). The fact that BIG’R identified cultural and sociological determinants for multiple cases illustrates that anticipating only choice-related determinants can limit the understanding of target behaviours. For instance, when investigating the illegal use of fireworks in a football stadium, BIG’R identified cultural norms and values as major determinants that could hardly be changed using a behaviour change approach (Merkelbach et al., 2021).

The face validity of this understanding and the prospects of a behavioural solution were in most cases then validated during a brainstorming session with other BIG’R members and relevant stakeholders. For one case, the understanding was judged insufficient to proceed during such a session, illustrating that this step helped to overcome the bounded rationality of BIs professionals. In addition, brainstorming sessions served to generate novel intervention ideas and to improve interventions known from the literature or practice. To stimulate creativity during the sessions, participants were primed (Rietzschel et al., 2007) using common BIs. In practice this could mean that they were asked to think of solutions making the target behaviour easier (the “e” in EAST; BIT, 2014).

Afterwards, policy case teams selected interventions to be included in policy proposals based on their feasibility (e.g., financial, acceptance) and their believed effectiveness. Although BIG’R did not pre-commit to any type of intervention, in practice the policy proposals often included nudges (Thaler & Sunstein, 2008). Beyond nudges, BIG’R suggested, for instance, a participatory programme to define a code of conduct for the use of electric charging stations (see supplementary material). Whenever possible, BIG’R tested (some aspects of) the proposed interventions in the field using both quantitative and qualitative methods (e.g., Dewies et al., 2021).

Policy proposals were presented to and discussed with case proposers and other relevant municipality decision-makers to address open questions and ease implementation. However, it was a learning process for BIG’R to prepare policy proposals rather than technical research reports. In the end, proposals sometimes took the format of infographic-like summaries because, in our experience, case proposers demonstrated little interest in technical aspects of the research, plausibly because most case proposers had not received the scientific training needed to understand and scrutinise the research. This meant that in practice, case proposers relied on authority-based rather than evidence-based policy making (Mendel, 2018).

In addition to policy proposals, the university researchers aimed to report findings in peer-reviewed academic journals. Importantly, the university and the municipality confirmed that methodological choices and publishing decisions would be the responsibility of the university to overcome threats to good scientific practice. The municipality and the university also agreed on a formal framework for sharing data between the two institutions that included the right to publish anonymous data.

It was beneficial for the implementation of policy proposals that BIG’R was embedded in the municipality administration, and that PDAs could approach proposers naturally after the proposal had been shared. Moreover, PDAs held a legitimate position for advocating the use of BIG’R proposals within their subdivision. PDAs hence acted as policy entrepreneurs during this phase. Ideally, they became part of “implementation teams” and identified opportunities for upscaling. Researchers conducting the original research could easily be consulted for this by the PDAs if needed.

To evaluate the BIG’R working method, the process as well as the outcomes were systematically evaluated on a case-by-case basis (for a research plan see https://osf.io/f3av9). Specifically, policy case team members were invited to fill in questionnaires covering process aspects of the collaboration (e.g., satisfaction with the collaboration and the policy proposal) and case proposers were interviewed to investigate the degree of implementation of policy proposals. However, this evaluation was more summative (i.e., informing assessment) than formative as it took place during the last year of BIG’R.

Discussion

This paper offers a framework for integrating BIs into the making and implementation of public policy. The framework is depicted in Fig. 1 and describes the what, when, and who of integrating BIs into public policy. We believe that the framework is more complete than earlier frameworks which often lack descriptions of actors in the integration. Moreover, the framework incorporates ways to overcome four common challenges associated with the integration of BIs that we discuss below (for a summary see Table 1).

However, as the framework is closely related to our experience and practice within BIG’R, we note that the framework is a solution but not necessarily the solution to overcome these challenges. Future applications of the framework likely require some adaptations. As an example, BIG’R differed from other BITs because it was run by both government and academia. Most other BITs are run by either of these institutions. For instance, BITs run by government likely choose different projects because they can disregard their scientific relevance, and they likely focus more on using BIs rather than generating novel BIs by trialling them in the field. We hence consider the university partnership key for important aspects of the framework.

Overcoming an Overreliance on RCTs

The framework allows BITs to embrace various research methods. It does not deterministically lead to the planning and execution of RCTs, but encourages users to reflect on their methodological choices on a case-by-case basis. Ewert (2020) and Ewert and Loer (2021) have recently argued for such a methodological diversification in the field of behavioural public policy. In fact, BIG’R conducted multiple cases where RCTs were not feasible or inadequate. This strength came, however, at a cost. First, it required increased efforts in terms of time and interaction for policy case team members to explore and choose research options. In addition, the framework required the researchers to be methodological generalists rather than RCT specialists. We believe, however, that compared to a situation in which only RCTs are used, opening a debate about the most adequate research method(s) leads to more informed and better methodological choices.

Overcoming a Limited Understanding of Context

From our experience with BIG’R, several features of the framework can help to overcome limited understandings of context, enabling the contextualised development and testing of interventions and writing of policy proposals. Importantly, the framework does not entail any (disciplinary) assumptions regarding how to interpret behaviour and how to change it. Earlier frameworks tend to anticipate specific behavioural determinants, namely choice-related determinants on the micro level (e.g., food ordering), whilst excluding others, namely structural ones on the macro level (e.g., presence and dispersion of fast food restaurants). This neglect also means pre-committing to interventions that so far have dominated the integration of BIs into policy. In contrast, the framework presented here encourages the integration of divergent perspectives and sorts of knowledge from different disciplines. To illustrate, within BIG’R the case proposer typically contributed practitioner’s knowledge, the PDA typically contributed local policy knowledge, the municipal researcher contributed findings from local research, and the university researchers mainly contributed the BIs knowledge and expertise. In practice, however, it required significant efforts to survey and integrate theories, research findings, and various perspectives on a case-by-case basis.

Overcoming Threats to Good Scientific Practice

Our framework contains multiple aspects that may help to overcome threats to good scientific practice. First, to strengthen the integrity and position of researchers, the framework assumes independence of researchers and an organisational mandate to enforce adherence to criteria of good scientific practice. Within BIG’R, the management possessed the mandate to create an adequate research environment as well as the knowledge that was required for that. Independence further means that methodological choices and publishing decisions for academic articles remain with researchers. Within BIG’R, professional liabilities of university researchers were with the university only, which strengthened their position in relation to the municipality and policy practice. A disadvantage of being rigorous about scientific practices may be seen in the fact that all research becomes subject to scientific obligations. Within BIG’R, for instance, requirements from the university for informed consent were often stricter than those from the municipality, and all empirical research had to be approved by the university’s ethics committee, which sometimes caused short delays in the execution of policy cases. The fact that science slowed down some policy cases can be interpreted, however, as a confirmation that science was not subordinated to municipal deadlines. Some obligations were, however, directly linked to positive outcomes. For instance, the obligations associated with scientific reporting and academic peer-review plausibly improved the reporting and evaluation of trials.

Formal agreements about data sharing practices enable open science practices (e.g., publication of anonymised data sets) to become a routine. Yet, for BIG’R a considerable amount of effort was needed to draft those agreements even with the help of legal experts from both institutions. Moreover, following the agreements caused some extra bureaucracy because forms needed to be filled in and approved by the management for each data sharing event. Some decisions related to good scientific practice still needed to be made on a case-by-case basis though, for instance decisions related to experimental designs.

Overcoming Bounded Rationality

Because the framework prescribes neither a standard research methodology nor a priori assumptions about how to interpret and change behaviour, it deliberately shifts responsibility to team-level decision-making. As a result, it can be said to increase the potential for human error and biases, and the risks related to bounded rationality (i.e., decision-making being impaired by limited cognitive capacities). In practice, however, following the framework means that policy issues are investigated from a behavioural perspective as an addition to the standard approach. Rather than fully adopting the policy issue and addressing it with an exclusive focus on BIs, BIs are integrated with existing knowledge and experience concerning the policy issue. For BIG’R this meant that the administrative responsibility for the policy issue remained with the case proposer. Rather than the proposer addressing the policy issue in a standard approach, the BIG’R resources became available to scrutinise that standard approach, aiming for an approach that integrated BIs. We hypothesise that this enhanced the problem-solving capacity of the municipality locally (Gray, 2000; Rogers & Weber, 2010), and that the risks associated with rationally bounded public servants were lowered. However, this required making use of the resources invested in BIG’R.

Comparison With Implementation Science Frameworks

As the framework serves the implementation of evidence-based practices that are informed by BIs, it can be compared with established and more general implementation frameworks (i.e., Damschroder et al., 2009; Fixsen et al., 2005). Admittedly, the framework lacks specificity and scope in comparison to those frameworks. Nevertheless, we believe the framework to be a valuable contribution because BITs possess a unique position at the science-policy nexus (Mukherjee & Giest, 2020; Strassheim, 2019), which plausibly requires them to utilise specialised frameworks. Borrowing terminology from the Active Implementation Frameworks (Fixsen et al., 2005), at least within BIG’R, “structural features for implementation” (i.e., creating dedicated implementation teams and developing implementation plans) were almost absent and dependent on processes stimulated by BIG’R or case proposers. A comparison with the Consolidated Framework for Implementation Research (Damschroder et al., 2009) moreover points to a lack of dedicated efforts to attract and involve appropriate individuals for implementation (e.g., opinion leaders, champions). This was different, for instance, for the BIT in the United Kingdom, which was located at the Cabinet Office and, in comparison to BIG’R, could more easily capitalise on its political support to achieve implementation (John, 2014; Lourenço et al., 2016). BIG’R deliberately aimed to stay away from political dynamics or affiliations to ensure its continued existence. However, this may have weakened its position for achieving implementation. Future use of the framework should take these aspects into account, for instance by focussing on politically relevant policy issues.

Conclusion

The popular standard models of applying BIs propagated through the book Nudge (Thaler & Sunstein, 2008) and the BIT in the United Kingdom do not consider all relevant aspects of integrating BIs into public policy. Therefore, we developed a comprehensive framework that deviates from current models to overcome important challenges associated with integrating BIs into public policy, contributing to a recent phase of diversification in BIs integration (Strassheim, 2021). The framework offers more freedom for methodological choices, encourages and strengthens good scientific practice, incorporates a broad understanding of context, and improves the potential for rational decision-making.

Availability of Data and Material

Not applicable.

Code Availability

Not applicable.

References

Afif, Z., Islan, W. W., Calvo-Gonzalez, O., & Dalton, A. G. (2018). Behavioral science around the world: Profiles of 10 countries (Report No. 132610). World Bank. Retrieved from http://documents.worldbank.org/curated/en/710771543609067500/Behavioral-Science-Around-the-World-Profiles-of-10-Countries

Al-Ubaydli, O., Lee, M. S., List, J. A., Mackevicius, C. L., & Suskind, D. (2021). How can experiments play a greater role in public policy? Twelve proposals from an economic model of scaling. Behavioural Public Policy, 5(1), 2–49. https://doi.org/10.1017/bpp.2020.17

Ames, P., & Hiscox, M. (2016). Guide to developing behavioural interventions for randomised controlled trials. Australia Government. Retrieved from https://www.dpc.nsw.gov.au/assets/dpc-nsw-gov-au/publications/Behavioural-Insights-Unit/7091d27d37/BETA-Guide-to-developing-behavioural-interventions-for-randomised-controlled-trials.pdf

Ansell, C., Sørensen, E., & Torfing, J. (2017). Improving policy implementation through collaborative policymaking. Policy & Politics, 45(3), 467–486. https://doi.org/10.1332/030557317x14972799760260

Ball, S., & Head, B. W. (2021). Behavioural insights teams in practice: Nudge missions and methods on trial. Policy & Politics, 49(1), 105–120. https://doi.org/10.1332/030557320x15840777045205

Ball, S., Hiscox, M., & Oliver, T. (2017). Starting a behavioural insights team: Three lessons from the Behavioural Economics Team of the Australian government. Journal of Behavioral Economics for Policy, 1(1), 21–26.

Behavioural Insights Team. (2014). EAST: Four simple ways to apply behavioural insights. Cabinet Office. Retrieved from https://www.bi.team/publications/east-four-simple-ways-to-apply-behavioural-insights/

Benartzi, S., Beshears, J., Milkman, K. L., Sunstein, C. R., Thaler, R. H., Shankar, M., et al. (2017). Should governments invest more in nudging? Psychological Science, 28(8), 1041–1055. https://doi.org/10.1177/0956797617702501

Bolton, A., & Newell, B. R. (2017). Applying behavioural science to government policy: Finding the goldilocks zone. Journal of Behavioral Economics for Policy, 1(1), 9–14.

Bronfenbrenner, U. (1977). Toward an experimental ecology of human development. American Psychologist, 32(7), 513–531. https://doi.org/10.1037/0003-066X.32.7.513

Congdon, W. J., & Shankar, M. (2015). The white house social & behavioral sciences team: Lessons learned from year one. Behavioral Science & Policy, 1(2), 77–86. https://doi.org/10.1353/bsp.2015.0010

Cotterill, S., John, P., & Johnston, M. (2021). How can better monitoring, reporting and evaluation standards advance behavioural public policy? Policy & Politics, 49(1), 161–179. https://doi.org/10.1332/030557320x15955052119363

Damschroder, L. J., Aron, D. C., Keith, R. E., Kirsh, S. R., Alexander, J. A., & Lowery, J. C. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4, Article 50. https://doi.org/10.1186/1748-5908-4-50

DellaVigna, S., & Linos, E. (2020). RCTs to scale: Comprehensive evidence from two nudge units (NBER Working Paper No. 27594). National Bureau of Economic Research. https://doi.org/10.3386/w27594

Dewies, M., Schop-Etman, A., Rohde, K. I. M., & Denktaş, S. (2021). Nudging is ineffective when attitudes are unsupportive: An example from a natural field experiment. Basic and Applied Social Psychology, 43(4), 213–225. https://doi.org/10.1080/01973533.2021.1917412

Dunleavy, K., Noble, M., & Andrews, H. (2019). The emergence of the publicly engaged research manager. Research for All, 3(1), 105–124. https://doi.org/10.18546/rfa.03.1.09

Einfeld, C. (2019). Nudge and evidence based policy: Fertile ground. Evidence & Policy: A Journal of Research, Debate and Practice, 15(4), 509–524. https://doi.org/10.1332/174426418x15314036559759

Einfeld, C., & Blomkamp, E. (forthcoming). Nudge and co-design: Complementary or contradictory approaches to policy innovation? Policy Studies. https://doi.org/10.1080/01442872.2021.1879036

Ewert, B. (2020). Moving beyond the obsession with nudging individual behaviour: Towards a broader understanding of behavioural public policy. Public Policy and Administration, 35(3), 337–360. https://doi.org/10.1177/0952076719889090

Ewert, B., & Loer, K. (2021). Advancing behavioural public policies: In pursuit of a more comprehensive concept. Policy & Politics, 49(1), 25–47. https://doi.org/10.1332/030557320x15907721287475

Exec. Order No. 13707, 3 C.F.R. 371. (2015). Retrieved from https://www.govinfo.gov/content/pkg/CFR-2016-title3-vol1/pdf/CFR-2016-title3-vol1-eo13707.pdf

Feitsma, J. (2019). Brokering behaviour change: The work of behavioural insights experts in government. Policy & Politics, 47(1), 37–56. https://doi.org/10.1332/030557318x15174915040678

Feitsma, J. (2020). Rationalized incrementalism: How behavior experts in government negotiate institutional logics. Critical Policy Studies, 14(2), 156–173. https://doi.org/10.1080/19460171.2018.1557067

Fixsen, D. L., Naoom, S. F., Blase, K. A., Friedman, R. M., & Wallace, F. (2005). Implementation research: A synthesis of the literature (FMHI Publication No. 231). National Implementation Research Network. Retrieved from http://www.fpg.unc.edu/nirn/resources/publications/Monograph/

Furman, J. (2016). Applying behavioral sciences in the service of four major economic problems. Behavioral Science & Policy, 2(2), 1–9. https://doi.org/10.1353/bsp.2016.0011

Gibbons, M., Limoges, C., Nowotny, H., Schwartzman, S., Scott, P., & Trow, M. (1994). The new production of knowledge: The dynamics of science and research in contemporary societies. SAGE Publications.

Gray, B. (2000). Assessing inter-organizational collaboration: Multiple conceptions and multiple methods. In D. Faulkner & M. de Rond (Eds.), Cooperative strategy: Economic, business, and organizational issues (pp. 243–260). Oxford University Press.

Guston, D. H. (2001). Boundary organizations in environmental policy and science: An introduction. Science, Technology, & Human Values, 26(4), 399–408. https://doi.org/10.1177/016224390102600401

Hall, C. C., Galvez, M. M., & Sederbaum, I. M. (2014). Assumptions about behavior and choice in response to public assistance. Policy Insights from the Behavioral and Brain Sciences, 1(1), 137–143. https://doi.org/10.1177/2372732214550833

Hallsworth, M., List, J. A., Metcalfe, R. D., & Vlaev, I. (2017). The behavioralist as tax collector: Using natural field experiments to enhance tax compliance. Journal of Public Economics, 148, 14–31. https://doi.org/10.1016/j.jpubeco.2017.02.003

Halpern, D. (2015). Inside the nudge unit: How small changes can make a big difference. WH Allen.

Halpern, D., & Mason, D. (2015). Radical incrementalism. Evaluation, 21(2), 143–149. https://doi.org/10.1177/1356389015578895

Halpern, D., & Sanders, M. (2016). Nudging by government: Progress, impact, & lessons learned. Behavioral Science & Policy, 2(2), 52–65. https://doi.org/10.1353/bsp.2016.0015

Hansen, P. (2018). What are we forgetting? Behavioural Public Policy, 2(2), 190–197. https://doi.org/10.1017/bpp.2018.13

Hauser, O. P., Gino, F., & Norton, M. I. (2018). Budging beliefs, nudging behaviour. Mind & Society, 17, 15–26. https://doi.org/10.1007/s11299-019-00200-9

Haynes, L., Service, O., Goldacre, B., & Torgerson, D. (2012). Test, learn, adapt: Developing public policy with randomised controlled trials. Behavioural Insights Team. Retrieved from https://www.bi.team/publications/test-learn-adapt-developing-public-policy-with-randomised-controlled-trials/

Howlett, M. (2018). Matching policy tools and their targets: Beyond nudges and utility maximisation in policy design. Policy & Politics, 46(1), 101–124. https://doi.org/10.1332/030557317x15053060139376

Huitema, D., & Meijerink, S. (2010). Realizing water transitions: The role of policy entrepreneurs in water policy change. Ecology and Society, 15(2), Article 26. https://doi.org/10.5751/es-03488-150226

Hummel, D., & Maedche, A. (2019). How effective is nudging? A quantitative review on the effect sizes and limits of empirical nudging studies. Journal of Behavioral and Experimental Economics, 80, 47–58. https://doi.org/10.1016/j.socec.2019.03.005

John, P. (2014). Policy entrepreneurship in UK central government: The behavioural insights team and the use of randomized controlled trials. Public Policy and Administration, 29(3), 257–267. https://doi.org/10.1177/0952076713509297

John, P., & Stoker, G. (2019). Rethinking the role of experts and expertise in behavioural public policy. Policy & Politics, 47(2), 209–226. https://doi.org/10.1332/030557319x15526371698257

Johnson, E. J., & Goldstein, D. (2003). Do defaults save lives? Science, 302(5649), 1338–1339. https://doi.org/10.1126/science.1091721

Katz, R. L. (1955). Skills of an effective administrator. Harvard Business Review Press.

Kilmann, R. H., & Mitroff, I. I. (1979). Problem defining and the consulting/intervention process. California Management Review, 21(3), 26–33. https://doi.org/10.2307/41165304

Kosko, B. (1986). Fuzzy cognitive maps. International Journal of Man-Machine Studies, 24(1), 65–75. https://doi.org/10.1016/S0020-7373(86)80040-2

Likki, T., & Varazzani, C. (2017). Applying behavioural insights to reduce pregnancy- and maternity-related discrimination and disadvantage. Equality and Human Rights Commission. Retrieved from https://www.equalityhumanrights.com/en/publication-download/applying-behavioural-insights-reduce-pregnancy-and-maternity-related

Lodge, M., & Wegrich, K. (2016). The rationality paradox of nudge: Rational tools of government in a world of bounded rationality. Law & Policy, 38(3), 250–267. https://doi.org/10.1111/lapo.12056

Lourenço, J. S., Ciriolo, E., Almeida, S. R., & Troussard, X. (2016). Behavioural insights applied to policy. Publications Office of the European Union. https://doi.org/10.2760/707591

Manning, L. A., Dalton, A. G., Afif, Z., Vakis, R., & Naru, F. (2020). Behavioral science around the world: Volume two: Profiles of 17 international organizations (Report No. 153337). World Bank. Retrieved from http://documents.worldbank.org/curated/en/453911601273837739/Behavioral-Science-Around-the-World-Volume-Two-Profiles-of-17-International-Organizations

Mattsson, J., Hedström, A., Viklander, M., & Blecken, G.-T. (2014). Fat, oil, and grease accumulation in sewer systems: Comprehensive survey of experiences of Scandinavian municipalities. Journal of Environmental Engineering, 140(3), Article 04014003. https://doi.org/10.1061/(asce)ee.1943-7870.0000813

McGann, M., Blomkamp, E., & Lewis, J. M. (2018). The rise of public sector innovation labs: Experiments in design thinking for policy. Policy Sciences, 51, 249–267. https://doi.org/10.1007/s11077-018-9315-7

Mead, L. M. (2015). Only connect: Why government often ignores research. Policy Sciences, 48, 257–272. https://doi.org/10.1007/s11077-015-9216-y

Meder, B., Fleischhut, N., & Osman, M. (2018). Beyond the confines of choice architecture: A critical analysis. Journal of Economic Psychology, 68, 36–44. https://doi.org/10.1016/j.joep.2018.08.004

Mendel, J. (2018). Unpublished policy trials, the risk of false discoveries and the persistence of authority-based policy. Evidence & Policy: A Journal of Research, Debate and Practice, 14(2), 323–334. https://doi.org/10.1332/174426416x14779397727921

Merkelbach, I., Dewies, M., Noordzij, G., & Denktaş, S. (2021). No pyro, no party: Social factors, deliberate choices, and shared fan culture determine the use of illegal fireworks in a soccer stadium [version 1; peer review: awaiting peer review]. F1000Research. https://doi.org/10.12688/f1000research.53245.1

Michie, S., van Stralen, M. M., & West, R. (2011). The behaviour change wheel: A new method for characterising and designing behaviour change interventions. Implementation Science, 6, Article 42. https://doi.org/10.1186/1748-5908-6-42

Morgan, D. L. (2007). Paradigms lost and pragmatism regained: Methodological implications of combining qualitative and quantitative methods. Journal of Mixed Methods Research, 1(1), 48–76. https://doi.org/10.1177/2345678906292462

Morgan, D. L. (2014). Pragmatism as a paradigm for social research. Qualitative Inquiry, 20(8), 1045–1053. https://doi.org/10.1177/1077800413513733

Mukherjee, I., & Giest, S. (2020). Behavioural Insights Teams (BITs) and policy change: An exploration of impact, location, and temporality of policy advice. Administration & Society, 52(10), 1538–1561. https://doi.org/10.1177/0095399720918315

Muramatsu, R., & Barbieri, F. (2017). Behavioral economics and Austrian economics: Lessons for policy and the prospects of nudges. Journal of Behavioral Economics for Policy, 1(1), 73–78.

Netherlands Scientific Council for Government Policy. (2014). Met kennis van gedrag beleid maken (Report No. 92). Wetenschappelijke Raad voor het Regeringsbeleid. Retrieved from https://www.wrr.nl/publicaties/rapporten/2014/09/10/met-kennis-van-gedrag-beleid-maken

Nilsen, P. (2015). Making sense of implementation theories, models and frameworks. Implementation Science, 10, Article 53. https://doi.org/10.1186/s13012-015-0242-0

Nørgaard, A. S. (2018). Human behavior inside and outside bureaucracy: Lessons from psychology. Journal of Behavioral Public Administration, 1(1), 1–16. https://doi.org/10.30636/jbpa.11.13

OECD. (2017). Behavioural insights and public policy: Lessons from around the world. OECD Publishing. https://doi.org/10.1787/9789264270480-en

OECD. (2019). Tools and ethics for applied behavioural insights: The BASIC toolkit. OECD Publishing. https://doi.org/10.1787/9ea76a8f-en

Ogden, T., & Fixsen, D. L. (2014). Implementation science: A brief overview and a look ahead. Zeitschrift Für Psychologie, 222(1), 4–11. https://doi.org/10.1027/2151-2604/a000160

Olejniczak, K., Śliwowski, P., & Leeuw, F. (2020). Comparing behavioral assumptions of policy tools: Framework for policy designers. Journal of Comparative Policy Analysis: Research and Practice, 22(6), 498–520. https://doi.org/10.1080/13876988.2020.1808465

Osman, M., Radford, S., Lin, Y., Gold, N., Nelson, W., & Löfstedt, R. (2018). Learning lessons: How to practice nudging around the world. Journal of Risk Research, 24(12), 969–980. https://doi.org/10.1080/13669877.2018.1517127

Pearce, W., & Raman, S. (2014). The new randomised controlled trials (RCT) movement in public policy: Challenges of epistemic governance. Policy Sciences, 47, 387–402. https://doi.org/10.1007/s11077-014-9208-3

Rich, R. F. (1997). Measuring knowledge utilization: Processes and outcomes. Knowledge and Policy, 10, 11–24. https://doi.org/10.1007/bf02912504

Rietzschel, E. F., Nijstad, B. A., & Stroebe, W. (2007). Relative accessibility of domain knowledge and creativity: The effects of knowledge activation on the quantity and originality of generated ideas. Journal of Experimental Social Psychology, 43(6), 933–946. https://doi.org/10.1016/j.jesp.2006.10.014

Rogers, E., & Weber, E. P. (2010). Thinking harder about outcomes for collaborative governance arrangements. The American Review of Public Administration, 40(5), 546–567. https://doi.org/10.1177/0275074009359024

Sanders, M., Snijders, V., & Hallsworth, M. (2018). Behavioural science and policy: Where are we now and where are we going? Behavioural Public Policy, 2(2), 144–167. https://doi.org/10.1017/bpp.2018.17

Strassheim, H. (2019). Behavioural mechanisms and public policy design: Preventing failures in behavioural public policy. Public Policy and Administration, 36(2), 187–204. https://doi.org/10.1177/0952076719827062

Strassheim, H. (2021). Who are behavioural public policy experts and how are they organised globally? Policy & Politics, 49(1), 69–86. https://doi.org/10.1332/030557320x15956825120821

Strassheim, H., Jung, A., & Korinek, R.-L. (2015). Reframing expertise: The rise of behavioural insights and interventions in public policy. In A. Berthoin Antal, M. Hutter, & D. Stark (Eds.), Moments of valuation: Exploring sites of dissonance (pp. 249–268). Oxford University Press. https://doi.org/10.1093/acprof:oso/9780198702504.003.0013

Szaszi, B., Palinkas, A., Palfi, B., Szollosi, A., & Aczel, B. (2018). A systematic scoping review of the choice architecture movement: Toward understanding when and why nudges work. Journal of Behavioral Decision Making, 31(3), 355–366. https://doi.org/10.1002/bdm.2035

Thaler, R. H., & Sunstein, C. R. (2008). Nudge: Improving decisions about health, wealth, and happiness. Yale University Press.

Thomas, M. D. (2019). Reapplying behavioral symmetry: Public choice and choice architecture. Public Choice, 180, 11–25. https://doi.org/10.1007/s11127-018-0537-1

Torfing, J. (2019). Collaborative innovation in the public sector: The argument. Public Management Review, 21(1), 1–11. https://doi.org/10.1080/14719037.2018.1430248

Voß, J.-P., & Simons, A. (2018). A novel understanding of experimentation in governance: Co-producing innovations between “lab” and “field.” Policy Sciences, 51, 213–229. https://doi.org/10.1007/s11077-018-9313-9

Ward, V., Tooman, T., Reid, B., Davies, H., & Marshall, M. (2021). Embedding researchers into organisations: A study of the features of embedded research initiatives. Evidence & Policy: A Journal of Research, Debate and Practice. https://doi.org/10.1332/174426421X16165177580453

Weaver, R. K. (2015). Getting people to behave: Research lessons for policy makers. Public Administration Review, 75(6), 806–816. https://doi.org/10.1111/puar.12412

Whitehead, M., Jones, R., Lilley, R., Howell, R., & Pykett, J. (2018). Neuroliberalism: Cognition, context, and the geographical bounding of rationality. Progress in Human Geography, 43(4), 632–649. https://doi.org/10.1177/0309132518777624

Whitehead, M., Jones, R., Lilley, R., Pykett, J., & Howell, R. (2017). Neuroliberalism: Behavioural government in the twenty-first century. Routledge.

World Bank. (2015). World Development Report 2015: Mind, society, and behavior. World Bank. https://doi.org/10.1596/978-1-4648-0342-0

Acknowledgements

We thank Astrid Shop-Etman for her contributions to earlier manuscript versions.

Funding

No funding was received to assist with the preparation of this manuscript.

Ethics declarations

Conflicts of interest

The authors have no relevant financial or non-financial interests to disclose.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dewies, M., Denktaş, S., Giel, L. et al. Applying Behavioural Insights to Public Policy: An Example From Rotterdam. Glob Implement Res Appl 2, 53–66 (2022). https://doi.org/10.1007/s43477-022-00036-5

DOI: https://doi.org/10.1007/s43477-022-00036-5