Abstract

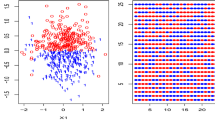

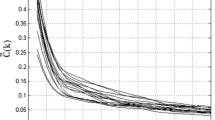

This paper proposes a spatial k-nearest neighbor method for nonparametric prediction of real-valued spatial data and for supervised classification of categorical spatial data. The proposed method is based on a double nearest neighbor rule that combines two kernels to control the distances between observations and between locations. It uses a random bandwidth in order to better adapt to the distribution of the covariates. The almost complete convergence, with rate, of the proposed predictor is established, and the almost sure convergence of the supervised classification rule is deduced. Finite sample properties are illustrated through two applications of the k-nearest neighbor prediction and classification rules, to soil and fisheries datasets.

References

Atteia O, Dubois JP, Webster R (1994) Geostatistical analysis of soil contamination in the swiss jura. Environ Pollut 86:315–327

Biau G, Cadre B (2004) Nonparametric spatial prediction. Stat Inference Stoch Processes 7:327–349

Biau G, Devroye L (2015) Lectures on the nearest neighbor method. Springer

Burba F, Ferraty F, Vieu P (2009) k-nearest neighbour method in functional nonparametric regression. J Nonparametric Stat 21:453–469

Carbon M, Tran LT, Wu B (1997) Kernel density estimation for random fields (density estimation for random fields). Stat Probab Lett 36:115–125

Collomb G (1980) Estimation de la régression par la méthode des k points les plus proches avec noyau: quelques propriétés de convergence ponctuelle. Statistique non Paramétrique Asymptotique, 159–175

Cressie N, Wikle CK (2015) Statistics for spatio-temporal data. Wiley

Dabo-Niang S, Kaid Z, Laksaci A (2012) Spatial conditional quantile regression: weak consistency of a kernel estimate. Rev Roumaine Math Pures Appl 57:311–339

Dabo-Niang S, Ternynck C, Yao AF (2016) Nonparametric prediction of spatial multivariate data. J Nonparametric Stat 28:428–458

Dabo-Niang S, Yao AF (2007) Kernel regression estimation for continuous spatial processes. Math Methods Stat 16:298–317

Deo CM (1973) A note on empirical processes of strong-mixing sequences. Ann Probab 870–875

Devroye L, Gyorfi L, Krzyzak A, Lugosi G (1994) On the strong universal consistency of nearest neighbor regression function estimates. Ann Stat 1371–1385

Devroye L, Wagner TJ (1982) 8 nearest neighbor methods in discrimination. Handb Stat 2:193–197

Doukhan P (1994) Mixing: properties and examples. Lecture Notes in Statistics, vol 85. Springer-Verlag, New York. https://doi.org/10.1007/978-1-4612-2642-0

Durocher M, Burn DH, Mostofi Zadeh S, Ashkar F (2019) Estimating flood quantiles at ungauged sites using nonparametric regression methods with spatial components. Hydrol Sci J

Fan Z, Xie Jk, Wang Zy, Liu PC, Qu Sj, Huo L (2021) Image classification method based on improved knn algorithm, In: Journal of Physics: Conference Series, IOP Publishing. p 012009

Ferraty F, Vieu P (2006) Nonparametric functional data analysis: theory and practice. Springer Science & Business Media

García-Soidán P, Cotos-Yáñez TR (2020) Use of correlated data for nonparametric prediction of a spatial target variable. Mathematics 8:2077

Goovaerts P (1998) Ordinary cokriging revisited. Math Geol 30:21–42

Gyorfi L, Lugosi G, Devroye L (1996) A probabilistic theory of pattern recognition

Hallin M, Lu Z, Tran LT (2004) Local linear spatial regression. Ann Stat 32:2469–2500

Hastie T, Tibshirani R (1996) Discriminant adaptive nearest neighbor classification and regression, In: Advances in neural information processing systems, pp 409–415

Hengl T, Heuvelink GB, Stein A (2003) Comparison of kriging with external drift and regression kriging. ITC Enschede, The Netherlands

Ibragimov IA, Linnik YV (1971) Independent and stationary sequences of random variables. Wolters-Noordhoff Publishing, Groningen. With a supplementary chapter by I. A. Ibragimov and V. V. Petrov, Translation from the Russian edited by J. F. C. Kingman

Kudraszow NL, Vieu P (2013) Uniform consistency of knn regressors for functional variables. Stat Probab Lett 83:1863–1870

Li J, Tran LT (2009) Nonparametric estimation of conditional expectation. J Stat Plann Inference 139:164–175

Li W, Zhang C, Tsung F, Mei Y (2020) Nonparametric monitoring of multivariate data via knn learning. Int J Prod Res 59:1–16

Menezes R, Garcia-Soidan P, Ferreira C (2010) Nonparametric spatial prediction under stochastic sampling design. J Nonparametric Stat 22:363–377

Muller S, Dippon J (2011) k-nn kernel estimate for nonparametric functional regression in time series analysis. Fachbereich Mathematik, Fakultat Mathematik und Physik (Pfaffenwaldring 57) 14

Oufdou H, Bellanger L, Bergam A, Khomsi K (2021) Forecasting daily of surface ozone concentration in the grand casablanca region using parametric and nonparametric statistical models. Atmosphere 12:666

Paredes R, Vidal E (2006) Learning weighted metrics to minimize nearest-neighbor classification error. IEEE Transact Pattern Anal Mach Intell 1100–1110

Priambodo B, Ahmad A, Kadir RA (2021) Spatio-temporal knn prediction of traffic state based on statistical features in neighbouring roads. J Intell Fuzzy Syst 40(5):9059–9072

Robinson PM (2011) Asymptotic theory for nonparametric regression with spatial data. J Econ 165:5–19

Shi C, Wang Y (2021) Nonparametric and data-driven interpolation of subsurface soil stratigraphy from limited data using multiple point statistics. Canad Geotech J 58:261–280

Ternynck C (2014) Spatial regression estimation for functional data with spatial dependency. J. SFdS 155:138–160

Tran LT (1990) Kernel density estimation on random fields. J Multivar Anal 34:37–53

Wang H, Wang J (2009) Estimation of the trend function for spatio-temporal models. J Nonparametric Stat 21:567–588

Younso A (2017) On the consistency of a new kernel rule for spatially dependent data. Stat Probab Lett 131:64–71

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Appendix

We start with the following technical lemmas, which help to handle the difficulties induced by the random bandwidth \(H_{\textbf{n},x}\) in \(r_{\textrm{kNN}}(x)\). They are adaptations of the results given in Collomb (1980) (for independent multivariate data) and of their generalized versions by Burba et al. (2009) and Kudraszow and Vieu (2013) (for independent functional data).
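To make the role of the random bandwidth concrete, here is a minimal Python sketch of a double-kernel kNN predictor. It is an illustration under stated assumptions, not the authors' implementation: the predictor is assumed to be the kernel ratio suggested by the sums appearing in conditions \((L_{2})\) and \((L_{2}^{'})\), the bandwidth \(H_{\textbf{n},x}\) is assumed to be the distance from x to its k-th nearest covariate among the sites in the spatial vicinity of \(\mathbf {s_0}\), and the kernel shape and the names `kernel` and `knn_spatial_predict` are hypothetical.

```python
import numpy as np

def kernel(u):
    """Epanechnikov-type kernel on [0, 1); an illustrative choice, not the paper's."""
    u = np.asarray(u, dtype=float)
    return np.where(u < 1.0, 0.75 * (1.0 - u**2), 0.0)

def knn_spatial_predict(x, s0, X, Y, sites, n, k, h):
    """Double-kernel kNN prediction at covariate value x and site s0 (sketch).

    X: (m, d) covariates, Y: (m,) responses, sites: (m, N) grid indices,
    n: (N,) grid sizes, k: number of neighbors (k >= 2), h: spatial bandwidth.
    """
    X, Y = np.asarray(X, float), np.asarray(Y, float)
    sites, s0, n = np.asarray(sites, float), np.asarray(s0, float), np.asarray(n, float)
    keep = np.any(sites != s0, axis=1)              # leave out the site s0 itself
    X, Y, sites = X[keep], Y[keep], sites[keep]
    d_cov = np.linalg.norm(X - np.asarray(x, float), axis=1)   # ||x - X_i||
    d_loc = np.linalg.norm((sites - s0) / n, axis=1)           # ||(s0 - i) / n||
    near = d_loc < h                                # sites with positive spatial weight
    if near.sum() < k:
        raise ValueError("fewer than k sites in the spatial vicinity of s0")
    H = np.sort(d_cov[near])[k - 1]                 # assumed random bandwidth: k-th nearest distance
    w = kernel(d_cov / H) * kernel(d_loc / h)       # K1 * K2 weights
    return float(np.sum(w * Y) / np.sum(w))
```

Because the bandwidth `H` is computed from the data, it is random, which is why the lemmas below bracket it between deterministic sequences. The prediction is a weighted average of the responses, so it always lies between the smallest and largest observed value.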

1.1 Technical lemmas

For any positive random variable T, \(\textbf{n}\in \mathbb {N}^{*N}\), and \(x \in D\), we define

Let us set the following sequences, for all \(\textbf{n}\in \mathbb {N}^{*N}\)

and for all \(\beta \in ]0,1[\) and \(x \in D\)

where c is the volume of the unit sphere in \(\mathbb {R}^{d}\). It is clear that

Lemma 3

If the following conditions are verified:

- \((L_{1})\): \(\displaystyle \mathbb {I}_{\left\{ D_{\textbf{n}}^{-} (\beta ,x)\le H_{\textbf{n},x}\le D_{\textbf{n}}^{+}(\beta ,x),\, \forall x \in D \right\} } \;\longrightarrow 1 \quad a.co.\)

- \((L_{2})\): \(\displaystyle \sup _{x \in D}\left| \frac{\sum _{\textbf{i}\in \mathcal {I}_{\textbf{n}},\mathbf {s_0}\ne \textbf{i}}K_{1}\left( \frac{x-X_{\textbf{i}}}{D_{\textbf{n}}^{-}(\beta ,x)}\right) K_{2}\left( h_{\textbf{n},\mathbf {s_0}}^{-1}\left\| \frac{\mathbf {s_0}-\textbf{i}}{\textbf{n}}\right\| \right) }{\sum _{\textbf{i}\in \mathcal {I}_{\textbf{n}},\mathbf {s_0}\ne \textbf{i}}K_{1}\left( \frac{x-X_{\textbf{i}}}{D_{\textbf{n}}^{+}(\beta ,x)}\right) K_{2}\left( h_{\textbf{n},\mathbf {s_0}}^{-1}\left\| \frac{\mathbf {s_0}-\textbf{i}}{\textbf{n}}\right\| \right) }-\beta \right| \longrightarrow 0\quad a.co.\)

- \((L_{3})\): \(\displaystyle \sup _{x \in D}\left| c_{\textbf{n}}\left( D_{\textbf{n}}^{-}(\beta ,x)\right) -r(x)\right| \longrightarrow 0\quad a.co.\), \(\displaystyle \sup _{x\in D} \left| c_{\textbf{n}}\left( D_{\textbf{n}}^{+}(\beta ,x)\right) -r(x) \right| \longrightarrow 0\quad a.co.\),

then we have \(\displaystyle \sup _{x \in D} |c_{\textbf{n}}\left( H_{\textbf{n},x}\right) - r(x)|\longrightarrow 0\qquad a.co.\)

Lemma 4

Under the following conditions:

- \((L_{1})\): \(\displaystyle \mathbb {I}_{\left\{ D_{\textbf{n}}^{-} (\beta ,x)\le H_{\textbf{n},x}\le D_{\textbf{n}}^{+}(\beta ,x),\, \forall x \in D \right\} } \;\longrightarrow 1 \quad a.co.\)

- \((L_{2}^{'})\): \(\displaystyle \sup _{x \in D}\left| \frac{\sum _{\textbf{i}\in \mathcal {I}_{\textbf{n}},\mathbf {s_0}\ne \textbf{i}}K_{1}\left( \frac{x-X_{\textbf{i}}}{D_{\textbf{n}}^{-}(\beta ,x)}\right) K_{2}\left( h_{\textbf{n},\mathbf {s_0}}^{-1}\left\| \frac{\mathbf {s_0}-\textbf{i}}{\textbf{n}}\right\| \right) }{\sum _{\textbf{i}\in \mathcal {I}_{\textbf{n}},\mathbf {s_0}\ne \textbf{i}}K_{1}\left( \frac{x-X_{\textbf{i}}}{D_{\textbf{n}}^{+}(\beta ,x)}\right) K_{2}\left( h_{\textbf{n},\mathbf {s_0}}^{-1}\left\| \frac{\mathbf {s_0}-\textbf{i}}{\textbf{n}}\right\| \right) }-\beta \right| =\mathcal {O}(v_{\textbf{n}}) \qquad a.co.\)

- \((L_{3}^{'})\): \(\displaystyle \sup _{x \in D}\left| c_{\textbf{n}}\left( D_{\textbf{n}}^{-}(\beta ,x)\right) -r(x)\right| =\mathcal {O}(v_{\textbf{n}}) \qquad a.co.\), \(\displaystyle \sup _{x\in D} \left| c_{\textbf{n}}\left( D_{\textbf{n}}^{+}(\beta ,x)\right) -r(x) \right| =\mathcal {O}(v_{\textbf{n}})\qquad a.co.\),

we have, \(\displaystyle \sup _{x \in D}\left| c_{\textbf{n}}\left( H_{\textbf{n},x}\right) - r(x)\right| =\mathcal {O}(v_{\textbf{n}})\qquad a.co.\)

The proof of Lemma 4 is similar to that of the Lemma in Collomb (1980) (page 162) and Lemma 3 in Burba et al. (2009) (page 1866). Therefore, it is omitted. Lemma 3 is a particular case of Lemma 4 when we take \(v_{\textbf{n}}=1\).
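Although the proof is omitted, the mechanism behind Lemmas 3 and 4 can be sketched in the spirit of Collomb (1980). The sketch rests on assumptions that are not visible in the text above: that \(c_{\textbf{n}}(T)\) is the kernel ratio with bandwidth T, that \(Y_{\textbf{i}}\ge 0\), and that \(K_{1}\) is nonincreasing in the norm of its argument, so that the two sums below are nondecreasing in T. The shorthand \(A_{\textbf{n}}\), \(B_{\textbf{n}}\), \(K_{2,\textbf{i}}\) and \(\rho _{\textbf{n}}\) is hypothetical.

$$\begin{aligned} A_{\textbf{n}}(T)=\sum _{\textbf{i}\in \mathcal {I}_{\textbf{n}},\mathbf {s_0}\ne \textbf{i}}Y_{\textbf{i}}K_{1}\left( \frac{x-X_{\textbf{i}}}{T}\right) K_{2,\textbf{i}},\qquad B_{\textbf{n}}(T)=\sum _{\textbf{i}\in \mathcal {I}_{\textbf{n}},\mathbf {s_0}\ne \textbf{i}}K_{1}\left( \frac{x-X_{\textbf{i}}}{T}\right) K_{2,\textbf{i}},\qquad c_{\textbf{n}}(T)=\frac{A_{\textbf{n}}(T)}{B_{\textbf{n}}(T)}. \end{aligned}$$

On the event \(\{D_{\textbf{n}}^{-}(\beta ,x)\le H_{\textbf{n},x}\le D_{\textbf{n}}^{+}(\beta ,x)\}\) of condition \((L_{1})\), monotonicity gives \(A_{\textbf{n}}(D_{\textbf{n}}^{-})\le A_{\textbf{n}}(H_{\textbf{n},x})\le A_{\textbf{n}}(D_{\textbf{n}}^{+})\) and the same ordering for \(B_{\textbf{n}}\), hence

$$\begin{aligned} \rho _{\textbf{n}}\, c_{\textbf{n}}\left( D_{\textbf{n}}^{-}\right) \le c_{\textbf{n}}\left( H_{\textbf{n},x}\right) \le \frac{c_{\textbf{n}}\left( D_{\textbf{n}}^{+}\right) }{\rho _{\textbf{n}}},\qquad \rho _{\textbf{n}}=\frac{B_{\textbf{n}}\left( D_{\textbf{n}}^{-}\right) }{B_{\textbf{n}}\left( D_{\textbf{n}}^{+}\right) }. \end{aligned}$$

Under \((L_{2})\) and \((L_{3})\), \(\rho _{\textbf{n}}\) approaches \(\beta\) and both \(c_{\textbf{n}}(D_{\textbf{n}}^{\pm })\) approach \(r(x)\), so \(c_{\textbf{n}}(H_{\textbf{n},x})\) is eventually trapped between \(\beta r(x)\) and \(r(x)/\beta\) up to vanishing terms. If the conditions hold for every \(\beta \in ]0,1[\), letting \(\beta\) tend to 1 gives the conclusion. A signed response would typically be handled by splitting \(Y_{\textbf{i}}\) into its positive and negative parts.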

1.2 Proofs of Lemma 1 and Lemma 2

Since the proof of Lemma 1 is based on the result of Lemma 3, it is sufficient to check conditions \((L_{1})\), \((L_{2})\) and \((L_{3})\). For the proof of Lemma 2, it suffices to check conditions \((L_{2}')\) and \((L_{3}')\).

To check the condition \((L_1 )\), we need the following two lemmas.

Lemma 5

(Ibragimov and Linnik (1971) or Deo (1973))

- i) We assume that the mixing condition (5) is satisfied. We denote by \(\mathcal {L}_{r}\left( \mathcal {F}\right)\) the class of \(\mathcal {F}\)-measurable random variables X satisfying

$$\begin{aligned} \Vert X\Vert _{r}:=\left( E\left( |X|^{r}\right) \right) ^{1/r}< \infty . \end{aligned}$$

Let \(X\in \mathcal {L}_{r}\left( \mathcal {B}(E)\right)\), \(Y\in \mathcal {L}_{s}\left( \mathcal {B}(E')\right)\) and \(1\le r,s,t\le \infty\) such that \(\frac{1}{r}+\frac{1}{s}+\frac{1}{t}=1\), then

$$\begin{aligned} |\textrm{Cov}(X,Y)|\le \Vert X\Vert _{r}\Vert Y\Vert _{s}\left\{ \psi \left( \mathrm {Card(E),Card(E^{'})}\right) \varphi \left( \textrm{dist}(E,E^{'})\right) \right\} ^{1/t}. \end{aligned} \tag{17}$$

- ii) For random variables X, Y bounded with probability 1, we have

$$\begin{aligned} |\textrm{Cov}(X,Y)|\le C\psi \left( \mathrm {Card(E),Card(E^{'})}\right) \varphi \left( \textrm{dist}(E,E^{'})\right) . \end{aligned} \tag{18}$$

Lemma 6

Under the assumptions of Theorem 1, we have

where

\(B(x,\varepsilon )\) denotes the closed ball of \(\mathbb {R}^{d}\) with center x and radius \(\varepsilon\).

1.3 Proof of Lemma 6

Let \(\delta _{\textbf{n},\textbf{i}}=\mathbb {P}\left( \Vert X_{\textbf{i}}-x\Vert < D_{\textbf{n}}\right)\), we can deduce that

by the following results.

Firstly, under the Lipschitz condition of f (assumption (H1)), we have

Secondly

Thus, the local stationarity assumption (H8) implies

Now for \(\displaystyle R_{\textbf{n}}\), it should be noted that by (H5) and for each \(\textbf{j}\ne \textbf{i}\)

since by (19)

Using Lemma 5 and (19), we can write for \(\displaystyle r=s= 4\)

Let \(q_{\textbf{n}}\) be a sequence of real numbers defined by \(\displaystyle q_{\textbf{n}}^{N}=\mathcal {O}\left( \frac{k^{'}_{\textbf{n}}}{k_\textbf{n}}\right)\). Using the latter, we define \(\displaystyle S=\{ \textbf{i},\textbf{j}\in \mathcal {V}_{\mathbf {s_0}},\; 0<\Vert \textbf{i}-\textbf{j}\Vert \le q_{\textbf{n}}\}\) and \(S^{c}\) its complement in \(\mathcal {V}_{\mathbf {s_0}}\), and rewrite

Firstly, according to the definitions of \(q_{\textbf{n}}\) and S, and equation (22), we have

since \(\displaystyle \delta _{\textbf{n}}=\mathcal {O}(q_{\textbf{n}}^{-N})\) by (19).

Secondly, by (6) and (23), we get

because under assumptions (H6) and (H7), we have \(\theta >(1+\frac{2\gamma }{\gamma -\tilde{\gamma }})N\), thus

Finally, the result follows:

1.4 Verification of \((L_{1})\)

Let \(\varepsilon _\textbf{n}=\frac{1}{2} \varepsilon _0\left( k_\textbf{n}/k^{'}_{\textbf{n}}\right) ^{1/d}\) with \(\varepsilon _{0}>0\) and let \(N_{\varepsilon _\textbf{n}}=\mathcal {O}(\varepsilon _{\textbf{n}}^{-d})\) be a positive integer. Since D is compact, one can cover it by \(N_{\varepsilon _\textbf{n}}\) closed balls in \(\mathbb {R}^d\) of centers \(x_i \in D, \; i=1,\ldots , N_{\varepsilon _\textbf{n}}\) and radius \(\varepsilon _\textbf{n}\). Let us show that

which can be written as, \(\forall \; \eta > 0\),

We have

Let us evaluate the first term on the right-hand side of (24); to lighten the notation, we drop the index i in \(x_i\). As justified below,

where \(\displaystyle \xi _{\textbf{i}}=\Lambda _\textbf{i}-\delta _{\textbf{n},\textbf{i}}\) is centered and \(\Lambda _\textbf{i}\) is defined in Lemma 6 with \(D_{\textbf{n}}\) replaced by \(D_{\textbf{n}}^{-}+2\varepsilon _\textbf{n}\). From (25), we get (26) by (21), while (26) yields (27) with the help of the following.

Actually, according to the definition of \(D_{\textbf{n}}^{-}\) in (16) and replacing \(D_{\textbf{n}}\) by \(D_{\textbf{n}}^{-}+2\varepsilon _\textbf{n}\) in (19), we get

therefore, for all \(\varepsilon _{1}>0\),

Then, for \(\varepsilon _1\) and \(\varepsilon _0\) small enough such that \(1-\left( \varepsilon _0 (cf(x))^{1/d} + \beta ^{1/2d}\right) ^{d} - \varepsilon _1>0\), we can find some constant \(C>0\) such that

For the second term in the right-hand side of (24),

where \(\displaystyle \Delta _{\textbf{i}}=\delta _{\textbf{n},\textbf{i}}-\Lambda _{\textbf{i}}\) is centered and \(\Lambda _\textbf{i}\) is defined in Lemma 6 replacing \(D_{\textbf{n}}\) by \(D_{\textbf{n}}^{+} - 2\varepsilon _\textbf{n}\). Result (31) is obtained by (21) while that of (32) is obtained by replacing \(D_{\textbf{n}}\) in (19) by \(D_{\textbf{n}}^{+}-2\varepsilon _\textbf{n}\). Then, we get

Thus for all \(\varepsilon _{2}>0\), it is easy to see that

so for \(\varepsilon _{2}\) and \(\varepsilon _0\) small enough such that \(\left( \left( \beta ^{-1/2d}-\varepsilon _0 (cf(x))^{1/d}\right) ^{d}-1-\varepsilon _{2}\right) >0\), there exists \(C>0\) such that

Now, it suffices to prove that

1.4.1 Let us consider \(P_{1,\textbf{n}}\)

This proof is based on the classical spatial block decomposition of the sum of the \(\xi _{\textbf{i}}\) over \(\mathcal {V}_{\mathbf {s_0}}\), similar to that of Tran (1990, page 44). Let \(\mathcal {G}_\textbf{n}\subset \mathcal {I}_{\textbf{n}}\) be the smallest rectangular grid with center \(\mathbf {s_0}\) containing \(\mathcal {V}_{\mathbf {s_0}}\). Without loss of generality, we assume that \(\mathcal {G}_\textbf{n}\) is defined via some \(\textbf{k}=(k_1,\ldots ,k_N)\) where \(1\le k_j\le n_j, j=1,\ldots ,N\). By construction, \(\mathcal {G}_\textbf{n}\) has cardinality \(\hat{\textbf{k}}=k_1\times \cdots \times k_N\) satisfying \(k^{'}_{\textbf{n}}=\mathcal {O}(\hat{\textbf{k}})\). In addition, we assume that \(k_{l}=2bq_{l} \; ,\; l=1,\ldots ,N\), where \(q_{l}\) and b are positive integers. The decomposition can then be presented as follows.

Note that

and that

For each integer \(1\le l \le 2^{N}\), let

Therefore, we have

It follows that

We enumerate in an arbitrary manner the \(\hat{\textbf{q}}=q_{1}\times \ldots \times q_{N}\) terms \(\displaystyle U(1,\textbf{k},\textbf{j})\) of the sum \(T(\textbf{k},1)\) and denote them \(\displaystyle W_{1},\ldots ,W_{\hat{\textbf{q}}}\). Notice that, \(\displaystyle U(1,\textbf{k},\textbf{j})\) is measurable with respect to the field generated by the \(Z_{\textbf{i}}\) with \(\textbf{i}\in \textbf{I}(\textbf{k},\textbf{j})=\{ \textbf{i}\in \mathcal {G}_{\textbf{n}} \; | \;2j_{l}b+1\le i_{l}\le (2j_{l}+1)b ,\;l=1,\ldots ,N\}\), the set \(\textbf{I}(\textbf{k},\textbf{j})\) contains \(b^{N}\) sites and \(\textrm{dist}(\textbf{I}(\textbf{k},\textbf{j}),\textbf{I}(\textbf{k},\textbf{j}^{'}))>b\). In addition, we have \(\mid W_{l}\mid \le b^{N}.\)

According to Lemma 4.5 of Carbon et al. (1997), one can find a sequence of independent random variables \(\displaystyle W_{1}^{*},\ldots ,W_{\hat{\textbf{q}}}^{*}\) where \(W_{l}\) has the same distribution as \(W_{l}^{*}\) and:

Then, we can write

Let \(P_{11,\textbf{n}}=\mathbb {P}\left( \sum _{l=1}^{\hat{\textbf{q}}}\mid W_{l}-W_{l}^{*}\mid >\frac{Ck_\textbf{n}(1-\sqrt{\beta })}{2^{N+1}}\right)\) and \(P_{12,\textbf{n}}=\mathbb {P}\left( \sum _{l=1}^{\hat{\textbf{q}}}\mid W_{l}^{*}\mid >\frac{Ck_\textbf{n}(1-\sqrt{\beta })}{2^{N+1}}\right) .\) It suffices to show that \(\displaystyle \sum _{\textbf{n}\in \mathbb {N}^{*N}}P_{11,\textbf{n}}<\infty\) and \(\displaystyle \sum _{\textbf{n}\in \mathbb {N}^{*N}}P_{12,\textbf{n}}<\infty\).
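The geometry of the blocks \(\textbf{I}(\textbf{k},\textbf{j})\) described above can be checked on a small example. The Python sketch below is only an illustration: it assumes that the block index \(\textbf{j}\) ranges over \(0\le j_{l}\le q_{l}-1\) (consistent with the \(\hat{\textbf{q}}\) terms mentioned in the text, but not stated explicitly), and the helper names are hypothetical.

```python
import itertools
import numpy as np

def block_sites(j, b):
    """Sites of I(k, j): 2*j_l*b + 1 <= i_l <= (2*j_l + 1)*b in every coordinate l."""
    ranges = [range(2 * jl * b + 1, (2 * jl + 1) * b + 1) for jl in j]
    return np.array(list(itertools.product(*ranges)))

def all_blocks(q, b):
    """All blocks indexed by j, assuming 0 <= j_l <= q_l - 1."""
    return {j: block_sites(j, b) for j in itertools.product(*[range(ql) for ql in q])}

def min_distance(A, B):
    """Smallest Euclidean distance between a site of A and a site of B."""
    diff = A[:, None, :] - B[None, :, :]
    return np.sqrt((diff**2).sum(axis=-1)).min()

# Example with N = 2, b = 2 and q = (2, 3), i.e. a grid with k = (8, 12).
blocks = all_blocks((2, 3), 2)
sizes = {len(sites) for sites in blocks.values()}
gaps = [min_distance(blocks[a], blocks[c]) for a in blocks for c in blocks if a != c]
print(len(blocks), sizes, min(gaps))
```

Each block holds \(b^{N}\) sites, and two distinct blocks are separated by at least \(b+1\) in some coordinate, which is what allows the mixing condition to be applied across blocks.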

1.4.2 Let us consider first \(P_{11,\textbf{n}}\)

Using Markov’s inequality, we get

because \(\hat{\textbf{k}}=2^N\hat{\textbf{q}}b^{N}\) by definition and \(k^{'}_{\textbf{n}}=\mathcal {O}(\hat{\textbf{k}})\).

Let us consider that

where \(a=2+(2+s(2-\tilde{\gamma }))d+s(4+2\tilde{\beta }+2\gamma -3\tilde{\gamma })\). Under the assumption on the function \(\psi (n,m)\), we distinguish the following two cases:

Case 1

In this case, we have

Then by using (36) and the definition of \(N_{\varepsilon _\textbf{n}}\), we have

One can show that \(a>2(3+s(2\tilde{\beta }-1))\) and then \(\displaystyle \sum _{\textbf{n}\in \mathbb {N}^{*N}}N_{\varepsilon _\textbf{n}}P_{11,\textbf{n}}<\infty\).

Case 2

\(2<s<\frac{1}{1-\tilde{\gamma }}\). In this case, we have

Then, it follows that \(\displaystyle \sum _{\textbf{n}\in \mathbb {N}^{*N}}N_{\varepsilon _\textbf{n}}P_{11,\textbf{n}}<\infty\) when \(\tilde{\beta }<1/\gamma\).

1.4.3 Let us consider \(P_{12,\textbf{n}}\)

Applying Markov’s inequality, we have for \(t>0\):

since the variables \(W_{1}^{*},\ldots ,W_{\hat{\textbf{q}}}^{*}\) are independent.

Let \(r>0\), for \(\displaystyle t=\frac{r\log (\hat{\textbf{n}})}{k_\textbf{n}}\), \(l=1,\ldots ,\hat{\textbf{q}}\), by using (36), we can easily get

where \(\tilde{a} = a\tilde{\gamma } -2(1-s(1-\tilde{\gamma }))>0\). Moreover, we have \(t\mid W_{l}^{*}\mid <1\) for \(\textbf{n}\) large enough.
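The next step relies on the elementary inequality \(e^{u}\le 1+u+u^{2}\), valid for \(|u|\le 1\) and applied with \(u=tW_{l}^{*}\). As a sanity check that is not part of the original proof, the inequality can be verified numerically on a fine grid; the function name is hypothetical.

```python
import numpy as np

def exp_quadratic_gap(u):
    """Return 1 + u + u**2 - exp(u), which is nonnegative whenever |u| <= 1."""
    u = np.asarray(u, dtype=float)
    return 1.0 + u + u**2 - np.exp(u)

grid = np.linspace(-1.0, 1.0, 2001)
gap = exp_quadratic_gap(grid)
print("bound holds on [-1, 1]:", bool(np.all(gap >= -1e-12)))
print("gap at u = 3:", float(exp_quadratic_gap(3.0)))  # negative: the bound fails for large u
```

The second line of output shows why the restriction \(t\mid W_{l}^{*}\mid <1\) is needed before the bound is applied.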

So, \(\exp \left( t W_{l}^{*}\right) \le 1+tW_{l}^{*}+t^{2} W_{l}^{*2}\) then

Therefore,

As \(W_{l}^{*}\) and \(W_{l}\) have the same distribution, we have

From Lemma 6, we obtain

because \(\log (\hat{\textbf{n}})^{2}/k_\textbf{n} \rightarrow 0\) as \(\textbf{n}\rightarrow \infty .\)

Then, we deduce that

Then, we have

Therefore, for some \(r>0\) such that \(\displaystyle \frac{rC(1-\sqrt{\beta })}{2^{N+1}}\tilde{\gamma }-\gamma >1\), we get

By combining the two results on \(P_{11,\textbf{n}}\) and \(P_{12,\textbf{n}}\), we get \(\displaystyle \sum _{\textbf{n}\in \mathbb {N}^{*N}} N_{\varepsilon _\textbf{n}}P_{1,\textbf{n}}<\infty\).

Similar arguments give \(\sum _{\textbf{n}\in \mathbb {N}^{*N}} N_{\varepsilon _\textbf{n}}P_{2,\textbf{n}}<\infty\).
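The covering step that opens this verification, in which the compact set D is covered by \(N_{\varepsilon _\textbf{n}}=\mathcal {O}(\varepsilon _{\textbf{n}}^{-d})\) closed balls of radius \(\varepsilon _\textbf{n}\), can be illustrated with a simple grid construction. The Python sketch below assumes, purely for illustration, that D is the unit cube \([0,1]^{d}\); the function name is hypothetical.

```python
import itertools
import math
import numpy as np

def cover_unit_cube(eps, d):
    """Centers of closed balls of radius eps that cover [0, 1]^d (grid construction)."""
    m = math.ceil(math.sqrt(d) / (2.0 * eps))   # cells per axis
    side = 1.0 / m                               # cell side, at most 2*eps/sqrt(d)
    axis = (np.arange(m) + 0.5) * side           # cell midpoints along one axis
    return np.array(list(itertools.product(axis, repeat=d)))

# Every point of a cell lies within side*sqrt(d)/2 <= eps of the cell midpoint.
centers = cover_unit_cube(0.1, 2)
rng = np.random.default_rng(1)
points = rng.random((500, 2))
nearest = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=-1).min(axis=1)
print(len(centers), bool(np.all(nearest <= 0.1)))
```

The number of balls is \(m^{d}\) with m of order \(\varepsilon ^{-1}\), which matches the \(\mathcal {O}(\varepsilon ^{-d})\) count used in the union bound over the centers \(x_i\).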

The verification of conditions \((L_2)\), \((L_3)\), \((L^{'}_{2})\) and \((L^{'}_{3})\) is based on Theorem 3.1 in Dabo-Niang et al. (2016). We need to show that \(D_{\textbf{n}}^{-}(\beta ,x)\), \(D_{\textbf{n}}^{+}(\beta ,x)\) satisfy assumptions (H6) and (H7) used by these authors for all \((\beta ,x) \in ]0,1[ \times D\). This is proved in the following lemmas where, without ambiguity, \(D_{\textbf{n}}\) denotes \(D_{\textbf{n}}^{-}(\beta ,x)\) or \(D_{\textbf{n}}^{+}(\beta ,x)\).

Lemma 7

Under assumptions (H2) and (H6) on \(\psi (.)\), we have

with

and \(\displaystyle u_{\textbf{n}}=\prod _{i=1}^{N}\left( \log (\log (n_{i}))\right) ^{1+\varepsilon }\log (n_{i})\) for all \(\varepsilon >0\).

1.5 Proof of Lemma 7

By the definition of \(D_{\textbf{n}}\) in Lemma 6, hypotheses (H2) and (H6), we have

Note that \(u_{\textbf{n}}\le \log (\tilde{n})^{N(2+\varepsilon )} \Rightarrow \frac{1}{u_{\textbf{n}}^{\theta _3}}\ge \frac{1}{\log (\tilde{n})^{(2+\varepsilon )N\theta _3}}\), where \(\displaystyle \tilde{n}=\max _{k=1,\ldots ,N}n_{k}\), and

Since \(\displaystyle \frac{n_{k}}{n_{i}}\le C, \; \forall \; 1\le k,i \le N\), we deduce that \(\displaystyle \hat{\textbf{n}}\ge C \tilde{n}^{N}\) and

because \((1-s(1-\tilde{\gamma }))\theta >N\left( (2+s(2-\tilde{\gamma }))d+2s(2+\gamma -\tilde{\gamma })\right)\).
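The two elementary bounds used in this proof can be spelled out as follows. This is a short worked step, assuming that every \(n_{i}\ge 3\) (so that \(\log (\log (n_{i}))>0\)) and that \(\hat{\textbf{n}}=n_{1}\times \cdots \times n_{N}\), which is the usual convention but is not restated in this appendix. Since \(0<\log (\log (n_{i}))\le \log (n_{i})\le \log (\tilde{n})\),

$$\begin{aligned} u_{\textbf{n}}=\prod _{i=1}^{N}\left( \log (\log (n_{i}))\right) ^{1+\varepsilon }\log (n_{i})\le \prod _{i=1}^{N}\left( \log (n_{i})\right) ^{2+\varepsilon }\le \left( \log (\tilde{n})\right) ^{N(2+\varepsilon )}. \end{aligned}$$

Likewise, \(\tilde{n}/n_{i}\le C\) for every i gives \(n_{i}\ge \tilde{n}/C\), hence

$$\begin{aligned} \hat{\textbf{n}}=\prod _{i=1}^{N}n_{i}\ge C^{-N}\tilde{n}^{N}, \end{aligned}$$

which is the bound \(\hat{\textbf{n}}\ge C\tilde{n}^{N}\) of the text with a generic constant C.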

Lemma 8

Under assumptions (H2) and (H7) on \(\psi (.)\), we have

with

The proof of this lemma is the same as that of Lemma 7 and is omitted.

1.6 Verification of \((L_{2})\)

Let

and

Under the hypotheses of Lemma 1 and the results of Lemma 7 and Lemma 8 (Dabo-Niang et al. 2016, pages 447-448), we have

then,

1.7 Verification of \((L_{3})\)

Under the assumptions of Lemma 1 and the results of Lemmas 7 and 8, we have by Theorem 3.1 in Dabo-Niang et al. (2016)

1.8 Proof of Lemma 2

The proof of this lemma is based on the results of Lemma 4. It suffices to check the conditions \((L_{2}')\) and \((L_{3}')\). Arguments similar to those used to prove \((L_2)\) and \((L_3)\) yield the required conditions.

1.9 Verification of \((L_{2}')\)

Under the assumptions of Corollary 1 and Lemmas 7 and 8, we have

We conclude that

1.10 Verification of \((L_{3}')\)

It is relatively easy to deduce from Lemmas 7 and 8 (see Remark 4 in Dabo-Niang et al. 2016) that

This yields the proof.

About this article

Cite this article

Ahmed, MS., N’diaye, M., Attouch, M.K. et al. k-nearest neighbors prediction and classification for spatial data. J Spat Econometrics 4, 12 (2023). https://doi.org/10.1007/s43071-023-00041-2

DOI: https://doi.org/10.1007/s43071-023-00041-2