Abstract

We study a first-order nonlinear partial differential equation and present a necessary and sufficient condition for the global existence of its solution in a non-smooth environment. Using this result, we prove a local existence theorem for a solution to this differential equation. Moreover, we present two applications of this result. The first concerns an inverse problem called the integrability problem in microeconomic theory and the second concerns an extension of Frobenius’ theorem.

1 Introduction

In microeconomic theory, consumer behavior is described by the following parameterized maximization problem.Footnote 1

\[\max \ v(x)\quad \text{subject to}\quad x\ge 0,\ \langle p,x\rangle \le m. \tag{1}\]

The vector \(x=(x_1,...,x_n)\) is called the consumption plan, where \(x_i\ge 0\) denotes the consumption of the i-th commodity. The vector \(p=(p_1,...,p_n)\) denotes the price system, where \(p_i>0\) denotes the price of the i-th commodity. The number \(m>0\) denotes the consumer’s income. Therefore, the last inequality constraint implies that “the cost to buy the consumption vector x does not exceed the income,” and this constraint is called the budget inequality. The objective function v is called the utility function. This function represents the consumer’s preference, and \(v(x)>v(y)\) implies that the consumer prefers x to y. This problem is called the utility maximization problem.

If v is upper semi-continuous, then problem (1) has at least one solution. If, in addition, v is strictly quasi-concave, then problem (1) has at most one solution. If (1) has a unique solution, then we write this solution as \(f^v(p,m)\). The function \(f^v\) is called the demand function corresponding to v.

In consumer theory, v is considered difficult to estimate because it represents the consumer’s preference, which is hidden in the consumer’s mind. On the other hand, \(f^v\) is easier to estimate than v because it represents the consumer’s purchase behavior and thus corresponds to actual purchase data, which can be observed. Therefore, we want to construct a method for recovering v from \(f^v\), and thereby reveal the consumer’s preference from the consumer’s actual purchase behavior. This inverse problem is called the integrability problem.

To solve this problem, the following partial differential equation (PDE) is essential:Footnote 2

\[Du(x)=f(x,u(x)). \tag{2}\]

If \(f=f^v\), then for every (p, m), there exists a unique concave solution u defined on the positive orthant such that \(u(p)=m\). Moreover, using this solution, we can recover the information contained in v. Therefore, (2) is important for microeconomic theory.

If f is continuously differentiable, then the necessary and sufficient condition for the existence of a local solution to the PDE (2) is well known and is found in Dieudonne [1]. If f is differentiable and locally Lipschitz, a similar result was obtained by Nikliborc [2]. However, in consumer theory, the differentiability of \(f^v\) is not desirable for several reasons. Therefore, when considering the integrability theory, we should consider that f is not differentiable. This makes the problem much more difficult. Therefore, we want to extend the existence theorem for a solution to (2) to the case in which f is not differentiable.

We assume that f is locally Lipschitz, and first provide a necessary and sufficient condition for the existence of a global solution to (2). Once this result is obtained, we find a necessary and sufficient condition for the existence of a local solution to (2). We present an example in which this theorem can be applied appropriately. This example concerns an application in economics.

Using these new results, we can positively solve the integrability problem in consumer theory: for a given candidate of the demand function f, we provide a necessary and sufficient condition under which a problem of the form (1) can be constructed whose demand function is f. Because our conditions are very simple, we expect that the theory can be applied to many cases in economics.

Our results on PDEs provide another application in differential topology, that is, we can extend Frobenius’ theorem. Frobenius’ theorem, which plays a significant role in the discussion of de Rham cohomology, has a deep connection with (2) when the system is \(C^1\). We have succeeded in showing that it also holds for locally Lipschitz systems. Therefore, we can discuss the conditions for the integrability of vector fields for which the derivative is not necessarily definable.

For the benefit of readers unfamiliar with consumer theory, we devote Section 2 to explaining our research motivation in more detail. In Section 3, we analyze (2) and present our main results. Using the results in Section 3, we derive the results of the consumer theory, which is discussed in Section 4. In Section 5, we present an extension of Frobenius’ theorem. We conclude the paper in Section 6.

2 Motivation

Fix \(n\ge 2\). Throughout this paper, we use the following notation: \(\mathbb {R}^n_+=\{x\in \mathbb {R}^n|x_i\ge 0 \text{ for } \text{ all } i\in \{1,...,n\}\}\), and \(\mathbb {R}^n_{++}=\{x\in \mathbb {R}^n|x_i>0 \text{ for } \text{ all } i\in \{1,...,n\}\}\). The former set is called the nonnegative orthant and the latter set is called the positive orthant.

Recall the utility maximization problem (1):

\[\max \ v(x)\quad \text{subject to}\quad x\ge 0,\ \langle p,x\rangle \le m. \tag{1}\]

Let \(f^v(p,m)\) be the solution function of this problem. This function is called the demand function. Our purpose is to derive the information of v from this solution function \(f^v\).

Hosoya [3] solved this problem completely under the condition that the demand function is continuously differentiable. However, there are several problems with this result. The first problem is that even if the objective function is smooth, solving the utility maximization problem above and calculating the demand function often results in a function that is not differentiable. For example, if \(v(x)=\sqrt{x_1}+x_2\), then \(f^v\) is not continuously differentiable.
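The kink in this example can be made concrete. The following is a minimal sketch, assuming the closed-form demand obtained from the first-order conditions of \(v(x)=\sqrt{x_1}+x_2\) for \(n=2\); the function names `demand` and `income_slope_good1` are illustrative and do not appear in the paper. It compares one-sided difference quotients of \(x_1\) with respect to income at the point where the consumer starts buying the second commodity.

```python
def demand(p1, p2, m):
    """Demand for v(x) = sqrt(x1) + x2 at prices (p1, p2) and income m.

    Closed form derived from first-order conditions (an assumption of this sketch).
    """
    threshold = p2 * p2 / (4.0 * p1)  # spending on good 1 at the interior optimum
    if m <= threshold:
        # Corner regime: all income is spent on good 1.
        return (m / p1, 0.0)
    # Interior regime: good 1 is fixed, the remaining income buys good 2.
    x1 = p2 * p2 / (4.0 * p1 * p1)
    x2 = (m - threshold) / p2
    return (x1, x2)


def income_slope_good1(p1, p2, m, h=1e-6):
    """One-sided difference quotients of x1 with respect to income m."""
    x_here = demand(p1, p2, m)[0]
    left = (x_here - demand(p1, p2, m - h)[0]) / h
    right = (demand(p1, p2, m + h)[0] - x_here) / h
    return left, right


p1, p2 = 1.0, 2.0
kink = p2 * p2 / (4.0 * p1)  # income level at which the regime changes
left, right = income_slope_good1(p1, p2, kink)
print("left slope:", left, "right slope:", right)
```

Under these assumptions the left slope is close to \(1/p_1\) and the right slope is zero, so the demand function is Lipschitz but not differentiable at the regime boundary.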

The second problem is more serious. When we observe a demand function, we can only use a finite number of purchase data. Therefore, when we apply this theory to reality, the demand function is always an estimated one. However, the topology used for the space of demand functions in econometrics is usually a local uniform topology. The space of demand functions that are continuously differentiable with respect to this topology does not have good properties. This poses a major problem for the applicability of the theory.

Therefore, continuous differentiability is not a desirable assumption for the demand function and the result in Hosoya [3] needs to be extended.

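To solve this problem, we use a theorem called Shephard’s lemma. Consider the following ‘dual’ problem of problem (1):

\[\min \ \langle p,y\rangle \quad \text{subject to}\quad y\ge 0,\ v(y)\ge v(x).\]

This is called the expenditure minimization problem, and the value of this problem is denoted by \(E^x(p)\). In other words,

\[E^x(p)=\inf \{\langle p,y\rangle |y\in \mathbb {R}^n_+,\ v(y)\ge v(x)\}.\]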

The function \(E^x:\mathbb {R}^n_{++}\rightarrow \mathbb {R}\) is called the expenditure function.

If v is continuous, then we can easily show that for every \(\bar{p}\in \mathbb {R}^n_{++}\),

Therefore, the function \(x\mapsto E^x(\bar{p})\) contains all the information in v. Hence, the integrability problem can be solved if \(E^x(\bar{p})\) can be calculated using only the information in \(f^v\).

To carry out this task, we can use the following fact. Suppose that v is continuous, increasing, and strictly quasi-concave.Footnote 3 Then, \(f^v\) is continuous by Berge’s maximum theorem and satisfies the following equality:

\[\langle p,f^v(p,m)\rangle =m\]

for any \((p,m)\in \mathbb {R}^n_{++}\times \mathbb {R}_{++}\). This equality is called Walras’ law. By Walras’ law, we can easily show that if \(x=f^v(p,m)\), then

\[E^x(p)=m. \tag{4}\]

Moreover, the function \(E^x\) is a \(C^1\) concave function, and

\[DE^x(q)=f^v(q,E^x(q)) \tag{5}\]

for every \(q\in \mathbb {R}^n_{++}\). Equation (5) is called Shephard’s lemma.

Note that equation (5) is equivalent to the PDE (2), and (4) can be treated as an initial value condition. If \(f^v\) is locally Lipschitz, then the solution to (5) with (4) is unique, and thus, at first glance, the integrability problem appears to have been resolved positively.Footnote 4

However, in actual situations of microeconomic theory, we can only have purchase data, and thus we can only obtain a candidate of demand f through estimation, and the existence of the continuous objective function v is not verified. Thus, the above method is insufficient. We want a procedure that determines the existence of v.

Hence, we consider that only a function \(f:P\rightarrow \Omega\) is given, where \(P=\mathbb {R}^n_{++}\times \mathbb {R}_{++}\) and \(\Omega =\mathbb {R}^n_+\). We say that f is a candidate of demand (CoD) if and only if the budget inequality

\[\langle p,f(p,m)\rangle \le m\]

holds for every \((p,m)\in P\). If

\[\langle p,f(p,m)\rangle =m\]

holds for every \((p,m)\in P\), then f is said to satisfy Walras’ law. If \(f=f^v\) for some \(v:\Omega \rightarrow \mathbb {R}\), then f is called a demand function corresponding to v.

Our problem is as follows: suppose that f is a CoD that satisfies Walras’ law and that f is not necessarily differentiable but at least locally Lipschitz. Find a condition on f that guarantees the existence of v such that \(f=f^v\). By the extended Shephard’s lemma (Lemma 1 of Hosoya [4]), the existence of a concave solution \(u:\mathbb {R}^n_{++}\rightarrow \mathbb {R}_{++}\) to (2) is at least a necessary condition for this problem. Therefore, we must resolve the existence problem of the global solution to (2) with non-smooth f. This is the motivation of this study.

3 Main Results

Recall the PDE (2):

\[Du(x)=f(x,u(x)), \tag{2}\]

where \(f:U\rightarrow \mathbb {R}^n\), \(U\subset \mathbb {R}^{n+1}\) is open. If V is an open and connected set in \(\mathbb {R}^n\), then we call \(u:V\rightarrow \mathbb {R}\) a solution to (2) if and only if 1) u is continuously differentiable on V and 2) \(Du(x)=f(x,u(x))\) for all \(x\in V\). If V is not necessarily open but connected, then we call \(u:V\rightarrow \mathbb {R}\) a solution to (2) if and only if for every \(x\in V\), there exists a solution v to (2) defined on some open and connected set W that includes a neighborhood of x such that \(v(y)=u(y)\) for every \(y\in V\cap W\).Footnote 5

Suppose that \(u:V\rightarrow \mathbb {R}\) is a solution to (2). We say that u is a local solution around \(x^*\) if \(x^*\) is in the interior of V. If u is a solution to (2), \(x^*\in V\), and \(u(x^*)=u^*\), then we say that u is a solution to (2) with initial value condition \(u(x^*)=u^*\), or simply, a solution to the following PDE:

\[Du(x)=f(x,u(x)),\quad u(x^*)=u^*. \tag{6}\]

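Suppose that f is differentiable at (x, u). Define

\[s_{ij}(x,u)=\frac{\partial f_i}{\partial x_j}(x,u)+\frac{\partial f_i}{\partial u}(x,u)f_j(x,u).\]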

We say that f is integrable if and only if \(s_{ij}(x,u), s_{ji}(x,u)\) are defined and \(s_{ij}(x,u)=s_{ji}(x,u)\) for each \(i,j\in \{1,...,n\}\) at almost all \((x,u)\in U\).

Note that, if f is locally Lipschitz, then by Rademacher’s theorem, it is differentiable almost everywhere, and therefore \(s_{ij}(x,u)\) is defined almost everywhere. If f is differentiable at \((x^*,u^*)\) and there exists a solution \(u:V\rightarrow \mathbb {R}\) of (6), then clearly u is twice differentiable at \(x^*\). We show later that in this instance,Footnote 6

\[\frac{\partial ^2u}{\partial x_j\partial x_i}(x^*)=s_{ij}(x^*,u^*)=s_{ji}(x^*,u^*).\]

Therefore, for the existence of a solution to (2) with initial value condition \(u(x^*)=u^*\) for every \((x^*,u^*)\in U\), f must be integrable.

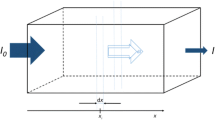

Now, suppose that \(V\subset \mathbb {R}^n\) is star-convex centered at \(x^*\).Footnote 7 If \(u:V\rightarrow \mathbb {R}\) is a solution to (6), then for every \(x\in V\), the function \(c(t;x,u^*)=u((1-t)x^*+tx)\) satisfies the following ordinary differential equation (ODE):

\[\dot{c}(t)=f((1-t)x^*+tx,c(t))\cdot (x-x^*),\quad c(0)=u^*. \tag{7}\]

That is, c is the same as the solution function of (7) on \([0,1]\times V\times \{u^*\}\).Footnote 8 Moreover, \(c(1;x,u^*)\) exists and \(u(x)=c(1;x,u^*)\).

Our main result states that if f is locally Lipschitz and integrable, then the converse is also true.

Theorem 3.1

Suppose that \(n\ge 2\), \(U\subset \mathbb {R}^{n+1}\) is open, \((x^*,u^*)\in U\), \(f:U\rightarrow \mathbb {R}^n\) is locally Lipschitz and integrable, and V is a star-convex set centered at \(x^*\). Then, there exists a solution u to (2) with initial value condition \(u(x^*)=u^*\) defined on V if and only if the solution function c(t; x, w) of the parameterized ODE

\[\dot{c}(t)=f((1-t)x^*+tx,c(t))\cdot (x-x^*),\quad c(0)=w \tag{8}\]

is defined on \([0,1]\times V\times \{u^*\}\). Moreover, the solution \(u:V\rightarrow \mathbb {R}\) is unique and \(c(1;x,u^*)=u(x)\) for every \(x\in V\).
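The construction \(u(x)=c(1;x,u^*)\) in Theorem 3.1 can be sketched numerically. The block below is an illustration only: it assumes the locally Lipschitz demand function of \(v(x)=\sqrt{x_1}+x_2\) from Section 2 as f (with \(n=2\) and its closed form derived from first-order conditions), and it uses a hand-written classical Runge-Kutta scheme. The names `demand` and `solve_along_segment` are hypothetical and not taken from the paper.

```python
def demand(p, m):
    """Assumed closed-form demand for v(x) = sqrt(x1) + x2 (n = 2)."""
    p1, p2 = p
    if 4.0 * p1 * m <= p2 * p2:
        return (m / p1, 0.0)
    return (p2 * p2 / (4.0 * p1 * p1),
            (4.0 * p1 * m - p2 * p2) / (4.0 * p1 * p2))


def solve_along_segment(f, x_star, u_star, x, steps=2000):
    """Approximate c(1; x, u*) for c'(t) = f((1-t)x* + t x, c(t)) . (x - x*), c(0) = u*."""
    direction = [xi - si for xi, si in zip(x, x_star)]

    def rhs(t, c):
        point = [(1.0 - t) * si + t * xi for si, xi in zip(x_star, x)]
        return sum(fi * di for fi, di in zip(f(point, c), direction))

    h = 1.0 / steps
    c = u_star
    for k in range(steps):
        t = k * h
        # Classical fourth-order Runge-Kutta step.
        k1 = rhs(t, c)
        k2 = rhs(t + h / 2, c + h * k1 / 2)
        k3 = rhs(t + h / 2, c + h * k2 / 2)
        k4 = rhs(t + h, c + h * k3)
        c += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return c


# Initial condition u(p) = m with p = (1, 4), m = 1; evaluate u at q = (3, 4).
u_q = solve_along_segment(demand, (1.0, 4.0), 1.0, (3.0, 4.0))
print(u_q)
```

For this particular choice, solving the ODE by hand on the two regimes gives \(c(1)=4-4/3=8/3\), which the numerical value should approximate; the path crosses the regime boundary at \(t=1/2\), where f is not differentiable.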

Proof

We have proved the ‘only if’ part in the above argument, and hence we only argue the ‘if’ part. First, we prove the following lemma.

Lemma 3.1

Suppose that \(W\subset U\) and the Lebesgue measure of \(U\setminus W\) is zero. Moreover, suppose that \(x\in V\) and \(x_{i^*}\ne x_{i^*}^*\) for \(i^*\in \{1,...,n\}\), and the domain of the solution function c(t; y, w) of the parameterized ODE (8) includes \([0,t^*]\times \bar{P}_1\times \bar{P}_2\) for \(t^*>0\), where \(P_1\) is a bounded open neighborhood of x and \(P_2\) is a bounded open neighborhood of \(u^*\), and \(\bar{P}_j\) denotes the closure of \(P_j\). For every \((t,\tilde{y},w)\in \mathbb {R}^{n+1}\) such that \(t\in [0,t^*]\), \(y\in P_1\) for

and \(w\in P_2\), define

Then, the Lebesgue measure of \(\xi ^{-1}(U\setminus W)\) is also zero.

Proof (Proof of Lemma 3.1)

Without loss of generality, we assume that \(i^*=n\). Throughout the proof of Lemma 3.1 and the rest of the proof of Theorem 3.1, we use the following notation. If \(z\in \mathbb {R}^n\), then \(\tilde{z}=(z_1,...,z_{n-1})\in \mathbb {R}^{n-1}\). Conversely, if \(\tilde{z}\in \mathbb {R}^{n-1}\), then \(z=(z_1,...,z_{n-1},x_n)\).

Let \(\tilde{P}_1=\{\tilde{y}|y\in P_1\}\). We show that \(\xi\) is one-to-one on the set \(]0,t^*]\times \tilde{P}_1\times P_2\). Suppose that \(t_1\ne 0\ne t_2\) and \(\xi (t_1,\tilde{y}_1,w_1)=\xi (t_2,\tilde{y}_2,w_2)=(z,c)\). Because \(z_n=(1-t_1)x_n^*+t_1x_n=(1-t_2)x_n^*+t_2x_n\) and \(x_n^*\ne x_n\), \(t_1=t_2\). Because \(z_i=(1-t_1)x_i^*+t_1y_{1i}=(1-t_1)x_i^*+t_1y_{2i}\) and \(t_1\ne 0\), \(y_{1i}=y_{2i}\), and thus \(\tilde{y}_1=\tilde{y}_2\). Therefore, it suffices to show that c(t; y, w) is increasing in w. Suppose that \(w_1<w_2\) and \(c(t;y,w_1)\ge c(t;y,w_2)\). Because \(c(0;y,w_1)=w_1<w_2=c(0;y,w_2)\), by the intermediate value theorem, there exists \(s\in [0,t]\) such that \(c(s;y,w_1)=c(s;y,w_2)\). Then, by the Picard-Lindelöf uniqueness theorem, \(w_1=c(0;y,w_1)=c(0;y,w_2)=w_2\), which is a contradiction.

Next, define

We show that \(\xi ^{-1}\) is Lipschitz on \(V^{\ell }\). Define

Suppose that \((v_1,c_1), (v_2,c_2)\in V^{\ell }\) and \((v_j,c_j)=\xi (t_j,\tilde{y}_j,w_j)\). Then, \(t_j=t(v_j)\) and \(\tilde{y}_j=\tilde{y}(v_j)\). Clearly, the functions t(v) and \(\tilde{y}(v)\) are Lipschitz on \(\xi ([\ell ^{-1}t^*,t^*]\times \tilde{P}_1\times P_2)\), and hence they are Lipschitz on \(V^{\ell }\). Next, consider the following ODE:

Let d(s; t, v, c) be the solution function of the above ODE. Define \(\hat{P}_1\) as the closure of \(\tilde{P}_1\). If \((v,c)=\xi (t,\tilde{y},w)\) for some \((t,\tilde{y},w)\in [{\ell }^{-1}t^*,t^*]\times \hat{P}_1\times \bar{P}_2\), then \(d(s;t,v,c)=c(s+t-t_2;y,w)\). Moreover, the set

is compact, and by the usual arguments concerning Gronwall’s inequality, we can easily show that \((t,v,c)\mapsto d(t_2-t;t,v,c)\) is Lipschitz on this set. Therefore,

where \(L,M>0\) are some constants, and therefore our claim is correct.

Now, recall that the Lebesgue measure of \(U\setminus W\) is zero. Because \(\xi ^{-1}\) is Lipschitz on \(V^{\ell }\), the Lebesgue measure of

is zero. Therefore, the Lebesgue measure of

is also zero. Clearly, the Lebesgue measure of

is zero, because this set is included in \(\{(t,\tilde{y},w)|t\in \{0,t^*\}\}\). This completes the proof of Lemma 3.1.

Now, suppose that c(t; y, w) is defined on \([0,1]\times V\times \{u^*\}\). Our purpose is to show that \(u(x)\equiv c(1;x,u^*)\) is a solution to (2) with initial value condition \(u(x^*)=u^*\). Choose any \(x\in V\). First, suppose that \(x_n\ne x_n^*\). Choose any \(P_1\) and \(P_2\) such that \(P_1\) is an open and bounded neighborhood of x, \(P_2\) is an open and bounded neighborhood of \(u^*\), and \(t\mapsto c(t;y,w)\) is defined on [0, 1] for all \((y,w)\in \bar{P}_1\times \bar{P}_2\).Footnote 9 Because of Rademacher’s theorem, f is differentiable almost everywhere. Define \(\tilde{P}_1=\{\tilde{y}|y\in P_1\}\). By Lemma 3.1, for almost all \((t,\tilde{y},w)\in [0,1]\times \tilde{P}_1\times P_2\), f is differentiable and \(s_{ij}=s_{ji}\) for all i, j at \(((1-t)x^*+ty,c(t;y,w))\). Moreover, because c is Lipschitz on \([0,1]\times \bar{P}_1\times \bar{P}_2\), we also have by Rademacher’s theorem that \(\tilde{c}:(t,\tilde{y},w)\mapsto c(t;y,w)\) is differentiable at almost every point on \([0,1]\times \tilde{P}_1\times P_2\). By Fubini’s theorem, for almost all \((\tilde{y},w)\in \tilde{P}_1\times P_2\), f is differentiable and \(s_{ij}=s_{ji}\) for all i, j at \(((1-t)x^*+ty,c(t;y,w))\) and \(\tilde{c}\) is also differentiable at \((t,\tilde{y},w)\) for almost all \(t\in [0,1]\). Let W be the set of such \((\tilde{y},w)\). Choose any \((\tilde{y},w)\in W\) and for \(i\in \{1,...,n-1\}\), define

Note that, if \(e_i\) denotes the i-th unit vector, thenFootnote 10

by the dominated convergence theorem. Therefore, c is partially differentiable with respect to \(y_i\) for all \(t\in [0,1]\) and hence \(\varphi _i\) is well-defined. Moreover, by equation (10), \(t\mapsto \varphi _i(t;\tilde{y},w)\) is absolutely continuous on [0, 1], and thus is differentiable at almost all \(t\in [0,1]\). Furthermore,Footnote 11

where \(a(t,y,w)=\sum _j\frac{\partial f_j}{\partial u}(y_j-x_j^*)\) is bounded and measurable with respect to t. Therefore, \(t\mapsto \varphi _i(t;\tilde{y},w)\) is an absolutely continuous function that is a solution to the linear ODE in (11), where a(t, y, w) is bounded. Thus,

However, it is obvious that \(\varphi _i(0;\tilde{y},w)=0\). Hence, \(\varphi _i\equiv 0\). This implies that

Because W has full-measure in \(\tilde{P}_1\times P_2\), for every \(i\in \{1,...,n-1\}\), there exists \(\varepsilon >0\) and a sequence \((\tilde{y}^k,w^k)\) such that \((\tilde{y}^k,w^k)\rightarrow (\tilde{x},u^*)\) as \(k\rightarrow \infty\) and for almost every \(h\in ]-\varepsilon ,\varepsilon [\), \((\tilde{y}^k+h\tilde{e}_i,w^k)\in W\). Therefore, if \(|h|<\varepsilon\), then

By the dominated convergence theorem,

and thus,

By summarizing the above arguments, we obtain the following result: if \(x_n\ne x_n^*\), then for every \(i\in \{1,...,n-1\}\), the function

is partially differentiable with respect to \(y_i\) at x, and \(\frac{\partial u}{\partial y_i}(x)=f_i(x,u(x))\).

Next, suppose that \(x\in V, x_n=x_n^*\) and \(i\in \{1,...,n-1\}\). Let \(e=(1,1,...,1)\in \mathbb {R}^n\) and define \(x^k=x+k^{-1}e\). Then, by the above result, there exists \(\varepsilon >0\) such that for every sufficiently large k, \([0,1]\times \{x^k+he_i||h|<\varepsilon \}\times \{u^*\}\) is included in the domain of c, and \(\frac{\partial u}{\partial y_i}(x^k+he_i)=f_i(x^k+he_i,u(x^k+he_i))\) for all h with \(|h|<\varepsilon\). Thus, if \(|h|<\varepsilon\),

By the dominated convergence theorem,

and thus u is also partially differentiable with respect to \(y_i\) at x, and \(\frac{\partial u}{\partial y_i}(x)=f_i(x,u(x))\).

Hence, for every \(x\in V\), \(\frac{\partial u}{\partial y_i}(x)=f_i(x,u(x))\) if \(i\ne n\). Replacing n by 1 and repeating the above arguments, we obtain

for every \(x\in V\). Clearly, \(u(x^*)=u^*\), because \(c(t;x^*,u^*)=u^*\) for all \(t\in \mathbb {R}\). Hence, u is a solution to PDE (2) with initial value condition \(u(x^*)=u^*\) defined on V. Now, uniqueness arises from the uniqueness of the solution to (8). This completes the proof.

We note a fact about Lemma 3.1: this result holds even when \(n=1\). Because the proof of this fact is almost trivial, we omit it. However, this result is used in the proof of Lemma 5.1.

Using Theorem 3.1, we immediately obtain the following result.

Corollary 3.1

Suppose that \(n\ge 2\), \(U\subset \mathbb {R}^{n+1}\) is open, and \(f:U\rightarrow \mathbb {R}^n\) is locally Lipschitz. Then, there exists a local solution to (6) around \(x^*\) for every \((x^*,u^*)\in U\) if and only if f is integrable.

Proof

First, we treat the ‘if’ part. The constant function \(t\mapsto u^*\) is a solution to the ODE (8) with \((x,w)=(x^*,u^*)\), for which the domain is \(\mathbb {R}\). Therefore, there exists an open and convex neighborhood V of \(x^*\) such that \([0,1]\times V\times \{u^*\}\) is included in the domain of the solution function c of the ODE (8). By Theorem 3.1, \(u(x)=c(1;x,u^*)\) is the unique solution to (6) defined on V, and thus the ‘if’ part is correct.

Next, we treat the ‘only if’ part. We need a lemma.

Lemma 3.2

Suppose that \(W\subset \mathbb {R}^2\) is open, \(g:W\rightarrow \mathbb {R}\) is differentiable around \((x^*,y^*)\), and both \(\frac{\partial g}{\partial x}\) and \(\frac{\partial g}{\partial y}\) are differentiable at \((x^*,y^*)\in W\). Then, \(\frac{\partial ^2g}{\partial y\partial x}(x^*,y^*)=\frac{\partial ^2g}{\partial x\partial y}(x^*,y^*)\).Footnote 12

Proof (Proof of Lemma 3.2)

Because W is open, there exists \(\varepsilon >0\) such that if \(|h|\le \varepsilon\), then \((x^*+h,y^*+h)\in W\). Define

Let \(\varphi (x)=g(x,y^*+h)-g(x,y^*)\). If \(|h|\le \varepsilon\), then there exists \(\theta \in [0,1]\) such that

Thus,

Because the assumption is symmetric, we can also show that

and thus we have

This completes the proof.

By Rademacher’s theorem, f is differentiable almost everywhere, and thus \(s_{ij}(x,u)\) can be defined for every \(i,j\in \{1,...,n\}\) and almost every \((x,u)\in U\). Suppose that f is differentiable at \((x^*,u^*)\in U\). By assumption, there exists a local solution \(u:V\rightarrow \mathbb {R}\) to the PDE (6) around \(x^*\). Then, clearly u is twice differentiable at \(x^*\), and by Lemma 3.2, the Hessian matrix of u at \(x^*\) is symmetric. However,

\[\frac{\partial ^2u}{\partial x_j\partial x_i}(x^*)=s_{ij}(x^*,u^*),\]

and thus \(s_{ij}(x^*,u^*)=s_{ji}(x^*,u^*)\). Because \((x^*,u^*)\) is an arbitrary point in which f is differentiable, we have that f is integrable and the ‘only if’ part is also correct. This completes the proof.

Example 1

Consider the function

defined on \(\mathbb {R}^{n+1}_{++}\). This function satisfies all the requirements of Theorem 3.1 but is not continuously differentiable. Choose any \((p,m)\in \mathbb {R}^{n+1}_{++}\). We attempt to solve (6), where \((x^*,u^*)=(p,m)\).

First, choose any \(q=(q_1,q_2)\). If u is a solution to (6), then \(c(t)=u((1-t)p+tq)\) satisfies the following ODE:

By Theorem 3.1, the converse is also true, that is, if c(t) is a solution to (12) defined on [0, 1], then we can define \(u(q)=c(1)\), and this function u is a solution to (6).

Second, define

and consider

Solving (13), we have

and if \(q_2=p_2\), then

Third, suppose that \(p_2=q_2\), \(4p_1m\le p_2^2\), and \(4q_1c_1(1)\le q_2^2\), where \(c_1(0)=m\). Note that \(4(p_1+t(q_1-p_1))c_1(t)\) is monotone. Thus, in this case, \(c(t)=c_1(t)\) on [0, 1], and

Therefore, we obtain the following candidate for u(q):

if \(2q_1\sqrt{\frac{m}{p_1}}\le q_2\). Because f is homogeneous of degree zero, we can show that u is homogeneous of degree one, and thus we can remove the assumption \(p_2=q_2\). By an easy calculation, we can confirm that u is actually a solution to equation (2) on the set \(\left\{ q\left| 2q_1\sqrt{\frac{m}{p_1}}\le q_2\right. \right\}\).

Fourth, suppose that \(p_2=q_2\), \(4p_1m\le p_2^2\), and \(4q_1c_1(1)>q_2^2\), where \(c_1(0)=m\). Note that \(q_1\ne p_1\). If \(q_1<p_1\), then \(c_1(t)\) is decreasing and \(4q_1c_1(1)<q_2^2\), which is absurd. Thus, \(q_1>p_1\). We guess that \(c(t)=c_1(t)\) on \([0,t^*]\), and \(c(t)=c_2(t)\) on \([t^*,1]\), where \(c(t^*)=c_1(t^*)=c_2(t^*)\) and \(\dot{c}_1(t^*)=\dot{c}_2(t^*)\). Then,

and thus

Then,

and hence, we obtain

Check that \(t^*\in [0,1]\) if and only if \(2q_1\sqrt{\frac{m}{p_1}}\ge q_2\), which is equivalent to \(4q_1c_1(1)\ge q_2^2\). Because we assumed \(4q_1c_1(1)>q_2^2\), this assumption holds. Then,

In particular,

Therefore, we obtain the following candidate for u:

where this form is homogeneous of degree one. Thus, we can guess that

We can check that this u is actually a solution to (2) with \(u(p)=m\).

By a similar argument, we obtain the following candidate for a solution u to (2) even if \(4p_1m>p_2^2\):

It can easily be verified that this u is actually a solution to (2) with \(u(p)=m\).
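The verification step can also be carried out numerically. The sketch below rests on an assumption that is not confirmed by the surviving text: that f in this example is the demand function of \(v(x)=\sqrt{x_1}+x_2\) from Section 2, so that the solution u with \(u(p)=m\) is the corresponding expenditure function. Under that assumption, the utility level \(\bar{u}\) is recovered from (p, m) and \(u(q)\) is given piecewise, with the regime boundary \(2q_1\bar{u}\le q_2\) matching the condition \(2q_1\sqrt{m/p_1}\le q_2\) above. The names `demand`, `candidate_u`, and `gradient_error` are illustrative.

```python
import math


def demand(p, m):
    """Assumed closed-form demand for v(x) = sqrt(x1) + x2 (n = 2)."""
    p1, p2 = p
    if 4.0 * p1 * m <= p2 * p2:
        return (m / p1, 0.0)
    return (p2 * p2 / (4.0 * p1 * p1),
            (4.0 * p1 * m - p2 * p2) / (4.0 * p1 * p2))


def candidate_u(q, p, m):
    """Candidate solution with u(p) = m, written as an expenditure function (assumption)."""
    p1, p2 = p
    q1, q2 = q
    # Utility level attained at (p, m).
    if 4.0 * p1 * m <= p2 * p2:
        level = math.sqrt(m / p1)
    else:
        level = m / p2 + p2 / (4.0 * p1)
    # Cheapest way to reach that level at prices q.
    if 2.0 * q1 * level <= q2:
        return q1 * level * level
    return q2 * level - q2 * q2 / (4.0 * q1)


def gradient_error(q, p, m, h=1e-6):
    """Largest gap between a central-difference gradient of u and f(q, u(q))."""
    target = demand(q, candidate_u(q, p, m))
    worst = 0.0
    for i in range(2):
        up = list(q)
        dn = list(q)
        up[i] += h
        dn[i] -= h
        slope = (candidate_u(up, p, m) - candidate_u(dn, p, m)) / (2.0 * h)
        worst = max(worst, abs(slope - target[i]))
    return worst


p, m = (1.0, 4.0), 1.0
for q in [(0.5, 4.0), (3.0, 4.0), (2.0, 1.0)]:
    print(q, gradient_error(q, p, m))
```

The test points are chosen away from the regime boundary so that central differences are reliable; at the boundary itself u is still continuously differentiable, but a finite-difference check there would be less informative.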

4 Application to Consumer Theory

Let \(P=\mathbb {R}^n_{++}\times \mathbb {R}_{++}\) and \(\Omega =\mathbb {R}^n_+\). Recall that a function \(f:P\rightarrow \Omega\) is called a candidate of demand (CoD) if and only if the budget inequality

\[\langle p,f(p,m)\rangle \le m\]

holds for every \((p,m)\in P\). If

\[\langle p,f(p,m)\rangle =m\]

holds for every \((p,m)\in P\), then f is said to satisfy Walras’ law.

Let \(\succsim\) be a weak order on \(\Omega\): that is, \(\succsim \subset \Omega ^2\) is complete and transitive. We write \(x\succsim y\) instead of \((x,y)\in \succsim\). If there is a function \(v:\Omega \rightarrow \mathbb {R}\) such that

\[x\succsim y\Leftrightarrow v(x)\ge v(y),\]

then we say that v represents \(\succsim\).

Define

If \(f=f^{\succsim }\), then we say that f is a demand function corresponding to \(\succsim\). If v represents \(\succsim\), then \(f^{\succsim }\) is also written as \(f^v\), and if \(f=f^v\), then f is also said to be a demand function corresponding to v. Check that our definition of \(f^v\) is essentially the same as that in Section 2.

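Suppose that a CoD f is locally Lipschitz and satisfies Walras’ law. If f is differentiable at \((p,m)\in P\), then define

\[s_{ij}(p,m)=\frac{\partial f_i}{\partial p_j}(p,m)+\frac{\partial f_i}{\partial m}(p,m)f_j(p,m),\]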

and let \(S_f(p,m)\) be an \(n\times n\) matrix whose (i, j)-th element is \(s_{ij}(p,m)\). Note that f is integrable in the sense of Theorem 3.1 if and only if \(S_f(p,m)\) is symmetric for almost all \((p,m)\in P\). This matrix-valued function \(S_f\) is called the Slutsky matrix.
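As a rough illustration of how the symmetry and negative semi-definiteness of \(S_f\) might be checked at a point of differentiability, the sketch below approximates the standard Slutsky terms \(\partial f_i/\partial p_j+(\partial f_i/\partial m)f_j\) by central differences. The demand function of \(v(x)=\sqrt{x_1}+x_2\) with \(n=2\) is used as a test case; its closed form and the names `demand` and `slutsky` are assumptions of this sketch, not part of the paper.

```python
def demand(p, m):
    """Assumed closed-form demand for v(x) = sqrt(x1) + x2 (n = 2)."""
    p1, p2 = p
    if 4.0 * p1 * m <= p2 * p2:
        return (m / p1, 0.0)
    return (p2 * p2 / (4.0 * p1 * p1),
            (4.0 * p1 * m - p2 * p2) / (4.0 * p1 * p2))


def slutsky(f, p, m, h=1e-5):
    """Central-difference approximation of the Slutsky matrix S_f(p, m)."""
    n = len(p)
    fx = f(p, m)

    def d_price(i, j):
        up = list(p)
        dn = list(p)
        up[j] += h
        dn[j] -= h
        return (f(up, m)[i] - f(dn, m)[i]) / (2.0 * h)

    def d_income(i):
        return (f(p, m + h)[i] - f(p, m - h)[i]) / (2.0 * h)

    return [[d_price(i, j) + d_income(i) * fx[j] for j in range(n)]
            for i in range(n)]


# A point in the interior regime, where f is differentiable.
S = slutsky(demand, (1.0, 2.0), 3.0)
asymmetry = abs(S[0][1] - S[1][0])
determinant = S[0][0] * S[1][1] - S[0][1] * S[1][0]
print(S, asymmetry, determinant)
```

At \((p,m)=((1,2),3)\) a hand calculation gives \(S_f=\begin{pmatrix}-2&1\\1&-1/2\end{pmatrix}\), which is symmetric with nonpositive diagonal and zero determinant, hence negative semi-definite. For a \(2\times 2\) symmetric matrix these three conditions suffice; larger n would require an eigenvalue check.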

Choose any \((p,m)\in P\), and consider the PDE

\[Du(q)=f(q,u(q)) \tag{17}\]

with initial value condition \(u(p)=m\). If f is differentiable at (p, m) and a solution u to such a PDE exists, then u is twice differentiable at p and the Hessian matrix coincides with \(S_f(p,m)\). If, additionally, u is concave, then \(S_f(p,m)\) is negative semi-definite. Combining this with the discussion in Section 2, we expect that if f is a demand function, then \(S_f(p,m)\) is symmetric and negative semi-definite for almost all \((p,m)\in P\).

The following theorem states that both the above conjecture and its converse are true.

Theorem 4.1

Suppose that \(f:P\rightarrow \Omega\) is a locally Lipschitz CoD that satisfies Walras’ law. Then, \(f=f^{\succsim }\) for some weak order \(\succsim\) on \(\Omega\) if and only if \(S_f(p,m)\) is symmetric and negative semi-definite for almost all \((p,m)\in P\). Moreover, in this case, \(f=f^v\) for some function \(v:\Omega \rightarrow \mathbb {R}\).

Proof

We first treat the ‘only if’ part. Suppose that \(f=f^{\succsim }\) for some weak order \(\succsim\) on \(\Omega\). Because of Rademacher’s theorem, f is differentiable at almost every \((p,m)\in P\). Choose any \((p,m)\in P\) such that f is differentiable at (p, m). Define \(x=f(p,m)\). By Lemma 1 of Hosoya [4], the function

is concave and solves the PDE (17) with initial value condition \(E(p)=m\). Therefore, it is twice differentiable at p, and the Hessian matrix of E at p coincides with \(S_f(p,m)\). Thus, \(S_f(p,m)\) is symmetric and negative semi-definite. Because (p, m) is arbitrary, \(S_f(p,m)\) is symmetric and negative semi-definite for almost all (p, m).

Next, we treat the ‘if’ part. Suppose that \(S_f(p,m)\) is symmetric and negative semi-definite almost everywhere. Then, f is integrable in the sense of Theorem 3.1. Choose any \((p,m)\in P\), and consider the following ODE:

\[\dot{c}(t)=f((1-t)p+tq,c(t))\cdot (q-p),\quad c(0)=w. \tag{18}\]

Let c(t; q, w) be the solution function of the ODE (18). By Theorem 3.1, there exists a solution \(u:\mathbb {R}^n_{++}\rightarrow \mathbb {R}_{++}\) to (17) with initial value condition \(u(p)=m\) if and only if c(1; q, m) can be defined for all \(q\in \mathbb {R}^n_{++}\). Now, we introduce the following lemmas.

Lemma 4.1

If f is differentiable at (p, m), then

Proof (Proof of Lemma 4.1)

Because of Walras’ law,

\[\langle q,f(q,w)\rangle =w.\]

Differentiating this formula with respect to \(q_j\) and w at \((q,w)=(p,m)\), we have

Therefore,

and thus,

as desired. This completes the proof.

Lemma 4.2

Suppose that \(t\mapsto c(t;q,m)\) is defined on \([0,t^*]\). Define \(p(t)=(1-t)p+tq\). Then, \(\langle p,f(p(t^*),c(t^*;q,m))\rangle \ge m\) and \(\langle p(t^*),f(p,m)\rangle \ge c(t^*;q,m)\).

Proof (Proof of Lemma 4.2)

We present only the proof of \(\langle p,f(p(t^*),c(t^*;q,m))\rangle \ge m\). The rest can be proved symmetrically. Define \(d(t)=\langle p,f(p(t),c(t;q,m))\rangle\). Because of Walras’ law, \(d(0)=\langle p,f(p,m)\rangle =m\), and thus it suffices to show that d is nondecreasing on \([0,t^*]\).

By Lemma 3.1, there exists a sequence \((q^k,w^k)\) such that \((q^k,w^k)\rightarrow (q,m)\) as \(k\rightarrow \infty\) and for every k, \(t\mapsto c(t;q^k,w^k)\) is defined on \([0,t^*]\), and f is differentiable at \(((1-t)p+tq^k,c(t;q^k,w^k))\) and \(S_f((1-t)p+tq^k,c(t;q^k,w^k))\) is symmetric and negative semi-definite for almost all \(t\in [0,t^*]\). Define \(p^k(t)=(1-t)p+tq^k\) and \(d^k(t)=\langle p,f(p^k(t),c(t;q^k,w^k))\rangle\). Then,

for almost all \(t\in [0,t^*]\).

By Lemma 4.1,

for almost all \(t\in [0,t^*]\). Therefore,

for almost all \(t\in [0,t^*]\), which implies that \(d^k(t)\) is nondecreasing. Because \(d^k(t)\) converges to d(t) for all \(t\in [0,t^*]\), d(t) is also nondecreasing. This completes the proof.

Now, choose any \(q\in \mathbb {R}^n_{++}\), and define \(p(t)=(1-t)p+tq\). Let \(T^*\) be the supremum of \(t^*\) such that the function \(t\mapsto c(t;q,m)\) is defined on \([0,t^*]\), and suppose that \(T^*\le 1\). Then, \(t\mapsto c(t;q,m)\) is defined on \([0,T^*[\), and by the usual arguments on nonextendable solutions to ODEs, for every compact set \(C\subset P\), there exists \(t^*\in [0,T^*[\) such that if \(t^*\le t<T^*\), then \((p(t),c(t;q,m))\notin C\). Therefore, either \(\limsup _{t\uparrow T^*}c(t;q,m)=+\infty\) or \(\liminf _{t\uparrow T^*}c(t;q,m)=0\). Define \(x(t)=f(p(t),c(t;q,m))\). By Lemma 4.2,

for all \(t\ge 0\), and thus \(\limsup _{t\uparrow T^*}c(t;q,m)\le \langle p(T^*),x(0)\rangle\). Therefore, there exists a sequence \((t^k)\) such that \(t^k\uparrow T^*\) and \(c(t^k;q,m)\rightarrow 0\) as \(k\rightarrow \infty\). Because of Lemma 4.2, for every k,

and thus,

Therefore, \(x(t^k)\in \{y\in \mathbb {R}^n_+|\langle q,y\rangle \le \langle q,x(0)\rangle \}\), and this set is compact. Hence, we assume without loss of generality that \(x(t^k)\rightarrow x^*\in \mathbb {R}^n_+\) as \(k\rightarrow \infty\). Because \(\langle p,x^*\rangle \ge m\), \(x^*\ne 0\). However,

which is a contradiction. Therefore, \(T^*>1\), and thus \(t\mapsto c(t;q,m)\) is defined on [0, 1]. Hence, for every \((p,m)\in P\), there exists a solution \(u:\mathbb {R}^n_{++}\rightarrow \mathbb {R}\) of (17) with initial value condition \(u(p)=m\). The uniqueness of the solution follows from Theorem 3.1.

We now present the following lemma.

Lemma 4.3

Suppose that \(x=f(p,m),\ y=f(q,w),\ x\ne y\), \(u:\mathbb {R}^n_{++}\rightarrow \mathbb {R}\) is a solution to (17) with \(u(p)=m\), and \(w\ge u(q)\). Then, \(\langle p,y\rangle >m\).

Proof (Proof of Lemma 4.3)

Let \(\bar{u}:\mathbb {R}^n_{++}\rightarrow \mathbb {R}_{++}\) be a solution to (17) with \(\bar{u}(q)=w\). By the uniqueness of the solution to (17), 1) if \(u(q)=w\), then \(u=\bar{u}\), and 2) if \(u(q)<w\), then \(\bar{u}(r)>u(r)\) for every \(r\in \mathbb {R}^n_{++}\). Define \(d(t)=\langle p,f((1-t)p+tq,\bar{u}((1-t)p+tq))\rangle\). Then, by Lemma 4.2, d(t) is nondecreasing on [0, 1], and thus

If \(w>u(q)\), then \(\bar{u}(p)>m\), and thus \(\langle p,y\rangle >m\), as desired. Therefore, we assume that \(w=u(q)\), and \(u=\bar{u}\).

By Lemma 3.1, we can choose a sequence \((q^k,w^k)\) such that \((q^k,w^k)\rightarrow (q,m)\) as \(k\rightarrow \infty\), and for every k, f is differentiable at \(((1-t)p+tq^k,c(t;q^k,w^k))\) and \(S_f((1-t)p+tq^k,c(t;q^k,w^k))\) is symmetric and negative semi-definite for almost all \(t\in [0,1]\). Let \(2\varepsilon =\Vert x-y\Vert\). Define \(p^k(t)=(1-t)p+tq^k\) and \(x^k(t)=f(p^k(t),c(t;q^k,w^k))\). Then, \(x^k(1)\rightarrow y\) and \(x^k(0)\rightarrow x\) as \(k\rightarrow \infty\), and thus without loss of generality, we can assume that \(\Vert x^k(1)-x^k(0)\Vert \ge \varepsilon\) for every k. Moreover, \(x^k(t)\) is a Lipschitz function defined on [0, 1]. If f is differentiable at \((p^k(t),c(t;q^k,w^k))\), then define \(S_t^k=S_f(p^k(t),c(t;q^k,w^k))\). By our assumption, \(S_t^k\) can be defined and is symmetric and negative semi-definite for almost all \(t\in [0,1]\). Because \(S_t^k\) is symmetric and negative semi-definite, there exists a positive semi-definite matrix \(A_t^k\) such that \(S_t^k=-(A_t^k)^2\). Moreover, the operator norm \(\Vert A_t^k\Vert\) is equal to \(\sqrt{\Vert S_t^k\Vert }\).Footnote 13 Because f is locally Lipschitz, there exists \(L>0\) such that \(\Vert S_t^k\Vert \le L\) for all k and almost all \(t\in [0,1]\). Define \(d^k(t)=\langle p,x^k(t)\rangle\), and choose \(\delta >0\) such that \(\varepsilon ^2>2L^2\delta \Vert q^k-p\Vert ^2\) for every sufficiently large k. Then, by (19),

and thus,

Hence, taking \(k\rightarrow \infty\), we have

as desired. This completes the proof of this lemma.

Now, suppose that \(x=f(p,m),\ y=f(q,w),\ x\ne y\), and \(\langle p,y\rangle \le m\). Let \(u:\mathbb {R}^n_{++}\rightarrow \mathbb {R}_{++}\) be a solution to (17) with initial value condition \(u(p)=m\). By contraposition of Lemma 4.3, \(u(q)>w\). Let \(\bar{u}:\mathbb {R}^n_{++}\rightarrow \mathbb {R}_{++}\) be a solution to (17) with initial value condition \(\bar{u}(q)=w\). Then, by the uniqueness of the solution, \(m=u(p)>\bar{u}(p)\), and thus by Lemma 4.3 with the roles of (p, m) and (q, w) exchanged, \(\langle q,x\rangle >w\). In summary, if \(x=f(p,m),\ y=f(q,w),\ x\ne y\), and \(\langle p,y\rangle \le m\), then \(\langle q,x\rangle >w\).Footnote 14

Let \(x=f(p,m)=f(q,w)\), and \(u:\mathbb {R}^n_{++}\rightarrow \mathbb {R}_{++}\) be a solution to (17) with \(u(p)=m\). We show that \(u(q)=w\). Define \(p(t)=(1-t)p+tq\) and \(m(t)=(1-t)m+tw\). Suppose that \(f(p(t),m(t))=y\ne x\) for some \(t\in [0,1]\). Because \(\langle p(t),x\rangle =m(t)=\langle p(t),y\rangle\), either \(\langle p,y\rangle \le m\) or \(\langle q,y\rangle \le w\). In both cases, by the above argument, \(\langle p(t),x\rangle >\langle p(t),y\rangle\), which is a contradiction. Therefore, we conclude that \(x\equiv f(p(t),m(t))\) for every \(t\in [0,1]\). Let \(d(t)=u((1-t)p+tq)\). Then,

Meanwhile, because

we also have

Therefore, by the Picard-Lindelöf uniqueness theorem, \(d(t)\equiv m(t)\). In particular,

\[u(q)=d(1)=m(1)=w,\]

as desired.

Fix \(\bar{p}\in \mathbb {R}^n_{++}\). For \(x\in \Omega\), define v(x) as follows: If x is not in the range of f, then define \(v(x)=0\). If \(x=f(p,m)\), then choose a solution \(u:\mathbb {R}^n_{++}\rightarrow \mathbb {R}_{++}\) to (17) with \(u(p)=m\), and define \(v(x)=u(\bar{p})\). If \(x=f(q,w)\) and \(\bar{u}:\mathbb {R}^n_{++}\rightarrow \mathbb {R}_{++}\) is a solution to (17) with \(\bar{u}(q)=w\), then by the above argument, \(\bar{u}(\bar{p})=u(\bar{p})\), and thus \(v:\Omega \rightarrow \mathbb {R}\) is well-defined.

Suppose that \(x=f(p,m)\) and \(y\ne x,\ \langle p,y\rangle \le m\). Choose a solution \(u:\mathbb {R}^n_{++}\rightarrow \mathbb {R}_{++}\) to (17) with \(u(p)=m\). Then, \(v(x)=u(\bar{p})>0\). If y is not in the range of f, then \(v(y)=0<v(x)\). If \(y=f(q,w)\), then by contraposition of Lemma 4.3, \(u(q)>w\). If \(\bar{u}:\mathbb {R}^n_{++}\rightarrow \mathbb {R}_{++}\) is a solution to (17) with \(\bar{u}(q)=w\), then, by the uniqueness of the solution, \(v(x)=u(\bar{p})>\bar{u}(\bar{p})=v(y)\). Therefore, in all cases, \(v(x)>v(y)\), which implies that \(f=f^v\). This completes the proof.

Note that, for \(x=f(p,m)\), the value v(x) is obtained by calculating only \(c(1;\bar{p},m)\), where c is the solution function of ODE (18). Therefore, computing v requires solving only an ODE, and no PDE needs to be solved in the actual calculation.
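The remark above can be illustrated numerically. The following is a minimal sketch, not the paper's own computation: it assumes that (17) reads \(Du(q)=f(q,u(q))\) and that (18) reads \(\dot{c}(t)=\langle f((1-t)p+tq,c(t)),q-p\rangle\) with \(c(0)=m\), and it uses a hypothetical Cobb-Douglas demand \(f(p,m)=(m/(2p_1),m/(2p_2))\), for which the solution of (17) with \(u(p)=m\) is \(u(q)=m\sqrt{q_1q_2/(p_1p_2)}\). All function names are illustrative.

```python
import math

def demand(p, m):
    """Hypothetical Cobb-Douglas demand (an assumption, not the paper's example)."""
    return [m / (2.0 * p[0]), m / (2.0 * p[1])]

def rhs(t, c, p, q):
    """Right-hand side of the assumed form of ODE (18): <f(p(t), c), q - p>."""
    pt = [(1.0 - t) * pi + t * qi for pi, qi in zip(p, q)]
    x = demand(pt, c)
    return sum(xi * (qi - pi) for xi, pi, qi in zip(x, p, q))

def c_at_one(p, m, q, steps=1000):
    """Integrate c' = rhs from c(0) = m up to t = 1 with the classical RK4 scheme."""
    h = 1.0 / steps
    c = m
    for k in range(steps):
        t = k * h
        k1 = rhs(t, c, p, q)
        k2 = rhs(t + h / 2, c + h * k1 / 2, p, q)
        k3 = rhs(t + h / 2, c + h * k2 / 2, p, q)
        k4 = rhs(t + h, c + h * k3, p, q)
        c += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return c

def utility_at(p, m, p_bar=(1.0, 1.0)):
    """v(x) for x = f(p, m), computed as c(1; p_bar, m)."""
    return c_at_one(p, m, p_bar)

def closed_form(p, m, p_bar=(1.0, 1.0)):
    """Closed-form solution of the PDE for the Cobb-Douglas demand above."""
    return m * math.sqrt(p_bar[0] * p_bar[1] / (p[0] * p[1]))
```

For this demand, `utility_at((2.0, 3.0), 6.0)` should agree with `closed_form((2.0, 3.0), 6.0)` up to the integration error, and the resulting v is an increasing transformation of \(\sqrt{x_1x_2}\).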

As a corollary of Theorem 4.1, we obtain the following result. Let \(R(f)=f(P)\).

Corollary 4.1

Suppose that \(f=f^v\) is a locally Lipschitz demand function that satisfies Walras’ law, and \(v:\Omega \rightarrow \mathbb {R}\) is a function defined in the proof of Theorem 4.1. Then, v is upper semi-continuous on R(f).Footnote 15 Moreover, if \(f=f^w\) for another function \(w:\Omega \rightarrow \mathbb {R}\) and w is upper semi-continuous on R(f), then
\[w(x)\ge w(y)\Leftrightarrow v(x)\ge v(y)\]

for every \(x,y\in R(f)\).

Proof

Choose any \(a>0\), and suppose that \(x\in R(f)\) and \(v(x)<a\). Choose \((p,m)\in P\) with \(x=f(p,m)\). Let c(t; q, w) be the solution function of (18). Because \(v(x)=c(1;\bar{p},m)<a\), \(c(1;\bar{p},m+\varepsilon )<a\) for some \(\varepsilon >0\). Let \(y=f(p,m+\varepsilon )\). Then, \(v(y)=c(1;\bar{p},m+\varepsilon )<a\). The set \(U=\{z\in R(f)|\langle p,z\rangle <\langle p,y\rangle \}\) is an open neighborhood of x, and \(v(z)<v(y)<a\) for every \(z\in U\). This implies that v is upper semi-continuous on R(f).

Next, suppose that \(f=f^w\) and w is upper semi-continuous on R(f). For every \(x\in R(f)\), define

\[E^x(q)=\inf \{\langle q,y\rangle |y\in \Omega ,\ w(y)\ge w(x)\}.\]

Then, by Lemma 1 of Hosoya [4], \(E^x\) is a solution to (17), and if \(x=f(p,m)\), then \(E^x(p)=m\). By the definition of v, \(v(x)=E^x(\bar{p})\).

Choose any \(x\in R(f)\) and define \(z=f(\bar{p},E^x(\bar{p}))\). For every \(\varepsilon >0\), define \(z^{\varepsilon }=f(\bar{p},E^x(\bar{p})+\varepsilon )\). By the definition of \(E^x(\bar{p})\), there exists \(y\in \Omega\) such that \(w(y)\ge w(x)\) and \(\langle \bar{p},y\rangle \le E^x(\bar{p})+\varepsilon\). Therefore, \(w(z^{\varepsilon })\ge w(y)\ge w(x)\) for every \(\varepsilon >0\). Because w is upper semi-continuous on R(f), \(w(z)\ge w(x)\). Next, \(E^z\) is a solution to (17) with \(E^z(\bar{p})=E^x(\bar{p})\). By the uniqueness of the solution to (17), \(E^z(p)=E^x(p)=m\), and thus \(x=f(p,E^z(p))\). By repeating the above arguments, \(w(x)\ge w(z)\), and thus \(w(x)=w(z)\).

Now, choose any \(x,y\in R(f)\), and define \(z_x=f(\bar{p},E^x(\bar{p}))\) and \(z_y=f(\bar{p},E^y(\bar{p}))\). Then,

as desired. This completes the proof.

Therefore, the ordinal information carried by v is unique on R(f). Moreover, we can obtain the following result, which implies that this information can be recovered from f on the whole of \(\Omega\).

Corollary 4.2

Suppose that \(f=f^v\) is a locally Lipschitz demand function that satisfies Walras’ law, and \(v:\Omega \rightarrow \mathbb {R}\) is a function defined in the proof of Theorem 4.1. Moreover, suppose that R(f) includes \(\mathbb {R}^n_{++}\) and is open in the relative topology of \(\Omega\). Define the following function

Then, \(\tilde{v}\) is upper semi-continuous on \(\Omega\), \(f=f^{\tilde{v}}\), and for every upper semi-continuous function \(w:\Omega \rightarrow \mathbb {R}\) with \(f=f^w\),
\[w(x)\ge w(y)\Leftrightarrow \tilde{v}(x)\ge \tilde{v}(y)\]

for every \(x,y\in \Omega\).

Proof

As in the proof of Corollary 4.1, define

First, we show that \(f=f^{\tilde{v}}\).Footnote 16 Suppose that \(x=f(p,m)=f^v(p,m)\). If \(y\in R(f), y\ne x\) and \(\langle p,y\rangle \le m\), then clearly \(\tilde{v}(x)=v(x)>v(y)=\tilde{v}(y)\).

Suppose that \(z\in \Omega \setminus R(f)\) and \(\langle p,z\rangle \le m\). Because \(x\in R(f)\), \(z\ne x\). Because R(f) is relatively open in \(\Omega\), there exists \(t\in ]0,1[\) such that \(y=(1-t)x+tz\in R(f)\). Suppose that \(y=f(q,w)\). Because \(\langle p,y\rangle \le m\) and \(y\ne x\), \(v(x)>v(y)\). This implies that \(\langle q,x\rangle >w\), and thus \(\langle q,z\rangle <w\). Choose \(\varepsilon >0\) such that if \(z'\in R(f)\) and \(\Vert z'-z\Vert <\varepsilon\), then \(\langle q,z'\rangle <w\). Then,

Therefore, \(x=f^{\tilde{v}}(p,m)\), and thus \(f=f^{\tilde{v}}\).

Second, we prove the upper semi-continuity of \(\tilde{v}\). Choose any \(a>0\) and suppose that \(\tilde{v}(x)<a\). By the definition of \(\tilde{v}(x)\), there exists \(z\in R(f)\) such that \(z\ge x\) and \(\tilde{v}(z)<a\). Suppose that \(z=f(p,m)\). Then, by the same argument as in the proof of Corollary 4.1, there exists \(\varepsilon >0\) such that \(\tilde{v}(y)<a\), where \(y=f(p,m+\varepsilon )\). Define \(U=\{z'\in \Omega |\langle p,z'\rangle <m+\varepsilon \}\). Then, \(\tilde{v}(z')<a\) for every \(z'\in U\), and U is an open neighborhood of x. This implies that \(\tilde{v}\) is upper semi-continuous.

Third, suppose that \(w:\Omega \rightarrow \mathbb {R}\) is an upper semi-continuous function such that \(f=f^w\). Define

for any \(q\in \mathbb {R}^n_{++}\). Again by Lemma 1 of Hosoya [4], if \(x=f(p,m)\), then both \(E^x\) and \(F^x\) are solutions to (17) with initial value condition \(E^x(p)=F^x(p)=m\). Thus, \(E^x\equiv F^x\). By the arguments in the proof of Corollary 4.1, \(v(x)=v(z)\) and \(w(x)=w(z)\) for \(z=f(\bar{p},E^x(\bar{p}))\).

Choose any \(x\in \Omega \setminus R(f)\). If \(z-x\in \mathbb {R}^n_{++}\), then \(z\in R(f)\), and thus there exists \((p,m)\in P\) such that \(z=f(p,m)\) and \(\langle p,x\rangle <m\), which implies that \(\tilde{v}(z)>\tilde{v}(x)\) and \(w(z)>w(x)\). Choose any sequence \((z^k)\) in R(f) such that \(z^k-x\in \mathbb {R}^n_{++}\) for every k and \(z^k\rightarrow x\) as \(k\rightarrow \infty\). Then, \(\tilde{v}(z^k)>\tilde{v}(x)\) for every k and \(\tilde{v}(z^k)\rightarrow \tilde{v}(x)\) as \(k\rightarrow \infty\). If \(\tilde{v}(x)>0\), then define \(y=f(\bar{p},\tilde{v}(x))\) and \(y^k=f(\bar{p},\tilde{v}(z^k))\). Then, \(w(z^k)=w(y^k)>w(y)\) and by the upper semi-continuity of w, \(w(x)\ge w(y)\). Additionally, because \(y^k\rightarrow y\) as \(k\rightarrow \infty\) and \(w(y^k)=w(z^k)>w(x)\), we have \(w(y)\ge w(x)\), which implies that \(w(y)=w(x)\). Meanwhile, if \(\tilde{v}(x)=0\), then \(y^k=f(\bar{p},\tilde{v}(z^k))\rightarrow 0\). By the same argument as above, \(w(x)=w(0)\). Hence, for every \(x\in \Omega\), either \(\tilde{v}(x)=0\) and \(w(x)=w(0)\) or \(\tilde{v}(x)>0\) and \(w(x)=w(f(\bar{p},\tilde{v}(x)))\).

Finally, choose any \(x,y\in \Omega\). If \(\tilde{v}(x)=\tilde{v}(y)=0\), then \(w(x)=w(y)=w(0)\). If \(\tilde{v}(x)>0=\tilde{v}(y)\), then \(w(x)=w(f(\bar{p},\tilde{v}(x)))>w(0)=w(y)\). If \(\tilde{v}(x)>0\) and \(\tilde{v}(y)>0\), define \(z=f(\bar{p},\tilde{v}(x))\) and \(z'=f(\bar{p},\tilde{v}(y))\). Then,

Therefore, in all cases,

\[w(x)\ge w(y)\Leftrightarrow \tilde{v}(x)\ge \tilde{v}(y),\]

which completes the proof.

Example 2

In Example 1, we considered the function

and solved the PDE (2). As a result, we have a solution to (2) with initial value condition \(u(p)=m\): if \(4p_1m\le p_2^2\), then the solution u(q) is given by (15), and if \(4p_1m>p_2^2\), then the solution u(q) is given by (16). Actually, this function satisfies all the requirements in Corollary 4.2, and thus we can calculate \(\tilde{v}\) rigorously. Set \(\bar{p}=(1,1)\). Then, we can easily verify that

Therefore, the integrability problem is solved in this situation.

Notes on Theorem 4.1 and Corollaries 4.1 and 4.2. The first famous result of integrability theory that considered Shephard’s lemma as a PDE was provided by Hurwicz and Uzawa [5]. They treated a differentiable and locally Lipschitz CoD that satisfies Walras’ law and an additional condition, and derived a theorem similar to our Theorem 4.1. Their additional condition is as follows: For every compact set \(C\subset \mathbb {R}^n_{++}\), there exists \(L>0\) such that if \(p\in C\) and \(m>0\), then \(\Vert \frac{\partial f}{\partial m}(p,m)\Vert \le L\). This condition was removed by Hosoya [3, 6]. However, even in those papers, f was also assumed to be differentiable. Our Theorem 4.1 is the first result that removes the differentiability assumption from integrability theory.

Note that, in economic theory, the demand function \(f^v\) is not necessarily differentiable. For example, consider \(v(x)=w(x_1)+x_2\), where w is a continuous, increasing, and strictly concave function that is twice continuously differentiable on \(]0,+\infty [\) with \(w''(x_1)<0\) for every \(x_1>0\) and \(\lim _{x_1\rightarrow 0}w'(x_1)=+\infty\). Then,

and this function is not differentiable but locally Lipschitz. Note that such v is very common in economic theory.Footnote 17
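To make the kink concrete, the sketch below takes the hypothetical choice \(w(x_1)=\sqrt{x_1}\), which satisfies the stated assumptions. Under this choice the first-order condition gives the interior demand \(x_1=p_2^2/(4p_1^2)\), and the budget constraint binds with \(x_2=0\) when \(4p_1m\le p_2^2\). The code compares the one-sided derivatives of \(x_1\) with respect to m at the switching income; the function names are illustrative only.

```python
def demand(p1, p2, m):
    """Demand for v(x) = sqrt(x1) + x2 (hypothetical choice w = sqrt)."""
    x1_interior = (p2 / (2.0 * p1)) ** 2   # solves w'(x1) = p1 / p2
    x1 = min(x1_interior, m / p1)          # corner x2 = 0 when income is low
    x2 = (m - p1 * x1) / p2                # Walras' law: spend all income
    return x1, x2

def one_sided_slopes(p1, p2, m, h=1e-6):
    """Left and right difference quotients of x1 with respect to income m."""
    base = demand(p1, p2, m)[0]
    left = (base - demand(p1, p2, m - h)[0]) / h
    right = (demand(p1, p2, m + h)[0] - base) / h
    return left, right
```

At prices (1, 2) the switching income is \(m=p_2^2/(4p_1)=1\). There the left slope is close to \(1/p_1=1\) and the right slope is 0, so the demand is Lipschitz in m but not differentiable at that point.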

There is another treatment of integrability theory that uses the Frobenius theorem. See, for example, Hosoya [7] for detailed arguments.

5 Application on the Frobenius Theorem

The classical total differential equation is written as follows:

\[Du(x)=\lambda (x)g(x), \tag{20}\]

where \(g:U\rightarrow \mathbb {R}^n\setminus \{0\}\) is given. We assume that \(U\subset \mathbb {R}^n\) is open. In the classical case, g is assumed to be continuously differentiable. For a given \(x^*\in U\), a pair of functions \(u:V\rightarrow \mathbb {R}\) and \(\lambda :V\rightarrow \mathbb {R}\) is a solution to equation (20) around \(x^*\) if and only if u is continuously differentiable, \(\lambda\) is continuous and positive, V is an open neighborhood of \(x^*\), and \(Du(x)=\lambda (x)g(x)\) for every \(x\in V\).

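Suppose that such a solution \((u,\lambda )\) exists and u is twice continuously differentiable. Then, \(\lambda\) is continuously differentiable and the Hessian matrix of u is symmetric. If \(i,j,k\in \{1,...,n\}\), then because \(\frac{\partial ^2u}{\partial x_j\partial x_i}=\frac{\partial ^2u}{\partial x_i\partial x_j}\), the following equations hold at \(x^*\):

\[\begin{aligned} \lambda \left( \frac{\partial g_i}{\partial x_j}-\frac{\partial g_j}{\partial x_i}\right)&=g_j\frac{\partial \lambda }{\partial x_i}-g_i\frac{\partial \lambda }{\partial x_j},\\ \lambda \left( \frac{\partial g_j}{\partial x_k}-\frac{\partial g_k}{\partial x_j}\right)&=g_k\frac{\partial \lambda }{\partial x_j}-g_j\frac{\partial \lambda }{\partial x_k},\\ \lambda \left( \frac{\partial g_k}{\partial x_i}-\frac{\partial g_i}{\partial x_k}\right)&=g_i\frac{\partial \lambda }{\partial x_k}-g_k\frac{\partial \lambda }{\partial x_i}. \end{aligned}\]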

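Multiplying the first equation by \(g_k(x^*)\), the second equation by \(g_i(x^*)\), and the third equation by \(g_j(x^*)\), summing all three equations, and dividing by \(\lambda (x^*)>0\), we have

\[g_i\left( \frac{\partial g_j}{\partial x_k}-\frac{\partial g_k}{\partial x_j}\right) +g_j\left( \frac{\partial g_k}{\partial x_i}-\frac{\partial g_i}{\partial x_k}\right) +g_k\left( \frac{\partial g_i}{\partial x_j}-\frac{\partial g_j}{\partial x_i}\right) =0. \tag{21}\]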

Equation (21) is called Jacobi’s integrability condition. The classical Frobenius theorem states that the converse is also true: that is, for every \(x^*\in U\), there exists a solution \((u,\lambda )\) around \(x^*\) if and only if Jacobi’s integrability condition holds.
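As a rough numerical illustration, the sketch below evaluates the cyclic expression \(g_i(\partial _kg_j-\partial _jg_k)+g_j(\partial _ig_k-\partial _kg_i)+g_k(\partial _jg_i-\partial _ig_j)\), which is assumed here to be the left-hand side of Jacobi's integrability condition, using central differences. Both test fields are hypothetical: the first is \(\lambda Du\) with \(u(x)=x_1x_2+x_3\) and \(\lambda (x)=e^{x_1}\), so the expression should vanish, and the second is a standard non-integrable field.

```python
import math

def partial(g, x, comp, var, h=1e-5):
    """Central-difference approximation of d g_comp / d x_var at x."""
    xp, xm = list(x), list(x)
    xp[var] += h
    xm[var] -= h
    return (g(xp)[comp] - g(xm)[comp]) / (2.0 * h)

def jacobi_residual(g, x, i, j, k):
    """Cyclic Jacobi expression for the index triple (i, j, k), 0-based."""
    gx = g(x)
    d = lambda a, b: partial(g, x, a, b)
    return (gx[i] * (d(j, k) - d(k, j))
            + gx[j] * (d(k, i) - d(i, k))
            + gx[k] * (d(i, j) - d(j, i)))

def g_integrable(x):
    """g = lambda * Du with u = x1*x2 + x3 and lambda = exp(x1)."""
    lam = math.exp(x[0])
    return [lam * x[1], lam * x[0], lam]

def g_twisted(x):
    """A field with nonzero Jacobi expression, hence no solution (u, lambda)."""
    return [-x[1], x[0], 1.0]
```

At any sample point, `jacobi_residual(g_integrable, x, 0, 1, 2)` should be zero up to discretization error, whereas `jacobi_residual(g_twisted, x, 0, 1, 2)` equals -2.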

Using Theorem 3.1, we can extend this classical result. Suppose that \(g:U\rightarrow \mathbb {R}^n\setminus \{0\}\) is not necessarily differentiable but locally Lipschitz. For a given \(x^*\in U\), a pair of functions \(u:V\rightarrow \mathbb {R},\ \lambda :V\rightarrow \mathbb {R}\) is a solution to (20) around \(x^*\) if and only if u is locally Lipschitz, \(\lambda\) is positive, V is an open neighborhood of \(x^*\), and \(Du(x)=\lambda (x)g(x)\) for almost every \(x\in V\). If, additionally, for every \(w\in \mathbb {R}\), \(u^{-1}(w)\) is either the empty set or an \(n-1\) dimensional \(C^1\) manifold, then we call this solution normal.Footnote 18 Meanwhile, we say that g satisfies extended Jacobi’s integrability condition if equation (21) holds for almost all \(x\in U\). Then, the following theorem holds.

Theorem 5.1

Suppose that \(n\ge 2\), U is open, and \(g:U\rightarrow \mathbb {R}^n\setminus \{0\}\) is locally Lipschitz. Then, g satisfies extended Jacobi’s integrability condition if and only if for every \(x^*\in U\), there exists a normal solution \((u,\lambda )\) to equation (20) around \(x^*\).

Before proving this result, we fix some terminology. In this paper, we use the definition of ‘manifold’ in Milnor [8] or Guillemin and Pollack [9]. Because this is slightly different from the usual definition used in differential topology, we provide definitions of some of the terms related to ‘manifold.’

First, let \(A\subset \mathbb {R}^N\) and \(f:A\rightarrow \mathbb {R}^M\). If \(x\in A\), we say that f is \(C^k\) at x if there exists a function \(F:V\rightarrow \mathbb {R}^M\) such that 1) \(V\subset \mathbb {R}^N\) is an open neighborhood of x in the topology of \(\mathbb {R}^N\), 2) F is \(C^k\) in the usual sense, and 3) \(F(y)=f(y)\) for all \(y\in A\cap V\). If f is \(C^k\) everywhere, then it is simply said to be \(C^k\). For a set \(X\subset \mathbb {R}^N\) and \(x\in X\), suppose that \(\phi :U\rightarrow V\) is a topological homeomorphism, where \(U\subset \mathbb {R}^m\) is an open set and \(V\subset X\) is an open neighborhood of x with respect to the relative topology of X. Then, \(\phi\) is called a continuous local parameterization around x. If both \(\phi\) and \(\phi ^{-1}\) are \(C^k\), then \(\phi\) is called a \(C^k\) local parameterization around x. A set X is called an m dimensional \(C^k\) manifold if for every \(x\in X\), there exists a \(C^k\) local parameterization \(\phi :U\rightarrow V\) around x, where \(U\subset \mathbb {R}^m\).Footnote 19

Second, suppose that X is an m dimensional \(C^k\) manifold, and \(x\in X\). Define

This set \(T_x(X)\) is called the tangent space of X at x. It is well-known that \(T_x(X)\) is an m dimensional linear space. Next, let \(Y\subset X\) be open, \(x\in Y\), and \(f:Y\rightarrow \mathbb {R}^M\) be a \(C^1\) function. For \(v\in T_x(X)\), choose a \(C^1\) curve \(c:I\rightarrow X\) such that \(c(0)=x\) and \(\dot{c}(0)=v\). If we define \(c_f(t)=f(c(t))\), then \(\dot{c}_f(0)\) depends only on v and is independent of the choice of c(t). Hence, we can define the derivative \(df_x:v\mapsto \dot{c}_f(0)\) of f at x. It is well-known that \(df_x\) is a linear mapping from \(T_x(X)\) into \(\mathbb {R}^M\).

Third, we note the inverse function theorem. Suppose that X is an m dimensional \(C^k\) manifold, \(x\in X\), \(Y\subset X\) is an open neighborhood of x, and \(f:Y\rightarrow \mathbb {R}^M\) is \(C^1\). If \(df_x\) is a bijection, then there exists an open set \(U\subset \mathbb {R}^M\) and an open neighborhood \(V\subset Y\) of x such that f is a \(C^1\) diffeomorphism from V onto U. For a proof, see Section 1.3 of Guillemin and Pollack [9].

This completes the preparation for the proof of Theorem 5.1.

Proof

First, we introduce lemmas.

Lemma 5.1

Suppose that \((u,\lambda )\) is a solution to (20) around \(x^*\). Let V be the domain of u and \(\lambda\). Choose any \(x\in V\). Suppose that \(g_{i^*}(x)\ne 0\). We use the following notation: if \(\tilde{y}=(y_1,...,y_{i^*-1},y_{i^*+1},...,y_n)\), then \((\tilde{y},y_{i^*})=(y_1,...,y_{i^*-1},y_{i^*},y_{i^*+1},...,y_n)\), and conversely, if \(y=(y_1,...,y_n)\), then \(\tilde{y}=(y_1,...,y_{i^*-1},y_{i^*+1},...,y_n)\). For \(i\in \{1,...,n\}\setminus \{i^*\}\), define

and consider the following ODE

Then, \(u(\tilde{y},c(1;\tilde{y},x_{i^*}))=u(x)\) for every \(\tilde{y}\) such that \([0,1]\times \{\tilde{y}\}\times \{x_{i^*}\}\) is included in the domain of the solution function c and \(((1-t)\tilde{x}+t\tilde{y},c(t;\tilde{y},x_{i^*}))\in V\) for every \(t\in [0,1]\).

Proof of Lemma 5.1

Without loss of generality, we assume that \(i^*=n\). We note that equation (22) is the same as equation (8). Therefore, we can apply Lemma 3.1. By Lemma 3.1, there exists a sequence \((\tilde{y}^k,x_n^k)\) such that \((\tilde{y}^k,x_n^k)\rightarrow (\tilde{y},x_n)\) as \(k\rightarrow \infty\) and for all k and almost all \(t\in [0,1]\), u is differentiable and \(Du=\lambda g\) at \(((1-t)\tilde{x}+t\tilde{y}^k,c(t;\tilde{y}^k,x_n^k))\). Then, for almost all \(t\in [0,1]\),

which implies that \(u(\tilde{y}^k,c(1;\tilde{y}^k,x_n^k))=u(\tilde{x},x_n^k)\). Letting \(k\rightarrow \infty\), \(u(\tilde{y},c(1;\tilde{y},x_n))=u(x)\), as desired.
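The key step above is a chain-rule computation. The following sketch spells it out under two assumptions about formulas not reproduced here: that \(f_i=-g_i/g_n\) for \(i\in \{1,...,n-1\}\), and that ODE (22) reads \(\dot{c}(t)=\langle f((1-t)\tilde{x}+t\tilde{y},c(t)),\tilde{y}-\tilde{x}\rangle\) with \(c(0)=x_n\). Write \(y(t)=((1-t)\tilde{x}+t\tilde{y}^k,c(t;\tilde{y}^k,x_n^k))\), a notation introduced only for this sketch. At every t at which u is differentiable at y(t) with \(Du=\lambda g\),

\[\begin{aligned} \frac{d}{dt}u(y(t))&=\sum _{i=1}^{n-1}\frac{\partial u}{\partial x_i}(y(t))(y_i^k-x_i)+\frac{\partial u}{\partial x_n}(y(t))\dot{c}(t)\\ &=\lambda (y(t))\left[ \sum _{i=1}^{n-1}g_i(y(t))(y_i^k-x_i)+g_n(y(t))\dot{c}(t)\right] \\ &=\lambda (y(t))g_n(y(t))\left[ \dot{c}(t)-\langle f(y(t)),\tilde{y}^k-\tilde{x}\rangle \right] =0. \end{aligned}\]

Because u is locally Lipschitz and \(t\mapsto y(t)\) is continuously differentiable, \(t\mapsto u(y(t))\) is Lipschitz on [0, 1], and a Lipschitz function whose derivative vanishes almost everywhere is constant. This gives \(u(y(1))=u(y(0))\).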

Lemma 5.2

Suppose that \((u,\lambda )\) is a normal solution to (20) around \(x^*\). Let V be the domain of u and \(\lambda\). Suppose that \(g_{i^*}(x^*)\ne 0\). We use the notations used in Lemma 5.1. Then, there exists \(\varepsilon >0\) such that 1) for \(\bar{W}\equiv \prod _{i=1}^n[x_i^*-\varepsilon ,x_i^*+\varepsilon ]\subset V\), \(g_{i^*}\ne 0\) in this set, 2) the solution function \(c(t;\tilde{x},x_{i^*})\) of the ODE

is defined on \([0,1]\times \bar{W}\) and \(((1-t)\tilde{x}^*+t\tilde{x},c(t;\tilde{x},x_{i^*}))\in V\) for all \(t\in [0,1]\) and \(x\in \bar{W}\), and 3) if we define

then \(E_{x_{i^*}}\) is continuously differentiable at \(\tilde{x}\) for every \(x\in W=\prod _{i=1}^n]x_i^*-\varepsilon ,x_i^*+\varepsilon [\) and

Proof of Lemma 5.2

Again, we assume without loss of generality that \(i^*=n\). Because the constant function \(c(t)\equiv x_n^*\) satisfies equation (23) with \(\tilde{x}=\tilde{x}^*\) and \(x_n=x_n^*\), \(t\mapsto c(t;\tilde{x}^*,x_n^*)\) is defined on \(\mathbb {R}\). Because the domain of the solution function c is open and c is continuous, there exists \(\varepsilon >0\) such that requirements 1) and 2) of this lemma are satisfied. Meanwhile, because \(g_n\ne 0\) at \(x^*\) and the solution function c is continuous, we can assume that \(\varepsilon\) is so small that there exists \(\varepsilon '>\varepsilon\) such that \(g_n\ne 0\) on \(W'=\prod _{i=1}^n]x_i^*-\varepsilon ',x_i^*+\varepsilon '[\subset V\), and \(|E_{x_n}(\tilde{x})-x_n^*|<\varepsilon '\) for every \(x\in W\).

Suppose that \(n=2\) and let \(x\in W\). If \(x_1\ne x_1^*\), define

Then,

and \(d(x_1-x_1^*)=E_{x_2}(x_1)\). Therefore, \(E_{x_2}'(x_1)=f(x_1,E_{x_2}(x_1))\) for such x. If \(x_1=x_1^*\), then for \(0<|h|<\varepsilon\),

which implies that \(E_{x_2}'(x_1)=f(x_1,E_{x_2}(x_1))\). Hence, claim 3) holds when \(n=2\), and hereafter we can assume that \(n\ge 3\).

Choose any \(x\in W\) such that u is differentiable and \(Du=\lambda g\) at \(\bar{x}\equiv (\tilde{x},E_{x_n}(\tilde{x}))\in W'\). Let \(X=u^{-1}(u(\bar{x}))\cap W'\). Because u is a normal solution to (20), X is an \(n-1\) dimensional \(C^1\) manifold. Define a function \(\chi :X\rightarrow \mathbb {R}^{n-1}\) as

Then, \(\chi\) is the restriction of the linear mapping

into X. Therefore, we can easily check that it is \(C^1\) and the derivative \(d\chi _{\bar{x}}\) is the restriction of K to the tangent space \(T_{\bar{x}}(X)\). Choose any \(\tilde{v}\in \mathbb {R}^{n-1}\), and define \(v_n=\langle f(\bar{x}),\tilde{v}\rangle\). Because \(f_i=-\frac{g_i}{g_n}\), \(\langle v,g(\bar{x})\rangle =0\). This implies that \(\langle v,Du(\bar{x})\rangle =0\), and thus \(v\in T_{\bar{x}}(X)\).Footnote 20 Hence, \(\tilde{v}=d\chi _{\bar{x}}(v)\), and thus the linear mapping \(d\chi _{\bar{x}}\) is a bijection from \(T_{\bar{x}}(X)\) onto \(\mathbb {R}^{n-1}\). Therefore, by the inverse function theorem, there exists an open neighborhood \(U'\) of \(\tilde{x}\) in \(\mathbb {R}^{n-1}\) and \(V'\) of \(\bar{x}\) in X such that \(\chi\) is a diffeomorphism from \(V'\) onto \(U'\). Without loss of generality, we can assume that \(|y_i-x_i^*|<\varepsilon\) for all \(\tilde{y}\in U'\) and \(i\in \{1,...,n-1\}\). By the definition of the relative topology, there exists \(\delta >0\) such that if \(y\in \mathbb {R}^n\) and \(\Vert y-\bar{x}\Vert <\delta\), then \(y\in X\) if and only if \(y\in V'\). Let \(U''=\{\tilde{y}\in U'|\Vert (\tilde{y},E_{x_n}(\tilde{y}))-\bar{x}\Vert <\delta \}\). Then, \(U''\) is an open neighborhood of \(\tilde{x}\). Define \(\phi =\chi ^{-1}\) on \(U''\). Because of Lemma 5.1, for every \(\tilde{y}\in U''\), \(u(\tilde{y},E_{x_n}(\tilde{y}))=u(\bar{x})\), and thus \((\tilde{y},E_{x_n}(\tilde{y}))\in X\). Therefore, \((\tilde{y},E_{x_n}(\tilde{y}))\in V'\) and \(\chi (\tilde{y},E_{x_n}(\tilde{y}))=\tilde{y}\). This means that \(\phi _n(\tilde{y})=E_{x_n}(\tilde{y})\). Because \(\phi\) is \(C^1\) on \(U''\), \(E_{x_n}\) is also \(C^1\) on \(U''\), as desired. By a simple calculation,

and thus,

for such x.

Now, choose any \(x\in W\) such that \(x_{n-1}\ne x_{n-1}^*\). Because we assumed that \(n\ge 3\), \(n-2\ge 1\). Let \(\hat{x}=(x_1,...,x_{n-2})\) and for \(\hat{y}=(y_1,...,y_{n-2})\), we write \(\tilde{y}=(y_1,...,y_{n-2},x_{n-1})\) unless otherwise stated. Then, by Lemma 3.1, for every \(i\in \{1,...,n-2\}\), there exists \(\delta '>0\) and a sequence \((t^k,\hat{x}^k,x_n^k)\) in \([0,1]\times \mathbb {R}^{n-2}\times \mathbb {R}\) such that \(t^k\rightarrow 1,\ \hat{x}^k\rightarrow \hat{x},\ x_n^k\rightarrow x_n\) as \(k\rightarrow \infty\), and for all k and almost every \(s\in ]-\delta ',\delta '[\), \(((1-t^k)\tilde{x}^*+t^k(\tilde{x}^k+se_i),c(t^k;\tilde{x}^k+se_i,x_n^k))\in W'\), and u is differentiable and \(Du=\lambda g\) at \(((1-t^k)\tilde{x}^*+t^k(\tilde{x}^k+se_i),c(t^k;\tilde{x}^k+se_i,x_n^k))\), where \(e_i\) denotes the i-th unit vector. Define \(\hat{y}^k=(1-t^k)\hat{x}^*+t^k\hat{x}^k,\ y_{n-1}^k=(1-t^k)x_{n-1}^*+t^kx_{n-1}\), and \(\tilde{y}^k=(\hat{y}^k,y_{n-1}^k)\). If we define \(c(t)=c(tt^k;\tilde{x}^k,x_n^k)\), then

and thus \(c(t)=c(t;\tilde{y}^k,x_n^k)\), and in particular,

By the same arguments,

By assumption, if \(|\ell |<\delta '\), then

By the dominated convergence theorem,

which implies that

Next, choose any \(x\in W\) and \(i\in \{1,...,n-2\}\). Let \(e=(1,1,...,1)\in \mathbb {R}^n\) and define \(x^k=x+k^{-1}e\). Then, for sufficiently large k, \(x^k_{n-1}\ne x_{n-1}^*\). Therefore, there exists \(\delta '>0\) such that if \(|s|<\delta '\), then for all sufficiently large k, \(E_{x_n^k}\) is partially differentiable with respect to i at \(\tilde{x}^k+se_i\) and

Hence, if \(|\ell |<\delta '\),

By the dominated convergence theorem,

and thus \(E_{x_n}\) is partially differentiable with respect to i at x and

To summarize the above arguments, we obtain the following result: if \(x\in W\) and \(i\in \{1,...,n-2\}\), then

Replacing \(n-1\) by 1 and repeating the above arguments, we find that

which completes the proof of Lemma 5.2.

We now prove the ‘if’ part of Theorem 5.1. Suppose that for every \(x^*\in U\), there exists a normal solution to (20) around \(x^*\). By Rademacher’s theorem, g is differentiable almost everywhere. Choose any \(x^*\in U\) such that g is differentiable at \(x^*\). Because \(g(x^*)\ne 0\), we can assume without loss of generality that \(g_n(x^*)\ne 0\). Set \(i^*=n\) and choose \(\varepsilon >0\) as in Lemma 5.2. Then, the function \(E_{x_n^*}\) is \(C^1\) and satisfies the following equation

where \(\tilde{x}^*=(x_1^*,...,x_{n-1}^*)\). Therefore, \(E_{x_n^*}\) is twice differentiable at \(\tilde{x}^*\), and by Lemma 3.2, the Hessian matrix \(D^2E_{x_n^*}(\tilde{x}^*)\) is symmetric. If \(s_{ij}\) is the (i, j)-th element of \(D^2E_{x_n^*}(\tilde{x}^*)\), thenFootnote 21

Because \(D^2E_{x_n^*}(\tilde{x}^*)\) is symmetric, \(s_{ij}=s_{ji}\) for \(i,j\in \{1,...,n-1\}\). Because \(g_n\ne 0\),

Now, suppose that \(i,j,k\in \{1,...,n\}\). If \(i=j\), \(j=k\), or \(k=i\), then (21) is automatically satisfied. Hence, we assume that \(i\ne j\ne k\ne i\). If \(k=n\), then (21) is equivalent to (27), and thus we assume that \(i,j,k\in \{1,...,n-1\}\). Then, by (27),

Multiplying the first equation by \(g_k\), the second equation by \(g_i\), and the third equation by \(g_j\), and summing these three equations, we obtain

Because \(x^*\) is an arbitrary point at which g is differentiable, we conclude that g satisfies extended Jacobi’s integrability condition, and the ‘if’ part is correct.

Next, we treat the ‘only if’ part. Suppose that g satisfies extended Jacobi’s integrability condition, and choose any \(x^*\in U\). Without loss of generality, we assume that \(g_n(x^*)\ne 0\). For \(i\in \{1,...,n-1\}\), define

\[f_i(x)=-\frac{g_i(x)}{g_n(x)}.\]

Then, f(x) is defined and Lipschitz on some open neighborhood W of \(x^*\). Without loss of generality, we assume that \(g_n(x)>0\) on W.

If g is differentiable at \(x\in W\), then by (26),

and by equation (21), \(s_{ij}(x)=s_{ji}(x)\) for almost all \(x\in W\), that is, f is integrable in the sense of Theorem 3.1.
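The link between the symmetry of \(s_{ij}\) and Jacobi's condition can be checked by direct computation. The following is a sketch under the assumptions that \(f_i=-g_i/g_n\) and that \(s_{ij}=\frac{\partial f_i}{\partial x_j}+\frac{\partial f_i}{\partial x_n}f_j\), which is what the chain rule gives for the second derivatives of a function E satisfying \(DE(\tilde{x})=f(\tilde{x},E(\tilde{x}))\). Writing \(g_{a,b}\) for \(\frac{\partial g_a}{\partial x_b}\), a shorthand used only here, we have

\[\begin{aligned} s_{ij}&=\frac{-g_ng_{i,j}+g_ig_{n,j}+g_jg_{i,n}}{g_n^2}-\frac{g_ig_jg_{n,n}}{g_n^3},\\ s_{ij}-s_{ji}&=-\frac{1}{g_n^2}\left[ g_i(g_{j,n}-g_{n,j})+g_j(g_{n,i}-g_{i,n})+g_n(g_{i,j}-g_{j,i})\right] . \end{aligned}\]

The bracket is the cyclic Jacobi expression for the index triple (i, j, n). Hence, wherever g is differentiable and \(g_n\ne 0\), \(s_{ij}=s_{ji}\) holds if and only if that expression vanishes, and only the triples containing n are needed for this step.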

Consider the following PDE:

and the corresponding ODE:

Suppose that \(c(t;\tilde{x},w)\) is the solution function of (29). Then, the domain of this function includes \([0,1]\times \{\tilde{x}^*\}\times \{0\}\), because \(c(t)\equiv x_n^*\) is a solution to (29) with parameter \((\tilde{x}^*,0)\). Therefore, there exists \(\varepsilon >0\) such that if \(|x_i-x_i^*|\le 2\varepsilon\) for all \(i\in \{1,...,n-1\}\) and \(|w|\le 2\varepsilon\), then \(t\mapsto c(t;\tilde{x},w)\) is defined on [0, 1] and \(((1-t)\tilde{x}^*+t\tilde{x},c(t;\tilde{x},w))\in W\). Note that, by Theorem 3.1, \(E_w(\tilde{x})=c(1;\tilde{x},w)\) is a solution to (28).Footnote 22 By the same arguments in the proof of Lemma 3.1, we can show that \(c(t;\tilde{x},w)\) is increasing in w. Because \(c(t;\tilde{x},w)\) is locally Lipschitz and \(c(1;\tilde{x}^*,0)=x_n^*\), there exists \(\delta \in ]0,\varepsilon ]\) such that if \(|x_i-x_i^*|\le \delta\) for every \(i\in \{1,...,n-1\}\), then \(c(1;\tilde{x},-\varepsilon )<x_n^*-\delta\) and \(c(1;\tilde{x},\varepsilon )>x_n^*+\delta\). If \(|x_i-x_i^*|\le \delta\) for every \(i\in \{1,...,n\}\), then there uniquely exists \(w\in [-\varepsilon ,\varepsilon ]\) such that \(x_n=c(1;\tilde{x},w)\). Define u(x) as such a w. Then, u is defined on \(\bar{V}=\{x\in \mathbb {R}^n||x_i-x_i^*|\le \delta \text{ for } \text{ all } i\in \{1,...,n\}\}\). Let \(V=\{x\in \mathbb {R}^n||x_i-x_i^*|<\delta \text{ for } \text{ all } i\in \{1,...,n\}\}\).
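The construction of u in this paragraph can be mimicked numerically for \(n=2\). The sketch below is illustrative only: it assumes that ODE (29) reads \(\dot{c}(t)=f((1-t)x_1^*+tx_1,c(t))(x_1-x_1^*)\) with \(c(0)=x_2^*+w\), and it uses the hypothetical field \(g(x)=(x_2,x_1)\) around \(x^*=(1,1)\), so that \(f=-x_2/x_1\). The value u(x) is found by bisection, using that \(w\mapsto c(1;x_1,w)\) is increasing. For this g, the exact answer is \(u(x)=x_1x_2-1\).

```python
X_STAR = (1.0, 1.0)  # hypothetical base point x*

def f(x1, c):
    """f_1 = -g_1 / g_2 for the hypothetical field g(x) = (x2, x1)."""
    return -c / x1

def c_at_one(x1, w, steps=400):
    """RK4 integration of the assumed ODE (29) from c(0) = x2* + w to t = 1."""
    h = 1.0 / steps
    dx = x1 - X_STAR[0]
    c = X_STAR[1] + w

    def rhs(t, c):
        return f((1.0 - t) * X_STAR[0] + t * x1, c) * dx

    for k in range(steps):
        t = k * h
        k1 = rhs(t, c)
        k2 = rhs(t + h / 2, c + h * k1 / 2)
        k3 = rhs(t + h / 2, c + h * k2 / 2)
        k4 = rhs(t + h, c + h * k3)
        c += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return c

def u(x1, x2, lo=-0.5, hi=0.5, iters=60):
    """Solve c(1; x1, w) = x2 for w by bisection on [lo, hi]."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if c_at_one(x1, mid) < x2:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

The level sets of the computed u are the hyperbolas \(x_1x_2=\text{const}\), which are the graphs of \(x_1\mapsto c(1;x_1,w)\), in line with the role of \(E_w\) in the proof.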

Define \(\tilde{V}=\{\tilde{x}\in \mathbb {R}^{n-1}||x_i-x_i^*|<\delta \text{ for } \text{ all } i\in \{1,...,n-1\}\}\). For all \((\tilde{x},w)\in \tilde{V}\times ]-\varepsilon ,\varepsilon [\), if \(|h|<\varepsilon\), then

and

for every \(t\in [0,1]\), where \(\tilde{x}(t)=(1-t)\tilde{x}^*+t\tilde{x}\) and \(L>0\) is some constant independent of \((t,\tilde{x},w)\). Therefore, if \(\Vert \tilde{x}-\tilde{x}^*\Vert <(2L)^{-1}\), then \(|c(1;\tilde{x},w+h)-c(1;\tilde{x},w)|\ge 2^{-1}|h|\). This implies that if \(\delta >0\) is sufficiently small, then \(\frac{\partial c}{\partial w}(1;\tilde{x},w)\ge 2^{-1}\) whenever the left-hand side is defined. Because \(x_n\mapsto u(\tilde{x},x_n)\) is the inverse function of \(w\mapsto c(1;\tilde{x},w)\), if \(\delta >0\) is sufficiently small, then u is Lipschitz in \(x_n\) on \(\bar{V}\). Hence, hereafter, we assume that \(\delta >0\) is so small that u is Lipschitz in \(x_n\) on \(\bar{V}\).

Next, choose any \(x\in V\) and suppose that \(u(x)=w\). Then, \(E_w(\tilde{x})=x_n\), and the graph of \(E_w(\tilde{y})\) coincides with the set \(u^{-1}(w)\), and thus the latter set is an \(n-1\) dimensional \(C^1\) manifold. Moreover, because \(DE_w(\tilde{x})=f(x)\), there exists \(\delta '>0\) such that if \(|h|<\delta '\), then \((x_1,...,x_i+h,...,x_n)\in \bar{V}\), and there exists \(y_n(h)\in [x_n-(|f_i(x)|+1)|h|,x_n+(|f_i(x)|+1)|h|]\) such that \(y(h)=(x_1,...,x_i+h,...,x_{n-1},y_n(h))\in \bar{V}\) and \(u(y(h))=w\). Because u is Lipschitz in \(x_n\) on \(\bar{V}\), there exists \(L>0\) independent of h such that

which implies that u is Lipschitz on V. By Rademacher’s theorem, u is differentiable at almost every point \(x\in V\). If u is differentiable at \(x\in V\), then for \(w=u(x)\), \(E_w(\tilde{x})=x_n\) and the graph of \(E_w\) coincides with \(u^{-1}(w)\), and thus by the chain rule,

which implies that either \(\frac{\partial u}{\partial x_i}(x)=g_i(x)=0\) or

for every \(i\in \{1,...,n-1\}\). Therefore, if we define

then

If \(\lambda (x)\) is positive for almost all \(x\in V\), then we can re-define \(\lambda (x)\) such that \(\lambda (x)=1\) when either \(\frac{\partial u}{\partial x_n}(x)\) is zero or undefined, and thus we can assume \(\lambda (x)\) is positive for all \(x\in V\). Hence, it suffices to show that \(\lambda (x)\) is positive for almost all \(x\in V\). Fix \(\tilde{x}\in \tilde{V}\). Note that \(\varphi :x_n\mapsto u(\tilde{x},x_n)\) is the inverse function of \(\psi :w\rightarrow c(1;\tilde{x},w)\), and the latter function is increasing. Therefore, \(\varphi\) is increasing. Moreover, \(\varphi\) is Lipschitz on \([x_n^*-\delta ,x_n^*+\delta ]\), and \(\psi\) is Lipschitz on \([-\varepsilon ,\varepsilon ]\). This implies that for almost all \(x_n\in [x_n^*-\delta ,x_n^*+\delta ]\), \(\varphi\) is differentiable at \(x_n\) and \(\psi\) is differentiable at \(\varphi (x_n)\). For such \(x_n\), \(\psi '(\varphi (x_n))\varphi '(x_n)=1\), and thus \(\varphi '(x_n)=\frac{\partial u}{\partial x_n}(\tilde{x},x_n)>0\). Because \(g_n(x)>0\), \(\lambda (x)>0\) for almost all \(x\in V\), which completes the proof.

This theorem has two important corollaries. The first connects Theorem 5.1 to the classical Frobenius theorem.

Corollary 5.1

Suppose that \(n\ge 2\), \(k\in \mathbb {N}\cup \{\infty \}\), \(U\subset \mathbb {R}^n\) is open, and \(g:U\rightarrow \mathbb {R}^n\setminus \{0\}\) is \(C^k\) and satisfies extended Jacobi’s integrability condition. Then, 1) for every \(x^*\in U\), there exists a normal solution \((u,\lambda )\) of (20) around \(x^*\) such that u is \(C^k\), \(\lambda\) is \(C^{k-1}\), and \(Du(x)=\lambda (x)g(x)\) whenever both sides are defined, and 2) for every normal solution \((u,\lambda )\) of (20) and \(w\in \mathbb {R}\), \(u^{-1}(w)\) is either the empty set or an \(n-1\) dimensional \(C^{k+1}\) manifold.

Proof

Recall the definition of u constructed in the proof of Theorem 5.1. Without loss of generality, we assume that \(g_n(x^*)\ne 0\), and for some \(\varepsilon >0\) and \(\delta >0\), we have shown that if \(x\in W\equiv \prod _{i=1}^n]x_i^*-\delta ,x_i^*+\delta [\), then there exists \(w\in ]-\varepsilon ,\varepsilon [\) such that

$$\begin{aligned} c(1;\tilde{x},x_n^*+w)=x_n, \end{aligned}$$

where c is the solution function of (29). Then, we define \(u(x)=w\), that is, for \(x\in W\),

$$\begin{aligned} c(1;\tilde{x},x_n^*+u(x))=x_n. \end{aligned}$$
If g is \(C^k\), then \((x,w)\mapsto c(1;\tilde{x},x_n^*+w)-x_n\) is also \(C^k\). If \(\frac{\partial c}{\partial x_n}(1;\tilde{x},x_n^*+w)=0\), then u would fail to be locally Lipschitz, which contradicts Theorem 5.1. Therefore, we can apply the implicit function theorem, and thus u is \(C^k\). By the chain rule, \(\frac{\partial u}{\partial x_n}(x)>0\) for all x. Moreover, in the proof of Theorem 5.1, we have shown that \(Du(x)=\lambda (x)g(x)\) for all x at which both sides are defined and u is differentiable. Because u is differentiable everywhere, claim 1) holds.

Next, suppose that \((u,\lambda )\) is a normal solution to (20). If \(x\in u^{-1}(w)\), then by Lemmas 5.1 and 5.2, there exists a \(C^{k+1}\) local parameterization function \((\tilde{y},E_{u(x)}(\tilde{y}))\) of \(u^{-1}(w)\) defined on a neighborhood of x, where \(E_{u(x)}\) is a solution to (25). Therefore, if \(u^{-1}(w)\) is nonempty, then it is an \(n-1\) dimensional \(C^{k+1}\) manifold. This completes the proof.
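To illustrate Corollary 5.1, consider the following example, which is ours and is only a sketch. Let \(n=2\), \(U=\mathbb {R}^2_{++}\), and \(g(x)=(x_2,2x_1)\). We assume here that the integrability condition imposes no restriction when \(n=2\), and that a continuously differentiable u with \(Du(x)\ne 0\) on U qualifies as a normal solution. The function g is \(C^{\infty }\) but is not a gradient, because \(\frac{\partial g_1}{\partial x_2}(x)=1\ne 2=\frac{\partial g_2}{\partial x_1}(x)\). Nevertheless, with \(u(x)=x_1x_2^2\) and \(\lambda (x)=x_2>0\),

$$\begin{aligned} Du(x)=\begin{pmatrix} x_2^2\\ 2x_1x_2 \end{pmatrix}=x_2\begin{pmatrix} x_2\\ 2x_1 \end{pmatrix}=\lambda (x)g(x). \end{aligned}$$

For \(w>0\), the level set is \(u^{-1}(w)=\{(x_1,\sqrt{w/x_1})|x_1>0\}\), which is the graph of a \(C^{\infty }\) function and hence a one-dimensional manifold, whereas \(u^{-1}(w)\) is empty for \(w\le 0\). Both alternatives in claim 2) therefore occur in this example.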

Next, we define the integral manifold. Suppose that \(n\ge 2\), \(U\subset \mathbb {R}^n\) is open, and \(g:U\rightarrow \mathbb {R}^n\) is locally Lipschitz and satisfies extended Jacobi’s integrability condition. An \(n-1\) dimensional \(C^1\) manifold \(X\subset U\) is called an integral manifold of g if and only if for every \(x\in X\), there exists a normal solution \((u,\lambda )\) to (20) defined on an open neighborhood \(V\subset U\) of x such that \(X\cap V=u^{-1}(u(x))\). Then, the following result holds.

Corollary 5.2

Suppose that \(n\ge 2\), \(U\subset \mathbb {R}^n\) is open, and \(g:U\rightarrow \mathbb {R}^n\setminus \{0\}\) is locally Lipschitz and satisfies extended Jacobi’s integrability condition. Let X be an integral manifold, and suppose that \(x\in X\). Then, g(x) is normal to the tangent space \(T_x(X)\).

Proof

Suppose without loss of generality that \(g_n(x)\ne 0\). Let \((u,\lambda )\) be a normal solution to (20) defined on some neighborhood V of x, and suppose that \(Y\equiv X\cap V=u^{-1}(u(x))\). Define a function f as in Lemma 5.1 and let \(E_{u(x)}\) be the solution to (25). We have already shown in Lemma 5.2 that \(E_{u(x)}\) is \(C^1\) around \(\tilde{x}\). By the inverse function theorem, \(\phi (\tilde{y})=(\tilde{y},E_{u(x)}(\tilde{y}))\) is a \(C^1\) local parameterization of Y around x. This implies that the tangent space \(T_x(X)\) is normal to g(x) (see footnote 23). This completes the proof.
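As a concrete check of Corollary 5.2, consider the following example, which is ours and is only a sketch. Let \(n=3\), \(U=\mathbb {R}^3\setminus \{0\}\), and \(g(x)=x\). We assume that \(u(x)=\frac{1}{2}\langle x,x\rangle\) together with \(\lambda (x)=1\) is a normal solution in the sense used above, so that each sphere \(X=\{x\in U|\langle x,x\rangle =r^2\}\) with \(r>0\) is an integral manifold of g. Fix \(x\in X\) with \(x_3>0\). Around x, the set X is parameterized by \(\phi (\tilde{y})=(\tilde{y},E(\tilde{y}))\), where \(E(\tilde{y})=\sqrt{r^2-y_1^2-y_2^2}\), and

$$\begin{aligned} D\phi (\tilde{x})=\begin{pmatrix} 1 & 0\\ 0 & 1\\ -\frac{x_1}{x_3} & -\frac{x_2}{x_3} \end{pmatrix},\quad g(x)^TD\phi (\tilde{x})=\left( x_1-x_3\frac{x_1}{x_3},\ x_2-x_3\frac{x_2}{x_3}\right) =(0,0). \end{aligned}$$

Because the range of \(D\phi (\tilde{x})\) is the tangent space \(T_x(X)\), the vector g(x) is normal to \(T_x(X)\). In this example, the entries \(-x_i/x_3\) coincide with \(-g_i(x)/g_3(x)\), which is exactly what makes the inner products vanish.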

This theorem and its corollaries can be easily extended to a non-smooth vector field defined on a differentiable manifold, and to the analysis of foliations with a non-smooth vector field.

Notes on Theorem 5.1. In recent years, the Frobenius theorem has been treated as a result on either vector fields or differential forms in differentiable manifolds. For a modern treatment of this theorem, see, for example, Auslander and MacKenzie [10], Hicks [11], Kosinski [12], Matsushima [13], and Sternberg [14]. The relationship between the modern Frobenius theorem and Jacobi’s integrability condition was explained by Hosoya [15].

However, in almost all textbooks that treat the Frobenius theorem, our function g is assumed to be \(C^{\infty }\). Meanwhile, a few applications of this theorem require the smoothness of g to be relaxed. For example, Debreu [16, 17] treated g as a \(C^1\) function, and argued that a global version of the Frobenius theorem cannot be established in general. This result is related to the theory of consumer’s behavior, which was discussed in the previous section. In this connection, Hosoya [15] treated g as a \(C^k\) function and derived a general result regarding the Frobenius theorem. However, to the best of our knowledge, our Theorem 5.1 is the first result that dispenses with the differentiability of g in this context.

Note that the most general form of the Frobenius theorem differs from that given in Theorem 5.1. In the general Frobenius theorem, a system \((g^1,...,g^k)\) of functions is treated. To treat this problem appropriately, we must extend Theorem 3.1. We leave this for future work.

6 Conclusion

In this paper, we considered a first-order nonlinear PDE (2) such that the system is not necessarily differentiable but is locally Lipschitz, and first derived the necessary and sufficient condition for the existence of a global solution under integrability. Using this result, we easily obtained the necessary and sufficient conditions for the existence of local solutions. Moreover, using the above results, we succeeded in solving the integrability problem of consumer theory when the demand function is not necessarily differentiable. We also confirmed that our theorem on the existence of global solutions can be applied to obtaining an extension of Frobenius’ theorem.

We note a few possibilities for further research. First, for the differential equation (2) when f is a matrix-valued function, the integrability condition becomes slightly more complicated. The corresponding result is classically known when f is \(C^1\), but the problem remains open when f is only locally Lipschitz.

Next, we can consider applying the extension of Frobenius’ theorem treated in this paper to the integrability problem in economics. Frobenius’ theorem was classically used by Antonelli [18] to solve this problem, and recently, Hosoya [7] provided a complete solution in the continuously differentiable case. However, along this line, no one has yet attempted to solve the problem without differentiability.

Finally, because Frobenius’ theorem can be discussed using only the notion of Lipschitz continuity, it may be possible to discuss it on topological manifolds that do not have a differentiable structure. However, in that case, we will need a new language to talk about differential forms and vector fields on such manifolds.

Data Availability Statement

Not applicable.

Code Availability

Not applicable.

Notes

In economics, the inner product \(x_1y_1+...+x_ny_n\) is usually denoted by \(x\cdot y\). However, throughout this paper, we use \(\langle x,y\rangle\) instead of \(x\cdot y\).

Throughout this paper, for a function u, \(Du(x^*)\) is a column vector that represents the transpose of the Fréchet derivative of u at \(x^*\).

In this paper, we say that v is increasing if \(x_i>y_i\) for all i implies \(v(x)>v(y)\). For example, \(v(x)=x_1x_2\) is increasing on \(\mathbb {R}^2_+\) although \(v(1,0)=v(2,0)=0\).

If \(f^v\) is not locally Lipschitz, then there may be two solutions to equation (5); such an example is constructed in Mas-Colell [19]. In that case, the integrability problem cannot be resolved. Fortunately, in many microeconomic examples, \(f^v\) can be assumed to be locally Lipschitz, and thus this problem is not serious.

Throughout this paper, we do not treat any sort of weak solution. We discuss only classical solutions to PDEs.

To verify this claim rigorously, we need the extended Young’s theorem. See Lemma 3.2.

A set V in some linear space is star-convex centered at x if and only if for every \(y\in V\), the segment \([x,y]=\{(1-t)x+ty|0\le t\le 1\}\) is included in V.

For the following parameterized ODE

$$\begin{aligned} \dot{x}(t)=f(t,x(t),y),\ x(t_0)=x_0, \end{aligned}$$

we call a function X the solution function of this ODE if for every \((y,x_0)\) such that \((t_0,x_0,y)\) is in the domain of f, \(t\mapsto X(t;y,x_0)\) is the unique nonextendable solution to this ODE. It is well known that if \(f:U\rightarrow \mathbb {R}^N\) is a locally Lipschitz function defined on an open set \(U\subset \mathbb {R}\times \mathbb {R}^N\times \mathbb {R}^M\), then the solution function X is well-defined, the domain of X is open, and X is locally Lipschitz.

Because c is a solution function of ODE (8) and f is locally Lipschitz, the domain of c is open, and hence \(P_1\) and \(P_2\) exist.

Note that the integrability assumption is used only in this equation.

We are not aware of any article or textbook written in English that includes this result. This result and its proof are provided in Takagi [20], whose textbook is written in Japanese. Thus, the proof is presented here.

Because \(S_t^k\) is symmetric, there exists an orthogonal transform P such that

$$S_t^k=P^T\begin{pmatrix} \lambda _1 & 0 & \cdots & 0\\ 0 & \lambda _2 & \cdots & 0\\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \lambda _n \end{pmatrix}P,$$

where \(\lambda _i\) is some eigenvalue of \(S_t^k\). Because \(S_t^k\) is negative semi-definite, \(\lambda _i\le 0\) for every i. Hence, if we define

$$A_t^k=P^T\begin{pmatrix} \sqrt{-\lambda _1} & 0 & \cdots & 0\\ 0 & \sqrt{-\lambda _2} & \cdots & 0\\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \sqrt{-\lambda _n} \end{pmatrix}P,$$

then \(S_t^k=-(A_t^k)^2\). Moreover, because the operator norm \(\Vert S_t^k\Vert\) (resp. \(\Vert A_t^k\Vert\)) coincides with \(\max _i|\lambda _i|\) (resp. \(\max _i\sqrt{|\lambda _i|}\)), all our claims are correct.

In economics, this property of f is called the weak axiom of revealed preference.

Rigorously, this sentence means that the restriction of v to R(f) is upper semi-continuous with respect to the relative topology of R(f).

Hereafter, we frequently use the fact that if \(x\in \Omega\) and \(y-x\in \mathbb {R}^n_{++}\), then \(y\in R(f)\).

This type of utility function is called quasi-linear. Note that the function f we treat in examples 1-2 coincides with this \(f^v\), where \(w(x_1)=\sqrt{x_1}\). Because \(v(x)=\sqrt{x_1}+x_2\), our \(\tilde{v}(x)\) is just a monotone transform of this v(x).

Note that, if u is continuously differentiable and nondegenerate, then it is automatically normal.

In fact, any regular submanifold of Euclidean space is what we call a ‘manifold’ as a set. Conversely, our ‘\(C^k\) manifold’ together with an appropriate differential structure becomes a \(C^k\) differentiable manifold in the usual sense. See Hosoya [21] for detailed arguments.

Note that both \(T_{\bar{x}}(X)\) and the space of all vectors normal to \(Du(\bar{x})\) are \(n-1\) dimensional linear spaces. By the chain rule, \(v\in T_{\bar{x}}(X)\) implies \(\langle v,Du(\bar{x})\rangle =0\), and thus these two spaces are the same.

We abbreviate the variable \(x^*\).

If \(n=2\), then Theorem 3.1 cannot be applied. However, in this case this result can easily be obtained by a simple calculation.

It can be easily proved that the range of the derivative of a \(C^1\) local parameterization is the same as the tangent space.

References

[1] Dieudonné J (2006) Foundations of modern analysis. Hesperides Press

[2] Nikliborc W (1929) Sur les équations linéaires aux différentielles totales. Studia Mathematica 1:41–49

[3] Hosoya Y (2017) The relationship between revealed preference and the Slutsky matrix. J Math Econ 70:127–146

[4] Hosoya Y (2020) Recoverability revisited. J Math Econ 90:31–41

[5] Hurwicz L, Uzawa H (1971) On the integrability of demand functions. In: Chipman JS, Hurwicz L, Richter MK, Sonnenschein HF (eds) Preference, utility and demand. Harcourt Brace Jovanovich Inc., New York, pp 114–148

[6] Hosoya Y (2018) First-order partial differential equations and consumer theory. Discret Contin Dyn Syst Ser S 11:1143–1167

[7] Hosoya Y (2013) Measuring utility from demand. J Math Econ 49:82–96

[8] Milnor J (1965) Topology from the differentiable viewpoint. Princeton University Press, Princeton

[9] Guillemin V, Pollack A (1974) Differential topology. Prentice Hall

[10] Auslander L, MacKenzie RE (2009) Introduction to differential manifolds. Dover Publications

[11] Hicks NJ (1965) Notes on differential geometry. Van Nostrand Reinhold U.S.

[12] Kosinski AA (1993) Differential manifolds. Academic Press

[13] Matsushima Y (1972) Differentiable manifolds. Marcel Dekker

[14] Sternberg S (1999) Lectures on differential geometry. American Mathematical Society

[15] Hosoya Y (2012) Elementary form and proof of the Frobenius theorem for economists. Adv Math Econ 16:39–51

[16] Debreu G (1972) Smooth preferences. Econometrica 40:603–615

[17] Debreu G (1976) Smooth preferences: a corrigendum. Econometrica 44:831–832

[18] Antonelli GB (1886) Sulla teoria matematica dell’ economia politica. Tipografia del Folchetto, Pisa

[19] Mas-Colell A (1977) The recoverability of consumers’ preferences from market demand behavior. Econometrica 45:1409–1430

[20] Takagi T (1961) Kaiseki gairon (in Japanese). Iwanami Shoten

[21] Hosoya Y (2015) The relationship between two definitions of manifolds. Keizaikei 263:14–17

Funding

This work was supported by JSPS KAKENHI Grant Numbers JP18K12749, JP21K01403.

Ethics declarations

Conflicts of interest

Not applicable.
