Abstract

Deep Convolutional Neural Networks (CNNs) have been successfully used in different applications, including image recognition. Time series data, which are generated in many applications, such as tasks using sensor data, have different characteristics compared to image data, and accordingly, there is a need for specific CNN structures to address their processing. This paper proposes a new CNN for classifying time series data. It is proposed to have new intermediate outputs extracted from different hidden layers instead of having a single output to control weight adjustment in the hidden layers during training. Intermediate targets are used to act as labels for the intermediate outputs to improve the performance of the method. The intermediate targets are different from the main target. Additionally, the proposed method artificially increases the number of training instances using the original training samples and the intermediate targets. The proposed approach converts a classification task with original training samples to a new (but equivalent) classification task that contains two classes with a high number of training instances. The proposed CNN for Time Series classification, called CNN-TS, extracts features depending the distance of two time series. CNN-TS was evaluated on various benchmark time series datasets. The proposed CNN-TS achieved 5.1% higher overall accuracy compared to the CNN base method (without an intermediate layer). Additionally, CNN-TS achieved 21.1% higher average accuracy compared to classical machine-learning methods, i.e., linear SVM, RBF SVM, and RF. Moreover, CNN-TS was on average 8.43 times faster in training time compared to the ResNet method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Time series (TS) datasets are obtained by recording a series of time-dependent observations. TS covers a broad range of applications, such as investigation of market prices, prediction of epidemic spread, speech signal processing, electrocardiogram (ECG) investigation, understanding the brightness of a target star, manufacturing, and weather forecasting [18, 42, 47, 48, 82]. Time Series Classification (TSC) is an important part of TS data mining that has been used in many areas. For example, in medical science, classification of TS datasets generated from Electrocardiogram (ECG) data has been used for heart disease diagnosis [59, 73], and Electroencephalogram (EEG) signal data are used as a primary tool for seizure onset detection [3]. TSC has been used for different applications, such as activity recognition [2, 57, 69]; and in industry, classification of TS generated from different sensors such as gas pressure sensors, and thermometers play important roles in industrial control processes [47, 48].

Deep learning (DL) algorithms have exhibited impressive capabilities in image processing and big data analysis [61, 70]. DL uses several layers of processing elements to extract high-level abstraction from input data. One of the most popular approaches in DL is the Convolutional Neural Network (CNN). CNN is inspired by biological visual systems and has been used in various machine vision tasks [20, 40, 42, 65, 72]. The CNN has gained much interest and popularity due to its capabilities of processing raw data, eliminating the requirement to pre-process data using feature extraction methods. Indeed, the feature extraction property of convolutional layers, and the powerful training methods of the CNN, are reasons for the high performance of CNNs on image classification. CNNs have been widely used in computer vision tasks [16, 33], object detection [35], bioinformatics [54], economics [33], and natural language processing [75].

The focus of deep CNNs has mainly been on image processing, and the application of CNNs for TS data is only now starting to emerge [4]. TS datasets have different characteristics compared to images, and therefore, specific CNN structures are required to optimally process such data [47, 48].

Deep CNNs usually have numerous learning parameters and consequently need large training datasets. Some TS tasks, such as EEG classification, usually do not have large volumes of data for training deep learning algorithms with good generalizing ability. Yannick et al. [78] found that many authors who adopt deep learning methods for EEG processing have suggested that more training data would improve the performance of their deep models. Additionally, the number of training data is important when intra-subject models are used. In intra-subject models, the data of a single subject are used to train the model related to the subject. The data of intra-subject models have less variability which can lead to high performance [78]. However, in intra-subject models, each model is trained with a limited number of training samples, corresponding to a single subject, which often contains a small number of signal recordings. This need for a large training sample set poses a specific problem for intra-subject situations [46, 60]. However, in general, there are many application areas where the collection of large training datasets may not be feasible. For example, in medical situations where data are collected in hospitals from patients with epilepsy, it may be difficult to collect a high number of training samples from a specific patient. Collecting such data could include years of recordings collected from different subjects with a specific disease [78].

Moreover, a deep CNN usually uses a supervised learning approach that requires labeled data, and labeling is a time-consuming task, particularly for large datasets. Therefore, there is a need for new techniques that can be used to train deep neural networks with a relatively small number of training samples. Liu et al. [49] have highlighted that designing deep learning models to learn from fewer training samples will have a significate effect on the future progress of deep learning methods.

In this paper, inspired by the intermediate concept of the brain [43], intermediate outputs are constructed to control learning in hidden layers of a deep learning method to improve the performance of the method. It is proposed to have new intermediate outputs extracted from different hidden layers instead of having a single output to control weight adjustment in the hidden layers during training. Intermediate targets which are different from the main target are used as labels to train the intermediate outputs. The intermediate targets control the creation of features in the hidden layers of deep learning methods to generate more informative features in hidden layers. Consequently, they improve accuracy of the deep learning. Additionally, the proposed CNN artificially increases the number of training instances using the original training samples. The proposed CNN-TS approach converts a classification task with original training samples to a new (but equivalent) classification task that contains two classes with a high number of training instances. The proposed method receives two TS as inputs, and it extracts features from the two applied inputs using intermediate outputs and subtracts the features to measure the distance of two TS. Distance-based methods are well-known methods in classical TSC but have not been explored in detail in the deep learning domain.

The structure of the paper is as follows. In the section “Related Works” a brief review of TSC methods is presented. The proposed method, CNN-TS, is described in the section “Proposed Method”. Simulation results are demonstrated in the section “Results” before the conclusion in the section “Discussion and Conclusion”.

Related Works

Time Series Classification

The high-dimensional and ordered properties of TS data and the redundancy in TS resulting from their highly autocorrelated properties make TSC a challenging task [46]. TSC requires machine-learning methods that are compatible with the characteristics of TS to process a sequence of observations following each other in time [46]. There exist different methods to classify TS data, which can be summarized into three main categories: model-based, feature-based, and distance-based techniques [37, 76, 82].

In model-based classification methods, a collection of TS data is used to build a model. Usually, a model is built for each class using the TS belonging to that class. Then the class of an unknown data sample is determined by each model (i.e., built for each class) to evaluate which is the best fit for the unknown data sample [37, 76]. For example, the Autoregressive model is a model-based method that is used for TSC. In Autoregressive models, it is assumed that the TS satisfies the stationary assumption, which cannot be followed in every situation [82]. The Markov Model (MM) and the Hidden Markov Model (HMM) are two other model-based methods which are used for non-stationarity TS [5].

Feature-based techniques are commonly used in classical TSC approaches to reduce the dimensionality of samples in TS data using different feature extraction methods. Simple statistical methods such as mean and variance, or more complicated methods such as spectral feature extraction methods can be used in the feature-based techniques [46]. Discrete Fourier Transform and Discrete Wavelet Transform (DWT) are two examples of spectral feature extraction methods. The spectral methods usually transform the time domain into the frequency domain and take a number of low-frequency harmonics that contain most of the TS energy. Eigenvalue methods, such as Principal Component Analysis (PCA) and Singular Value Decomposition (SVD), are other dimensionality reduction methods that can be applied to TS; these usually have better performance compared to spectral methods as they are calculated in an optimal way. However, they are not suitable for large datasets [37].

In distance-based classification methods, distances between TS are measured, and a method such as k-Nearest Neighbors (k-NN) is used to classify an unknown sample [46]. The distances between an unknown sample and the training samples are calculated and the unknown sample is classified based on its distances from the training samples [7]. Euclidean distance and a Dynamic Time Warping distance (DTW) have also been successfully used with one nearest-neighbor classifier for TSC [34]. DTW has been shown to be robust to TS variation generated by translations or dilations and it is considered a strong solution for TS distance measurement. DTW performs a local comparison instead of measuring similarity by considering the high-level structure in a long TS [7].

In addition to the above-mentioned k-NN distance-based classification method, the distance features method for TSC is another main distance-based TSC. In distance features method, new representations of TSs are created using the distance between TSs. In the global distance features method, the distances between a time series and other time series in the training data are calculated, for the full length of time series, to extract global distance features, which are then used as a feature vector to represent the time series. This learning method is from a general learning approach which is called learning in dissimilarity space [10, 62]. Gudmundsson et al. [25] have used two distance measure methods based on DTW to create global distance features. Then, an SVM model is used to classify the global features. Kate [36] has used different distance measures including DTW and Euclidian Distance (ED) to construct global distance features to be classified by SVM. Giusti et al.’s [22] generalized Kate’s [36] approach by extending the distance features method to other domains, such as frequency, which is different from the time domain in the previous global distance feature method. The computation cost of the global distance feature method is a significant drawback of the method. A high number of training samples and consequently a high number of pairs lead to a large input dimensions. Jain and Spiegel [32] proposed to use Principal Component Analysis (PCA) to reduce the dimensionality of global distance features created using DTW method to be classified by SVM. Kenji et al. [38] reduce the computation cost by calculating the distance between a time series and n subset of time series as prototypes out of the total time series. They consider the prototype selection as a feature selection method.

Local Distance Features (LDFs) are used as another sub-group of distance features method for TSC [1]. In LDF methods, the distance between some local patterns is calculated. Ye and Keogh [79] have proposed an important LDF method where sub sequences of time series called shapelets are selected to represent different classes. Specific shapes in time series, i.e., specific subsequence of time series, can be determined by experts and they can be used to identify the class of the time series. The original shapelets method [79] enumerates all possible subsequences of time series to find the appropriate shapelets; this has a high computation cost. Consequently, other works have been carried out to reduce the computational cost of the shapelets method [28, 56, 64, 80], and to learn appropriate shapelets [23]. Hills et al. [29] proposed a method for finding the most discriminative shapelets. Then, a vector of feature matrices is constructed by the distance between each time series and the selected shapelets. The minimum distance between a shapelet and all the subsequences of a time series with the size of the shapelet is considered as the distance between the time series and the shapelet. Li and Lin [44] have used an evolutionary method to find shapelets called Separating References (SRs) that effectively separate different classes. The distance between the SRs and the series from different classes are such features that can separate the classes with large margins. Despite the research summarized above, there remains room for applying distance features approaches to deep learning neural networks for TSC.

Deep Neural Networks for Time Series Classification

Classical feature-based methods for TSC do feature extraction and classification separately, and their performance relies on the quality of the extracted features. There is no specific method for extracting high-quality features for different TS, and different tasks need particular expertise to extract appropriate features [46]. However, a CNN can merge feature extraction and classification into a single process and the network is trained to extract appropriate features to improve the network’s performance. For instance, Lin et al. [46] proposed an end-to-end deep learning structure called Group-Constrained Convolutional Recurrent Neural Network (GCRNN) for TSC. A network of convolutional layers is used to extract features from the input TS. The extracted features are input to a recurrent network to capture the temporal characteristics of TS. The output of the recurrent network is fed to a fully connected network with sparse group lasso regularization.

Long Short-Term Memory (LSTM) is a recurrent neural network that is designed for analyzing TS. LSTM requires more computing resources than CNN, and training an LSTM is more computationally expensive than training a CNN. Additionally, recent research has shown that certain convolutional architectures for different applications, such as audio synthesis, machine translation, and skeleton-based action prediction, can reach state-of-the-art accuracies [15, 17, 19, 21, 48, 58]. For instance, Liu et al. [77] have proposed a CNN for fault diagnosis by proposing a dislocate layer at the input level. Their proposed layer extracts windows of TS in different intervals of an original signal. Liu et al.’s [77] experimental results have shown that their proposed CNN-based method has good performance in such industrial applications.

The end-to-end Multi-scale Convolutional Neural Network (MCNN) [13] applies different transformations using down-sampling transformation in the time domain; additionally, it performs spectral transformation in the frequency domain on an input signal. Then, different convolutional layers are used to extract high-level features from the original input and the transformed versions of the input. The extracted features are fed to a fully connected layer, and then, a Softmax output layer is used to classify the input.

Liu et al. [47, 48] proposed a deep learning method called Multivariate Convolutional Neural Network (MVCNN) that considers the multivariate properties of TS data. They utilized a 1 × 1 convolution filter for layers that are close to the input layer to extract features that specifically come from each variant. However, the shared filter among different variants can mix the data from different sources during training.

The main property of the above-mentioned methods in this section is the use of a CNN’s ability for automatic feature extraction, and for this reason, they can be considered as feature-based techniques for TSC using the deep learning method.

In model-based classifiers or generative models, the first goal is to find a suitable representation of TS before training a classifier [17, 41]. In a model-based method, an unsupervised method is often used to model the TS. For example, some deep learning methods used stacked denoising auto-encoders (SDAEs) to model input signals [8, 30]. RNN auto-encoders have also been used to generate a representative TS, and then, a classifier such as SVM was used for classification [52, 53, 63]. Echo State Networks (ESNs) project the input TS inside a reservoir of a recurrent neural network to reconstruct a representation of the input TS, and then, the learned representation of input TS is used for classification [6, 9, 12, 51]. Antoniades et al. [3] have proposed an Asymmetric–Symmetric Autoencoder (ASAE) to map a scalp EEG to an intracranial EEG (iEEG), since recording an iEEG is an invasive method to record the brain activity and is also expensive to implement. The model is used for the classification of Intracranial Epileptic Discharges (IEDs) and non-IED. Wang et al. [68] and Mittelman [55] have designed deep neural networks that reconstruct a multivariate TS using a deconvolutional operation followed by an upsampling method.

In summary, the literature shows that there exist several deep learning methods to extract features and to classify TS. These methods, like feature-based methods in classical TSC, are focused on features that are extracted by a number of convolution layers. Additionally, the literature review revealed that there exist a considerable number of deep learning methods that classify TS using model-based techniques. Although distance-based classification of TS data is thoroughly investigated in traditional TSC methods, distance-based methods have not been investigated in the deep learning field as much as they have been studied in classical methods for classification of TS. The review shows that it would be useful to design deep learning methods that are based on the principle of distance-based classification methods. This paper proposes a CNN that takes as input a pair of TS, evaluates their distance, and predicts whether these two TS are close enough to be in the same class. The ability of the proposed CNN to take two TS as inputs increases the number of different instances that are available to train the proposed network. The proposed method is described in detail in “Time Series Classification”.

Proposed Method

In this section, a technique to synthetically increase the number of training instances and create an extended dataset is first described. Then, a CNN called CNN-TS is proposed to classify the extended TS data. The structure of the proposed CNN is designed to be compatible with the extended dataset.

A Method for Synthetically Increasing the Number of Training Samples

Suppose a training dataset is \(X=\{\left({{\varvec{x}}}_{1},{c}_{1}\right),..., \left({{\varvec{x}}}_{N},{c}_{N}\right)\}\), where \({{\varvec{x}}}_{i}\) is a p-dimensional input vector, i.e., \({{\varvec{x}}}_{i} \in {R}^{p}\), and \({c}_{i}\) is the output corresponding to \({{\varvec{x}}}_{i}\), and \({c}_{i} \in \{1, 2, \dots , K\}\), where \(K\) is the total number of classes. \({{\varvec{x}}}_{i}\) contains p sequential elements of a TS. \(N\) is the number of training samples in the dataset. The training goal is to fit a classifier, \(C(x)\), using training data. The trained classifier can then be used to find the class labels of unseen testing data samples.

Different permutations with replacement of two samples from the original dataset, \(X\), are picked to construct a new training dataset called \({X}^{n}\). Each instance from the new training set, \({X}^{n}\), contains two samples from the original dataset, \(X\). Therefore, the new dataset has \(T={P}^{R}(N,r=2)\) = \({N}^{r}\)=\({N}^{2}\) training instances, where \({P}^{R}\) stands for Permutations with Replacement, \(N\) is the number of samples in the original dataset, and \(r=2\) is the number of samples that are selected. Therefore, the newly constructed dataset has a higher number of training instances, \({N}^{r}\) compared to \(N\), which is suitable for a deep neural network since it needs a high number of training samples.

The structure of a constructed training dataset is shown in (1)

where \({\widetilde{X}}^{n}\) consists of several data arrangements denoted as \(\left({{\varvec{x}}}_{i},{{\varvec{x}}}_{j}, {c}_{i},{c}_{j}\right)\), where \({{\varvec{x}}}_{i}\) and \({{\varvec{x}}}_{j}\), are two training samples from the original dataset, \(X\), along with \({c}_{i}\) and \({c}_{j}\) which are the labels corresponding to each input, respectively. The two inputs, \({{\varvec{x}}}_{i}\) and \({{\varvec{x}}}_{j}\), within an arrangement could belong to the same class or they could belong to two different classes. A third label, \({c}_{ij}\), is constructed based on this arrangement, and it shows whether \({{\varvec{x}}}_{i}\) and \({{\varvec{x}}}_{j}\) belong to the same class. The new training set is as follows:

where

Therefore, a new binary classification task emerges from the original multi-class classification task. In the new training set shown in (2) each training instance, i.e., \(\left({{\varvec{x}}}_{i},{{\varvec{x}}}_{j}, {c}_{i},{c}_{j}, {c}_{ij}\right)\), includes: the first sample, \({\boldsymbol{ }{\varvec{x}}}_{i}\), and the second sample, \({{\varvec{x}}}_{j}\), and their corresponding labels, \({c}_{i}\) and\({c}_{j}\), from the original dataset, \(X\). Additionally, each training instance contains the fifth element, \({c}_{ij}\), which holds a binary value, 1 if \({{\varvec{x}}}_{i}\) and \({{\varvec{x}}}_{j}\) belong to the same class and 0 otherwise. Note that in this paper, ‘sample’ is used to refer to each item in the original dataset, i.e., \(X\), and ‘instance’ is used to refer to each item in the newly constructed dataset, i.e., \({X}^{n}\). A figure-based description of the permutation on the input samples used to construct the new large data described in (2) is shown in Fig. 1.

The method increases the number of training samples by \({N}^{2}\) training instances that are generated by selecting 2 samples from an original training dataset of N samples but may excessively boost the number of training data if N is large. When N grows, the number of samples for the proposed method grows by the power of 2, i.e., \({N}^{2}\). Given the impact of \({N}^{2}\), an original training dataset with a high number of training samples could increase the number of generated training instances substantially, and not all the newly generated training instances may be required. To control the number of generated training instances, an under-sampling method can be used to control the number of newly generated instances. Thus, instead of selecting two samples from all the original training samples, a subset of representative samples that has similar characteristics as the original samples are selected using the under-sampling technique proposed by Zhang and Mani [81]. This approach controls the number of generated training instances, especially when the original training dataset has a high number of training samples. It helps to continue the learning for higher numbers of training epochs in shorter time duration, because of a lower number of training instances. If the number of representative samples is \(M\) which is smaller than N, then the total number of new instances being generated for the proposed method is \(N\times M\) < \({N}^{2}\). The proposed method can increase the number of training instances intensively for small data by setting a high value for \(M\), while providing a smaller increase in the number of training instances for datasets that already have a high number of training samples, by setting \(M\) to a small value.

Proposed Method for Time Series Classification Using Synthetically Extended Training Samples

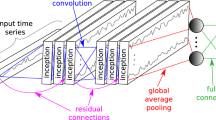

In this section, a structure for CNN is designed to be trained on the new training set, \({X}^{n}\), which contains a high number of training instances. Then, proposed intermediate targets are described. The proposed network has two inputs, \({\mathrm{In}}_{1}\) and \({\mathrm{In}}_{2},\) and it accepts two training samples, \({{\varvec{x}}}_{i}\) and \({{\varvec{x}}}_{j}\) which are in each instance of \({X}^{n}\), i.e., \(\left({{\varvec{x}}}_{i},{{\varvec{x}}}_{j}, {c}_{i},{c}_{j}, {c}_{ij}\right)\). The structure of the proposed deep neural network is shown in Fig. 2. The network compares the two inputs, \({\mathrm{In}}_{1}\) and \({\mathrm{In}}_{2}\), and returns a main output, i.e., \({O}_{m}\). The main output, i.e., \({O}_{m},\) corresponds to label \({c}_{ij}\).

The structure of the base deep neural network which is trained on the new training dataset which uses the synthetically increased training samples. The structure of Blocks 1–4 is shown in Fig. 3. The base network does not have intermediate targets

Block 1 and Block 2 in the CNN network shown in Fig. 2 are composed of a number of layers of neurons including convolutional layers, and the blocks extract high-level features from pairs of TS inputs. The extracted features are subtracted to make a set of features that reflect the distance of the two inputs to assist the network to make an accurate comparison between the two inputs.

Then, the extracted features are processed by the next three components (Block 3, Block 4, and Global Average Pooling) to generate the main output, \({O}_{m}\). The network generates the main output based on the comparison of the two inputs. The main output, \({O}_{m}\), shows whether the two applied inputs belong to the same class.

The structure inside each block used in the previous network (see Fig. 2) is shown in Fig. 3. The structure is inspired by ResNet (deep Residual Network) [27] blocks. ResNet is a deep CNN that uses shortcut connections in its blocks, which are called residual blocks. The shortcut connections help the gradient flow directly to the bottom layers. ResNet [27] is a well-known deep structure for CNN and it has achieved state-of-the-art results in image processing tasks. Note that the network shown in Fig. 2, which has been designed to be trained on the increased number of training instances in \({X}^{n}\) by accepting two inputs, is called the base network. The base network does not have the proposed intermediate targets which are described in the next section. The structure of the residual blocks which are used in this paper is shown in Fig. 3. The main branch is composed of three pairs of a 1-dimensional convolutional layer (1D-Conv) coupled with batch normalization. The outputs of the first two pairs are passed through ReLU (Rectified Linear Unit) activation function, as shown in Fig. 3. The shortcut connection on the right side of Fig. 3 comprises a pair of 1D-Conv and a batch normalization layer. The outputs of the main branch and the shortcut connection are added. The results are passed through an ReLU activation function to make the output of the block.

CNN-TS: Proposed Intermediate Target Concept for TSC Using CNN

Convolutional neural networks (CNNs) are end-to-end learning machines. During the learning process on a usual CNN, an input is applied to the first end, and a label at the other end is used to calculate loss and to tune the learning parameters. Usually, in CNN, inherent intermediate representation is generated without control and observation. However, human visual systems work based on perceptual organization. The process of extraction of low-level features in the intermediate level of the vision system has been referred by different names, such as perceptual organization, or feature grouping [66]. Determining how emerging low-level features in the intermediate levels of vision systems leading to perceptual organization remains a challenging problem in vision research. Perceptual organization is not generated randomly and it follows some rules [43]. In this work, applying controls on the intermediate features in a CNN can improve the processing ability of the CNN while making it similar to its natural counterpart. In this work, the proposed method is used for TS processing.

In this paper, in addition to the method to increase the number of training instances described in “Time Series Classification”.A, intermediate targets are constructed to improve the performance of CNN for TSC. The intermediate targets are used to train hidden layers of the CNN. The original CNN without intermediate targets shown in Fig. 2 is used as a base network, while the concept of “intermediate targets” is used to design a novel CNN structure for classification of TS, which is called CNN-TS.

The proposed network has two intermediate outputs, which are shown by \({O}_{1}\) and \({O}_{2}\) in Fig. 4. The two intermediate outputs, i.e., \({O}_{1}\) and \({O}_{2}\), are used to guide the training of the layers in Block 1 and Block 2, respectively. The labels of the training samples that are applied to \({\mathrm{In}}_{1}\) are used for \({O}_{1}\). Therefore, the features generated in the output of Block1 are controlled by the label of \({\mathrm{In}}_{1}\), and they contain information about the label of \({\mathrm{In}}_{1}\).

The proposed method with intermediate targets. The labels related to the two inputs applied to \({\mathrm{In}}_{1}\) and \({\mathrm{In}}_{2}\) are used as labels for the two intermediate targets, \({O}_{1}\) and \({O}_{2},\) respectively. The main target shows if the two inputs are from the same class or from different classes

On the other hand, the labels of the input samples which are applied to \({\mathrm{In}}_{2}\) are used for \({O}_{2}.\) Therefore, the features generated at the output of Block 2 are affected by the label of \({\mathrm{In}}_{2}\), and generate features that contain information about label of \({\mathrm{In}}_{2}\). The output features of Block 1 and Block 2 that reflect the labels of the two samples applied to the two inputs are subtracted. The subtracted features which reflect the distance of the two applied inputs are processed by Block 3 and Block 4 and Global Average Pooling to generate the main output \({O}_{m}\), and then, the main output is trained to evaluate whether the two inputs are from the same class or not.

When using deep neural networks for TSC where there are a high number of layers in the network, the error back propagated from the final output of the network should travel through a high number of layers to reach the input layer, and this could vanish the propagated error. Consequently, the training of the layers far from the outputs was not effective as it is expected. Using the intermediate outputs helps the learning algorithm to control the errors for the intermediate layers and creates more accurate backpropagated errors to train the network.

Training the Proposed CNN-TS with Class-Related Coefficients

In the proposed CNN-TS, the inputs are first applied to their corresponding CNN layer. The CNN layer maps an input to a feature map with shared weights called a kernel, i.e., \({\varvec{W}}\). In the lth layer, there are a number of feature maps, and (4) calculates the output of the ith feature map in the lth layer, \({{\varvec{y}}}_{i}^{l}\)

where \({{\varvec{w}}}_{i,j}^{l}\) is the convolutional kernel used to map the jth feature map in the \((l-1)\mathrm{th}\) layer to the \(i\mathrm{th}\) feature map in the next layer (the lth layer), \({{\varvec{b}}}_{i}^{l}\) is the bias related to the ith feature map in the lth layer. The ‘*’ is the convolutional operator sign. As shown in Fig. 3, there is a batch normalization layer after each convolution layer. The batch normalization layer normalizes the output of its previous layer to maintain the mean and standard deviation (Std.) close to 0 and 1, respectively.

After a batch normalization, ReLU function is used to generate the activation for the next layer (see Fig. 3). The activation is passed through different 1D-Conv, Batch normalization layers, and ReLU functions before reaching the three outputs of the proposed network (see Fig. 4). Figure 4 shows that before each output layer, there is a Global Average Pooling (GAP) layer [23]. In the GAP layer, the average of each feature map is calculated to represent the feature map to reduce the number of features. After this operation, the number of outputs returned by the GAP layer is reduced to the number of feature maps in the previous layer.

Three logistic regression models are placed on the top of the previous layers to construct three categorical outputs (see Fig. 4). A SoftMax function is used for the kth output as shown in (5)

where \({{\varvec{O}}}^{k}\) is the output vector of the kth output of the proposed network, and \(k\in \{\mathrm{1,2},m\}\) as the network has three outputs (see Fig. 4). The number of elements in \({{\varvec{O}}}^{k}\) is equal to the number of classes for the kth output. For instance, the main output has two classes, and \({{\varvec{O}}}^{k=m}\) has two elements. \({{\varvec{G}}}^{K}\) is the output of the GAP layer before the kth output layer, \({{\varvec{W}}}^{k}\) is the weight matrix that connects the output of the previous corresponding GAP layer to the kth output layer, and \({b}^{k}\) is the bias related to the kth output layer.

Adam (A Method for Stochastic Optimization [39]) for backpropagation learning is used to train the proposed CNN. Categorical cross-entropy is used to calculate loss function to train the network. Three loss functions corresponding to the three outputs of the proposed method are used to generate the total value of the loss of the network, i.e., \(L\)

where \({\alpha }_{k}\) is a coefficient that weights the effect of the loss related to the kth output on the total value of the loss. As \({{\varvec{O}}}^{{\varvec{k}}={\varvec{m}}}\) is the main output, \({\alpha }_{k=m}\)= 1 and the coefficients related to the two intermediate targets are set to 0.5, i.e., \({\alpha }_{1}={\alpha }_{2}=0.5\), which are half the value for the main output. \({L}_{k}\) is categorical cross-entropy loss related to the kth output.

The increased number of instances generated from the original data results in an imbalanced dataset, in \({{\varvec{O}}}^{{\varvec{m}}}\). Additionally, real-world data are more likely to be imbalanced. Accordingly, class-related coefficients are used in the loss function of each output to improve the ability of the proposed method to process imbalanced data. Equation (7) shows the loss function of the kth output for the ith input instance, i.e., \({L}_{k}^{i}\), that includes the class-related coefficient

where \({N}_{k}\) is the number of classes for the kth output, \({t}_{k}^{c,i}\) is the cth element of the label vector corresponding to the kth output for the ith input instance, \({z}_{k}^{c,i}\) is the cth element of the predicted output vector related to the kth output for the ith input instance, and \({C}_{k}^{i}\) is a class-related coefficient related to the class of the ith input instance in the kth output. \({L}_{k}\) for each batch of data is calculated by summing \({L}_{k}^{i}\) over the number of samples in the batch of training instances.

The class-related coefficient has a high value when there are a low number of training samples in its corresponding class. The high value for a minority class causes a high loss value generated for an error related to the minority class, and consequently, the proposed method puts more attention on the class with a low number of instances. The class coefficient, \({C}_{k}^{i}\), is calculated using (8)

where \(T\) is the total number of training instances, and \({T}_{k}^{i}\) is the number of training instances out of the total number of training instances that have the same class as the ith input instance for the kth output. All the samples that belong to the same class according to the label for the kth output have the same class-related coefficient.

Note that selecting pairs of inputs from different classes to find if they are or they are not from the same class could result in an imbalanced classification task. Suppose that there are \({N}_{\mathrm{c}}\) classes in the original dataset with equal numbers of training samples in different classes, i.e., \({t}_{1}=\dots ={t}_{{N}_{\mathrm{c}}}=t\) where \({t}_{i}\) is the number of training samples in the ith class, and the total number of training samples in the original training set is \({T=t}_{1}+\dots +{t}_{{N}_{\mathrm{c}}}\). In this case, the total number of training instances for the generated data corresponding to the main output is \({T}_{\mathrm{t}}=T\times T={T}^{2}\). The number of pairs of training samples that are from the same class can be calculated using (9)

where \({T}_{\mathrm{s}}\) is the number of pairs of inputs that are from the same class. On the other hand, the number of pairs of inputs that are from different classes, i.e., \({T}_{\mathrm{d}}\), can be calculated using (10)

The two different values for the number of instances in the two classes related to the main output, i.e., \({T}_{\mathrm{s}}\) and \({T}_{\mathrm{d}}\), imply that the resulted classification task is an imbalanced classification problem when \({N}_{\mathrm{c}}\)>2. When the number of classes in the original data is increased, i.e., \({N}_{\mathrm{c}}\gg 2\), the level of imbalance will be increased. The proposed method used (7) to overcome the imbalance in the generated data.

Classifying a Test Input Based on the Main Output

During testing, a similar data structure described by (1) and (2) for training will be used. A test sample, \({x}_{i}^{t}\), is applying to the first input, i.e., \({\mathrm{In}}_{i}\), and the representative subset of training samples that are selected to be applied to the second input for training the network is used. While a test sample is applied to the first input, each of the training samples in the representative subset is applied to the second input and the main output of the network predicts if the testing sample, \({x}_{i}^{t}\), is from the class of a sample from the representative subset. Whenever it is predicted that an applied sample (from representative subset of the training data) has the same class as the testing sample, the class of the test sample will be predicted based on the class of the sample from the representative subset of training data.

A subset of representative samples from the training dataset was selected using an under-sampling called NearMiss method [31, 81]. Two samples from each class were selected using the method. The number of samples in the representative subset is set to be small, i.e., 2 samples from each class, to prevent an intense increase in the number of input pairs. To obtain the results for the proposed method in Tables 2, 3, and 4, each testing sample is compared with the samples in the representative subset. Then, using the final output of the proposed method, it is predicted that the applied test sample has the same class label as one of the samples in the subset. If the applied test sample is predicted to have a similar class with more than one sample from the subset, it is assigned based on the vote appointed by the samples from the subset that is predicted to have the same class as the applied test input.

During testing, a test input is applied to \({\mathrm{In}}_{1}\) (see Fig. 4), and \(\overline{N }\) training samples from the original training dataset, i.e., \(X\), are applied to \({\mathrm{In}}_{2}\) one by one, and the network predicts whether the test data are from the same class as each training sample which is applied to \({\mathrm{In}}_{2}\). Therefore, the number of predicted main outputs, \({O}_{\mathrm{m}}\), for a testing input is equal to the number of the training data applied to \({\mathrm{In}}_{2}\), i.e., \(\overline{N }\). The training samples out of the \(\overline{N }\) samples applied to \({\mathrm{In}}_{2}\) that are predicted to have the same class as the testing sample are considered to decide about the label of the testing input. Suppose that \({N}_{\mathrm{s}}\) training samples out of the \(\overline{N }\) samples are predicted to have the same class as the testing input. The \({N}_{s}\) training samples may belong to different classes because of the prediction error. The testing input is assigned to a class according to the maximum voting over the label of the \({N}_{\mathrm{s}}\) training samples.

Results

The experimental results are presented in this section. First, the datasets, which are used in the experiments, are introduced. Then, the proposed method is compared with the base method, as shown in Fig. 2. In the subsection, the effect of the intermediate targets is investigated. In the third part of this section, the proposed method is compared to other state-of-the-art methods. Finally, the effect of the number of training samples is investigated.

Dataset

The experiments in this section are run on TS datasets obtained from the UCR Time series Classification Archive [14]. The characteristics of the datasets, which are used in the following experiments, are provided in Table 1. The first column of the table shows the type of TS. The name of each dataset is shown in the second column. Each dataset has a training set and a testing set, and the number of training and testing samples is shown in columns four and five, respectively. The number of classes in each dataset is shown in the fifth column. The length of TS in each dataset is shown in the last column of Table 1.

Comparison of CNN-TS with the Base Deep Neural Network

Table 2 compares the accuracy of the final proposed method (Fig. 4) with the base network that does not have the intermediate output (Fig. 2). Note that the base method is essentially the standard approach, which is trained by a large set of training instances generated by the proposed approach, i.e., both methods are trained with the same number of training instances. In the following experiments, down-sampling is used to select 2 samples, i.e., \(M=2\), from each class of a training dataset to construct new instances for the proposed network. For instance, Table 1 shows that the ‘InsectWingbeatSound’ [14] dataset contains 220 training samples. \({220}^{2}=\mathrm{48,400}\) different pairs of samples can be selected from the training dataset to be applied to the two inputs of the proposed network. However, if the number of instances for the second input is restricted to 22, the number of samples in the new dataset (composed of two inputs) is 220 × 22 = 4840 which is 10 times smaller than the previous situation—hence, training can be performed by 10 times less computation cost. In this section, the effect of the intermediate targets is investigated on different TS datasets, and the results are shown in Table 2. The results show that in most datasets (17 out of 20), the proposed method with intermediate targets has higher accuracy compared to when the intermediate targets are removed from the proposed structure. The proposed CNN-TS method achieved an average accuracy of 80.6%, outperforming the base method which achieved an average accuracy of 77.1%. The bold numbers in Table 2 show the largest values of Accuracy (A), precision (P), and recall (R) in each row. As shown in Table 2, intermediate targets have increased the classification accuracy by 22.40% on the ‘InlineSkate’ dataset, and from 91.67 to 97.37% on the ‘ToeSegmentation1’ dataset.

The learning parameters of the hidden layers in Block 1 are affected by the \({O}_{1}\) and \({O}_{\mathrm{m}}\) during training. The error generated in \({O}_{1}\) is backpropagated to Block 1 through Block 5. The intermediate output \({O}_{1}\) controls the output of Block1 (based on the labels provided for \({O}_{1}\)), and therefore, the intermediate output \({O}_{1}\) keeps the features in Block 1 that contain information about the label of \({\mathrm{In}}_{1}\). Similarly, the output \({O}_{2}\) controls the features generated by ‘Block 2’ to generate features that have information about the label of the second input. This strategy helps the network make more accurate decisions on whether two inputs belong to the same class.

Comparison of CNN-TS with Other Methods

In this section, the proposed method, CNN-TS, is compared with different methods. First, three classical machine-learning methods are trained on different time series data sets. The three methods are a linear Support Vector Machine (SVM) with a linear kernel, SVM with the Radial Basis Function (RBF) kernel, and a Random Forest (RF) with 50 estimators. Raw time series data have high dimensionality and cannot be directly fitted using classical machine-learning methods. Time-domain, frequency-domain, and time–frequency-domain features which are employed in [83] are extracted from each time series, and the three classical machine-learning methods are trained by the extracted features. Root mean square (RMS), Variance, the Maximum value, Skewness, Kurtosis, and Peak-to-Peak difference are six features in time domain that are utilized. Spectral Skewness, Kurtosis and power, which are calculated using the Fast Fourier transform (FFT), are the three frequency-domain features that are used in this research. Wavelet Energy is also used to extract a time–frequency-domain feature. The accuracies of these methods relative to the proposed method, when applied to various datasets obtained from the USR database, are shown in Table 3. The last row, i.e., ‘Wins’ row, in Table 3 shows the number of times each method reached the highest accuracy among the other methods. The proposed method has achieved the highest accuracy on 18 datasets, followed by the SVM with linear Kernel method, which reached the highest accuracy on 2 datasets. The ‘Average’ row in Table 3 shows the mean value of accuracy achieved by each method across all datasets. The results show that, on average, the proposed CNN-TS achieved the highest accuracy, i.e., 80.6%, followed by the RF method which achieved an average accuracy of 59.5%, i.e., on average, the proposed CNN-TS approach achieves 21.1% higher accuracy compared the best results achieved among the three methods. Note that the proposed method was directly applied to raw time series data with a high dimension; however, the classical machine-learning methods used the features extracted from the specified feature extraction methods (because the dimension of the raw time series data is high, such approaches cannot directly be applied to the raw data).

In the next experiments, the proposed method is compared with other methods that can directly be applied to raw data. Table 4 presents the results when applying the proposed method and other methods to TS benchmark datasets. In Table 4, ‘MLP’ stands for Multi-Layer Perceptron (MLP) which is a traditional form of DNN. The MLP for TSC proposed by Wang et al. [74] is used in the comparison. The MLP has 4 fully connected layers and a Softmax layer as the output layer of the network. ‘FCN’ stands for Fully Convolutional Neural Network (FCN) [50], and it is extended by Wang et al. [74] for TSC. FCNs do not have any pooling layers and contain 5 layers. ‘ResNet’ is a deep Residual Network (ResNet) for TSC proposed in [74]. The ResNet has 11 layers and it is the deepest architecture used in this paper. The original ResNet [27] has achieved state-of-the-art results in image processing, and it is a well-known method.

‘Encoder’ is the Encoder network [67] with 5 layers include 3 convolutional layers. Encoder is similar to FCNs [50]; however, it uses Parametric Rectified Linear Unit (PReLU) activation function where an additional parameter is added to enable learning of the slop of each function. Additionally, the Encoder network uses dropout regularization and max-pooling operation. MCNN is the Multi-scale Convolutional Neural Network (MCNN) [13] which comprises 4 layers. t-LeNet is the Time Le-Net [26] which has 4 layers. MCDCNN is a Multi-Channel Deep Convolutional Neural Network [84]. Time-CNN [17] has 3 layers, uses the mean-squared error loss function with a Fully Convolutional layer with sigmoid activation function in its final layer. Finally, ‘TWIESN’ is a Time Warping Invariant Echo State Network [71] with 3 layers.

Table 4 shows that the proposed method has reached the highest accuracy on 8 datasets, followed by the ResNet [74] which has reached the highest accuracy on 7 datasets. The results in Table 4 show that, on average, the proposed method has achieved the highest accuracy, i.e., 80.6%, followed by the ResNet method which achieved an average accuracy of 79.0%. Table 4 also compares the Precision, and Recall of the proposed method and ResNet.

The critical difference diagram (CDD) used in [17] was implemented to statistically compare all the classifiers over all the data sets. The code in https://github.com/hfawaz/cd-diagram was used to do the statistic test. First, Friedman test was performed to reject a null hypothesis, i.e., there are no differences between the accuracies to depict the CDD. After the rejection of the null hypothesis, Wilcoxon–Holm method was used to perfume a post hoc analysis. The result is reported in Fig. 5. The thick horizontal lines in Fig. 5 show different groups of classifiers whose accuracies are not significantly different.

The t-SNE visualization implemented in [11] is used to intuitively evaluate the effectiveness of the proposed method. The t-SNE visualization is applied to the \({O}_{1}\) and \({O}_{\mathrm{m}}\) output of the proposed method to find the separability of the method. Additionally, t_SNE visualization is applied to the output of the ResNet. The t-SNE visualizations for different data sets are reported in Figs. 6, 7, and Appendix 1.

Next, the computation time of the proposed method is compared to ResNet, and the results are shown in Table 5. The number of training epochs and the required time duration for training both methods are shown in Table 5. The ‘Improvement’ column in Table 5 shows the difference between the time duration of ResNet and the proposed method, i.e., the time duration of ResNet is subtracted from the time duration of the proposed method. The last column ‘Times of Improvement’ in Table 5 shows how many times the time duration of ResNet is higher than the time duration of the proposed method. ‘Times of Improvement’ is obtained by dividing the time duration of ResNet by the time duration of the proposed method.

The results in Table 5 show that for 18 out of 20 datasets, the proposed method performs its learning in shorter time durations than ResNet. ResNet can be trained in a shorter time than the proposed method only for two datasets, i.e., ‘SwedishLeaf’ and ‘FaceAll’ datasets. Note that these two datasets, ‘SwedishLeaf’ and ‘FaceAll’, have 15 and 14 classes, respectively, and thus contain a relatively high number of classes compared to the other datasets (see Table 1). Therefore, there are \(15\times 2=30\) selections for the second input for ‘SwedishLeaf’ dataset. Note that two samples are selected from each class using the mentioned down-sampling described in “Time Series Classification”.A to represent the class. As the ‘SwedishLeaf’ dataset contains 500 training samples, the new training dataset for the two inputs of the proposed network has 500 × 30 = 15,000 instances, which is a high number compared to the original training dataset that contains 500 samples. The high number of available selections for the second input (which occurs due to the high number of classes in the dataset) increases the number of new training instances, and it consequently increases the training computation time. Therefore, the proposed method has a high training duration for datasets with a high number of classes.

Using the mentioned down-sampling can reduce the number of training samples and consequently reduce the training time. However, when the number of classes is increased, the reduction in the number of training samples is limited; because an appropriate number of training samples from each class is required. The high number of classes prevents a reduction in the number of generated training instances.

One method to further reduce the computation time for the proposed method is to reduce the number of selections for the second input from 30 to 15. To reach this aim, and thus to reduce the number of selections for the second input, instead of selecting two samples from each class, a single training sample will be selected from each class—this consequently reduces the number of instances from 1500 to 500 × 15 = 7500 which is the half of the previous one. Therefore, the computation time can also be reduced up to half. However, reducing the number of training samples might impact the model’s accuracy (see the “Investigation of the Effect of the Number of Input Samples”).

For 18 out of 20 datasets in Table 5, the proposed method performed the learning task in a shorter duration compared to ResNet. For instance, the proposed method has a reduction of 24,425.7 s and 23,112.9 s in learning time duration for ‘Mallat’ and ‘CinC_ECG_torso’ datasets, respectively, while the proposed method can reach a higher accuracy than ResNet on the datasets. In the last columns of Table 5, the results show that the proposed method can reach up to 46.9 times faster processing time than ResNet. For instance, the training time duration of ResNet for ‘ItalyPowerDemand’ dataset is 2673.7 s which is 15.3 times higher than of the time duration required by the proposed method. The proposed method only requires 175.0 s to reach an accuracy higher than the ResNet accuracy.

Investigation of the Effect of the Number of Input Samples

Deep CNNs usually have a high number of layers of neurons, and consequently, they have a high number of training parameters. The high number of training parameters needs a high number of training samples to train the neural network. The proposed method combines different training samples and generates a new training set with a high number of training samples, and it increases the ability of the proposed method to learn with a comparably low number of original training samples. In this section, the ability of the proposed method is investigated when the number of training samples is reduced. In this simulation, the number of training samples in the ‘CinC_ECG_torso’ dataset from the UCR data archive is reduced gradually, and then, the accuracy of the proposed method is obtained for different reduced numbers of training samples, i.e., the network is trained by the remainder of the training set. The number of training samples is reduced by 2, 4, 6, and 8. The first class in the ‘CinC_ECG_torso’ dataset has a small number of training samples (5 training samples), so it is kept unchanged and the training samples from the second class which has a higher number of training samples are reduced. The accuracy of the proposed method on the reduced number of training samples is then compared to the base method. Figure 8a shows that the accuracy of the base method is reduced when the number of removed training samples is increased, i.e., the number of remaining training samples is reduced. However, in comparison, the proposed method is relatively stable in accuracy values related to the reduced numbers of training samples when it is compared to the base method. The proposed method has 12.68% higher accuracy compared to the base method for the original dataset (‘# Reduced Samples = 0’) which contains all the training samples. The improvement of the proposed method is increased compared to the base method when the number of training samples is reduced. For instance, when the number of training samples is reduced from 40 to 32, the testing accuracy of the proposed method is 20.29% higher than the base method. The testing set in ‘CinC_ECG_torso’ contains 1380 samples. The proposed method can recognize 280 more testing instances correctly than the base method. The effect of the number of training samples is tested on other datasets and the results are shown in Fig. 8.

Comparison of the accuracy of the proposed method and the base method on a CinC_ECG_torso, b earthquakes, c car, and d Ham data sets when the number of training samples is reduced. Note that when ‘# Reduced Samples = 0’, there is no reduction in the number of training samples, and thus, all the original training samples are used

Discussion and Conclusion

In this paper, a method (described in the section “A Method for Synthetically Increasing the Number of Training Samples”) is used to synthetically transform an initially labeled training dataset to improve the training process for time series classification. In particular, the method selects pairs of raw TS from the original training dataset. The higher number of available selections of two TS helps to increase the number of training instances. Therefore, the method increases the number of training instances by the power of two of the number of initial training samples. A deep CNN is a data-hungry method and it needs a high number of labeled training samples, and the proposed method makes more training data available for CNNs.

Then, a new CNN, called CNN-TS, is proposed to work with the increased number of training data. CNN-TS compares the two TS in each pair and predicts whether the two TS are from the same class. Moreover, the proposed CNN-TS benefits from intermediate targets which are set based on the new learning task. Two intermediate targets are set corresponding to the two TS which are applied as inputs of the proposed method. The intermediate targets supervise the intermediate features which are extracted from each input to increase the overall classification accuracy of the proposed method. The intermediate targets use the label of their corresponding input to train the network.

The proposed method can be considered as a deep distance-based TSC. In a classical distance-based TSC, a classification is performed based on the distance between a test sample and training samples. The main element in a classical distance-based TSC is its distance measurement method. Measuring the distance between two TS is not a straight-forward task, because the method should be invariant against translation in the TS or it should be insensitive to the speed of performing similar tasks. In the classical method usually, the distance between two samples is calculated, and then, an analysis is performed on the measured distances to decide in which class a sample belongs. However, the proposed method, CNN-TS, automatically evaluates the distance between two TS and performs the distance measurement and classification jointly in a network to increases its accuracy and to improve CNN’s abilities. In fact, the intermediate targets in the proposed method control the features extracted in the intermediate layers to reflect information related to the labels of the applied inputs as the labels are available to the intermediate targets during training. Then, in the next layer, the intermediate features are subtracted to generate features that reflect the distance between the two inputs. In the following layers, the distance-related features are used to decide whether the two inputs are from the same class. The proposed method adjusts the learning parameters to learn the distance and classification in a CNN for TSC.

Siamese neural networks have the ability to evaluate similarity between inputs [24]. A Siamese neural network learns to determine the probability of its applied pair of inputs belonging to the same class or different classes. The Siamese neural network proposed in [24] does not take into account the imbalance property which is generated as the result of selecting pairs of inputs from different classes. The severity of the imbalance will be increased when the number of classes is increased [see (9) and (10)]. Additionally, the method proposed in [24] does not use the extra knowledge that exists in the labels of each input in an applied pair of inputs to the network. However, the proposed method in this paper deals with the imbalance in the generated data using (7). Moreover, the proposed method in this paper has used the labels of inputs in each pair as intermediate targets to train hidden layers.

The proposed CNN-TS method is evaluated on different datasets obtained from the UCR time series classification archive. First, CNN-TS is compared to a base method, which is a similar CNN to the proposed method but without the intermediate targets. Experimental results show improvement in the accuracy of the proposed method compared to the base method on 17 out of 20 datasets. Additionally, the proposed method is compared with three classical machine-learning methods namely linear SVM, RBF SVM, and RF. The results show that, on average, the proposed method achieved 21.1% higher accuracy than that achieved by the other methods. The proposed method is also compared to other state-of-the-art methods, and the experiment results show that it has achieved higher accuracies on various different datasets compared to the best results achieved by the other methods. Moreover, CNN-TS achieved higher accuracies with a shorter training time duration, which is on average 8.43 times shorter than the time duration required for the method with the best accuracy among the other state-of-the-art methods, i.e., ResNet.

Although the classical distance-based methods are known to perform well in the traditional TSC, they have not been considered thoroughly in the literature of CNN methods for TSC to date. Investigating the different aspects of distance-based TSC and reflecting them in CNN can be a new direction for future research. Experimental results have shown that intermediate targets can improve the performance of a CNN, because intermediate targets supervise the generation of features in the intermediate layers instead of allowing the features to generate without control. Thus, finding appropriate targets for intermediate layers in different applications of CNN can be another direction for future research.

Availability of Data and Materials

N/A.

Code Availability

Code will be available publicly in github after acceptance.

References

Abanda A, Mori U, Lozano JA. A review on distance based time series classification. Data Min Knowl Disc. 2019;33(2):378–412. https://doi.org/10.1007/s10618-018-0596-4.

Alani AA, Cosma G, Taherkhani A. Classifying imbalanced multi-modal sensor data for human activity recognition in a smart home using deep learning. Proc Int Jt Conf Neural Netw. 2020. https://doi.org/10.1109/IJCNN48605.2020.9207697.

Antoniades A, Spyrou L, Martin-Lopez D, Valentin A, Alarcon G, Sanei S, Took CC. Detection of interictal discharges with convolutional neural networks using discrete ordered multichannel intracranial EEG. IEEE Trans Neural Syst Rehabil Eng. 2017;4320(c):1–10. https://doi.org/10.1109/TNSRE.2017.2755770.

Antoniades A, Spyrou L, Martin-Lopez D, Valentin A, Alarcon G, Sanei S, Took CC. Deep neural architectures for mapping scalp to intracranial EEG. Int J Neural Syst. 2018;0(0):1850009. https://doi.org/10.1142/S0129065718500090.

Antonucci A, De Rosa R, Giusti A, Cuzzolin F. Robust classification of multivariate time series by imprecise hidden Markov models. Int J Approx Reason. 2015;56(PB):249–63. https://doi.org/10.1016/j.ijar.2014.07.005.

Aswolinskiy W, Reinhart RF, Steil J. Time series classification in reservoir- and model-space: a comparison. In: Schwenker F, Abbas HM, El Gayar N, Trentin E, editors. Artificial neural networks in pattern recognition. Cham: Springer International Publishing; 2016. p. 197–208.

Baydogan MG, Runger G, Tuv E. A bag-of-features framework to classify time series. IEEE Trans Pattern Anal Mach Intell. 2013;35(11):2796–802.

Bengio Y, Yao L, Alain G, Vincent P (2013) Generalized denoising auto-encoders as generative models. Advances in neural information processing systems, pp. 899–907.

Bianchi FM, Scardapane S, Jenssen R. Reservoir computing approaches for representation and classification of multivariate time series. 2018. https://arxiv.org/pdf/1803.07870.pdf

Chen Y, Garcia EK, Gupta MR, Rahimi A, Cazzanti L. Similarity-based classification: concepts and algorithms. J Mach Learn Res. 2009;10:747–76.

Chen Z, Liu Y, Zhu J, Zhang Y, Jin R, He X, et al. Time-frequency deep metric learning for multivariate time series classification. Neurocomputing. 2021;462:221–37. https://doi.org/10.1016/j.neucom.2021.07.073.

Chouikhi N, Ammar B, Alimi AM, Member S (2018) Genesis of basic and multi-layer echo state network recurrent autoencoder for efficient data representations. https://arxiv.org/ftp/arxiv/papers/1804/1804.08996.pdf

Cui Z, Chen W, Chen Y. Multi-scale convolutional neural networks for time series classification. 2016. https://doi.org/10.3724/SP.J.1077.2009.00909

Dau HA, Keogh E, Kamgar K, Yeh C-CM, Zhu Y, Gharghabi S et al. The UCR Time Series Classification Archive. 2018. https://www.cs.ucr.edu/~eamonn/time_series_data_2018/

Dauphin YN, Fan A, Auli M, Grangier D. Language modeling with gated convolutional networks. In: The 34th international conference on machine learning—volume 70 (ICML’17), 2017. (pp. 933–41).

Ding C, Tao D. Robust face recognition via multimodal deep face representation. IEEE Trans Multimedia. 2015;17(11):2049–58. https://doi.org/10.1109/TMM.2015.2477042.

Fawaz HI, Forestier G, Weber J, Idoumghar L, Muller P-A. Deep learning for time series classification: a review. Data Min Knowl Discov. 2019. https://doi.org/10.1007/s10618-019-00619-1.

Fu TC. A review on time series data mining. Eng Appl Artif Intell. 2011;24(1):164–81. https://doi.org/10.1016/j.engappai.2010.09.007.

Gao Z, Wang X, Yang Y, Mu C, Cai Q, Dang W, Zuo S. EEG-based spatio-temporal convolutional neural network for driver fatigue evaluation. IEEE Trans Neural Netw Learn Syst. 2019. https://doi.org/10.1109/TNNLS.2018.2886414.

Garcia-Gasulla D, Parés F, Vilalta A, Moreno J, Ayguadé E, Labarta J, et al. On the behavior of convolutional nets for feature extraction. J Artif Intell Res. 2018;61:563–92.

Gehring J, Auli M, Grangier D, Yarats D, Dauphin YN. Convolutional sequence to sequence learning. Int Conf Mach Learn (ICML). 2017. https://doi.org/10.18653/v1/P16-1220.

Giusti R, Silva DF, Batista GEAPA. Improved time series classification with representation diversity and SVM. In: Proceedings—2016 15th IEEE international conference on machine learning and applications, ICMLA 2016, 2016, (1), 1–6. https://doi.org/10.1109/ICMLA.2016.108

Grabocka J, Schilling N, Wistuba M, Schmidt-Thieme L. Learning time-series shapelets. In: Proceedings of the ACM SIGKDD international conference on knowledge discovery and data mining, 2014, pp. 392–401. https://doi.org/10.1145/2623330.2623613

Gregory K, Zemel R, Salakhutdinov R. Siamese neural networks for one-shot image recognition gregorylation. In: 32th international conference on machine learning, Vol. 37; 2013. Lille, France, p. 1355. https://doi.org/10.1136/bmj.2.5108.1355-c.

Gudmundsson S, Runarsson TP, Sigurdsson S. Support vector machines and dynamic time warping for time series. In: 2008 IEEE international joint conference on neural networks (IEEE World congress on computational intelligence; 2008, pp. 2772–277662. https://doi.org/10.4018/978-1-5225-2498-4.ch012.

Le Guennec A, Malinowski S, Tavenard R. Data augmentation for time series classification using convolutional neural networks. In: ECML/PKDD workshop on advanced analytics and learning on temporal data; 2016. Riva Del Garda.

He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. In: IEEE conference on computer vision and pattern recognition (CVPR); 2016, pp. 770–778. Las Vegas. https://arxiv.org/pdf/1512.03385.pdf.

He Q, Dong Z, Zhuang F, Shang T, Shi Z. Fast Time Series Classification Based on Infrequent Shapelets. In: In 2012 11th international conference on machine learning and applications; 2012 (pp. 215–219). https://doi.org/10.1109/ICMLA.2012.44

Hills J, Lines J, Baranauskas E, Mapp J, Bagnall A. Classification of time series by shapelet transformation. Data Min Knowl Disc. 2014;28(4):851–81. https://doi.org/10.1007/s10618-013-0322-1.

Hu Q, Zhang R, Zhou Y. Transfer learning for short-term wind speed prediction with deep neural networks. Renewable Energy. 2016;85:83–95. https://doi.org/10.1016/j.renene.2015.06.034.

Imblearn. Class to perform under-sampling based on NearMiss methods. 2003. https://imbalanced-learn.org/stable/references/generated/imblearn.under_sampling.NearMiss.html?highlight=nearmiss

Jain B, Spiegel S. Dimension reduction in dissimilarity spaces for time series classification. In: International workshop on advanced analysis and learning on temporal data; 2015 (pp. 31–46).

Jean N, Burke M, Xie M, Davis WM, Lobell BD, Ermon S. Combining satellite imagery and machine learning to predict poverty. Science. 2016;353(6301):790–4.

Jeong YS, Jeong MK, Omitaomu OA. Weighted dynamic time warping for time series classification. Pattern Recogn. 2011;44(9):2231–40. https://doi.org/10.1016/j.patcog.2010.09.022.

Jonathan T, Goroshin R, Jain A, LeCun Y, Bregler C. Efficient object localization using convolutional networks. In: IEEE conference on computer vision and pattern recognition (CVPR); 2015 (pp. 648–656). Boston. https://doi.org/10.1109/CVPR.2015.7298664.

Kate RJ. Using dynamic time warping distances as features for improved time series classification. Data Min Knowl Disc. 2016;30(2):283–312. https://doi.org/10.1007/s10618-015-0418-x.

Kaya H, Gündüz-Öʇüdücü Ş. A distance based time series classification framework. Inf Syst. 2015;51:27–42. https://doi.org/10.1016/j.is.2015.02.005.

Kenji B, Frinken V, Riesen K, Uchida S. Efficient temporal pattern recognition by means of dissimilarity space embedding with discriminative prototypes. Pattern Recogn. 2017;64(January 2016):268–76. https://doi.org/10.1016/j.patcog.2016.11.013.

Kingma DP, Ba J. Adam: a method for stochastic optimization. In: The 3rd international conference on learning representations (ICLR); 2014 (pp. 1–15). Banff. https://doi.org/10.1145/1830483.1830503

Krizhevsky A, Sutskever I, Geoffrey EH. ImageNet classification with deep convolutional neural networks. In: Advances in neural information processing systems 25 (NIPS2012) (pp. 1–9); 2012. https://doi.org/10.1109/5.726791.

Längkvist M, Karlsson L, Loutfi A. A review of unsupervised feature learning and deep learning for time-series modeling. Pattern Recogn Lett. 2014;42(1):11–24. https://doi.org/10.1016/j.patrec.2014.01.008.

LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521(7553):436–44. https://doi.org/10.1038/nature14539.

Li C, Zia MZ, Tran Q-H, Yu X, Hager GD, Chandraker MM. Deep supervision with intermediate concepts. IEEE Trans Pattern Anal Mach Intell. 2019;41(8):1828–43. https://doi.org/10.1109/CVPR.2017.49.

Li X, Lin J. Evolving separating references for time series classification. SIAM Int Conf Data Min SDM. 2018;2018:243–51. https://doi.org/10.1137/1.9781611975321.28.

Lin M, Chen Q, Yan S (2014). Network in network. In: International conference on learning representations (ICLR) (pp. 1–10). Banff.

Lin S, Runger GC. GCRNN: Group-constrained convolutional recurrent neural network. IEEE Trans Neural Netw Learn Syst. 2018;29(10):4709–18. https://doi.org/10.1109/TNNLS.2017.2772336.

Liu CL, Hsaio WH, Tu YC. Time series classification with multivariate convolutional neural network. IEEE Trans Industr Electron. 2019;66(6):4788–97. https://doi.org/10.1109/TIE.2018.2864702.

Liu J, Shahroudy A, Wang G, Duan L-Y, Kot AC. Skeleton-based online action prediction using scale selection network. IEEE Trans Pattern Anal Mach Intell. 2019;8828(c):1–15. https://doi.org/10.1109/CVPR.2018.00871.

Liu W, Wang Z, Liu X, Zeng N, Liu Y, Alsaadi FE. A survey of deep neural network architectures and their applications. Neurocomputing. 2017;234(October 2016):11–26. https://doi.org/10.1016/j.neucom.2016.12.038.

Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation. Proc Int Jt Conf Neural Netw. 2015. https://doi.org/10.1109/IJCNN.2017.7966367.

Ma Q, Shen L, Chen W, Wang J, Wei J, Yu Z. Functional echo state network for time series classification. Inf Sci. 2016;373:1–20. https://doi.org/10.1016/j.ins.2016.08.081.

Malhotra P, Vig L, Agarwal P, Shroff G. TimeNet: pre-trained deep recurrent neural network for time series classification. In: 25th European symposium on artificial neural networks, computational intelligence and machine learning; 2017.

Mehdiyev N, Lahann J, Emrich A, Enke D, Fettke P, Loos P. ScienceDirect ScienceDirect time series classification using deep learning for process planning: a case from the process industry. Proc Comput Sci. 2017;114:242–9. https://doi.org/10.1016/j.procs.2017.09.066.

Min S, Lee B, Yoon S. Deep learning in bioinformatics. Brief Bioinform. 2017;18(5):851–69. https://doi.org/10.1093/bib/bbw068.

Mittelman, R. Time-series modeling with undecimated fully convolutional neural networks. 2015. https://arxiv.org/pdf/1508.00317.pdf

Mueen A, Young N (n.d.). Logical-Shapelets: an expressive primitive for time series classification, 1154–62.

Nweke HF, Teh YW, Al-garadi MA, Alo UR. Deep learning algorithms for human activity recognition using mobile and wearable sensor networks: state of the art and research challenges. Expert Syst Appl. 2018;105:233–61. https://doi.org/10.1016/j.eswa.2018.03.056.

van den Oord A, Dieleman S, Zen H, Simonyan, K., Vinyals, O., Graves, A., et al. WaveNet: a generative model for raw audio. In: Speech Synthesis Workshop (SSW); 2016 (pp. 1–15). https://doi.org/10.1109/ICASSP.2009.4960364.

Özbay Y, Ceylan R, Karlik B. A fuzzy clustering neural network architecture for classification of ECG arrhythmias. Comput Biol Med. 2006;36(4):376–88. https://doi.org/10.1016/j.compbiomed.2005.01.006.

Page A, Shea C, Mohsenin T. Wearable seizure detection using convolutional neural networks with transfer learning. In: Proceedings—IEEE international symposium on circuits and systems, 2016-July, 2016, pp 1086–1089. https://doi.org/10.1109/ISCAS.2016.7527433

Peng S, Jiang H, Wang H, Alwageed H, Zhou Y, Sebdani MM, Yao YD. Modulation classification based on signal constellation diagrams and deep learning. IEEE Trans Neural Netw Learn Syst. 2018;30(3):718–27. https://doi.org/10.1109/TNNLS.2018.2850703.

Pw DR, Elzbieta P. Dissimilarity representation for pattern recognition, the: foundations and applications, Vol. 64; 2005. World scientific, Singapore.

Rajan D, Thiagarajan JJ. A Generative Modeling Approach to Limited Channel ECG Classification. In: 40th annual international conference of the IEEE engineering in medicine and biology society (EMBC); 2018 (pp. 2571–2574).

Rakthanmanon T (n.d.). Fast shapelets: a scalable algorithm for discovering time series shapelets, pp. 668–676.

Rios-Navarro A, Corradi F, Aimar A, Delbruck T, Milde MB, Tapiador-Morales R, et al. NullHop: a flexible convolutional neural network accelerator based on sparse representations of feature maps. IEEE Trans Neural Netw Learn Syst. 2018;30(3):1–13. https://doi.org/10.1109/tnnls.2018.2852335.

Sarkar S, Soundararajan P. Supervised learning of large perceptual organization: graph spectral partitioning and learning automata. IEEE Trans Pattern Anal Mach Intell. 2000;22(5):504–25. https://doi.org/10.1109/34.857006.

Serrà J, Pascual S, Karatzoglou A. Towards a Universal neural network encoder for time series. Artif Intell Res Dev Curr Challenges New Trends Appl. 2018;308:120–9. https://doi.org/10.3233/978-1-61499-918-8-120.

Song W, Wang Z, Liu L, Zhang F, Xue J, Ye Y, et al. Representation learning with deconvolution for multivariate time series classification and visualization. 2016. https://arxiv.org/pdf/1610.07258.pdf.

Taherkhani A, Cosma G, Alani AA, McGinnity TM. Activity recognition from multi-modal sensor data using a deep convolutional neural network. Adv Intell Syst Comput. https://doi.org/10.1007/978-3-030-01177-2_15.

Taherkhani A, Cosma G, McGinnity TM. AdaBoost-CNN: an adaptive boosting algorithm for convolutional neural networks to classify multi-class imbalanced datasets using transfer learning. Neurocomputing. 2020. https://doi.org/10.1016/j.neucom.2020.03.064.

Tanisaro P, Heidemann G. Time series classification using time warping invariant Echo State Networks. In: Proceedings—2016 15th IEEE international conference on machine learning and applications, ICMLA 2016; 2017, pp. 831–836. https://doi.org/10.1109/ICMLA.2016.166.

Tian Y, Wang X, Wu J, Wang R, Yang B. Multi-scale hierarchical residual network for dense captioning. J Artif Intell Res. 2019;64:181–96.

Wang J, Ping L, She MFH, Nahavandi S, Kouzani A. Bag-of-words representation for biomedical time series classification. Biomed Signal Process Control. 2013;8(6):634–44. https://doi.org/10.1016/j.bspc.2013.06.004.

Wang Z, Yan W, Oates T. Time series classification from scratch with deep neural networks: a strong baseline. In: Proceedings of the international joint conference on neural networks, 2017-May; 2017, pp. 1578–1585. https://doi.org/10.1109/IJCNN.2017.7966039.

Wu Y, Schuster M, Chen Z, Le QV, Norouzi M, Macherey W et al. Google’s neural machine translation system: bridging the gap between human and machine translation. 2016. http://arxiv.org/abs/1609.08144

Xing Z, Pei J, Keogh E. A brief survey on sequence classification. ACM SIGKDD Explorations Newsl. 2010;12(1):40. https://doi.org/10.1145/1882471.1882478.

Yang B, Liu R, Sun C, Meng G, Chen X. Dislocated time series convolutional neural architecture: an intelligent fault diagnosis approach for electric machine. IEEE Trans Industr Inf. 2017;13(3):1310–20. https://doi.org/10.1109/tii.2016.2645238.