Abstract

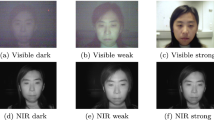

Facial biometric traits in the visible spectrum have been widely studied for gender classification in recent times, because face images can be captured non-intrusively and covertly. With advances in sensing technology, multi-spectral imaging has recently gained significant attention for this task. The literature contains substantial work on facial gender classification using visible imaging and a limited amount using multi-spectral imaging, each studied independently. Gender classification from visible images to individual multi-spectral bands, however, remains an open and challenging problem because of the varying spectral gap. In this paper, we present an extensive benchmarking study that employs 22 photometric normalization methods to bridge the gap between visible face images in the training set and spectral band images in the testing set before classification. For gender classification, we employ a robust Probabilistic Collaborative Representation Classifier (ProCRC), which learns features from visible face images and is then tested on the individual bands. We report evaluation results on a visible and multi-spectral face database comprising 82,650 sample face images captured under six different illumination conditions. We benchmark the average gender classification accuracy, quantitatively and qualitatively, using 10-fold cross-validation independently across nine spectral bands, six illumination conditions, and 22 photometric normalization methods. To demonstrate the merit of the proposed approach, we compare our results against a Convolutional Neural Network (CNN) classifier. The experimental results show a maximum gender classification accuracy of \(95.3\pm 2.3\%\) with the proposed approach, outperforming the state-of-the-art CNN and demonstrating the potential of photometric normalization methods to improve classification accuracy.
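The visible-to-band pipeline summarized above (normalize, train on visible images, test on a spectral band) can be illustrated with a minimal sketch. It rests on several assumptions that are not taken from the paper: a single gamma-plus-standardization step stands in for the 22 photometric normalization methods, and a plain collaborative representation classifier (ridge-regularized least squares with class-wise residuals) stands in for ProCRC. The function names `normalize`, `crc_fit`, and `crc_predict` and the parameters `gamma` and `lam` are hypothetical.

```python
import numpy as np


def normalize(img, gamma=0.5):
    """Illustrative photometric normalization (an assumption, not a method
    named in the paper): min-max scaling, gamma correction, then
    zero-mean / unit-variance standardization."""
    img = np.asarray(img, dtype=np.float64)
    lo, hi = img.min(), img.max()
    scaled = (img - lo) / (hi - lo + 1e-12)      # map intensities to [0, 1]
    corrected = scaled ** gamma                   # compress the dynamic range
    return (corrected - corrected.mean()) / (corrected.std() + 1e-12)


def crc_fit(X, lam=0.1):
    """Precompute the projection P = (X^T X + lam * I)^{-1} X^T.

    X has shape (d, n): each column is one vectorized training image.
    This is plain collaborative representation, a simplified stand-in
    for ProCRC.
    """
    n = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n), X.T)


def crc_predict(X, labels, P, y):
    """Code the probe y over all training samples, then return the class
    whose samples reconstruct y with the smallest residual."""
    alpha = P @ y                                 # collaborative coefficients
    classes = np.unique(labels)
    residuals = [
        np.linalg.norm(y - X[:, labels == c] @ alpha[labels == c])
        for c in classes
    ]
    return classes[int(np.argmin(residuals))]


if __name__ == "__main__":
    # Synthetic stand-in data: two class templates, "visible" training
    # images, and a "band" probe with a different gain and offset.
    rng = np.random.default_rng(0)
    templates = [rng.random((8, 8)), rng.random((8, 8))]
    columns, labels = [], []
    for c, t in enumerate(templates):
        for _ in range(5):
            noisy = t + 0.01 * rng.standard_normal(t.shape)
            columns.append(normalize(noisy).ravel())
            labels.append(c)
    X = np.stack(columns, axis=1)
    labels = np.array(labels)
    P = crc_fit(X)
    probe = normalize(0.5 * templates[1] + 0.2).ravel()
    print("predicted class:", crc_predict(X, labels, P, probe))
```

In this sketch the normalization is applied identically to training and probe images, which is the role the abstract assigns to photometric normalization: reducing the intensity mismatch between the two domains before the classifier sees the data. The synthetic gain-and-offset shift is only a toy proxy for a real spectral gap.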

Ethics declarations

Conflict of interest

On behalf of all the authors, the corresponding author states that there is no conflict of interest.

About this article

Cite this article

Vetrekar, N., Naik, A. & Gad, R.S. Collaborative Representation for Visible to Band Gender Classification Using Multi-spectral Imaging: Extensive Evaluations by Exploring 22 Photometric Normalization Methods. SN COMPUT. SCI. 2, 478 (2021). https://doi.org/10.1007/s42979-021-00910-3

DOI: https://doi.org/10.1007/s42979-021-00910-3