Abstract

What is the relationship between language and emotion? The work that fills the pages of this special issue draws from interdisciplinary domains to weigh in on the relationship between language and emotion in semantics, cross-linguistic experience, development, emotion perception, emotion experience and regulation, and neural representation. These important new findings chart an exciting path forward for future basic and translational work in affective science.

What does language have to do with emotion? Estimates place the origin of spoken language around 50,000–150,000 years ago, a period that coincides with the speciation of Homo sapiens (Botha & Knight, 2009; Perreault & Mathew, 2012). Estimates on the origin of emotion in our evolutionary past are less clear. Although even the earliest nervous systems mitigated threats and acquired rewards, some scholars argue that the experience of discrete and conscious emotions only emerged with brain structures and functions unique to H. sapiens (Barrett, 2017; Ledoux, 2019). Indeed, some scholars hypothesize that the evolutionary pressures and adaptations that may have led to spoken language (caregiving, living in large social groups; de Boer, 2011; Dunbar, 2011; Falk, 2011) may be the same that scaffolded the development of discrete and conscious emotions in H. sapiens (Barrett, 2017; Ledoux, 2019). Correspondingly, H. sapiens’ ability to make discrete meaning of their affective states and to communicate about those states to others could itself have conferred evolutionary fitness (Bliss-Moreau, 2017). When taken together, these anthropological findings suggest that the relationship between language and emotion may be as deep as the origin of our species. They also suggest that the answer to the question “what does language have to do with emotion?” requires a truly interdisciplinary lens that blends questions and tools from fields as far-ranging as biological anthropology, cultural anthropology, linguistics, sociology, psychology, and neuroscience. Addressing this question is the purpose of this special issue of Affective Science.

In recent decades, there has been a veritable explosion of interdisciplinary research on language and emotion within modern affective science. For instance, linguistics research shows that almost all aspects of human spoken language communicate emotion, including prosody, phonetics, semantics, grammar, discourse, and conversation (Majid, 2012). Computational tools applied to natural human language reveal affective meanings that predict outcomes ranging from health and well-being to political behavior (see Jackson et al., 2020). Anthropological and linguistic research demonstrates differences in the meaning of emotion words around the world (Jackson et al., 2019; Lutz, 1988; Wierzbicka, 1992), which could contribute to observed cross-cultural differences in emotional experiences (Mesquita et al., 2016) and emotional expressions (Jack et al., 2016) and have implications for cross-cultural communication and diplomacy (see Barrett, 2017). Research in developmental psychology demonstrates that caregivers’ use of emotion words in conversation with infants longitudinally predicts emotion understanding and self-regulatory abilities in later childhood (see Shablack & Lindquist, 2019). In adults, psychological, physiological, and neuroscience research shows that accessing emotion words alters emotion perception, self-reported experiences of emotion, the physiology and brain activity associated with emotion, and emotion regulation (Lindquist et al., 2016; Torre & Lieberman, 2018). It is thus no surprise that being able to specifically describe one’s emotional states predicts a range of physical and mental health outcomes (Kashdan et al., 2015) and that expressive writing can have a therapeutic effect (Pennebaker, 1997).

It was this burst of far-reaching and interesting recent findings that led us to propose a special issue on language and emotion at Affective Science. This special issue is the first of its kind at our still nascent journal, and I am grateful to the scholars who submitted 50 proposals for consideration in early 2020, the editorial team that helped evaluate them, and the authors, reviewers, and copyeditors of the 11 articles that now appear herein (all of whom accomplished this feat during the trials of the ongoing COVID-19 pandemic). The result is an issue that builds on foundational knowledge about the relationship between language and emotion with a set of interdisciplinary tools that weigh in on new questions about this topic. The papers herein address the role of affect in semantics; the role of bilingualism in pain, emotional experiences, and emotion regulation; the role of language in the development of emotion knowledge; the role of labeling in emotion regulation; the role of language in the visual perception of emotion on faces; and the shared functional neuroanatomy of language and emotion. In this brief introduction to the special issue, I discuss why these findings are so fascinating and important by situating them in the existing literature and looking to still-unanswered questions. I close by looking forward to the future of research on language and emotion and the bright future of affective science.

What Is the Role of Emotion in Semantics?

Perhaps one of the most essential questions about the relationship between language and emotion revolves around the information that language conveys about emotion. Spoken language expresses experiences, which means that studying language can shine light on the nature of emotions. Indeed, almost every aspect of language, from prosody to discourse, communicates emotion (Majid, 2012). Computational linguistics approaches can now use tools from computer science and mathematics to summarize huge databases of spoken language to reveal the semantic meanings conveyed by language on an unprecedented scale (see Jackson et al., 2020). Perhaps most interesting among those endeavors is the ability to use the structure of spoken language to ask questions about how humans understand emotion concepts in particular and concepts in general (i.e., semantics).

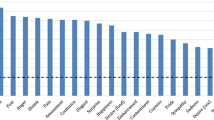

Especially when analyzed at scale, linguistic databases offer an unprecedented opportunity to explore human mental life. For instance, we (Jackson et al., 2019) recently used a computational linguistics approach to examine humans’ understanding of 24 emotion concepts (e.g., “anger,” “sadness,” “fear,” “love,” “grief,” “joy”) across 2,474 languages spanning the globe. We used colexification—a phenomenon in which multiple concepts within a language are named by the same word—as an index of semantic similarity between concepts within a language family. We subsequently constructed networks representing universal and language-specific representations of emotion semantics—that is, which emotion concepts were understood as similar and which were understood as different within a language family. We observed significant cross-linguistic variability in the semantic structure of emotion across the 19 language families in our database. Interestingly, cross-linguistic similarity in emotion semantics was predicted by geographic proximity between language families, suggesting the potential role of cultural contact in shaping a language family’s understanding of emotions. Notably, we also observed a universal semantic structure for emotion concepts; emotion similarity was predicted by the affective dimensions of valence and arousal.
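To make the idea of colexification as an index of semantic similarity concrete, the following is a minimal sketch under stated assumptions, not the pipeline used by Jackson et al. (2019). The toy lexicons, the language and word names, and the helper functions `colexification_counts` and `neighbors` are all hypothetical and introduced only for illustration. The sketch counts, for each pair of concepts, how many languages name both concepts with the same word, and treats that count as the weight of an edge in a concept network.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy lexicons (not real data): language -> word form -> concepts
# that the word names. A word naming two or more concepts colexifies them.
TOY_LEXICONS = {
    "lang_a": {"w1": {"anger", "envy"}, "w2": {"fear"}},
    "lang_b": {"w1": {"anger", "envy"}, "w2": {"grief", "regret"}},
    "lang_c": {"w1": {"fear", "anxiety"}, "w2": {"anger"}},
}


def colexification_counts(lexicons):
    """Count, for each concept pair, how many languages colexify that pair."""
    counts = Counter()
    for words in lexicons.values():
        pairs_in_language = set()
        for concepts in words.values():
            # Sort so that each unordered pair has one canonical key.
            for pair in combinations(sorted(concepts), 2):
                pairs_in_language.add(pair)
        # Each language contributes at most one count per concept pair.
        counts.update(pairs_in_language)
    return counts


def neighbors(counts, concept):
    """List concepts colexified with `concept`, most frequent first."""
    found = []
    for (a, b), n in counts.items():
        if concept == a:
            found.append((b, n))
        elif concept == b:
            found.append((a, n))
    return sorted(found, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    edge_weights = colexification_counts(TOY_LEXICONS)
    print(neighbors(edge_weights, "anger"))
```

In this toy network, a higher edge weight means that more languages name the two concepts with a single word, which is the sense in which colexification can stand in for semantic similarity. A real analysis would also need to handle language relatedness and sampling, which this sketch ignores.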

In this issue, DiNatale et al. (in press) used computational linguistics to show that valence and arousal do not just undergird the meaning of emotion concepts; these affective dimensions may undergird all linguistic concepts. Osgood (1952) classically demonstrated that valence, arousal, and dominance predict the extent to which people judge concepts as semantically similar. DiNatale et al. (in press) used a computational linguistics approach to show, for the first time at scale, that colexifications of roughly 40,000 concepts in 2,456 languages are predicted by the affective dimensions of valence, arousal, and dominance. This study exemplifies how computational tools and large corpora of text can be used to delve deeper into the relationship between language and emotion. More specifically, it provides strong empirical evidence at scale that affect underlies word meaning around the globe and that colexification is a useful index of semantic similarity. These findings are fascinating in that they suggest that knowing what something is may fundamentally depend on knowing its impact on one’s emotions.

How Does Speaking Multiple Languages Alter Emotion?

Computational linguistics of course builds on linguistics, anthropology, sociology, cross-cultural psychology, and other social sciences that use ethnography and cross-cultural comparisons to understand cross-linguistic differences and similarities in emotion. Classic accounts of the 19th and 20th centuries (Darwin, 1872/1965; Levy, 1984; Lutz, 1988; Mead, 1930) documented cross-cultural differences and similarities in emotion concepts (see Russell, 1991 for a review). Similarly, qualitative comparisons between languages (Wierzbicka, 1992) documented both variability in the semantic meaning of emotion concepts across lexicons and core commonalities.

More recently, cross-cultural psychology has addressed cross-cultural similarities and differences in the experience, perception, regulation, and expression of emotions (Chentsova-Dutton, 2020; Kitayama & Markus, 1994; Mesquita et al., 2016; Mesquita & Boiger, 2014; Scherer, 1997; Tsai, 2007). Although this work studies people from different cultures (who largely speak different languages), relatively little has explicitly addressed the role of language in emotion. Pavlenko’s (2008) work on bilingualism and emotion examines how access to different languages can subserve cross-cultural differences in emotion but much more work is needed in our increasingly bicultural world.

Two papers in this special issue specifically address how bilingualism alters affective states. Gianola et al. (in press) examined how bilingualism can impact the intensity of pain by assessing bilingual Latinx participants’ experience of pain when reporting in Spanish versus English. They found that participants reported more intense thermal pain when reporting in the language consistent with their dominant cultural identity (Spanish vs. English). This effect was mediated by skin conductance responses during pain, such that speaking in Spanish resulted in greater skin conductance and greater intensity pain for Latinx participants.

Zhou et al. (in press) investigated in this issue how both culture and language impact the pattern of daily self-reported emotional experiences in Chinese–English bilinguals. They show that the patterns of Chinese and English monolinguals’ emotion self-reports are distinct, but that Chinese–English bilinguals’ self-reported emotion patterns are a blend of their monolingual counterparts’ patterns. Answering the survey in Chinese versus English exacerbated the difference between the emotion patterns of Chinese bilinguals and English monolinguals; this effect was also influenced by the extent of exposure to speakers’ non-native culture. Collectively, these findings are beginning to show that language is not merely a form of communication, but that speaking different languages may differentially impact the experience of affective states.

How Does Learning About Emotion Words Alter the Development of Emotion?

Different languages may impact emotion because they encode different emotion concepts. Learning a language helps speakers acquire the emotion concepts relevant to their culture. Indeed, developmental psychology research demonstrates that early discourse with adults helps children to acquire and develop a complex understanding of the emotion concepts encoded in their language (see Shablack & Lindquist, 2019). For instance, early findings showed that parents’ use of language about emotions in child-directed speech longitudinally predicted children’s understanding of others’ emotions at 36 months (Dunn et al., 1991). Other work found that language abilities, more generally, predicted emotion understanding (Pons et al., 2003). Widen and Russell (2003, 2008) showed that the ability of young children (aged 2–7) to perceive emotions on faces correlated with the number of emotion category labels that those children produced in spoken discourse. These findings furthermore showed that children’s understanding of the meaning of labels such as “anger,” “sadness,” “fear,” and “disgust” started quite broadly in terms of valence but that it narrowed over time to be more discrete and categorical. More recently, Nook et al. (2020) demonstrated a developmental shift in the structure of emotion knowledge, such that children’s understanding of linguistic emotion categories starts unidimensionally in terms of valence but becomes more multidimensional from childhood to adolescence.

What still remains unknown is how infants and children learn to map emotion labels to emotional behaviors and sensations in the world, and how their understanding of emotions ultimately becomes adult-like through experience and learning. Although such label-concept mapping has been well studied in object perception, it is relatively unstudied in emotion (see Hoemann et al., 2020). In this issue, Ruba et al. (in press) weighed in on this question by examining how 1-year-olds learn to map novel labels to facial expressions. They found that 18-month-olds are readily able to match labels to human facial muscle movements but that vocabulary size and stimulus complexity predict 14-month-olds’ ability to do so. What is perhaps most interesting about these findings is not that 18-month-olds can map labels to facial muscle movements, but that variation in linguistic abilities and stimulus complexity predicts 14-month-olds’ ability to do so. These findings are consistent with other research showing that receptive vocabulary also predicts 14-month-olds’ ability to categorize physical objects in a more complex, abstract, goal-directed, and situated manner (e.g., that blocks can be categorized by either shape or material; Ellis & Oakes, 2006). Taken together, these findings may suggest that emotion categories are more akin to abstract categories that are a product of social learning than concrete categories that exist in the physical world.

In this special issue, Grosse et al. (in press) also provide important new evidence on how children aged 4–11 learn to use words to communicate about emotion. They reveal that children’s use of a range of emotion labels to describe vignettes increases with age, but that children fail to show an adult-like understanding of emotion labels even by age 11. Critically, they build upon the prior developmental work by showing that the more children hear certain labels for emotion in adults’ child-directed discourse, the earlier and more frequently children use those labels to describe emotional situations. Collectively, the work of Ruba et al. (in press) and Grosse et al. (in press) is beginning to show exactly how exposure to language about emotion contributes to more complex emotional abilities from infancy onward.

How Does Language Alter Emotion Perception?

The research makes increasingly clear that language represents semantic content related to emotion, impacts the emotional reports (and perhaps even the physiological intensity) of affective experiences for bilingual individuals, and influences emotion perception and understanding during early development. These findings may still leave open the possibility that language has a relatively superficial effect on emotion, insofar as it only impacts emotion after the fact during communication. Yet a body of growing research suggests that momentary access to emotion words may also impact the content of individuals’ emotional perceptions (Lindquist et al., 2016). Studies that use cognitive techniques such as semantic satiation or priming to decrease or increase accessibility to the meaning of emotion category labels demonstrate respective impairments or facilitation in participants’ ability to perceive emotion on others’ faces; these effects occur even in tasks that do not explicitly require verbal labeling during responding. For instance, in the first study to this effect, we (Lindquist et al., 2006) used semantic satiation, in which participants repeat a word relevant to an upcoming perceptual judgment (e.g., “anger”) out loud 30 times, to temporarily impair participants’ access to the semantic meaning of emotion category labels. Participants then made a perceptual judgment about whether two facial portrayals of the named emotion category (e.g., anger) matched one another or not. We found that participants were slower and less accurate to perceptually match the two angry faces on satiation but not control trials (in which they had repeated the word out loud only 3 times). Multiple studies have since replicated and extended this effect.
For instance, we have shown that semantic satiation impairs an emotional facial portrayal’s ability to perceptually prime itself in the subsequent moments (Gendron et al., 2012) and that individuals with semantic dementia, who have permanently reduced access to the meaning of words following neurodegeneration, can no longer freely sort facial portrayals of emotion into six discrete categories of anger, disgust, fear, happiness, sadness, and neutral (Lindquist et al., 2014). In contrast, studies found that priming emotion labels facilitates and biases the perception of emotional facial portrayals. For instance, verbalizing the emotional meaning of facial muscle movements with an emotion label (e.g., “anger”) biases perceptual memory of the face towards a more prototypical representation of the facial muscle movements associated with that category (Halberstadt et al., 2009). Access to emotion category labels also speeds and sensitizes perceptions of facial portrayals of emotion towards a more accurate representation of the observed face (Nook et al., 2015). Moreover, learning novel emotion category labels induces categorical perception of unfamiliar facial behaviors (Fugate et al., 2010) and helps individuals to acquire and update their perceptual categories of emotional facial behaviors (Doyle & Lindquist, 2018).

Collectively, these findings lead to the hypothesis that emotion category labels serve as a form of context that helps disambiguate the meaning of others’ affective behaviors (Barrett et al., 2007; Lindquist et al., 2015; Lindquist & Gendron, 2013). Two papers in this special issue are the first to my knowledge to show that emotion category labels are superior to other forms of context when participants are perceiving emotion in facial portrayals. Lecker and Aviezer (in press) presented a study that extends their prior work demonstrating that body postures serve as a form of context for understanding the meaning of emotional facial portrayals. They found that labeling face–body pairs with emotion category labels boosts the effect of the body on facial emotion perception when compared to trials in which participants use a nonverbal response option; these findings suggest that emotion concepts are likely used to make meaning of both facial and body cues as instances of emotion categories, and that body cues may evoke contextual effects on the perception of facial emotion, in part, because they prime emotion concepts. Indeed, one interpretation of the effect of other forms of context on perception of facial emotion is that these contexts prime emotion categories. To test this hypothesis, we (Doyle et al., in press) explicitly compared the effect of visual scenes versus emotion category labels as primes for perceptions of facial emotion. Mirroring the findings of Lecker and Aviezer (in press), we found that priming the words “sadness” and “disgust” facilitates the perception of those emotions in facial portrayals to a greater extent than do visual scenes conveying sad versus disgusting contexts (e.g., a funeral, cockroaches on food). 
We further demonstrate that basic-level categories such as “sadness” and “disgust” more effectively prime facial emotion perception than subordinate-level categories such as “misery” or “repulsion,” consistent with the notion that basic-level categories are those that most efficiently guide categorization (Rosch, 1973).

Finally, in this issue Schwering et al. (in press) extended these “language as context” findings by showing how exposure to emotion words via fiction reading may help individuals to build the cache of emotion concepts that in turn guide emotion perception. Through a large-scale corpus analysis, the authors demonstrate that fiction specifically contains linguistic information related to the contents of others’ minds; exposure to fiction, in turn, predicts participants’ ability to perceive emotion in facial portrayals. These findings mirror the developmental evidence that exposure to emotion words in childhood predicts emotion understanding (Dunn et al., 1991; Grosse et al., in press) and suggest that exposure to emotion words via fiction may continue to facilitate greater emotion perception ability across the lifespan.

How Does Language Alter Emotion Experience and Regulation?

Finally, there is growing evidence that language not only alters emotion perception on others’ faces, but that it also alters how a person experiences and regulates emotional sensations within their own bodies. Like the perception findings, these findings suggest that the effect of language on emotion goes beyond mere communication insofar as access to emotion labels during emotional experience impacts behavioral, physiological, and neural correlates of emotion and emotion regulation. Some research suggests that labeling one’s affective state solidifies it, causing participants to experience a more ambiguous affective state as a discrete and specific instance of an emotion category. For instance, we (Lindquist & Barrett, 2008) found that priming participants with the linguistic emotion category “fear” (vs. “anger” or a neutral control prime) prior to an unpleasant experience made participants exhibit greater risk aversion, which is prototypically associated with experiences of fear but not anger. Similarly, Kassam and Mendes (2013) demonstrated that asking participants to label (vs. not label) their experience of anger during an unpleasant interview may have solidified participants’ experience of anger. Participants who labeled versus did not label their feelings had increased cardiac output and vasoconstriction, which are both associated with experiences of anger.

It may be that accessing emotion labels allows a person to conceptualize their affective state in a discrete and context-appropriate manner and that doing so confers benefits for well-being. Indeed, an abundance of findings shows that individuals who use emotion labels in a discrete and specific manner (i.e., those who are high in “emotional granularity” also known as “emotion differentiation”; e.g., Barrett, 2004; Barrett et al., 2001; see Kashdan et al., 2015 for a review) experience less psychopathology (Demiralp et al., 2012), experience better outcomes following psychotherapy (e.g., length of time to relapse in substance use disorder; Anand et al., 2017), and engage in less interpersonal aggression (Pond et al., 2012). Moreover, individuals who are high in granularity exhibit brain electrophysiology associated with greater semantic retrieval and cognitive control during emotional experiences (Lee et al., 2017) and use more specific (Barrett et al., 2001) and effective (Kalokerinos et al., 2019) emotion regulation strategies during intense instances of negative emotion.

Studies that explicitly manipulate the presence versus absence of emotion labels and examine the effect on the neural correlates of emotion corroborate the notion that access to emotion labels facilitates the regulation of affective states. Early neuroimaging studies (Hariri et al., 2000; Lieberman et al., 2007) demonstrated that labeling facial expressions with emotion words reduced amygdala activity to those stimuli, an effect that is mediated by connectivity between prefrontal brain regions involved in semantic retrieval and the amygdala (Torrisi et al., 2013). Our recent meta-analysis (Brooks et al., 2017) revealed that even the incidental presence of emotion labels across 386 neuroimaging studies of emotion (i.e., as response options or instructions in emotional tasks) results in increased activation of regions involved in semantic retrieval and decreases in amygdala activation.

These findings collectively explain why labeling one’s affective state reduces the intensity of emotional experiences (Lieberman et al., 2011) and why expressive writing can be therapeutic (Pennebaker, 1997), but much remains to be understood about the complex relationship between language, emotion experience, and emotion regulation. Two papers in this special issue delve deeper into these questions. Nook et al. (in press) showed that labeling one’s emotional state prior to engaging in explicit goal-directed emotion regulation strategies such as reappraisal and mindfulness solidifies that emotion, such that it becomes more difficult to subsequently regulate. These findings are important in terms of the role of language in emotion construction and explicit regulation; whereas accessing emotion labels may lead to more discrete and situationally relevant affective states, the downside might be that it becomes harder to “re-think” or transcend these more discrete states after the fact. In contrast, Vives et al. (in press) asked bilingual participants to engage in affect labeling, an implicit non-goal directed form of emotion regulation. They replicate classic affect labeling findings (Hariri et al., 2000; Lieberman et al., 2007) but show that affect labeling in one’s second language has an inert effect on amygdala activity. These findings converge with evidence that first languages are more affectively impactful than second languages (Harris et al., 2003) and suggest some primacy for the language in which one first learned about emotions in modulating the intensity or quality of affective states.

Finally, the findings of Vives et al. (in press) raise questions about how to best understand the neural interaction of language and emotion. One major question is whether language is separate from and merely interactive with emotional states, or whether the semantic representations supported by language ultimately help constitute discrete emotional states by transforming general affective sensations into instances of specific emotion categories; this is a question that has both theoretical and practical implications. As we (Satpute & Lindquist, in press) discuss in our theoretical review, neuroscience research may uniquely weigh in on this question by addressing how brain regions associated with representing semantic knowledge about emotion categories dynamically interact with and alter activity within brain regions associated with representing affective sensations.

Looking Forward

As this brief look at the literature shows, there is still much—from a basic science lens—to understand about how language and emotion interact. Language and emotion may have interacted in the minds of H. sapiens for millennia, yet we have only scratched the surface of empirically examining their complex relationship and its implications for human behavior. Papers in this special issue are especially pushing the dial on our knowledge by further examining this question in terms of semantics, cross-linguistic experience, development, emotion perception, emotion experience and regulation, and the neural representation of emotion.

In addition, translational questions about the relationship between language and emotion remain wide open. There is early evidence that language has an important impact on emotion in psychotherapy (Kircanski et al., 2012) and education (Brackett et al., 2019). Research in computing (Ong et al., 2019) is increasingly seeking to understand the interplay of language and emotion. Finally, a better understanding of the relationship between language and emotion can weigh in on an understanding of the human condition, more generally (Jackson et al., 2020). These are ultimately interdisciplinary questions that we as affective scientists are especially well poised to address, and I look forward to what we continue to learn as affective science continues to expand.

References

Anand, D., Chen, Y., Lindquist, K. A., & Daughters, S. B. (2017). Emotion differentiation predicts likelihood of initial lapse following substance use treatment. Drug and Alcohol Dependence, 180, 439–444. https://doi.org/10.1016/j.drugalcdep.2017.09.007.

Barrett, L. F. (2004). Feelings or words? Understanding the content in self-report ratings of experienced emotion. Journal of Personality and Social Psychology, 87(2), 266–281.

Barrett, L. F. (2017). How emotions are made: The secret life of the brain. Houghton Mifflin Harcourt.

Barrett, L. F., Gross, J., Christensen, T. C., & Benvenuto, M. (2001). Knowing what you’re feeling and knowing what to do about it: Mapping the relation between emotion differentiation and emotion regulation. Cognition & Emotion, 15(6), 713–724. https://doi.org/10.1080/02699930143000239.

Barrett, L. F., Lindquist, K. A., & Gendron, M. (2007). Language as context for the perception of emotion. Trends in Cognitive Sciences, 11(8), 327–332. https://doi.org/10.1016/j.tics.2007.06.003.

Bliss-Moreau, E. (2017). Constructing nonhuman animal emotion. Current Opinion in Psychology, 17, 184–188. https://doi.org/10.1016/j.copsyc.2017.07.011.

Botha, R., & Knight, C. (2009). The cradle of language. Oxford University Press.

Brackett, M. A., Bailey, C. S., Hoffmann, J. D., & Simmons, D. N. (2019). RULER: A theory-driven, systemic approach to social, emotional, and academic learning. Educational Psychologist, 54(3), 144–161.

Brooks, J. A., Shablack, H., Gendron, M., Satpute, A. B., Parrish, M. H., & Lindquist, K. A. (2017). The role of language in the experience and perception of emotion: A neuroimaging meta-analysis. Social Cognitive and Affective Neuroscience, 12(2), 169–183.

Chentsova-Dutton, Y. (2020). Emotions in cultural dynamics. Emotion Review, 12(2), 47. https://doi.org/10.1177/1754073920921467.

Darwin, C. (1965). The expression of the emotions in man and animals. University of Chicago Press. (Original work published 1872).

De Boer, B. (2011). Infant‐directed speech and language evolution. In K.R. Gibson and M. Tallerman (Eds.), The Oxford Handbook of Language Evolution. https://doi.org/10.1093/oxfordhb/9780199541119.013.0033.

Demiralp, E., Thompson, R. J., Mata, J., Jaeggi, S. M., Buschkuehl, M., Barrett, L. F., Ellsworth, P. C., Demiralp, M., Hernandez-Garcia, L., Deldin, P. J., Gotlib, I. H., & Jonides, J. (2012). Feeling blue or turquoise? Emotional differentiation in major depressive disorder. Psychological Science, 23(11), 1410–1416. https://doi.org/10.1177/0956797612444903.

DiNatale, A., Pellert, M., & Garcia, D. (in press). Colexification networks encode affective meaning. Affective Science.

Doyle, C. M., & Lindquist, K. A. (2018). When a word is worth a thousand pictures: Language shapes perceptual memory for emotion. Journal of Experimental Psychology: General, 147(1), 62–73.

Doyle, C. M., Gendron, M., & Lindquist, K. A. (in press). Language is a unique context for emotion perception. Affective Science, 22.

Dunbar, R. I. M. (2011). Gossip and the social origins of language. In K.R. Gibson and M. Tallerman (Eds.), The Oxford Handbook of Language Evolution. https://doi.org/10.1093/oxfordhb/9780199541119.013.0036.

Dunn, J., Brown, J., & Beardsall, L. (1991). Family talk about feeling states and children’s later understanding of others’ emotions. Developmental Psychology, 27(3), 448–455.

Ellis, A. E., & Oakes, L. M. (2006). Infants flexibly use different dimensions to categorize objects. Developmental Psychology, 42, 1000–1011. https://doi.org/10.1037/F0012-1649.42.6.1000.

Falk, D. (2011). The role of hominin mothers and infants in prelinguistic evolution. In K.R. Gibson and M. Tallerman (Eds.), The Oxford Handbook of Language Evolution. https://doi.org/10.1093/oxfordhb/9780199541119.013.0032.

Fugate, J., Gouzoules, H., & Barrett, L. F. (2010). Reading chimpanzee faces: Evidence for the role of verbal labels in categorical perception of emotion. Emotion, 10(4), 544.

Gendron, M., Lindquist, K. A., Barsalou, L., & Barrett, L. F. (2012). Emotion words shape emotion percepts. Emotion, 12(2), 314–325.

Gianola, M., Llabre, M., & Losin, E. A. R. (in press). Effects of language context and cultural identity on the pain experience of Spanish–English bilinguals. Affective Science. https://doi.org/10.1007/s42761-020-00021-x.

Grosse, G., Steubel, B., Gunenhauser, C., & Saalbach, H. (in press). Let’s talk about emotions: The development of children’s emotion vocabulary from 4 to 11 years of age. Affective Science.

Halberstadt, J., Winkielman, P., Niedenthal, P. M., & Dalle, N. (2009). Emotional conception. Psychological Science, 20(10), 1254–1261. https://doi.org/10.1111/j.1467-9280.2009.02432.x.

Hariri, A. R., Bookheimer, S. Y., & Mazziotta, J. C. (2000). Modulating emotional responses: Effects of a neocortical network on the limbic system. Neuroreport, 11(1), 43–48.

Harris, C. L., Ayçiçeǧi, A., & Gleason, J. B. (2003). Taboo words and reprimands elicit greater autonomic reactivity in a first language than in a second language. Applied Psycholinguistics, 24, 561–579. https://doi.org/10.1017/S0142716403000286.

Hoemann, K., Wu, R., LoBue, V., Oakes, L. M., Xu, F., & Barrett, L. F. (2020). Developing an understanding of emotion categories: Lessons from objects. Trends in Cognitive Sciences, 24(1), 39–51.

Jack, R. E., Sun, W., Delis, I., Garrod, O. G., & Schyns, P. G. (2016). Four not six: Revealing culturally common facial expressions of emotion. Journal of Experimental Psychology: General, 145(6), 708–730.

Jackson, J. C., Watts, J., Henry, T. R., List, J.-M., Forkel, R., Mucha, P. J., Greenhill, S. J., Gray, R. D., & Lindquist, K. A. (2019). Emotion semantics show both cultural variation and universal structure. Science, 366(6472), 1517–1522.

Jackson, J., Watts, J., List, J.-M., Drabble, R., & Lindquist, K. (2020). From text to thought: How analyzing language can advance psychological science [Preprint]. PsyArXiv Preprints, a.

Kalokerinos, E. K., Erbas, Y., Ceulemans, E., & Kuppens, P. (2019). Differentiate to regulate: Low negative emotion differentiation is associated with ineffective use but not selection of emotion-regulation strategies. Psychological Science, 30(6), 863–879. https://doi.org/10.1177/0956797619838763.

Kashdan, T. B., Barrett, L. F., & McKnight, P. E. (2015). Unpacking emotion differentiation: Transforming unpleasant experience by perceiving distinctions in negativity. Current Directions in Psychological Science, 24(1), 10–16. https://doi.org/10.1177/0963721414550708.

Kassam, K. S., & Mendes, W. B. (2013). The effects of measuring emotion: Physiological reactions to emotional situations depend on whether someone is asking. PLoS One, 8(6), e64959.

Kircanski, K., Lieberman, M. D., & Craske, M. G. (2012). Feelings into words: Contributions of language to exposure therapy. Psychological Science, 23(10), 1086–1091. https://doi.org/10.1177/0956797612443830.

Kitayama, S. E., & Markus, H. R. E. (1994). Emotion and culture: Empirical studies of mutual influence. American Psychological Association.

Lecker, M., & Aviezer, H. (in press). More than words? Semantic emotion labels boost context effects on faces. Affective Science.

Ledoux, J. (2019). The deep history of ourselves: The four-billion-year story of how we got conscious brains. Penguin Books.

Lee, J. Y., Lindquist, K. A., & Nam, C. S. (2017). Emotional granularity effects on event-related brain potentials during affective picture processing. Frontiers in Human Neuroscience, 11. https://doi.org/10.3389/fnhum.2017.00133.

Levy, R. (1984). The emotions in comparative perspective. In K. R. Scherer & P. Ekman (Eds.), Approaches to emotion (pp. 397–412). Lawrence Erlbaum Associates.

Lieberman, M. D., Eisenberger, N. I., Crockett, M. J., Tom, S. M., Pfeifer, J. H., & Way, B. M. (2007). Putting feelings into words. Psychological Science, 18(5), 421.

Lieberman, M. D., Inagaki, T. K., Tabibnia, G., & Crockett, M. J. (2011). Subjective responses to emotional stimuli during labeling, reappraisal, and distraction. Emotion, 11(3), 468–480.

Lindquist, K. A., & Barrett, L. F. (2008). Constructing emotion: The experience of fear as a conceptual act. Psychological Science, 19(9), 898–903. https://doi.org/10.1111/j.1467-9280.2008.02174.x.

Lindquist, K. A., & Gendron, M. (2013). What’s in a word? Language constructs emotion perception. Emotion Review, 5(1), 66–71.

Lindquist, K. A., Barrett, L. F., Bliss-Moreau, E., & Russell, J. A. (2006). Language and the perception of emotion. Emotion, 6(1), 125–138. https://doi.org/10.1037/1528-3542.6.1.125.

Lindquist, K. A., Gendron, M., Barrett, L. F., & Dickerson, B. C. (2014). Emotion perception, but not affect perception, is impaired with semantic memory loss. Emotion, 14(2), 375–387.

Lindquist, K. A., Satpute, A. B., & Gendron, M. (2015). Does language do more than communicate emotion? Current Directions in Psychological Science, 24(2), 99–108.

Lindquist, K. A., Gendron, M., & Satpute, A. B. (2016). Language and emotion. Handbook of emotions (4th ed.). The Guilford Press.

Lutz, C. (1988). Ethnographic perspectives on the emotion lexicon. In V. Hamilton, G. H. Bower, & N. H. Frijda (Eds.), Cognitive perspectives on emotion and motivation (pp. 399–419). Springer. https://doi.org/10.1007/978-94-009-2792-6_16.

Majid, A. (2012). Current emotion research in the language sciences. Emotion Review, 4(4), 432–443. https://doi.org/10.1177/1754073912445827.

Mead, M. (1930). Growing up in New Guinea: A comparative study of primitive education. New York: William Morrow.

Mesquita, B., & Boiger, M. (2014). Emotions in context: A sociodynamic model of emotions. Emotion Review, 6(4), 298–302. https://doi.org/10.1177/1754073914534480.

Mesquita, B., Boiger, M., & De Leersnyder, J. (2016). The cultural construction of emotions. Current Opinion in Psychology, 8, 31–36. https://doi.org/10.1016/j.copsyc.2015.09.015.

Nook, E. C., Lindquist, K. A., & Zaki, J. (2015). A new look at emotion perception: Concepts speed and shape facial emotion recognition. Emotion, 15, 569–578.

Nook, E. C., Stavish, C. M., Sasse, S. F., Lambert, H. K., Mair, P., McLaughlin, K. A., & Somerville, L. H. (2020). Charting the development of emotion comprehension and abstraction from childhood to adulthood using observer-rated and linguistic measures. Emotion, 20(5), 773–792. https://doi.org/10.1037/emo0000609.

Nook, E. C., Satpute, A. B., & Ochsner, K. N. (in press). Emotion naming impedes both cognitive reappraisal and mindful acceptance strategies of emotion regulation. Affective Science.

Ong, D. C., Wu, Z., Zhi-Xuan, T., Reddan, M., Kahhale, I., Mattek, A., & Zaki, J. (2019). Modeling emotion in complex stories: The Stanford emotional narratives dataset. IEEE Transactions on Affective Computing, 1–1. https://doi.org/10.1109/TAFFC.2019.2955949.

Osgood, C. E. (1952). The nature and measurement of meaning. Psychological Bulletin, 49(3), 197–237.

Pavlenko, A. (2008). Emotion and emotion-laden words in the lexicon. Bilingualism: Language and Cognition, 11, 147–164. https://doi.org/10.1017/S1366728908003283.

Pennebaker, J. W. (1997). Writing about emotional experiences as a therapeutic process. Psychological Science, 8(3), 162–166.

Perreault, C., & Mathew, S. (2012). Dating the origin of language using phonemic diversity. PLoS ONE, 7(4). https://doi.org/10.1371/journal.pone.0035289.

Pond, R. S., Kashdan, T. B., DeWall, C. N., Savostyanova, A., Lambert, N. M., & Fincham, F. D. (2012). Emotion differentiation moderates aggressive tendencies in angry people: A daily diary analysis. Emotion, 12(2), 326–337. https://doi.org/10.1037/a0025762.

Pons, F., Lawson, J., Harris, P. L., & De Rosnay, M. (2003). Individual differences in children’s emotion understanding: Effects of age and language. Scandinavian Journal of Psychology, 44(4), 347–353.

Rosch, E. H. (1973). On the internal structure of perceptual and semantic categories. In T.E. Moore (Ed.), Cognitive development and the acquisition of language. New York: Academic Press.

Ruba, A., Harris, L. T., & Wilbourne, M. P. (in press). Examining preverbal infants’ ability to map labels to facial configurations. Affective Science.

Russell, J. A. (1991). Culture and the categorization of emotions. Psychological Bulletin, 110(3), 426–450.

Satpute, A. B., & Lindquist, K. A. (in press). At the neural intersection of language and emotion. Affective Science.

Scherer, K. R. (1997). The role of culture in emotion-antecedent appraisal. Journal of Personality and Social Psychology, 73(5), 902–922.

Schwering, S. C., Ghaffari-Nikou, N., Zhao, F., Niedenthal, P. M., & MacDonald, M. C. (in press). Exploring the relationship between fiction reading and emotion recognition. Affective Science.

Shablack, H., & Lindquist, K. A. (2019). The role of language in emotional development. In Handbook of emotional development (pp. 451–478). Springer.

Torre, J. B., & Lieberman, M. D. (2018). Putting feelings into words: Affect labeling as implicit emotion regulation. Emotion Review, 10(2), 116–124.

Torrisi, S. J., Lieberman, M. D., Bookheimer, S. Y., & Altshuler, L. L. (2013). Advancing understanding of affect labeling with dynamic causal modeling. NeuroImage, 82, 481–488.

Tsai, J. L. (2007). Ideal affect: Cultural causes and behavioral consequences. Perspectives on Psychological Science, 2(3), 242–259.

Vives, M. L., Costumero, V., Avila, C., & Costa, A. (in press). Foreign language processing undermines affect labeling. Affective Science.

Widen, S. C., & Russell, J. A. (2003). A closer look at preschoolers’ freely produced labels for facial expressions. Developmental Psychology, 39(1), 114–127.

Widen, S. C., & Russell, J. A. (2008). Children acquire emotion categories gradually. Cognitive Development, 23(2), 291–312.

Wierzbicka, A. (1992). Semantics, culture, and cognition: Universal human concepts in culture-specific configurations. Oxford University Press.

Zhou, C., Dewaele, M., Ochs, M., & De Leersnyder, J. (in press). The role of language and cultural engagement in emotional fit with culture: An experiment comparing Chinese-English bilinguals to British and Chinese monolinguals. Affective Science.

Author information

Contributions

KAL wrote and prepared the manuscript.

Ethics declarations

Data Availability

There is no data associated with this manuscript.

Conflict of Interest

The corresponding author states there is no conflict of interest.

Ethical Approval

There are no studies associated with this manuscript and thus no preregistrations.

Informed Consent

Not applicable.

Additional information

Handling Editor: Wendy Berry Mendes

About this article

Cite this article

Lindquist, K.A. Language and Emotion: Introduction to the Special Issue. Affec Sci 2, 91–98 (2021). https://doi.org/10.1007/s42761-021-00049-7

DOI: https://doi.org/10.1007/s42761-021-00049-7