Abstract

This paper demonstrates the application of smoothed bootstrap methods and Efron’s methods for hypothesis testing on real-valued data, right-censored data and bivariate data. The tests include quartile hypothesis tests, two-sample median tests, and Pearson and Kendall correlation tests. Simulation studies indicate that the smoothed bootstrap methods outperform Efron’s methods in most scenarios, particularly for small datasets. The smoothed bootstrap methods provide smaller discrepancies between the actual and nominal error rates, which makes them more reliable for testing hypotheses.


1 Introduction

The bootstrap method, as introduced by Efron [13], is a nonparametric statistical method proposed to specify the variability of sample estimates. The method has been widely used in the literature for a variety of statistical problems [17] as it is easy to apply and overall provides good results. When the distribution is unknown, the bootstrap method could be of great practical use [10].

For univariate real-valued data, Efron [13] introduced the bootstrap method, which is used in many real-world applications; see Efron and Tibshirani [17], Davison and Hinkley [10] and Berrar [5] for more details. For an original data set of size n, bootstrap samples of size n are created by random sampling with replacement and then computing the function of interest based on each bootstrap sample. The empirical distribution of the results can be used as a proxy for the distribution of the function of interest. In the case of finite support, Banks [4] presented a smoothed bootstrap method by linear interpolation between consecutive observations. Banks’ bootstrap method starts with ordering the n observations of the original sample, where it is assumed that there are no ties, and taking the \(n+1\) intervals of the partition of the support created by the n ordered observations. Each interval is assigned probability \(\frac{1}{n+1}\). To generate one Banks’ bootstrap sample, n intervals are resampled, and then one observation is drawn uniformly from each chosen interval. With Banks’ bootstrap method, it is allowed to sample from the whole support, and ties occur with probability 0 in the bootstrap samples. This is contrary to Efron’s method, where the process is restricted to resampling from the original data set [13]. In the case of underlying distributions with infinite support, Coolen and BinHimd [8] generalised Banks’ bootstrap method by assuming distribution tail(s) for the first and last interval.

Efron [14] presented the bootstrap method for right-censored data, which is widely used in survival analysis; see Efron and Tibshirani [4, 16]. This bootstrap version is very similar to the method presented for univariate real-valued data: multiple bootstrap samples of size n are created by resampling from the original sample, and the function of interest is computed based on each bootstrap sample. The empirical distribution of the resulting values can be used as a good proxy for the distribution of the function of interest. Al Luhayb et al. [2] generalised Banks’ bootstrap method based on the right-censoring \(A_{(n)}\) assumption [9]. The generalised bootstrap method produced better results; see Al Luhayb [1] and Al Luhayb et al. [2] for more details.

Efron and Tibshirani [16] introduced the bootstrap method for bivariate data, where again, multiple bootstrap samples are generated by resampling from the original data set, and the function of interest is computed based on each bootstrap sample. The empirical distribution of the resulting values can be a good proxy for the distribution of the function of interest. However, Efron’s bootstrap method often produces poor results when working with small data sets. To address this issue, Al Luhayb et al. [3] proposed three new smoothed bootstrap methods. These methods rely on applying Nonparametric Predictive Inference on the marginals and modelling the dependence using parametric and nonparametric copulas. The new bootstrap methods have been shown to produce more accurate results. For further details, we refer the reader to Al Luhayb [1] and Al Luhayb et al. [3].

Classical statistical methods are widely used for testing statistical hypotheses, although their underlying assumptions are not always met, especially with complex data sets. To avoid these issues, Efron’s bootstrap method has been used to test statistical hypotheses [16, 23, 24]; it is easy to implement and provides good approximations. However, it may not be suitable for small data sets, and its bootstrap samples may include ties. To overcome these limitations, various smoothed bootstrap methods have been proposed by Banks [4], Al Luhayb et al. [2] and Al Luhayb et al. [3] for real-valued data, right-censored data, and bivariate data, respectively. This paper investigates the use of these bootstrap methods for hypothesis testing and compares their results with those of Efron’s methods.

This paper is organised as follows: Sect. 2 provides an overview of several bootstrap methods for real-valued univariate data, right-censored univariate data, and real-valued bivariate data. To illustrate their application, an example with data from the literature is presented in Sect. 3 using Efron’s and Banks’ bootstrap methods for hypothesis testing. Section 4 compares the smoothed bootstrap methods and Efron’s bootstrap methods through simulations in various hypothesis tests, such as quartile hypothesis tests, two-sample medians, Pearson and Kendall correlation tests. Firstly, the smoothed bootstrap methods and Efron’s bootstrap methods for real-valued univariate data and right-censored univariate data are used to compute the Type I error rates for quartile tests. Secondly, the achieved significance level is used to compute the Type I error rate for two-sample median tests. Lastly, for real-valued bivariate data, the smoothed bootstrap methods and Efron’s bootstrap method are compared in computing the Type I error rates for Pearson and Kendall correlation tests. The final section provides some concluding remarks.

2 Bootstrap Methods for Different Data Types

When it comes to real-world applications, using traditional statistical methods can be challenging due to the mathematical assumptions involved. However, the use of bootstrap methods can provide a computer-based way of conducting statistical inference that doesn’t require complex formulas. This paper demonstrates the use of different bootstrap methods for hypothesis testing. This section will provide an overview of multiple bootstrap methods that can be applied to real-valued data, right-censored data, and bivariate data.

2.1 Bootstrap Methods for Real-Valued Univariate Data

In this section, we will discuss two bootstrap methods for data that include only real-valued observations, namely Efron’s bootstrap method and Banks’ bootstrap method [4, 13]. These methods are used to measure the variability of sample estimates for a given function of interest \(\theta (F)\), where F is a continuous distribution defined on the interval [a, b]. Suppose we have n independent and identically distributed random quantities \(X_{1}, X_{2}, \ldots , X_{n}\) from the distribution F and the corresponding observations are \(x_{1}, x_{2}, \ldots , x_{n}\).

Efron’s bootstrap method [13] is a nonparametric method proposed to measure the variability of sample estimates. It uses the empirical distribution function of the original sample, where each observation has the same probability of being selected. To create B resamples of size n, we randomly select observations with replacement from the original sample. We then calculate the function of interest \(\hat{\theta }\) for each bootstrap sample to obtain \(\hat{\theta }_{1}, \hat{\theta }_{2}, \ldots , \hat{\theta }_{B}\). The empirical distribution of these results approximates the sampling distribution of \(\theta (F)\). Efron’s bootstrap method is commonly used for hypothesis testing and has been shown to provide reliable results [17].

Banks’ bootstrap method [4] is a smoothed bootstrap method for real-valued univariate data. The original data points are ordered as \(x_{(1)}, x_{(2)}, \ldots , x_{(n)}\), and the sample space [a, b] is divided into \(n+1\) intervals by the observations, where the end points \(x_{(0)}\) and \(x_{(n+1)}\) are equal to a and b, respectively. Each interval \((x_{(i)}, x_{(i+1)})\) for \(i= 0, 1, 2, \ldots , n\) is assigned a probability of \(\frac{1}{n+1}\). To create a bootstrap sample, we randomly select n intervals with replacement, and then sample one observation uniformly from each selected interval. Based on the bootstrap sample, we calculate the function of interest and repeat this process B times to obtain \(\hat{\theta }_{1}, \hat{\theta }_{2}, \ldots , \hat{\theta }_{B}\). The empirical distribution of these values approximates the sampling distribution of \(\theta (F)\). Banks’ bootstrap method is used for hypothesis testing in this paper and will be compared to Efron’s bootstrap method in Sect. 4.
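The two resampling schemes described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used for the simulations: NumPy is assumed, the function names `efron_sample`, `banks_sample` and `bootstrap_distribution` are hypothetical, and the support end points `a` and `b` have to be supplied by the user.

```python
import numpy as np

def efron_sample(x, rng):
    """Efron: draw n observations with replacement from the original data."""
    x = np.asarray(x, dtype=float)
    return rng.choice(x, size=x.size, replace=True)

def banks_sample(x, rng, a, b):
    """Banks: choose n of the n+1 intervals (probability 1/(n+1) each),
    then draw one value uniformly inside each chosen interval."""
    x = np.asarray(x, dtype=float)
    edges = np.concatenate(([a], np.sort(x), [b]))  # x_(0)=a, ..., x_(n+1)=b
    idx = rng.integers(0, x.size + 1, size=x.size)  # interval indices 0..n
    return rng.uniform(edges[idx], edges[idx + 1])

def bootstrap_distribution(x, stat, sampler, B=1000, seed=0, **kwargs):
    """Apply `stat` to B bootstrap samples and return the B values."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    return np.array([stat(sampler(x, rng, **kwargs)) for _ in range(B)])
```

For example, `bootstrap_distribution(x, np.median, banks_sample, a=0.0, b=1.0)` would return B smoothed-bootstrap medians for data supported on [0, 1].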

2.2 Bootstrap Methods for Right-Censored Univariate Data

This section presents Efron’s bootstrap method [14] and the smoothed bootstrap method for right-censored data [1, 2]. Let \(T_{1},T_{2},\ldots ,T_{n}\) be independent and identically distributed event random variables from a distribution F supported on \(\mathbb {R}^{+}\) and let \(C_{1},C_{2},\ldots ,C_{n}\) be independent and identically distributed censoring random variables from a distribution G supported on \(\mathbb {R}^{+}\). Furthermore, let \((X_{1}, D_{1}), (X_{2}, D_{2}), \ldots , (X_{n}, D_{n})\) be the right-censored random variables, where each pair can be derived by

$$\begin{aligned} X_{i}=\min (T_{i},C_{i}), \qquad D_{i}={\left\{ \begin{array}{ll} 1 &{} \text {if } T_{i}\le C_{i} \text { (event)},\\ 0 &{} \text {if } T_{i}>C_{i} \text { (right-censored)}, \end{array}\right. } \end{aligned}$$

where \(i= 1, 2, \ldots , n\). Let \((x_{1},d_{1}),(x_{2},d_{2}),\ldots ,(x_{n},d_{n})\) be the observations of the corresponding random quantities \((X_{1},D_{1}),(X_{2},D_{2}),\ldots ,(X_{n},D_{n})\), and let \(\theta (F)\) be the function of interest, which can be estimated by \(\theta (\hat{F})\).

Efron [14] proposed a nonparametric bootstrap method for data with right-censored observations. This method is similar to the one he proposed for real-valued data. In this method, the empirical distribution function of the original sample is used, so that each observation has an equal probability of \(\frac{1}{n}\), regardless of whether it is an event or a censored observation. To apply this method, B bootstrap samples of size n are generated by randomly selecting observations from the original dataset with replacement. The function of interest is then calculated based on each bootstrap sample. This process results in values \(\hat{\theta }_{1}, \hat{\theta }_{2}, \ldots , \hat{\theta }_{B}\), where the empirical distribution of these values can be a good estimate for the sampling distribution of \(\theta (F)\). This bootstrap method is useful for testing the equality of average lifetimes over two populations [25], and it has been shown to provide good results in multiple statistical inferences, see Efron [15], Efron and Tibshirani [16, 17] for more details.

Another method for right-censored data is the smoothed bootstrap method, introduced by Al Luhayb [1] and Al Luhayb et al. [2]. This method generalises Banks’ bootstrap method for right-censored data, and is based on the generalisation of the A\(_{(n)}\) assumption for data that contains right-censored observations, proposed by Coolen and Yan [9]. To implement this method, the data support is divided into \(n+1\) intervals by the original data, and the right-censored A\(_{(n)}\) assumption is used to assign specific probabilities to these intervals. For each bootstrap sample, n intervals are resampled with the assignment probabilities, and one observation is sampled from each interval. Performing these steps B times creates B bootstrap samples. Then, the function of interest is computed for each bootstrap sample, resulting in the values \(\hat{\theta }_{1}, \hat{\theta }_{2}, \ldots , \hat{\theta }_{B}\). The empirical distribution of these values is used to estimate the sampling distribution of \(\theta (F)\). In this paper, we use the smoothed bootstrap method for hypothesis testing and compare its performance to Efron’s bootstrap method, with the comparison results presented in Sect. 4.

2.3 Bootstrap Methods for Bivariate Data

In this section, we will discuss Efron’s bootstrap method [16] and three smoothed bootstrap methods for bivariate data [1, 3]. Let \((X_i, Y_i) \in \mathbb {R}^{2}\), for \(i= 1, 2, \ldots , n\), denote independent and identically distributed random variables with distribution H. The observations corresponding to \((X_i, Y_i)\) are \((x_i, y_i)\). We are interested in \(\theta {(H)}\), which is estimated by \(\theta {(\hat{H})}\). To implement the bootstrap, Efron and Tibshirani [16] used the empirical distribution. The bootstrap method involves creating B bootstrap samples of size n by resampling with equal probability from the observed pairs. The function of interest is calculated for each bootstrap sample, resulting in B values. The empirical distribution of these B values is used as a proxy for the distribution of the function of interest. This is the same approach as for univariate data. Several references use this bootstrap method for hypothesis testing; see, for example, Dolker et al. [11], MacKinnon [19] and Hesterberg [18].

In their recent work, Al Luhayb [1] and Al Luhayb et al. [3] proposed three smoothed bootstrap methods for estimating the distribution of a function of interest. The first, referred to as SBSP, is based on the semi-parametric predictive method proposed by Muhammad [20]. The second, referred to as SBNP, is based on the nonparametric predictive method introduced by Muhammad et al. [21]. These two methods divide the sample space into \((n+1)^2\) squares (hereafter blocks), each assigned a certain probability. The third method, the smoothed Efron’s bootstrap, referred to as SEB, is based on uniform kernels: each data point is placed at the centre of a block of size \(b_X \times b_Y\), where \(b_X\) and \(b_Y\) are the chosen kernel bandwidths. To create a bootstrap sample, n blocks are resampled according to their assigned probabilities, and one observation is sampled from each chosen block. This process is repeated B times, typically with \(B=1000\), and the function of interest is calculated for each bootstrap sample. The empirical distribution of the resulting B values is used to estimate the distribution of the function of interest.
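The uniform-kernel idea behind SEB can be sketched as follows. This is only an illustration under assumptions that are not stated in the text: each data point's block is taken to be chosen with equal probability 1/n, the bandwidths `b_x` and `b_y` are supplied by the user rather than selected by any rule, and the function name `seb_sample` is hypothetical. The SBSP and SBNP block probabilities are not reproduced here.

```python
import numpy as np

def seb_sample(x, y, b_x, b_y, rng):
    """One smoothed bootstrap sample from uniform blocks centred on the data.

    Assumption: every block is selected with probability 1/n.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    idx = rng.integers(0, n, size=n)  # choose n blocks with replacement
    # Draw one point uniformly inside each chosen b_x-by-b_y block.
    x_star = x[idx] + rng.uniform(-b_x / 2, b_x / 2, size=n)
    y_star = y[idx] + rng.uniform(-b_y / 2, b_y / 2, size=n)
    return x_star, y_star
```

Because the noise is continuous, ties occur with probability 0 in the resulting samples, in contrast to resampling the observed pairs directly.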

3 Example

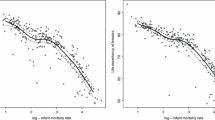

In this section, we explore an example using data from the literature on the maximum flow rates over a 100-year period at gauging stations on rivers in North Carolina [6]. The data are presented in Table 1 and show the maximum flow rates in gallons per second. Our goal is to test whether the median of the data is equal to 5400 gallons per second, using 90% bootstrap confidence intervals based on Efron’s bootstrap method and Banks’ bootstrap method.

To conduct the test, we first generate 1000 bootstrap data sets from the original data using each of the two bootstrap methods, resulting in 1000 bootstrap samples for each method. Then, we calculate the median for each bootstrap sample, and from the resulting values, we can define the 90% bootstrap confidence interval for the median by taking the 50th and 950th ordered values.

If the value 5400 is included in the confidence interval, we fail to reject the null hypothesis. Otherwise, we reject the null hypothesis. Table 2 presents the 90% confidence intervals for the median based on both Efron’s and Banks’ bootstrap methods. As the value 5400 falls within both confidence intervals, we fail to reject the null hypothesis.
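The percentile-interval test used in this example can be sketched as below. The sketch uses Efron’s resampling only and does not reproduce Table 1 or Table 2; the function name `bootstrap_median_test` and the default arguments are assumptions made for illustration. With `B=1000` and `alpha=0.05`, the interval end points are the 50th and 950th ordered bootstrap medians, as in the text.

```python
import numpy as np

def bootstrap_median_test(x, null_value, B=1000, alpha=0.05, seed=0):
    """Percentile bootstrap test of H0: median == null_value.

    Returns the 100(1 - 2*alpha)% interval and whether H0 is rejected.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    medians = np.sort([
        np.median(rng.choice(x, size=x.size, replace=True))
        for _ in range(B)
    ])
    lo_rank = max(round(alpha * B), 1)   # e.g. 50 for B=1000, alpha=0.05
    hi_rank = round((1 - alpha) * B)     # e.g. 950
    lo, hi = medians[lo_rank - 1], medians[hi_rank - 1]
    reject = not (lo <= null_value <= hi)
    return (lo, hi), reject
```

Replacing the `rng.choice` call by a smoothed sampler would give the corresponding test based on Banks’ method.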

4 Comparison of the Bootstrap Methods

Hypothesis tests based on the bootstrap method are a type of computer-based statistical technique. Thanks to recent advancements in computational power, these tests have become practical for real-world applications. The basic idea behind the bootstrap method is simple to understand and doesn’t rely on complex mathematical assumptions. In this section, we will conduct various tests for different types of data using the bootstrap methods explained in Sect. 2.

4.1 Hypothesis Tests for Quartiles

In this section, we calculate the Type I error rates of quartile hypothesis tests based on the bootstrap methods presented in Sect. 2.2. These methods are used when the data contain right-censored observations. To determine how well the bootstrap methods perform, we simulate datasets that include right-censored observations from two different scenarios. For the first scenario, we use the Beta distribution with shape parameters \(\alpha =1.2\) and \(\beta =3.2\) for the event times, and the Uniform distribution with parameters \(a=0\) and \(b=1.82\) for the censoring times. The second scenario is defined as \(T\sim \text {Log-Normal}(\mu =0,\sigma =1)\) and \(C\sim \text {Weibull}(\alpha =3, \beta =3.7)\), where \(\alpha \) is the shape parameter and \(\beta \) is the scale parameter (see Appendix). In both scenarios, the censoring proportion p in the generated datasets is \(15\%\), which is achieved by choosing the parameters of the censoring distribution. For more information on how to fix the censoring proportion, we refer the reader to Wan [26] and Al Luhayb [1].

To compare Efron’s bootstrap method with the smoothed bootstrap method, we generate \(N=1000\) datasets from each scenario. For each dataset, we apply each method \(B=1000\) times, resulting in 1000 bootstrap samples based on each method. We then compute the quartile of interest for each bootstrap sample and use the resulting values to define the \(100(1-2\alpha )\%\) bootstrap confidence interval for the quartile. We count one if the value of the quartile specified in the null hypothesis is not included in the confidence interval; otherwise, we count zero. We repeat this procedure for all \(N=1000\) generated datasets and compute the proportion of the 1000 trials in which the null hypothesis was rejected. This proportion is the Type I error rate of the quartile’s hypothesis test with significance level \(2\alpha \).

It is important to note that Efron’s bootstrap samples often include some censored observations, so we use the Kaplan–Meier (KM) estimator to find their corresponding quartiles. Suppose we are interested in the median; we should find a time t such that \(\hat{S}(t)=0.50\) in each bootstrap sample. Unfortunately, in some samples no such time exists, because the KM estimate \(\hat{S}(t)\) does not reach 0.50 at any observed time. For this case, we consider three options. The first option is to discard all bootstrap samples whose median is not available, so the \(100(1-2\alpha )\%\) bootstrap confidence interval for the median is based on fewer than 1000 bootstrap samples. This option is referred to as E\(_{(1)}\). The second option is to take the median to be the maximum event time of that bootstrap sample. This is Efron’s suggestion [12], which is applied to each bootstrap sample whose median is not found by the KM estimator. This option is referred to as E\(_{(2)}\). The third option is to fit an Exponential distribution whose survival function passes through \((t_{max}, \hat{S}(t_{max}))\), that is, with rate parameter \(\hat{\lambda }^{*}=-\ln (\hat{S}(t_{max}))/t_{max}\), where \(t_{max}\) is the maximum event time of the bootstrap sample and \(\hat{S}(.)\) is the KM estimator. This allows us to find the corresponding median, \(X_{med}\), with \(X_{med}=-\ln (0.50)/\hat{\lambda }^{*}\). This suggestion is presented in Brown et al. [7], and we refer to it as E\(_{(3)}\). In the last two cases, the confidence interval is always based on the medians of all 1000 bootstrap samples.
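The three options can be made concrete with a small Kaplan–Meier routine. The following is a minimal sketch, assuming NumPy and a sample that contains at least one event time; the function names `km_curve` and `km_median` and the small numerical tolerance are illustrative choices, not part of the original method description.

```python
import numpy as np

def km_curve(x, d):
    """Event times and the KM survival estimate just after each of them."""
    x = np.asarray(x, dtype=float)
    d = np.asarray(d, dtype=int)          # 1 = event, 0 = right-censored
    order = np.lexsort((1 - d, x))        # by time; events first at ties
    x, d = x[order], d[order]
    at_risk = x.size - np.arange(x.size)
    factors = np.where(d == 1, 1.0 - 1.0 / at_risk, 1.0)
    surv = np.cumprod(factors)
    return x[d == 1], surv[d == 1]

def km_median(x, d, option="E2"):
    """KM median with the fallbacks E1, E2 and E3 described in the text."""
    t, s = km_curve(x, d)
    if t.size == 0:
        raise ValueError("sample contains no event times")
    below = np.nonzero(s <= 0.5 + 1e-12)[0]   # tolerance for rounding error
    if below.size > 0:
        return t[below[0]]                    # median found by the KM curve
    t_max, s_max = t[-1], s[-1]
    if option == "E1":
        return np.nan                         # to be discarded by the caller
    if option == "E2":
        return t_max                          # maximum event time
    if option == "E3":
        lam = -np.log(s_max) / t_max          # exponential tail rate
        return -np.log(0.5) / lam
    raise ValueError("option must be 'E1', 'E2' or 'E3'")
```

With option E1 the caller would drop the `nan` values before forming the confidence interval, which is why that interval can rest on fewer than B medians.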

In the tables, NA represents the number of Efron’s bootstrap samples in which the quartile cannot be found, while ABS represents the number of cases in which a bootstrap sample containing only right-censored observations is replaced by another sample that includes at least one event time. Both numbers are out of the \(N\times B=1{,}000{,}000\) bootstrap samples generated in total.

We consider three different strategies for the smoothed bootstrap method when sampling observations from the \(n+1\) intervals partitioning the sample space. The first strategy is to sample uniformly from all intervals, denoted by SB. The second strategy is to assume an exponential tail for each interval and sample from the tails to create the bootstrap samples, denoted by SB\(_{\text {exp}}\). The third strategy is to sample uniformly from all intervals except the last intervals, for which we sample from the exponential tails. We refer to this strategy as SB\(_{\text {Lexp}}\). By investigating how the sampling strategies affect the results, we can gain insight into the impact of different sampling methods on the smoothed bootstrap method.

Tables 3 and 4 show the results of the Type I error rates for the quartiles’ hypothesis tests with significance levels 0.10 and 0.05 for simulated data sets in the first scenario. When the sample size is 10, the smoothed bootstrap with its three assumptions, SB, SB\(_{\text {exp}}\) and SB\(_{\text {Lexp}}\), provides lower discrepancies between actual and nominal error rates for all quartiles’ tests compared to Efron’s bootstrap with its three assumptions, E\(_{(1)}\), E\(_{(2)}\) and E\(_{(3)}\). The superiority of the smoothed bootstrap methods is due not only to the event observations obtained for the smoothed bootstrap samples, but also to the fact that the KM estimator used in Efron’s bootstrap samples is often not able to find the quartiles, particularly the second and third ones. In 1,000,000 bootstrap samples, we cannot find the first, second and third quartiles in 228, 3736 and 32,821 bootstrap samples, respectively. As the sample size increases to 50, 100 and 500, both methods provide good results, but Efron’s method is better, and the number of NA and ABS decreases toward zero. These decreases lead to equal results when E\(_{(1)}\), E\(_{(2)}\) and E\(_{(3)}\) are used. Also, at these large sample sizes, SB, SB\(_{\text {exp}}\) and SB\(_{\text {Lexp}}\) provide approximately equal outcomes.

In the second scenario, we should note that the data space is \((0,\infty )\), which is different from the first scenario where the support is (0, 1), so the last intervals for the smoothed method are not bounded. In this case, we can only use smoothed bootstrap assumptions SB\(_{\text {exp}}\) and SB\(_{\text {Lexp}}\), not SB. Tables 5 and 6 present the results of Type I error rates for the quartiles’ hypothesis tests with significance levels of 0.10 and 0.05, respectively. The SB\(_{\text {exp}}\) and SB\(_{\text {Lexp}}\) methods again outperform Efron’s method in defining the Type I error rates when the sample size is small. As the sample size gets large, both methods perform well, as observed in Tables 3 and 4.

In a special case where data includes only failures, with no censored observations, we will use Banks’ bootstrap method and Efron’s bootstrap method, which are presented in Sect. 2.1, to compute the Type I error rates for the quartiles’ hypothesis tests. In the simulations, we use \(\text {Beta}(\alpha =1.2,\beta =3.2)\) to create data sets and repeat the same comparison procedure as in the previous simulations. Tables 7 and 8 present the Type I error rates for the quartiles’ hypothesis tests based on Banks’ and Efron’s methods with significance levels of 0.10 and 0.05, respectively. Banks’ bootstrap method performs better, particularly when \(n=10\) and \(2\alpha =0.05\). As the sample size gets large, both methods perform well.
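The simulation loop for the uncensored case can be sketched as follows. This is an illustration only: the helper names are hypothetical, `np.quantile` with its default interpolation is an assumption about how the sample quartile is computed, and the true quartile under the null hypothesis must be supplied by the caller (for example from a statistical library). A full run with `N=1000` and `B=1000` requires one million bootstrap samples per method and sample size.

```python
import numpy as np

def efron_sample(x, rng):
    return rng.choice(x, size=x.size, replace=True)

def banks_sample(x, rng, a=0.0, b=1.0):
    # Intervals between ordered observations on the support [a, b].
    edges = np.concatenate(([a], np.sort(x), [b]))
    idx = rng.integers(0, x.size + 1, size=x.size)
    return rng.uniform(edges[idx], edges[idx + 1])

def draw_beta(rng, n):
    # Data-generating distribution used in the text: Beta(1.2, 3.2).
    return rng.beta(1.2, 3.2, size=n)

def type_i_error(draw_data, true_value, q, sampler, n,
                 N=1000, B=1000, alpha=0.05, seed=0):
    """Proportion of N simulated data sets whose 100(1 - 2*alpha)%
    percentile interval for the q-th quantile excludes `true_value`."""
    rng = np.random.default_rng(seed)
    lo_rank = max(round(alpha * B), 1)
    hi_rank = round((1 - alpha) * B)
    rejections = 0
    for _ in range(N):
        x = draw_data(rng, n)
        stats = np.sort([np.quantile(sampler(x, rng), q) for _ in range(B)])
        lo, hi = stats[lo_rank - 1], stats[hi_rank - 1]
        if not (lo <= true_value <= hi):
            rejections += 1
    return rejections / N
```

Calling `type_i_error(draw_beta, true_median, 0.5, banks_sample, n=10)` and the same with `efron_sample` would give the two error rates to compare against the nominal level \(2\alpha \).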

4.2 The Two-Sample Problem

When conducting a hypothesis test \(H_{0}: \theta _{1}=\theta _{2}\) against \(H_1: \theta _1 \ne \theta _2\), where \(\theta _{1}\) and \(\theta _{2}\) represent the function of interest in the first and second populations respectively, the achieved significance level (ASL) is used to draw a conclusion. The ASL is defined as the probability, when the null hypothesis is true, of observing a value at least as large as \(\hat{\theta }=\hat{\theta }_{1}-\hat{\theta }_{2}\),

$$\begin{aligned} ASL=\text {Prob}_{H_{0}}\{\hat{\theta }^{*}\ge \hat{\theta }\}. \end{aligned}$$

The smaller the value of ASL, the stronger the evidence against \(H_{0}\). The value \(\hat{\theta }\) is fixed at its observed value, and the quantity \(\hat{\theta }^{*}\) has the null hypothesis distribution, which is the distribution of \(\hat{\theta }\) if \(H_{0}\) is true [17].

Efron and Tibshirani [17] used the achieved significance level to test whether two populations have equal means. Suppose we have two samples \({\textbf {z}}=\{z_{1},z_{2},\ldots ,z_{n}\}\) and \({\textbf {y}}=\{y_{1},y_{2},\ldots ,y_{m}\}\) from possibly different probability distributions, and we wish to test the null hypothesis \(H_{0}: \theta _{1}=\theta _{2}\). Efron’s bootstrap method is used to approximate the ASL value, and \(H_{0}\) is rejected when \(\widehat{ASL}<2\alpha \). The algorithm to test the null hypothesis based on the bootstrap methods is as follows:

- (i) Combine the samples \({\textbf {z}}\) and \({\textbf {y}}\) into a single sample \({\textbf {x}}\) of size \(n+m\). Thus, \({\textbf {x}}=\{z_{1},z_{2},\ldots ,z_{n},y_{1},y_{2},\ldots ,y_{m}\}\).

- (ii) Draw B bootstrap samples of size \(n+m\) with replacement from \({\textbf {x}}\), and call the first n observations \({\textbf {z}}^{*b}\) and the remaining m observations \({\textbf {y}}^{*b}\) for \(b=1,2,\ldots ,B\).

- (iii) For each bootstrap sample, compute the means of \({\textbf {z}}^{*b}\) and \({\textbf {y}}^{*b}\), then find \(A^{*b}=\overline{{\textbf {z}}}^{*b}-\overline{{\textbf {y}}}^{*b}\), \(b=1,2,\ldots ,B\).

- (iv) Approximate the achieved significance level ASL by

  $$\begin{aligned} \widehat{ASL}=\frac{\sum _{b=1}^{B}I\{A^{*b}\ge A_{obs}\}}{B} \end{aligned}$$ (4)

  where \(I\{\cdot \}\) is the indicator function, \(A_{obs}=\overline{{\textbf {z}}}-\overline{{\textbf {y}}}\), and \(\overline{{\textbf {z}}}\) and \(\overline{{\textbf {y}}}\) are the sample means of the two original samples.
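Steps (i) to (iv) can be sketched in Python as below. This is a minimal illustration with Efron’s resampling, assuming NumPy; the function name `bootstrap_asl` and the `stat` argument (which allows the mean to be swapped for another statistic such as the median) are hypothetical additions.

```python
import numpy as np

def bootstrap_asl(z, y, stat=np.mean, B=1000, seed=0):
    """Approximate the achieved significance level for H0: theta_1 = theta_2."""
    rng = np.random.default_rng(seed)
    z = np.asarray(z, dtype=float)
    y = np.asarray(y, dtype=float)
    n = z.size
    x = np.concatenate([z, y])            # step (i): pooled sample
    a_obs = stat(z) - stat(y)
    count = 0
    for _ in range(B):
        xb = rng.choice(x, size=x.size, replace=True)   # step (ii)
        a_star = stat(xb[:n]) - stat(xb[n:])            # step (iii)
        if a_star >= a_obs:
            count += 1
    return count / B                      # step (iv)
```

The null hypothesis would then be rejected when the returned value is smaller than \(2\alpha \), as stated above.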

We employ this strategy to test whether two populations have the same median (\(Q_{2}^{1}=Q_{2}^{2}\)) or not. To conduct these tests, we use the bootstrap methods presented in Sect. 2.2 and compare them through simulations. Specifically, we calculate the Type I error rate for the following hypothesis test:

$$\begin{aligned} H_{0}: Q_{2}^{1}=Q_{2}^{2} \quad \text {versus} \quad H_{1}: Q_{2}^{1}\ne Q_{2}^{2}. \end{aligned}$$ (5)

To compare the bootstrap methods through simulation, we first generate two datasets of size n using the second scenario proposed in Sect. 4.1. We compute the medians of these datasets, \(\hat{Q}_{2}^{1}\) and \(\hat{Q}_{2}^{2}\), and calculate \(A_{obs}=\hat{Q}_{2}^{1}-\hat{Q}_{2}^{2}\). Next, we combine the two datasets into a new dataset of size 2n. Then, for each bootstrap method, we draw \(B=1000\) samples of size 2n, and call the first n observations \({\textbf {z}}^{*b}\) and the remaining n observations \({\textbf {y}}^{*b}\) for \(b=1,2,\ldots ,B\). We compute \(A^{*b}=\hat{Q}_{2}({\textbf {z}}^{*b})-\hat{Q}_{2}({\textbf {y}}^{*b})\) for each bootstrap sample, resulting in 1000 \(A^{*}\) values. Finally, we calculate the ASL value and reject \(H_{0}\) if \(\widehat{ASL}<2\alpha \). We repeat this process for \(N=1000\) pairs of generated datasets and compute the proportion of the 1000 trials in which the null hypothesis is rejected. The method whose rejection proportion is closest to \(2\alpha \) is considered the best. The final results of the simulations are presented in Tables 9 and 10 for two different significance levels.

As the sample space of the underlying distribution is \([0,\infty )\), we only consider SB\(_{\text {exp}}\) and SB\(_{\text {Lexp}}\) for the smoothed bootstrap method. For Efron’s method, we consider E\(_{(2)}\) and E\(_{(3)}\) as they are guaranteed to find the median of each set in each bootstrap sample. Tables 9 and 10 present the Type I error rates of the hypothesis test in Equation (5) with significance levels of 0.10 and 0.05, respectively. The SB\(_{\text {exp}}\) and SB\(_{\text {Lexp}}\) methods generally provide lower actual Type I error rates compared to E\(_{(2)}\) and E\(_{(3)}\) at different sample sizes. However, E\(_{(2)}\) and E\(_{(3)}\) provide smaller discrepancies between the actual and nominal Type I error levels, especially when the sample size is small. When \(n=500\), all methods provide almost identical results.

In previous simulations, we created both samples in each run from a single scenario, but now we want to create samples from two different scenarios. In each run, the first sample is created from \(T\sim \text {Log-Normal}(\mu =0,\sigma =1)\) and \(C\sim \text {Weibull}(\alpha =3,\beta =3.7)\), while the second sample is created from \(T\sim \text {Weibull}(\alpha =1,\beta =1.443)\) and \(C\sim \text {Exponential}(\lambda =0.12)\), where \(p=0.15\) in both scenarios (see Appendix). We aim to investigate how the bootstrap methods perform when the two samples have different distributions but the same median (which is equal to 1). Tables 11 and 12 show the Type I error rates with significance levels of 0.10 and 0.05, respectively. All methods perform well at different sample sizes, and the results are close to the nominal size \(2\alpha \), particularly when the sample size is large.
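As a quick check that both event-time distributions have median 1, the closed-form medians can be evaluated directly. The expressions below are the standard medians of the Log-Normal and Weibull distributions, with \(\alpha \) the shape and \(\beta \) the scale parameter as in the text; they are given here for illustration and are not taken from the Appendix.

$$\begin{aligned} \text {median}_{\text {Log-Normal}}&=e^{\mu }=e^{0}=1,\\ \text {median}_{\text {Weibull}}&=\beta (\ln 2)^{1/\alpha }=1.443\times 0.6931\approx 1.000. \end{aligned}$$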

4.3 Pearson Correlation Test

In this section, we compare the smoothed bootstrap methods presented in Sect. 2.3 with Efron’s method. We compute the Type I error rate of each method, and a method is considered superior if its Type I error rate is closer to the significance level \(2\alpha \). We simulate data sets from two different scenarios. In the first scenario, we generate data sets from the Gumbel copula, where the marginals X and Y both follow the standard uniform distribution. In the second scenario, we use the Clayton copula, where X follows the normal distribution with mean 1 and standard deviation 1, and Y follows the normal distribution with mean 5 and standard deviation 3. For both scenarios, we consider three levels of the dependence \(\rho \), three sample sizes and two significance levels. We also report the dependence parameters of the copulas and their concordance measure, Kendall’s \(\tau \). The cumulative distribution functions of the Gumbel copula and the Clayton copula are, respectively, given by [22]

$$\begin{aligned} C_{\delta }^{G}(u,v)=\exp \left( -\left[ (-\ln u)^{\delta }+(-\ln v)^{\delta }\right] ^{1/\delta }\right) , \quad \delta \ge 1, \end{aligned}$$

$$\begin{aligned} C_{\delta }^{C}(u,v)=\left( u^{-\delta }+v^{-\delta }-1\right) ^{-1/\delta }, \quad \delta >0, \end{aligned}$$

where \(\delta \) denotes the dependence parameter of the copula and all marginals are uniformly distributed on [0,1].

To compute the Type I error rate for the null hypothesis of \(\rho =\rho ^{\star }\) based on a bootstrap method, we create \(N=1000\) data sets with sample size n and dependence level \(\rho =\rho ^{\star }\) from one of the scenarios presented above. For each generated data set, we apply each bootstrap method \(B=1000\) times and compute the Pearson correlation of each bootstrap sample. We order the 1000 bootstrapped Pearson correlation values from lowest to highest and obtain the \(100(1-2\alpha )\%\) bootstrap confidence interval. If the null hypothesis value is not included in the confidence interval, we reject \(H_{0}\) and count 1; otherwise, we do not reject \(H_{0}\) and count 0. The proportion of the 1000 trials in which the null hypothesis was rejected is the Type I error rate.
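A single trial of this procedure, using Efron’s resampling of the observed pairs, can be sketched as follows. The sketch assumes NumPy; the function name `pearson_bootstrap_test` is hypothetical, and the copula-based data generation and the smoothed methods are not reproduced here.

```python
import numpy as np

def pearson_bootstrap_test(x, y, rho0, B=1000, alpha=0.05, seed=0):
    """Percentile bootstrap test of H0: rho == rho0 for paired data.

    Returns the 100(1 - 2*alpha)% interval and whether H0 is rejected.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    r = np.empty(B)
    for b in range(B):
        idx = rng.integers(0, n, size=n)      # resample pairs jointly
        # Note: a resample with all-identical x or y values gives nan;
        # this is very unlikely but possible for very small n.
        r[b] = np.corrcoef(x[idx], y[idx])[0, 1]
    r.sort()
    lo = r[max(round(alpha * B), 1) - 1]
    hi = r[round((1 - alpha) * B) - 1]
    reject = not (lo <= rho0 <= hi)
    return (lo, hi), reject
```

Averaging the `reject` indicator over the N simulated data sets gives the estimated Type I error rate for the chosen method.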

Table 13 presents the Type I error rates based on the bootstrap methods, where the significance level is 0.10. For a small sample size of \(n=10\), the SBSP and SBNP methods provide error rates closer to the nominal rate of 0.10 compared to Efron’s and the smoothed Efron’s methods. Of the two, the SBNP method is the best when \(\rho = 0.4\) and 0.8. When n increases to 50 and 100, the discrepancies between the actual and nominal error rates decrease for all methods, but the SBNP method is the superior one in most cases.

With a significance level of 0.05, the actual Type I error rates based on the bootstrap methods are listed in Table 14. The SBSP and SBNP methods again provide lower discrepancies between the nominal and actual Type I error rates compared to Efron’s and the smoothed Efron’s methods, especially when \(n=10\). When the sample size increases to 50 and 100, all methods perform better, but the SBNP method is the best one in most settings.

In the second scenario, we simulate \(N=1000\) data sets with dependence level \(\rho =\rho ^{\star }\), and we compute Type I error rates using the bootstrap methods as shown in Tables 15 and 16. For \(n=10\), the SBSP method provides the closest results to the nominal error rates at most levels of \(\rho \). As n increases to 50 and 100, its performance worsens for \(H_{0}: \rho =0.8\) because the underlying distribution is not symmetric. At these larger sample sizes, the SBNP, Efron’s and SEB methods perform better than the SBSP method, particularly the SBNP method. The SBNP method provides the lowest discrepancies between the nominal and actual error rates in most cases, at both significance levels of 0.10 and 0.05; however, when \(n=10\) and \(\rho =0\) or 0.4, the SBNP method provides very small error rates.

4.4 Kendall Correlation Test

In Sect. 4.3, we computed the Type I error rate for the Pearson correlation test using different sample sizes and dependence levels. In this section, we repeat the same comparisons for the Kendall correlation test. We use the same scenarios, generating data sets with \(n=10, 50\) and 100, and dependence levels of \(\tau =0, 0.4\) and 0.8, with significance levels of 0.10 and 0.05.

In the first scenario, we generate data sets from the Gumbel copula, where both marginals follow Uniform(0,1), and apply the bootstrap methods. From Tables 17 and 18, we can see that the SBSP method performs well when \(\tau =0\) across all sample sizes. However, it performs poorly as the sample size increases for \(\tau =0.4\) and 0.8. This is in contrast to the results based on the SBNP, Efron’s and smoothed Efron’s methods. These methods provide lower error rates than the nominal levels when the sample size is small at all dependence levels. As n increases to 50 and 100, the error rates become closer to the nominal level \(2\alpha \).

Tables 19 and 20 present the Type I error rates for the Kendall correlation test at different dependence levels with significance levels of 0.10 and 0.05, respectively. When \(\tau =0\) and \(n=10\), the error rate based on the SBNP method is considerably lower than the nominal level \(2\alpha \), while the results of the other methods are close to the nominal levels. As the sample size increases to 50 and 100, all methods provide good results. If there is a strong relation between the variables, it is recommended to use either Efron’s bootstrap method or the SEB method. Both methods produce good results because they have much less effect than the SBSP and SBNP methods on the ranks of the observations, which are the basis for computing the Kendall correlation.
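To illustrate how data with a prescribed Kendall’s \(\tau \) can be generated, the following Python sketch samples from a Clayton copula with normal marginals (mean 1 and standard deviation 1 for X, mean 5 and standard deviation 3 for Y). It is a sketch under stated assumptions, not the code used for the tables: it relies on the standard relation \(\tau =\theta /(\theta +2)\) for the Clayton copula with parameter \(\theta \), uses conditional inversion for sampling, and the names `clayton_theta` and `sample_clayton_normal` are hypothetical.

```python
import numpy as np
from scipy import stats


def clayton_theta(tau):
    """Clayton parameter for a given Kendall's tau, from tau = theta / (theta + 2)."""
    return 2.0 * tau / (1.0 - tau)


def sample_clayton_normal(n, tau, rng):
    """Draw n pairs from a Clayton copula with normal marginals.

    Assumption: conditional inversion, i.e. V is obtained by inverting the
    conditional distribution of V given U at an independent uniform W.
    """
    theta = clayton_theta(tau)
    u = rng.uniform(size=n)
    w = rng.uniform(size=n)
    if theta == 0.0:
        v = w  # tau = 0 is the independence limit of the Clayton copula
    else:
        v = (u ** (-theta) * (w ** (-theta / (1.0 + theta)) - 1.0) + 1.0) ** (-1.0 / theta)
    # Transform the uniform marginals to the normal marginals of the scenario.
    x = stats.norm.ppf(u, loc=1.0, scale=1.0)
    y = stats.norm.ppf(v, loc=5.0, scale=3.0)
    return x, y


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    for tau in (0.0, 0.4, 0.8):
        x, y = sample_clayton_normal(2000, tau, rng)
        tau_hat, _ = stats.kendalltau(x, y)
        print(tau, round(tau_hat, 3))
```

Because Kendall’s \(\tau \) depends only on ranks, the monotone transformation to normal marginals leaves it unchanged, so the sample value should be close to the target \(\tau \) for large n.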

5 Concluding Remarks

In this paper, we explored how the proposed smoothed bootstrap methods can be used to compute Type I error rates for different hypothesis tests, and we compared their results to those of Efron’s bootstrap methods through simulations. The smoothed bootstrap methods are applied to real-valued data, right-censored data and bivariate data. For real-valued data and right-censored data, we test the null hypothesis that quartiles are equal to those of the underlying distributions. We also test whether two sample medians are equal, regardless of whether the two samples are from the same underlying distribution or not. For bivariate data, we compute the Type I error rates for Pearson and Kendall correlation tests.

We found that the smoothed bootstrap methods perform better when the sample size is small for real-valued and right-censored data, providing lower discrepancies between actual and nominal error rates. As the sample size gets larger, all bootstrap methods provide good results, but Efron’s methods mostly perform better for the third quartile. For the two-sample median test, we use the achieved significance level to test whether the two samples have equal medians or not. All bootstrap methods performed well, and the Type I error rates are close to the nominal levels.

For the Pearson correlation test, the SBSP and SBNP methods lead to lower discrepancies between actual and nominal Type I error rates compared to Efron’s and smoothed Efron’s methods when the sample size is small. For large sample sizes, all methods provide good results, but the SBNP method performs better at most dependence levels. In situations where the data distribution is asymmetric, the SBSP method does not perform well, particularly when \(\tau \) is not close to zero, which results from the Normal copula assumption.

For the Kendall correlation test, it is recommended to use either Efron’s bootstrap method or the SEB method, particularly when the underlying distribution is asymmetric and has a strong Kendall correlation. Their influence on the ranks of the observations is much smaller than that of the SBSP and SBNP methods. When \(\tau =0\) and the sample size is small, all bootstrap methods perform well, and as n gets large, their performances improve and the Type I error rates become closer to the nominal level \(2\alpha \).

In conclusion, we used the bootstrap methods for real-valued data, right-censored data and bivariate data to compute Type I error rates for different hypothesis tests. Future research could focus on applying these bootstrap methods to compute power or Type II error rates for some hypothesis tests.

References

1. Al Luhayb ASM (2021) Smoothed bootstrap methods for right-censored data and bivariate data. PhD thesis, Durham University. http://etheses.dur.ac.uk/14096

2. Al Luhayb ASM, Coolen FPA, Coolen-Maturi T (2023) Smoothed bootstrap for right-censored data. Commun Stat Theory Methods. https://doi.org/10.1080/03610926.2023.2171708

3. Al Luhayb ASM, Coolen-Maturi T, Coolen FPA (2023) Smoothed bootstrap methods for bivariate data. J Stat Theory Pract 17(3):1–37. https://doi.org/10.1007/s42519-023-00334-7

4. Banks DL (1988) Histospline smoothing the Bayesian bootstrap. Biometrika 75:673–684

5. Berrar D (2019) Introduction to the non-parametric bootstrap. In: Encyclopedia of bioinformatics and computational biology. Academic Press, Oxford, pp 766–773

6. Boos DD (2003) Introduction to the bootstrap world. Stat Sci 18(2):168–174

7. Brown BW, Hollander M, Korwar RM (1974) Nonparametric tests of independence for censored data with applications to heart transplant studies. In: Proschan F, Serfling RJ (eds) Reliability and biometry. SIAM, Philadelphia, pp 327–354

8. Coolen FPA, BinHimd S (2020) Nonparametric predictive inference bootstrap with application to reproducibility of the two-sample Kolmogorov–Smirnov test. J Stat Theory Pract 14:1–13

9. Coolen FPA, Yan KJ (2004) Nonparametric predictive inference with right-censored data. J Stat Plan Inference 126:25–54

10. Davison AC, Hinkley DV (1997) Bootstrap methods and their application. Cambridge University Press, Cambridge

11. Dolker M, Halperin S, Divgi DR (1982) Problems with bootstrapping Pearson correlations in very small bivariate samples. Psychometrika 47(4):529–530

12. Efron B (1967) The two-sample problem with censored data. In: Proceedings of the fifth Berkeley symposium on mathematical statistics and probability, vol 4. University of California Press, Berkeley, pp 831–853

13. Efron B (1979) Bootstrap methods: another look at the jackknife. Ann Stat 7:1–26

14. Efron B (1981) Censored data and the bootstrap. J Am Stat Assoc 76:312–319

15. Efron B (1982) The jackknife, the bootstrap, and other resampling plans, vol 38. Society for Industrial and Applied Mathematics, Philadelphia

16. Efron B, Tibshirani R (1986) Bootstrap methods for standard errors, confidence intervals, and other measures of statistical accuracy. Stat Sci 1:54–77

17. Efron B, Tibshirani RJ (1993) An introduction to the bootstrap. Chapman & Hall, London

18. Hesterberg T (2011) Bootstrap. Wiley Interdiscip Rev Comput Stat 3(6):497–526

19. MacKinnon JG (2009) Bootstrap hypothesis testing. In: Handbook of computational econometrics, pp 183–213

20. Muhammad N (2016) Predictive inference with copulas for bivariate data. PhD thesis, Durham University, UK

21. Muhammad N, Coolen FPA, Coolen-Maturi T (2016) Predictive inference for bivariate data with nonparametric copula. Am Inst Phys AIP Conf Proc 1750(1):0600041–0600048. https://doi.org/10.1063/1.4954609

22. Muhammad N, Coolen-Maturi T, Coolen FPA (2018) Nonparametric predictive inference with parametric copulas for combining bivariate diagnostic tests. Stat Optim Inf Comput 6(3):398–408

23. Rasmussen JL (1987) Estimating correlation coefficients: bootstrap and parametric approaches. Psychol Bull 101(1):136–139

24. Strube MJ (1988) Bootstrap type I error rates for the correlation coefficient: an examination of alternate procedures. Psychol Bull 104(2):290–292

25. Vaman H, Tattar P (2022) Survival analysis. Chemical Rubber Company Press, Boca Raton

26. Wan F (2017) Simulating survival data with predefined censoring rates for proportional hazards models. Stat Med 36:721–880

Acknowledgements

Asamh Al Luhayb was a PhD student at Durham University, supported by a scholarship from the Deanship of Scientific Research at Qassim University. During his studies, he worked under the supervision of Prof. Frank Coolen and Dr. Tahani Coolen-Maturi.

Ethics declarations

Conflicts of interest

The author states that there is no conflict of interest.

Appendix

The probability density functions of the distributions used in each scenario to generate right-censored data are as follows.

Scenario 1:

Beta distribution for event times:

\(f(t)=\frac{t^{\alpha -1} (1-t)^{\beta -1}}{B(\alpha ,\beta )}; \ t\in [0,1]\) where \(B(\alpha ,\beta )\) is the beta function, \(\alpha =1.2\) and \(\beta =3.2\).

Uniform distribution for censoring times:

\(g(c)=\frac{1}{b-a}; \ c\in [a,b]\) where \(a=0\) and \(b=1.82\).

Scenario 2:

Log-Normal distribution for event times:

\(f(t)=\dfrac{1}{t\sqrt{2\pi }} \exp (-\frac{(\ln (t))^2}{2}); \ t\in (0,\infty )\).

Weibull distribution for censoring times:

\(g(c)=\frac{\alpha }{\beta } (\frac{c}{\beta })^{\alpha -1} \exp (-(\frac{c}{\beta })^{\alpha }); \ c\in [0,\infty )\) where \(\alpha =3\) and \(\beta =3.7\).

Scenario 3:

Weibull distribution for event times:

\(f(t)=\frac{\alpha }{\beta } (\frac{t}{\beta })^{\alpha -1} \exp (-(\frac{t}{\beta })^{\alpha }); \ t\in [0,\infty )\) where \(\alpha =1\) and \(\beta =1.443\).

Exponential distribution for censoring times:

\(g(c)=\lambda \exp (-\lambda c); \ c\in [0,\infty )\) where \(\lambda =0.12\).
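The three scenarios above can be simulated with the following Python sketch. It assumes, as is standard but not stated in this appendix, that event and censoring times are drawn independently, that the observed time is the minimum of the two, and that an event indicator of 1 marks an uncensored observation. The function names `right_censor` and `simulate_scenario` are hypothetical.

```python
import numpy as np


def right_censor(event, censor):
    """Observed times min(T, C) and event indicators 1{T <= C}."""
    observed = np.minimum(event, censor)
    delta = (event <= censor).astype(int)
    return observed, delta


def simulate_scenario(scenario, n, rng):
    """Generate n right-censored observations for Scenario 1, 2 or 3."""
    if scenario == 1:
        event = rng.beta(1.2, 3.2, size=n)                   # Beta(1.2, 3.2)
        censor = rng.uniform(0.0, 1.82, size=n)              # Uniform(0, 1.82)
    elif scenario == 2:
        event = rng.lognormal(mean=0.0, sigma=1.0, size=n)   # Log-Normal(0, 1)
        censor = 3.7 * rng.weibull(3.0, size=n)              # Weibull, shape 3, scale 3.7
    elif scenario == 3:
        event = 1.443 * rng.weibull(1.0, size=n)             # Weibull, shape 1, scale 1.443
        censor = rng.exponential(scale=1.0 / 0.12, size=n)   # Exponential, rate 0.12
    else:
        raise ValueError("scenario must be 1, 2 or 3")
    return right_censor(event, censor)


if __name__ == "__main__":
    rng = np.random.default_rng(2024)
    for s in (1, 2, 3):
        t, d = simulate_scenario(s, 1000, rng)
        # Report the empirical proportion of censored observations.
        print(s, round(1.0 - d.mean(), 3))
```

NumPy’s `weibull` draws from the standard Weibull distribution with scale 1, so the scale \(\beta \) is applied by multiplication, and `exponential` is parameterised by the scale \(1/\lambda \).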

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Al Luhayb, A.S.M., Coolen-Maturi, T. & Coolen, F.P.A. Smoothed Bootstrap Methods for Hypothesis Testing. J Stat Theory Pract 18, 16 (2024). https://doi.org/10.1007/s42519-024-00370-x
