Abstract

Probabilistic language models, e.g. those based on recurrent neural networks such as long short-term memory models (LSTMs), often face the problem of finding a high probability prediction from a sequence of random variables over a set of tokens. This is commonly addressed using a form of greedy decoding such as beam search, where a limited number of highest-likelihood paths (the beam width) of the decoder are kept, and at the end the maximum-likelihood path is chosen. In this work, we construct a quantum algorithm to find the globally optimal parse (i.e. for infinite beam width) with high constant success probability. When the input to the decoder follows a power law with exponent k > 0, our algorithm has runtime Rnf(R, k), where R is the alphabet size, n the input length; here f < 1/2, and \(f\rightarrow 0\) exponentially fast with increasing k, hence making our algorithm always more than quadratically faster than its classical counterpart. We further modify our procedure to recover a finite beam width variant, which enables an even stronger empirical speedup while still retaining higher accuracy than possible classically. Finally, we apply this quantum beam search decoder to Mozilla’s implementation of Baidu’s DeepSpeech neural net, which we show to exhibit such a power law word rank frequency.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

A recurring task in the context of parsing and neural sequence to sequence models—such as machine translation (Ilya et al. 2011; Sutskever et al. 2014), natural language processing (Schmidhuber 2014) and generative models (Graves 2013)—is to find an optimal path of tokens (e.g. words or letters) from a sequential list of probability distributions. Such a distribution can for instance be produced at the output layer of a recurrent neural network, e.g. a long short-term memory (LSTM). The goal is to decode these distributions by scoring all viable output sequences (paths) under some language model, and finding the path with the highest score.

Nowadays, the de facto standard solution is to use a variant of beam search (Steinbiss et al. 1994; Vijayakumar et al. 2016; Wiseman and Rush 2016; Kulikov et al. 2018; Pratap et al. 2020) to traverse the list of all possible output strings. Beam search stores and explores a constant sized list of possible decoded hypotheses at each step, compared to a greedy algorithm that only considers the top element at each step. Beam search thus interpolates between a simple greedy algorithm and best-first search; but just like greedy search, beam search is not guaranteed to find a global optimum. Furthermore, beam search suffers from sensitivity to the predicted sequence length. Improving the algorithm itself (Murray and Chiang 2018; Yang et al. 2018), as well as finding new decoding strategies (Fan et al. 2018; Holtzman et al. 2020), is an ongoing field of research.

A related task is found in transition based parsing of formal languages, such as context-free grammars (Hopcroft et al. 2001; Zhang and Clark 2008; Zhang and features 2011; Zhu et al. 2015; Dyer et al. 2015). In this model, an input string is processed token by token, and a heuristic prediction (which can be based on various types of classifiers, such as feed forward networks) is made on how to apply a transition at any one point. As in generative models and decoding tasks, heuristic parsing employs beam search, where a constant sized list of possible parse trees is retained in memory at any point in time, and at the end the hypothesis optimising a suitable objective function is chosen. Improvements of beam search-based parsing strategies are an active field of research (Buckman et al. 2016; Bohnet et al. 2016; Vilares and Gȯmez-Rodri̇guez 2018).

In essence, the problem of decoding a probabilistic sequence with a language model—or probabilistically parsing a formal grammar—becomes one of searching for paths in an exponentially growing tree: since at each step or node the list of possible sequence hypotheses branches, with maximum degree equal to the number of predictions for the next tokens. The goal is to find a path through this search space with the highest overall score. Due to runtime and memory constraints, a tradeoff has to be made which limits any guarantees on the performance of the search strategy.

Quantum computing has shown promise as an emerging technology to efficiently solve some instances of difficult computing tasks in fields ranging from optimisation (Gilyén et al. 2019; Montanaro 2020), linear algebra (Harrow et al. 2009; Berry et al. 2017), number theory and pattern matching (Montanaro 2016; 2017), language processing (Aaronson et al. 2019; Wiebe et al. 2019), machine learning (McClean et al. 2016; Bausch 2018; Wang et al. 2019; Li et al. 2019), to quantum simulation (Lloyd 1996; Babbush et al. 2018; Childs and Su 2019). While quantum computers are not yet robust enough to evaluate any of these applications on sample sizes large enough to claim an empirical advantage, a structured search problem such as language decoding is a prime candidate for a quantum speedup.

Although most naïve search problems can be sped up using Grover’s search algorithm (or one of its variants, such as fixed point search or oblivious amplitude amplification), finding good applications for quantum algorithms remains challenging, and super-quadratic (i.e. faster than Grover) speedups—such as Shor’s for prime factorisation (Shor 1999)—are rare. Recently, several exponentially faster algorithms (such as quantum recommender systems (Kerenidis and Prakash 2016), or dense low rank linear algebra (Wossnig et al. 2018)) have been proven to rely on a quantum random access memory model which, if classically available, can yield an exponential speedup without the need for quantum computing (Tang 2019).

In this work, we develop a quantum search decoder for parsing probabilistic token sequences with a super-quadratic speedup as compared to its classical counterpart. The algorithm can be seen as a generalisation of classical beam search, with potentially infinite beam width; for finite beam width, the list of hypotheses is pruned only once at the very end—after all possible parsing hypotheses have been generated—instead of performing continuous pruning during decoding, resulting in higher accuracy guarantees.

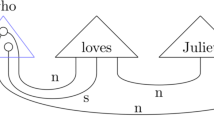

We develop two variants of the decoder. The first one is for finding the most likely parsed string. The more realistic use case is where the input sequence simply serves as advice on where to find the top scoring parse under a secondary metric—i.e. where the element with the highest decoder score is not necessarily the one with the highest probability of occurring when sampled. In this variant the speedup becomes more pronounced (i.e. the runtime scales less and less quickly in the input length) the better the advice (i.e. the steeper the power law falloff of the input, see Fig. 1).

Our novel algorithmic contribution is to analyse a recently developed quantum maximum finding algorithm (Apeldoorn et al. 2017) and its expected runtime when provided with a biased quantum sampler that we developed for formal grammars, under the premise that at each step the input tokens follow a power-law distribution; for a probabilistic sequence obtained from Mozilla’s DeepSpeech (which we show satisfies the premise), the quantum search decoder is a power of ≈ 4–5 faster than possible classically (Fig. 2).

Runtime of quantum beam search decoding the output of Mozilla’s DeepSpeech LSTM with a grammar, assuming an average branching ratio of R = 5, a token power law distribution with exponent k = 2.91, and post-amplification of the quantum search decoder with a constant number of retained hypotheses Nhyp ∈{101,…,1015}, plotted in rainbow colors from purple to red, bottom to top. In the left region, where full QuantumSearchDecoding is performed (as the beam comprises all possible hypotheses), a super-Grover speedup is obtained (Corollary 2). Where the beam width saturates, a Grover speedup is retained, and hypotheses are pruned only after all hypotheses have been constructed

In the following we assume basic familiarity with the notion of quantum computation, but provide a short overview for the reader in Appendix 1.

2 Main results

In this paper, we address the question of decoding a probabilistic sequence of words, letters, or generally tokens, obtained, e.g., from the final softmax layer of a recurrent neural network, or given as a probabilistic list of heuristic parse transitions. These models are essentially identical from a computational perspective. Hence, we give the following formal setup, and will speak of a decoding task, leaving implicit the two closely related applications.

Given an alphabet Σ, we expect as input a sequence of random variables X = (X1,X2,…,Xn), each distributed as \(X_{i}\sim \mathcal {D}_{i}^{{{\varSigma }}}\). The distributions \(\mathcal {D}_{i}^{{{\varSigma }}}\) can in principle vary for each i; furthermore, the Xi can either be independent, or include correlations. The input model is such that we are given this list of distributions explicitly, e.g. as a table of floating point numbers; for simplicity of notation we will continue to write Xi for such a table. The decoding machine M is assumed to ingest the input (a sample of the Xi) one symbol at a time, and branch according to some factor R at every step; for simplicity we will assume that R is constant (e.g. an upper bound to the branching ratio at every step). As noted, M can for instance be a parser for a formal grammar (such as an Earley parser (Earley 1970)) or some other type of language model; it can either accept good input strings, or reject others that cannot be parsed. The set of configurations of M that lead up to an accepted state is denoted by Ω; we assume that everything that is rejected is mapped by the decoder to some type of sink state ω ≠Ω. For background details on formal grammars and automata we refer the reader to Hopcroft et al. (2001), and we provide a brief summary over essential topics in Appendix 2.

While we can allow M to make use of a heuristic that attempts to guess good candidates for the next decoding step, it is not difficult to see that a randomised input setting is already more generic than allowing extra randomness to occur within M itself: we thus restrict our discussion to a decoder M that processes a token sequence step by step, and such that its state itself now simply becomes a sequence (Mi)i≤n of random variables. More precisely, described as a stochastic process, the Mi are random variables over the set Ω of internal configurations after the automaton has ingested Xi, given that it has ingested Xi− 1,…,X1 prior to that, with a distribution \(\mathcal {D}_{i}^{{{\varOmega }}}\). The probability of decoding a specific accepted string x = (x1,…,xn) is then given by the product of the conditional probabilities

where \(\mathcal {N}= {\sum }_{x \in {{\varOmega }}} \text {Pr}(X=x)\). In slight abuse of notation we write Mn = x when we mean Mn = y(x), where y(x) is the configuration of the parser M that was provided with some input to produce the parsed string x (which is unambiguous as there is a one-to-one mapping between accepted strings and parser configurations y(x)). Similarly, we write x ∈Ω for an accepted string/decoded path.

The obvious question is: which final accepted string of the decoder is the most likely? This is captured in the following computational problem.

Most Likely Parse

Input: | Decoder M over alphabet Σ, set of accep- |

ting configurations Ω. Sequence of random | |

variables (Xi)i≤n over sample space Σ. | |

Question: | Find σ = argmaxx∈ΩPr(Mn = x). |

Classically, it is clear that if we have a procedure that can sample the random variable Mn efficiently, then we can find the most likely element with an expected runtime of 1/Pr(Mn = σ), as this is the number of samples we are expected to draw to see the element once. While such sampling algorithms might be inefficient to construct in general, we emphasise that the question of drawing samples from strings over a formal language is an active field of research, and algorithms to sample uniformly are readily available for a large class of grammars: in linear time for regular languages (Bernardi and Giménez 2012; Oudinet et al. 2013), but also context-free grammars/restrictions thereof (McKenzie 1997; Goldwurm et al. 2001; Hickey and Cohen 1983; Gore et al. 1997; Denise 1996), potentially with global word bias (Reinharz et al. 2013; Lorenz and Ponty 2013; Denise et al. 2000; Ponty 2012).

In Theorem 3 and Section 3.1, we lift such a classical uniform sampler to a quantum sampler (denoted Uμ) with local (instead of global) word bias, which we can use to obtain a quantum advantage when answering Most Likely Parse. We note that the techniques used to prove Theorem 3 may well be used to obtain a (potentially faster) classical Monte Carlo procedure to sample from Mn. In what follows, we will therefore keep the decoder’s time complexity separate from the sampler’s runtime and simply speak of the decoder’s query complexity to Uμ, but we emphasise that constructing such an Uμ is efficiently possible, given a classical description of an automaton that parses the grammar at hand.

We start with the following observation, proven in Section 4.1.

Theorem 1

For an input sequence of n random variables to a parser with sampling subroutine Uμ, there exists a quantum search algorithm answering Most Likely Parse, using \(\pi /4\sqrt {\text {Pr}(M_{n}=\sigma )}\) queries to Uμ.

As explained, this theorem formalises the expected quadratic speedup of the runtime as compared to a classical algorithm based on sampling from Mn. Given the input to the parser is power-law distributed (see Definition 1), this allows us to formulate the following corollary.

Corollary 1

If the \(X_{i}\sim \text {Power}_{R}(k)\), answering Most Likely Parse requires at most 1/HR(k)n/2 queries; where \(H_{R}(k)={\sum }_{i=1}^{R} i^{-k}\).

Yet a priori, it is not clear that the weight of a decoded path (e.g. the product of probabilities of the input tokens) also corresponds to the highest score we wish to assign to such a path. This becomes obvious in the setting of a heuristic applied to a live translation: while at every point in time the heuristic might be able to guess a good forward transition, it might well be that long range correlations strongly affect the likelihood of prior choices. Research addressing these long-distance “collocations” indicates that LSTM models are capable of using about 200 tokens of context on average, but that they sharply distinguish nearby context (≈ 50 tokens) from the distant past. Furthermore, such models appear to be very sensitive to word order within the most recent context, but ignore word order in the long-range context (more than 50 tokens away) (Zhu et al. 2015; Dabrowska 2008; Khandelwal et al. 2018). Similarly, transformer-type architectures with self-attention—while outperforming LSTMs—feature a fixed-width context window; extensions thereof are an active field of research (Al-Rfou et al. 2019; Dai et al. 2019; Kitaev et al. 2020).

To address this setting formally, we assume there exists a scoring function  , which assigns scores to all possible decoded paths. Without loss of generality, there will be one optimal string which we denote with τ = argmaxx∈ΩF(x). Furthermore, we order all decoded strings Ω in some fashion, and index them with numbers i = 1,…,|Ω|. Within this ordering, τ can now be in different places—either because the heuristic guesses differently at each step, or because the input sequence varied a little. We denote the probability that the marked element τ is at position i with pi. In essence, the position where τ is found is now a random variable itself, with probability mass Pr(finding τ at index i) = pi.

, which assigns scores to all possible decoded paths. Without loss of generality, there will be one optimal string which we denote with τ = argmaxx∈ΩF(x). Furthermore, we order all decoded strings Ω in some fashion, and index them with numbers i = 1,…,|Ω|. Within this ordering, τ can now be in different places—either because the heuristic guesses differently at each step, or because the input sequence varied a little. We denote the probability that the marked element τ is at position i with pi. In essence, the position where τ is found is now a random variable itself, with probability mass Pr(finding τ at index i) = pi.

For the decoder probabilities Pr(Mn = x) to serve as good advice on where to find the highest-score element under the metric F, we demand that the final distribution over the states of the decoder puts high mass where the highest-scoring element often occurs; or formally that

To be precise, we define the following problem.

Highest Score Parse

Input: | Decoder M over alphabet Σ and with state |

space Ω. Sequence of random variables | |

(Xi)i≤n over sample space Σ. Scoring | |

function | |

Promise: | Eq. (2). |

Question: | Find τ = argmaxx∈ΩF(x). |

What is the classical baseline for this problem? As mentioned in Montanaro (2011), if px is the probability that x is the highest-scoring string, then in expectation one has to obtain 1/px samples to see x at least once. Any procedure based on sampling from the underlying distribution px thus has expected runtime \({\sum }_{x\in {{\varOmega }}}\frac {1}{p_{x}}\times p_{x} = |{{\varOmega }}|\). In a sense this is as bad as possible; the advice gives zero gain over iterating the list item by item and finding the maximum in an unstructured fashion. Yet provided with the same type of advice, a quantum computer can exhibit tremendous gains over unstructured search, such as the following statement, formally proven in Section 4.2.

Theorem 2

With the same setup as in Theorem 1 but under the promise that the input tokens are iid with \(X_{i}\sim \text {Power}_{|{{\varSigma }}|}(k)\) over alphabet Σ (Definition 1), that the decoder has a branching ratio R ≤|Σ|, and that we can uniformly sample from the grammar to be decoded, there exists a quantum algorithm QuantumSearchDecode (Algorithm 1) answering Highest Score Parse with an expected number of iterations

and where HR(k) is defined in Corollary 1.

There exists no classical algorithm to solve this problem based on taking stochastic samples from the decoder M that requires less than Ω(Rn) samples.

The exponent f(R, k) indicates the speedup over a classical implementation of the decoding algorithm (which would have to search over Rn elements). We find that f(R, k) < 1/2 for all R, k > 0, and in fact f(R, k)→0 exponentially quickly with k; we formulate the following corollary.

Corollary 2

For k > 0, QuantumSearchDecode is always faster than plain Grover search (with runtime ∝ Rn/2); the extent of the speedup depends on the branching ratio R and the power law exponent k (see Fig. 1).

Finally, in Section 5 we modify the full quantum search decoder by only searching over the paths with likelihood above some given threshold (that we allow to depend on n in some fashion), turning the decoder into a type of beam search, but where the pruning only happens at the very end (Algorithm 2). This means that in contrast to beam search, the top scoring element is found over the globally most likely parsed paths, avoiding the risk early beam pruning brings. We analyse the runtime of Algorithm 2 for various choices of beam width numerically, and analyse its performance on a concrete example—Mozilla’s DeepSpeech implementation, a speech-to-text LSTM which we show to follow a power-law token distribution at each output frame (see Appendix 8 for an extended discussion). F or DeepSpeech, we empirically find that input sequence lengths of up to 500 tokens can realistically be decoded, with an effective beam width of 1015 hypotheses—while requiring ≈ 3 × 106 search iterations (cf. Fig. 2). As expected, the super-Grover speedup from Corollary 2 is achieved in the regime where full QuantumSearchDecoding happens; once the beam width saturates, the speedup asymptotically approaches a quadratic advantage as compared to classical beam search.

3 Quantum search decoding

In this section, we give an explicit algorithm for QuantumSearchDecode. As mentioned before (see Section 2), we assume we have access to a classical sampling algorithm that, given a list of transition probabilities determined by the inputs X1,…,Xn, yields a random sample drawn uniformly from the distribution. Since this sampler is given as a classical probabilistic program, we first need to translate it to a quantum algorithm. We start with the following lemma.

Lemma 1

For a probabilistic classical circuit with runtime T(n) and space requirement S(n) on an input of length n, there exists a quantum algorithm that runs in time \(\mathrm {O}(T(n)^{\log _{2} 3})\) and requires \(\mathrm {O}(S(n)\log T(n))\) qubits.

Proof

Follows from Thm. 1 in Buhrman et al. (2001); see Appendix 7. □

3.1 Biased quantum sampling from a regular or context-free grammar

Given a sampler that can yield uniformly distributed strings si of a language, we want to raise it to a quantum circuit Uμ that produces a quantum state which is a biased superposition over all such strings si = ai1ai2⋯ain, where each string is weighted by the probability pij of the symbol aij occurring at index j (i.e. by Eq. (1)). In addition to the weighted superposition, we would like to have the weight of each state in the superposition spelled out as an explicit number in an extra register (e.g. as a fixed precision floating point number), i.e. as

where Ω is the set of accepted strings reachable by the decoder in n steps, |hq〉 is an ancillary state that depends on q and is contained in the decoder’s work space, where q is a state reached by reading the input sequence aq1,aq2,…,aqn. The weights \(p_{q} = {\prod }_{j=1}^{n} p_{qj}\).

As outlined in the introduction, we know there exist uniform classical probabilistic samplers for large classes of grammars, e.g. for regular languages in linear time (e.g. Oudinet et al. 2013) and polynomial time for variants of context free grammars (e.g. Goldwurm et al. 2001). Keeping the uniform sampler’s runtime separate from the rest of the algorithm, we can raise the sampler to a biased quantum state preparator for |μ〉.

Theorem 3

Assume we are given a classical probabilistic algorithm that, in time T(n), produce a uniform sample of length n from a language, and we are also given a list of independent random variables X1,…,Xn with pdfs pi, j for i = 1,…,n and j = [Σ]. Then we can construct a quantum circuit \(\mathbf {U}_{\mu ^{\prime }}\) that produces a state \(|{\mu ^{\prime }}\rangle \epsilon \)-close (in total variation distance) to the one in Eq. (3). The algorithm runs in time O(T(n)1.6 × n3κ/𝜖2), where κ is an upper bound on the relative variance of the conditional probabilities \(Pr(a|s_{1} {\dots } s_{i})\), for a, si ∈Σ, for the variable Xi+ 1 given the random string XiXi− 1⋯X1.

Proof

See Appendix 3. □

Getting a precise handle on κ strongly depends on the grammar to be parsed and the input presented to it; it seems unreasonable to claim any general bounds as it will most likely be of no good use for any specific instance. However, we note that it is conceivable that if the input is long and reasonably independent of the language to be sampled, then κ should be independent of n, and \(\kappa \approx 1/p(r_{\min \limits })\), where p(r) is the distribution of the input tokens at any point in time—e.g. p(r) ∝ r−k as in a power law.Footnote 1

3.2 The quantum search decoder

The quantum algorithm underlying the decoder is based on the standard maximum finding procedure developed by Dürr and Høyer (1996) and Ahuja and Kapoor (1999), and its extension in Apeldoorn et al. (2017) used in the context of SDP solvers.

The procedure takes as input a unitary operator Uμ which prepares the advice state, and a scoring function F which scores its elements, and returns as output the element within the advice state that has the maximum score under F. As in Section 3.1, we assume that F can be made into a reversible quantum circuit to be used in the comparison operation. We also note that reversible circuits for bit string comparison and arithmetic are readily available (Oliveira and Ramos 2007), and can, e.g., be implemented using quantum adder circuits (Gidney 2018).

Algorithm 1 lists the steps in the decoding procedure. As a subroutine within the search loop, we perform exponential search with oblivious amplitude amplification (Berry et al. 2014). As in the maximum finding algorithm, the expected query count for quantum search decoding is given as follows.

Theorem 4

If x ∈Ω is the highest-scoring string and px its score in Eq. (3), the expected number of iterations in QuantumSearchDecode to find it maximum is \(\mathrm {O}(\min \limits \{ 1/\sqrt {p_{x}},\sqrt n\})\).

Proof

Immediate by Apeldoorn et al. (2017). □

In the following we will for simplicity say |x〉 is the highest-scoring string (including ancilliary states given in Eq. (3)), and write |〈x|μ〉| instead of \(\sqrt {p_{x}}\). It is clear that the two notions are equivalent.

4 Power law decoder input

In this section we formally prove that if the decoder is fed independent tokens that are distributed like a power law, then the resulting distribution over the parse paths yields a super-Grover speedup—meaning the decoding speed is faster than applying Grover search, which itself is already quadratically faster than a classical search algorithm that traverses all possible paths individually.

A power law distribution is the discrete variant of a Pareto distribution, also known as Zipf’s law, which ubiquitously appears in the context of language features (Jäger 2012; Stella and Brede 2016; Egghe 2000; Piantadosi 2014). This fact has already been exploited by some authors in the context of generative models (Goldwater et al. 2011).

Formally, we define it as follows.

Definition 1

Let A be a finite set with |A| = R, and k > 1. Then PowerR(k) is the power law distribution over R elements: for \(X\sim \text {Power}_{R}(k)\) the probability density function Pr(X = x) = r−k/HR(k) for an element of rank r (i.e. the rth most likely element), where HR(k) is the Rth harmonic number of order k (Corollary 1).

We are interested in the Cartesian product of power law random variables, i.e. sequences of random variables of the form (X1,…,Xn). Assuming the random variables \(X_{i}\sim \text {Power}_{R}(k)\) are all independent and of rank ri with pdf \(q(r_{i})=r_{i}^{-k}/H_{R}(k)\), respectively, it is clear that

As in Montanaro (2011), we can upper bound the number of decoder queries in QuantumSearchDecode by calculating the expectation value of the iterations necessary—given by Theorem 4—with respect to the position of the top element.

We assume that at every step, when presented with choices from an alphabet Σ, the parsed grammar branches on average R ≤|Σ| times. Of course, even within a single time frame, the subset of accepted tokens may differ depending on what the previously accepted tokens are. This means that if the decoder is currently on two paths β1 (e.g. corresponding to “I want”) and β2 (“I were”), where the next accepted token sets are Σ1,Σ2 ⊂Σ (each different subsets of possible next letters for the two presented sentences), respectively, then we do not necessarily have that the total probability of choices for the two paths—Pr(Σ1) and Pr(Σ2)—are equal. But what does this distribution over all possible paths of the language, weighted by Eq. (1), look like?

Certainly this will depend on the language and type of input presented. Under a reasonable assumption of independence between input and decoded grammar, this becomes equivalent to answering the following question: let X be a product-of-powerlaw distribution with pdf given in Eq. (4), where every term is a powerlaw over Σ. Let Y be defined as X, but with a uniformly random subset of elements deleted; in particular, such that Rn elements are left, for some R < |Σ|. Is Y distributed as a product-of-powerlaws as in Eq. (4), but over R elements at each step? In the case of continuous variables this is a straightforward calculation (see Appendix 5); numerics suggest it also holds true for the discrete case.

But even if the input that the parser given is independent of the parsed grammar, it is not clear whether the sample distribution over R (i.e. sampling R out of |Σ| power-law distributed elements) follows the same power law as the original one over Σ; this is in fact not the case in general (Zhu et al. 2015). However, it is straightforward to numerically estimate the changed power law exponent of a sample distribution given R and |Σ|—and we note that the exponent shrinks only marginally when R < |Σ|.

In this light and to simplify the runtime analysis, we therefore assume the decoder accepts exactly R tokens at all times during the parsing process (like an R-ary tree over hypotheses) with a resulting product-of-powerlaw distribution, and give the runtimes in terms of the branching ratio, and not in terms of the alphabet’s size. This indeed yields a fair runtime for comparison with a classical variant, since any classical algorithm will also have the aforementioned advantage (i.e. we assume the size of final elements to search over is Rn, which precisely corresponds to the number of paths down the R-ary tree).

4.1 Most likely parse: query bound

In this case F simply returns pq as the score in Eq. (3). If x labels the highest-mass index of the probability density function (neglecting the ancilliary states in Eq. (3) for simplicity), it suffices to calculate the state overlap |〈x|μ〉|. By Eq. (4), we then have \(|{\langle {x}|{\mu }\rangle }|^{2} = H_{R}^{-n}(k)\). The claim of Corollary 1 follows from these observations.

4.2 Highest score parse: simple query bound

We aim to find a top element scored under some function F under the promise that |μ〉 (given in Eq. (3)) presents good advice on where to find it, in the sense of Eq. (2). The expected runtimes for various power law falloffs k can be obtained by taking the expectation with respect to px as in Montanaro (2011).

In order to do so, we need to be able to calculate expecation values of the cartesian product of power law random variables, where we restrict the domain to those elements with probability above some threshold. We start with the following observation.

Lemma 2

If QuantumSearchDecode receives as input iid random variables X1,…,Xn, with \(X_{i}\sim \text {Power}_{R}(k)\), then the number of queries required to the parser is \( \text {RT}_{1}(R,k,n) = \mathrm {O}\left (H_{R}(k/2)^{n} / H_{R}(k)^{n/2} \right ). \)

Proof

The expectation value of 1/〈x|μ〉 is straightforward to calculate; writing r = (r1,…,rn), by Eq. (4), we have

As \(\mathrm {O}(\min \limits \{ 1/\langle {x}|{\mu }\rangle , \sqrt n \}) \le \mathrm {O}(1/\langle {x}|{\mu }\rangle )\) the claim follows. □

We observe that the runtime in Lemma 2 is exponential in n. Nevertheless, as compared to a Grover algorithm—with runtime Rn/2—the base is now dependent on the power law’s falloff k. We can compare the runtimes if we rephrase RT1(R, k, n) = Rnf(R, k), by calculating

We observe that the exponent f(R, k) ∈ (0,1/2), i.e. it is always faster than Grover, and always more than quadratically faster than classically. The exponent’s precise dependency on k for a set of alphabet sizes R is plotted in Fig. 1. For growing k, f(R, k) falls off exponentially.

4.3 Most likely parse: full query bound

A priori, it is unclear how much we lose in Lemma 2 by upper-bounding \(\mathrm {O}(\min \limits \{ 1/\langle {x}|{\mu }\rangle , \sqrt n \})\) by O(1/〈x|μ〉)—so let us be more precise. In order to evaluate the expectation value of the minimum, we will break up the support of the full probability density function p(r) into a region where p(r) > 1/Rn, and its complement. Then, for two constants C1 and C2, we have for the full query complexity

In order to calculate sums over sections of the pdf p(r), we first move to a truncated Pareto distribution by making the substitutions

While this does introduce a deviation, its magnitude is minor, as can be verified numerically throughout (see Fig. 4, where we plot both RT1 and the continuous variant \(\text {RT}_{1'}(R,k,n):={h_{R}^{n}}(k/2)/h_{R}^{n/2}(k)\)).

The type of integral we are interested in thus takes the form

where k1 is not necessarily equal to k2, and typically \(c=(R/h_{R}(k_{1}))^{n/k_{1}}\), which would reduce to the case we are seeking to address in Eq. (5). Here, χ(⋅) denotes the characteristic function of a set, i.e. it takes the value 1 where the premise is true, and 0 otherwise. We derive the following closed-form expression.

Lemma 3

For k ≠ 1, Eq. (6) becomes

where \(k^{\prime }=1-k_{2}\), \(c^{\prime }=\log c\), \(a^{\prime }=\log R\).

Proof

See Appendix 4. □

5 Quantum beam search decoding

The goal of this section is to modify the QuantumSearchDecoder such that it behaves more akin to a classical beam search algorithm. More specifically, instead of searching for the top scored element which could sit anywhere within the advice distribution, we make the assumption that wherever the advice probability lies below some threshold p(x) < p0—where p0 can be very small—we discard those hypotheses. This is done by dovetailing a few rounds of amplitude amplification to suppress all beam paths with probability less than p0 (which we can do, since we have those probabilities written out as numbers within the advice state |μ〉 in Eq. (3)); a schematic of the algorithm can be found in Algorithm 2.

Of course we only want to do this if the number of amplification rounds, given as the squareroot of the inverse of the leftover probability \({\sum }_{x:p(x)\ge p_{0}}p(x)\), is small (i.e. constant, or logarithmic in n). We note that this expression is, as before, well-approximated by \(M_{p_{0},n}^{R,k,k}\) given in Lemma 3.

In beam search, only the top scoring hypotheses are kept around at any point in time; the difference to our method is of course that we can score the elements after every hypothesis has been built. This is not possible in the classical case, since it would require an exponential amount of memory, or postselection. As in Section 3, we have the two cases of finding the top scoring path and the most likely parse. Deriving a runtime bound for Most Likely Parse is straightforward—and does not, in fact, gain anything. This is because when finding the maximum-likelihood path τ, one performs amplitude amplification on that element anyhow, and p(τ) > p0—so it is within the set of elements with probability kept intact by the post-amplification.Footnote 2

The only interesting case of amplifying the advice state in QuantumSearchDecode to raise it to a beam search variant is thus for the case of Highest Score Parse, using the decoder’s output as advice distribution. Instead of listing a series of results for a range of parameters, we provide an explicit example of this analysis with real-world parameters derived from Mozilla’s DeepSpeech neural network in the next section, and refer the reader to Appendix 6 for a more in-depth analysis of variants of a constant and non-constant amount of post-amplification.

6 DeepSpeech

6.1 Analysis of the output rank frequency

To support the applicability of our model, we analysed our hypothesis that the output probabilities of an LSTM used to transcribe voice to letters—which can then be used, e.g., in a dialogue system with an underlying parser—is distributed in a power-law like fashion. More specifically, we use DeepSpeech, Mozilla’s implementation of Baidu’s DeepSpeech speech recognition system (Hannun et al. 2014; Mozilla 2019b); our hypothesis was that these letter probabilities follow a power-law distribution; our data supports this claim (see Appendix 8, also for a discussion of the LSTM’s power-law output—a model feature—vs. the power-law nature of natural language features).

6.2 Runtime bounds for quantum beam search decoding

We take the power law exponent derived from Mozilla’s DeepSpeech neural network, k = 3.03 (cf. Appendix 8), and derive runtime bounds for decoding its output with a parser under the assumption that, on average, we take R = 5 branches in the parsing tree at every time step. As discussed in Section 4, the sampling distribution over five elements only yields a slightly lower exponent of k = 2.91. How does quantum beam search perform in this setting, and how many hypotheses are actually searched over? And what if we fix the beam’s width to a constant, and increase the sequence length? We summarise our findings in Figs. 2, 7 and 8).

7 Summary and conclusions

We have presented a quantum algorithm that is modelled on and extends the capabilities of beam search decoding for sequences of random variables. Studies of context sensitivity of language models have shown that state-of-the-art LSTM models are able to use about 200 tokens of context on average while working with standard datasets (WikiText2, Penn Treebank) (Khandelwal et al. 2018); state of the art transformer-based methods level off at a context window of size 512 (Al-Rfou et al. 2019). On the other hand, under the premise of biased input tokens, our quantum search decoding method is guaranteed to find—with high constant success probability—the global optimum, and it can do so in expected runtime that is always more than quadratically faster than possible classically. As demonstrated empirically (cf. Fig. 2), our quantum beam search variant features a runtime independent of the sequence length: even for token sequences of length > 500 the top 1014 global hypotheses can be searched for an optimal prediction, within 107 steps.

We have further shown that neural networks used in the real world—concretely DeepSpeech—indeed exhibit a strong power law distribution on their outputs, which in turn supports the premise of our algorithm; how the performance scales in conjunction with a native recurrent quantum neural network such as in Bausch (2020) is an interesting open question.

Notes

This should make intuitive sense: the branching ratios are already biased with respect to the number of future strings possible with prefix s; if the input sequence is independent of the grammar, then we would expect them to weigh the strings roughly uniformly; the extra factor of \(1/p(r_{\min \limits })\) simply stems from the weighing of the token we bin by, namely a.

If anything, p0 introduces some prior knowledge about the first pivot to pick for maximum finding.

Even for linear space bounded nondeterministic TMs, the halting problem is in principle decidable, as for a finite number of internal states any computation must eventually halt or loop; see Minsky (1967).

Curiously, even though the linear runtime of Thompson et al.’s NFA has been known since 1968, even until relatively recently, modern regular expression implementations were implemented using backtracking and memorisation techniques that have an exponential asymptotic runtime (Cox 2007).

References

Aaronson S, Grier D, Schaeffer L (2019) A quantum query complexity trichotomy for regular languages. In: IEEE 60th annual symposium on foundations of computer science (FOCS). IEEE, pp 942–965

Ahuja A, Kapoor S (1999) A quantum algorithm for finding the maximum

Al-Rfou R, Choe D, Constant N, Guo M (2019) Character-level language modeling with deeper self-attention. In: Proceedings of the AAAI conference on artificial intelligence, vol 33, pp 3159–3166

Bausch J (2018) Classifying data using near-term quantum devices. Int J Quantum Inf 16 (08):1840001

Bausch J (2020) Recurrent quantum neural networks. In: Advances in neural information processing systems. 34th Annual conference on neural information processing systems (NeurIPS), vol 33

Buckman J, Ballesteros M, Dyer C (2016) Transition-based dependency parsing with heuristic backtracking. In: Proceedings of the 2016 Conference on empirical methods in natural language processing. Stroudsburg, PA, USA. ACL (Association for Computational Linguistics), Association for Computational Linguistics, pp 2313–2318

Berry DW, Childs AM, Cleve R, Kothari R, Somma RD (2014) Exponential improvement in precision for simulating sparse hamiltonians. In: Proceedings of the forty-sixth annual ACM symposium on theory of computing, STOC ’14. ACM, New York, pp 283–292

Berry DW, Childs AM, Ostrander A, Wang G (2017) Quantum algorithm for linear differential equations with exponentially improved dependence on precision. Commun Math Phys 356(3):1057–1081

Bernardi O, Giménez O (2012) A linear algorithm for the random sampling from regular languages. Algorithmica 62(1-2):130–145

Bohnet B, McDonald R, Pitler E, Ma J (2016) Generalized transition-based dependency parsing via control parameters. In: Proceedings of the 54th Annual meeting of the association for computational linguistics (Volume 1: Long Papers). Association for Computational Linguistics, Stroudsburg, pp 150–160

Buhrman H, Tromp J, Vitányi P (2001) Time and space bounds for reversible simulation. J Phys A Math 34(35):6821–6830

Babbush R, Wiebe N, McClean J, McClain J, Neven H, Chan GK-L (2018) Low-depth quantum simulation of materials. Phys Rev X 8(1):011044

Chomsky N (1956) Three models for the description of language. IEEE Trans Inform Theory 2 (3):113–124

Cox R (2007) Regular expression matching can be simple and fast (but is slow in Java, Perl, PHP, Python, Ruby, ...)

Childs AM, Su Y (2019) Nearly optimal lattice simulation by product formulas. Phys Rev Lett 123(5):050503

Dabrowska E (2008) Questions with long-distance dependencies: A usage-based perspective. Cogn Linguist 19(3)

Dyer C, Ballesteros M, Ling W, Matthews A, Smith NA (2015) Transition-based dependency parsing with stack long short-term memory. In: Proceedings of the 53rd annual meeting of the association for computational linguistics and the 7th international joint conference on natural language processing (Volume 1: Long Papers). Association for Computational Linguistics, Stroudsburg, pp 334–343

Denise A (1996) Génération aléatoire uniforme de mots de langages rationnels. Theor Comput Sci 159(1):43–63

Dürr C, Høyer P (1996) A quantum algorithm for finding the minimum in LANL e-print quantph/9607014

Denise A, Roques O, Termier M (2000) Random generation of words of context-free languages according to the frequencies of letters. In: Mathematics and computer science. Basel, Birkhäuser Basel, pp 113–125

Dai Z, Yang Z, Yang Y, Carbonell J, Le QV, Salakhutdinov R (2019) Transformer-XL: attentive language models beyond a fixed-length context. In: Proceedings of the 57th Annual meeting of the association for computational linguistics

Earley J (1970) An efficient context-free parsing algorithm. Commun ACM 13(2):94–102

Egghe L (2000) The distribution of N-Grams. Scientometrics 47(2):237–252

Fan A, Lewis M, Dauphin Y (2018) Hierarchical neural story generation. In: Proceedings of the 56th annual meeting of the association for computational linguistics

Gilyén A, Arunachalam S, Wiebe N (2019) Optimizing quantum optimization algorithms via faster quantum gradient computation. In: Proceedings of the Thirtieth annual ACM-SIAM symposium on discrete algorithms. Society for Industrial and Applied Mathematics, Philadelphia, pp 1425–1444

Goldwater S, Griffiths TL, Johnson M (2011) Producing power-law distributions and damping word frequencies with two-stage language models. J Mach Learn Res 12:2335–2382

Gidney C (2018) Halving the cost of quantum addition. Quantum 2:74

Gore V, Jerrum Mark , Kannan S, Sweedyk Z, Mahaney S (1997) A quasi-polynomial-time algorithm for sampling words from a context-free language. Inf Computat 134(1):59–74

Goldwurm M, Palano B, Santini M (2001) On the circuit complexity of random generation problems for regular and context-free languages. In: Ferreira A, Reichel H (eds) STACS 2001. Springer, Berlin, pp 305–316

Graves A (2013) Generating sequences with recurrent neural networks

Holtzman A, Buys J, Du L, Forbes M, Choi Y (2020) The curious case of neural text degeneration. In: International conference on learning representations

Hickey T, Cohen J (1983) Uniform random generation of strings in a context-free language. SIAM J Comput 12(4):645–655

Hannun A, Case C, Casper J, Catanzaro B, Diamos G, Elsen E, Prenger R, Satheesh S, Sengupta S, Coates A, Ng AY (2014) Deep speech: Scaling up end-to-end speech recognition

Harrow AW, Hassidim A, Lloyd S (2009) Quantum algorithm for linear systems of equations. Phys Rev Lett 103(15):150502

Hopcroft JE, Motwani R, Ullman JD (2001) Introduction to automata theory, languages, and computation, vol 32, 2nd edn.

Jäger G. (2012) Power laws and other heavy-tailed distributions in linguistic typology. Adv Complex Syst 15(03n04):1–21

Khandelwal U, He H, Qi P, Jurafsky D (2018) Sharp nearby, fuzzy far away: how neural language models use context. In: Proceedings of the 56th annual meeting of the association for computational linguistics (Volume 1: Long Papers). Association for Computational Linguistics., Stroudsburg, pp 284–294

Kitaev N, Kaiser L, Levskaya A (2020) Reformer: The efficient transformer. In: International conference on learning representations

Kleene SC (1956) Representation of events in nerve nets and finite automata. In: Automata studies. (AM-34). Princeton University Press, Princeton, pp 3–42

Kulikov I, Miller AH, Cho K, Weston J (2018) Importance of a search strategy in neural dialogue modelling

Kerenidis I, Prakash A (2016) Quantum recommendation systems

Li T, Chakrabarti S, Wu X (2019) Sublinear quantum algorithms for training linear and kernel-based classifiers. In: ICML

Lloyd S (1996) Universal quantum simulators. Science 273(5278):1073–1078

Louden KC (1997) From a Regular Expression to an NFA. Pearson/Addison Wesley, Boston

Lorenz WA, Ponty Y (2013) Non-redundant random generation algorithms for weighted context-free grammars, vol 502

Murray K, Chiang D (2018) Correcting length bias in neural machine translation. In: Proceedings of the third conference on machine translation: research papers. Association for Computational Linguistics, Stroudsburg, pp 212–223

McKenzie B (1997) Generating strings at random from a context free grammar. Technical report, Department of Computer Science, University of Canterbury, Engineering Reports

Minsky ML (1967) Unsolvability of the halting problem. Prentice-Hall, Inc, Upper Saddle River

Montanaro A (2011) Quantum search with advice. In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics), 6519 LNCS, pp 77–93

Montanaro A (2016) Quantum algorithms: an overview. Npj Quantum Inf 2(1):15023

Montanaro A (2017) Quantum pattern matching fast on average. Algorithmica 77(1):16–39

Montanaro A (2020) Quantum speedup of branch-and-bound algorithms. Phys Rev Res 2 (1):013056

Mozilla (2019) Common voice

Mozilla (2019) DeepSpeech

McClean JR, Romero J, Babbush R, Aspuru-Guzik Alán (2016) The theory of variational hybrid quantum-classical algorithms. New J Phys 18(2):23023

Nielsen MA, Chuang IL (2010) Quantum computation and quantum information. Cambridge University Press, Cambridge

Oudinet J, Denise A, Gaudel M-C (2013) A new dichotomic algorithm for the uniform random generation of words in regular languages. Theor Comput Sci 502:165–176

Piantadosi ST (2014) Zipf’s word frequency law in natural language: A critical review and future directions. Psychon Bull Rev 21(5):1112–1130

Ponty Y (2012) Rule-weighted and terminal-weighted context-free grammars have identical expressivity. Research report

Pratap V, Xu Q, Kahn J, Avidov G, Likhomanenko T, Hannun A, Liptchinsky V, Synnaeve G, Collobert R (2020) Scaling up online speech recognition using ConvNets. facebook research

Reinharz V, Ponty Y, Waldispühl J (2013) A weighted sampling algorithm for the design of RNA sequences with targeted secondary structure and nucleotide distribution. Bioinformatics 29(13):i308–i315

Rabin MO, Scott D (1959) Finite automata and their decision problems. IBM J Res Dev 3 (2):114–125

Stella M, Brede M (2016) Investigating the phonetic organisation of the English language via phonological networks, percolation and markov models. pp 219–229

Schmidhuber J (2014) Deep learning in neural networks: an overview. Neural Netw 61:85–117

Shor PW (1999) Polynomial-time algorithms for prime factorization and discrete logarithms on a quantum computer. SIAM Rev 41(2):303–332

Ilya S, Martens J, Hinton G (2011) 1017–1024. In: Proceedings of the 28th international conference on international conference on machine learning, ICML’11. Omnipress

Oliveira DS, Ramos R (2007) Quantum bit string comparator: circuits and applications. Quantum Comput Comput 7:01

Steinbiss V, Tran B-H, Ney H (1994) Improvements in beam search. In: Third international conference on spoken language processing

Sutskever I, Vinyals O, Le QV (2014) Sequence to sequence learning with neural networks. In: Ghahramani Z, Welling M, Cortes C, Lawrence ND, Weinberger KQ (eds) Advances in neural information processing systems, vol 27. Curran Associates, Inc., pp 3104–3112

Tang E (2019) A quantum-inspired classical algorithm for recommendation systems. In: Proceedings of the 51st Annual ACM SIGACT symposium on theory of computing - stoc 2019.ACM Press, New York, pp 217–228

Thompson K (1968) Programming techniques: regular expression search algorithm. Commun ACM 11(6):419–422

Ullman AVA, Lam MS, Sethi R, Jeffrey D (1997) Construction of an NFA from a regular expression. PWS Pub. Co, Boston

Vijayakumar AK, Cogswell M, Selvaraju RR, Sun Q, Lee S, Crandall D, Batra D (2016) Diverse beam search: decoding diverse solutions from neural sequence models. pp 1–16

Apeldoorn JV, Gilyén A, Gribling S, de Wolf R, Gilyen A, Gribling S, de Wolf R (2017) Quantum SDP-solvers: better upper and lower bounds. In: Annual symposium on foundations of computer science - Proceedings, 2017-Octob(617), pp 1–74

Vilares D, Gȯmez-Rodri̇guez C (2018) Transition-based parsing with lighter feed-forward networks. UDW@EMNLP

Wiebe N, Bocharov A, Smolensky P, Troyer M, Svore KM (2019) Quantum language processing

Wang D, Higgott O, Brierley S (2019) Accelerated variational quantum eigensolver. Phys Rev Lett 122(14):140504

Wiseman S, Rush AM (2016) Sequence-to-sequence learning as beam-search optimization. In: Proceedings of the 2016 conference on empirical methods in natural language processing. Association for Computational Linguistics, Stroudsburg, pp 1296–1306

Wossnig L, Zhao Z, Prakash A (2018) Quantum linear system algorithm for dense matrices. Phys Rev Lett 050502:120

Yang Y, Huang L, Ma M (2018) Breaking the beam search curse: a study of (re-)scoring methods and stopping criteria for neural machine translation. In: Proceedings of the 2018 conference on empirical methods in natural language processing. Association for Computational Linguistics, Stroudsburg, pp 3054–3059

Younger DH (1967) Recognition and parsing of context-free languages in time n3. Inf Control 10 (2):189–208

Zhang Y, Clark S (2008) A tale of two parsers: investigating and combining graph-based and transition-based dependency parsing using beam-search. In: Proceedings of the conference on empirical methods in natural language processing. Association for Computational Linguistics, pp 562–571

Zhang Y, features Joakim Nivre. (2011) Transition-based dependency parsing with rich non-local. In: Proceedings of the 49th annual meeting of the association for computational linguistics: human language technologies: short papers, vol 2. Association for Computational Linguistics, pp 188–193

Zhu C, Qiu X, Huang X (2015) Transition-based dependency parsing with long distance collocations. In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics)

Acknowledgements

J. B. would like to thank the Draper’s Research Fellowship at Pembroke College. S. S. would like to thank the Science Education and Research Board (SERB, Govt. of India) and the Cambridge Trust for supporting his PhD through a Cambridge-India Ramanujan scholarship. We are grateful for the useful feedback and the comments we recieved from Jean Maillard, Ted Briscoe, Aram Harrow, Massimiliano Goldwurm, Mark Jerrum, and when presenting this work at IBM Zürich. We further thank Terence Tao for the suggestion to try to take the Fourier transform of the indicator function in Section 4.3.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1. Quantum computing: preliminaries and notation

In this section we briefly review the basic notions and notations in quantum computation, referring to Nielsen and Chuang (2010) for more details.

The usual unit of classical computation is the bit, a Boolean variable taking values in \(\mathbb {Z}_{2}=\{0,1\}\). Its analogue in quantum computation is called the qubit, and represents the state of a physical quantum 2-level system. A qubit can take values in \(\mathbb {C}^{2}\), i.e. linear combinations or superpositions of two classical values (complex numbers)

In particular we require that |α|2 + |β|2 = 1. We have also introduced the Dirac bracket in the above:

More generally, the set of states an m-qubit quantum register can take is the set of unit vectors

in the Hilbert space spanned by a set of orthonormal basis vectors {|i〉, i ∈{0, 1}m}, known as the computational basis. Each αi is called the amplitude of basis state |i〉. We interpret the vector |i〉 as the m-dimensional complex vector vi with entries given by (vi)j = δij, and also interchangeably as the integer i or the bit string that gives its binary representation b1…bm where bi is either 0 or 1. Furthermore, and of key importance to quantum mechanics and computation, the vector \(|{b_{1}{\ldots } b_{m}}\rangle \in \mathbb {C}^{2^{m}}\) is interpreted as a tensor product

of the m vectors |bi〉 in \(\mathbb {C}^{2}\). The ⊗ is often dropped for convenience, and we write |b1〉|b2〉 for |b1〉⊗|b2〉.

Unitary operators

There are two ways in which we can compute on a state ϕ. The first is by unitary evolution of the system under the Schrödinger equation with a specified Hamiltonian operator H

where H is a hermitian matrix. Closed systems undergo reversible dynamics in quantum mechanics, and this dynamics is represented by unitary matrices. Since we can think of |ϕ〉 as a vector in \(\mathbb {C}^{2^{m}}\), a computation is represented by multiplication of this state by a U ∈SU(2m), i.e. |ϕout〉 = U|ϕin〉. Recall that a matrix U is said to be unitary if  , where U‡ is the conjugate transpose of U. It is possible to compile a large ‘algorithm’ U down into elementary unitary operations, or quantum gates.

, where U‡ is the conjugate transpose of U. It is possible to compile a large ‘algorithm’ U down into elementary unitary operations, or quantum gates.

Measurements

The second kind of operation we can perform on |ϕ〉 is measurement. For our purposes, note that the postulates of quantum mechanics say that on measuring the state |ϕ〉 in Eq. (7) in the basis {|i〉}, we obtain as outcome the basis state |i〉 with probability |αi|2. Since we have chosen states to be normalised, the measurement gives a valid probability mass function over the set of classical m-bit strings. After the measurement, the state “collapses” to the observed basis state |i〉, and no further information can be retrieved from the original state.

Input models

We will use two kinds of input models. The first is a quantum analogue of the classical query model, where inputs are accessed via a black-box or oracle that can be queried with an index i and returns the i-th bit of the input bit string. For a bit string x ∈{0, 1}n we assume access to a unitary \(\mathcal {O}_{x}\) which performs the map

where the first register consists of \(\lceil \log n\rceil \) qubits, the second is a single qubit register to store the output of the query, and the third is any additional workspace the quantum computer might have and is not affected by the query. Here ⊕ is addition on \(\mathbb {Z}_{2}\), i.e. the XOR operation in Boolean logic. Note that \(\mathcal {O}_{x}\) can be used by a quantum computer to make queries in superposition:

Complexity measures

For many theoretical studies in complexity theory, the query input model is a powerful setting where several results have been proven. In this model, the total number of queries made to the input oracle is the primary measure of algorithmic complexity, known as the query complexity.

For practical purposes, it is more important to understand the number of elementary quantum gates used to implement the unitary circuit corresponding to the algorithm in the quantum circuit model. This is known as the gate complexity of the algorithm. The depth of the circuit is directly related to the time complexity, and gives an idea of how parallelisable the algorithm is.

Appendix 2. Languages, automata, and parsing

We refer the reader to the excellent overview of automata theory in Hopcroft et al. (2001). In the following, we introduce the two lowest-level type automata in the Chomsky hierarchy; those that accept regular languages, which are relatively restricted sets of strings, and those that accept context-free languages. While the latter are still somewhat restrictive, they are interesting in the context of natural language processing (NLP) as they are able to capture the essential features of a large fraction of human languages, and are as such a natural model to study in this context.

More pragmatically, we study these two types of languages because there exist efficient algorithms for executing automata that accepts them—in linear time in the length of the input string and of the regular expression in the case of a regular language, and in cubic time for the context- free case.

Moving further up in the Chomsky hierarchy, we first encounter context sensitive languages. Those have a natural associated automaton which is a linear space bounded nondeterministic Turing machine. These languages can be recognised by non-deterministic Turing machines with linear space, and so are in NSPACE(O(n)) for an input of size n. While the necessary memory might be bounded, we do not have good runtime guarantees (i.e. the Turing machine can potentially run for a time \(\propto \exp (n)\)). These models thus seem less relevant in practice; naturally, this just becomes worse when we allow an arbitrary Turing machine for which we have even less of a grasp on their runtime for a given string, and where even the question of whether the machine halts on some input is undecidable.Footnote 3

Furthermore, for both NFAs and PDAs, at each step one symbol of the input is ingested. This symbol will then dictate how the automaton proceeds. If this input is not given by a fixed string but by a sequence of random variables over an alphabet, the sequence of states the automaton passes through as it processes the input will also be a sequence of random variables. Since we ingest one input symbol at every step, the random variable representing the state of the automaton at any given step will be significantly easier to analyse (in contrast to Turing machines, which can choose to ignore the given input; cf. Remark 1).

1.1 2.1 Regular languages and finite state automata

To give motivation for the study of finite state machines (FSAs), we start with a simple example of a regular expression, which is a string of characters from an alphabet (e.g. the letters “a”-“z”), with placeholders for arbitrary symbols (commonly denoted with a .), as well as quantifiers (e.g. the Kleene star ⋆). For instance, the regular expression hello only matches the word “hello”, whereas a regular expression a⋆b would match a string conprising any number of “a”s (including zero), and ending with “b”, such as “aaaaab”, or “ab”, or “b”.

Example 1

Take the regular expression a⋆b over the lowercase letters of the English alphabet. Then a finite state automaton that checks whether a string matches the regular expression would be

where the double lines of state 1 signify an accepting state. Given the string “aaab”, one starts in the initial state q0 and ingests all “a”s at the beginning of the string; once “b” is encountered, we jump to the accepting state qf. Similarly, had the string been “aaabc”, there would now be a “c” left to be ingested and the finite state automaton proceeds to state q2. Since q2 is not an accepting state, the regular expression does not match the string.

Notice that this model is deterministic: the automaton does not use randomness in making transitions, or indeed at any step at all. Any regular expression can be expressed as a finite state automaton—they are in fact equivalent, in the sense that both accept regular languages (Hopcroft et al. 2001, sec. 3.2).

With our newly gained intuition, we can now naturally define deterministic finite state automata as follows.

Definition 2 (Deterministic Finite State Automaton (DFA))

A deterministic finite state automaton is a tuple (Q, Σ, δ, q0,F) of a finite set of states Q, an alphabet Σ, an initial state q0 ∈ Q and a set of accepting states \(F\subseteq Q\). The allowed transitions are described by the transition function δ : Q ×Σ→Q.

While regular expressions and FSAs are equivalent, there exist pathological examples for which enumerating the possible intermediate states incurs an exponential overhead.

Example 2

Take the regular expression .⋆ab.....—which, written in this way, requires ten characters to note down. It is easy to see that this regular expression matches any string where the three characters preceding the five last characters equal “ab”. However, since for every state q ∈ Q the function δ allows precisely one forward transition per letter in the input string, the DFA has to memorise all the past characters it has seen, and thus requires a number of states that is exponential in the length of the regular expression (in an example such as the above) and/or exponential in the input length (e.g. for an expression like .⋆ab.⋆), see Hopcroft et al. (2001, sec. 2.3.6]).

To circumvent such a scenario, it would be beneficial to introduce the possibility of branching in order to attempt multiple matches in parallel, i.e. to have a list of multiple head states at which to attempt the next match. This is formalised with a nondeterministic finite state automaton, defined as follows.

Definition 3 (Nondeterministic Finite State Automaton (NFA))

An NFA is a tuple (Q, Σ, δ, q0,F), where Q, Σ, q0 and F are defined as in Definition 2, while now the transition function allows a subset of Q to as possible target states for any transition, i.e. δ : Q ×Σ→2Q.

Example 3

An NFA for the regular expression .⋆ab..... is given by

The initial state q0 is set up such that it will always be active, while—as soon as an “a” is encountered—there is the possibility of branching out to qa to explore whether the successive letters match the rest of the regular expression.

Note that in Example 3, as depicted, we dropped the non-accepting states after qf; we simply implicitly assume for NFAs that every state q ∈ Q has a link to a non-accepting state for all the missing transitions not yet present.

There is one final variant of nondeterministic automata we wish to define, which are called 𝜖-NFA because they allow so-called 𝜖-transitions, which need not be conditioned on reading an input symbol.

Definition 4 (𝜖-NFA)

An 𝜖-NFA is an NFA with the addition of 𝜖-transitions, i.e. transitions that can be taken unconditionally.

NFAs or 𝜖-NFAs appear intrinsically more powerful than DFAs, as they give more freedom in the way the parsing tree is traversed. However, this is not the case.

Theorem 5 ()

The languages recognisable by NFAs, DFAs, and regular expressions, are all equal, and are called regular languages.

It is known (Thompson 1968) that a regular language can be parsed in time O(mn), where m is the length of the regular expression, and n is the length of the input string.Footnote 4

So what is the configuration of an NFA at any one point? In contrast to a DFA, the internal state the automaton in Example 3 is not anymore a single state q ∈ Q, but it will have multiple hypotheses: for instance, for an input “xyaaxabx...”, the successive state of the automaton from Example 3 will be

At every time step, the set of hypotheses is the union of all the hypotheses that δ yields, when applied to the current list of marked vertices.

To be precise and because we will need it later we define the set of possible configurations for finite state automata as follows.

Definition 5 (DFA and NFA State Space)

The set of possible states for a DFA as defined in Definition 2 is Q. The set of possible states for an NFA and 𝜖-NFA is defined as \({{\varOmega }}\subseteq 2^{Q}\), such that c ∈Ω iff there exists an input string s ∈Σ∗ such that the automaton, starting in its initial configuration is in state c after ingesting it.

Note that for DFAs we implicitly assumed that all such states Q are reachable from its initial configuration q0, and a suitably chosen input.

We finally remark the following.

Remark 1

DFAs, NFAs, and 𝜖-NFAs all ingest one input symbol per step. If there is an 𝜖 transition, the instantaneous state of an 𝜖-NFA is that of all the states in Q reachable with 𝜖 transitions at any given moment.

In particular, what Remark 1 exemplifies is that there is no notion of “𝜖-loops”; if there is such a loop between two q1,q2 ∈ Q, the state of the automaton is in both q1 and q2 at the same time.

1.2 2.2 Context-free grammars and pushdown automata

While regular languages play an important role in computer science, it is straightforward to come up with an example of a language that cannot be parsed with an NFA, e.g. palindromes, or all strings of the form anbn for  . Regular grammars are the most restrictive ones in the Chomsky hierarchy (Chomsky 1956), i.e. they are Type-3 grammars.

. Regular grammars are the most restrictive ones in the Chomsky hierarchy (Chomsky 1956), i.e. they are Type-3 grammars.

The palindrome example can be captured by going one level further up in the hierarchy, to Type-2 or context-free grammars (CFGs). They form a strict superset of regular languages and encompass common structures of modern programming languages such as nested balanced opening and closing brackets, e.g. {()([…]…)}.

While CFGs find wide application, e.g. for parsing (Hopcroft et al. 2001, sec. 5.3.2) or markup languages (Hopcroft et al. 2001, sec. 5.3.3), they still fail to capture more complicated language features, even ones as fundamental as requiring that variables have to be declared before they can be used within a scope; in fact, most widely used programming languages are not described with a context-free grammar. Indeed, the fact whether a programming language is Turing complete is independent on where its syntax is placed in the Chomsky hierarchy; the programming language “whitespace” can be described with a regular expression, whereas any language using brackets can easily be seen to require a stack, which goes beyond the capabilities of an NFA.

Instead of digressing too far into the theory of languages, we follow the same pattern as in Appendix 2.1 and discuss the natural machine that comes with CFGs. As mentioned, the existence of a stack seems to be necessary to recognise a context-free grammar, and in fact it also turns out to be sufficient.

Definition 6 (Pushdown Automaton (PDA)

A pushdown automaton is an 𝜖-NFA with access to a stack, which itself is given by a stack alphabet Γ with initial stack configuration (Z0) ∈Γ, and such that the transition operation

always pops the top element a ∈Γ off the stack and returns a list of pairs (q, s) ∈ Q ×Γ∗ that indicate what state to transition to, and what string to push onto the stack. Here Γ∗ is the set of strings of arbitrary length in the stack alphabet, and 𝜖 is the empty input symbol.

Theorem 6 ((Hopcroft et al. 2001, sec. 6.3))

Context-free grammars are equivalent to pushdown automata.

As for the case of NFAs, there exist efficient algorithms based on dynamic programming such as an Earley parser that can decide whether a CFG accepts an input string, namely in time O(n3) for a string of length n (Earley 1970; Younger 1967).

As in Definition 5, we define the set of possible configurations that a pushdown automaton can be in as follows.

Definition 7 (PDA State Space)

A PDA M’s state space \({{\varOmega }}\subset 2^{Q\times {\varGamma }^{*}}\) is the set of all those configurations of M reachable from its initial configuration (q0, (Z0)) with a suitably chosen input string s ∈Σ∗.

We point out that an instantaneous state of a PDA can be a larger list than an instantaneous state of an NFA, since for any two possible chosen paths towards a state q ∈ Q, depending on the path the stack content can vary. We further note that the stack size is, in principle, unlimited, but as the transition function is specified a priori and is of finite size, the amount of information that can be pushed onto the stack for each step is upper-bounded by a constant as well; the stack can thus grow at most linearly in the runtime of the PDA.

Appendix 3. Biased quantum sampling from a regular or context-free grammar

In this section we rigorously proof Theorem 3, which we restate for completeness.

Theorem 6

Assume we are given a classical probabilistic algorithm that, in time T(n), produces uniform samples of length n from a language, and we are also given a list of independent random variables X1,…,Xn with pdfs pi, j for i = 1,…,n and j = [Σ]. Then we can construct a quantum circuit \(\mathbf {U}_{\mu ^{\prime }}\) that produces a state \(|{\mu ^{\prime }}\rangle \epsilon \)-close to the one in Eq. (3). The algorithm runs in time O(T(n)1.6 × n3κ/𝜖2), where κ is an upper bound on the relative variance of the conditional probabilities \(Pr(a|s_{1} {\dots } s_{i})\), for a, si ∈Σ, for the variable Xi+ 1 given the random string XiXi− 1⋯X1.

Proof

Using Lemma 1, translate the parser—which takes its input step by step—into a sequence of unitaries U = Un⋯U1. Considering a single unitary Ui at the ith step, it is clear that it can be broken up into a family of unitaries \((\mathbf {U}_{i}^{a} )_{a\in {{\varSigma }}}\), such that each \(\mathbf {U}_{i}^{a}\) is a specialisation of Ui when given a fixed input symbol a ∈Σ. We define \(\mathbf {V}_{i}^{a}\) to perform \(\mathbf {U}_{i}^{a}\), and in addition store the input a in some ancillary workspace, e.g. via \(\mathbf {V}_{i}^{a}|\phi \rangle |{\xi }\rangle =(\mathbf {U}_{i}^{a}|\phi \rangle )|{\xi \oplus a}\rangle \). Then define the block-diagonal unitary \(\mathbf {V}_{i} := \text {diag}(\mathbf {V}_{i}^{a})_{a\in {{\varSigma }}}\), which acts like a controlled matrix, meaning that if Vi acts on some state |ψ〉 = |a〉|ϕ〉, then \(\mathbf {V}_{i}|\psi \rangle = |{a}\rangle \mathbf {V}_{i}^{a}|\phi \rangle \). Naturally this works in superposition as well, e.g. \(\mathbf {V}_{i}(\alpha |{a}\rangle + \beta |{b}\rangle )|\phi \rangle = \alpha |{a}\rangle \mathbf {V}_{i}^{a}|\phi \rangle + \beta |{b}\rangle \mathbf {V}_{i}^{b}|\phi \rangle \). We further assume that the \(\mathbf {V}_{0}^{a}\) take as initial state |0〉|q0〉.

The final step in augmenting the parser is to extend Vi to carry out a controlled multiplication: for a finite set of numbers  (e.g. fixed precision), and d1,d2 ∈ F, we write \(\mathbf {V}_{i}(d_{1})|{a}\rangle |{d_{2}}\rangle |\phi \rangle = |{a}\rangle |{d_{1} \times d_{2}}\rangle \mathbf {V}_{i}^{a}|\phi \rangle \). We denote this extended unitary for step i with \(\mathbf {U}^{\prime }_{i}\).

(e.g. fixed precision), and d1,d2 ∈ F, we write \(\mathbf {V}_{i}(d_{1})|{a}\rangle |{d_{2}}\rangle |\phi \rangle = |{a}\rangle |{d_{1} \times d_{2}}\rangle \mathbf {V}_{i}^{a}|\phi \rangle \). We denote this extended unitary for step i with \(\mathbf {U}^{\prime }_{i}\).

The next ingredient we take is the classical uniform language sampler. Once again using Lemma 1, we raise it to a unitary W, which takes as input a prefix sm := a1⋯am of the m previously seen tokens, and a list of distributions over the future weights Wm := (pi, j)m<j≤n. These are the distribution of tokens for each of the Xj. We then augment W to a circuit \(\mathbf {W}^{\prime }\) that quantumly performs the following classical calculations, in superposition over its input:

-

1.

Draw S samples uniformly at random from the grammar starting at strings prefixed with sm (which is certainly possible given an algorithm that samples uniformly from the entire regular/context-free language, as one can derive a language which is also regular/context-free, but starts with strings from the given prefix). Denote this list with B := {b1,…,bS}.

-

2.

Group the samples B into bins Ca of samples with the same first token a ∈Σ, i.e. Ca = {b ∈ B : b = a??⋯?}, where ? stands for any token in the alphabet Σ.

-

3.

Calculate the total of the probabilities of each bin Ca where each element is weighted with respect to the future probabilities given in list Wm, which yields a distribution D = (da)a∈Σ.

It is straightforward to write the unitary \(\mathbf {W}^{\prime }\) that then takes a state  —the first register for storing a number in F, and the second for storing a letter—and a list of such weights D to a weighted superposition \(\mathbf {W}^{\prime }(D)|{0}\rangle = {\sum }_{a\in {{\varSigma }}} \sqrt {d_{a}}|{d_{a}}\rangle |{a}\rangle \) (where for the sake of simplicity we drop the scratch space register that is certainly required). Furthermore, we need a controlled unitary Q that, given some state |h〉|a〉 where h = h(a) in some specified fashion—which we can demand the \(\mathbf {V}_{i}^{a}\) produce—uncomputes a and da from the second register, i.e. Q|h〉|da〉|a〉 = |h〉|00〉.

—the first register for storing a number in F, and the second for storing a letter—and a list of such weights D to a weighted superposition \(\mathbf {W}^{\prime }(D)|{0}\rangle = {\sum }_{a\in {{\varSigma }}} \sqrt {d_{a}}|{d_{a}}\rangle |{a}\rangle \) (where for the sake of simplicity we drop the scratch space register that is certainly required). Furthermore, we need a controlled unitary Q that, given some state |h〉|a〉 where h = h(a) in some specified fashion—which we can demand the \(\mathbf {V}_{i}^{a}\) produce—uncomputes a and da from the second register, i.e. Q|h〉|da〉|a〉 = |h〉|00〉.

Together with the sequence of parser unitaries \(\mathbf {U}_{i}^{\prime }\), the overall quantum circuit Uμ—depicted in Fig. 3—can then be constructed as follows:

For a partial string s1s2⋯si of length i, we denote the set of all strings in the grammar prefixed with letters of s with \(\mathcal {A}(s_{1}{\dots } s_{i})\). At every step i in the algorithm we sample the expectation value of a future hypothesis continuing with some token a, weighted by their individual likelihood pij. The sampling procedure then yields an empirical distribution (da)a∈Σ, which we denote with

where the S sampled hypothesis is given in list B = {b1,…,bS} with individual letters bj = bj,1⋯bj, n ). As usual, χ[⋅] denotes the indicator function, and

Our goal is to show that the algorithm reproduces the desired weight distribution given in Eq. (3), i.e.

where

To estimate the total probability distribution to error 𝜖 in total variation distance, it suffices to approximate each conditional distribution to error 𝜖/n, and thus we must show how many samples S are required for da to be a good estimator for \(\text {Pr}(a|s_{1}{\dots } s_{i})\).

First note that fsi(a) = usi(a)/vsi for

It is straightforward to calculate that

and so \(\mathbb {E}(u^{si}(a))/\mathbb {E}(v^{si})= \text {Pr}(a|s_{1} {\dots } s_{i})\), the value we are trying to estimate.

Therefore it suffices to take enough samples S such that the usi(a) are close to their mean in relative error (and thus vsi is also close in relative error, since \(v^{si}={\sum }_{a} u^{si}(a)\)).

Noting that \(u^{si}(a)=\frac {1}{S}{\sum }_{j=1}^{S} Y_{j}\) for i.i.d. random variables Yj, we have that \(\text {Var}(u^{si}(a)) = \frac {1}{S} \text {Var}(Y)\). Therefore by Chebyshev’s inequality, to get a 𝜖/n relative error approximation requires the number of samples S to be at least

By assumption \(\text {Var}(Y)/\mathbb {E}(Y)^{2} \leq \kappa \), and so the total number of uses of the sampler over all n steps of the algorithm is O(κn3/𝜖2) as claimed. □

We note that variants of this sampling algorithm are certainly possible: a naïve approach would be to just sample from the product-of-powerlaws distribution and postselect on the resulting strings being in the grammar; the performance of this will then depend on the number of strings in the grammar vs. the number of all possible strings. Another method could be to execute the uniform sampler in superposition, and perform amplitude amplification on the resulting quantum state to reintroduce the power-law bias. The number of amplification rounds will again depend on the distribution of the strings in the grammar.

Appendix 4. Most likely parse: full query bound

In this section we rigorously prove the integral runtime expression in Lemma 10.

Expected runtime RT1(R, k, n) as evaluated for R = 10 and various k (top row: k ∈{0.2,0.4,0.6,0.8}, middle row: k ∈{1.2,1.4,1.6,1.8}, bottom row: k ∈{2.5,3,3.5,4}, always from left to right), vs. the same parameters used for \(\text {RT}_{1^{\prime }}(R,k,n)\) (dashed line), where the discrete probabilities from the power law are approximated with a continuous Pareto distribution. On the x-axis is the length of the input sequence n