Abstract

Embodied Digital Technologies (EDTs) are increasingly populating private and public spaces. How EDTs are perceived in Hybrid Societies therefore requires prior consideration. However, findings on the social perception of EDTs remain inconclusive. We investigated the social perception and trustworthiness of robots and telepresence systems (TPS) and aimed to identify how observers’ personality traits were associated with the social perception of EDTs. To this end, we conducted two studies (N1 = 293, N2 = 305). Participants watched short video sequences of a space sharing conflict between a human and each of five different EDTs and rated the EDTs in terms of anthropomorphism, sociability/morality, activity/cooperation, competence, and trustworthiness. The TPS were equipped with a tablet on which a person was visible. We found that the rudimentarily human-like TPS was perceived as more anthropomorphic than the automated guided vehicle, but no differences emerged for the other social dimensions. For robots, we found mixed results, but overall higher ratings on social dimensions for a human-like robot than for a mechanical one. Trustworthiness was attributed differently to the EDTs only in Study 2, with a preference for TPS and more human-like robots; in Study 1, we did not find any such differences. Personality traits were associated with attributions of social dimensions in Study 1; however, these results did not replicate, and thus the associations remain ambiguous. With the present studies, we add insights into the social perception of robots and provide evidence that the social perception of TPS should be taken into consideration before their deployment.

1 Introduction

Interactions between human beings and Embodied Digital Technologies (EDTs), that is, artificial agents that are physically present in areas previously occupied only by human beings, are a focus of current research. While common EDTs such as industrial robots are more or less static and remain in restricted environments, social robots and telepresence systems (TPS) are bound to inhabit public spaces. When they do, interactions with laypeople are inevitable and even desired, and these interactions should therefore be as smooth as possible to avoid problematic situations. Of course, technical requirements such as security and safety need to be met: EDTs should be able to navigate the physical world without causing accidents, and data protection should be ensured. However, technical details are not the only factor in a smooth integration. As EDTs become part of humans’ everyday lives and play a role, albeit a smaller one, in social interactions, their social perception comes into play. Social perception governs social interactions in societies (Cuddy et al. 2008), which could soon include EDTs as artificial actors in so-called Hybrid Societies (Meyer et al. 2022). Social perception of humans comprises the well-established dimensions of the Stereotype Content Model, competence and warmth (Fiske et al. 2002), or, in a more differentiated approach, competence as well as sociability and morality as subdimensions of warmth (Kervyn et al. 2015). However, research has shown that these dimensions are only partly attributable to robots, or that people are unwilling to attribute dimensions such as morality to them (Bretschneider et al. 2022; Mandl et al. 2022a, b). Social perception of robots has been a topic of interest for several years (e.g., Carpinella et al. 2017; McKee et al. 2022; Naneva et al. 2020). Users should accept robots as helpful assets and should therefore perceive them positively.
This could be achieved by designing them in a way that fits the intended application area (Roesler et al. 2022). For instance, android robots, which appear eerily human-like, might fall prey to the uncanny valley phenomenon (MacDorman and Ishiguro 2006). Robots that appear to have a broad array of functions, whether by design or through the manufacturer’s advertising, could provoke rejection if their actual functions fall short (Malle et al. 2020). Furthermore, robots that are perceived as incompetent or unfriendly might be adopted less readily. Low trust is especially problematic and might lead to rejection of the technology in question (Hancock et al. 2011b; Lee and See 2004).

A specific type of EDT is the telepresence system. The term TPS refers to a technology that enables people to act in a distant location, that is, to communicate or explore as if they were physically present in that location (Merriam-Webster, n.d.). Especially during the COVID-19 pandemic, this technology gained attention in research as an alternative to direct contact in the care of COVID-19 patients, with initial positive evaluations among patients and health-care workers (Ruiz-del-Solar et al. 2021). Under COVID-19 regulations, TPS might be useful assets for making social contact easier and safer, but drawbacks such as ethical challenges should be taken into account (Isabet et al. 2021). However, little research on the social perception of TPS has been carried out to date (Isabet et al. 2021; Mandl et al. 2023; Ruiz-del-Solar et al. 2021; Savela et al. 2018).

In the present studies, we aimed to close this gap by investigating not only robots but also TPS, thus providing a more comprehensive view of the social perception of EDTs in a space sharing conflict with a human. We investigated the dimensions of competence, anthropomorphism, sociability/morality, and activity/cooperation, as suggested by Mandl et al. (2022a).

2 Embodied digital technologies

Embodied Digital Technologies is an umbrella term for novel technologies that are physically present in public and private spaces and thus take part in Hybrid Societies (Meyer et al. 2022). They comprise technologies that can move and act in public and private spaces, such as robots, as well as technologies that people are equipped with, such as bionic prostheses (e.g., Mandl et al. 2022b). EDTs can be autonomous or partly or permanently remote-controlled. In the present studies, we focus on two specific types of EDTs: robots and telepresence systems.

Robots come in many forms and are used in many contexts, such as social, industrial, or even military settings. Within Hybrid Societies in particular, two types of robots require attention: industrial and social robots. The present studies are concerned with social robots, that is, physically embodied artificial agents that have features to communicate with human beings via social interfaces (Meyer et al. 2022). Social robots can be deployed in a variety of settings: For instance, zoomorphic robots are used, with varying success, as companions or therapeutic devices (Gácsi et al. 2013; Libin and Libin 2004; Melson et al. 2009; Scholtz 2010). For humanoid robots, deployment areas are even more diverse: They might staff hotel lobbies (Seo 2022) or assist individuals living independently as service robots (Scopelliti et al. 2005).

Telepresence systems allow users to explore areas that are currently inaccessible to them for certain reasons, such as (health) hazards or long distances: The users are not physically present but use TPS as stand-ins in those areas. Application areas include health care and search-and-rescue missions, among others. A review of studies from the past twenty years showed that TPS received predominantly positive feedback regarding design and core functionalities (Isabet et al. 2021). Isabet et al. (2021) pointed out that some challenges remain unsolved and require further research, for example ethical considerations such as data privacy, equal access to this technology, and possibly reduced direct social contact. Based on a literature review, Virkus et al. (2023) provided evidence that TPS have significant potential in education, for example in supporting students with health problems or disabilities: TPS enabled users to experience social situations more realistically than videoconferencing tools did. However, the authors pointed out that overall acceptance of TPS remained challenging (Virkus et al. 2023). Zillner et al. (2022) emphasized that TPS could enable pediatric patients with diseases affecting the central nervous system to participate in everyday school life and thus counteract social isolation. Overall, TPS were evaluated rather positively; consequently, we expect TPS to be deployed in a variety of settings. This highlights the importance of investigating the social perception of TPS as a relevant form of EDTs.

3 Social perception of EDTs

How EDTs are perceived in terms of social dimensions has been proposed to depend on three factors: first, the design of the EDT, that is, whether it appears human-, animal-, or machinelike; second, the number of robots; and third, interindividual differences among users (Fraune et al. 2015).

3.1 Anthropomorphism

Because humans readily anthropomorphize even simple everyday objects, such as their printer, anthropomorphism also takes a prime spot in Human-Robot Interaction (HRI) research. Anthropomorphic tendency is a multiply determined phenomenon of applying human characteristics to non-human entities, for instance, animals or even objects (cf. Epley et al. 2007). The non-human actor is attributed human-like emotional states, a mind, or behavioral characteristics typical of human beings (Epley et al. 2007). It is therefore important to note that anthropomorphism entails not only the morphological details of the non-human actor, but also the characteristics attributed to it. In the case of robots, anthropomorphism could be exploited to facilitate human-robot interaction, especially in meaningful social interactions (Duffy 2003). Whether this is advisable might depend on the setting in which the robot is employed (Roesler et al. 2022). When deployed in care services, humanoid robots that displayed emotions elicited negative emotions in elderly potential users, even though these users’ general attitudes toward robots were predominantly positive (Johansson-Pajala et al. 2022). Assistance robots, designed to eventually replace assistance dogs, were disregarded as emotional companions, even though their technical competence was anticipated to be moderate to high (Gácsi et al. 2013). However, other findings implied that the perception of robot dogs was rather ambivalent: Melson et al. (2009) found that even though the robot animal was perceived primarily as a technical artifact, it was still perceived as a ‘social other’, that is, it was attributed some qualities found in living animals.

Designing humanoid robots is far from trivial: Depending on design choices such as the robot’s color, its facial details, or whether it had hair, users categorized the robot as an in-group or out-group member and subsequently evaluated it differently (Eyssel and Kuchenbrandt 2012). This suggests that (humanoid) robots are stereotyped similarly to human beings.

However, as Fossa and Sucameli (2022) put it, “(…) implementing gender and other social cues in the design of social robots – if not discriminatory and if wisely dosed – is expected to improve human-machine interactions significantly” (p. 23). Furthermore, the authors questioned the viability of gender-neutral robots, because even if a social robot is designed to appear gender-neutral, users might still project biases onto it (Fossa and Sucameli 2022).

3.2 Sociability, morality, and competence

Depending on their morphological features, robots were attributed more or less warmth (Carpinella et al. 2017), competence (Carpinella et al. 2017; Mandl et al. 2022b), and sociability and morality (Bretschneider et al. 2022; Mandl et al. 2022b). For the dimensions commonly associated with social interaction, human-likeness was correlated with higher perceived warmth, sociability, and morality (Bretschneider et al. 2022; Carpinella et al. 2017; Mandl et al. 2022b). For competence, research revealed a mixed picture: Carpinella et al. (2017) and Paetzel et al. (2020) found that competence was positively associated with human-likeness, whereas Mandl et al. (2022b) and Bretschneider et al. (2022) found that machinelike, i.e., industrial, robots were perceived as more competent. This was proposed to be due to the very clear application area of the specific robot, in contrast to the more abstract application area of social or android robots. Furthermore, perceived competence was the trait that stabilized fastest after repeated encounters with the same robot (Paetzel et al. 2020). Jung et al. (2022) emphasized the importance of competence over warmth attributed to non-humanoid robots in care, advocating the use of less human-like and more machine-like robots in care settings. The authors assumed that by reverting to non-humanoid robots, the problem of (human) stereotyping could be circumvented (Jung et al. 2022). Robots would then serve as mere tools to execute tasks that demand competence, but not warmth (Jung et al. 2022). This would imply that, apart from competence, social dimensions are less important for the perception of robots, which is in line with current criticism of anthropomorphic robots (Johansson-Pajala et al. 2022).
Framing, i.e., whether a robot was framed as anthropomorphic or functional, was associated with participants’ willingness to donate money to ‘save’ the robot from malfunctioning: Anthropomorphic framing had a negative effect on willingness to donate (Onnasch and Roesler 2019). The authors pointed out that functional awareness, i.e., awareness of the robot’s abilities as a tool, was more important than anthropomorphic framing. However, other researchers challenged this view and reported that participants interacting with artificial agents attributed warmth and competence to these agents and preferred warm agents over cold ones (McKee et al. 2022). Surprisingly, participants preferred artificial agents that they perceived as incompetent over those that they perceived as competent (McKee et al. 2022). This adds a new facet to potential expectations toward robots in cooperative settings and again highlights the importance of context in HRI. Furthermore, Paetzel et al. (2020) found that, for perceived competence, the robots’ appearance and interaction modalities played a bigger role than the content of the interaction. Additionally, interacting with a robot increased its likeability: After a positively perceived interaction, participants liked the robot more (Paetzel et al. 2020). For telepresence systems, prior research indicated generally positive attitudes toward the deployment of TPS in health settings among both health staff and patients (Ruiz-del-Solar et al. 2021; Savela et al. 2018).

3.3 Trust

No matter how sociable or competent an EDT is perceived to be, if people do not trust it, they will not use it, i.e., collaborate with it in the intended ways (Malle et al. 2020). Trust is therefore an intensively investigated aspect of HRI: In workplace settings, trust mediates the relationship between a robot’s usability and the willingness to use it (Babamiri et al. 2022). Trust in robots can be seen as a multidimensional concept comprising two superordinate factors (performance trust and moral trust) with two subfacets each (reliable and capable within performance trust; sincere and ethical within moral trust; Malle and Ullman 2021; Ullman and Malle 2018). Hancock et al. (2011a) identified three factors (human, robot, and environmental) that were associated with trust in a robotic teammate and, in turn, influenced human-robot team interaction. However, with regard to social robots, a recent meta-analysis showed that people neither explicitly trusted nor distrusted social robots. Given the variability in estimated trust across studies, the authors suggested that other factors might have moderated the extent to which people trusted social robots (Naneva et al. 2020).

Trust is furthermore associated with anthropomorphism: Research has shown that anthropomorphism not only increased trust (Waytz et al. 2014) but also increased trust resilience, that is, resistance to breakdowns in trust (de Visser et al. 2016). Robot form was found to predict perceived trustworthiness, primarily through the robot’s perceived intelligence and classification (Schaefer et al. 2012). However, Nijssen et al. (2022) found that for moral trust, that is, trusting a robot to make moral choices, mechanical robots were preferred over humanoid robots, at least if the robots were framed as possessing affective states. The authors suggested that this pointed toward the Uncanny Valley hypothesis (MacDorman 2019; Mori et al. 2012): Robots that closely resemble humans and also possess other human-like traits, in this case affectivity, were perceived as a threat to human distinctiveness and were therefore rejected (Nijssen et al. 2022).

3.4 Interindividual differences in HRI

Apart from design aspects of the robot, individual differences play a significant role in HRI. User characteristics such as age, gender, or educational level were associated with different perceptions of robots: Younger people were generally less cautious about interacting with robots and viewed them more positively than older individuals (Bishop et al. 2019). In contrast, older people believed that robots could contribute to their personal happiness, that is, improve their quality of life (Arras and Cerqui 2005). This is not to say that older people would readily welcome robots into their homes, but it shows that the (perceived) area of use was associated with the readiness to actually use the technology (Arras and Cerqui 2005; Savela et al. 2018). Younger people also tended to anthropomorphize more than older people, highlighting the importance of taking generational differences into account (Letheren et al. 2016).

Studies concerned with associations between personality traits and social perception of robots have revealed inconsistent results: In some studies, Affinity for Technology Interaction (ATI), that is, the tendency to actively engage in intensive technology interaction (Franke et al. 2019), and Need for Cognition (NFC), that is, the tendency of an individual to engage in and enjoy thinking (Cacioppo and Petty 1982), were correlated with sociability (Mandl et al. 2022a, Study 2): Individuals higher in NFC and ATI attributed more sociability to robots. However, other analyses of the associations between ATI or NFC and attributions of social dimensions to robots yielded non-significant results (Mandl et al. 2022a, Study 1; 2022b). Naturally, attitudes toward robots affect how people perceive them (Nomura et al. 2008): People with a negative image of robots attribute social dimensions, that is, warmth or sociability/morality, to a lesser extent. The Negative Attitudes Toward Robots Scale (NARS; Bartneck et al. 2005; Nomura et al. 2008), although designed primarily for robots, has been successfully applied to assess attitudes toward telepresence systems (Tsui et al. 2011), which by design explicitly require a human in the loop and might therefore be perceived differently from robots. The tendency to anthropomorphize is not universal; instead, it stems from differences in culture, norms, experience, education, cognitive reasoning styles, and attachment to human and nonhuman agents (Epley et al. 2007), resulting in stable individual differences (Waytz et al. 2010). Another factor that influences attitudes toward robots is familiarity: Contact with robots, be it direct or indirect, changes attitudes analogously to intergroup relationships between humans (Sarda Gou et al. 2021).

4 The present studies

Previous research revealed positive associations between the human-likeness of robots and perceived warmth, sociability, and morality (e.g., Bretschneider et al. 2022; Carpinella et al. 2017; Mandl et al. 2022a; Paetzel et al. 2020), and negative associations with competence (Bretschneider et al. 2022; Mandl et al. 2022b; but see Carpinella et al. 2017; Paetzel et al. 2020); these contradictory results for competence might be due to differing intended application areas. However, whether these results apply to TPS is unclear. The aim of the present studies was therefore to gain insights into the social perception of telepresence systems and to deepen the understanding of the social perception of robots. To this end, we investigated the following research questions (RQ) and hypotheses (H) in two consecutive studies.

RQ1: Are different telepresence systems perceived differently in terms of perceived (a) anthropomorphism, (b) sociability/morality, (c) activity/cooperation, and (d) competence?

RQ2: Is there an association between (I) age and/or (II) gender of the users and attributions of (a) anthropomorphism, (b) sociability/morality, (c) activity/cooperation, and (d) competence?

RQ3: Is there an association between (I) negative attitudes toward robots and/or (II) anthropomorphic tendency and/or (III) Need for Cognition and/or (IV) Affinity for Technology Interaction of users and the perceived (a) anthropomorphism, (b) sociability/morality, (c) activity/cooperation, and (d) competence of different telepresence systems?

According to prior findings (e.g., Bretschneider et al. 2022; Mandl et al. 2022a), robots were perceived very differently depending on their human-likeness. To replicate these findings, we posed the following hypotheses (H):

H1: The more morphologically human-like a robot is designed, the more (a) anthropomorphism, (b) sociability/morality, and (c) activity/cooperation are attributed to it.

H2: The more morphologically human-like a robot is designed, the less competence is attributed to it.

Furthermore, research has shown that attributions of anthropomorphism were associated with the perceivers’ gender (e.g., Mandl et al. 2022a).

H3: People identifying as female attribute less anthropomorphism to robots than people identifying as male.

Since associations between personality variables and social perception of robots remained largely unclear (e.g., Mandl et al. 2022b), we investigated how attitudes toward robots and the anthropomorphic tendency were associated with social perception of robots by posing the following research question:

RQ4: Is there an association between (I) negative attitudes toward robots and/or (II) anthropomorphic tendency of users and the attributions of (a) anthropomorphism, (b) sociability/morality, (c) activity/cooperation, and (d) competence to robots?

Prior research has shown that trustworthiness of robots was linked to their design (Schaefer et al. 2012). We therefore investigated the following research question:

RQ5: Are there differences between various robots in terms of perceived trustworthiness?

5 Methods

Prior to data collection, both studies were preregistered on OSF (Study 1: https://osf.io/gzcx9/?view_only=af5d3c435b0642f7be2c5fd6b80230c3; Study 2: https://osf.io/asjtp/?view_only=8cac8d0b8589473296deed264ae58c50). The procedure was evaluated and approved by the Ethics Committee of Chemnitz University of Technology (#101552377). According to the Committee’s criteria, which include a sample of healthy adults, voluntary participation, noninvasive measures, no deception, and appropriate physical and mental demands on participants, the procedure was considered uncritical with respect to ethical aspects and did not require further ethical approval. Study 1 additionally assessed gender attributions to telepresence systems; these data will be used in subsequent studies.

We report how we determined our sample size, all data exclusions (if any), all manipulations, and all measures in the studies (Simmons et al. 2012).

5.1 Participants

We conducted an a priori power analysis with G*Power (version 3.1.9.6; Faul et al. 2007) using the following parameters for an ANOVA (fixed effects, omnibus, one-way): medium effect size f = 0.25, alpha error probability = 0.05, power = 0.95, and number of groups = 5. This resulted in a required total sample size of N = 305. We report the respective sample characteristics in the Study 1 and Study 2 sections.
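
The sample size can be re-derived outside G*Power. For a one-way fixed-effects ANOVA with k groups and total sample size N, power is 1 - P(F' <= F_crit), where F' follows a noncentral F distribution with k - 1 and N - k degrees of freedom and noncentrality lambda = f^2 * N (the parameterization G*Power uses for this test). The sketch below uses only the Python standard library. It implements the regularized incomplete beta function with the continued-fraction method from Numerical Recipes, whereas in practice `scipy.stats.ncf` would be the usual choice. The function names are hypothetical, and the sketch assumes equal group sizes, searching in steps of one participant per group. Under these assumptions, it should land at or very near the reported N = 305.

```python
import math


def _betacf(a: float, b: float, x: float) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, 301):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < 1e-14:
            break
    return h


def betainc_reg(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    # Use the direct fraction where it converges quickly, else the symmetry relation.
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def anova_power(f: float, n_total: int, k: int, alpha: float) -> float:
    """Power of the one-way fixed-effects ANOVA omnibus F test (requires f > 0)."""
    d1, d2 = k - 1, n_total - k
    a, b = d1 / 2.0, d2 / 2.0
    # Critical F(d1, d2) value, found by bisection on the beta scale y = d1*F / (d1*F + d2).
    lo, hi = 0.0, 1.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if betainc_reg(a, b, mid) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    y_crit = (lo + hi) / 2.0
    # Noncentral F CDF as a Poisson(lambda / 2) mixture of incomplete beta terms.
    half_lam = f * f * n_total / 2.0
    n_terms = int(half_lam + 10.0 * math.sqrt(half_lam) + 30)
    cdf = 0.0
    for j in range(n_terms):
        weight = math.exp(-half_lam + j * math.log(half_lam) - math.lgamma(j + 1))
        cdf += weight * betainc_reg(a + j, b, y_crit)
    return 1.0 - cdf


def required_total_n(f: float = 0.25, k: int = 5, alpha: float = 0.05,
                     target: float = 0.95) -> int:
    """Smallest total N (equal group sizes assumed) that reaches the target power."""
    n_per_group = 2
    while anova_power(f, k * n_per_group, k, alpha) < target:
        n_per_group += 1
    return k * n_per_group
```

Calling `required_total_n()` with the defaults above, which mirror the parameters in the text, returns the total sample size, and `anova_power(0.25, n, 5, 0.05)` gives the achieved power for any candidate `n`.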

5.2 Measures

5.2.1 Stimulus material

The stimulus material consisted of five videos, each showing an interaction between a human being and one of five different EDTs: two anthropomorphic robots, one zoomorphic robot, and two telepresence systems (Fig. 1):

Pepper. Pepper (Fig. 1a) by SoftBank Robotics is a humanoid robot that is approximately 1.20 m tall, weighs 28 kg, and is shaped like a typical human body with a head, torso, and arms. Pepper was developed as an IT and communicative companion robot and has various sensors (e.g., microphones, HD cameras, 3D distance sensors, gyro sensors, laser scanners, and bumper sensors) which, among other things, serve to detect people and subsequently interact with them. To move, Pepper uses three omnidirectional wheels hidden in its base, with which it can travel at up to 3.6 km/h. In addition, the head, torso, arms, wrists, and fingers can be moved, and Pepper can carry loads of up to 500 g. The stimulus material used Pepper (version 1.6) running the NAOqi OS 2.4.3 Linux operating system (an adapted Ubuntu 14.04 Trusty Tahr), programmed with Choregraphe Suite 2.4.3.28-r2. In response to the human cue that the robot could proceed, Pepper waved at the human.

Nao. Nao (Fig. 1b) by SoftBank Robotics is a bipedal humanoid robot that stands 57.4 cm tall and weighs 5.4 kg. It is equipped with various sensors for communication features such as vision, speech, hearing, and touch sensing. Nao has 25 degrees of freedom, enabling a wide range of movements such as walking, standing, and sitting. In contrast to Pepper, Nao has two separate legs, which makes more complex movements (e.g., kicking) possible (Lopez-Caudana et al. 2022) but makes Nao’s forward movement considerably slower (max. speed 0.3 km/h). The stimulus material used Nao (version V4) running the NAOqi OS 2.4.3 Linux operating system (an adapted Ubuntu 14.04 Trusty Tahr), programmed with Choregraphe Suite 2.4.3.28-r2. In response to the human cue that the robot could proceed, Nao waved at the human.

PhantomX AX Metal Hexapod MK-III. The PhantomX AX Metal Hexapod MK-III (Fig. 1c) was developed by Interbotix Labs and is an open-platform hexapod robot with a rugged full-metal frame. The robot’s structure is inspired by that of hexapod arthropods (Zangrandi et al. 2021): Each of its six legs has three degrees of freedom, provided by three servomotors per leg. This design gives the robot a high degree of flexibility and adaptability and allows it to move both forward and sideways. For the stimulus material, PhantomX was remotely controlled using a controller. In response to the human cue to allow the robot to move on, PhantomX changed its movement pattern, briefly moving sideways and then moving forward again.

AGV “Hubert”. The automated guided vehicle (AGV) nicknamed “Hubert” (Fig. 1d) is a robot developed in-house by the Institute for Machine Tools and Production Processes, Chemnitz University of Technology. The AGV was designed for use in intralogistics to assist pickers in transporting selected goods. It has both an autonomous driving mode and a mode for following and interacting with humans. The AGV uses three-stage tracking to maneuver independently, without a guidance system in the area (for a more detailed description of the sensors, see Winkler et al. 2022). For the present study, a tablet was mounted on the vehicle so that it could be used as a remotely controlled telepresence system. In response to the human cue that the robot could continue, the remotely connected person who was visible on the tablet waved at the human.

Double3. Double3 (Fig. 1e) by Double Robotics is a self-driving, two-wheeled telepresence robot. The robot consists of a head (tablet) and a base (wheels). The base contains two electric motors that move the robot and keep it balanced. Double3 is controlled remotely via a web connection; for the stimulus material, a controller was used. In response to the human cue that the robot could continue, the remotely connected person who was visible on the tablet waved at the human.

Both TPS were remotely controlled by the same person who was also visible on the tablet.

Fig. 1 Embodied Digital Technologies used in the stimulus videos. Note. Robots: (a) Pepper, (b) Nao, (c) PhantomX AX Metal Hexapod MK-III (Robotics and Human-Machine Interaction Lab at Chemnitz University of Technology); telepresence systems: (d) AGV “Hubert” (Institute for Machine Tools and Production Processes at Chemnitz University of Technology), (e) Double3. Both telepresence systems were equipped with a tablet on which the person who initiated the interaction was visible.

Video material. The following space sharing conflict was shown from the first-person perspective of the person involved (see Fig. 2): A person walks down a corridor in a building (Fig. 2a) and reaches a point where two corridors cross. One of the EDTs approaches the person from the left (Fig. 2b). Both stop at the point where their paths cross. With a hand gesture, the human signals to the EDT that it may proceed (Fig. 2c). The EDT then responds with a reaction adapted to the respective EDT (Fig. 2d) and moves on (Fig. 2e) while the human briefly looks after it (Fig. 2f).

The videos are on average 20 s long, with a deviation of ± 3 s due to the EDTs’ differing movement speeds. Because Nao moves considerably slower than the other EDTs, we decided to speed up its video by a factor of 1.5. All videos were filmed with a GoPro camera (HERO6) on a gimbal stick to enable stable image recording from a first-person perspective (point of view, POV). The videos were shot in an office building at Chemnitz University of Technology. All videos were presented in randomized order, with instructions to rate how participants perceived each EDT and how they thought the EDT would act, think, or react, even though this first impression might be wrong and revised later. In Study 1, we provided an ‘easy-out’ option: Participants could indicate that the respective attribute did not apply to robots at all, which resulted in missing values. In contrast, in Study 2, participants had to provide a rating for each EDT.

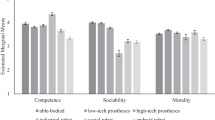

5.2.2 Items

Social Perception. We used the Social Perception of Robots Scale (SPRS; Mandl et al. 2022a) to assess three factors of social perception: anthropomorphism, sociability/morality, and activity/cooperation. In total, 18 items were presented as semantic differentials and rated on a five-point scale. The anthropomorphism dimension consists of eight items (e.g., natural – artificial; Cronbach’s α = 0.79 in Study 1 and 0.81 in Study 2), the sociability/morality dimension of six items (e.g., honest – dishonest; Cronbach’s α = 0.84 in Study 1 and 0.86 in Study 2), and the activity/cooperation dimension of four items (e.g., altruistic – self-serving; Cronbach’s α = 0.66 in Study 1 and 0.54 in Study 2). We averaged the items of each scale (Fig. 3).
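
The reliability coefficients and subscale scores above can be illustrated with a short sketch. It assumes that responses are already coded 1 to 5 in a consistent direction, with any reverse-poled semantic differential items recoded beforehand. It also codes the Study 1 ‘easy-out’ response as `None`. How missing values were actually handled in the analyses is not stated here, so two choices in the sketch are assumptions: listwise deletion for alpha and averaging over the answered items for scale scores. The function names are hypothetical.

```python
from statistics import mean, variance
from typing import List, Optional, Sequence

# A rating on the 1..5 scale, or None for the Study 1 "does not fit robots" option
# (hypothetical coding).
Response = Optional[int]


def scale_score(responses: Sequence[Response]) -> Optional[float]:
    """Average of the answered items of one subscale; None if all were skipped."""
    answered = [r for r in responses if r is not None]
    return mean(answered) if answered else None


def cronbach_alpha(data: Sequence[Sequence[Response]]) -> float:
    """Cronbach's alpha: k / (k - 1) * (1 - sum of item variances / variance of totals).

    data[p][i] is participant p's rating of item i. Rows containing a missing
    rating are dropped (listwise deletion, an assumption of this sketch).
    """
    complete: List[Sequence[Response]] = [row for row in data if None not in row]
    k = len(complete[0])
    item_vars = [variance([row[i] for row in complete]) for i in range(k)]
    total_var = variance([sum(row) for row in complete])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

For the anthropomorphism subscale, for example, each row of `data` would hold a participant's eight item ratings for one EDT. `scale_score` applied to the same eight ratings yields that participant's subscale value.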

Competence. To assess the perceived competence of the robots and telepresence systems, we used one item, rated as a semantic differential on a five-point scale (competent – incompetent), analogously to previous studies (Mandl et al. 2022a; Fig. 3).

Trustworthiness. We used the short version of the Multi-Dimensional Measure of Trust Scale, Version 2 (MDMTv2; Ullman and Malle 2019), which comprises one item; we translated it into German in consultation with one of the scale’s authors, B. Malle (personal communication, July 20, 2021). Participants were asked to rate how trustworthy they perceived the robot in question on a seven-point scale anchored at 1 = not at all and 7 = very much. In both studies, participants could indicate that they did not consider trustworthiness a fitting attribute for a robot (Fig. 4).

Affinity for Technology Interaction. We used the German version of the Affinity for Technology Interaction (ATI) Scale (Franke et al. 2019). Nine items were rated on a six-point scale (anchored at ‘not true at all’ and ‘very true’) to indicate whether people tend to interact with technological systems (e.g., I like to try out functions of new technical systems) and averaged into a scale (Table 1).

Need for Cognition. We used the German short version of the Need for Cognition (NFC) scale (Bless et al. 1994), comprising sixteen items (e.g., I consider finding new solutions to problems a fun activity), to assess NFC. The items were rated on a seven-point scale anchored at 1 = strong disagreement and 7 = strong agreement. We calculated a sum score (Table 1).

Attitudes Toward Robots. To assess attitudes toward robots, we used the German version (Bartneck et al. 2005) of the Negative Attitudes Toward Robots Scale (NARS; Nomura et al. 2008), comprising fourteen items (e.g., I would feel relaxed if speaking to a robot), rated on a five-point scale anchored at 1 = strong disagreement and 5 = strong agreement. We averaged the fourteen items, with higher scores indicating more negative attitudes toward robots (Table 1).

Anthropomorphic tendency. To assess the anthropomorphic tendency of the participants, we used the German version of the Individual Differences in Anthropomorphism Questionnaire (IDAQ; Waytz et al. 2010). Participants rated their agreement with fifteen statements (e.g., to what extent does technology – devices and machines for manufacturing, entertainment, and productive processes (e.g., cars, computers, television sets) – have intentions?) on a 10-point scale anchored at 1 = not at all and 10 = very much. We calculated an average score (Table 1).
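The personality questionnaires above are aggregated differently: ATI, NARS, and IDAQ as item means, NFC as a sum score. The Python sketch below illustrates that aggregation step. The text does not list which items are reverse-keyed, so the `reverse_idx` argument and the index used in the usage lines are hypothetical placeholders, not the scales’ actual scoring keys.

```python
from statistics import mean


def reverse_score(value: float, low: int, high: int) -> float:
    """Mirror a rating on a low..high scale, e.g. 2 on a 1..5 scale becomes 4."""
    return low + high - value


def score_scale(ratings, low, high, reverse_idx=frozenset(), method="mean"):
    """Recode reverse-keyed items, then aggregate one participant's ratings.

    reverse_idx holds 0-based positions of reverse-keyed items (hypothetical here).
    method is "mean" (ATI, NARS, IDAQ in the text) or "sum" (NFC in the text).
    """
    keyed = [reverse_score(r, low, high) if i in reverse_idx else r
             for i, r in enumerate(ratings)]
    if method == "mean":
        return mean(keyed)
    if method == "sum":
        return sum(keyed)
    raise ValueError(f"unknown method: {method!r}")


# Hypothetical participant; scale ranges follow the text, item keys do not.
ati = score_scale([4, 5, 3, 4, 2, 5, 4, 3, 4], low=1, high=6, reverse_idx={2})
nfc = score_scale([5] * 16, low=1, high=7, method="sum")
nars = score_scale([2] * 14, low=1, high=5)
```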

5.3 Procedure

The study was conducted as an online survey via Prolific Academic (Palan and Schitter 2018), and data were collected with Limesurvey (Limesurvey GmbH, n.d.). Participants were required to give informed consent to proceed with the study. After completing a sociodemographic questionnaire (age, gender, educational level), participants were shown the five stimulus videos of a short interaction with different EDTs in randomized order. After each video, participants rated the EDT on the 18 adjectives of the SPRS, one item on competence, and one item on trustworthiness. Afterwards, participants completed four additional personality questionnaires, presented in randomized order, assessing Need for Cognition, Affinity for Technology Interaction, anthropomorphic tendency, and negative attitudes toward robots. Lastly, we asked whether participants had any prior knowledge of robots and telepresence systems and whether they had previously interacted with such technical systems. Upon finishing, participants recruited via Prolific Academic were forwarded to Prolific (www.prolific.co) to receive a compensation of EUR 3.60. Participants recruited via social media and university circulars could choose between 0.5 credits toward their required study participation and taking part in a raffle to win EUR 20. The total processing time was approximately 20 min.

5.4 Statistical analysis

Each participant rated all five videos, so the repeated measures of the dependent variables were nested within participants. We tested whether perceived competence, anthropomorphism, sociability/morality, activity/cooperation, and trustworthiness varied between participants, which would indicate non-independence of the observations (Table 2).
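Non-independence of repeated ratings is commonly quantified with the intraclass correlation ICC(1), the share of rating variance attributable to differences between participants. The text does not state which index Table 2 reports, so the following is a hypothetical Python version of the one-way ANOVA estimator ICC(1) = (MSB − MSW) / (MSB + (k − 1) · MSW). It assumes a balanced design in which every participant rated all k EDTs, which does not hold for Study 1 with its easy-out missing values.

```python
from statistics import mean


def icc1(ratings: list[list[float]]) -> float:
    """One-way ANOVA ICC(1); ratings[i] holds participant i's k ratings.

    Assumes a balanced design: every participant rated all k stimuli.
    """
    n = len(ratings)
    k = len(ratings[0]) if ratings else 0
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need >= 2 participants with the same number (>= 2) of ratings")
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    # Between-participant and within-participant sums of squares.
    ss_between = k * sum((m - grand) ** 2 for m in row_means)
    ss_within = sum((x - m) ** 2 for row, m in zip(ratings, row_means) for x in row)
    ms_between = ss_between / (n - 1)
    ms_within = ss_within / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)
```

Values near 0 suggest little clustering; clearly positive values indicate that ratings from the same participant are correlated, which motivates a mixed-model analysis.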

We thus employed Mixed Models to account for the nested data. We used R (Version 4.1.1; R Core Team 2021) and the R packages dplyr (Version 1.0.7), sjPlot (Version 2.8.9), multilevel (Version 2.6), psych (Version 2.1.6), ggplot2 (Version 3.3.5), lme4 (Version 1.1-27.1), lattice (Version 0.20-44), tidyverse (Version 1.3.1), MuMIn (Version 1.43.17), effects (Version 4.2-0), lm.beta (Version 1.5-1), emmeans (Version 1.6.3), and rstatix (Version 0.7.0).
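The section does not give the model equation. Assuming that EDT type enters as a categorical fixed effect with dummy codes D_m (the coding actually used is not reported) and that age and gender are the covariates mentioned in the results, a random-intercept model for the rating y_ij of participant i on stimulus j could be sketched as follows; in lme4 formula syntax this corresponds roughly to `y ~ edt + age + gender + (1 | participant)`.

$$
y_{ij} = \beta_0 + \sum_{m=1}^{M-1} \beta_m D_{m,ij} + \beta_{\text{age}}\,\text{age}_i + \beta_{\text{gender}}\,\text{gender}_i + u_{0i} + \varepsilon_{ij},
\qquad u_{0i} \sim \mathcal{N}(0, \tau_{00}), \quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
$$

The random intercept u_0i absorbs each participant’s overall rating level; under this model, the intraclass correlation is τ00 / (τ00 + σ²).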

6 Study 1

6.1 Participants

The sample comprised N = 100 participants recruited via Prolific Academic (www.prolific.co) and N = 250 participants recruited via social networks and university circulars, resulting in a total sample size of N = 350. To account for outliers, we used box plots to identify and subsequently remove multiple outliers on the dependent variables anthropomorphism, sociability/morality, activity/cooperation, and competence. We did not identify any multivariate outliers. Fifty-seven participants failed the attention check and were subsequently removed from the sample, resulting in a final sample size of N = 293. To take part in the study, participants were required to be fluent in German. The mean age of the sample was M = 28.55 years (SD = 9.33, range: 18–66 years). The sample consisted of 184 female, 106 male, and three non-binary participants and was mostly highly educated, with 57.34% having obtained a university degree (high-school diploma: 31.40%, other degrees: 11.26%). All participants had a degree. Of the participants, 15.36% reported prior experience with TPS, and 13.99% had prior knowledge about TPS.
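Box-plot outlier screening usually relies on Tukey’s fences: values more than 1.5 interquartile ranges below the first quartile or above the third quartile. The text does not state the exact rule or quantile definition, so the Python sketch below is a hypothetical illustration that assumes the conventional 1.5 × IQR whiskers and the `"inclusive"` method of `statistics.quantiles` (R’s `boxplot` uses Tukey’s hinges, which can differ slightly in small samples).

```python
from statistics import quantiles


def boxplot_outlier_indices(values: list[float], whisker: float = 1.5) -> list[int]:
    """Return positions of values outside Tukey's fences (Q1 - w*IQR, Q3 + w*IQR)."""
    if len(values) < 2:
        raise ValueError("need at least two values")
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - whisker * iqr, q3 + whisker * iqr
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical scale scores; the last one lies far above the upper fence.
scores = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
print(boxplot_outlier_indices(scores))  # [9]
```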

6.2 Results

We fitted Mixed Models with random intercepts for the dependent variables attributed anthropomorphism, sociability/morality, activity/cooperation, competence (Fig. 3), and trustworthiness (Fig. 4) across the different types of stimuli and, where applicable, computed post-hoc Tukey tests. Data revealed a non-normal distribution for all dependent variables; we therefore employed bootstrapping with 10,000 iterations. We controlled for participants’ age and gender (Footnote 2). We investigated via correlation analyses how personality variables, namely NFC, ATI, anthropomorphic tendency, and negative attitudes toward robots, were associated with social perception of EDTs.
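The text reports 10,000 bootstrap iterations but not the bootstrap scheme, for example how resampling respected the nesting in participants. As a simplified stand-in, not the authors’ procedure, the Python sketch below resamples participants with replacement and forms a percentile confidence interval for the mean within-participant difference between two EDTs. Because each resampled unit is one participant’s paired difference, the clustering of ratings within participants is preserved. The function name and the default seed are hypothetical.

```python
import random


def bootstrap_paired_diff(ratings_a, ratings_b, n_boot=10_000, alpha=0.05, seed=1):
    """Percentile bootstrap CI for the mean of paired differences a - b.

    ratings_a[i] and ratings_b[i] are the same participant's ratings of two EDTs.
    """
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need equally long, non-empty rating lists")
    diffs = [a - b for a, b in zip(ratings_a, ratings_b)]
    n = len(diffs)
    rng = random.Random(seed)  # fixed seed for reproducibility
    # Resample participants (their paired differences) with replacement.
    boot_means = sorted(sum(rng.choices(diffs, k=n)) / n for _ in range(n_boot))
    lower = boot_means[int(alpha / 2 * n_boot)]
    upper = boot_means[int((1 - alpha / 2) * n_boot) - 1]
    return sum(diffs) / n, (lower, upper)


# Hypothetical ratings of two EDTs by five participants.
estimate, ci = bootstrap_paired_diff([4, 3, 5, 4, 2], [3, 3, 4, 2, 2])
```

Bootstrapping full mixed-model coefficients (for instance with a parametric or case bootstrap in R) is more involved; this sketch only conveys the resampling idea.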

Fig. 3 Estimated marginal mean scores for perceived competence, anthropomorphism, sociability/morality, and activity/cooperation in Study 1. Notes. Total N = 293. Ns per EDT: anthropomorphism: AGV = 122, Double3 = 125, PhantomX = 125, Nao = 141, Pepper = 145; sociability/morality: AGV = 75, Double3 = 96, PhantomX = 79, Nao = 92, Pepper = 92; activity/cooperation: AGV = 97, Double3 = 108, PhantomX = 90, Nao = 109, Pepper = 113; competence: AGV = 256, Double3 = 258, PhantomX = 243, Nao = 269, Pepper = 271. Error bars indicate standard errors.

Participants could indicate that attributes were not fitting to robots or telepresence systems, which resulted in missing values (Table 3).
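To make the extent of these missing values concrete, the Python sketch below divides the per-EDT Ns from the Study 1 figure notes by the total N = 293. It assumes that each N counts the participants with a usable score on that dimension; for example, 79 of 293 participants (about 27%) rated PhantomX on sociability/morality.

```python
N_TOTAL = 293

# Per-EDT Ns from the Study 1 figure notes.
NS = {
    "anthropomorphism": {"AGV": 122, "Double3": 125, "PhantomX": 125, "Nao": 141, "Pepper": 145},
    "sociability/morality": {"AGV": 75, "Double3": 96, "PhantomX": 79, "Nao": 92, "Pepper": 92},
    "activity/cooperation": {"AGV": 97, "Double3": 108, "PhantomX": 90, "Nao": 109, "Pepper": 113},
    "competence": {"AGV": 256, "Double3": 258, "PhantomX": 243, "Nao": 269, "Pepper": 271},
}


def rating_shares(ns: dict[str, dict[str, int]], n_total: int) -> dict[str, dict[str, float]]:
    """Proportion of participants contributing a score, per dimension and EDT."""
    return {dim: {edt: n / n_total for edt, n in by_edt.items()} for dim, by_edt in ns.items()}


shares = rating_shares(NS, N_TOTAL)
for dim, by_edt in shares.items():
    print(dim, {edt: f"{share:.0%}" for edt, share in by_edt.items()})
```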

6.2.1 Telepresence Systems

Research question 1 proposed that different telepresence systems would be perceived differently in terms of (a) anthropomorphism, (b) sociability/morality, (c) activity/cooperation, and (d) competence. We found that the telepresence systems were perceived differently only in terms of anthropomorphism (b = 0.39, SE = 0.09, t = 4.54, p < .001): AGV “Hubert” was perceived as less anthropomorphic than Double3 (ΔM = -0.37, SE = 0.09, t = -4.29, p < .001). The telepresence robots were not perceived differently in terms of sociability/morality (b = 0.15, SE = 0.10, t = 1.59, p = .113), activity/cooperation (b = 0.06, SE = 0.06, t = 0.94, p = .346), or perceived competence (b = -0.06, SE = 0.08, t = -0.77, p = .444).

Research question 2 was concerned with whether there would be an association between (I) age and/or (II) gender of the users and attributions of (a) anthropomorphism, (b) sociability/morality, (c) activity/cooperation, and (d) competence of different telepresence systems.

Age was uncorrelated with perceived anthropomorphism (r = −.12, p = .059), sociability/morality (r = .13, p = .099), activity/cooperation (r = .07, p = .339), and competence (r = .01, p = .841). Gender was uncorrelated with attributions of anthropomorphism (r = −.01, p = .876), sociability/morality (r = −.07, p = .333), activity/cooperation (r = −.06, p = .422), and competence (r = −.08, p = .076).

Research question 3 was concerned with whether there would be an association between (I) negative attitudes toward robots and/or (II) anthropomorphic tendency and/or (III) Need for Cognition and/or (IV) Affinity for Technology Interaction of users and the perceived (a) anthropomorphism, (b) sociability/morality, (c) activity/cooperation, and (d) competence of different telepresence systems (Table 4).

We found that only NFC was associated with attributions of sociability/morality and activity/cooperation. None of the other variables were correlated with attributions of social dimensions.

6.2.2 Robots

Hypothesis 1 proposed that the more morphologically human-like a robot is designed, the more (a) anthropomorphism, (b) sociability/morality, and (c) activity/cooperation are attributed to it.

The robots were perceived differently in terms of anthropomorphism (b = 0.29, SE = 0.04, t = 7.02, p < .001). The most human-like robot, Pepper, was perceived as more anthropomorphic than PhantomX (ΔM = 0.58, SE = 0.08, p < .001) and Nao (ΔM = 0.50, SE = 0.08, p < .001). Nao and PhantomX did not differ from each other in terms of perceived anthropomorphism (p = .603). We could therefore partly confirm Hypothesis 1a.

The robots differed in terms of perceived sociability/morality (b = 0.39, SE = 0.05, t = 7.25, p < .001): PhantomX was attributed less sociability/morality than Nao (ΔM = 0.80, SE = 0.10, p < .001) and Pepper (ΔM = 0.80, SE = 0.10, p < .001). Pepper and Nao were attributed the same amount of sociability/morality (p = .999). We could therefore partly confirm Hypothesis 1b.

In terms of perceived activity/cooperation, the robots differed from each other (b = 0.13, SE = 0.04, t = 2.97, p = .003). Pepper was attributed more activity/cooperation than PhantomX (ΔM = 0.25, SE = 0.08, p = .011) and Nao (ΔM = 0.19, SE = 0.08, p = .049). Nao and PhantomX did not differ from each other in terms of attributed activity/cooperation (p = .770). We therefore partly confirm Hypothesis 1c.

Hypothesis 2 proposed that the more morphologically human-like a robot is designed, the less competence is attributed to it. The robots differed in terms of perceived competence (b = 0.18, SE = 0.04, t = 4.14, p < .001): Pepper was perceived as more competent than PhantomX (ΔM = 0.34, SE = 0.08, t = -4.06, p < .001) and Nao (ΔM = 0.44, SE = 0.08, t = -5.36, p < .001). PhantomX and Nao were attributed the same amount of competence (p = .494). We therefore reject Hypothesis 2.

Hypothesis 3 proposed that people identifying as female attribute less anthropomorphism to robots than people identifying as male. The correlational analysis did not reveal any differences between people identifying as female and people identifying as male in the attribution of anthropomorphism (r = −.02, p = .750), and therefore, we reject Hypothesis 3.

Research question 4 was concerned with whether there are associations between (I) negative attitudes toward robots and/or (II) anthropomorphic tendency of users and the attributions of (a) anthropomorphism, (b) sociability/morality, (c) activity/cooperation, and (d) competence to robots (Table 5).

We found that negative attitudes toward robots (NARS) were not correlated with attributions of social dimensions. Anthropomorphic tendency showed small to very small positive correlations with perceived anthropomorphism, sociability/morality, and competence, and a small negative correlation with activity/cooperation.

Finally, with research question 5, we investigated whether there were differences between various robots, including telepresence systems, in terms of perceived trustworthiness and did not find any (b = 0.05, SE = 0.03, t = 1.61, p = .108).

6.3 Summary

We evaluated attributions of anthropomorphism, sociability/morality, activity/cooperation, competence, and trustworthiness to EDTs. Participants could indicate that a given attribute might not apply to EDTs at all, which was intended to prevent random clicking or a central tendency. This option also accounts for the missing data; for instance, only 27% of the participants attributed sociability/morality to the mechanical-looking PhantomX robot.

We found that the telepresence systems differed only in perceived anthropomorphism, but not in sociability/morality, activity/cooperation, or competence. We suggest that this was because Double3 looked less mechanical, whereas the smaller, four-wheeled TPS AGV “Hubert” was clearly identifiable as a vehicle. As for the remaining social dimensions, participants may have evaluated the person on the tablet rather than the TPS itself, which would explain why no differences emerged. For robots, we found that Pepper, the most human-like robot, was attributed more anthropomorphism, activity/cooperation, and competence than Nao and PhantomX. Interestingly, Nao, a robot that is designed to look human-like (albeit less so than Pepper), was perceived as just as anthropomorphic as PhantomX, a clearly mechanical robot. For competence and activity/cooperation, Nao and PhantomX again did not differ from each other. In terms of sociability/morality, Nao and Pepper did not differ from each other, but PhantomX was perceived as less sociable/moral than both other robots. Neither gender nor age was associated with attributions of social dimensions, for either robots or TPS. In terms of interindividual differences, we found small to very small positive correlations between the tendency to anthropomorphize and attributions of anthropomorphism, sociability/morality, and competence to robots and, surprisingly, a small negative correlation between the tendency to anthropomorphize and attributions of activity/cooperation. We nonetheless emphasize that participants in the present study rated their tendency to anthropomorphize rather low, and we therefore interpret these results as preliminary. Interestingly, attitudes toward robots were not associated with attributions of social dimensions. For TPS, only Need for Cognition showed small positive correlations with attributions of sociability/morality and activity/cooperation.
As for trustworthiness, we did not find any differences between the different EDTs.

7 Study 2

In Study 2, we investigated, analogously to Study 1, how different EDTs were evaluated in terms of the social dimensions anthropomorphism, sociability/morality, activity/cooperation, and competence. In contrast to Study 1, we did not offer participants the option to indicate that a social dimension did not apply to EDTs. As in Study 1, participants also evaluated the different EDTs with regard to perceived trustworthiness.

7.1 Participants

The sample was recruited via Prolific Academic (www.prolific.co). The total sample size was N = 306. Participants who had already taken part in Study 1 were excluded from participation. To account for outliers, we proceeded analogously to Study 1. We excluded one participant whose data on the dependent variables deviated more than 3 SD from the sample mean; we report the results excluding outliers (Footnote 3). The final sample consisted of N = 305 participants. To take part in the study, participants were required to be fluent in German. The mean age of the sample was M = 33.19 years (SD = 11.54, range: 18–72 years). The sample consisted of 135 female, 166 male, and four non-binary participants and was mostly highly educated, with 51.47% having obtained a university degree (high-school diploma: 30.16%, other degrees: 18.37%). All participants had a degree. Of the participants, 20.66% reported prior experience with TPS and 19.67% had prior knowledge about TPS. For robots, 37.38% of the participants indicated that they had prior knowledge and 40.98% indicated that they had prior experience.
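The Study 2 exclusion criterion (a deviation of more than 3 SD from the sample mean) can be sketched as follows. The sketch flags values per dependent variable using the sample SD; whether the sample or population SD was used, and how flags were combined across dependent variables, is not stated, so both choices are assumptions.

```python
from statistics import mean, stdev


def beyond_k_sd(values: list[float], k: float = 3.0) -> list[int]:
    """Return positions of values deviating more than k sample SDs from the mean."""
    m, s = mean(values), stdev(values)
    return [i for i, v in enumerate(values) if abs(v - m) > k * s]


# Hypothetical scores: twenty identical ratings and one extreme value.
print(beyond_k_sd([0.0] * 20 + [10.0]))  # [20]
```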

7.2 Results

We fitted Mixed Models with random intercepts for the dependent variables attributed anthropomorphism, sociability/morality, activity/cooperation, competence (Fig. 5), and trustworthiness (Fig. 6) across the different types of stimuli and, where applicable, computed post-hoc Tukey tests. Data revealed a non-normal distribution for all dependent variables; we therefore employed bootstrapping with 10,000 iterations. We controlled for participants’ age and gender (Footnote 4). We investigated via correlation analyses how personality variables, namely Need for Cognition, Affinity for Technology Interaction, anthropomorphic tendency, and negative attitudes toward robots, were associated with social perception of EDTs.

7.2.1 Telepresence systems

Research question 1 proposed that different telepresence systems would be perceived differently in terms of (a) anthropomorphism, (b) sociability/morality, (c) activity/cooperation, and (d) competence. We found that the telepresence systems were indeed perceived differently in terms of anthropomorphism (b = 0.17, SE = 0.02, t = 8.64, p < .001): AGV “Hubert” was perceived as less anthropomorphic than Double3 (ΔM = -0.35, SE = 0.04, t = -8.64, p < .001).

The telepresence robots were also perceived differently in terms of sociability/morality (b = 0.11, SE = 0.02, t = 6.61, p < .001): Double3 was perceived as possessing more sociability/morality than AGV “Hubert” (ΔM = -0.23, SE = 0.03, t = -6.61, p < .001).

In terms of perceived activity/cooperation (b = -0.04, SE = 0.03, t = -1.34, p = .181) and perceived competence (b = 0.07, SE = 0.06, t = 1.16, p = .247), we did not find any differences between the two telepresence robots.

Research question 2 was concerned with whether there would be an association between (I) age and/or (II) gender of the users and attributions of (a) anthropomorphism, (b) sociability/morality, (c) activity/cooperation, and (d) competence of different telepresence systems.

Correlations of age and gender with the social dimensions were very low, even where significant (age: anthropomorphism r = −.09, p = .03; sociability/morality r = −.08, p = .057; activity/cooperation r = −.01, p = .720; competence r = −.04, p = .271; gender: sociability/morality r = .08, p = .042; anthropomorphism r = .08, p = .059; activity/cooperation r = .00, p = .982; competence r = −.01, p = .812).

Research question 3 was concerned with whether there would be an association between (I) negative attitudes toward robots and/or (II) anthropomorphic tendency and/or (III) Need for Cognition and/or (IV) Affinity for Technology Interaction of users and the perceived (a) anthropomorphism, (b) sociability/morality, (c) activity/cooperation, and (d) competence of different telepresence systems (Table 6).

Perceived anthropomorphism showed small negative correlations with NARS and ATI but was not correlated with anthropomorphic tendency or NFC. Perceived sociability/morality was negatively correlated with NARS and positively correlated with ATI, but was not correlated with NFC or anthropomorphic tendency. Perceived activity/cooperation was negatively correlated with NARS and positively correlated with ATI. Perceived competence was positively correlated with ATI, but not with NFC, anthropomorphic tendency, or NARS.

7.2.2 Robots

Hypothesis 1 proposed that the more morphologically human-like a robot is designed, the more (a) anthropomorphism, (b) sociability/morality, and (c) activity/cooperation are attributed to it.

The three robots did not differ in terms of perceived anthropomorphism (b = -0.01, SE = 0.01, t = -0.49, p = .622). Therefore, we rejected Hypothesis 1a.

All three robots differed in terms of perceived sociability/morality (b = 0.14, SE = 0.01, t = 13.69, p < .001): Pepper was perceived as more sociable/moral than both Nao (ΔM = 0.13, SE = 0.04, t = 3.52, p = .001) and PhantomX (ΔM = 0.70, SE = 0.04, t = 18.98, p < .001). PhantomX was furthermore perceived as less sociable/moral than Nao (ΔM = 0.57, SE = 0.04, t = 15.46, p < .001). We could therefore confirm Hypothesis 1b.

In terms of perceived activity/cooperation, the three robots differed from each other (b = -0.07, SE = 0.01, t = -7.92, p < .001). Post-hoc Tukey tests revealed that PhantomX and Pepper did not differ significantly from each other (p = .537). However, Nao was perceived as significantly less active/cooperative than PhantomX (ΔM = 0.27, SE = 0.03, t = 8.14, p < .001) and Pepper (ΔM = 0.30, SE = 0.03, t = 9.21, p < .001), therefore we rejected Hypothesis 1c. Gender was a significant predictor (b = -0.13, SE = 0.05, t = -2.67, p = .008). However, post-hoc tests did not reveal significant contrasts for the variable gender.

Hypothesis 2 proposed that the more morphologically human-like a robot is designed, the less competence is attributed to it. All three robots differed in terms of perceived competence (b = -0.05, SE = 0.02, t = -3.12, p = .002): Pepper was perceived as more competent than PhantomX (ΔM = 0.35, SE = 0.06, t = 5.45, p < .001) and Nao (ΔM = 0.56, SE = 0.06, t = 8.73, p < .001). PhantomX was perceived as more competent than Nao (ΔM = 0.21, SE = 0.06, t = 3.29, p = .003). We therefore reject Hypothesis 2.

Hypothesis 3 proposed that people identifying as female attribute less anthropomorphism to robots than people identifying as male. The correlational analysis did not reveal any differences between people identifying as female and people identifying as male in the attribution of anthropomorphism (r = .01, p = .747), and therefore, we reject Hypothesis 3.

Research question 4 was concerned with whether there are associations between (I) negative attitudes toward robots and/or (II) anthropomorphic tendency of users and the attributions of (a) anthropomorphism, (b) sociability/morality, (c) activity/cooperation, and (d) competence to robots (Table 7).

We found that NARS was negatively correlated with anthropomorphism, with sociability/morality, with activity/cooperation, and with competence. Anthropomorphic tendency showed a positive correlation with perceived anthropomorphism, but no correlations with sociability/morality, activity/cooperation, or competence.

Finally, with research question 5, we investigated whether the EDTs, including the telepresence systems, differed in perceived trustworthiness and found that they did (b = 0.21, SE = 0.03, t = 7.58, p < .001). Post-hoc Tukey tests revealed that PhantomX was attributed less trustworthiness than the robots Nao (ΔM = -0.97, SE = 0.12, t = -8.17, p < .001) and Pepper (ΔM = -1.35, SE = 0.12, t = -11.51, p < .001) and both telepresence systems, AGV “Hubert” (ΔM = -1.14, SE = 0.12, t = -9.39, p < .001) and Double3 (ΔM = -1.33, SE = 0.12, t = -11.14, p < .001). Furthermore, Pepper was perceived as more trustworthy than Nao (ΔM = 0.38, SE = 0.12, t = 3.31, p = .009) and as equally trustworthy as AGV “Hubert” (p = .385) and Double3 (p = .999). We did not find any difference between Nao and AGV “Hubert” (p = .610), but Nao was perceived as less trustworthy than Double3 (ΔM = 0.36, SE = 0.12, t = 3.10, p = .017). The two telepresence systems did not differ from each other (p = .481).

7.3 Summary

We evaluated attributions of anthropomorphism, sociability/morality, activity/cooperation, competence, and trustworthiness to EDTs. In contrast to Study 1, participants had to provide a rating on each social dimension for each EDT.

We found that the telepresence systems were perceived differently in terms of anthropomorphism and sociability/morality: Double3 was perceived as more anthropomorphic and sociable/moral than AGV “Hubert”. The two TPS did not differ in attributed competence or activity/cooperation.

As for robots, attributions of anthropomorphism did not differ significantly, even though visual inspection of the estimated marginal means might have suggested otherwise. Although anthropomorphism is multiply determined (Epley et al. 2007) and not reducible to design choices, it was unexpected that human-like design seemed to have no significant effect on perceived anthropomorphism. In terms of perceived sociability/morality, the most human-like robot, Pepper, was perceived as the most sociable/moral, as we hypothesized. Furthermore, Nao was perceived as more sociable/moral than PhantomX, suggesting that differences in attributed sociability/morality might be driven by human-like design rather than by perceived anthropomorphism. In terms of attributed activity/cooperation, Pepper and PhantomX did not differ from each other, and Nao was perceived as less active/cooperative than both other robots. As for perceived competence, the most human-like robot, Pepper, was perceived as more competent than both other robots, and Nao as the least competent of all three.

We found no meaningful associations between gender or age and attributions of social dimensions to robots or TPS. Evaluating how interindividual differences affected attributions of social dimensions to EDTs yielded inconclusive results: for TPS, only Affinity for Technology Interaction was correlated, albeit only marginally, with all four dimensions of social perception. For robots, we found small negative correlations between negative attitudes toward robots and all four dimensions of social perception, which is intuitively plausible. In terms of trustworthiness, we found differences between the EDTs, in contrast to Study 1. The machine-like robot PhantomX was perceived as the least trustworthy. Pepper was perceived as just as trustworthy as both TPS, indicating a clear preference for human-like robots and for TPS, on which the human user is prominently displayed. It is noteworthy that Pepper was attributed the same amount of trustworthiness as the TPS even though both TPS featured a tablet showing a person. Nao was perceived as more trustworthy than PhantomX, as trustworthy as AGV “Hubert”, but less trustworthy than Pepper and Double3. These differences are less clearly interpretable but further indicate that human-likeness could have played a role in the attribution of trust to EDTs.

8 General discussion

With the present studies, we aimed to better understand how EDTs are perceived when shown in a social interaction with humans, specifically a space-sharing conflict. We investigated this in two consecutive studies, in which we presented five videos of three robots and two telepresence systems. Both TPS were equipped with a tablet on which the same person was visible and initiated the interaction. We asked participants how they perceived the different EDTs in terms of social perception, that is, anthropomorphism, sociability/morality, activity/cooperation, and competence, and how trustworthy they perceived them to be.

8.1 Telepresence Systems

In both studies, the telepresence systems were perceived differently with regard to anthropomorphism. This is not surprising: Double3, with its rather tall and at least rudimentarily human-like form, was perceived as more anthropomorphic than AGV “Hubert”, the four-wheeled vehicle. Furthermore, in Study 2, Double3 was perceived as more sociable/moral than AGV “Hubert”. Interestingly, this difference was not statistically significant in Study 1, even though visual inspection of the data suggested it. We suggest that this was caused by the easy-out option (‘does not fit the EDT’) that we provided in Study 1, which allowed participants to indicate that a given attribute did not fit the EDT. It is possible that the anthropomorphism of the TPS only played a role in the attribution of sociability/morality when participants were forced to choose. Previous studies restricted to robots indicated a positive association between the human-likeness of robots and perceived sociability/morality (Bretschneider et al. 2022; Mandl et al. 2022b). However, in both studies, the two TPS were evaluated as equally competent and active/cooperative. Whether these evaluations actually reflected the TPS or rather the person visible on the tablet should be addressed, and controlled for, in follow-up studies to deepen the understanding of social perception of TPS. Furthermore, since both TPS were equipped comparably and could therefore be used in the same way, perceived activity/cooperation might not have differed. Taken together, the findings imply that if the intended use case requires people to attribute a more positive valence to a telepresence system, it might be advisable to design it in a more human-like way to evoke more positive perceptions of sociability/morality.

We did not find evidence that age or gender played a role in the evaluation of TPS, which contrasts with previous research on robots (Letheren et al. 2016; Scopelliti et al. 2005). We point out that the age ranges of our samples were 18 to 66 years (mean: 29) and 18 to 72 years (mean: 33), respectively, and thus not representative of elderly people, who might be confronted with TPS in healthcare or eldercare settings. Gender was uncorrelated with attributions of social dimensions in the present studies, which contrasts with previous research (e.g., Bretschneider et al. 2022; Mandl et al. 2022a, b; Scopelliti et al. 2005). Prior studies showed that gender seemed to play a role in the evaluation of robots in terms of competence and anthropomorphism (Bretschneider et al. 2022; Mandl et al. 2022a). We therefore conclude that future research should focus on these two dimensions to investigate possible gender effects. Only a few participants had prior knowledge of (13.99% and 19.67%, respectively) or experience with (15.36% and 20.66%) telepresence systems. We therefore assume that most participants were unaware of the systems’ capabilities, and this naivety probably affected the overall evaluation of the systems. With increasing adoption, knowledge of and experience with such systems are likely to increase, potentially changing perceptions (cf. Sarda Gou et al. 2021 for similar findings for social robots).

8.2 Robots

In terms of anthropomorphism, robots were perceived differently in Study 1: the most human-like robot was perceived as the most anthropomorphic and, surprisingly, Nao and PhantomX did not differ from each other. These results further indicate that anthropomorphism is multiply determined (Epley et al. 2007) and not restricted to design choices. In contrast, in Study 2, graphical examination of the data suggested differences between the robots, but statistical analysis did not reveal a significant difference. We point out that in both studies, and especially in Study 2, the overall attributions of anthropomorphism to the robots were in the lower half of the scale, which could partly explain why no differences were detectable in Study 2. However, in Study 2, all three robots were perceived differently in terms of sociability/morality. This is interesting because it implies that sociability/morality is not necessarily positively associated with anthropomorphism, which would have seemed rather intuitive (e.g., Fossa and Sucameli 2022). Pepper, the most human-like robot, was perceived as the most sociable/moral, followed by Nao and PhantomX. In Study 1, Nao and Pepper did not differ from each other, but PhantomX was again perceived as the least sociable/moral. It is not surprising that the results regarding Nao are more ambiguous: even though Nao is designed to be more human-like than PhantomX, it still differs from Pepper in design aspects (e.g., size, face, limbs, movement). Taken together, our findings and prior research (Bretschneider et al. 2022; Mandl et al. 2022b; McKee et al. 2022) imply that robots with a human-like design are the preferable choice if sociability/morality is required. It should be mentioned, however, that Pepper, although the most human-like of the three robots we presented, was still obviously a robot and designed to look friendly rather than indistinguishable from a real human.

In both studies, Nao was perceived as less active/cooperative than Pepper. Attributed activity/cooperation for PhantomX was less clear, as the robot was perceived as equally active/cooperative as Pepper (Study 2) and as Nao (Study 1). That Nao was perceived as less active/cooperative than Pepper and, partly, PhantomX could be attributable to its toy-like design. Additionally, both Pepper and PhantomX moved fluently, whereas Nao showed a shuffling gait and moved very slowly compared to the other two robots. The presented video was already sped up to mitigate these differences, but Nao's movement might nonetheless have resulted in lower attributed activity/cooperation. These qualities might also be negatively associated with perceived competence: Nao was perceived as the least competent robot (Study 2) or as equally competent as PhantomX (Study 1), whereas Pepper was perceived as the most competent robot in both studies. Because we showed a social interaction scenario in which competence was attributed based on how the robot ‘solved’ the situation, Pepper's high competence ratings are plausible: it solved the situation more elegantly by waving as a sign of ‘thank you’, whereas PhantomX moved in a short sideways-forward pattern to show its ‘thankfulness’. Previous research showed that industrial robots were perceived as more competent than android or social robots (Bretschneider et al. 2022; Mandl et al. 2022b). However, while human-likeness and attributed competence were negatively associated for industrial robots specifically, this association might have been driven more by the robot's intended application area than by perceived human-likeness, indicating that attributed competence could depend substantially on the context and the behavior a situation requires. 
In an interaction scenario, the more human-like robot was potentially ascribed more competence than the less human-like robot, while an industrial robot appeared to be more competent than human-like robots in a manufacturing scenario (Bretschneider et al. 2022; Mandl et al. 2022b). Although we describe PhantomX as a mechanical robot, participants might also have perceived it as zoomorphic (i.e., animal-like) or even arachnoid (i.e., spider-like). PhantomX might thus trigger negative reactions in terms of sociability/morality. Such perceptions could also explain why participants rated PhantomX as less anthropomorphic than Pepper. However, we point out that we used video stimuli in the present studies. Attributions of competence could therefore have been different had a physical interaction taken place, as Paetzel et al. (2020) suggested: for perceived competence, the content of the interaction was less important than the robot's design and interaction modalities. Differences in attributions of social dimensions between the two studies might also have been caused by the fact that in Study 1, participants were forced to make choices in attributions of social dimensions; thus, part of the variance was lost and the ratings converged. For robots, we did not find any indication that gender played a role in attributions of social dimensions, in contrast to previous research (Mandl et al. 2022a; Scopelliti et al. 2005). Together with the findings for TPS, we take this as an indication that users' gender might play only a subordinate role in the evaluation of EDTs.

8.3 Interindividual differences

In terms of interindividual differences, we found mixed results across the two studies: in Study 1, negative attitudes toward robots were negatively associated with perceptions of anthropomorphism, sociability/morality, and activity/cooperation, but not competence. In Study 2, none of the social dimensions were associated with attitudes toward robots. Affinity for Technology Interaction (ATI) was positively correlated with attributions of social dimensions in Study 2, but not in Study 1. It was not surprising that ATI was positively associated with attributions of social dimensions, since ATI reflects an individual's tendency to interact extensively and positively with new technologies. However, previous findings for robots showed that, even though this association seems intuitive, correlations between ATI and social dimensions did not always emerge (Mandl et al. 2022a) or applied only to specific dimensions, e.g., sociability (Mandl et al. 2022a, b). Need for Cognition (NFC) was positively correlated with attributions of sociability/morality and activity/cooperation in Study 1, but not in Study 2. Interestingly, previous research suggested that individuals high in NFC anthropomorphize less than individuals low in NFC (Epley et al. 2007). We would like to emphasize that, surprisingly, anthropomorphic tendency was uncorrelated with all four social dimensions, including perceived anthropomorphism, in both studies. Given that anthropomorphic tendency was quite low in our samples, with maxima of 7.60 and 7.62 and means of 2.93 and 3.20 in the two studies, on a potential range from 1 to 15, we suspect that the lack of a positive association might have been due to limited variance. Taken together, these results show again that interindividual differences are, if at all, inconsistently associated with attributions of social dimensions, but they should not be discarded yet.

8.4 Trust

In terms of perceived trustworthiness, we found mixed results: in Study 1, surprisingly, we did not find any differences between the robots and telepresence systems, whereas in Study 2, the EDTs differed in terms of trustworthiness. Research has shown that anthropomorphism increases trust (Waytz et al. 2014). This makes our results more difficult to interpret: as mentioned, in Study 1, both TPS and robots were perceived differently in terms of anthropomorphism but not in terms of trustworthiness, whereas in Study 2, only the TPS were perceived differently in terms of anthropomorphism, yet the EDTs did differ in terms of trustworthiness. This suggests that perceived anthropomorphism and attributed trust may be independent, at least when participants observe a space sharing conflict between an EDT and a human being. In contrast, de Visser et al. (2016) reported that anthropomorphization of cognitive agents increased trust resilience, which indicates an influence of anthropomorphism on trust. Further research is needed to clarify whether trust and anthropomorphism are associated.

9 Limitations

The studies were designed as online studies in which videos of EDTs and a human being in a space sharing conflict were rated in terms of social dimensions and perceived trustworthiness. Online studies have several limitations: the sample is subject to self-selection bias and thus often consists primarily of young and highly educated adults. Furthermore, it is unknown whether participants were distracted while working on the study. Despite these limitations, online studies are useful for exploratory research, since they yield large sample sizes at reasonable effort and thus provide first insights. Of course, more detailed investigations in the laboratory and in the field are required to gain a deeper understanding of these initial findings. Due to size differences between the EDTs, the camera perspective had to be adapted, which could have affected how the EDTs were perceived. Additionally, the EDTs had different movement patterns and speeds. To keep the total length of the survey acceptable, the videos were quite short (20 s on average). Therefore, it is possible that participants did not perceive the space sharing conflict as intended. Follow-up studies could, for instance, investigate a space sharing conflict in virtual reality or real-world settings to further the understanding of how EDTs are perceived. The EDTs approached the person from the left, which, in the country where the studies took place, would have given the person the right of way in traffic situations. Whether this affected how the EDTs were perceived should be investigated further. Participants were not in direct contact with the EDTs; direct contact would probably have changed their perception. Subsequent studies should therefore include participants in direct, close contact with the EDTs. The studies were conducted in German; thus, only participants with at least a good command of German were able to participate. 
Hence, we did not account for cultural differences in our studies and recommend replication studies in different languages to gain a deeper understanding of potential cultural differences in the evaluation of EDTs. We thus refrain from generalizing our findings across cultures, age groups, and educational backgrounds, and recommend aiming for more diverse samples in replication studies.

10 Conclusion

To conclude, we found evidence that TPS and robots were indeed perceived differently in social interactions such as space sharing conflicts. Interestingly, the results of the two studies differed, which complicates even a preliminary interpretation: overall, we found indications that the TPS were perceived differently in terms of anthropomorphism, but results for other social dimensions were ambiguous. The same held true for robots: differences in terms of social dimensions were evident but inconsistent between the two studies. Slightly higher attributions of sociability/morality, competence, and activity/cooperation were visible for the most human-like robot, Pepper. We also found evidence that the most machine-like robot, PhantomX, was attributed lower levels of social dimensions overall. We found additional evidence that negative attitudes toward robots are associated with lower attributions of social dimensions overall, with the exception of competence attributed to TPS. Interestingly, the association between negative attitudes and competence attributed to robots was in a similar range, indicating that this specific dimension was less dependent on general attitudes than dimensions that are inherently human-like. In terms of trustworthiness, the results of the two studies contradicted each other, and thus further research is needed. Such research could focus on trust, for instance by incorporating examples of erroneous behavior of the TPS and investigating whether different TPS are evaluated differently when they make the same mistakes. Along these lines, Waytz et al. (2014) concluded that anthropomorphic design increased trust in an autonomous vehicle, and Gulati et al. (2018) provided a model including, among other factors, competence as affecting trust. Therefore, evaluations on different dimensions of social perception might result in different levels of trust in the case of erroneous behavior. 
Future work should examine actual interaction between EDTs and humans, or interaction in virtual reality, in diverse settings such as first-contact or work scenarios to further evaluate context-specific aspects of the social perception of EDTs. Furthermore, we strongly suggest investigating TPS as more flexible alternatives to robots, given the possibility of remote control in varying scenarios, as well as how different design choices affect (social) perception of TPS. Our results also indicate that, overall, differences in the social perception of robots prevail and should be taken into account, but that design choices need to be coordinated with application areas, as suggested by Roesler et al. (2022).

Notes

Study 1 has been published as a conference paper (Mandl et al. 2023).

Gender was transformed to a dichotomous variable due to the low count of participants who identified as non-binary (female = 1, male = 2).

The results including outliers only yielded marginal deviations and will thus not be reported in the following.

References

Arras KO, Cerqui D (2005) Do we want to share our lives and bodies with robots? A 2000-people survey. Tech Rep 0605–001:1–41

Babamiri M, Heidarimoghadam R, Ghasemi F, Tapak L, Mortezapour A (2022) Insights into the relationship between usability and willingness to use a robot in the future workplaces: studying the mediating role of trust and the moderating roles of age and STARA. PLoS ONE 17(6):e0268942. https://doi.org/10.1371/journal.pone.0268942

Bartneck C, Nomura T, Suzuki T, Kato K, Kanda T (2005) A cross-cultural study on attitudes towards robots. Proc HCI Int 2005 3. https://doi.org/10.13140/RG.2.2.35929.11367

Bishop L, van Maris A, Dogramadzi S, Zook N (2019) Social robots: the influence of human and robot characteristics on acceptance. Paladyn J Behav Rob 10(1):346–358. https://doi.org/10.1515/pjbr-2019-0028

Bless H, Wänke M, Bohner G, Fellhauer RF, Schwarz N (1994) Need for cognition: a scale measuring engagement and happiness in cognitive tasks. Z Für Sozialpsychologie 25:147–154

Bretschneider M, Mandl S, Strobel A, Asbrock F, Meyer B (2022) Social perception of embodied digital technologies—A closer look at bionics and social robotics. Gruppe Interaktion Organisation Zeitschrift Für Angewandte Organisationspsychologie (GIO). https://doi.org/10.1007/s11612-022-00644-7

Cacioppo JT, Petty RE (1982) The need for cognition. J Personal Soc Psychol 42(1):116–131. https://doi.org/10.1037/0022-3514.42.1.116

Carpinella CM, Wyman AB, Perez MA, Stroessner SJ (2017) The Robotic Social Attributes Scale (RoSAS): development and validation. In: Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, pp 254–262. https://doi.org/10.1145/2909824.3020208

R Core Team (2021) R: a language and environment for statistical computing. https://www.R-project.org/

Cuddy AJC, Fiske ST, Glick P (2008) Warmth and competence as universal dimensions of social perception: the stereotype content model and the BIAS map. Adv Exp Soc Psychol 40:61–149. https://doi.org/10.1016/S0065-2601(07)00002-0

de Visser EJ, Monfort SS, McKendrick R, Smith MAB, McKnight PE, Krueger F, Parasuraman R (2016) Almost human: anthropomorphism increases trust resilience in cognitive agents. J Exp Psychol Appl 22(3):331–349. https://doi.org/10.1037/xap0000092

Duffy BR (2003) Anthropomorphism and the social robot. Robot Auton Syst 42(3–4):177–190. https://doi.org/10.1016/S0921-8890(02)00374-3

Epley N, Waytz A, Cacioppo JT (2007) On seeing human: a three-factor theory of anthropomorphism. Psychol Rev 114(4):864–886. https://doi.org/10.1037/0033-295X.114.4.864