Abstract

Rising mortality rates in recent years have made melanoma one of the world’s most lethal cancers. Dermoscopy images (DIs) are used in smart healthcare applications to extract medical features with deep transfer learning (DTL). Lesions in DIs are widespread, have local features, and are associated with uncertainty. Our bi-branch parallel model has three components: (1) the Transformer module (TM), (2) the self-attention unit (SAU), and (3) a convolutional neural network (CNN). Because the CNN and the TM extract local and global features, respectively, the model fuses the two through cross-fusion to generate fine-grained features. The parallel branches are merged using a feature-fusion architecture, yielding a representation that captures the characteristics of a variety of lesions. Moreover, this paper proposes an optimized and lightweight CNN architecture (optResNet-18) that discriminates skin cancer lesions with high accuracy. The proposed method achieved accuracies of 97.48% and 96.87% on the ISIC-2019 and PH2 datasets, respectively, a significant improvement over traditional CNNs (e.g., ResNet-50 and ResNet-101) and the TM. The proposed model also outperforms state-of-the-art methods on metrics such as AUC, F1-score, specificity, precision, and recall. By combining DTL and medical imaging, the proposed method can also serve as a generalizable model for diagnosing different lesions in DIs in smart healthcare applications. With the proposed e-Health platform, skin diseases can be detected in real time, which is crucial for speedy and reliable diagnostics.

Article Highlights

- A novel approach to classifying multiple lesions in DIs is presented using a Transformer module (TM) with the self-attention unit (SAU).
- A lightweight and optimized version of deep transfer learning is designed to alleviate overfitting.
- Test accuracy for multi-lesion classification was 97.48% and 96.87% on the two datasets, respectively.


1 Introduction

Melanoma has risen in recent years to become one of the world’s most serious cancers [1, 2]. One method of diagnosing melanoma is dermoscopy imaging, which allows pigment changes in skin lesions to be assessed [3]. Although reducing cancer-related mortality is difficult, image processing technologies can improve survival rates through early detection and prognosis of melanoma, and computer-aided diagnostic (CAD) technologies save effort and time. Many medical professionals assess lesions with the ABCDE criteria [4]: asymmetry, border, color, diameter (more than 6 mm), and evolution (change) of the lesion. Dermatologists can accurately identify skin lesions in approximately 80% of cases [5]. The degree of similarity between and within skin lesion classes makes it difficult to classify them accurately [6]. ISIC, which has become the most widely used dataset for skin lesions, includes lesions at all stages of development.

The distribution of skin lesion diagnoses in these datasets is highly imbalanced. Automated classification of skin lesions is therefore difficult for several reasons, including the complexity of the skin itself. Building practical systems is further complicated by the need for less complex models, lightweight network architectures, robustness to class imbalance, and fast inference. Deep learning (DL) is widely accepted for medical applications [7]. However, employing the Transformer architecture alone would significantly increase the number of parameters. Some research indicates that a network’s effectiveness can be enhanced by using a CNN as its initial stage [8].

To classify the multiple lesions seen on DIs, Transformer modules (TMs) and self-attention units (SAUs) are implemented in an optimized CNN structure. The Transformer and CNN branches are incorporated into a bi-directional feature fusion architecture (also called cross-fusion), so that more comprehensive features can be extracted. Current state-of-the-art models identify skin lesions using a binary approach, whereas the proposed model classifies all skin lesion types, not only melanoma, using a multi-class method. The main contributions of this article include:

1. Using a deep CNN structure and the Transformer concept, this paper introduces a smart healthcare system. The proposed architecture performs bi-directional, two-branch feature fusion (cross-fusion).
2. The suggested approach has been assessed on a variety of DIs to evaluate its ability and comprehensiveness in overcoming uncertainty.
3. A feature visualization test verifies the basis on which the model makes its classifications.
4. To verify the validity of the suggested approach, the proposed method was evaluated on two DI datasets.

The rest of the manuscript is structured as follows. Section 2 reviews the existing research and literature relevant to the topic. Section 3 introduces the proposed Transformer module and optimized ResNet classification method. Section 4 reports the results, Sect. 5 discusses them and compares the method with alternatives, and Sect. 6 presents the conclusion.

2 Related work

Several classification methods rely extensively on handcrafted feature sets to generalize to dermoscopic skin images [9, 10]. Esteva et al. [11] employed Inception v3 to diagnose 2032 different diseases based on 129,450 clinical images and showed that this network could diagnose skin cancer accurately. Li et al. [12] used generated heat maps to establish a lesion index calculation unit for the FCRN model, so that coarse classification results can be filtered. Zhang et al. [13] introduced a CNN for skin lesion categorization built from augmented regularized learning (ARL) blocks, global average pooling, and classification layers. Iqbal et al. [14] relied on deep CNN models to classify DIs into several classes on the ISIC 2017 and ISIC 2019 datasets; their model applies 68 convolutional layers to feed feature data down from the top of the structure. In addition to a dermatoscope, Jinnai et al. [15] used a rapid region-based CNN to categorize melanoma from 5846 clinical scans.

According to Yap et al. [16], taking patient information into account can increase classification reliability. To perform the classification, features extracted by a ResNet50 network from dermoscopic and macroscopic images were combined. In addition, loss balancing was developed to handle imbalanced datasets. Srinivasu et al. [17] used a deep CNN with MobileNetV2 and Long Short-Term Memory (LSTM) to classify tumors from HAM10000 images. Additionally, Wang et al. [18] created a self-supervised topological structure that can detect unlabeled images without requiring any prior knowledge of the class. Pre-trained models such as AlexNet, ResNet, and VGGNet have been compared in transfer learning-based investigations [19, 20].

Branch networks can comprise cascading branches [21]. Various fields can take advantage of this technology, which extracts attributes of different sizes and combines them in an understandable manner. Liu et al. [22] propose a pyramidal structure to deal with visual features at different scales. Lin et al. [23] suggest a feature combination approach with multiple resolutions using Feature Pyramid Networks (FPN).

Wu et al. [24] discovered that the deep convolution vector can be derived by converting the CNN’s structure into a query vector, value vector, and key vector. Because it uses fewer parameters than other transformers, the transformer can use the convolution module in the center. It can achieve an accuracy of 87.75% for the ImageNet dataset.

Chen et al. [25] introduced MT-TransUNet, an innovative multi-task structure in which multi-task tokens in Transformers are employed for collaborative classification and segmentation of skin lesions. In a study by Kumar et al. [26], Vision Transformer (ViT) classification proved helpful in diagnosing melanonychia nail conditions. Wu et al. [27] built a flexible transformer system, termed FAT-Net, that incorporates an additional transformer branch. Zhou et al. [28] introduced a visual presentation for skin mass detection using the vision-linguistic integration paradigm in Visual Question Answering (VQA). Sayed et al. [29] developed a method for detecting benign or malignant skin lesions and evaluated it for melanoma prediction using ISIC 2020, a large publicly available dataset.

Various other methods for classifying melanoma lesions have recently been proposed by Iqbal et al. [14], Mahbod et al. [30], Kaur et al. [31], Kassem et al. [32], El-Khatib et al. [33], Ha et al. [34], Kumar et al. [35], Alfi et al. [36], Lu et al. [37], Alenezi et al. [38], and Maqsood and Damaševičius [39].

Few studies have shown that their proposed models can serve as efficient methods in smart healthcare applications, and some of them have been found not to generalize. During the melanoma diagnosis process, a DI provides the specialist with specific features, including well-defined local lesions and extensive systemic manifestations. Interpreting these indicators requires expertise, but deep learning networks help to improve interpretability by extracting separable features. To increase the accuracy of the classification outcomes by considering the global DI features, the proposed method uses the Transformer unit to integrate these features.

3 Proposed learning

Utilizing the Transformer module component and the CNN design, our novel approach presents a categorization healthcare system for feature extraction from DIs. Our procedure is multi-stage:

1. The global receptive field of the Transformer branch allows it to extract global features from the input DIs. In parallel, a CNN branch extracts local features from the DI through convolutions with local receptive fields.
2. Bidirectional feature fusion enhances recognition rates by obtaining more comprehensive and richer features.
3. In the final phase, the fusion procedure evaluates the classification vectors from each branch, computes the loss, and uses backpropagation of gradients to fine-tune the model’s parameters.
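The final phase of the procedure can be illustrated with a minimal NumPy sketch. The text does not state how the two classification vectors are combined, so the averaging rule in `fused_loss` is an assumption made only for illustration, as are the function names and the batch of zero logits in the usage lines.

```python
import numpy as np

def softmax(logits):
    # Numerically stable softmax over the class axis.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def cross_entropy(logits, labels):
    # Mean negative log-likelihood of the true class.
    probs = softmax(logits)
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.log(picked).mean())

def fused_loss(cnn_logits, tm_logits, labels):
    # Assumption: the two branch outputs are averaged before the loss.
    # The paper only says the vectors from each branch are evaluated.
    fused = 0.5 * (cnn_logits + tm_logits)
    return cross_entropy(fused, labels)

# Usage: a batch of 4 images and 8 lesion classes with uninformative logits.
labels = np.array([0, 3, 5, 7])
zeros = np.zeros((4, 8))
loss = fused_loss(zeros, zeros, labels)  # equals ln(8) for uniform predictions
```

In a real training loop the gradient of this loss with respect to both branches would be obtained by backpropagation in a deep learning framework; the sketch only shows the forward computation.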

3.1 Transformer module

A Transformer is constructed of two components, an encoder and a decoder. As Fig. 1 illustrates, the encoder component alone is sufficient for this application. The global receptive field is central to how the Transformer network operates; in this sense, a Transformer can be viewed as a special kind of CNN with a global receptive field. The Transformer self-attention mechanism employs a scaled dot-product attention structure. The feature vector $a_i$ of the input $x_i$ is projected to the outputs $q_i$, $k_i$, and $v_i$ (query, key, and value). Self-attention is then computed with matrix operations on the keys and queries.

Before the attention weight matrix is calculated, each input mapping must be normalized. The query and key inputs have dimension $d_k$, and the values have dimension $d_v$. The dot product of the queries and keys is computed first and then divided by $\sqrt{d_k}$. The softmax function is applied to these scaled scores to obtain the attention weight matrix. Finally, the output is obtained as the weighted sum of the values, using the attention weights. The attention (ATN) module is described as follows:

$$\mathrm{ATN}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V$$

Here, in image processing applications, $V$, $K$, and $Q$ denote the value, key, and query matrices, respectively, and the attention module returns the resulting output matrix.
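The scaled dot-product attention described in this subsection can be sketched in a few lines of NumPy. This is an illustrative single-head version, not the authors’ implementation; the function names and the random inputs are assumptions.

```python
import numpy as np

def softmax(scores):
    # Row-wise softmax, shifted for numerical stability.
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def attention(Q, K, V):
    # Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v)
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)   # dot product divided by sqrt(d_k)
    weights = softmax(scores)         # attention weight matrix
    return weights @ V, weights       # weighted sum of the values

# Usage with random inputs (shapes chosen only for illustration).
rng = np.random.default_rng(0)
Q = rng.normal(size=(4, 8))
K = rng.normal(size=(6, 8))
V = rng.normal(size=(6, 5))
out, weights = attention(Q, K, V)
```

Each row of `weights` sums to one, so every output row is a convex combination of the rows of `V`.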

The two “Add and Norm” layers of the encoder, denoted by the Add and Norm blocks, compute

$$\mathrm{Norm}\big(X + \mathrm{MHATN}(X)\big) \quad \text{and} \quad \mathrm{Norm}\big(X + \mathrm{FeFor}(X)\big).$$

Feed-forward (FF) networks and multi-head attention networks are referred to as FeFor and MHATN, respectively. $X$ denotes the input to FeFor or MHATN, and MHATN($X$) and FeFor($X$) denote the corresponding outputs. Because the output and input have the same dimensions, they can be added. The FF block consists of two fully connected layers. ReLU is used as the activation function (AF) in the first layer, while no activation function is applied in the second layer. The two layers are represented by the following equation, where $W_1$, $W_2$ and $b_1$, $b_2$ are the weights and biases of the two layers:

$$\mathrm{FeFor}(X) = \max(0, XW_1 + b_1)W_2 + b_2$$

In all of these equations, $X$ denotes the input, and the output matrix of the feed-forward block has the same dimensions as $X$.
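The Add and Norm step and the two-layer feed-forward block can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: layer normalization is used for the Norm block (as in the standard Transformer encoder), learnable scale and shift parameters are omitted, and the hidden width of 32 is arbitrary.

```python
import numpy as np

def layer_norm(X, eps=1e-6):
    # Normalize each row (token) to zero mean and unit variance.
    mean = X.mean(axis=-1, keepdims=True)
    var = X.var(axis=-1, keepdims=True)
    return (X - mean) / np.sqrt(var + eps)

def feed_forward(X, W1, b1, W2, b2):
    hidden = np.maximum(0.0, X @ W1 + b1)  # first layer with ReLU
    return hidden @ W2 + b2                # second layer, no activation

def add_and_norm(X, sublayer_output):
    # Residual addition is possible because both have the same shape.
    return layer_norm(X + sublayer_output)

# Usage: 5 tokens of width 16, hidden width 32 (illustrative sizes).
rng = np.random.default_rng(0)
X = rng.normal(size=(5, 16))
W1, b1 = rng.normal(size=(16, 32)) * 0.1, np.zeros(32)
W2, b2 = rng.normal(size=(32, 16)) * 0.1, np.zeros(16)
Y = add_and_norm(X, feed_forward(X, W1, b1, W2, b2))
```

The output `Y` has the same shape as `X`, which is what allows encoder blocks to be stacked.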

3.2 Modified CNN

The CNN model was constructed using an enhanced ResNet architecture, which incorporates over-parameterized convolution in the depth dimension. The ResNet-18 architecture employs an input tensor $P \in \mathbb{R}^{M \times C_{in}}$, where $M$ represents the spatial dimension of the feature map (FM) and $C_{in}$ indicates the number of channels in the input FM. This architecture is specifically designed to optimize both speed and detection accuracy. The kernel $K$ is a three-dimensional tensor, $K \in \mathbb{R}^{C_{out} \times M \times C_{in}}$. Its output is a feature of dimension $C_{out}$, the number of output channels.

Depthwise convolution processes each input channel of the feature map $P$ separately. As depicted in Fig. 2, every input channel is transformed into a $D$-dimensional feature. The output is then evaluated using the equation provided below:

Convolution with depthwise over-parameterization uses a depthwise convolution kernel $J$ together with a conventional convolution kernel $K$. The two kernels are first combined into a single composite kernel, and the final feature is obtained by convolving this composite kernel with the feature map.

Figure 2 illustrates how $K$ and $J$ are combined to obtain $K' = J^{T} \cdot K$. The kernel $K'$ is then applied to each DI channel, which yields more distinct, higher-quality texture features from the DIs. Because $K'$ has the same size as the classical convolution kernel, the computational cost is unchanged. The condition $D \ge M$ is necessary for $K'$ to be able to express the same linear transformations as $K$ in a standard CNN. Depthwise over-parameterized convolution therefore over-parameterizes the network, which adds learnable parameters and speeds up training.
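The kernel composition can be checked numerically with a short NumPy sketch. The text gives only $K' = J^{T} \cdot K$ and $D \ge M$, so the tensor shapes used here are assumptions: $J$ has shape $(M, D, C_{in})$, the over-parameterized $K$ has shape $(C_{out}, D, C_{in})$, and the composite $K'$ has the classical kernel shape $(C_{out}, M, C_{in})$. The function names are hypothetical.

```python
import numpy as np

def compose_kernel(J, K):
    # K'[o, m, c] = sum_d J[m, d, c] * K[o, d, c]  (per-channel J^T . K)
    return np.einsum("mdc,odc->omc", J, K)

def apply_composite(K_prime, P):
    # One output value per output channel for a single patch P of shape (M, C_in).
    return np.einsum("omc,mc->o", K_prime, P)

def apply_two_step(J, K, P):
    # Depthwise step: each input channel becomes a D-dimensional feature.
    depthwise = np.einsum("mdc,mc->dc", J, P)
    # Conventional step on the D-dimensional features.
    return np.einsum("odc,dc->o", K, depthwise)

# Usage: a 3x3 patch (M = 9), D = 9 so that D >= M holds.
rng = np.random.default_rng(0)
M, D, C_in, C_out = 9, 9, 4, 6
J = rng.normal(size=(M, D, C_in))
K = rng.normal(size=(C_out, D, C_in))
P = rng.normal(size=(M, C_in))
K_prime = compose_kernel(J, K)
one_step = apply_composite(K_prime, P)
two_step = apply_two_step(J, K, P)
```

Both routes give the same output, which is why the composite kernel can replace the two kernels at inference time without changing the cost of a classical convolution.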

3.3 Fusion unit with bi-directional operation

The fusion layer between the CNN and Transformer branches has a bi-directional structure. Bi-directional fusion (or cross-fusion) of the CNN module and the Transformer branch makes the structure more efficient and accurate. The bi-directional fusion model is based on the convolution and pooling blocks of ResNet-18 and the encoder block. These two components are connected because the distance between them affects parallel computing efficiency. Integrating the two branches is crucial for making full use of the global features obtained in the first half of the network: as convolution layers are stacked, the receptive field expands and local information is lost, and as attention layers are stacked, global features fade. Figure 3a depicts the CNN-to-Transformer direction, together with the parallelism of the two branches and their connection through self-attention.

The CNN extracts local features from the DIs, $X \in \mathbb{R}^{H \times W \times 3}$. The Transformer branch takes $Y \in \mathbb{R}^{M \times d}$ as input, parameterized by $M$ and $d$. The initial input $Y_0$ is generated by convolving the original input image to match the $M$ and $d$ of the target fusion layer. The bi-directional feature fusion (i.e., cross-fusion) process integrates local and global features across the CNN and Transformer branches. As illustrated in Fig. 3a, an attention mechanism in the CNN-to-Transformer unit integrates local CNN features with global Transformer features. The feature vectors are combined with a CNN layer and multiple channels. Two kinds of maps are involved: local feature maps and global label maps. In the multi-head self-attention model, $H$ denotes the total number of heads, with $X = [X_h]$, $Y = [Y_h]$, and $1 \le h \le H$. This description applies to both fusion directions, local-to-global and global-to-local.

The projection matrix $W_h^Q$ appears in (9), and the attention function operating on $Q$, $K$, and $V$ is written as ATN($Q$, $K$, $V$). Here, $K$ and $V$ carry the local (regional) input features, while $Q$ carries the global features. The matrices $W^O$ and $W_h^Q$ are used to update $Y$. The following equations are employed for the computation of the global-to-local feature fusion architecture:

The matrices $W_h^V$ and $W_h^K$ project the values and keys. In general, queries are considered local features, while key-value pairs are considered global features. The feature-fusion structure illustrated in Fig. 3b spans the CNN up to the Transformer module. It uses two inputs: (1) $X \in \mathbb{R}^{hw \times C}$, which represents a local feature map with $C$ channels over $h \times w$ spatial positions, and (2) $Y \in \mathbb{R}^{M \times d}$, which is composed of $M$ and $d$ blocks containing input and output features.
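The two fusion directions can be sketched with cross-attention in NumPy. The fusion equations themselves are not reproduced in the text, so this single-head, residual formulation is an assumption: in one direction the global features form the queries and the local features act as keys and values, and in the other direction the roles are reversed. All names and sizes are hypothetical.

```python
import numpy as np

def softmax(scores):
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def atn(Q, K, V):
    # Scaled dot-product attention.
    return softmax(Q @ K.T / np.sqrt(Q.shape[-1])) @ V

def local_to_global(X, Y, W_q, W_o):
    # Queries come from the global features Y; keys and values are the
    # local features X. W_q maps d -> C and W_o maps C -> d.
    return Y + atn(Y @ W_q, X, X) @ W_o

def global_to_local(X, Y, W_k, W_v):
    # Queries are the local features X; keys and values are projected
    # from the global features Y (both projections map d -> C).
    return X + atn(X, Y @ W_k, Y @ W_v)

# Usage: 7x7 feature map with C = 32 channels, M = 6 global vectors of width d = 16.
rng = np.random.default_rng(0)
hw, C, M, d = 49, 32, 6, 16
X = rng.normal(size=(hw, C))
Y = rng.normal(size=(M, d))
W_q, W_k, W_v = (rng.normal(size=(d, C)) * 0.1 for _ in range(3))
W_o = rng.normal(size=(C, d)) * 0.1
Y_fused = local_to_global(X, Y, W_q, W_o)
X_fused = global_to_local(X, Y_fused, W_k, W_v)
```

Because each direction adds its attention output to its own input, the shapes of `X` and `Y` are preserved and the two branches can keep running in parallel after every fusion step.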

Besides the commonly used serial approach, the CNN and Transformer can also be combined in parallel. Because the input of the model is the vector that represents the entire DI, as shown in Fig. 4, the receptive field is also global. Running the Transformer and convolution branches in parallel creates a bi-branch parallel structure. Figure 4 illustrates the bi-branch parallel network that is the basis for bi-directional cross-fusion.

4 Results

4.1 Dataset

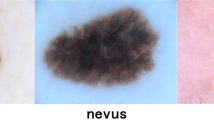

The suggested model is validated using the first available ISIC-2019 data set [40]. The ISIC-2019 database (the successor to ISIC-2018) includes HAM_10000 and BCN_20000. The eight classes in ISIC-2019 are: Melanoma (MEL), Vascular Lesion (VASC), Actinic Keratosis (AKIEC), Squamous Cell Carcinoma (SCC), Dermatofibroma (DF), Basal Cell Carcinoma (BCC), Benign Keratosis (BKL), and Melanocytic Nevus (NV). The second dataset of dermoscopic images covers a broad range of patients with varying degrees of skin disease, lesions, or malignancy. Its images are taken from the PH2 database, which includes images of normal skin, nevi, and malignant tissues associated with melanoma. A sample of DIs from the ISIC-2019 and PH2 datasets is shown in Fig. 5.

PH2 contains dermoscopy data with clinical diagnosis and manual segmentation. Dermoscopy images of 200 normal nevi, 200 aberrant nevi, and 40 malignant melanomas (three classes) were captured with 8 bits of color depth. Clinical diagnostic techniques and outcomes at Hispano Hospital include dermoscopic components, such as ABCD recommendations, 7-point checklists, and Menzies methodologies. Scholars in this field hold the aforementioned characteristics in high regard and have successfully incorporated them into several research studies [41].

In addition, the proposed model was validated using the second available ISIC-2019 data set [42]. Two versions of the dataset are available, one with a lower resolution (600 × 450 pixels) and one with a higher resolution (1024 × 1024 pixels). Because the test set includes an additional, unidentified class that is not present in the training set, the test set covers more classes than the training set. ISIC-2019 categorizes skin lesions into 8 distinct categories: melanoma, vascular lesion, actinic keratosis, squamous cell carcinoma, dermatofibroma, basal cell carcinoma, benign keratosis, and melanocytic nevus. A comprehensive series of tests was conducted to determine whether ISIC-2019 imposes any limitations on achieving optimal results.

4.2 Setting

Our goal is to improve the model’s classification performance by tuning its settings during the learning process. Because training is based on an optimization technique, the parameter values change as training proceeds. Some other parameters were set using values from similar methods, and their initialization was also taken into consideration, since they are updated during learning as the convolutional layers are optimized to achieve a better response; the training process was monitored throughout. The mini-batch size was set to 6, and training was limited to 100 epochs. For fine-tuning, the momentum was set to 0.90 and the learning rate to 0.00001. The DIs were divided into three subsets: 80% for training, 10% for testing, and 10% for validation. In another experiment, fivefold cross-validation (CV = 5) was used. Across the different DI splits used in the experiments, the dispersion of the results was low. ISIC-2019 contains 25,331 DIs, distributed across classes as shown in Table 1.
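The reported settings and data splits can be written down as a small sketch. The hyperparameter values and the 80/10/10 and fivefold splits come from the text; the dictionary keys, the function names, the random seed, and the use of a simple (non-stratified) shuffle are assumptions for illustration.

```python
import numpy as np

# Values reported in the text; key names are illustrative.
config = {"batch_size": 6, "epochs": 100, "momentum": 0.90, "learning_rate": 1e-5}

def split_indices(n, train=0.8, test=0.1, seed=0):
    # Shuffle once, then cut into train / test / validation parts.
    idx = np.random.default_rng(seed).permutation(n)
    n_train = int(round(train * n))
    n_test = int(round(test * n))
    return idx[:n_train], idx[n_train:n_train + n_test], idx[n_train + n_test:]

def kfold_indices(n, k=5, seed=0):
    # Yield (train, validation) index arrays for each of the k folds.
    folds = np.array_split(np.random.default_rng(seed).permutation(n), k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]

# Usage with the 25,331 ISIC-2019 images.
n_images = 25331
train_idx, test_idx, val_idx = split_indices(n_images)
fold_sizes = [(len(tr), len(va)) for tr, va in kfold_indices(n_images)]
```

With highly imbalanced classes, a stratified split that preserves class proportions in each subset would be a natural alternative to the plain shuffle used here.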

4.3 Evaluations

Because some classes in the ISIC-2019 dataset have low classification accuracy, the per-class classification results were examined for three fine-tuned models: the first model (ResNet50), the second model (ResNet101), and the third model (optResNet).

VASC and DF lesions are harder to predict than other types of skin lesions, and the dataset contains relatively few of them. Overall, however, the proposed method offers satisfactory outcomes for the diagnosis of skin lesions. Figure 6 shows the confusion matrix (CM) for the 8 classes of DIs in ISIC-2019. Across multiple runs, the model correctly classified 97.3–97.8% of the skin lesions in the DIs.

Additionally, the classification technique was applied to the two datasets while varying the number of training rounds and incorporating various lesion features. The classification results for both were confirmed by testing and validation. Panels (a) and (b) of Fig. 7 show the accuracy and loss as training converges. In panels (c) and (d), optResNet-18 is compared with similar models, ResNet-50 and ResNet-101, using the same accuracy and loss criteria. A second experiment used fivefold cross-validation to divide the data and estimate the performance criteria, which examines the efficiency of the algorithm when the data distribution changes. The micro-averaged F1-score pools the true positives, false positives, and false negatives of all classes before computing the F1-score, whereas the macro-averaged F1-score computes the F1-score from the precision and recall of each class separately and then averages the results, giving every class equal weight regardless of its size.

Fig. 7 (a, b) Accuracy and loss of the suggested structure, including optResNet-18 with TM and SAU, for training and validation DIs. (c, d) Convergence of optResNet-18 compared with other similar CNN models, such as ResNet-50 and ResNet-101, using the same accuracy criteria and loss function

The macro and micro metrics for F1-score, precision, and recall were calculated separately for both datasets, as shown in Table 2. Table 3 reports the same experiments as Table 2 under fivefold cross-validation, listed as fivefold (1), fivefold (2), and fivefold (3). These results suggest that the proposed attention mechanism and enhanced learning algorithms can provide sufficient reliability and generalization. Based on the findings, the proposed approach is competitive for skin cancer detection and classification.
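The difference between macro and micro averaging can be made concrete with a short NumPy sketch that derives both from a confusion matrix. The three-class matrix below is invented purely for illustration and is not data from the paper; rows are assumed to be true classes and columns predicted classes.

```python
import numpy as np

def safe_div(num, den):
    # Element-wise division that returns 0 where the denominator is 0.
    num = np.atleast_1d(np.asarray(num, dtype=float))
    den = np.atleast_1d(np.asarray(den, dtype=float))
    out = np.zeros_like(num)
    np.divide(num, den, out=out, where=den > 0)
    return out

def macro_micro(cm):
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as the class but wrong
    fn = cm.sum(axis=1) - tp   # belonging to the class but missed
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    macro = {"precision": precision.mean(), "recall": recall.mean(), "f1": f1.mean()}
    p = safe_div(tp.sum(), tp.sum() + fp.sum())[0]   # pooled counts
    r = safe_div(tp.sum(), tp.sum() + fn.sum())[0]
    micro = {"precision": p, "recall": r, "f1": safe_div(2 * p * r, p + r)[0]}
    return macro, micro

# Usage with a made-up confusion matrix.
cm = [[5, 1, 0],
      [2, 3, 1],
      [0, 0, 8]]
macro, micro = macro_micro(cm)
```

For single-label multi-class problems the micro-averaged F1-score equals the overall accuracy, while the macro average is pulled down by poorly recognized minority classes such as DF and VASC.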

5 Discussion

The lack of generalizability and repeatability has limited previous approaches to diagnosing skin diseases. The plotted ROC curves illustrate how well the model distinguishes between different types of skin diseases. Various analyses were performed using four random categories of unseen DIs, and Fig. 8 shows the AUC value for each class. DF (AUC ≈ 0.873) and VASC (AUC ≈ 0.932) lesions had lower AUCs because of their similarity to other classes.
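A per-class AUC of the kind reported here is commonly computed in a one-vs-rest fashion. The sketch below uses the rank interpretation of the AUC (the probability that a positive sample scores higher than a negative one); whether the authors used this exact procedure is not stated, and the function names and toy scores are assumptions.

```python
import numpy as np

def binary_auc(scores, positives):
    # Fraction of (positive, negative) pairs ranked correctly; ties count 0.5.
    # Assumes at least one positive and one negative sample.
    scores = np.asarray(scores, dtype=float)
    positives = np.asarray(positives, dtype=bool)
    diff = scores[positives][:, None] - scores[~positives][None, :]
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size)

def one_vs_rest_auc(probabilities, labels):
    # probabilities: (n_samples, n_classes); labels: (n_samples,)
    probabilities = np.asarray(probabilities, dtype=float)
    labels = np.asarray(labels)
    return {c: binary_auc(probabilities[:, c], labels == c)
            for c in range(probabilities.shape[1])}

# Usage on toy scores for a single class.
auc_example = binary_auc([0.8, 0.4, 0.6, 0.2], [1, 1, 0, 0])
```

In the toy example three of the four positive-negative pairs are ordered correctly, so the AUC is 0.75.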

5.1 Comparison

In contrast to previous TL topologies, the Transformer module (TM) of optResNet-18 is built around the self-attention unit (SAU), allowing for rapid network construction while minimizing training and testing errors. It is crucial to distinguish between DL algorithms based on their convergence speed and dependability. The optResNet-18 strategy with TM and SAU was found to extract more useful features and converge faster than the other two strategies (ResNet-50 and ResNet-101). As depicted in Fig. 9, the melanoma classification method based on the TM with SAU and bi-directional feature fusion is both stable and reliable, even when the number of DI classes is high.

Fig. 9 The TM-based melanoma classification method combining SAUs and bidirectional feature fusion is stable and reliable regardless of the number of DI categories. The performance of the fusion system and of the TM part is reported through maximum, mean, and minimum values (first and second rows, respectively)

Here, the maximum, minimum, and mean accuracies are computed and reported to show the range of accuracy achieved in feature extraction and classification. The optResNet-18 and TM with SAU were found to outperform other similar models for categorizing skin lesions, as shown in Table 3. In the context of melanoma detection, bidirectional feature fusion is superior to the alternative classification methods considered. Results that meet or surpass expectations on criteria such as accuracy, F1-score, specificity, recall, and precision are highlighted in bold. The high computational complexity of some methods introduces delays that are undesirable for dermatologists, whose decisions are time-sensitive. To the authors’ knowledge, the accuracy of existing methods for identifying skin diseases does not exceed 94%, which is lower than that of the method proposed in this study. The suggested healthcare system uses a small number of learnable parameters, which makes the network simpler and more efficient. Because skin cancers must be diagnosed as early as possible, rapid and accurate DI categorization is a crucial part of early skin cancer identification.

Accurate classification algorithms, which function even without annotated datasets, reduce dermatologists’ burden. This research presented the hybrid approach as a potential way to deal with limited time and memory for training. It provided precise categorization and efficient deployment on DIs in healthcare settings.

5.2 Limitations

DL-based methods for skin cancer categorization have many limitations, including model robustness, lack of labeled data, imbalanced and limited classification data, domain compatibility, and efficiency. Most datasets are imbalanced, containing more benign than malignant cases, which reduces the accuracy of classification models. The robustness and efficiency of a classification model refer to its ability to classify correctly with a minimum amount of overhead. A skin cancer classification system must take all of these factors into account. Furthermore, the system should be validated using real-world data to ensure accuracy and reliability. Using clinical scans for skin cancer categorization is problematic because they contain limited morphological information. Various imaging settings, such as angle, light, brightness, and contrast, also introduce significant inaccuracies in diagnostic results. This paper shows that the model’s generalization performance decreases when training data is insufficient. Furthermore, various dermoscopic imaging conditions, such as hand tremors, excessive changes in cell color, image darkness, and pixel occlusion, can affect the model and thus reduce detection performance. In addition, increases in the number of layers and parameters, as well as changes in the initial fine-tuning conditions of the network, affect the model’s operational efficiency and resource consumption, which could limit its clinical implementation in a variety of medical devices. Neither the model’s performance nor its detection power can be evaluated on images in which the lesion blends into the surrounding skin. Furthermore, artifacts such as human hair can affect the generation of the original images and, therefore, their classification. This is because the model is only able to detect the lesion if it is clearly defined and separated from the rest of the image. Any artifacts, such as hair, can make it difficult to distinguish the lesion from the rest of the image, leading to misclassification.

6 Conclusion

In this work, a hybrid architecture was introduced for the classification of multiple skin lesions from DIs that integrates the TM and the SAU with an efficient CNN structure. A lightweight architecture was created by lowering the computational complexity of the CNN-based feature generation process. Compared to existing comparable methods that are not generalizable, the proposed procedure typically maintains accuracy when DIs contain complex sections and textures. An extensive analysis of the ISIC-2019 and PH2 datasets demonstrates the generalizability of the suggested approach. An experiment was also conducted with a large number of previously unseen DI collections. To move toward an optimal model, the authors will combine and permute attention mechanisms within DL models. This study applies the idea of cross-fusion to the analysis of dermoscopy images, with the aim of producing a concise set of integrated features for determining the progression of a disease. To enhance the representation and visualization of these features, the network may employ multiple images of a single patient, which the authors intend to explore in future research. Suboptimal image quality in some images may raise misclassification rates; however, enhancing the quality of all images would require additional computational resources. To balance accuracy against computational complexity, we plan to use more datasets in the future. Furthermore, we plan to use ensembling methods to integrate attention mechanisms.

Availability of data and materials

The implemented codes are all available from the corresponding authors. Additionally, dermoscopic images are available through the link below. https://challenge2019.isic-archive.com/.

References

Papachristou I, Bosanquet N. Improving the prevention and diagnosis of melanoma on a national scale: a comparative study of performance in the United Kingdom and Australia. J Public Health Policy. 2020;41:28–38. https://doi.org/10.1057/s41271-019-00187-0.

Siegel RL, Miller KD, Jemal A. Cancer statistics, 2019. CA Cancer J Clin. 2019;69(1):7–34. https://doi.org/10.3322/caac.21551.

Andtbacka RH, Kaufman HL, Collichio F, Amatruda T, Senzer N, Chesney J, Delman KA, Spitler LE, Puzanov I, Agarwala SS, Milhem M. Talimogene laherparepvec improves durable response rate in patients with advanced melanoma. J Clin Oncol. 2015;33(25):2780–8. https://doi.org/10.1200/jco.2014.58.3377.

Rigel DS, Friedman RJ, Kopf AW, Polsky D. ABCDE—an evolving concept in the early detection of melanoma. Arch Dermatol. 2005;141(8):1032–4. https://doi.org/10.1001/archderm.141.8.1032.

Nikolaou V, Stratigos AJ. Emerging trends in the epidemiology of melanoma. Br J Dermatol. 2014;170(1):11–9. https://doi.org/10.1111/bjd.12492.

Yang Y, Xie F, Zhang H, Wang J, Liu J, Zhang Y, Ding H. Skin lesion classification based on two-modal images using a multi-scale fully-shared fusion network. Comput Methods Programs Biomed. 2023;229:107315. https://doi.org/10.1016/j.cmpb.2022.107315.

Mukadam SB, Patil HY. Skin cancer classification framework using enhanced super resolution generative adversarial network and custom convolutional neural network. Appl Sci. 2023;13(2):1210. https://doi.org/10.3390/app13021210.

Dhamija T, Gupta A, Gupta S, Katarya R, Singh G. Semantic segmentation in medical images through transfused convolution and transformer networks. Appl Intell. 2023;53(1):1132–48. https://doi.org/10.1007/s10489-022-03642-w.

Barata C, Celebi ME, Marques JS. A survey of feature extraction in dermoscopy image analysis of skin cancer. IEEE J Biomed Health Inform. 2018;23(3):1096–109. https://doi.org/10.1109/JBHI.2018.2845939.

Xie F, Fan H, Li Y, Jiang Z, Meng R, Bovik A. Melanoma classification on dermoscopy images using a neural network ensemble model. IEEE Trans Med Imaging. 2016;36(3):849–58. https://doi.org/10.1109/TMI.2016.2633551.

Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115–8. https://doi.org/10.1038/nature21056.

Li Y, Shen L. Skin lesion analysis towards melanoma detection using deep learning network. Sensors. 2018;18(2):556. https://doi.org/10.3390/s18020556.

Zhang J, Xie Y, Xia Y, Shen C. Attention residual learning for skin lesion classification. IEEE Trans Med Imaging. 2019;38(9):2092–103. https://doi.org/10.1109/TMI.2019.2893944.

Iqbal I, Younus M, Walayat K, Kakar MU, Ma J. Automated multi-class classification of skin lesions through deep convolutional neural network with dermoscopic images. Comput Med Imaging Graph. 2021;88:101843. https://doi.org/10.1016/j.compmedimag.2020.101843.

Jinnai S, Yamazaki N, Hirano Y, Sugawara Y, Ohe Y, Hamamoto R. The development of a skin cancer classification system for pigmented skin lesions using deep learning. Biomolecules. 2020;10(8):1123. https://doi.org/10.3390/biom10081123.

Yap J, Yolland W, Tschandl P. Multimodal skin lesion classification using deep learning. Exp Dermatol. 2018;27(11):1261–7. https://doi.org/10.1111/exd.13777.

Srinivasu PN, SivaSai JG, Ijaz MF, Bhoi AK, Kim W, Kang JJ. Classification of skin disease using deep learning neural networks with MobileNet V2 and LSTM. Sensors. 2021;21(8):2852. https://doi.org/10.3390/s21082852.

Wang D, Pang N, Wang Y, Zhao H. Unlabeled skin lesion classification by self-supervised topology clustering network. Biomed Signal Process Control. 2021;66:102428. https://doi.org/10.1016/j.bspc.2021.102428.

Jojoa Acosta MF, Caballero Tovar LY, Garcia-Zapirain MB, Percybrooks WS. Melanoma diagnosis using deep learning techniques on dermatoscopic images. BMC Med Imaging. 2021;21(1):1–1. https://doi.org/10.1186/s12880-020-00534-8.

Kassani SH, Kassani PH. A comparative study of deep learning architectures on melanoma detection. Tissue Cell. 2019;58:76–83. https://doi.org/10.1016/j.tice.2019.04.009.

Ma M, Xia H, Tan Y, Li H, Song S. HT-Net: hierarchical context-attention transformer network for medical CT image segmentation. Appl Intell. 2022;15:1–4. https://doi.org/10.1007/s10489-021-03010-0.

Liu Z, Gao G, Sun L, Fang L. IPG-net: image pyramid guidance network for small object detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops; 2020. p. 1026–7. https://doi.org/10.1109/CVPRW50498.2020.00521.

Lin TY, Dollár P, Girshick R, He K, Hariharan B, Belongie S. Feature pyramid networks for object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition; 2017. p. 2117–25.

Wu H, Xiao B, Codella N, Liu M, Dai X, Yuan L, Zhang L. Cvt: introducing convolutions to vision transformers. In Proceedings of the IEEE/CVF international conference on computer vision; 2021. p. 22–31. https://doi.org/10.1109/ICCV48922.2021.00009.

Chen J, Chen J, Zhou Z, Li B, Yuille A, Lu Y. MT-TransUNet: mediating multi-task tokens in transformers for skin lesion segmentation and classification. Preprint arXiv:2112.01767. 2021 Dec 3.

Kumar K, Kumar C, Nijhawan R, Mittal A. The hybrid vision transformer approach for hyperpigmentation nail disease detection. In: Proceedings of second international conference on sustainable expert systems. Singapore: Springer; 2022. p. 31–42. https://doi.org/10.1007/978-981-16-7657-4_4.

Wu H, Chen S, Chen G, Wang W, Lei B, Wen Z. FAT-Net: Feature adaptive transformers for automated skin lesion segmentation. Med Image Anal. 2022;76:102327. https://doi.org/10.1016/j.media.2021.102327.

Zhou L, Luo Y. Deep features fusion with mutual attention transformer for skin lesion diagnosis. In 2021 IEEE international conference on image processing (ICIP); 2021 Sept 19. p. 3797–801. https://doi.org/10.1109/ICIP42928.2021.9506211.

Sayed GI, Soliman MM, Hassanien AE. A novel melanoma prediction model for imbalanced data using optimized SqueezeNet by bald eagle search optimization. Comput Biol Med. 2021;136:104712. https://doi.org/10.1016/j.compbiomed.2021.104712.

Mahbod A, Schaefer G, Ellinger I, Ecker R, Pitiot A, Wang C. Fusing fine-tuned deep features for skin lesion classification. Comput Med Imaging Graph. 2019;71:19–29. https://doi.org/10.1016/j.compmedimag.2018.10.007.

Kaur R, GholamHosseini H, Sinha R, Lindén M. Melanoma classification using a novel deep convolutional neural network with dermoscopic images. Sensors. 2022;22(3):1134. https://doi.org/10.3390/s22031134.

Kassem MA, Hosny KM, Fouad MM. Skin lesions classification into eight classes for ISIC 2019 using deep convolutional neural network and transfer learning. IEEE Access. 2020;8:114822–32. https://doi.org/10.1109/ACCESS.2020.3003890.

El-Khatib H, Popescu D, Ichim L. Deep learning-based methods for automatic diagnosis of skin lesions. Sensors. 2020;20(6):1753. https://doi.org/10.3390/s20061753.

Ha Q, Liu B, Liu F. Identifying melanoma images using efficientnet ensemble: winning solution to the siim-isic melanoma classification challenge. Preprint arXiv:2010.05351. 2020 Oct 11.

Kumar NS, Hariprasath K, Tamilselvi S, Kavinya A, Kaviyavarshini N. Detection of stages of melanoma using deep learning. Multimed Tools Appl. 2021;80:18677–92. https://doi.org/10.1007/s11042-021-10572-1.

Alfi IA, Rahman MM, Shorfuzzaman M, Nazir A. A non-invasive interpretable diagnosis of melanoma skin cancer using deep Learning and ensemble stacking of machine learning models. Diagnostics. 2022;12(3):726. https://doi.org/10.3390/diagnostics12030726.

Lu X, Firoozeh Abolhasani Zadeh YA. Deep learning-based classification for melanoma detection using XceptionNet. J Healthc Eng. 2022. https://doi.org/10.1155/2022/2196096.

Alenezi F, Armghan A, Polat K. A multi-stage melanoma recognition framework with deep residual neural network and hyperparameter optimization-based decision support in dermoscopy images. Expert Syst Appl. 2023;215:119352. https://doi.org/10.1016/j.eswa.2022.119352.

Maqsood S, Damaševičius R. Multiclass skin lesion localization and classification using deep learning based features fusion and selection framework for smart healthcare. Neural Netw. 2023;160:238–58. https://doi.org/10.1016/j.neunet.2023.01.022.

Sloan Kettering Cancer Center. The international skin imaging collaboration. 2019. Available online: https://www.isic-archive.com/#!/topWithHeader/wideContentTop/main/. Accessed on 15 Dec 2020.

Mendonça T, Ferreira PM, Marques JS, Marcal AR, Rozeira J. PH2 - A dermoscopic image database for research and benchmarking. In 2013 35th annual international conference of the IEEE engineering in medicine and biology society (EMBC); 2013, July. p. 5437–40. https://doi.org/10.1109/EMBC.2013.6610779.

Combalia M, Codella NC, Rotemberg V, Helba B, Vilaplana V, Reiter O, et al. Bcn20000: dermoscopic lesions in the wild. Preprint arXiv:1908.02288; 2019.

Funding

No funding was received.

Author information

Contributions

HGZ and KR planned the experiments. KR implemented the proposed model. KR and HGZ contributed to the interpretation of the results, took the lead in writing the manuscript, reviewed it, provided critical feedback, and helped shape the research, analysis, and manuscript editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rezaee, K., Zadeh, H.G. Self-attention transformer unit-based deep learning framework for skin lesions classification in smart healthcare. Discov Appl Sci 6, 3 (2024). https://doi.org/10.1007/s42452-024-05655-1
