Abstract

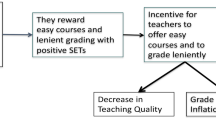

Student evaluations of teaching (SET) are used to measure faculty’s teaching effectiveness and to make high-stakes personnel decisions about hiring, firing, promotion, merit pay, and teaching awards. However, evidence demonstrates that SET are invalid and that students do not learn more from more highly rated professors. SET, combined with unreasonable standards of satisfactory performance, set up and fuel a high-stakes race among the professoriate to place at the forefront. SET are used as a standard-o-meter to shape professors’ behavior to students’ satisfaction, that is, to fulfill students’ needs and wants. SET are one of the major causes of work deflation, grade inflation, standards reduction, and the declining quality of higher education. Other causes include several relevant trends: (a) the educational attainment of populations has increased massively, (b) students’ ability/intelligence has declined to the population mean, (c) students’ study time has decreased, and (d) students’ grades have increased rapidly, with A grades now being the most common. Finally, continued use of SET as a measure of faculty’s teaching effectiveness runs afoul of various professional, ethical, and legal standards. There are several paths for evaluating faculty in the future. One option is to continue to pretend that SET are valid and to continue to use SET as a measure of professors’ teaching effectiveness, that is, to continue to travel on higher education’s highway to hell, where students’ satisfaction is all that matters and students’ learning is optional. Other options are more rational and do not pressure professors to deflate work, deflate standards, and inflate grades.

Availability of Data and Materials

Not applicable.

Acknowledgements

I am thankful to Douglas Bernstein for numerous insightful discussions and comments on earlier versions of this work.

Author information

Contributions

Not applicable.

Ethics declarations

Ethics Approval

Not applicable.

Competing Interests

The author declares no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Uttl, B. Student Evaluation of Teaching (SET): Why the Emperor Has No Clothes and What We Should Do About It. Hu Arenas 7, 403–437 (2024). https://doi.org/10.1007/s42087-023-00361-7

DOI: https://doi.org/10.1007/s42087-023-00361-7