Abstract

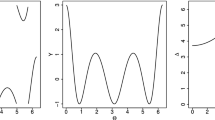

We discuss kernel-based nonparametric regression in which the predictor is supported on the circle and the response is supported on the real line. Nonparametric regression is used to analyze circular–linear data because of its flexibility. However, nonparametric regression is generally less accurate than a parametric regression that is appropriate for the population model. Because statisticians need more accurate nonparametric regression models, we investigate the performance of sine series local polynomial regression while selecting the most suitable kernel class. The asymptotic results show that higher-order estimators reduce the conditional bias but do not improve the conditional variance. We show that higher-order estimators improve the convergence rate of the weighted conditional mean integrated squared error. We also prove the asymptotic normality of the estimator. We conduct a numerical experiment to examine the small-sample characteristics of the estimator in scenarios where the error term is homoscedastic or heteroscedastic. The results show that choosing a higher degree improves finite-sample performance in both the homoscedastic and heteroscedastic scenarios. In particular, in some scenarios where the regression function is wiggly, higher-order estimators perform significantly better than the local constant and local linear estimators.
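The estimator can be sketched as a kernel-weighted least-squares fit on powers of \(\sin(\varTheta_{i}-\theta)\), with \(\hat{m}(\theta;p,h)\) taken as the fitted intercept. The Python sketch below is illustrative only: it assumes the von Mises choice \(L(r)=e^{-r}\) evaluated at \(h^{-2}\{1-\cos(\varTheta_{i}-\theta)\}\), and the names `von_mises_weights` and `sine_local_poly` are hypothetical, not the authors' code.

```python
import numpy as np


def von_mises_weights(theta_i, theta, h):
    """Unnormalized kernel weights K_h(Theta_i - theta).

    Assumption: von Mises choice L(r) = exp(-r) evaluated at
    h^{-2} (1 - cos(Theta_i - theta)). The normalizing constant C_h(L)
    cancels in the weighted least-squares fit, so it is omitted.
    """
    return np.exp(-(1.0 - np.cos(theta_i - theta)) / h**2)


def sine_local_poly(theta_i, y, theta, p, h):
    """Degree-p sine series local polynomial estimate of m(theta).

    Minimizes sum_i K_h(Theta_i - theta) * (Y_i - sum_t beta_t sin(Theta_i - theta)^t)^2
    over beta_0, ..., beta_p and returns beta_0.
    """
    s = np.sin(theta_i - theta)
    S = np.vander(s, N=p + 1, increasing=True)   # columns 1, s, s^2, ..., s^p
    w = von_mises_weights(theta_i, theta, h)
    StW = S.T * w                                # S^T W without building an n x n matrix
    beta = np.linalg.solve(StW @ S, StW @ y)
    return beta[0]


# Usage: a wiggly regression function with homoscedastic noise.
rng = np.random.default_rng(0)
theta_obs = rng.uniform(-np.pi, np.pi, 500)
y_obs = np.sin(3 * theta_obs) + rng.normal(scale=0.2, size=500)
estimates = {p: sine_local_poly(theta_obs, y_obs, 0.5, p, h=0.3) for p in range(4)}
print(estimates)
```

Solving the normal equations directly keeps the sketch short; for larger p, a QR-based least-squares solver would be numerically safer.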


References

Di Marzio, M., Panzera, A., & Taylor, C. C. (2009). Local polynomial regression for circular predictors. Statistics & Probability Letters, 79(19), 2066–2075.

Di Marzio, M., Panzera, A., & Taylor, C. C. (2011). Kernel density estimation on the torus. Journal of Statistical Planning and Inference, 141(6), 2156–2173.

Di Marzio, M., Panzera, A., & Taylor, C. C. (2014). Nonparametric regression for spherical data. Journal of the American Statistical Association, 109(506), 748–763.

García-Portugués, E., Van Keilegom, I., Crujeiras, R. M., & González-Manteiga, W. (2016). Testing parametric models in linear-directional regression. Scandinavian Journal of Statistics, 43(4), 1178–1191.

Hall, P., Watson, G., & Cabrera, J. (1987). Kernel density estimation with spherical data. Biometrika, 74(4), 751–762.

Lejeune, M., & Sarda, P. (1992). Smooth estimators of distribution and density functions. Computational Statistics & Data Analysis, 14(4), 457–471.

Mardia, K. V., & Jupp, P. E. (2000). Directional statistics. Wiley.

Qin, X., Zhang, J. S., & Yan, X. D. (2011). A nonparametric circular-linear multivariate regression model with a rule-of-thumb bandwidth selector. Computers & Mathematics with Applications, 62(8), 3048–3055.

Ruppert, D., & Wand, M. P. (1994). Multivariate locally weighted least squares regression. The Annals of Statistics, 22(3), 1346–1370.

Tsuruta, Y., & Sagae, M. (2017). Higher order kernel density estimation on the circle. Statistics & Probability Letters, 131, 46–50.

Tsuruta, Y., & Sagae, M. (2018). Properties for circular nonparametric regressions by von Mises and wrapped Cauchy kernels. Bulletin of Informatics and Cybernetics, 50, 1–13.

Wand, M. P., & Jones, M. C. (1994). Kernel smoothing. CRC Press.

Wang, X. (2002). Exponential bounds of mean error for the kernel regression estimates with directional data. Chinese Journal of Contemporary Mathematics, 23(1), 45–52.

Wang, X., Zhao, L., & Wu, Y. (2000). Distribution free laws of the iterated logarithm for kernel estimator of regression function based on directional data. Chinese Annals of Mathematics, 21(4), 489–498.

Acknowledgements

We would like to thank the reviewers for the helpful comments. This work was supported by JSPS KAKENHI Grant Numbers JP16K00043, JP16H02790, JP17H03321, and JP20K19760.

Ethics declarations

Funding

This work was supported by JSPS KAKENHI Grant Numbers JP16K00043, JP16H02790, JP17H03321, and JP20K19760.

Conflict of interest

The authors declare that they have no conflicts of interest related to this paper.

Availability of data and materials

Not applicable.

Code availability

Not applicable.

Appendices

Appendix A

Proof

(Proof of Proposition 1) We replace each term \((\varTheta _{i}-\theta )^{t}\) in the Taylor expansion of \(m(\varTheta _{i})\) with \(\{\sin ^{-1}(\sin (\varTheta _{i}-\theta ))\}^{t}\), which is valid for \(|\varTheta _{i}-\theta |<\pi /2\) because \(\sin ^{-1}(\sin x)=x\) for \(|x|<\pi /2\). Then, \(m(\varTheta _{i})\) is

for \(|\varTheta _{i}-\theta |<\pi /2\). The Taylor expansion of \(\sin ^{-1}(u)\) is

where \(b_{s} =\{(2s-1)!!\}/\{(2s)!!(2s+1)\}\) with the convention \((-1)!!=0!!=1\), so that \(b_{0}=1\). By combining (12) and (13), we obtain the sine series

for \(|\varTheta _{i}- \theta | <\pi /2\), where \(D_{t}:= (\sum ^{[(p+1)/2]}_{s=0}b_{s}\sin (\varTheta _{i}-\theta )^{2s+1})^{t}\) and \(D_{t}= O_{p}(\sin (\varTheta _{i}-\theta )^{p+2})\).
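The coefficients \(b_{s}\) and the identity \(\sin ^{-1}(\sin x)=x\) for \(|x|<\pi /2\), on which this sine series rests, can be checked numerically. A minimal Python sketch follows; `double_factorial`, `b`, and `arcsin_series` are hypothetical helper names.

```python
import math


def double_factorial(k):
    """k!! with the convention (-1)!! = 0!! = 1."""
    result = 1
    while k > 1:
        result *= k
        k -= 2
    return result


def b(s):
    """b_s = (2s-1)!! / {(2s)!! (2s+1)}, the coefficient of u^{2s+1} in arcsin(u)."""
    return double_factorial(2 * s - 1) / (double_factorial(2 * s) * (2 * s + 1))


def arcsin_series(u, terms):
    """Partial sum sum_{s=0}^{terms-1} b_s u^{2s+1}."""
    return sum(b(s) * u ** (2 * s + 1) for s in range(terms))


x = 1.0                                   # |x| < pi/2, so arcsin(sin x) = x
print([b(s) for s in range(4)])           # 1, 1/6, 3/40, 5/112 as floats
print(arcsin_series(math.sin(x), 60), x)
```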

Applying the multinomial theorem to the term \((\sum ^{[(p+1)/2]}_{s=0}b_{s}\sin (\varTheta _{i}-\theta )^{2s+1})^{t}\), we find that it becomes

By combining (14) and (15), we obtain

The proof of Proposition 1 is complete with (16). \(\square \)
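The multinomial step can be mimicked by truncated polynomial multiplication: raising \(\sum _{s}b_{s}u^{2s+1}\) to the power t and collecting powers of \(u=\sin (\varTheta _{i}-\theta )\) turns the Taylor coefficients \(m^{(t)}(\theta )/t!\) into sine series coefficients. The sketch assumes the series has the form \(m(\varTheta _{i})\approx \sum _{j}\beta _{j}\sin (\varTheta _{i}-\theta )^{j}\) with \(\beta _{j}=\sum _{t}\{m^{(t)}(\theta )/t!\}a_{t,j}\), where \(a_{t,j}\) is the coefficient of \(u^{j}\) in \(\{\sin ^{-1}(u)\}^{t}\); all function names are hypothetical.

```python
import math

import numpy as np


def arcsin_coeffs(degree):
    """Coefficients of u^0..u^degree in arcsin(u); b_s = C(2s, s) / {4^s (2s+1)}."""
    c = np.zeros(degree + 1)
    for s in range((degree - 1) // 2 + 1):
        c[2 * s + 1] = math.comb(2 * s, s) / (4**s * (2 * s + 1))
    return c


def power_coeffs(t, degree):
    """Coefficients a_{t,j}, j = 0..degree, of {arcsin(u)}^t (multinomial expansion)."""
    base = arcsin_coeffs(degree)
    out = np.zeros(degree + 1)
    out[0] = 1.0                                      # {arcsin(u)}^0 = 1
    for _ in range(t):
        out = np.convolve(out, base)[: degree + 1]    # multiply, then truncate
    return out


def sine_series_coeffs(derivs, degree):
    """beta_j = sum_t {m^(t)(theta) / t!} a_{t,j}; derivs[t] holds m^(t)(theta)."""
    beta = np.zeros(degree + 1)
    for t, d in enumerate(derivs[: degree + 1]):
        beta += d / math.factorial(t) * power_coeffs(t, degree)
    return beta


print(power_coeffs(2, 6))   # {arcsin(u)}^2 = u^2 + u^4/3 + 8u^6/45 + ...
# Example: m(x) = exp(x) at theta = 0, where every derivative equals 1.
beta = sine_series_coeffs([1.0] * 9, 8)
u = math.sin(0.1)
print(sum(beta[j] * u**j for j in range(9)), math.exp(0.1))
```

Terms with t > degree are dropped safely, because \(\{\sin ^{-1}(u)\}^{t}\) starts at \(u^{t}\).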

Appendix B

Proof

(Proof of Lemma 1) Recalling the second-order Taylor expansion \(\cos (hz)= 1-h^{2}z^{2}/2 + O(h^{4})\) for fixed z, we have

The proof of property (i) is complete with (17). We next derive property (ii). Condition (b) implies that \(\bar{L}(z) < Mz^{-(2p + 4 +\alpha )}\) for sufficiently large z, where M is an upper bound of \(\bar{L}\). When n is large, we obtain

Hence, we can ignore the tail part of \(\mu _{t}(\bar{L})\). The proof of property (ii) is complete with (18).
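The expansion of \(\cos (hz)\) used for property (i) implies \(h^{-2}\{1-\cos (hz)\}\rightarrow z^{2}/2\) as \(h\rightarrow 0\), with an error of at most \(h^{2}z^{4}/24\) (the Taylor remainder bound). A short Python check; `scaled_argument` is a hypothetical name.

```python
import numpy as np


def scaled_argument(z, h):
    """h^{-2} (1 - cos(h z)): the kernel argument after substituting theta = h z."""
    return (1.0 - np.cos(h * z)) / h**2


z = np.linspace(-3.0, 3.0, 61)
for h in (0.5, 0.1, 0.02):
    gap = np.max(np.abs(scaled_argument(z, h) - z**2 / 2))
    bound = h**2 * np.max(z**4) / 24      # Taylor remainder bound h^2 z^4 / 24
    print(f"h={h}: max gap {gap:.2e} <= bound {bound:.2e}")
```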

We next derive property (iii). Properties (i) and (ii) provide

Therefore, the normalizing constant \(C_{h}(L)\) is asymptotically equivalent to c(L)h. The proof of property (iii) is complete with (19).
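For a concrete instance of property (iii), take the von Mises case \(L(r)=e^{-r}\). The sketch assumes \(C_{h}(L)=\int _{-\pi }^{\pi }L(h^{-2}\{1-\cos \theta \})\,d\theta \) and \(c(L)=\int _{-\infty }^{\infty }L(z^{2}/2)\,dz=\sqrt{2\pi }\); these definitions are assumptions made for illustration. The rectangle rule on a uniform periodic grid is used because it is highly accurate for smooth periodic integrands.

```python
import numpy as np


def normalizing_constant(h, n_grid=20000):
    """C_h(L) for L(r) = exp(-r), via the rectangle rule over one full period."""
    theta = np.linspace(-np.pi, np.pi, n_grid, endpoint=False)
    integrand = np.exp(-(1.0 - np.cos(theta)) / h**2)
    return (2 * np.pi / n_grid) * np.sum(integrand)


c_L = np.sqrt(2 * np.pi)   # assumed c(L): integral of exp(-z^2/2) over the real line
ratios = {h: normalizing_constant(h) / (c_L * h) for h in (0.5, 0.2, 0.05)}
for h, r in ratios.items():
    print(f"h={h}: C_h(L) / (c(L) h) = {r:.6f}")
```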

We then consider property (iv). Let Q be the upper bound of L. Note that \(1 \le 1-\cos (\theta ) \le 2\) for \(|\theta | \ge \pi /2 \). Because L(r) is a monotonically non-increasing function, \(L(h^{-2}\{ 1- \cos (\theta ) \}) \le L(h^{-2} )\) for \(|\theta | \ge \pi /2 \). When n is large enough, condition (b) gives \(L(h^{-2}\{ 1- \cos (\theta ) \}) \le L(h^{-2} ) \le Q h^{2(p + 2 + \alpha /2)}\) for \(|\theta | \ge \pi /2\). By combining condition (b) and property (iii), we obtain the order of the kernel's tail

for \(|\theta | \ge \pi /2 \). The proof of property (iv) is complete with (20). \(\square \)
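Property (iv) can be illustrated in the same von Mises setting (an assumed kernel): on \(|\theta |\ge \pi /2\) the kernel is at most \(L(h^{-2})/C_{h}(L)=e^{-1/h^{2}}/C_{h}(L)\), which vanishes faster than any power of h. The sketch compares this tail supremum with \(h^{p+2}\) for p = 3; the helper names are hypothetical.

```python
import numpy as np


def normalizing_constant(h, n_grid=20000):
    """C_h(L) for L(r) = exp(-r), via the rectangle rule over one full period."""
    theta = np.linspace(-np.pi, np.pi, n_grid, endpoint=False)
    return (2 * np.pi / n_grid) * np.sum(np.exp(-(1.0 - np.cos(theta)) / h**2))


def tail_sup(h, n_grid=20000):
    """sup of K_h(theta) over pi/2 <= |theta| <= pi (the kernel is even in theta)."""
    theta = np.linspace(np.pi / 2, np.pi, n_grid)
    return np.max(np.exp(-(1.0 - np.cos(theta)) / h**2)) / normalizing_constant(h)


p = 3
tail_ratios = [tail_sup(h) / h ** (p + 2) for h in (0.5, 0.3, 0.2, 0.1)]
print(tail_ratios)   # shrinks rapidly as h decreases
```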

Appendix C

Proof

(Proof of Theorem 1) If n is large enough, we can ignore observations with \(|\varTheta _{i}-\theta |\ge \pi /2\), because for them \(K_{h}(\varTheta _{i}-\theta ) = o_{p}(h^{p+2})\) by property (iv) of Lemma 1. Therefore, to simplify the proof, we assume that all observations in the sample \({\varvec{\varTheta }}_{n}\) satisfy \(|\varTheta _{i}-\theta |<\pi /2\). Let \({\varvec{M}}:=(m(\varTheta _{1}),\ldots ,m(\varTheta _{n}))^{T}\). Applying the sine series of Proposition 1 to each entry of \({\varvec{M}}\), we obtain

where

and the remainder \({\varvec{R}}_{m,\theta }\) satisfies \({\varvec{R}}_{m,\theta }=o_{p}( {\varvec{T}}_{m,\theta })\). From (21), we obtain the bias as

Let \({\varvec{A}}:=\mathop {\mathrm{diag}}\nolimits \{1,h,\ldots ,h^{p}\}\) and \({\varvec{\mu }}_{k}:=(\mu _{k},\mu _{k+1},\ldots ,\mu _{k+p})^{T}\) with \(\mu _{j}:=\mu _{j}(\bar{L})\). Let \({\varvec{Q}}_{p}\) be the \((p+1)\times (p+1)\) matrix whose (i, j) entry is \(\mu _{i+j-1}\). Recall that \({\varvec{N}}_{p}\) is the \((p+1)\times (p+1)\) matrix whose (i, j) entry is \(\mu _{i+j-2}\).
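To make the matrix notation concrete, the sketch below builds \({\varvec{N}}_{p}\) and \({\varvec{Q}}_{p}\) from moments \(\mu _{j}=\int z^{j}\bar{L}(z)\,dz\). It assumes \(\bar{L}(z)=e^{-z^{2}/2}\) (the shape obtained as the small-h limit of the von Mises kernel), for which \(\mu _{j}=\sqrt{2\pi }\,(j-1)!!\) for even j and \(\mu _{j}=0\) for odd j; both the kernel and the moment definition are assumptions here, and the function names are hypothetical.

```python
import math

import numpy as np


def gaussian_moment(j):
    """mu_j = integral of z^j exp(-z^2/2) over the real line (assumed L-bar)."""
    j = int(j)
    if j % 2 == 1:
        return 0.0
    return math.sqrt(2 * math.pi) * math.prod(range(j - 1, 0, -2))  # sqrt(2 pi) (j-1)!!


def moment_matrices(p, mu=gaussian_moment):
    """Return N_p with (i, j) entry mu_{i+j-2} and Q_p with (i, j) entry mu_{i+j-1}.

    With 0-based indices i0 = i - 1 and j0 = j - 1 these are mu_{i0+j0} and mu_{i0+j0+1}.
    """
    idx = np.add.outer(np.arange(p + 1), np.arange(p + 1))
    moments = np.vectorize(mu, otypes=[float])
    return moments(idx), moments(idx + 1)


N, Q = moment_matrices(2)
print(N / math.sqrt(2 * math.pi))   # Hankel matrix of moments, scaled by sqrt(2 pi)
print(Q / math.sqrt(2 * math.pi))
```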

We give the asymptotic forms of \({\varvec{e}}_{1}^{T}(n^{-1}{\varvec{S}}_{\theta }^{T}{\varvec{W}}_{\theta }{\varvec{S}}_{\theta })^{-1}\) and \(n^{-1}{\varvec{S}}_{\theta }^{T}{\varvec{W}}_{\theta }{\varvec{T}}_{m,\theta }\) in (23) in the following two lemmas.

Lemma 2

The term \({\varvec{e}}_{1}^{T}(n^{-1}{\varvec{S}}_{\theta }^{T}{\varvec{W}}_{\theta }{\varvec{S}}_{\theta })^{-1}\) is given by

Lemma 3

The term \(n^{-1}{\varvec{A}}^{-1}{\varvec{S}}_{\theta }^{T}{\varvec{W}}_{\theta }{\varvec{T}}_{m,\theta }\) is given by

Proof

(Proof of Lemma 2) From (i), (ii), and (iii) of Lemma 1, we find that each entry in \({\varvec{S}}_{\theta }^{T}{\varvec{W}}_{\theta }{\varvec{S}}_{\theta }\) is equal to

From (24), we have

Define \(g(hf^{\prime }(\theta ){\varvec{Q}}_{p}):=[f(\theta ){\varvec{N}}_{p}+hf^{\prime }(\theta ){\varvec{Q}}_{p}]^{-1}\). The Taylor expansion of g is then given by

Combining (25), (26), and \({\varvec{e}}_{1}^{T}{\varvec{A}}^{-1}={\varvec{e}}_{1}^{T}{\varvec{A}}={\varvec{e}}_{1}^{T}\), we find that the row vector \({\varvec{e}}_{1}^{T}(n^{-1}{\varvec{S}}_{\theta }^{T}{\varvec{W}}_{\theta }{\varvec{S}}_{\theta })^{-1}\) is equal to

The proof of Lemma 2 is complete with (27). \(\square \)
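To first order in h, the standard Neumann series gives \([f{\varvec{N}}_{p}+hf^{\prime }{\varvec{Q}}_{p}]^{-1}=f^{-1}{\varvec{N}}_{p}^{-1}-hf^{\prime }f^{-2}{\varvec{N}}_{p}^{-1}{\varvec{Q}}_{p}{\varvec{N}}_{p}^{-1}+O(h^{2})\). The sketch checks that the remainder shrinks like \(h^{2}\); it assumes Gaussian moments \(\mu _{j}=\int z^{j}e^{-z^{2}/2}\,dz\) and arbitrary illustrative values f = 0.3 and f' = 0.7.

```python
import math

import numpy as np


def gaussian_moment(j):
    """mu_j = integral of z^j exp(-z^2/2) dz (assumed L-bar)."""
    j = int(j)
    return 0.0 if j % 2 else math.sqrt(2 * math.pi) * math.prod(range(j - 1, 0, -2))


def moment_matrices(p):
    """N_p (entries mu_{i+j-2}) and Q_p (entries mu_{i+j-1})."""
    idx = np.add.outer(np.arange(p + 1), np.arange(p + 1))
    moments = np.vectorize(gaussian_moment, otypes=[float])
    return moments(idx), moments(idx + 1)


def expansion_error(h, f=0.3, f_prime=0.7, p=2):
    """Max entrywise error of the first-order expansion of [f N_p + h f' Q_p]^{-1}."""
    N, Q = moment_matrices(p)
    exact = np.linalg.inv(f * N + h * f_prime * Q)
    N_inv = np.linalg.inv(N)
    first_order = N_inv / f - h * f_prime / f**2 * N_inv @ Q @ N_inv
    return np.max(np.abs(exact - first_order))


for h in (1e-1, 1e-2, 1e-3):
    print(f"h={h:g}: error {expansion_error(h):.3e}, error / h^2 {expansion_error(h) / h**2:.3f}")
```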

Proof

(Proof of Lemma 3) From (24), we derive

By combining (22) and (28), we find that

The proof of Lemma 3 is complete with (29). \(\square \)

Combining (23) with Lemmas 2 and 3, we find that the bias is equal to

To simplify (30), we employ the following lemma given by Ruppert and Wand (1994).

Lemma 4

It holds that

(i) if j is odd, then \(\mu _{j}=0\); otherwise, \(\mu _{j}\ne 0\);

(ii) if \(i+j\) is odd, then \(({\varvec{N}}_{p})_{ij}=({\varvec{N}}_{p}^{-1})_{ij}=0\);

(iii) if \(i+j\) is even, then \(({\varvec{Q}}_{p})_{ij}=0\).

When p is odd, combining (i) and (ii) of Lemma 4 shows that the first term on the right-hand side (RHS) of (30) does not vanish. This leads to

for odd p.

Next, we consider the case where p is even. Combining (i) and (ii) of Lemma 4, we find that the first term on the RHS of (30) is zero. Additionally, the first p columns of \({\varvec{Q}}_{p}\) are identical to the last p columns of \({\varvec{N}}_{p}\). Using this fact together with (ii) and (iii) of Lemma 4, Ruppert and Wand (1994) showed that \({\varvec{e}}^{T}_{1}{\varvec{N}}_{p}^{-1}{\varvec{Q}}_{p}\) is zero, so the last term on the RHS of (30) vanishes. Therefore, we obtain

for even p.
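Lemma 4 and the identity \({\varvec{e}}^{T}_{1}{\varvec{N}}_{p}^{-1}{\varvec{Q}}_{p}={\varvec{0}}\) for even p can be confirmed numerically for small p. The sketch assumes the Gaussian \(\bar{L}(z)=e^{-z^{2}/2}\); `check_lemma4` and `rw_row` are hypothetical names.

```python
import math

import numpy as np


def gaussian_moment(j):
    """mu_j = integral of z^j exp(-z^2/2) dz (assumed L-bar)."""
    j = int(j)
    return 0.0 if j % 2 else math.sqrt(2 * math.pi) * math.prod(range(j - 1, 0, -2))


def moment_matrices(p):
    """N_p (entries mu_{i+j-2}) and Q_p (entries mu_{i+j-1})."""
    idx = np.add.outer(np.arange(p + 1), np.arange(p + 1))
    moments = np.vectorize(gaussian_moment, otypes=[float])
    return moments(idx), moments(idx + 1)


def check_lemma4(p):
    """True if the zero patterns (ii) and (iii) of Lemma 4 hold up to rounding."""
    N, Q = moment_matrices(p)
    N_inv = np.linalg.inv(N)
    odd = np.add.outer(np.arange(p + 1), np.arange(p + 1)) % 2 == 1   # i + j odd
    tol = 1e-10 * max(np.abs(N).max(), np.abs(N_inv).max())
    return bool(np.all(np.abs(N[odd]) <= tol) and np.all(np.abs(N_inv[odd]) <= tol)
                and np.all(np.abs(Q[~odd]) <= tol))


def rw_row(p):
    """e_1^T N_p^{-1} Q_p (N_p is symmetric, so N_p^{-1} e_1 is its first row)."""
    N, Q = moment_matrices(p)
    return np.linalg.solve(N, np.eye(p + 1)[:, 0]) @ Q


for p in range(6):
    print(p, check_lemma4(p), np.round(rw_row(p), 10))
```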

Let \(c_{ij}\) denote the (i, j) cofactor of the determinant \(|{\varvec{N}}_{p}|\), so that \(({\varvec{N}}_{p}^{-1})_{ij}=c_{ij}/|{\varvec{N}}_{p}|\) because \({\varvec{N}}_{p}\) is symmetric, and let \(|{\varvec{M}}_{p}(z)|=\sum _{j}c_{1j}z^{j-1}\). The relation between the k-th moment of \(\bar{L}_{(p)}\) and \(\sum ^{p+1}_{j=1}({\varvec{N}}_{p}^{-1})_{1j}\mu _{k-1+j}\) is given by the following equation:

When we apply (33) to (31) and (32), we obtain biases (6) and (7).
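Relation (33) can be checked numerically under the assumption that the equivalent kernel is \(\bar{L}_{(p)}(z)=\sum _{j=1}^{p+1}({\varvec{N}}_{p}^{-1})_{1j}z^{j-1}\bar{L}(z)=|{\varvec{M}}_{p}(z)|\bar{L}(z)/|{\varvec{N}}_{p}|\), so that \(\mu _{k}(\bar{L}_{(p)})=\sum _{j}({\varvec{N}}_{p}^{-1})_{1j}\mu _{k-1+j}\). The sketch also shows the consequence \(\mu _{0}(\bar{L}_{(p)})=1\) and \(\mu _{k}(\bar{L}_{(p)})=0\) for \(1\le k\le p\). The Gaussian \(\bar{L}\) and the helper names are assumptions.

```python
import math

import numpy as np

z = np.linspace(-12.0, 12.0, 24001)   # wide grid: exp(-z^2/2) is negligible at |z| = 12
dz = z[1] - z[0]
L_bar = np.exp(-z**2 / 2)             # assumed L-bar


def gaussian_moment(j):
    """Exact mu_j of exp(-z^2/2): sqrt(2 pi) (j-1)!! for even j, 0 for odd j."""
    j = int(j)
    return 0.0 if j % 2 else math.sqrt(2 * math.pi) * math.prod(range(j - 1, 0, -2))


def first_row_of_N_inv(p):
    """(N_p^{-1})_{1j}, j = 1..p+1; N_p is symmetric, so this equals N_p^{-1} e_1."""
    idx = np.add.outer(np.arange(p + 1), np.arange(p + 1))
    N = np.vectorize(gaussian_moment, otypes=[float])(idx)
    return np.linalg.solve(N, np.eye(p + 1)[:, 0])


def equivalent_kernel_moment(p, k):
    """Return (grid integral of z^k L_(p)(z), right-hand side of relation (33))."""
    a = first_row_of_N_inv(p)
    L_p = np.polynomial.polynomial.polyval(z, a) * L_bar
    numeric = np.sum(z**k * L_p) * dz
    formula = sum(a[j] * gaussian_moment(k + j) for j in range(p + 1))  # mu_{k-1+j}, 1-based j
    return numeric, formula


for p in (1, 2, 3):
    print(p, [np.round(equivalent_kernel_moment(p, k), 8) for k in range(p + 3)])
```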

We next consider the variance. Let \({\varvec{V}}:=\mathop {\mathrm{diag}}\nolimits \{v(\varTheta _{1}),\ldots ,v(\varTheta _{n})\}\). The variance is given by

Moreover, let \({\varvec{T}}_{p}\) be the \((p+1)\times (p+1)\) matrix with (i, j) entry equal to \(\mu _{i+j-2}(\bar{L}^{2})\). We approximate matrix \(n^{-1}{\varvec{S}}_{\theta }^{T}{\varvec{W}}_{\theta }{\varvec{V}}{\varvec{W}}_{\theta }{\varvec{S}}_{\theta }\) with the following lemma.

Lemma 5

Matrix \(n^{-1}{\varvec{S}}_{\theta }^{T}{\varvec{W}}_{\theta }{\varvec{V}}{\varvec{W}}_{\theta }{\varvec{S}}_{\theta }\) is given by

Proof

(Proof of Lemma 5) When n is large enough, the same argument as in the proof of property (ii) of Lemma 1 gives

for \(l \le 2p+2\). Define \(\hat{r}_{l}(\theta ;h):=n^{-1}\sum _{i}K_{h}(\varTheta _{i}-\theta )^{2}\sin (\varTheta _{i}-\theta )^{l}v(\varTheta _{i})\). Then, \(n^{-1}{\varvec{S}}_{\theta }^{T}{\varvec{W}}_{\theta }{\varvec{V}}{\varvec{W}}_{\theta }{\varvec{S}}_{\theta }\) is the \((p+1)\times (p+1)\) matrix whose (i, j) entry is \(\hat{r}_{i+j-2}(\theta ;h)\). Combining (1), property (iii) of Lemma 1, and (35), we obtain

From (36), we have

The proof of Lemma 5 is complete with (37). \(\square \)

Combining (34) with Lemmas 2 and 5, we find that the variance is equal to

The term \({\varvec{e}}^{T}_{1}{\varvec{N}}^{-1}_{p}{\varvec{T }}_{p}{\varvec{N}}^{-1}_{p}{\varvec{e}}_{1}\) on the RHS of (38) is given by

The following equation can be used to simplify \({\varvec{e}}^{T}_{1}{\varvec{N}}^{-1}_{p}{\varvec{T }}_{p}{\varvec{N}}^{-1}_{p}{\varvec{e}}_{1}\).

By combining (39) and (40), we obtain

Furthermore, by combining (38) and (41), we obtain variance (8). \(\square \)
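Under the assumed equivalent-kernel form \(\bar{L}_{(p)}(z)=\sum _{j}({\varvec{N}}_{p}^{-1})_{1j}z^{j-1}\bar{L}(z)\), the quadratic form \({\varvec{e}}^{T}_{1}{\varvec{N}}^{-1}_{p}{\varvec{T}}_{p}{\varvec{N}}^{-1}_{p}{\varvec{e}}_{1}\) in (38) equals \(\int \bar{L}_{(p)}(z)^{2}\,dz\), because expanding the square gives \({\varvec{a}}^{T}{\varvec{T}}_{p}{\varvec{a}}\) with \({\varvec{a}}={\varvec{N}}_{p}^{-1}{\varvec{e}}_{1}\). The sketch checks this numerically for the Gaussian \(\bar{L}\), computing all moments on a grid; the names are hypothetical.

```python
import numpy as np

z = np.linspace(-12.0, 12.0, 24001)
dz = z[1] - z[0]
L_bar = np.exp(-z**2 / 2)   # assumed L-bar


def grid_moment(weight, j):
    """Rectangle-rule approximation of the integral of z^j weight(z)."""
    return np.sum(z**j * weight) * dz


def variance_constant(p):
    """Return (e_1^T N_p^{-1} T_p N_p^{-1} e_1, integral of L_(p)(z)^2)."""
    idx = np.add.outer(np.arange(p + 1), np.arange(p + 1))
    N = np.vectorize(lambda j: grid_moment(L_bar, j), otypes=[float])(idx)
    T = np.vectorize(lambda j: grid_moment(L_bar**2, j), otypes=[float])(idx)
    a = np.linalg.solve(N, np.eye(p + 1)[:, 0])      # N_p^{-1} e_1 (N_p symmetric)
    quad_form = a @ T @ a
    L_p = np.polynomial.polynomial.polyval(z, a) * L_bar
    return quad_form, np.sum(L_p**2) * dz


for p in range(5):
    print(p, variance_constant(p))
```

For p = 0 both quantities reduce to \(1/(2\sqrt{\pi })\), the familiar roughness of the standard normal density.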

Appendix D

Proof

(Proof of Theorem 2) Write \({\varvec{e}}^{T}_{1}(n^{-1}{\varvec{S}}_{\theta }^{T}{\varvec{W}}_{\theta }{\varvec{S}}_{\theta })^{-1}{\varvec{S}}_{\theta }^{T}{\varvec{W}}_{\theta }=(c_{1},\ldots ,c_{n})\). Then \(\hat{m}(\theta ;p,h)=n^{-1}\sum _{i=1}^{n}c_{i}Y_{i}\). Let \(S^{2}_{n}:=\sum _{i=1}^{n}\mathrm {Var}[h^{1/2}c_{i}Y_{i}|{\varvec{\varTheta }}_{n}]\) denote the sum of the conditional variances of \(h^{1/2}c_{i}Y_{i}\). From (8), we find that \(S^{2}_{n}\) is equal to

From (42), we have

for any \(\varepsilon >0\). By combining (42) and (43), we have

for any \(\varepsilon >0\). Equation (44) indicates that the Lindeberg condition for \(h^{1/2}c_{i}Y_{i}\) holds. By combining (42) and the central limit theorem, we obtain

as \(n\rightarrow \infty \).
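The weights \(c_{i}\) can be computed directly. Doing so exposes the reproducing property \(n^{-1}\sum _{i}c_{i}\sin (\varTheta _{i}-\theta )^{j}=1\) for j = 0 and \(=0\) for \(1\le j\le p\), which follows from the definition of \(c_{i}\), and gives \(S^{2}_{n}=h\sum _{i}c_{i}^{2}v(\varTheta _{i})\) for conditionally independent errors. The sketch assumes the von Mises kernel; the variance function `v` and the other names are hypothetical.

```python
import numpy as np


def local_weights(theta_i, theta, p, h):
    """(c_1, ..., c_n) = e_1^T (n^{-1} S^T W S)^{-1} S^T W, so m_hat = n^{-1} sum_i c_i Y_i.

    Assumption: von Mises kernel; its normalizing constant cancels in c_i.
    """
    n = len(theta_i)
    s = np.sin(theta_i - theta)
    S = np.vander(s, N=p + 1, increasing=True)
    w = np.exp(-(1.0 - np.cos(theta_i - theta)) / h**2)
    StW = S.T * w
    return np.linalg.solve(StW @ S / n, StW)[0]


def v(theta):
    """Hypothetical heteroscedastic conditional variance function."""
    return 0.05 + 0.04 * np.cos(theta)


rng = np.random.default_rng(1)
n, p, h, theta0 = 400, 2, 0.4, 0.0
theta_obs = rng.uniform(-np.pi, np.pi, n)
y_obs = np.cos(2 * theta_obs) + rng.normal(scale=np.sqrt(v(theta_obs)))
c = local_weights(theta_obs, theta0, p, h)
m_hat = np.mean(c * y_obs)                 # n^{-1} sum_i c_i Y_i
S_n2 = h * np.sum(c**2 * v(theta_obs))     # sum_i Var[h^{1/2} c_i Y_i | Theta_n]
print(m_hat, S_n2)
```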

Thus, we have

When p is odd, we obtain \(n^{1/2}h^{1/2}\mathrm {Bias}[\hat{m}(\theta ;p,h)|{\varvec{\varTheta }}_{n}]=O_{p}(n^{\{1+\gamma (2p+3)\}/2})\) from (6). Thus, the second term on the RHS of (46) vanishes when \(\gamma < -1/(2p+3)\). Therefore, if \(\gamma <-1/(2p+3)\) and \(n\rightarrow \infty \), it then follows that

When p is even, the same argument as for odd p shows that (47) holds if \(\gamma <-1/(2p+5)\) and \(n\rightarrow \infty \). \(\square \)
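The bandwidth thresholds come from a short exponent calculation. Assuming \(h=n^{\gamma }\) with \(\gamma <0\), the order stated above for odd p corresponds to a conditional bias of \(O_{p}(h^{p+1})\), and the even-p threshold corresponds to \(O_{p}(h^{p+2})\):

```latex
n^{1/2}h^{1/2}\,\mathrm{Bias}[\hat{m}(\theta;p,h)\mid\varTheta_n]
  = O_p\!\left(n^{1/2}\,n^{\gamma/2}\,n^{\gamma(p+1)}\right)
  = O_p\!\left(n^{\{1+\gamma(2p+3)\}/2}\right) \to 0
  \quad\text{if}\quad \gamma<-\frac{1}{2p+3}\qquad(p\ \text{odd}),

n^{1/2}h^{1/2}\,O_p\!\left(h^{p+2}\right)
  = O_p\!\left(n^{\{1+\gamma(2p+5)\}/2}\right) \to 0
  \quad\text{if}\quad \gamma<-\frac{1}{2p+5}\qquad(p\ \text{even}).
```

If, as usual, \(nh=n^{1+\gamma }\rightarrow \infty \) is also required, the admissible range is \(-1<\gamma <-1/(2p+3)\) for odd p and \(-1<\gamma <-1/(2p+5)\) for even p, both nonempty for every p.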

About this article

Cite this article

Tsuruta, Y., Sagae, M. Improving kernel-based nonparametric regression for circular–linear data. Jpn J Stat Data Sci 5, 111–131 (2022). https://doi.org/10.1007/s42081-022-00145-3

DOI: https://doi.org/10.1007/s42081-022-00145-3