Abstract

Full detector simulation was among the largest CPU consumers in all CERN experiment software stacks for the first two runs of the Large Hadron Collider. In the early 2010s, it was projected that simulation demands would scale linearly with increasing luminosity, with only partial compensation from increasing computing resources. The extension of fast simulation approaches to cover more use cases that represent a larger fraction of the simulation budget is only part of the solution, because of intrinsic precision limitations. The remainder corresponds to speeding up the simulation software by several factors, which is not achievable by just applying simple optimizations to the current code base. In this context, the GeantV R&D project was launched, aiming to redesign the legacy particle transport code in order to benefit from features of fine-grained parallelism, including vectorization and increased locality of both instruction and data. This paper provides an extensive presentation of the results and achievements of this R&D project, as well as the conclusions and lessons learned from the beta version prototype.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

With ever-increasing data acquisition rates and detector complexity, the experimental particle physics program is reaching the exascale in terms of the data produced. The high-luminosity phase of the Large Hadron Collider (LHC) will produce about 150 times more data than its first run [1]; hence, a proportional increase in computing requirements is expected. All steps in the data processing chain are expected to cope with the increased throughput, under the assumption of a flat computing budget.

Particle transport simulation is an essential component in all phases of a particle physics experiment, from detector design to data analysis. Its main role is trying to predict the detector response to the traversal of particles, which is a very complex task involving a large number of models. Among the most used particle transport libraries in high energy physics (HEP) are Geant4 [2], Fluka [3], and Geant3 [4]. Simulation is one of the most computationally demanding applications in HEP, utilizing more than half of the distributed computing resources of the LHC. The increasing demand for simulated data samples can be satisfied in part with approximate (so-called) fast simulation techniques, but accelerating the detailed simulation process remains essential for increasing simulation throughput.

The ambitious experiment upgrades are occurring in a context where computing technology is rapidly evolving. Since the historical approach to improve CPUs, increasing the clock speed and shrinking the transistors, is now limited by quantum leakage, industry is exploring alternative solutions for the next technological breakthrough. The main hardware manufacturers now favor parallel (or vector) processing units as well as heterogeneous hardware solutions with accelerators such as GPUs, FPGAs, and ASICs, facilitating a performance boost for many domain-specific applications. Most HEP applications are not optimized for Single Instruction Multiple Data (SIMD) parallelism or coprocessors and therefore do not make efficient use of these new resources.

The SIMD model utilizes specialized CPU vector registers to execute the same sequence of instructions in parallel for multiple data. The Single Instruction Multiple Threads (SIMT) model has the same concept as SIMD but the common code (kernel) is executed by multiple synchronous threads. The main practical difference between the two models is the length of the data vector: short in the case of SIMD, usually found on CPUs, and much longer in the case of SIMT, usually found on GPUs. Also, SIMD requires the strict alignment in a single register of all the data to be processed, while each thread in SIMT processes data in its own, separate register. Vectorized applications are easier to port to coprocessors that implement the SIMT model.

The benefits of SIMD and SIMT have been demonstrated for applications featuring massive data parallelism, such as linear algebra and graphics. However, bringing these vectorization techniques to complex code with significant branching presents a different type of challenge. Particle transport simulation has many features hostile to SIMD, including sparse memory access into large data structures, deep conditional branching, and long algorithmic chains and deep function call stacks per data unit (a track, representing a particle state) with poor code locality.

The GeantV simulation R&D project [5] aimed to exploit modern CPU vector units by re-engineering the simulation workflow implemented in Geant4 [2] and the associated data structures. The goal was to enhance instruction locality by regrouping data (tracks) according to the tasks to be executed, rather than executing a sequence of tasks for the same track. The advantage of such an approach, besides the temporal locality, is that it enables new forms of data parallelism that were inaccessible before, such as SIMD and SIMT. Other computational workflows in HEP, such as reconstruction or physics analysis, could benefit from the same optimizations and it is expected that the lessons learned from the GeantV R&D can be applied to these areas.

The target of the GeantV prototype was to speed up particle transport simulation applications by a factor of 2–5 on modern CPUs [5], compared to Geant4 in similar conditions. Gains from SIMD and better instruction cache locality were foreseen, along with code and algorithm refactoring. To support multi- and many-core platforms, thread parallelism was supported starting with the very early versions of the prototype. Another design requirement of this study was to ensure portability to various hardware architectures. This entails keeping the same code and preserving the ability to migrate the data model representation in a device-friendly format.

Concepts and Architecture

Particle transport simulation is peculiar in terms of workflow and data access patterns. In most HEP event processing applications, the data lifetime is rather short: data is filtered and processed to produce results or derived quantities that are consumed by subsequent tasks. It is common for the same data to be used as constant input by several algorithms, but it is less common for that data to be recursively changed while being processed. The latter is the case for simulation, which follows the life cycle of a track, representing a particle traveling through the detector. The track is the central data object used by most of the transport algorithms: geometry computations, propagation in electric or magnetic fields, or physics processes affecting the associated particle. From a computational perspective, the track represents a state taken as input and modified subsequently by a sequence of tasks, collaborating to perform a step that moves it from one point to another. There is a design choice in the ordering of individual steps. In the traditional design, simulation engines perform consecutive steps on a single track until it completes its transportation. To enhance code locality, one can chose an alternative approach, grouping tracks undergoing similar stepping tasks (e.g. the same physics model actions). This requires deep changes in the track handling and step ordering compared to the classical approach, which is the basic direction taken by GeantV.

An important feature of simulation that drives the application design is unpredictability: particle physics is stochastic by nature, implying that the next physics process affecting a particle has to be chosen according to probability distribution functions. One cannot generate a sequence of processes in advance, because their probabilities are dependent on the material properties of the geometry location and the kinematic properties of the current track. Hence, the scalar (per track) data flow consists in a sequence of tasks which, depending on the previous one, cannot be known a priori.

The most convenient concept for handling the multitude of alternative algorithms is run-time polymorphism or virtual inheritance. Moreover, the large diversity and complexity of physics and geometry algorithms typically generate deep simulation call stacks and expensive branching logic, with a corresponding loss of computational efficiency.

The main GeantV concept is to change the focus from being data-centric to being algorithm-centric, making simulation SIMD- and SIMT-friendly. Instead of following a workflow from a track’s perspective, static processing stages are defined that handle track populations being processed by each stage. This change of viewpoint helps to enhance spatial and temporal instruction locality, at the price of using more memory and likely worse data caching. Bundling more work together also enables more fine-grained parallelism and favors deployment on heterogeneous computing resources.

Another important exploration in the context of simulation is parallelism. Multi-threading parallelism is an important lever for making use of the full processing power of modern CPUs. Even if most HEP workflows are embarrassingly parallelizable on input data (such as individual LHC collision events), most of our applications are memory-bound and simulation is not an exception. Event-level parallelism has already been used in production for several years in Geant4, with very good overall scaling performance in multi-threaded mode and rather small memory overhead coming from each additional thread. The only problem is that, while multi-threading allows effective use of many-core CPUs, it does not produce any increase in the throughput per thread.

Vectorization is one of the throughput-increasing acceleration techniques and becomes beneficial when the code produces a large percentage of SIMD instructions. Although compiler authors are striving to provide solutions for automatic vectorization, in practice there are only a few kinds of problems for which auto-vectorization works out of the box. Auto-vectorization is more likely to be successful within confined data loops with reduced branching complexity and without any dependence on the input data. Since, in simulation, relatively few algorithms have natural internal loops, there are only limited benefits from auto-vectorization. GeantV explores percolating track data into low-level algorithms, aiming to loop over this data internally. This approach requires being able to schedule reasonable data populations for each vectorized algorithm.

In this approach, data first needs to be accumulated into per-algorithm containers (“baskets” in GeantV jargon), before being processed. The algorithms need to expose a new interface to handle an input basket and provide implementations that handle the basket data in a vectorizable manner. Note that the tracks coming from a single event may not suffice to fill baskets efficiently, given the complex branching of simulation code and the sheer variety of physics processes needed. One framework prerequisite is, therefore, to be able to mix tracks belonging to many concurrent events in the same processing unit.

Moving one level below, the requested track data has to be gathered and copied into the vector registers. For this to happen, the data are copied into arrays, each entry corresponding to the data of one track. In this scenario, the algorithm can be expressed as an easily-vectorized loop over C-like arrays. Scattering the algorithm output data to the original tracks completes the procedure and allows the processed tracks to be dispatched to subsequent algorithms. This schema requires a data transformation layer on top of each algorithm as shown in Fig. 1.

During this study, available vectorization techniques were thoroughly investigated in terms of programmability, performance, and portability. The techniques evaluated include auto-vectorization, compiler pragmas, SIMD libraries, and compiler intrinsics. The conclusion was that the higher the control over vectorization performance, the lower the portability and programmability. Assembly code or intrinsics are both difficult to write and maintain. On the other hand, auto-vectorization and compiler pragmas do not guarantee vectorization as an outcome, and this is an effect that worsens with increasing algorithm complexity. Our preferred choice was to use SIMD libraries offering a high-level approach to vectorization via SIMD types and higher-level constructs, while keeping the complexity at a reasonable level and leveraging the portability of the library. It was decided to decouple as much as possible the implementation of algorithms from the concrete SIMD libraries, leading to the creation of VecCore [6], an abstraction layer on top of SIMD types and interfaces, supporting both scalar and vector backends (such as Vc [7], and UME::SIMD [8]). The scalar backend supports SIMT as well.

Software Design

GeantV transforms the scalar workflow into a vector one. Instead of handling one track at a time, algorithms can operate on baskets of tracks. Once a basket is injected in the algorithm, the vectorization problem is reduced to transforming all scalar operations on track data into vector operations on basket data. To generate efficient SIMD instructions and to quickly load data into SIMD registers, the basket data needs to be transformed from an array of track structures (AOS) to a structure of arrays of track data (SOA). This copying operation is only necessary for the part of the track data needed by the algorithm.

The workflow is orchestrated by a central run manager. This coordinates the work of several components, among which there are the event generator, the geometry and physics managers, and the user application. The main event loop can be controlled by either the GeantV application or the user framework. Primary tracks, defining the original input collision event, are either generated internally or injected by the user, buffered by an event server. The track-stepping loop is re-entrant, executed concurrently in several threads. Each thread takes and processes tracks from the event server. Once all tracks from a given event are transported, another event is generated/imported. The scheduler respects the constraint not to exceed the maximum number of events in flight set by the user.

GeantV Scheduler

The scheduler’s main task is to gather data efficiently in baskets for all the components, in order to improve vectorization. Also, the multi-threaded approach needs to have good scaling to make efficient use of all available cores. During the study, several different approaches to achieve both of these goals were tested, resulting in several versions of the scheduler.

The first version of scheduling was mostly geometry-centric. It tried to benefit from the observation, illustrated in Fig. 2, that many track steps are done in a smaller number of important detector volumes/materials (volume locality). At the least, geometry calculations could be vectorized for such baskets. The model had a central work queue that handled baskets containing tracks located in the same geometry volume. Dedicated transport threads concurrently picked baskets from the queue and transported them to the next boundary. Whenever a track entered a new volume, it was copied into a pending basket for that volume. The worker thread that managed to fill a given basket beyond a threshold was then responsible for dispatching it to the work queue and replacing it with a recycled empty basket. A garbage collector thread was responsible for pushing partially filled baskets to the work queue whenever the queue started to be depleted. Merging produced hits and storing them to the output file was managed by a special I/O thread.

This first approach focused on demonstrating track-level parallelism based on geometry locality, although vectorized algorithms for baskets were not available at the start of the project. This was an extremely useful step for understanding the differences and peculiarities of the basket-based track workflow compared to the single-track approach. However, the model had scaling issues due to high contention on specific baskets and frequent flushes done by the garbage collector during the event tails.

A second version of the scheduler introduced support for explicit SIMD vectorization. The basket contained a track SOA with aligned arrays ready to be copied into the vector registers. Track data was copied in and out of the SOA, as tracks were passing from one basket to another. A simplified tabulated physics model was available in this version and, since it was not vectorized, the scheduler was still dealing only with geometry-local baskets. The prototype complexity increased and several tunable parameters were introduced in an attempt to implement an adaptive behavior, optimizing the performance of different setups and in different simulation regimes. Gathering and scattering data into the SOA baskets introduced new overheads due to extra memory operations, plus extra bottlenecks in the concurrent approach. To minimize the cost of memory operations, awareness of non-uniform memory access (NUMA) was introduced for handling basket data, leading to improvements of up to \(10\%\) of the simulation time.

The final version of the GeantV scheduler is shown in Fig. 3. Track data is described as a POD structure and pre-allocated in contiguous memory blocks. Each thread takes pointers to primary tracks from an event server, storing them in an input buffer (having the role of particle stack). The stepping loop is implemented as a sequence of stages, each implementing a specific part of the processing required to make a single step for a population of tracks. Pointers to tracks tagged to execute a given stage are accumulated in the input stage basket, processed by the stage algorithms, then dispatched to the input stage basket of the next stage. This implements a stepping pipeline for track populations. The scheduler takes bunches of track pointers (last generations first) and copies them in the input basket of the first stage, triggering the pipeline execution. The stage basket is dispatched internally to specific handlers of specific processing tasks. For example, the propagation stage dispatches all neutral tracks to a linear propagator and the charged ones to a field propagator. The handlers of vectorized algorithms first accumulate (basketize) enough tracks to make the algorithm execution efficient. Subsequently, only the members of the track structure needed by the algorithm are gathered in an SOA before being processed, and then the results are scattered back to the original track pointers. Scalar algorithms make use directly of the stage basket track pointers, without having to gather/scatter data, so scalar and vector workflows can coexist. The last stage in the stepping pipeline implements the final stepping actions and calls the user application for scoring (tallying hits in sensitive detectors), before completing the cycle by copying the surviving tracks back to the prioritized particle stack. The scheduler has the role to push tracks in the stepping pipeline until exhausting the initial track population, then refilling it from the event server. Globally, the scheduler has also to balance the workload among concurrent threads and enforce policies to optimize the global workflow. In addition to fixing many of the issues identified in the previous versions, such as contention in multi-threaded mode and memory behavior, this version introduced a generic model for basketizing, corresponding to the availability of more vectorized algorithms, in addition to geometry ones. The new framework significantly improved the basketizing efficiency, while also accommodating scalar and vector processing flows, switching from one to another depending on the workflow conditions.

Scalar and Vector Workflows

To support both scalar and vector workflows in the same framework, a common interface class called handler was introduced to wrap all simulation algorithms in a common tasking system. The algorithm needs to implement the appropriate scalar and vector interfaces taking as input either a single track pointer or a vector (basket) of tracks. The vector method acts as a dispatcher for the SIMD version of the algorithm. It has to first gather the needed data from the container of tracks and copy it into a custom SIMD data structure. For example, geometry navigation requires only the track position and direction, while magnetic field propagation needs also the charge, momentum, and energy. The SIMD structure is then passed to the vectorized algorithm. The newly produced track state variables are then scattered to the original track pointers. To feed such handlers in a workflow, tracks executing the same algorithm need to be gathered in SIMD baskets before being handed to the vector interface. In the case vectorization of a given algorithm is not implemented or inefficient, the scalar interface can be directly invoked, using a scalar pipeline for this algorithm.

Algorithms of the same type are grouped into simulation stages. The simulation stages refer to specific operations that have to be executed in a pipelined manner to perform a single step that moves a particle from one position to the next. The sequence of stages executed per step by baskets of tracks can be followed in Fig. 4. At the beginning of the step, a PreStep stage initializes the track flags and separates killed tracks, handling them to a final SteppingActions to be accounted and scored. The remaining tracks enter the stage ComputeIntLen, which samples physics processes’ cross-sections and proposes an interaction length. Subsequently, a GeomQuery stage computes the geometry stepping limits in the current volume and a PrePropagation stage uses the actual step to determine in advance if multiple scattering will affect the current step. The actual track propagation is performed during a PropagationStage, having one handler for neutral and one for charged particles. The multiple scattering deflection is added after the propagation in a PostPropagation stage, and any continuous processes are subsequently applied by the AlongStepAction stage. For steps limited by physics processes, a PostStepActions stage is executed, and then the final SteppingActions stage that accounts for stopped tracks and executes user actions. Every stage has an input basket per thread, used to execute the stage either in scalar mode, by looping over the contained tracks, or in vector mode, by passing the full basket to the interface.

The sequence of stepping stages for baskets of particles in the GeantV prototype. Stages are connected in a predefined sequence similar to the stepping approach in Geant4, but there are also shortcuts or back connections that allow dumping stopped particles or repeating some stages. Each stage provides scalar and, in most cases, vector handlers for the stage algorithms

The workflow is executed in the following manner. Each thread collects a set of primary tracks in a special buffer, called StackBuffer, which emulates the functionality of a typical track stack (also used in Geant4). Secondary tracks of a higher generation are also pushed into this buffer and prioritized compared to their ancestors. The workload manager only copies the highest generation tracks into the basket of the first stage, then executes it. Once processed, the tracks are copied to the input basket of the second stage, and so on. Each stage has one or more follow-ups, so most particles get pushed along the stepping pipeline, but some particles may loop between stages before being able to execute the complete step. As an example, charged particle propagation requires repeated queries to the geometry before finally crossing the volume boundary. The stepping loop just pushes the input buffers executing the stages one after another, multiple times, until the baskets are empty. It then takes a new bunch of tracks from the StackBuffer. During this loop, some tracks typically end up in unscheduled SIMD baskets, but a subsequent loop can fill these SIMD baskets and flush them back into the pipeline.

Concurrency Model

The GeantV prototype implements parallelism at the track level. It supports an internal mode where the workload is parallelized among threads managed by the GeantV scheduler. It also supports an external mode implemented as a call to a re-entrant task transporting an event set, where the parallelism is controlled by the framework that makes the call.

Primary tracks produced by an event generator are stored in a concurrent event server and delivered to worker threads in bunches of customized size. The track data storage itself is pre-allocated to avoid dynamic memory management, partitioned per NUMA domain, and only pointers to tracks are delivered via the event server interfaces, as shown in Fig. 5. Once a thread picks up a set of primary tracks, it becomes the only user of each track in the set for the given step. Due to this design, there is no synchronization needed when changing the state of a track. Threads handle tracks in their buffers; however, they share a single set of SIMD baskets per NUMA domain, so a thread may steal the tracks accumulated in these baskets by other threads. Even in scalar mode, when the SIMD baskets are empty, there is a mechanism allowing threads to steal tracks from each other as a mechanism of work balancing during the processing tail at the end of events.

Schematic view of parallelism for the GeantV prototype. Multiple transportation tasks, handling their task data, share a set of propagator objects, one per NUMA domain. Each propagator shares a set of simulation stages with their SIMD baskets, but each transport task will handle its stack buffer of tracks. The system threads are scheduled by the run manager, and they will pick-up a free task data object from a concurrent queue. Each transport tasks will preload tracks from a concurrent event server, executing the stepping loop

The concurrency model was designed to minimize the synchronization needs and to reduce contention in the concurrent services, while sharing track data to increase basket populations. The thread-specific state data needed by the different methods cooperating for track propagation is aggregated in specific objects (called TaskData), different for every thread. A TaskData object is passed as argument to the stepping loop method executed by a given thread, becoming visible to all the callees requiring it. This approach avoids the need of syncronizing concurrent write operations on state data.

To maximize the basket population, vectorized handlers have a common SOA basket shared between threads. This was a requirement for enhancing the vector population, but it has a large cost of increased contention and loss of data locality. To improve this, thread-local copies of the SIMD basket are created for the handlers with the largest population of tracks, such as those for field propagation and multiple scattering. For these, the track population in a single thread is enough to fill them, without workflow perturbation or basket population loss. This allowed a large reduction in contention in the multi-threaded basket mode.

An important feature for fine-grained workflows is load balancing. The GeantV workflow is naturally balanced by the event server, which acts as a concurrent queue. The main problem that occurs is the depletion of the stack buffers of each thread when most of the remaining particles reside in SIMD baskets that do not have a large enough population to execute efficiently in vectorized mode. Such a regime becomes blocking when the number of events in flight has already reached the maximum specified by the user, so the scheduler enters the so-called flush mode. All SIMD baskets are simply flushed and the scalar DoIt methods are executed by the first thread triggering this mode. Flushed particles are gathered in the stage baskets of this thread, which feeds the thread but depletes, even more, the other threads that were already starving. This unbalancing mechanism is compensated by a round-robin track sharing mechanism, which allows threads to feed not only from the event server, but also from the shared buffers of other threads. To preempt the depletion regime, threads always share a small fraction of their own track populations, but will consume those themselves if no other client has. This mechanism of weak sharing allows the reduction of contention in the normal regime. Sharing is dominant during event tails and is also more important when running with many threads.

The externally-driven concurrency mode is the so-called external loop mode. In this mode, no internal threads are launched. The run manager provides an entry point that is called by a user-defined thread and that takes a set of events coming from the user framework. This will subsequently book a GeantV worker to perform the stepping loop, and will notify the calling framework via a callback. An example is provided, performing a simplified simulation of the CMS detector steered by a toy CMSSW [9] framework, mimicking the features of the full multi-threaded software framework of an LHC experiment. The GeantV simulation can be wrapped in a TBB (Threading Building Blocks, Intel®) [10] task and executed in a complex workflow, as described in Sect. 5.2.

Implementation

This section describes the core components of GeantV libraries and modules: VecCore, VecGeom, VecMath, propagation in a magnetic field and electromagnetic (EM) physics. Auxiliary modules, such as I/O and user interfaces, are briefly summarized as well.

Vector Libraries: VecCore

Portable and efficient vectorization is a significant challenge in large-scale software projects such as GeantV. The VecCore library [6] was created to address the problem of lack of portability of SIMD code and unreliable performance when relying solely on auto-vectorization by the compiler. VecCore allows developers to write generic computational kernels and algorithms using abstract types that can be dispatched to different backend implementations, such as the Vc [7] and UME::SIMD [8] libraries, CUDA, and scalar. VecCore provides an architecture-agnostic API, illustrated in Fig 6, that covers the essential parts of the SIMD instruction set. These include performing arithmetic in vector mode, computing basic mathematical functions, operating on elements of a SIMD vector, and performing gather and scatter, load and store, and masking operations. Code written using VecCore can be annotated for running on GPUs with CUDA, and can be portable across ARM®, PowerPC®, and Intel® architectures, if not relying on features specific to a particular backend (e.g. using CUDA-specific variables such as thread and block indices, or calling external library functions that may be available only on the CPU).

VecCore is used to implement vectorized geometry primitives in VecGeom (described in Sect. 3.2), and vectorized physics models in GeantV. A brief discussion of VecCore with code samples can be found in Ref. [6], and examples of VecCore usage within VecGeom and GeantV appear in the following sections as well.

Geometry Description: VecGeom

Introduction

Detector simulation relies on the availability of methods to describe and construct the detector layout in terms of elementary geometry primitives, as well as interfaces that allow the determination of positions and distances with respect to the constructed layout. Well-known examples of such geometry modelers are the Geant4 geometry module and the ROOT TGeo library [11]. Both enable users to build detectors out of hierarchical descriptions of (constructive) solids and their containment within each other.

The vectorized geometry package, named VecGeom, was chosen as one of the first areas in which to study the optimal usage of SIMD and SIMT paradigms for passing vector data between algorithms, which is one of the main targets of GeantV. From this point of view, the primary development focus was implementing algorithms capable of operating on elements of baskets in parallel. This entails geometry primitives, such as a simple box, that offer kernels to calculate distances for a group of tracks in one function call, in addition to the normal case where only one track is handled.

Below as an example the signature for a typical geometry primitive is followed by the corresponding signature of the new vector/basket interface:

Moreover, data structures and algorithms in VecGeom are laid out to enable efficient operation in heavily multi-threaded frameworks. For instance, a clear separation of state and services enables frequent track or context switches in the navigation module. This module is responsible for predicting where a track will go in the geometry hierarchy along its straight-line path. Multi-platform usage was targeted since the beginning: the same code base is intended to compile and run on CPUs as well as GPU accelerators.

Besides these primary goals, the development of VecGeom was guided and influenced by other requirements and circumstances. The first is to continue offering traditional interfaces operating on the single-particle (scalar) level. This ensures backward compatibility with the Geant4 or TGeo systems and is, in any case, needed to treat particles that have not been put into a basket. Secondly, another geometry project called USolids [12], funded by the EU AIDA project, was already in place, aiming to review and modernize the algorithms of Geant4 and TGeo and to unify the geometry code base. The VecGeom project joined forces with the USolids project for better use of available resources. As a consequence, VecGeom was factored out into a standalone repository and with the potential to evolve independently of GeantV. Therefore, VecGeom serves GeantV, with a basketized treatment and GPU support, as well as making the modernized code available to clients in traditional scenarios using the single-particle interface.

The multitude of use cases and APIs to support (scalar, basketized, CPU, GPU) poses the risk of code duplication. In order to reduce this, VecGeom started with an approach, adopted by most GeantV modules, in which standalone (static) templated algorithmic kernels are instantiated multiple times with different types and specializations behind the public interfaces. This development architecture is visualized in Fig. 7, where the typical use cases are depicted as functional chains of algorithms (scalar, vector, GPU), all implemented in terms of the same kernel templates. In order to make this happen, the kernels are written in such a way that they can be instantiated with native C++ types as well as with SIMD vector types (as offered by vectorization libraries such as Vc). Furthermore, all constructs used have the proper annotation to compile on the GPU (using CUDA). VecCore, prototyped within the VecGeom effort, provides the abstractions needed to write these generic kernels.

Using this development approach, VecGeom has evolved into a geometry library that offers similar features to the classical Geant4 geometry or TGeo for transport simulation for single particle queries. On top of this, these algorithms are also made available for basket queries or for execution on CUDA GPUs. In particular, all major geometry primitives have been implemented, and hierarchical detectors can be constructed from them via composition and placement. To solve the complex geometry tasks typically needed in particle detector simulation, such as determining the minimum distance of particles to any other material boundary or computing the intersection points with the next object along a particle’s straight-line path, VecGeom offers navigator classes that operate on top of these primitives.

Today VecGeom’s objective is to be a high-performance library for these tasks in general. A lesson learned in the development was that it is worth taking a more loosely defined approach to achieve good performance and to benefit from SIMD instructions. In particular, VecGeom targets both basketized (or horizontal) vectorization as well as inner-loop (vertical) vectorization, depending on the complexity of the algorithm. A simple box primitive is an example of the former, and a complicated tessellated shape is an example of the latter. The best SIMD performance for a box is obtained with the use of baskets, yet a SIMD speedup for the tessellated solid is available even in scalar/single-particle mode and does not require basket input. However, processing baskets can still be beneficial due to positive cache effects.

VecGeom has been discussed and presented in various publications [13,14,15]. The following sections briefly review a few important results for specific aspects of VecGeom.

The Performance of Geometry Primitives

Performance examples of VecGeom on the shape level for the case of a more elementary solid primitive, a tube segment. This demonstrates the performance improvement of important functions for the one-particle interface (1) (better algorithms) as well as an additional SIMD acceleration for the basket interface (2), automatically obtained by instantiating the same underlying kernel with Vc vector types

Geometry primitives (or solids) are, in addition to affine transformations, the basic building blocks of complex detectors. The range goes from simple structures such as boxes, tubes, and cones, to more complex entities such as polycones, polyhedrons, and tessellated solids (see, e.g., the GDML reference manual [16] for a description). In general, VecGeom offers improved performance of the solid algorithms with respect to previous implementations in Geant4 and TGeo and even with respect to USolids [12]. In most cases, the improvement is due to better algorithms, often as a natural consequence of the effort to restructure towards SIMD-friendly code. In the case of simpler geometry primitives, the implementations provide real SIMD acceleration for basketized usage. Figure 8 exemplifies this for a tube segment, where the SIMD acceleration was found to be a factor of 2 or better with the AVX instruction set (maximum of 4 vector lanes) via the use of VecCore and Vc.

For the more complex solids, some performance improvements for the scalar interface are shown in Fig. 9. In these cases, an additional SIMD acceleration for the basket interface is not feasible due to divergent code paths taken by different particles in a basket. However, as mentioned, the vector units can often still be utilized by vectorizing inner loops or inner computations. This technique is used heavily in the tessellated solid, polyhedra, and multi-union cases [14], and it contributed to the excellent performance gain compared to previous implementations.

The Performance of Navigation Algorithms

Apart from solid primitives, VecGeom offers navigation algorithms for solving geometry problems such as distance calculations between particles and geometry boundaries in composite geometry scenes made up of many primitives. These navigation algorithms are the primary point of contact or interface between the geometry and the simulation engine. The algorithmic chain in Fig. 7 is a simplified example of a typical navigation algorithm flow. This chain contains transformations of global particle coordinates to the frame of reference of the volume in which the particle is currently situated, and performs distance queries to the solids embedded in this volume.

Just as with the geometry primitives, complexity defines the performance scenario for SIMD acceleration.

-

1.

Simple geometry limit: In this case the current volume contains only a few (simple) solids, e.g. in simple showering modules in calorimeters.

In this limit, SIMD-accelerated geometry navigation of a basket is feasible for GeantV because most of the algorithmic chain can process baskets efficiently. Table 1 gives a few benchmark performance values that show the gain from using baskets and SIMD for simple volumes. In the generic case, the gain is rather modest because the SIMD throughput is limited by some non-vectorizable parts. The typical non-vectorizing operation is particle relocation after crossing boundaries, which becomes more expensive with increasing complexity, due to divergence of the location at the end of the step and the need to access a larger amount of non-local transformation matrix and 3D solid data. However, a process [15] was developed that can auto-generate code implementing specialized navigators that take into account the specific properties of the geometry. This generated code can reduce the non-vectorizable parts significantly. This increases the gain from baskets and SIMD, but is also beneficial in its own right to improve the performance of the scalar interface. The drawback of specialized navigators is that they require a generation workflow to be run before simulation, and so far they have not been extensively tested within GeantV. This explains, in part, why the overall gain from baskets in the current version of GeantV did not materialize for geometry navigation. Navigation specialization requires the analysis of all possible geometry state transitions for tracks crossing any placement of a given volume (possibly replicated) to its neighbours. Cached in the form of compiled code, the method performs global to local conversions between states. If the number of transitions is small (e.g. in the case of well-packed touching neighbour volumes), the number of crossing candidates to check can be much smaller than in the generic case, so the search can be accelerated. For complex structures, the number of combinations can lead to very large libraries with inefficient instruction caching. The navigation specialization approach is nevertheless very promising and will continue to be optimized in the context of future VecGeom developments.

-

2.

Complex geometry limit: In this case, the current volume contains many solids, which typically occurs for container volumes inside which many other modules are placed.

In this limit, due to the large number of geometry objects to test, acceleration structures are typically used to reduce the complexity from O(N) to O(log(N)), in the case of hit-detection or ray-tracing, where N is the number of geometry objects present in this volume. This, in turn, makes it difficult to achieve a coherent instruction flow for all particles in a basket and to avoid branching. However, as for the tessellated solid, the navigation algorithms can benefit from SIMD acceleration via internal vectorization. In Ref. [15], a particular regular tree data structure, based on bounding boxes, was proposed, which can be traversed with a SIMD speedup. The VecGeom implementations for tessellated solids and for navigation are based on the same data structure. Table 2 shows a comparison of the performance of the navigation algorithms in given complex volumes using Geant4, TGeo, or VecGeom. The benefit of the SIMD speedup is highlighted by the additional gain when switching from SSE4 to AVX2 instructions on the x86_64 architecture. This benefit is also available in non-basketized modes via internal vectorization.

There are many possible layouts of acceleration structures with SIMD support. This gives room for further improvement by selectively choosing the best possible acceleration structures for any given geometry volume. In this respect, VecGeom is ready to interface with kernels available from industrial ray-tracing libraries, such as Intel® Embree [17, 18], which has SIMD support.

VecMath

VecMath is a library that collects general-purpose mathematical utilities with SIMD and SIMT (GPUs) support based on VecCore. Templated fast math operations, pseudorandom number generators, and specific types (such as Lorentz vectors) were initially extracted from GeantV, then developed and extended within VecMath. The library is being extended to support vector operations for 2D and 3D vectors, and general-purpose vectorized algorithms. VecMath is intended as a core mathematical library, free of external dependencies other than VecCore and usable by vector-aware software stacks.

Fast Math

The Math.h header in the VecMath library contains templated implementations for FastSinCos, FastLog, FastExp, and FastPow functions. The functions can take either scalar or SIMD types as arguments. While the scalar specializations redirect to the corresponding Vdt [19] implementations, the SIMD specialization is currently implemented based on Vc types.

Pseudorandom Number Generation

The VecRNG class of VecMath provides parallel pRNGs (pseudorandom number generators) implementations for both SIMD and SIMT (GPU) workflows via architecture-independent common kernels, using backends provided by VecCore. Several state-of-the-art RNG algorithms are implemented as kernels supporting parallel generation of random numbers in scalar, vector, and CUDA workflows. For the first phase of implementation, the following representative generators from major classes of pRNG were selected: MRG32k3a [20], Random123 [21], and MIXMAX [22]. These generators meet strict quality requirements, belonging to families of generators that have been examined in depth [23] or that have evidence from ergodic theory of exceptional decorrelation properties [22]. All pass major crush-resistant tests such as DIEHARD [24] and BigCrush of TestU01 [25]. In addition, constraints in the size of the state and the performance were considered: (1) a very long period (\(\ge 2^{200}\)), obtained from a small state (in memory), (2) fast implementations and repeatability of the sequence on the same hardware configuration, and (3) efficient ways of splitting the sequence into long disjoint streams.

The design choice for the class hierarchy was the exclusive use of static polymorphism, motivated by performance considerations. Every concrete generator inherits through the CRTP (curiously recurring template pattern) from the VecRNG base class, which defines mandatory methods and common interfaces. VecRNG is exclusively implemented in header files, and provides a minimal set of member methods. This approach allows more flexibility in the higher-level interfaces for specific computing applications, but minimizes the overhead in compilation time.

The essential methods of VecRNG interfaces are Uniform<Backend>() and Uniform<Backend>(State_t& s), which generate the backend type of double-precision u.i.i.d (uniformly independent and identically distributed) numbers in [0,1), and update the internal fState and the given state s, respectively. The State_t is defined in each concrete generator and provided to the base class through RNG_traits. One of the associated requirements for each generator in VecRNG is to provide an efficient skip-ahead algorithm, \(s_{n+p} = f_p(s_n)\) (advance a state \(s_n\) by p sequences, where p is the unit of the stream length or an arbitrary number) in order to assign disjointed multiple streams for different tasks. For example, the mandatory method, Initialize(long n), moves the random state at the beginning of the given \(n\)th stream. Each generator supports both scalar and vector backends with a common kernel. Random123 has an extremely efficient stream assignment without any additional cost since the key serves as the stream index, while MRG32k3a uses transition matrices (A), which recursively evaluate \((A^{s}~\mathrm{mod}~m)\) using the binary decomposition of s. The vector backend uses N (SIMD length) consecutive substreams and also supports the scalar return type, which corresponds to the first lane of the vector return type. Besides the Uniform method, some commonly used random probability distribution functions are also provided.

GeantV Tracking and Navigation

GeantV implements basketized vectorization of geometry navigation queries following the workflow described in Sect. 2.1.2. Geometry “baskets” are passed to a top-level navigation API, then dispatched to VecGeom to benefit from its vectorization features as described in Sect. 3.2.3. The geometry queries are integrated into the stepping procedure in a special GeomQuery stage, providing a large number of handlers, one per logical volume in the user geometry. Each query for computing the distance to the next boundary and safety within the current volume can be executed in either scalar or vector mode. The efficiency in the vectorized case depends strongly on the volume shape and number of daughters. The track position and direction data are internally gathered into SOA data structures by VecGeom and dispatched to the 3D solid algorithms, updating navigation states held by the GeantV track structure. Even in the scalar calling sequence, VecGeom vectorizes the calls to the internal navigation optimizer.

An initial attempt to basketize and call the vector DoIt method for all volumes in a complex geometry such as CMS proved to be inefficient. The main reason was not VecGeom vectorization inefficiency, but the impact of SIMD basketizing on the GeantV workflow. CMS geometry has O(4K) volumes, out of which \(\sim 80\%\) of the steps are performed in only \(\sim 10\%\) of the volumes. In a GeantV vector flow scenario, after an initial propagation out of the central vertex volumes, most tracks become isolated in SIMD baskets belonging to many different, less important volume handlers. The workflow enters starvation mode and has to force frequent flushes of these baskets and execution of geometry queries in scalar mode. The effect worsens for complex geometry setups, typical for detectors at the LHC. In simple setups, composed of just a few geometry volumes, this scenario does not happen and basketization gains are evident in the case that the geometry code takes a sizeable fraction of the execution time.

A new dynamic basketizing feature is implemented to alleviate this effect. Initially all volume basketizers are switched to ON, but the frequency of flushes versus vectorized executions is measured and triggers scalar mode for inefficient baskets. Depending on the tuned efficiency threshold, the prototype will end up disabling most volume basketizers and keeping only about 5% active. Due to the fact that some shapes with intensive computation (such as polycones and polyhedra) are not vectorized in VecGeom in multi-particle mode, the overall vectorization efficiency is rather poor and is reduced further by scatter/gather overheads.

Related to the geometry, GeantV uses a different strategy per step for the boundary crossing algorithm in a magnetic field, compared to Geant4. The algorithm first estimates the deviation of the particle moving with a given step along the helix arc using a small angle approximation, compared to a straight-line propagation with the same step. Constraining this bending error to be less than an acceptable tolerance gives the maximum allowed step in magnetic field, \(\delta _{\mathrm {field}}\). The geometry navigation interface is queried for the distance to the next boundary along a straight line, \(\delta _{\mathrm {boundary}}\), as well as the isotropic safe distance within the current volume, \(\delta _{\mathrm {safe}}\). The propagation step is first constrained by the minimum between the physics step limit, \(\delta _{\mathrm {physics}}\), and the next boundary limit, \(\delta _{\mathrm {boundary}}\). The second constraint is the maximum between the magnetic field limitation and the safety. This allows particles with small momenta to ignore trajectory bending in the limit of nearby volumes, and particles with large momenta to ignore nearby volumes and travel forward much farther in the case that the deflection is small. In practice, this allows larger steps to be taken near volume boundaries without the risk of crossing accidentally. Finally, the step to be taken is the minimum of either the geometry or the physics limit, within the field/safety constraint. This step is passed to the integrator algorithm to move the track to the new position. If it was the geometry that limited the step, the geometry is queried for a possible final relocation after the propagation; otherwise, the algorithm is repeated until either the physics or geometry distance limits are reached. Note that after the track kinematics are updated, tracks limited by geometry that have not completed crossing into the next volume are copied back into the geometry stage basket and considered in a subsequent execution of this stage. The tracks having reached the next boundary are forwarded to the PostPropagation stage, as shown in Fig. 4.

EM Physics Models and Vectorization

The ultimate goal of the GeantV R&D project is to exploit the possible computational benefits of applying vectorization techniques to HEP detector simulation code. From the physics modeling point of view, the most intensively used and computationally demanding part of these simulations is the description of electromagnetic (EM) interactions of \(e^-\), \(e^+\), and \(\gamma \) particles with matter. This is what motivated the choice of EM shower simulation code to demonstrate the possible computational benefits of applying track-level vectorization.

Geant4 provides a unique variety of EM physics models to describe particle interactions with matter [26], from the eV to PeV energy range, with different levels of physics accuracy. Each application area can find a suitable set of models with the appropriate balance between the accuracy of the physics description and the corresponding computational complexity. Moreover, Geant4 provides a pre-defined collection of EM physics models and processes for different application areas in the form of EM physics constructors [27]. Among these, the so-called EM standard physics constructor (sometimes called EM Opt0) is recommended by the developers for HEP detector simulations.

A corresponding set of EM models has been provided for the GeantV transport engine, together with the appropriate physics simulation framework. The accuracy of each GeantV model implementation was carefully tested through individual, model-level tests by comparing the computed final states and integrated quantities (e.g. cross-sections, stopping power) to those produced by the corresponding Geant4 version of the given model. Moreover, several simulation applications have been developed to test and verify the GeantV EM shower simulation accuracy, including both a general, simplified sampling calorimeter and a complete CMS detector setup. In all cases, the GeantV simulation results, measured using quantities such as energy deposit distributions in a given part of the detector, number of charged and neutral particle steps, secondary particles, etc., agreed with the corresponding Geant4 simulation results to within 0.1% (see more in Sect. 4).

The final state generation, or interaction description, pieces of these models are the most computationally demanding subset of the physics simulation. At the same time, they provide the physics code that can be the most suitable for track level vectorization. The final state generation usually includes the generation of stochastic variables, such as energy transfer, scattering, or ejection angles, from their probability distributions, determined by the corresponding energy or angle differential cross sections (DCS) of the underlying physics interaction. The probability density functions (PDF) are proportional to the DCS, which is usually a complex function and very often only available in numerical form. This implies that the analytical inversion of the corresponding cumulative distribution function (CDF) is unknown. For this reason, to generate samples of the stochastic variables needed to determine the final state of a primary particle that underwent a physics interaction, different numerical techniques have to be used.

The composition–rejection method, for example, is one of the most extensively used method in particle transport simulation codes to generate random samples according to a given PDF. However, it is not very suitable for vectorization, being based on an unpredictable number of loop executions depending on the outcome of the random variable. This implies that if this algorithm was vectorized over primary tracks, the different lanes of the vector would reach exit conditions in a non-deterministic way, at different moments, thus reducing the number of used lanes, eventually causing a loss of any potential computational gain. For this reason, special care was taken to find new sampling algorithms, more suitable for vectorization, and also to implement solutions that could provide the maximum possible benefits of track-level vectorization, even when applied to existing and well known sampling techniques.

As an outcome of this R&D phase, two methods were implemented and tested in the GeantV EM Physics library. In GeantV jargon, the first method is known as “sampling tables”, which makes it possible to use an alias method in combination with sampling tables, thanks to the introduction of a discrete random variable as an intermediate step. The second method is known as “lane-refilling rejection”, or simply “rejection”, which takes advantage of vectorization even in the presence of non-deterministic sampling techniques. More details about the implementation of the EM physics models and their vectorization can be found in Ref. [28].

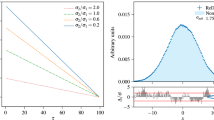

Using the above mentioned unit tests to analyze the performance of the vectorized EM models compared to their (optimized) scalar versions, excellent 1.5–3\(\times \) and 2–4\(\times \) vectorization gains were achieved on Haswell and Skylake architectures, respectively. The instruction set used on both architectures was AVX2, since AVX512 was not supported by the Vc backend. Figure 10 shows the speedup of the final state generation of different electromagnetic physics models obtained with SIMD vectorization in the cases of the two different methods.

As a result of these developments, the physics simulation part of the GeantV R&D project provides the possibility of exploiting the benefits offered by applying track-level vectorization on a complete EM shower simulation suitable for HEP detector simulation applications.

Speedup of the final state generation of different electromagnetic physics models obtained with SIMD vectorization in case of different sampling algorithms. The results were obtained by using Google Benchmarks [29] on an Intel® Haswell CoreTM i7-6700HQ, 2.6 GHz, with the Vc backend and AVX2 instruction set processing 256 tracks. “Scal” and “Vec” refer to scalar and vector implementations, while “Table” and “Rej” refer to the sampling table and rejection methods, respectively

A relatively wide range of performance variation in the algorithms and their vectorization gains is observed. This is due to the fact that each of the EM physics models under study translates to a final state sampling algorithm with unique computational characteristics more favorable for one sampling technique or the other. In addition to this, while the sampling table-based final state generations have a constant run time under any external conditions, the efficiency of a given rejection algorithm can change significantly with the primary particle energy. This is illustrated in Fig. 11 that shows the relative speedup of these two techniques applied to the Bethe–Heitler \(e^-/e^+\) pair production model, as a function of the primary \(\gamma \) particle energy. The two algorithms perform similarly at lower energies, while the rejection algorithm becomes \({\sim }35\%\) faster at higher \(\gamma \) energies simply because of the smaller rejection rate.

The results shown indicate that the GeantV vectorized EM physics library has to be tuned to select the most efficient algorithm for any specific physics process, depending on the specific conditions. The complexity of the underlying DCS, the target material composition, or the primary particle energy are examples of conditions that can heavily affect the physics performance. The GeantV physics framework has been designed to take all of these considerations into account and to allow the choice of the most efficient algorithm for final state generation, depending on the primary particle energy or detector region. This makes it possible to obtain the maximum possible performance, while keeping the memory consumption of the algorithms under control, even in the case of the most complex HEP detector simulation applications.

Magnetic Field Integration

The integration of the equations of motion for a charged particle in a non-uniform pure magnetic field (or an electromagnetic field) accounts for about 15–20% of the CPU time of a typical HEP particle transport simulation. This integration is typically performed using the family of Runge–Kutta methods, which involves the generation of multiple intermediate states (x, p), and the evaluation of the field and the corresponding equation of motion. Many floating point operations are carried out for each step of each track, providing substantial work for each initial data point, but without any expensive functions such as logarithms or trigonometric functions. In GeantV, the input to the field propagation stage is a basket of tracks. Each track has a requested step length for integration (see Sect. 3.4), obtained from the physical step size, the distance to the nearest boundary, and the curvature of the track (to avoid missing boundaries).

The tracks’ positions are typically scattered throughout the detector. The integration of separate tracks is carried out in separate vector lanes in order to create the most portable code, and to make the best potential use of vector units with different widths. The vectorization of this part of a particle transport simulation has an important requirement. All steps of charged particles must be integrated, so long as the step can affect the deposition of energy or other quantities measured.

The lower level parts of the integration can be fully vectorized, because the operations proceed in lockstep, synchronously over the lanes of a vector with each lane corresponding to a different track:

-

the evaluation of the EM field at each track’s current or predicted location, either using interpolation (as in our benchmark example) or other methods such as the evaluation of a function;

-

the evaluation of the ‘force’ part of the equation of motion using the Lorentz equation;

-

and a single step of a Runge–Kutta algorithm, which provides an estimate of the end state of a track (position, momentum) and the error in this estimate.

The top level of Runge–Kutta integration involves checking whether the estimated error conforms to the required accuracy and checking if a successful step finishes the required integration interval. If the integration goes on, it must also calculate the size of the next integration step. Different actions are required depending on whether a step succeeded or not. In case a step was not successful, integration must continue for those tracks. A lane with a finished track, or one that reached the maximum allowed number of integration substeps, must be refilled from the potentially remaining pool of tracks in the basket.

Since all charged particles are involved, there is a large population of particles undertaking integration steps during a simulation. Larger-size baskets to accumulate work in field integration were created, and can be configured separately from the general basket size. With larger baskets, the fraction of lanes doing useful work increases substantially, getting close to unity, as shown in Table 3.

Memory size of a simulation versus the basket size for field propagation. Simulations with different number of simultaneous events are shown. The number of primaries per event is also varied, using 16 (default) and 8. All are single-threaded simulations of 100 events, each with 16 primary electrons with 10 GeV energy in the CMS setup. The simulations were run on a MacBook Pro 2016 with 16GB RAM running MacOS 10.13.6

Unfortunately, increasing the size of the buffer for field propagation has an additional effect, which counteracts the increase in efficiency from the reduction of idle lanes. It increases the number of simultaneous tracks in flight, as larger baskets accumulate more tracks. In turn, this increases the memory usage, which is proportional to the the number of tracks in flight. The effects can be seen clearly in Fig. 12, where a linear relation between the buffer size for field propagation and the memory usage is evident. It is possible, however, to reduce memory usage by starting fewer simultaneous events, as seen in the additional measurements with 1, 8, 16, or 32 simultaneous events.

Input and Output (I/O)

Input

Simulation input consists of particles to be transported through the detector. These can be either realistic collision events produced by Monte Carlo event generators or single particles (similar to a test beam) to study a particular response. The use of an interface (the so-called event record) makes the generation and the simulation steps independent, as schematically shown in Fig. 13. For the GeantV transport engine, it is irrelevant how the ’primary’ (input particles) are produced. The simulation threads concurrently process particles from the input.

The interface to the HepMC3 [30] event record has been implemented (the HepMCGenerators class). This interface can read both HepMC3 ASCII data and ROOT files containing serialized objects. The different types of input files are recognized by their file extensions. The interface selects the stable (outgoing) particles from the provided event and passes them to the transport engine. It can also apply optional cuts, for instance on \(\eta \) (pseudorapidity), \(\phi \) (azimuthal angle), or momentum.

Output

The detector simulation produces hits that contain energy deposition and timing information in the sensitive parts of the detector, which are the output of the program. In the case of GeantV, those hits are produced concurrently by all the simulation threads and need to be recorded properly. Thread-safe queues have been implemented to handle the asynchronous generation of hits from several threads simultaneously. The GeantFactory machinery takes care of grouping the hits into so-called HitBlocks and putting them in the queues. Two possible approaches were tried for saving the hits into a file. In the first, all the hits produced by different threads were given to one ‘output thread’ for serialization. This approach turned out not to scale properly and became a bottleneck. The problem was solved by the second approach, where the serialization was performed by each transport thread and the ‘output thread’ was only responsible for the actual writing of the data to the file. This approach did not adversely affect the memory consumption in any visible way. The implementation is based on the TBufferMerger class provided by ROOT [11]. Each transport thread fills, in parallel, its ROOT TTree objects, and the TBufferMerger merges these TTrees and saves them into the file on disk, as shown in Fig. 14.

This architecture has been profiled and shows very good scaling behavior, as seen in Fig. 15. In particular, it solves the bottleneck problem of the ‘single thread serialization’ approach. More details on the usage of the multithreaded output are provided in Sects. 3.8.1 and 5.2.

The I/O performance compared to the ‘single thread serialization’ approach. These tests used an Intel® Xeon® CPU E5-2630 v3 @ 2.40 GHz (Haswell), 2 × 8 cores, HT = 2 (16 native threads, 32 in hyperthreading mode), disk: SSD 430 MB/s non-cached write speed (measured with: dd if=/dev/zero of=/tmp/testfile bs=1G count=1 oflag=direct)

MC Truth

In addition to the hits, users may be interested in saving the kinematic output, so-called MC truth information. This consists of the generated particles (or at least some of them) that produced those hits. The handling of MC truth is quite detector dependent and no general solution exists. The algorithms selecting which particles to store, how to keep connections between them, and how to associate hits to them are not straightforward and, most of the time, require some trade-offs between the completeness of the stored event information and its size. In general, it is not desirable to store all the particles, as this would only waste the disk space without providing any useful information. For instance, typically delta rays (low-energy secondary electrons), low-energy gamma showers, etc. are not stored. It is best to store all the particles needed to understand the given event and to associate to the output hits. In all cases, it is necessary to set the particles’ connections in order to form consistent event trees.

In addition to all of the above points, multi-threading and concurrency only increase the complexity, because the order of processing the particles is non-deterministic. There are situations where, depending on the load of the processor, processing of the ‘daughter’ particle may be completed before the ‘mother’ particle’s propagation ends. Once finished, the events need to be reassembled from the products generated by the different threads after parallel processing.

Following the idea that there is no perfect or complete strategy for handling MC truth, users must be able to decide which particles to store. The GeantV particle transport, therefore, must provide the possibility of flagging particles as ‘to be stored’ according to some user-defined rules and, at the same time, to ensure that the stored event has consistent mother-daughter links, as well the correct hit associations. Taking all these requirements into account, MC truth handling was implemented using an architecture that has a light coupling to the transport engine, with minimal interaction with the transport threads, and at the same time provides the flexibility to implement custom particle history handlers. In this design, shown in Fig. 16, the interface provided by the MC truth manager (MCTruthMgr class) receives concurrent notifications from transport threads about adding new primary or secondary particles, ending particles, or finishing events. It then delegates the processing of particles’ history to a concrete MC truth implementation. In other words, the implementation is composed of the interface from the MCTruthMgr class and the underlying infrastructure for the particles’ history (with a light-weight, transient, intermediate event record) and the user code that implements the decision-making (filtering) algorithm, as well as the conversion to the users’ event format. As a proof of principle, an example using HepMC3 as the MC truth output format is provided. This demonstrates how to implement a simple filtering algorithm based on particles’ energy, allowing a consistent particle history to be serialized into an output file.

User Interface

GeantV provides ‘user actions’ (similar to those in the Geant4 toolkit) that allow users to control the program flow at the level of run, event, track and step. Concurrent containers allow users to accumulate different kinds of information and merge the information from the different threads at the end of the run. Scoring is done using dedicated stepping actions in which information from the sensitive volumes is accessible.

Scoring Interfaces

The GeantV prototype implements specific scoring interfaces that aim to facilitate handling user-defined data structures for mixed concurrent events. The concurrency aspect is handled by having multiple copies of the scoring data structures attached to GeantV task data objects. Each running worker thread picks up a different task data object, percolating it as an argument to the user scoring interfaces. Since the maximum number of events transported concurrently, \(N_{\mathrm {max}}\), is limited, the user scoring data structures have to be indexed in arrays having the same limit: \(N_{\mathrm {max}}\). The user class must have a trivial default constructor and copy constructor, and has to implement methods to merge and clear the data for a given event slot. The users are provided the interface to attach custom data types to each task, usable subsequently in their application for scoring in a thread-safe way. A detailed example using this pattern can be seen in the CMSFullApp example from the GeantV Git [31] repository. Another example is presented in Sect. 5.2.

GeantV Applications and Physics Validations

The complexity of detector simulation software requires rigorous testing and continuous monitoring during development in order to ensure code correctness and to keep simulation precision and computing performance under control. Several tests and applications, with different levels of complexity, have been developed in order to meet these needs.

Particle transport simulation is composed of several individual components, including the geometry modeler, material description, physics models and processes, held together by the simulation framework. The framework is used to set up a flexible modeling environment, including a generic computation workflow controlled by high-level manager objects. As a consequence, the individual building blocks are accessed through their interfaces and provide their functionalities through the framework. Checking the correctness of individual components is a pre-requisite for ensuring the above-mentioned quality criteria. Subsequently, executing complete simulation applications that exploit and exercise the whole framework is an essential final step in this testing procedure.

Model-level tests allow the verification of the responses of individual physics models by directly calling their interface methods. This makes it possible to test in an isolated way the correctness of the integrated quantities (e.g. atomic cross section, stopping power, etc.) and differential quantities (e.g. energy or angular distribution of the final-state particles) that the physics models provide during the simulation. The production of such model-level tests was enforced as part of the physics model development procedure. The results were verified by comparing with the theoretical expectations and the corresponding Geant4 tests. To test and validate the overall simulation framework, including its building blocks, complete simulation applications were developed, along with the corresponding Geant4 applications, if these were not already available.

An application with a simple setup (TestEm5Footnote 1), with a configurable particle gun and a configurable target, was developed as a first-level test application. In spite of its relative simplicity, this application can produce several integrated quantities (e.g. mean energy deposit, track length, number of steps, backscattering and transmission coefficients, etc.) and differential quantities (e.g. transmitted/backscattered particle angular/energy distributions). The primary particle and target properties can be modified easily. This application was the perfect tool for primary testing, validation, and debugging during the development of the physics framework.

The second application developed was a generic, simplified sampling calorimeter simulation (TestEm3), similar to that used for monthly validation by the Geant4 electromagnetic (EM) physics developers. With its intermediate complexity, this application was used for verification of the simulation by comparing several differential and integrated quantities to those provided by the corresponding Geant4 simulation. As an example of such a differential quantity, the mean energy deposit in the calorimeter by electrons generated with 10 GeV energy as a function of the layer number (proportional to the depth) is shown in Fig. 17 and compared to the corresponding Geant4 (version 10.4.patch03) simulation results. Integrated results, such as the mean energy deposit, track lengths in the absorber and gap materials, or the mean number of secondary particles and simulation steps obtained from the same simulation setup, are summarised in Tables 4 and 5. All measured quantities demonstrate agreement within the per mil level compared to the corresponding values obtained from Geant4.

Finally, a simulation application using the complete CMS detector (FullCMS) was developed in order to validate and verify the correctness and robustness of the overall framework when reaching the complexity of an LHC experiment. While a similar level of agreement as mentioned above was found between the GeantV and the corresponding Geant4 simulation results, this application was mainly used for performance analysis and profiling.

Mean energy deposit by E\(_0\) = 10 GeV e\(^-\) in a simplified sampling calorimeter as a function of the layer number simulated by GeantV and Geant4 (10.4.patch03). The calorimeter is 50 layers of 2.3 mm lead and 5.7 mm liquid argon. A 4 T transverse magnetic field was applied and 0.7 mm secondary production threshold was used

Usability Aspects

Reproducibility

Due to the stochastic nature of particle physics processes, detector simulation results are influenced by the generated random number sequence. Different sequences will generally produce slightly different, but statistically compatible, results. Such sequences are pseudo-random, controlled and reproducible based on an initial ‘seed’ (the next generated number is fully determined by the current generator state). Reproducibility is an important requirement in the case of HEP detector simulation: simulations with the same initial configuration (primary particles and pRNG choice and seed) must give the same results. Even in case of non-sequential processing, the simulation must be repeatable when starting from the same initial configuration of the pRNG engine. This must hold true even if different choices are made during a run, e.g. using vector kernels for a set of physics processes of selected tracks. A key practical reason is the need to reproduce and debug problems that occur during the simulation of a particular event or initial particle. In general, the reliability of a simulation that cannot be exactly repeated is more difficult to assess.