Abstract

We present an algorithm for the computation of Vietoris–Rips persistence barcodes and describe its implementation in the software Ripser. The method relies on implicit representations of the coboundary operator and the filtration order of the simplices, avoiding the explicit construction and storage of the filtration coboundary matrix. Moreover, it makes use of apparent pairs, a simple but powerful method for constructing a discrete gradient field from a total order on the simplices of a simplicial complex, which is also of independent interest. Our implementation shows substantial improvements over previous software both in time and memory usage.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Persistent homology is a central tool in computational topology and topological data analysis. It captures topological features of a filtration, a growing one-parameter family of simplicial complexes and tracks the lifespan of those features throughout the parameter range in the form of a collection of intervals called the persistence barcode. Each interval corresponds to the birth and death of a homological feature, and the associated pair of simplices is called a persistence pair. One of the most common constructions for a filtration from a geometric data set is the Vietoris–Rips complex, which is constructed from a finite metric space by connecting any subset of the points with diameter bounded by a specified threshold with a simplex.

The computation of persistent homology has attracted strong interest in recent years (Chen and Kerber 2013; Milosavljević et al. 2011), with at least 15 different implementations publicly available to date (Bauer et al. 2014, 2017; Binchi et al. 2014; Henselman and Ghrist 2016; Huber 2013; Lewis 2013; Morozov 2006, 2014; Nanda 2010; Perry et al. 2000; Sexton and Vejdemo-Johansson 2008; Tausz 2011; Tausz et al. 2014; GUDHI 2015; Zhang et al. 2019, 2020; Čufar 2020).

Over the years, dramatic improvements in performance have been achieved, as demonstrated in recent benchmarks (Otter et al. 2017).

The predominant approach to persistence computation consist of two steps: the construction of a filtration boundary matrix, and the computation of persistence barcodes using a matrix reduction algorithm similar to Gaussian elimination, which provides a decomposition of the filtered chain complex into indecomposable summands (Barannikov 1994). Among the fastest codes for the matrix reduction step is PHAT (Bauer et al. 2017), which has been created with the goal of assessing and understanding the relation and interplay of the various optimizations proposed in the previous literature on the matrix reduction algorithm. In the course of that project, it became evident that often the construction of the filtration boundary matrix becomes the bottleneck for the computation of Vietoris–Rips barcodes.

The approach followed by Bauer (2016) is to avoid the construction and storage of the filtration boundary matrix as a whole, discarding and recomputing parts of it when necessary. In particular, instead of representing the coboundary map explicitly by a matrix data structure, it is given only algorithmically, recomputing the coboundary of a simplex whenever needed. The filtration itself is also not specified explicitly but only algorithmically, via a method for comparing simplices with respect to their appearance in the filtration order, together with a method for computing the cofacets of a given simplex and their diameters. The initial motivation for pursuing this strategy was purely to reduce the memory usage, possibly at the expense of an increased running time. Perhaps surprisingly, however, this approach also turned out to be substantially faster than accessing the coboundary from memory. This effect can be explained by the fact that, on current computer architectures, memory access is much more expensive than elementary arithmetic operations.

The computation of persistent homology as implemented in Ripser involves four key optimizations to the matrix reduction algorithm, two of which have been proposed in the literature before. While our implementation is specific to Vietoris–Rips filtrations, the ideas are also applicable to persistence computations for other filtrations as well.

Clearing birth columns The standard matrix reduction algorithm does not make use of the special structure of a boundary matrix D, which satisfies \(D^2=0\), i.e., boundaries are always cycles. Ignoring this structure leads to a large number of unnecessary and expensive matrix operations in the matrix reduction, computing a large number of cycles that are not used subsequently. The clearing optimization (also called twist), suggested by Chen and Kerber (2011), avoids the computation of those cycles.

Cohomology The use of cohomology for persistence computation was first suggested by de Silva et al. (2011b). The authors establish certain dualities between persistent homology and cohomology and between absolute and relative persistent cohomology. As a consequence, the computation of persistence barcodes can also be achieved as a cohomology computation. A surprising observation, resulting from an application of persistent cohomology to the computation of circular coordinates by de Silva et al. (2011a), was that the computation of persistent cohomology is often much faster than persistent homology. This effect has been subsequently confirmed by Bauer et al. (2017), who further observed that the obtained speedup also depends heavily on the use of the clearing optimization proposed by Chen and Kerber (2011), which is also employed implicitly in the cohomology algorithm of de Silva et al. (2011b). Especially for Vietoris–Rips filtrations and low homological degree, a decisive speedup is obtained, but only when both cohomology and clearing are used in conjunction. A fully satisfactory explanation of this phenomenon has not been given previously in the literature. In the present paper, we provide a simple counting argument that sheds light on this computational asymmetry between persistent homology and cohomology of Rips filtrations.

Implicit matrix reduction The computation of persistent homology usually relies on an explicit construction of a filtration coboundary matrix, which is then transformed to a reduced form, from which the persistence barcode can be read off directly. In contrast, our approach is to decouple the description of the filtration and of the boundary operator, representing both the filtration and the coboundary matrix only algorithmically instead of explicitly. Specifically, using a fixed lexicographic order for the k-simplices, independent of the filtration, the boundary and coboundary matrices in degree k for the full simplicial complex on n vertices are completely determined by the dimension k and the number n, and their columns can simply be recomputed instead of being stored in memory. Likewise, the filtration order of the simplices is defined to depend only on the distance matrix together with a fixed choice of total order on the simplices, used to break ties when two simplices appear simultaneously in the filtration. Together, the filtration and the boundary map can be encoded using much less information than storing the coboundary matrix explicitly. The algorithmic representation of the coboundary matrix in Ripser loosely resembles the use of lazy evaluation in the infinite-dimensional linear algebra framework of Olver and Townsend (2014).

Furthermore, we also avoid the storage of the reduced matrix as a whole, retaining only the much smaller reduction matrix, which encodes the column operations applied to the coboundary matrix. Besides the current column of the reduced matrix on which operations are performed, only information about the pivots of the reduced matrix is stored in memory. In addition, only the pivots which can not be obtained directly from the unreduced matrix are stored in memory, as explained next. The implicit representation of the reduced matrix by a reduction matrix has also been used in the cohomology algorithm by de Silva et al. (2011b), which is implemented in Dionysus (by Morozov 2006), and in GUDHI (2015). In contrast to our implementation, however, in those implementations the unreduced filtration coboundary matrix is still stored explicitly.

Apparent and emergent pairs Further improvements to persistence computation, both in terms of reduced memory usage and computational shortcuts, can be obtained by exploiting a frequent and certain easily identifiable type of persistence pair, called an apparent pair. The pairing of a given simplex in an apparent pair can be determined by a purely local condition, depending only on the facets and cofacets of the simplex, and thus can be read off the filtration (co)boundary matrix directly without any matrix reduction. In addition, since an apparent pair determines pivots in the boundary and the coboundary matrix, those pivots can be recomputed quickly, and thus only the pivots not corresponding to apparent pairs have to be stored in memory for the implicit matrix reduction algorithm.

Generalizing the notion of apparent pairs, emergent pairs are persistence pairs that can be identified directly during the reduction, and can be read off directly from a partially reduced matrix together with the previously computed pairs. The construction of the filtration coboundary matrix columns can be cut short when an apparent or emergent pair is encountered. During the enumeration of cofacets of a simplex for an appropriate refinement of the original filtration, apparent and emergent pairs of persistence 0 can be readily identified, circumventing the construction of the full coboundary of the simplex. Since a large portion of all pairs appearing in the computation arises this way, it becomes unnecessary to construct the entire filtration (co)boundary matrix, and the speedup obtained from this shortcut is substantial. Apparent pairs also provide a simple and natural construction for a discrete gradient (in the sense of discrete Morse theory) from a simplexwise filtration.

We note that special cases of the apparent pairs construction have been described in the literature before, and several equivalent variants have appeared in the literature, seemingly independently from the present work, after the public release of Ripser. In particular, Kahle (2011) described the construction of a discrete gradient on a simplicial complex based on a total order of the vertices, which is used to derive bounds on the topological complexity of random Vietoris–Rips complexes above the thermodynamic limit. Indeed, our definition of apparent pairs arose from the goal of generalizing Kahle’s construction to general filtrations of simplicial complexes. We verify in Lemma 3.8 that the discrete gradient constructed in that paper coincides with the apparent pairs of a simplexwise filtration given by the lexicographic order on the simplices. Apparent pairs were also considered by Delgado-Friedrichs et al. (2015) as close pairs in the context of cancelation of critical points in discrete Morse functions. More recently, apparent pairs have been described by Henselman-Petrusek (2017, see Remark 8.4.2) as minimal pairs of a linear order and employed in the software Eirene Henselman and Ghrist (2016), which has been developed simultaneously and independently of Ripser. In Eirene, apparent pairs are used to improve the performance of persistence computations by avoiding the construction of large parts of the boundary matrix, similar to the use of apparent and emergent pairs in Ripser. An elaborate focus lies on the choice of refinement of the Vietoris–Rips filtration, aiming for a large number of pairs. Following the first release of Ripser in 2016, apparent pairs have been used in various ways in the literature. They have been employed by Lampret (2020) in the more general context of algebraic discrete Morse theory under the name steepness pairing. In a computational context, they have also been employed for parallel and multi-scale (coarse-to fine) persistence computation on the GPU by Mendoza-Smith and Tanner (2017), and in a hybrid GPU/CPU variant of PHAT and Ripser developed by Zhang et al. (2019), Zhang et al. (2020). Further recent implementations based on Ripser include a reimplementation in Julia developed by Čufar (2020) and a lockfree shared-memory adaptation of Ripser written by Morozov and Nigmetov (2020).

While apparent pairs have not been considered a central part of discrete Morse theory and of persistent homology so far, we consider their importance in recent research and their multiple discovery as strong evidence that they will play a significant role in the further development of these theories.

2 Preliminaries

Simplicial complexes and filtrations Given a finite set X, an (abstract) simplex on X is simply a nonempty subset \(\sigma \subseteq X\). The dimension of \(\sigma \) is one less than its cardinality, \(\dim \sigma = |\sigma | - 1\). Given two simplices \(\sigma \subseteq \tau \), we say that \(\sigma \) is a face of \(\tau \), and that \(\tau \) is a coface of \(\sigma \). If additionally \(\dim \sigma + 1 = \dim \tau \), we say that \(\sigma \) is a facet of \(\tau \) (a face of codimension 1), and that \(\tau \) is a cofacet of \(\sigma \).

A finite (abstract) simplicial complex is a collection K of simplices X that is closed under the face relation: if \(\tau \in K\) and \(\sigma \subseteq \tau \), then \(\sigma \in K\). The set X is called the vertices of K, and the subsets in K are called simplices. A subcomplex of K is a subset \(L \subseteq K\) that is itself a simplicial complex.

Given a finite simplicial complex K, a filtration of K is a collection of subcomplexes \((K_i)_{i \in I}\) of K, where I is a totally ordered indexing set, such that \(i \le j\) implies \(K_i \subseteq K_j\). In particular, for a finite metric space (X, d), represented by a symmetric distance matrix, the Vietoris–Rips complex at scale \(t \in \mathbb {R}\) is the abstract simplicial complex

Vietoris–Rips complex were first introduced by Vietoris (1927) as a means of defining a homology theory for general compact metric spaces, and later used by Rips in the study of hyperbolic groups (see Gromov 1987). Their usage in topological data analysis was pioneered by Silva and Carlsson (2004), foreshadowed by results of Hausmann (1995) and Latschev (2001) on sampling conditions for recovering the homotopy type of a Riemannian manifold from a Vietoris–Rips complex. Letting the scale parameter t vary, the resulting filtration, indexed by \(I=\mathbb {R}\), is a filtration of the full simplicial complex \(\Delta (X)\) on the vertex set X called the Vietoris–Rips filtration. For this paper, other relevant indexing sets besides the real numbers \(\mathbb {R}\) are the set of distances \(\{d(x,y) \mid x, y \in X\}\) in a finite metric space (X, d), and the set of simplices of \(\Delta (X)\) equipped with an appropriate total order refining the order by simplex diameter, as explained later.

We call a filtration essential if \(i \ne j\) implies \(K_i \ne K_j\). A simplexwise filtration of K is a filtration such that for all \(i \in I\) with \(K_i \ne \emptyset \), there is some simplex \(\sigma _i \in K\) and some index \(j < i \in I\) such that \(K_i \setminus K_j = \{\sigma _i\}\). In an essential simplexwise filtration, the index j is the predecessor of i in I. Thus, essential simplexwise filtrations correspond bijectively to total orders extending the face poset of K, up to isomorphism of the indexing set I. In particular, in this case we often identify the indexing set with the set of simplices. If a simplex \(\sigma \) appears earlier in the filtration than another simplex \(\tau \), i.e., \(\sigma \in K_i\) whenever \(\tau \in K_i\), we say that \(\tau \) is younger than \(\sigma \), and \(\sigma \) is older than \(\tau \).

It is often convenient to think of a simplicial filtration as a diagram \(K_\bullet :I \rightarrow \mathbf {Simp}\) of simplicial complexes indexed over some finite totally ordered set I, such that all maps \(K_i \rightarrow K_j\) in the diagram (with \(i \le j\)) are inclusions. In terms of category theory, \(K_\bullet \) is a functor.

Reindexing and refinement of filtrations A reindexing of a filtration \(F_\bullet :R \rightarrow \mathbf {Simp}\) indexed over some totally ordered set R is another filtration \(K_\bullet :I \rightarrow \mathbf {Simp}\) such that \(F_t = K_{r(t)}\) for some monotonic map \(r :R \rightarrow I\), called reindexing map. If there is a complex \(K_i\) that does not occur in the filtration \(F_\bullet \), we say that \(K_\bullet \) refines \(F_\bullet \).

As an example, the filtration \({{\,\mathrm{Rips}\,}}_\bullet (X)\) is indexed by the real numbers \(\mathbb {R}\), but can be condensed to an essential filtration \(K_\bullet \), indexed by the finite set of pairwise distances of X. In order to compute persistent homology, one needs to apply one further step of reindexing, refining the filtration to an essential simplexwise one, as described in detail later.

Sublevel sets of functions A function \(f :K \rightarrow \mathbb {R}\) on a simplicial complex K is monotonic if \(\sigma \subseteq \tau \in K\) implies \(f(\sigma ) \le f(\tau )\). For any \(t \in \mathbb {R}\), the sublevel set \(f^{-1}(-\infty ,t]\) of a monotonic function f is a subcomplex. The sublevel sets form a filtration of K indexed over \(\mathbb {R}\). Clearly, any finite filtration \(K_\bullet :I \rightarrow \mathbf {Simp}\) of simplicial complexes can be obtained as a reduction of some sublevel set filtration. In particular, the Vietoris–Rips filtration is simply the sublevel set filtration of the diameter function.

Discrete Morse theory Forman (1998) studies the topology of sublevel sets for generic functions on simplicial complexes. A discrete vector field on a simplicial complex K is a partition V of K into singleton sets and pairs \(\{\sigma , \tau \}\) in which \(\sigma \) is a facet of \(\tau \). We call such a pair a facet pair. A monotonic function \(f :K \rightarrow \mathbb {R}\) is a discrete Morse function if the facet pairs \(\{\sigma ,\tau \}\) with \(f(\sigma )=f(\tau )\) generate a discrete vector field V, which is then called the discrete gradient of f. A simplex that is not contained in any pair of V is called a critical simplex, and the corresponding value is a critical value of f.

Persistent homology In this paper, we only consider simplicial homology with coefficients in a prime field \(\mathbb {F}_p\), and write \(H_*(K)\) as a shortcut for \(H_*(K; \mathbb {F}_p)\). Applying homology to a filtration of finite simplicial complexes \(K_\bullet :I \rightarrow \mathbf {Simp}\) yields another diagram \(H_*(K_\bullet ) :I \rightarrow \mathbf {Vect}_p\) of finite dimensional vector spaces over \(\mathbb {F}_p\), often called a persistence module (Chazal et al. 2016).

If all vector spaces have finite dimension, such diagrams have a particularly simple structure: they decompose into a direct sum of interval persistence modules, consisting of copies of the field \(\mathbb {F}_p\) connected by the identity map over an interval range of indices, and the trivial vector space outside the interval (Crawley-Boevey 2015; Zomorodian and Carlsson 2005). This decomposition is unique up to isomorphism, and the collection of intervals describing the structure, the persistence barcode, is therefore a complete invariant of the isomorphism type, capturing the homology at each index of the filtration together with the maps connecting any two different indices. In fact, a corresponding decomposition exists already on the level of filtered chain complexes (Barannikov 1994), and this decomposition is constructed by algorithms for computing persistence barcodes.

If \(K_\bullet \) is an essential filtration and \([i,j) \subseteq I\) is an interval in the persistence barcode of \(K_\bullet \), then we call i a birth index, j a death index, and the pair (i, j) an index persistence pair. Moreover, if \([i,\infty )\) is an interval in the persistence barcode of K, we say that i is an essential (birth) index. For an essential simplexwise filtration \(K_\bullet \), the indices I are in bijection with the simplices, and so in this context we also speak about birth, death, and essential simplices, and we consider pairs of simplices as persistence pairs. If \(K_\bullet \) is a reindexing of a sublevel set filtration for a monotonic function f, we say that the pair \((\sigma _i,\sigma _j)\) has persistence \(f(\sigma _j)-f(\sigma _i)\).

Persistence computation using simplexwise refinement A reindexing \(K_\bullet \) of a filtration \(F_\bullet = K_\bullet \circ r\) can be used to obtain the persistent homology of \(F_\bullet \) from that of \(K_\bullet \) as

Note that this is a direct consequence of the fact that the two filtrations \(F_\bullet ,K_\bullet \), the reindexing map r, and homology \(H_*\) are functors, and composition of functors is associative.

If the reindexing map is not surjective, the persistence barcode of the reindexed filtration \(K_\bullet \) may contain intervals that do not correspond to intervals in the barcode of \(F_\bullet \). The preimage \(r^{-1}[i,j) \subseteq R\) of an interval \([i,j) \subseteq I\) in the persistence barcode of \(K_\bullet \) is then either empty, in which case we call (i, j) a zero persistence pair; if \(F_\bullet \) is the sublevel set filtration of f, this is the case if and only if \(f(\sigma _j)=f(\sigma _i)\). Otherwise, \(r^{-1}[i,j)\) is an interval of the persistence barcode for \(F_\bullet \), and all such intervals arise this way. We summarize:

Proposition 2.1

Let \(f :K \rightarrow \mathbb {R}\) be a monotonic function on a simplicial complex K, and let \(K_\bullet :I \rightarrow \mathbf {Simp}\) be an essential simplexwise refinement of the sublevel set filtration \(F_\bullet = f^{-1}(-\infty ,\bullet ]\), with \(K_i = \{\sigma _k \mid k \in I, \, k \le i\}\). The persistence barcode of \(K_\bullet \) determines the persistence barcode of \(F_\bullet \),

with \(r^{-1}[i,j) = [f(\sigma _i),f(\sigma _j))\) and \(r^{-1}[i,\infty ) = [f(\sigma _i),\infty )\).

Filtration boundary matrices Given a simplicial complex K with a totally ordered set of vertices X, there is a canonical basis of the simplicial chain complex \(C_*(K)\), consisting of the simplices oriented according to the specified total order. A simplexwise filtration turns this into an ordered basis and gives rise to a filtration boundary matrix, which is the matrix of the boundary operator of the chain complex \(C_*(K)\) with respect to that ordered basis. We may consider boundary matrices both for the combined boundary map \(\partial _* :C_* \rightarrow C_*\) as well as for the individual boundary maps \(\partial _d :C_d \rightarrow C_{d-1}\) in each dimension d. Generalizing the latter case, we say that a matrix D with column indices \(I_d \subset I\) and row indices \(I_{d-1} \subset I\) is a filtration d-boundary matrix for a simplexwise filtration \(K_\bullet :I \rightarrow \mathbf {Simp}\) if for each \(i \in I\), the columns of D with indices \(\le i\) form a generating set of the \((d-1)\)-boundaries \(B_{d-1}(K_i)\). This allows us to remove columns from a boundary matrix that are linear combinations of the previous columns, a strategy called clearing that is discussed in Sect. 3.2.

Indexing simplices in the combinatorial number system We now describe the combinatorial number system (Knuth 2011; Pascal 1887), which provides a way of indexing the simplices of the full simplex \(\Delta (X)\) and of the Vietoris–Rips filtration \({{\,\mathrm{Rips}\,}}_\bullet (X)\) by natural numbers, and which has previously been employed for persistence computation by Bauer et al. (2014). Again, we assume a total order on the vertices \(X=\{v_0,\dots ,v_{n-1}\}\) of the filtration. Using this order, we identify each d-simplex \(\sigma \) with the sorted \((d+1)\)-tuple of its vertex indices \((i_{d},\dots ,i_0)\) in decreasing order \(i_d> \dots > i_0\). This induces a lexicographic order on the set of d-simplices, which we refer to as the colexicographic vertex order. The combinatorial number system of order \(d+1\) is the order-preserving bijection

mapping the lexicographically ordered set of decreasing \((d+1)\)-tuples of natural numbers to the set of natural numbers \(\{0,\dots ,\left( {\begin{array}{c}n\\ d+1\end{array}}\right) - 1\}\), as illustrated in the following value table for \(d=2\).

Note that for \(k > n\) the convention \({n \atopwithdelims ()k} = 0\) is used here. As an example, the simplex \(\{v_5,v_3,v_0\}\) is assigned the number

Conversely, if a d-simplex \(\sigma \) with vertex indices \((i_d,\dots ,i_0)\) has index N in the combinatorial number system, the vertices of \(\sigma \) can be obtained by a binary search, as described in Sect. 4.

Lexicographic refinement of the Vietoris–Rips filtration We now describe an essential simplexwise refinement of the Vietoris–Rips filtration, as required for the computation of persistent homology. To this end, we consider another lexicographic order on the simplices of the full simplex \(\Delta (X)\) with vertex set X, given by ordering the simplices

-

by diameter,

-

then by dimension,

-

then by reverse colexicographic vertex order.

We will refer to the simplexwise filtration resulting from this total order as the lexicographically refined Vietoris–Rips filtration. The choice of the reverse colexicographic vertex order has algorithmic advantages, explained in Sect. 4.

As an example, consider the point set \(X = \{v_0=(0,0), v_1=(3,0), v_2=(0,4), v_3=(3,4)\} \subseteq \mathbb {R}^2\), consisting of the vertices of a \(3\times 4\) rectangle with the Euclidean distance. We obtain the distance matrix

and the table of simplices (top row) with their diameters (bottom row)

listed in order of the lexicographically refined Vietoris–Rips filtration.

3 Computation

In this section, we explain the algorithm for computing persistent homology implemented in Ripser, and discuss the various optimization employed to achieve an efficient implementation.

3.1 Matrix reduction

The prevalent approach to computing persistent homology is by column reduction (Cohen-Steiner et al. 2006) of the filtration boundary matrix. We write \(M_i\) to denote the ith column of a matrix M. The pivot index of \(M_i\), denoted by \(\mathop {{\text {Pivot}}}\nolimits {M_i}\), is the largest row index of any nonzero entry, taken to be 0 if all entries of v are 0. Otherwise, the corresponding nonzero entry is called the pivot entry, denoted by \(\mathop {{\text {PivotEntry}}}\nolimits {M_i}\). We define \({{\,\mathrm{Pivots}\,}}M =\bigcup _i\mathop {{\text {Pivot}}}\nolimits {M_i} \setminus \{0\}\).

A column \(M_i\) is called reduced if \(\mathop {{\text {Pivot}}}\nolimits {M_i}\) cannot be decreased using column additions by scalar multiples of columns \(M_j\) with \(j<i\). Equivalently, \(\mathop {{\text {Pivot}}}\nolimits {M_i}\) is minimal among all pivot indices of linear combinations

with \(\lambda _i\ne 0\), meaning that multiplication from the right by a regular upper triangular matrix U leaves the pivot index of the column unchanged: \(\mathop {{\text {Pivot}}}\nolimits {M_i} = \mathop {{\text {Pivot}}}\nolimits {(MU)_i}\). In particular, a column \(M_i\) is reduced if either \(M_i = 0\) or all columns \(M_j\) with \(j<i\) are reduced and satisfy \(\mathop {{\text {Pivot}}}\nolimits {M_j} \ne \mathop {{\text {Pivot}}}\nolimits {M_i}\). A matrix M is called reduced if all of its columns are reduced. The following proposition forms the basis of matrix reduction algorithms for computing persistent homology.

Proposition 3.1

(Cohen-Steiner et al. 2006) Let D be a filtration boundary matrix, and let V be a full rank upper triangular matrix such that \(R=D\cdot V\) is reduced. Then the index persistence pairs are

and the essential indices are

A basis for the filtered chain complex that is compatible with both the filtration and the boundary maps is given by the chains

determining a direct sum decomposition of \(C_*(K)\) into elementary chain complexes of the form

for each death index j and

for each essential index i. Taking intersections with the filtration \(C_*(K_\bullet )\), we obtain elementary filtered chain complexes, in which \(R_j\) is a cycle appearing in the filtration at index \(i=\mathop {{\text {Pivot}}}\nolimits {R_j}\) and becoming a boundary when \(V_j\) enters the filtration at index j, and in which an essential cycle \(V_i\) enters the filtration at index i. The persistent homology is thus generated by the representative cycles

in the sense that, for all indices \(k \in I\), the homology \(H_*(K_k)\) has a basis generated by the cycles

and for all pairs of indices \(k,l \in I\) with \(k \le l\), the image of the map in homology \(H_*(K_k) \rightarrow H_*(K_l)\) induced by inclusion has a basis generated by the cycles

An algorithm for computing the matrix reduction \(R = D \cdot V\) is given below as Algorithm 1. It can be applied either to the entire filtration boundary matrix in order to compute persistence in all dimensions at once, or to the filtration d-boundary matrix, resulting in the persistence pairs of dimensions \((d-1,d)\) and the essential indices of dimension d. This algorithm appeared for the first time in the work of Cohen-Steiner et al. (2006), rephrasing the original algorithm for persistent homology (Edelsbrunner et al. 2002) as a matrix algorithm. An algorithmic advantage to the decomposition algorithm described by Barannikov (1994) is that it does not require any row operations.

Note that the column operations involving the matrix V are often omitted if the goal is to compute just the persistence pairs and representative cycles are not required. In Sect. 3.4, we will use the matrix V nevertheless to implicitly represent the matrix \(R = D \cdot V\).

Typically, reducing a column at a birth index tends to be significantly more expensive than one with a death index. This observation can be explained using the time complexity analysis for the matrix reduction algorithm given in (Edelsbrunner and Harer 2010, see Sect. VII.2): the reduction of a column for a d-simplex with death index j and corresponding birth index i requires at most \((d+1)(j-i)^2\) steps, while the reduction of a column with birth index i requires at most \((d+1)(i-1)^2\) steps. Typically, the index persistence \((j-i)\) is quite small, while the reduction of birth columns indeed becomes expensive for large birth indices i. The next subsection describes a way to circumvent these birth column reductions whenever possible.

3.2 Clearing inessential birth columns

An optimization to the matrix reduction algorithm, due to Chen and Kerber (2011), is based on the observation that for any birth index i, the column \(R_i\) of the reduced matrix will necessarily be 0. Reducing those columns to zero is therefore unnecessary, and avoiding their reduction can lead to dramatic improvements in running time. The clearing optimization thus simply sets a column \(R_i\) to 0 whenever i is identified as the pivot index of another column, \(i = \mathop {{\text {Pivot}}}\nolimits {R_j}\).

As proposed by Chen and Kerber (2011), clearing yields only the reduced matrix R, and the method is described in the survey by Morozov and Edelsbrunner (2017) as incompatible with the computation of the reduction matrix V. In fact, however, the clearing optimization can actually be extended to also obtain the reduction matrix, which plays a crucial role in our implementation. To see this, consider an inessential birth index \(i = \mathop {{\text {Pivot}}}\nolimits {R_j}\), corresponding to a cleared column. Obtaining the requisite full rank upper triangular reduction matrix V requires an appropriate column \(V_i\) such that \(D \cdot V_i = R_i = 0\), i.e., \(V_i\) is a cycle. It suffices to simply take \(V_i = R_j\); by construction, this column is a boundary, and since \(\mathop {{\text {Pivot}}}\nolimits {V_i} = i\), the resulting matrix V will be full rank upper triangular.

The birth index i of a persistence pairs (i, j) is determined after identified with the reduction of column j. In order to ensure that this happens before the algorithm starts reducing column i, so that the column is cleared already before it would get reduced, the matrices for the boundary maps \(\partial _d\) are reduced in order of decreasing dimension \(d = (p+1), \dots , 1\). For each index persistence pair (i, j) computed in the reduction of the boundary matrix for \(\partial _d\), the corresponding column for index i can now be removed from the boundary matrix for \(\partial _{d-1}\). Note however that computing persistent homology in dimensions \(0\le d \le p\) still requires the reduction of the full boundary matrix \(\partial _{p+1}\). This can become very expensive, especially if there are many \((p+1)\)-simplices, as in the case of a Vietoris–Rips filtration. In this setting, the complex K is the \((p+1)\)-skeleton of the full simplex on n vertices. The standard matrix reduction algorithm for persistent homology requires the reduction of one column per simplex of dimension \(1 \le d \le p + 1\), amounting to

columns in total. Note that \(\dim B_{d-1}(K)\) equals the number of columns with a death index and \(\dim Z_{d}(K)\) equals the number of columns with a birth index in the d-boundary matrix. As an example, for \(p=2,\,n=192\) we obtain \(56\,050\,096\) columns, of which \(1\,161\,471\) are death columns and \(54\,888\,625\) are birth columns. Using the clearing optimization, this number is lowered to

columns; again, for \(p=2,\,n=192\) this still yields \(54\,888\,816\) columns, of which \(1\,161\,471\) are death columns and \(53\,727\,345\) are birth columns. Because of the large number of birth columns arising from \((p+1)\)-simplices, the use of clearing alone thus does not lead to a substantial improvement yet.

3.3 Persistent cohomology

The clearing optimization can be used to a much greater effect by computing persistence barcodes using cohomology instead of homology of Vietoris–Rips filtrations. As noted by de Silva et al. (2011b), for a filtration \(K_\bullet \) of a simplicial complex K the persistence barcodes for homology \(H_*(K_\bullet )\) and cohomology \(H^*(K_\bullet )\) coincide, since for coefficients in a field, cohomology is a vector space dual to homology (Munkres 1984), and the barcode of persistent homology (and more generally, of any pointwise finite-dimensional persistence module) is uniquely determined by the ranks of the internal linear maps in the persistence module, which are preserved by vector space duality.

The filtration of chain complexes \(C_*(K_\bullet )\) gives rise to a diagram of cochain complexes \(C^d(K_\bullet )\), with reversed order on the indexing set. Since cohomology is a contravariant functor, the morphisms in this diagrams are however surjections instead of injections. To obtain a setting that is suitable for our reduction algorithms, we instead consider the filtration of relative cochain complexes \(C^d(K,K_\bullet )\). The filtration coboundary matrix for \(\delta :C^d(K,K_\bullet ) \rightarrow C^{d+1}(K,K_\bullet )\) is given as the transpose of the filtration boundary matrix with rows and columns ordered in reverse filtration order (de Silva et al. 2011b). The persistence barcodes for relative cohomology \(H^*(K,K_\bullet )\) uniquely determine those for absolute cohomology \(H^*(K_\bullet )\) (and coincide with the respective homology barcodes by duality). This correspondence can be seen as a consequence of the fact that the short exact sequence of cochain complexes of persistence modules

(where \(C^*(K)\) is interpreted as a complex of constant persistence modules) gives rise to a long exact sequence

in cohomology, which can be seen to split at \(H^d(K,K_{\bullet })\) and at \(H^{d+1}(K_{\bullet })\), with \(\mathop {{\text {im}}}\nolimits \delta ^*\) as the summand corresponding to the bounded intervals in either barcode (Bauer and Schmahl 2020). Now the persistence pairs (j, i) of dimensions \((d,d-1)\) for relative cohomology \(H^d(K,K_i)\) correspond to persistence pairs (i, j) of dimensions \((d-1,d)\) for (absolute) homology \(H_{d-1}(K_i)\) in one dimension below, i.e., a death index becomes a dual inessential birth index and vice versa, while the essential birth indices for \(H^d(K,K_i)\) remain essential birth indices for \(H_d(K_i)\) in the same dimension. Thus, the persistence barcode can also be computed by matrix reduction of the filtration coboundary matrix. Note that this is equivalent to row reduction of the filtration boundary matrix, reducing the rows from bottom to top.

Since the coboundary map increases the degree, in order to apply the clearing optimization described in Sect. 3.2, the filtration d-coboundary matrices are now reduced in order of increasing dimension using Algorithm 2. This yields the relative persistence pairs of dimensions \((d+1,d)\) for \(H^{d+1}(K,K_i)\), corresponding to the absolute persistence pairs of dimensions \((d,d+1)\) for \(H_d(K_i)\), and the essential indices of dimension d. This is the approach used in Ripser.

The crucial advantage of using cohomology to compute the Vietoris–Rips persistence barcodes in dimensions \(0 \le d \le p\) lies in avoiding the expensive reduction of columns whose birth indices correspond to \((p+1)\)-simplices, as discussed in Sect. 3.2. To illustrate the difference, we first consider cohomology without clearing. Note that for persistent cohomology, the number of column reductions performed by the standard matrix reduction (Algorithm 1) is

again, for K the \((p+1)\)-skeleton of the full simplex on n vertices with \(p=2,\,n=192\), this amounts to \(1\,179\,808\) columns, of which \(1\,161\,471\) are death columns and \(18\,337\) are birth columns. While this number is significantly smaller than for homology, for small values of d the number of rows of the coboundary matrix, \(\left( {\begin{array}{c}n\\ d+1\end{array}}\right) \), is much larger than that of the boundary matrix, \(\left( {\begin{array}{c}n\\ d\end{array}}\right) \), and thus the reduction of birth columns becomes prohibitively expensive in practice. Consequently, reducing the coboundary matrix without clearing has not been observed as more efficient in practice than reducing the boundary matrix (Bauer et al. 2017). However, in conjunction with the clearing optimization, only

columns remain to be reduced; for \(p=2,\,n=192\) we get \(1\,161\,472\) columns, of which \(1\,161\,471\) are death columns and only one is a birth column, corresponding to the single essential class in dimension 0. In addition, typically a large fraction of the death columns will be reduced already from the beginning, as observed in Sect. 5. Thus, in practice, the combination of clearing and cohomology drastically reduces the number of columns operations in comparison to Algorithm 1.

3.4 Implicit matrix reduction

The matrix reduction algorithm can be modified slightly to yield a variant in which only the reduction matrix V is represented explicitly in memory. The columns of the coboundary matrix D are computed on the fly instead, by a method that enumerates the nonzero entries in a given column of D in reverse colexicographic vertex order of the corresponding rows. Specifically, using the combinatorial number system to index the simplices on the vertex set \(\{0,\dots ,n-1\}\), the cofacets of a simplex can be enumerated efficiently, as described in more detail in Sect. 4. The matrix \(R = D \cdot V\) is now determined implicitly by D and V. During the execution of the algorithm, only the current column \(R_j\) on which additions are performed is kept in memory. For all previously reduced columns \(R_k\), with \(k < j\), only the pivot index and its entry, \(\mathop {{\text {Pivot}}}\nolimits {R_k}\) and \(\mathop {{\text {PivotEntry}}}\nolimits {R_k}\), are stored in memory. Whenever needed in the algorithm, those columns are recomputed on the fly as \(R_k = D \cdot V_k\) (see Sect. 1). Note that the extension of clearing to the reduction matrix described in Sect. 3.2 is crucial for an efficient implementation of implicit matrix reduction.

To further decrease the memory usage, we apply another minor optimization. Note that in the matrix reduction algorithm Algorithm 1, only a death index k (\(R_k = D \cdot V_k \ne 0\)) may satisfy the condition \(\mathop {{\text {Pivot}}}\nolimits {R_k} = \mathop {{\text {Pivot}}}\nolimits {R_j}\) in Algorithm 1. Hence, only columns with a death index are used later in the computation to eliminate pivots. Consequently, our implementation does not actually maintain the entire matrix V, but only stores those columns of V with a death index. In other words, it does not store explicit generating cocycles for persistent cohomology, but only their cobounding cochains.

3.5 Apparent pairs

We now discuss a class of persistence pairs that can be identified in a Vietoris–Rips filtration directly from the boundary matrix without reduction, and often even without actually enumerating all cofacets, i.e., without entirely constructing the corresponding columns of the coboundary matrix. The columns in question are already reduced in the coboundary matrix, and hence remain unaffected by the reduction algorithm. Most relevant to us are persistence pairs that have persistence zero with respect to the original filtration parameter, meaning that they arise only as an artifact of the lexicographic refinement and do not contribute to the Vietoris–Rips barcode itself. In practice, most of the pairs arising in the computation of Vietoris–Rips persistence are of this kind (see Sect. 5, and also Zhang et al. 2019, Sect. 4.2 ).

Definition 3.2

Consider a simplexwise filtration \(K_\bullet \) of a finite simplicial complex K. We call a pair of simplices \((\sigma ,\tau )\) of K an apparent pair of \(K_\bullet \) if both

-

\(\sigma \) is the youngest facet of \(\tau \), and

-

\(\tau \) is the oldest cofacet of \(\sigma \).

Equivalently, all entries in the filtration boundary matrix of \(K_\bullet \) below or to the left of \((\sigma ,\tau )\) are 0.

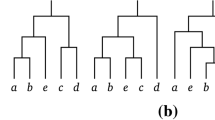

The notion of apparent pairs applies to any simplexwise filtration \(K_\bullet \), which may arise as a simplexwise refinement of some coarser filtration \(F_\bullet \), such as our lexicographic refinement of the Vietoris–Rips filtration. As an example, consider the Vietoris–Rips filtration on the vertices of a rectangle from Sect. 2. The filtration boundary matrices are

with bold entries corresponding to apparent pairs. The apparent pairs thus are

Apparent pairs provide a connection between persistence and discrete Morse theory (Forman 1998). The collection of all apparent pairs pairs of a simplexwise filtration \(K_\bullet \) constitutes a subset of the persistence pairs (Lemma 3.3), while at the same time also forming a discrete gradient in the sense of discrete Morse theory (Lemma 3.5).

Apparent pairs as persistence pairs As an immediate consequence of the definition of an apparent pair, we get the following lemma.

Lemma 3.3

Any apparent pair of a simplexwise filtration is a persistence pair.

Proof

Since the entries in the filtration boundary matrix of \(K_\bullet \) to the left of an apparent pair \((\sigma ,\tau )\) are 0, the index of \(\sigma \) is the pivot of the column of \(\tau \) in the filtration boundary matrix. Thus, the column of \(\tau \) in the boundary matrix is already reduced from the beginning, and Proposition 3.1 yields the claim. \(\square \)

Remark 3.4

Note that the property of being an apparent pair does not depend on the choice of the coefficient field. Indeed, the above statement holds for any choice of coefficient field for (co)homology. In that sense, the apparent pairs are universal persistence pairs.

Specifically, given an apparent pair \((\sigma ,\tau )\), the chain complex \(\dots \rightarrow 0 \rightarrow \langle \tau \rangle \rightarrow \langle \partial \tau \rangle \rightarrow 0 \rightarrow \dots \) can easily be seen to yield an indecomposable summand of the filtered chain complex \(C_*(K_\bullet ; \mathbb Z)\) with integer coefficients (by intersecting the chain complexes), and to generate an interval summand of persistent homology (as a diagram of Abelian groups indexed by a totally ordered set), and thus any other coefficients, by the universal coefficient theorem. The boundary \(\partial \tau \) enters the filtration simultaneously with \(\sigma \), and the appearance of \(\sigma \) and \(\tau \) in the filtration determine the endpoints of the resulting interval.

Dually, the coboundary \(\delta \sigma \) enters the filtration simultaneously with \(\tau \), and the cochain complex \(\dots \rightarrow 0 \rightarrow \langle \sigma \rangle \rightarrow \langle \delta \sigma \rangle \rightarrow 0 \rightarrow \dots \) yields an indecomposable summand of \(C^*(K_\bullet ; \mathbb Z)\), generating an interval summand of \(H^*(K_\bullet ; \mathbb Z)\) corresponding to the one mentioned above.

Apparent pairs as gradient pairs The next two lemmas relate apparent pairs to discrete Morse theory. First, we show that apparent pairs are gradient pairs.

Lemma 3.5

The apparent pairs of a simplexwise filtration form a discrete gradient.

Proof

Let \((\sigma , \tau )\) be an apparent pair, with \(\dim \sigma = d\). By definition, \(\tau \) is uniquely determined by \(\sigma \), and so \(\sigma \) cannot appear in another apparent pair \((\sigma , \psi )\) for any \((d+1)\)-simplex \(\psi \ne \tau \). We show that \(\sigma \) also does not appear in another apparent pair \((\phi , \sigma )\) for any \((d-1)\)-simplex \(\phi \). To see this, note that there is another d-simplex \(\rho \ne \sigma \) that is also a facet of \(\tau \) and a cofacet of \(\phi \). Since \(\sigma \) is assumed to be the youngest facet of \(\tau \), the simplex \(\rho \) is older than \(\sigma \). In particular, \(\sigma \) is not the oldest cofacet of \(\phi \), and so \((\phi , \sigma )\) is not an apparent pair. We conclude that no simplex appears in more than one apparent pair, i.e., the apparent pairs define a discrete vector field.

To show that this discrete vector field is a gradient, let \(\sigma _1, \dots , \sigma _m\) be the simplices of K in filtration order, and consider the function

To verify that f is a discrete Morse function, first note that \(f(\sigma _k) \le k\). Now let \(\sigma _i\) be a facet of \(\sigma _j\). Then \(i < j\). If \((\sigma _i, \sigma _j)\) is not an apparent pair, we have \(f(\sigma _i) \le i < j = f(\sigma _j) \). On the other hand, if \((\sigma _i, \sigma _j)\) is an apparent pair, then \(\sigma _i\) is the youngest facet of \(\sigma _j\), i.e., \(k \le i\) for every facet \(\sigma _k\) of \(\sigma _j\), and thus \(f(\sigma _k) \le k \le i = f(\sigma _j)\), with equality holding if and only if \(i = k\). We conclude that f is a discrete Morse function whose sublevel set filtration is refined by K and whose gradient pairs are exactly the apparent pairs of the filtration. \(\square \)

For the previous example of a simplexwise filtration obtained fron the vertices of a rectangle, the resulting discrete Morse function as constructed in the above proof is shown in the following table.

The following lemma is a partial converse of the above Lemma 3.5, showing that the gradient pairs of a discrete Morse function form a subset of the apparent pairs.

Lemma 3.6

Let f be a discrete Morse function, and let \(K_\bullet \) be a simplexwise refinement of the sublevel set filtration \(F_\bullet = K_\bullet \circ r\) for f. Then the gradient pairs of f are precisely the zero persistence apparent pairs of \(K_\bullet \).

Proof

Any 0-persistence pair \((\sigma ,\tau )\) satisfies \(f(\sigma )=f(\tau )\) and thus, by definition of a discrete Morse function, forms a gradient pair of f.

Conversely, any gradient pair \((\sigma ,\tau )\) of f satisfies \(f(\sigma )=f(\tau )\) and \(f(\rho )<f(\tau )\) for any facet \(\rho \ne \sigma \) of \(\tau \), and similarly, \(f(\upsilon )>f(\tau )\) for any cofacet \(\rho \ne \tau \) of \(\sigma \). Thus, in any simplexwise refinement of the sublevel set filtration, \(\sigma \) is the youngest facet of \(\tau \), and \(\tau \) is the oldest cofacet of \(\sigma \). This means that \((\sigma ,\tau )\) is an apparent zero persistence pair. \(\square \)

Note that there might be apparent pairs of nonzero persistence. In particular, starting with a discrete Morse function f and constructing another discrete Morse function \(\tilde{f}\) from f as in the proof of Lemma 3.5, the gradient pairs of f form a subset of the gradient pairs of \(\tilde{f}\). In particular, the nonzero persistence apparent pairs of f will be gradient pairs of \(\tilde{f}\), but not of f.

Remark 3.7

The notion of apparent pairs generalizes to the setting of algebraic Morse theory (Jöllenbeck and Welker 2009; Kozlov 2005; Sköldberg 2006) in a straightforward way (Lampret 2020). In this setting, one considers a finitely generated free chain complex of free modules \(C_{d}\) over some ring R, equipped with a fixed ordered basis \(\Sigma _d\), which is assumed to induce a filtration by subcomplexes. In this setting, the facet and cofacet relations are defined by the more general condition that two basis elements \(\sigma \in \Sigma _d\) and \(\tau \in \Sigma _{d+1}\) have a nonzero coefficient in the boundary matrix for \(\partial _{d+1}\). In addition, an algebraic apparent pair \((\sigma ,\tau )\) is required to satisfy the additional condition that this boundary coefficient is a unit in R. Similarly to the simplicial setting, in this case \(\sigma \) cannot appear in another apparent pair \((\phi , \sigma )\) for any facet \(\phi \) of \(\sigma \). To see this, note that again there has to be another basis element \(\rho \ne \sigma \in \Sigma _d\) that is also a facet of \(\tau \) and a cofacet of \(\phi \); if \(\sigma \) were the only such basis element, then the coefficient of \((\phi , \tau )\) in the matrix of \(\partial _{q}\circ \partial _{q+1}\) would be nonzero, which is excluded by the chain complex property. The above Lemmas 3.3, 3.5 and 3.6 therefore hold in this generalized setting as well. Note however that in this case, apparent pairs are no longer guaranteed to be universal persistence pairs, as the condition defining the apparent pairs now depends of the choice of coefficients. When working with \(\mathbb Z\) as the coefficient ring, apparent pairs are universal persistence pairs in the sense of 3.4.

Lexicographic discrete gradients The construction proposed by Kahle (2011) for a discrete gradient \(V_L\) on a simplicial complex K from a total vertex order can be understood as a special case of the apparent pairs gradient. The definition of the gradient \(V_L\) is as follows. Consider the vertices \(v_1, \dots v_n\) of the simplicial complex K in some fixed total order. Whenever possible, pair a simplex \(\sigma = \{v_{i_d}, \dots v_{i_1}\}\), \(i_d> \dots > i_1\), with the simplex \(\tau = \{v_{i_d}, \dots v_{i_1},v_{i_0}\}\) for which \(i_0 < i_1\) is minimal. These pairs \((\sigma ,\tau )\) form a discrete gradient (Kahle 2011), which we call the lexicographic gradient.

We illustrate how this gradient \(V_L\) can be considered as a special case of our definition of apparent pairs for the lexicographic filtration of K, where the simplices are ordered by dimension, and simplices of the same dimension are ordered lexicographically according to the chosen vertex order.

Lemma 3.8

The lexicographic gradient \(V_L\) is the apparent pairs gradient of the lexicographic filtration.

Proof

To see that any pair \((\sigma ,\tau )\) in \(V_L\) is an apparent pair, observe that \(i_0\) is chosen such that \(\tau \) is the lexicographically smallest cofacet of \(\sigma \). Moreover, \(\sigma \) is clearly the lexicographically largest facet of \(\tau \).

Conversely, assume that \((\sigma ,\tau )\) is an apparent pair for the lexicographic filtration. Let \(\tau = \{v_{i_d}, \dots , v_{i_1},v_{i_0}\}\). Then \(\sigma = \{v_{i_d}, \dots , v_{i_1}\}\) is the lexicographically largest facet of \(\tau \), and we have \(i_0 < i_1\). Moreover, since \(\tau \) is the lexicographically smallest cofacet of \(\sigma \), the index \(i_0\) is minimal among all indices i such that \(\{v_{i_d}, \dots , v_{i_1},v_{i_0}\}\) forms a simplex. \(\square \)

Vietoris–Rips filtrations Having discussed the Morse-theoretic interpretation of apparent pairs, we now illustrate their relevance for the computation of persistence. Focusing on the lexicographically refined Rips filtration, we first describe the apparent pair of persistence zero in a way that is suitable for computation.

Proposition 3.9

Let \(\sigma , \tau \) be simplices in the lexicographically refined Rips filtration. Then \((\sigma , \tau )\) is a zero persistence apparent pair if and only if

-

\(\tau \) is the lexicographically maximal cofacet of \(\sigma \) such that \({{\,\mathrm{diam}\,}}(\tau ) = {{\,\mathrm{diam}\,}}(\sigma )\), and

-

\(\sigma \) is the lexicographically minimal facet of \(\tau \) such that \({{\,\mathrm{diam}\,}}(\tau ) = {{\,\mathrm{diam}\,}}(\sigma )\).

Proof

The two conditions hold for any zero persistence apparent pair by definition. It remains to show that the two conditions also imply that \((\sigma , \tau )\) is an apparent pair. Recall that in the lexicographically refined Rips filtration, simplices are sorted by diameter, then by dimension, and then in (reverse) lexicographic order. The simplex \(\tau \) must be the oldest cofacet of \(\sigma \) in the filtration order: no cofacet of \(\sigma \) can have a diameter less than \({{\,\mathrm{diam}\,}}\tau = {{\,\mathrm{diam}\,}}\sigma \), and among the cofacets of \(\sigma \) with the same diameter as \(\tau \), the lexicographically maximal cofacet \(\tau \) is the oldest one by construction of the lexicographic filtration order. Similarly, \(\sigma \) must be the oldest cofacet of \(\sigma \) in the filtration order. \(\square \)

As it turns out, a large portion of all persistence pairs arising in the persistence computation for Rips filtrations can be found this way, and the savings from not having to enumerate all cofacets are substantial. The following theorem gives a partial explanation of this observation, for generic finite metric spaces and persistence in dimension 1.

To set the stage, note that in a a simplexwise refinement of a Vietoris–Rips filtration, any apparent pair, and more generally, any emergent facet pair \((\sigma ,\tau )\) of dimensions \((k,k+1)\) with \(k \ge 1\) necessarily has persistence zero, as the diameter of \(\tau \) equals the maximal diameter of its facets, which by definition is the diameter of \(\sigma \). The following theorem establishes a partial converse to this fact in dimension 1 and under a certain genericity assumption on the metric space.

Theorem 3.10

Let (X, d) be a finite metric space with distinct pairwise distances, and let \(K_\bullet \) be a simplexwise refinement of the Vietoris–Rips filtration for X. Among the persistence pairs of \(K_\bullet \) in dimension 1, the zero persistence pairs are precisely the apparent pairs.

Proof

As noted above, every apparent pair is a zero persistence pair. Conversely, let \((\sigma ,\tau )\) be a zero persistence pair of dimensions (1, 2). Since the edge diameters are assumed to be distinct, the edge \(\sigma \) must be the youngest facet of \(\tau \) in \(K_\bullet \). Now let \(\psi \) be the oldest cofacet of \(\sigma \). We then have \({{\,\mathrm{diam}\,}}(\sigma ) \le {{\,\mathrm{diam}\,}}(\psi ) \le {{\,\mathrm{diam}\,}}(\tau )\), and since \((\sigma ,\tau )\) is a zero persistence pair, this implies \({{\,\mathrm{diam}\,}}(\sigma ) = {{\,\mathrm{diam}\,}}(\psi ) = {{\,\mathrm{diam}\,}}(\tau )\). Since pairwise distances are assumed to be distinct, any edge \(\rho \subset \psi \), \(\rho \ne \sigma \) must satisfy \({{\,\mathrm{diam}\,}}(\rho ) < {{\,\mathrm{diam}\,}}(\psi )\). This implies that \(\sigma \) has to be the youngest facet of \(\psi \). Hence, \((\sigma ,\psi )\) is an apparent pair. But since \((\sigma ,\tau )\) is assumed to be a persistence pair, we conclude with Lemma 3.3 that \(\psi = \tau \), and so \((\sigma ,\tau )\) is an apparent pair. \(\square \)

This result implies that every column in the filtration 1-coboundary matrix that is not amenable to the emergent shortcut described in Sect. 3.5 actually corresponds to a proper interval in the Vietoris–Rips barcode. In other words, the zero persistence pairs of the simplexwise refinement do not incur any computational cost in the matrix reduction.

Emergent persistence pairs The definition of apparent pairs generalizes to the persistence pairs in a simplexwise filtration that become apparent in the reduced matrix during the matrix reduction, and are therefore called emergent pairs. They come in two flavors.

Definition 3.11

Consider a simplexwise filtration \((K_i)_{i \in I}\) of a finite simplicial complex K. A persistence pair \((\sigma ,\tau )\) of this filtration is an emergent facet pair if \(\sigma \) is the youngest facet of \(\tau \), and an emergent cofacet pair if \(\tau \) is the oldest cofacet of \(\sigma \).

In other words, \((\sigma ,\tau )\) is an emergent facet pair if the column of \(\tau \) in the filtration boundary matrix is reduced, and an emergent cofacet pair if the column of \(\sigma \) in the coboundary matrix is reduced. Thus, the columns corresponding to emergent pairs are precisely the ones that are unmodified by the matrix reduction algorithm.

Note that \((\sigma ,\tau )\) is an apparent pair if and only if it is both an emergent facet pair and an emergent cofacet pair. In contrast to the notion of an apparent pair, however, the property of being an emergent pair does depend on the choice of the coefficient field.

Proposition 3.12

Let \(\sigma , \tau \) be simplices in the lexicographically refined Rips filtration. Then \((\sigma , \tau )\) is a zero persistence emergent cofacet pair if and only if

-

\(\tau \) is the lexicographically maximal cofacet of \(\sigma \) such that \({{\,\mathrm{diam}\,}}(\tau ) = {{\,\mathrm{diam}\,}}(\sigma )\), and

-

\(\tau \) does not form a persistence pair \((\rho , \tau )\) with any simplex \(\rho \) younger than \(\sigma \).

A dual statement holds for emergent facet pairs.

Proof

Similar to Proposition 3.9, both conditions hold for any zero persistence emergent cofacet pair, and it remains to show that the conditions imply that \((\sigma , \tau )\) is an emergent cofacet pair. Again, the first condition implies that \(\tau \) is the oldest cofacet of \(\sigma \) in the filtration order. Now if \(\tau \) is not paired with some younger simplex \(\rho \), we conclude from Proposition 3.1 that \((\sigma , \tau )\) forms a persistence pair, which is then an emergent cofacet pair. \(\square \)

Shortcuts for apparent and emergent pairs In practice, zero persistence apparent and emergent pairs provide a way to identify persistence pairs \((\sigma , \tau )\) without even enumerating all cofacets of the simplex \(\sigma \), and thus without fully constructing the coboundary matrix for the reduction algorithm.

We first describe how to determine whether a simplex \(\sigma \) appears in an apparent pair \((\sigma , \tau )\). Following Proposition 3.9, enumerate the cofacets of \(\sigma \) in reverse lexicographic order until encountering the first cofacet \(\tau \) with the same diameter as \(\sigma \). Subsequently, enumerate the facets of \(\tau \) in forward lexicographic order, until encountering the first facet of \(\tau \) with the same diameter as \(\tau \). Now \((\sigma ,\tau )\) is an apparent pair if and only if that facet equals \(\sigma \). No further cofacets of \(\sigma \) need to be enumerated; this is the mentioned shortcut. This way, we can determine for a given simplex \(\sigma \) whether it appears in an apparent pair \((\sigma ,\tau )\), and identify the corresponding cofacet \(\tau \). By an analogous strategy, we can also determine whether a simplex \(\tau \) appears in an apparent pair \((\sigma ,\tau )\), and identify the corresponding facet \(\sigma \).

For the emergent pairs, recall that the columns of the coboundary filtration matrix are processed in reverse filtration order, from youngest to oldest simplex. Assume that D is the filtration k-coboundary matrix, corresponding to the coboundary operator \(\delta _k :C^k(K) \rightarrow C^{k+1}(K)\). Let \(\sigma \) be the current simplex whose column is to be reduced, i.e., the columns of the matrix \(R = D \cdot V\) corresponding to younger simplices are already reduced, while the current column is still unmodified, containing the coboundary of \(\sigma \). Following Lemma 3.12, enumerate the cofacets of the simplex \(\sigma \) in reverse lexicographic order. When we encounter the first cofacet \(\tau \) with the same diameter as \(\sigma \), we know that \(\tau \) is the youngest cofacet of \(\sigma \). Equivalently, the index of \(\tau \) is the pivot index of the column for \(\sigma \) in the coboundary matrix. Up to this point, all persistence pairs \((\rho , \phi )\) with simplices \(\rho \) younger than \(\sigma \) have been identified already, since the algorithm processes simplices from youngest to oldest. Thus, if \(\tau \) has not previously been paired with any simplex, we conclude from Proposition 3.1 that \((\sigma , \tau )\) is an emergent cofacet pair. Again, no further cofacets of \(\sigma \) need to be enumerated, shortcutting the construction of the coboundary column for \(\sigma \).

4 Implementation

We now discuss the main data structures and the relevant implementation details of Ripser, the C++ implementation of the algorithms discussed in this paper. The code is licensed under the MIT license and available at www.ripser.org. The development of Ripser started in October 2015, with support for the emergent pairs shortcut added in March 2016, and support for sparse distance matrices added in September 2016. The first version of Ripser has been released in July 2016. The version discussed in the present article (v1.2.1) has been released in March 2021.

Input The input to Ripser is a finite metric space (X, d), encoded in a comma (or whitespace, or other non-numerical character) separated list as either a distance matrix (full, lower, or upper triangular part), or as a list of points in some Euclidean space ( ), from which a distance matrix is constructed. The data type for distance values and coordinates is a 32 bit floating point number (

), from which a distance matrix is constructed. The data type for distance values and coordinates is a 32 bit floating point number ( ). There are two data structures for storing distance matrices:

). There are two data structures for storing distance matrices:  is used for dense distance matrices, storing the entries of the lower (or upper) triangular part of the distance matrix in a

is used for dense distance matrices, storing the entries of the lower (or upper) triangular part of the distance matrix in a  , sorted lexicographically by row index, then column index. The adjacency list data structure

, sorted lexicographically by row index, then column index. The adjacency list data structure  is used when the persistence barcode is computed only up to a specified threshold, storing only the distances below that threshold. If no threshold is specified, the minimum enclosing radius

is used when the persistence barcode is computed only up to a specified threshold, storing only the distances below that threshold. If no threshold is specified, the minimum enclosing radius

of the input is used as a threshold, as suggested by ? ] and implemented in Eirene. Above that threshold, for any point \(x \in X\) minimizing the above formula for the enclosing radius, the Vietoris–Rips complex is a simplicial cone with apex x, and so the homology remains trivial afterwards.

Vertices and simplices Vertices are identified with natural numbers \(\{0, \dots , n-1\}\), where n is the cardinality of the input space. Simplices are indexed by natural numbers according to the combinatorial number system. The data type for both is  , which is defined as a 64 bit signed integer (

, which is defined as a 64 bit signed integer ( ). The dimension of a simplex is not encoded explicitly, but passed to methods as an extra parameter.

). The dimension of a simplex is not encoded explicitly, but passed to methods as an extra parameter.

The enumeration of the vertices of a simplex encoded in the combinatorial number system can be performed efficiently in decreasing order of the vertices. It is based on the following simple observation.

Lemma 4.1

Let N be the number of a d-simplex \(\sigma \) in the combinatorial number system with vertex indices \(i_d> \dots > i_0\). Then the largest vertex index of \(\sigma \) is \(i_d = \max \left\{ i\in \mathbb N \mid {i \atopwithdelims ()d+1} \le N\right\} .\)

Proof

First note that \({i_d \atopwithdelims ()d+1}\) is a summand of \(N=\sum _{l=0}^{d}{i_l \atopwithdelims (){l+1}}\), and so we have

For the maximality of \(i_d\), note that \(\sigma \) is a d-simplex on the vertex set \(\{0, \dots , i_d\}\). In total, there are \({i_d+1 \atopwithdelims ()d+1}\) d-simplices on that vertex set, indexed in the combinatorial number system with the numbers \(0,\dots ,{i_d+1 \atopwithdelims ()d+1}-1\). Thus, we have

\(\square \)

Using this lemma, the largest vertex index of a simplex can be found by performing a binary search, implemented in the method  . Moreover, the second largest vertex index, \(i_{d-1}\), equals the largest vertex index of the simplex with vertex indices \((i_d,\dots ,i_0)\), which has number \(N - {i_d \atopwithdelims ()d+1}\). This way, the numbers of any simplex can be computed by iteratively applying the above lemma. This is implemented in the method

. Moreover, the second largest vertex index, \(i_{d-1}\), equals the largest vertex index of the simplex with vertex indices \((i_d,\dots ,i_0)\), which has number \(N - {i_d \atopwithdelims ()d+1}\). This way, the numbers of any simplex can be computed by iteratively applying the above lemma. This is implemented in the method  .

.

The binary search for the maximal vertex of a simplex is implemented in  . The requisite computation of binomial coefficients is done in advance and stored in a lookup table (

. The requisite computation of binomial coefficients is done in advance and stored in a lookup table ( ).

).

Enumerating cofacets and facets of a simplex The columns of the coboundary matrix are computed by enumerating the cofacets (implemented in the class  ). There are two implementations, one for sparse and one for dense distance matrices. For the enumeration of facets there is only one implementation (implemented in the class

). There are two implementations, one for sparse and one for dense distance matrices. For the enumeration of facets there is only one implementation (implemented in the class  ), as every facet of a simplex has to be contained in the filtration by the property that a simplicial complex is closed under the fact relation.

), as every facet of a simplex has to be contained in the filtration by the property that a simplicial complex is closed under the fact relation.

For sparse distance matrices with a distance threshold t ( ), the cofacets of a simplex are obtained by taking the intersection of the neighbor sets for the vertices of the simplex,

), the cofacets of a simplex are obtained by taking the intersection of the neighbor sets for the vertices of the simplex,

More specifically, the distances are stored in an adjacency list data structure, maintaining for every vertex an ordered list of its neighbors together with the corresponding distance, sorted by the indices of the neighbors ( ). The enumeration of cofacets of a given simplex (

). The enumeration of cofacets of a given simplex (

) searches through the adjacency lists for the vertices of the simplex for common neighbors (in the method (

) searches through the adjacency lists for the vertices of the simplex for common neighbors (in the method ( ). Any common neighbor then gives rise to a cofacet of the simplex.

). Any common neighbor then gives rise to a cofacet of the simplex.

For dense distance matrices, the following straightforward method is used to enumerate the cofacets of a d-simplex \(\sigma = \{v_{i_{d}},\dots ,v_{i_0}\}\) in reverse colexicographic order, represented by their indices in the combinatorial number system. Enumerating \(j = n - 1 , \dots , 0\), for each \(j \notin \{i_{d},\dots , i_0 \}\) there is a unique subindex \(k \in \{0,\dots , d\}\) with \(i_{k+1}> j > i_{k}\); the corner cases \(j > i_{d}\) and \(i_0 > j\) are covered by letting \(i_{d+1} = n\) and \(i_{-1}=-1\). For the corresponding kth cofacet of \(\sigma \) we obtain the number

Thus, the cofacets of \(\sigma \) can easily be enumerated in reverse colexicographic vertex order by maintaining and updating the values of the two partial sums appearing in the above equation, starting from the number for the simplex \(\sigma \), starting from the value 0 for the left partial sum ( ) and the number for the simplex \(\sigma \) for the right partial sum (

) and the number for the simplex \(\sigma \) for the right partial sum ( ). The coboundary coefficient of the corresponding cofacet is \((-1)^k\).

). The coboundary coefficient of the corresponding cofacet is \((-1)^k\).

Returning to the example from Sect. 2, the cofacets of the simplex \(\{v_5,v_3,v_0\}\) in the full simplex on seven vertices \(\{v_0,\dots ,v_6\}\) are, in reverse colexicographic vertex order, the simplices with numbers

The facets of a d-simplex \(\sigma = \{v_{i_{d}},\dots ,v_{i_0}\}\) can be enumerated in a similar way, this time in forward colexicographic vertex order (implemented in the class  ). Enumerating \(k = d + 1 , \dots , 1, 0\) and letting \(j=i_k\), for the corresponding kth facet of \(\sigma \) we obtain the number

). Enumerating \(k = d + 1 , \dots , 1, 0\) and letting \(j=i_k\), for the corresponding kth facet of \(\sigma \) we obtain the number

The facets of \(\sigma \) can easily be enumerated in (forward) colexicographic vertex order by maintaining and updating the values of the two partial sums appearing in the above equation, starting from the value 0 for the left partial sum ( ) and the number for the simplex \(\sigma \) for the right partial sum (

) and the number for the simplex \(\sigma \) for the right partial sum ( ).

).

Assembling column indices of the filtration coboundary matrix The above methods for enumerating cofacets of a simplex are also used to enumerate the d-simplices corresponding to the columns in the filtration coboundary matrix, i.e., the essential simplices and the death simplices for relative cohomology (in the method  ). A list of the \((d-1)\)-simplices is passed as an argument. Instead of enumerating all cofacets of each \((d-1)\)-simplex \(\sigma = \{v_{i_{d-1}},\dots ,v_{i_0}\}\) in the list of \((d-1)\)-simplices, only the cofacets of the form \((v_j, v_{i_{d-1}}, \dots , v_{i_0})\) with \(j>i_{d-1}\) are enumerated (using the method

). A list of the \((d-1)\)-simplices is passed as an argument. Instead of enumerating all cofacets of each \((d-1)\)-simplex \(\sigma = \{v_{i_{d-1}},\dots ,v_{i_0}\}\) in the list of \((d-1)\)-simplices, only the cofacets of the form \((v_j, v_{i_{d-1}}, \dots , v_{i_0})\) with \(j>i_{d-1}\) are enumerated (using the method  with parameter

with parameter  set to

set to  ). This way, each d-simplex \((v_{i_{d}}, \dots , v_{i_0})\) is enumerated exactly once, as a cofacet of \((v_{i_{d-1}}, \dots , v_{i_0})\).

). This way, each d-simplex \((v_{i_{d}}, \dots , v_{i_0})\) is enumerated exactly once, as a cofacet of \((v_{i_{d-1}}, \dots , v_{i_0})\).

Since version 1.2, Ripser only assembles column indices that do not correspond to simplices appearing in a zero persistence apparent pair. This strategy, adopted from the parallel GPU implementation of Ripser by Zhang et al. (2020), leads to another significant improvement both in memory usage and in computation time. The method  ) checks whether a simplex has a zero persistence apparent cofacet or facet. Since low-dimensional simplices in Vietoris–Rips filtration more frequently have apparent cofacets than apparent facets, the check for cofacets is carried out first. To check for a zero persistence apparent cofacet of a simplex (

) checks whether a simplex has a zero persistence apparent cofacet or facet. Since low-dimensional simplices in Vietoris–Rips filtration more frequently have apparent cofacets than apparent facets, the check for cofacets is carried out first. To check for a zero persistence apparent cofacet of a simplex ( ), following Proposition3.9, the method

), following Proposition3.9, the method  searches for the lexicographically maximal cofacet with the same diameter as the simplex, and then in turn

searches for the lexicographically maximal cofacet with the same diameter as the simplex, and then in turn  searches for its lexicographically minimal facet with the same diameter. If that facet is the initial simplex, a zero apparent pair is found. Checking for an apparent facet (

searches for its lexicographically minimal facet with the same diameter. If that facet is the initial simplex, a zero apparent pair is found. Checking for an apparent facet ( ) works analogously.

) works analogously.

Coefficients Ripser supports the computation of persistent homology with coefficients in a prime field \(\mathbb {F}_p\), for any prime number \(p < 2^{16}\). The support for coefficients in as prime field can be enabled or disabled by setting a compiler flag ( ). The data type for coefficients is

). The data type for coefficients is  , which is defined as a 16 bit unsigned integer (

, which is defined as a 16 bit unsigned integer ( ), admitting fast multiplication without overflow on 64 bit architectures. Fast division in modular arithmetic is obtained by precomputing the multiplicative inverses of nonzero elements of the field (in the method

), admitting fast multiplication without overflow on 64 bit architectures. Fast division in modular arithmetic is obtained by precomputing the multiplicative inverses of nonzero elements of the field (in the method  ).

).

Column and matrix data sutrctures The basic data type for entries in a ( ) boundary or coefficient matrix is a tuple consisting of a simplex index (

) boundary or coefficient matrix is a tuple consisting of a simplex index ( ), a floating point value (

), a floating point value ( ) caching the diameter of the simplex with that index, and a coefficient (

) caching the diameter of the simplex with that index, and a coefficient ( ) if coefficients are enabled. The type

) if coefficients are enabled. The type  thus represents a scalar multiple of an oriented d-simplex, seen as basis element of the cochain vector space \(C^d(K)\). If support for coefficients is enabled, the index (48 bit) and the coefficient (16 bit) are packed into a single 64 bit word (using

thus represents a scalar multiple of an oriented d-simplex, seen as basis element of the cochain vector space \(C^d(K)\). If support for coefficients is enabled, the index (48 bit) and the coefficient (16 bit) are packed into a single 64 bit word (using  ). The actual number of bits used for the coefficients can be adjusted by changing

). The actual number of bits used for the coefficients can be adjusted by changing  , in order to accommodate a larger number of possible simplex indices.

, in order to accommodate a larger number of possible simplex indices.

The reduction matrix V used in the persistence computation is represented as a list of columns in a sparse matrix format ( ), with each column storing only a collection of nonzero entries, encoding a linear combination of the basis elements for the row space. The diagonal entries of V are always 1 and are therefore not stored explicitly in the data structure. Note that the rows and columns of V are not indexed by the combinatorial number system, but by a prefix of the natural numbers corresponding to a filtration-ordered list of boundary columns (

), with each column storing only a collection of nonzero entries, encoding a linear combination of the basis elements for the row space. The diagonal entries of V are always 1 and are therefore not stored explicitly in the data structure. Note that the rows and columns of V are not indexed by the combinatorial number system, but by a prefix of the natural numbers corresponding to a filtration-ordered list of boundary columns ( ).

).

Pivot extraction During the matrix reduction, the current working columns \(V_j\) and \(R_j\) on which column operations are performed ( and

and  ) are maintained as binary heaps (

) are maintained as binary heaps (

) with value type

) with value type  , using a comparison function object to specify the ordering of the heap elements (

, using a comparison function object to specify the ordering of the heap elements (

) in reverse filtration order (of the simplices in the lexicographically refined Rips filtration), thus providing fast access to the pivot entry of a column.

) in reverse filtration order (of the simplices in the lexicographically refined Rips filtration), thus providing fast access to the pivot entry of a column.

A heap encodes a column vector as a sum of scalar multiples of the row basis elements, the summands being encoded in the data type  . The heap may actually contain several entries with the same row index, and should thus be considered as a lazily evaluated representation of a formal linear combination. In particular, the pivot entry of the column is obtained (in the method