Abstract

In 1925, the American entomologist Walter Sidney Abbott proposed an equation for assessing efficacy that is still widely used today for analysing controlled experiments in crop protection and phytomedicine. Typically, this equation is applied to each experimental unit, and the resulting efficacy estimates are then used in analysis of variance and least squares regression procedures. However, these estimates often violate the common assumptions of homogeneity of variance and normality. In this tutorial paper, we therefore revisit Abbott’s equation and outline an alternative route to analysis via generalized linear mixed models that can satisfactorily deal with these distributional issues. Nine examples from entomology, weed science and phytopathology, each with a different focus and methodological peculiarity, are used to illustrate the framework.

Introduction

In 1925, almost 100 years ago, the American entomologist Walter Sidney Abbott proposed a method to assess the efficacy (or effectiveness) of an insecticide treatment that takes into account the number of natural deaths of insects using results from a control plot. His corrected mortality is given by
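\[ \varepsilon = \frac{C - T}{C} \times 100\% = \left( 1 - \frac{T}{C} \right) \times 100\% \tag{1} \]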
where \(C\) is the observed proportion of surviving insects in the control plot and \(T\) is the observed proportion of surviving insects in the treatment plot (Abbott 1925). This corrected mortality is a common measure of efficacy. The rationale of the correction is that \(C - T\) is the observed proportion of insects killed by the treatment, which can be expressed as a proportion of the natural survival rate \(C\). We note in passing that, without loss of generality, the multiplication by 100% in Eq. (1) can be omitted, rendering the efficacy a proportion rather than a percentage. We will henceforth omit this multiplication for simplicity.
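As a minimal illustration of Eq. (1), the Python sketch below computes Abbott's corrected efficacy from survival proportions and, for comparison, from mortalities using the standard equivalent form \((M_{t} - M_{c})/(1 - M_{c})\) with \(M = 1 - \text{survival}\). We assume, but have not checked, that this matches the mortality form given in the Appendix; the function names and input values are hypothetical.

```python
def abbott_efficacy(c_surv, t_surv):
    """Abbott's efficacy, Eq. (1) without the factor 100%.

    c_surv : proportion of insects surviving in the control (C)
    t_surv : proportion of insects surviving in the treatment (T)
    """
    if not 0.0 < c_surv <= 1.0:
        raise ValueError("control survival C must lie in (0, 1]")
    return (c_surv - t_surv) / c_surv


def abbott_from_mortality(m_c, m_t):
    """Equivalent form in terms of mortalities M = 1 - survival."""
    return (m_t - m_c) / (1.0 - m_c)


# Hypothetical proportions: 90% survive in the control, 30% in the treatment
print(round(abbott_efficacy(0.9, 0.3), 4))        # 0.6667
print(round(abbott_from_mortality(0.1, 0.7), 4))  # 0.6667
```

Both forms give the same value because \(C = 1 - M_{c}\) and \(T = 1 - M_{t}\).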

Abbott’s formula has subsequently been applied in many publications, not only in entomology (Fleming and Retnakaran 1985; Rosenheim and Hoy 1989; Oplos et al. 2018), but also in weed science (Singh et al. 2021; Nath et al. 2022; Parkash et al. 2022), plant pathology (Samoucha and Cohen 1986; Aamlid et al. 2017), and other disciplines (Karunarathne et al. 2022). It is also discussed in classical statistical textbooks on bioassay such as Schneider-Orelli (1947, p. 205), Finney (1964, p. 564; 1971, p. 125), Bliss (1967, p. 75) and Püntener (1981, p. 36). While Eq. (1) is very frequently used in practice, it is not always referred to as Abbott’s formula, and it is sometimes presented in terms of mortality (see ‘Appendix’) rather than survival as in Eq. (1). Finney (1964, p. 564) argues that ‘this formula for calculating the response rate attributable to the stimulus from the total response rate is familiar to workers with insecticides under the name Abbott’s Formula […], but its basis is so simple that no special name is necessary’. Abbott’s original proposal was made in terms of binomial proportions for \(C\) and \(T\), but Eq. (1) can also be applied to other data on fitness for \(C\) and \(T\), such as biomass, abundance counts or survival times, as will be illustrated in this paper.

In blocked experiments, it is quite customary to compute Eq. (1) for each treatment and block and then conduct an analysis of variance (Common Approach 1; see Example 1). While this approach is easy to implement, it is important to appreciate that it may entail problems in statistical inference, because calculations involve a ratio of random variables \(\left( {T/C} \right)\). It is well known that to a first-order approximation using the delta method (Johnson et al. 1993, p. 55)
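\[ E\left( \frac{T}{C} \right) \approx \frac{\mu_{t}}{\mu_{c}} - \frac{\text{cov}\left( C,T \right)}{\mu_{c}^{2}} + \frac{\mu_{t}\,\text{var}\left( C \right)}{\mu_{c}^{3}} \tag{2} \]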
where \(C\) and \(T\) are regarded as random variables, \(E\left( \cdot \right)\) denotes the mean (expected value) of a random variable, \(\mu_{c} = E\left( C \right)\) and \(\mu_{t} = E\left( T \right)\) are the means of \(C\) and \(T\), respectively, \(c\) and \(t\) index the control and treatment, respectively, \({\text{var}} \left( C \right)\) is the variance of \(C\), and \({\text{cov}} \left( {C,T} \right)\) is the covariance of \(C\) and \(T\). It may be argued that corrected mortality should be defined in terms of the ratio of the means (expected values) of \(C\) and \(T\), \(\mu_{t}/\mu_{c}\). Equation (2) suggests, however, that the observed ratio \(T/C\) is a biased estimator of the corresponding ratio of means, \(\mu_{t}/\mu_{c}\), especially when calculated per block. In particular, according to Eq. (2), assuming independence of \(C\) and \(T\), the ratio \(T/C\) is an upwardly biased estimator of \(\mu_{t}/\mu_{c}\), the more so the larger the variance \({\text{var}} \left( C \right)\) and the smaller the mean \(\mu_{c}\). If \(C\) and \(T\) are correlated, the bias also depends on their covariance \({\text{cov}} \left( {C,T} \right)\). There is a similar equation for the approximate variance of \(T/C\) (Johnson et al. 1993, p. 55),
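\[ \text{var}\left( \frac{T}{C} \right) \approx \frac{\mu_{t}^{2}\,\text{var}\left( C \right)}{\mu_{c}^{4}} + \frac{\text{var}\left( T \right)}{\mu_{c}^{2}} - \frac{2\mu_{t}\,\text{cov}\left( C,T \right)}{\mu_{c}^{3}} \tag{3} \]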
suggesting that such ratios will also show heterogeneity of variance between treatments, even if homogeneity of variance can be assumed for \(C\) and \(T\). For example, assume that \({\text{var}} \left( T \right)\) is the same for all treatments and \({\text{cov}} \left( {C,T} \right) = 0\). In this case, \({\text{var}} \left( T/C \right) \approx \mu_{t}^{2} {\text{var}} \left( C \right)/\mu_{c}^{4} + {\text{var}} \left( T \right)/\mu_{c}^{2}\), which varies between treatments whenever their means \(\mu_{t}\) differ, even though \({\text{var}} \left( T \right)\) is the same for all treatments.
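A quick Monte Carlo check, under assumptions of our own, illustrates the bias described by Eq. (2) and the variance approximation in Eq. (3). \(C\) is drawn from a gamma distribution (with a normal denominator, \(T/C\) would have no finite mean) and \(T\) from an independent normal distribution. All parameter values are hypothetical.

```python
import random
import statistics

random.seed(1)

# Hypothetical setting: independent C and T, so cov(C, T) = 0
mu_c, sd_c = 0.5, 0.1    # C ~ gamma with mean 0.5 and SD 0.1 (negligible mass near 0)
mu_t, sd_t = 0.25, 0.05  # T ~ normal with mean 0.25 and SD 0.05
alpha = (mu_c / sd_c) ** 2   # gamma shape
beta = sd_c ** 2 / mu_c      # gamma scale, so that alpha * beta = mu_c

n_sim = 100_000
ratios = [random.gauss(mu_t, sd_t) / random.gammavariate(alpha, beta)
          for _ in range(n_sim)]

mc_mean = statistics.fmean(ratios)
mc_var = statistics.variance(ratios)

# Approximations from Eq. (2) and Eq. (3) with cov(C, T) = 0
approx_mean = mu_t / mu_c + mu_t * sd_c ** 2 / mu_c ** 3
approx_var = mu_t ** 2 * sd_c ** 2 / mu_c ** 4 + sd_t ** 2 / mu_c ** 2

print(f"ratio of means      {mu_t / mu_c:.4f}")
print(f"mean of T/C (sim.)  {mc_mean:.4f}   Eq. (2): {approx_mean:.4f}")
print(f"var of T/C (sim.)   {mc_var:.4f}   Eq. (3): {approx_var:.4f}")
```

With these settings the simulated mean of \(T/C\) exceeds the ratio of means, 0.5, by roughly 0.02, in line with Eq. (2). Eq. (3) reproduces the simulated variance only approximately.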

A further common approach (Common Approach 2) is to compute the mean of all observations for the control and use this for \(C\) in Eq. (1). This somewhat alleviates the bias issue because the variance of \(C\) in Eq. (2) is reduced, but the heterogeneity of variance problem, reflected in Eq. (3), remains unabated. Moreover, this method does not properly account for any block effects.
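To make the two Common Approaches concrete, the sketch below applies them to hypothetical counts for one treatment and the control in four blocks (not data from this paper) and compares them with Eq. (4) evaluated at simple arithmetic means.

```python
import statistics

# Hypothetical weed counts in four blocks (not data from this paper)
control = [40, 12, 25, 60]
treated = [10, 9, 5, 18]

# Common Approach 1: Abbott's formula per block, then averaged over blocks
per_block = [1 - t / c for t, c in zip(treated, control)]
ca1 = statistics.fmean(per_block)

# Common Approach 2: each treated plot against the overall control mean
c_bar = statistics.fmean(control)
ca2 = statistics.fmean(1 - t / c_bar for t in treated)

# Eq. (4) with simple arithmetic means plugged in for mu_t and mu_c
ratio_of_means = 1 - statistics.fmean(treated) / c_bar

print([round(e, 3) for e in per_block])
print(round(ca1, 3), round(ca2, 3), round(ratio_of_means, 3))
```

Common Approach 1 gives 0.625 here, whereas Common Approach 2 reproduces the ratio of arithmetic means exactly (about 0.693), because averaging \(1 - t_{j}/\bar{c}\) over blocks gives \(1 - \bar{t}/\bar{c}\). The weaknesses of Common Approach 2 therefore lie mainly in the variance assumptions and the treatment of blocks rather than in the point estimate.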

An alternative to these two Common Approaches, which obviates the problems mentioned above, is to estimate the control and treatment means \(\mu_{c}\) and \(\mu_{t}\) based on all available data, and then to assess the corrected mortality based on
\[ \varepsilon = 1 - \frac{\mu_{t}}{\mu_{c}} \tag{4} \]
We believe that usually the best way to do this is by fitting a suitable generalized linear mixed model (GLMM) (Wolfinger and O’Connell 1993; Piepho 1999; Gbur et al. 2012; Malik et al. 2020; Stroup and Claassen 2020; Stroup et al. 2024) to estimate \(\mu_{c}\) and \(\mu_{t}\) and then use these estimates to evaluate Eq. (4). All statistical inference can take place on the linear predictor scale of the GLMM, and estimation of Eq. (4) corresponds to a simple post-processing step after fitting the model, whereas computation of approximate standard errors for estimates of Eq. (4) requires a bit more work and may involve application of the delta method. Alternatively, when a nonlinear regression package is used, e.g. when fitting a dose–response function, the correction may be integrated explicitly via suitable parameters into the fitted model (Ritz et al. 2015). The purpose of the present paper is to illustrate these approaches using several examples from entomology, weed science, and plant pathology. While this entails no methodology that is in and of itself new, we believe it is worth reminding researchers of this approach, as it appears to have been largely overlooked in practical research.

The suggested approach in a nutshell

Before showing several examples to illustrate our suggested approach, the general idea will be briefly sketched. The main component of a GLMM is the linear predictor, \(\eta\), comprising fixed effects, \(\beta\), and potentially also random effects, \(u\), i.e. \(\eta = x^{\prime}\beta + z^{\prime}u\), where \(x\) and \(z\) are incidence vectors. Under a GLMM, the linear predictor is related to the expected value on the observed scale, \(\mu\), via a link function, \(g\left( . \right)\), which is typically nonlinear, i.e. \(g\left( \mu \right) = \eta\). If the means of treatment and control on the linear predictor scale are denoted as \(\eta_{c}\) and \(\eta_{t}\), the means on the observed scale are obtained as \(\mu_{c} = g^{ - 1} \left( {\eta_{c} } \right)\) and \(\mu_{t} = g^{ - 1} \left( {\eta_{t} } \right)\). Estimates of these means can then be plugged into Eq. (4) to estimate efficacy. As mentioned in the introduction, Abbott’s formula is frequently applied to proportions (mortalities) estimated from binomial sampling. In this case, GLMMs with logit or probit link and binomial or related distributional assumptions are the standard models for analysis.
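The plug-in step described above can be sketched in Python as follows. The inverse links are the standard ones for the identity, log, logit and probit links; the dictionary, function name and input values are our own illustrative choices, and in practice \(\hat{\eta}_{c}\) and \(\hat{\eta}_{t}\) would come from the fitted GLMM.

```python
import math
from statistics import NormalDist

# Inverse link functions g^{-1}(eta) for some common links
INVERSE_LINK = {
    "identity": lambda eta: eta,
    "log": math.exp,
    "logit": lambda eta: 1.0 / (1.0 + math.exp(-eta)),
    "probit": NormalDist().cdf,
}


def efficacy_from_linear_predictor(eta_c, eta_t, link="log"):
    """Plug-in estimate of Eq. (4), 1 - mu_t / mu_c, with mu = g^{-1}(eta)."""
    inv = INVERSE_LINK[link]
    return 1.0 - inv(eta_t) / inv(eta_c)


# Hypothetical control and treatment means on the logit scale
print(round(efficacy_from_linear_predictor(1.2, -0.4, link="logit"), 3))
```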

In general, obtaining standard errors and confidence intervals of estimates of efficacy based on Eq. (4), being a nonlinear function of the effects in the linear model, requires use of the delta method (Rice 1995, pp. 149–154), which allows assessing how estimation errors in the effects are propagated through the nonlinear function. This will be illustrated in the examples. It is worth mentioning here that some packages have options to automate application of the delta method, which requires differential calculus. Two examples are the NLMIXED procedure of SAS and the function deltaMethod() in the R package ‘car’. It is worth considering the special case that \(g\left( . \right) = \log \left( . \right)\), for which we find
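\[ \varepsilon = 1 - \frac{\exp\left( \eta_{t} \right)}{\exp\left( \eta_{c} \right)} = 1 - \exp\left( \tau_{t} - \tau_{c} \right) \tag{5} \]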
where \(\tau_{c}\) and \(\tau_{t}\) are the effects of control and treatment on the linear predictor scale, respectively. A second special case worth mentioning is when a logarithmic transformation of the responses is used to achieve approximate normality and homogeneity of variance on the transformed scale. In this case, a linear mixed model (LMM) can be fitted to the transformed data. This is a special case of a GLMM in which, on the log scale, \(g\left( . \right)\) is the identity function, i.e. \(\mu = \eta\). The model implies a log-normal distribution on the original scale (Johnson et al. 1994, p. 212; McCulloch and Searle 2001, p. 223). The mean on the observed scale is given by \(\mu = \exp \left( {\eta + \frac{1}{2}\sigma^{2} } \right)\), where \(\eta\) and \(\sigma^{2}\) are the mean and the variance on the log scale. It follows that Eq. (5) can also be used to estimate efficacy in this case: when the variance on the transformed scale, \(\sigma^{2}\), is constant, the term \(\frac{1}{2}\sigma^{2}\) cancels in the ratio, so that \(\mu_{t}/\mu_{c} = \exp \left( \tau_{t} - \tau_{c} \right)\).
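The cancellation of \(\frac{1}{2}\sigma^{2}\) can be checked numerically. The sketch below uses hypothetical log-scale means and variances and shows that Eq. (5) agrees with the ratio of back-transformed log-normal means only when the log-scale variance is common to control and treatment.

```python
import math

eta_c, eta_t = 3.0, 2.2   # hypothetical means on the log scale
sigma2 = 0.3              # hypothetical common variance on the log scale

# Log-normal means on the original scale: exp(eta + sigma2 / 2)
mu_c = math.exp(eta_c + 0.5 * sigma2)
mu_t = math.exp(eta_t + 0.5 * sigma2)

eff_means = 1 - mu_t / mu_c            # Eq. (4) on the original scale
eff_eq5 = 1 - math.exp(eta_t - eta_c)  # Eq. (5) from the log-scale difference
print(round(eff_means, 6), round(eff_eq5, 6))

# With unequal log-scale variances the sigma2 terms no longer cancel
mu_t_het = math.exp(eta_t + 0.5 * 0.6)
eff_het = 1 - mu_t_het / mu_c
print(round(eff_het, 6))
```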

It is particularly simple to estimate a confidence interval for \(\varepsilon\) in these two special cases using Eq. (5). All that is required is to plug in the confidence limits for the treatment contrast \(\tau_{t} - \tau_{c}\) on the linear predictor scale. Obtaining approximate standard errors requires use of the delta method, as is also the case with other link functions (or data transformations). In both special cases, the standard error of the estimate of Eq. (5) obtained by the delta method is (Johnson et al. 1993, p. 54)
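\[ \text{SE}\left( \hat{\varepsilon} \right) \approx \exp\left( \hat{\tau}_{t} - \hat{\tau}_{c} \right) \, \text{SE}\left( \hat{\tau}_{t} - \hat{\tau}_{c} \right) \tag{6} \]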
In general (i.e. not only with log-link or log-transformation), the difference of two efficacies of treatments \(t = i\) and \(t = h\), say, is
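\[ \varepsilon_{i} - \varepsilon_{h} = \left( 1 - \frac{\mu_{i}}{\mu_{c}} \right) - \left( 1 - \frac{\mu_{h}}{\mu_{c}} \right) = \frac{\mu_{h} - \mu_{i}}{\mu_{c}} = \frac{g^{-1}\left( \eta_{h} \right) - g^{-1}\left( \eta_{i} \right)}{g^{-1}\left( \eta_{c} \right)} \tag{7} \]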
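This shows that to establish a significant difference between the efficacies of two treatments, it suffices to compare their treatment effects (\(\tau_{i}\)) or adjusted means (\(\eta_{i}\)) on the linear predictor scale: because the inverse link \(g^{-1}\left( . \right)\) is strictly monotonic, the two efficacies are equal if and only if \(\eta_{i} = \eta_{h}\).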
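For the log-link and log-transformation cases, Eqs. (5) and (6) and the plug-in confidence interval can be combined in a small helper. This is a sketch under our own assumptions: the contrast estimate and its standard error are taken as given (e.g. from the fitted model), and a normal quantile is used, whereas GLMM software would typically use a t quantile with the model's denominator degrees of freedom.

```python
import math
from statistics import NormalDist


def efficacy_log_link(delta_hat, se_delta, level=0.95):
    """Efficacy, delta-method SE and CI from a log-scale contrast.

    delta_hat : estimate of tau_t - tau_c (log link or log-transformed data)
    se_delta  : its standard error
    """
    eff = 1.0 - math.exp(delta_hat)              # Eq. (5)
    se_eff = math.exp(delta_hat) * se_delta      # Eq. (6)
    z = NormalDist().inv_cdf(0.5 + level / 2)    # normal quantile (assumption)
    lo_delta = delta_hat - z * se_delta
    hi_delta = delta_hat + z * se_delta
    # Eq. (5) decreases in delta, so the contrast limits swap roles
    ci = (1.0 - math.exp(hi_delta), 1.0 - math.exp(lo_delta))
    return eff, se_eff, ci


# Hypothetical contrast estimate and standard error
eff, se_eff, ci = efficacy_log_link(-1.1, 0.35)
print(f"efficacy {eff:.3f}, SE {se_eff:.3f}, 95% CI ({ci[0]:.3f}, {ci[1]:.3f})")
```

Because Eq. (5) is decreasing in \(\tau_{t} - \tau_{c}\), the upper limit of the contrast maps to the lower limit of the efficacy, and the resulting interval is asymmetric around the estimate.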

Examples

Table 1 gives an overview of the examples used to illustrate the general approach and provides some characterizations that may help readers pick a case close to their own application. The examples are ordered by increasing complexity, with each example introducing a new aspect not covered in previous examples. Readers may either read through all examples in one go or pick specific examples based on the outline in Table 1, going back to an earlier example when a methodological detail was first covered there. The SAS and R code for all examples is available in the Supporting Information. Unless indicated otherwise, the same results are obtained with both packages. The main text occasionally gives hints on the implementation in SAS; similar hints for R are found in the Supporting Information.

There are several estimation methods for GLMMs. When a parametric model for the error distribution is specified, such as the binomial, Poisson, or gamma distribution, a likelihood can be defined, and the available methods can be broadly classified into two categories (Stroup and Claassen 2020): (i) methods using adaptive Gaussian quadrature to obtain the likelihood (Pinheiro and Bates 1995; use of a single quadrature point corresponds to the Laplace method) and (ii) methods that employ linearization to approximate the likelihood (penalized quasi-likelihood, PQL, Breslow and Clayton 1993; pseudo-likelihood, PL, Wolfinger and O’Connell 1993). Users of GLMMs should be aware that the choice of method matters and that packages differ in the methods they offer. Some R packages offer the PQL method, but PL does not seem to be available. There are also R packages providing quadrature-based methods, including the Laplace approximation. The SAS procedure GLIMMIX offers both the PL method and quadrature methods. Here, whenever a parametric distributional model is assumed, we primarily use the Laplace method, mainly because it is available in both SAS and R, which we use in this paper. In the first example we use both PL and PQL. Readers should be aware that in some settings, methods based on linearization, such as PL or PQL, may be preferable. Also, PL comes in different variants, depending on whether the variance components of the linearized model are estimated by maximum likelihood (method = MSPL in GLIMMIX) or residual maximum likelihood (method = RSPL in GLIMMIX). For detailed advice, the paper by Stroup and Claassen (2020) may be consulted. A general issue with maximum likelihood is that variance estimates may be substantially biased. Hence, methods that allow residual maximum likelihood are often preferable, but there are different ways of making this available for GLMMs (Stiratelli et al. 1984; Wood 2011; Piepho et al. 2018), and there is no consensus as to the best option.

When no parametric model is specified, but instead only the variance-mean relationship is assumed, one may define a so-called quasi-likelihood (Wedderburn 1974) or its extensions in case of random effects in the linear predictor, i.e. PL or PQL. Several of our examples use the quasi-likelihood method, which is available in both SAS and R.

Example 1: control of Bromus sterilis

Büchse and Piepho (2006) report counts of the weed grass Bromus sterilis from a randomized complete block design with four herbicide treatments and four blocks (Table 2). We use this first example to introduce a few basic concepts and to illustrate the versatility of the GLMM framework in terms of distributional assumptions and fitting methods. A natural model for these count data is the Poisson distribution. We may use a linear predictor with fixed effects for blocks \(\left( {b_{j} } \right)\) and treatments \(\left( {\tau_{i} } \right)\):
\[ \eta_{ij} = \theta + b_{j} + \tau_{i} \tag{8} \]
As the linear predictor only has fixed effects, this is a generalized linear model (GLM) (McCullagh and Nelder 1989). For Poisson data, the canonical link is the logarithm,
\[ \log \left( \mu_{ij} \right) = \eta_{ij} \]
We then assume that the observed counts per plot, \(y_{ij}\), have a Poisson distribution with parameter (mean) \(\mu_{ij}\), i.e. \(y_{ij} \sim {\text{Poisson}}\left( {\mu_{ij} } \right)\). This distributional assumption implies heterogeneity of variance, because under the Poisson model \({\text{var}} \left( {y_{ij} } \right) = \mu_{ij}\). The objective of the experiment is to assess the effectiveness of a new herbicide compared to a control treatment. The mean of the i-th treatment is \(\mu_{i} = \exp \left( {\theta + \overline{b}_{ \bullet } + \tau_{i} } \right)\), from which the efficacy can be determined based on Eq. (4), which, with a log link, can be rewritten as in Eq. (5). From these results, it is straightforward to obtain confidence limits for the reduction in weed infestation, simply by plugging the confidence limits for \(\tau_{i} - \tau_{c}\) into Eq. (5), where \(i\) indexes the treatment (t) in Eq. (5) to be compared to the control (c).

There is usually over-dispersion relative to the Poisson variance function (Piepho et al. 2022), so we may extend it to \({\text{var}} \left( {y_{ij} } \right) = \phi \mu_{ij}\), where \(\phi\) is the over-dispersion parameter. The model may be estimated by the quasi-likelihood method (Wedderburn 1974), which requires only the mean and variance models to be specified and therefore does not constitute a full parametric model. With this specification, a quasi-likelihood can be defined, on which all statistical inference can be based, essentially in the same way as with a genuine likelihood. Details are described, e.g., in McCullagh and Nelder (1989). Implementation in a GLM package is particularly simple in the case at hand: fit a log link and a pseudo-Poisson (over-dispersed Poisson, quasi-Poisson) distribution.
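When every treatment is observed in every block and the model has additive block and treatment effects on the log scale, as in Eq. (8) with a log link, the Poisson maximum likelihood fit has a closed form: the fitted means are row total × column total / grand total, so \(\hat{\tau}_{i} - \hat{\tau}_{c} = \log \left( y_{i \bullet}/y_{c \bullet} \right)\) with approximate variance \(1/y_{i \bullet} + 1/y_{c \bullet}\). The sketch below uses this standard log-linear-model result, estimates \(\phi\) as the Pearson statistic divided by the residual degrees of freedom (the usual quasi-Poisson moment estimator), and forms normal-quantile Wald limits for Eq. (5). The counts are hypothetical, not those of Table 2; a GLM package would normally fit iteratively and may use t rather than normal quantiles.

```python
import math
from statistics import NormalDist


def quasi_poisson_efficacy(counts, control=0, level=0.95, quasi=True):
    """Efficacy (Eq. 5) with Wald limits for a complete RCBD.

    counts[i][j] is the count for treatment i in block j; row `control`
    holds the untreated control. Assumes no zero row or column totals.
    """
    n_trt, n_blk = len(counts), len(counts[0])
    row = [sum(r) for r in counts]
    col = [sum(counts[i][j] for i in range(n_trt)) for j in range(n_blk)]
    total = sum(row)
    # Poisson ML fitted means of log(mu_ij) = theta + b_j + tau_i
    fitted = [[row[i] * col[j] / total for j in range(n_blk)] for i in range(n_trt)]
    pearson = sum((counts[i][j] - fitted[i][j]) ** 2 / fitted[i][j]
                  for i in range(n_trt) for j in range(n_blk))
    # Over-dispersion: Pearson X2 / residual df (phi = 1 for plain Poisson)
    phi = pearson / ((n_trt - 1) * (n_blk - 1)) if quasi else 1.0
    z = NormalDist().inv_cdf(0.5 + level / 2)
    results = {}
    for i in range(n_trt):
        if i == control:
            continue
        delta = math.log(row[i] / row[control])             # tau_i - tau_c
        se = math.sqrt(phi * (1 / row[i] + 1 / row[control]))
        results[i] = (1 - math.exp(delta),                   # estimate
                      1 - math.exp(delta + z * se),          # lower limit
                      1 - math.exp(delta - z * se))          # upper limit
    return phi, results


# Hypothetical counts (NOT the data of Table 2): rows = control, herbicides 1-3
counts = [[62, 45, 80, 51],
          [10, 4, 15, 7],
          [25, 30, 12, 20],
          [40, 22, 55, 35]]
phi, res = quasi_poisson_efficacy(counts)
print(f"phi = {phi:.2f}")
for i, (est, lo, hi) in res.items():
    print(f"treatment {i}: efficacy {est:.3f}  95% CI ({lo:.3f}, {hi:.3f})")
```

Calling the function with `quasi=False` fixes \(\phi = 1\), i.e. the plain Poisson model. Its intervals are narrower whenever \(\hat{\phi} > 1\), which is the mechanism behind the differences between the Poisson and over-dispersed models discussed next.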

In addition, we consider three options with parametric distributional assumptions here. (i) The Poisson distribution may be replaced with the negative binomial (NB) distribution, \(y_{ij} \sim {\text{NegBin}}\left( {\mu_{ij} ;\phi } \right)\), which has a dispersion parameter \(\phi\); (ii) the Poisson model may be extended by adding a random plot error \(e_{ij}\) to the linear predictor, assumed to be normally distributed with zero mean and variance \(\sigma_{e}^{2}\), i.e. \(\eta_{ij} = \theta + b_{j} + \tau_{i} + e_{ij}\) (Poisson-normal model); (iii) options (i) and (ii) may be combined into a third model (NB-normal model). Option (i) is a GLM, whereas options (ii) and (iii) are GLMMs because of the random effect in the linear predictor (Gbur et al. 2012). We fitted these GLMMs using the pseudo-likelihood method (Wolfinger and O’Connell 1993) and the maximum likelihood method (Pinheiro and Bates 1995). Detailed results are shown in Table 3.

Comparing the estimates of the models shows that the error variance \(\sigma_{e}^{2}\) and the scale parameter \(\phi\) compete in modelling the over-dispersion. The 95% confidence limits for the efficacy (percent reduction in weed infestation compared to control) show that it is important to account for over-dispersion. Specifically, with the Poisson model ignoring over-dispersion, Herbicide 4 provides a significant weed reduction compared to the control, but not with any of the models that account for over-dispersion. The dataset is rather small, so it is hard to decide based on residual diagnostics which of the over-dispersed models provides the best fit. AIC cannot be used for model selection when some of the competing methods do not involve a full likelihood, as is the case with quasi-likelihood methods. Perhaps the main recommendation for this example is that it is crucial to account for over-dispersion and that, in the absence of strong empirical evidence in favour of one particular method, the guiding principle should be parsimony. In this situation, the over-dispersed Poisson model may be preferred due to its simplicity. The two Common Approaches fit a linear model (LM) with the predictor in Eq. (8) to the estimated effectiveness per plot. Note that an LM is a special case of a GLM in which the link is the identity function and the variance function is constant. The LM-based analysis yields similar point estimates of the effectiveness, but there are notable differences in the confidence intervals. In particular, the confidence intervals for Herbicides 2 and 3 are rather wider than those obtained by GLMM. Also, the effectiveness of Herbicide 4 is significant for the two LMs but non-significant for all over-dispersed GLMMs. The main reason for this discrepancy is that the Common Approaches based on LM assume homogeneity of variance, whereas the GLMMs imply heterogeneity.
To illustrate the problem, Table 4 shows the fitted conditional variances for the observed counts, given the linear predictor \(\eta_{ij} = \theta + b_{j} + \tau_{i} + e_{ij}\), using a log-link and a Poisson model. There is substantial heterogeneity of variance, which is not properly taken into account in the Common Approaches, which assume homogeneity of variance for the estimated effectiveness.
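To see how strongly the variance of the per-plot efficacies used by the Common Approaches can differ between treatments, Eq. (3) can be evaluated under a Poisson model, where \({\text{var}} \left( C \right) = \mu_{c}\), \({\text{var}} \left( T \right) = \mu_{t}\) and \({\text{cov}} \left( C,T \right) = 0\), giving \({\text{var}} \left( T/C \right) \approx \mu_{t} \left( \mu_{t} + \mu_{c} \right)/\mu_{c}^{3}\). The means below are hypothetical; an over-dispersion factor `phi` simply scales the result.

```python
def approx_var_plot_efficacy(mu_t, mu_c, phi=1.0):
    """Eq. (3) for var(T/C) with cov(C, T) = 0 and var = phi * mean.

    Equals var(1 - T/C), i.e. the variance of a per-plot Abbott efficacy.
    """
    var_c, var_t = phi * mu_c, phi * mu_t
    return mu_t ** 2 * var_c / mu_c ** 4 + var_t / mu_c ** 2


mu_c = 50.0                          # hypothetical control mean
treatment_means = [5.0, 20.0, 40.0]  # hypothetical treatment means
variances = [approx_var_plot_efficacy(m, mu_c) for m in treatment_means]
for m, v in zip(treatment_means, variances):
    print(f"mu_t = {m:4.1f}: approx. var of per-plot efficacy = {v:.4f}")
```

Here the approximate variance of the per-plot efficacy grows from 0.0022 to 0.0288 as \(\mu_{t}\) increases from 5 to 40, a roughly 13-fold difference that a homogeneous-variance linear model cannot represent.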

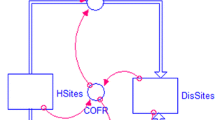

Example 2: biological control agents against Sclerotinia sclerotiorum

This study used a controlled laboratory setup to investigate the impact of different biological control agents (BCAs) on the development of Sclerotinia sclerotiorum infection in detached soybean leaves. The primary objective was to identify potential active ingredients of BCAs that effectively manage this plant pathogen at the plant level. The experiment was laid out as a randomized complete block design, with detached soybean leaves as the experimental units. Four distinct active ingredients of BCAs were administered as treatments, each applied to separate groups of detached leaves in triplicate. Half of the treated leaves were subsequently inoculated with the pathogen, while the other half served as a positive control, receiving treatment solely with BCAs. The experiment was conducted under precisely controlled conditions, with a constant temperature of 20 °C and a relative humidity of ≥ 75%, to create a conducive environment for S. sclerotiorum infection. Disease development on the leaves was assessed after 7 days. Each treatment comprised three individual leaves as experimental units, each enclosed in a separate Petri dish sealed with Parafilm. From each treatment, one Petri dish was placed inside a designated plastic box (block), and the blocks were then arranged within the climate-controlled cabinet. The primary trait assessed in this experiment was disease severity (infection rate). Each leaf was photographed separately and analysed with ImageJ, which quantitatively differentiated between diseased and healthy (intact) leaf areas based on pixel counts in the respective images. The ratio of intact leaf area to total leaf area was then used to calculate the infection rate (%). The positive controls, which were subjected to the various test substances, did not exhibit any symptom development or phytotoxic effect and are therefore not considered in the results.

The response in this example is a continuous percentage, making the beta distribution a potential candidate for modelling (Ferrari and Cribari-Neto 2004; Douma and Weedon 2018). However, this distribution has support for proportions in the open interval between 0 and 1, which excludes the values 0 and 1, so it is not appropriate for dealing with observed percentages of 0% and 100%. In this example, the control has two observations of 100%, thus ruling out the beta distribution. A convenient alternative, which can also deal with proportions of 0 and 1, is the quasi-likelihood approach for GLM suggested by Wedderburn (1974) and its pseudo-likelihood extension to GLMM (Wolfinger and O’Connell 1993; Malik et al. 2020). As stated before, this approach only requires specifying the variance function, and the most natural choice here is the variance function of the pseudo-binomial. Thus, if the mean, a proportion in our case, is denoted by \(\mu\), then the variance function is defined as \({\text{var}} \left( y \right) = \phi \mu \left( {1 - \mu } \right)\). This is easily fitted using a GLMM package, specifying a logit or probit link and a pseudo-binomial (over-dispersed binomial, quasi-binomial) distribution.
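The point that quasi-likelihood copes with observed proportions of 0 and 1, whereas the beta distribution does not, can be seen directly from the functions involved. The sketch below codes the pseudo-binomial variance function, Wedderburn's quasi-likelihood for \(V(\mu) = \mu(1 - \mu)\) (up to the factor \(1/\phi\) and an additive constant), and the beta log-density for comparison; the function names are our own.

```python
import math


def quasi_binomial_variance(mu, phi=1.0):
    """Variance function of the pseudo-binomial: phi * mu * (1 - mu)."""
    return phi * mu * (1 - mu)


def quasi_loglik(y, mu):
    """Wedderburn quasi-likelihood for V(mu) = mu(1 - mu), up to a constant.

    Linear in y, so it is finite for observed proportions y = 0 and y = 1;
    its derivative in mu is (y - mu) / V(mu).
    """
    return y * math.log(mu / (1 - mu)) + math.log(1 - mu)


def beta_logpdf(y, a, b):
    """Beta log-density; undefined at y = 0 or y = 1 (log of zero)."""
    return ((a - 1) * math.log(y) + (b - 1) * math.log(1 - y)
            - (math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)))


print(quasi_loglik(1.0, 0.8), quasi_loglik(0.0, 0.8))
try:
    beta_logpdf(1.0, 2.0, 3.0)
except ValueError as err:
    print("beta log-density fails at y = 1:", err)
```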

Table 5 shows results for this model using a logit link and a linear predictor with effects for treatments and blocks. If \(\mu_{ij}\) denotes the expected proportion for the j-th replicate of the i-th treatment, our model on the logit scale has linear predictor
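\[ \text{logit}\left( \mu_{ij} \right) = \log \left( \frac{\mu_{ij}}{1 - \mu_{ij}} \right) = \theta + b_{j} + \tau_{i} \]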
where \(\theta\) is an intercept, \(b_{j}\) is the effect for the j-th block, and \(\tau_{i}\) is the effect of the i-th treatment. The probability of survival for the i-th treatment is the predicted mean for the i-th treatment given by
\[ \mu_{i} = \frac{\exp\left( \eta_{i} \right)}{1 + \exp\left( \eta_{i} \right)} \]
where \(\eta_{i} = \theta + \overline{b}_{ \bullet } + \tau_{i}\) are the treatment means on the logit-scale. Using these results, the delta method with the logit link (Agresti 2002, p. 581) yields
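\[ \text{var}\left( \hat{\mu} \right) \approx D_{\mu} \, \text{var}\left( \hat{\eta} \right) D_{\mu} \]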
where \(\hat{\mu } = \left( {\hat{\mu }_{1} , \ldots ,\hat{\mu }_{6} } \right)^{\prime }\), \(\hat{\eta } = \left( {\hat{\eta }_{1} , \ldots ,\hat{\eta }_{6} } \right)^{\prime }\), and \(D_{\mu } = {\text{diag}}\left( {\frac{{\partial \mu_{i} }}{{\partial \eta_{i} }}} \right)\) with \(\frac{{\partial \mu_{i} }}{{\partial \eta_{i} }} = \mu_{i} \left( {1 - \mu_{i} } \right)\). Next, the efficacies of the treatments are computed from Eq. (4). Again invoking the delta method (Rice 1995, pp. 149–154), we have
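\[ \text{var}\left( \hat{\varepsilon}_{t} \right) \approx \frac{\mu_{t}^{2}\,\text{var}\left( \hat{\mu}_{c} \right)}{\mu_{c}^{4}} + \frac{\text{var}\left( \hat{\mu}_{t} \right)}{\mu_{c}^{2}} - \frac{2\mu_{t}\,\text{cov}\left( \hat{\mu}_{c}, \hat{\mu}_{t} \right)}{\mu_{c}^{3}} \]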
from which we can compute standard errors of the estimated efficacies as reported in Table 5.
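The two delta-method steps of this example (from the logit scale to the proportion scale, then from proportions to efficacies via Eq. (4)) can be sketched as below. The logit-scale means and their covariance matrix are hypothetical stand-ins for the estimates a GLMM package would return, and the control is assumed to be the first entry.

```python
import math


def expit(x):
    """Inverse logit link."""
    return 1.0 / (1.0 + math.exp(-x))


def logit_efficacies(eta, cov_eta, control=0):
    """Efficacies 1 - mu_t/mu_c (Eq. 4) with delta-method standard errors.

    eta     : estimated treatment means on the logit scale
    cov_eta : their estimated variance-covariance matrix (list of lists)
    """
    k = len(eta)
    mu = [expit(e) for e in eta]
    d = [m * (1 - m) for m in mu]                      # d mu_i / d eta_i
    # var(mu_hat) ~ D var(eta_hat) D with D = diag(d)
    cov_mu = [[d[i] * cov_eta[i][j] * d[j] for j in range(k)] for i in range(k)]
    c = control
    out = {}
    for t in range(k):
        if t == c:
            continue
        eff = 1 - mu[t] / mu[c]
        g_c, g_t = mu[t] / mu[c] ** 2, -1 / mu[c]      # gradient wrt (mu_c, mu_t)
        var_eff = (g_c ** 2 * cov_mu[c][c] + g_t ** 2 * cov_mu[t][t]
                   + 2 * g_c * g_t * cov_mu[c][t])
        out[t] = (eff, math.sqrt(var_eff))
    return out


# Hypothetical logit-scale means (control first) and covariance matrix
eta = [1.5, 0.2, -0.3]
cov_eta = [[0.10, 0.02, 0.02],
           [0.02, 0.12, 0.02],
           [0.02, 0.02, 0.15]]
for t, (eff, se) in logit_efficacies(eta, cov_eta).items():
    print(f"treatment {t}: efficacy {eff:.3f} (SE {se:.3f})")
```

Passing the full covariance matrix matters: the covariance between the control and treatment means enters the variance of each efficacy through the cross term.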

To facilitate generation of a letter display (Piepho 2018) for the pairwise comparisons among the treatments excluding the control shown in Table 5, we defined a binary factor (CNTRL_VS_TRT) with two levels that separates the five treatments into two groups, one consisting of the control and the other of the four remaining treatments (see Table 5), and then nested the treatment factor (TRT) within that binary factor (Piepho et al. 2006), leading to the model CNTRL_VS_TRT + CNTRL_VS_TRT.TRT for the treatment effects. Treatment means were then computed for the effect CNTRL_VS_TRT.TRT, followed by pairwise comparisons within the ‘Treatment’ level of CNTRL_VS_TRT. The same technique was used in subsequent examples as needed.
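The factor coding used for the letter display can be illustrated independently of any model-fitting software. The Python sketch below, with hypothetical treatment labels, builds the binary factor CNTRL_VS_TRT, the levels of the nested effect CNTRL_VS_TRT.TRT, and the set of pairwise comparisons restricted to the ‘Treatment’ level. The model itself would still be fitted in SAS or R as described in the Supporting Information.

```python
from itertools import combinations

# Hypothetical treatment labels; the first one is the control
trt_levels = ["Control", "BCA_A", "BCA_B", "BCA_C", "BCA_D"]

# Binary factor CNTRL_VS_TRT separating the control from all other treatments
cntrl_vs_trt = {t: "Control" if t == "Control" else "Treatment" for t in trt_levels}

# Levels of the nested effect CNTRL_VS_TRT.TRT
nested_levels = [(cntrl_vs_trt[t], t) for t in trt_levels]

# Pairwise comparisons restricted to the 'Treatment' level (used for the letters)
pairs = [(a[1], b[1]) for a, b in combinations(nested_levels, 2)
         if a[0] == b[0] == "Treatment"]
print(len(pairs), pairs[:2])
```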

Example 3: control of apple rust mites

Different control options against apple rust mites, Aculus schlechtendali (Nalepa, 1890), were tested in a field trial. In particular, the trial investigated whether the release of predatory mites would lead to similar reductions of the rust mite population as a conventional acaricide (Kiron®, Sumi Agro Ltd., Allershausen, Germany) or an organic acaricide (Eradicoat®, Certis Belchim BV., Hamburg, Germany). The trial was carried out in an apple plantation as a randomized complete block design. Each treatment was replicated four times, and every replicate consisted of 9 trees (8 m width). Acaricides were sprayed with a conventional sprayer (Eradicoat® was sprayed twice). Predatory mites were released twice by hand in small cardboard boxes, which were hung in the branches of the trees, at a rate of 250 predatory mites per m² of leaf wall area.

Mites were counted on 25 randomly collected leaves per replicate; they were brushed off the leaves into Petri dishes using a spider mite-brushing machine (‘Spinnmilben-Bürstmaschine’, Jurchheim Laborgeräte GmbH, Bernkastel-Kues, Germany). The Petri dishes were coated with a layer of fat to immobilize the mites, which were then counted under stereo-microscopes. Replicates (plots) were sampled repeatedly for rust mites, once before the treatments were applied (20 July 2023) and then weekly after spraying and releasing predatory mites, on 28 July, 4 August and 11 August.

Counts of rust mites were modelled using a GLMM with log link and Poisson distribution. The linear predictor had time-specific effects for treatments and blocks (Piepho et al. 2004). Over-dispersion was modelled by a random error effect in the linear predictor. Serial correlation of observations on the same plot is potentially an issue with repeated measures data, but it could not be detected in this case when fitting an autoregressive model [AR(1) and ARH(1)] for the time-specific plot effect in the linear predictor (not shown). However, in the analysis presented here, we allowed for heterogeneity of variance between time points to accommodate differences in the counting protocols between time points.

In this experiment, the number of mites differs substantially between sampling units before treatment. For this situation, Henderson and Tilton (1955) proposed the following modification of Abbott’s formula, expressed here in terms of means:
\[ \varepsilon = 1 - \frac{\mu_{t}\,\mu_{c}^{0}}{\mu_{c}\,\mu_{t}^{0}} \]
where \(\mu_{c}\) and \(\mu_{t}\) are the means for the control and treatment after applying the treatment, whereas \(\mu_{c}^{0}\) and \(\mu_{t}^{0}\) are the means before applying the treatment.
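The Henderson–Tilton correction is a one-line function of the four means. In the hypothetical numbers below, the treated plots start with more mites than the control, so Abbott's formula, which ignores the pre-treatment counts, understates the efficacy.

```python
def henderson_tilton(mu_c, mu_t, mu_c0, mu_t0):
    """Henderson-Tilton efficacy 1 - (mu_t * mu_c0) / (mu_c * mu_t0).

    mu_c, mu_t   : control and treatment means after treatment
    mu_c0, mu_t0 : control and treatment means before treatment
    """
    return 1.0 - (mu_t * mu_c0) / (mu_c * mu_t0)


# Hypothetical means: the treated plots started with more mites than the control
ht = henderson_tilton(mu_c=80.0, mu_t=30.0, mu_c0=40.0, mu_t0=60.0)
abbott = 1.0 - 30.0 / 80.0   # ignores the pre-treatment difference
print(round(ht, 3), round(abbott, 3))  # 0.75 0.625
```

When the pre-treatment means are equal, the formula reduces to Abbott's formula in the form of Eq. (4).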

As we are using a log-link here, the efficacy can be written as
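\[ \varepsilon = 1 - \exp\left[ \left( \tau_{t} - \tau_{t}^{0} \right) - \left( \tau_{c} - \tau_{c}^{0} \right) \right] \]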
where \(\tau_{c}\) and \(\tau_{t}\) are the effects for the control and treatment after applying the treatment, whereas \(\tau_{c}^{0}\) and \(\tau_{t}^{0}\) are the corresponding effects before applying the treatment. The standard error is obtained using the delta method as
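\[ \text{SE}\left( \hat{\varepsilon} \right) \approx \exp\left( \hat{\delta} \right) \text{SE}\left( \hat{\delta} \right), \quad \text{with} \quad \hat{\delta} = \left( \hat{\tau}_{t} - \hat{\tau}_{t}^{0} \right) - \left( \hat{\tau}_{c} - \hat{\tau}_{c}^{0} \right) \]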
Results shown in Table 6 reveal that the efficacies for 1 × Kiron®, the conventional acaricide, on both 28 July and 4 August, were significant at the 5% level (p = 0.0029 and p = 0.0157, respectively), whereas for the other two treatments, they were not significant.
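With a log link, the Henderson–Tilton efficacy depends on the single contrast \(\delta = (\tau_{t} - \tau_{t}^{0}) - (\tau_{c} - \tau_{c}^{0})\), so the delta-method standard error only needs the variance of that contrast. The sketch below assumes the four effects and their 4 × 4 covariance matrix are available from the fitted GLMM. The numerical values, including the diagonal covariance matrix, are hypothetical; in a repeated-measures fit the pre- and post-treatment effects would generally be correlated.

```python
import math


def ht_log_link(tau, cov):
    """Henderson-Tilton efficacy and delta-method SE under a log link.

    tau : (tau_t, tau_t0, tau_c, tau_c0), effects after and before treatment
    cov : 4 x 4 variance-covariance matrix of these estimates
    """
    a = (1.0, -1.0, -1.0, 1.0)   # delta = (tau_t - tau_t0) - (tau_c - tau_c0)
    delta = sum(ai * ti for ai, ti in zip(a, tau))
    var_delta = sum(a[i] * cov[i][j] * a[j] for i in range(4) for j in range(4))
    eff = 1.0 - math.exp(delta)
    se = math.exp(delta) * math.sqrt(var_delta)
    return eff, se


# Hypothetical estimates: logs of means 30, 60, 80 and 40, diagonal covariance
tau = [math.log(30.0), math.log(60.0), math.log(80.0), math.log(40.0)]
cov = [[0.04 if i == j else 0.0 for j in range(4)] for i in range(4)]
eff, se = ht_log_link(tau, cov)
print(round(eff, 3), round(se, 3))
```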

Example 4: robotic weeding

Five field experiments were set up with sugar beet in 2021–2022 and with oil-seed rape in 2021 at Südzucker AG Research Station in Kirschgartshausen, Germany (49° 62′ 85.5′′ N 08° 45′ 85.0′′ E), and with sugar beet and oil-seed rape in 2022 at the University of Hohenheim Research Station Ihinger Hof, Germany (48° 44′ 32.5′′ N 8° 55′ 31.1′′ E). In those field experiments, each laid out as a randomized complete block design, seven weeding robots with different degrees of automation were tested. The objective of this study was to evaluate the robots in comparison to four standard herbicide treatments. In each experiment, only a subset of the eleven treatments was tested (Table 7).

Weed control efficacy (WCE), crop losses (CL), herbicide savings and treatment costs were the criteria for the evaluation. WCE and CL were defined as \({\text{WCE}} = \left( 1 - w_{\text{t}}/w_{\text{c}} \right) \times 100\%\), with \(w_{\text{t}}\) representing the weed density in the treated plots and \(w_{\text{c}}\) the weed density in the untreated plots, and \({\text{CL}} = \left( 1 - L_{\text{t}}/L_{\text{c}} \right) \times 100\%\), with \(L_{\text{t}}\) representing the crop density in the treated plots and \(L_{\text{c}}\) the crop density in the untreated plots. Both definitions agree with the definition of efficacy in Eq. (4). The main result of this study was that almost all robots achieved a WCE equal to that of the standard herbicide treatment without causing significantly more CL. However, robots with selective in-row mechanical weed control had higher treatment costs due to their low maximum driving speed of 1 km/h (Gerhards et al. 2021).

Here, we consider the following three research questions to illustrate our approach:

(i) Is the WCE for bandspraying in-row and hoeing inter-row (treatment 1 in Table 7) higher in the two sugar beet trials, in which the two operations were done in separate passes of the robot, than in the one trial in which they were done simultaneously in a single pass?

(ii) Is the WCE of the herbicide treatment higher in sugar beet than in oil-seed rape?

(iii) Do mechanical weeding robots (treatments 3, 5, 6, 9 and 10 in Table 7) cause higher CL than spraying robots (treatments 2 and 4 in Table 7)?

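To address these three questions, the counts were modelled using a GLMM with a Poisson distribution and a log link. To account for differences in the area sampled per plot, we used an offset variable for area (\(A\); in m²) in the linear predictor. Denoting by \(\mu_{ijk}\) the conditional mean count for the i-th treatment in the k-th block of the j-th trial, the linear predictor is given by

\[\log \left( {\mu_{ijk} } \right) = \log \left( {A_{ijk} } \right) + \theta + \tau_{i} + \beta_{j} + u_{ij} + b_{jk} + e_{ijk}\]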
where \(\theta\) is an intercept, \(\tau_{i}\) is the i-th treatment main effect, \(\beta_{j}\) is the fixed main effect of the j-th trial, \(u_{ij}\) is the random trial-by-treatment interaction with zero mean and variance \(\sigma_{u}^{2}\), \(b_{jk}\) is the fixed effect of the k-th block in the j-th trial, and \(e_{ijk}\) is a random plot effect with zero mean and variance \(\sigma_{e}^{2}\), which models any over-dispersion. The offset \(\log \left( {A_{ijk} } \right)\) ensures that the treatment means of the counts are expressed on a per-square-metre (m²) basis. The random interaction \(u_{ij}\) models heterogeneity between trials in a meta-analytic sense (Madden et al. 2016). Because the trial main effect is modelled as fixed, no inter-trial information is recovered. We fitted the model by full maximum likelihood based on a Laplace approximation. The treatment means and estimated efficacies are given in Table 8. The denominator degrees of freedom were determined by the between-within method as implemented in the GLIMMIX procedure of SAS.
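To see what the offset does, consider the simplest special case: a Poisson GLM with a log link, the offset \(\log(A)\) and only a treatment factor (no trial, block or random effects, all of which the model above does include). There, the maximum-likelihood density per m² for each treatment is the total count divided by the total sampled area, and WCE follows by plugging these densities into Eq. (4). The sketch below assumes that special case; the counts and areas are hypothetical.

```python
# Sketch: a treatment-only Poisson GLM with log link and offset log(A).
# For this special case the ML density per m^2 of each treatment is
# sum(counts) / sum(areas); the trial, block and random effects of the
# full GLMM are deliberately left out.

def density_per_m2(counts, areas):
    """ML estimate of plants per m^2 for one treatment under the offset model."""
    if len(counts) != len(areas):
        raise ValueError("counts and areas must have the same length")
    return sum(counts) / sum(areas)


def efficacy(mean_treated, mean_control):
    """Efficacy as in Eq. (4): 1 - treated mean / control mean."""
    return 1.0 - mean_treated / mean_control


# Hypothetical plots: weed counts and sampled areas in m^2.
w_c = density_per_m2([40, 52, 35], [1.0, 1.5, 1.0])  # untreated control
w_t = density_per_m2([4, 6, 3], [1.0, 1.5, 1.0])     # treated
print(f"control {w_c:.2f} m^-2, treated {w_t:.2f} m^-2, WCE {efficacy(w_t, w_c):.3f}")
```

Note that the density is a ratio of totals rather than an average of per-plot densities, so plots with a larger sampled area carry more weight.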

To answer the first question, we define a factor BH, which is set equal to the treatment factor, except for the in-row bandspraying + inter-row hoeing treatment, where we set BH = ‘simultaneous’ when bandspraying and hoeing are done simultaneously and BH = ‘consecutive’ when these operations are done consecutively (Table 7). Crossing the treatment factor with BH allows the means for these two variants of the in-row bandspraying + inter-row hoeing treatment to be separated. The resulting mean weed densities are 2.1247 plants m⁻² for consecutive weeding (spraying in the first pass and hoeing in the second) and 10.7961 plants m⁻² for simultaneous weeding. While the difference in means, and hence in efficacies, has the expected sign, it is not significant (p = 0.0711). Still, the results are in line with previous findings by Warnecke-Busch (2022), who attributed the lower weed control efficacy of simultaneous weeding to dust raised by hoeing, which covers the leaves and adsorbs the herbicide.

The second question is approached by defining a factor CROP, again set equal to the treatment factor, except for the Herbicide treatment, where CROP is set equal to the name of the crop (Table 7). The factor CROP is then crossed with the treatment factor so that crops can be compared within the Herbicide treatment. The mean weed densities are 1.0859 plants m⁻² for sugar beet and 5.1347 plants m⁻² for oil-seed rape. This difference is significant (p = 0.0085), so we can conclude that the herbicide has a significantly higher efficacy in sugar beet.

For the third question, we define a grouping factor ROBOT, which is set equal to the treatment factor, except for the relevant subset of robot treatments, with levels ROBOT = ‘hoeing weeding robot’ and ROBOT = ‘spraying robot’ (Table 7). The treatment model then is ROBOT + TREATMENT.ROBOT. Computing means for the factor ROBOT, we find no significant difference in mean CL between the two robot types (p = 0.2498). However, the spraying robot is the only treatment with significant CL: it is the only treatment that differs significantly from the control, and the only one whose confidence interval for the efficacy does not cover zero (Table 9). It also has the highest estimated efficacy (0.30771).
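The three grouping factors BH, CROP and ROBOT follow one recipe: copy the treatment label and overwrite it only for the plots that should be split (BH, CROP) or pooled (ROBOT). A hypothetical sketch of this recoding on a list of plot records follows; the field names, the ‘herbicide’ label and the per-plot `mode` entry are illustrative assumptions, and only the robot treatment numbers are taken from question (iii).

```python
# Hypothetical plot records; field names and most labels are illustrative only.
plots = [
    {"treatment": "1", "crop": "sugar beet", "mode": "consecutive"},
    {"treatment": "1", "crop": "sugar beet", "mode": "simultaneous"},
    {"treatment": "herbicide", "crop": "sugar beet", "mode": None},
    {"treatment": "herbicide", "crop": "oil-seed rape", "mode": None},
    {"treatment": "2", "crop": "sugar beet", "mode": None},
    {"treatment": "5", "crop": "sugar beet", "mode": None},
]

# Robot types for the treatments named in research question (iii).
ROBOT_GROUPS = {t: "hoeing weeding robot" for t in ("3", "5", "6", "9", "10")}
ROBOT_GROUPS.update({t: "spraying robot" for t in ("2", "4")})


def add_factor(records, name, override):
    """Set rec[name] to the treatment label unless override(rec) returns a level."""
    for rec in records:
        level = override(rec)
        rec[name] = rec["treatment"] if level is None else level


add_factor(plots, "BH", lambda r: r["mode"] if r["treatment"] == "1" else None)
add_factor(plots, "CROP", lambda r: r["crop"] if r["treatment"] == "herbicide" else None)
add_factor(plots, "ROBOT", lambda r: ROBOT_GROUPS.get(r["treatment"]))

for rec in plots:
    print(rec["treatment"], rec["BH"], rec["CROP"], rec["ROBOT"], sep=" | ")
```

Crossing TREATMENT with BH or CROP splits one treatment into two cells, whereas ROBOT + TREATMENT.ROBOT keeps the treatment cells but adds marginal means for the two robot types.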

Example 5: biological control agents against soybean rust Phakopsora pachyrhizi

The primary objective of this study was to assess the antifungal properties of four bioactive secondary metabolites derived from fungal biological control agents (BCAs), specifically their inhibitory effects on uredospore germination (UG), which is critical for the initiation of fungal infection, and on the length of germ tubes (LGT) emerging from germinating spores of Phakopsora pachyrhizi. The traits under investigation were thus the responses of UG and LGT to exposure to the metabolites, with the ultimate aim of determining the antifungal properties of these compounds. Uredospores were exposed to each metabolite separately at a concentration of 200 ppm under controlled conditions (25 °C, ≥ 95% relative humidity, complete darkness) for 6 h. UG and LGT were determined by microscopic examination, and inhibition percentages were calculated using Abbott’s formula. Each treatment comprised four microscopic slides (the experimental units), and on each slide 50 randomly selected spores were examined. LGT was measured only for spores that had germinated, so the number of spores available for measurement varied between slides. The experiment was laid out as a randomized complete block design, with four separate humidity chambers as blocks, each containing one slide of each treatment. All chambers were then incubated in a climate-controlled cabinet. This design was chosen to account for potential variation between the chambers (blocks) and to ensure the statistical robustness of the results.

For the length of germ tubes, we used a logarithmic transformation because this stabilized the variance and achieved approximate normality. Analysis then proceeded with a linear model assuming normality. If normality is assumed on the log-scale, the observed data have a log-normal distribution. Efficacies in this case can be estimated based on Eq. (5), which involves just the contrast of effects for treatment and control on the log-scale. The linear predictor comprised fixed effects for treatments and blocks and a random effect for slides. For uredospore germination, we fitted a pseudo-binomial logit model with fixed effects for treatments and blocks. Thus, if \(\mu\) denotes the probability of germination, the link function is

\[\eta = \operatorname{logit} \left( \mu \right) = \log \left( {\frac{\mu }{1 - \mu }} \right)\]
and the variance function for the observed number \(y\) of germinating uredospores in a sample of size \(m\) is

\[\operatorname{var} \left( y \right) = \phi m\mu \left( {1 - \mu } \right)\]
where \(\phi\) is an over-dispersion parameter and \(m\) is the number of spores in a sample. This model can be fitted by quasi-likelihood (Wedderburn 1974; Malik et al. 2020). The results for both traits are shown in Table 10. For both length of germ tubes and uredospore germination, there are some significant differences in efficacies, with PF3 clearly outperformed by the other three treatments.
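For a treatment-only layout (omitting the block effects of the actual analysis), the quasi-likelihood estimate of each germination probability is the pooled proportion of germinated spores, and \(\phi\) is conventionally estimated as the Pearson \(\chi^2\) statistic divided by its residual degrees of freedom. A minimal sketch with hypothetical slide counts (50 spores per slide, four slides per treatment; the treatment name is made up):

```python
# Sketch of the pseudo-binomial (quasi-binomial) logit model for a
# treatment-only layout; the block effects of the actual analysis are omitted.
# var(y) = phi * m * mu * (1 - mu); phi is estimated by Pearson X^2 / residual df.

def pooled_proportions(data):
    """data: {treatment: [(germinated, examined), ...]} -> germination probability."""
    return {t: sum(y for y, _ in s) / sum(m for _, m in s) for t, s in data.items()}


def pearson_dispersion(data, props):
    """Pearson chi-square divided by residual degrees of freedom."""
    chi2, n_obs = 0.0, 0
    for t, slides in data.items():
        mu = props[t]  # must lie strictly between 0 and 1
        for y, m in slides:
            chi2 += (y - m * mu) ** 2 / (m * mu * (1.0 - mu))
            n_obs += 1
    return chi2 / (n_obs - len(data))


# Hypothetical counts of germinated spores out of 50 per slide.
data = {
    "control": [(40, 50), (44, 50), (38, 50), (42, 50)],
    "metabolite_X": [(10, 50), (16, 50), (8, 50), (14, 50)],
}
props = pooled_proportions(data)
phi = pearson_dispersion(data, props)
inhibition = 1.0 - props["metabolite_X"] / props["control"]  # Abbott-type efficacy
print(props, round(phi, 3), round(inhibition, 3))
```

Values of \(\phi\) above 1 indicate over-dispersion relative to the binomial; the point estimates of the probabilities are unaffected by \(\phi\), but their standard errors scale with \(\sqrt{\phi}\).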

Example 6: growth of milkweed bugs on different diets

Milkweed bugs (Heteroptera: Lygaeidae: Lygaeinae) are seed feeders known to sequester toxic secondary plant compounds such as cardiac glycosides or alkaloids for defence against predators. Although they feed on a wide variety of seeds in the field, milkweed bug species are typically associated with specific toxic plants from which they sequester toxins. The study by Petschenka et al. (2022) tested the hypothesis that selected milkweed bug species associate with such toxic plants to acquire toxins for defence rather than because the seeds of these plants are a valuable food resource. To this end, three milkweed bug species that sequester toxins from three different plant species were studied, and their growth on different seed diets was assessed: Horvathiolus superbus, associated with Digitalis purpurea; Lygaeus equestris, associated with Adonis vernalis; and Spilostethus saxatilis, associated with Colchicum autumnale. To study growth, first-instar larvae of all species were reared in Petri dishes in groups of initially three individuals (some individuals died during the observation period) on four different diets (see Petschenka et al. 2022 for details). Sunflower seeds (diet 1), which are suitable for rearing milkweed bugs over many generations, served as the control. The experimental diets were a mixture containing seeds of 11–15 different plant species (diet 2), the same seed mixture additionally containing seeds of the respective toxic plant (diet 3; i.e. D. purpurea for H. superbus, A. vernalis for L. equestris, and C. autumnale for S. saxatilis), and pure toxic seeds (diet 4). Initial body weights were recorded before the start of the experiment, and body masses were then recorded weekly for three weeks (i.e. four body masses in total). To obtain body mass, all bugs in a Petri dish were weighed together. The level of replication (n), i.e. the number of Petri dishes per diet, was 9 or 10 for H. superbus and 11 for L. equestris and S. saxatilis.

The estimated biomass per individual was log-transformed and regressed on time for every Petri dish. The slope obtained for each dish corresponds to an estimate of the relative growth rate under an exponential growth model, which was found to be suitable in previous analyses (Petschenka et al. 2022). As one or two of the individuals in a Petri dish died in some cases, we used the number of individuals as a weight variable. The estimated slopes were used as the response variable, assumed to be normally distributed, in a two-way ANOVA with effects for species and for diet nested within species. Treatment means from this model were used to estimate efficacy using Eq. (4). Note that this two-stage approach corresponds to the summary-measures approach to repeated measures analysis (Piepho et al. 2004). Serial correlation must be expected among the four observations on the same Petri dish, but observations on different dishes are independent. Hence, we may assume independence between the estimated relative growth rates of different dishes. The error variance was allowed to depend on species (Table 11). Error degrees of freedom were approximated by the Kenward-Roger method. One outlier with an absolute studentized residual > 4 was removed. The means are independent, which simplifies the application of the delta method to estimates of efficacy as per Eq. (4). Only for Lygaeus equestris did all diets differ significantly from the control, but there are no differences in efficacy among the three diets for this species (Table 12). Efficacies for the other two species are partly negative, but because the comparisons with the control are non-significant, they are not significantly different from zero. The only exception is the mixture with Colchicum fed to Spilostethus saxatilis, which displayed a significantly larger relative growth rate than the control.
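The two stages can be sketched as follows, assuming natural logs and weeks as the time unit: an ordinary least-squares slope of log(mass per individual) on time gives each dish's relative growth rate, and, because the treatment means are independent, the delta-method variance of \(\hat{\varepsilon} = 1 - \hat{\mu}_{T}/\hat{\mu}_{C}\) reduces to \((\mu_T/\mu_C)^2\left[\operatorname{var}(\hat{\mu}_T)/\mu_T^2 + \operatorname{var}(\hat{\mu}_C)/\mu_C^2\right]\). The weighted, species-heterogeneous ANOVA of the second stage is not reproduced; all numbers are hypothetical.

```python
import math


def relative_growth_rate(weeks, total_mass, n_individuals):
    """OLS slope of log(mass per individual) on time for one Petri dish."""
    y = [math.log(mass / n) for mass, n in zip(total_mass, n_individuals)]
    t_bar = sum(weeks) / len(weeks)
    y_bar = sum(y) / len(y)
    sxy = sum((t - t_bar) * (v - y_bar) for t, v in zip(weeks, y))
    sxx = sum((t - t_bar) ** 2 for t in weeks)
    return sxy / sxx


def efficacy_independent(mu_t, se_t, mu_c, se_c):
    """Efficacy 1 - mu_t/mu_c and its delta-method SE, assuming independent means."""
    ratio = mu_t / mu_c
    se = abs(ratio) * math.sqrt((se_t / mu_t) ** 2 + (se_c / mu_c) ** 2)
    return 1.0 - ratio, se


# Hypothetical dish: bugs growing at rate 0.5 per week, one dies before week 3.
weeks = [0, 1, 2, 3]
n_alive = [3, 3, 3, 2]
masses = [n * 0.01 * math.exp(0.5 * t) for n, t in zip(n_alive, weeks)]
print(relative_growth_rate(weeks, masses, n_alive))  # slope of log mass per bug

# Hypothetical diet and control means of the growth rates, with their SEs.
print(efficacy_independent(0.40, 0.02, 0.50, 0.025))
```

Dividing the pooled dish mass by the number of surviving bugs keeps a death from being mistaken for a drop in individual growth.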

Example 7: weed suppression by cover cropping

In a field experiment in South-Western Germany, five cover crops were grown in monoculture and as a mixture of all five cover crops (Gerhards et al. 2024). The experiment also included a control treatment without a cover crop. Three methods of cover crop establishment were tested in this experiment: (1) spreading seeds into the standing winter cereal crop shortly before harvest (pre-harvest); (2) no-till seeding immediately after harvest of the preceding winter cereal crop; (3) shallow soil tillage immediately after harvest, followed by rotary hoeing and seeding of the cover crop 10 days after harvest (conventional). The experiment was laid out as a two-factorial block design with four replicates. The plots were 3 m wide and 12 m long, and the row spacing of the cover crops was 12.5 cm. Before winter, approximately 12 weeks after sowing, the aboveground biomass of cover crops, weeds and volunteer cereals was collected separately in four randomly placed frames (0.5 m × 0.5 m) per plot. Biomass samples were dried at 80 °C for 48 h. The quantity of interest was the percentage reduction in weed biomass due to the cover crops, relative to the control without cover crop, shortly before the end of the vegetation period in November. It was hypothesized that rapidly growing cover crop species such as Fagopyrum esculentum and Phacelia tanacetifolia, as well as allelopathic cover crops such as Avena strigosa, provide the highest weed suppression under well-prepared seedbed conditions (conventional), whereas mixtures perform better under no-till and pre-harvest sowing without any seedbed preparation. In earlier studies, mixtures grew well under adverse conditions because they can compensate for the failure of individual partners (Gerhards and Schappert 2020).

We fitted a GLM with fixed effects for block as well as the two treatment factors Sowing and Covercrop and their interaction. Inspection of studentized residuals based on a model with identity link assuming normality revealed an increase in variance with the mean (Fig. 1a). Thus, we used quasi-likelihood (Wedderburn 1974), specifying a log-link and a quadratic variance function, \({\text{var}} \left( y \right) = \phi \mu^{2}\), corresponding to a constant coefficient of variation. This is also the variance function of the Gamma distribution (McCullagh and Nelder 1989, Chapter 8). However, we could not fit the Gamma distribution, as this would have required a strictly positive response (\(y > 0\)), whereas for some plots we have \(y = 0\). The quasi-likelihood approach provides a convenient framework to handle such data. The studentized residuals for this model indicate that the quadratic variance function is suitable (Fig. 1b), and confirm that the response has a skewed distribution, as would also be expected for a Gamma distribution.

Fig. 1 Example 7: studentized residuals for weed biomass using a linear predictor with fixed effects for block as well as the two treatment factors Sowing and Covercrop and their interaction; (a) assuming a normal distribution and identity link, (b) using quasi-likelihood with a log-link and variance function \({\text{var}} \left( y \right) = \phi \mu^{2}\)
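Why zeros are harmless under quasi-likelihood: with a log link and \(V(\mu) = \mu^2\), the estimating equations are \(\sum_i x_i (y_i - \mu_i)/\mu_i = 0\), which only involve \(y\) linearly. In a model with one mean per cell (a simplification; blocks are ignored here), they are solved by the cell means, and \(\phi\) can be estimated from Pearson residuals. A deviance-based estimate, by contrast, involves \(\log(y/\mu)\) and breaks down at \(y = 0\). A sketch with hypothetical biomass values:

```python
# Sketch: quasi-likelihood with log link and var(y) = phi * mu^2, one mean per
# cell (blocks ignored). The estimating equations sum((y - mu) / mu) = 0 per
# cell are solved by the cell mean, so zero responses are unproblematic.

def cell_means(data):
    return {cell: sum(ys) / len(ys) for cell, ys in data.items()}


def pearson_phi_quadratic(data):
    """Pearson estimate of phi for var(y) = phi * mu^2 (constant CV)."""
    means = cell_means(data)
    chi2 = sum(((y - means[c]) / means[c]) ** 2 for c, ys in data.items() for y in ys)
    n_obs = sum(len(ys) for ys in data.values())
    return chi2 / (n_obs - len(data))


# Hypothetical weed biomass per plot (g m^-2), including a zero.
data = {
    "no cover crop": [120.0, 95.0, 150.0, 110.0],
    "cover crop X": [0.0, 12.0, 5.0, 8.0],
}
means = cell_means(data)
print(means, round(pearson_phi_quadratic(data), 3))
print("estimated CV:", round(pearson_phi_quadratic(data) ** 0.5, 3))
```

Since \(\operatorname{var}(y) = \phi\mu^2\), the square root of \(\hat{\phi}\) is the estimated coefficient of variation shared by all cells.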

The ANOVA in Table 13, which uses the modelling approach described in Piepho et al. (2006), shows that there is a significant interaction between the factors Sowing and Covercrop. Hence, we compare means and efficacies of cover crops separately for each sowing method. Table 14 shows means on both the log-scale and the original scale of the fitted GLM for the seven cover crop treatments, separately for the three sowing methods. Table 15 shows the estimated efficacies. As we used a log-link, confidence intervals for efficacy could be computed based on Eq. (5). With machine sowing and with no-till, Avena strigosa had a higher efficacy than Phacelia tanacetifolia, though the difference was not significant. With pre-harvest sowing, Phacelia tanacetifolia and the mixture had significantly better weed suppression.
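Assuming, as the text indicates, that Eq. (5) expresses efficacy through the log-scale contrast \(d = \eta_T - \eta_C\) as \(\varepsilon = 1 - \exp(d)\), a Wald interval \(d \pm z \cdot \text{se}(d)\) maps to an efficacy interval by transforming its endpoints. Because the map is decreasing, the endpoints swap. The sketch uses a normal quantile rather than a t quantile with the model's denominator degrees of freedom; the contrast and its SE are hypothetical.

```python
import math
from statistics import NormalDist


def efficacy_ci_from_log_contrast(d, se_d, level=0.95):
    """Efficacy 1 - exp(d) for a log-scale contrast d = eta_T - eta_C, with a
    Wald interval for d back-transformed endpoint by endpoint."""
    z = NormalDist().inv_cdf(0.5 + level / 2.0)
    estimate = 1.0 - math.exp(d)
    lower = 1.0 - math.exp(d + z * se_d)  # the map is decreasing, so the ends swap
    upper = 1.0 - math.exp(d - z * se_d)
    return estimate, lower, upper


# Hypothetical contrast: the treatment mean is 20% of the control mean.
print(efficacy_ci_from_log_contrast(math.log(0.2), 0.30))
```

The resulting interval is asymmetric around the estimate and can never exceed 1, which matches the range of an efficacy defined as \(1 - \mu_T/\mu_C\) with non-negative means.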

Cover crop biomass varied significantly between cover crop treatments and modes of establishment. Phacelia tanacetifolia and Fagopyrum esculentum produced three times as much biomass as Avena strigosa and Vicia sativa. Avena strigosa could compensate for its lower biomass through a stronger allelopathic effect on weeds. Fagopyrum esculentum was characterized by a rapid increase in biomass after emergence. However, this species had already been damaged by frost at the time of biomass cutting.

Example 8: entomopathogenic fungi

In a laboratory assay, the efficacy of different isolates of entomopathogenic fungi was tested against third-instar nymphs of Pentatoma rufipes L. (Heteroptera: Pentatomidae), a fruit-damaging stink bug. Bugs were placed in small plastic containers and sprayed with spore suspensions of entomopathogenic fungi that had previously been isolated from diseased insects found in orchards where the bugs were also present. For each entomopathogenic fungus, six replicates of seven nymphs were sprayed. Bugs were fed freshly cut apples every two days, and mortality was monitored weekly for four weeks. The goal of the assay was to determine whether any entomopathogenic fungus can achieve a high efficacy and could therefore provide a potential alternative to insecticides for controlling this pest in orchards. The tested entomopathogenic fungi were Beauveria bassiana (Balsamo) Vuillemin, Lecanicillium lecanii Zare and W.Gams, two strains of Entomophthora sp., and Isaria farinosa (Homsk.) Fr.

We analyse the data separately for each time point (week) using a pseudo-binomial logit model, which has a scale parameter to account for over-dispersion relative to the binomial distribution (Malik et al. 2020; Stroup et al. 2024). Thus, if \(\mu_{ij}\) denotes the probability of survival for the j-th replicate of the i-th treatment, our model has the linear predictor

\[\operatorname{logit} \left( {\mu_{ij} } \right) = \log \left( {\frac{{\mu_{ij} }}{{1 - \mu_{ij} }}} \right) = \theta + \tau_{i}\]
where \(\theta\) is an intercept and \(\tau_{i}\) is the effect of the i-th treatment. The probability of survival for the i-th treatment is

\[\mu_{i} = \frac{{\exp \left( {\eta_{i} } \right)}}{{1 + \exp \left( {\eta_{i} } \right)}}\]
where \(\eta_{i} = \theta + \tau_{i}\) are the treatment means on the logit-scale. Estimates of these means can be obtained from the GLMM package, along with the estimated variance–covariance matrix. Standard errors of the estimate of \(\varepsilon_{i}\) can then be computed using the delta method as documented in Eqs. (12) and (13) in Example 2.
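A generic delta-method sketch, assuming \(\varepsilon_i = 1 - \mu_i/\mu_C\) with \(\mu = 1/(1 + \exp(-\eta))\); it is not meant to reproduce the exact form of Eqs. (12) and (13). The inputs are the two logit-scale means and their 2 × 2 variance–covariance matrix as reported by the GLMM software; the numbers are hypothetical.

```python
import math


def expit(eta):
    """Inverse logit: probability from a logit-scale value."""
    return 1.0 / (1.0 + math.exp(-eta))


def efficacy_logit_delta(eta_t, eta_c, cov):
    """Efficacy 1 - mu_t/mu_c from logit-scale means, with a delta-method SE.

    cov is the 2x2 variance-covariance matrix of (eta_t, eta_c),
    given as [[var_t, cov_tc], [cov_tc, var_c]].
    """
    mu_t, mu_c = expit(eta_t), expit(eta_c)
    g_t = -mu_t * (1.0 - mu_t) / mu_c   # derivative of the efficacy w.r.t. eta_t
    g_c = mu_t * (1.0 - mu_c) / mu_c    # derivative of the efficacy w.r.t. eta_c
    var = g_t * g_t * cov[0][0] + 2.0 * g_t * g_c * cov[0][1] + g_c * g_c * cov[1][1]
    return 1.0 - mu_t / mu_c, math.sqrt(var)


# Hypothetical logit-scale means (treatment, control) and their covariance matrix.
est, se = efficacy_logit_delta(0.2, 0.9, [[0.12, 0.01], [0.01, 0.10]])
print(round(est, 4), round(se, 4))
```

Keeping the covariance term matters: treatment and control means from the same fitted model are generally correlated through shared parameters such as the intercept.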

Table 16 shows adjusted means on the linear predictor scale (estimates of \(\eta_{i} = \theta + \tau_{i}\)) and their pairwise comparisons for the last five dates. In all cases, the comparison with the control is not significant, meaning that the efficacies \(\varepsilon\) are not significantly different from 0, so the analysis does not establish an effect of the treatments relative to the control. Efficacies and their pairwise comparisons are shown in Table 17. Some of the estimated efficacies are negative, reflecting the fact that the control had slightly lower predicted survival probabilities than some of the treatments, though these differences were not significant.

The same data can be used for survival analysis, which makes use of all time points simultaneously and therefore is potentially more efficient (Cox and Oakes 1984; Hay et al. 2014; Onofri et al. 2022). To this end, the data need to be re-coded so that the lower and upper limits of the time interval in which an individual died are obtained. Here, we assume that the lower bound of the interval between 10 and 17 April equals 1 day of survival after treatment. We use maximum likelihood to fit alternative distributions for the survival times of individual insects (Onofri et al. 2019), as implemented in the LIFEREG procedure of SAS (Allison 1995), and the ‘survival’ and ‘flexsurv’ packages in R. The Akaike information criterion (AIC) identifies the log-normal distribution as the best model for the survival times (Table 18). Means on the log-scale are reported in Table 19. Now there is one treatment (i.e. B. bassiana) that is significantly different from the control, suggesting that survival analysis has better power than analysis of counts per time point.
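With weekly checks, each insect contributes the probability that its survival time falls in its observed interval (L, U]; insects still alive at the end are right-censored at L. A minimal sketch of this log-likelihood under a log-normal model for a single treatment (no covariates or random effects), together with the AIC used to compare distributions, is given below. It illustrates the kind of likelihood that LIFEREG or the R ‘survival’ package maximize; the intervals and parameter values are hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def lognormal_interval_loglik(intervals, eta, sigma):
    """Log-likelihood of interval-censored survival times, log(t) ~ N(eta, sigma^2).

    intervals: list of (lower, upper) in days; lower <= 0 means death before the
    first check, upper=None means still alive at the end (right-censored).
    """
    total = 0.0
    for lower, upper in intervals:
        f_low = 0.0 if lower <= 0 else STD_NORMAL.cdf((math.log(lower) - eta) / sigma)
        f_up = 1.0 if upper is None else STD_NORMAL.cdf((math.log(upper) - eta) / sigma)
        total += math.log(f_up - f_low)  # probability mass of the observed interval
    return total


def aic(loglik, n_params):
    """Akaike information criterion: smaller is better."""
    return 2 * n_params - 2 * loglik


# Hypothetical insects: deaths in weeks 1, 2 and 3, and one survivor.
intervals = [(0.0, 7.0), (7.0, 14.0), (14.0, 21.0), (21.0, None)]
ll = lognormal_interval_loglik(intervals, eta=2.5, sigma=0.8)
print(ll, aic(ll, n_params=2))
```

Swapping the normal CDF for the CDF of another log-location-scale distribution gives the competing models whose AIC values are compared in Table 18.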

To estimate the efficacy, we need to transform means from the log-scale to the original scale. Under the log-normal model, the logarithmic survival times \(t_{ij}\) for the j-th insect and the i-th treatment follow the model

\[t_{ij} = \theta + \tau_{i} + e_{ij}\]
where \(e_{ij}\) is normal with zero mean and variance \(\sigma_{e}^{2}\). If \(\eta_{i} = \theta + \tau_{i}\) denotes the mean on the log-scale, it follows from properties of the log-normal distribution (Johnson et al. 1994, p. 212) that the mean on the original scale is \(\mu_{i} = \exp \left( {\eta_{i} + \frac{1}{2}\sigma_{e}^{2} } \right)\). From this result, we find the efficacy of the i-th treatment (T) using Eq. (5) and the corresponding standard error using Eq. (6).
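Because \(\mu_i = \exp(\eta_i + \sigma_e^2/2)\) with a common \(\sigma_e^2\), the factor \(\exp(\sigma_e^2/2)\) cancels in \(\mu_T/\mu_C\), so the efficacy on the original scale depends only on the log-scale contrast \(d = \eta_T - \eta_C\). A quick numerical check with hypothetical values, including the standard delta-method SE \(\exp(d)\,\text{se}(d)\) of \(1 - \exp(d)\):

```python
import math


def lognormal_mean(eta, sigma2):
    """Mean on the original scale when log(t) ~ N(eta, sigma2)."""
    return math.exp(eta + 0.5 * sigma2)


# Hypothetical log-scale estimates: control and treatment means, common variance.
eta_c, eta_t, sigma2 = 3.0, 2.4, 0.8
se_d = 0.35  # hypothetical standard error of the contrast eta_t - eta_c

eff_from_means = 1.0 - lognormal_mean(eta_t, sigma2) / lognormal_mean(eta_c, sigma2)
eff_from_contrast = 1.0 - math.exp(eta_t - eta_c)    # exp(sigma2 / 2) cancels
se_eff = math.exp(eta_t - eta_c) * se_d              # delta method for 1 - exp(d)
print(round(eff_from_means, 4), round(eff_from_contrast, 4), round(se_eff, 4))
```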

Estimated efficacies for B. bassiana and L. lecanii in Table 19 are notably larger than those from the binomial analyses (Table 17), but their standard errors are also quite large. In closing, we note that all inference took place on the linear predictor scale and that estimation of efficacies and their standard errors was a post-processing step based on the fitted model.

The survival analysis presented here has assumed independence between insects. It would normally be preferable to also fit a random effect for replicates, accounting for the fact that insects in the same replicate are positively correlated and constitute pseudo-replications (Hurlbert 1984; Onofri et al. 2019). Our simpler analysis here may be justified based on the fact that a Wald test for replicate effects nested within treatments is not significant (\(\chi^{2} = 26.88\); \(p = 0.6295\)).

All analyses indicate that the B. bassiana isolate has the strongest potential to control P. rufipes; however, an efficacy of around 54% (s.e. = 17.39%) is typically deemed insufficient for controlling insect pests, especially under high pest pressure.

Example 9: estimation of LD50 accounting for baseline mortality

Sensitivity to the neonicotinoid insecticide Mospilan®SG was tested on the non-target plant bug species Leptopterna dolabrata (Heteroptera: Miridae) (Sedlmeier et al. 2024). A classical dose–response assay with topical insecticide application was conducted. The aim was to obtain LD50 values at the species level and for male and female specimens separately, to investigate the potential influence of sex on insecticide sensitivity. Following previous dose-spacing experiments, a dilution series of Mospilan®SG (dissolved in water + 0.5% Tween 20) with six 1:3 dilutions was prepared, ranging from 100% (the suggested field application rate) to 0.14%. Specimens of L. dolabrata were collected on a meadow by sweep netting, transferred to the laboratory and placed in plastic boxes to allow them to settle. Four identical assays were run in sequence to account for a possible cohort effect. Each assay included two replicates per concentration, each consisting of six specimens (3 male + 3 female). The specimens of a replicate were first placed in a Petri dish and later transferred to rearing boxes (see below). Accordingly, each concentration comprised eight replicates nested within four cohorts. The specimens inside the Petri dish were anaesthetized with CO₂, which was released into the Petri dish using a CO₂ gun. Then, 1 µl of insecticide solution (or pure solvent as a control) was applied dorsally to each specimen. The specimens of each Petri dish were then transferred to rearing boxes that mimicked their natural environment and were provided with water and food. After 24 h, mortality was recorded for each treatment, replicate and sex. Considerable mortality was observed in the control, regardless of the cohort, which had to be accounted for in the dose–response model to avoid bias in the LD50 estimates.

To determine the LD50 when there is natural mortality in the control, it is convenient to model mortality rather than survival. Abbott’s correction assumes independence between mortality from natural causes and mortality due to the insecticide treatment (see ‘Appendix’). Let \(P_{1}\) denote the probability of mortality from natural causes and \(P_{2}\) the probability of mortality caused by the insecticide. It then follows from the independence assumption that the overall probability of mortality equals

\[P = P_{1} + P_{2} - P_{1} P_{2} \qquad (23)\]
Note that the overall survival probability, given by \(1 - P\), follows the product rule of independence, such that \(1 - P = \left( {1 - P_{1} } \right)\left( {1 - P_{2} } \right)\), which may be rearranged to yield Eq. (23). Both probabilities may be modelled by a binomial GLMM, and the GLMMs need to be connected to obtain the overall model. In the case at hand, we may use the following linear predictors for \(\eta_{1} = g\left( {P_{1} } \right)\) and \(\eta_{2} = g\left( {P_{2} } \right)\), where \(g\left( . \right)\) is a suitable link function such as the logit or probit function:

\[\eta_{1} = \theta_{1} + c_{1j} + r_{1jk} \qquad (24)\]

\[\eta_{2} = \theta_{2} + c_{2j} + r_{2jk} + \beta \log \left( {D_{i} } \right) \qquad (25)\]
where \(\theta_{h}\) \(\left( {h = 1,2} \right)\) denotes the intercept, \(c_{hj}\) is the j-th cohort effect, \(r_{hjk} \sim N\left( {0,\sigma_{p}^{2} } \right)\) is the random effect for the k-th replicate within the j-th cohort, which accounts for possible over-dispersion, \(\beta\) is the regression coefficient for log-dose, and \(D_{i}\) is the i-th dose. We impose the constraint \(\sum\nolimits_{j} {c_{hj} } = 0\). This ensures that \(\theta_{2}\) can be regarded as the average intercept of the regression on log-dose, such that the LD50 obeys the equation \(\theta_{2} = - \beta \times \log \left( {LD_{50} } \right)\), allowing the model to be re-parameterized with LD50 as a parameter. Also, the average mortality in the control is given by \(g^{ - 1} \left( {\theta_{1} } \right)\). We may further consider the simplification \(c_{1j} = c_{2j}\) and \(r_{1jk} = r_{2jk}\). To fit this model, we need to consider that, whereas the individual models in Eqs. (24) and (25) are GLMMs, the joint model in Eq. (23) is not a GLMM but a nonlinear mixed model. Here, we fit the model in Eq. (23) by full maximum likelihood using adaptive Gaussian quadrature (Pinheiro and Bates 1995), with a probit link for \(P_{1}\) and \(P_{2}\), as implemented in the NLMIXED procedure of SAS and the ‘optim’ function in R.
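The fixed-effects skeleton of this model can be sketched as a function returning the overall mortality \(P = P_1 + (1 - P_1)P_2\) for a given dose, with \(P_2 = \Phi(\beta(\log D - \log LD_{50}))\), i.e. the probit form of Eq. (25) with \(\theta_2 = -\beta \log(LD_{50})\). The sketch drops the cohort and replicate effects (which is why the actual fit needs quadrature), assumes natural logs of dose in the units of the reported LD50, and plugs in the point estimates reported below for L. dolabrata purely for illustration.

```python
import math
from statistics import NormalDist

PHI = NormalDist().cdf  # probit inverse link


def overall_mortality(dose, base_mortality, beta, ld50):
    """P = P1 + (1 - P1) * P2, i.e. Eq. (23), with a probit dose-response
    P2 = Phi(theta2 + beta * log(dose)) and theta2 = -beta * log(ld50)."""
    if dose <= 0:                         # solvent-only control: natural mortality only
        return base_mortality
    p2 = PHI(beta * (math.log(dose) - math.log(ld50)))
    return base_mortality + (1.0 - base_mortality) * p2


def binomial_loglik(data, base_mortality, beta, ld50):
    """Binomial log-likelihood (up to a constant) for (dose, dead, n) tuples."""
    ll = 0.0
    for dose, dead, n in data:
        p = overall_mortality(dose, base_mortality, beta, ld50)
        ll += dead * math.log(p) + (n - dead) * math.log(1.0 - p)
    return ll


# Point estimates reported for L. dolabrata; cohort and replicate effects ignored.
p1, beta, ld50 = 0.1394, 0.5459, 48.65
for dose in (0.0, 1.0, ld50, 100.0):
    print(dose, round(overall_mortality(dose, p1, beta, ld50), 4))
```

At the LD50 the overall mortality is \(P_1 + (1 - P_1)/2\), about 0.57 here rather than 0.5, because the LD50 refers to the insecticide-induced component \(P_2\) only.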

For L. dolabrata, this model yields \(\hat{\sigma }_{p}^{2} = 0\), indicating there is no over-dispersion. Furthermore, \(\hat{\beta } = 0.5459\) (s.e. = 0.1184), the estimated base mortality is 0.1394 (s.e. = 0.0531) and the estimated LD50 equals 48.65 (s.e. = 15.28). The fitted dose–response curve is shown in Fig. 2.

Next, we extended the model to allow for the stratification of each Petri dish by the two sexes and fitted a joint model for both probit regressions. For male insects, we find an estimated base mortality of 0.2314 (s.e. = 0.0938) and an estimated LD50 of 6.478 (s.e. = 2.602). For female insects, we find an estimated base mortality of 0.0097 (s.e. = 0.0102) and an estimated LD50 of 150.82 (s.e. = 22.63). The two fitted models are depicted in Fig. 3. Again, the variance component for Petri dishes is estimated to be zero, suggesting absence of over-dispersion. Comparing the AIC of the two models (Figs. 2 and 3) suggests that accounting for sex is important. Also, a Wald test comparing the two LD50 values is significant (z = − 7.34, p < 0.0001), further supporting this extended model.

An alternative approach that is commonly used is to compute the corrected mortality, \(E = 1 - T/C\), per cohort and then fit a dose–response curve using standard procedures (probit regression, etc.), assuming that the corrected mortality can range from 0 to 1. The challenge with such an approach is how to account for the variance of the observed counts (Rosenheim and Hoy 1989). Assuming independence between replicates and purely binomial sampling, it follows from Eq. (3) that the approximate variance function for the estimated corrected mortality (efficacy) \(E\) is

\[\operatorname{var} \left( E \right) \approx \frac{{\left( {1 - \varepsilon } \right)\left[ {1 + \left( {1 - \varepsilon } \right)\left( {1 - 2\mu_{c} } \right)} \right]}}{{m\mu_{c} }} \qquad (26)\]
where \(m\) is the number of insects per treatment and cohort and \(\varepsilon = 1 - \mu_{t} /\mu_{c}\) [In case of over-dispersion, the variance in Eq. (26) may be multiplied by a constant dispersion parameter]. For exploring the variance function, we here set \(m = 12\), but any other value would yield the same shape of the variance function. Figure 4 shows the variance of \(E\) as a function of \(\varepsilon\) and \(\mu_{c}\), the survival rate in the control. There is obviously heterogeneity of variance. One might be tempted to consider analysing \(E\) using a quasi-binomial model (Wedderburn 1974) with variance function \(\varepsilon \left( {1 - \varepsilon } \right)\) (Karunarathne et al. 2022), but inspection of Eq. (26) as well as Fig. 4 shows that this is not an appropriate variance function unless the survival rate in the control equals \(\mu_{c} = 1\). A further very serious problem with this idea is that estimates \(E\) for individual replicates can easily fall outside the permissible range between 0 and 1, in which case quasi-likelihood cannot be applied.

Fig. 4 Example 9: variance of \(E\) [var(E)] as a function of \(\varepsilon = 1 - \mu_{t} /\mu_{c}\) and \(\mu_{c}\), the survival rate in the control, computed using Eq. (26)
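Assuming that \(T\) and \(C\) are independent binomial survival proportions, each based on \(m\) insects, the delta method applied to \(E = 1 - T/C\) gives \(\operatorname{var}(E) \approx (1-\varepsilon)\left[1 + (1-\varepsilon)(1-2\mu_c)\right]/(m\mu_c)\), which reduces to the quasi-binomial \(\varepsilon(1-\varepsilon)/m\) only when \(\mu_c = 1\). The sketch evaluates both and, by simulation, estimates how often a replicate-level \(E\) falls below 0 (with \(m = 12\) as above; the other parameter values are hypothetical).

```python
import random


def var_e(eps, mu_c, m):
    """Delta-method variance of E = 1 - T/C for independent binomial
    proportions T and C, each based on m insects; mu_t = (1 - eps) * mu_c."""
    return (1.0 - eps) * (1.0 + (1.0 - eps) * (1.0 - 2.0 * mu_c)) / (m * mu_c)


def quasi_binomial_var(eps, m):
    """Variance function eps * (1 - eps) / m assumed by a quasi-binomial model."""
    return eps * (1.0 - eps) / m


def share_negative_e(eps, mu_c, m, n_sim=20000, seed=1):
    """Simulated share of replicates with E < 0; replicates with C = 0 are skipped."""
    rng = random.Random(seed)
    mu_t = (1.0 - eps) * mu_c
    negative = valid = 0
    for _ in range(n_sim):
        c = sum(rng.random() < mu_c for _ in range(m))   # survivors in the control
        t = sum(rng.random() < mu_t for _ in range(m))   # survivors in the treatment
        if c == 0:
            continue
        valid += 1
        negative += t > c                                # E = 1 - t/c < 0
    return negative / valid


m = 12
for mu_c in (1.0, 0.8, 0.5):
    print(mu_c, round(var_e(0.5, mu_c, m), 5), round(quasi_binomial_var(0.5, m), 5))
print("share of E < 0:", share_negative_e(eps=0.2, mu_c=0.8, m=m))
```

Since \(T \ge 0\), a replicate-level \(E\) can never exceed 1, but it becomes negative whenever more insects survive in the treatment than in the control, which is what rules out a quasi-binomial analysis of \(E\).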

Closing remarks

It has long been known that GLMMs are a powerful tool for analysing data arising from designed experiments in the field of plant protection, especially when count data are involved (Wedderburn 1974; Piepho 1999; Madden et al. 2002; Sudo et al. 2019). Application of this framework, however, is still relatively rare compared with more classical approaches based on analysis of variance and the assumptions of normality and homogeneity of variance. In relation to Abbott’s formula, the Common Approaches 1 and 2 mentioned in the introduction are still dominant in practice. While these approaches may work reasonably well depending on circumstances, there is an inherent risk that the usual assumptions are violated, because estimates of efficacy involve ratios of random variables and because the trait under consideration is not normally distributed and displays a functional variance-mean relationship that cannot be accommodated by standard ANOVA or simple nonlinear regression approaches. By contrast, GLMMs provide a very convenient framework to deal with these issues, and we hope that this tutorial-style paper can help to popularize the framework for efficacy evaluation.

The approach we suggest adheres to a general principle in applied statistics that is very lucidly laid down in the seminal text by McCullagh and Nelder (1989, pp. 21–25). It states that statistical analysis proceeds in three clearly separated steps: (i) model selection, (ii) fitting the selected model and (iii) making predictions from the fitted model. In the case of efficacy trials, step (ii) fits the model to the observed data, accounting for the experimental design and randomization layout using all necessary effects and the distributional peculiarities of the observed data, which are critically assessed in step (i). These first two steps yield estimates of the effects of the treatments and the control. In step (iii), the fitted model is used to estimate efficacies based on Eq. (4), and any statistical inference on these estimates is conducted, including pairwise comparisons or estimation of LD50. This approach differs fundamentally from the Common Approaches, where step (iii) precedes steps (i) and (ii) in that some estimate of efficacy is first obtained at the level of the experimental units and then analysed using classical ANOVA and regression. Important variance (and its degrees of freedom) due to the control treatment is thereby effectively discarded before the data are analysed, although the contribution of this variance should really be assessed in the context of a model for the full data set.

GLMMs involve a link function that relates the mean to the linear predictor; its inverse maps the linear predictor to the mean scale. As such, these models provide a convenient path to obtaining estimates of means for control and treatment on the observed scale, which can then be used to estimate efficacy based on Eq. (4). A popular alternative to GLMMs is to seek a data transformation that achieves approximate normality. If such a transformation can be found, analysis, including estimation of means for control and treatment as well as statistical inference, can proceed on the transformed scale using an LMM. When efficacy is to be assessed using Eq. (4) based on means (probabilities) on the observed scale, the means on the transformed scale must be back-transformed. This back-transformation is challenging in general, typically requiring application of the delta method (Johnson et al. 1993). Only in the special case of a log-transformation is there a very easy route to estimation of efficacy, as documented in Eqs. (5) and (6) and illustrated in Example 5. Except for this special case, a GLMM is more convenient and apt than a data transformation.

To deal with the problems associated with the two Common Approaches 1 and 2, we relied on the delta method. The delta method approximates standard errors of nonlinear transformations of parameter estimates based on a Taylor series expansion. Because the method is approximate, empirical coverage probabilities of confidence intervals may deviate from the nominal levels, particularly in small samples. How relevant this issue is for typical designs used in plant protection needs more specific investigation beyond the scope of this paper. There are additional alternatives to the delta method, for example the parametric bootstrap (Efron and Tibshirani 1993). A bootstrap approach is implemented in the R package ‘lme4’ [bootMer()], and the package ‘glmmTMB’ also allows simulating from a fitted model. From a parametric bootstrap distribution, useful inferences such as standard errors and confidence intervals may be obtained for any function of the model parameters, including the efficacy. Furthermore, Bayesian methods are becoming a viable alternative for most practical data analysis applications (Gelman and Hill 2007). Many of the models presented in this paper can be fitted as Bayesian models and are readily available in various software packages, for example the R packages ‘MCMCglmm’ or ‘rstanarm’ or the SAS procedure BGLIMM.
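The parametric bootstrap idea can be sketched without any GLMM machinery, assuming a one-way Poisson model whose fitted means are simply the sample means: simulate counts from the fitted means, re-estimate the means, recompute the efficacy and read off percentiles. For real models, `bootMer()` or simulation from a ‘glmmTMB’ fit would play the role of the simulation step; the means and replicate numbers below are hypothetical.

```python
import math
import random


def poisson(rng, lam):
    """Poisson draw by Knuth's multiplication method (fine for small means)."""
    limit, k, prod = math.exp(-lam), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def bootstrap_efficacy(mean_t, mean_c, n_t, n_c, n_boot=2000, seed=1, level=0.95):
    """Parametric-bootstrap percentile interval for 1 - mean_t / mean_c.

    Counts are simulated from the fitted Poisson means and the means are
    re-estimated (here simply as sample means, i.e. a one-way Poisson model).
    """
    rng = random.Random(seed)
    draws = []
    for _ in range(n_boot):
        m_t = sum(poisson(rng, mean_t) for _ in range(n_t)) / n_t
        m_c = sum(poisson(rng, mean_c) for _ in range(n_c)) / n_c
        if m_c > 0:                      # efficacy undefined for an all-zero control
            draws.append(1.0 - m_t / m_c)
    draws.sort()
    lo = draws[int((1.0 - level) / 2.0 * (len(draws) - 1))]
    hi = draws[int((1.0 + level) / 2.0 * (len(draws) - 1))]
    return 1.0 - mean_t / mean_c, lo, hi


# Hypothetical fitted means (counts per plot) with four replicates each.
print(bootstrap_efficacy(mean_t=4.0, mean_c=20.0, n_t=4, n_c=4))
```

Unlike a symmetric delta-method interval, the percentile interval inherits the skewness of the efficacy's sampling distribution and never exceeds 1.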

In several of the examples, pairwise comparisons among treatment means and efficacies constituted an integral part of the statistical inference. In many examples, there was only a single treatment factor, and comparing efficacies among levels of that factor was the main objective of the analysis. In four examples (3, 6, 7 and 8), there was one additional factor of interest, and a key question was whether that secondary factor modifies the efficacies of levels of the primary treatment factor. That question can be addressed by assessing the significance of the interaction between the two factors. Where such an interaction is deemed important, based either on a formal test or on other evidence and reasoning, comparisons among levels of the primary treatment factor need to be made separately for each level of the secondary factor (Onofri et al. 2010). It would not be helpful in these situations to indiscriminately perform all possible pairwise comparisons among means for the factorial combinations.
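A quick count shows why slicing by the secondary factor matters. With \(a\) levels of the primary treatment factor and \(b\) levels of the secondary factor, all pairwise comparisons among the \(ab\) factorial combinations number \(ab(ab - 1)/2\). Comparisons among primary-factor levels made separately within each secondary level number only \(b \cdot a(a - 1)/2\). The remaining comparisons involve combinations that differ in both factors and rarely address the research question. The sketch below enumerates both sets for a hypothetical design; the factor levels are invented for illustration.

```python
from itertools import combinations

def all_pairs(primary, secondary):
    """All pairwise comparisons among the factorial combinations."""
    cells = [(p, s) for s in secondary for p in primary]
    return list(combinations(cells, 2))

def sliced_pairs(primary, secondary):
    """Pairwise comparisons of primary-factor levels within each secondary level."""
    return [((p1, s), (p2, s))
            for s in secondary
            for p1, p2 in combinations(primary, 2)]

# Hypothetical design: 4 insecticides crossed with 3 application timings.
insecticides = ["A", "B", "C", "D"]
timings = ["early", "mid", "late"]
print(len(all_pairs(insecticides, timings)))     # 66
print(len(sliced_pairs(insecticides, timings)))  # 18
```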

Throughout the paper, we have reported significance tests and confidence intervals providing comparison-wise type I error control, without any adjustment for multiplicity. The main reason for this choice was to keep the exposition focused on the linear modelling. It should be kept in mind, however, that there are several alternative options providing simultaneous rather than merely comparison-wise control of type I error rates across all significance tests and confidence intervals. The most stringent procedures control the family-wise type I error rate (Bretz et al. 2010). An intermediate option is control of the false discovery rate (Benjamini and Hochberg 1995). These more stringent procedures entail a corresponding increase in the type II error rate. Hence, it is crucial to plan the sample size in accordance with the envisioned method of type I error control: the more stringent procedures require larger sample sizes to achieve the same power as procedures controlling only the comparison-wise type I error rate. In some examples, we have provided multiple comparisons among treatment means in addition to multiple comparisons among efficacies. In our experience, both types of comparison are usually of interest. It should be kept in mind, however, that performing both types of comparison increases the total number of comparisons and hence, under comparison-wise control, the chance of committing at least one type I error.
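As a minimal sketch of the options mentioned, the functions below compute three quantities. The first is Holm-adjusted p-values, from one of several procedures controlling the family-wise error rate (Bretz et al. 2010 describe others). The second is Benjamini-Hochberg adjusted p-values, which control the false discovery rate. The third is the probability of at least one type I error among \(m\) independent tests of true null hypotheses, each run unadjusted at level \(\alpha\); the independence is an assumption made only for this formula. The p-values are hypothetical. In practice one would use established software, such as R’s p.adjust(), rather than this illustration.

```python
def holm(pvalues):
    """Holm step-down adjusted p-values (family-wise error rate control)."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):           # rank = 0, 1, ..., m - 1
        value = min(1.0, (m - rank) * pvalues[i])
        running_max = max(running_max, value)  # adjusted values must not decrease
        adjusted[i] = running_max
    return adjusted

def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg step-up adjusted p-values (false discovery rate control)."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m - 1, -1, -1):          # from the largest p-value downwards
        i = order[rank]
        value = min(1.0, m * pvalues[i] / (rank + 1))
        running_min = min(running_min, value)
        adjusted[i] = running_min
    return adjusted

def prob_any_type_i_error(m, alpha=0.05):
    """P(at least one false rejection) for m independent unadjusted tests of true nulls."""
    return 1.0 - (1.0 - alpha) ** m

p = [0.01, 0.04, 0.03, 0.005]  # hypothetical unadjusted p-values
print(holm(p))                 # approx. [0.03, 0.06, 0.06, 0.02]
print(benjamini_hochberg(p))   # approx. [0.02, 0.04, 0.04, 0.02]
print(prob_any_type_i_error(10))
```

The last line shows the point made above: ten unadjusted comparisons at \(\alpha = 0.05\) give roughly a 40% chance of at least one false rejection when all null hypotheses are true.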

References

Aamlid TS, Espevig T, Tronsmo A (2017) Microbiological products for control of Microdochium nivale on golf greens. Crop Sci 57:559–566

Abbott WS (1925) A method of computing the effectiveness of an insecticide. J Econ Entomol 18:265–267

Agresti A (2002) Categorical data analysis, 2nd edn. Wiley, New York

Allison PD (1995) Survival analysis using the SAS system. SAS Institute Inc., Cary

Benjamini Y, Hochberg Y (1995) Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc B 57:289–300

Bliss CI (1967) Statistics in biology. McGraw-Hill, New York

Breslow NE, Clayton DG (1993) Approximate inference in generalized linear mixed models. J Am Stat Assoc 88:9–25

Bretz F, Hothorn T, Westfall P (2010) Multiple comparisons using R. CRC Press, Boca Raton

Büchse A, Piepho HP (2006) Messen, Schätzen, Bonitieren: Konsequenzen des Skalenniveaus für die Auswertung und Interpretation von Versuchsergebnissen, Tagungsband der DLG-Technikertagung. 37. Arbeitstagung AG Feldversuche in Soest am 30 und 31. Januar 2006, S 82–102

Colby SR (1967) Calculating synergistic and antagonistic responses of herbicide combinations. Weeds 15:20–22

Cox DR, Oakes D (1984) Analysis of survival data. Chapman and Hall, London

Douma JC, Weedon JT (2018) Analysing continuous proportions in ecology and evolution: a practical introduction to beta and Dirichlet regression. Methods Ecol Evol 10:1412–1430

Efron B, Tibshirani RJ (1993) An introduction to the bootstrap. Chapman & Hall/CRC, Boca Raton

Ferrari SLP, Cribari-Neto F (2004) Beta regression for modelling rates and proportions. J Appl Stat 31:799–815

Finney DJ (1964) Statistical method in biological assay, 2nd edn. Hafner Publishing Company, New York

Finney DJ (1971) Probit analysis, 3rd edn. Cambridge University Press, Cambridge

Fleming R, Retnakaran A (1985) Evaluating single treatment data using Abbott’s formulae with reference to insecticides. J Econ Entomol 78:1179–1181

Gbur EE, Stroup WW, McCarter KS, Durham S, Young LJ, Christman M, West M, Kramer M (2012) Analysis of generalized linear mixed models in the agricultural and natural resources sciences. ASA-CSSA-SSSA, Madison

Gelman A, Hill J (2007) Data analysis using regression and multilevel/hierarchical models. Cambridge University Press, Cambridge

Gerhards R, Schappert A (2020) Advancing cover cropping in temperate integrated weed management. Pest Manag Sci 76:42–46

Gerhards R, Andújar Sanchez D, Hamouz P, Peteinatos GG, Christensen S, Fernandez-Quintanilla C (2021) Advances in site-specific weed management: a review. Weed Res 62:123–133

Gerhards R, Schumacher M, Merkle M, Malik W, Piepho HP (2024) A new approach for modelling weed suppression of cover crops. Weed Res 64:219–226

Gisi U (1986) Synergistic interaction of fungicides in mixtures. Phytopathology 86:1273–1279

Hay FR, Mead A, Bloomberg M (2014) Modelling seed germination in response to continuous variables: use and limitations of probit analysis and alternative approaches. Seed Sci Res 24:165–186

Henderson CF, Tilton EW (1955) Tests with acaricides against the brown wheat mite. J Econ Entomol 48:157–161

Hurlbert SH (1984) Pseudoreplication and the design of ecological field experiments. Ecol Monogr 54:187–211

Jeger MJ (1989) Synergy of disease control actions: an epidemiological analysis. Plant Dis Prot 96:526–534

Johnson NL, Kotz S, Kemp AW (1993) Univariate discrete distributions, 2nd edn. Wiley, New York

Johnson NL, Kotz S, Balakrishnan N (1994) Continuous univariate distributions, vol 1, 2nd edn. Wiley, New York

Karunarathne P, Pocquet N, Labbé P, Milesi P (2022) BioRssay: an R package for analyses of bioassays and probit graphs. Parasit Vectors 15:35

Madden LV, Turechek WW, Nita M (2002) Evaluation of generalized linear mixed models for analyzing disease incidence data obtained in designed experiments. Plant Dis 86:316–325

Madden LV, Piepho HP, Paul PA (2016) Models and methods for network meta-analysis. Phytopathology 106:792–806

Malik W, Llorca CM, Berendzen K, Piepho HP (2020) Analysing a binomial response in an Arabidopsis thaliana bZIP experiment using generalized linear mixed models with different link and variance functions. Commun Stat Theory Methods 49:4313–4332

McCullagh P, Nelder JA (1989) Generalized linear models, 2nd edn. Chapman & Hall, London

McCulloch CE, Searle SR (2001) Generalized, linear, and mixed models. Wiley, New York

Nath CP, Kumar N, Hazra KK, Praharaj CS (2022) Bio-efficacy of different post-emergence herbicides in mungbean. Weed Res 62:422–430

Onofri A, Carbonell EA, Mortimer M, Piepho HP (2010) Current statistical issues in weed research. Weed Res 50:5–24

Onofri A, Piepho HP, Kozak M (2019) Analyzing interval-censored data in agricultural research: a review with examples and software tips. Ann Appl Biol 174:3–13

Onofri A, Mesgaran MB, Ritz C (2022) A unified framework for the analysis of germination, emergence, and other time-to-event data in weed science. Weed Sci 70:259–271

Oplos C, Eloh K, Spiroudi UM, Pierluigi C, Ntalli N (2018) Nematicidal weeds, Solanum nigrum and Datura stramonium. J Nematol 50:317–328

Parkash V, Saini R, Singh M, Singh S (2022) Comparison of the effects of ammonium nonanoate and an essential oil herbicide on weed control efficacy and water use efficiency in pumpkin. Weed Technol 36:64–72

Petschenka G, Halitschke R, Züst T, Roth A, Stiehler S, Tenbusch L, Hartwig C, Moreno Gamez JF, Trusch R, Deckert J, Chalusova K, Vilcinskas A, Exnerovova A (2022) Sequestration of defenses against predators drives specialized host plant associations in preadapted milkweed bugs (Heteroptera: Lygaeinae). Am Nat 199:E211–E228

Piepho HP (1999) Analysing disease incidence data from designed experiments by generalized linear mixed models. Plant Pathol 48:668–674

Piepho HP (2018) Letters in mean comparisons: what they do and don’t mean. Agron J 110:431–434

Piepho HP, Büchse A, Richter C (2004) A mixed modelling approach to randomized experiments with repeated measures. J Agron Crop Sci 190:230–247

Piepho HP, Williams ER, Fleck M (2006) A note on the analysis of designed experiments with complex treatment structure. HortScience 41:446–452

Piepho HP, Madden LV, Roger J, Payne R, Williams ER (2018) Estimating the variance for heterogeneity in arm-based network meta-analysis. Pharm Stat 17:264–277

Piepho HP, Gabriel D, Hartung J, Büchse A, Grosse M, Kurz S, Laidig F, Michel V, Proctor I, Sedlmeier JE, Toppel K, Wittenburg D (2022) One, two, three: portable sample size in agricultural research. J Agric Sci 160:459–482

Pinheiro JC, Bates DM (1995) Approximations to the log-likelihood function in the nonlinear mixed effects model. J Comput Graph Stat 4:12–35

Püntener W (1981) Manual for field trials in plant protection, 2nd edn. Agricultural Division, Ciba-Geigy Limited, Basel

Rice JA (1995) Mathematical statistics and data analysis, 2nd edn. Duxbury Press, Belmont

Rice SJ, Furlong MJ (2023) Synergistic interactions between three insecticides and Beauveria bassiana (Bals.-Criv.) Vuill. (Ascomycota: Hypocreales) in lesser mealworm, Alphitobius diaperinus Panzer (Coleoptera: Tenebrionidae), larvae. J Invertebr Pathol 200:107974

Ritz C, Baty F, Streibig JC, Gerhard D (2015) Dose-response analysis using R. PLoS ONE 10:e0146021

Robertson JL, Preisler HK (1992) Pesticide bioassays with arthropods. CRC Press, Boca Raton

Rosenheim JA, Hoy MA (1989) Confidence intervals for Abbott’s formula correction of bioassay data for control response. J Econ Entomol 82:331–335

Samoucha Y, Cohen Y (1986) Efficacy of systemic and contact fungicide mixtures in controlling late blight in potatoes. Phytopathology 76:855–859

Schneider-Orelli O (1947) Entomologisches Praktikum, 2nd edn. Sauerländer und Co., Aarau

Sedlmeier JE, Grass I, Bendalam P, Hoeglinger B, Walker F, Gerhard D, Piepho HP, Bruehl CA, Petschenka G (2024) The neonicotinoid acetamiprid is highly toxic to wild non-target insects. bioRxiv. https://doi.org/10.1101/2024.04.11.589033

Singh MK, Singh S, Prasad SK (2021) Weed suppression and crop yield in wheat after mustard seed meal aqueous extract application with reduced rate of isoproturon. J Agric Food Res 6:100235

Stiratelli R, Laird N, Ware JH (1984) Random-effects models for serial observations with binary response. Biometrics 40:961–971

Stroup WW, Claassen E (2020) Pseudo-likelihood or quadrature? What we thought we knew, what we think we know, and what we are still trying to figure out. J Agric Biol Environ Stat 25:639–656

Stroup WW, Ptukina M, Garai J (2024) Generalized linear mixed models: modern concepts, methods and applications, 2nd edn. CRC Press, New York

Sudo M, Yamanaka T, Miyai S (2019) Quantifying pesticide efficacy from multiple field trials. Popul Ecol 61:450–456

Warnecke-Busch G (2022) Mechanical-chemical weed control in sugar beets (Beta vulgaris subsp. vulgaris) and oil-seed rape (Brassica napus) with hoes in conjunction with a band spraying equipment. Julius-Kühn Archiv 468:385–399

Wedderburn R (1974) Quasi-likelihood functions, generalized linear models, and the Gauss-Newton method. Biometrika 61:439–447

Wolfinger R, O’Connell M (1993) Generalized linear mixed models: a pseudo-likelihood approach. J Stat Comput Simul 48:233–243

Wood S (2011) Fast stable restricted maximum likelihood and marginal likelihood estimation of semiparametric generalized linear models. J R Stat Soc B 73:3–36

Acknowledgements

Hans-Peter Piepho and Waqas Malik were supported by DFG Grant PI 377/24-1. We thank all reviewers of our paper for very helpful comments and suggestions.

Funding

Open Access funding enabled and organized by Projekt DEAL. German Research Foundation (DFG) Grant PI 377/24-1 to Hans-Peter Piepho.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Appendix

Let \(P_{1}\) be the probability of non-survival (mortality) from natural causes and \(P_{2}\) the probability of non-survival (mortality) due to a treatment. Then, assuming independence between the two causes, the probability of non-survival from either one of the causes is (Finney 1971, p. 125)

\[ P = P_{1} + \left( {1 - P_{1} } \right)P_{2} \tag{27} \]

Now observing that \(P\) in Eq. (27) must be the rate of non-survival in the treatment, hence equal to \(\left( {1 - \mu_{t} } \right)\), and that \(P_{1} = 1 - \mu_{c}\), we obtain

\[ 1 - \mu_{t} = \left( {1 - \mu_{c} } \right) + \mu_{c} P_{2} \]

Solving this for \(P_{2}\), we find

\[ P_{2} = \frac{{\mu_{c} - \mu_{t} }}{{\mu_{c} }} \]

which is equivalent to Eq. (1).
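The derivation can be checked numerically. The sketch below assumes, as above, that natural mortality \(P_{1}\) and treatment mortality \(P_{2}\) act independently, so that \(P = P_{1} + (1 - P_{1})P_{2}\). For a few hypothetical pairs \((\mu_{c}, P_{2})\) it computes the implied treatment survival \(\mu_{t}\). It then confirms that \((\mu_{c} - \mu_{t})/\mu_{c}\) recovers \(P_{2}\). The function names and values are illustrative only.

```python
import math

def combined_mortality(p1, p2):
    """Mortality from either of two independent causes: P = P1 + (1 - P1) * P2."""
    return p1 + (1.0 - p1) * p2

def abbott(mu_c, mu_t):
    """Abbott's efficacy from survival probabilities in control and treatment."""
    return (mu_c - mu_t) / mu_c

for mu_c, p2 in [(0.9, 0.5), (0.75, 0.2), (0.6, 0.95)]:
    p1 = 1.0 - mu_c                          # natural mortality in the control
    mu_t = 1.0 - combined_mortality(p1, p2)  # survival in the treatment
    # Survival from both causes is the product of the two survival probabilities.
    assert math.isclose(mu_t, (1.0 - p1) * (1.0 - p2))
    print(mu_c, p2, abbott(mu_c, mu_t))      # last value equals p2 up to rounding
```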

It is also worth noting that Abbott’s formula can be written in terms of the observed mortalities in the control and in the treatment. If we denote these as \(\overline{\mu }_{c} = 1 - \mu_{c}\) and \(\overline{\mu }_{t} = 1 - \mu_{t}\), we may write (Bliss 1967, p. 75; Finney 1964, p. 564; Finney 1971, p. 125; Schneider-Orelli 1947; Püntener 1981; Rosenheim and Hoy 1989)

\[ P_{2} = \frac{{\overline{\mu }_{t} - \overline{\mu }_{c} }}{{1 - \overline{\mu }_{c} }} \]

Incidentally, Eq. (27) may also be written as

\[ 1 - P = \left( {1 - P_{1} } \right)\left( {1 - P_{2} } \right) \]