Abstract

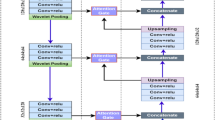

Distinguishing weeds from crops is a critical challenge in agriculture. Existing semantic segmentation networks for agriculture improve performance by simply combining low-level and high-level features at the encoder and decoder stages. However, such simple fusion may be ineffective because of the semantic and spatial-resolution gap between low-level and high-level features. Hence, this paper proposes a novel dual attention network (DA-Net), built on branch attention blocks in the encoding stage and spatial attention blocks in the decoding stage, to bridge this gap. Our method first adds a branch selection module at the residual connection between the encoder and decoder, enabling low-level features to adaptively select higher-level features for fusion. Then, a cascaded convolution block based on asymmetric convolution is constructed, which expands the receptive field without increasing the computational burden or the number of parameters. We also design a spatial attention block in the fusion stage to capture rich contextual dependencies. Finally, we construct a novel block named densely channel fusion, which uses a sub-pixel layer to convert most of the channel information into spatial information. The experimental results demonstrate that DA-Net outperforms ExFuse, DeepLabv3+, and PSPNet on three public datasets, and that each added component contributes significantly to the overall performance.
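The abstract does not give DA-Net's kernel sizes or channel widths. As a rough illustration of why asymmetric (factorized) kernels are cheap, the sketch below counts the weights of a k×k convolution versus a 1×k convolution followed by a k×1 convolution, which together span the same k×k receptive field. The kernel size `k`, the constant channel width, and the helper names `conv_weights` and `square_vs_asymmetric` are illustrative assumptions, not the paper's configuration.

```python
def conv_weights(kh: int, kw: int, c_in: int, c_out: int) -> int:
    """Number of weights in a kh x kw convolution layer (biases ignored)."""
    return kh * kw * c_in * c_out


def square_vs_asymmetric(k: int, channels: int) -> tuple[int, int]:
    """Compare a k x k conv with a 1 x k conv followed by a k x 1 conv.

    Both options cover a k x k receptive field. For simplicity every layer
    keeps `channels` input and output channels (an assumption for this sketch).
    """
    square = conv_weights(k, k, channels, channels)
    asymmetric = (conv_weights(1, k, channels, channels)
                  + conv_weights(k, 1, channels, channels))
    return square, asymmetric


if __name__ == "__main__":
    for k in (3, 7):
        sq, asym = square_vs_asymmetric(k, 64)
        print(f"k={k}: square={sq}, asymmetric={asym}")
```

For k = 3 and 64 channels this gives 36,864 versus 24,576 weights; for k = 7, 200,704 versus 57,344. At stride 1 each weight is applied once per output position, so multiply-accumulate counts scale in the same proportion. The comparison covers cost only and says nothing about accuracy.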
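The densely channel fusion block is described as using a sub-pixel layer to move channel information into the spatial dimensions. The following is a minimal NumPy sketch of the generic sub-pixel (pixel-shuffle) rearrangement, not the authors' implementation; the channel-first `(C, H, W)` layout, the upscale factor `r`, and the function name `pixel_shuffle` are assumptions made for illustration.

```python
import numpy as np


def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange a (C*r*r, H, W) array into (C, H*r, W*r).

    Element mapping: out[c, h*r + i, w*r + j] = x[c*r*r + i*r + j, h, w],
    so each group of r*r channels becomes one r x r spatial block.
    """
    c_in, h, w = x.shape
    if c_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r * r")
    c_out = c_in // (r * r)
    # Split the channel axis into (C, r, r) ...
    x = x.reshape(c_out, r, r, h, w)
    # ... then interleave the two r-axes with H and W: (C, H, r, W, r).
    x = x.transpose(0, 3, 1, 4, 2)
    return x.reshape(c_out, h * r, w * r)


if __name__ == "__main__":
    feats = np.arange(16).reshape(4, 2, 2)  # 4 channels, 2 x 2 spatial map
    out = pixel_shuffle(feats, 2)
    print(out.shape)  # (1, 4, 4)
    print(out[0])
```

With r = 2, a 4-channel 2×2 map becomes a single-channel 4×4 map whose first row is `[0, 4, 1, 5]`: no values are created or discarded, only moved. PyTorch provides the same layout as `torch.nn.PixelShuffle`.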

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant Nos. 62073284 and 61972336, in part by the Zhejiang Provincial Natural Science Foundation of China under Grant LY19F030008, and in part by the Ministry of Education of Humanities and Social Science Project of China under Grant 20YJAZH028.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, H., Song, H., Wu, H. et al. Multilayer feature fusion and attention-based network for crops and weeds segmentation. J Plant Dis Prot 129, 1475–1489 (2022). https://doi.org/10.1007/s41348-022-00663-y

DOI: https://doi.org/10.1007/s41348-022-00663-y