Abstract

There is increasing interest in robotic and computer technologies for performing endovascular interventions accurately. One major limitation of current endovascular intervention, whether manual or robot-assisted, is surgical navigation, which still relies on 2D fluoroscopy. Recent research efforts have turned to MRI-guided interventions to reduce ionizing radiation exposure and to improve the diagnosis, planning, navigation, and execution of endovascular interventions. We propose an MR-based navigation framework for robot-assisted endovascular procedures. The framework enables the acquisition of real-time MR images, the segmentation of the vasculature and tracking of vascular instruments, and the generation of MR-based guidance, both visual and haptic. Instrument tracking accuracy, a key aspect of the navigation framework, was assessed in four dedicated experiments with different acquisition settings, frame rates, and acquisition times. The experiments showed clinically acceptable tracking accuracy, with RMSE in the range of 1.30–3.80 mm. We believe this work represents a valuable first step towards MR-guided robot-assisted intervention.



1 Introduction

1.1 Background

Cardiovascular diseases are a leading cause of death worldwide, responsible for approximately 17.9 million deaths each year, or 32% of all global deaths, according to a recent World Health Organization report (“Cardiovascular diseases (CVDs)” 2023). Endovascular intervention is a minimally invasive approach to treating a range of vascular and cardiac conditions (Lee et al. 2017). Catheters and guidewires are navigated through the vascular tree to reach the anatomy of interest, where procedures such as stent placement (Walker et al. 2010), coiling, embolization (Molvar and Lewandowski 2015), valve (re-)implantation, cardiac ablation (Rui et al. 2018), and device delivery (Ludman 2019) are performed (Lee et al. 2017). Vascular navigation demands exceptional endovascular expertise to avoid inadvertent, yet frequent, contacts between the instruments and the vessel walls, which carry a risk of injury such as puncture or rupture (Dagnino et al. 2018). Robotic assistance can support these challenging maneuvers by providing greater manipulation precision and stability, while reducing the ionizing radiation dose, generated by the necessary fluoroscopic guidance, to both the patient and the operator.

Currently, there is growing interest in using robotic and computer technologies to enhance the precision of endovascular interventions (for comprehensive reviews of recent advances, see Lee et al. 2017; Troccaz et al. 2019; Rafii-Tari et al. 2014). The advantages of adopting such technology include greater stability and precision in catheter and guidewire manipulation, improved access to challenging anatomical locations, reduced radiation exposure, and enhanced patient comfort (Troccaz et al. 2019). Numerous endovascular robotic platforms have been developed, each offering its own solutions. Examples of commercial systems include the Magellan (for endovascular procedures) and Sensei X2 (for electrophysiology procedures) platforms by Hansen Medical (now Auris Health Inc., USA). The clinician teleoperates these platforms from a master console using a joystick or buttons. The Magellan system uses 2D fluoroscopy for intraoperative guidance, while the Sensei X2 integrates 3D guidance provided by third-party software. Niobe (Stereotaxis, USA) is used in endovascular electrophysiology applications (Feng et al. 2017). In Niobe, catheters and guidewires are manipulated remotely by a magnetic field produced by two permanent magnets, so the operator is not exposed to ionizing radiation, while the CARTO 3 system provides 3D intraoperative navigation. CorPath GRX (Siemens Healthineers, Erlangen, Germany) is a more recent teleoperated robotic platform for endovascular intervention that features partial automation of guidewire manipulation (e.g., spinning to cross lesions), mimicking motion patterns from manual instrument handling (Mahmud et al. 2020). The platform has also been used for the first-in-human long-distance robotic percutaneous coronary intervention (Patel et al. 2019).
In 2023, Robocath (Rouen, France) launched R-One+, a robotic endovascular platform that provides physicians with reliable, precise assistance during procedures and enhances their movements, creating better interventional conditions while keeping them fully protected from X-rays (Durand et al. 2023). The system can use third-party endovascular devices, an improvement over other systems that require proprietary devices.

1.2 Towards MR-guided robot-assisted endovascular intervention

Despite the growing adoption of robotic platforms for endovascular procedures, many existing systems have limitations in clinical usability, such as counterintuitive user interfaces and poor integration into clinical workflows, as well as limited versatility due to a narrow range of applications and the use of expensive proprietary instruments. One significant constraint of current endovascular interventions, whether manual or robot-assisted, is the continued reliance on 2D fluoroscopy for surgical navigation. Although this approach has proven feasible in large patient populations, it exposes both clinicians and patients to ionizing radiation. Additionally, the procedure requires nephrotoxic contrast agents to delineate the vasculature for interventional planning, which may lead to nephropathy (Kundrat et al. 2021). Furthermore, fluoroscopy is contraindicated for pregnant patients and for young patients, particularly children, who are more vulnerable to the long-term effects of ionizing radiation (Mainprize et al. 2023; Hill et al. 2017).

Recent research has focused on MRI-guided interventions to reduce ionizing radiation exposure and to improve the diagnosis, planning, navigation, and execution of endovascular procedures. MRI offers 3D functional imaging without radiation exposure, improved visualization of soft tissues, and the potential to characterize blood flow (Hopman et al. 2023; Jaubert et al. 2021; Nijsink et al. 2022a). However, the transition from fluoroscopy to MRI for endovascular interventions presents several practical challenges. First, the design of current MRI scanners affects procedural ergonomics, as clinical staff cannot directly access and monitor the patient (Fernández-Gutiérrez et al. 2015). This creates a need for additional support, such as robotic systems, to manage instrument manipulation and patient monitoring simultaneously. Moreover, any assistive device considered must comply with MRI safety standards, including the elimination of ferromagnetic components.

While the number of MRI-compatible technologies is constantly growing and MR-guided procedures are already part of clinical routine (Huang et al. 2023), to the best of our knowledge no robotic platform can yet be used under MR guidance to perform endovascular procedures.

1.3 Contribution

Research conducted by our group aims to address the aforementioned limitations. In previous work (Dagnino et al. 2018; Abdelaziz et al. 2019; Benavente Molinero et al. 2019; Payne et al. 2012), we created an MR-safe robotic platform for endovascular procedures, named CathBot. CathBot is a teleoperated robotic platform featuring a user-friendly, ergonomic master manipulator that replicates endovascular maneuver patterns, and an MR-safe remote robot that manipulates any type of catheter or guidewire based on the user's motion commands captured by the user-centered master device. CathBot was successfully validated under fluoroscopic guidance in an in-vitro user study (on silicone phantoms) with expert vascular surgeons (Kundrat et al. 2021) and in in-vivo endovascular procedures on porcine models (Dagnino et al. 2022).

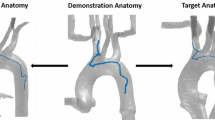

Current research aims to create MR-based navigation for CathBot, with the final goal of making MR-guided robot-assisted endovascular intervention possible. This study focuses on the design and development of an MR-based navigation framework for our robotic platform: navigation software that acquires and processes MR images in real time and can be integrated into the control architecture of the robotic platform to provide enhanced MR-based navigation. Namely, the system enables (1) acquisition of real-time MR images; (2) image processing, i.e., segmentation of the vasculature and tracking of vascular instruments; and (3) generation of MR-based guidance.

In this paper we introduce the navigation framework, and we evaluate the vascular instrument tracking accuracy, a key aspect of the whole system.

The developed software and its user manual are available to readers in the Supplementary Materials.

1.4 Outline

The next section summarizes the main features of the CathBot platform. Section 3 describes the proposed MR-based navigation framework, and Sect. 4 describes the experiments used to assess it. Section 5 presents and discusses the results, and Sect. 6 gives an outlook on open challenges and future directions.

2 The CathBot endovascular platform

This section provides an overview of our robotic platform. For a detailed description of the previously developed technology, we refer readers to our prior studies (Dagnino et al. 2018; Dagnino et al. 2022; Kundrat et al. 2021; Abdelaziz et al. 2019).

Our robotic platform, as depicted in Fig. 1, consists of three key components: a master device for teleoperation and user interface, a remotely operated manipulator directly attachable to the surgical table to manipulate endovascular instruments from a distance, and a navigation system facilitating real-time imaging integration, such as fluoroscopy or MRI.

The CathBot endovascular robot presents a teleoperated configuration. The clinician operates the master device from the control room while looking at MR images provided by the navigation system (an exemplary image of a vascular phantom is shown). The remote manipulator replicates these motion commands in the intervention room, manipulating the actual vascular instruments

The master device emulates the handling of guidewires and catheters, allowing for actions like grasping, push/pull, and rotary motions. It additionally provides rotary and linear user input with force and torque feedback through linear and rotary motors. After a user advances or retracts the selected instrument by linearly displacing the user handle, the integrated linear motor automatically returns the handle to its starting position. This design mirrors human motion patterns, enabling the execution of arbitrary strokes.

The remote manipulator replicates the user's motion commands, which are captured by the master manipulator. Translation and rotation of the actual catheter and guidewire are accomplished via linear and rotary drivers. Customized pneumatic clamps are specifically designed to transmit forces to both instruments during the translation phase. The remote manipulator comprises two plug-and-play mechanisms, one for catheters and one for guidewires, facilitating quick docking and exchange of vascular instruments. All remote components are MR-compatible, allowing for use with MRI. The remote manipulator is positioned in close proximity to the patient within the interventional room, while the master device is located in the control room alongside the navigation system and a valve manifold controlling the pneumatic motors. The operator interacts with the master device from the control room while monitoring the real-time video stream displayed in the navigation system (Fig. 1). In addition to offering visual guidance, the navigation system processes the video stream to generate virtual fixtures that constrain instrument motion, guiding the operator through the vasculature. This haptic guidance, rendered through the master device as friction, is calculated in real-time by tracking the relative position of the instrument and the vessel walls. The closer the instrument is to the vessel wall, the greater the friction perceived by the user in the master device. Moreover, the velocity of the remote manipulator is adjusted accordingly to prevent potentially harmful peak-force impacts between the instruments and the vessel walls. Finally, the system processes the operator's inputs and generates motion commands for the remote manipulator to navigate the surgical instruments, be it a catheter or a guidewire, through the vasculature. 
Standard commercially available catheters and guidewires can be easily connected to and manipulated by the remote manipulator, and, when required by the clinical application, the surgeon's assistant can quickly exchange them in a matter of seconds.

Note that only the MR-safe remote manipulator is located in the interventional room, while the rest of the platform, including the master device, navigation system, and additional electronics, is located in the control room. This configuration allows CathBot to be used in conjunction with MRI, minimizing its impact on the clinical workflow and facilitating collaboration with the clinical team.

3 MR-based navigation framework

As previously introduced, the proposed MR-based navigation framework enables (1) acquisition of real-time MR images; (2) segmentation of the vasculature and tracking of vascular instruments; and (3) generation of MR-based guidance. The overall idea is to grab real-time images from the MR scanner, apply image processing to detect the vasculature and track the vascular instrument (in this study, a guidewire in the abdominal aorta), and use this information to generate enhanced guidance, both visual (information displayed on the screen in the control room) and haptic (rendered through CathBot's master manipulator). The surgeon teleoperates the remote manipulator, placed in the intervention room close to the patient, by manipulating the master device from the control room, while the navigation system provides visual and haptic guidance to help accomplish the procedure. The video stream provided by the MR scanner is acquired and processed by the navigation system at 30 Hz. Tracking algorithms are applied to the grabbed video stream to capture the pose of the vascular instruments and the vessel wall. These data are fed into the high-level controller, which processes them in real time together with the surgeon's motion inputs to generate and render dynamic active constraints on the master device at 200 Hz. Motion commands are finally sent to the remote manipulator, which performs the actual manipulation of the vascular instruments. Note that the navigation system and the haptic control system run at different rates, 30 Hz and 200 Hz respectively; the higher rate of the haptic control system is necessary for proper haptic guidance. Despite the different rates, both systems run on real-time controllers that guarantee deterministic execution, adding an extra level of safety to the procedure.
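Because the navigation loop (30 Hz) is slower than the haptic loop (200 Hz), each haptic update necessarily reuses the most recent navigation result until a new one arrives. The Python sketch below illustrates this hand-off under a simple assumption: navigation frame i becomes available at t = i/30 s, haptic tick k occurs at t = k/200 s, and the haptic loop reads the latest available frame (a zero-order hold). The function name latest_frame_per_tick is hypothetical and not part of the UTMR code.

```python
from collections import Counter


def latest_frame_per_tick(nav_hz: int, haptic_hz: int, duration_s: int) -> list[int]:
    """Index of the newest navigation frame available at each haptic tick.

    Assumption: frame i is ready at t = i / nav_hz and tick k happens at
    t = k / haptic_hz, so the newest frame is floor(k * nav_hz / haptic_hz).
    Integer division keeps the index exact (no floating-point drift).
    """
    return [(k * nav_hz) // haptic_hz for k in range(haptic_hz * duration_s)]


if __name__ == "__main__":
    ticks = latest_frame_per_tick(nav_hz=30, haptic_hz=200, duration_s=1)
    reuse = Counter(ticks)  # how many haptic ticks consume each navigation frame
    print(len(ticks), max(ticks), sorted(set(reuse.values())))  # 200 29 [6, 7]
```

With these rates, every navigation frame serves six or seven consecutive haptic ticks, which is why the haptic loop can render smooth friction even though the geometric information it uses changes far less often.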

Figure 2 provides a schematic overview of the system architecture, and the navigation framework is described in detail in the following subsections.

System architecture. The video stream provided by the MR scanner is acquired and processed by the navigation system to generate haptic and visual guidance. The poses of the vascular instrument and the vasculature are captured and tracked in real time. Haptic algorithms generate haptic guidance to support the clinician during the procedure

3.1 MR images acquisition

In this study, images are acquired from a Magnetom Aera 1.5 T MRI scanner (Siemens Healthineers, Erlangen, Germany). MR scanning sequences are applied to generate 2D fluoroscopy-like MR images of the vasculature and the vascular instrument in real time (see Sect. 4 for details). The real-time 2D video of the surgical scene is acquired on the PC workstation through an image grabber (DVI2USB3, Epiphan Video, Ottawa, Canada), displayed on a screen in the control room for visual guidance, and processed as described below. The software is written in Python using PyQt and is available on GitHub at https://github.com/Jelle-Bijlsma/UTMR; the Supplementary Materials provide a technical description of the software. The software acquires the frames provided by the grabber using two functions, filebrowse_png() and get_imlist() (see the UTMR_main2.py file in the repository), which read the images and make them available for the processing described in the next subsection.

3.2 Image processing

The acquired video stream is processed to detect the vessel walls and track the vascular instrument. The image processing pipeline is described in Fig. 3.

Image processing pipeline. Each acquired frame is first smoothed with a Gaussian filter. A Canny edge detector detects the vessel walls. Further filtering and masking define the region of interest for the marker-tracking algorithms (template matching and blob finding). A quadratic spline is then interpolated through the detected markers to define the shape of the instrument. This information is sent to the guidance algorithms for further processing

The first step of the image processing is to filter the acquired frames. The framework uses a Gaussian filter to smooth the image and reduce noise before edge detection; for speed, the filtering is applied in the frequency domain (see /functions/filter.py on GitHub). A Canny edge detection algorithm is then used to detect the artery walls (/functions/edge.py). The result is a binary image to which dilation and erosion operators are applied (7 × 7 square structuring element) to obtain a closed contour of the vessel walls. Only the area inside this contour is considered for instrument tracking, which is enforced by masking. The mask defines the region of interest (ROI) in which the tracking algorithm described below is applied, improving its efficiency and preventing false-positive detections (e.g., in regions outside the vessel).

A template matching algorithm is then applied within the ROI to detect and track the position of the vascular instrument inside the anatomy (/functions/template.py). In this study, a commercial MR-visible guidewire (EPflex Feinwerktechnik GmbH, Dettingen an der Erms, Germany) was used. The guidewire is 0.89 mm thick and consists of an inner core of braided fibers coated with composite and PTFE. The tip is covered by a large paramagnetic marker, followed by five short, evenly spaced markers; the markers beyond these are spaced farther apart. The markers are cylindrical and surround the braided fiber. A local descriptor of the paramagnetic markers is created using a low-discrepancy sampling algorithm, and up to four different template descriptors can be stored simultaneously. The function creates the templates used to search for the markers in the video images; each template is a copy of a small area of the input image that contains a marker. The stored descriptors are used to search for markers in every video frame with a cross-correlation template matching algorithm restricted to 2D translations, which finds the optimal position of the template on the image and thus the best estimate of the marker location.
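The two numerical steps described in most detail above, frequency-domain Gaussian smoothing and translation-only cross-correlation template matching, can be sketched in NumPy as follows. This is an illustrative reimplementation under stated assumptions, not the code in /functions/filter.py or /functions/template.py: it uses zero-mean normalized cross-correlation (NCC) as the matching score, takes the ROI as a boolean mask, and omits the Canny and morphological steps (in practice those could come from a library such as OpenCV).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gaussian_lowpass_fft(img: np.ndarray, sigma: float) -> np.ndarray:
    """Gaussian smoothing done as a pointwise product in the frequency domain.

    Note: FFT filtering is circular, so content wraps around the image borders.
    """
    rows, cols = img.shape
    fy = np.fft.fftfreq(rows)[:, None]  # vertical frequencies, cycles per pixel
    fx = np.fft.fftfreq(cols)[None, :]  # horizontal frequencies, cycles per pixel
    # Fourier transform of a unit-area spatial Gaussian with std `sigma` pixels
    transfer = np.exp(-2.0 * (np.pi * sigma) ** 2 * (fx**2 + fy**2))
    return np.real(np.fft.ifft2(np.fft.fft2(img) * transfer))


def match_template_ncc(img, template, roi_mask=None):
    """Best 2D translation of `template` in `img` by normalized cross-correlation.

    Returns (row, col) of the template centre and the peak score in [-1, 1].
    Placements whose centre falls outside `roi_mask` are ignored.
    """
    th, tw = template.shape
    t = template - template.mean()
    windows = sliding_window_view(img, (th, tw))  # every candidate placement
    w = windows - windows.mean(axis=(-2, -1), keepdims=True)
    num = (w * t).sum(axis=(-2, -1))
    den = np.sqrt((w**2).sum(axis=(-2, -1)) * (t**2).sum()) + 1e-12
    score = num / den
    if roi_mask is not None:
        centres = roi_mask[th // 2: th // 2 + score.shape[0], tw // 2: tw // 2 + score.shape[1]]
        score = np.where(centres, score, -np.inf)  # search only inside the vessel ROI
    r, c = np.unravel_index(np.argmax(score), score.shape)
    return int(r) + th // 2, int(c) + tw // 2, float(score[r, c])
```

Normalizing the score makes the peak insensitive to global brightness changes between frames, and restricting the search to the ROI is what removes candidate matches outside the vessel.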

A blob finding algorithm runs in parallel with the template matching algorithm for redundancy, ensuring that all markers are properly tracked in each frame (/functions/blob_contour.py). It relies on intensity thresholding and is independent of shape features, which makes it more robust to marker distortions than template matching. Despite its lower localization accuracy, this secondary tracking algorithm can identify and correct erroneous marker positions, contributing to the safety level required for clinical applications.
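A minimal blob finder in the same spirit, intensity thresholding followed by connected-component labelling and an area filter, is sketched below. Whether the markers appear darker or brighter than their surroundings depends on the sequence, so the polarity is a parameter. The 4-connectivity, the area limits, and the cross_check() correction rule are assumptions for illustration; the sketch does not reproduce /functions/blob_contour.py.

```python
from collections import deque

import numpy as np


def find_blobs(img, threshold, min_area, max_area, dark_markers=True):
    """Centroids (row, col) of 4-connected blobs selected by an intensity threshold."""
    fg = img < threshold if dark_markers else img > threshold
    seen = np.zeros(fg.shape, dtype=bool)
    rows, cols = fg.shape
    centroids = []
    for r0, c0 in zip(*np.nonzero(fg)):  # row-major scan of candidate pixels
        if seen[r0, c0]:
            continue
        seen[r0, c0] = True
        queue, pixels = deque([(r0, c0)]), []
        while queue:  # breadth-first flood fill of one connected blob
            r, c = queue.popleft()
            pixels.append((r, c))
            for rr, cc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if 0 <= rr < rows and 0 <= cc < cols and fg[rr, cc] and not seen[rr, cc]:
                    seen[rr, cc] = True
                    queue.append((rr, cc))
        if min_area <= len(pixels) <= max_area:  # reject specks and large structures
            pts = np.asarray(pixels, dtype=float)
            centroids.append((float(pts[:, 0].mean()), float(pts[:, 1].mean())))
    return centroids


def cross_check(template_pos, blobs, max_dev):
    """Hypothetical correction rule: if the template estimate lies more than
    `max_dev` pixels from every blob, replace it with the nearest blob centroid."""
    if not blobs:
        return template_pos

    def dist(b):
        return float(np.hypot(b[0] - template_pos[0], b[1] - template_pos[1]))

    nearest = min(blobs, key=dist)
    return template_pos if dist(nearest) <= max_dev else nearest
```

Because the blob finder only needs a threshold and an area range, it keeps working when a marker is distorted enough that its appearance no longer resembles the stored template.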

The positions of the magnetic markers detected by the tracking algorithm are interpolated with a quadratic spline to estimate the shape of the actual guidewire. The interpolated points are used in the next step to calculate the wire-wall distance and the tip-wall angle for guidance purposes (/functions/spline.py).
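The spline step can be sketched with SciPy's parametric spline routines, assuming the marker centres are already ordered along the wire from the tip backwards. In splprep, k=2 selects a quadratic spline and s=0 forces the curve through every marker; guidewire_shape() is a hypothetical helper, not the function in /functions/spline.py.

```python
import numpy as np
from scipy.interpolate import splev, splprep


def guidewire_shape(markers, n_points=50):
    """Sample a quadratic parametric spline passing through ordered marker centres.

    markers: sequence of (x, y) pixel positions, ordered along the wire.
    Returns an (n_points, 2) array of points on the estimated wire shape.
    """
    pts = np.asarray(markers, dtype=float)
    if len(pts) < 3:
        raise ValueError("a quadratic (k=2) spline needs at least 3 markers")
    # k=2: quadratic pieces; s=0: interpolate, i.e. pass exactly through each marker
    tck, _u = splprep([pts[:, 0], pts[:, 1]], k=2, s=0)
    x, y = splev(np.linspace(0.0, 1.0, n_points), tck)
    return np.column_stack([x, y])
```

The sampled points give a dense description of the wire body, which is what a per-point distance or color computation needs.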

The parameters of the image processing algorithms (Gaussian filter, Canny edge detector, template matching, and blob finder) can be tuned online via the software GUI depending on the application. Tunable parameters include, for example, the Gaussian kernel, the Canny edge detector thresholds, up to four template acquisitions, and the blob finder thresholds and blob area. The full list of parameters can be found in the software on GitHub.

3.3 MR-based guidance

The idea behind MR-based enhanced guidance is to use the position of the vascular instrument relative to the vasculature to improve instrument navigation. Contact between the tip of the instrument and the vasculature may cause puncture injuries, and contact between the whole body of the instrument and the vessel walls can also be harmful, because the resulting forces and friction can damage the tissue. In this work, the user receives enhanced information on the position of the instrument body via visual feedback, and on the position of the instrument tip via haptic feedback (Fig. 4) (Dagnino et al. 2018).

Guidance algorithms. Ray casting and collision detection algorithms are applied to calculate the closest distance between the instrument and the vessel wall. The haptic algorithm is exclusively implemented for a single marker, specifically the one positioned at the tip of the instrument. This information is used to generate the MR-based haptic guidance to mitigate the risk of puncture injuries. However, it is equally crucial to convey information to the user about the overall shape of the instrument, as interactions between the instrument's body and the vessel wall could also lead to injuries. Such information is conveyed through color-coded visual feedback, utilizing the interpolated points

The closer the instrument body is to the vessel wall, the higher the risk of potentially dangerous high-impact contacts between the instrument and the vessel. This information is color-coded and displayed on the video screen in the control room: the vascular instrument is drawn in different colors according to its distance from the vessel wall, ranging from green (low risk) to red (high risk). The distance between each point of the instrument (after spline interpolation) and the closest point on the vessel wall is calculated by applying a ray casting algorithm to each point that defines the instrument shape (see Fig. 4). First, four equally spaced rays (0, 30, 60, and 90 degrees) are cast in different directions starting from the current position of each point. Second, a collision detection algorithm detects the collisions between the rays and points on the vessel wall. Finally, the algorithm selects the closest point on the vessel wall and calculates the Cartesian distance.
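The ray casting and collision detection steps can be sketched on a binary wall image, such as the closed vessel contour from the edge-detection step. The sketch assumes image coordinates with x to the right and y downwards, casts each ray in the positive direction of the four stated angles, marches one pixel per step, and treats the first wall pixel hit as the collision; closest_wall_point() and max_steps are hypothetical names.

```python
import math

import numpy as np


def closest_wall_point(wall, point, angles_deg=(0, 30, 60, 90), max_steps=500):
    """Cast rays from `point` (x, y) over a boolean wall image.

    Returns (distance, (x, y) of the nearest wall hit), or (inf, None) if no
    ray collides with the wall before leaving the image.
    """
    x0, y0 = point
    h, w = wall.shape
    best = (math.inf, None)
    for a in angles_deg:
        dx, dy = math.cos(math.radians(a)), math.sin(math.radians(a))
        for s in range(1, max_steps + 1):  # march one pixel per step
            xi, yi = round(x0 + s * dx), round(y0 + s * dy)
            if not (0 <= xi < w and 0 <= yi < h):
                break  # ray left the image without a collision
            if wall[yi, xi]:  # collision detection: first wall pixel on this ray
                d = math.hypot(xi - x0, yi - y0)  # Cartesian distance to the hit
                if d < best[0]:
                    best = (d, (xi, yi))
                break
    return best
```

Because the angle set is a parameter, denser or full-circle ray sets can be tried without changing the collision logic.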

Predefined thresholds on the instrument-wall distance are used to provide visual feedback to the surgeon. Three contact-risk regions (low, medium, and high) are defined by two thresholds, and each line segment is colored according to the region it falls in. The low-medium threshold is set at the vessel center line ± 20% of the local vessel diameter, and the medium-high threshold at the vessel center line ± 40% of the local vessel diameter.

When the distance between the instrument and the vessel wall is greater than the low-medium threshold, the segment is green (low contact risk). When the distance lies between the low-medium and medium-high thresholds, the segment color fades from green to yellow (medium contact risk). Finally, when the distance is below the medium-high threshold, the segment color fades from yellow to red (high contact risk). Figure 6c and d provide two examples.
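The thresholds are defined as offsets from the center line, while the color rule is phrased in terms of distance to the wall, so it helps to convert one into the other. Assuming the center line is equidistant from both walls, a point offset by o from the center line of a vessel with local diameter D lies at d = D/2 − |o| from the nearest wall. The ±20% and ±40% offsets therefore correspond to d = 0.3D and d = 0.1D; for the 31 mm phantom aorta used in Sect. 4, that is about 9.3 mm and 3.1 mm. The sketch below maps d to an RGB color; the linear fades and the name risk_color() are assumptions.

```python
def risk_color(d, diameter):
    """RGB colour for a guidewire segment at distance `d` from the vessel wall.

    Thresholds follow the text (centre line +/- 20 % and +/- 40 % of the local
    diameter), rewritten as wall distances 0.3*D and 0.1*D. The linear colour
    fades between them are an assumption.
    """
    low_med = 0.3 * diameter   # wall distance at centre line +/- 20 % of D
    med_high = 0.1 * diameter  # wall distance at centre line +/- 40 % of D
    if d >= low_med:
        return (0, 255, 0)  # low risk: green
    if d > med_high:
        t = (d - med_high) / (low_med - med_high)  # 1 at low_med, 0 at med_high
        return (round(255 * (1 - t)), 255, 0)      # medium risk: green -> yellow
    t = max(d, 0.0) / med_high                     # 1 at med_high, 0 at the wall
    return (255, round(255 * t), 0)                # high risk: yellow -> red
```

The two fades meet at pure yellow on the medium-high threshold, so the displayed color changes continuously as the wire approaches the wall.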

Haptic guidance is generated via CathBot's master manipulator and is perceived as friction that increases as the distance between the instrument tip and the vessel wall decreases (i.e., the closer the instrument tip is to the vessel wall, the higher the force feedback generated by the master manipulator to inform the surgeon of the proximity of the wall). Note that the haptic guidance algorithms described below are applied only to the tip of the instrument, while the visual guidance algorithms are applied to the whole body of the instrument (see Fig. 4).

Friction-like forces were chosen for haptic rendering, instead of alternatives such as repulsive forces, to minimize the magnitude of the instrument-vessel contacts, which are nonetheless required to navigate the vasculature. The 2D pose of the instrument tip Ptip = [xt, yt] is provided by the tracking algorithms (template matching and blob finding) described in the previous section. The ray casting algorithm is applied to the tip marker, and the collision detection algorithm selects the point on the vessel wall Pvessel = [xv, yv] at minimum distance d from the instrument tip Ptip. This information is then used to model the damping factor f and generate the haptic feedback in the master manipulator motors as follows:

$$V_{\text{motor}} \propto \frac{I_{\text{motor}}}{f} \qquad (1)$$

where Vmotor and Imotor are the motor velocity and current, respectively. The damping factor f is modeled as:

where d is the distance between the instrument tip and the closest point on the vessel wall, D is the local vessel diameter, and fmax is the maximum achievable friction (user-defined). Equations (1) and (2) describe the following behavior: when the surgeon applies a force on the master manipulator, a motor current Imotor proportional to the applied force is generated. The corresponding motor velocity Vmotor is directly proportional to the applied force (represented by Imotor) and inversely proportional to the damping factor f. Hence, as the surgeon pushes the instrument towards the vessel wall, the friction generated by the motors increases accordingly. If the instrument tip is in contact with the vessel wall (d = 0), f equals fmax.

If any marker is not detected, visual guidance is withheld. Haptic guidance, however, remains available unless the reference marker at the instrument tip goes undetected. In that case, the friction on the master manipulator is kept constant to prevent unexpected abrupt movements.
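The haptic update and the tip-loss fallback can be sketched as a small per-tick renderer. It implements the stated relation, motor velocity proportional to Imotor / f, with a hypothetical gain, and keeps the last damping factor when the tip marker is lost. Since Eq. (2) is not reproduced in this text, the damping law is passed in as a function; linear_damping() is a purely hypothetical example that equals fmax at d = 0, as the text requires, and drops to a user-chosen f_min at the center line. Starting at fmax before the tip is first tracked is also an assumption.

```python
from typing import Callable, Optional


def linear_damping(d: float, diameter: float, f_min: float = 1.0, f_max: float = 5.0) -> float:
    """Hypothetical damping law: f_max at the wall (d = 0), f_min at the centre line."""
    closeness = min(max(d, 0.0) / (diameter / 2.0), 1.0)  # 0 at the wall, 1 at the centre
    return f_max - (f_max - f_min) * closeness


class FrictionRenderer:
    """Per-tick haptic update: V_motor = gain * I_motor / f."""

    def __init__(self, damping: Callable[[float, float], float], f_max: float, gain: float = 1.0):
        self.damping = damping  # stand-in for the paper's Eq. (2)
        self.gain = gain        # hypothetical current-to-velocity scale
        self.f = f_max          # assume maximum friction until the tip is first tracked

    def step(self, i_motor: float, tip_dist: Optional[float], diameter: float) -> float:
        """Return the commanded motor velocity; tip_dist is None if the tip marker is lost."""
        if tip_dist is not None:
            self.f = self.damping(tip_dist, diameter)  # tip tracked: update friction
        # tip lost: self.f is left unchanged, so the friction stays constant
        return self.gain * i_motor / self.f
```

Holding f rather than resetting it means a momentary tracking dropout neither releases the friction nor makes it jump, which matches the no-abrupt-movement behavior described above.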

4 Assessment experiment

The MR-based navigation was assessed in experiments designed to evaluate the vascular instrument tracking accuracy, a key aspect of the whole framework. Tracking accuracy was assessed by measuring the detection error of the magnetic markers on the MR-safe guidewire between the experimental data set (navigation framework) and the ground-truth data set (user-defined). The chosen metrics were the mean detection error over all markers and the root-mean-square error (RMSE).

The experimental setup is shown in Fig. 1 and described here below.

A silicone phantom of the abdominal aorta, including the iliac and renal arteries, was used; the phantom box measures 30 cm by 23 cm, and the abdominal aorta is 31 mm in diameter. The instrument was the MR-visible guidewire (EPflex Feinwerktechnik GmbH, Dettingen an der Erms, Germany) described in Sect. 3.2: 0.89 mm thick, with a large paramagnetic tip marker followed by five short, evenly spaced markers and more widely spaced cylindrical markers beyond them.

The guidewire was inserted into the phantom via the left iliac artery and manipulated through the aorta. Images were acquired with the Magnetom Aera 1.5 T MRI scanner (Siemens Healthineers, Erlangen, Germany). Two sets of scans, Scan A and Scan B, were performed with different settings (see Table 1). For each set, both a static acquisition (guidewire not moving) and a dynamic acquisition (guidewire moving in the aorta) were performed, for a total of four conditions.

In Scan A, the phantom was filled with water and placed in the MR scanner (a ‘body’ coil set was used). Scanner settings (Table 1) were chosen to give good contrast between the markers and the background while keeping the temporal resolution high enough (3 images per second) for real-time catheterization. Two scans were made with these parameters, referred to as Scan A1 and Scan A2. Scan A1 is stationary, with no movement of the guidewire; during Scan A2, the guidewire was retracted from the aorta.

In Scan B, the phantom was filled with a manganese(II) chloride solution to simulate a signal intensity similar to that of blood. Scanner settings (Table 1) were modified to have a larger field of view and slice thickness than Scan A, to minimize the chance of the guidewire moving out of plane. As in Scan A, static (B1) and dynamic (B2) acquisitions were performed.

The positions of the magnetic markers on the guidewire were detected and tracked online using the proposed navigation framework. The positions (x, y pixel coordinates in the video reference frame) of each marker in each frame were logged for post-processing analysis. Evaluating these results requires ground-truth data, which were defined offline using a custom-made program that allows the user to zoom in, select the center point of each marker, and log its position (x, y pixel coordinates in the video reference frame) per frame. The framework output was then compared with the ground-truth data set, and the position error was calculated as mean error ± standard deviation and as RMSE. Note that the framework provides a conversion from pixels to real-world units, so the results presented in the next section are given in mm.
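The error computation can be sketched as follows, assuming the logged positions are stored as arrays of shape (frames, markers, 2) in pixels, undetected markers are marked with NaN, and a single isotropic pixel spacing (mm_per_px, hypothetical) converts pixels to millimetres. The per-marker error is taken as the Euclidean distance between tracked and ground-truth centres; the use of the sample standard deviation (ddof=1) is also an assumption.

```python
import numpy as np


def tracking_errors(tracked, truth, mm_per_px):
    """Mean, standard deviation, and RMSE (mm) of marker localization error.

    tracked, truth: arrays of shape (frames, markers, 2) in pixels. Undetected
    markers are NaN in `tracked` and are excluded from all three statistics.
    """
    diff = np.asarray(tracked, dtype=float) - np.asarray(truth, dtype=float)
    err_px = np.linalg.norm(diff, axis=-1)          # Euclidean error per marker and frame
    err_mm = err_px[~np.isnan(err_px)] * mm_per_px  # drop undetected markers, convert units
    mean = err_mm.mean()
    sd = err_mm.std(ddof=1)                         # sample standard deviation
    rmse = np.sqrt(np.mean(err_mm**2))
    return float(mean), float(sd), float(rmse)
```

Excluding NaN entries before averaging mirrors the rule in Sect. 5 that markers not detected by the algorithm do not contribute to the reported error.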

5 Results and discussion

In this section, the experimental results are presented and discussed. Four scans were performed with two sets of settings, Scan A and Scan B. In Scans A1 and B1 the guidewire was kept stationary, while in Scans A2 and B2 the guidewire was pushed in and then retracted slowly. Objective results are summarized in Table 2 and Fig. 5. Note that the mean error is calculated over all markers detected by the algorithm and is reported in Fig. 5 as the mean detection error over all markers; markers not detected by the algorithm are excluded from this calculation.

In Scan A1, all markers were consistently detected and tracked throughout the acquisition, with a mean detection error of 1.36 ± 0.27 mm and an RMSE of 1.30 mm. In Scan A2, however, there was a period in which no markers were detected: the whole guidewire was in contact with the wall, and the markers became indistinguishable from it. In Scans B1 and B2, the framework detected all markers, but with lower accuracy than in Scan A. Namely, B1 showed a mean detection error of 3.95 ± 0.60 mm and an RMSE of 3.80 mm, while B2 showed a mean detection error of 3.78 ± 0.78 mm and an RMSE of 3.60 mm. The lower detection accuracy in Scan B may be related to its lower contrast, despite the larger field of view and slice thickness relative to Scan A. However, higher contrast can make marker detection more difficult when the guidewire is close to the vessel wall.

Qualitative analysis of the acquired data (see Fig. 6 for exemplary images) also revealed some potential limitations. For example, an insufficient slice thickness may cause tracking issues. In Fig. 6a (Scan A1), the slice thickness was only 8 mm for an abdominal aorta of 31 mm diameter, and the out-of-plane effect is visible on the fifth MR marker: lying less in-plane than the other markers, it appears smaller and less distinct. In addition, fast movements of the guidewire produced strong motion artifacts, as can be seen in Fig. 6b (Scan A2), which is another potential limitation for detection and tracking accuracy. These effects were considerably mitigated in Scan B by replacing the water with a manganese(II) chloride solution and increasing the slice thickness. However, the thicker slice increased the acquisition time (1 frame per second), and the manganese(II) chloride solution produced a brighter image with lower contrast. This can be seen in Fig. 6c, d, where the guidewire can hardly be distinguished from the background, compared with Scan A (Fig. 6a, b).

Fig. 6 Qualitative experimental results for each scan condition. The figure shows exemplary images for each scanning condition: Scan A1 (a), Scan A2 (b), Scan B1 (c), and Scan B2 (d). In c and d, the distance between the instrument and the vessel wall is color-coded: red means that the instrument is very close to or in contact with the vessel wall (high risk), green means that it is close to the center line of the vessel (no risk), and yellow represents an intermediate state.
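The red/yellow/green coding in panels c and d can be illustrated with a small classifier. This is a sketch under stated assumptions: the clearance from the wall is normalized by the local vessel radius, and the thresholds `RED_BELOW` and `YELLOW_BELOW` are hypothetical, since the values used in the framework are not reported.

```python
from enum import Enum


class Risk(Enum):
    GREEN = "no risk"
    YELLOW = "intermediate"
    RED = "high risk"


# Hypothetical thresholds on the normalized clearance (not reported in the paper).
RED_BELOW = 0.25
YELLOW_BELOW = 0.60


def wall_risk(dist_to_centerline_mm: float, vessel_radius_mm: float) -> Risk:
    """Classify an instrument point by its clearance from the vessel wall.

    Clearance is 1.0 on the vessel centerline and 0.0 at (or beyond) the wall.
    """
    if vessel_radius_mm <= 0:
        raise ValueError("vessel radius must be positive")
    clearance = max(0.0, 1.0 - abs(dist_to_centerline_mm) / vessel_radius_mm)
    if clearance < RED_BELOW:
        return Risk.RED
    if clearance < YELLOW_BELOW:
        return Risk.YELLOW
    return Risk.GREEN


if __name__ == "__main__":
    radius = 31.0 / 2  # abdominal aorta diameter reported for Scan A1
    for d in (1.0, 10.0, 15.0):
        print(d, wall_risk(d, radius).name)
```

With a 15.5 mm radius and these illustrative thresholds, a guidewire 1 mm from the centerline is classified green, 10 mm off-center yellow, and 15 mm off-center red. Normalizing by the radius keeps the same thresholds meaningful across vessels of different size.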

A potential solution to the out-of-plane issue that keeps the slice thickness low, and thus the frame rate high, would be to automate the search for the correct slice. This is currently done manually by the radiographer but could be delegated to the navigation software. To this end, we are working to integrate the robotic platform with the Siemens MRI scanner via Siemens Access-I, a software application that enables third-party device integration with Magnetom scanners through interactive remote control. The idea is to integrate the navigation framework and the CathBot robotic platform with the MR scanner so that the acquisition plan is controlled based on the instrument position and on the user's motion commands on the robotic manipulator. This would enable precise slice selection, as well as localization and tracking of the vascular instruments from different angles.
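One way navigation software could propose a slice orientation is to fit a plane through the instrument points by least squares, so that the distal guidewire segment stays as close to in-plane as possible. The sketch below assumes that 3D marker positions in scanner coordinates are available (for instance from earlier multi-slice acquisitions), which the current 2D framework does not provide; it does not use or describe the Access-I interface, and the half-slice-thickness check is an illustrative criterion only.

```python
import numpy as np


def fit_slice_plane(points_mm: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Least-squares plane through 3D points given as an (N, 3) array, N >= 3.

    Returns (center, unit_normal). The plane minimizes the sum of squared
    orthogonal distances of the points, i.e. it is the best in-plane fit.
    """
    pts = np.asarray(points_mm, dtype=float)
    if pts.ndim != 2 or pts.shape[1] != 3 or pts.shape[0] < 3:
        raise ValueError("expected an (N, 3) array with N >= 3")
    center = pts.mean(axis=0)
    # The right singular vector with the smallest singular value is the normal.
    _, _, vt = np.linalg.svd(pts - center)
    normal = vt[-1]
    return center, normal / np.linalg.norm(normal)


def max_out_of_plane_mm(points_mm, center, normal) -> float:
    """Largest orthogonal distance of any point from the fitted plane."""
    pts = np.asarray(points_mm, dtype=float)
    return float(np.max(np.abs((pts - center) @ normal)))


if __name__ == "__main__":
    # Hypothetical, nearly coplanar 3D marker positions in mm.
    markers = np.array([[0.0, 0.0, 0.0], [10.0, 1.0, 0.5],
                        [20.0, 3.0, 1.0], [30.0, 6.0, 1.4]])
    c, n = fit_slice_plane(markers)
    slice_thickness_mm = 8.0  # slice thickness used in Scan A1
    print(c, n, max_out_of_plane_mm(markers, c, n) <= slice_thickness_mm / 2)
```

For an 8 mm slice, every marker would need to lie within 4 mm of the fitted plane to be fully covered; if the check fails, the software could thicken the slice or acquire an additional plane.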

Very few other works in the literature focus on passive tracking of endovascular tools under MR guidance. Nijsink et al. (2022b) developed a deep learning model for automatic passive detection of guidewire markers and assessed its detection performance in terms of correctly detected and false-positive markers; the detection accuracy of the algorithm was not evaluated. Van der Weide et al. (2001) used paramagnetic materials embedded in a catheter, which interact with the magnetic field of the MR system and thus make the catheter visible in the reconstructed MR image.

Several works, on the other hand, address tool tracking under standard fluoroscopy, which is commonly used in interventional procedures. For example, Ma et al. (2018) developed a localized machine learning algorithm for detecting and tracking catheters or guidewires, with a detection error between 0.56 and 0.66 mm. Other solutions focus on active tracking, in which the instruments are typically equipped with micro-coils connected to a receiver channel of the scanner; from the signal received by the coil, the 3D position of the catheter within the magnetic field can be inferred (Ramadani et al. 2022).
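The principle behind active tracking can be sketched in its simplest form: with a readout gradient G applied along one axis, a micro-coil at position x receives signal at a frequency offset Δf = γ̄ G x, where γ̄ ≈ 42.577 MHz/T for protons, so x = Δf / (γ̄ G). The code below estimates Δf as the peak of the FFT of a simulated, noise-free coil signal and converts it into a position along one axis. It ignores off-resonance, gradient nonlinearity, and noise, which practical implementations must handle (for example with multiplexed encoding schemes); the gradient strength, dwell time, and coil position in the example are hypothetical.

```python
import numpy as np

# Proton gyromagnetic ratio divided by 2*pi, in Hz per tesla.
GAMMA_BAR_HZ_PER_T = 42.577e6


def peak_frequency_hz(signal: np.ndarray, dwell_s: float) -> float:
    """Frequency (Hz) of the strongest component of a complex coil signal."""
    spectrum = np.fft.fftshift(np.fft.fft(signal))
    freqs = np.fft.fftshift(np.fft.fftfreq(len(signal), d=dwell_s))
    return float(freqs[np.argmax(np.abs(spectrum))])


def coil_position_mm(freq_offset_hz: float, gradient_t_per_m: float) -> float:
    """Position along the gradient axis from x = df / (gamma_bar * G)."""
    if gradient_t_per_m == 0:
        raise ValueError("gradient must be non-zero")
    return freq_offset_hz / (GAMMA_BAR_HZ_PER_T * gradient_t_per_m) * 1e3  # m -> mm


def simulate_coil_signal(x_mm: float, gradient_t_per_m: float,
                         n_samples: int, dwell_s: float) -> np.ndarray:
    """Noise-free signal of a coil at x_mm (simplified single-axis model)."""
    df = GAMMA_BAR_HZ_PER_T * gradient_t_per_m * x_mm * 1e-3
    t = np.arange(n_samples) * dwell_s
    return np.exp(2j * np.pi * df * t)


if __name__ == "__main__":
    G = 10e-3      # 10 mT/m readout gradient (hypothetical)
    dwell = 10e-6  # 10 us sampling interval (hypothetical)
    sig = simulate_coil_signal(12.0, G, 256, dwell)
    x_est = coil_position_mm(peak_frequency_hz(sig, dwell), G)
    print(f"estimated position: {x_est:.2f} mm")
```

With 256 samples at 10 µs, the FFT bin width is 390.625 Hz, which at 10 mT/m corresponds to roughly 0.9 mm along the axis; the estimate therefore lands within one bin of the true 12 mm. Repeating the measurement with the gradient on each axis gives the 3D coil position.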

Our system exhibits a higher detection error than fluoroscopy-based approaches, primarily because of the lower spatial resolution of MRI. As a result, the artifacts produced by the guidewire markers are less sharply resolved, which makes tracking less precise than under fluoroscopy. While this may not be a problem for safely tracking instruments in larger vessels, it becomes a limitation when cannulating smaller arteries or veins. Tracking performance is likely governed mainly by the MRI acquisition sequences and by the material that produces the artifacts in the guidewire. Compared with needle tracking in MRI (Li et al. 2020), our detection error is considerably higher, at least twice as high in our best case. This discrepancy might be attributed to the limited visibility of the guidewire markers compared with the clearly visible needle. Evaluating a different MR-safe guidewire with the same MR sequences would be an interesting direction for future research. Furthermore, ex vivo studies on different vascular phantoms and animal specimens should be conducted to assess the influence of different materials and tissues on instrument tracking performance.

Conversely, MRI offers excellent visualization of the vasculature, which fluoroscopy cannot achieve without injecting a contrast agent that is potentially nephrotoxic for the patient.

Another constraint in MRI-guided procedures, whether performed manually or with robotic assistance, is temporal resolution. A sufficiently high frame rate is crucial for the safe execution of the procedure. It is also essential to design MRI acquisition sequences that balance spatial and temporal resolution, particularly in our robotic application, where haptic guidance relies on these parameters. The 3 frames per second (FPS) achieved in Scan A can be considered clinically acceptable, and there is potential for further improvement by integrating the Access-I software as outlined earlier.
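The link between frame rate and safe instrument speed is simple arithmetic: between two frames the instrument moves by v / f, where v is the advancement speed and f the frame rate. The sketch below uses a hypothetical per-frame displacement budget of 2 mm and a hypothetical push speed of 10 mm/s, chosen only for illustration, to show how these quantities scale with the frame rates of Scan A (3 FPS) and Scan B (1 FPS).

```python
def displacement_per_frame_mm(speed_mm_s: float, fps: float) -> float:
    """Distance the instrument travels between two consecutive frames."""
    if fps <= 0:
        raise ValueError("frame rate must be positive")
    return speed_mm_s / fps


def max_speed_mm_s(budget_mm: float, fps: float) -> float:
    """Highest speed that keeps the per-frame displacement within budget_mm."""
    if fps <= 0:
        raise ValueError("frame rate must be positive")
    return budget_mm * fps


if __name__ == "__main__":
    BUDGET_MM = 2.0   # hypothetical per-frame displacement budget
    PUSH_MM_S = 10.0  # hypothetical guidewire advancement speed
    for name, fps in [("Scan A", 3.0), ("Scan B", 1.0)]:
        print(name,
              f"max speed {max_speed_mm_s(BUDGET_MM, fps):.1f} mm/s,",
              f"{displacement_per_frame_mm(PUSH_MM_S, fps):.2f} mm/frame at {PUSH_MM_S} mm/s")
```

Under these assumptions, a 2 mm budget allows 6 mm/s at 3 FPS but only 2 mm/s at 1 FPS, and a 10 mm/s push moves the wire about 3.33 mm between frames in Scan A versus 10 mm in Scan B.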

Another useful lesson from the literature on fluoroscopy guidance is the use of a priori knowledge about the shape and mechanical behavior of the instrument to be tracked. For example, the spacing between the markers on the instrument is set by the manufacturer, so detecting the position of a single marker allows inferring where the other markers are likely to be. Detection can be further improved by knowing the mechanical properties of the instrument, which determine the maximum bend angle between two successive points. The inter-frame displacement vector of the markers is also useful for predicting their position. This ties in with robotic actuation of the instrument: knowing the displacement of the robotic actuators gives an even closer estimate of the displacement of the markers.
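These constraints can be combined into a simple predict, associate, and validate step. This is a minimal sketch under several assumptions: marker positions are 2D in mm; the marker spacing, tolerance, gating radius, and maximum bend angle are hypothetical parameters; and `robot_shift` is a hypothetical image-plane displacement derived from the actuator motion, applied as a uniform shift to all markers (a crude approximation that holds only when the distal segment translates roughly rigidly). It does not reproduce any specific method from the cited literature.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in mm in the image plane


def predict(prev: Sequence[Point],
            prev_prev: Optional[Sequence[Point]] = None,
            robot_shift: Optional[Point] = None) -> List[Point]:
    """Predict marker positions in the next frame.

    robot_shift (hypothetical) is applied uniformly to all markers; otherwise a
    constant-velocity guess reuses the last inter-frame displacement.
    """
    if robot_shift is not None:
        return [(x + robot_shift[0], y + robot_shift[1]) for x, y in prev]
    if prev_prev is not None:
        return [(2 * x1 - x0, 2 * y1 - y0)
                for (x1, y1), (x0, y0) in zip(prev, prev_prev)]
    return list(prev)


def associate(predicted: Sequence[Point], candidates: Sequence[Point],
              gate_mm: float) -> List[Optional[Point]]:
    """Greedily assign each prediction the nearest unused candidate within gate_mm."""
    used = set()
    matched: List[Optional[Point]] = []
    for p in predicted:
        best_j, best_d = None, gate_mm
        for j, c in enumerate(candidates):
            if j in used:
                continue
            d = math.dist(p, c)
            if d <= best_d:
                best_j, best_d = j, d
        if best_j is None:
            matched.append(None)  # marker treated as not detected in this frame
        else:
            used.add(best_j)
            matched.append(candidates[best_j])
    return matched


def plausible_chain(markers: Sequence[Point], spacing_mm: float,
                    tol_mm: float, max_bend_deg: float) -> bool:
    """Check consecutive markers against the known spacing and a bend-angle limit.

    In a 2D slice, out-of-plane bending shortens the apparent spacing, so
    tol_mm has to absorb that foreshortening.
    """
    for a, b in zip(markers, markers[1:]):
        if abs(math.dist(a, b) - spacing_mm) > tol_mm:
            return False
    for a, b, c in zip(markers, markers[1:], markers[2:]):
        v1 = (b[0] - a[0], b[1] - a[1])
        v2 = (c[0] - b[0], c[1] - b[1])
        norm = math.hypot(*v1) * math.hypot(*v2)
        if norm == 0:
            return False
        cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
        bend = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
        if bend > max_bend_deg:
            return False
    return True


if __name__ == "__main__":
    prev_prev = [(-1.0, 0.0), (9.0, 0.0), (19.0, 0.0)]
    prev = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0)]
    candidates = [(11.2, 0.3), (0.8, -0.2), (40.0, 40.0), (21.1, 0.1)]
    pred = predict(prev, prev_prev)  # constant velocity: +1 mm along x
    found = associate(pred, candidates, gate_mm=2.0)
    if None not in found:
        print(found, plausible_chain(found, spacing_mm=10.0, tol_mm=1.0,
                                     max_bend_deg=30.0))
```

In the example, the spurious candidate at (40, 40) falls outside every gate and is ignored, and the three accepted detections satisfy both the spacing and the bend-angle constraints. Gating around a prediction keeps the search local, which is where knowledge of the robot's motion would help most.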

6 Conclusion

The goal of this work was to develop a navigation framework based on magnetic resonance imaging for robot-assisted endovascular intervention. The system acquires and processes MR images in real time to segment the vasculature and track vascular instruments (a guidewire in this study). The tracking information is used to enhance instrument navigation by generating haptic guidance through the robotic manipulator.

The instrument tracking accuracy, a key aspect of the navigation framework, was assessed in four dedicated experiments with different acquisition settings (see Table 1), frame rates, and acquisition times (see Table 2). The experiments showed clinically acceptable tracking accuracy, with RMSE in the range of 1.30–3.80 mm. We are currently preparing an ex vivo user study with our MR-guided robotic platform to demonstrate its feasibility.

Our future research will aim at integrating the Siemens Access-I interface not only to explore automatic slice selection, but also to estimate the 3D pose of endovascular instruments with respect to the anatomy. The Siemens interface will be used to develop a program that automatically controls the MRI scanning planes and parameters. Online rotation of the MRI plane and/or adjustment of its parameters will allow the vascular instrument to be detected, observed, and tracked in 3D space. In this way, the algorithms proposed in this work can be fully reused to extend the framework to 3D applications.

We believe that this work represents a valuable first step towards MR-guided robot-assisted intervention and will pave the way for advanced tracking and navigation frameworks for endovascular interventions.

Availability of data and material

Not applicable.

References

Abdelaziz, M., et al.: Toward a versatile robotic platform for fluoroscopy and MRI-guided endovascular interventions: a pre-clinical study. Macau (2019)

Benavente Molinero, M., et al.: Haptic guidance for robot-assisted endovascular procedures: implementation and evaluation on surgical simulator. Macau (2019)

Cardiovascular diseases (CVDs): [Online]. https://www.who.int/news-room/fact-sheets/detail/cardiovascular-diseases-(cvds). Accessed 3 Oct 2023

Dagnino, G., et al.: In-vivo validation of a novel robotic platform for endovascular intervention. IEEE Trans. Biomed. Eng. (2022). https://doi.org/10.1109/TBME.2022.3227734

Dagnino, G., Liu, J., Abdelaziz, M.E.M.K., Chi, W., Riga, C., Yang, G.Z.: Haptic feedback and dynamic active constraints for robot-assisted endovascular catheterization. In: 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid (2018)

Durand, E., Sabatier, R., Smits, P.C., Verheye, S., Pereira, B., Fajadet, J.: Evaluation of the R-One robotic system for percutaneous coronary intervention: the R-EVOLUTION study. EuroIntervention. [Online]. https://eurointervention.pcronline.com/article/evaluation-of-the-r-one-robotic-system-for-percutaneous-coronary-intervention-the-r-evolution-study. Accessed 28 Sep 2023

Feng, Y., et al.: An efficient cardiac mapping strategy for radiofrequency catheter ablation with active learning. Int. J. Comput. Assist. Radiol. Surg. 12(7), 7 (2017). https://doi.org/10.1007/s11548-017-1587-4

Fernández-Gutiérrez, F., et al.: Comparative ergonomic workflow and user experience analysis of MRI versus fluoroscopy-guided vascular interventions: an iliac angioplasty exemplar case study. Int. J. Comput. Assist. Radiol. Surg. 10(10), 1639–1650 (2015). https://doi.org/10.1007/s11548-015-1152-y

Hill, K.D., et al.: Radiation safety in children with congenital and acquired heart disease. JACC Cardiovasc. Imaging 10(7), 797–818 (2017). https://doi.org/10.1016/j.jcmg.2017.04.003

Hopman, L.H.G.A., van de Veerdonk, M.C., Nelissen, J.L., Allaart, C.P., Götte, M.J.W.: Real-time magnetic resonance-guided right atrial flutter ablation after cryo-balloon pulmonary vein isolation. Eur. Heart J. Cardiovasc. Imaging 24(1), e23 (2023). https://doi.org/10.1093/ehjci/jeac211

Huang, S., et al.: MRI-guided robot intervention—current state-of-the-art and new challenges. Med-X 1(1), 4 (2023). https://doi.org/10.1007/s44258-023-00003-1

Jaubert, O., Steeden, J., Montalt-Tordera, J., Arridge, S., Kowalik, G.T., Muthurangu, V.: Deep artifact suppression for spiral real-time phase contrast cardiac magnetic resonance imaging in congenital heart disease. Magn. Reson. Imaging 83, 125–132 (2021). https://doi.org/10.1016/j.mri.2021.08.005

Kundrat, D., et al.: An MR-safe endovascular robotic platform: design, control, and ex-vivo evaluation. IEEE Trans. Biomed. Eng. 68(10), 3110–3121 (2021). https://doi.org/10.1109/TBME.2021.3065146

Lee, S.-L., Constantinescu, M., Chi, W., Yang, G.-Z.: Devices for endovascular interventions: technical advances and translational challenges. National Institute for Health Research and Clinical Research Network, UK, White paper. [Online] (2017). https://www.nihr.ac.uk/news-and-events/documents/cardio_report_2017.pdf

Li, X., et al.: Automatic needle tracking using Mask R-CNN for MRI-guided percutaneous interventions. Int. J. Comput. Assist. Radiol. Surg. 15(10), 1673–1684 (2020). https://doi.org/10.1007/s11548-020-02226-8

Ludman, P.F.: UK TAVI registry. Heart 105(Suppl 2), s2–s5 (2019). https://doi.org/10.1136/heartjnl-2018-313510

Ma, Y., Alhrishy, M., Narayan, S.A., Mountney, P., Rhode, K.S.: A novel real-time computational framework for detecting catheters and rigid guidewires in cardiac catheterization procedures. Med. Phys. 45(11), 5066–5079 (2018). https://doi.org/10.1002/mp.13190

Mahmud, E., et al.: Robotic peripheral vascular intervention with drug-coated balloons is feasible and reduces operator radiation exposure: results of the robotic-assisted peripheral intervention for peripheral artery disease (RAPID) study II. J. Invasive Cardiol. 32(10), 380–384 (2020)

Mainprize, J.G., Yaffe, M.J., Chawla, T., Glanc, P.: Effects of ionizing radiation exposure during pregnancy. Abdom. Radiol. (NY) 48(5), 1564–1578 (2023). https://doi.org/10.1007/s00261-023-03861-w

Molvar, C., Lewandowski, R.J.: Intra-arterial therapies for liver masses: data distilled. Radiol. Clin. North Am. 53(5), 973–984 (2015). https://doi.org/10.1016/j.rcl.2015.05.011

Nijsink, H., Overduin, C.G., Willems, L.H., Warlé, M.C., Fütterer, J.J.: Current state of MRI-guided endovascular arterial interventions: a systematic review of preclinical and clinical studies. J. Magn. Reson. Imaging 56(5), 1322–1342 (2022a). https://doi.org/10.1002/jmri.28205

Nijsink, H., et al.: Optimised passive marker device visibility and automatic marker detection for 3-T MRI-guided endovascular interventions: a pulsatile flow phantom study. Eur. Radiol. Exp. 6(1), 11 (2022b). https://doi.org/10.1186/s41747-022-00262-4

Patel, T., Shah, S., Pancholy, S.: Long distance tele-robotic-assisted percutaneous coronary intervention: a report of first-in-human experience. EClinicalMedicine (2019). https://doi.org/10.1016/j.eclinm.2019.07.017

Payne, C.J., Rafii-Tari, H., Yang, G.Z.: A force feedback system for endovascular catheterization. In: 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 1298–1304 (2012). https://doi.org/10.1109/IROS.2012.6386149

Rafii-Tari, H., Payne, C.J., Yang, G.-Z.: Current and emerging robot-assisted endovascular catheterization technologies: a review. Ann. Biomed. Eng. 42(4), 697–715 (2014). https://doi.org/10.1007/s10439-013-0946-8

Ramadani, A., Bui, M., Wendler, T., Schunkert, H., Ewert, P., Navab, N.: A survey of catheter tracking concepts and methodologies. Med. Image Anal. 82, 102584 (2022). https://doi.org/10.1016/j.media.2022.102584

Rui, S., et al.: Epicardial ventricular tachycardia ablation guided by a novel high-resolution contact mapping system: a multicenter study. J. Am. Heart Assoc. 7(21), e010549 (2018). https://doi.org/10.1161/JAHA.118.010549

Troccaz, J., Dagnino, G., Yang, G.-Z.: Frontiers of medical robotics: from concept to systems to clinical translation. Annu. Rev. Biomed. Eng. 21(1), 193–218 (2019). https://doi.org/10.1146/annurev-bioeng-060418-052502

van der Weide, R., Bakker, C.J., Viergever, M.A.: Localization of intravascular devices with paramagnetic markers in MR images. IEEE Trans. Med. Imaging 20(10), 1061–1071 (2001). https://doi.org/10.1109/42.959303

Walker, T.G., et al.: Clinical practice guidelines for endovascular abdominal aortic aneurysm repair: written by the Standards of Practice Committee for the Society of Interventional Radiology and endorsed by the Cardiovascular and Interventional Radiological Society of Europe and the Canadian Interventional Radiology Association. J. Vasc. Interv. Radiol. 21(11), 1632–1655 (2010). https://doi.org/10.1016/j.jvir.2010.07.008

Acknowledgements

The authors would like to thank Remco Liefers and Jaap Greve—medical imaging and radiation experts in the TechMed Centre, University of Twente—for the support provided during the experiments reported in this work.

Funding

Authors have no relevant financial or non-financial interests to disclose.

Author information

Contributions

J.B. contributed to the conceptualisation, methodology, and investigation; performed data analysis; wrote the first draft. D.K. contributed to the conceptualisation; reviewed and edited the final draft. G.D. contributed to the conceptualisation; wrote, reviewed, and edited the final draft; created the figures; supervised the research.

Ethics declarations

Conflict of interest

Authors have no conflict of interest to disclose.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bijlsma, J., Kundrat, D. & Dagnino, G. MR-based navigation for robot-assisted endovascular procedures. Int J Intell Robot Appl (2024). https://doi.org/10.1007/s41315-024-00340-3

DOI: https://doi.org/10.1007/s41315-024-00340-3