Abstract

The detection and continuous updating of buildings in geodatabases has long been a major research area in geographic information science and is an important theme for national mapping agencies. Advancements in machine learning techniques, particularly state-of-the-art deep learning (DL) models, offer promising solutions for extracting and modeling building rooftops from images. However, tasks such as automatic labelling of learning data and the generalizability of models remain challenging. In this study, we assessed the sensor and geographic area adaptation capabilities of a pretrained DL model implemented in the ArcGIS environment using very-high-resolution (50 cm) SkySat imagery. The model was trained for digitizing building footprints via Mask R‑CNN with a ResNet50 backbone using aerial and satellite images from parts of the USA. Here, we utilized images from three different SkySat satellites with various acquisition dates and off-nadir angles and refined the pretrained model using small numbers of buildings as training data (5–53 buildings) over Ankara. We evaluated the buildings in areas with different characteristics, such as urban transformation, slums, regular, and obtained high accuracies with F‑1 scores of 0.92, 0.94, and 0.96 from SkySat 4, 7, and 17, respectively. The study findings showed that the DL model has high transfer learning capability for Ankara using only a few buildings and that the recent SkySat satellites demonstrate superior image quality.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

Geographic information systems (GIS) and geographic information science (GIScience) enable collaborations and facilitate interdisciplinary work between different domains such as urban planning, resource management, and scientific disciplines (MacEachren 2000). They also promote volunteer participation in data collection and decision-making (Sun and Li 2016) by providing suitable platforms for data storage, access, analysis, and sharing (e.g., Can et al. 2020, 2021). Thanks to numerous online GIS platforms and open data repositories (e.g., the reference lists on OpenStreetMap Project (OpenStreetMap 2024), Open Aerial Map (2024), mapbox (2024) ArcGIS Online (ESRI 2024)), spatial analysis methods can be applied and tuned/configured by even non-professionals in the geospatial domain. Within this context, Rowland et al. (2020) also highlighted the increasing trend towards self-service applications among users, focusing not only on visualization and interactivity but also on analytics and usability features. GIS platforms also help to synthesize efforts of diverse science and engineering disciplines. A primary application domain has been smart cities. Collaboration among engineers, architects, computer scientists, urban planners, policymakers, decision-makers, and the general public is immensely required in this context (Buyukdemircioglu and Kocaman 2022).

The geometric and semantic updating of geodatabases is crucial to ensure their usability, and this task has long been a primary responsibility of national mapping agencies. Buildings in particular are subject to frequent changes due to construction or demolition. As the traditional approach, manual updating and mapping are highly challenging and time consuming. As an enabler, GIS platforms also facilitated crowdsourcing methodologies, such as volunteered geographic information (VGI) and citizen science. These approaches contribute to the collection and interpretation of geodata, and several studies exploring this aspect exist in the literature (e.g., see Chen and Zipf 2017; Fan et al. 2021; Can et al. 2020, 2021). Furthermore, recent advancements in deep-learning (DL) algorithms, particularly convolutional neural networks (CNN), have demonstrated significant potential for automatic detection and updating of various geospatial data including land use/land cover (LULC) types. Their efficiency in updating urban structures (Chen et al. 2021) and building footprints (Neupane et al. 2021; Buyukdemircioglu et al. 2021, 7,8,a, b), agricultural fields (Victor et al. 2022), complex LULC and topography (Sertel et al. 2022), and other related applications (Hoeser and Kuenzer 2020) has been demonstrated. Nevertheless, an ongoing challenge remains in ensuring the practicality and applicability of developed DL models and datasets across diverse user typologies and various geographical contexts.

As part of its commercial endeavors, the Environmental Systems Research Institute, Inc. (ESRI) in Redlands, California, has integrated pretrained CNN models for extraction and classification of various features, such as building footprints, cars, trees, ships, railways, etc. from images and point clouds obtained from optical imaging, radar, and light detection and ranging (LiDAR) sensors within their software environment (ESRI 2023a). The software is accessible to a user base exceeding 350,000 organizations, encompassing users of ArcGIS Desktop, Enterprise, and Online, as of the time of writing (ESRI 2023b). With its user-friendly interfaces, it is possible to execute the DL applications within this software environment without necessitating advanced coding skills. Hence, individuals who are not experts in DL within their respective fields can readily utilize these models in their analyses. They also have the option to fine-tune the models with a small dataset specific to their application area. This flexibility depends on the domain adaptation and transfer learning capabilities inherent to the model. Thus, by using the tools provided on the ArcGIS platform, a diverse range of users including local government personnel responsible for geodatabase updates and planning can enhance the performance of the pretrained DL models in specific application areas and increase the accuracy and reliability.

Transfer learning is an approach to apply knowledge and skills learned in previous tasks to new tasks (Pan and Yang 2010). If knowledge transfer between tasks is done successfully, the requirement of exhaustive training data collection can be reduced or avoided. Domain adaptation is a certain case of transfer learning (Farahani et al. 2021), occurring when the domains of a previous task and a new task are related but have different distributions.

Beside the growing interest in automated 3D building reconstruction with DL methods at the city scale (see a review by Buyukdemircioglu et al. 2022b), the number of studies focused on extracting buildings from aerial and satellite images is also increasing. Thanks to the domain-specific models, the use of pretrained weights for building extraction has also become a reasonable approach (e.g., see Chen et al. 2022; Bakirman et al. 2022; Buyukdemircioglu et al. 2022a). Although several DL-based building extraction studies exist with aerial images (e.g., Zhou et al. 2022; Yu et al. 2022; Hosseinpour et al. 2022; Buyukdemircioglu et al. 2022a), these datasets typically have superior radiometric and geometric quality when compared to satellite images. As an example, Wang et al. (2022) proposed a DL method and tested it with WHU and INRIA datasets that encompasses aerial images, which were free from relief and shadow effects. They obtained the highest F1 score as 96.7%. Gong et al. (2023) compared their proposed method with popular semantic segmentation methods (U-Net, SegNet, and PANet) and building extraction networks (SiU-Net, BRRNet, AGPNet, Res2-Unet, CFENet, and CBRNet) using three open building extraction datasets, such as Inria, East Asia, and Massachusetts. Among them, only East Asia encompasses satellite images and they achieved an F1 score of 82.8% with these data.

Chen et al. (2022) proposed a DL model for building extraction from satellite images utilizing three different datasets, such as the deep globe building extraction challenge (DG-BE-C) dataset, the urban 3D challenge (U-3D-C) dataset, and the 2021 Gaofen challenge building extraction and change detection in optical images (Gaofen-BECD) dataset. The DG-BE‑C dataset contains WorldView‑3 satellite imagery with 243,382 buildings with 30-cm ground sampling distance (GSD). The U‑3D‑C dataset contains 157,000 buildings labelled on commercial satellite imagery with 50 cm GSD. Images in the Gaofen-BECD dataset consisted of 4000 multitemporal images from Gaofen‑2 and Jilin‑1 satellites with resolution better than 1 m. They utilized pretrained weights on ImageNet-1K in their model and achieved F1 scores of 84 and 94% in difficult and easy test cases of the U‑3DC training set, respectively.

A recent study by Bakirman et al. (2022) proposed a new satellite image dataset composed of 150 Pléiades images and 40,000 buildings in Istanbul, and investigated various DL architectures. They comparatively evaluated widely used architectures (such as U‑Net, Unet++, DeepLabv3+, FPN, and PSPNet) and encoders (such as SEResNext, InceptionResNetv2, and EfficientNet) for building segmentation from the Istanbul dataset and obtained an Intersection over Union (IoU) of 94% as the highest prediction performance from Unet++ and SE-ResNeXt101. They also mentioned that the accuracy deteriorated with less commonly observed roof types.

In this study, we evaluated the accuracy and usability of the pretrained DL model developed by ESRI (2020) for extracting building footprints over a part of the Mamak district in Ankara, using SkySat satellite images after fine-tuning with a small amount of labelled building data. The pretrained model is designed and recommended for extracting building footprints specifically from very-high-resolution (VHR) aerial and satellite images over the USA. It operates with three spectral bands and requires GSD of 10–40 cm. The expected precision performance indicated for this model was at 71.8%. The model is based on Mask R‑CNN architecture (He et al. 2017) and is implemented on the ResNet50 backbone (He et al. 2016). The SkySat images used in the study were obtained as part of Cal/Val activities within the Earthnet Data Assessment Pilot (EDAP) Project of the European Space Agency (ESA) (Saunier et al. 2022). Their quality was previously analyzed for radiometry and geometry. The images were acquired over three different dates with off-nadir angles of 1.8°, 16.6°, and 22.4° from SkySat 7 (SKS7), SkySat 4 (SKS4), and SkySat 17 (SKS17) satellites. Consequently, we analyzed and presented the impact of image quality on the prediction results.

In addition, we trained the models separately in different parts of the Mamak district, which has a highly complex character with urban expansion, transformation, and slum areas (Yilmazer and Kocaman 2020). Consequently, this study introduces and discusses the performance of a model-adjustment approach for building detection with possibly unseen satellite (i.e., SkySat) data with 50-cm spatial resolution and tested it in three types of urban styles, such as high-rise buildings, slums, and others. The three SkySat images employed here have different viewing angles and acquisition periods. Furthermore, as the effectiveness of augmentation has been well recognized in the field of remote sensing (Yu et al. 2017; Abdelhack 2020), we applied a number of augmentation techniques to improve the accuracy. As an additional investigation, we employed the pretrained weights derived from the Istanbul dataset with Unet++ and SE-ResNeXt101 by Bakirman et al. (2022) and compared them with the results of the fine-tuned ESRI DL model. We utilized the Istanbul model due to the geographical similarity (both cities are located in the same country), the use of Pléiades satellite images with a similar GSD to SkySat satellites, and the availability of the model weights.

The main contribution of this study is the investigation of the efficiency of building detection from various SkySat satellite images via transfer learning, employing data augmentation techniques and a small dataset from areas with different urban characteristics. The influence of radiometric quality and image acquisition angles were addressed here. In addition, the domain adaptation approach implemented here works well with a small amount of labelled data, 5–53 buildings per image and urban style, indicating a clear pathway for domain adaptation for different regions and imaging conditions from satellites. Moreover, the usability of a pretrained DL model within a widely available GIS platform was evaluated by individuals who are not experts in DL applications or who have limited coding skills. Provision of such interfaces and models would allow widespread use of DL models without the requirement of programming knowledge or software installations. To our knowledge, this study stands as the first attempt to evaluate both the usability of the ArcGIS environment for building detection employing DL and the use of SkySat images for building detection using a transfer-learned DL method.

In this article, we first introduce the characteristics of the SkySat sensor and its products, followed by a description of the test site features and the methods used for building detection and validation in the next section. Section “Experimental Analysis Results” presents the results of the performance assessment for various settlement types, while the findings of the study are discussed in “Discussion” and the conclusions are presented in “Conclusion and Future Work.”

2 Materials and Methods

In this section, we present the SkySat sensor characteristics, describe the products used in the study, and introduce the test site. In addition, the building detection and validation methods are explained in detail.

2.1 SkySat Sensors and Products

The SkySat constellation is a group of commercial Earth observation (EO) satellites operated by Planet Labs PBC, San Francisco, California. The first unit, SkySat 1, was launched in 2013 (Murthy et al. 2014), and most recently, three units, SkySat 19–21, were launched in 2020. Starting from the third unit, SkySat 3, it was called the SkySat‑C generation as a high-resolution EO satellite (Planet 2022). The revisit period is 4–5 days per satellite at a nominal altitude of 500 km, providing multiple revisits per day for the constellation. All SkySat satellites contain three complementary metal oxide semiconductor (CMOS) pushframe cameras, which expand the width of a shooting range, with panchromatic and multispectral (blue, green, red, and near-infrared) bands.

SkySat data are distributed in four product lines: Basic Scene (BS), Ortho Scene (OS), Basemap, and SkySat Ortho Collect (SSC; Planet 2022). The BS is geometrically raw (uncorrected) and delivered with rational polynomial coefficients (RPCs) which can be used for stereo processing and orthorectification with an external digital elevation model (DEM). The OS involves sensor and geometric correction with a DEM with a spacing between 30 and 90 m by Planet (2022) in a map projection system. Although the accuracy of an OS product depends on the geographical location, the root mean square error (RMSE) is less than 10 m in general (Planet 2022). An SSC image consists of up to 60 OS products and the positional RMSE is similar to the OS based on long-term assessment results.

In this study, we utilized two products from an SSC product line, which were Analytic and Pansharpened Multispectral. The Analytic product is a four-band multispectral image generated after radiometric correction and orthorectification. The Pansharpened Multispectral product includes a four-band color-corrected image after orthorectification and pansharpening. The characteristics of the data used in the study are briefly summarized in Table 1.

A main issue in SSC images regarding building detection is relief artefacts, especially for tall objects, due to the sparse resolution of the DEMs employed for orthorectification. The degree of the relief displacement is determined by the elevation of the object and image acquisition specifications. Although the collection GSDs of all images were similar (0.70 m, 0.66 m, 0.64 m), the satellite off-nadir angle varied (16.6°, 1.8°, 22.4°) for the SKS4, SKS7, and SKS17. Therefore, we extracted rooftops instead of footprints due to the uncorrected relief displacement caused by building heights.

2.2 Ankara Test Site and the Dataset

Our test site is located in the Mamak district in the eastern part of Ankara (Fig. 1). Over the past 20 years, substantial development has taken place within the district, with numerous ongoing and planned construction projects. This brings diversity and complexity to the site, with a mixture of urban transformation areas with high-rise buildings, old residential areas which are now almost slums, and regular city parts. Several other studies were conducted in the area such as real estate valuation (Yilmazer and Kocaman 2020), natural hazard assessments (Yanar et al. 2020), or satellite data quality evaluations (e.g., Saunier et al. 2022; Yalcin et al. 2021). These studies were primarily motivated by topographic variations, with altitudes ranging from 900 to 1500 m, the diversity of the LULC types, and the rapidly changing environment, which allow several spatial analyses.

In this study, we used three SSC images with different characteristics in order to compare accuracy differences sourced from satellite and image acquisition angle differences. The main characteristics of the images are given in Table 1. The training and test areas were selected from discrete locations within the images. The training area was used for tuning the pretrained DL model provided in ArcGIS to adapt the test site and image sensor characteristics and thus increase the performance. Considering the need for a small training area, we selected a location that encompasses a wide variety of buildings to ensure better adaptation of the model. We assessed the performance of the refined model in test areas that were distinct from the training locations. Figure 2 shows the locations of training and test areas for SSC4, SSC7, and SSC17. The test area is shared between the SSC4 and SSC7 images, and the test area of SSC17 is included within this overlap due to the range of acquired images available. Regarding training and test areas, we focused on three kinds of regions, i.e., high-rises, slums, and others. The high-rise area is located in the urban transformation area and mainly involves newly constructed settlements. In slum regions, the majority of buildings are notably smaller and some of them have complex shapes resulting from impromptu renovations. The others encompass regular medium-rise buildings and include also the others such as mosques, malls, etc., with a variety of colors and shapes. We allocated one or two training areas to each of these region types per SSC image and trained the models individually for each urban style as explained in the next section. Table 2 presents the details of the training and test areas for each SSC image.

2.3 Building Detection Methodology

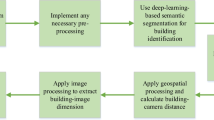

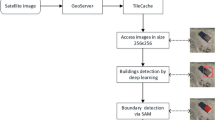

In this study, we extracted buildings from SkySat images over Ankara, using a DL model pretrained over the USA (ESRI 2020). Since the domains were closely related but not the same (e.g., sensor, geographic area), domain adaptation, which is a specific case of transfer learning, was employed in our study to reduce the effort of training sample collection over Ankara. We fine-tuned the model individually for each region, i.e., high-rises, slums, and others, using the respective training area data. We adopted a technique called ensemble modeling, which combines multiple models adjusted to specific characteristics in order to address variety. We predicted model performances in subparts of the test area, as the high-rise, slum, and other areas are distinctive (not mixed), and merged these subareas in the test regions to produce a combined accuracy result for each SkySat image. The numbers of buildings in the test area of SSC4, SSC7, and SSC17 are 2164, 2247, and 1770, respectively. The overall flow of our method is shown in Fig. 3. For processing, we used ESRI ArcGIS PRO v.2.8.

We eliminated the near-infrared band of SkySat and reduced the radiometric resolution to 8 bits as preprocessing to match the input data requirements of the pretrained model. We utilized the pretrained model entitled “Building Footprint Extraction—USA” (ESRI 2020). As stated by ESRI (2020), the model is applicable for the USA, i.e., designed to work well in that geographical region, with an average precision score of 71.8%. Although a further reference (ESRI 2021) emphasized that the model also performed well in other parts of the world and detection results from Sweden and Dubai were given as examples, no quantitative results were provided. The architecture and backbone of the model are Mask R‑CNN developed by He et al. (2017) and ResNet-50 (He et al. 2016), respectively. More details regarding how the model was constructed have not been disclosed.

2.3.1 Learning Step

The training samples to adapt the pretrained model for our task were manually delineated and rasterized with tile size and stride of 256 and 64 pixels in both X (east–west) and Y (north–south) directions. The pretrained model was tuned individually for the SSC4, SSC7, and SSC17 datasets with learning configurations given in Table 3. Data augmentation techniques such as dihedral affine, brightness, contrast, rand zoom, jitter, and symmetric warp from the fastai library v.1.0 (fastai 2022) were also applied to avoid overfitting. These techniques were selected considering the potential unseen variations in SkySat images when compared to aerial orthophotos. Dihedral affine applies a transformation fusing a horizontal or vertical flip and a multiple 90-degree rotation based on affine implementation. Brightness and contrast apply radiometric modifications to pixel intensity values with linear functions. The random zoom operation carries out scale modifications to the training data. Jitter swaps pixels with their neighbors. Lastly, symmetric warp applies tilt effects to image tiles in four directions simultaneously (fastai 2022).

The “arcgis.learn” module from ArcGIS Application Programming Interface (API) for Python (ESRI 2023c) was employed for transfer learning. Learning rate was determined by using the lr_find function in the module. Other optional parameters were used with the default values. Generally, finding optimal parameters is a time-consuming and labor-intensive process. In addition, these parameters vary depending on the dataset, training environment, etc. Thus, default settings for many parameters were adopted for ease of implementation. As an additional effort, we tested epochs with values of 20, 30, and 40. It was observed that the default value of 20 provided satisfactory accuracy, and increasing the number did not enhance the model performance but instead prolonged the duration of the process.

2.3.2 Prediction and Postprocessing Steps

The predicted segments for high-rise, slum, and other (mix) building types were merged to obtain the final set and non-maximum suppression was employed to remove duplicates with lower confidence.

2.4 Validation Approach

In order to assess the performance of our method, verifications were carried out manually based on instance detection. This involved labeling all instances identified as buildings in our study as either true or false. We conducted evaluations based on true positives (TP), false positives (FP), and false negatives (FN). Buildings predicted and actually existing were categorized as TP, those predicted but not existing were considered FP, and those existing but not predicted were marked as FN. In Sects. “Visual Inspection Results” and “Prediction Performance Results,” we visually inspected and classified predicted buildings as TP or FP solely based on their presence in the image, without considering IoU. In Sect. “Completeness of Building Shapes,” an IoU threshold of 0.5 was adopted for classification. These results were used to calculate precision, recall, and F1 score (Eqs. 1, 2 and 3), performance measures typically adopted in the literature (Ok 2013; Shao et al. 2020).

The ground truth was manually delineated on the SSC7 image obtained with nearly nadir viewing angles. Additionally, the Google Earth Pro platform was utilized to verify specific details. It must be noted that buildings present in either of the SSC4 or SSC17 images but absent in the SSC7 image were excluded from the assessment.

3 Experimental Analysis Results

In this section, we present the results derived from the various urban styles obtained from each SSC dataset with the prediction performance results, and give additional validation results regarding the completeness of the buildings.

3.1 Visual Inspection Results

Since we tuned the pretrained model to produce three models for high-rises, slums, and others, we analyzed the results for each specific region. Figures 4, 5, and 6 depict the results. Regarding high-rises, we observed some FNs in the SSC4 dataset, and although there were no FNs, some buildings displayed extracted wall surfaces in the SSC17 dataset. Additionally, several FPs are evident in Fig. 4. Across the three images, FPs were frequently noticed on sports courts, lawns, buildings under construction, and parking lots.

In slum regions, the majority of buildings were smaller compared to those in other regions. Additionally, some buildings exhibit complex shapes due to renovations. Within these regions, certain buildings were identified as several separate structures despite being parts of one larger building. Conversely, there were instances where multiple buildings were detected as connected although they were actually separate structures. Visual inspection revealed that the FNs were most abundant in these regions.

In terms of the other building types, a large proportion are medium-rise buildings with orange-colored rooftops. There are also other buildings such as mosques, large warehouses, etc., with diverse colors and shapes. FNs were observed less frequently in these regions. However, as illustrated in Fig. 6, several buildings, primarily exhibiting different colors and shapes, were either not detected or detected with ambiguous forms.

Figure 7 illustrates the results obtained from the pretrained model and those obtained from the retrained (tuned) models. While quantitative evaluations were not conducted in this comparison, it is evident from the figure that the retrained models detected buildings more accurately when a small quantity of local data was used for training. In a previous study, a detailed comparison between the pretrained model and a retrained model was reported in a part of the Ankara test site with urban structures similar to others used here and using SSC7 data (Sawa Hatta and Kocaman 2022). This study was conducted approximately 6 to 7 km away from the part evaluated here, and it demonstrated a similar trend. Furthermore, the model performance without fine-tuning provided F1 scores of 71.1%, while after the fine-tuning, this increased to 90.6% based on buildings with an IoU > 75% (Sawa Hatta and Kocaman 2022). The results without fine-tuning were found to be in line with the accuracy expectations reported by ESRI (71.8%), yet only SSC7 data with minimal relief distortions were evaluated.

3.2 Prediction Performance Results

We calculated the performance measures for the predictions after combining the results of the three models (Table 4). The assessments presented here were object based, i.e., validating the existence of buildings in the detection area, avoiding building completeness and the number of classified pixels. The precision values were high (0.98 and 0.99) in all three images. Merely 1 to 2% of the predicted buildings in each image were identified as FPs. The SSC4 image yielded the highest score, while the SSC17 image was the lowest, although the difference was negligible. Regarding the recall values, significant differences were noticeable among the images. The SSC17 image exhibited the highest recall (0.95), followed by the SSC7 (0.90) and the SSC4 (0.86) images. It is important to note the inverse relationship between precision and recall. Precision tends to increase as recall decreases, and vice versa. Therefore, we introduced the F1 score to evaluate the results while considering the balance between precision and recall. The SSC17 image yielded the highest F1 score. Since the precision values were similar across the images, the F1 scores primarily reflected the recalls.

From Table 4 it is evident that the performance metrics varied noticeably depending on the input image. As illustrated in Figs. 4, 5, and 6, the radiometric quality of the images differed. A previous study also indicated that the image quality tends to increase with newer SkySat sensors (Saunier et al. 2022). Our observations align with this trend. In this study, we found that the SSC7 image exhibited sharper details compared to the SSC4 image, and, similarly, the SSC17 image was sharper than the SSC7 image.

3.3 Completeness of Building Shapes

An additional investigation was conducted to further understand the differences in model performances concerning the different images used in the study. In the previously explained experiment, we visually assessed and categorized predicted buildings as TP or FP depending on their presence in the image. This classification criterion holds some significance, especially when a general understanding of the building distribution is needed. However, it lacks effectiveness when precise delineation of buildings with high similarity in size and shape is necessary, such as updating building polygons on a map. For this reason, we arranged the TPs by approximate completeness levels (CL) of the building shapes, i.e., CL > 90% (almost complete: AC), CL 50–90% (mostly correct: MC), and CL < 50% (partly correct: PC), based on visual inspection. We categorized a building as AC if it had complete edges and a segment area error approximately less than 4 pixels. A building with an IoU value greater than 0.5 but misrepresented edges was designated as MC. Finally, a building with an IoU value less than 0.5 was labelled as PC. Since ACs have straightforward edges and analogies to ground truth in size and shape, they have potential for direct utilization in other applications following simple regularization. However, MCs and PCs need to be improved before being converted to building shapes through regularization.

The classification results are shown in Table 5. Ratios were computed to compare the quantities of TPs among different images. Commonly, the majority of ACs were observed in other regions. In addition, they were mostly medium-rise buildings with simple orange-colored rooftops. Concerning the PCs, there were some cases of a building with a complicated or unusual roof shape being extracted in part(s), and adjacent buildings were detected as being connected. In addition, as can be seen in Table 5, the result of SSC7 was better than those of SSC4 and SSC17 based on the size and shape of TP buildings. As the viewing angles of SSC4 and SSC17 products were larger than the SSC7 image, we observed some TP predictions captured rooftops along with wall surfaces in the SSC4 and SSC17 images, due to relief displacements. This contributed to a decrease in accuracy (the ratio of AC) in the SSC4 and the SSC17 images.

4 Discussion

In this study, we analyzed the building detection performance of a DL model provided in the ESRI ArcGIS environment that was trained using data from the USA. We applied domain-adaptation to the model with a small amount of local data from the Mamak district, Ankara, based on three different urban characters, namely high-rise buildings, slums, and others (mostly settlements with regular mid-rise buildings). The numbers of rooftops used for domain adaptation of each region range from 5 to 53 (some buildings were even used twice or three times per image where a training area was common among images). Thus, three DL models were obtained for different building types. The employed images were acquired with three different satellites of the SkySat constellation, i.e., SKS4, SKS7, and SKS17, at different times and various viewing angles. Thus, the radiometric quality of the images was different. The results were validated using precision, recall, and F1 score values computed in each image.

The results show that high accuracy can be obtained from the transfer learning approach. Thanks to the desktop GIS environment of ESRI ArcGIS, the DL methods required minimal coding effort. Consequently, a widespread adoption of DL methods to aid in building extraction and geodatabase updating is anticipated and can be relied upon to support the operations of national mapping agencies. Furthermore, the transferability of the pretrained model can be notably enhanced by utilizing even a small quantity of training data for domain adaptation.

On the other hand, use of different DL models for different urban rooftop styles (e.g., slums, high-rises, others, etc.) is recommended to increase the prediction accuracy. The prediction accuracy declined when we combined all image tiles generated from the training areas shown in Sect. “Ankara Test Site and the Dataset” regardless of rooftop type and used them to produce one model. We performed this experiment using the test area of SSC7 for inference. In this experimental comparison, precision, recall, and F1 score were computed based on the IoU threshold of 0.5 using the ground truth. Here, buildings with an IoU greater than 0.5 (automatically computed in the software) were considered as TPs. The results showed that although precision decreased by 1%, the recall and F1 score values increased by 5.9 and 2.8%, respectively, when different DL models were applied for different rooftop types.

Furthermore, the use of different training sets for each rooftop type allowed us to decrease the number of required buildings for training. By using as few as five buildings for training, we could successfully predict high-rise buildings in SSC7. We used 10 and 14 buildings to fine-tune the DL models in SSC4 and SSC17, respectively. However, these values were around 30 and 50 for the others and the slums, respectively, which can be associated with the complexity and the variation of the roof type. The numbers of buildings in each learning set were selected based on data availability in the training region. Taking into account the findings of Sawa Hatta and Kocaman (2022) as well as the average precision score provided by ESRI (2020) at 71.8%, the results presented in this study suggest that fine-tuning the employed pretrained DL model could potentially lead to an enhancement in prediction performance of approximately 25–30%. This assumption was based on the achieved F1 scores of 92–94% given in Table 4. However, it is important to note that providing an exact value is not possible due to differences in the calculation methods of the mentioned measures (F1, average precision, IoU thresholds, etc.).

In neural networks, reducing the generalization error often necessitates more training data (Zhang et al. 2022). Expanding the training dataset and enhancing the generalization of neural networks and DL models in higher dimensions could potentially address various urban characteristics using a single model. However, acquiring a sufficient amount of high-quality training data poses a significant challenge in most cases, given that DL models have a vast number of parameters to train (Zhang and Zhang 2022). In our methodology described in Sect. “Building Detection Methodology,” by training different models based on regional features, we aimed to reduce the range of variations that one model needed to address, thus minimizing the generalization error. The results suggest that when training data are limited, using different DL models for different types of buildings could be a suitable approach.

Here, a number of data-augmentation procedures were applied to increase the model performance. Since choosing optimal augmentation techniques generally requires substantial effort, the selected processes in this study were determined heuristically based on the imaging conditions of the datasets. As an example, dihedral affine and zoom increased the model adaptability to different image resolutions and distortions, including relief effects or skewness caused by viewing angles and coarse DEM, which did not consider building-level orthorectification. Brightness and contrast increased the immunity to different imaging radiometric conditions, as the pretrained models were developed using aerial and satellite images with 10–40 cm GSD. Thus, depending on the employed data and the geographical area, utilization of augmentation techniques is recommended.

In order to give an impression of the accuracy of results obtained here with respect to the literature, we provide a brief summary in Table 6. It must be noted that direct comparisons might not be feasible due to different methodologies or datasets. We also considered our results for building segments as TPs only when the IoU was greater than 0.5 (automatically computed in the software). Considering the small amount of labelled data, it can be said that ours reach a sufficiently useful level of accuracy, which aligns closely with the highest accuracy achieved in Vegas, as reported in Prathap and Afanasyev (2018), and is slightly inferior to the findings in Hu et al. (2021). The DL models presented in both studies were trained with competition datasets, i.e., the SpaceNet dataset (SpaceNet on AWS 2022) and the mapping challenge dataset (AIcrowd 2022), with a large amount of training data. Considering the practical application of DL techniques, it is often impractical to build a DL model using an extensive amount of training data. The strength of our method lies in generating a highly accurate DL model with fewer training data through transfer learning from a pretrained model. In addition, as shown in Table 6, the model performance varies significantly among Vegas, Paris, Shanghai, and Khartoum. This indicates the requirement of further studies for geographic generalization. Furthermore, the results reported by Chen et al. (2022) with three different datasets involving images from various sensors such as WorldView‑3, Gaofen‑2, and Jilin‑1 yielded large variations (F1 scores between 68–94%), also showing the need for sensor adaptation.

Even with a vast amount of training data, achieving generalization of a model remains challenging. In this context, we evaluated the prediction performance of the pretrained model from the Istanbul dataset (Bakirman et al. 2022) and presented the results in Figs. 8, 9, and 10 and Table 7 in the Appendix. The numbers were computed based on predicted labels of the pixels located inside the same ground truth polygons as shown in Figs. 4, 5, and 6. Although the Unet++ model achieved an F1 score of 96.5% from Pléiades images over Istanbul, our results without fine-tuning indicate that the model is not generalizable even within the same country and using satellite images with similar GSDs. The highest accuracies were consistently obtained for others (mostly medium-rise buildings with orange-colored rooftops). Yet, the F1 scores varied largely among the sensors, such as 48% for SSC7, 82% for SSC17, and 86% for SSC4. In addition, the poorest accuracies were observed with high-rise buildings from SSC4 and SSC7 (6% for both), although the results were surprisingly good for SSC17 (92%). It must however be noted that complex and tall buildings could also not be detected well in Istanbul due to irregularity and shadows. The inter-sensor variations can be associated with the radiometric differences between the images. Based on our study results, retraining a pretrained model with a limited number of training samples tailored to the location and roof type was found to be a suitable approach. Furthermore, since the method proposed here is easy to use in the ArcGIS environment, it is recommended particularly for users with limited knowledge of DL method development and programming.

5 Conclusion and Future Work

In this study, we evaluated the transfer learning capability and the sensor and geographic area adaptation of a pretrained DL model implemented in the ArcGIS environment using VHR SkySat imagery (50 cm) obtained from three different sensors of the constellation (SkySat 4, SkySat 7, and SkySat 17) over a part of Ankara. The results showed that the transfer-learned model tuned with a small number of roofs and data-augmentation techniques was beneficial to detect buildings from the VHR images. However, it was observed that fine-tuning different models was necessary to accommodate various urban characteristics, i.e., urban transformation area with high-rise buildings, slum areas and the regular city parts. Highly accurate DL models were obtained with a small amount of training data (5–53 buildings depending on roof type) using a widely available GIS platform, the ArcGIS from ESRI. While the model design options in a transfer-learning approach are restricted when compared to training a model from scratch, the proposed approach can be beneficial for users with limited expertise in DL methods and software programming. Although training a model from scratch could also be highly beneficial (e.g., Buyukdemircioglu et al. 2022a), it requires a vast number of samples and knowledge of the different DL architectures and programming. Furthermore, preparation of training samples and tuning a pretrained model requires significantly less time when compared with model training from scratch.

The F1 scores obtained from SSC4, SSC7, and SSC17 were 0.92, 0.94, and 0.96, respectively. Although the data quality of the newer SkySat satellites as found to superior, image acquisition conditions influence the inference of the DL model. When considering the size and shape of detected rooftops, the result from SkySat 7 showed better performance compared to the others. The SSC7 image was acquired with an almost nadir angle and had fewer visible building facades compared to SSC4 and SSC17. Consequently, SSC7 detected rooftop areas more precisely. Employing three different SkySat satellite images for building detection revealed that the prediction results could be influenced by image quality and off-nadir acquisition angles when employing domain-adapted DL methods.

Future work involves evaluation of the approach in different geographical regions and by using other satellite images with different GSDs. The required minimum of building labels for domain adaptation and their impact on the accuracy is also a topic worth investigating, although the results may depend on regional variation (or urban complexity), sensor, and the DL model to be tuned. In addition, a methodology to facilitate automation of updating geodatabases with building footprints will be developed based on these results.

References

Abdelhack M (2020) A comparison of data augmentation techniques in training deep neural networks for satellite image classification. arXiv preprint arXiv:2003.13502v1

AIcrowd https://www.aicrowd.com/challenges/mapping-challenge#datasets. Accessed 2022-11-19

Bakirman T, Komurcu I, Sertel E (2022) Comparative analysis of deep learning based building extraction methods with the new VHR Istanbul dataset. Expert Syst Appl 202:117346

Buyukdemircioglu M, Kocaman S (2022) Development of a smart city concept in virtual reality environment. Int Arch Photogramm Remote Sens Spatial Inf Sci XLIII-B5-2022:51–58. https://doi.org/10.5194/isprs-archives-XLIII-B5-2022-51-2022

Buyukdemircioglu M, Can R, Kocaman S (2021) Deep learning based roof type classification using very high resolution aerial imagery. Int Arch Photogramm Remote Sens Spatial Inf Sci XLIII-B3-2021:55–60. https://doi.org/10.5194/isprs-archives-XLIII-B3-2021-55-2021

Buyukdemircioglu M, Can R, Kocaman S, Kada M (2022a) Deep learning based building footprint extraction from very high resolution true Orthophotos and nDSM. ISPRS Ann Photogramm Remote Sens Spatial Inf Sci V‑2-2022:211–218. https://doi.org/10.5194/isprs-annals-V-2-2022-211-2022

Buyukdemircioglu M, Kocaman S, Kada M (2022b) Deep learning for 3D building reconstruction: A review. Int Arch Photogramm Remote Sens Spatial Inf Sci: 359–366

Can R, Kocaman S, Gokceoglu C (2020) Development of a Citsci and artificial intelligence supported GIS platform for landslide data collection. ISPRS Virtual Congress 2020. https://doi.org/10.5194/isprs-archives-XLIII-B5-2020-43-2020

Can R, Kocaman S, Ok AO (2021) A WEBGIS framework for semi-automated geodatabase updating assisted by deep learning. Int Arch Photogramm Remote Sens Spatial Inf Sci XLIII-B5-2021:13–19. https://doi.org/10.5194/isprs-archives-XLIII-B5-2021-13-2021

Chen J, Zipf A (2017) DeepVGI: Deep learning with volunteered geographic information. In: Proceedings of the 26th International Conference on World Wide Web Companion, pp 771–772 https://doi.org/10.1145/3041021.3054250

Chen D, Zhong Y, Zheng Z, Ma A, Lu X (2021) Urban road mapping based on an end-to-end road vectorization mapping network framework. ISPRS J Photogramm Remote Sens 178:345–365. https://doi.org/10.1016/j.isprsjprs.2021.05.016

Chen X, Qiu C, Guo W, Yu A, Tong X, Schmitt M (2022) Multiscale feature learning by transformer for building extraction from satellite images. IEEE Geosci Remote Sens Lett 19:1–5

ESRI (2020) Building Footprint Extraction—USA. https://www.arcgis.com/home/item.html?id=a6857359a1cd44839781a4f113cd5934. Accessed 2024-01-21

ESRI (2021) Building Footprint Extraction: Sample detections using the building footprints (USA) deep learning model. ESRI Analytics. https://storymaps.arcgis.com/stories/69fb21b744204d75a1f7146602a0b479 (Created 15 Mar 2021). Accessed 2024-01-21

ESRI (2023a) Pretrained deep learning models. https://www.esri.com/en-us/arcgis/deep-learning-models. Accessed 2023-03-08

ESRI (2023b) Fact Sheet. https://www.esri.com/en-us/about/media-relations/fact-sheet. Accessed 2023-03-08

ESRI (2023c) arcgis.learn module. https://developers.arcgis.com/python/api-reference/arcgis.learn.toc.html. Accessed 2023-06-24

ESRI (2024) ArcGIS Online https://www.arcgis.com. Accessed 2024-01-20

Fan H, Kong G, Zhang C (2021) An Interactive platform for low-cost 3D building modeling from VGI data using convolutional neural network. Big Earth Data 5(1):49–65. https://doi.org/10.1080/20964471.2021.1886391

Farahani A, Voghoei S, Rasheed K, Arabnia HR (2021) A brief review of domain adaptation. Advances in data science and information engineering, pp 877–894 https://doi.org/10.1007/978-3-030-71704-9_65

fastai (2024) vision.transform. Retrieved from https://fastai1.fast.ai/vision.transform.html Accessed 2024-01-20

Gong M, Tongfei L, Mingyang Z, Qingfu Z, Lu D, Zheng H, Jiang F (2023) Context–content collaborative network for building extraction from high-resolution imagery. Knowl Based Syst 263:110283

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). https://doi.org/10.1109/CVPR.2016.90

He K, Gkioxari G, Dollár P, Girshick R (2017) Mask R‑CNN. IEEE International Conference on Computer Vision (ICCV). https://doi.org/10.1109/ICCV.2017.322

Hoeser T, Kuenzer C (2020) Object detection and image segmentation with deep learning on earth observation data: A review-part i: Evolution and recent trends. Remote Sens 12(10):1667

Hosseinpour H, Samadzadegan F, Javan FD (2022) CMGFNet: A deep cross-modal gated fusion network for building extraction from very high-resolution remote sensing images. ISPRS J Photogramm Remote Sens 184:96–115

Hu Q, Zhen L, Mao Y, Zhou X, Zhou G (2021) Automated building extraction using satellite remote sensing imagery, 123 https://doi.org/10.1016/j.autcon.2020.103509

MacEachren AM (2000) Cartography and GIS: facilitating collaboration. Prog Hum Geogr 24(3):445–456. https://doi.org/10.1191/030913200701540528

Mapbox https://www.mapbox.com/. Accessed 2024-01-20

Murthy K, Shearn M, Smiley BD, Chau AH, Levine J, Robinson MD (2014) Sensors, Systems, and Next-Generation Satellites XVIII. In: Meynard R, Neeck SP, Shimoda H (eds) SKS-1: Very High-Resolution Imagery from a Small Satellite, vol 9241. International Society for Optics and Photonics, Bellingham, p 92411E

Neupane B, Horanont T, Aryal J (2021) Deep learning-based semantic segmentation of Urban features in satellite images: a review and meta-analysis. Remote Sens 13(4):808. https://doi.org/10.3390/rs13040808

Ok AO (2013) Automated detection of buildings from single VHR multispectral images using shadow information and graph cuts. ISPRS J Photogramm Remote Sens. https://doi.org/10.1016/j.isprsjprs.2013.09.004

Open Aerial Map https://openaerialmap.org/. Accessed 2024-01-20

Open Street Map https://www.openstreetmap.org/. Accessed 2024-01-20

Pan SJ, Yang Q (2010) A survey on transfer learning. IEEE Trans Knowl Data Eng 22(10):1345–1359. https://doi.org/10.1109/TKDE.2009.191

Planet (2022) Planet Imagery Product Specifications. https://assets.planet.com/docs/Planet_Combined_Imagery_Product_Specs_letter_screen.pdf (Created 05.2022). Accessed 2022-11-16

Prathap G, Afanasyev I (2018) Deep learning approach for building detection in satellite multispectral imagery. 2018 International Conference on Intelligent Systems (IS). https://doi.org/10.1109/IS.2018.8710471

Rowland A, Folmer E, Beek W (2020) Towards self-service GIS—combining the best of the semantic web and web GIS. ISPRS Int J Geo Inform 9(12):753. https://doi.org/10.3390/ijgi9120753

Saunier S, Karakas G, Yalcin I, Done F, Mannan R, Albinet C, Kocaman S (2022) Skysat data quality assessment within the EDAP framework. Remote Sens 14(7):1646. https://doi.org/10.3390/rs14071646

Sawa Hatta KS, Kocaman S (2022) Building detection by fine-tuning of pre-trained deep learning models in SkySat images. ISPRS Congress 2022, Nice, France. https://isprs2022.stream-up.tv/media-215-building-detection-by-fine-tuning-of-pre-trained-deep-learning-models-in-skysat-images (Created 06.2022). Accessed 2023-06-24

Sertel E, Ekim B, Ettehadi Osgouei P, Kabadayi ME (2022) Land use and land cover mapping using deep learning based segmentation approaches and VHR Worldview‑3 images. Remote Sens 14(18):4558

Shao Z, Tnag P, Wang Z, Saleem N, Yam S, Sommai C (2020) BRRnet: a fully Convolutional neural network for automatic building extraction from high-resolution remote sensing images. Remote Sens 12(6):1050. https://doi.org/10.3390/rs12061050

SpaceNet on AWS https://spacenet.ai/spacenet-buildings-dataset-v2/. Accessed 2022-11-19

Sun Y, Li S (2016) Real-time collaborative GIS: A technological review. ISPRS J Photogramm Remote Sens 115:143–152. https://doi.org/10.1016/j.isprsjprs.2015.09.011

Victor B, He Z, Nibali A (2022) A systematic review of the use of deep learning in satellite imagery for agriculture. arXiv preprint arXiv:2210.01272

Wang Y, Zeng X, Liao X, Zhuang D (2022) B‑FGC-net: a building extraction network from high resolution remote sensing imagery. Remote Sens 14(2):269

Yalcin I, Kocaman S, Saunier S, Albinet C (2021) Radiometrıc quality assessment for Maxar HD imagery. Int Arch Photogramm Remote Sens Spatial Inf Sci XLIII-B3-2021:797–804. https://doi.org/10.5194/isprs-archives-XLIII-B3-2021-797-2021

Yanar T, Kocaman S, Gokceoglu C (2020) Use of Mamdani fuzzy algorithm for multi-hazard susceptibility assessment in a developing urban settlement (Mamak, ankara, Turkey). ISPRS Int J Geo Inform 9(2):114. https://doi.org/10.3390/ijgi9020114

Yilmazer S, Kocaman S (2020) A mass appraisal assessment study using machine learning based on multiple regression and random forest. Land Use Policy 99:104889

Yu M, Chen X, Zhang W, Liu Y (2022) AGs-Unet: building extraction model for high resolution remote sensing images based on attention gates U network. Sensors 22(8):2932

Yu X, Wu X, Luo C, Ren P (2017) Deep learning in remote sensing scene classification: a data augmentation enhanced convolutional neural network framework. GISci Remote Sens 54:5. https://doi.org/10.1080/15481603.2017.1323377

Zhang L, Zhang L (2022) Artificial intelligence for remote sensing data analysis: a review of challenges and opportunities. IEEE Geosci Remote Sens Mag. https://doi.org/10.1109/MGRS.2022.3145854

Zhang A, Lipton ZC, Li M, Smola AJ (2022) Dive into Deep Learning. arXiv:2106.11342. https://doi.org/10.48550/arXiv.2106.11342

Zhou Y, Chen Z, Wang B, Li S, Liu H, Xu D, Ma C (2022) BOMSC-Net: boundary optimization and multi-scale context awareness based building extraction from high-resolution remote sensing imagery. IEEE Trans Geosci Remote Sens 60:1–17

Acknowledgements

The authors sincerely thank support from the overseas fellowship for space development by the Ministry of Education, Culture, Sports, Science, and Technology (MEXT) in Japan. We are also thankful to the ESA EDAP team and Planet Labs PBC for provision of data. We gratefully acknowledge the continuous support of Mr. Recep Can.

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

1.1 Results from the Pretrained Istanbul Model

Result of high-rise region obtained from Unet++ Istanbul model of Bakirman et al. (2022) (a SSC4, b SSC7, c SSC17)

Result of slum region obtained from Unet++ Istanbul model of Bakirman et al. (2022) (a SSC4, b SSC7, c SSC17)

Result of other regions obtained from Unet++ Istanbul model of Bakirman et al. (2022) (a SSC4, b SSC7, c SSC17)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sawa, K., Yalcin, I. & Kocaman, S. Building Detection from SkySat Images with Transfer Learning: a Case Study over Ankara. PFG 92, 163–175 (2024). https://doi.org/10.1007/s41064-024-00279-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41064-024-00279-x