Abstract

In this empirical study we investigate hysteresis in the informal employment rate of six central African countries over the period 1981–2012. We use a more powerful approach, the autoregressive fractionally integrated moving average (ARFIMA) model. This methodology helps to overcome the well-known low power of traditional unit root tests. The hypothesis is confirmed for all the countries, except for Gabon and the Republic of Congo.


Introduction

In many developing countries, a majority of workers are employed in the informal economy, with low incomes, limited job security and no social protection. In Africa, job creation has mainly taken place in the informal economy, where it is estimated that as many as nine in ten rural and urban workers have informal jobs,Footnote 1 and this is especially the case for women and young people, who have no choice other than the informal economy for their survival and livelihood (International Labour Organization 2009). Countries belonging to the Economic and Monetary Community of Central Africa (CEMAC) also have such high shares of informal employment. The 2010 survey of Cameroon illustrates this: informal jobs accounted for 90.4 % of employment, of which 35.2 percentage points were in the urban informal sector and 55.2 percentage points in the rural informal sector. This high level of informal employment is a feature shared by other countries in the central African sub-region. As a second example, according to the 2013 country profile of Chad, 91 % of workers in the non-agricultural workforce are employed in the informal sector in their main job.

Informal employment is understood to include all remunerative work (both self-employment and wage employment) that is not recognized, regulated or protected by existing legal or regulatory frameworks, as well as non-remunerative work undertaken in an income-producing enterprise.Footnote 2 Despite the dynamism of job creation within the labour market, decent working conditions have not improved at the same rate. Job creation has gone hand in hand with the proliferation of non-standard work contracts in developed economies, such as temporary work and part-time employment, and with a persistently large informal economy in developing countries (International Institute for Labour Studies 2008).

The persistence of high informal employment in many developing countries continues to be a fundamental problem.Footnote 3 The stagnation or decline of employment opportunities in the formal sector is the main reason for the increase in informal sector activity.

Hysteresis, a term used by Blanchard and Summers (1986) to describe very high persistence, or a unit root (UR), in European unemployment, has attracted much attention from economists, and many researchers have found empirical evidence of unemployment hysteresis in several countries. Nevertheless, little work has been undertaken on informal employment in poor countries, where market mechanisms guide labour allocation.

One reason relatively few studies have examined the stationarity of informal employment rates in Sub-Saharan Africa is a lack of data: except for South Africa, very limited unemployment rate data series exist for underdeveloped African economies. Because hysteresis is associated with non-stationary unemployment rates, UR-tests have been widely used to investigate its validity. However, it is well known that conventional UR-tests have low power when the sample span is short. Panel-based procedures such as those of Maddala and Wu (1999) and Im et al. (2003), on the other hand, are attractive because they improve estimation efficiency by exploiting cross-sectional variation in the data.

Structural breaks are another obstacle to detecting the stationarity of the informal employment rate (IER). During the low-growth process of central African economies, structural changes have occurred in their labour markets: millions of workers have left the rural agricultural sector and moved into the urban service sector. Such movements lead to poor growth in real wages and unusual labour supply dynamics. It is therefore desirable to allow for structural breaks when testing the UR hypothesis for African IER.

Previous panel tests, however, do not consider structural changes when testing for a UR. Im et al. (2005) point out that any small size distortion in an individual time series accumulates in the panel framework, and they extend the univariate Lagrange multiplier (LM) UR-test with level shifts to a panel LM test. Like the Im et al. (2003) test, the panel LM test is based on the pooled likelihood function and is calculated as the average of the individual LM statistics from each time series. Im et al. (2005) further show that the asymptotic distribution of the panel LM test with level shifts does not depend on the nuisance parameter indicating the position of the break.
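To make the averaging step concrete, here is a minimal Python sketch of how a panel statistic of this kind is typically formed from the N individual LM statistics: average them, then centre and scale the average so that it is approximately standard normal for large N. The function name `panel_lm_statistic` is hypothetical, and the mean and variance of the individual statistic under the null must be taken from the tables in the original papers; here they are inputs, not values we supply.

```python
import math
from statistics import fmean
from typing import Sequence


def panel_lm_statistic(individual_lm: Sequence[float], mean_lm: float, var_lm: float) -> float:
    """Standardized panel statistic built from N individual LM unit-root statistics.

    individual_lm: the N country-level LM test statistics.
    mean_lm, var_lm: mean and variance of one individual statistic under the
    unit-root null (tabulated in the literature; assumptions of this sketch).
    """
    n = len(individual_lm)
    if n == 0:
        raise ValueError("need at least one series")
    lm_bar = fmean(individual_lm)  # cross-sectional average of the LM statistics
    # Centre and scale the average so the result is approximately N(0, 1).
    return math.sqrt(n) * (lm_bar - mean_lm) / math.sqrt(var_lm)


# Hypothetical inputs, for illustration only (not the paper's data or tabulated moments).
stat = panel_lm_statistic([-2.0, -3.0, -1.0], mean_lm=-1.8, var_lm=0.5)
print(round(stat, 2))  # -0.49
```

Under the usual convention for tests of this type, the standardized value is compared with the left tail of the standard normal distribution, so large negative values count against the joint unit-root null.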

While most of the empirical studies to date support the existence of a UR in unemployment, critics have claimed that this conclusion may be due to the low power of the conventional UR-tests employed.

Long-memory models have become increasingly popular as a tool to describe economic time series, and autoregressive fractionally integrated moving average (ARFIMA) (p, d, q) processes, which are known to be capable of modeling long-run persistence, play a key role in the time series literature. Introduced by Granger (1980), Granger and Joyeux (1980), and Hosking (1981), they generalize Box–Jenkins models by allowing the order of differencing (or integration) to be fractional.
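For reference, the fractional difference operator that makes this generalization possible can be written out through the standard binomial series; the expansion is general background rather than a result of this paper:

\[ (1-L)^{d} = \sum_{k=0}^{\infty}\binom{d}{k}(-L)^{k} = 1 - dL - \frac{d(1-d)}{2!}L^{2} - \frac{d(1-d)(2-d)}{3!}L^{3} - \cdots \]

For \(d=1\) every coefficient beyond the first lag vanishes and the operator reduces to the ordinary first difference \(1-L\); for non-integer d the weights never vanish and decay only hyperbolically, which is the source of long memory.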

The empirical analysis of long-memory models has received equally impressive treatment, including studies by Sowell (1992), Baillie (1996), Diebold and Inoue (2001), Doornik and Ooms (2003), Palma (2007) and Jiang and Tian (2010), to name but a few. However, research on the topic has focused mainly on developed countries rather than on developing economies such as African countries. To fill this gap, our main goal in this article is to use the ARFIMA methodology to estimate the persistence of informal employment in the labour forces of the central African zone. In other words, this paper applies the ARFIMA model to the IER of CEMAC countries.

The outline of this paper is as follows: in “Hysteresis and Labour Market”, we analyze the ideas of hysteresis and the labour market; “Arfima Model” reviews the ARFIMA model and its time series properties; “Econometric Results” then estimates the model describing CEMAC’s IER in recent years; finally, “Conclusion” provides concluding remarks.

Hysteresis and Labour Market

The basic principle of hysteresis was well recognized by economists—such as Frisch, Kaldor and Schumpeter (Cross and Allan 1988)—well before its revival in the seminal work of Blanchard and Summers (1986).

Fundamentally, two vague ideas revolve around the use of this term in economics: the path dependence property and the permanent effect of transitory shocks. The former implies that the equilibrium state of the system depends on the transition towards it, while the latter underlines the persistent effect of a shock to the system. The latter property is a major source of confusion between what is known as “UR persistence in discrete time” and “hysteresis”.

In the general literature, “hysteresis” is generally defined as a particular type of response of a non-linear system when one modifies the value of an input: the system is said to display the remanence property when there is a permanent effect on output after the value of the input has been modified and brought back to its initial position. Briefly, hysteresis occurs in non-linear models that exhibit multiple equilibria and the remanence property. “UR persistence”, on the other hand, arises mainly in linear models and lacks both the remanence effect and asymmetric persistence. Two forms of hysteresis are well documented: the weak form at the micro level and the strong form (aggregation of a large number of heterogeneous agents) at the macro level.Footnote 4

Historically, the term hysteresis comes from the Greek for “to be late, or come behind”. It was first applied to scientific explanation by the physicist Ewing (1885) to refer to effects (in terms of magnetisation) that remain after the initial cause (the application of a magnetising force) is removed. Hysteresis occurs when a system exhibits path-dependent but rate-independent memory. Hence, in systems that exhibit hysteresis, it is not possible to determine output without considering the history of the input, which is referred to as the memory effect.

Such effects have subsequently been discovered or invoked in relation to a wide array of physical, biological and social phenomena.Footnote 5 A common account of hysteresis as a systems property has been provided in Krasnosel’skii and Pokrovskii (1989). The key elements necessary to produce hysteresis are some form of non-linearity in the way the elements in a system respond to shocks; and heterogeneity in the elements and therefore in their responses to shocks.

The major implications of hysteresis are remanence, in that the application and reversal of a shock will not be followed by a return to the status quo ante; and selective memory, in that dominated extremum values are wiped out [see Cross (1993) for a general explanation of hysteresis in economic systems].
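The two ingredients named above (non-linearity and heterogeneity) and the two implications (remanence and selective memory) can be illustrated with a small discrete Preisach-type model, a standard device in the hysteresis literature. In this minimal Python sketch each hypothetical agent is a non-ideal relay that switches on when the input reaches its upper threshold and off when the input falls to its lower threshold; the integer thresholds are arbitrary illustrative choices, not estimates.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Relay:
    """Non-ideal relay: switches on at input >= alpha, off at input <= beta (beta < alpha)."""
    alpha: int
    beta: int
    on: bool = False

    def update(self, x: int) -> None:
        if x >= self.alpha:
            self.on = True
        elif x <= self.beta:
            self.on = False
        # Between beta and alpha the relay keeps its previous state: this is its memory.


def make_population(n: int = 9) -> List[Relay]:
    # Heterogeneous agents (hypothetical): agent i switches on at +i and off at -i.
    return [Relay(alpha=i, beta=-i) for i in range(1, n + 1)]


def aggregate_output(relays: List[Relay], path: Sequence[int]) -> float:
    """Feed an input path through every relay; return the share of relays that end up on."""
    for x in path:
        for r in relays:
            r.update(x)
    return sum(r.on for r in relays) / len(relays)


print(round(aggregate_output(make_population(), [0]), 3))              # 0.0
print(round(aggregate_output(make_population(), [0, 5, 0]), 3))        # 0.556
print(round(aggregate_output(make_population(), [0, 3, 0, 5, 0]), 3))  # 0.556
print(round(aggregate_output(make_population(), [0, 5, -2, 0]), 3))    # 0.333
```

After the input returns to 0 the aggregate stays at 5/9 rather than 0 (remanence), while the smaller peak of 3 in the third path leaves no trace once it is dominated by the later peak of 5 (selective memory). Only the non-dominated extrema, here 5 and -2 in the last path, determine the final state.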

Standard economic analysis assumes that economic equilibria are homeostatic, in that the reversal or removal of a temporary shock will be accompanied by a return to the initial equilibrium. The issue of hysteresis raises the question of whether this assumption holds in economic systems. Marshall (1890) thought that this assumption was likely to be violated in actual market processes, citing the effects of the shock to the supply of cotton during the American Civil War as an example. At a more aggregate level, Keynes (1934) answered the question “are economic systems self-adjusting?” in the negative. If temporary shocks can have permanent effects, economic equilibria become characterized by heterostasis: there is a range of possible equilibrium values, and the equilibrium actually realized is determined by the temporary shocks experienced.

Hysteresis thus involves stronger properties than those conveyed by using the term to describe persistence or a zero/unit root. In the persistence case, the natural rate equilibrium is unaffected by shocks to actual unemployment, whereas hysteresis implies that each new extremum value of the shocks experienced leads to a new unemployment equilibrium. In the zero/unit root case all the shocks experienced shape the equilibrium, whereas under hysteresis only the non-dominated extremum values of the shocks count in the equilibrium selection process.

In the policy literature the key distinction is frequently perceived as being between structural and actual unemployment: “...economic analysis generally distinguishes between the actual unemployment rate prevailing at any time, and the ‘natural’ (or ‘structural’) unemployment rate” (OECD 1994). The presence of hysteresis implies that temporary shocks can change the structural dynamics which help determine equilibrium unemployment (Amable et al. 1995). Thus, in contrast to the natural rate hypothesis (NRH), the shocks associated with the peaks and troughs of actual unemployment are themselves components of the process determining equilibrium unemployment.

Theoretically, the non-accelerating inflation rate of unemployment (NAIRU) and hysteresis are the two main hypotheses related to the explanation of unemployment and its persistence. Friedman (1968) and Phelps (1968) proposed the NRH, arguing that real variables determine their own behavior and, consequently, cannot be influenced permanently by nominal variables such as inflation. As a result, unemployment would converge to its natural rate in the long run, meaning that it should be a non-integrated process, I(0), with transitory shocks. On the other hand, Blanchard and Summers (1986) showed that insiders’ bargaining power in wage-setting implies that aggregate employment follows a random walk with drift. In this case, the unemployment rate would be an integrated process, I(1), and any shock to the series would shift equilibrium unemployment permanently from one level to another. This persistence is what defines the so-called hysteresis phenomenon. In other words, perturbations affecting unemployment can be either transitory (NAIRU) or permanent (hysteresis), and the degree of persistence they generate is a key determinant of the costs of disinflation.
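The contrast between the two hypotheses can be stated as a short worked derivation. Assuming, for illustration, an AR(1) around a natural rate \(u^{*}\) (a symbol introduced here) for the NRH and a random walk with drift \(\delta\) for hysteresis, the response of unemployment h periods after a shock \(\varepsilon_t\) is

\[ \begin{aligned} \text{NRH, } I(0):&\quad u_t = (1-\rho)u^{*} + \rho\,u_{t-1} + \varepsilon_t,\ \ |\rho|<1 \ \Rightarrow\ \frac{\partial u_{t+h}}{\partial \varepsilon_t} = \rho^{h} \to 0 \ \text{as } h\to\infty,\\ \text{hysteresis, } I(1):&\quad u_t = \delta + u_{t-1} + \varepsilon_t \ \Rightarrow\ \frac{\partial u_{t+h}}{\partial \varepsilon_t} = 1 \ \text{for all } h. \end{aligned} \]

Under the NRH a shock dies out geometrically; under a unit root it shifts the level permanently.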

As far as econometrics is concerned, the two theories stated above can be evaluated by means of UR-tests, in which the researcher estimates the order of integration “d” (see Eq. (1) below) of the series analyzed (Camarero and Tamarit 2004; Clement et al. 2005).

However, this methodology imposes that “d” take an integer value, i.e., informal employment is either I(0) or I(1), and thus discards the possibility of fractional integration, which ARFIMA models account for. Besides allowing for a fractional integration parameter, this methodology helps to overcome the well-known problem of the low power of traditional UR-tests. Such models are also able to jointly model the short-run and long-run dynamics of informal employment, which makes it possible to estimate useful impulse-response functions.

Arfima Model

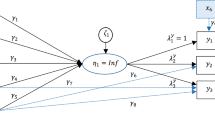

A time series that can be expressed as an invertible autoregressive moving average (ARMA) process after being differenced d times is said to be integrated of order d (Brockwell and Davis 2002). In the traditional UR literature, following the integrated ARMA models [ARIMA (p, d, q)] suggested by Box and Jenkins (1976), the integration parameter d is restricted to integer values (i.e. 0, 1, ...); the parameters p and q are the numbers of autoregressive (AR) and moving average (MA) terms included in the model. In the fractional integration literature, the parameter d may also take non-integer values (e.g. 0.01, 0.02, ...). In other words, the parameter d can take any real value in ARFIMA models. The fractional integration approach is therefore a generalization of the traditional view of time series. In the fractional integration literature, the parameter d is often referred to as the fractional integration parameter or (long) memory parameter (Baillie 1996). Following Granger (1980), Granger and Joyeux (1980) and Hosking (1981), a long-memory ARFIMA (p, d, q) model for \(y_t\) is defined as

\[ \Phi(L)\,(1-L)^{d}\,y_t = \theta(L)\,\varepsilon_t, \qquad (1) \]

where \(t = 1,2,\ldots,T\) is time and T is the total number of observations, d is the fractional integration parameter, L is the lag operator and \(\varepsilon_t\) is a white noise process (i.e. a process of independent and identically distributed observations with zero mean and variance \(\sigma_\varepsilon^2\)). The functions \(\Phi(L)\) and \(\theta(L)\) in Eq. (1) represent the AR operator and the MA operator, respectively. The AR and MA operators have no common roots, and all roots of their characteristic polynomials lie outside the unit circle (Baillie 1996). The AR and MA polynomials are, respectively,

\[ \Phi(L) = 1 - \phi_1 L - \phi_2 L^{2} - \cdots - \phi_p L^{p}, \qquad (2) \]

\[ \theta(L) = 1 + \theta_1 L + \theta_2 L^{2} + \cdots + \theta_q L^{q}. \qquad (3) \]

Since the AR and MA parts defined by Eqs. (2) and (3) have no influence on the long-run behavior of the time series, these components are referred to as short-memory components (Baillie 1996; Funke 1998). The long-run properties of the time series are described by the memory parameter d (Baillie 1996; Funke 1998). Table 2 (see “Appendix”) summarizes the characteristics associated with different values of d.

A process is said to have short memory when d is equal to zero \((d=0)\). Short-memory time series are stationary in both mean and variance. This means that the series reverts to its equilibrium level after a disturbance to the process (Baillie 1996). Mean reversion is fairly rapid, since there is no long memory in the time series when \(d=0\).

As Table 2 shows, there are several cases in which a process possesses long memory. When \(0<d<0.5\) the series is more persistent than when \(d=0\): because there is some long memory, the process takes longer to return to its mean value than when \(d=0\), so a greater value of the memory parameter implies more persistence in the series. Any process with d in the interval \(0.5 \le d < 1\) is even more persistent than one with \(0 \le d < 0.5\). Table 2 reveals that such a series is still mean-reverting even though it is covariance non-stationary. Covariance non-stationarity arises because the variance is no longer finite (Granger 1980; Granger and Joyeux 1980; Hosking 1981). The series will nevertheless return to its mean value in the long run; it is only a matter of time.

The three cases discussed so far support the idea of a natural rate of informal employment (NRIE): when \(d<1\), the NRIE hypothesis describes the process best (see Table 2). In other words, as long as the series is mean-reverting, the informal employment equilibrium (IEE) is unchanged in the long run and no policy measures are needed. The hysteresis hypothesis is supported when the series has long memory with a memory parameter greater than or equal to one \((d\ge 1)\). Since the series is then neither mean-reverting nor covariance-stationary, the IEE will change in the long run as the series is hit by disturbances. From a statistical point of view, a series with an integration order greater than or equal to one is problematic: its variance is not finite and there is no mean value to return to, so the dispersion of the series grows without bound as the number of observations increases. The series will therefore wander away in any direction if the process is left untreated, and the effect of a shock is neither predictable nor transitory. To achieve stationarity, the series must be differenced at least once when \(d\ge 1\).
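The persistence ordering above can be checked numerically through the impulse-response (moving-average) weights of a pure fractional process \((1-L)^{d}y_t=\varepsilon_t\), which give the effect on \(y_{t+k}\) of a unit shock at t. The following minimal Python sketch assumes no ARMA terms (p = q = 0) and uses the standard recursion for the coefficients of \((1-L)^{-d}\); the function name is hypothetical, and the values 0.762 and 1.292 are simply two of the point estimates reported later in the paper.

```python
from typing import List


def fractional_impulse_response(d: float, horizon: int) -> List[float]:
    """MA(infinity) weights psi_0..psi_horizon of (1 - L)^(-d): response of y_{t+k} to a unit shock at t."""
    psi = [1.0]
    for k in range(1, horizon + 1):
        # psi_k = psi_{k-1} * (k - 1 + d) / k, the binomial-series recursion for (1 - L)^(-d)
        psi.append(psi[-1] * (k - 1 + d) / k)
    return psi


for d in (0.0, 0.3, 0.762, 1.0, 1.292):
    psi = fractional_impulse_response(d, 50)
    print(f"d = {d}: psi_1 = {psi[1]:.3f}, psi_10 = {psi[10]:.3f}, psi_50 = {psi[50]:.3f}")
```

For \(d<1\) every recursion factor \((k-1+d)/k\) is below one, so the weights fall towards zero (mean reversion, which is slow when d is close to 1); for \(d=1\) they stay at one, and for \(d>1\) they grow, so a shock is never undone.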

Sowell’s (1992) exact maximum likelihood (EML) estimator assumes that a vector of observations \(Y=(y_1, y_2, \ldots, y_T)'\) generated by the fractionally integrated model in Eq. (1) follows a normal distribution with zero mean and covariance matrix \(\Sigma\). The log likelihood function of the ARFIMA process in Eq. (1) is (see Sowell 1992; Franke et al. 2008)

\[ \log L(\Phi,\theta,d) = -\frac{T}{2}\log(2\pi) - \frac{1}{2}\log\lvert\Sigma\rvert - \frac{1}{2}\,Y'\Sigma^{-1}Y, \qquad (4) \]

where \(\Sigma\) depends on \((\Phi,\theta,d)\) and \(\sigma_\varepsilon^2\).

Sowell’s (1992) EML estimator is obtained by maximizing the log likelihood function with respect to the parameters of interest \((\Phi,\theta,d)\). Consequently, the EML estimate of the memory parameter is obtained through

\[ (\hat{\Phi},\hat{\theta},\hat{d}) = \arg\max_{\Phi,\theta,d}\ \log L(\Phi,\theta,d). \qquad (5) \]

An advantage of Sowell’s (1992) EML estimator is that the short-memory (ARMA) components are estimated simultaneously with the long-memory component (Baillie 1996), so the effects of the two types of component are efficiently separated from each other. Moreover, a correct specification of the ARMA part of an ARFIMA model is crucial for obtaining an unbiased estimate of the memory parameter (Gil-Alana 2001).

The maximum likelihood (ML) estimator in Eq. (5) performs well in finite samples. Additionally, the EML estimator produces asymptotically normally distributed estimates of the memory parameter when \(d>0\) (Dahlhaus 1989; Yajima 1985), so inference based on normality assumptions applies. Dahlhaus (1989) and Yajima (1985) also conclude that the ML estimator is efficient.Footnote 6
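The exact Gaussian log likelihood maximized by the EML estimator can be evaluated without inverting \(\Sigma\) explicitly. The minimal Python sketch below does this for the special case of an ARFIMA (0, d, 0) with \(-0.5<d<0.5\), using the closed-form autocovariances of that process and the Durbin–Levinson recursion to factor the likelihood into one-step prediction errors. This is a swapped-in, standard way of computing the same exact Gaussian likelihood, not Sowell's (1992) algorithm, which also handles the ARMA terms; for \(d \ge 0.5\) the series would typically be differenced first. Function names are hypothetical.

```python
import math
from typing import List, Sequence


def arfima0d0_autocov(d: float, sigma2: float, n: int) -> List[float]:
    """Autocovariances gamma(0..n-1) of a stationary ARFIMA(0, d, 0) with innovation variance sigma2."""
    if not -0.5 < d < 0.5:
        raise ValueError("stationary ARFIMA(0, d, 0) requires -0.5 < d < 0.5")
    gamma = [sigma2 * math.gamma(1 - 2 * d) / math.gamma(1 - d) ** 2]
    for k in range(1, n):
        # gamma(k) = gamma(k-1) * (k - 1 + d) / (k - d)
        gamma.append(gamma[-1] * (k - 1 + d) / (k - d))
    return gamma


def exact_gaussian_loglik(x: Sequence[float], gamma: Sequence[float]) -> float:
    """Exact zero-mean Gaussian log likelihood via the Durbin-Levinson recursion.

    Equal to -T/2 log(2 pi) - 1/2 log|Sigma| - 1/2 x' Sigma^{-1} x with
    Sigma[i][j] = gamma(|i - j|), but built from one-step prediction errors.
    """
    n = len(x)
    phi: List[float] = []  # phi[j] holds phi_{t, j+1}, the order-t prediction coefficients
    v = gamma[0]           # one-step prediction error variance v_t
    loglik = 0.0
    for t in range(n):
        xhat = sum(phi[j] * x[t - 1 - j] for j in range(len(phi)))
        e = x[t] - xhat
        loglik -= 0.5 * (math.log(2 * math.pi * v) + e * e / v)
        if t == n - 1:
            break
        # Durbin-Levinson step: raise the prediction order from t to t + 1
        k = t + 1
        partial = (gamma[k] - sum(phi[j] * gamma[k - 1 - j] for j in range(len(phi)))) / v
        phi = [phi[j] - partial * phi[len(phi) - 1 - j] for j in range(len(phi))] + [partial]
        v *= 1 - partial * partial
    return loglik


# Illustrative profile of the likelihood over d for a short, hypothetical demeaned series.
x = [0.3, -0.1, 0.5, 0.2, 0.4, 0.1]
for d in (-0.2, 0.0, 0.2, 0.4):
    print(d, round(exact_gaussian_loglik(x, arfima0d0_autocov(d, 1.0, len(x))), 3))
```

Maximizing this function over d (and \(\sigma_\varepsilon^2\)) gives an exact ML estimate for the ARFIMA (0, d, 0) case; adding ARMA terms changes only the autocovariance function.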

A frequently used method for selecting the most appropriate ARFIMA model is first to determine the number of AR and MA components to be included (Box and Jenkins 1976; Gil-Alana 1999, 2001, 2002). Once this is done, diagnostic tests are conducted to corroborate that the model is correctly specified. In earlier papers the residuals are usually tested at least for autocorrelation and normality (Gil-Alana 1999, 2002; Kurita 2010). Other papers test for autoregressive conditional heteroskedastic (ARCH) errors as well (Gil-Alana 1999). Earlier research has, however, shown that testing for ARCH is unnecessary if the main interest is in the long-run parameter, since ARCH errors do not affect the memory estimate d (Hauser and Kunst 1998).

Finally, an information criterion (IC) is used to choose the most suitable model. The Akaike information criterion (AIC) or a Bayesian variant such as the Schwarz information criterion (SIC) is often used (Koustas and Veloce 1996; Gil-Alana 1999, 2001). The AIC is

\[ \mathrm{AIC} = -2\log \hat{L} + 2k, \qquad (6) \]

where \(\hat{L}\) is the maximized value of the likelihood and k is the number of parameters (AR and MA components and the intercept) estimated in the model.
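The practical difference between the two criteria lies in the penalty term. In this minimal Python sketch (hypothetical function names), the SIC is written in its usual form \(-2\log\hat{L} + k\log T\), where \(\hat{L}\) is the maximized likelihood: with T = 32 annual observations (1981–2012), the SIC charges \(\log 32 \approx 3.47\) per parameter against 2 for the AIC. The two log-likelihood values are invented for illustration.

```python
import math


def aic(loglik: float, k: int) -> float:
    """Akaike criterion: -2 log L + 2k."""
    return -2.0 * loglik + 2.0 * k


def sic(loglik: float, k: int, n_obs: int) -> float:
    """Schwarz (Bayesian) criterion: -2 log L + k log T."""
    return -2.0 * loglik + k * math.log(n_obs)


T = 32  # annual observations, 1981-2012
small = (-40.0, 2)  # hypothetical (log likelihood, k) for a parsimonious model
large = (-37.5, 4)  # hypothetical (log likelihood, k) for a richer model
print(aic(*small), aic(*large))                        # 84.0 83.0: AIC prefers the richer model
print(round(sic(*small, T), 2), round(sic(*large, T), 2))  # 86.93 88.86: SIC prefers the smaller one
```

The same gain in fit is enough to win under the AIC but not under the SIC, which is the overfitting/underfitting trade-off described next.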

The AIC tends to overfit models (include too many parameters), while Bayesian ICs sometimes underfit them. Since Bayesian ICs often lead to underfitted models when the sample is small, the AIC is used in this work. After verifying the stationarity properties of the series with UR-tests, we take the following four-step approach to finding appropriate ARFIMA models:

(a) Several ARFIMA models are fitted to the data. An ARFIMA (3, d, 3) is estimated first, then a somewhat smaller model, and so on for all combinations of AR and MA components until the smallest model, an ARFIMA (0, d, 0), is reached (see Tables 3, 4, 5 in the “Appendix”). Only models in which all AR and MA components are significantly different from zero at a conventional significance level (1, 5 or 10 %) continue to the next step. Models with one or more insignificant coefficients are omitted from further analysis at this point.

(b) The remaining models are tested for autocorrelation in the residuals using the Breusch–Godfrey (BG) LM test. Serial correlation in the residuals indicates that a model is likely misspecified, so models in which the null hypothesis of no autocorrelation is rejected at the 1 % significance level are considered invalid and omitted at this stage.

(c) Models that pass step (b) are next tested for normality of the residuals using the Jarque–Bera (JB) LM test. In this paper a model passes the JB test as long as the null hypothesis of normality is not rejected at the 1 % significance level. The assumption of normal residuals is, however, not crucial for the findings of the paper: the samples are reasonably long (more than 30 years), so tests based on normality assumptions still apply.

(d) The last step is to choose the model that minimizes the AIC (see Eq. (6)). Because the AIC decreases as the maximized log likelihood increases, a better-fitting model has a smaller AIC.

Furthermore, the AIC takes the number of parameters into account, since the last term in Eq. (6) penalizes the inclusion of more parameters. Note that the AIC is a measure for comparing a set of correctly specified models; in this paper, these are the models that have passed the criteria stated above: significant AR and MA estimates, no serial correlation in the residuals and, preferably (but not necessarily), normality of the residuals.

When comparing models by their AIC values, models that pass both the autocorrelation test and the normality test are always preferred over models that only pass the autocorrelation test. For instance, suppose that we have a correctly specified modelFootnote 7 with a larger AIC value than a similar model that only passes the autocorrelation test (but still has significant parameter estimates). In that case, the former model is preferred despite its larger AIC value, since it passes both tests.
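Steps (a) to (d), together with the preference rule just described, amount to a filter-then-rank procedure. The minimal Python sketch below assumes that each candidate ARFIMA fit has already been estimated and summarized by its AIC, the p-values of its AR and MA coefficients and the p-values of the BG and JB tests; the `Candidate` record and `select_model` function are hypothetical, and the 10 % level used in step (a) is an assumption (the loosest of the levels mentioned).

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Candidate:
    """Summary of one fitted ARFIMA(p, d, q) model (hypothetical record)."""
    p: int
    q: int
    d: float
    aic: float
    arma_pvalues: Sequence[float]  # p-values of the AR and MA coefficients
    bg_pvalue: float               # Breusch-Godfrey test, H0: no residual autocorrelation
    jb_pvalue: float               # Jarque-Bera test, H0: normal residuals


def select_model(candidates: Sequence[Candidate],
                 sig_level: float = 0.10,
                 diag_level: float = 0.01) -> Optional[Candidate]:
    # (a) keep models whose AR and MA coefficients are all significant
    #     (an ARFIMA(0, d, 0) has no such coefficients and passes trivially)
    kept = [c for c in candidates if all(pv < sig_level for pv in c.arma_pvalues)]
    # (b) drop models whose residuals fail the BG test at the 1 % level
    kept = [c for c in kept if c.bg_pvalue >= diag_level]
    if not kept:
        return None
    # (c) prefer models whose residuals also pass the JB test at the 1 % level
    normal = [c for c in kept if c.jb_pvalue >= diag_level]
    pool = normal if normal else kept
    # (d) smallest AIC within the preferred pool
    return min(pool, key=lambda c: c.aic)
```

If, say, the lowest-AIC survivor of step (b) fails the normality test while a slightly higher-AIC model passes both tests, the function returns the latter, mirroring the rule in the text; only when no survivor passes the JB test does it fall back to the plain AIC ranking.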

Econometric Results

Estimation of the ARFIMA Model

Long-memory processes are stationary processes whose autocorrelation functions (ACF) decay more slowly than short-memory processes. Because the autocorrelations die out so slowly, long-memory processes display a type of long-run dependence. The ARFIMA model provides a parsimonious parameterization of long-memory processes. This parameterization nests the ARMA model, which is widely used for short-memory processes.
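The difference between slow (hyperbolic) and fast (exponential) decay is easy to see numerically. This minimal Python sketch compares the autocorrelation function of a stationary ARFIMA (0, d, 0), computed with the standard recursion \(\rho_k = \rho_{k-1}(k-1+d)/(k-d)\), with that of an AR(1) whose lag-one autocorrelation is matched; d = 0.4 is an arbitrary illustrative value and the function names are hypothetical.

```python
from typing import List


def arfima_acf(d: float, max_lag: int) -> List[float]:
    """Autocorrelations of a stationary ARFIMA(0, d, 0), valid for -0.5 < d < 0.5."""
    if not -0.5 < d < 0.5:
        raise ValueError("the ACF exists only for -0.5 < d < 0.5")
    rho = [1.0]
    for k in range(1, max_lag + 1):
        # rho_k = rho_{k-1} * (k - 1 + d) / (k - d): hyperbolic decay, roughly like k**(2d - 1)
        rho.append(rho[-1] * (k - 1 + d) / (k - d))
    return rho


def ar1_acf(phi: float, max_lag: int) -> List[float]:
    """Autocorrelations of a stationary AR(1): phi**k, i.e. exponential decay."""
    return [phi ** k for k in range(max_lag + 1)]


d = 0.4
long_mem = arfima_acf(d, 50)
short_mem = ar1_acf(long_mem[1], 50)  # AR(1) with the same lag-1 autocorrelation d / (1 - d)
for lag in (1, 5, 10, 50):
    print(lag, round(long_mem[lag], 4), round(short_mem[lag], 4))
```

Both processes start from the same lag-one autocorrelation of 2/3, but at lag 50 the long-memory ACF is still roughly 0.3 while the AR(1) ACF is essentially zero.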

The ARFIMA model also generalizes the autoregressive integrated moving average (ARIMA) model with integer degrees of integration. In estimating an ARIMA model, the researcher chooses the integer order of differencing d to guarantee that the resulting series \(\left( {1-L} \right) ^{d}y_t \) is a stationary process. As UR-tests often lack the power to distinguish between a truly non-stationary [I(1)] series and a stationary series [I(0)] embodying a structural break or shift, time series are often first-differenced if they do not receive a clean bill of health from UR testing. Many time series exhibit too much long-range dependence to be classified as I(0) but are not I(1). The ARFIMA model is designed to represent these series.

This problem is exacerbated by reliance on augmented Dickey–Fuller (ADF)-tests, including the improved Elliott–Rothenberg–Stock (ERS)-test, which have I(1) as the null hypothesis and I(0) as the alternative. For that reason, it is a good idea to also use a test with the opposite null hypothesis of stationarity [I(0)], such as the Kwiatkowski–Phillips–Schmidt–Shin (KPSS)-test, to see whether its verdict agrees with that of the Dickey–Fuller style (DF)-tests. The KPSS-test, with a null hypothesis of I(0), is also useful in the context of the ARFIMA model we now consider. This model allows the series to be fractionally integrated, generalizing the ARIMA model’s integer order of integration by allowing the d parameter to take fractional values; the process is stationary and invertible for \(-0.5<d<0.5\).
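The confirmatory use of DF-type and KPSS-tests amounts to a simple decision table. The minimal Python sketch below encodes it, assuming the two p-values have already been computed (for example with `adfuller` and `kpss` from `statsmodels.tsa.stattools`, which return the p-value as the second element of their result tuples); the function name, the 5 % default level and the wording of the verdicts are illustrative assumptions.

```python
def confirmatory_verdict(adf_pvalue: float, kpss_pvalue: float, alpha: float = 0.05) -> str:
    """Combine an ADF-type test (H0: unit root) with a KPSS test (H0: stationarity)."""
    adf_rejects_unit_root = adf_pvalue < alpha
    kpss_rejects_stationarity = kpss_pvalue < alpha
    if adf_rejects_unit_root and not kpss_rejects_stationarity:
        return "I(0): both tests point to stationarity"
    if kpss_rejects_stationarity and not adf_rejects_unit_root:
        return "I(1): both tests point to a unit root"
    if adf_rejects_unit_root and kpss_rejects_stationarity:
        # Each test rejects its own null: neither I(0) nor I(1) fits well.
        return "conflict: fractional integration is plausible"
    # Neither test rejects: the sample is not informative enough to decide.
    return "inconclusive: neither null is rejected"
```

When the two tests contradict each other, as often happens with short samples, the order of integration is not pinned down, which is one motivation for estimating d directly.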

It is well known that conventional UR-tests, such as the ADF-test, exhibit very low power when the span of the data is not long enough. Some studiesFootnote 8 have applied panel UR methods to increase the power of the tests. The aim of these panel UR-tests is to combine information from the time dimension with information from the cross-sectional dimension, in the hope that taking the latter into account makes inference about the existence of a UR more precise. The Im–Pesaran–Shin (IPS)-test tests the null hypothesis that all series in the panel are non-stationary against the alternative that some series are stationary while others contain a UR. The Hadri-test is a generalization of the KPSS-test and tests the null hypothesis that all series in the panel are stationary.

There are two approaches to the estimation of an ARFIMA (p, d, q) model: exact maximum likelihood estimation, as proposed by Sowell (1992), and semi-parametric approaches. Sowell’s approach requires specification of the p and q values, and estimation of the full ARFIMA model conditional on those choices. This involves the challenge of choosing an appropriate ARMA specification. In the full maximum likelihood estimation, the ARFIMA model has the d parameter to handle long-run dependence and ARMA parameters to handle short-run dependence. Sowell (1992) has argued that using different parameters for different types of dependence facilitates estimation and interpretation.

Empirical Results

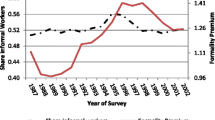

The sample period begins in 1981 and ends in 2012. The data consist of annual observations of the IER in six central African economies: Cameroon, Central African Republic, Chad, Equatorial Guinea, Gabon, and Republic of Congo (Table 1 gives summary statistics computed with EViews 7.2). Our dataset comes mainly from the ILO and various government and international statistical publications, such as the African Economic Outlook, the UNDP Human Development Report (HDR) and the World Bank.

As a benchmark, we began by computing KPSS tests for all series. At significance levels from 1 to 10 %, the KPSS tests reject the stationarity hypothesis for all countries except Gabon and Republic of Congo. In the model with a constant, IPS-tests do not reject the null of a UR in the IER for any country, and the Hadri-test supports this result. If the model with a trend is considered instead, IPS-tests reject a UR in the IER for all six countries. Clearly, these tests cannot establish the true order of integration of the series, since they often give opposite results [especially the ADF and Phillips–PerronFootnote 9 (PP) tests]. Therefore, a fractionally integrated process may be the case.

The integration order d plays a crucial role in explaining the degree of persistence of the series: in the ARFIMA framework, the higher the order of integration, the higher the persistence. If \(0 \le d < 0.5\), the series is stationary and mean-reverting. If \(0.5 \le d < 1\), the series is non-stationary but still mean-reverting (the effects of shocks are long-lasting). Finally, when \(d \ge 1\), the series is non-stationary and non-mean-reverting (Gil-Alana 2001).
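These thresholds translate directly into a classification rule. In this minimal Python sketch (hypothetical function name), the regimes follow the cases listed in the text and in Table 2, and the usage example applies the rule to the point estimates of d reported for the six countries; sampling uncertainty in \(\hat{d}\) is ignored.

```python
def memory_regime(d: float) -> str:
    """Map an estimated memory parameter d to the persistence regimes listed in the text."""
    if d < 0:
        raise ValueError("negative d is not covered by the cases discussed here")
    if d == 0:
        return "short memory: stationary and mean-reverting"
    if d < 0.5:
        return "long memory: stationary and mean-reverting"
    if d < 1:
        return "long memory: non-stationary but mean-reverting"
    return "non-stationary and not mean-reverting (hysteresis)"


# Point estimates of d reported in the paper (sampling uncertainty ignored).
estimates = {
    "Cameroon": 0.794,
    "Central African Republic": 0.729,
    "Chad": 0.685,
    "Equatorial Guinea": 0.715,
    "Gabon": 1.292,
    "Republic of Congo": 1.266,
}
for country, d_hat in estimates.items():
    print(f"{country}: d = {d_hat} -> {memory_regime(d_hat)}")
```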

To estimate the parameter d we apply the nonlinear least squares (NLS) method, which is sometimes referred to as the approximate maximum likelihood method (MLM). We allow p and q to be lower than or equal to 3, which generates 16 different models for each series [IER and Log(IER)]. We then use the SIC to select the most suitable model. The selected models and the d parameters of all estimations performed are reported in Tables 3 and 4, respectively. Looking at the overall estimations, it is clear that most of the estimated values of d lie between 0.5 and 1, which is characteristic of non-stationarity with mean reversion.
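As an illustration of the least-squares idea, the minimal Python sketch below estimates d for the simplest case, an ARFIMA (0, d, 0), by minimizing the conditional sum of squares (CSS) of the truncated fractional difference \((1-L)^{d}x_t\) over a grid of values. It is not the estimation code behind the tables: it omits the AR and MA terms (p, q ≤ 3) and the intercept, assumes a zero-mean series (demean first in practice), and checks itself on data simulated from a truncated fractional process. All names are hypothetical.

```python
import random
from typing import List, Sequence


def frac_diff_weights(d: float, n: int) -> List[float]:
    """First n coefficients of (1 - L)^d: pi_0 = 1, pi_k = pi_{k-1} * (k - 1 - d) / k."""
    w = [1.0]
    for k in range(1, n):
        w.append(w[-1] * (k - 1 - d) / k)
    return w


def css(x: Sequence[float], d: float) -> float:
    """Conditional sum of squares of the truncated fractional difference (1 - L)^d x_t."""
    w = frac_diff_weights(d, len(x))
    total = 0.0
    for t in range(len(x)):
        e = sum(w[k] * x[t - k] for k in range(t + 1))  # residual implied by this d
        total += e * e
    return total


def estimate_d(x: Sequence[float], lo: float = -0.45, hi: float = 1.5, step: float = 0.01) -> float:
    """Grid-search minimizer of the CSS; the grid extends past 1 to allow non-stationary d."""
    n_steps = int(round((hi - lo) / step))
    grid = [lo + i * step for i in range(n_steps + 1)]
    return min(grid, key=lambda d: css(x, d))


def simulate_arfima0d0(d: float, n: int, seed: int = 0) -> List[float]:
    """Truncated fractional process x_t = sum_{k<=t} psi_k eps_{t-k}, psi = weights of (1 - L)^(-d)."""
    rng = random.Random(seed)
    eps = [rng.gauss(0.0, 1.0) for _ in range(n)]
    psi = frac_diff_weights(-d, n)
    return [sum(psi[k] * eps[t - k] for k in range(t + 1)) for t in range(n)]


# Example: estimate_d(simulate_arfima0d0(0.4, 200, seed=1)) should land near 0.4.
```

Because the grid extends beyond 1, the same code can return values such as the \(d>1\) estimates found for Gabon and Congo; with only 32 annual observations, however, the sampling error of \(\hat{d}\) can be considerable.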

The analysis of the informal employment rate in CEMAC shows that, apart from the first estimation, the estimates vary little, with \(0.75<d<0.98\), which means that the series is non-stationary but mean-reverting. Taking our selection criteriaFootnote 10 into account, the series can be characterized as an ARFIMA (0, 0.762, 2) model (Table 3). One may interpret this as evidence that the prior data transformation was appropriate compared with the remaining series, for example taking logs to eliminate a changing variance, which may affect the MLM in the case of the IER.

As for whether informal employment persistence differs according to the position of the countries within the sub-region, both the head of CEMAC (Cameroon), with an ARFIMA (0, 0.794, 3), and the other members [Central African Republic, ARFIMA (0, 0.729, 2); Chad, ARFIMA (0, 0.685, 2); and Equatorial Guinea, ARFIMA (1, 0.715, 2)] have d between 0.5 and 1, that is, non-stationary but mean-reverting series. However, the results change considerably when we analyze the informal employment rates of Gabon and Congo. In these two cases, the best models are ARFIMA (0, 1.292, 3) and ARFIMA (0, 1.266, 3), respectively, which means that both series are neither stationary nor mean-reverting (Tables 3, 4). The informal employment rate has a strong long-memory component, with shocks having long-lasting effects on the series. Besides that, some other points are worth mentioning. Firstly, contrary to the results above, all UR-tests reported in Table 3 indicate stationarity for Congo. On the other hand, for Congo and over the tests suggest non-stationarity, which is in line with the ARFIMA results. Secondly, the level of a time series and its persistence are two different things.

Informal Employment Policy Recommendations

The overall results show that the economic decisions made by central African policymakers in the past thirty years have had a negative impact on CEMAC’s labour force, and they give a good picture of what has happened to informal employment in the region as a whole. For countries with a high d, such as Gabon and Republic of Congo, the ARFIMA results are plausible given that workers in these countries are comparatively older and less skilled than those in other countries, which makes it very difficult for them to enter the labour market.

Informal employment is observed, both historically and across countries, in all economies at a certain stage of their development. It poses important challenges to policymakers, as it shows that growth and modernization policies may not be sufficient to eliminate, or even reduce, informal employment. There is a wide consensus among economists and policymakers that informal employment harms economic growth and stability and should therefore be kept under control (Perry et al. 2007; Lopez and Servén 2009). They also agree that bringing informal employment down involves a tradeoff against other goals, at least in the short run, and that this tradeoff is more or less costly depending on the levels and sources of informal employment pressures.

ARFIMA processes generalize ARIMA models by allowing the order of integration d to take non-integer values. Because they imply a hyperbolic rate of decay, they describe the dependence between increasingly distant observations better than ARMA models, which imply an exponential rate of decay. The implied slow decay of shocks, and the very slow but eventual adjustment to equilibrium when d < 1, make fractionally integrated models attractive for modelling long-memory time series. Although ARFIMA is a statistical rather than a structural economic model, its parameter d has a clear economic interpretation as a measure of how persistent shocks to informal employment are.
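The contrast between hyperbolic and exponential decay can be seen by comparing impulse-response (MA(∞)) weights. For a pure ARFIMA(0, d, 0) process they follow the recursion \(\psi_0 = 1\), \(\psi_k = \psi_{k-1}(k-1+d)/k\), while an AR(1) gives \(\phi^k\). The sketch below assumes d = 0.762 (the CEMAC aggregate estimate) and a purely illustrative AR coefficient \(\phi = 0.762\), chosen only so that both responses coincide at lag 1; the function names are hypothetical.

```python
def arfima_weights(d: float, n: int) -> list[float]:
    """First n MA(infinity) weights psi_k of (1 - L)^(-d), i.e. of ARFIMA(0, d, 0)."""
    psi = [1.0]
    for k in range(1, n):
        # psi_k / psi_{k-1} = (k - 1 + d) / k, from the binomial expansion of (1 - L)^(-d)
        psi.append(psi[-1] * (k - 1 + d) / k)
    return psi


def ar1_weights(phi: float, n: int) -> list[float]:
    """First n impulse-response weights phi**k of a stationary AR(1)."""
    return [phi ** k for k in range(n)]


d = 0.762    # CEMAC aggregate estimate reported in the text
phi = 0.762  # illustrative AR(1) coefficient, not an estimate from the paper
frac, ar = arfima_weights(d, 31), ar1_weights(phi, 31)
for k in (1, 2, 5, 10, 20, 30):
    print(f"lag {k:2d}: ARFIMA psi = {frac[k]:.4f}   AR(1) phi^k = {ar[k]:.6f}")
```

At long lags the fractional response remains sizeable while the AR(1) response has practically vanished. With d > 1 (as for Gabon and Congo) the same recursion produces weights that grow with the lag, so a shock never dies out.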

The IER is a rather special case among economic series exhibiting long memory, because the long-range dependence concerns the level of the series, not its volatility as in stock returns or exchange rates.

The high IER observed in CEMAC countries in recent decades can be interpreted in terms of two types of theories. The persistence hypothesis explains informal employment as a variable that needs long periods to recover after a shock, whereas the hysteresis hypothesis implies that informal employment can be characterized as a random walk, which never reverts to an equilibrium after a shock. If informal employment is characterized as a unit root process (hysteresis), macroeconomic policy should focus on structural reforms in order to counter a negative shock. If, on the other hand, informal employment is a stationary process (as in the NAIRU framework), macroeconomic policy should focus on preventing short-run departures from equilibrium.

Whenever persistence is found, there is room to decrease the IER without changing the organization of the labour market, and how fast the IER can be decreased depends on the persistence mechanism. Disinflation policies based on the IER will also prove costly in terms of lost output. If the IER exhibits hysteresis, it will never return to its original level. This is a vital implication for CEMAC's disinflation policies: whenever informal employment persists, the short-run adjustment of the economy can take a long time.
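How fast the IER adjusts can be summarized by a "half-life": the number of periods after which a unit shock has lost half of its effect. The sketch below is a hedged illustration that uses only the fractional part of the model, ARFIMA(0, d, 0), and therefore ignores the AR and MA terms of the selected specifications; with annual data, lags are years. The function name is hypothetical.

```python
from typing import Optional


def shock_half_life(d: float, max_lag: int = 10_000) -> Optional[int]:
    """Smallest lag k at which the ARFIMA(0, d, 0) response to a unit shock falls below 0.5.

    Returns None if the response never falls below 0.5 within max_lag,
    as happens for d >= 1, where the shock does not die out.
    """
    psi = 1.0
    for k in range(1, max_lag + 1):
        psi *= (k - 1 + d) / k  # MA(infinity) weight psi_k
        if psi < 0.5:
            return k
    return None


# d values quoted in the text; AR/MA terms are ignored in this sketch
for label, d in [("Chad", 0.685), ("CEMAC", 0.762), ("Cameroon", 0.794), ("Congo", 1.266)]:
    print(f"{label:<9} d = {d:.3f}  half-life (years): {shock_half_life(d)}")
```

Under these simplifying assumptions, even the mean-reverting countries take several years to absorb half of a shock, and for d > 1 the function returns `None`, matching the hysteresis case described above.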

Conclusion

In this article, we examined the persistence of the informal employment rates of the labour force in the CEMAC countries by means of ARFIMA models. These models are advantageous because they avoid the need to seasonally adjust the data and allow a wider range of long-run behavior to be incorporated into the modeling process. It is shown that a frequency-domain maximum likelihood estimator estimates all of the parameters in this model easily and adequately.
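One standard frequency-domain approximate maximum likelihood estimator is the Whittle estimator, which compares the periodogram with the model's spectral density, proportional to \(|2\sin(\lambda/2)|^{-2d}\) for ARFIMA(0, d, 0). The sketch below assumes a pure ARFIMA(0, d, 0) with \(-0.5 < d < 0.5\) and uses a simple grid search; it is not necessarily the exact estimator or software used in this paper (which also fits AR and MA terms), and the function names and simulation settings are illustrative assumptions. It requires NumPy.

```python
from typing import Optional

import numpy as np


def whittle_d(x: np.ndarray, grid: Optional[np.ndarray] = None) -> float:
    """Grid-search Whittle estimate of d for a pure ARFIMA(0, d, 0) series.

    Assumes x is stationary (-0.5 < d < 0.5). For 0.5 < d < 1.5 one can apply
    the function to np.diff(x) and add 1 to the result.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    m = (n - 1) // 2
    lam = 2.0 * np.pi * np.arange(1, m + 1) / n  # Fourier frequencies in (0, pi)
    dft = np.fft.fft(x - x.mean())[1 : m + 1]
    periodogram = np.abs(dft) ** 2 / (2.0 * np.pi * n)
    if grid is None:
        grid = np.linspace(-0.49, 0.49, 981)  # step of 0.001
    log_shape = np.log(2.0 * np.sin(lam / 2.0))
    best_d, best_q = 0.0, np.inf
    for d in grid:
        g = np.exp(-2.0 * d * log_shape)  # spectral shape |2 sin(lam/2)|^(-2d)
        # Concentrated Whittle objective: the innovation variance is profiled out
        q = np.log(np.mean(periodogram / g)) + np.mean(np.log(g))
        if q < best_q:
            best_d, best_q = float(d), q
    return best_d


def simulate_arfima_0d0(d: float, n: int, rng: np.random.Generator) -> np.ndarray:
    """Simulate ARFIMA(0, d, 0) via its MA(infinity) form truncated at n weights."""
    psi = np.ones(n)
    for k in range(1, n):
        psi[k] = psi[k - 1] * (k - 1 + d) / k
    e = rng.standard_normal(2 * n)
    # 'valid' convolution gives x_t = sum_{k=0}^{n-1} psi_k e_{t-k}
    return np.convolve(e, psi, mode="valid")[:n]


rng = np.random.default_rng(42)
x = simulate_arfima_0d0(0.3, 2000, rng)
print(f"Whittle estimate of d: {whittle_d(x):.3f}  (true value 0.3)")
```

The simulated series is far longer than the paper's 32 annual observations; with samples that short the sampling error of any estimator of d is considerably larger.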

Recent applied time series work has attempted to distinguish between hysteresis and natural rate models of unemployment using the dichotomy between integer orders of integration, I(0) versus I(1). This paper improves on that methodological approach by applying ARFIMA methods to informal employment, which allows a wider range of long-run behavior to be modeled.

Labour markets in a number of African countries are characterized by high levels of informal employment and low rates of job creation. Approximate MLM estimation of the ARFIMA process has revealed interesting similarities in the annual informal employment rates of six different countries. MLM provides strong evidence against the widespread assumption that informal employment has a UR. Apart from Gabon and the Republic of Congo, whose estimated d exceeds one so that they appear neither stationary nor mean-reverting, the other four high-informality CEMAC countries have an estimated order of integration between about 0.68 and 0.80. The results indicate relatively small standard errors on the estimates of the fractional differencing parameter and suggest that the model is significantly different from one assuming I(0) or I(1). One interpretation of these models is that an employment shock will have long memory and persistence but will ultimately be mean-reverting. Hence, it is possible to distinguish between a process with a UR and one that is fractionally integrated. The empirical regularities in the persistence of informal employment across CEMAC countries raise interesting questions as to the type of employment policy, such as decent work, that would be consistent with this form of behavior.
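The statement that d differs significantly from both 0 and 1 amounts to two Wald-type z-tests on the estimated d, relying on the usual asymptotic normality of the ML estimator. In the sketch below the function name is hypothetical, and since the standard errors are not quoted in this section, the usage line plugs in an explicitly hypothetical standard error rather than a value from the paper.

```python
import math


def d_z_tests(d_hat: float, se: float) -> dict:
    """z statistics and two-sided normal p-values for H0: d = 0 (I(0)) and H0: d = 1 (I(1))."""
    results = {}
    for label, d0 in (("I(0)", 0.0), ("I(1)", 1.0)):
        z = (d_hat - d0) / se
        p = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
        results[label] = (z, p)
    return results


# d_hat is the CEMAC aggregate estimate from the text; se = 0.08 is a HYPOTHETICAL
# standard error chosen for illustration, not a value reported in the paper.
for label, (z, p) in d_z_tests(0.762, 0.08).items():
    print(f"H0: {label}  z = {z:+.3f}  p = {p:.4f}")
```

Replacing the hypothetical standard error with the one reported in the estimation tables gives the actual test of each null.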

Notes

In Africa, the majority of the labour force is in the informal economy. Studies conducted across a wide distribution of African countries lead to estimates that the informal economy accounts for 50–80 % of GDP, 60–80 % of employment, and as much as 90 % of new jobs.

This definition was adopted by the International Conference of Labour Statisticians (ICLS) during its 17th conference in 2003. It corresponds to similar general definitions proposed by academic researchers.

The persistence of the informal sector is due to the inability of the other sectors of the economy—agriculture or other rural activities on the one hand, and modern industry and services on the other—to provide adequate incomes or employment to a rapidly growing labour force (ILO, The Dilemma of the Informal Sector, Geneva, 1991).

It is the estimator with the smallest variance among a set of unbiased estimators (Miller and Miller 2003).

Significant AR and MA parameter estimates, no residual autocorrelation, and normally distributed residuals.

Results not shown here.

It may also seem surprising that both information criteria (the AIC and the Bayesian IC) lead to the same specification for our series.

References

Amable, B., J. Henry, F. Lordon, and R. Topol. 1995. Hysteresis revisited: A methodological approach. In The natural rate of unemployment: Reflections on 25 years of the hypothesis, ed. R. Cross. Cambridge: Cambridge University Press.

Baillie, R.T. 1996. Long memory processes and fractional integration in econometrics. Journal of Econometrics 73: 5–59.

Blanchard, O., and L. Summers. 1986. Hysteresis and the European unemployment problem. In NBER macroeconomics annual, ed. S. Fischer. Cambridge: MIT Press.

Box, G.E.P., and G.M. Jenkins. 1976. Time series analysis: Forecasting and control, Rev ed. Oakland: Holden-Day.

Brockwell, P.J., and R.A. Davis. 2002. Introduction to time series and forecasting, 2nd ed. New York: Springer.

Camarero, M., and C. Tamarit. 2004. Hysteresis vs natural rate of unemployment: New evidence for OECD countries. Economics Letters 84(3): 413–417.

Christopoulos, D.K., and E.G. Tsionas. 2004. Financial development and economic growth: evidence from panel unit root and panel cointegration tests. Journal of Development Economics 73: 55–74.

Clement, J., L. Lanaspa, and A. Montanés. 2005. The unemployment structure of the US states. The Quarterly Review of Economics and Finance 45: 848–868.

Cross, R. 1993. On the foundations of hysteresis in economic systems. Economics and Philosophy 9(1): 53–74.

Cross, R., and A. Allan. 1988. On the history of hysteresis. In Unemployment, hysteresis and natural rate hypothesis, ed. Rod Cross, 26–38. Oxford: Blackwell.

Dahlhaus, R. 1989. Efficient parameter estimation for self-similar processes. The Annals of Statistics 17: 1749–1766.

Diebold, F.X., and A. Inoue. 2001. Long memory and regime switching. Journal of Econometrics 105: 131–159.

Doornik, J.A., and M. Ooms. 2003. Computational aspects of maximum likelihood estimation of autoregressive fractionally integrated moving average models. Computational Statistics and Data Analysis 42(3): 333–348.

Ewing, J.A. 1885. Experimental researches in magnetism. Philosophical Transactions of the Royal Society of London 176(2): 523–640.

Franke, J., W.K. Härdle, and C.M. Hafner. 2008. Statistics of financial markets: An introduction, 2nd ed. Berlin: Springer.

Friedman, M. 1968. The role of monetary policy. American Economic Review 58: 1–17.

Funke, M. 1998. The long memory property of the US unemployment rate. Hamburg University/London Business School. Discussion paper No. 19–98.

Gil-Alana, L.A. 1999. Estimation of fractional ARIMA models for the UK unemployment. University of Navarre. Discussion paper, No. 05–99.

Gil-Alana, L.A. 2001. The persistence of unemployment in the USA and Europe in terms of fractionally ARIMA models. Applied Economics 33(10): 1263–1269.

Gil-Alana, L.A. 2002. Modeling the persistence of unemployment in Canada. International Review of Applied Economics 16(4): 465–477.

Goodrich, L.F., and T.C. Stauffer. 2001. Hysteresis in transport critical-current measurements of oxide superconductors. Applied Superconductivity 11(1): 3234–3237.

Granger, C.W.J. 1980. Long memory relationships and the aggregation of dynamic models. Journal of Econometrics 14: 227–238.

Granger, C.W.J., and R. Joyeux. 1980. An introduction to long memory time series models and fractional differencing. Journal of Time Series Analysis 1: 15–30.

Hanssens, D.M., and M. Ouyang. 2002. Hysteresis in market response: When is marketing spending an investment? In Review of Marketing Science. Working Paper No. 419. http://papers.ssrn.com/sol3/papers.cfm?abstract_id=310884. Accessed 3 Sept 2008.

Hardy, L., S. Beattie, and T. Woodman. 2007. Anxiety-induced performance catastrophes: Investigating effort required as an asymmetry factor. British Journal of Psychology 98: 15–31.

Harris, R.D.F., and E. Tzavalis. 1999. Inference for unit roots in dynamic panels where the time dimension is fixed. Journal of Econometrics 91: 201–226.

Hauser, M.A., and R.M. Kunst. 1998. Fractionally integrated models with arch errors: With an application to the Swiss 1-month euromarket interest rate. Review of Quantitative Finance and Accounting 10: 95–113.

Hock, H.S., J.A. Kelso, and G. Schoner. 1993. Bistability, hysteresis, and phase transitions in the perceptual organization of apparent motion. Journal of Experimental Psychology: Human Perception and Performance 19: 63–80.

Hosking, J.R.M. 1981. Fractional differencing. Biometrika 68: 165–176.

Im, K., M. Pesaran, and Y. Shin. 2003. Testing for unit roots in heterogeneous panels. Journal of Econometrics 115: 53–74.

Im, Kyung-So, Junsoo Lee, and Margie Tieslau. 2005. Panel LM unit root test with level shifts. Oxford Bulletin of Economics and Statistics 67: 393–419.

International Labour Organization (ILO). 2009. The informal economy in Africa: promoting transition to formality: challenges and strategies, international labour office. In Employment sector and social protection sector, 66 p.

International Institute for Labour Studies (IILS). 2008. World of work report 2008: income inequalities in the age of financial globalization. International Labour Organization.

Jiang, G.J., and Y.S. Tian. 2010. Forecasting volatility using long memory and comovements: An application to option valuation under SFAS 123R. Journal of Financial and Quantitative Analysis 45(2): 503–533.

Keynes, J.M. 1934. Poverty in Plenty: Is the Economic System Self-Adjusting? In The Collected Writings of John Maynard Keynes, The General Theory and after, Part I, vol. 13, ed. Moggridge, D. 1973, 489–492. London

Koustas, Z., and W. Veloce. 1996. Unemployment hysteresis in Canada: An approach based on long memory time series models. Applied Economics 28: 823–831.

Krasnosel’skii, M.A., and A.V. Pokrovskii. 1989. Systems with hysteresis. New York: Springer.

Kurita, T. 2010. A forecasting model for Japan’s unemployment rate. Eurasian Journal of Business and Economics 3(5): 127–134.

Levin, A., C.F. Lin, and C.J. Chu. 2002. Unit root tests in panel data: Asymptotic and finite-sample properties. Journal of Econometrics 108: 1–24.

Lopez, H., and L. Servén. 2009. Too poor to grow. In Policy research working paper, 5012. Washington, DC: World Bank.

Maddala, G.S., and S. Wu. 1999. A comparative study of unit root tests with panel data and a new simple test. Oxford Bulletin of Economics and Statistics 61: 631–652.

Marshall, A. 1890. Principles of economics, 1st ed. USA: Macmillan.

Miller, I., and M. Miller. 2003. John E. Freund’s mathematical statistics with applications. In Pearson education, 7th ed. New Jersey: Prentice Hall.

OECD. 1994. The OECD jobs study. Paris.

Palma, W. 2007. Long-memory time series, theory and methods. In Wiley interscience. New York: Wiley

Perry, G.E., W.F. Maloney, O.S. Arias, P. Fajnzylber, A.D. Mason, and J. Saavedra-Chanduvi. 2007. Informality, exit and exclusion. Washington, DC: World Bank.

Phelps, E. 1968. Money-wage dynamics and labor-market equilibrium. Journal of Political Economy 76(4): 678–711.

Polak, J., F. Fardoun, and S. Degallaix. 2001. Analysis of the hysteresis loop in stainless steels I. Austenitic and ferritic steels. Materials Science and Engineering: A 297: 144–153.

Pomerening, J.R., E.D. Sontag, and J.E. Ferrell Jr. 2003. Building a cell cycle oscillator: hysteresis and bistability in the activation of Cdc2. Nature Cell Biology 5: 346–351.

Roed, K. 1996. Unemployment hysteresis—macro evidence from 16 OECD countries. Empirical Economics 21:589–600.

Song, F.M., and Y. Wu. 1998. Hysteresis in unemployment: evidence from OECD countries. In NBER macroeconomics annual, Vol. 1. Cambridge: MIT Press.

Sowell, F. 1992. Maximum likelihood estimation of stationary univariate fractionally integrated time series models. Journal of Econometrics 53(1–3): 165–188.

Xu, C., Z.Y. Li, and P.M. Hui. 2001. Monte Carlo studies of hysteresis curves in magnetic composites with fine magnetic particles. Journal of Applied Physics 89: 3403–3407.

Yajima, Y. 1985. On estimation of long-memory time series models. Australian Journal of Statistics 3: 303–320.

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.


Additional information

The authors gratefully acknowledge the comments of two anonymous referees.

Appendix



About this article

Cite this article

Njoda, M.T., Ulrich, G.M. Hysteresis in CEMAC Informal Employment. J. Quant. Econ. 13, 285–303 (2015). https://doi.org/10.1007/s40953-015-0016-1
