Abstract

I show how using response times as a proxy for effort can address the long-standing problem of separating the effect of cognitive ability on performance from the effect of motivation. My method is based on a dynamic stochastic model of optimal effort choice in which ability and motivation are the structural parameters. I show how to estimate these parameters from data on outcomes and response times in a cognitive task. In a laboratory experiment, I find that performance on a digit-symbol test is a noisy and biased measure of cognitive ability. Ranking subjects by their performance leads to an incorrect ranking by their ability in a substantial number of cases. These results suggest that interpreting performance on a cognitive task as ability may be misleading.

Notes

For example, consider two students, Adam and Bob, who are taking a cognitive test. Adam has high cognitive ability but is not interested in the outcome of the test. Bob, on the other hand, has lower cognitive ability but is highly motivated to get the right answers. As a result, Bob might end up having a higher score on the test, which according to the traditional approach would imply that Bob has higher ability than Adam, while in reality, their ranking by ability is the opposite.

The starting point of the effective effort process can be initialized at a value other than 0 to allow for the case of multiple-answer questions. The starting value \(E_0\) then would be chosen so that the probability of success at \(E_0\) equals 1/[number of answer options].
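As a worked illustration, assume the functional form \(p(E) = 1-e^{-E}\) used elsewhere in the paper, and let \(n\) (a symbol introduced here for illustration) denote the number of answer options. The starting value would then solve:

\[
1 - e^{-E_0} = \frac{1}{n} \quad\Longrightarrow\quad E_0 = \ln\frac{n}{n-1}.
\]

With four answer options, for instance, \(E_0 = \ln(4/3) \approx 0.288\). As \(n\) grows, \(E_0\) approaches 0, which recovers the baseline starting point.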

Ratcliff and Van Dongen (2011) also study a single-threshold diffusion model; however, they do not consider utility maximization.

Strictly speaking, motivation in this model is measured in the units of the cost of effort.

If \(E_0 = E^*\), the agent should give an answer immediately.

Importantly, the probability of success on a trial will not depend on the realized value of the response time. Figure C.5 in Online Appendix C empirically validates this prediction.

See Online Appendix B for the derivations.

In the experiment, decisions are made on timescales of under a minute. Discounting is unlikely to have a meaningful effect on such short timescales.

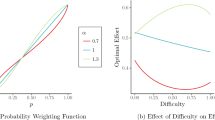

It is straightforward to show using (5) that these comparative statics results hold for any increasing and concave function p.

Diminishing marginal product of effective effort appears to be a reasonable assumption for a cognitive production function p.

The functional form assumption \(p(E) = 1-e^{-E}\) involves an implicit normalization. One could introduce an additional parameter \(\gamma\) in the probability of success function, \(p(E) = 1-e^{-\gamma E}\), which in the present case is normalized to 1. Then, one would have to normalize \(\sigma ^2\) to 1 and estimate \(\gamma\).
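A sketch of why one scale has to be fixed, under the assumption (made here for illustration only) that effective effort follows a Brownian motion with drift \(\mu\) and variance \(\sigma^2\); the symbols \(\mu\), \(W_t\), and \(\tilde{E}_t\) are introduced for this sketch. Rescaling effort by \(\gamma\) gives:

\[
dE_t = \mu\,dt + \sigma\,dW_t, \qquad \tilde{E}_t \equiv \gamma E_t \;\Longrightarrow\; d\tilde{E}_t = \gamma\mu\,dt + \gamma\sigma\,dW_t, \qquad 1 - e^{-\gamma E_t} = 1 - e^{-\tilde{E}_t}.
\]

Under this assumption, the parameter triples \((\gamma, \mu, \sigma)\) and \((1, \gamma\mu, \gamma\sigma)\) imply the same success probabilities at every point in time, so the data cannot tell them apart. One scale must be fixed: either \(\gamma = 1\), as in the text, or \(\sigma^2 = 1\).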

The experiment also included a risk elicitation task and a survey, the results of which are not reported here.

See Online Appendix A for the subject instructions and screenshots.

In a traditional implementation of a DST, the key does not change across trials. Performance on a traditional DST then captures subjects’ working memory in addition to processing speed. In the present context, however, processing speed is the only quantity of interest. See Benndorf et al. (2018) for a similar argument.

In most implementations, the time allowed for a cognitive task is constrained. The performance measure used in the present experiment, i.e., performance with no time constraint, is therefore not strictly identical to the performance measures typically used. The underlying message, however, would remain the same even if the time were constrained: to separate the effect of ability from the effect of motivation, one needs to supplement a measure of performance with a measure of effort. With a time constraint, however, the relevant measure of effort would be difficult to observe.

An important modification of this baseline design would be to introduce variable conditional rewards, which would allow one to study how parameter estimates change with the reward level.

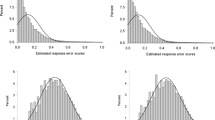

The distribution of mean response times is long-tailed and well approximated by an inverse Gaussian distribution. See Fig. C.2 in Online Appendix C for the distribution of scores and response times in the sample.
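One way to check an approximation of this kind is to fit an inverse Gaussian by maximum likelihood, which has a closed form. The sketch below is illustrative only: the function name and the three example values are hypothetical, and it is not the estimation code used in the paper.

```python
from statistics import fmean

def fit_inverse_gaussian(times):
    """Closed-form MLE of the inverse Gaussian mean and shape parameters."""
    times = [float(t) for t in times]
    if not times or min(times) <= 0:
        raise ValueError("need a non-empty sample of strictly positive times")
    mean_hat = fmean(times)
    # Shape MLE: n / sum(1/t_i - 1/mean_hat); undefined if all times are equal.
    spread = sum(1.0 / t - 1.0 / mean_hat for t in times)
    if spread <= 0:
        raise ValueError("shape is not identified when all times are equal")
    return mean_hat, len(times) / spread

# Hypothetical mean response times in seconds (illustrative, not the paper's data).
example_times = [1.0, 2.0, 4.0]
mean_hat, shape_hat = fit_inverse_gaussian(example_times)
print(mean_hat, shape_hat)
```

The fitted parameters can then be used to overlay the implied density on a histogram of the observed mean response times.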

See Fig. C.3 in Online Appendix C for a quantile probability plot of the model’s fit.

The subjects with a perfect score of 100 (6 subjects or 3% of the sample) were assigned a score of 99 by randomly selecting one trial and marking it as incorrectly solved. The model cannot be estimated in the case of a perfect score. This is a finite-sample issue: adding more trials would likely eliminate the instances of perfect scores. Excluding the subjects with perfect scores does not alter the results significantly.
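The adjustment can be sketched as follows. The function name, the 0/1 encoding of trial outcomes, and the seed are assumptions made for illustration; this is not the author's code. A plausible reading of why a perfect score is a problem is that, under \(p(E) = 1-e^{-E}\), an observed success rate of 1 would require an unbounded level of effective effort.

```python
import random

def cap_perfect_score(outcomes, rng):
    """Return a copy of 0/1 trial outcomes; if all are correct, set one random trial to 0."""
    adjusted = list(outcomes)
    if adjusted and all(o == 1 for o in adjusted):
        adjusted[rng.randrange(len(adjusted))] = 0
    return adjusted

rng = random.Random(2019)  # fixed seed so the illustration is reproducible
perfect = [1] * 100        # hypothetical subject who solved all 100 trials
adjusted = cap_perfect_score(perfect, rng)
print(sum(perfect), sum(adjusted))  # 100 99
```

Subjects with at least one incorrect trial are returned unchanged, so the function can be applied to the whole sample.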

For simplicity, I assume that there is no measurement error in the counterfactual performance, which of course will not be true in practice. Allowing for this additional measurement error would only increase the overall noisiness of the observed performance.

An alternative way to interpret this number is that the probability that two randomly chosen subjects will have an incorrect ability ranking, as implied by performance, is 24%.
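One way to compute a pairwise probability of this kind is as the share of subject pairs whose order by performance is the reverse of their order by ability. The sketch below uses hypothetical data and a hypothetical function name, and it skips tied pairs by assumption; the paper's exact calculation may differ.

```python
from itertools import combinations

def misranking_rate(performance, ability):
    """Share of subject pairs ordered one way by performance and the other way by ability."""
    if len(performance) != len(ability):
        raise ValueError("inputs must have the same length")
    discordant = comparable = 0
    for i, j in combinations(range(len(ability)), 2):
        dp = performance[i] - performance[j]
        da = ability[i] - ability[j]
        if dp == 0 or da == 0:
            continue  # ties carry no ranking information; skipped by assumption
        comparable += 1
        if dp * da < 0:
            discordant += 1
    return discordant / comparable if comparable else float("nan")

# Hypothetical scores and ability estimates for four subjects.
performance = [90, 85, 70, 60]
ability = [1.2, 1.5, 0.9, 0.4]
print(misranking_rate(performance, ability))
```

In this example only the first two subjects are ranked differently by the two measures, so one of the six pairs is misranked.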

In general, issues of this kind will arise when the effects of ability and motivation on the outcome of interest differ. Table C.2 in Online Appendix C makes this point clear by presenting the results of a simulation exercise.

References

Agarwal, S., & Mazumder, B. (2013). Cognitive abilities and household financial decision making. American Economic Journal: Applied Economics, 5(1), 193–207.

Benndorf, V., Rau, H. A., & Sölch, C. (2018). Minimizing learning behavior in repeated real-effort tasks. Working Paper 343, Center for European, Governance and Economic Development Research, Georg-August-Universität Göttingen.

Borghans, L., Duckworth, A. L., Heckman, J. J., & Ter Weel, B. (2008). The economics and psychology of personality traits. Journal of Human Resources, 43(4), 972–1059.

Cattell, R. B. (1971). Abilities: Their structure, growth, and action. Boston: Houghton Mifflin.

Clithero, J. A. (2018). Improving out-of-sample predictions using response times and a model of the decision process. Journal of Economic Behavior & Organization, 148, 344–375.

Dohmen, T., Falk, A., Huffman, D., & Sunde, U. (2010). Are risk aversion and impatience related to cognitive ability? American Economic Review, 100(3), 1238–1260.

Duckworth, A. L., Quinn, P. D., Lynam, D. R., Loeber, R., & Stouthamer-Loeber, M. (2011). Role of test motivation in intelligence testing. Proceedings of the National Academy of Sciences, 108(19), 7716–7720.

Gill, D., & Prowse, V. (2016). Cognitive ability, character skills, and learning to play equilibrium: A level-k analysis. Journal of Political Economy, 124(6), 1619–1676.

Heckman, J., Pinto, R., & Savelyev, P. (2013). Understanding the mechanisms through which an influential early childhood program boosted adult outcomes. American Economic Review, 103(6), 2052–2086.

Heckman, J. J., Stixrud, J., & Urzua, S. (2006). The effects of cognitive and noncognitive abilities on labor market outcomes and social behavior. Journal of Labor Economics, 24(3), 411–482.

Krajbich, I., Lu, D., Camerer, C., & Rangel, A. (2012). The attentional drift-diffusion model extends to simple purchasing decisions. Frontiers in Psychology, 3, 193.

Lee, M.-L. T., & Whitmore, G. (2006). Threshold regression for survival analysis: Modeling event times by a stochastic process reaching a boundary. Statistical Science, 21(4), 501–513.

Murnane, R., Willett, J. B., & Levy, F. (1995). The growing importance of cognitive skills in wage determination. The Review of Economics and Statistics, 77(2), 251–266.

Ofek, E., Yildiz, M., & Haruvy, E. (2007). The impact of prior decisions on subsequent valuations in a costly contemplation model. Management Science, 53(8), 1217–1233.

Ratcliff, R. (1978). A theory of memory retrieval. Psychological Review, 85(2), 59–108.

Ratcliff, R., & Van Dongen, H. P. A. (2011). Diffusion model for one-choice reaction-time tasks and the cognitive effects of sleep deprivation. Proceedings of the National Academy of Sciences, 108(27), 11285–11290.

Segal, C. (2012). Working when no one is watching: Motivation, test scores, and economic success. Management Science, 58(8), 1438–1457.

Spiliopoulos, L., & Ortmann, A. (2018). The BCD of response time analysis in experimental economics. Experimental Economics, 21(2), 383–433.

Vernon, P. A. (1983). Speed of information processing and general intelligence. Intelligence, 7(1), 53–70.

Webb, R. (2019). The (neural) dynamics of stochastic choice. Management Science, 65(1), 230–255.

Weiss, L. G., Saklofske, D. H., Coalson, D. L., & Raiford, S. E. (2010). WAIS-IV clinical use and interpretation: Scientist-practitioner perspectives. San Diego: Academic Press.

Wilcox, N. T. (1993). Lottery choice: Incentives, complexity and decision time. The Economic Journal, 103(421), 1397–1417.

Woodford, M. (2014). Stochastic choice: An optimizing neuroeconomic model. American Economic Review, 104(5), 495–500.

Acknowledgements

I thank the editor, Ian Krajbich, and an anonymous reviewer for their suggestions that greatly improved the paper. I thank Jim Cox, Glenn Harrison, Susan Laury, Tom Mroz, Vjollca Sadiraj, and Todd Swarthout for their valuable comments on early drafts of the paper. I also thank the conference participants at the Economic Science Association meetings, the Southern Economic Association meetings, and the Western Economic Association meetings, as well as the seminar participants at Georgia State University, University of California San Diego, the University of Chicago, and Chapman University for their feedback. This work has been supported by the Andrew Young School Dissertation Fellowship.

Cite this article

Alekseev, A. Using response times to measure ability on a cognitive task. J Econ Sci Assoc 5, 65–75 (2019). https://doi.org/10.1007/s40881-019-00064-2

DOI: https://doi.org/10.1007/s40881-019-00064-2