Abstract

Purpose

Stroke patients may need to undergo rehabilitation therapy to improve their mobility. Electromyography (EMG) can be used to improve the effectiveness of at-home therapy programs, as it can assess recovery progress in the absence of a health professional. In particular, EMG armbands have the advantage of being easy to use compared to other EMG technologies, which could allow patients to complete therapy programs without external assistance. However, it is unclear whether there are drawbacks associated with the fixed electrode placement imposed by current armband designs. This study compared the hand gesture prediction capabilities of an off-the-shelf EMG armband with fixed electrode placement and an EMG setup with flexible electrode positioning.

Methods

Ten able-bodied participants performed a series of hand and finger gestures with their dominant hand, once with an EMG armband (Untargeted condition) and once with electrodes deliberately placed on specific muscles (Targeted condition). EMG features were extracted from overlapping sliding windows and were used to (1) classify the gestures and (2) predict finger joint positions as measured by a robotic hand exoskeleton.

Results

For the classification task, a logistic regression model performed significantly better (\(p < 0.001\)) for the Targeted condition (\(55.8\% \pm 10.1\%\)) compared to the Untargeted condition (\(47.9\% \pm 11.6\%\)). For the regression task, a k-nearest neighbours model obtained significantly lower (\(p = 0.007\)) mean RMSE values for the Targeted condition (\(0.260 \pm 0.037\)) compared to the Untargeted condition (\(0.270 \pm 0.043\)).

Conclusion

We observed a trade-off between predictive accuracy and ease-of-use of the EMG devices used in this study. It is important to consider such a trade-off when developing clinical applications such as at-home stroke rehabilitation therapy programs.

1 Introduction

Stroke is one of the leading causes of death and long-term disability in the world [1, 2]. Stroke survivors often suffer from reduced mobility in the upper limbs [3,4,5], which can severely decrease overall quality of life [6,7,8]. Patients’ mobility can be improved through rehabilitation therapy, which uses regular exercise programs to improve outcomes such as strength and range of motion [9, 10]. Patients can undergo rehabilitation not only in hospitals or care centres but also in their own homes, and evidence suggests that home-based rehabilitation may be preferable to in-clinic care in terms of outcomes, costs, patient satisfaction and caregiver strain [11].

Electromyography (EMG), a technology used to measure electrical signals related to muscle activity, has many potential applications in stroke rehabilitation. For example, EMG can be used as a recovery assessment tool [12], to assist in robotics-based therapy [13,14,15] or to provide feedback during interactive training programs [16,17,18]. Such applications generally involve extracting information about a user’s motions based on their EMG signals. Hence, the viability of using EMG in stroke rehabilitation relies heavily on accurate movement recognition.

Research groups investigating EMG-based gesture classification have reported generally promising results [19, 20]. Recent years have seen the emergence of increasingly cost-effective EMG recording systems, such as the popular 8-channel Myo armband by North (formerly Thalmic Labs). Several studies indicate that the Myo armband can achieve high classification accuracy for hand and wrist gestures [21,22,23]. These findings suggest that off-the-shelf EMG armbands could be a viable alternative to more expensive EMG systems. In the context of stroke rehabilitation therapy, using an EMG armband to monitor a patient’s movements could be an attractive option, given that armbands are generally easier to use than other types of EMG devices. Ease-of-use would be especially relevant for non-expert users, as would be the case in stroke rehabilitation therapy performed at home (i.e., by patients themselves or with the help of caregivers).

Ease-of-use and cost-effectiveness can be major factors in determining whether specific EMG technologies are viable for at-home stroke rehabilitation therapy. Existing work comparing off-the-shelf EMG armbands with other EMG devices has so far focused on large-scale gestures that involve the entire hand or the wrist [19]. Less work has examined how the choice of EMG device affects the prediction of single-finger gestures, which require fine motor control. Individual finger movements involve fewer muscle groups than whole-hand or wrist gestures, which could make them more difficult to predict using an armband that does not target specific muscle groups. There is some evidence that EMG armbands may perform worse when applied to single-finger gesture prediction: Caesarendra et al. obtained an accuracy of 72% for 5-finger classification using the Myo armband [24], while Al-Timemy et al. achieved 98% accuracy for a set of 15 gestures that included individual finger flexion for all 5 digits, using custom-built hardware and electrode channels covering a larger area of the forearm [25]. To address the issue of electrode positioning while still using a low-cost EMG device, Wang et al. developed a 3D-printed armband allowing flexible electrode configurations and achieved 93% accuracy in a 10-finger (two-hand) classification problem after excluding visibly noisy data [26]. However, results from different studies cannot be compared directly, since experimental and analysis methods may differ. To the best of our knowledge, no study has directly assessed the impact of using targeted electrode placement instead of an EMG armband on single-finger gesture prediction.

The objective of this study was to compare the hand and finger gesture recognition capabilities of two EMG acquisition methods: an off-the-shelf armband and a consumer-grade biosensing board connected to electrodes by flexible wires. These device types were chosen because they are accessible to general consumers and relatively affordable. We compared the two technologies in two prediction problems: (1) hand gesture classification, including single-finger movements, and (2) finger joint angle prediction. We included joint angle regression, a more difficult problem than classification, because we believe information about joint angles may be more useful in the context of stroke rehabilitation; for example, continuously tracking finger positions would allow more precise biofeedback during training exercises. We hypothesized that the biosensing board setup with targeted electrode positioning would produce better results than the armband, and our goal was to discuss the trade-off, if any, between ease-of-use and electrode placement precision.

2 Methods

2.1 Participants

Ten able-bodied participants (3 females, 7 males) were recruited for this study. All participants were right-handed and performed the experiment with their dominant hand. Participants gave their informed consent and received no monetary compensation for their participation. The experimental protocol was approved by the Research Ethics Boards of both the National Research Council Canada (#2022-18) and of McGill University (#22-05-057).

2.2 Experiment

Participants performed a series of 9 hand gestures (in addition to periods of rest) with their dominant hand. They were asked to sit and hold their forearm parallel to the floor, without supporting it on any surface, and to follow visual instructions on a computer screen. The set of gestures consisted of individual flexion for each of the fingers and the thumb, as well as four grasping gestures involving physical objects, as shown in Fig. 1A. We defined an experimental trial as a rest period followed by a “gesture” period, where participants engaged in, held, then disengaged from a given gesture following a visual prompt. Once the 3 s rest period was over, participants were given 2 s to transition to the gesture, then had to hold their position for 4 s, and finally had 2 s to transition back to the rest position (Fig. 1B). Video footage capturing the participant’s actions during the task was recorded for cross-referencing purposes.

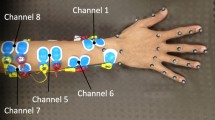

Fig. 1 Details about the experimental protocol. (A) Rest position and the nine gestures used in the task. (B) Unity interface with a moving progress bar indicating whether the participant should hold the rest position (Rest), transition from rest to one of the nine gestures (Start), hold the gesture (Hold), or transition back to rest (Stop). (C) Example electrode placement for the Targeted condition for one participant.

The full task consisted of 9 blocks of trials, where a block is defined as performing each of the 9 gestures once. The order of the gestures was randomized within each block. To help reduce forearm fatigue, participants were given the chance to take a short break after each set of 3 blocks.
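The trial timing and block structure described above can be expressed compactly. The sketch below is a hypothetical illustration, not the Unity implementation used in the study: it maps a time within a trial to the expected phase (3 s Rest, 2 s Start, 4 s Hold, 2 s Stop) and builds a per-set trial order in which gestures are shuffled independently within each block. The function names and the use of Python's `random` module are assumptions.

```python
import random

# Phase names and durations (s) for one trial, as described in the protocol.
PHASES = [("Rest", 3.0), ("Start", 2.0), ("Hold", 4.0), ("Stop", 2.0)]
TRIAL_S = sum(duration for _, duration in PHASES)  # 11 s per trial


def phase_at(t_in_trial):
    """Return the expected phase label for a time (s) measured from trial start."""
    elapsed = 0.0
    for name, duration in PHASES:
        if t_in_trial < elapsed + duration:
            return name
        elapsed += duration
    raise ValueError("time is past the end of the trial")


def make_schedule(gestures, n_blocks=9, blocks_per_set=3, seed=None):
    """Shuffle the gestures independently within every block and group blocks into sets.

    A break can be offered between consecutive sets.
    """
    rng = random.Random(seed)
    sets = []
    for first in range(0, n_blocks, blocks_per_set):
        trials = []
        for _ in range(min(blocks_per_set, n_blocks - first)):
            block = list(gestures)
            rng.shuffle(block)
            trials.extend(block)
        sets.append(trials)
    return sets
```

Labelling samples by `phase_at` is exactly the "expected movement timing" approach, so any delay between the on-screen prompt and the actual movement ends up as label noise.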

Flexion of individual joints was tracked at 200 Hz using a Dexmo robotic hand exoskeleton (Dexta Robotics, Shenzhen, Guangdong, China), and forearm muscle activity was measured using one of two EMG devices. Each participant performed the task twice, once for each of two conditions. In the Untargeted condition, they wore a GForcePro+ 8-channel EMG armband (OYMotion, Shanghai, China). In the Targeted condition, loose electrodes were placed on specific muscle groups and connected to an 8-channel OpenBCI Cyton biosensing board (OpenBCI, New York, New York, United States). Half of the participants started with the Untargeted condition and the other half started with the Targeted condition.

Skin preparation gel was applied to participants’ forearms to reduce electrical impedance. The armband was slid up the forearm until it felt tight, and the same armband orientation was used for all participants to maintain consistency in the data. For the Targeted condition, the targeted muscle groups were the following: flexor carpi ulnaris, flexor digitorum superficialis, flexor pollicis longus, extensor pollicis longus, extensor indicis, extensor digitorum, and extensor carpi ulnaris. Two EMG channels were used for the flexor digitorum superficialis muscle, for a total of eight channels. Foam solid gel electrodes were placed by the experimenter, who determined the electrode locations by palpating the forearm during movements known to involve the target muscle groups (e.g., wrist adduction for the flexor carpi ulnaris muscle). A bipolar electrode configuration was used, with two electrodes per EMG channel and an inter-electrode distance of around 3 cm within each pair. The ground electrode was placed on the elbow. Figure 1C shows an example electrode placement for a participant. An OpenBCI Wifi Shield was used to increase the sampling rate of the Cyton board from 250 to 500 Hz, matching the 500 Hz sampling rate of the GForcePro+ armband.

The experimental interface (Fig. 1B) was developed and run in Unity Editor (version 2021.3.4f1). The BrainFlow library was used to obtain and save raw EMG data from the devices. Spatial tracking data from the Dexmo hand exoskeleton were obtained via custom software libraries.

2.3 Data Analysis

Data preprocessing and analysis were conducted in Python, mainly using the NumPy [27], SciPy [28], Pandas [29], Scikit-learn [30] and Statsmodels [31] libraries.

2.3.1 Data Preprocessing

EMG signals were notch-filtered to remove 60 Hz power line noise, then bandpass-filtered to remove frequencies outside the 5–50 Hz range. EMG and spatial tracking data were aligned by linearly interpolating the 500 Hz EMG signals based on the timestamps of the 200 Hz hand exoskeleton values. Spatial tracking data were normalized because participants could have different ranges of values due to the design of the exoskeleton and differences in hand size. For each digit, a value of 1 corresponded to the most bent position when that finger was flexed, while a value of 0 corresponded to the median position for that finger during rest periods.
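The preprocessing steps above can be sketched with SciPy and NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the notch quality factor (`q=30`), the 4th-order Butterworth band-pass, and zero-phase (forward-backward) filtering are assumptions, as is the convention that larger raw exoskeleton values mean more flexion. The function names are hypothetical.

```python
import numpy as np
from scipy import signal


def filter_emg(emg, fs=500.0, notch_hz=60.0, band=(5.0, 50.0), order=4, q=30.0):
    """Notch out power-line noise, then band-pass each channel (zero-phase).

    emg: array of shape (n_samples, n_channels). Filter order and Q are assumptions.
    """
    b, a = signal.iirnotch(notch_hz, q, fs=fs)
    emg = signal.filtfilt(b, a, emg, axis=0)
    sos = signal.butter(order, band, btype="bandpass", fs=fs, output="sos")
    return signal.sosfiltfilt(sos, emg, axis=0)


def align_to_exoskeleton(emg_t, emg, exo_t):
    """Linearly interpolate every EMG channel at the 200 Hz exoskeleton timestamps."""
    return np.column_stack([np.interp(exo_t, emg_t, emg[:, c]) for c in range(emg.shape[1])])


def normalize_digit(pos, rest_mask, flex_mask):
    """Rescale one digit so the median rest position maps to 0 and the most flexed to 1.

    Assumes larger raw values correspond to more flexion.
    """
    rest = np.median(pos[rest_mask])
    most_flexed = np.max(pos[flex_mask])
    return (pos - rest) / (most_flexed - rest)
```

Zero-phase filtering avoids shifting EMG activity in time relative to the exoskeleton data, which matters when the two streams are aligned sample by sample.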

2.3.2 Feature Extraction

EMG features were extracted from overlapping sliding windows; a window size of 160 ms was chosen, consistent with previous work [19, 25], and an offset of 20 ms between windows was used. The feature set consisted of time-domain and other EMG features that have been commonly used in the literature [19, 32, 33]. For an N-sample window \(X = (x_1, x_2,\ldots , x_N)\) for a single EMG channel, where \(N \ge 3\), the following features were computed:

- Mean absolute value (MAV): \(\frac{1}{N} \sum _{i=1}^N |x_i|\)
- Number of zero crossings (ZC): \(\sum _{i=1}^{N-1} \mathbb{I}(\text{sign}(x_i) \ne \text{sign}(x_{i+1}))\), where \(\mathbb{I}(\cdot)\) is the indicator function, which equals 1 if the condition is satisfied and 0 otherwise
- Number of slope sign changes (SSC): \(\sum _{i=1}^{N-2} \mathbb{I}(\text{sign}(x_{i+1} - x_i) \ne \text{sign}(x_{i+2} - x_{i+1}))\)
- Waveform length (WL): \(\sum _{i=1}^{N-1} |x_{i+1} - x_{i}|\)
- Root-mean-square (RMS): \(\sqrt{\frac{1}{N} \sum _{i=1}^N {x_i}^2}\)
- Autoregression (AR) coefficients: linear coefficients from a fourth-order autoregressive model fitted on X. An order of four is commonly used in the EMG literature [19, 34] and is generally believed to offer a good trade-off between computational cost and added information, although some studies use sixth-order AR models instead [25, 32].
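The windowing and feature definitions above translate directly into NumPy. This is a hedged sketch, not the authors' implementation: it assumes windows are cut from the signal at its own sampling rate (at 500 Hz, 160 ms is 80 samples and the 20 ms offset is 10 samples), and it fits the AR coefficients by ordinary least squares without an intercept; the paper does not state the AR estimation method, and Yule-Walker estimation would give slightly different values. Function names are hypothetical.

```python
import numpy as np


def sliding_windows(emg, fs, win_ms=160.0, step_ms=20.0):
    """Split (n_samples, n_channels) EMG into overlapping windows.

    Returns an array of shape (n_windows, win_len, n_channels).
    """
    win = int(round(win_ms * fs / 1000.0))
    step = int(round(step_ms * fs / 1000.0))
    starts = range(0, emg.shape[0] - win + 1, step)
    return np.stack([emg[s:s + win] for s in starts])


def ar_coefficients(x, order=4):
    """Least-squares fit of x[t] = a_1 x[t-1] + ... + a_p x[t-p]; returns (a_1, ..., a_p)."""
    lagged = np.column_stack([x[order - k:len(x) - k] for k in range(1, order + 1)])
    coef, *_ = np.linalg.lstsq(lagged, x[order:], rcond=None)
    return coef


def channel_features(x, ar_order=4):
    """MAV, ZC, SSC, WL and RMS followed by the AR coefficients for one channel window."""
    d = np.diff(x)
    mav = np.mean(np.abs(x))
    zc = np.sum(np.sign(x[:-1]) != np.sign(x[1:]))
    ssc = np.sum(np.sign(d[:-1]) != np.sign(d[1:]))
    wl = np.sum(np.abs(d))
    rms = np.sqrt(np.mean(x ** 2))
    return np.concatenate([[mav, zc, ssc, wl, rms], ar_coefficients(x, ar_order)])


def window_features(window, ar_order=4):
    """Concatenate per-channel features for one (win_len, n_channels) window."""
    return np.concatenate([channel_features(window[:, c], ar_order) for c in range(window.shape[1])])
```

With 8 channels and a fourth-order AR model, each window yields 8 × (5 + 4) = 72 features.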

2.3.3 Machine Learning Classification and Regression

The machine learning models investigated for gesture classification were logistic regression, linear discriminant analysis (LDA) and k-nearest neighbours (KNN). For finger joint angle regression, the models used were linear regression and KNN regression. A separate regression model was trained for each of the five digits, and the reported performance metrics are averaged over the five regression models. The number of neighbours was set to 100 for both KNN models because the default of 5 neighbours led to overfitting.
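A minimal scikit-learn sketch of the model set described above follows. The model types and `n_neighbors=100` come from the text; the feature standardization (`StandardScaler`) and `max_iter=1000` for logistic regression are assumptions added so the example behaves well, and the dictionary and helper names are hypothetical. One regressor is fitted per digit and the metrics are averaged over digits.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.neighbors import KNeighborsClassifier, KNeighborsRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Factories, so every fold and every digit gets a fresh, unfitted model.
CLASSIFIERS = {
    "logistic_regression": lambda: make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "lda": lambda: LinearDiscriminantAnalysis(),
    "knn": lambda: make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=100)),
}
REGRESSORS = {
    "linear_regression": lambda: LinearRegression(),
    "knn": lambda: make_pipeline(StandardScaler(), KNeighborsRegressor(n_neighbors=100)),
}


def evaluate_per_digit(make_model, X_train, Y_train, X_test, Y_test):
    """Fit one regressor per digit (column of Y) and return the mean R^2 and mean RMSE."""
    r2s, rmses = [], []
    for digit in range(Y_train.shape[1]):
        model = make_model().fit(X_train, Y_train[:, digit])
        pred = model.predict(X_test)
        r2s.append(r2_score(Y_test[:, digit], pred))
        rmses.append(np.sqrt(mean_squared_error(Y_test[:, digit], pred)))
    return float(np.mean(r2s)), float(np.mean(rmses))
```

A larger `n_neighbors` averages over more training windows, which smooths predictions and reduces variance at the cost of some bias; this matches the overfitting rationale given above.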

Data from the first 2 s of the initial rest period in each 3-block recording were excluded to allow participants to settle into the rest position. For the Targeted condition, the Cyton board and Wifi shield setup sometimes caused packet loss (an apparently known issue; see Notes), resulting in abrupt jumps in the data; these occurrences were identified and excluded from analysis. In addition, one participant (S10) misunderstood part of the task and performed the same gesture for the GRASP_TRIPOD and GRASP_PINCH positions instead of two distinct gestures. This was discovered upon reviewing the video footage of the experiments, and data from these two gestures were excluded for that participant.
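The paper does not specify how packet-loss events were identified. One possible approach, shown here purely as a hypothetical sketch, assumes that each sample carries a wrapping counter that should increase by exactly one per sample (an 8-bit counter, modulus 256, is assumed); any break in the sequence marks a gap, and trials containing a gap can then be excluded. The function names and the counter input are assumptions, not the study's procedure.

```python
import numpy as np


def find_packet_gaps(counter, modulus=256):
    """Return indices i where counter[i] is not counter[i-1] + 1 (mod `modulus`)."""
    counter = np.asarray(counter, dtype=np.int64)
    expected = (counter[:-1] + 1) % modulus
    return np.flatnonzero(counter[1:] != expected) + 1


def trials_with_gaps(counter, trial_ids, modulus=256):
    """Set of trial identifiers that contain at least one dropped packet."""
    gaps = find_packet_gaps(counter, modulus)
    return set(np.asarray(trial_ids)[gaps].tolist())
```

If no counter is available, a threshold on the absolute sample-to-sample difference of the raw signal is a common alternative for detecting abrupt jumps, although the threshold then has to be tuned to the recording.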

Because of the experimental design, the data contained many more samples for the REST class than for the gesture classes. To mitigate this class imbalance, only a subset of the rest data was used for classification; the size of the subset was set to the median sample size of the nine gesture classes. Data from the transitions from gesture back to rest were also excluded from the classification analysis because participants tended to reach the rest position well before the allotted transition time ended.
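The rest-class subsampling described above can be sketched as follows. The text does not say how the rest subset was chosen, so random sampling without replacement (with a fixed seed) is an assumption here, and `balance_rest` is a hypothetical name. The function returns the indices of the samples to keep: all gesture samples plus a REST subset whose size equals the median gesture-class size.

```python
import numpy as np


def balance_rest(labels, rest_label="REST", seed=0):
    """Keep every gesture sample plus a random REST subset sized to the median class count."""
    labels = np.asarray(labels)
    is_rest = labels == rest_label
    _, counts = np.unique(labels[~is_rest], return_counts=True)
    target = int(np.median(counts))
    rest_idx = np.flatnonzero(is_rest)
    rng = np.random.default_rng(seed)
    kept_rest = rng.choice(rest_idx, size=min(target, rest_idx.size), replace=False)
    return np.sort(np.concatenate([np.flatnonzero(~is_rest), kept_rest]))
```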

Model performance was estimated with cross-validation. Within-participant performance was estimated with five-fold cross-validation; to prevent data leakage due to overlapping windows, feature data from windows belonging to the same trial were always assigned to the same fold. Leave-one-participant-out cross-validation was used to assess the models’ ability to generalize across participants.
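Both cross-validation schemes map onto scikit-learn's group-aware splitters. In this sketch, `GroupKFold` with trial identifiers as groups keeps all windows from a trial in the same fold, and `LeaveOneGroupOut` with participant identifiers holds out one participant at a time. The helper names and the default logistic regression model are illustrative assumptions.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GroupKFold, LeaveOneGroupOut, cross_val_score


def within_participant_scores(X, y, trial_ids, model=None, n_splits=5):
    """K-fold CV in which all windows from one trial fall into the same fold."""
    model = model if model is not None else LogisticRegression(max_iter=1000)
    return cross_val_score(model, X, y, groups=trial_ids, cv=GroupKFold(n_splits=n_splits))


def leave_one_participant_out_scores(X, y, participant_ids, model=None):
    """Train on all participants but one and test on the held-out participant."""
    model = model if model is not None else LogisticRegression(max_iter=1000)
    return cross_val_score(model, X, y, groups=participant_ids, cv=LeaveOneGroupOut())
```

A plain `KFold` with shuffling would put neighbouring, heavily overlapping windows (20 ms apart, 160 ms long) in both the training and test sets, which typically inflates accuracy.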

3 Results

3.1 Gesture Classification

We compared three machine learning models in a 10-class (rest and the nine gestures) classification task: logistic regression, LDA and KNN. The logistic regression model achieved the highest overall accuracy of \(51.8\% \pm 11.5\%\) (averaged across all cross-validation folds, participants, and conditions), surpassing both the LDA (\(46.3\% \pm 9.7\%\)) and KNN (\(38.9\% \pm 8.7\%\)) classifiers. Only the results from the logistic regression model will be discussed further in this section.

Figure 2A shows the model’s within-participant accuracy for each condition. There is variability between participants, with the lowest accuracy being \(\sim\)25% (S02) and the highest reaching 70% (S10). Classification accuracy for the Targeted condition (\(55.8\% \pm 10.1\%\)) was significantly higher than that for the Untargeted condition (\(47.9\% \pm 11.6\%\)) (two-tailed paired \(t\)-test: \(t = 7.17\), \(p < 0.001\)). This is in line with our hypothesis and suggests that placing electrodes on specific muscles improves classification accuracy. Figure 2B shows the average confusion matrix across participants. For both experimental conditions, the gesture that was classified with the highest accuracy was ring finger flexion (RING_FLEX). The classes with the lowest classification accuracies were cylinder grasp (GRASP_CYLINDER) for the Targeted condition and thumb flexion (THUMB_FLEX) for the Untargeted condition.
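The paired comparison and the averaged confusion matrix can be computed with standard tools. The following is a minimal sketch, assuming the scores are paired element-wise (the same participant or fold in both conditions) and that the average confusion matrix is the mean of per-participant row-normalized matrices. The helper names are hypothetical, and any example values passed to them would be illustrative, not study data.

```python
import numpy as np
from scipy import stats
from sklearn.metrics import confusion_matrix


def paired_ttest(targeted, untargeted):
    """Two-sided paired t-test on matched scores; returns (t, p)."""
    res = stats.ttest_rel(targeted, untargeted)
    return float(res.statistic), float(res.pvalue)


def mean_confusion(per_participant, labels):
    """Average row-normalized confusion matrices; each item is a (y_true, y_pred) pair."""
    mats = [confusion_matrix(t, p, labels=labels, normalize="true") for t, p in per_participant]
    return np.mean(mats, axis=0)
```

Row normalization gives per-class recall on the diagonal, so classes with many samples do not dominate the averaged matrix.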

We applied leave-one-participant-out cross-validation to assess the generalization abilities of the model. Classification accuracies were lower than those obtained in the within-participant analysis: \(25.5\% \pm 5.3\%\) for the Targeted condition and \(31.2\% \pm 5.9\%\) for the Untargeted condition (Fig. 3A). As in the within-participant analysis, the logistic regression model performed better (\(28.4\% \pm 6.5\%\)) than the LDA (\(23.7\% \pm 7.1\%\)) and KNN models (\(20.6\% \pm 5.0\%\)). The Untargeted condition yielded higher accuracy, though the difference did not reach statistical significance (two-tailed paired \(t\)-test: \(t = -2.24\), \(p= 0.052\)). The lack of a clear diagonal pattern in the confusion matrices (Fig. 3B) shows that most classes were not classified accurately in this task, though there are exceptions, such as the REST and RING_FLEX classes for the Untargeted condition. Overall, these results suggest that the models do not generalize well to other participants.

3.2 Finger Joint Angle Regression

In the finger joint angle regression task, we compared a linear regression model with a KNN model. In the within-participant analysis, the KNN model achieved a higher overall average coefficient of determination (\(R^2\)) of \(0.200 \pm 0.102\) than the linear regression model (\(0.170 \pm 0.104\)). The KNN model also had a lower mean root-mean-square error (RMSE) (\(0.265 \pm 0.040\)) than the linear regression model (\(0.269 \pm 0.039\)).

Figures 4A and B show within-participant cross-validation results for the KNN model. As in the classification task, model performance varied between participants, with the lowest \(R^2\) below 0.10 and the highest exceeding 0.30. Mean \(R^2\) values were not significantly different between the Targeted (\(0.207 \pm 0.109\)) and the Untargeted (\(0.193 \pm 0.096\)) conditions (two-tailed paired \(t\)-test: \(t = 1.01\), \(p=0.318\)). RMSE was more consistent across participants, at around 0.25 for most of them. Mean RMSE values for the Targeted condition (\(0.260 \pm 0.037\)) were significantly lower (better) than those for the Untargeted condition (\(0.270 \pm 0.043\)) (two-tailed paired \(t\)-test: \(t = -2.831\), \(p=0.007\)).

Figure 4C presents sample predicted and actual finger joint angle values from the KNN model for the best (S09) and worst (S02) participants. The models for S09 appear to track finger movements more closely than those for S02. However, for both participants, even when the models correctly predicted changes in finger movement, the predicted joint angles fluctuated from sample to sample and did not reproduce the smooth changes observed in the actual joint angle values.

Leave-one-participant-out cross-validation for the regression task produced an \(R^2\) of \(-0.050 \pm 0.126\) for the KNN model and \(-0.090 \pm 0.269\) for the linear regression model. A negative \(R^2\) value indicates that the model performed worse than a constant prediction equal to the mean of the observed values in the test data, suggesting that the regression models are unable to generalize to data from another participant.
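A small worked example shows why \(R^2\) can become negative. \(R^2 = 1 - SS_{res}/SS_{tot}\), where \(SS_{tot}\) is measured around the mean of the observed values being scored, so a constant prediction at that mean scores exactly 0, and any prediction with larger squared error scores below 0. The helper below is a hypothetical re-implementation used for illustration and is checked against scikit-learn's `r2_score`.

```python
import numpy as np
from sklearn.metrics import r2_score


def r_squared(y_true, y_pred):
    """R^2 = 1 - SS_res / SS_tot, with SS_tot taken around the mean of y_true."""
    y_true = np.asarray(y_true, dtype=float)
    ss_res = np.sum((y_true - np.asarray(y_pred, dtype=float)) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


y_test = np.array([1.0, 2.0, 3.0, 4.0])
print(r_squared(y_test, np.full(4, y_test.mean())))  # 0.0: constant at the test mean
print(r_squared(y_test, np.zeros(4)))  # -5.0: constant far from the test mean
print(np.isclose(r_squared(y_test, np.zeros(4)), r2_score(y_test, np.zeros(4))))  # True
```

In a leave-one-participant-out setting, even a model that only learned the training participants' average joint angle would score below 0 whenever the held-out participant's mean differs from it.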

4 Discussion

This work aimed to compare the movement recognition capabilities of two EMG devices, an armband (Untargeted condition) and electrodes placed on specific muscles (Targeted condition). We recorded forearm EMG signals while participants were in a resting position or performing one of nine gestures. We tested machine learning models on a set of EMG features (MAV, ZC, SSC, WL, RMS, and fourth-order AR coefficients; see Sect. 2.3.2 for definitions of the EMG features) in two prediction tasks: gesture classification and finger joint angle regression.

Gesture classification accuracy was significantly higher when using EMG electrodes individually placed on target muscles than when using an off-the-shelf armband. For finger joint angle regression, goodness-of-fit (\(R^2\)) did not differ significantly between the two EMG methods, although RMSE was significantly lower for the Targeted condition; the \(R^2\) values we obtained were also lower than those reported in the literature. When trained prediction models were tested on data from a previously unseen participant, generalization was poor for both EMG acquisition methods.

In the gesture classification task, the best model (logistic regression) performed significantly better for the Targeted condition (accuracy of \(55.8\% \pm 10.1\%\)) than for the Untargeted condition (accuracy of \(47.9\% \pm 11.6\%\)) when trained and tested on data from the same participant. This result differs from previous work by Farrell and Weir, which found no significant difference between their Targeted and Untargeted conditions [19]. One difference between their study and ours is that Farrell and Weir used large-scale gestures involving movement of multiple fingers and the wrist (e.g., wrist extension/flexion, hand pronation/supination, palmar prehension), while the gestures we used were more dexterous (flexion of individual fingers and various grasping gestures) and more difficult to classify. Future studies could determine whether classifying more dexterous gestures requires more precise electrode positioning than classifying larger-scale gestures.

Our results suggest that using individually placed EMG electrodes instead of an off-the-shelf armband may improve movement recognition accuracy, with a difference of \(\sim\)8 percentage points in classification accuracy between the two methods. However, this improvement comes at a cost in setup effort and time. Setting up the targeted electrodes required a trained person to determine optimal electrode locations. We estimate that identifying electrode positions and placing electrodes on the forearm took around 10–15 min in our study, while donning the armband took only a few seconds. Moreover, adhesive foam solid gel electrodes may cause discomfort, since forearm hair can be pulled out when the electrodes are removed. Hence, the trade-off between boosting model performance and maintaining ease-of-use should be considered when designing EMG applications, especially if the target user base is a patient population. In the context of at-home stroke rehabilitation therapy, the convenience of an armband may outweigh its lower prediction performance, as treatment programs involve repeated exercise sessions, and long setup times could increase fatigue and decrease patient motivation.

Overall, the classification accuracies we found are lower than those previously reported in the literature with similar EMG equipment [24, 26]. We suspect that the main reason for this discrepancy is data labelling. Our EMG timeseries data were labelled by the Unity data collection interface we developed, and the labels were based on expected movement timing, ignoring the possibility that participants might start or end a movement without precisely following the on-screen prompts. We have begun working on a relabelling method based on finger movement velocity from the Dexmo glove data, and preliminary tests have yielded accuracies in the 60–70% range, which is consistent with previous literature.
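The relabelling method mentioned above is still in development and its details are not given. As a hypothetical sketch only, a velocity-based approach could flag samples where the normalized joint trajectory moves faster than a threshold and take the first and last flagged samples as the actual movement onset and offset. The 200 Hz rate matches the exoskeleton; the threshold value of 0.5 normalized units per second and the function names are assumptions.

```python
import numpy as np


def movement_mask(position, fs=200.0, speed_threshold=0.5):
    """Flag samples whose absolute velocity exceeds a threshold.

    position: normalized joint trajectory (0 = rest, 1 = most flexed).
    speed_threshold is in normalized units per second (hypothetical value).
    """
    velocity = np.gradient(position, 1.0 / fs)
    return np.abs(velocity) > speed_threshold


def movement_bounds(mask):
    """Return the (first, last) indices flagged as moving, or None if none are."""
    idx = np.flatnonzero(mask)
    if idx.size == 0:
        return None
    return int(idx[0]), int(idx[-1])
```

For a whole-hand grasp, the masks from the five digits could be combined (e.g., with a logical OR) before extracting the bounds.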

In the finger joint angle regression task, we obtained an average within-participant \(R^2\) of \(0.207 \pm 0.109\) for the Targeted condition and \(0.193 \pm 0.096\) for the Untargeted condition using a KNN regression model, with no statistically significant difference between the two conditions. These mean \(R^2\) values were also lower than those previously reported in the literature (\(R^2\) > 0.72 for all five MCP joints using a similar set of features and the Myo armband [35]). One shortcoming of our current regression model is that its predictions are unstable over time (Fig. 4C). This could be because the model treats each data sample independently and does not account for the temporal dimension. Other groups have successfully applied specialized timeseries regression models to EMG-based finger joint angle estimation, in particular nonlinear autoregressive exogenous (NARX) models [36]. NARX models take as inputs both current and past EMG features, in addition to past predictions. Future studies will incorporate past predictions as model inputs, which may improve the stability of the regression model.
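The NARX input structure described above can be illustrated with a lag-based design matrix. This is a hedged sketch, not the model of [36]: it builds rows containing the current and past feature vectors plus past target values (teacher forcing during training) and then runs a fitted single-output regressor in closed loop, feeding back its own predictions. Any regressor with a `predict` method would work. The lag counts and function names are hypothetical, and a true NARX model would use a nonlinear regressor.

```python
import numpy as np


def narx_design(features, targets, n_input_lags=2, n_output_lags=2):
    """Build NARX-style training rows (teacher forcing).

    Row t holds features[t], ..., features[t - n_input_lags] followed by
    targets[t - 1], ..., targets[t - n_output_lags]; the label is targets[t].
    """
    start = max(n_input_lags, n_output_lags)
    rows = []
    for t in range(start, len(features)):
        past_x = [features[t - k] for k in range(n_input_lags + 1)]
        past_y = [np.atleast_1d(targets[t - k]) for k in range(1, n_output_lags + 1)]
        rows.append(np.concatenate(past_x + past_y))
    return np.asarray(rows), np.asarray(targets)[start:]


def predict_closed_loop(model, features, initial_targets, n_input_lags=2, n_output_lags=2):
    """Run a fitted single-output model recursively on its own past predictions.

    initial_targets must supply the first max(n_input_lags, n_output_lags) values.
    """
    start = max(n_input_lags, n_output_lags)
    preds = [np.atleast_1d(v) for v in initial_targets[:start]]
    for t in range(start, len(features)):
        past_x = [features[t - k] for k in range(n_input_lags + 1)]
        past_y = [preds[t - k] for k in range(1, n_output_lags + 1)]
        row = np.concatenate(past_x + past_y)[None, :]
        preds.append(np.atleast_1d(model.predict(row)[0]))
    return np.concatenate(preds)
```

Feeding back past outputs ties each prediction to the previous ones, which tends to suppress the sample-to-sample jitter visible in Fig. 4C, but errors can also propagate through the feedback loop.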

We also performed leave-one-participant-out cross-validation on the two prediction tasks to assess our models’ generalization abilities. For gesture classification, accuracy for the Untargeted condition (\(31.2\% \pm 6.2\%\)) was higher than that for the Targeted condition (\(25.5\% \pm 5.6\%\)), though the difference was not statistically significant. For finger joint angle regression, \(R^2\) values were negative for both conditions, indicating poor model fits. Overall, performance metrics from the leave-one-participant-out analyses were consistently worse than those from the within-participant analyses, indicating that the models generalized poorly across participants. This could be due to individual differences in forearm anatomy, which would place the electrodes at different locations relative to the underlying muscles for each individual (in both the Targeted and Untargeted conditions). Other factors that could have contributed to poor generalizability include differences in participants’ muscle contraction strengths and varying noise levels in the EMG data across participants (due to, e.g., electrode contact or armband fit).

Future directions for this research include running further classification analyses with the relabelled EMG data. For finger joint angle regression, in addition to trying other regression models such as NARX, it would be interesting to predict joint angular velocity instead of position: finger joint angular velocity would be a meaningful measure for movement recognition, and position can be inferred from velocity if the initial position is known. Moreover, we observed that EMG waveforms differed between transition periods and steady-state periods with no change in velocity. Velocity may therefore be easier to predict than position, since it relates more directly to muscle activity.
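The claim that position can be recovered from velocity given the initial position can be checked with a discrete round trip. This sketch is a minimal illustration that assumes velocity is computed as a forward difference at the 200 Hz exoskeleton rate; under that definition, a cumulative sum starting from the known initial position reproduces the trajectory exactly (up to floating-point error). The function names are hypothetical.

```python
import numpy as np


def position_to_velocity(pos, fs=200.0):
    """Forward-difference velocity (units per second); one sample shorter than pos."""
    return np.diff(pos) * fs


def velocity_to_position(vel, initial_position, fs=200.0):
    """Recover positions by cumulatively summing velocity from a known start value."""
    return initial_position + np.concatenate([[0.0], np.cumsum(vel) / fs])
```

With predicted rather than measured velocities, integration accumulates prediction errors over time, so a velocity-based approach would likely need a periodic reset, for example whenever the hand returns to the rest position.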

5 Conclusion

Our study directly compared the predictive performance of an off-the-shelf EMG armband with fixed electrode placement and an EMG setup with flexible electrode positioning. Our results showed a trade-off between predictive accuracy and ease-of-use. On the one hand, the setup with flexible electrode positioning resulted in better predictive accuracy but required more setup time. On the other hand, although the off-the-shelf EMG armband performed worse than the other setup, its ease-of-use may make it better suited to patient populations. It is important to consider trade-offs between accuracy and ease-of-use when developing clinical applications, including stroke rehabilitation therapy.

Data availability

Not available.

Notes

See GitHub issue: https://github.com/OpenBCI/OpenBCI_WIFI/issues/82

References

Feigin, V. L., Stark, B. A., Johnson, C. O., Roth, G. A., Bisignano, C., Abady, G. G., Abbasifard, M., Abbasi-Kangevari, M., Abd-Allah, F., Abedi, V. & Abualhasan, A. (2021). Global, regional, and national burden of stroke and its risk factors, 1990–2019: a systematic analysis for the Global Burden of Disease Study 2019. The Lancet Neurology, 20, 795–820.

Tsao, C. W., Aday, A. W., Almarzooq, Z. I., Alonso, A., Beaton, A. Z., Bittencourt, M. S., Boehme, A. K., Buxton, A. E., Carson, A. P., Commodore-Mensah, Y. & Elkind, M. S. (2022). Heart disease and stroke statistics-2022 update: A report from the American Heart Association. Circulation. https://doi.org/10.1161/CIR.0000000000001052

Broeks, J. G., Lankhorst, G. J., Rumping, K., & Prevo, A. J. H. (1999). The long-term outcome of arm function after stroke: results of a follow-up study. Disability and Rehabilitation, 21, 357–364. https://doi.org/10.1080/096382899297459

Raghavan, P. (2007). The nature of hand motor impairment after stroke and its treatment. Current Treatment Options in Cardiovascular Medicine, 9, 221–228. https://doi.org/10.1007/s11936-007-0016-3

Welmer, A. K., Holmqvist, L. W., & Sommerfeld, D. K. (2008). Limited fine hand use after stroke and its association with other disabilities. Journal of Rehabilitation Medicine, 40, 603–608.

Franceschini, M., Porta, F. L., Agosti, M., & Massucci, M. (2010). Is health-related-quality of life of stroke patients influenced by neurological impairments at one year after stroke? European Journal of Physical and Rehabilitation Medicine, 46, 11.

Nichols-Larsen, D. S., Clark, P., Zeringue, A., Greenspan, A., & Blanton, S. (2005). Factors influencing stroke survivor's quality of life during subacute recovery. Stroke, 36, 1480–1484. https://doi.org/10.1161/01.STR.0000170706.13595.4f

Veerbeek, J. M., Kwakkel, G., van Wegen, E. E., Ket, J. C., & Heymans, M. W. (2011). Early prediction of outcome of activities of daily living after stroke: A systematic review. Stroke, 42, 1482–1488. https://doi.org/10.1161/STROKEAHA.110.604090

Hatem, S. M., Saussez, G., Della Faille, M., Prist, V., Zhang, X., Dispa, D. & Bleyenheuft, Y. (2016). Rehabilitation of motor function after stroke: A multiple systematic review focused on techniques to stimulate upper extremity recovery. Frontiers in Human Neuroscience. https://doi.org/10.3389/fnhum.2016.00442

Lin, S. H., & Dionne, T. P. (2018). Interventions to improve movement and functional outcomes in adult stroke rehabilitation: Review and evidence summary. Journal of Participatory Medicine, 10, e3.

Mayo, N. E. (2016). Stroke rehabilitation at home. Stroke, 47, 1685–1691. https://doi.org/10.1161/strokeaha.116.011309

Feldner, H. A., Papazian, C., Peters, K. M., Creutzfeldt, C. J., & Steele, K. M. (2021). Clinical use of surface electromyography to track acute upper extremity muscle recovery after stroke: A descriptive case study of a single patient. Applied System Innovation, 4, 32.

Castiblanco, J. C., Mondragon, I. F., Alvarado-Rojas, C., & Colorado, J. D. (2021). Assist-as-needed exoskeleton for hand joint rehabilitation based on muscle effort detection. Sensors (Basel, Switzerland), 21, 4372.

Nam, C., Zhang, B., Chow, T., Ye, F., Huang, Y., Guo, Z., Li, W., Rong, W., Hu, X. & Poon, W. (2021). Home-based self-help telerehabilitation of the upper limb assisted by an electromyography-driven wrist/hand exoneuromusculoskeleton after stroke. Journal of Neuroengineering and Rehabilitation, 18, 137.

Xie, C., Yang, Q., Huang, Y., Su, S. W., Xu, T., & Song, R. (2021). A hybrid arm-hand rehabilitation robot with EMG-based admittance controller. IEEE Transactions on Biomedical Circuits and Systems, 15, 1332–1342.

Huang, J., Lin, M., Fu, J., Sun, Y., & Fang, Q. (2021). An immersive motor imagery training system for post-stroke rehabilitation combining VR and EMG-based real-time feedback. In 2021 43rd annual international conference of the IEEE engineering in medicine & biology society (EMBC) (pp. 7590–7593).

Ito, K., Shintaro, U., Akiko, Y., Chang, M. K., Shin, K., Kazuki, U., Shigeo, T., & Yohei, O. (2021). Electromyography-controlled gamified exercise system for the distal upper extremity: a usability assessment in subacute post-stroke patients. Disability and Rehabilitation: Assistive Technology, 18(6), 883–888.

Jack, D., Boian, R., Merians, A. S., Tremaine, M., Burdea, G. C., Adamovich, S. V., Recce, M., & Poizner, H. (2001). Virtual reality-enhanced stroke rehabilitation. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 9, 308–318.

Farrell, T. R., & Weir, R. F. (2008). A comparison of the effects of electrode implantation and targeting on pattern classification accuracy for prosthesis control. IEEE Transactions on Biomedical Engineering, 55, 2198–2211.

Palkowski, A., & Redlarski, G. (2016). Basic hand gestures classification based on surface electromyography. Computational and Mathematical Methods in Medicine, 2016, 6481282.

Côté-Allard, U., Fall, C. L., Drouin, A., Campeau-Lecours, A., Gosselin, C., Glette, K., Laviolette, F., & Gosselin, B. (2019). Deep learning for electromyographic hand gesture signal classification using transfer learning. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 27, 760–771.

Kaya, E., & Kumbasar, T. (2018). Hand gesture recognition systems with the wearable Myo armband. In 2018 6th international conference on control engineering & information technology (CEIT) (pp. 1–6).

Mendez, I., Hansen, B. W., Grabow, C. M., Smedegaard, E. J. L., Skogberg, N. B., Uth, X. J., Bruhn, A., Geng, B., & Kamavuako, E. N. (2017). Evaluation of the Myo armband for the classification of hand motions. In IEEE international conference on rehabilitation robotics (ICORR) 2017 (pp. 1211–1214).

Caesarendra, W., Tjahjowidodo, T., Nico, Y., Wahyudati, S., & Nurhasanah, L. (2018). EMG finger movement classification based on ANFIS. Journal of Physics: Conference Series, 1007, 012005. https://doi.org/10.1088/1742-6596/1007/1/012005

Al-Timemy, A. H., Bugmann, G., Escudero, J., & Outram, N. (2013). Classification of finger movements for the dexterous hand prosthesis control with surface electromyography. IEEE Journal of Biomedical and Health Informatics, 17, 608–618.

Wang, M., Bulger, M., Dai, Y., Noël, K., Axon, C., Brandenberger, A., Fay, S., Gao, Z., Gilmer, S., Hamdan, J., & Humane, P. (2020). A 3D-printed, adjustable armband for electromyography-based finger movement classification with haptic feedback. In 2020 IEEE international conference on systems, man, and cybernetics (SMC) (pp. 3460–3465).

Harris, C. R., et al. (2020). Array programming with NumPy. Nature, 585, 357–362.

Virtanen, P., et al. (2020). SciPy 1.0: fundamental algorithms for scientific computing in Python. Nature Methods, 17, 261–272.

McKinney, W. (2010). Data structures for statistical computing in Python. In Proceedings of the 9th Python in Science Conference (pp. 56–61). Austin, Texas. https://conference.scipy.org/proceedings/scipy2010/mckinney.html

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., & Vanderplas, J. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12, 2825–2830.

Seabold, S., & Perktold, J. (2010). Statsmodels: Econometric and statistical modeling with Python. In Proceedings of the 9th Python in Science Conference (pp. 92–96). Austin, Texas. https://conference.scipy.org/proceedings/scipy2010/seabold.html

Hargrove, L. J., Englehart, K., & Hudgins, B. (2007). A comparison of surface and intramuscular myoelectric signal classification. IEEE Transactions on Biomedical Engineering, 54, 847–853.

Hudgins, B., Parker, P., & Scott, R. (1993). A new strategy for multifunction myoelectric control. IEEE Transactions on Biomedical Engineering, 40, 82–94.

Krasoulis, A., Vijayakumar, S., & Nazarpour, K. (2015). Evaluation of regression methods for the continuous decoding of finger movement from surface EMG and accelerometry. In 2015 7th international IEEE/EMBS conference on neural engineering (NER) (pp. 631–634).

Pallotti, A., Orengo, G., & Saggio, G. (2021). Measurements comparison of finger joint angles in hand postures between an sEMG armband and a sensory glove. Biocybernetics and Biomedical Engineering, 41, 605–616.

Gao, Z., Tang, R., Huang, Q., & He, J. (2021). A multi-DoF prosthetic hand finger joint controller for wearable sEMG sensors by nonlinear autoregressive exogenous model. Sensors (Basel, Switzerland), 21, 2576.

Acknowledgements

The authors thank Linda Pecora for providing feedback on the experimental setup and design.

Funding

Open Access funding provided by National Research Council Canada. This work was funded in part by the National Research Council Canada, the Fonds de recherche du Québec – Nature et technologies (FRQNT), and McGill University (Quantitative Life Sciences graduate program). The first author received funding from the NSERC-CREATE training grant in Complex Dynamics to C. Palmer (PI).

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by MW. The first draft of the manuscript was written by MW, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Ethical Approval

Ethics approval was granted by the Research Ethics Board of the National Research Council Canada (#2022-18) and by the Research Ethics Board of McGill University (#22-05-057).

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Consent for Publication

All participants provided informed consent for publication of their data.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, M., Khundrakpam, B. & Vaughan, T. Effects of Electrode Position Targeting in Noninvasive Electromyography Technologies for Finger and Hand Movement Prediction. J. Med. Biol. Eng. 43, 603–611 (2023). https://doi.org/10.1007/s40846-023-00823-x

DOI: https://doi.org/10.1007/s40846-023-00823-x