Abstract

Given increasing evidence of the importance of sensorimotor experience and meaningful movement in geometry learning and spatial thinking, the potential of digital designs to foster specific movements in mathematical learning is promising. This article reports a study with elementary children engaging with a learning environment designed to support meaningful mathematical movement through the use of two shared, but alternative, representations around Cartesian co-ordinates: a 3D immersive virtual environment, where one child collects flowers from target co-ordinates selected by another child using a 2D visual representation of the virtual garden and person location in space. In this design, the body becomes a ‘tangible’ resource for thinking, learning and joint activity, through bodily experience, and where body movement, position and orientation are made visible to collaborators. A qualitative, multimodal analysis examining collaborative interaction among twenty-one children 8–9 years old shows ways in which the ‘body’ became an instrument for children’s thinking through, and reasoning about, finding positions in space and movement in relation to Cartesian co-ordinates. In particular, it shows how the use of different representations (tangible and visual 2D screen-based) situated the meaning-making process in a space where children, using their bodies, crafted connections between the different representations and used transcending objects to facilitate an integration of the different perspectives.


A substantial range of work drawing on theories of embodied cognition provides evidence of the importance of sensorimotor experience for cognition and mathematical learning (e.g. Barsalou, 2008; Cress, Fischer, Moeller, Sauter & Nuerk, 2010; Abrahamson & Trninic, 2011), and in eliciting sensorimotor representations that can be used in later reasoning (Goldin-Meadow, Nusbaum, Kelly & Wagner, 2001; Alibali and Nathan, 2012; Johnson-Glenberg, 2018). Increasingly, research highlights the importance of particular action experiences, or purposeful body movements, in supporting cognition in different contexts, including geometry learning and spatial thinking (e.g. Hall & Nemirovsky, 2012; Shoval, 2011; Kaur, 2013; Ma, 2017; DeSutter and Stieff, 2017). Walkington, Srisurichan, Nathan, Williams and Alibali (2012) argue that full-body actions or gestures enable greater transfer to new problems, since they better highlight how the actions relate to the relevant concepts and constraints. Collectively, this work has important implications for designing multi-sensory digital environments that bring the body differently into play, since they can provide sensorimotor experiences designed to guide purposeful or meaningful actions in relation to mathematical ideas.

While various applications have been developed to support embodied learning in mathematics, none to date explore the potential of immersive virtual reality (VR) as an instrument for embodied exploration of spatial concepts by young children. Existing applications and design guidelines for immersive VR in education focus mainly on science subjects for older students (Johnson-Glenberg, 2018). This raises the need to better understand the role of VR in promoting meaningful sensorimotor interaction, as well as how the specific digital resources shape interaction and meaning-making.

VR offers new ways of exploiting the potential of full-body actions in space, in addition to fostering (meaningful) bodily movement, position and orientation in relation to dynamically responsive visual and audio feedback. However, a key challenge for learning with VR is the isolation of the user from the physical environment, thought to prohibit effective learning and interaction (Greenwald, Kulik, Kunert, Beck, Fröhlich, Cobb & Maes, 2017). Given that the body and its actions are visible by others in physical space, as well as in linked screen representations of the VR space, we propose that the body in immersive VR can become a ‘public’ and therefore ‘tangible’ resource for thinking, learning and joint activity, where students use “the physical [and VR] environment in his or her learning and thinking […] and cognition takes place through perception and action” (Stevens, 2012, p.338).

This article reports on a qualitative study with twenty-one children aged 8–9 years interacting in a purpose-built VR environment designed to support elementary students’ learning of Cartesian co-ordinates. The environment was designed with two shared representations. The first is a 3D immersive virtual environment, providing a physical space with a virtual overlay of a garden of flowers placed on whole number grid references, intended to foster the use of the body as a tangible object for thinking in action (the explorer, in the VR space) and made visible to their peer. The second is a 2D visual representation of the ‘virtual’ garden and the person’s location, where another child (the navigator) selects flower co-ordinates for the explorer to ‘collect’ (body as a public, tangible resource).

The analysis examines how student interaction unfolded, in particular by exploring ways in which: a) the body as a tangible object operating in different spatial representations facilitated meaning-making around spatial concepts; b) the dynamic connection of the VR environment to a 2D representation provided resources for creating shared understanding around space. In particular, we aim to gain “valuable insight into how the body–mind interplay can influence meaning-making during embodied experiences” (Tan and Chow, 2018, p.1), as well as insight into the design considerations this raises for digital, embodied, interaction environments.

Background

The potential for digital designs to enhance physical environments and interactions has rekindled discussions around embodiment. Multiple disciplinary perspectives point to the importance of sensorimotor interaction in underpinning cognitive development: meaning-making and conceptual understanding (e.g. Anderson, 2005; Smith and Gasser, 2005; Barsalou, 2008; Wilson, 2002). Stevens (2012) makes a distinction between two ‘meanings’ of embodiment. A ‘conceptualist’ meaning draws on work which takes a cognitive view of how sensorimotor interaction underpins the ways in which we conceptualise the world. For example, theories of conceptual metaphor (Lakoff & Johnson, 1980) argue for the central role of sensorimotor experiences in forming image-schemas, which underpin our conceptualisation of experienced phenomena.

An ‘interactionist’ meaning places the body as a “public resource for thinking, learning, and joint activity” (Stevens, 2012, p.338), related to “a dynamically unfolding, interactively organized locus for the production and display of relevant meaning and action” (Goodwin 2000, p. 1517). This approach focuses on “experiential, non-causal/mechanistic, descriptive, lived experience [in interaction]” (Nemirovsky, Rasmussen, Sweeney & Wawro, 2012, p. 290), and, in our study, brings attention to how children respond to and engage with tasks, issues and challenges that arise in interaction and communication in relation to mathematical ideas. In particular, it draws attention to the role of the situated body in communication and meaning-making. This interactionist perspective provides a lens for the work reported here, where the ‘body’ in the interactive space becomes both a vehicle for thinking and making meaning through movement in the VR environment, and a ‘tangible’ object – visible to others – that provides the link between the 3D VR space and the 2D visual representation.

Embodiment, Mathematics and Spatial Thinking

There is substantial evidence that mathematical cognition is embodied (Lakoff & Núñez, 2000; Alibali & Nathan, 2012). Educational research in mathematics has reported ways in which ‘action’ plays a role in teaching and learning (e.g. Goldin-Meadow et al., 2009; Alibali et al., 2013; Nemirovsky et al., 2012; Abrahamson & Sánchez-García, 2016). Barsalou (2008) highlights the importance of re-enaction of modality-specific experiences, Gerfosky (2012) argues for the benefits of performative, embodied modes of engagement and Goldin-Meadow et al. (2009) show that fostering specific actions that related to the gestures of those who correctly explain a mathematics problem helped children to learn mathematics. They suggest that the ‘actions’ benefit from being accurate, but also need to be congruent and, through this, become meaningful in relation to the concept being learned (Segal, Tversky & Black, 2014).

While similar effects have been shown for learning geometry (Kim, Roth & Thom, 2011; Elia, Gagatsis & van den Heuvel-Panhuizen, 2014), and the ‘primacy of the body’ in children’s geometric drawings (Thom and McGarvey, 2015), Walkington et al. (2012) claim that a greater degree of bodily involvement may assist in conveying mathematical principles beyond using gestures alone, since full-body gestures better highlight how actions relate to the relevant concepts and constraints. Similarly, a study of second- and third-grade children who were taught about angles through mindful movements reports how they outperformed those taught verbally (Shoval, 2011). The whole-body interaction ‘walking-scale geometry’ study highlighted the importance of the body being externally visible and accessible to others (e.g. an angle), as well as becoming the mathematical objects themselves (Ma, 2017).

Spatial reasoning is critical for early years learning in developing ability in STEM subjects (e.g. Sinclair and Bruce, 2015). While there is some lack of attention to supporting the development of knowledge of space, spatial concepts and spatial thinking (Uttal, Meadow, Tipton, Hand, Alden, Warren & Newcombe, 2013; DeSutter and Stieff, 2017), research interest in spatial reasoning is increasing (Mix and Battista, 2018). Concepts of space draw on the integration or co-ordination of space, representation and reasoning (NRC, 2006), and involve developing ideas through spatial relationship between objects (Kovacević, 2017). Providing opportunities via diverse, dynamic, spatial tasks for students can have positive impacts on, for instance, 2D–3D mental transformations (Bruce & Hawes, 2015). However, we know little about how the ways in which geometric constructs are represented influence learners’ spatial reasoning (Jones & Tzekaki, 2016). Educational interfaces that encourage specific bodily movements within a Cartesian plane (e.g. for elementary-aged children, computing distances between two or more points in Cartesian space) can exploit the body as an instrument to conceptualise space, through scaffolding spatial learning and re-enaction of movement and position in space.

Virtual Reality in Education

VR enables users to interact and socialize in worlds that simulate aspects of reality, which makes VR desirable for education. While there are increasing applications for professional training, rehabilitation, health and well-being, little research to date has been undertaken in school education. This is partly due to insufficient knowledge of potential health (e.g. dizziness) and ethical issues involving the use of Head-Mounted Displays (HMDs) by young children, although research suggests that 5–10 minute incremental use for young children and up to 20 minutes for older children is unproblematic (Bailey & Bailenson, 2017).

A main challenge of immersive VR environments for education, which typically aims to foster collaboration, is the isolation of the user, not only from the physical environment, but also from visually perceiving his/her own body. This challenge has been perceived as prohibiting effective learning and interaction, leading to design choices which bypass ‘user isolation’ by bringing the users together or by augmenting reality with digital overlays on the physical world (Greenwald et al., 2017). In the research reported here, a different approach was taken to foster collaborative interaction through two shared, but alternative, representations of the environment, where learners took different, but collaborative, roles within the game: one child (the navigator), located in the physical world, manipulated a 2D representation of the virtual world, while the other child (the explorer) moved in the virtual world. This required the development of shared understanding around body position and movement related to Cartesian co-ordinates.

Research shows how body-based forms of interaction can increase engagement and immersion (Adachi et al., 2013; Zuckerman and Gal-Oz, 2013), as well as support social learning interactions (Jakobsen and Hornbæk, 2014; Antle et al., 2011). However, designing digital sensory (e.g. tangible and whole-body interaction) environments for learning is challenging, given the wide flexibility in sensing and in linking action to digital effects. Research examining different design parameters on interaction and cognition across various learning domains (e.g. Price, Sheridan & Pontual Falcão, 2010; Smith, King & Hoyte, 2014; Abrahamson & Bakker, 2016) suggests how specific design parameters influence meaningful mappings in interaction (e.g. Malinverni & Pares, 2015). Specifically, the location of visual representations relative to action (discrete or co-located) has been shown to have a direct impact on focus of attention and awareness of others’ actions (e.g. Price et al., 2010; Price, Jewitt & Sakr, 2015).

The Cartesian Garden Learning Environment

Cartesian co-ordinates as a mathematical concept focus on position, where the two co-ordinates are numerical descriptors of a point on the Cartesian plane. However, learning how to use co-ordinates is more complex. Somerville and Bryant (1985) identify the learning challenge as a problem of finding the intersection of two lines drawn from positions on each axis. Discussions with teachers, as part of a broader study to inform design process and design of the digital environment (Duffy, Price, Volpe, Marshall, Berthouze, Cappagli, Cuturi, Baud Bovy, Trainor & Gori, 2017), revealed challenges around awareness of the convention of the order of co-ordinates. Teachers foregrounded two key teaching strategies: a) employing memorisation techniques and b) enacting the process in 3D space:

Children have difficulties in remembering the right order of co-ordinates. We are using riddles as a mechanism to help them […] We also take the activity in the playground […]. When we are doing this kind of activity in the playground, it helps them because they are using their gross motor skills, it is really good for them to understand that they move in one direction before the other direction. (Teacher interview, 29-10-2018).

This extract highlights that the process of finding co-ordinates is not only about understanding where the intersection of two lines forms positions on axes, but also involves making a correspondence between the co-ordinates and their relation to points on the grid. The latter becomes an issue of order of movement to reach the correct point, where the order description of the co-ordinates provides the means to communicate the mathematical movement convention. However, children have difficulty transferring their understanding of these mathematical ideas from 3D-space-based to 2D-paper-based symbolic representation when enactment and paper-and-pencil tasks take place separately and in a non-connected way:

It is really good for them to understand that they move in one direction before the other direction. But when that is translated to a paper copy of it, you get lots of confusion, lots of issues and translation problems. Once it becomes 2D, it becomes abstract again for them. So they have to re-learn it [...] It is almost like a completely different task to some children because they can’t see what the connections are. (Teacher interview, 29-10-2018).

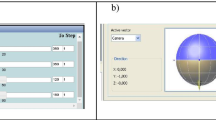

Taking these aspects into consideration, the Cartesian Garden was designed to engage children’s use of body movement in exploring the Cartesian plane. The design aimed to incorporate embodied actions – purposeful body movements in relation to the structure and function of co-ordinates (DeSutter and Stieff, 2017). The environment uses HTC Vive in a space of 4 × 4 metres, where children experience an immersive virtual garden, with flowers located at co-ordinate points of the Cartesian plane (see Fig. 1). The first quadrant is used, with the following mathematical concepts in mind: x-axis and y-axis in shaping the Cartesian plane, composing and using sets of whole number co-ordinates; finding co-ordinate positions; symmetry and symmetrical transformations. Children move to select flowers at specified co-ordinates, the design aiming to scaffold movement along axes and grid lines, meaningful in relation to Cartesian co-ordinate interaction. Through collecting flowers consecutively, they can also create lines which form a shape (see Fig. 4).

Children in VR used one controller positioned on a belt wrapped around their waists, requiring them to step onto the specific co-ordinate point to select the linked flower. In this way, they ‘become’ the co-ordinates or, in other words, attain body syntonicity (Papert, 1980). Drawing on previous work and pilot testing, this design aimed to ensure a direct mapping between body position in the garden and the target co-ordinates. Thus, movement from one co-ordinate pair to another involved a change in body position that maps to movement along the grid axes/lines, leading to congruent and meaningful movement and positioning. Use of the controller without the belt resulted in children standing in one position and selecting several flowers by reaching with their arms, inhibiting ‘embodying’ of meaningful movement over the Cartesian plane and providing conflicting information on the 2D space (which displays the position of the controller, not the body per se).

Research has highlighted the importance of dynamically connecting multiple representations of the same concept (e.g. Ferrara, 2006; Ainsworth & Van Labeke, 2004; Price et al., 2010). The Cartesian Garden was designed to connect the 3D virtual space with its 2D visual counterpart synchronously and dynamically, allowing the two users to engage collaboratively. The grid, the position and colour of the flowers, as well as the presence of the co-ordinate labels or not, are in direct correspondence between the two representations. Changes that happen in one representation are dynamically depicted in the other (see Fig. 2), innovatively linking 2D visualisation to embodied action in 3D space (DeSutter & Stieff, 2017).

The 2D space shows changes in the position of the explorer, as well as the lines elicited when two flowers are connected in the drawing mode. Navigation in 3D virtual space draws on verbal communication between the navigator and the explorer. This requires crafting connections between the two worlds, using the child’s body as a point of reference and elaborating on resources that come from the different representations (i.e. mapping 2D representation to 3D space, and movement/position in the 3D world to its 2D representation). A sound and smilies are elicited to indicate the correct selection of a target flower in the virtual garden (see Fig. 3) and a red X appears when the selection is incorrect.

Three main tasks were created to enable children to experience and use the Cartesian plane that mapped onto those used in school. The findings focus on the following two tasks undertaken by all participants.

Find the Flower

This task involved the explorer finding a flower, located at a specific co-ordinate point, that was selected on the 2D representation by the navigator (child or teacher). The aim was for the explorer to walk along the x-axis to the specific x-co-ordinate, then up the y grid line to the specified y-co-ordinate.

Draw a Shape

In this task, the navigator created a shape on the 2D representation (not visible in the virtual garden) and provided sets of co-ordinates for the explorer to ‘create’ a shape by walking to and selecting target flowers, creating pathways (lines) in a ‘connect the flowers’ manner. The line trace between selected co-ordinates (flowers) was visible in the virtual garden and on the 2D representation (see Fig. 4). Discrepancies between the 2D-generated shape and the 3D-created shape were used for suggesting adjustments during the making of the shape.

During implementation, the desktop computer was aligned to the orientation of the virtual garden and the floor was marked using tape to show: the origin; the x and y axes; the distance between the flowers on the x-axis and y-axis (see Fig. 5). This was necessary for the navigator in aligning the 2D representation to their peer moving in space, and also for the researcher to help the child moving in VR.

Methodology

A qualitative study examined how the digital games and their specific designs shaped bodily and sensory forms of interaction, and explored implications of this for collaboration and meaning-making around mathematical concepts experienced in the Cartesian plane. On the basis of parental permission, twenty-one children (fourteen girls, seven boys) aged 8–9 years from a London primary school participated in an in situ, constructive dyad, interaction design (Als, Jensen & Skov, 2005) to promote children’s discussion, collaboration and interaction (paired by the teacher). All children had some experience with co-ordinates from the previous school year.

One pair of children at a time took part in sessions lasting approximately 45 minutes. Each session consisted of three parts: pre-interaction questions, interactive experience and post-interaction discussion. During interaction, one child took the role of navigator using the 2D representation, while the other, being the explorer, completed tasks in the virtual garden. Children exchanged roles between the two five-minute tasks described above: ‘Find the flower’ and ‘Draw a shape’. Both students in each pair did both tasks in both roles (navigator and explorer). Two researchers were present, one to facilitate the game play and conduct pre- and post-interaction discussions, and the other to monitor video-recording. Facilitation focused on guiding children’s interactions with the technology and towards the task elements.

Video-recordings captured children’s interaction in the physical space of the virtual garden, the desktop 2D representation and one another, while screen capture recorded the virtual world as seen through the headset. Discussions before the game were video-recorded, in order to gain insight into previous relevant mathematical knowledge and children’s experience with VR, as well as after the interaction to explore their perception of their experience and how body movement in space influenced the way they talked about mathematical ideas.

Qualitative video analysis used a multimodal analytical approach, one which emphasizes situated action – focusing on recognizing, analysing and theorizing different ways in which people make meaning – and the place of specific resources and context in this process (Radford, Edwards & Arzarello, 2009; Jewitt, 2013). It focuses on analysis of body posture and movement, gaze, gestures and talk in naturally occurring interaction and communication, in order to examine multimodal meaning-making, in line with an interactionist perspective which emphasizes the body as a public resource for thinking (Stevens, 2012).

A constant comparative method (Gibson & Brown, 2009) was used to open-code the data, and then systematically to identify themes related to the research aims. The ELAN video analysis tool was used, where video can be slowed or stepped through frame by frame, and multiple video sources can be analysed synchronously (Brugman & Russel, 2004). Three researchers examined moment-by-moment body interactions, including gesture, action, facial expression, body posture and talk. The analysis focused on what children did, their movements and talk, and how this shaped their engagement with one another and the mathematical ideas. Particular attention was given to how the body (e.g. in movement and in position) acted as a communication resource and a mechanism for making meaning, as well as the role this played in creating links between the 3D VR space and the 2D visual representation. Analysis noted breakdowns, identified by struggles around a situation where intervention was required, and breakthroughs, identified by changes in the way children discussed, collaborated and approached a problem or concept.

Findings and Discussion

The findings focus on how the digital designs and collaborative interaction shaped body movement and development of strategies to reach joint understanding in working with Cartesian co-ordinates. They draw attention to: a) how the body, as a tangible object, shaped the generation of meaning around position in space in relation to the affordances of the different representations of the learning environment; b) how children used the resources of the learning environment to develop a shared understanding about space across the different representations.

To familiarise the children with the environment and the collaborative design (i.e. the navigator describing the position of the target flower and using feedback on the screen), co-ordinate labels were initially shown adjacent to each flower in the VR space and the 2D representation (see Fig. 1). After familiarisation, these labels were removed, leaving the numbers only on the x and y axes, which significantly shaped children’s movement and attention to their position in space. In general, when flowers were labelled, children moved in a random rather than a systematic way, paying attention to the labels, but not the properties of the Cartesian grid in VR space.

When the labels were removed, all explorers used an orthogonal way of moving in the grid. However, when given a flower’s co-ordinates, while they started from (0,0), they then typically moved along the y-axis first and then along the x-axis, despite initial guidance from the facilitator to move first along the x-axis and then along the y-axis. This meant that their first attempts to collect flowers led them to different co-ordinates, e.g. (3,2) instead of (2,3). Collaborative interaction was instrumental in facilitating the development of alternative strategies to locate target flowers successfully. The combined sensory engagement and verbal exchanges served to highlight discrepancies in perspectives between the two viewpoints, and led to strategies for resolution that supported children in making mappings between 2D and 3D. Two key themes emerged from the analysis, which elaborate: a) how the combined use of, and moving between, the two realms (2D and 3D representations) influenced the development of body-centred meaning-making and communication across the tasks; b) how resolution strategies fostering integration of the two representations drew on key resources in the environment.
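The order mix-up described above can be illustrated with a short sketch. It assumes one possible reading of the observation: the explorer uses the first number of the pair for the first leg of the walk, whichever axis that leg runs along. The function name `walk` and its parameters are hypothetical and are not part of the Cartesian Garden software.

```python
def walk(first_axis, target):
    """Return the grid point reached when starting from the origin (0, 0).

    Assumption: the walker always uses the first number of `target` for
    the first leg and the second number for the second leg; `first_axis`
    names the axis that the first leg runs along.
    """
    first, second = target
    if first_axis == "x":
        # Convention: first number along the x-axis, second up the y grid line.
        return (first, second)
    if first_axis == "y":
        # First number walked along the y-axis instead, so the numbers swap.
        return (second, first)
    raise ValueError("first_axis must be 'x' or 'y'")


target = (2, 3)
print(walk("x", target))  # (2, 3): the target flower
print(walk("y", target))  # (3, 2): a different flower
```

Under this reading, the two walks coincide only when both numbers in the pair are equal, which may be one reason the convention of order matters for communication between navigator and explorer.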

Moving between Two Realms: Meaning Making with the Body

The Cartesian plane, being central to the learning environment, foregrounded the use of co-ordinates as a means to describe positions in space. However, if one of the children did not follow the convention (x followed by y), their communication was ineffective, since their peer ended up at a different co-ordinate point. Furthermore, the system feedback was position-based, showing the changed position of the explorer, rather than their movement in real time. As a result, children invariably transformed their verbal instructions from an initial ‘mathematical’ description of position (using co-ordinates) to more ‘spatial’ or ‘body-centred’ instructions, or step by step body-centred instructions (e.g. turn right and then take two steps forward). This allowed for a more controlled and effective mode of communication across the two spaces. In addition, it demonstrated their understanding of the mapping of mathematical (numerical) co-ordinates to locations in space through their body movement in the Cartesian plane, and the linking of this to the 2D representation. Here we provide examples that show how verbal instructions influenced movement in VR and the implications of this movement in negotiating spatial concepts as the children encountered the affordances of the different representations.

Representational Affordances Shape Communication

Combined verbal instructions and body responses exposed different ways that children conceptualised how to move in the two spaces. Discrepancy between assumed 2D and 3D ‘ways of moving’ led to critical moments in interaction across the tasks. Typically, directions conveyed by the navigator included instructions such as ‘go down’ or ‘go up’, similar to how one would move a mouse on a screen. However, for the explorer working on a horizontal plane, movement was forwards, backwards or sideways. Initially, the explorer often interpreted these directions literally in relation to their bodies in 3D space, leading to disparities in meaning and intended location in space. The example below is illustrative of this phenomenon across the different tasks (see Fig. 6):

Task: Find a flower.

[C31 starts moving toward the x-axis].

C12: “You are close! No, no, go up! Go up!”

C31: “What do you mean go up…?” [He jumps up (see Fig. 6a)].

C12: “Not jump! You are so close… Go up!”

[C31 starts walking toward the x-axis].

Later in the task Draw a shape

[C12 draws a square on the computer and guides C31].

[C12 uses her hands to represent the grid (see Fig. 6b)]

C12: “Go to three, one”

C31 [walks along the axis reaching a position]. “Forward?” [He moves in various directions while clicking the controller to collect flowers, hoping to collect the right one].

C12: [seems confused]: “Yes forward… no… Yes! Now go down”.

C31: “Down, you mean backwards?!”

C12: “No, you are going too far away! […] Now, go down a tiny bit”.

[C31 squats ‘down’ (see Fig. 6c)].

C12: “No, no, not like that! Backwards basically”

Children thus used their own bodies in relation to the representational space as a frame of reference, both to give and to interpret instructions. Across these episodes, the navigator described movement on the grid, in relation to moving a mouse on the screen (“go up”), whereas the child in the VR space attributed ‘up’ to moving his body upwards (jumping), rather than forwards, and ‘downwards’ as squatting (see Fig. 6c), rather than moving backwards. In the later episode, the navigator used her hands in an attempt to ‘embody’ the 2D representation, by imagining it with her hands on the horizontal plane. Yet, despite this, she still ‘saw’ and talked about the Cartesian plane as a vertical representation – using ‘up’ and ‘down’ (rather than ‘forwards’ and ‘backwards’).

Despite recognizing these differences in one encounter, the issue persisted in a follow-up instance, showing the strength of these conceptualisations, which suggests ways in which the different representations (technology affordances) shape the way children both talk about and conceptualise movement in the different spaces. However, it also highlights new opportunities for children – by engaging with both representational presentations – to integrate the different perspectives. These instances demonstrate how spatial concepts like ‘up’ and ‘down’ are in flux with respect to their meaning, and how they are shaped by the resources used each time. The children engaged in building mutual understanding about the relationship between ‘down’ and ‘backwards’, using motoric interaction as a resource for constructing a functional meaning of the concepts (a meaning that is effective and worked in the current setting).
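The relationship the children negotiated between screen words and floor movements can be written down as a small lookup, shown here as a sketch. It assumes an explorer who stands on the grid facing along the positive y-axis, so that ‘up’ on the screen corresponds to ‘forwards’ on the floor. The dictionaries and function names are hypothetical illustrations and are not taken from the study software.

```python
# Hypothetical vocabulary map: words used by the navigator at the screen,
# paired with floor movements for an explorer facing along the positive y-axis.
SCREEN_TO_FLOOR = {
    "up": "forwards",
    "down": "backwards",
    "left": "sideways left",
    "right": "sideways right",
}

# Grid displacement (dx, dy) that each screen word stands for.
SCREEN_TO_DELTA = {
    "up": (0, 1),
    "down": (0, -1),
    "left": (-1, 0),
    "right": (1, 0),
}


def translate(word):
    """Return the floor movement that matches a screen direction word."""
    return SCREEN_TO_FLOOR[word]


def move(position, word):
    """Return the new grid position after one unit in the named direction."""
    dx, dy = SCREEN_TO_DELTA[word]
    return (position[0] + dx, position[1] + dy)


print(translate("down"))     # backwards
print(move((3, 1), "up"))    # (3, 2)
```

In neither dictionary does a word map to a change in height, which is the literal reading the explorer gave to ‘up’ (jumping) and ‘down’ (squatting) in the episodes above.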

Integrating the Grid in Body-Centred Movement

One form of body/spatial-centred directions focused on the number of footsteps a child should make. The video analysis revealed how the navigator often used instructions that referred to the number of footsteps their peer should take to reach a position, mapping it to one number of the target co-ordinates on the 2D representation. For example:

[C31 guides C12 to the point (2,5) starting from (0,0)].

C31: “Go straight to five: five… on the y-axis”

[C12 walks forward to (0,5)].

C31: “Then take two steps!”

[C12 turns right 90 degrees, takes two steps and collects the first flower].

While this strategy was successful at times, it was not consistently so. Children’s pace sizes were different, so the number of steps taken did not always directly map to the spaces between co-ordinate numbers or the number of grid references between co-ordinates. When this happened, navigators then used elements of the grid (e.g. 5 on the y-axis, and then go to the next flower) as landmarks to correct the inaccuracies of body-centred movement. While this directed attention to the ‘points’ where the flowers were located, they were used as reference locations to facilitate body movement rather than eliciting and fostering the use of numerical co-ordinates.

Here, the body became a vehicle for communicating the discrepancy in mapping body movement (steps) to spatial referents (co-ordinates), leading to the use of other tangible grid referents (flowers). Although this enabled more accurate locating of target co-ordinates through body positioning, it did not develop into sustained use of co-ordinate numbers in joint communication. This highlights a potential tension between providing engaging features in a game-like context (here, the flowers), yet wanting to draw attention to the co-ordinates themselves. An alternative design might foster engagement with co-ordinates by, for example, requiring the naming of a co-ordinate pair for the respective flower to appear, or to gain colour, in the garden.
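The mismatch between counted footsteps and grid units described above can be made concrete with a little arithmetic. The following is a minimal sketch only: the function name, the grid spacing and the pace lengths are all hypothetical values chosen for illustration, not measurements from the study.

```python
def grid_units_covered(steps, pace_length_m, grid_unit_m):
    """Return how many grid units a walker covers along one axis.

    All three inputs are illustrative assumptions: the study reports
    that pace sizes differed, but gives no measured lengths.
    """
    return steps * pace_length_m / grid_unit_m


GRID_UNIT_M = 0.5  # assumed distance between neighbouring co-ordinates

# The instruction "take two steps" is meant to cover two grid units,
# but the distance covered depends on the child's pace length.
for pace_length_m in (0.4, 0.5, 0.6):  # assumed pace lengths in metres
    covered = grid_units_covered(2, pace_length_m, GRID_UNIT_M)
    print(f"pace {pace_length_m} m: about {covered:.1f} grid units")
```

Under these assumed values, only the child whose pace happens to equal the grid spacing lands on the intended co-ordinate, which is consistent with navigators falling back on flowers as landmarks.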

Walking a Shape: Patterns of Movement

While body-centred instructions were used predominantly when co-ordinate-based communication failed, they were also evident in ‘Drawing a shape’ (see Fig. 7) through the kinds of instructions provided. In the example below, C24 (the navigator) gives instructions for movement in the VR space that will ‘create’ a rectangle. His instructions are body-centred, following a pattern of continuous movement across several points, as opposed to specific ‘corner’ co-ordinate points. For example, “go all the way up and pick all the flowers you find”, rather than offering a sequence of specific co-ordinates, which in this instance starting at (0,0) would be (2,0), (2,5), (0,5), (0,0). This suggests that C24 perceived the shape on the screen as a continuous line beginning at one point, changing direction at specific points, until the shape is complete.

C24: [from (2,0)] “Go forwards one two three four five times and every time you pass a flower, pick it”.

[C23 moves forward parallel to the y-axis, with the x-axis behind her, counting the flowers she picks until five].

C24: “Then turn to your left, then go forwards and pick those two flowers”

[C23 turns 90 degrees left and picks the first flower].

C23: “And the yellow one?”

C24: “Yes. Turn around”

[C23 turns 90 degrees facing the y-axis].

C24: “Yes, like that. Oh, no no”

[C23 looks at the screen, then takes a step to the side towards the left (towards the y-axis) still facing the x-axis].

C24: “No no. No go back to five”.

[C23 takes two steps to her left away from the y-axis].

C24: “Oh my God, you are messing up!”

C23: “I can’t see”.

R: “That’s true” (they laugh).

C24: “Move right once”.

[C23 moves towards the y-axis and she ends up at y = 5].

C24: “Then move forwards. All the flowers that you pass, pick them up”

C23: “To zero?”

C24: “Yeah”.

C23: “OK”.

[C23 moves forward down the y-axis and she clicks on all the flowers. She ends up at the point marked [0] – the origin – and turns 90 degrees facing the end of the x-axis (with the y-axis on her left side)].

R: “What kind of shape did you draw?”

C23: “A rectangle”.

From this example, we can see that the way C24 conceptualised the task influenced his instructions, which maps to the act of drawing, where a pen, for instance, would take a continuous path from A to B to C to D. In this context, then, body-centred instructions were more meaningful for describing the movement required to make a shape (in effect, to draw a shape with the body) than providing a sequential set of co-ordinate pairs. Here, the order of the two co-ordinates is critical for ensuring lines are created appropriately, and situating them in a pattern of continuous movement (one that involves collecting all the flowers as you go along) makes sure that this happens. This illustrates how the act of drawing a shape in 2D shapes the way guidance is given to move in 3D space, meaningfully connecting interaction across the two worlds. Indeed, Thom and McGarvey (2015) suggest that providing opportunities for spontaneous and prompted drawing is important for exploration and conceptualization in geometry.
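The contrast between a list of corner co-ordinates and C24’s continuous, pen-like path can be sketched in a few lines of code. This is an illustration only: the function names are hypothetical, and the sketch assumes a flower sits at every whole-number grid point along the edges, which the transcript suggests but the source does not state outright.

```python
def points_between(start, end):
    """Grid points visited when walking an axis-parallel segment.

    The start point is excluded and the end point is included, so
    consecutive segments can be chained without repeating corners.
    """
    (x0, y0), (x1, y1) = start, end
    if x0 != x1 and y0 != y1:
        raise ValueError("segment must be parallel to an axis")
    dx = (x1 > x0) - (x1 < x0)  # -1, 0 or 1
    dy = (y1 > y0) - (y1 < y0)
    length = abs(x1 - x0) + abs(y1 - y0)
    return [(x0 + dx * i, y0 + dy * i) for i in range(1, length + 1)]


def continuous_path(start, corners):
    """Expand a corner sequence into every grid point passed on the way."""
    path = [start]
    for corner in corners:
        path.extend(points_between(path[-1], corner))
    return path


# Corner sequence for the rectangle, starting from the origin.
corners = [(2, 0), (2, 5), (0, 5), (0, 0)]
path = continuous_path((0, 0), corners)
print(path)
```

Four corner co-ordinates and one continuous walk describe the same rectangle here; the walk simply names every point passed, which is closer to “every time you pass a flower, pick it”.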

Resolution Strategies: Resources for Fostering Integration of Perspectives

Attempts to resolve communication issues revealed two key resources that children drew on to integrate better the perspectives of 2D positioning and the body orientation or movement of the explorer in 3D space, support their directions and establish communication practices which functioned effectively across the different spaces. Key characteristics of these resources are: (a) that they supported integration through transitions from one space to another (from 2D to physical space); (b) that prevalent properties common in the different spaces functioned as common referents. Integration of perspectives became a key mechanism for supporting spatial reasoning based on different representations and facilitated effective communication across the different spaces.

Transitions from 2D to the Physical Space

Usually as a result of being given directions from peers, children in the VR environment changed their body orientation as they moved around the space. Since feedback on the 2D representation showed the position of the explorer, but not their movement or orientation, the navigator could only see where their peer was at that point in time, not where they had been seconds or minutes before, nor in which direction they were facing. This had a significant impact on how instructions could be played out through actions. For example, if the explorer changed orientation without making this clear to the navigator, the direction they then moved in resulting from the next instruction was completely different from that intended by the navigator. As a result, the use of alternative strategies for communicating movement in space was evident from the data.
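The dependence of a body-centred instruction on the explorer’s orientation can be illustrated with a small sketch. The heading labels, function names and the example positions below are hypothetical and introduced only for illustration; this is not a description of how the Cartesian Garden software works.

```python
# Unit vectors on the grid for each heading, listed clockwise from +y.
HEADINGS = {"+y": (0, 1), "+x": (1, 0), "-y": (0, -1), "-x": (-1, 0)}
CLOCKWISE = ["+y", "+x", "-y", "-x"]


def turn(heading, direction):
    """Return the new heading after a quarter or half turn."""
    shift = {"right": 1, "left": -1, "around": 2}[direction]
    return CLOCKWISE[(CLOCKWISE.index(heading) + shift) % 4]


def move(position, heading, instruction, steps=1):
    """Apply a body-centred instruction and return the new grid position."""
    fx, fy = HEADINGS[heading]
    vectors = {
        "forwards": (fx, fy),
        "backwards": (-fx, -fy),
        "right": (fy, -fx),   # 90 degrees clockwise from forwards
        "left": (-fy, fx),    # 90 degrees anticlockwise from forwards
    }
    dx, dy = vectors[instruction]
    return (position[0] + dx * steps, position[1] + dy * steps)


# The navigator sees only the position (2, 3) and assumes the explorer
# still faces along the y-axis; the explorer has in fact turned right.
assumed = move((2, 3), "+y", "forwards", 2)
actual = move((2, 3), turn("+y", "right"), "forwards", 2)
print(assumed, actual)
```

The same word, ‘forwards’, produces two different grid positions because position-only feedback leaves the heading unknown to the navigator.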

One strategy involved step-by-step spatial movement instructions, providing the navigator with immediate feedback on the screen, feedback that reflected the real-time position of their peer. For example, when the navigator asked their peer to take a step right, they then checked their position on the screen and adjusted the next step instruction as needed. Another strategy was for the navigator to check both the screen as well as the physical positioning and orientation of their peer. Figure 8 shows C12 turning to look at her peer’s body orientation in the physical space, after looking at the screen to check where he was shown. From this, she was able to provide an instruction to “go up the other way”, followed by a gesture indicating direction, based on identifying the direction she wanted him to move on the screen, and then mapping this to his body orientation.

This perspective-taking required a combining of information from the 2D representation and their peer’s movement in the physical space, as well as from making use of the floor markings which aligned the physical to the VR space. Here, the body of the explorer worked as a tangible marker of position, orientation and movement. Perspective-taking that was triggered by the synchronous use of the two environments (visual 2D representation and tangible movement in VR space) facilitated their integration and involved a range of spatial concepts (body direction and orientation in the physical space; its representation on the 2D space; alignment of the 2D space with the physical space). While these concepts are not directly related to the use of co-ordinates, they provide important spatial thinking that is intrinsic to Cartesian co-ordinates.

Transcending Objects

Analysis of paired interaction across all sessions revealed a number of key properties in the environment that supported joint meaningful interaction or, in other words, ‘objects’ that were visible across tasks in all three spaces (2D, physical space and VR space) and, as such, they transcended all of them. In particular, these were the origin of the axes – the point (0, 0) – the x-axis and the y-axis, although in the physical space there were markers, but no numbers. These were easily recognisable objects resulting in unambiguous actions that enabled children to find the origin or points one to five units along the x and y axes.

Analysis of children’s movement, interaction and communication revealed ways in which these objects were used to establish joint meaning and completion of the tasks. For instance, when making a shape, C19 and C20 used (0,0) as a shared referent to identify each angle of the shape they were trying to re-create in the VR space. After identifying an angle, C19 (in the VR space) went back to the origin and C20 (in the physical space) provided the new set of co-ordinates displayed on the computer (in the 2D space). Thus, the two interpreted (0,0) as a point of connection among all three spaces, which facilitated dialogue and, in turn, task completion. In another context, C12 and C31 used the origin as a shared point to ‘restart’, after reaching a wrong set of co-ordinates, in order to find common ground to orient the body in the VR space and to provide the correct directions from outside.

We call these ‘transcending objects’ as they serve as landmarks and common referents among all three spaces. We argue that these transcending objects have an ‘embodied mathematical meaning’ or, in other words, an inherent mapping between the ‘object’ relations and meaningful movement patterns that can guide interaction with Cartesian co-ordinates (e.g. routes from one co-ordinate point to another) and which is common across the three environment spaces (2D, physical and VR). These objects also facilitated integration of perspectives by functioning as ‘a reset’ in communication, especially the origin, or by helping children break down the problem of finding a position by identifying common referents and landmarks in space close to the end-point of movement. Here, the resources children used to generate meaning and effective strategies involved not only the body, but also its relationship to particular objects in spaces that were identified through joint interaction.

General Discussion and Conclusion

Interviews with elementary teachers revealed challenges in children’s experience with the use of Cartesian co-ordinates. Given increasing evidence of the benefits of meaningful movement for geometry learning and spatial thinking (e.g. Hall & Nemirovsky, 2012; Shoval, 2011; Kaur, 2013; Ma, 2017; DeSutter & Stieff, 2017), and the potential of digital designs to shape and foster specific movements, this article has reported on a study with elementary children engaging with the Cartesian Garden, an immersive VR environment using HTC Vive, designed to support meaningful mathematical movement in a virtual (and physical) 3D space. The environment was also designed to foster collaborative engagement through a dynamically synchronised 2D (desktop computer) representation of the Cartesian grid, combining differently embodied experiences. In this design, the body becomes a ‘tangible’ resource for thinking, learning and joint activity, through bodily experience and body movement, position and orientation being made visible to collaborators.

Through a multimodal qualitative analysis of video data, three overarching findings emerged. Firstly, the analysis showed the importance of movement, as well as position, in learning about co-ordinates and how this needs better foregrounding in the design of learning environments. While the design of the VR environment centred around movement, the 2D representation provided position-based feedback, i.e. movement was represented as changes of position over time without showing a trace of previous positioning. By providing feedback on the result of action (position), but not the process (trajectory), the software design led to an emphasis on position.

However, the analysis demonstrates the importance of direction of movement and body orientation as part of the process of finding position (specific co-ordinates). In the 3D VR space, body orientation was critical in initiating direction of movement, as well as in following directions from peers, like ‘forwards’ or ‘backwards’, where the specific direction was dependent on body orientation at that moment in time. While the 2D representation of the Garden depicted the position of the child in VR at each moment, the path they took was not shown.

Consequently, peer feedback focused on the result of action and not on the process of reaching position. Position-based feedback fostered more step-by-step instructions, using the body as a frame of reference to provide more ‘spatial’ or ‘embodied’ descriptions of direction of movement in order to reach a specific position, typically disregarding the ‘x then y’ protocol. The design, therefore, did not foster concept-congruent actions (Segal, Tversky & Black, 2014), in terms of ‘x then y’ movement, suggesting the importance of design features that guide the use of this protocol.

Secondly, meaning-making relied on mechanisms that enabled the crafting of links between the two different representations. A key idea is that of ‘transcending objects’, which are common in both representations, are easily recognisable and have an unambiguous, embodied mathematical meaning – children knew how they needed to move to reach (0,0). The use of transcending objects was supported by established movement and communication practices, and was facilitated by a learning design, which involved the alignment between the three spaces (physical, virtual and 2D).

Thirdly, the synchronous 2D and 3D representations in mathematical experience fostered the important role of collaboration in developing children’s spatial awareness, specifically around position, movement and orientation. The combined sensory engagement and verbal exchanges demonstrate how the affordances of the system shaped the way children established specific movement and communication practices through interaction with the environment, which helped them create connections between the various spaces. For example, walking patterns focused on moving forwards or backwards, taking specific numbers of steps and turning to change direction, which – through interaction – supported the development of a shared ‘language’ for talking about movement in that space. Walking forward and turning to change direction was a common pattern of movement followed by the children and, over time, they successfully adopted the movement ‘metaphor’ (e.g. of ‘up’ and ‘down’ to refer to the direction of walking along the y-axis) while in the 3D virtual space.

These practices not only supported the connection between the two representations, but also bound together embodied forms of interaction in the VR space with the shared understandings and practices generated around these interactions, through the synchronous, combined use of the two representations. However, children’s interaction also highlighted challenges of the integrated representation design (e.g. Price et al., 2010; Price et al., 2015). While the design of synchronous dynamic changes across representations aimed to support this integration, the navigator often had to look at the physical 3D space to map orientation and real-time movement of the explorer to the 2D visual representation, suggesting the need for dynamic tracking and representation of movement – as opposed to position – from physical space. Nevertheless, the environment offered rich opportunities to engage in spatial reasoning, where the body and its movement became a meaning-making instrument and, at the same time, a tangible mathematical object – a public resource for creating a shared understanding of spatial concepts.

Notes

The study we report on was part of a larger study, in which more than one school was involved, and which engaged students with different digital experiences. The numbering of participants was recorded across all participation in schools. The participant numbers for this specific school span from C12 to C31.

References

Abrahamson, D., & Bakker, A. (2016). Making sense of movement in embodied design for mathematics learning. Cognitive Research: Principles and Implications, 1(1), (#33).

Abrahamson, D., & Sánchez-García, R. (2016). Learning is moving in new ways: The ecological dynamics of mathematics education. Journal of the Learning Sciences, 25(2), 203–239.

Abrahamson, D., & Trninic, D. (2011). Toward an embodied-interaction design framework for mathematical concepts. In P. Blikstein & P. Marshall (Eds), Proceedings of the 10th Annual Interaction Design and Children conference (pp. 1–10). Ann Arbor, MI: IDC.

Adachi, T., Goseki, M., Muratsu, K., Mizoguchi, H., Namatame, M., Sugimoto, M., Kusunoki, F., Yamaguchi, E., Inagaki, S., & Takeda, Y. (2013). Human SUGOROKU: Full-body interaction system for students to learn vegetation succession. In J. Hourcade (Ed.), Proceedings of the 12th International conference on Interaction design and children (pp. 364–367). New York, NY: ACM.

Ainsworth, S., & Van Labeke, N. (2004). Multiple forms of dynamic representation. Learning and Instruction, 14(3), 241–255.

Alibali, M., & Nathan, M. (2012). Embodiment in mathematics teaching and learning: Evidence from learners’ and teachers’ gestures. Journal of the Learning Sciences, 21(2), 247–286.

Alibali, M., Young, A., Crooks, N., Yeo, A., Wolfgram, M., Ledesma, I., Nathan, M., Church, R., & Knuth, E. (2013). Students learn more when their teacher has learned to gesture effectively. Gesture, 13(2), 210–233.

Als, B., Jensen, J., & Skov, M. (2005). Comparison of think-aloud and constructive interaction in usability testing with children. In Proceedings of the 4th annual interaction design and children conference (pp. 9–16). Boulder, CO: IDC.

Anderson, M. (2005). Embodied cognition: A field guide. Artificial Intelligence, 149(1), 91–130.

Antle, A., Bevans, A., Tanenbaum, J., Seaborn, K., & Wang, S. (2011). Futura: Design for collaborative learning and game play on a multi-touch digital tabletop. In Proceedings of the 5th Conference on tangible, embedded and embodied interaction (pp. 93–100). New York, NY: ACM.

Bailey, J., & Bailenson, J. (2017). Considering virtual reality in children’s lives. Journal of Children and Media, 11(1), 107–113.

Barsalou, L. (2008). Grounded cognition. Annual Review of Psychology, 59, 617–645.

Bruce, C., & Hawes, Z. (2015). The role of 2D and 3D mental rotation in mathematics for young children: What is it? Why does it matter? And what can we do about it? ZDM: The International Journal on Mathematics Education, 47(3), 331–343.

Brugman, H., & Russel, A. (2004). Annotating multi-media/multi-modal resources with ELAN. In M. Lino, M. Xavier, F. Ferreira, R. Costa, & R. Silva (Eds.), Proceedings of the 4th international conference on language resources and evaluation (pp. 2065–2068). Paris, France: ELRA.

Cress, U., Fischer, U., Moeller, K., Sauter, C., & Nuerk, H.-C. (2010). The use of a digital dance mat for training kindergarten children in a magnitude comparison task. In K. Gomez, L. Lyons, & J. Radinsky (Eds.), Proceedings of the 9th International conference of the learning sciences (Vol. 1, pp. 105–112). Chicago, IL: International Society of the Learning Sciences.

DeSutter, D., & Stieff, M. (2017). Teaching students to think spatially through embodied actions: Design principles for learning environments in science, technology, engineering, and mathematics. Cognitive Research: Principles and Implications, 2, (#22).

Duffy, S., Price, S., Volpe, G., Marshall, P., Berthouze, N., Cappagli, G., Cuturi, L., Baud Bovy, G., Trainor, D., & Gori, M. (2017). WeDRAW: Using multisensory serious games to explore concepts in primary mathematics. In G. Aldon & J. Trgalova (Eds.), Proceedings of the 13th International conference on Technology in Mathematics Teaching (pp. 467–470). ENS de Lyon: Lyon, France.

Elia, I., Gagatsis, A., & van den Heuvel-Panhuizen, M. (2014). The role of gestures in making connections between space and shape aspects and their verbal representations in the early years: Findings from a case study. Mathematics Education Research Journal, 26(4), 735–761.

Ferrara, F. (2006). Remembering and imagining: Moving back and forth between motion and its representation. In J. Novotná, H. Moraová, M. Krátká, & N. Stehlíková (Eds.), Proceedings of the 30th International Group for the Psychology of Mathematics Education Conference (Vol. 3, pp. 65–72). Prague, Czech Republic: PME.

Gerofsky, S. (2012). Democratizing ‘big ideas’ of mathematics through multimodality: Using gesture, movement, sound and narrative as non-algebraic modalities for learning about functions. International Journal for Mathematics in Education, 4, 145–150.

Gibson, W., & Brown, A. (2009). Working with qualitative data. London, UK: SAGE Publications.

Goldin-Meadow, S., Cook, S., & Mitchell, Z. (2009). Gesturing gives children new ideas about math. Psychological Science, 20(3), 267–272.

Goldin-Meadow, S., Nusbaum, H., Kelly, S., & Wagner, S. (2001). Explaining math: Gesturing lightens the load. Psychological Science, 12(6), 516–522.

Goodwin, C. (2000). Action and embodiment within situated human interaction. Journal of Pragmatics, 32(10), 1489–1522.

Greenwald, S., Kulik, A., Kunert, A., Beck, S., Fröhlich, B., Cobb, S., & Maes, P. (2017). Workshop in technology and applications for collaborative learning in virtual reality. In B. Smith, M. Borge, E. Mercier, & K. Lim (Eds.), Proceedings of the 12th international conference on computer-supported collaborative learning (Vol. 2, pp. 719–726). Philadelphia, PA: International Society of the Learning Sciences.

Hall, R., & Nemirovsky, R. (2012). Modalities of body engagement in mathematical activity and learning. Journal of the Learning Sciences, 21(2), 207–215.

Jakobsen, M., & Hornbæk, K. (2014). Up close and personal: Collaborative work on a high-resolution multi-touch wall-display. ACM Transactions on Computer–Human Interaction, 21(2), (#11).

Jewitt, C. (2013). Multimodal methods for researching digital technologies. In S. Price, C. Jewitt, et al. (Eds.), The SAGE handbook of digital technology research (pp. 250–266). London, UK: SAGE.

Johnson-Glenberg, M. (2018). Immersive VR and education: Embodied design principles that include gesture and hand controls. Frontiers in Robotics and AI, 5, (#81).

Jones, K., & Tzekaki, M. (2016). Research on the teaching and learning of geometry. In A. Gutiérrez, G. Leder, & P. Boero (Eds.), The second handbook of research on the psychology of mathematics education (pp. 109–149). Rotterdam, The Netherlands: Sense Publishers.

Kaur, H. (2013). Children’s dynamic thinking in angle comparison tasks. In A. Lindmeier & A. Heinze (Eds.), Proceedings of the 37th International Group of the Psychology of Mathematics Education conference (Vol. 3, pp. 145–152). Kiel, Germany: PME.

Kim, M., Roth, W.-M., & Thom, J. (2011). Children’s gestures and the embodied knowledge of geometry. International Journal of Science and Mathematics Education, 9(1), 207–238.

Kovacević, N. (2017). Spatial reasoning in mathematics. In Z. Kolar-Begović, R. Kolar-Šuper, & L. Jukić Matić (Eds.), Mathematics education as a science and a profession (pp. 45–65). Osijek, Croatia: Element.

Lakoff, G., & Johnson, M. (1980). Metaphors we live by. Chicago, IL: University of Chicago Press.

Lakoff, G., & Núñez, R. (2000). Where mathematics comes from: How the embodied mind brings mathematics into being. New York, NY: Basic Books.

Ma, J. (2017). Multi-party, whole-body interactions in mathematical activity. Cognition and Instruction, 35(2), 141–164.

Malinverni, L., & Pares, N. (2015). Learning of abstract concepts through full-body interaction: A systematic review. Journal of Educational Technology & Society, 17(4), 100–117.

Mix, K., & Battista, M. (Eds.). (2018). Visualizing mathematics: Research in mathematics education. Cham, Switzerland: Springer.

Nemirovsky, R., Rasmussen, C., Sweeney, G., & Wawro, M. (2012). When the classroom floor becomes the complex plane: Addition and multiplication as ways of bodily navigation. Journal of the Learning Sciences, 21(2), 287–323.

NRC. (2006). Learning to think spatially. Washington, DC: National Academies Press.

Papert, S. (1980). Mindstorms: Children, computers, and powerful ideas. New York, NY: Basic Books.

Price, S., Jewitt, C., & Sakr, M. (2015). Exploring whole-body interaction and design for museums. Interacting with Computers, 28(5), 569–583.

Price, S., Sheridan, J., & Pontual Falcão, T. (2010). Action and representation in tangible systems: Implications for design of learning interactions. In Proceedings of the 4th International conference on tangible, embedded and embodied interaction (pp. 145–152). Cambridge, MA: ACM.

Radford, L., Edwards, L., & Arzarello, F. (2009). Beyond words. Educational Studies in Mathematics, 70(1), 91–95.

Segal, A., Tversky, B., & Black, J. (2014). Conceptually congruent actions can promote thought. Journal of Applied Research in Memory and Cognition, 3(3), 124–130.

Shoval, E. (2011). Using mindful movement in cooperative learning while learning about angles. Instructional Science, 39(4), 453–466.

Sinclair, N., & Bruce, C. (2015). New opportunities in geometry education at the primary school. ZDM: The International Journal on Mathematics Education, 47(3), 319–329.

Smith, C., King, B., & Hoyte, J. (2014). Learning angles through movement: Critical actions for developing understanding in an embodied activity. The Journal of Mathematical Behavior, 36, 95–108.

Smith, L., & Gasser, M. (2005). The development of embodied cognition: Six lessons from babies. Artificial Life, 11(1–2), 13–30.

Somerville, S., & Bryant, P. (1985). Young children’s use of spatial coordinates. Child Development, 56(3), 604–613.

Stevens, R. (2012). The missing bodies of mathematical thinking and learning have been found. Journal of the Learning Sciences, 21(2), 337–346.

Tan, L., & Chow, K. (2018). An embodied approach to designing meaningful experiences with ambient media. Multimodal Technologies and Interaction, 2(2), (#13).

Thom, J., & McGarvey, L. (2015). The act and artifact of drawing(s): Observing geometric thinking with, in, and through children’s drawings. ZDM: The International Journal on Mathematics Education, 47(3), 465–481.

Uttal, D., Meadow, N., Tipton, E., Hand, L., Alden, A., Warren, C., & Newcombe, N. (2013). The malleability of spatial skills: A meta-analysis of training studies. Psychological Bulletin, 139(2), 352–402.

Walkington, C., Srisurichan, R., Nathan, M., Williams, C., & Alibali, M. (2012). Grounding mathematical justifications in concrete embodied experience: The link between action and cognition. Paper presented at the annual conference of the AERA. Vancouver, BC.

Wilson, M. (2002). Six views of embodied cognition. Psychonomic Bulletin and Review, 9(4), 625–636.

Zuckerman, O., & Gal-Oz, A. (2013). To TUI or not to TUI: Evaluating performance and preference in tangible vs graphical user interfaces. International Journal of Human–Computer Studies, 71(7), 803–820.

Acknowledgements

This research was part of the weDRAW project, which received funding from the European Union’s Horizon 2020 Research and Innovation Programme, Grant Agreement No. 732391. The digital environment (Cartesian Garden) discussed here was developed by Mereille Pruner, Stéphane Laffargue, Ignition Factory. We would like to thank the students and teachers of the UK schools who participated in the study.

Ethics declarations

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Price, S., Yiannoutsou, N. & Vezzoli, Y. Making the Body Tangible: Elementary Geometry Learning through VR. Digit Exp Math Educ 6, 213–232 (2020). https://doi.org/10.1007/s40751-020-00071-7

DOI: https://doi.org/10.1007/s40751-020-00071-7