Abstract

Objectives

This review will describe how human exercise performance at the highest level is exquisitely orchestrated by a set of responses by all body systems related to the evolutionary adaptations that have taken place over a long history. The review will also describe how many adaptations or features are co-opted (exaptations) for use in different ways and have utility other than for selective advantage.

Methods

A review of the literature using relevant search engines and the reference lists of key published articles, with the terms performance, limitations, regulation and trade-offs as related to exercise, indicates that there are at least three areas which could be considered key in understanding the evolutionary basis of human exercise performance.

Results

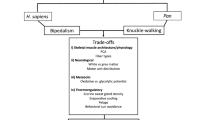

First, there is a basic assumption that exaptations have limitations or capacities which cannot be exceeded which in turn will limit our physical performance. Second, it is thought that some biological systems and tissues have additional capacity which is rarely fully accessed by the organism; referred to as a safety factor. Third, there are biological trade-offs which occur when there is an increase in one trait or characteristic traded for a decrease in another.

Conclusions

Adaptations have resulted in safety factors for body systems and tissues with trade-offs that are most advantageous for human performance for a specific environment.

Introduction

Human physical performance at the highest level requires an exquisitely orchestrated set of responses by all body systems. However, we tend to view physical performance as an outcome and seldom consider the myriad of intricate actions and reactions that need to be completed in a specific order for the performance to be successful, particularly as it relates to high level sporting success. To this end, if we consider the concept of adaptation as it relates to particular features of an organism, whereby there is seemingly a natural fit with the environment, we can mistakenly assume that features were intended to be purposeful and provide a distinct advantage for the organism’s survival. However, in many cases features are co-opted and made use of in other ways.

Evolutionary biologists Gould & Lewontin (1979), in their classic paper, highlighted the misleading assumption that any structure is made for the best purpose by pointing out that in architecture the common structure known as a spandrel is simply a by-product of the necessary construction and has no particular use of its own. This is illustrated in Fig. 1, where the tapering triangular spaces formed between two rounded arches might provide an artist the opportunity to improve the aesthetics, but as a structure the spandrel has no intended use. Therefore, spandrels do not simply exist to house either artwork or ornaments, such as the impression of the sun in Fig. 1; rather, the architecture itself has created space, which has subsequently been used.

According to Gould & Lewontin (1979), spandrels do not exist to house artwork; they are a by-product of the necessary architecture (adjoining arches), which is then made use of

In human biology the co-opted features are termed exaptations (Gould & Vrba, 1982), and as described by Tattersall (2012, p. 44), “you can’t use a structure until you have it. As a result, most of our so-called “adaptations” actually start life as exaptations: features that are acquired through random changes in genetic codes, to be co-opted only later for specific uses”. Although it is not intuitively apparent how exaptations might influence sporting performance, there are useful examples to consider. For instance, the gripping forces of the hand muscles and the capacity for tolerating higher joint stress in Homo could have evolved in relation to toolmaking and use (Linde-Medina, 2011). Therefore, this adaptation for tool making and use could be an exaptation for manipulating a tennis racket or baseball bat even though the hand did not evolve to use these specific implements. How these exaptations might influence selection for expert performance is the subject of talent identification programs across almost all areas of competitive sport (Johnston et al., 2018).

It is also important to consider that all exaptations have natural limiting capacities. By extension, body systems and their associated organs also have limitations which cannot be exceeded, and in turn will place a limit on our physical performance. However, this view of biology ignores the reality that biological systems have limitations which are seldom reached, at least in healthy individuals with no apparent underlying pathology. In essence, unless there is some external influence, force or a pharmacological intervention, biological limitations are seldom reached or exceeded, since exceeding these limitations without some control mechanism will likely result, for example, in skeletal muscle rigor, myocardial ischemia, cerebral hypoxia, precipitous fall in blood pressure, heat stroke, and glucopaenic brain damage (Fitts, 1994; Noakes, 2000). It is also well recognised that biological systems and tissues will have additional capacity which is rarely fully accessed by the organism; this additional capacity is typically referred to as a safety factor. For instance, limb bones have a capacity to withstand greater loads than usually experienced in a range of situations (Blob et al., 2014), including sporting performances.

Integral to the existence of safety factors is the concept of trade-off which typically occurs when there is an increase in the value of one trait or characteristic, but in doing so means there will be a need for a decrease in the value of another trait. A key aspect of trade-offs is the central role they play in the life history of an organism (Stearns, 1989). Essentially, life history is related to the allocation of limited resources for different physiological functions (Leonard, 2012). Therefore, trade-offs exist so that limited resources, available space and energy are allocated as economically as possible and represent a compromise between performance characteristics since humans evolved to do many things. On this basis, a trade-off will also need to account for the maintenance of the required safety factor. A popular illustrative example is the trade-off between speed and stamina where the best sprinters do not make the best endurance runners or vice-versa (Garland, 2014). By necessity, this trade-off will alter skeletal muscle fibre characteristics where a redistribution of biochemical and structural resources will be maximised to meet the energy and performance requirements either for speed or for stamina (Essén et al., 1975; Maughan et al., 1983); where differences in muscle structure are needed to provide power and endurance, respectively. This trade-off will of course be governed by genetic differences and phenotype plasticity.

The aim of this review is to outline how human performance is influenced by body systems that have capacities which are seldom breached and that safety factors are an integral part of the biology of human exercise performance. In addition, the review will explore biological trade-offs and how these might influence capabilities and exercise performance.

Human Performance, Limitation and Regulation

The question as to what extent human physical performance is determined by physiological limitations is difficult to address because it requires an investigation as to whether there is a matching of the functional needs of the organism with its structure at various levels. Matching of structure and function in biology has a long history with the central tenet being that structure is regulated to satisfy, but not exceed the functional needs of the system, a relationship known as symmorphosis (Taylor & Weibel, 1981). For example, when comparing the respiratory system structures of different sized animals for their maximal oxygen consumptions (VO2max), it appeared that at VO2max the oxygen flow was limited by the various compartments of the respiratory system (air, blood, mitochondria) (Taylor & Weibel, 1981; Weibel et al., 1981). These authors concluded that there were no individual structures in the respiratory system that have capacity far exceeding demand so that animals do not build structures in excess of what is required. Subsequently, when matching structure with functional capacity with respect to the internal compartments of respiration (mitochondrial volume, capillary number and length), the diffusing capacity of the alveoli can theoretically provide more oxygen than can be delivered to and utilized by tissues (Weibel et al., 1991). However, this apparent surplus-to-requirements ratio of the lungs might be due to other functional requirements not measured in the experiments (e.g. immune function, speech, pH balance). Nevertheless, these studies did show that a physiological limitation exists since there was some matching of structure and functional capacity. Since these early studies which sought to understand whether there exists physiological limitation, the concept of symmorphosis has not been substantially advanced since there is difficulty measuring the components of a biological system across all levels and components of that system. 
More specifically, however, the question is whether any individual component of a physiological system is optimized so that its capacity exceeds that of other components.

The basis of physiological limitations in human performance is a necessary construct as it underpins our understanding of the capabilities that can be manipulated through training. In addition, a number of factors have been proposed to limit exercise performance, each typically referred to as the cardinal ‘exercise stopper’, including muscle acidification, glycogen depletion, respiratory muscle fatigue (Gandevia, 2001), perception of effort and unpleasant muscle pain (Staiano et al., 2018). A prime example used to illustrate a physiological limit of human endurance is that VO2max measured in elite athletes is limited in large part by cardiac compliance (Levine, 2008). However, this understanding is far from settled as VO2max appears to be dependent on factors other than oxygen delivery (Beltrami et al., 2012).

The most common physiological limitation that has been a central tenet of human performance is the achievable maximal heart rate (HRmax) during exercise, which is frequently estimated using the equation HRmax = 220 − age (± 12 beats/min) (Brooks et al., 2005). Setting age aside, this equation stipulates that the HRmax of any person undertaking exercise will be 220 beats per minute regardless of training status, although untrained individuals are unlikely to reach this maximum. Notably, there is no published record of how this equation was derived, although Fox & Naughton (1972) suggested that it was derived from studies of North American and European males under varying conditions and with differing criteria for what was considered acceptably close to “maximum” (p. 102).
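
The age-based estimate described above can be written out as a short calculation. The following is a minimal sketch only; the function name `estimate_hr_max` and the treatment of the ± 12 beats/min figure as a symmetric band around the estimate are assumptions made for illustration, not part of the cited sources.

```python
def estimate_hr_max(age_years, spread_bpm=12):
    """Return (low, estimate, high) in beats/min for the 220 - age rule of thumb.

    spread_bpm is the +/- 12 beats/min quoted alongside the equation; treating it
    as a symmetric band around the estimate is an assumption of this sketch.
    """
    if age_years < 0:
        raise ValueError("age must be non-negative")
    estimate = 220 - age_years
    return estimate - spread_bpm, estimate, estimate + spread_bpm


if __name__ == "__main__":
    for age in (20, 40, 60):
        low, est, high = estimate_hr_max(age)
        print(f"age {age}: {est} beats/min (band {low} to {high})")
```

Even on its own terms the band spans 24 beats/min for a given age, which gives some sense of why a single age-based figure is a weak surrogate for an individual's cardiovascular ceiling.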

It appears that since its early suggestion this equation has been used almost without question as being representative of a maximal physiological limit for heart rate. However, it is now thought that this mathematical representation of a physiological limitation is flawed as there is so much interindividual variation generally, but particularly in children or adolescents (Verschuren et al., 2011). Regardless of mathematical models, HRmax is fundamentally used as a measure of a cardiovascular ceiling. Since the heart cannot beat any faster than the cardiac muscle can contract and relax, it would be incorrect to suggest that the heart has no limit. However, using HRmax as a surrogate for establishing a limit to human performance is also potentially flawed since it forms only one component of cardiac output and neglects the coordinated effort of multiple systems needed to achieve the particular performance.

The maximum heart rate equation is an example of the model of physiological limitation in human performance. Conversely, there is a competing model which posits that physiological regulation is potentially more explanatory with respect to human exercise performance (St Clair Gibson & Noakes, 2004). Originally proposed by Ulmer (1996), a system for the regulation of muscular metabolic rate by psychophysiological feedback would allow humans to optimally adjust their level of performance during heavy exercise. For example, when either cycling (Kay et al., 2001) or running (Marino et al., 2004) at high intensity, individuals adjust their skeletal muscle recruitment strategy to enable the successful completion of the exercise bout.

These two models, limitation vs. regulation, can be illustrated using basic engineering principles. For instance, a bridge that has collapsed is an example of a system that has exceeded its limiting condition (limitation or catastrophe), whereas a bridge that remains intact indicates that the system has not yet exceeded its limiting condition but will fail the instant the limiting condition is exceeded. In contrast, the structure of a bridge is regulated by elements such as down cables, hydraulic buffers, aerodynamics and resonance capacity (Billah & Scanlan, 1991). The bridge and the additional structural elements respond to what is termed a live load, which is a moment-by-moment change in conditions caused by vehicles, wind, earthquake and so on (Nowak, 1993). Therefore, until the system actually fails, the factors which precipitate its failure are essentially unknown. Nevertheless, our observations lead us to conclude that since skyscrapers, bridges and the like rarely collapse, engineers have either made some assumptions about what might cause failure, or they design and build structures which consider all the possibilities which might lead to system failure. As such, engineers base their calculations of limitations on what are termed safety factors (Butt & Cheatham, 1995). This is a fundamental concept that is also related to biological “design” and the reserve capacities which are inherent characteristics of organs and related systems (Diamond, 1991; Toloza et al., 1991).

Safety Factors

A classic paper by Meltzer (1907) systematically described what was thought to be the maximum safety factor for any particular organ and its associated system, but importantly noted that it was “self-evident that nature is economical and wastes neither material or energy” (p. 484). It was also noted that “organs and complex tissues…are built on a plan of great luxury. Some organs possess at least twice as much tissue as even a maximum of normal activity would require. In other organs, especially in those with internal secretion, the margin of safety amounts sometimes to ten or fifteen times the amount of the actual need” (p. 488–489). However, what is not particularly easily discerned is how a specific safety factor might be determined. Since a safety factor is related to the inherent capacity of a system beyond the expected load that system is likely to encounter, the safety factor (SF) can be determined by dividing the required capacity (C) by the expected maximum load (L) so that C/L = SF (Eq. 1).

A typical example for determining a safety factor is the passenger elevator, which according to engineering principles normally has SF = 12. This SF indicates that the cables attached to the elevator have a capacity 12 times that of the expected load of the elevator under normal operation. The reason for the excessive capacity or large reserve is that it provides great confidence that the structure is not likely to fail in situations where failure would potentially result in a significant penalty.
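
The relationship SF = C/L and the elevator example can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the function names and the 1000 kg rated load are hypothetical values chosen for the arithmetic, and only the ratio of 12 comes from the text.

```python
def safety_factor(capacity, load):
    """Safety factor SF = C / L, where L is the expected maximum load."""
    if load <= 0:
        raise ValueError("load must be positive")
    return capacity / load


def required_capacity(load, target_sf):
    """Capacity needed to meet a target safety factor for a given load."""
    if load <= 0 or target_sf <= 0:
        raise ValueError("load and target_sf must be positive")
    return load * target_sf


if __name__ == "__main__":
    rated_load_kg = 1000  # hypothetical elevator load, for illustration only
    cable_capacity_kg = required_capacity(rated_load_kg, target_sf=12)
    print(cable_capacity_kg)                                 # capacity implied by SF = 12
    print(safety_factor(cable_capacity_kg, rated_load_kg))   # recovers the SF
```

The same ratio applies whatever the units, provided capacity and load are expressed in the same ones.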

The relevance of this modelling in human biology is not readily appreciated, although it can be observed at various levels of organisation. In biological terms it would be of immense practical relevance to be able to delineate the safety factors which might limit human physical performance, since we assume that pinnacle performance is related to attaining, or at least avoiding, some physiological limit. To this end, biological safety factors can be dichotomised and described at both the macro and micro level of organisation. However, with respect to any comparison of safety factors in engineering versus biology, there is a definitive caveat to consider. Engineers have the luxury of designing structures and systems from scratch, whereas biology must make use of existing materials. This means that any biological system must account for material being used or co-opted for multiple functions. For example, skin tissue has multiple functions: a semipermeable barrier, thermoregulation, osmoregulation and sensation (Lindstedt & Jones, 1987). As a consequence of having to ‘cobble’ together materials that are already available, biological systems are never truly optimal; they are simply built ‘reasonably’ so as to satisfy the appropriate safety factor (Garland, 1988).

Macro Level Safety Factors

A prime example of a safety factor at a macro level of organisation is that any one individual can donate a kidney and still retain normal renal function. This observation indicates that the safety factor for renal function is at least two. It has also been shown that gas exchange of the lungs clearly has a large safety factor, as lobectomy has little effect on VO2max six months post resection, and pneumonectomy (removal of entire lung) results in only a 20% not a 50% decrease in VO2max (Bolliger et al., 1996). Given that perceived exertion during exercise seems to remain unchanged for either lobectomy or pneumonectomy, pulmonary functional reserve is significant. Notably, minimal change in exercise capacity following the removal of lobes also indicates that required ventilation with only half of the lung tissue is adequate.

In contrast, the safety factors of bone seem more complex and appear less uniform across species. As shown in Table 1, compressive resistance forces of bone indicate that safety factors can vary between 1.0 and 3.2 in terrestrial animals, including humans.

Importantly, the safety factor cannot be viewed in isolation for any specific bone. For example, intervertebral discs, ligaments and surface area will contribute to the overall compressive capacity (Alexander, 1981). Notably, the strength of bone material does not appear to be similar between small and large animals, where large animals might have a much lower safety factor than small animals (Biewener, 1982). It also appears that the material strength of bone is much more dependent on how animals develop constant peak ground forces, where peak force decreases as body size increases (Cavagna et al., 1977). Conversely, small animals have a relatively higher safety factor for bone than do larger animals. This indicates that bone tissue is modeled by factors other than the constant load generated under normal locomotion, and instead responds to the higher stresses that result from accelerations and decelerations that are typical of smaller animals (Biewener, 1982). This would be a key adaptation which would increase the chance of survival under predation; quick escapes and darting runs are a hallmark of smaller animals when preyed upon by larger, slower animals. The example of the safety factor of bone used here highlights the fact that in biological terms safety factors are likely to be very complex and directly related to the specific interaction between a given species and its environment.

There are two further observations with respect to the safety factors of bone tissue which relate to physical performance. First, safety factors are occasionally exceeded such that bones do fracture. Second, some bones are poorly adapted for resisting fracture (e.g. auditory ossicles, femoral neck) whereas, some bones are highly resistant to fracture (e.g. skull) (Currey, 2003). Notably, long bones are subject to, and respond to external loads, resulting in safety factors which resist fracture in the direction of the external forces. In contrast, with flat bones of the skull, it appears that direct application of external loads is not necessary to add bone tissue and establish safety factors to resist fracture. By comparing the bone thicknesses of the tibia and the skull in miniature swine and armadillo in pen restricted versus those allowed to exercise, the development of tibial bone mass and cranial vault thickness was greater in the exercise group (Lieberman, 1996). These findings indicate that along with normal function (mastication, chewing), cranial vault bones respond to loading from exercise without the apparent need for direct force. Therefore, it appears that bones of the skull respond to systemic rather than localized loading and that adequate bone strength is achieved in flat bones through mechanical loading of long bones. This seems a reasonable assumption since failure of the cranial vault would be extremely costly. This relationship seems to also hold true for humans, although the cranial vault thickness (bregma and parietal eminence) is possibly associated with the required level of subsistence, or more precisely when comparing post-industrial and pre-industrial farming populations (Lieberman, 1996).

The salient point to note with respect to bone is that the limiting point is fracture, and that activities of daily living, which include exercise do not normally include fracture, at least in healthy individuals. As a consequence, the safety factors reflect the resistance to fracture so that the day-to-day variations in loadings are a result of damage inflicted by fatiguing activity and eventual remodeling. Conversely, the direction and compressive loading experienced at fracture must be different to loading which stimulates the safety factor of individual bones. Although a putative mechanism which determines the safety factor of biological tissue has not been identified, it has been noted that low safety factors result where loads are predictable (long bones) but are generally higher where loads are highly unpredictable (skull) and the penalty for failure is potentially very high (Alexander, 1981).

Micro Level Safety Factors

In considering how a biological safety factor is derived, variables such as availability of materials, deterioration and replacement costs are critical. As a general rule, the higher the cost of materials the lower the safety factor tends to be. This economic model favours matching capacity to demand since this would conserve energy and resources to be available directly or indirectly for reproductive opportunity.

To illustrate this, let us consider one of the earliest descriptions relating pancreatic enzyme outputs and malabsorption. In a comparison of patients with pancreatitis and healthy subjects, malabsorption did not occur until enzyme output dropped to 10% or less of the normal level, demonstrating the functional reserve capacity of the pancreas (DiMagno et al., 1973). The authors suggested that 90% of the pancreas will need to be destroyed before malabsorption occurs. In a study that reported 75% pancreatectomy, no significant malabsorption was reported provided that additional exogenous insulin was administered (Kalser et al., 1968). These studies indicate that the pancreas has an enormous reserve for enzyme secretion with a safety factor of about 10. In contrast to other organs such as the kidney or lungs where there are two, natural selection has conferred one pancreas but in doing so has endowed the tissue with enhanced capacity to do its work. From a health perspective, the large safety factor makes this observation particularly relevant given the enormous and unrelenting work the pancreas undertakes as opposed to what it would take to damage the pancreas and develop Type 2 diabetes mellitus. Biological capacity has a cost because the resources and space to produce economy are not limitless. An excessive amount of material for one component of a biological system will reduce the resources available for another. However, whether having two kidneys or two lungs outweighs the need for only one pancreas with an enhanced cellular capacity is a difficult proposition to prove given that 7-year survival following pancreatectomy is 75.9% and 30.9% for benign and malignant tumors, respectively (Zakaria et al., 2016).
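
The step from "function is preserved until output falls to 10% of normal" to "a safety factor of about 10" can be made explicit. The sketch below assumes, as a simplification, that normal output stands for capacity and that the smallest output still sustaining function stands for the load; the function name is hypothetical.

```python
def safety_factor_from_threshold(min_fraction):
    """Safety factor implied by the smallest fraction of normal capacity
    at which function is still maintained.

    Assumption of this sketch: normal capacity is taken as C and the
    threshold output as L, so SF = C / L = 1 / min_fraction.
    """
    if not 0 < min_fraction <= 1:
        raise ValueError("min_fraction must be in the interval (0, 1]")
    return 1.0 / min_fraction


if __name__ == "__main__":
    # Pancreas: malabsorption only once enzyme output is ~10% of normal.
    print(safety_factor_from_threshold(0.10))
    # Kidney: normal function retained with one of two kidneys (50%),
    # so the implied safety factor is at least this value.
    print(safety_factor_from_threshold(0.50))
```

Because the kidney figure comes from a threshold that was tolerated rather than one at which function failed, it gives only a lower bound, whereas the pancreatic figure approximates the point of failure itself.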

Acquiring, consuming and utilizing energy is intimately connected to our capacity to perform physically and mentally. Therefore, an area where safety factors have been well investigated is the regulatory adaptation of the intestine and the relationship with energy intake and consumption. For example, the intestinal brush border and villi with the folding of the intestine result in an enormous surface area between the intestine and the substances passing through it. This enormous surface area has an absorptive capacity far in excess of the daily intake so that several kilograms of carbohydrates, 500 g of fat and 700 g of protein with more than 20 L of water per day can be processed (Guyton & Hall, 2006). This maximal processing capacity as a product of the surface area available for absorption is in contrast to the average energy intake (load) which is ~ 1.2 kg per day (National Health and Medical Research Council, 2013). This adaptation for large capacity allows us to utilise and store fuel at a rate that would enhance our prospects of survival. In fact, our capacity to store fat far exceeds that of other primates and represents a large safety factor with respect to energy storage (Swain-Lenz et al., 2019). However, this capacity needs to be balanced against recent data showing that sustained metabolic scope for endurance athletes is likely to have an alimentary supply limit of ~ 2.5 x basal metabolic rate (Thurber et al., 2019). This suggests that even with a large capacity for energy intake, there is a ceiling for metabolic supply after which expenditure requires consuming energy reserves. Similar to other animals, we will consume enough and store what is possible if we anticipate low supply. This point is important as it highlights that we are adapted for energy storage and abundance (Guyton & Hall, 2006) (p. 961–962). Unfortunately, our understanding for the enormous capacity for intestinal absorption does not come from human studies but from animal models. 
For example, when mice living in a normal ambient temperature of 22 °C were transferred to a less optimal temperature of 6 °C for 28 days, the most immediate response observed within 24 h of cold exposure was a 68% increase in food intake, suggesting that the intestinal capacity of the mice is at least 2.5 times the normal load (Toloza et al., 1991). However, within 4 days of cold exposure, the intestine hypertrophied by 18% and intestinal glucose uptake increased from 6 to 19 mmol/day. Since the additional uptake encroached upon the safety factor, leaving virtually no reserve for nutrient uptake, the mice established a new capacity of 24 mmol/day uptake; an increase of 25%. Notably, complementary organs such as the liver, kidneys and spleen also hypertrophied so that higher metabolic and absorption rates were also met. This provides evidence for symmorphosis in this particular system, whereby no one part of the system has a capacity that far exceeds the capacity of other parts.

However, it is not just the additional capacity that is important but its reversal which has been shown when lactating female mice no longer required the additional nutrients (Hammond & Diamond, 1992). Thus, what can be concluded is that biological tissue is energetically costly, so atrophy ensues if it is not needed. This fits with the fundamental principle that nature does not waste material or energy but aims to preserve a safety factor which is economical.

Skeletal Muscle Safety Factors

The examples dealing with complementary organs such as the liver, lungs, intestine and pancreas simply highlight the way in which these systems acutely adapt and reflect the need for nourishment, energy and the storage of fuel under the specific conditions of temperature and reproduction. However, a system that is critical to human performance and our ability to produce and sustain force is the musculoskeletal system.

Skeletal muscle is a complex tissue intimately connected with the central nervous system (CNS), relying on chemical differences and the generation of electrical impulses. A key component on which performance relies is neuromuscular transmission during maximal effort. One intriguing adaptation is that the neurotransmitter released at the motor nerve terminal by single impulses far exceeds the threshold for excitation of the muscle fibre. This reflects a significant reserve at this point of the transmission. However, force production results from a series of events normally precipitated by the CNS and ending at the actual muscle fibre. As such, there are likely safety factors for tissues along this continuum.

Although the skeletal muscle safety factor can be ascertained and defined in a number of ways (Wood & Slater, 2001), it appears that the number of quanta of the chemical transmitter acetylcholine (ACh) released, compared to the number required to generate the action potential, is key. There are two architectural elements which are important for the safety factor at this point: the number of ACh receptors (AChRs) and the number of post-synaptic folds.

From animal studies, the safety factor for post-synaptic receptors far exceeds what would be required for successful chemical transmission (Chang et al., 1975; Paton & Waud, 1967). In humans, the quantity of folding in the post-synaptic membrane of the neuromuscular junction is relatively large compared with other species (e.g. 10 times greater in man than in frog) (Slater et al., 1992). It is also known that ACh quantum is significantly less in humans compared with other species, leaving the safety factor for chemical transmission to be marginally greater than one. Therefore, to improve the possibility for chemical transmission, and hence the safety factor, post-synaptic folding in humans is substantially high, amplifying the effect of ACh release. This means that the comparatively low level of ACh release in humans is balanced by the large post-synaptic folding. The reasons for this difference between quantum and post-synaptic folding are not entirely clear but one possibility is that as animal size increases there would be a need to innervate more skeletal muscle fibres per motor unit, limiting the available material in the pre-synaptic space (Wood & Slater, 2001). In terms of skeletal muscle fibre type, post-synaptic folding appears to be greater for Type II than for the Type I fibres (Ellisman et al., 1976; Padykula & Gauthier, 1970). In addition, there are differences in the properties of sodium (Na+) channels between fibre types (Ruff & Whittlesey, 1992) where Na+ channels contribute to the speed of the muscle action potential and its resistance to termination. This arrangement allows Type II fibres to fire at high frequencies, whereas Type I fibres can sustain tonic activation. From an evolutionary perspective this adaptation provides safety factors (quantum, post-synaptic folding and voltage gates) that are aligned to the functional capacity of the specific skeletal muscle fibre type. 
For instance, Type I fibres are specifically capable of being in a state of continuous action and able to resist fatigue in comparison to Type II fibres.

Evolutionary Basis of Trade-Offs and Human Exercise Performance

If biological systems have finite material, space and energy, then a key element of biological capacity is the apparent trade-off that will need to occur due to these constraints, which would also limit the increase in the capacities of two traits at the same time (Garland, 1988). The example of the safety factors related to skeletal muscle as described above illustrates the principle of trade-off with respect to exercise performance. Since we know that speed/power and endurance are largely determined by the fibre type composition, improving strength or endurance will incur a trade-off with the other. The most recognised constraint on physical performance is the ratio of slow-to-fast twitch muscle fibres (Garland & Carter, 1994). This particular trade-off has been studied in a range of species at individual and population levels (Bennett et al., 1984; Garland, 1988; Vanhooydonck et al., 2001; Van Damme et al., 2002; Wilson et al., 2002; Wilson & James, 2004) where there is an apparent negative correlation between maximal power/force generation and fatigue resistance as a function of the fibre type composition. However, this relationship does not seem to hold for a trade-off between speed and endurance at the population level (Wilson & James, 2004). In an analysis of 600 world-class decathletes, individual performances in any pair of disciplines were positively correlated across the entire data set, so the expected trade-off between speed and endurance was not detected (Van Damme et al., 2002). In contrast, when the analysis was restricted to athletic individuals of comparable ranking, trade-offs between particular traits were evident, so that high level performance in power events (100 m sprint, shot put, long jump) was negatively correlated with performance in the endurance event (1500 m). These data at least confirm that high level performance in one type of activity might not correlate with high level performance in another at an individual athlete level. 
Therefore, it is not controversial to state that humans evolved and are adapted to do many things and are not particularly ‘optimised’ for any sporting event.
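The contrast between the pooled and the ranking-matched analyses can be illustrated with a small simulation. The sketch below is not a reanalysis of the decathlon data. It uses entirely synthetic athletes and assumes, purely for illustration, that each score is the sum of a general "quality" term and a speed-versus-endurance "bias" term; the names `quality`, `bias`, `band` and all numerical values are hypothetical.

```python
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_athletes(n=600, seed=0):
    """Return synthetic (speed, endurance) scores for n hypothetical athletes."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    athletes = []
    for _ in range(n):
        quality = rng.gauss(0.0, 1.0)  # assumed general athletic ability
        bias = rng.gauss(0.0, 0.5)     # assumed shift toward speed (+) or endurance (-)
        athletes.append((quality + bias, quality - bias))
    return athletes


def correlations(athletes, band=0.2):
    """Correlate speed with endurance for all athletes and for a matched subset."""
    speed = [s for s, _ in athletes]
    endurance = [e for _, e in athletes]
    pooled_r = pearson_r(speed, endurance)

    # "Comparable ranking" is approximated here by keeping only athletes whose
    # combined score lies within a narrow band around the median combined score.
    totals = sorted(s + e for s, e in athletes)
    median_total = totals[len(totals) // 2]
    matched = [(s, e) for s, e in athletes if abs((s + e) - median_total) <= band]
    matched_r = pearson_r([s for s, _ in matched], [e for _, e in matched])
    return pooled_r, matched_r, len(matched)


pooled_r, matched_r, n_matched = correlations(simulate_athletes())
print(f"pooled r = {pooled_r:.2f}; matched r = {matched_r:.2f} (n = {n_matched})")
```

Under these assumptions the pooled correlation is positive because variation in overall quality dominates, whereas holding overall quality roughly constant exposes the negative relationship produced by the bias term.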

Although humans are endowed with varying numbers of Type I and Type II fibres, it is the unique ability of each fibre type which contributes to whether a species is adapted for endurance or for power/strength. There is a long-held view that training in one modality, when combined with the other (concurrent training), may not be conducive to the best improvements in the alternate modality (Dudley & Djamil, 1985). However, on close inspection this is not necessarily the case, because intense strength training leads to improvements in higher intensity cycling and running in trained individuals (Hickson et al., 1988; Hoff et al., 2002). A training regimen consisting of intense resistance exercise 3 d per week over 10 weeks improved leg strength, measured as one-repetition maximum, by 30%, albeit with no appreciable changes in muscle girth, fibre type or oxidative enzymes (Hickson et al., 1988). In addition, performance in high intensity endurance exercise of 4–8 min duration improved by up to 13% and in longer duration exercise by 16%, with no change in VO2max. Since improvements in endurance are not necessarily accompanied by increases in VO2max and oxidative enzymes remain unchanged, to what can the improvement in endurance be attributed? The adaptations most likely to be of significance are those which alter neural input to the skeletal muscles, since resistance training leads to more synchronous motor unit firing and more efficient force production (Carroll et al., 2001). In fact, the improved running economy observed in short endurance exercise of 5 km after explosive strength training has been largely attributed to changes in neuromuscular characteristics and the mechanical properties of the muscle and tendon rather than aerobic capacity per se (Paavolainen et al., 1999; Kubo et al., 2002). Improvements in endurance capacity resulting from resistance training can also be explained from an evolutionary perspective. As outlined recently by Best (2020), combined strength and endurance training results in more favourable endurance performance because the inherent plasticity of human skeletal muscle is skewed toward enhanced endurance and fatigue resistance.
Trade-offs also exist to balance the cost of future reproduction with the cost of survival. For example, when male ultra-endurance runners were sampled pre- and post-race for testosterone and immunological function, it was evident that libido and testosterone decreased but the immune response was heightened (Longman et al., 2018). The conclusion was that there was a shift from reproductive priorities toward maintaining defence during an energetically costly period.

Summary

Adaptations have produced safety factors for body systems and tissues, with trade-offs that are most advantageous for human performance in a specific environment. Although body systems and tissues have limits, as evidenced by bone fractures, these limits are seldom reached in the normal course of daily life or in sporting performances. This is likely because our capabilities are regulated within the safety factors of the tissues and systems. However, evolution has also provided for trade-offs where necessary, so that a specific capacity can be developed, but usually to the detriment of another.

Data Availability Statement

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

Alexander, R. M. (1981). Factors of safety in the structure of animals. Science Progress, 67(265), 109–130. https://doi.org/10.2307/43420519

Bennett, A. F., Huey, R. B., & John-Alder, H. (1984). Physiological correlates of natural activity and locomotor capacity in two species of lacertid lizards. Journal of Comparative Physiology B, 154(2), 113–118

Best, A. W. (2020). Why does strength training improve endurance performance. American Journal of Human Biology. https://doi.org/10.1002/ajhb.23526

Biewener, A. A. (1982). Bone strength in small mammals and bipedal birds: Do safety factors change with body size. Journal of Experimental Biology, 98(1), 289–301. http://jeb.biologists.org/content/jexbio/98/1/289.full.pdf?download=true

Billah, K. Y., & Scanlan, R. H. (1991). Resonance, Tacoma Narrows bridge failure, and undergraduate physics textbooks. American Journal of Physics, 59(2), 118–124. https://doi.org/10.1119/1.16590

Blob, R. W., Espinoza, N. R., Butcher, M. T., Lee, A. H., D’Amico, A. R., Baig, F., & Sheffield, K. M. (2014). Diversity of limb-bone safety factors for locomotion in terrestrial vertebrates: Evolution and mixed chains. Integrative and Comparative Biology, 54(6), 1058–1071. https://doi.org/10.1093/icb/icu032

Bolliger, C. T., Jordan, P., Solèr, M., Stulz, P., Tamm, M., Wyser, C. & Perruchoud, A. P. (1996). Pulmonary function and exercise capacity after lung resection. European Respiratory Journal, 9(3), 415–421. https://doi.org/10.1183/09031936.96.09030415

Brooks, G. A., Fahey, T. D., & Baldwin, K. M. (2005). Human bioenergetics and its applications. New York: McGraw-Hill

Butt, A. H., & Cheatham, J. B. (1995). Mechanical analysis & design. Prentice Hall

Carroll, T. J., Riek, S., & Carson, R. G. (2001). Neural adaptations to resistance training. Sports Medicine, 31(12), 829–840

Cavagna, G. A., Heglund, N. C., & Taylor, C. R. (1977). Mechanical work in terrestrial locomotion: Two basic mechanisms for minimizing energy expenditure. American Journal of Physiology-Regulatory, Integrative and Comparative Physiology, 233(5), R243–R261. https://www.physiology.org/doi/pdf/10.1152/ajpregu.1977.233.5.R243

Chang, C. C., Chuang, S. T., & Huang, M. C. (1975). Effects of chronic treatment with various neuromuscular blocking agents on the number and distribution of acetylcholine receptors in the rat diaphragm. Journal of Physiology, 250(1), 161–173. https://www.ncbi.nlm.nih.gov/pubmed/170397

Currey, J. D. (2003). How well are bones designed to resist fracture. Journal of Bone and Mineral Research, 18(4), 591–598. https://doi.org/10.1359/jbmr.2003.18.4.591

Diamond, J. (1991). Evolutionary design of intestinal nutrient absorption: Enough but not too much. News in Physiological Sciences, 6(2), 92–96. https://journals.physiology.org/doi/pdf/10.1152/physiologyonline.1991.6.2.92

DiMagno, E. P., Go, V. L. W., & Summerskill, W. H. J. (1973). Relations between pancreatic enzyme outputs and malabsorption in severe pancreatic insufficiency. New England Journal of Medicine, 288(16), 813–815

Dudley, G. A., & Djamil, R. (1985). Incompatibility of endurance- and strength-training modes of exercise. Journal of Applied Physiology, 59(5), 1446–1451. https://doi.org/10.1152/jappl.1985.59.5.1446

Ellisman, M. H., Rash, J. E., Staehelin, L. A., & Porter, K. R. (1976). Studies of excitable membranes. II. A comparison of specializations at neuromuscular junctions and nonjunctional sarcolemmas of mammalian fast and slow twitch muscle fibers. Journal of Cell Biology, 68(3), 752–774. https://rupress.org/jcb/article/68/3/752/18656

Essén, B., Jansson, E., Henriksson, J., Taylor, A. W., & Saltin, B. (1975). Metabolic characteristics of fibre types in human skeletal muscle. Acta Physiologica Scandinavica, 95(2), 153–165. https://doi.org/10.1111/j.1748-1716.1975.tb10038.x

Fitts, R. H. (1994). Cellular mechanisms of muscle fatigue. Physiological Reviews, 74, 49–94. https://doi.org/10.1152/physrev.1994.74.1.49

Fox, III, S. M., & Naughton, J. P. (1972). Physical activity and the prevention of coronary heart disease. Preventive Medicine, 1(1–2), 92–120

Gandevia, S. (2001). Spinal and supraspinal factors in human muscle fatigue. Physiological Reviews, 81(4), 1726–1789

Garland, T. (2014). Trade-offs. Current Biology, 24(2), R60–61. https://doi.org/10.1016/j.cub.2013.11.036

Garland Jr, T. (1988). Genetic basis of activity metabolism. I. Inheritance of speed, stamina, and antipredator displays in the garter snake Thamnophis sirtalis. Evolution, 42(2), 335–350. https://onlinelibrary.wiley.com/doi/pdf/10.1111/j.1558-5646.1988.tb04137.x

Garland, T. Jr., & Carter, P. A. (1994). Evolutionary physiology. Annual Review of Physiology, 56(1), 579–621. https://www.annualreviews.org/doi/pdf/10.1146/annurev.ph.56.030194.003051

Gould, S. J., & Lewontin, R. C. (1979). The spandrels of San Marco and the Panglossian paradigm: A critique of the adaptationist programme. Proceedings of the Royal Society B: Biological Sciences, 205(1161), 581–598. https://doi.org/10.1098/rspb.1979.0086

Gould, S. J., & Vrba, E. S. (1982). Exaptation—a missing term in the science of form. Paleobiology, 8(1), 4–15

Guyton, A. C., & Hall, J. E. (2006). Textbook of medical physiology (11th ed.). WB Saunders Company

Hammond, K. A., & Diamond, J. (1992). An experimental test for a ceiling on sustained metabolic rate in lactating mice. Physiological Zoology, 65(5), 952–977. https://doi.org/10.2307/30158552

Hickson, R. C., Dvorak, B. A., Gorostiaga, E. M., Kurowski, T. T., & Foster, C. (1988). Potential for strength and endurance training to amplify endurance performance. Journal of Applied Physiology, 65(5), 2285–2290. https://doi.org/10.1152/jappl.1988.65.5.2285

Hoff, J., Gran, A., & Helgerud, J. (2002). Maximal strength training improves aerobic endurance performance. Scandinavian Journal of Medicine & Science in Sports, 12(5), 288–295. https://onlinelibrary.wiley.com/doi/abs/10.1034/j.1600-0838.2002.01140.x

Johnston, K., Wattie, N., Schorer, J., & Baker, J. (2018). Talent identification in sport: A systematic review. Sports Medicine, 48(1), 97–109. https://doi.org/10.1007/s40279-017-0803-2

Kalser, M. H., Leite, C. A., Warren, W. D., Harper, C. L., & Jacobson, J. (1968). Fat assimilation after massive distal pancreatectomy. New England Journal of Medicine, 279(11), 570–576

Kay, D., Marino, F. E., Cannon, J., St Clair Gibson, A., Lambert, M., & Noakes, T. D. (2001). Evidence for neuromuscular fatigue during high-intensity cycling in warm, humid conditions. European Journal of Applied Physiology, 84, 115–121

Kubo, K., Kanehisa, H., & Fukunaga, T. (2002). Effects of resistance and stretching training programmes on the viscoelastic properties of human tendon structures in vivo. Journal of Physiology, 538(1), 219–226. https://physoc.onlinelibrary.wiley.com/doi/pdf/10.1113/jphysiol.2001.012703

Leonard, W. R. (2012). Laboratory and field methods for measuring human energy expenditure. American Journal of Human Biology, 24(3), 372–384. https://doi.org/10.1002/ajhb.22260

Levine, B. D. (2008). VO2,max: What do we know, and what do we still need to know. Journal of Physiology, 586, 25–34

Lieberman, D. E. (1996). How and why humans grow thin skulls: Experimental evidence for systemic cortical robusticity. American Journal of Physical Anthropology, 101(2), 217–236

Linde-Medina, M. (2011). Adaptation or exaptation? The case of the human hand. Journal of Biosciences, 36(4), 575–585. https://doi.org/10.1007/s12038-011-9102-5

Lindstedt, S. L., & Jones, J. H. (1987). Symmorphosis: The concept of optimal design. In M. E. Feder, A. F. Bennett, W. W. Burggren, & R. B. Huey (Eds.), New directions in ecological physiology (pp. 289–309). Cambridge University Press

Longman, D. P., Prall, S. P., Shattuck, E. C., Stephen, I. D., Stock, J. T., Wells, J. C. K., & Muehlenbein, M. P. (2018). Short-term resource allocation during extensive athletic competition. American Journal of Human Biology, 30, e23052. https://doi.org/10.1002/ajhb.23052

Marino, F. E., Lambert, M. I., & Noakes, T. D. (2004). Superior performance of African runners in warm humid but not in cool environmental conditions. Journal of Applied Physiology, 96(1), 124–130. https://doi.org/10.1152/japplphysiol.00582.2003

Maughan, R. J., Watson, J. S., & Weir, J. (1983). Relationships between muscle strength and muscle cross-sectional area in male sprinters and endurance runners. European Journal of Applied Physiology, 50(3), 309–318

Meltzer, S. J. (1907). The factors of safety in animal structure and animal economy. Science, 25(639), 481–498. https://www.jstor.org/stable/pdf/1633836.pdf

National Health and Medical Research Council. (2013). Australian dietary guidelines. Department of Health and Ageing

Noakes, T. D. (2000). Physiological models to understand exercise fatigue and the adaptations that predict or enhance athletic performance. Scandinavian Journal of Medicine & Science in Sports, 10(3), 123–145. https://doi.org/10.1034/j.1600-0838.2000.010003123.x

Nowak, A. S. (1993). Live load model for highway bridges. Structural Safety, 13(1–2), 53–66

Paavolainen, L., Hakkinen, K., Hamalainen, I., Nummela, A., & Rusko, H. (1999). Explosive-strength training improves 5-km running time by improving running economy and muscle power. Journal of Applied Physiology, 86(5), 1527–1533. https://doi.org/10.1152/jappl.1999.86.5.1527

Padykula, H. A., & Gauthier, G. F. (1970). The ultrastructure of the neuromuscular junctions of mammalian red, white, and intermediate skeletal muscle fibers. The Journal of Cell Biology, 46(1), 27–41. https://rupress.org/jcb/article/46/1/27/1713

Paton, W. D. M., & Waud, D. R. (1967). The margin of safety of neuromuscular transmission. Journal of Physiology, 191(1), 59–90. https://physoc.onlinelibrary.wiley.com/doi/pdf/10.1113/jphysiol.1967.sp008237

Ruff, R. L., & Whittlesey, D. (1992). Na+ current densities and voltage dependence in human intercostal muscle fibres. The Journal of Physiology, 458(1), 85–97. https://physoc.onlinelibrary.wiley.com/doi/pdf/10.1113/jphysiol.1992.sp019407

Slater, C. R., Lyons, P. R., Walls, T. J., Fawcett, P. R., & Young, C. (1992). Structure and function of neuromuscular junctions in the vastus lateralis of man. Brain, 115(2), 451–478

St Clair Gibson, A., & Noakes, T. D. (2004). Evidence for complex system integration and dynamic neural regulation of skeletal muscle recruitment during exercise in humans. British Journal of Sports Medicine, 38(6), 797–806. https://doi.org/10.1136/bjsm.2003.009852

Staiano, W., Bosio, A., de Morree, H. M., Rampinini, E., & Marcora, S. (2018). The cardinal exercise stopper: Muscle fatigue, muscle pain or perception of effort. Progress in Brain Research, 240, 175–200. https://doi.org/10.1016/bs.pbr.2018.09.012

Stearns, S. C. (1989). Trade-offs in life-history evolution. Functional Ecology, 3, 259–268

Swain-Lenz, D., Berrio, A., Safi, A., Crawford, G. E., & Wray, G. A. (2019). Comparative analyses of chromatin landscape in white adipose tissue suggest humans may have less beigeing potential than other primates. Genome Biology and Evolution, 11(7), 1997–2008. https://doi.org/10.1093/gbe/evz134

Tattersall, I. (2012). Masters of the planet: The search for our human origins. St. Martin’s Press

Taylor, C. R., & Weibel, E. R. (1981). Design of the mammalian respiratory system. I. Problem and strategy. Respiration Physiology, 44(1), 1–10

Thurber, C., Dugas, L. R., Ocobock, C., Carlson, B., Speakman, J. R., & Pontzer, H. (2019). Extreme events reveal an alimentary limit on sustained maximal human energy expenditure. Science Advances, 5(6), eaaw0341. https://doi.org/10.1126/sciadv.aaw0341

Toloza, E. M., Lam, M., & Diamond, J. (1991). Nutrient extraction by cold-exposed mice: A test of digestive safety margins. American Journal of Physiology-Gastrointestinal and Liver Physiology, 261(4), G608–G620. https://doi.org/10.1152/ajpgi.1991.261.4.G608

Ulmer, H. V. (1996). Concept of an extracellular regulation of muscular metabolic rate during heavy exercise in humans by psychophysiological feedback. Experientia, 52(5), 416–420

Van Damme, R., Wilson, R. S., Vanhooydonck, B., & Aerts, P. (2002). Evolutionary biology: Performance constraints in decathletes. Nature, 415(6873), 755–756

Vanhooydonck, B., Van Damme, R., & Aerts, P. (2001). Speed and stamina trade-off in lacertid lizards. Evolution, 55(5), 1040–1048. https://onlinelibrary.wiley.com/doi/pdf/10.1111/j.0014-3820.2001.tb00620.x

Verschuren, O., Maltais, D. B., & Takken, T. (2011). The 220-age equation does not predict maximum heart rate in children and adolescents. Developmental Medicine & Child Neurology, 53(9), 861–864. https://doi.org/10.1111/j.1469-8749.2011.03989.x

Weibel, E. R., Taylor, C. R., Gehr, P., Hoppeler, H., Mathieu, O., & Maloiy, G. M. O. (1981). Design of the mammalian respiratory system. IX. Functional and structural limits for oxygen flow. Respiration Physiology, 44(1), 151–164

Weibel, E. R., Taylor, C. R., & Hoppeler, H. (1991). The concept of symmorphosis: A testable hypothesis of structure-function relationship. Proceedings of the National Academy of Sciences, 88(22), 10357–10361

Wilson, R. S., James, R. S., & Van Damme, R. (2002). Trade-offs between speed and endurance in the frog Xenopus laevis: A multi-level approach. Journal of Experimental Biology, 205(8), 1145–1152. https://doi.org/10.1242/jeb.205.8.1145

Wilson, R. S., & James, R. S. (2004). Constraints on muscular performance: Trade-offs between power output and fatigue resistance. Biology Letters, 271(Suppl 4), 222–225. https://doi.org/10.1098/rsbl.2003.0143

Wood, S. J., & Slater, C. R. (2001). Safety factor at the neuromuscular junction. Progress in Neurobiology, 64(4), 393–429

Zakaria, H. M., Stauffer, J. A., Raimondo, M., Woodward, T. A., Wallace, M. B., & Asbun, H. J. (2016). Total pancreatectomy: Short- and long-term outcomes at a high-volume pancreas center. World Journal of Gastrointestinal Surgery, 8(9), 634–642. https://doi.org/10.4240/wjgs.v8.i9.634

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions

Ethics declarations

Conflict of Interest

The author has no conflicts to declare.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Marino, F.E. Adaptations, Safety Factors, Limitations and Trade-Offs in Human Exercise Performance. Adaptive Human Behavior and Physiology 8, 98–113 (2022). https://doi.org/10.1007/s40750-022-00185-9

DOI: https://doi.org/10.1007/s40750-022-00185-9