Abstract

In this paper, we propose a multi-stage evolutionary framework with adaptive selection (MSEFAS) for efficiently handling constrained multi-objective optimization problems (CMOPs). In the early phase of the evolutionary search, MSEFAS uses two optimization stages: one encourages promising infeasible solutions to approach the feasible region and increases diversity, and the other enables the population to span large infeasible regions and accelerates convergence. To adaptively determine the execution order of these two stages, MSEFAS treats the stage whose selected solutions are more effective as the first stage and the other as the second. In the late phase of the evolutionary search, MSEFAS introduces a third stage that handles the various characteristics of CMOPs by considering the relationship between the constrained Pareto front (CPF) and the unconstrained Pareto front (UPF). We compare the proposed framework with eleven state-of-the-art constrained multi-objective evolutionary algorithms on 56 benchmark CMOPs. The results demonstrate the effectiveness of the proposed framework on a wide range of CMOPs and its potential for solving complex optimization problems.

Introduction

In real-world applications, many optimization problems involve multiple conflicting objectives together with numerous equality and/or inequality constraints. Examples include the design of tall buildings [1], the optimization of robot grippers [2], the development of composite structures [3], vehicle routing with time windows [4], and structural damper systems [5]. Such problems are referred to as constrained multi-objective optimization problems (CMOPs).

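In general, a CMOP can be mathematically formulated as:

\[
\begin{aligned}
\min \quad & F(x) = \left(f_1(x), f_2(x), \ldots, f_M(x)\right)^{T} \\
\text{s.t.} \quad & g_j(x) \le 0, \quad j = 1, \ldots, r \\
& h_i(x) = 0, \quad i = 1, \ldots, l \\
& x \in \Omega
\end{aligned}
\]
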
where \(F(x) \in Y\) consists of M objective functions and Y is the objective space; \(g_j(x)\) and \(h_i(x)\) denote the jth inequality constraint and the ith equality constraint, respectively; r and l denote the numbers of inequality and equality constraints, respectively; \(x \in \Omega\) is a solution consisting of V decision variables, and \(\Omega\) is the decision space. The overall constraint violation of a solution x is defined as follows [6]:

\[
CV(x) = \sum_{j=1}^{r} \max\left(0, g_j(x)\right) + \sum_{i=1}^{l} \max\left(0, \left|h_i(x)\right| - \sigma\right)
\]

where \(\sigma\) is a small positive tolerance used to relax the equality constraints.

If a solution satisfies all constraints, it is called a feasible solution; otherwise, it is an infeasible solution. A feasible solution \(x_1\) is said to dominate another feasible solution \(x_2\) if \(f_h(x_1) \le f_h(x_2)\) for all \(h \in \{1, \ldots, M\}\) and \(f_g(x_1) < f_g(x_2)\) for at least one \(g \in \{1, \ldots, M\}\). A feasible solution is called Pareto optimal if no other feasible solution dominates it. The set of all Pareto optimal solutions in the decision space is called the Pareto optimal set, and its image in the objective space is known as the Pareto front (PF). When solving CMOPs, the goal is to approximate the PF with a set of well-converged and well-distributed feasible solutions [7].
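The feasibility and dominance definitions above can be illustrated with a short Python sketch. It assumes the commonly used form of the overall constraint violation, in which each inequality constraint contributes \(\max(0, g_j(x))\) and each equality constraint contributes \(\max(0, |h_i(x)| - \sigma)\) for a small tolerance \(\sigma\). The value of `SIGMA` and all function names are hypothetical choices for illustration, not taken from the authors' implementation.

```python
from typing import Callable, Sequence

Constraint = Callable[[Sequence[float]], float]

# Hypothetical tolerance: |h_i(x)| <= SIGMA counts as satisfied (an assumption).
SIGMA = 1e-4


def constraint_violation(x: Sequence[float],
                         ineq: Sequence[Constraint],
                         eq: Sequence[Constraint],
                         sigma: float = SIGMA) -> float:
    """Overall constraint violation: sum of the individual violations."""
    cv = sum(max(0.0, g(x)) for g in ineq)              # g_j(x) <= 0
    cv += sum(max(0.0, abs(h(x)) - sigma) for h in eq)  # h_i(x) = 0 within sigma
    return cv


def is_feasible(cv: float) -> bool:
    """A solution is feasible when it violates no constraint."""
    return cv == 0.0


def dominates(f1: Sequence[float], f2: Sequence[float]) -> bool:
    """Pareto dominance for minimization: no worse everywhere, better somewhere."""
    no_worse = all(a <= b for a, b in zip(f1, f2))
    strictly_better = any(a < b for a, b in zip(f1, f2))
    return no_worse and strictly_better


if __name__ == "__main__":
    # Toy constraints: x0 + x1 - 1 <= 0 and x0 - x1 = 0.
    ineq = [lambda x: x[0] + x[1] - 1.0]
    eq = [lambda x: x[0] - x[1]]
    print(is_feasible(constraint_violation([0.5, 0.5], ineq, eq)))  # True
    print(dominates([1.0, 2.0], [1.0, 3.0]))                        # True
    print(dominates([1.0, 2.0], [2.0, 1.0]))                        # False
```

Note that two solutions with different objective vectors can be mutually non-dominated, as in the last call, which is why a CMOP generally has a whole front of optimal solutions rather than a single optimum.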

CMOPs present significant challenges due to the constraints that can make a large portion of the search space infeasible, partitioning the feasible space into narrow, disconnected regions and potentially making the PF of unconstrained multi-objective optimization problems (MOPs) partially or completely infeasible [8]. These changes to the search space, caused by various constraints [9], make CMOPs generally more challenging to solve compared to unconstrained MOPs.

To address these challenges, an effective approach is to simultaneously balance the satisfaction of constraints and the optimization of the objective functions. Researchers have developed several techniques for achieving this balance, including the multi-ranking technique [10, 11], multi-stage technique [9, 12,13,14,15,16,17], and co-evolutionary technique [18,19,20]. Among these, multi-stage techniques have received increasing attention for balancing constraint satisfaction and objective optimization through the use of different criteria and strategies at different stages. However, current methods for solving CMOPs may not be efficient enough due to slow convergence or a lack of ability to explore feasible regions. Additionally, these methods may not be able to solve all CMOPs well due to the varying characteristics of different problems. Consequently, addressing CMOPs with varying characteristics effectively requires consideration of several key aspects. First, determining the optimal initial optimization population for different CMOPs during the early optimization stage is crucial in order to expedite the optimization process. Second, the adaptive selection of suitable optimization methods for various stages is essential, considering the evolving characteristics of the population of different CMOPs in the early stage. Lastly, adaptively allocating the appropriate number of execution stages, contingent upon the effectiveness of the employed optimization methods, is an important factor in devising a proficient approach to solving CMOPs.

Among these techniques, co-evolutionary techniques face the challenge of balancing convergence and diversity when solving CMOPs. Most co-evolutionary CMOEAs follow the principle that convergence takes precedence over diversity, as in C-NSGAII [21], although both are in fact equally important. Some algorithms, such as [22], become trapped in easily searchable parts of the PF because they ignore solutions with relatively poor convergence but good diversity, which makes them ineffective on CMOPs with disconnected feasible regions and discrete PFs. To achieve a balanced search in feasible and infeasible regions, CMOEAs such as C-TAEA [18], CCMODE [19] and CCMO [20] have been developed. Unfortunately, these algorithms ignore the information carried by infeasible solutions, resulting in poor optimization results on some CMOPs. To address these limitations, c-DPEA [23] was developed. By exploiting information about infeasible solutions, c-DPEA achieves good results on CMOPs with disconnected feasible regions and discrete PFs. However, the diversity of its solutions is insufficient because of the adaptive penalty term used in the late optimization process. In addition, most existing multi-population CMOEAs transfer information between two populations, because efficient information transfer can improve the performance of both populations. However, the quality of the transferred information is neglected in most CMOEAs.

Multi-stage techniques balance constraint satisfaction and objective optimization by applying different criteria and strategies at different stages. For such techniques, the strategy for switching from one stage to another is critical, because it directly affects the performance of CMOEAs. Researchers have proposed a number of switching strategies. For example, in PPS [24], the switch is performed when the maximum rate of change of the ideal point and the nadir point is less than a predefined value. In ToP [13], the switch is performed when multiple feasible solutions are obtained and some of them are of high quality. In CMOEA-MS [9], the switch is performed when the proportion of feasible solutions in the population is less than a predefined value. Therefore, the soundness of the switching strategy has an important impact on optimization performance.
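As an illustration of a stagnation-based switching condition, the following Python sketch checks whether the ideal and nadir points have stopped moving, in the spirit of the PPS rule described above. The comparison window `gap`, the threshold `epsilon`, and the guard `delta` against division by zero are hypothetical settings, and the exact formula used in [24] may differ.

```python
from typing import List, Sequence

Point = Sequence[float]


def max_relative_change(curr: Point, prev: Point, delta: float = 1e-6) -> float:
    """Largest per-objective relative change between two points."""
    return max(abs(c - p) / max(abs(p), delta) for c, p in zip(curr, prev))


def pps_style_switch(ideal_hist: List[Point], nadir_hist: List[Point],
                     gap: int = 20, epsilon: float = 1e-3) -> bool:
    """True once the ideal and nadir points have stopped moving.

    ideal_hist / nadir_hist hold one point per generation, oldest first.
    gap and epsilon are hypothetical settings, not those of PPS [24].
    """
    if len(ideal_hist) <= gap:
        return False  # not enough history to compare against
    r_ideal = max_relative_change(ideal_hist[-1], ideal_hist[-1 - gap])
    r_nadir = max_relative_change(nadir_hist[-1], nadir_hist[-1 - gap])
    return max(r_ideal, r_nadir) <= epsilon
```

A rule of this kind depends only on the population's progress, so it needs no problem-specific knowledge, but its outcome is sensitive to the chosen window and threshold, which is one reason fixed switching conditions do not suit all CMOPs.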

Considering the above issues in most existing multi-population and multi-stage CMOEAs, we aim to design an effective framework that balances convergence, diversity and feasibility when solving CMOPs, and that provides suitable algorithms to meet the preferences of CMOPs with different characteristics throughout the optimization process. Such a framework also requires high-quality information transfer between populations at different optimization stages, so that as many CMOPs as possible can be solved.

Based on the above discussion, this work proposes a multi-stage evolutionary framework with adaptive selection. An algorithm based on this framework, called MSEFAS, is developed; it assigns suitable algorithms to different optimization stages for CMOPs with different characteristics. MSEFAS realizes high-quality information exchange between populations at different optimization stages to solve CMOPs more efficiently. Unlike the switching strategies of existing CMOEAs, the proposed strategy adaptively allocates the execution stages of each algorithm. The main contributions of this work are as follows:

-

We propose MSEFAS, a two-phase multi-stage evolutionary algorithm, to address the challenges of CMOPs. The early phase consists of two stages: one stage optimizes competitive infeasible solutions with favorable objective values and small constraint violations, balancing diversity and feasibility, and the other stage enhances convergence or diversity on CMOPs with limited feasible regions. In the late phase of the evolutionary process, a third stage is introduced to handle the diverse characteristics of CMOPs by examining the relationship between the constrained Pareto optimal front (CPF) and the unconstrained Pareto optimal front (UPF).

-

MSEFAS adaptively assigns the execution order to each algorithm in the early phase, providing initial optimized populations based on their effectiveness in selecting individuals for CMOPs and MOPs during multiple optimizations. Notably, the c-DPEA algorithm [23] is employed in the optimization stage that introduces infeasible solutions.

-

MSEFAS adaptively allocates execution stages to each algorithm, considering the cumulative effectiveness of individuals selected by both the infeasible solutions optimization algorithm and the offspring individual-based optimization algorithm for CMOPs and MOPs across multiple optimizations.

The remainder of this paper is structured as follows. In “Related work and motivation”, we review related work on CMOPs and present our motivation. In “Proposed MSEFAS framework”, we present the proposed multi-stage evolutionary framework with adaptive selection (MSEFAS) for efficiently solving CMOPs with different characteristics. In “Experimental studies”, we report the experimental results of MSEFAS on various CMOPs. Finally, in “Conclusion”, we draw our conclusions and discuss future work.

Related work and motivation

This section briefly reviews existing relevant work and provides the motivation for this paper. Table 1 summarizes and evaluates relevant constrained multi-objective evolutionary algorithms (CMOEAs).

According to the techniques for effectively balancing the satisfaction of constraints and the optimization of objective functions, CMOEAs can be classified into three categories: multi-ranking techniques, multi-stage techniques, and co-evolutionary techniques.

The first category is multi-ranking techniques. Constraint handling techniques (CHTs) that prefer constraints over objectives can lead to premature convergence. To overcome this disadvantage, Ma et al. [10] proposed a new CHT, called ToR, based on the constrained dominance principle (CDP). In ToR, the fitness of each solution is defined as the weighted sum of its CDP-based ranking and its Pareto-dominance-based ranking (i.e., without considering any constraints), and the weights are associated with the proportion of feasible solutions in the current population. Although ToR considers both convergence and feasibility, it neglects diversity. Moreover, different problems have varying preferences for constraints and objectives, whereas the constraint bias given by ToR is consistently greater than the objective bias, which makes it difficult for ToR to strike a balance between constraints and objectives.
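The two rankings combined by ToR can be sketched in Python as follows. CDP is implemented with the usual feasibility rules: a feasible solution beats an infeasible one, two infeasible solutions are compared by constraint violation, and two feasible solutions by Pareto dominance. As simplifying assumptions, each ranking here is the number of solutions that dominate a given solution, and the weight `w` is passed in directly rather than derived from the feasible ratio by the formula of [10].

```python
from typing import List, Sequence

Objectives = Sequence[float]


def dominates(f1: Objectives, f2: Objectives) -> bool:
    """Pareto dominance for minimization."""
    return all(a <= b for a, b in zip(f1, f2)) and any(a < b for a, b in zip(f1, f2))


def cdp_dominates(f1: Objectives, cv1: float, f2: Objectives, cv2: float) -> bool:
    """Constrained dominance principle (CDP)."""
    if cv1 == 0 and cv2 == 0:
        return dominates(f1, f2)   # both feasible: Pareto dominance
    if cv1 == 0:
        return True                # feasible beats infeasible
    if cv2 == 0:
        return False               # infeasible never beats feasible
    return cv1 < cv2               # both infeasible: smaller violation wins


def domination_counts(objs: List[Objectives], cvs: List[float],
                      constrained: bool) -> List[int]:
    """Simplified rank: how many other solutions dominate each solution."""
    counts = []
    for i in range(len(objs)):
        c = 0
        for j in range(len(objs)):
            if i == j:
                continue
            if constrained:
                c += int(cdp_dominates(objs[j], cvs[j], objs[i], cvs[i]))
            else:
                c += int(dominates(objs[j], objs[i]))
        counts.append(c)
    return counts


def two_ranking_fitness(objs: List[Objectives], cvs: List[float],
                        w: float) -> List[float]:
    """Weighted sum of CDP-based and Pareto-based ranks (lower is better).

    w is a hypothetical weight; ToR ties its weights to the feasible ratio.
    """
    r_cdp = domination_counts(objs, cvs, constrained=True)
    r_par = domination_counts(objs, cvs, constrained=False)
    return [w * a + (1 - w) * b for a, b in zip(r_cdp, r_par)]
```

With `w` close to 1 the fitness follows CDP and favors feasibility; with `w` close to 0 it ignores constraints, which is the trade-off the weighting is meant to control.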

The second category is multi-stage techniques. These methods divide the optimization process into multiple stages to adaptively balance objective optimization and constraint satisfaction. Tian et al. [9] proposed a two-stage evolutionary algorithm that adjusts the fitness evaluation strategy during evolution to achieve this balance. The first stage ignores constraints and searches for the UPF, enabling the population to cross the infeasible region toward the feasible region; the second stage considers constraints and searches for optimal solutions distributed on the boundary of the feasible region. However, the method adjusts the search of these two stages based only on the proportion of feasible solutions, which leads to a lower overall convergence rate. Liu et al. [13] introduced a two-phase framework called ToP, in which the first phase finds promising feasible regions by transforming the CMOP into a constrained single-objective optimization problem, and the second phase uses a specific CMOEA to find the final solutions. Although ToP employs a constrained single-objective optimization method, the difficulty of the problem is not reduced because the constraints are not simplified. Additionally, it is challenging to find suitable conditions for transitioning from the first phase to the second. Zhu et al. [14] proposed the MOEA/D-DAE algorithm, which includes a detect-and-escape strategy to guide the search out of stagnant states caused by constraints. Although this method can help the population escape local optima, local optima can easily be misjudged as global optima when the infeasible region is complex. Ma et al. [15] proposed a multi-stage evolutionary algorithm that incrementally adds constraints at different stages of evolution to solve CMOPs with complex constraints. However, this approach leaves some infeasible solutions in the later stages of evolution, which reduces its effectiveness. Yu et al. [16] proposed a dynamic selection preference assisted constrained multi-objective differential evolution (DE) algorithm, which switches the selection preference between the objective functions and the constraints during evolution. Yu et al. [17] introduced the purpose-directed two-phase multiobjective differential evolution (PDTP-MDE) algorithm, which divides the evolutionary process into two phases according to the intended purpose of each phase. Although the method reduces the optimization difficulty by decomposing the problem into simpler subproblems, the decomposition relies on human experience, and the setting of the weights also affects the quality of the solutions generated by the decomposition. Ming et al. [26] proposed a dual-stage dual-population evolutionary algorithm that efficiently explores and exploits both infeasible and feasible solutions. Fan et al. [24] proposed the push-pull search (PPS) algorithm, which divides the search process into a push search phase and a pull search phase. Although this method can quickly traverse the infeasible region, it struggles to move the population from the UPF to the CPF when the feasible region in the decision space is small.

The third category is co-evolutionary techniques. Li et al. [18] proposed a parameter-free technique called the two-archive evolutionary algorithm, which maintains a convergence-oriented archive (CA) and a diversity-oriented archive (DA). The DA explores areas under-exploited by the CA in order to complement the behavior of the CA and provide as much diversity information as possible. To achieve this, the method uses a constrained mating selection mechanism that adaptively selects suitable mating parents based on the evolutionary state, taking advantage of the complementary effects of the two archives. Although using two archives can effectively solve CMOPs with discontinuous feasible regions or with inner and outer feasible regions, the strong association between the two archives can hinder the evolution of both, resulting in suboptimal performance on CMOPs with complex constraints. Wang et al. [27] proposed a decomposition-based multi-objective optimization method for solving constrained optimization problems (COPs). This method transforms the COP into a biobjective optimization problem (BOP), optimizes the transformed BOP in a decomposition-based framework, and employs a restart strategy to address complex constraints. Wang et al. [19] introduced the cooperative differential evolution framework (CCMODE), in which M constrained single-objective subpopulations and an archive population cooperate to optimize all objectives and constraints of CMOPs. Although this approach uses knowledge transfer among the single-objective subpopulations to help the archive population exploit objective information, it is time-consuming and resource-intensive because \(M + 1\) populations are optimized simultaneously. Tian et al. [20] proposed a co-evolutionary framework for constrained multi-objective optimization in which two populations share information to solve CMOPs. Although the collaboration between the two populations can balance constraints and objectives, some promising infeasible solutions are not considered. Wang et al. [28] introduced the cooperative multi-objective evolutionary algorithm with propulsive population (CMOEA-PP), in which a normal population and a propulsive population work together to solve CMOPs. Sun et al. [29] proposed an evolutionary algorithm with a constraint relaxation strategy based on differential evolution (CRS-DE), which divides the population into three subpopulations according to the overall constraint violation in order to relax constraints. Qiao et al. [25] introduced the multi-task constrained multi-objective optimization (MTCMO) framework, which uses an auxiliary task with a dynamically decreasing constraint bound to assist in solving the main task. Although dynamically reducing the constraint bound keeps the auxiliary problem highly correlated with the main problem and complements the evolutionary direction, the method adopts a fixed schedule for reducing the constraint bound. This overlooks the fact that different CMOPs have distinct preferences for objectives and constraints during evolution.

In addition to the three techniques mentioned above, recent studies have explored alternative approaches to solving CMOPs. Yuan et al. [30] introduced a cost-value-based distance into the objective space and used this distance, together with the constraints, to define a metric that evaluates each individual's contribution to exploring promising areas, leading to a metric-based constrained multi-objective algorithm. Xiang et al. [31] proposed a new CHT (a tradeoff model) that takes into account the underlying problem type, as determined by the relationship between the CPF and the UPF. Wu et al. [32] introduced the voting mechanism-based ensemble constraint handling framework (VMCH), which uses multiple CHTs as voters to compare and select solutions. Peng et al. [33] proposed a constraint-handling technique based on directed weights for CMOPs, in which infeasible individuals with good objective values and small constraint violations are retained in the population to improve the performance of the algorithm.

Although numerous CMOEAs have been applied to CMOPs with some success, most of them struggle with CMOPs of high constraint complexity. This is primarily because constraints can make the feasible region discrete or narrow, or separate feasible regions by infeasible regions that are difficult to traverse. Multi-stage CMOEAs are effective for handling complex CMOPs: they divide the search for the constrained PF into multiple tasks and process each task at a different stage. However, multi-stage CMOEAs generally cannot achieve satisfactory results on all CMOPs. The main reason is that it is impossible to determine accurately when to switch from one stage to another for CMOPs with varying characteristics, which limits their efficiency and generality.

Existing multi-stage CMOEAs mostly adopt a two-stage framework. For instance, PPS [24] targets the unconstrained PF in the push stage and considers constraints in the pull stage, without considering solution feasibility in the early optimization stage. However, its convergence and its ability to find feasible regions are unsatisfactory on CMOPs with discontinuous or disjoint feasible regions. In addition, the MOEA/D framework used in PPS causes serious performance degradation on CMOPs with an irregular PF. ToP [13], on the other hand, initially considers all constraints together with a single objective, and considers all constraints and objectives later. However, because ToP uses a reference vector to transform the CMOP into a constrained single-objective optimization problem, it converges slowly and has difficulty reaching the CPF. Moreover, the solutions found in its first stage are relatively clustered, which degrades optimization in the later stage. CMOEA-MS [9] prioritizes objectives over constraints in the early stage and focuses on solution diversity in the later stage. However, its convergence is unsatisfactory on CMOPs with a disconnected CPF. MSCMO [15] initially considers fewer constraints, allowing the population to converge to promising feasible regions with good diversity, and then searches for optimal solutions by considering additional constraints. However, when the number of evaluations is limited, the solutions found by the algorithm may not satisfy all constraints, so some infeasible solutions may remain.

This work differs from existing work in three significant ways. First, unlike traditional CMOEAs, which do not account for the different preferences of CMOPs for objective optimization and constraint satisfaction at the onset of optimization, this study incorporates these preferences at the beginning of the search. It assesses the preferences of different CMOPs for objective optimization and constraint satisfaction and then adaptively assigns suitable algorithms to different stages based on their search characteristics. Second, unlike previous algorithms that determine the number of executions of each optimization stage from user-specified conditions or the ratio of feasible solutions, the proposed algorithm transitions adaptively from one stage to another by assigning execution stages according to the effectiveness of the solutions selected by each algorithm. Finally, whereas existing CMOEAs start from randomly initialized populations, the proposed algorithm optimizes the initial populations during the early phase, thereby accelerating the start of the optimization process.

Building upon these motivations, we propose an adaptive multi-stage evolutionary search for constrained multi-objective optimization that accommodates the different preferences of CMOPs for feasibility, diversity, and convergence. The algorithm comprises two phases. In the early phase, the preferences of CMOPs with different characteristics for feasibility, diversity, and convergence must be assessed, so two stages are introduced. One stage optimizes competitive infeasible solutions with good objective values and low constraint violations, enabling a transition between diversity and feasibility. The other stage enhances convergence or diversity on CMOPs with small feasible regions. The initial optimized populations and the execution order are determined from the effectiveness of the individuals selected for the CMOPs and MOPs in these two stages. Additionally, to make all stages cooperate better, execution stages are adaptively assigned to each algorithm based on the accumulated effectiveness of the individuals it selects over multiple optimizations. In the late phase, a third stage is introduced so that the algorithm can solve as many CMOPs as possible; it exploits the relationship between the CPF and the UPF to enable high-quality information transfer between populations.

Proposed MSEFAS framework

The overall framework of MSEFAS is shown in Algorithm 1. MSEFAS first initializes two populations, \(P_1\) and \(P_2\), where \(P_1\) is used to solve the original CMOPs and \(P_2\) is used to solve unconstrained MOPs.

Since different problems exhibit varying characteristics, three questions must be answered at the beginning of the optimization process: whether infeasible solutions should be introduced to increase diversity, how to select the initial optimized populations to enhance the optimization effect, and how to determine the execution stage of each algorithm. To answer these questions, we first evaluate the optimization algorithm that introduces infeasible solutions (IF algorithm) and the offspring individual-based optimization algorithm (OF algorithm) separately to determine which of the two is more effective in selecting solutions. Judging their effectiveness from a single iteration at the beginning of the optimization may be inaccurate, whereas too many iterations not only increase the computational cost but also reduce the effectiveness of the optimization. In this work, the evaluation is therefore performed while the iteration count \(T_c\) is less than a given value. While this condition holds, Algorithm 2 defines the initial optimized populations \(P_{inl1}\) and \(P_{inl2}\), the parameter r1 that measures the difference in validity between the IF and OF algorithms in selecting individuals for the CMOPs, and the execution stages s1, s2, and s3 of each algorithm. The early phase contains two stages, executed by the IF algorithm and the OF algorithm. The value of r1 determines whether the IF or the OF algorithm is used as the first stage of the early phase for the CMOPs; the second stage uses the algorithm not selected for the first stage. To deal efficiently with CMOPs of different characteristics based on the relationship between the CPF and the UPF, we apply the parental individual-based or offspring individual-based optimization algorithm (PO algorithm) in the late phase.

The optimization process combines the order of operation of the algorithms with the execution stages of each algorithm calculated by Algorithm 2. Once the iteration count \(T_c\) no longer meets the predefined condition, Algorithm 2 is no longer executed, and the process is repeated based on the value of r1 until the termination condition is reached. The overall framework of the proposed algorithm is shown in Fig. 1.

Algorithm 2 aims to generate the initial optimized populations and to provide the execution stage of each algorithm.

To accomplish these goals, the algorithm first uses the IF and OF algorithms to solve the CMOPs and MOPs, respectively, and obtains the corresponding selected individuals \(P_1^{IF}\), \(P_2^{IF}\), \(P_1^{OF}\), and \(P_2^{OF}\). It then calculates the effectiveness parameters \(a_{P_1^{IF}}\), \(a_{P_2^{IF}}\), \(a_{P_1^{OF}}\), \(a_{P_2^{OF}}\) for these individuals using Eq. (3). These parameters represent the ratio of the number of individuals selected by each algorithm to the best N individuals selected for the CMOPs and MOPs.
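The following Python sketch shows one plausible reading of Eq. (3), stated as an assumption: the individuals selected by the IF and OF algorithms are merged, the best N are kept, and each algorithm's effectiveness is the fraction of those N that it contributed. A scalar score (lower is better) stands in for the framework's environmental selection, and the function name is hypothetical.

```python
from typing import List, Tuple


def selection_effectiveness(if_scores: List[float], of_scores: List[float],
                            n: int) -> Tuple[float, float]:
    """Share of the best n merged individuals contributed by IF and by OF.

    Scores are hypothetical scalar qualities (lower is better) that stand in
    for the framework's environmental selection.
    """
    pool = [(s, "IF") for s in if_scores] + [(s, "OF") for s in of_scores]
    best = sorted(pool, key=lambda item: item[0])[:n]  # best n individuals
    a_if = sum(1 for _, src in best if src == "IF") / n
    a_of = sum(1 for _, src in best if src == "OF") / n
    return a_if, a_of
```

Calling it once with the scores obtained on the CMOP and once with those obtained on the MOP would yield the four values \(a_{P_1^{IF}}\), \(a_{P_1^{OF}}\), \(a_{P_2^{IF}}\), \(a_{P_2^{OF}}\); under this reading the two values for each problem always sum to 1.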

Next, the algorithm uses Eq. (4) to calculate r1 and r2, the differences between the validity of the individuals selected by the two algorithms for the CMOPs and the MOPs, respectively. Based on r1 and r2, it determines the initial populations for the subsequent optimization process, following the principle of choosing the individuals selected by the more effective algorithm.
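A minimal Python sketch of this step, under assumed conventions, is shown below. It takes \(r_1 = a_{P_1^{IF}} - a_{P_1^{OF}}\) and \(r_2 = a_{P_2^{IF}} - a_{P_2^{OF}}\) as one plausible form of Eq. (4), picks each initial population from the more effective algorithm, and, following the rule that the stage with more effective selected solutions runs first, derives the early-phase order from r1. The dictionary keys, the population representation, and the tie-breaking rule in favor of IF are hypothetical.

```python
from typing import Dict, List, Tuple

Population = List[List[float]]  # hypothetical: a list of decision vectors


def initial_setup(a: Dict[str, float], pops: Dict[str, Population]
                  ) -> Tuple[float, float, Population, Population, Tuple[str, str]]:
    """Derive r1, r2, the initial populations and the early-phase stage order.

    Keys 'P1_IF' and 'P1_OF' refer to the CMOP; 'P2_IF' and 'P2_OF' to the MOP.
    """
    r1 = a["P1_IF"] - a["P1_OF"]  # assumed sign convention: IF minus OF
    r2 = a["P2_IF"] - a["P2_OF"]
    p_inl1 = pops["P1_IF"] if r1 >= 0 else pops["P1_OF"]  # ties go to IF (assumption)
    p_inl2 = pops["P2_IF"] if r2 >= 0 else pops["P2_OF"]
    order = ("IF", "OF") if r1 >= 0 else ("OF", "IF")      # (first stage, second stage)
    return r1, r2, p_inl1, p_inl2, order
```

In this sketch the CMOP population and the MOP population may come from different algorithms, which matches the idea that each problem keeps whichever selection proved more effective for it.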

The algorithm then gives the execution stage of each algorithm based on the effectiveness \(a_{P_1^{IF}}\), \(a_{P_2^{IF}}\), \(a_{P_1^{OF}}\), \(a_{P_2^{OF}}\) of the individuals selected by the two algorithms. It accumulates the effectiveness of the two algorithms for the CMOPs in each cycle, denoted as A1 and B1. When one of three cases is satisfied, the algorithm gives the execution stage for each algorithm: (1) for the CMOPs, the cumulative validity of individuals obtained by the IF algorithm is higher than that of the OF algorithm in multiple evaluations; (2) in one evaluation, the validity of individuals obtained by the IF algorithm is 0.2 higher than that of the OF algorithm for the CMOPs; or (3) for the MOPs, the validity of individuals obtained by the OF algorithm in one evaluation is 0.2 higher than that of the IF algorithm.
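The three triggering cases can be checked with the Python sketch below. It returns the first cycle at which any case holds and stops there, without modeling how s1, s2, and s3 are then computed. Reading "multiple evaluations" as at least `min_cycles` cycles and "0.2 higher" as a difference of at least 0.2 are assumptions, as is the function name.

```python
from typing import List, Optional


def stage_trigger(a1_if: List[float], a1_of: List[float],
                  a2_if: List[float], a2_of: List[float],
                  margin: float = 0.2, min_cycles: int = 2) -> Optional[int]:
    """Return the first cycle index at which one of the three cases holds, else None.

    a1_*: per-cycle effectiveness on the CMOP; a2_*: per-cycle effectiveness on the MOP.
    """
    acc_if = acc_of = 0.0  # cumulative effectiveness A1 (IF) and B1 (OF) on the CMOP
    for t in range(len(a1_if)):
        acc_if += a1_if[t]
        acc_of += a1_of[t]
        case1 = t + 1 >= min_cycles and acc_if > acc_of  # cumulative IF lead
        case2 = a1_if[t] - a1_of[t] >= margin            # one-cycle IF lead on the CMOP
        case3 = a2_of[t] - a2_if[t] >= margin            # one-cycle OF lead on the MOP
        if case1 or case2 or case3:
            return t
    return None
```

Accumulating A1 and B1 lets a consistent but small IF advantage fire case (1), while cases (2) and (3) react immediately to a single decisive cycle.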

In the s3 stage, we apply the PO algorithm described in Algorithm 3 (adapted mainly from [34]). To improve convergence, binary tournament selection is used to select mating parents from \(P_i\) based on their quality, and N/2 offspring individuals \(OP_{i}\) are generated using evolutionary operators. These offspring are combined with the parents to form \(COP_{i}\), which is then evaluated on the corresponding problem (lines 1–7).
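Binary tournament selection, as used here for mating selection, can be sketched in Python as follows. The sketch assumes each individual has a scalar fitness where lower is better and draws two distinct candidates per tournament; how MSEFAS computes the quality of individuals, and how many parents the chosen evolutionary operators need, are left as inputs.

```python
import random
from typing import List, Sequence


def binary_tournament(fitness: Sequence[float], k: int,
                      rng: random.Random) -> List[int]:
    """Pick k mating-parent indices by binary tournament (lower fitness wins).

    Each tournament draws two distinct individuals at random and keeps the better one.
    """
    n = len(fitness)
    winners = []
    for _ in range(k):
        i, j = rng.sample(range(n), 2)
        winners.append(i if fitness[i] <= fitness[j] else j)
    return winners
```

Because the worst individual always loses its tournament, selection pressure pushes mating toward better solutions while still giving mediocre individuals a chance, which is the usual rationale for binary tournaments in convergence-oriented mating selection.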

Next, we calculate the difference between the success rates of the parent and offspring individuals using Eq. (5). If \(d_i \ge 0\), it indicates that the parent individuals make up a higher percentage of the optimal N individuals compared to the offspring. In this case, we select the parents for optimization, merge \(P_i\), \(OP_i\) and the selected \(P_{2/i}\) to obtain \(CP_i\), and refer to this as the PA Algorithm. On the other hand, if \(d_i < 0\), it indicates that the offspring make up a higher percentage of the optimal N individuals. In this case, we select the offspring for optimization, merge \(P_i\), \(OP_i\) and the selected \(OP_{2/i}\) to obtain \(CP_i\), and refer to this as the OF Algorithm. Both versions of \(CP_i\) are then evaluated on the corresponding problem separately (lines 8–19). Finally, we select N individuals from \(CP_i\) according to the environmental selection to form the new \(P_i\) (lines 20–21). The process of PO algorithm is shown in Fig. 2.
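The decision based on Eq. (5) can be sketched in Python under an assumed definition of the success rate: the fraction of parents (or offspring) that survive into the best N individuals, normalized by the size of each group so that N parents and N/2 offspring are compared fairly. The normalization and the function names are assumptions, not the published form of Eq. (5).

```python
from typing import Iterable, Sequence


def success_rate_difference(parent_ids: Sequence[int], offspring_ids: Sequence[int],
                            survivor_ids: Iterable[int]) -> float:
    """d = (share of parents that survive) - (share of offspring that survive)."""
    survivors = set(survivor_ids)
    sr_parents = sum(1 for p in parent_ids if p in survivors) / len(parent_ids)
    sr_offspring = sum(1 for o in offspring_ids if o in survivors) / len(offspring_ids)
    return sr_parents - sr_offspring


def choose_branch(d: float) -> str:
    """Parents are reused (PA branch) when d >= 0, otherwise offspring (OF branch)."""
    return "PA" if d >= 0 else "OF"
```

For example, with parents 0 to 3, offspring 4 and 5, and survivors {0, 1, 4, 5}, half of the parents but all offspring survive, so d = -0.5 and the offspring branch is chosen.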

The details of the OF algorithm are described in Algorithm 4 (adapted mainly from [34]). First, we randomly select the mating parents from \(P_i\) and use them to generate N/2 offspring individuals \(OP_{i}\) with the evolutionary operators. \(P_i\), \(OP_{i}\), and \(OP_{2/i}\) are combined to obtain \(COP_{i}\), which is then evaluated on the corresponding problem. Finally, N individuals are selected from \(COP_{i}\) by environmental selection to form the new \(P_i\).
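The data flow of one OF step can be sketched in Python as follows. The variation operator and the environmental selection are passed in as functions, and `op_other` plays the role of \(OP_{2/i}\), the offspring of the other population. The Gaussian mutation `gaussian_vary` and the truncation `sphere_select` are hypothetical toy placeholders, not the evolutionary operators or the constrained environmental selection of MSEFAS.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]
Variation = Callable[[List[Vector], int], List[Vector]]
Selection = Callable[[List[Vector], int], List[Vector]]


def of_generation(p_i: List[Vector], op_other: List[Vector], vary: Variation,
                  select: Selection, rng: random.Random
                  ) -> Tuple[List[Vector], List[Vector]]:
    """One OF step: random mating, N/2 offspring, merge, environmental selection."""
    n = len(p_i)
    mating = [rng.choice(p_i) for _ in range(n)]  # random mating selection
    op_i = vary(mating, n // 2)                    # N/2 offspring OP_i
    cop_i = p_i + op_i + op_other                  # P_i + OP_i + OP_{2/i}
    return select(cop_i, n), op_i                  # new P_i, and OP_i for sharing


def gaussian_vary(rng: random.Random, sigma: float = 0.1) -> Variation:
    """Toy variation operator: Gaussian mutation of the first k mating parents."""
    def vary(parents: List[Vector], k: int) -> List[Vector]:
        return [[x + rng.gauss(0.0, sigma) for x in parents[t % len(parents)]]
                for t in range(k)]
    return vary


def sphere_select(pool: List[Vector], n: int) -> List[Vector]:
    """Toy environmental selection: keep the n vectors with the smallest sum of squares."""
    return sorted(pool, key=lambda v: sum(x * x for x in v))[:n]
```

Returning `op_i` alongside the new population reflects that, in this framework, each population's offspring are also offered to the other population in its next merge step.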

For the IF algorithm, we separately embed c-DPEA [23] and the Ship algorithm [35] in this paper, and compare the two choices in the experiments below.

Experimental studies

In this section, we conduct comprehensive experimental studies to assess the performance of the proposed MSEFAS. First, we compare MSEFAS with eleven state-of-the-art algorithms, namely MOEADDAE [14], CMOEA-MS [9], CCMO [20], c-DPEA [23], EMCMO [34], TiGE2 [11], CTAEA [18], PPS [24], ToP [13], DSPCMDE [16] and MSCMO [15], on five test suites: constrained DTLZ [36], DOC [13], MW [8], LIRCMOP [37] and DASCMOP [38]. Then we conduct a series of experiments to validate the key functionalities of MSEFAS, including the adaptive assignment of execution stages, the adaptive selection of the initial optimized population, the algorithm selection in the initial stage, and the flexibility of the entire framework. Finally, we test MSEFAS on nine real-world CMOPs.

Performance indicators

To evaluate the quality of the obtained solution sets, we use two widely recognized performance indicators that account for both convergence and diversity: hypervolume (HV) [39] and inverted generational distance (IGD) [40]. For HV, the reference point is determined from the maximum and minimum objective values of the non-dominated solutions found by all compared algorithms, with \(\delta = 0.01\), as described in [41]. For IGD, 10,000 evenly distributed Pareto-optimal solutions sampled from the theoretical PF serve as the reference points.
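Both indicators can be sketched in a few lines. The IGD function follows the standard definition: the mean distance from each reference point on the true PF to its nearest obtained solution. The HV function is an exact sweep that covers only two minimised objectives. The normalization with \(\delta = 0.01\) used in the paper is not reproduced here, so the caller must supply `ref`. PlatEMO ships its own implementations of both metrics, and these functions are independent illustrations only.

```python
import math
from typing import Sequence, Tuple

Point = Sequence[float]


def igd(solutions: Sequence[Point], reference: Sequence[Point]) -> float:
    """Mean distance from each reference point (sampled from the true PF) to its nearest solution."""
    return sum(min(math.dist(r, s) for s in solutions) for r in reference) / len(reference)


def hv_2d(points: Sequence[Tuple[float, float]], ref: Tuple[float, float]) -> float:
    """Hypervolume of a bi-objective minimisation front with respect to reference point ref."""
    pts = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    area, best_f2 = 0.0, ref[1]
    for f1, f2 in pts:          # sweep in increasing f1
        if f2 < best_f2:        # dominated points add no area
            area += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area
```

For example, the front {(1, 3), (2, 2), (3, 1)} with reference point (4, 4) covers a staircase of area 3 + 2 + 1 = 6. Lower IGD and higher HV are better.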

To test statistical significance, we employ the Wilcoxon rank-sum test at a significance level of \(5\%\). In the comparison results, "\(+\)" indicates that the proposed method is statistically better than the compared algorithm, "−" indicates that it is statistically worse, and "\(=\)" indicates that there is no significant difference.
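The "+/−/=" labelling can be illustrated with a rank-sum test that uses the large-sample normal approximation without tie correction, as sketched below. The paper does not state which variant of the test its platform applied, so this is an illustration under that assumption. The sketch also assumes a minimised indicator such as IGD; for HV, negate the values first. `scipy.stats.ranksums` computes the same normal-approximation statistic.

```python
import math
from typing import List, Sequence


def rank_sum_z(x: Sequence[float], y: Sequence[float]) -> float:
    """Wilcoxon rank-sum z statistic for sample x (normal approximation, no tie correction)."""
    data = sorted([(v, 0) for v in x] + [(v, 1) for v in y], key=lambda t: t[0])
    ranks: List[float] = [0.0] * len(data)
    i = 0
    while i < len(data):
        j = i
        while j + 1 < len(data) and data[j + 1][0] == data[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1  # average 1-based rank of the tie block
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(r for r, (_, group) in zip(ranks, data) if group == 0)
    mu = n1 * (n1 + n2 + 1) / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (w - mu) / sigma


def compare(ours: Sequence[float], theirs: Sequence[float], alpha: float = 0.05) -> str:
    """'+', '-' or '=' for a minimised indicator such as IGD (ours vs. theirs)."""
    z = rank_sum_z(ours, theirs)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value, 2 * (1 - Phi(|z|))
    if p >= alpha:
        return "="
    return "+" if z < 0 else "-"  # low ranks for ours mean smaller IGD
```

Applied to the 30 IGD values per algorithm and problem, `compare` yields one symbol per table cell. Counting the symbols across problems gives the "outperforms on X, comparable on Y" totals reported below.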

Parameter settings

- Problems: The number of decision variables \(V\) and the number of objectives \(M\) for each benchmark problem are set as follows. For the constrained DTLZ problems, \(M = 3\) and \(V = 7\) for C1-DTLZ1, DC1-DTLZ1, DC2-DTLZ1 and DC3-DTLZ1, and \(M = 3\) and \(V = 12\) for the remaining six problems. For the DOC problems, \(M = 3\) for DOC8 and DOC9 and \(M = 2\) for the remaining seven problems; the settings of \(V\) follow [13]. For the MW problems, \(V = 15\) for all problems, \(M = 3\) for MW4, MW8 and MW14, and \(M = 2\) for the remaining 11 problems. For the LIRCMOP problems, \(V = 10\) for all problems, \(M = 3\) for LIRCMOP13 and LIRCMOP14, and \(M = 2\) for the remaining 12 problems. For the DASCMOP problems, \(V = 10\) for all problems, \(M = 3\) for DASCMOP7, DASCMOP8 and DASCMOP9, and \(M = 2\) for the remaining six problems.

- Algorithms: MOEADDAE embeds a detect-and-escape strategy, which guides the search out of stagnation, into the decomposition-based multi-objective evolutionary framework; its parameter \(\alpha \) is set to 0.95. CMOEA-MS is a two-stage evolutionary algorithm that adjusts its fitness evaluation strategy during evolution to adaptively balance objective optimization and constraint satisfaction; its parameter for determining the current stage is \(\lambda =0.5\). CCMO is a dual-population-based CMOEA embedded with a constrained NSGA-II; it adopts the truncation strategy of SPEA2 [42] in environmental selection and uses the constrained dominance principle to handle constraints. c-DPEA is a cooperative co-evolutionary algorithm in which two populations cooperate by exchanging information during offspring generation. EMCMO adopts an evolutionary multitasking-based constrained multi-objective optimization framework that transforms a CMOP into two related tasks; its parameter is \(\beta =0.2\). The parameter settings of these five comparison algorithms follow the original literature. For the proposed MSEFAS, c-DPEA is adopted as the IF (introducing infeasible solutions) algorithm with the same parameter settings as in c-DPEA, and the “certain condition” of the framework is whether the number of iterations is less than \(T_c\), with \(T_c = 16\). The analysis of \(T_c\) is given in “Analysis of parameter settings”. Detailed parameter settings of all algorithms can be found in Table SI of the Supplementary Material.

Except for PPS, ToP and DSPCMDE, which use the differential evolution (DE) [43] operator and polynomial mutation (PM) [44] to generate offspring, all algorithms in this study use genetic operators, namely simulated binary crossover (SBX) [45] and PM, to generate offspring solutions. SBX uses a crossover probability of \(p_c = 1\) and a distribution index of \(\eta _c = 20\). PM uses a mutation probability of \(p_m = 1/V\) and a distribution index of \(\eta _m = 20\). In the DE operator, the crossover rate CR and the scale factor F are set to 1.0 and 0.5, respectively. For a fair comparison, the population size is set to 100 and the maximum number of function evaluations (MaxFEs) to 200,000 for all algorithms.
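For reference, the two genetic operators with the settings above (\(p_c = 1\), \(\eta_c = 20\), \(p_m = 1/V\), \(\eta_m = 20\)) can be sketched as follows. This is the textbook form of SBX and polynomial mutation, and children are simply clipped to the variable bounds. Implementations such as PlatEMO's may use bounds-aware variants, so numerical results will not match them exactly.

```python
import random
from typing import List, Optional, Sequence, Tuple


def sbx(p1: Sequence[float], p2: Sequence[float], lower: Sequence[float],
        upper: Sequence[float], rng: random.Random,
        eta_c: float = 20.0, p_c: float = 1.0) -> Tuple[List[float], List[float]]:
    """Simulated binary crossover (basic form); children are clipped to [lower, upper]."""
    c1, c2 = list(p1), list(p2)
    if rng.random() >= p_c:  # with p_c = 1 crossover always happens
        return c1, c2
    for j in range(len(p1)):
        u = rng.random()
        if u <= 0.5:
            beta = (2.0 * u) ** (1.0 / (eta_c + 1.0))
        else:
            beta = (1.0 / (2.0 * (1.0 - u))) ** (1.0 / (eta_c + 1.0))
        mean, half_gap = 0.5 * (p1[j] + p2[j]), 0.5 * beta * (p1[j] - p2[j])
        c1[j] = min(max(mean + half_gap, lower[j]), upper[j])
        c2[j] = min(max(mean - half_gap, lower[j]), upper[j])
    return c1, c2


def polynomial_mutation(x: Sequence[float], lower: Sequence[float], upper: Sequence[float],
                        rng: random.Random, eta_m: float = 20.0,
                        p_m: Optional[float] = None) -> List[float]:
    """Polynomial mutation (basic form); p_m defaults to 1/V."""
    p_m = 1.0 / len(x) if p_m is None else p_m
    y = list(x)
    for j in range(len(y)):
        if rng.random() < p_m:
            u = rng.random()
            if u < 0.5:
                delta = (2.0 * u) ** (1.0 / (eta_m + 1.0)) - 1.0    # delta in [-1, 0)
            else:
                delta = 1.0 - (2.0 * (1.0 - u)) ** (1.0 / (eta_m + 1.0))  # delta in [0, 1)
            y[j] = min(max(y[j] + delta * (upper[j] - lower[j]), lower[j]), upper[j])
    return y
```

A large distribution index such as 20 concentrates children near their parents. Without clipping, SBX also preserves the parents' mean in every variable.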

All experiments in this paper are implemented in PlatEMO [46] using MATLAB R2021a on an Intel Core i7 3.6 GHz CPU running the Microsoft Windows 10 Enterprise SP1 64-bit operating system.

Analysis of parameter settings

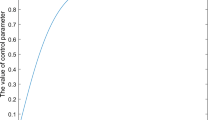

To test how the parameter \(T_c\) influences MSEFAS, we perform a sensitivity analysis with \(T_c = 10, 20, 30, 40, 50, 60, 70, 80, 90\) and 100 iterations on C3-DTLZ4T, MW2, MW3 and MW8. The resulting IGD values, shown in Fig. 3, indicate that the algorithm works best when \(T_c\) lies between 10 and 20.

To locate the best setting of \(T_c\) more precisely, we sampled the range between 10 and 20 more finely. Figure 4 shows the IGD values of MSEFAS for \(T_c=10, 12, 14, 16, 18\) and 20 over 20 independent runs on C3-DTLZ4T, MW2, MW3 and MW8; performance is best when \(T_c=16\). In general, the optimal \(T_c\) is problem-dependent, but for a fair comparison we set \(T_c\) to 16 for all test problems.

To investigate how the four operator parameters \(p_c\), \(\eta _c\), \(p_m\) and \(\eta _m\) of the embedded c-DPEA algorithm affect MSEFAS, Fig. 5 compares the performance of c-DPEA and MSEFAS on MW2 as these parameters vary. As Fig. 5 shows, the four parameters affect both algorithms to different degrees, but MSEFAS consistently outperforms c-DPEA.

Benchmark comparisons

Tables 2 and 3 report the mean and standard deviation of IGD obtained by MSEFAS and the comparison algorithms on the five benchmark test suites over 30 independent runs. NaN in all tables indicates that the algorithm found no feasible solution. Table 2 shows that MSEFAS obtains the best results on 25 of the 56 problems. It outperforms MOEADDAE, CMOEA-MS, CCMO, c-DPEA and EMCMO on 47, 46, 40, 41 and 36 problems, respectively, and is comparable to them on 4, 9, 16, 13 and 20 problems, respectively. Besides the mean values, we also report the best values obtained by MSEFAS, denoted MSEFAS(best). The mean and standard deviation of HV for MSEFAS and these five comparison algorithms over 30 independent runs are given in Table SII of the Supplementary Material. Table 3 shows that MSEFAS obtains the best results on 26 of the 56 problems. It outperforms TiGE2, CTAEA, PPS, ToP, DSPCMDE and MSCMO on 49, 53, 42, 50, 40 and 38 problems, respectively, and is comparable to them on 5, 3, 2, 1, 3 and 16 problems, respectively. Overall, MSEFAS performs better than the other algorithms on most of the test instances.

Figures 6, 7, 8 and 9 show the feasible non-dominated solution sets and the IGD values over the course of the run for all CMOEAs on LIRCMOP1, DOC8, MW14 and DASCMOP8, respectively. These figures show that MSEFAS achieves better diversity than the other five CMOEAs on LIRCMOP1, a type IV CMOP with many large infeasible regions and small feasible regions. DOC8 has a linear, disconnected Pareto front and a small, nonlinear feasible region. MW14 is a type I CMOP whose Pareto front is disconnected due to Pareto dominance.

The Pareto front of DASCMOP8 consists of several disjoint segments separated by large infeasible regions, and the problem has multiple disconnected feasible regions. Figures 7, 8 and 9 show that, compared with the other five algorithms, MSEFAS reaches all non-dominated feasible regions and obtains well-distributed solutions on the CPFs of DOC8, MW14 and DASCMOP8, particularly when the feasible region is small or split into multiple disconnected parts.

Adaptive assignment of execution stage

To verify that MSEFAS effectively assigns an execution stage to each algorithm in an adaptive manner, we created five variants of MSEFAS in which the share of the total process allotted to each stage is fixed, denoted as follows:

- MSEFAS(0,1/2,1/2): the first stage is not executed, and the second and third stages are each executed for 1/2 of the total process.
- MSEFAS(1/4,1/4,1/2): the first and second stages are each executed for 1/4 of the total process, and the third stage for 1/2 of the total process.
- MSEFAS(1/3,1/3,1/3): each of the three stages is executed for 1/3 of the total process.
- MSEFAS(1/2,1/4,1/4): the first stage is executed for 1/2 of the total process, and the second and third stages each for 1/4 of the total process.
- MSEFAS(1/2,1/2,0): the first and second stages are each executed for 1/2 of the total process, and the third stage is not executed.
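As a worked example of what these fixed schedules mean under the benchmark budget of MaxFEs = 200,000, the sketch below converts each variant's fractions into per-stage evaluation budgets. It assumes, hypothetically, that the "total process" is measured in function evaluations and that any rounding remainder goes to the last stage; this section specifies neither.

```python
from fractions import Fraction
from typing import Dict, List, Sequence

MAX_FES = 200_000  # MaxFEs used for the benchmark comparisons

# Hypothetical encoding of the five fixed-schedule variants (stage 1, stage 2, stage 3).
VARIANTS: Dict[str, Sequence[str]] = {
    "MSEFAS(0,1/2,1/2)": ("0", "1/2", "1/2"),
    "MSEFAS(1/4,1/4,1/2)": ("1/4", "1/4", "1/2"),
    "MSEFAS(1/3,1/3,1/3)": ("1/3", "1/3", "1/3"),
    "MSEFAS(1/2,1/4,1/4)": ("1/2", "1/4", "1/4"),
    "MSEFAS(1/2,1/2,0)": ("1/2", "1/2", "0"),
}


def stage_budgets(fractions: Sequence[str], max_fes: int = MAX_FES) -> List[int]:
    """Split max_fes across the three stages; the rounding remainder goes to the last stage."""
    parts = [Fraction(f) for f in fractions]
    if sum(parts) != 1:
        raise ValueError("stage fractions must sum to 1")
    budgets = [p.numerator * max_fes // p.denominator for p in parts]  # exact floor
    budgets[-1] += max_fes - sum(budgets)
    return budgets


for name, fr in VARIANTS.items():
    print(name, stage_budgets(fr))
```

For instance, MSEFAS(1/4,1/4,1/2) gets 50,000, 50,000 and 100,000 evaluations, and MSEFAS(1/3,1/3,1/3) gets 66,666, 66,666 and 66,668. MSEFAS itself, by contrast, decides the split adaptively during the run.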

We compared MSEFAS with its five variants; the IGD and HV results are given in Table 4 and Table SIII, respectively. MSEFAS outperforms all five variants, which demonstrates the effectiveness of adaptively assigning the execution stage to each algorithm.

To determine the contribution of each of the three components of MSEFAS, we also design three variants: MSEFAS(IFOF), which contains only the IF and OF parts; MSEFAS(IFPO), which contains only the IF and PO parts; and MSEFAS(OFPO), which contains only the OF and PO parts. Table 5 shows the mean IGD values obtained by MSEFAS and the three variants on the five benchmark test suites over 30 independent runs.

As Table 5 shows, MSEFAS outperforms all three variants. Among the variants, MSEFAS(IFPO) and MSEFAS(OFPO) perform better than MSEFAS(IFOF). Since both of them contain the PO algorithm, we conclude that PO plays an important role in MSEFAS. However, although the PO algorithm is indispensable, neither variant that contains it matches the full MSEFAS. This indicates that the effectiveness of MSEFAS does not stem from simply stacking the IF, OF and PO algorithms, but mainly from its strategy of adaptively providing suitable initial optimized populations and execution stages for each algorithm on different test problems.

Adaptive selection of the initial optimized population

To verify the effectiveness of the proposed adaptive selection of the initial optimized population, we compared MSEFAS with two variants that do not select the initial population adaptively: one whose initial optimized population is always given by c-DPEA, denoted MSEFAS(cDPEAPOP), and one whose initial optimized population is always given by CCMO, denoted MSEFAS(CCMOPOP). For fairness, both variants use the same parameter settings as MSEFAS. Table 6 reports the mean and standard deviation of IGD for MSEFAS and the two variants on the five benchmark test suites over 30 independent runs, and the HV results are given in Table S-III of the Supplementary Material. MSEFAS performs better than both MSEFAS(cDPEAPOP) and MSEFAS(CCMOPOP), which demonstrates the effectiveness of the proposed adaptive selection of the initial optimized population.

Algorithm selection in the initial stage

To analyze the relationship between the test problems and the algorithms used in the initial stage, Table 7 lists the algorithm used in the initial stage for each test problem. Table 7 shows that 34 of the 56 test problems use the IF algorithm in the initial stage; apart from MW2, MW4 and MW14, most of them are type II and type IV problems. MW2, MW4 and MW14 are type I problems whose UPF and CPF are either both linear or both disconnected, and whose feasible regions are small. This suggests that when the CPF is only part of the UPF, when the UPF contains no part of the CPF, or when a problem shares the characteristics of MW2, MW4 and MW14, the optimal solutions of the unconstrained MOP may contribute little to finding those of the CMOP in the initial stage, and may even trap the search in local optima. The IF algorithm, in contrast, introduces promising infeasible solutions early on to increase diversity and escape local optima, which makes it the more effective choice in these cases.

The remaining 22 test problems use the OF algorithm in the initial stage; apart from LIRCMOP7, LIRCMOP8 and DASCMOP3, most of them are type I, type II and type III problems. LIRCMOP7 and LIRCMOP8 are type IV problems whose unconstrained PFs lie in the infeasible region and whose true PFs lie on their constraint boundaries. This suggests that when the CPF is identical to the UPF, is part of the UPF, or partially overlaps with it, the optimal solutions of the unconstrained MOP are very helpful for finding those of the CMOP in the initial stage. In this case, the OF algorithm can focus on accelerating convergence, which makes it the more effective choice.

To analyze the relationship between the test problems and the selected initial populations, Table 8 lists the algorithms used to select \(P_{inl1}\) and \(P_{inl2}\) for each test problem. Table 8 shows that, whether \(P_{inl1}\) is obtained by the IF or the OF algorithm, \(P_{inl2}\) is mostly selected by the IF algorithm for the DASCMOPs, which pose diversity, feasibility and convergence difficulties, and for some DOC problems, which contain many complex properties in both the decision and objective spaces. This indicates that, for such problems, the IF algorithm is more effective than the OF algorithm at selecting \(P_{inl2}\) because it increases solution diversity. In contrast, \(P_{inl2}\) tends to be selected by the OF algorithm when the CMOP is of type I, II or III, or is one of some type IV problems, indicating that there the OF algorithm, which focuses on convergence, is more effective than the IF algorithm.

To examine the share of each phase more closely, Fig. 10 shows the proportions of the three execution phases during optimization on the constrained DTLZ test problems. As Fig. 10 shows, for C1-DTLZ1, DC1-DTLZ1, DC1-DTLZ3, DC3-DTLZ1 and DC3-DTLZ3, the phase proportions are ranked \(OF<IF<PO\): apart from the final PO stage, the IF algorithm runs more often than the OF algorithm. This suggests that, on these problems, the stage that introduces infeasible solutions contributes more to the overall optimization than the offspring-based optimization stage. For C1-DTLZ3, C2-DTLZ2, C3-DTLZ4, DC2-DTLZ1 and DC2-DTLZ3, the ranking is \(IF<OF<PO\): the OF algorithm runs more often than the IF algorithm, suggesting that the offspring-based optimization stage contributes more to the overall optimization than the stage that introduces infeasible solutions.
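Proportions and rankings like those in Fig. 10 can be derived from a per-generation record of which stage was active, as in this sketch. The log format is hypothetical, and the paper may measure the proportions in function evaluations rather than generations.

```python
from collections import Counter
from typing import Dict, Sequence

STAGES = ("IF", "OF", "PO")


def stage_ratios(stage_log: Sequence[str]) -> Dict[str, float]:
    """Fraction of logged generations spent in each stage (hypothetical log of stage labels)."""
    counts = Counter(stage_log)
    return {s: counts[s] / len(stage_log) for s in STAGES}


def ranking(ratios: Dict[str, float]) -> str:
    """Order stages from smallest to largest share, e.g. 'OF<IF<PO'."""
    return "<".join(sorted(ratios, key=ratios.get))
```

For example, a run logged as 30 IF generations, 10 OF generations and 60 PO generations yields the ranking `OF<IF<PO`, the pattern reported above for C1-DTLZ1 and similar problems.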

Flexibility analysis

To further confirm the flexibility of the proposed MSEFAS framework, we embedded the Ship algorithm [35] into the IF stage and denoted the resulting algorithm MSEFAS(Ship). Table 9 presents the mean and standard deviation of IGD obtained by MSEFAS(Ship) and five comparison algorithms on the five benchmark test suites over 30 independent runs. MSEFAS(Ship) significantly outperforms MOEADDAE, CMOEA-MS, CCMO, c-DPEA and EMCMO on 46, 47, 39, 42 and 32 problems, respectively, and is comparable to them on 7, 7, 16, 12 and 23 problems, respectively. These results show that the MSEFAS framework can flexibly incorporate different algorithms into its stages.

Results on real-world CMOPs

To verify the effectiveness of MSEFAS in real-world applications, we tested it together with TiGE2, CTAEA, MOEADDAE, ToP, DSPCMDE and MSCMO on nine real-world CMOPs (RWCMOPs). RWCMOP1 is the pressure vessel design problem [47], RWCMOP2 the vibrating platform design problem [48], RWCMOP3 the two-bar truss design problem [49], RWCMOP4 the welded beam design problem [50], RWCMOP5 the disc brake design problem [51], RWCMOP6 the speed reducer design problem [52], RWCMOP7 the gear train design problem [53], RWCMOP8 the car side impact design problem [54], and RWCMOP9 the four-bar plane truss problem [55]. For RWCMOP2, \(V=5\), \(M=2\). For RWCMOP3, \(V=3\), \(M=2\). For RWCMOP6, \(V=7\), \(M=2\). For RWCMOP8, \(V=7\), \(M=3\). For the remaining five problems, \(V=4\), \(M=2\). The population size is 100 for all problems, and \(MaxFEs=26250\) for RWCMOP8 and \(MaxFEs=20000\) for the other problems. Table 10 lists the HV results obtained by MSEFAS and the six compared algorithms on the nine RWCMOPs over 30 independent runs.

As the comparative results in Table 10 show, MSEFAS outperforms the six compared algorithms on the nine RWCMOPs, confirming its effectiveness on practical problems.

Conclusion

This paper has presented MSEFAS, a multi-stage evolutionary framework with adaptive selection for effectively handling constrained multi-objective optimization problems (CMOPs). MSEFAS comprises three tailored optimization stages and adaptively assigns the execution order and initial optimized populations to different algorithms based on their effectiveness in selecting promising individuals. Specifically, one stage optimizes competitive infeasible solutions with good objective values and low constraint violations, enabling the transition between diversity and feasibility; another stage improves convergence or diversity on CMOPs with small feasible regions; and the final stage considers the relationship between the constrained Pareto optimal front (CPF) and the unconstrained Pareto optimal front (UPF). Comparisons with eleven state-of-the-art constrained multi-objective evolutionary algorithms on a variety of benchmark suites demonstrate the effectiveness of the proposed framework for handling a wide range of CMOPs.

Although MSEFAS has demonstrated promising performance, there is still room for improvement. For any optimization problem, the quality of the offspring generated during the search directly affects the final results. Future work could therefore explore the adaptive assignment of different evolutionary operators to different problems based on their success rates in generating offspring, or the design of more efficient evolutionary operators. Another possibility is to train deep neural network models that predict promising offspring, partially replacing the offspring generated through evolutionary operators. Furthermore, since UPFs can significantly aid in finding CPFs, knowledge learned from unconstrained MOPs could be transferred to CMOPs. Because the dimensions of these two types of problems differ once constraints are taken into account, heterogeneous transfer learning could provide useful knowledge for the transitions between optimization stages. Such extensions would further enhance the adaptability and effectiveness of the proposed framework in handling various CMOPs.

References

Kim HS, Kang JW (2012) Semi-active fuzzy control of a wind-excited tall building using multi-objective genetic algorithm. Eng Struct 41:242–257

Saravanan R, Ramabalan S, Ebenezer NGR, Dharmaraja C (2009) Evolutionary multi criteria design optimization of robot grippers. Appl Soft Comput 9(1):159–172

Omkar S, Senthilnath J, Khandelwal R, Naik GN, Gopalakrishnan S (2011) Artificial bee colony (ABC) for multi-objective design optimization of composite structures. Appl Soft Comput 11(1):489–499

Lee LH, Tan KC, Ou K, Chew YH (2003) Vehicle capacity planning system: a case study on vehicle routing problem with time windows. IEEE Trans Syst Man Cybern Part A Syst Hum 33(2):169–178

Amini F, Ghaderi P (2013) Hybridization of harmony search and ant colony optimization for optimal locating of structural dampers. Appl Soft Comput 13(5):2272–2280

Wang J, Ren W, Zhang Z, Huang H, Zhou Y (2020) A hybrid multiobjective memetic algorithm for multiobjective periodic vehicle routing problem with time windows. IEEE Trans Syst Man Cybern Syst 50(11):4732–4745. https://doi.org/10.1109/TSMC.2018.2861879

Tanabe R, Oyama A (2017) A note on constrained multi-objective optimization benchmark problems. In: 2017 IEEE congress on evolutionary computation (CEC), pp 1127–1134. https://doi.org/10.1109/CEC.2017.7969433

Ma Z, Wang Y (2019) Evolutionary constrained multiobjective optimization: test suite construction and performance comparisons. IEEE Trans Evolut Comput 23(6):972–986. https://doi.org/10.1109/TEVC.2019.2896967

Tian Y, Zhang Y, Su Y, Zhang X, Tan KC, Jin Y (2022) Balancing objective optimization and constraint satisfaction in constrained evolutionary multiobjective optimization. IEEE Trans Cybern 52(9):9559–9572. https://doi.org/10.1109/TCYB.2020.3021138

Ma Z, Wang Y, Song W (2021) A new fitness function with two rankings for evolutionary constrained multiobjective optimization. IEEE Trans Syst Man Cybern Syst 51(8):5005–5016. https://doi.org/10.1109/TSMC.2019.2943973

Zhou Y, Zhu M, Wang J, Zhang Z, Xiang Y, Zhang J (2018) Tri-goal evolution framework for constrained many-objective optimization. IEEE Trans Syst Man Cybern Syst 50(8):3086–3099

Fan Z, Wang Z, Li W, Yuan Y, You Y, Yang Z, Sun F, Ruan J (2020) Push and pull search embedded in an m2m framework for solving constrained multi-objective optimization problems. Swarm Evolut Comput 54:100651

Liu ZZ, Wang Y (2019) Handling constrained multiobjective optimization problems with constraints in both the decision and objective spaces. IEEE Trans Evolut Comput 23(5):870–884

Zhu Q, Zhang Q, Lin Q (2020) A constrained multiobjective evolutionary algorithm with detect-and-escape strategy. IEEE Trans Evolut Comput 24(5):938–947. https://doi.org/10.1109/TEVC.2020.2981949

Ma H, Wei H, Tian Y, Cheng R, Zhang X (2021) A multi-stage evolutionary algorithm for multi-objective optimization with complex constraints. Inf Sci 560:68–91

Yu K, Liang J, Qu B, Luo Y, Yue C (2022) Dynamic selection preference-assisted constrained multiobjective differential evolution. IEEE Trans Syst Man Cybern Syst 52(5):2954–2965. https://doi.org/10.1109/TSMC.2021.3061698

Yu K, Liang J, Qu B, Yue C (2021) Purpose-directed two-phase multiobjective differential evolution for constrained multiobjective optimization. Swarm Evolut Comput 60:100799

Li K, Chen R, Fu G, Yao X (2019) Two-archive evolutionary algorithm for constrained multiobjective optimization. IEEE Trans Evolut Comput 23(2):303–315. https://doi.org/10.1109/TEVC.2018.2855411

Wang J, Liang G, Zhang J (2019) Cooperative differential evolution framework for constrained multiobjective optimization. IEEE Trans Cybern 49(6):2060–2072. https://doi.org/10.1109/TCYB.2018.2819208

Tian Y, Zhang T, Xiao J, Zhang X, Jin Y (2021) A coevolutionary framework for constrained multiobjective optimization problems. IEEE Trans Evolut Comput 25(1):102–116. https://doi.org/10.1109/TEVC.2020.3004012

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evolut Comput 6(2):182–197

Liu HL, Chen L, Deb K, Goodman ED (2016) Investigating the effect of imbalance between convergence and diversity in evolutionary multiobjective algorithms. IEEE Trans Evolut Comput 21(3):408–425

Ming M, Trivedi A, Wang R, Srinivasan D, Zhang T (2021) A dual-population-based evolutionary algorithm for constrained multiobjective optimization. IEEE Trans Evolut Comput 25(4):739–753. https://doi.org/10.1109/TEVC.2021.3066301

Fan Z, Li W, Cai X, Li H, Wei C, Zhang Q, Deb K, Goodman E (2019) Push and pull search for solving constrained multi-objective optimization problems. Swarm Evolut Comput 44:665–679

Qiao K, Yu K, Qu B, Liang J, Song H, Yue C, Lin H, Tan KC (2022) Dynamic auxiliary task-based evolutionary multitasking for constrained multi-objective optimization. IEEE Trans Evolut Comput. https://doi.org/10.1109/TEVC.2022.3175065

Ming M, Wang R, Ishibuchi H, Zhang T (2021) A novel dual-stage dual-population evolutionary algorithm for constrained multi-objective optimization. IEEE Trans Evolut Comput. https://doi.org/10.1109/TEVC.2021.3131124

Wang BC, Li HX, Zhang Q, Wang Y (2021) Decomposition-based multiobjective optimization for constrained evolutionary optimization. IEEE Trans Syst Man Cybern Syst 51(1):574–587. https://doi.org/10.1109/TSMC.2018.2876335

Wang J, Li Y, Zhang Q, Zhang Z, Gao S (2022) Cooperative multiobjective evolutionary algorithm with propulsive population for constrained multiobjective optimization. IEEE Trans Syst Man Cybern Syst 52(6):3476–3491. https://doi.org/10.1109/TSMC.2021.3069986

Sun Z, Ren H, Yen GG, Chen T, Wu J, An H, Yang J (2022) An evolutionary algorithm with constraint relaxation strategy for highly constrained multiobjective optimization. IEEE Trans Cybern

Yuan J, Liu HL, Ong YS, He Z (2022) Indicator-based evolutionary algorithm for solving constrained multiobjective optimization problems. IEEE Trans Evolut Comput 26(2):379–391. https://doi.org/10.1109/TEVC.2021.3089155

Xiang Y, Yang X, Huang H, Wang J (2021) Balancing constraints and objectives by considering problem types in constrained multiobjective optimization. IEEE Trans Cybern. https://doi.org/10.1109/TCYB.2021.3089633

Wu G, Wen X, Wang L, Pedrycz W, Suganthan PN (2022) A voting-mechanism-based ensemble framework for constraint handling techniques. IEEE Trans Evolut Comput 26(4):646–660. https://doi.org/10.1109/TEVC.2021.3110130

Peng C, Liu HL, Gu F (2017) An evolutionary algorithm with directed weights for constrained multi-objective optimization. Appl Soft Comput 60:613–622

Qiao K, Yu K, Qu B, Liang J, Song H, Yue C (2022) An evolutionary multitasking optimization framework for constrained multiobjective optimization problems. IEEE Trans Evolut Comput 26(2):263–277. https://doi.org/10.1109/TEVC.2022.3145582

Ma Z, Wang Y (2021) Shift-based penalty for evolutionary constrained multiobjective optimization and its application. IEEE Trans Cybern. https://doi.org/10.1109/TCYB.2021.3069814

Deb K, Jain H (2014) An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, part I: solving problems with box constraints. IEEE Trans Evolut Comput 18(4):577–601. https://doi.org/10.1109/TEVC.2013.2281535

Fan Z, Li W, Cai X, Huang H, Fang Y, You Y, Mo J, Wei C, Goodman E (2019) An improved epsilon constraint-handling method in MOEA/D for CMOPs with large infeasible regions. Soft Comput 23(23):12491–12510

Fan Z, Li W, Cai X, Li H, Wei C, Zhang Q, Deb K, Goodman E (2020) Difficulty adjustable and scalable constrained multiobjective test problem toolkit. Evolut Comput 28(3):339–378

While L, Hingston P, Barone L, Huband S (2006) A faster algorithm for calculating hypervolume. IEEE Trans Evolut Comput 10(1):29–38

Bosman PA, Thierens D (2003) The balance between proximity and diversity in multiobjective evolutionary algorithms. IEEE Trans Evolut Comput 7(2):174–188

Knowles J (2006) ParEGO: a hybrid algorithm with on-line landscape approximation for expensive multiobjective optimization problems. IEEE Trans Evolut Comput 10(1):50–66

Zitzler E, Laumanns M, Thiele L (2001) SPEA2: improving the strength Pareto evolutionary algorithm. TIK-Rep 103

Price K, Storn RM, Lampinen JA (2006) Differential evolution: a practical approach to global optimization. Springer, New York

Deb K, Goyal M et al (1996) A combined genetic adaptive search (GENEAS) for engineering design. Comput Sci Inform 26:30–45

Deb K, Agrawal RB et al (1995) Simulated binary crossover for continuous search space. Complex Syst 9(2):115–148

Tian Y, Cheng R, Zhang X, Jin Y (2017) PlatEMO: a MATLAB platform for evolutionary multi-objective optimization [educational forum]. IEEE Comput Intell Mag 12(4):73–87

Kannan B, Kramer SN (1994) An augmented Lagrange multiplier based method for mixed integer discrete continuous optimization and its applications to mechanical design

Narayanan S, Azarm S (1999) On improving multiobjective genetic algorithms for design optimization. Struct Optim 18:146–155

Chiandussi G, Codegone M, Ferrero S, Varesio FE (2012) Comparison of multi-objective optimization methodologies for engineering applications. Comput Math Appl 63(5):912–942

Deb K et al (1999) Evolutionary algorithms for multi-criterion optimization in engineering design. Evolut Algorithms Eng Comput Sci 2:135–161

Osyczka A, Kundu S (1995) A genetic algorithm-based multicriteria optimization method. In: Proceedings of the 1st world congress structural and multidisciplinary optimization, pp 909–914

Azarm S, Tits A, Fan M (1999) Tradeoff-driven optimization-based design of mechanical systems. In: 4th symposium on multidisciplinary analysis and optimization, pp 4758

Ray T, Liew K (2002) A swarm metaphor for multiobjective design optimization. Eng Optim 34(2):141–153

Jain H, Deb K (2013) An evolutionary many-objective optimization algorithm using reference-point based nondominated sorting approach, part II: handling constraints and extending to an adaptive approach. IEEE Trans Evolut Comput 18(4):602–622

Cheng F, Li X (1999) Generalized center method for multiobjective engineering optimization. Eng Optim 31(5):641–661

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, H., Jin, Y. & Cheng, R. Adaptive multi-stage evolutionary search for constrained multi-objective optimization. Complex Intell. Syst. (2024). https://doi.org/10.1007/s40747-024-01542-9

DOI: https://doi.org/10.1007/s40747-024-01542-9