Abstract

Stream data mining aims to handle continuously generated data flows (e.g. weather, stock and traffic data), which often undergo concept drift over time. Traditional offline algorithms struggle to learn from real-time data, so online algorithms are better suited to mining stream data with dynamic concepts. Among the families of online learning algorithms, single pass stands out for its efficiency: it processes one sample point at a time and inspects each point at most once. Existing single-pass online algorithms convert the classification problem into a minimum enclosing ball problem. However, these methods mainly focus on expanding the ball to enclose new data. An excessively large ball may cover data of a new concept, making it difficult to trigger a model update. This paper proposes a new online single-pass framework for stream data mining, namely Scalable Concept Drift Adaptation (SCDA), and presents three online methods (SCDA-I, SCDA-II and SCDA-III) based on it. These methods dynamically expand or contract the ball when new sample points arrive, thereby avoiding excessively large balls. To evaluate their performance, we conduct experiments on 7 synthetic and 5 real-world benchmark datasets and compare the methods with state-of-the-art approaches. The experiments demonstrate the applicability and flexibility of the SCDA methods in stream data mining in three aspects: predictive performance, memory usage and scalability of the ball. Among them, SCDA-III performs best in all three aspects.


Introduction

Presently, there is an explosion of data in practical applications such as online shopping [5], email [4], stock exchange [14] and more. These data are generated continuously and in real time as streams, and differ significantly from traditional static datasets. Amidst this vast data landscape, data mining technology plays a crucial role in extracting useful insights, and mining knowledge from data streams is particularly significant. However, traditional offline algorithms [18] struggle to adapt swiftly to dynamic and continuous data streams, as they solve specific optimization problems iteratively on static training data of a fixed size. In contrast, online algorithms [36] incorporate training sample points continuously and incrementally, and therefore excel in stream data mining scenarios.

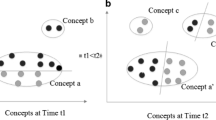

The growing importance of online learning from stream data lies in its capability to detect abnormal situations and learn from them, e.g. to identify abnormal traffic patterns [30] or network attacks [37] when they first occur and to pinpoint similar threats [35] thereafter. However, stream data mining faces challenges due to concept drift, i.e. the shift in the target concept caused by data distributions evolving over time [17]. Concept drift research can be categorized into three aspects [22]: understanding, detection and adaptation. Concept drift understanding [19] retrieves the time, extent and region in which concept drift occurs and lasts. Concept drift detection [1, 23] determines whether concept drift has occurred from a change in the data distribution or a change in time intervals. Yu et al. [34] designed an online learning algorithm that obtains a distribution-independent test statistic for detecting concept drift in multiple data streams. Concept drift adaptation [20] refers to updating the current model in response to drift. Yu et al. [33] developed a continuous support vector regression to address regression problems in nonstationary streaming data, incorporating an incrementally solved similarity term into the quadratic programming problem. This paper belongs to the concept drift adaptation category: we believe the ultimate goal of stream data mining is to adapt the model consistently whenever new concepts appear, and detection and understanding can be seen as preliminary steps that serve concept drift adaptation.

A variety of online learning based concept drift adaptation strategies have been proposed in the literature. For instance, Yu et al. [32] employed a meta-learning approach and a learning-to-adapt framework to address concept drift. Liu et al. [21] proposed a fast-switch Naive Bayes application, resolving issues related to the impact of training data volume on the model. Li et al. [15] introduced an incremental semi-supervised learning algorithm based on neural networks tailored for stream data. Additionally, WIDSVM [6] uses weighted moving window techniques to derive an incremental-decremental Support Vector Machine (SVM) for traversing concept-drifting data streams. Among the many online learning algorithms, single pass stands out for its efficiency: it processes one sample point at a time and inspects each point at most once. Existing single-pass online algorithms [9, 24] convert the classification problem into a Minimum Enclosing Ball (MEB) problem. However, these methods mainly focus on expanding the ball to enclose new data. An excessively large ball may cover data of a new concept, making it difficult to trigger a model update.

To address these challenges, this paper presents a new MEB-based framework for concept drift adaptation on stream data, and proposes three methods built upon it. When new sample points arrive, these methods dynamically expand or contract the ball to avoid excessively large balls, and all of them require only a single pass through the data stream. We conduct experiments on 7 synthetic and 5 real-world datasets, and compare the proposed methods with StreamSVM [24], Naive Bayes [38], Hoeffding Tree [11], ADWIN [2] and OSVM-SP [9]. Experimental results on prequential error, support vector percentage and radius demonstrate that SCDA-III performs best in predictive performance, memory usage and scalability of the ball.

This paper is structured as follows: “Related work” presents an overview of related work in concept drift adaptation. “Preliminaries” reviews the formulations of SVM and MEB. “Methodology” describes the proposed framework and methods in detail. “Experiments” presents the experimental results and analysis, and “Conclusion” summarizes the paper and offers some promising directions for future work.

Related work

Active and passive adaptation for concept drift

Concept drift adaptation is categorized into two approaches: active adaptation [10] and passive adaptation [8]. Active adaptation proactively detects drift as data arrive, identifying new concepts and updating the model for them. However, determining the necessary parameters for active detection is challenging. To address recurring concept drift and limited labeled data, Wen et al. [31] leveraged a specialized concept classifier pool and historical classifiers to label data, improving the efficiency of concept drift detection. In contrast, passive adaptation updates the model without actively detecting concept drift upon data arrival. Li et al. [15] introduced an incremental semi-supervised learning framework that resolves parameter issues and offers an efficient incremental semi-supervised algorithm. The methods proposed in this paper fall under passive adaptation.

Online and incremental learning

Learning concept-drift-adaptive models from stream data follows two main paradigms: online learning [28] and incremental learning [16]. Incremental learning divides data into blocks and updates the model when a specific block-size threshold is reached. While block-based updating can capture data distributions, determining the necessary parameters for data blocks is challenging. Wang et al. [29] employed adaptive windowing, assigning weights to sample points, to handle concept drift with notable effectiveness. Similarly, Silva et al. [25] introduced the SSOE-FP-ELM algorithm, which uses parameterized forgetting when traversing data blocks to detect concept drift. Online learning, in contrast, learns from individual samples continuously, enabling real-time model updates without first accumulating a specific volume of data.

Single-pass and multipass online learning

Online classification algorithms scanning stream data can be categorized into two groups: multipass and single pass [12]. Multipass algorithms require multiple iterations through the data during model training to reach an optimal or convergent solution. However, this characteristic often renders the online learning process inefficient. In contrast, single-pass algorithms involve scanning the data only once during training, making them more suitable for large-scale real-time data processing scenarios.

StreamSVM is an adaptive method for handling concept drift. Using a single pass, StreamSVM converts the classification problem into a minimum enclosing ball problem. When a new sample point falls outside the existing ball, StreamSVM expands the ball to encompass it. However, when the new point is far from the existing ball, this expansion strategy covers vast unnecessary regions. The limitation of StreamSVM lies in its inability to shrink the ball, which leads to unbounded expansion. Similarly, OSVM-SP adopts the minimum enclosing ball method, expanding the radius of the old ball to cover new sample points. However, like StreamSVM, OSVM-SP cannot shrink the ball and therefore has limited flexibility. The SCDA framework proposed in this paper also performs single-pass traversal.

Preliminaries

This section introduces the formulations of batch-mode SVM and MEB, along with their equivalence, all of which are closely relevant to our methods. The primary symbols used in this article are listed in Table 1.

Support vector machine

Given a training set S of N labeled sample points, \(S = \{(\varvec{x}_i, y_i)|\varvec{x}_i\in \mathbb {R}^{d \times 1}, y_i\in \{1, -1\},i = 1,\ldots , N\}\), the objective of SVM is to find a hyperplane in the reproducing kernel Hilbert space (RKHS) that separates sample points of different classes in the training set and generalizes well to unseen data. According to the Karush–Kuhn–Tucker (KKT) theorem [13], the dual problem of SVM is given in Eqs. (1a) and (1b) [9].

The variable \(\varvec{\alpha }\) in Eq. (1a) is a vector consisting of N Lagrange multipliers, \(\varvec{\alpha } = [\alpha _1, \ldots , \alpha _N]'\in \mathbb {R}^{N \times 1}\). For any sample point \(\varvec{x}\), SVM predicts its label by \(y = \text {sig}(f(\varvec{x}))\), in which \(\text {sig}(\cdot )\) represents the sign function, and function \(f(\varvec{x})\) is the output of SVM on sample point \(\varvec{x}\) which can be computed by:
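A minimal Python sketch of this prediction rule follows. It assumes the bias-free kernel expansion \(f(\varvec{x})=\sum_{i}\alpha_i y_i k_\phi(\varvec{x}_i,\varvec{x})\), which matches condition 1 in “Equivalence of SVM and MEB” (no bias component) but is our assumption rather than a transcription of the paper's equation. The Gaussian kernel, its width `gamma` and the helper names `rbf_kernel`, `svm_output` and `predict` are hypothetical choices; the Gaussian kernel is convenient because \(k_\phi(\varvec{x},\varvec{x})=1\) for every \(\varvec{x}\), so it also satisfies condition 2 with \(\kappa_\phi=1\).

```python
import math
from typing import Sequence

Vector = Sequence[float]

# Hypothetical helper names: a sketch under the stated assumptions,
# not the paper's implementation.


def rbf_kernel(a: Vector, b: Vector, gamma: float = 1.0) -> float:
    """Gaussian kernel exp(-gamma * ||a - b||^2); k(x, x) = 1 for every x."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-gamma * sq_dist)


def svm_output(x: Vector, xs: Sequence[Vector], ys: Sequence[int],
               alpha: Sequence[float], gamma: float = 1.0) -> float:
    """Assumed bias-free output f(x) = sum_i alpha_i * y_i * k(x_i, x)."""
    return sum(a * y * rbf_kernel(xi, x, gamma)
               for xi, y, a in zip(xs, ys, alpha))


def predict(x: Vector, xs: Sequence[Vector], ys: Sequence[int],
            alpha: Sequence[float], gamma: float = 1.0) -> int:
    """Label = sign(f(x)); a tie f(x) = 0 is mapped to +1 by convention here."""
    return 1 if svm_output(x, xs, ys, alpha, gamma) >= 0 else -1


# Two support vectors of opposite classes with equal weights.
xs = [[0.0, 0.0], [2.0, 0.0]]
ys = [1, -1]
alpha = [0.5, 0.5]
print(predict([0.2, 0.0], xs, ys, alpha))  # prints 1 (nearer the positive point)
```

How the sign function treats \(f(\varvec{x})=0\) is not specified in the text; mapping ties to +1 is only a convention of this sketch.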

Minimum enclosing ball

Given a training set \(\{\varvec{x}_i\in \mathbb {R}^{d \times 1}|i = 1,\ldots , N\}\) of N sample points, the central idea of MEB is to find the minimal ball enclosing all of them. The primal problem of MEB is listed as follows [26, 27]:

Here, we use a different kernel mapping function \(\varphi (\cdot )\) in MEB to distinguish it from \(\phi (\cdot )\) in SVM. The hypersphere learned by MEB can be represented by \((\varvec{c}, r)\), where the center \(\varvec{c}\) is a high-dimensional feature vector in the RKHS and \(r\in \mathbb {R}^+\) is the radius. For an arbitrary sample point \(\varvec{x}\), MEB predicts whether it lies inside the hypersphere according to the following inequality:
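For reference, the standard kernel form of the MEB problem, as used in the core vector machine line of work, and the resulting inclusion test can be written as follows. This is a sketch in this section's notation, with \(k_\varphi\) denoting the kernel induced by \(\varphi(\cdot)\); the paper's own displayed equations may differ in form. At the optimum the center is a convex combination of the mapped points, so the distance in the test can be evaluated with kernel values only.

```latex
\begin{aligned}
&\min_{\varvec{c},\, r}\; r^2
\quad \text{s.t.}\quad \|\varvec{c}-\varphi(\varvec{x}_i)\|^2 \le r^2,\quad i=1,\ldots,N,\\[4pt]
&\varvec{c}=\sum_{i=1}^{N}\alpha_i\,\varphi(\varvec{x}_i),\qquad \alpha_i\ge 0,\qquad \sum_{i=1}^{N}\alpha_i=1,\\[4pt]
&\|\varvec{c}-\varphi(\varvec{x})\|^2
=\sum_{i,j}\alpha_i\alpha_j\, k_\varphi(\varvec{x}_i,\varvec{x}_j)
-2\sum_{i}\alpha_i\, k_\varphi(\varvec{x}_i,\varvec{x})
+k_\varphi(\varvec{x},\varvec{x}) \;\le\; r^2 .
\end{aligned}
```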

Equivalence of SVM and MEB

The supervised problem of SVM can be equivalently reformulated as an unsupervised problem of MEB, so long as the label information in the former is encoded in the kernel mapping function in the latter. In other words, \(\varphi (\varvec{x}_i)\) is related to \(y_i\) and hence can also be denoted by \(\varphi (\varvec{x}_i,y_i)\). Specifically, Eqs. (1a), (1b) and (3) are equivalent to each other if the following two conditions are satisfied [24, 27]:

1. \(\varphi (\varvec{x}_i,y_i) = [y_i\phi (\varvec{x}_i); \varvec{e}_i/\sqrt{C}]\).
2. \(k_\phi (\varvec{x}_i, \varvec{x}_i) = \kappa _\phi , \forall \varvec{x}_i\).

The specific derivation process of the formulas can be found in Appendix A.
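Assuming \(\varvec{e}_i\) is the ith standard basis vector, so that \(\varvec{e}_i^{\top}\varvec{e}_j=\delta_{ij}\), condition 1 gives the MEB kernel \(k_\varphi((\varvec{x}_i,y_i),(\varvec{x}_j,y_j))=y_iy_jk_\phi(\varvec{x}_i,\varvec{x}_j)+\delta_{ij}/C\), and condition 2 makes its diagonal the constant \(\kappa_\phi+1/C\). The Python sketch below evaluates this kernel without forming \(\varphi\) explicitly. The Gaussian base kernel (\(\kappa_\phi=1\)) and the function names are hypothetical; each sample carries its stream index because \(\varvec{e}_i\) depends on it.

```python
import math
from typing import Sequence, Tuple

Vector = Sequence[float]
# A labelled sample carries its stream index i, since e_i depends on it.
Sample = Tuple[int, Vector, int]

# Hypothetical helper names; e_i is assumed to be the i-th standard basis vector.


def base_kernel(a: Vector, b: Vector, gamma: float = 1.0) -> float:
    """Gaussian base kernel k_phi; its diagonal is constant (kappa_phi = 1)."""
    return math.exp(-gamma * sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def meb_kernel(s: Sample, t: Sample, C: float, gamma: float = 1.0) -> float:
    """k_varphi = y_i y_j k_phi(x_i, x_j) + delta_ij / C."""
    i, xi, yi = s
    j, xj, yj = t
    delta = 1.0 if i == j else 0.0
    return yi * yj * base_kernel(xi, xj, gamma) + delta / C


samples = [(0, [0.1, 0.4], 1), (1, [0.9, -0.3], -1), (2, [0.5, 0.5], 1)]
C = 10.0
diag = [meb_kernel(s, s, C) for s in samples]
print(diag)  # every entry equals kappa_phi + 1/C
```

Because the diagonal does not depend on the sample or its label, condition 2 of the MEB reformulation holds automatically once the base kernel has a constant diagonal.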

Methodology

Exchange of positive and negative annotations

Let \(\overline{S}\) be the mirror set of S obtained by exchanging the positive and negative annotations, \(\overline{S} = \{(\varvec{x}_i, y_i)|(\varvec{x}_i, -y_i)\in S,i = 1,\ldots , |S|\}\), and let \(\overline{\varvec{\alpha }}, \overline{f}(\cdot ), (\overline{\varvec{c}}, \overline{r})\) be the solutions learned on \(\overline{S}\). The two groups of solutions are related by Eq. (5), which follows directly from “Preliminaries”.

Eq. (5) shows that:

1. For any input \(\varvec{x}\), the output functions \(f(\cdot )\) and \(\overline{f}(\cdot )\) learned on S and \(\overline{S}\) always output opposite label predictions. Since the label of \(\varvec{x}\) is either 1 or \(-1\) in binary classification, only one of them returns the correct prediction.
2. The two centers \(\varvec{c}\) and \(\overline{\varvec{c}}\) share a static midpoint \(\varvec{A}=[\varvec{0}; \frac{1}{\sqrt{C}}\varvec{\alpha }]\). Here ‘static’ indicates that \(\varvec{A}\) does not depend directly on the sample points.
3. The two radii r and \(\overline{r}\) are equal, hence we simply use r to denote the radius of both hyperspheres \((\varvec{c}, r)\) and \((\overline{\varvec{c}}, r)\). Likewise, \(\varvec{\alpha }\) denotes the vector of Lagrange multipliers of both functions \(f(\cdot )\) and \(\overline{f}(\cdot )\).
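The three observations can be checked numerically on a toy example with explicit, finite-dimensional features. The sketch assumes \(\phi\) is the identity map and picks \(\varvec{\alpha}\) by hand on the probability simplex; it forms \(\varvec{c}=\sum_i\alpha_i\varphi(\varvec{x}_i,y_i)\) and \(\overline{\varvec{c}}=\sum_i\alpha_i\varphi(\varvec{x}_i,-y_i)\) with the same \(\varvec{\alpha}\), as item 3 states, and shows that their midpoint is \([\varvec{0};\varvec{\alpha}/\sqrt{C}]\). All names and numbers are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]

# Hypothetical toy check; phi is taken to be the identity map.


def feature(x: Sequence[float], y: int, i: int, n: int, C: float) -> Vector:
    """varphi(x_i, y_i) = [y_i * phi(x_i); e_i / sqrt(C)] with phi = identity."""
    e = [0.0] * n
    e[i] = 1.0 / math.sqrt(C)
    return [y * v for v in x] + e


def center(points: Sequence[Vector], alpha: Sequence[float]) -> Vector:
    """Convex combination c = sum_i alpha_i * z_i."""
    return [sum(a * p[k] for a, p in zip(alpha, points))
            for k in range(len(points[0]))]


xs = [[0.2, 0.7], [-0.5, 0.1], [0.9, -0.4]]
ys = [1, -1, 1]
alpha = [0.5, 0.3, 0.2]  # non-negative, sums to one
C, n, d = 4.0, len(xs), len(xs[0])

z = [feature(x, y, i, n, C) for i, (x, y) in enumerate(zip(xs, ys))]
z_bar = [feature(x, -y, i, n, C) for i, (x, y) in enumerate(zip(xs, ys))]
c, c_bar = center(z, alpha), center(z_bar, alpha)

midpoint = [(u + v) / 2 for u, v in zip(c, c_bar)]
print(midpoint[:d])  # feature block cancels to zeros
print(midpoint[d:])  # label-free block equals alpha / sqrt(C)
```

Because the feature block flips sign with the labels while the \(\varvec{e}_i/\sqrt{C}\) block does not, the same construction also gives \(d(\varvec{z}^i,\varvec{c})=d(\overline{\varvec{z}}^i,\overline{\varvec{c}})\) for every training point.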

When the new sample point \((\varvec{x}_i, y_i)\) arrives, for \(i=1,2,\ldots \), we use \(\varvec{z}^i\) to denote its feature vector in the RKHS according to the first condition in “Equivalence of SVM and MEB”, that is, \(\varvec{z}^i = \varphi (\varvec{x}_i,y_i) = [y_i\phi (\varvec{x}_i); \varvec{e}_i/\sqrt{C}]\). Its mirror point is \(\overline{\varvec{z}}^i = \varphi (\varvec{x}_i,-y_i) = [-y_i\phi (\varvec{x}_i); \varvec{e}_i/\sqrt{C}]\). A mirror point represents the alternative labeling of a real sample point: it has identical attributes but the opposite class. Since the proposed framework aims to cover the real sample points with the minimum enclosing ball, mirror points serve as a reference: a mirror point should be no nearer to the ball than its real sample point. Let the current balls (hyperspheres in the RKHS) be \((\varvec{c}^{i-1}, r^{i-1})\) and \((\overline{\varvec{c}}^{i-1}, r^{i-1})\), whose centers can be expressed as in Eq. (6) [26].

The distances between \(\varvec{z}^i, \overline{\varvec{z}}^i\) and the two centers \(\varvec{c}^{i-1}, \overline{\varvec{c}}^{i-1}\) are denoted by \(d(\varvec{z}^i, \varvec{c}^{i-1})\), \(d(\varvec{z}^i, \overline{\varvec{c}}^{i-1})\), \(d(\overline{\varvec{z}}^i, \varvec{c}^{i-1})\) and \(d(\overline{\varvec{z}}^i, \overline{\varvec{c}}^{i-1})\), respectively. The relations among these four distances, given in Eq. (7), follow directly from their definitions.

Equation (7) indicates that the distance between a feature vector and the center of a ball equals the distance between the corresponding mirror feature vector and the center of the mirror ball. Therefore, only the two distances between \(\varvec{z}^i, \overline{\varvec{z}}^i\) and one center \(\varvec{c}^{i-1}\), namely \(d(\varvec{z}^i, \varvec{c}^{i-1})\) and \(d(\overline{\varvec{z}}^i, \varvec{c}^{i-1})\), which can be computed by Eq. (8), are used in the following sections, and we use \(d^{\text {min}}\) (\(d^{\text {max}}\)) in Eq. (9) to denote the smaller (larger) of the two.
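Because the incoming point carries a fresh coordinate \(\varvec{e}_i\) on which \(\varvec{c}^{i-1}\) has zero weight, the two squared distances reduce to \(\|\varvec{c}^{i-1}\|^2 \mp 2y_if^{i-1}(\varvec{x}_i)+\kappa_\phi+1/C\), where \(f^{i-1}(\varvec{x})=\sum_j\alpha_jy_jk_\phi(\varvec{x}_j,\varvec{x})\) is the assumed bias-free output. This is our own derivation from the two equivalence conditions, offered as a sketch of what Eq. (8) computes rather than a transcription of it. A useful consequence is that \(\varvec{z}^i\) is the nearer point exactly when \(y_if^{i-1}(\varvec{x}_i)>0\), i.e. when the current model classifies the new point correctly. The Gaussian base kernel and all names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vector = Sequence[float]

# Hypothetical helpers: a sketch of the kernel-form distances, not the paper's Eq. (8).


def rbf(a: Vector, b: Vector, gamma: float = 1.0) -> float:
    """Gaussian base kernel k_phi, so kappa_phi = k_phi(x, x) = 1."""
    return math.exp(-gamma * sum((u - v) ** 2 for u, v in zip(a, b)))


def center_sq_norm(xs: Sequence[Vector], ys: Sequence[int], alpha: Sequence[float],
                   C: float, gamma: float = 1.0) -> float:
    """||c||^2 = sum_jk a_j a_k (y_j y_k k_phi(x_j, x_k) + delta_jk / C)."""
    total = 0.0
    for j, (xj, yj, aj) in enumerate(zip(xs, ys, alpha)):
        for k, (xk, yk, ak) in enumerate(zip(xs, ys, alpha)):
            delta = 1.0 / C if j == k else 0.0
            total += aj * ak * (yj * yk * rbf(xj, xk, gamma) + delta)
    return total


def mirror_distances(x_new: Vector, y_new: int, xs: Sequence[Vector], ys: Sequence[int],
                     alpha: Sequence[float], C: float,
                     gamma: float = 1.0) -> Tuple[float, float]:
    """Return (d(z^i, c), d(z_bar^i, c)) for a new point whose alpha_i is still zero."""
    f = sum(a * y * rbf(xj, x_new, gamma) for xj, y, a in zip(xs, ys, alpha))
    base = center_sq_norm(xs, ys, alpha, C, gamma) + 1.0 + 1.0 / C  # ||c||^2 + kappa_phi + 1/C
    d_z = math.sqrt(max(base - 2.0 * y_new * f, 0.0))
    d_zbar = math.sqrt(max(base + 2.0 * y_new * f, 0.0))
    return d_z, d_zbar


def d_min_max(d_z: float, d_zbar: float) -> Tuple[float, float]:
    """Smaller and larger of the two distances (the roles of d^min and d^max)."""
    return min(d_z, d_zbar), max(d_z, d_zbar)


xs, ys, alpha, C = [[0.0, 0.0], [1.0, 1.0]], [1, -1], [0.6, 0.4], 2.0
d_z, d_zbar = mirror_distances([0.1, 0.0], 1, xs, ys, alpha, C)
print(d_z < d_zbar)  # True: the current model already labels this point correctly
```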

Learning online based on stream data

The framework of this article is depicted in Fig. 1. When the ith sample point \((\varvec{x}_i, y_i)\) of the data stream arrives, feature mapping is first applied to generate the sample point \(\varvec{z}^i\) and the mirror point \(\overline{\varvec{z}}^i\) (circle and triangle in Fig. 1), which then follow one of three paths depending on the situation. Specifically, (1) when both points are outside the current ball (the black one in Fig. 1), “New sample point and its mirror are outside of two balls” describes how to learn a new ball (the red one in Fig. 1) to replace the current one (blue arrows in Fig. 1), and three distinct methods (SCDA-I, SCDA-II and SCDA-III), based on different strategies, are proposed in that section; (2) when one point is inside the current ball and the other is outside, “New sample point and its mirror are inside of separate balls” describes how the new ball is obtained either by shrinking the current ball or by keeping it unchanged (green arrows); (3) when both points are inside, “New sample point and its mirror are inside of intersection area of two balls” explains why the current ball is kept unchanged in this case (orange arrows). The pseudocode is given in Algorithm 1.
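The routing among the three paths depends only on the two distances and the current radius. The sketch below is a hypothetical dispatcher, not a transcription of Algorithm 1; it returns which update the text prescribes, using the boundaries \(r^{i-1}<d^{\text{min}}\), \(d^{\text{min}}\le r^{i-1}<d^{\text{max}}\) and \(d^{\text{max}}\le r^{i-1}\) from the subsections that follow. Ties \(d(\varvec{z}^i,\varvec{c}^{i-1})=d(\overline{\varvec{z}}^i,\varvec{c}^{i-1})\) in the first case are sent to the strategy branch, which is our choice.

```python
from enum import Enum

# Hypothetical names; a sketch of the case analysis described in the text.


class Action(Enum):
    ENLARGE = "enlarge the ball to enclose z (StreamSVM-style, Eq. 10)"
    STRATEGY = "apply S1 / S2 / S3 (SCDA-I / SCDA-II / SCDA-III)"
    NO_UPDATE = "keep the current ball (Eq. 13)"
    SHRINK = "shrink to a maximal inscribed ball (Eq. 14)"


def choose_action(d_z: float, d_zbar: float, r: float) -> Action:
    """Route a new point by comparing r with d(z, c) and d(z_bar, c)."""
    d_min, d_max = min(d_z, d_zbar), max(d_z, d_zbar)
    if r < d_min:                        # both points outside the ball
        return Action.ENLARGE if d_z < d_zbar else Action.STRATEGY
    if r < d_max:                        # exactly one point inside
        return Action.NO_UPDATE if d_z <= r else Action.SHRINK
    return Action.NO_UPDATE              # both inside the intersection region


print(choose_action(3.0, 1.0, 2.0))  # only the mirror point is inside -> SHRINK
```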

New sample point and its mirror are outside of two balls

When the new sample point \(\varvec{z}^i\) and its mirror \(\overline{\varvec{z}}^i\) are both outside the current ball \((\varvec{c}^{i-1}, r^{i-1})\), we further compare their distances to the center and update the model accordingly.

On the one hand, if \(\varvec{z}^i\) is nearer to the current ball than \(\overline{\varvec{z}}^i\) (see Fig. 2), the new ball \((\varvec{c}^{i}, r^{i})\) must be enlarged to enclose \(\varvec{z}^i\). Following StreamSVM [24], we enlarge the ball according to Eq. (10) (see lines 8–9 in Algorithm 1). The derivation of the formulas is given in Appendix B.
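Geometrically, the smallest ball containing both the current ball and a point at distance \(d>r\) from its center has radius \((r+d)/2\), and its center lies on the segment from \(\varvec{c}^{i-1}\) to \(\varvec{z}^i\) at fraction \(\beta=(d-r)/(2d)\). Since \(\varvec{c}^{i}=(1-\beta)\varvec{c}^{i-1}+\beta\varvec{z}^i\), the multipliers become \(\alpha_j\leftarrow(1-\beta)\alpha_j\) and \(\alpha_i\leftarrow\beta\), which keeps \(\varvec{\alpha}\) on the simplex. As far as we know this is the update StreamSVM performs, and we assume Eq. (10) is equivalent; the sketch below uses explicit Euclidean vectors and hypothetical names rather than kernel evaluations.

```python
import math
from typing import List, Tuple

# Hypothetical helper; explicit vectors stand in for RKHS features.


def enlarge_to_enclose(c: List[float], r: float, z: List[float],
                       alpha: List[float]) -> Tuple[List[float], float, List[float]]:
    """Smallest ball containing ball (c, r) and point z, assuming ||z - c|| > r.

    Returns the new center, the new radius and the multipliers with z's weight appended.
    """
    d = math.dist(c, z)
    if d <= r:
        raise ValueError("z is already inside the ball")
    beta = (d - r) / (2.0 * d)          # step along the segment c -> z
    new_c = [(1.0 - beta) * ci + beta * zi for ci, zi in zip(c, z)]
    new_r = (r + d) / 2.0
    new_alpha = [(1.0 - beta) * a for a in alpha] + [beta]
    return new_c, new_r, new_alpha


c, r, alpha = [0.0, 0.0], 1.0, [0.5, 0.5]
new_c, new_r, new_alpha = enlarge_to_enclose(c, r, [3.0, 0.0], alpha)
```

The new sphere touches the old one at the point farthest from \(\varvec{z}^i\) and passes through \(\varvec{z}^i\), which is why the radius can only grow under this rule.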

On the other hand, if \(\varvec{z}^i\) is farther from the current ball than \(\overline{\varvec{z}}^i\), we develop three strategies (denoted ‘S’) to handle this situation:

- S1: enlarge the ball to enclose \(\varvec{z}^i\) in the same way as StreamSVM [24].
- S2: enlarge the ball slightly to either enclose \(\varvec{z}^i\) or keep it nearby.
- S3: keep the radius unchanged and slightly move the center towards \(\varvec{z}^i\) to either enclose it or keep it nearby.

SCDA-I employs the S1 strategy (see line 11 in Algorithm 1) and enlarges the ball as above. To change the current situation, SCDA-II employs the S2 strategy (see line 12 in Algorithm 1) and enlarges the radius as little as possible. If the inequality \(r^{i-1} \geqslant (2\bigstar - 1)d^\text {max}\) holds, the least enlargement that encloses \(\varvec{z}^i\) is given by Eq. (10) (see Fig. 3a). Otherwise, the least enlargement is given by Eq. (11), which takes point \(\varvec{D}\) as the new center and keeps \(\varvec{z}^i\) nearby (see Fig. 3b). Here \(\varvec{D}\) denotes the intersection of the perpendicular bisector of \(\varvec{z}^i\) and \(\overline{\varvec{z}}^i\) with the line segment joining \(\varvec{z}^i\) and \(\varvec{c}^{i-1}\). The derivation of the formulas is given in Appendix C.
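Point \(\varvec{D}\) can be computed directly from its definition. Writing \(\varvec{D}=\varvec{c}^{i-1}+t(\varvec{z}^i-\varvec{c}^{i-1})\) and requiring \(\|\varvec{D}-\varvec{z}^i\|=\|\varvec{D}-\overline{\varvec{z}}^i\|\) gives \(t=(d_z^2-d_{\bar z}^2)/\bigl(2(\varvec{z}^i-\varvec{c}^{i-1})^{\top}(\varvec{z}^i-\overline{\varvec{z}}^i)\bigr)\), with \(d_z=d(\varvec{z}^i,\varvec{c}^{i-1})\) and \(d_{\bar z}=d(\overline{\varvec{z}}^i,\varvec{c}^{i-1})\). When \(\varvec{z}^i\) is the farther point, as in this case, \(0<t<1\), so \(\varvec{D}\) lies on the segment. This is our own derivation with explicit Euclidean vectors and a hypothetical function name; it is not the paper's Eq. (11).

```python
import math
from typing import List

# Hypothetical helper; explicit vectors stand in for RKHS features.


def point_d(c: List[float], z: List[float], z_bar: List[float]) -> List[float]:
    """Intersection of the perpendicular bisector of z, z_bar with segment c -> z."""
    dz2 = sum((zi - ci) ** 2 for zi, ci in zip(z, c))
    dzbar2 = sum((wi - ci) ** 2 for wi, ci in zip(z_bar, c))
    denom = 2.0 * sum((zi - ci) * (zi - wi) for zi, ci, wi in zip(z, c, z_bar))
    if denom <= 0.0:
        raise ValueError("bisector does not cross the segment from c towards z")
    t = (dz2 - dzbar2) / denom          # fraction of the segment c -> z
    return [ci + t * (zi - ci) for ci, zi in zip(c, z)]


c, z, z_bar = [0.0, 0.0], [3.0, 1.0], [-1.0, 1.0]
D = point_d(c, z, z_bar)
print(D)  # approximately [1.0, 0.333...]
```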

The S3 strategy employed in SCDA-III (see line 13 in Algorithm 1) moves the center as little as possible to change the current situation, while keeping the radius unchanged (\(r^i\leftarrow r^{i-1}\)). If the inequality \(r^{i-1}\geqslant \bigstar d^\text {max}\) holds, the least movement that encloses \(\varvec{z}^i\) is given by Eq. (12) (see Fig. 4a); otherwise, the center is moved according to Eq. (11a) and stops at point \(\varvec{D}\), keeping \(\varvec{z}^i\) nearby (see Fig. 4b). The derivation of the formulas is given in Appendix D.
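For the branch in which the center is moved just far enough to enclose \(\varvec{z}^i\) with \(r^i=r^{i-1}\), moving along the segment toward \(\varvec{z}^i\) by \(d-r\) (fraction \(\gamma=(d-r)/d\)) places \(\varvec{z}^i\) exactly on the sphere; in multiplier form, \(\alpha_j\leftarrow(1-\gamma)\alpha_j\) and \(\alpha_i\leftarrow\gamma\). This is a geometric sketch of that branch only, assuming the movement is along the line joining the center and \(\varvec{z}^i\) (the line on which \(\varvec{D}\) also lies); the switching inequality and the exact form of Eq. (12) are not reproduced, and the function name is hypothetical.

```python
import math
from typing import List, Tuple

# Hypothetical helper; explicit vectors stand in for RKHS features.


def move_center(c: List[float], r: float, z: List[float],
                alpha: List[float]) -> Tuple[List[float], List[float]]:
    """Shift c towards z with the radius fixed until z lies on the sphere.

    Assumes ||z - c|| > r; returns the new center and updated multipliers.
    """
    d = math.dist(c, z)
    if d <= r:
        raise ValueError("z is already inside the ball")
    gamma = (d - r) / d                 # fraction of the segment c -> z to travel
    new_c = [(1.0 - gamma) * ci + gamma * zi for ci, zi in zip(c, z)]
    new_alpha = [(1.0 - gamma) * a for a in alpha] + [gamma]
    return new_c, new_alpha


c, r = [0.0, 0.0], 1.0
new_c, new_alpha = move_center(c, r, [3.0, 4.0], [1.0])
```

Unlike enlargement, this step never increases the radius, which is how S3 avoids growing the ball.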

New sample point and its mirror are inside of separate balls

When \(d^{\text {min}}\leqslant r^{i-1} < d^{\text {max}}\), one of the two points (\(\varvec{z}^i\) or \(\overline{\varvec{z}}^i\)) is inside the current ball \((\varvec{c}^{i-1}, r^{i-1})\) and the other is outside. If \(\varvec{z}^i\) is inside and \(\overline{\varvec{z}}^i\) is outside (see Fig. 5), then by Eq. (7) \(\overline{\varvec{z}}^i\) is inside the mirror ball \((\overline{\varvec{c}}^{i-1}, r^{i-1})\). This implies that the new data agree with the current concept and no drift has occurred, so the model need not be updated and the variables are computed according to Eq. (13).

Otherwise, \(\overline{\varvec{z}}^i\) is inside \((\varvec{c}^{i-1}, r^{i-1})\) and \(\varvec{z}^i\) is outside, which indicates that the new data contradict the current concept. The model must therefore be adjusted to remove the contradiction: the ball is shrunk to a maximal inscribed ball according to Eq. (14), so that \(\overline{\varvec{z}}^i\) is no longer enclosed (see Fig. 6). A constant \(\text {COEF}\) ensures that \(\overline{\varvec{z}}^i\) lies slightly outside the new ball (not on its boundary) after the update; \(\text {COEF}\) can be set slightly less than one, e.g. 0.999. The derivation of the formulas is given in Appendix E.
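Geometrically, the largest ball inside \((\varvec{c}^{i-1}, r^{i-1})\) that has \(\overline{\varvec{z}}^i\) on its boundary is tangent to the old sphere at the point farthest from \(\overline{\varvec{z}}^i\); with \(\bar d=d(\overline{\varvec{z}}^i,\varvec{c}^{i-1})\), it has radius \((r^{i-1}+\bar d)/2\) and its center is shifted away from \(\overline{\varvec{z}}^i\) by \((r^{i-1}-\bar d)/2\). The sketch below uses explicit Euclidean vectors and, as an assumption, applies \(\text{COEF}\) to the new radius so that \(\overline{\varvec{z}}^i\) ends up strictly outside. How Eq. (14) applies \(\text{COEF}\), and how the center is re-expressed through \(\varvec{\alpha}\), may differ; the function name is hypothetical.

```python
import math
from typing import List, Tuple

# Hypothetical helper; COEF is assumed to scale the new radius.


def shrink_to_exclude(c: List[float], r: float, z_bar: List[float],
                      coef: float = 0.999) -> Tuple[List[float], float]:
    """Maximal ball inside (c, r) with z_bar on its boundary, radius scaled by coef.

    Assumes 0 < ||z_bar - c|| <= r; coef < 1 pushes z_bar just outside.
    """
    d_bar = math.dist(c, z_bar)
    if d_bar == 0.0 or d_bar > r:
        raise ValueError("z_bar must be inside the ball and away from its center")
    shift = (r - d_bar) / 2.0
    unit = [(ci - wi) / d_bar for ci, wi in zip(c, z_bar)]  # direction away from z_bar
    new_c = [ci + shift * ui for ci, ui in zip(c, unit)]
    new_r = coef * (r + d_bar) / 2.0
    return new_c, new_r


c, r, z_bar = [0.0, 0.0], 2.0, [0.5, 0.0]
new_c, new_r = shrink_to_exclude(c, r, z_bar)
```

Since \(\|\varvec{c}^{i}-\varvec{c}^{i-1}\|+r^{i}\le r^{i-1}\), the shrunk ball always stays inside the old one.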

New sample point and its mirror are inside of intersection area of two balls

When \(d^{\text {max}}\leqslant r^{i-1}\), both \(\varvec{z}^i\) and \(\overline{\varvec{z}}^i\) are inside the ball \((\varvec{c}^{i-1}, r^{i-1})\), and by Eq. (7) they are also inside the ball \((\overline{\varvec{c}}^{i-1}, r^{i-1})\). In other words, they lie in the intersection of the two balls. A nonempty intersection (if it exists) corresponds to an essential region of the RKHS that an MEB has to enclose regardless of how the labels are annotated. Therefore, there is no need to update the model in this case, and the variables are computed according to Eq. (13).

Correlations of SCDA-I, SCDA-II and SCDA-III

SCDA-I, SCDA-II and SCDA-III are three methods based on the SCDA framework, all capable of expanding and shrinking the minimum enclosing ball. When only the mirror point is inside the ball, all three methods shrink the ball. When both points are outside the ball and the sample point is closer, all three methods expand the ball to encompass the sample point. When the mirror point is closer, SCDA-I expands the ball in the same manner as when the sample point is closer, SCDA-II enlarges the radius as little as possible, and SCDA-III keeps the radius constant. Thus, SCDA-I and SCDA-II increase the radius of the ball to varying degrees, whereas SCDA-III does not.

Experiments

Benchmark datasets

To assess the effectiveness of our methods, we used seven synthetic and five real-world binary concept drift datasets (see Footnotes 1–5). To ensure compatibility with SVM models, symbolic features were excluded, and numeric features were scaled to the range \([-1, 1]\) by min–max normalization before the experiments. No further processing was performed, to avoid excessive manipulation that might alter the concept drift in the data or the experimental outcomes. Details of these datasets are given in Table 2.
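The normalization step can be sketched as follows. It assumes the minimum and maximum of each numeric feature are taken over the whole column and that constant columns are mapped to 0; the text specifies neither detail, and the function name is hypothetical.

```python
from typing import List, Sequence

# Hypothetical helper; min and max are assumed to be taken over the full column.


def minmax_to_unit_interval(column: Sequence[float]) -> List[float]:
    """Rescale a numeric feature to [-1, 1]: x' = 2 (x - min) / (max - min) - 1."""
    lo, hi = min(column), max(column)
    if hi == lo:                        # constant feature: map everything to 0
        return [0.0 for _ in column]
    return [2.0 * (x - lo) / (hi - lo) - 1.0 for x in column]


print(minmax_to_unit_interval([10.0, 15.0, 20.0]))  # [-1.0, 0.0, 1.0]
```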

Since the drift types of the real-world datasets are unknown, we use the Massive Online Analysis (MOA) toolbox [3] to generate synthetic datasets with various drift types: gradual, sudden and incremental. Specifically, we use three popular data generators (Sine, SEA and AGR) to generate datasets with either gradual (Sine(g), SEA(g), AGR(g)) or sudden (Sine(s), SEA(s), AGR(s)) drift, and use the Hyperplane generator to create data with incremental drift. Each synthetic dataset comprises 10,000 instances with balanced classes. Sudden drifts are positioned at the 5000th instance, while gradual drifts span from the 5000th to the 6000th instance (a width of 1000). The Hyperplane generator was used with its standard incremental-drift configuration.

Baseline methods

The three methods introduced in this paper employ distinct strategies for manipulating the enclosing ball. SCDA-I enlarges the ball to encapsulate both the prior ball and the incoming sample point. SCDA-II expands and repositions the ball so that it approaches the new sample point. Meanwhile, SCDA-III keeps the radius of the ball unchanged while shifting the ball towards the new sample point until it encompasses it.

StreamSVM StreamSVM is an SVM algorithm that uses a minimum enclosing ball technique to process streaming data. However, its model can only expand the original ball to include both the original ball and new sample points; it cannot shrink.

OSVM-SP This method extends the ball so that it exactly covers the previous ball and the new sample point. Notably, OSVM-SP only ever enlarges the enclosing ball.

Naive Bayes Naive Bayes assumes that attributes are conditionally independent given the label, and estimates the prior and conditional probabilities for each attribute accordingly.

Hoeffding Tree The Hoeffding Tree is an incremental decision tree algorithm designed to learn from a continuous stream of data. It relies on the Hoeffding bound, which limits how far an observed mean can deviate from the true mean, to decide when enough samples have been seen to choose a split attribute.

ADWIN ADWIN functions as an adaptive sliding window algorithm, dynamically altering window size based on data fluctuations. Notably, it shrinks the window size in response to significant data changes and enlarges it when changes are less pronounced.

Performance

Prequential error

This section compares the prequential errors of the three proposed methods and five other methods on twelve datasets. The prequential error at time i, \(P_e(i)\), is used to evaluate the performance of online methods and can be computed by Eq. (15) according to [7]. For the sample point at time k, \(\hat{y}_k\) and \(y_k\) denote the predicted label and the ground truth, respectively. The results are shown in Table 3, where figures in bold mark the best results and figures in parentheses give the prequential error rankings among the eight methods. A lower average rank indicates better performance.
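A common form of the prequential error under the test-then-train protocol is the running mean of the 0-1 loss, \(P_e(i)=\frac{1}{i}\sum_{k=1}^{i}\mathbb{1}[\hat{y}_k\ne y_k]\). The sketch below assumes this unweighted form (no fading factor or sliding window), which may differ from the exact variant in [7]; the function name is hypothetical.

```python
from typing import List, Sequence

# Hypothetical helper; assumes the unweighted running-mean form of P_e(i).


def prequential_error(y_pred: Sequence[int], y_true: Sequence[int]) -> List[float]:
    """Running mean of the 0-1 loss: P_e(i) = (1/i) * sum_{k<=i} [y_hat_k != y_k]."""
    errors: List[float] = []
    mistakes = 0
    for i, (y_hat, y) in enumerate(zip(y_pred, y_true), start=1):
        mistakes += int(y_hat != y)
        errors.append(mistakes / i)
    return errors


print(prequential_error([1, 1, -1, -1], [1, -1, -1, 1]))  # [0.0, 0.5, 0.333..., 0.5]
```

In a test-then-train loop, each \(\hat{y}_k\) is produced before the model is updated with \((\varvec{x}_k, y_k)\).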

The results show that SCDA-I, SCDA-II and SCDA-III achieve strong predictive performance. Notably, SCDA-III achieves the lowest prequential error on all synthetic datasets and on several real-world datasets such as Spam and Electricity. On other real-world datasets, however, either SCDA-I or OSVM-SP slightly outperforms SCDA-III. StreamSVM performs comparatively poorly because its simple principle only ever enlarges the ball. Both the proposed methods and OSVM-SP modify StreamSVM with different strategies. Overall, SCDA-III emerges as the best method. The remaining three methods (Naive Bayes, Hoeffding Tree and ADWIN) yield moderate results. Moreover, for the three proposed methods, the prequential error on datasets with sudden concept drift is generally lower than on datasets with gradual concept drift.

Figures 7 and 8 show how the prequential error evolves as the number of instances increases. SCDA-III has a consistently lower prequential error on nearly all datasets. On the AGR(s) and Sine(s) datasets, the prequential error initially decreases and then stabilizes as data accumulate; when the sudden drift occurs, the error spikes and then gradually stabilizes again. On the AGR(g) dataset, the prequential error initially decreases with increasing instances and then stabilizes. The error increases at the onset of the gradual drift and gradually stabilizes after it ends. For the Sine(g), SEA and Hyperplane datasets, most methods exhibit an initially declining prequential error that later stabilizes. StreamSVM remains largely stable across the synthetic datasets.

SV percent

The second experiment compares the support vectors of StreamSVM, OSVM-SP and the three proposed methods, all of which are SVM based. The percentage of support vectors reflects memory usage and typically increases as the dataset grows. Figure 9 shows the support vector percentages of these five methods on the synthetic datasets only, since the timing and characteristics of their drifts are known.
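Under the assumption that a support vector is a processed point whose Lagrange multiplier is nonzero, the SV percent can be computed as below. The helper name and the numerical tolerance are hypothetical, and `alpha` is assumed to hold one entry per processed point (zero for points that never triggered an update).

```python
from typing import Sequence

# Hypothetical helper; a support vector is assumed to be a point with non-zero alpha.


def sv_percent(alpha: Sequence[float], tol: float = 1e-12) -> float:
    """Percentage of processed points whose Lagrange multiplier is (numerically) non-zero."""
    if not alpha:
        return 0.0
    return 100.0 * sum(1 for a in alpha if abs(a) > tol) / len(alpha)


print(sv_percent([0.4, 0.0, 0.6, 0.0]))  # 50.0
```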

The figure shows that StreamSVM has the fewest support vectors. OSVM-SP has slightly more than StreamSVM but fewer than the proposed methods. Notably, the support vector ratios of the three proposed methods are nearly identical. Combined with the results of the first experiment, the figure indicates significant model changes within the first ten percent of the data, so the support vector proportion in this initial segment is notably high. On datasets such as AGR(s), AGR(g) and Sine(s), drift is particularly prominent within the 50–60% range. There, the drift has a substantial influence on the model, resulting in a higher proportion of support vectors.

Radius

In this section, the minimum enclosing ball radii of the three proposed methods are compared with those of StreamSVM. As the proposed methods aim to improve StreamSVM, it serves as the benchmark, while OSVM-SP, which uses different techniques, is not included in this comparison. Figure 10 shows the radii as the amount of data increases.

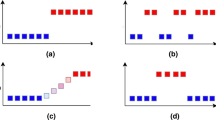

At the first sample point, the minimum enclosing ball is the point itself, so its radius is 0. As subsequent sample points arrive, the radius becomes positive. By the tenth sample point, the radius stabilizes, undergoing only slight adjustments thereafter. SCDA-I, SCDA-II and SCDA-III maintain minimum enclosing balls that can both expand and shrink, so their radii can increase or decrease. SCDA-III exhibits greater volatility, indicating higher sensitivity. The radii of SCDA-I and SCDA-II are nearly identical. In contrast, the radius of StreamSVM only increases with the growing data volume and, as the figure shows, is significantly larger than the others.

Ablation study

The results of the ablation experiment are depicted in Fig. 11. The horizontal axis lists six methods, and the vertical axis shows the prequential error. For each method, the figure summarizes the prequential errors over the 12 datasets outlined in Table 2. The whiskers indicate the range between the upper and lower bounds, the boxes span the first to third quartiles (the middle half of the values), and the red horizontal line marks the median. The symbol ‘+’ along the horizontal axis denotes minimum enclosing ball expansion (corresponding to statement 9 in Algorithm 1), while ‘–’ denotes shrinking (statement 16 in Algorithm 1). ‘None’ denotes no adjustment, ‘Plus’ refers to the expansion method in statement 12 of Algorithm 1, and ‘Move’ refers to the center-moving method in statement 13 of Algorithm 1.

A comparison of the first three methods shows that shrinking the minimum enclosing ball significantly reduces the prequential error. Among the last four methods, the variant without adjustment still has a notably high prequential error. SCDA-I, SCDA-II and SCDA-III all shrink the minimum enclosing ball, and of these SCDA-III has a slightly lower prequential error than the other two. Overall, the figure identifies SCDA-III as the best method.
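The prequential error compared above follows the test-then-train protocol discussed in [7]. Each arriving sample is first used to test the current model and then to train it, and the error is the running fraction of mistakes. The Python sketch below assumes a generic `predict`/`update` interface and uses a toy majority-class learner as a stand-in for the SVM-based models; both names are hypothetical. It uses the plain cumulative average rather than the sliding-window or fading-factor variants, and it is not the evaluation code behind Fig. 11.

```python
from typing import Callable, Iterable, List, Sequence, Tuple


def prequential_error(
    stream: Iterable[Tuple[Sequence[float], int]],
    predict: Callable[[Sequence[float]], int],
    update: Callable[[Sequence[float], int], None],
) -> List[float]:
    """Test-then-train: every sample is predicted before the model learns from it."""
    mistakes = 0
    curve: List[float] = []
    for n, (x, y) in enumerate(stream, start=1):
        if predict(x) != y:          # test on the new sample first ...
            mistakes += 1
        update(x, y)                 # ... then train on the same sample
        curve.append(mistakes / n)   # cumulative prequential error after n samples
    return curve


class MajorityClass:
    """Toy stand-in learner (hypothetical): predicts the most frequent label so far."""

    def __init__(self) -> None:
        self.counts = {+1: 0, -1: 0}

    def predict(self, x: Sequence[float]) -> int:
        return +1 if self.counts[+1] >= self.counts[-1] else -1

    def update(self, x: Sequence[float], y: int) -> None:
        self.counts[y] += 1


stream = [([0.0], +1), ([1.0], +1), ([2.0], -1), ([3.0], -1), ([4.0], -1)]
model = MajorityClass()
curve = prequential_error(stream, model.predict, model.update)
print(curve[-1])  # 0.6: three of the five test-then-train predictions were wrong
```

Because every sample is tested before it is learned, the curve reacts immediately when the concept changes. In this toy stream the error climbs once the labels switch from +1 to -1.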

Performance analysis

The three SCDA methods, StreamSVM and OSVM-SP are all based on transforming the SVM into an MEB problem. StreamSVM enlarges the ball considerably after only a few sample points have arrived, which makes it difficult for subsequent sample points to trigger updates. As shown in Fig. 7b, the StreamSVM curve grows fastest until ten percent of the samples have arrived and then levels off. OSVM-SP, an optimized version of StreamSVM, has a slower-growing curve at first but gradually approaches the StreamSVM curve later on. In contrast, the SCDA methods can both expand and contract the ball and ultimately achieve the lowest prequential error. Fig. 9a shows that StreamSVM has the lowest SV percent, because model updates are rarely triggered in later stages; the SV percent of OSVM-SP is slightly higher. Fig. 10a shows that the StreamSVM radius grows fastest over the first ten sample points and then grows only slowly because few updates are triggered. In contrast, the radii of the SCDA methods, especially SCDA-III, expand and contract flexibly, so the final ball does not become excessively large as it does with StreamSVM.
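The following Python sketch illustrates why an expand-only ball stops triggering updates. It runs the StreamSVM-style update of Eq. (10) (derived in Appendix B) in kernel form over a synthetic stream. The Gaussian kernel (so \(\kappa _\phi = 1\)), \(C = 1\), the random two-dimensional data and the function names are all assumptions for illustration. The sketch does not implement the SCDA contraction rules and is not the authors' code. Samples that receive a nonzero multiplier are counted as support vectors, which is one plausible way to obtain an SV percent.

```python
import math
import numpy as np


def rbf(a, b, gamma=0.5):
    # Gaussian kernel (assumed); k(x, x) = 1 for every x, so kappa_phi = 1.
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return math.exp(-gamma * float(diff @ diff))


def expand_only_meb(X, y, C=1.0, kernel=rbf):
    """Single pass with an expand-only MEB update (hypothetical StreamSVM-style sketch)."""
    sv_x, sv_y, alpha = [], [], []
    radius, c_norm2 = 0.0, 0.0          # r^{i-1} and ||c^{i-1}||^2 in the augmented space
    radii = []
    for x, label in zip(X, y):
        z_norm2 = kernel(x, x) + 1.0 / C              # k~(z, z) = k(x, x) + 1/C
        if not alpha:                                  # first point: the ball is the point itself
            sv_x, sv_y, alpha = [x], [label], [1.0]
            c_norm2 = z_norm2
            radii.append(radius)
            continue
        # <z, c> = sum_j alpha_j y y_j k(x, x_j); the e_i / sqrt(C) parts are orthogonal
        z_dot_c = sum(a * label * yj * kernel(x, xj) for a, xj, yj in zip(alpha, sv_x, sv_y))
        dist = math.sqrt(max(z_norm2 - 2.0 * z_dot_c + c_norm2, 0.0))
        if dist > radius:                              # update only if z lies outside the ball
            beta = 0.5 * (1.0 - radius / dist)         # weight of z in the new center
            # ||(1 - beta) c + beta z||^2, updated incrementally
            c_norm2 = ((1 - beta) ** 2 * c_norm2 + 2 * beta * (1 - beta) * z_dot_c
                       + beta ** 2 * z_norm2)
            alpha = [(1.0 - beta) * a for a in alpha] + [beta]
            sv_x.append(x)
            sv_y.append(label)
            radius = 0.5 * (dist + radius)             # r^i = (d + r^{i-1}) / 2
        radii.append(radius)
    return radii, alpha


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = np.where(X[:, 0] >= 0, 1, -1)
radii, alpha = expand_only_meb(X, y)
sv_percent = 100.0 * len(alpha) / len(X)
print(f"final radius {radii[-1]:.3f}, SV percent {sv_percent:.1f}%")
```

An update fires only when a new point lies outside the current ball, and each update strictly enlarges the radius. The recorded radii therefore can never decrease, which mirrors the monotone StreamSVM curve in Fig. 10.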

Conclusion

This paper introduces the SCDA framework, which builds on SVM and minimum enclosing ball algorithms, and presents three methods derived from it. The minimum enclosing ball in this framework dynamically expands or contracts depending on specific conditions. Experimental analysis shows that the proposed methods achieve better accuracy than the compared approaches. In particular, SCDA-III is the most effective, which highlights the benefit of letting the minimum enclosing ball scale more flexibly.

Future research will focus on several areas. First, this study addresses only binary classification data, so extending the methods to multi-class classification is a meaningful direction. Second, this paper uses class-balanced synthetic datasets, and strategies for handling class imbalance offer an interesting and challenging direction for further study. Finally, the availability of massive data raises the challenge of selecting a small quantity of informative data for learning the model.

References

Baidari I, Honnikoll N (2021) Bhattacharyya distance based concept drift detection method for evolving data stream. Expert Syst Appl 183:115303. https://doi.org/10.1016/j.eswa.2021.115303

Bifet A, Gavalda R (2007) Learning from time-changing data with adaptive windowing. In: Proceedings of the 2007 SIAM international conference on data mining. SIAM, pp 443–448. https://doi.org/10.1137/1.9781611972771.42

Bifet A, Holmes G, Pfahringer B et al (2010) Moa: Massive online analysis, a framework for stream classification and clustering. In: Proceedings of the first workshop on applications of pattern analysis. PMLR, pp 44–50

Butt UA, Amin R, Aldabbas H et al (2023) Cloud-based email phishing attack using machine and deep learning algorithm. Complex Intell Syst 9(3):3043–3070. https://doi.org/10.1007/s40747-022-00760-3

Fu H, Manogaran G, Wu K et al (2020) Intelligent decision-making of online shopping behavior based on internet of things. Int J Inf Manag 50:515–525. https://doi.org/10.1016/j.ijinfomgt.2019.03.010

Gâlmeanu H, Andonie R (2022) Weighted incremental-decremental support vector machines for concept drift with shifting window. Neural Netw 152:528–541. https://doi.org/10.1016/j.neunet.2022.05.018

Gama J, Sebastiao R, Rodrigues PP (2013) On evaluating stream learning algorithms. Mach Learn 90(3):317–346. https://doi.org/10.1007/s10994-012-5320-9

Heusinger M, Raab C, Schleif FM (2022) Passive concept drift handling via variations of learning vector quantization. Neural Comput Appl 34(1):89–100. https://doi.org/10.1007/s00521-020-05242-6

Hu L, Hu C, Huo Z et al (2022) Online support vector machine with a single pass for streaming data. Mathematics 10(17):3113. https://doi.org/10.3390/math10173113

Hu Y, Baraldi P, Di Maio F et al (2017) A systematic semi-supervised self-adaptable fault diagnostics approach in an evolving environment. Mech Syst Signal Process 88:413–427. https://doi.org/10.1016/j.ymssp.2016.11.004

Hulten G, Spencer L, Domingos P (2001) Mining time-changing data streams. In: Proceedings of the seventh ACM international conference on knowledge discovery and data mining, San Francisco USA, pp 97–106. https://doi.org/10.1145/502512.502529

Jain M, Kaur G, Saxena V (2022) A k-means clustering and svm based hybrid concept drift detection technique for network anomaly detection. Expert Syst Appl 193:116510. https://doi.org/10.1016/j.eswa.2022.116510

Kuhn HW, Tucker AW (2014) Nonlinear programming. In: Traces and emergence of nonlinear programming. Springer, pp 247–258

Kurani A, Doshi P, Vakharia A et al (2023) A comprehensive comparative study of artificial neural network (ann) and support vector machines (svm) on stock forecasting. Ann Data Sci 10(1):183–208. https://doi.org/10.1007/s40745-021-00344-x

Li Y, Wang Y, Liu Q et al (2019) Incremental semi-supervised learning on streaming data. Pattern Recognit 88:383–396. https://doi.org/10.1016/j.patcog.2018.11.006

Li Z, Huang W, Xiong Y et al (2020) Incremental learning imbalanced data streams with concept drift: the dynamic updated ensemble algorithm. Knowl Based Syst 195:105694. https://doi.org/10.1016/j.knosys.2020.105694

Lima M, Neto M, Silva Filho T et al (2022) Learning under concept drift for regression—a systematic literature review. IEEE Access 10:45410–45429. https://doi.org/10.1109/ACCESS.2022.3169785

Lin CC, Deng DJ, Kuo CH et al (2019) Concept drift detection and adaption in big imbalance industrial iot data using an ensemble learning method of offline classifiers. IEEE Access 7:56198–56207. https://doi.org/10.1109/ACCESS.2019.2912631

Liu A, Song Y, Zhang G et al (2017) Regional concept drift detection and density synchronized drift adaptation. In: IJCAI international joint conference on artificial intelligence. http://hdl.handle.net/10453/126374. Accessed 8 Jan 2024

Liu A, Lu J, Zhang G (2020) Diverse instance-weighting ensemble based on region drift disagreement for concept drift adaptation. IEEE Trans Neural Netw Learn Syst 32(1):293–307. https://doi.org/10.1109/TNNLS.2020.2978523

Liu A, Zhang G, Wang K et al (2020) Fast switch naïve bayes to avoid redundant update for concept drift learning. In: 2020 International joint conference on neural networks (IJCNN). IEEE, pp 1–7. https://doi.org/10.1109/IJCNN48605.2020.9207077

Lu J, Liu A, Dong F et al (2018) Learning under concept drift: a review. IEEE Trans Knowl Data Eng 31(12):2346–2363. https://doi.org/10.1109/TKDE.2018.2876857

Priya S, Uthra RA (2021) Deep learning framework for handling concept drift and class imbalanced complex decision-making on streaming data. Complex Intell Syst. https://doi.org/10.1007/s40747-021-00456-0

Rai P, Daume H, Venkatasubramanian S (2009) Streamed learning: one-pass svms. In: Proceedings of the 21st international joint conference on artificial intelligence, Pasadena USA, pp 1211–1216

da Silva CA, Krohling RA (2019) Semi-supervised online elastic extreme learning machine with forgetting parameter to deal with concept drift in data streams. In: 2019 International joint conference on neural networks (IJCNN). IEEE, pp 1–8. https://doi.org/10.1109/IJCNN.2019.8852361

Tax DM, Duin RP (2004) Support vector data description. Mach Learn 54(1):45–66

Tsang IW, Kwok JT, Cheung PM et al (2005) Core vector machines: Fast svm training on very large data sets. J Mach Learn Res 6(4)

Wang S, Minku LL, Yao X (2018) A systematic study of online class imbalance learning with concept drift. IEEE Trans Neural Netw Learn Syst 29(10):4802–4821. https://doi.org/10.1109/TNNLS.2017.2771290

Wang Y, Li T (2018) Improving semi-supervised co-forest algorithm in evolving data streams. Appl Intell 48:3248–3262. https://doi.org/10.1007/s10489-018-1149-7

Wang Z, Han D, Li M et al (2022) The abnormal traffic detection scheme based on pca and ssh. Connect Sci 34(1):1201–1220. https://doi.org/10.1080/09540091.2022.2051434

Wen Y, Zhou Q, Xue Y et al (2020) Transfer learning for semi-supervised classification of non-stationary data streams. In: Neural information processing: 27th international conference, ICONIP 2020, Bangkok, Thailand, November 18–22, 2020, Proceedings, Part V 27. Springer, pp 468–477

Yu E, Song Y, Zhang G et al (2022) Learn-to-adapt: concept drift adaptation for hybrid multiple streams. Neurocomputing 496:121–130. https://doi.org/10.1016/j.neucom.2022.05.025

Yu H, Lu J, Zhang G (2020) Continuous support vector regression for nonstationary streaming data. IEEE Trans Cybern 52(5):3592–3605

Yu H, Liu W, Lu J et al (2023) Detecting group concept drift from multiple data streams. Pattern Recognit 134:109113

Yuan S, Wu X (2021) Deep learning for insider threat detection: review, challenges and opportunities. Comput Secur 104:102221. https://doi.org/10.1016/j.cose.2021.102221

Zhang H, Liu W, Shan J et al (2018) Online active learning paired ensemble for concept drift and class imbalance. IEEE Access 6:73815–73828. https://doi.org/10.1109/ACCESS.2018.2882872

Zhang H, Li Y, Lv Z et al (2020) A real-time and ubiquitous network attack detection based on deep belief network and support vector machine. IEEE/CAA J Autom Sin 7(3):790–799. https://doi.org/10.1109/JAS.2020.1003099

Zhou ZH (2021) Machine learning. Springer Nature, Berlin

Acknowledgements

This work is supported by the National Natural Science Foundation of China No. 62002187, Youth Innovation Team of Colleges and Universities in Shandong Province (2023KJ331), the Science Research Project of Hebei Education Department of China under Grant No. QN2023184, the Fundamental Research Project of Shijiazhuang City under Grant No. 241791057A, the Scientific Research and Development Program of Hebei University of Economics and Business under Grant No. 2022ZD10, and the Qilu University of Technology (Shandong Academy of Sciences) Talent Scientific Research Project No. 2023RCKY127.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper. On behalf of all authors, the corresponding author states that there is no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Derivation of the equivalence of SVM and MEB in “Equivalence of SVM and MEB”

As shown in “Support vector machine”, the dual problem of SVM in [9] is listed as follows:

The primal problem of MEB in [26, 27] is given in “Minimum enclosing ball”. Following [9] and applying the KKT theorem [13], the dual problem of MEB can be derived as follows:

Clearly, the constraints and variables in Eqs. (A1b) and (A2b) are the same. The equivalence of SVM and MEB therefore reduces to the equivalence of the two objective functions \(f_{\text {SVM}}\) and \(f_{\text {MEB}}\). According to [24, 27], the equivalence holds if the following two conditions, also listed in “Equivalence of SVM and MEB”, are satisfied. We explain both below.

1. \(\varphi (\varvec{x}_i,y_i) = [y_i\phi (\varvec{x}_i); \varvec{e}_i/\sqrt{C}]\).
2. \(k_\phi (\varvec{x}_i, \varvec{x}_i) = \kappa _\phi , \forall \varvec{x}_i\).

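According to the first condition, the kernel function \(k_\varphi (\varvec{x}_i, \varvec{x}_j)\) is the inner product of \(\varphi (\varvec{x}_i,y_i)\) and \(\varphi (\varvec{x}_j,y_j)\), which gives:

\[ k_\varphi (\varvec{x}_i, \varvec{x}_j) = y_iy_jk_\phi (\varvec{x}_i, \varvec{x}_j) + \frac{\delta _{ij}}{C} \tag{A3} \]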

Here \(\delta _{ij}\) in Eq. (A3) is the Kronecker delta:

\[ \delta _{ij} = \begin{cases} 1, & i = j,\\ 0, & i \ne j. \end{cases} \tag{A4} \]

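Since \(y_i \in \{-1, +1\}\), we have \(y_i^2 = 1\) and hence \(\delta _{ij} = y_iy_j\delta _{ij}\) for any pair i, j. Therefore, the kernel function \(k_\varphi (\varvec{x}_i, \varvec{x}_j)\) in Eq. (A3) can be equivalently written as:

\[ k_\varphi (\varvec{x}_i, \varvec{x}_j) = y_iy_j\left( k_\phi (\varvec{x}_i, \varvec{x}_j) + \frac{\delta _{ij}}{C}\right) \tag{A5} \]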

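In particular, when \(i = j\), the kernel function becomes:

\[ k_\varphi (\varvec{x}_i, \varvec{x}_i) = k_\phi (\varvec{x}_i, \varvec{x}_i) + \frac{1}{C} \tag{A6} \]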

Substituting \(k_\varphi (\varvec{x}_i, \varvec{x}_j)\) and \(k_\varphi (\varvec{x}_i, \varvec{x}_i)\) in \(f_{\text {MEB}}\) according to Eqs. (A5)–(A6) transforms \(f_{\text {MEB}}\) as follows:

In Eq. (A7), the first term equals \(f_{\text {SVM}}\). The second term equals the constant \(\kappa _\phi + 1/C\) by the second condition and the constraints in Eq. (A2b), and this constant does not depend on the variable \(\varvec{\alpha }\). In other words, maximizing \(f_{\text {MEB}}\) is equivalent to maximizing \(f_{\text {SVM}}\).
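The kernel identities of this appendix can be checked numerically. The Python sketch below uses a linear kernel on unit-norm inputs, so \(k_\phi (\varvec{x},\varvec{x}) = 1\) and the second condition holds with \(\kappa _\phi = 1\). It builds the augmented points of the first condition explicitly. It assumes the standard MEB dual objective \(\sum _i\alpha _ik_\varphi (\varvec{x}_i,\varvec{x}_i) - \sum _{i,j}\alpha _i\alpha _jk_\varphi (\varvec{x}_i,\varvec{x}_j)\) from [27] and takes \(f_{\text {SVM}}\) to be the quadratic term \(-\sum _{i,j}\alpha _i\alpha _jk_\varphi (\varvec{x}_i,\varvec{x}_j)\). These are assumed forms, and the exact notation of Eqs. (A1)–(A2) may differ.

```python
import numpy as np

rng = np.random.default_rng(1)
n, C = 6, 2.0
X = rng.normal(size=(n, 3))
X /= np.linalg.norm(X, axis=1, keepdims=True)   # unit rows: k(x, x) = 1, so kappa_phi = 1
y = rng.choice([-1, 1], size=n)
kappa = 1.0

K = X @ X.T                                      # linear kernel k_phi(x_i, x_j) = x_i . x_j
# Row i is z_i = [y_i phi(x_i); e_i / sqrt(C)] (first condition)
Z = np.hstack([y[:, None] * X, np.eye(n) / np.sqrt(C)])
K_aug = Z @ Z.T                                  # k_varphi(x_i, x_j) = <z_i, z_j>


def f_svm(a):
    return -(a @ K_aug @ a)                      # assumed form of the SVM dual objective


def f_meb(a):
    return a @ np.diag(K_aug) - a @ K_aug @ a    # standard MEB dual objective


diffs = []
for _ in range(5):
    a = rng.random(n)
    a /= a.sum()                                 # feasible point: a >= 0, sum(a) = 1
    diffs.append(f_meb(a) - f_svm(a))
print(diffs)  # every entry equals kappa + 1/C = 1.5 up to rounding
```

Because the diagonal of the augmented kernel matrix is constant, the linear term of \(f_{\text {MEB}}\) collapses to \(\kappa _\phi + 1/C\) on the simplex. The two objectives therefore differ only by a constant.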

Appendix B: Derivation of Eq. (10)

According to Fig. 2, the equation \(d(\varvec{z}^i,\varvec{c}^{i-1}) + r^{i-1} = 2r^i\) holds, since the new ball \((\varvec{c}^{i},r^{i})\) must enclose both the old ball \((\varvec{c}^{i-1},r^{i-1})\) and the new sample \(\varvec{z}^i\). This yields Eq. (B 8), which is the radius update in Eq. (10):

\[ r^{i} = \frac{d(\varvec{z}^i,\varvec{c}^{i-1}) + r^{i-1}}{2} \tag{B 8} \]

In addition, the new center \(\varvec{c}^{i}\) lies on the segment between \(\varvec{z}^i\) and \(\varvec{c}^{i-1}\), so the following equation holds:

According to Fig. 2, the distance between the new and old centers is \(d(\varvec{c}^{i},\varvec{c}^{i-1}) = d(\varvec{z}^i,\varvec{c}^{i-1}) - r^{i}\), which becomes \(d(\varvec{c}^{i},\varvec{c}^{i-1}) = (d(\varvec{z}^i,\varvec{c}^{i-1}) - r^{i-1})/2\) by Eq. (B 8). Combining Eq. (B 9) with this distance, the new center \(\varvec{c}^{i}\) can be equivalently written as:

According to “Exchange of positive and negative annotations”, the two centers can be expressed in terms of the Lagrange multipliers as \(\varvec{c}^{i} = \sum _{j=1}^{i}\alpha _j^{i}\varvec{z}^j\) and \(\varvec{c}^{i-1} = \sum _{j=1}^{i-1}\alpha _j^{i-1}\varvec{z}^j\). Substituting these into Eq. (B 10) gives the following equation in the Lagrange multipliers:

Since Eq. (B 11) must hold for arbitrary \(\varvec{z}^j\), matching the coefficients of each \(\varvec{z}^j\) yields the equivalent form in Eq. (B 12), which is the multiplier update in Eq. (10).
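The Python sketch below checks the Appendix B update with explicit vectors. The step size \(d(\varvec{c}^{i},\varvec{c}^{i-1})/d(\varvec{z}^i,\varvec{c}^{i-1}) = (1 - r^{i-1}/d(\varvec{z}^i,\varvec{c}^{i-1}))/2\) follows from the distances above. The points, the old multipliers and the helper name `expand_ball` are hypothetical. Writing the multipliers as "old ones scaled by \(1-\beta \), new one equal to \(\beta \)" is our reading of the coefficient matching, not a quotation of Eq. (B 12).

```python
import numpy as np


def expand_ball(c_prev, r_prev, z):
    """Smallest ball enclosing the ball (c_prev, r_prev) and the point z (Appendix B)."""
    d = np.linalg.norm(z - c_prev)
    if d <= r_prev:                    # z already inside: no update
        return c_prev, r_prev, 0.0
    beta = 0.5 * (1.0 - r_prev / d)    # d(c^i, c^{i-1}) / d(z^i, c^{i-1})
    c_new = c_prev + beta * (z - c_prev)
    r_new = 0.5 * (d + r_prev)         # Eq. (B 8)
    return c_new, r_new, beta


Z_old = np.array([[0.0, 0.0], [2.0, 0.0], [0.0, 2.0]])
alpha_old = np.array([0.5, 0.25, 0.25])
c_prev = alpha_old @ Z_old             # c^{i-1} = sum_j alpha_j^{i-1} z^j = [0.5, 0.5]
r_prev = 1.0
z = np.array([4.0, 0.5])

c_new, r_new, beta = expand_ball(c_prev, r_prev, z)
alpha_new = np.append((1.0 - beta) * alpha_old, beta)   # old multipliers shrink, z gets beta
# Mathematically: c_new = [1.75, 0.5], r_new = 2.25
```

The new sample lands exactly on the new boundary, and the old ball touches the new one from inside. The multipliers still sum to one.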

Appendix C: Derivation of Eqs. (11a)–(11c)

The new point \(\varvec{z}^i\) and its mirror \(\overline{\varvec{z}}^i\) are vertices of the triangle \(\Delta \varvec{c}^{i-1}\varvec{z}^i\overline{\varvec{z}}^i\) in Fig. 3b. According to “Exchange of positive and negative annotations”, they can be written as \(\varvec{z}^i = [y_i\phi (\varvec{x}_i); \varvec{e}_i/\sqrt{C}]\) and \(\overline{\varvec{z}}^i = [-y_i\phi (\varvec{x}_i); \varvec{e}_i/\sqrt{C}]\). Therefore, one side of the triangle has length \(d(\varvec{z}^i,\overline{\varvec{z}}^i) = 2\sqrt{\kappa _\phi }\), and the other two sides are denoted \(d(\varvec{z}^i,\varvec{c}^{i-1}) = d^{\text {max}}\) and \(d(\overline{\varvec{z}}^i,\varvec{c}^{i-1}) = d^{\text {min}}\). Let \(\theta \) denote the angle \(\angle \varvec{c}^{i-1}\varvec{z}^i\overline{\varvec{z}}^i\). By the law of cosines, we have:

\[ (d^{\text {min}})^2 = (d^{\text {max}})^2 + 4\kappa _\phi - 4\sqrt{\kappa _\phi }\,d^{\text {max}}\cos \theta , \quad \text {i.e.,}\quad \cos \theta = \frac{(d^{\text {max}})^2 + 4\kappa _\phi - (d^{\text {min}})^2}{4\sqrt{\kappa _\phi }\,d^{\text {max}}} \tag{C 13} \]

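For the triangle \(\Delta \varvec{E}\varvec{z}^i\varvec{D}\) in Fig. 3b, the side length is \(d(\varvec{E},\varvec{z}^i) = \sqrt{\kappa _\phi }\) because \(\varvec{E}\) is the midpoint of \(\varvec{z}^i\) and \(\overline{\varvec{z}}^i\). The angle \(\angle \varvec{E}\varvec{z}^i\varvec{D}\) equals \(\theta \) because it is the same angle as \(\angle \varvec{c}^{i-1}\varvec{z}^i\overline{\varvec{z}}^i\). Since \(\varvec{D}\) lies on the perpendicular to the segment \(\varvec{z}^i\overline{\varvec{z}}^i\) through \(\varvec{E}\), the angle at \(\varvec{E}\) is a right angle. Thus we have:

\[ d(\varvec{D},\varvec{z}^i) = \frac{\sqrt{\kappa _\phi }}{\cos \theta } = \frac{4\kappa _\phi \, d^{\text {max}}}{(d^{\text {max}})^2 + 4\kappa _\phi - (d^{\text {min}})^2} \tag{C 14} \]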

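From \(d(\varvec{D},\varvec{z}^i)\) above and \(d(\varvec{z}^i,\varvec{c}^{i-1}) = d^{\text {max}}\), the distance \(d(\varvec{D}, \varvec{c}^{i-1})\) is obtained as follows:

\[ d(\varvec{D}, \varvec{c}^{i-1}) = d^{\text {max}} - d(\varvec{D},\varvec{z}^i) = d^{\text {max}} - \frac{4\kappa _\phi \, d^{\text {max}}}{(d^{\text {max}})^2 + 4\kappa _\phi - (d^{\text {min}})^2} \tag{C 15} \]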

According to Fig. 3b, \(\varvec{D}\) is the intersection of the perpendicular to \(\varvec{z}^i\overline{\varvec{z}}^i\) through its midpoint \(\varvec{E}\) with the line segment joining \(\varvec{z}^i\) and \(\varvec{c}^{i-1}\). Hence, if the new center is located at \(\varvec{D}\), it is equidistant from \(\varvec{z}^i\) and \(\overline{\varvec{z}}^i\), so the sample \(\varvec{z}^i\) stays close to the new center. The new center \(\varvec{c}^{i}\) is related to the old one \(\varvec{c}^{i-1}\) by the following equation.

Substituting \(\varvec{c}^{i} = \sum _{j=1}^{i}\alpha _j^{i}\varvec{z}^j\), \(\varvec{c}^{i-1} = \sum _{j=1}^{i-1}\alpha _j^{i-1}\varvec{z}^j\) and \(d(\varvec{D}, \varvec{c}^{i-1})\) from Eq. (C 15) into Eq. (C 16) gives the following equation in the Lagrange multipliers.

Since Eq. (C 17) must hold for arbitrary \(\varvec{z}^j\), matching coefficients yields the equivalent form in Eq. (C 18), which is the multiplier update in Eq. (11a). Here \(\bigstar \) equals \(4\kappa _\phi / ((d^\text {max})^2 + 4\kappa _\phi - (d^\text {min})^2)\), as given in Eq. (11c).

According to Fig. 3b, the new radius \(r^i\) equals the sum of the old radius \(r^{i-1}\) and \(d(\varvec{D}, \varvec{c}^{i-1})\). Combining this with Eq. (C 15) and the notation \(\bigstar \) gives Eq. (C 19), which is the radius update in Eq. (11b):

\[ r^{i} = r^{i-1} + (1 - \bigstar )\, d^{\text {max}} \tag{C 19} \]
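The Python sketch below checks the geometry of Appendix C numerically. It uses a toy three-dimensional embedding in which \(\phi (\varvec{x}) = [1, 0]\) (so \(\kappa _\phi = 1\)) and the \(\varvec{e}_i/\sqrt{C}\) block is collapsed to a single coordinate. The old center and radius are arbitrary hypothetical values. The code computes \(\bigstar \), the point \(\varvec{D}\) on the segment from \(\varvec{z}^i\) to \(\varvec{c}^{i-1}\) with \(d(\varvec{D},\varvec{z}^i) = \bigstar d^{\text {max}}\), and the radius of Eq. (C 19).

```python
import numpy as np


def mirror_step(c_prev, r_prev, z, z_bar, kappa):
    """Point D and the radius update of Appendix C (hypothetical helper)."""
    d_max = np.linalg.norm(z - c_prev)
    d_min = np.linalg.norm(z_bar - c_prev)
    star = 4.0 * kappa / (d_max**2 + 4.0 * kappa - d_min**2)   # Eq. (11c)
    D = z + star * (c_prev - z)               # on segment z -> c^{i-1}, d(D, z) = star * d_max
    r_new = r_prev + (1.0 - star) * d_max     # Eq. (C 19)
    return D, r_new, star


C = 1.0
kappa = 1.0                                          # phi(x) = [1, 0] gives k(x, x) = 1
z = np.array([1.0, 0.0, 1.0 / np.sqrt(C)])           # [y phi(x); e_i / sqrt(C)] with y = +1
z_bar = np.array([-1.0, 0.0, 1.0 / np.sqrt(C)])      # mirror: label flipped
c_prev = np.array([-0.5, 0.8, 0.2])                  # arbitrary old center (assumption)
r_prev = 0.5
D, r_new, star = mirror_step(c_prev, r_prev, z, z_bar, kappa)
# Here d_max^2 = 3.53, d_min^2 = 1.53, so star = 4 / 6
```

The check confirms that \(\varvec{D}\) is equidistant from \(\varvec{z}^i\) and its mirror, which is the property used to keep the new sample close to the new center.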

Appendix D: Derivation of Eq. (12)

According to Fig. 4a, the center moves from the old center \(\varvec{c}^{i-1}\) towards \(\varvec{z}^i\) and stops at \(\varvec{c}^{i}\) once \(\varvec{z}^i\) is enclosed. The radius is kept unchanged, i.e., \(r^i = r^{i-1}\), so \(\varvec{z}^i\) ends up on the boundary of the new ball. Therefore, the distance of movement is:

\[ d(\varvec{c}^{i},\varvec{c}^{i-1}) = d(\varvec{z}^i,\varvec{c}^{i-1}) - r^{i-1} \tag{D 20} \]

Once the distance between the old and new centers is known, the new center \(\varvec{c}^{i}\) can be computed from the old one \(\varvec{c}^{i-1}\) as follows.

Substituting \(\varvec{c}^{i} = \sum _{j=1}^{i}\alpha _j^{i}\varvec{z}^j\), \(\varvec{c}^{i-1} = \sum _{j=1}^{i-1}\alpha _j^{i-1}\varvec{z}^j\) and \(d(\varvec{c}^{i-1}, \varvec{c}^{i})\) from Eq. (D 20) into Eq. (D 21) gives the following equation in the Lagrange multipliers.

Since Eq. (D 22) must hold for arbitrary \(\varvec{z}^j\), matching coefficients yields the equivalent form in Eq. (D 23), which is the multiplier update in Eq. (12).
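The Python sketch below illustrates the translation step of Appendix D. The center moves along the line towards \(\varvec{z}^i\) by the distance in Eq. (D 20) while the radius stays fixed. The points and the helper name `move_ball` are hypothetical. The multiplier form (old multipliers scaled by \(r^{i-1}/d(\varvec{z}^i,\varvec{c}^{i-1})\), new multiplier \(1 - r^{i-1}/d(\varvec{z}^i,\varvec{c}^{i-1})\)) is our own derivation from the center equation, not a quotation of Eq. (D 23).

```python
import numpy as np


def move_ball(c_prev, r_prev, z):
    """Translate the center toward z, keeping the radius (Appendix D)."""
    d = np.linalg.norm(z - c_prev)
    if d <= r_prev:                       # z already enclosed: nothing to do
        return c_prev, 0.0
    shift = d - r_prev                    # Eq. (D 20): d(c^i, c^{i-1})
    c_new = c_prev + (shift / d) * (z - c_prev)
    return c_new, shift / d               # shift / d = 1 - r_prev / d is the weight of z


Z_old = np.array([[1.0, 0.0], [-1.0, 0.0]])
alpha_old = np.array([0.5, 0.5])
c_prev = alpha_old @ Z_old                # [0, 0]
r_prev = 1.0
z = np.array([3.0, 4.0])                  # d(z, c_prev) = 5
c_new, w = move_ball(c_prev, r_prev, z)
alpha_new = np.append((1.0 - w) * alpha_old, w)   # old multipliers scale by r_prev / d
# Mathematically: c_new = [2.4, 3.2] and w = 0.8
```

Unlike the expansion of Appendix B, this step leaves the radius untouched. The old ball is no longer fully enclosed, but the ball does not grow.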

Appendix E: Derivation of Eq. (14)

Comparing the diameters of the black and red dashed balls in Fig. 6 shows that the equation \(2r^{i-1} - 2\tilde{r}^{i} = r^{i-1} - d(\overline{\varvec{z}}^{i}, \varvec{c}^{i-1})\) holds. Therefore, the radius \(\tilde{r}^{i}\) of the red dashed ball is:

\[ \tilde{r}^{i} = \frac{r^{i-1} + d(\overline{\varvec{z}}^{i}, \varvec{c}^{i-1})}{2} \tag{E 24} \]

However, like the old (black) ball, the red dashed ball still encloses the point \(\overline{\varvec{z}}^{i}\), so the problem is not yet solved. Slightly decreasing the radius leaves the point outside as required. Thus the radius of the new ball (the red solid one in Fig. 6) is given by Eq. (E 25), which is the radius update in Eq. (14):

\[ r^{i} = \text {COEF}\cdot \tilde{r}^{i} \tag{E 25} \]

Here \(\text {COEF}\) is a constant that ensures the sample \(\overline{\varvec{z}}^i\) lies slightly outside the new ball rather than on it. As mentioned in “New sample point and its mirror are inside of separate balls”, it can be set slightly less than one.

Since the diameter of the red dashed ball consists of three segments, the following equation holds.

Substituting \(\tilde{r}^{i}\) and \(r^{i}\) from Eqs. (E 24)–(E 25) into Eq. (E 26) gives the distance \(d(\varvec{c}^{i},\varvec{c}^{i-1})\) between the new and old centers:

Once the distance between the old and new centers is known, the new center \(\varvec{c}^{i}\) can be computed from the old one \(\varvec{c}^{i-1}\) as follows.

Substituting \(\varvec{c}^{i} = \sum _{j=1}^{i-1}\alpha _j^{i}\varvec{z}^j + \alpha _i^{i}\overline{\varvec{z}}^i\), \(\varvec{c}^{i-1} = \sum _{j=1}^{i-1}\alpha _j^{i-1}\varvec{z}^j\) and \(d(\varvec{c}^{i},\varvec{c}^{i-1})\) from Eq. (E 27) into Eq. (E 28) gives the following equation in the Lagrange multipliers.

Since Eq. (E 29) must hold for arbitrary \(\varvec{z}^j\) and \(\overline{\varvec{z}}^i\), matching coefficients yields the equivalent form in Eq. (E 30), which is the multiplier update in Eq. (14). Here \(\spadesuit \) equals \(\text {COEF}/2 + (\text {COEF}-2)r^{i-1}/(2d^{\text {min}})\), as also given in Eq. (14).
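The Python sketch below illustrates the contraction of Appendix E. It assumes that \(d^{\text {min}} = d(\overline{\varvec{z}}^{i}, \varvec{c}^{i-1})\) as in Appendix C and that the new center is \(\varvec{c}^{i} = (1-\spadesuit )\varvec{c}^{i-1} + \spadesuit \overline{\varvec{z}}^i\). That is our reading of the multiplier update with \(\spadesuit \) as the weight of \(\overline{\varvec{z}}^i\). The points, \(\text {COEF} = 0.9\) and the helper name `shrink_ball` are hypothetical.

```python
import numpy as np


def shrink_ball(c_prev, r_prev, z_bar, coef=0.9):
    """Contract the ball so that z_bar ends up slightly outside (Appendix E)."""
    d_min = np.linalg.norm(z_bar - c_prev)                 # d(z_bar^i, c^{i-1}) < r_prev
    r_tilde = 0.5 * (r_prev + d_min)                       # Eq. (E 24)
    r_new = coef * r_tilde                                 # Eq. (E 25)
    spade = coef / 2 + (coef - 2) * r_prev / (2 * d_min)   # weight of z_bar^i
    c_new = (1.0 - spade) * c_prev + spade * z_bar
    return c_new, r_new, spade


Z_old = np.array([[1.0, 0.0], [-1.0, 0.0]])
alpha_old = np.array([0.5, 0.5])
c_prev = alpha_old @ Z_old                 # [0, 0]
r_prev = 1.0
z_bar = np.array([0.5, 0.0])               # mirror point inside the old ball
c_new, r_new, spade = shrink_ball(c_prev, r_prev, z_bar)
alpha_new = np.append((1.0 - spade) * alpha_old, spade)
# Mathematically: r_new = 0.675, spade = -0.65, c_new = [-0.325, 0]
```

With these numbers \(\spadesuit \) is negative, so the center moves away from \(\overline{\varvec{z}}^i\). The new ball stays inside the old one (it touches it at a single point), while \(\overline{\varvec{z}}^i\) ends up strictly outside.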

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hu, L., Li, W., Lu, Y. et al. Scalable concept drift adaptation for stream data mining. Complex Intell. Syst. (2024). https://doi.org/10.1007/s40747-024-01524-x

DOI: https://doi.org/10.1007/s40747-024-01524-x