Abstract

Intelligent monitoring technology plays an important role in promoting coal mine safety management. Low illumination in underground coal mines makes monitoring images difficult to recognize and reduces personnel detection accuracy. To alleviate this problem, a low-illumination image enhancement method is proposed for personnel safety monitoring in underground coal mines. Specifically, the local enhancement module maps low illumination to normal illumination at the pixel level, preserving image details as much as possible. The transformer-based global adjustment module is applied to the locally enhanced images to avoid over-enhancement of bright areas and under-enhancement of dark areas, and to prevent possible color deviations in the enhancement process. In addition, a feature similarity loss is proposed to constrain the similarity of target features and avoid the possible detrimental effect of enhancement on detection. Experimental results show that the proposed method improves the detection accuracy by 7.1% on the coal mine underground personnel dataset, obtaining the highest accuracy among the compared methods. The proposed method effectively improves the visual quality and detection performance of low-light images, which contributes to personnel safety monitoring in underground coal mines.

Introduction

China is the largest producer and consumer of coal in the world [1], and safe coal mining is critical to energy security and economic stability [2]. Studies have shown that operational violations and mismanagement are the direct causes of coal mine safety accidents [3, 4]. With the development of computer technology, intelligent safety management in coal mines has become an inevitable trend [5]. Video monitoring technology is increasingly applied and plays an important role in coal mine safety management. However, as shown in Fig. 1, the low brightness and contrast of the monitoring images make targets difficult to recognize. The main reasons are as follows. Although artificial lighting is used throughout the day in underground tunnels, it is clearly insufficient compared to natural light, and places far from the light source or obscured by equipment are very dim. Low image brightness and contrast not only lead to blurred targets that are difficult to recognize, but also seriously affect feature extraction and analysis in subsequent high-level vision tasks. At the same time, the regions near the light source are affected by dust, which results in local overexposure and whitening. The simultaneous presence of overexposure and underexposure makes the image enhancement task more difficult. Therefore, low-light image enhancement in underground coal mines has important research and application value for improving video monitoring quality and detection capability.

Low-light enhancement algorithms include traditional methods and deep learning-based methods. Traditional image enhancement methods mainly rely on histogram equalization, Retinex theory, and frequency domain processing. Histogram equalization methods can expand the dynamic range of gray-scale pixel values and improve the overall brightness and contrast of the image, but they are prone to color distortion. Methods based on Retinex theory not only improve the brightness and contrast of low-light images, but also have significant advantages for color images. However, they are prone to severe texture distortion. In the frequency domain, image enhancement methods based on the wavelet transform have advantages in the analysis and enhancement of image details. However, these methods may amplify noise in the images, cannot avoid over-enhancement, and require manual tuning of the transform parameters.

Deep learning-based low-light enhancement methods have received increasing attention because they offer better accuracy and robustness than traditional methods. Most of them are based on supervised learning, which requires paired low-light/normal-light datasets. It is impractical to collect large amounts of paired image data from underground coal mines. Moreover, synthetic images cannot accurately represent real-world low-light conditions, such as spatial variations and varying levels of noise, so models trained on them generalize poorly to real-world low-light images. In addition, enhancing the low-light image before performing detection is a logical strategy. However, because image enhancement and object detection have different objectives, the enhanced images may contain noise that is invisible to the human eye but detrimental to subsequent object detection models. Furthermore, most algorithms with good enhancement results are too slow for real-time detection.

We design a lightweight low-light image enhancement model and combine it with YOLOv5s for personnel monitoring in underground coal mines. The main contributions of this work are as follows:

(1) A zero-reference learning-based low-light image enhancement method is proposed. It enhances the brightness, contrast and color fidelity of images under low-light conditions while preserving the spatial details of the image as much as possible. It also addresses the over-enhancement of bright areas and the under-enhancement of dark areas.

(2) A feature similarity loss is proposed to ensure that the features of the enhanced image extracted by the detection backbone are similar to those of the original image, avoiding noise introduced by image enhancement that is unfavorable to subsequent object detection.

(3) Experimental results on the coal mine underground personnel dataset show that the proposed method not only enhances the quality of monitoring images under low illumination conditions, but also improves the object detection accuracy. The performance is superior to existing state-of-the-art methods and satisfies the requirements for personnel safety detection in underground coal mines.

Related work

Traditional image enhancement

Traditional low-light enhancement methods include histogram equalization methods [6], frequency domain methods [7,8,9] and methods based on Retinex theory [10, 11]. Histogram equalization enhances images with a small dynamic range of gray values by changing the distribution of gray values. The method is simple and effective, but easily leads to color distortion and noise amplification. Retinex-based methods split a low-illuminance image into a reflectance component and an illuminance component using specific priors or regularization. The estimated reflectance component is taken as the enhancement result. This approach can lead to unrealistic enhancement effects, such as loss of details and color distortion. In addition, Retinex-based methods require a complex optimization process with a long running time. Frequency domain-based enhancement methods include homomorphic filtering and the wavelet transform. The former is computationally expensive and lacks adaptivity. The advantage of the wavelet transform is local analysis. It has excellent local characteristics in both the spatial and frequency domains, which is beneficial for analyzing and highlighting image details. Its disadvantages are over-enhancement and amplification of noise.

Deep learning-based image enhancement

The mainstream of supervised learning-based image enhancement introduces Retinex theory into deep learning methods. These methods enhance the illumination and reflectance components separately using specialized sub-networks, such as Retinex-Net [12]. In order to reduce the computational burden, Guo et al. built LightenNet [13], a lightweight network that consists of only four layers. Zhang et al. developed KinD [14] and KinD++ [15], which include three subnetworks for layer decomposition, reflectance recovery, and illuminance adjustment. These methods can achieve good performance. In addition, several methods use the recently emerged transformer model. Cai et al. proposed Retinexformer [16], the first single-stage network based on the vision transformer and Retinex, to compensate for the limitations of convolutional neural networks in capturing long-range dependencies. Due to the high computational cost of the vision transformer, more approaches use hybrid CNN-transformer algorithms. Xu et al. [17] proposed an SNR-aware low-illumination image enhancement method. The method constructs a signal-to-noise ratio prior and performs transformer-based long-range operations on image regions with a low signal-to-noise ratio and CNN-based short-range operations on the other regions. Zhang et al. proposed the LRT network [18] to achieve progressive image recovery and designed a transformer-based angle transformer block to model global dependencies.

The above supervised learning-based methods face the following issues. Deep models trained on synthetic data may introduce artifacts and color bias when processing real low-light images because of the differences between synthetic and real data. In addition, real paired low-light and normal-light data are difficult to obtain on a large scale and rarely cover the full variety of low-light conditions and noise types. Therefore, some methods adopt unsupervised learning, reinforcement learning, semi-supervised learning, or zero-reference learning to bypass these challenges. Jiang et al. proposed EnlightenGAN [19], which is based on unsupervised learning. It uses an attention-guided U-Net as the generator together with a global–local discriminator. To ensure that the enhancement result looks like a real normal-light image, global–local self-feature preservation is key to the design. Yu et al. built the DeepExposure network [20] based on reinforcement learning to achieve enhancement by fusing images with different exposures. Yang et al. proposed the semi-supervised DRBN network [21], which recovers the linear band representation of the enhanced image under supervised learning and recombines the given bands based on unsupervised adversarial learning. To compensate for limited generalization ability and avoid unstable training, Li et al. proposed Zero-DCE [22] and Zero-DCE++ [23], which are zero-reference learning-based image enhancement networks. These two networks learn light mapping relationships from the training images alone.

Underground coal mine image enhancement and object detection methods

Dai et al. [24] proposed an adaptive weighted multiscale Retinex image enhancement method to improve the image quality of coal mine belt conveyors. An improved multiscale template matching algorithm was designed by combining the frame difference method and the area method to detect large foreign objects on coal mine belts. Guo et al. [25] proposed a lightweight conveyor belt damage detection model based on computer vision. The performance and the size of the proposed deep neural network are balanced by fusing feature-level and response-level knowledge distillation. To solve the problems of low detection accuracy and poor real-time performance of coal flow detection in complex underground environments, Ye et al. [26] proposed an adaptive focused target feature fusion network based on YOLOX. Xu et al. [27] proposed a computer vision-based safety detection method for moving targets in underground coal mines. The low-frequency coefficients after the wavelet transform are processed by a dark primary color deblurring algorithm, and the high-frequency coefficients are processed by a semi-soft threshold filtering method. The two sets of coefficients are then fused to reconstruct the enhanced image.

The proposed method

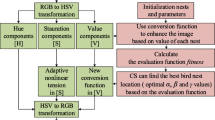

Most deep learning-based low-light enhancement methods generate pixel outputs directly. Such methods rely on large parameterized models and tend to overfit. Networks based on paired datasets, adversarial learning, or Retinex theory are usually difficult to train and have limited robustness. Inspired by the previous work [22], we develop a zero-reference learning-based low-light image enhancement model. Zero-reference learning is better able to preserve the details of the input image than the common end-to-end and Retinex-based enhancement approaches, because the pixel signals only need to be adjusted rather than regenerated. More importantly, the model is lightweight and requires no reference during training. The proposed low-illumination image enhancement model is shown in Fig. 2. It consists of two branches: local pixel-level enhancement and transformer-based global adjustment.

Structure of proposed low-light image enhancement model, containing two branches: local pixel-level enhancement and transformer-based global adjustment. The input low illumination image is firstly enhanced with local pixel-level illumination, and then color and illumination adjustments are performed by the global branch to obtain the final enhanced image

Local pixel-level enhancement

In the local branch, we focus on estimating pixel-level illumination mapping relationships to correct the effects of illumination. Instead of downsampling followed by upsampling, the resolution of the input is maintained throughout to preserve image details and avoid affecting the subsequent detection results. The local pixel-level enhancement module consists of seven convolutional layers. The last convolutional layer uses the Tanh activation function and the remaining layers use the ReLU activation function.

Compared to the previous work [22], we use a reciprocal-based illumination mapping function instead of the quadratic iterative function. The light mapping function based on the quadratic curve can be expressed as:

where \(L(I_{X} )\) denotes the result after local illumination enhancement for a given input \(I_{X}\), \(X\) denotes the pixel coordinates, \(\alpha\) is obtained by deep network training which can adjust the magnitude of the mapping curve and control the exposure level. The light mapping curve based on quadratic iteration can be expressed as:

where \(n\) denotes the number of iterations, \(A\) is a parameter map with the same size as the input image.
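The quadratic curve described here matches the light-enhancement curve of Zero-DCE [22]. Assuming that form, and transcribing it into the notation of this section, the single-step and iterative mappings can be written as follows. The subscript on \(A\) and the range \(\alpha \in [-1, 1]\) are taken from Zero-DCE and are assumptions with respect to this text.

```latex
% Single-step quadratic mapping (assumed Zero-DCE form), with \alpha \in [-1, 1]
L(I_{X}) = I_{X} + \alpha \, I_{X} \, (1 - I_{X})

% Iterative version: A_{n} is the pixel-wise parameter map used at iteration n
L_{n}(I_{X}) = L_{n-1}(I_{X}) + A_{n}(X) \, L_{n-1}(I_{X}) \, \bigl(1 - L_{n-1}(I_{X})\bigr),
\qquad L_{0}(I_{X}) = I_{X}
```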

The reciprocal-based adaptive light mapping function can be expressed as:

Since \(\alpha\) is a pixel-level parameter, each pixel of the input image has a best-fit curve to adjust dynamic range. The pixel-level light mapping relation can be expressed as:

where \(A_{\alpha}\) is a parameter map with the same size as the input image.

The adaptive light mapping function curves are shown in Fig. 3. The quadratic curve cannot cover the whole [0,1] × [0,1] space when iterated 4 times, whereas its representation range expands to the whole [0,1] × [0,1] space when iterated 8 times. In other words, increasing the iteration number \(n\) expands the representation range. This explains why a larger iteration number \(n\) produces brighter results in [22]. However, a higher number of iterations leads to increased computation, more parameters, more difficult training and tuning, and longer inference time. Compared with quadratic iterative functions, the reciprocal curve has a larger representation range. More importantly, the reciprocal-based light mapping curves can process images without iteration and are therefore lightweight and robust.
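The effect of the iteration number on the quadratic curve can be checked numerically. The sketch below is a simplification and not the authors' implementation: it assumes the Zero-DCE form \(x + \alpha x (1 - x)\) and reuses one scalar \(\alpha\) at every iteration, whereas the real model predicts a separate pixel-wise map per iteration. The function name `quadratic_curve` is hypothetical.

```python
def quadratic_curve(x, alpha, n=1):
    """Apply the quadratic mapping x + alpha * x * (1 - x) a total of n times.

    Assumption: the same scalar alpha is reused at every iteration.
    For x in [0, 1] and alpha in [-1, 1] the result stays in [0, 1].
    """
    for _ in range(n):
        x = x + alpha * x * (1.0 - x)
    return x


# Brightest value a dark pixel can reach (alpha = 1) for several iteration counts.
dark_pixel = 0.05
for n in (1, 4, 8):
    print(n, round(quadratic_curve(dark_pixel, 1.0, n), 4))
```

With \(\alpha = 1\) each step squares the distance to 1, so after \(n\) steps the output is \(1 - (1 - x)^{2^{n}}\). A very dark pixel therefore stays well below full brightness after 4 iterations and only approaches 1 after 8, which is consistent with the coverage argument above.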

Transformer-based global adjustment

Transformers have achieved excellent performance in many computer vision tasks [28,29,30]. We design a transformer-based global adjustment module that compensates for the shortcomings of local enhancement by capturing the global interaction between individual pixels and their surroundings. The transformer-based global adjustment branch mainly contains a multi-head attention module and a multi-layer perceptron module. Layer normalization is applied before each of the two modules to normalize the hidden layers and accelerate model convergence. The specific calculation process of the transformer-based global adjustment is shown in Fig. 4.

First, the input image is convolved to obtain high-dimensional and low-resolution image features. Low resolution saves computational cost and facilitates lightweight network design. Higher-dimensional features are good for extracting global features of the image.

Then, the features are encoded for the multi-head attention module. The feature serialization process is shown in Fig. 5. The feature map after the 3 × 3 convolution has size \(64 \times h \times w\). Each pixel of the \(h \times w\) feature map is used as a token, and the map is flattened to obtain a matrix \(X^{\prime}_{T}\) of size \((h \times w) \times 64\). \(X^{\prime}_{T}\) is then encoded by a linear transformation to obtain the input sequence \(X_{T}\).
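The serialization step can be sketched with plain nested lists. This is an illustrative toy, not the authors' code: the helper names `flatten_to_tokens` and `linear_encode` are hypothetical, and a real implementation would use tensor reshaping in a deep learning framework.

```python
def flatten_to_tokens(feature_map):
    """Turn a C x h x w nested list into an (h*w) x C list, one token per pixel."""
    channels = len(feature_map)
    height = len(feature_map[0])
    width = len(feature_map[0][0])
    return [
        [feature_map[c][y][x] for c in range(channels)]
        for y in range(height)
        for x in range(width)
    ]


def linear_encode(tokens, weight, bias):
    """Apply the same linear map to every token: token @ weight + bias.

    weight is a C x D list of rows and bias has length D.
    """
    return [
        [sum(t * w for t, w in zip(token, column)) + b
         for column, b in zip(zip(*weight), bias)]
        for token in tokens
    ]


# Toy example with 2 channels on a 2 x 3 grid (the paper uses 64 channels).
feature_map = [[[c * 100 + y * 10 + x for x in range(3)] for y in range(2)] for c in range(2)]
tokens = flatten_to_tokens(feature_map)
print(len(tokens), len(tokens[0]))
```

Each token collects the values of all channels at one spatial position, so a \(64 \times h \times w\) map yields \(h \times w\) tokens of length 64.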

The multi-head attention module performs attention computation in multiple subspaces. The attention results of all subspaces are concatenated and linearly transformed to obtain the final output. The attention computation in a single subspace proceeds as follows.

The input sequences \(X_{T}\) are multiplied by the network's self-learned weights \(W^{q} ,W^{k} ,W^{v}\) to obtain \(Q,K,V\). The computation process can be represented as follows:

where \(X_{T}\) is the encoded two-dimensional sequence, \(W^{q} ,W^{k} ,W^{v}\) are the weights learned by the network, and \(Q,K,V\) denote three matrices of dimensions \(d_{q} ,d_{k} ,d_{v}\), with \(d_{q} = d_{k} = d_{v}\).

Multi-head attention computes attention in multiple subspaces. The process can be represented as:

where \(head_{i}\) denotes the \(i\)-th subspace and \(W^{o} ,W_{i}^{q} {,}W_{i}^{k} {,}W_{i}^{v}\) are weights learned by the network. The attention results \(Z_{i}\) of all subspaces are obtained through Eq. (6).
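The attention computation can be sketched in a few lines. The sketch assumes the standard scaled dot-product form, \(\mathrm{softmax}(QK^{T}/\sqrt{d_{k}})\,V\), followed by concatenation of the heads and multiplication by \(W^{o}\). The equations in this section are taken to follow that form, which is an assumption. All function names are hypothetical and the code uses pure Python lists for clarity, not speed.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(x_t, w_q, w_k, w_v):
    """Single-subspace attention: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x_t, w_q), matmul(x_t, w_k), matmul(x_t, w_v)
    d_k = len(k[0])
    k_transposed = [list(col) for col in zip(*k)]
    scores = matmul(q, k_transposed)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


def multi_head(x_t, heads, w_o):
    """Run attention per head, concatenate along the feature axis, project with w_o.

    heads is a list of (w_q, w_k, w_v) triples, one per subspace.
    """
    outputs = [attention(x_t, *head) for head in heads]
    concatenated = [sum((out[i] for out in outputs), []) for i in range(len(x_t))]
    return matmul(concatenated, w_o)
```

Every row of the attention weights sums to 1, so each output token is a weighted average of the value vectors of all tokens, which is what gives the global branch its image-wide receptive field.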

The multi-head attention module outputs a feature map of size 1 × 10 × 64, which is processed by the MLP and a linear layer to generate a 3 × 3 color matrix \(W_{ci,cj}\) and a 1 × 1 gamma value \(\gamma\). The color correction matrix adjusts the colors of the three RGB channels, and the gamma value is used for nonlinear adjustment of the global image illumination. These operations are expressed in Eq. (8).

where \(W_{ci,cj}\) is the 3 × 3 color transformation matrix, \(\gamma\) is the exponent of the gamma correction, and \(\varepsilon\) is a small positive constant. We set \(\varepsilon = 10^{-8}\) in our experiments.
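A per-pixel sketch of the global adjustment is given below. It assumes one common form for this kind of operation: mix the RGB channels with the color matrix, clamp the result from below by \(\varepsilon\), then raise it to the power \(\gamma\). The exact form of Eq. (8) may differ, and the function name `global_adjust` is hypothetical.

```python
def global_adjust(pixel_rgb, color_matrix, gamma, eps=1e-8):
    """Apply a 3x3 color matrix to one RGB pixel, clamp below by eps, apply gamma.

    Assumed form: out[ci] = max(sum_cj W[ci][cj] * pixel[cj], eps) ** gamma
    """
    mixed = [sum(w * c for w, c in zip(row, pixel_rgb)) for row in color_matrix]
    return [max(value, eps) ** gamma for value in mixed]


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# A gamma below 1 brightens mid-tones; the identity matrix leaves colors unmixed.
print(global_adjust([0.25, 0.25, 0.25], identity, 0.5))
```

Clamping before exponentiation keeps the base strictly positive, so the power is well defined even when the color matrix produces zero or negative channel values.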

In summary, the input low illumination image first enters the local illumination enhancement branch for pixel-level enhancement, and then the global adjustment branch adjusts the result after local enhancement to obtain the final enhanced image. The overall process of the low-light image enhancement method can be expressed as:

Loss function

Three low-illumination enhancement losses are adopted from [22]. First, an exposure control loss is used to adjust the light level:

where \(m_{1}\) is the number of non-overlapping localized regions, \(Y_{m}\) is the average intensity of each localized region, \(E\) is set to 0.6. Then, a spatial consistency loss maintains the spatial consistency of the image.

where \(m_{2}\) is the number of local regions, \(\Omega (i)\) denotes the four neighboring regions (top, bottom, left, and right) centered on the region \(i\), \(Y\) and \(I\) are the average intensity values of the local regions of the enhanced image and the input image. Finally, the color constancy loss makes the enhanced colors as consistent as possible.

where, \(J^{p}\) and \(J^{q}\) denote the average intensity values of the \(p\) and \(q\) channels corresponding to the enhanced image, \((p,q)\) denotes a pair of channels.
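For reference, the three losses as defined in Zero-DCE [22] can be written in the notation of this section as follows. This assumes the paper adopts them unchanged. The loss names \(L_{exp}\), \(L_{spa}\) and \(L_{col}\) and the summation indices are introduced here for illustration.

```latex
% Exposure control loss: mean distance of the local average intensity from the target level E
L_{exp} = \frac{1}{m_{1}} \sum_{m=1}^{m_{1}} \left| Y_{m} - E \right|

% Spatial consistency loss: preserve differences between neighboring regions
L_{spa} = \frac{1}{m_{2}} \sum_{i=1}^{m_{2}} \sum_{j \in \Omega(i)}
          \left( \left| Y_{i} - Y_{j} \right| - \left| I_{i} - I_{j} \right| \right)^{2}

% Color constancy loss: keep the channel means close to each other
L_{col} = \sum_{(p,q)} \left( J^{p} - J^{q} \right)^{2},
\qquad (p,q) \in \{ (R,G), (R,B), (G,B) \}
```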

IA-YOLO [31] demonstrated that object detection combined with image enhancement can lead to poorer detection performance. This is mainly because image enhancement focuses on image quality, while object detection focuses on feature extraction in the target region. Image enhancement is sensitive to details and focuses on features with low abstraction, whereas object detection focuses on features with high abstraction. In order to achieve detection-friendly image enhancement, we introduce a feature similarity loss. In knowledge distillation, the Kullback–Leibler (KL) divergence is used to measure the difference between the probability distributions of the student and teacher networks [32]. Generative adversarial networks (GANs) [33] make the generated data as close as possible to the real data by minimizing the KL divergence between the real and generated data distributions.

Inspired by these works, we use the KL divergence to constrain the similarity between the features of the enhanced image and those of the original image, avoiding possible unfavorable effects of the enhancement process on the subsequent detection. Assuming that the two feature sequences are \(F_{1}\) and \(F_{2}\), with corresponding probability distribution functions \(f_{1}\) and \(f_{2}\), the KL divergence can be expressed as:
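For discrete distributions, the standard definition of the KL divergence, written with the symbols of the preceding sentence, is given below. The summation index \(x\) runs over the elements of the feature sequences and is introduced here for illustration.

```latex
D_{KL}\left( f_{1} \,\|\, f_{2} \right) = \sum_{x} f_{1}(x) \, \log \frac{f_{1}(x)}{f_{2}(x)}
```

The divergence is non-negative and equals zero only when the two distributions coincide, which is what makes it usable as a similarity constraint.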

The computation process of the feature similarity loss is shown in Fig. 6. The feature maps of the input image and the enhanced image are extracted by the backbone of YOLOv5s. Global average pooling is performed on these feature maps. Finally, the feature similarity loss is obtained by computing the element-wise KL divergence. The feature similarity loss based on the KL divergence is represented as follows:

where \(GAP(F_{In} )\) denotes the globally average-pooled features of the low-light image and \(GAP(F_{En} )\) denotes the globally average-pooled features of the enhanced image.

In summary, the total low-light image enhancement loss is given by Eq. (15).
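The pooling-then-divergence pipeline can be sketched as follows. Several details are assumptions made only for illustration: the pooled vectors are turned into probability distributions with a softmax, the input-image features are treated as the reference distribution, and feature maps are represented as nested lists of shape C × h × w rather than backbone tensors. The function names are hypothetical.

```python
import math


def global_average_pool(feature_maps):
    """Average each channel's h x w map to a single value: C x h x w -> C."""
    return [
        sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
        for fmap in feature_maps
    ]


def softmax(values):
    """Numerically stable softmax, used here to obtain a probability distribution."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def feature_similarity_loss(feat_in, feat_en):
    """KL divergence between pooled features of the input and enhanced images.

    Assumption: the input-image distribution is the reference (first argument of KL).
    """
    p = softmax(global_average_pool(feat_in))
    q = softmax(global_average_pool(feat_en))
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
```

The loss is zero when both images produce the same pooled feature distribution and grows as the enhancement shifts the relative channel responses of the backbone.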

Experimental validation and results

In this section, we first present the details of the four datasets, SICE [34], LOL [12], MIT-Adobe FiveK [35], and coal mine underground personnel dataset. Then we present the implementation details of the proposed low-light image enhancement model. In addition, to evaluate the performance, efficiency, and accuracy of the proposed low-light image enhancement method, we perform image enhancement and object detection comparisons with several state-of-the-art methods on coal mine underground personnel dataset.

Datasets and experiments details

The SICE dataset [34] contains 589 multi-exposure image sequences of indoor and outdoor scenes, each containing between 3 and 18 images with different exposures. We selected 3022 images with different exposure levels from the Part1 subset for training the low-light image enhancement module.

The LOL-V1 dataset [12] is a paired dataset taken in real scenes. It contains 500 pairs of low-light/normal-light images of size 400 × 600, collected by varying the exposure time and ISO sensitivity. Among them, 485 pairs are used as the training set and the remaining 15 pairs as the test set.

The MIT-Adobe FiveK dataset [35] contains 5000 images, from which 50 low-light images were selected for testing. Each image was manually adjusted by five professional photographers and saved in raw format. We selected the images adjusted by expert C as the reference images and converted them to sRGB format.

The coal mine underground personnel dataset (CMUPD) was built by China University of Mining and Technology (Beijing) at the Mataihao coal mine in Inner Mongolia. The data were collected in an environment with low illumination, uneven light and a dust concentration of 3.4–3.7 mg/m³. The capturing equipment was a Hikvision explosion-proof waterproof camera with a maximum resolution of 1920 × 1080. The dataset contains 6770 images taken in the underground coal mine.

We train the low-light enhancement module only on the SICE dataset to learn the light mapping parameter maps. The experimental operating system is 64-bit Windows 11, the CPU is an AMD Ryzen 7 5800H with Radeon Graphics, and the GPU is an NVIDIA RTX 3070. The network model is built with Python 3.9.0, PyTorch 1.12.1 and CUDA 11.6. The model uses the Adam optimizer with the following hyperparameters: initial learning rate 0.0001, batch size 8, 200 epochs, momentum 0.9 and decay coefficient 0.00005.

Experimental results and discussion

In this section, we have selected state-of-the-art and representative methods for quantitative and qualitative comparative experiments. The comparison algorithms include one method based on zero-reference learning: Zero-DCE [22], four methods based on supervised learning: URetinex-Net [36], Retinex-Net [12], MIRNetv2 [37] and Retinexformer [16], one method based on GAN: EnlightenGAN [19], and one method based on unsupervised learning: SCI [38]. We also compare the object detection performance of the different methods on the coal mine underground personnel dataset.

Quantitative analysis

We used two full-reference metrics (PSNR and SSIM) and two no-reference metrics (NIQE [39] and BRISQUE [40]) for image quality evaluation on the LOL-V1, MIT-Adobe FiveK and coal mine underground personnel datasets. PSNR and SSIM are two commonly used full-reference metrics to evaluate the effectiveness of image enhancement. PSNR measures pixel-level reconstruction fidelity and SSIM measures the structural similarity of two images. Higher PSNR and SSIM indicate higher image quality and enhancement results closer to the ground truth. BRISQUE and NIQE are two commonly used no-reference image quality metrics for images without ground truth. Smaller NIQE and larger BRISQUE indicate more naturalistic and perceptually favored quality. The results of the quantitative comparison are shown in Table 1.
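As a reminder of how the first full-reference metric is computed, the sketch below evaluates PSNR from the mean squared error, \(10 \log_{10}(\mathrm{peak}^{2} / \mathrm{MSE})\). It assumes images flattened to lists of intensities in [0, 1]. The function name `psnr` and this data layout are illustrative choices, not the evaluation code used in the experiments.

```python
import math


def psnr(reference, enhanced, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized intensity lists."""
    squared_errors = [(r - e) ** 2 for r, e in zip(reference, enhanced)]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# A uniform error of 0.1 on a [0, 1] scale gives an MSE of 0.01.
print(round(psnr([0.0, 1.0], [0.1, 0.9]), 2))
```

Because PSNR depends only on the pixel-wise error, it rewards outputs that are numerically close to the reference, which is one reason it is usually reported together with SSIM.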

For the full-reference metrics, the supervised learning-based methods achieve excellent performance on LOL-V1 compared to the other methods. However, their performance on the MIT-Adobe FiveK dataset is severely degraded. Although the networks based on unsupervised learning, zero-reference learning, and adversarial learning do not achieve the highest performance on the LOL-V1 dataset, they show better robustness and generalization ability on the real low-light images of the MIT-Adobe FiveK dataset. This suggests that supervised learning-based networks are strongly dependent on the training data and that their excellent performance on LOL-V1 is due to the guidance provided by the paired images during supervised training.

For the no-reference metric NIQE, Retinex-Net performs the worst on the three datasets due to severe texture distortion. Supervised learning-based methods, except Retinex-Net, outperform the others on the LOL-V1 dataset, but they cannot maintain their performance on the MIT-Adobe FiveK dataset and the coal mine underground personnel dataset. The BRISQUE scores vary less among the methods, and overall the unsupervised learning methods are slightly better than the supervised learning methods on the MIT-Adobe FiveK and coal mine underground personnel datasets. Notably, our method outperforms the other methods on most of the metrics, which supports the effectiveness of the proposed method.

Qualitative analysis

The results of image enhancement in the underground coal mine are shown in Figs. 7, 8, and 9. Retinex-Net and URetinex-Net improved the brightness of the images but produced severe texture distortion and blurring. Although the enhancement of dark regions by Retinexformer, MIRNetv2, and SCI is very significant, the over-enhancement of bright regions leads to localized distortion. The methods with better enhancement performance are Zero-DCE, EnlightenGAN and our proposed method. Among them, the enhanced images of Zero-DCE are slightly off-color. EnlightenGAN is under-enhanced in dark regions. Our proposed method improves the brightness and contrast, and preserves the color and texture of the image well without significant color bias and distortion.

Detection for further evaluation

Personnel and helmet detection is performed to further evaluate the performance of the proposed image enhancement method. In this work, YOLOv5s is chosen as the object detection model in view of its model size, detection accuracy and ease of deployment. The detection evaluation metrics are shown in Table 2, and the visualization results are shown in Figs. 10, 11, and 12.

YOLOv5s-based person and helmet detection of the images shown in Fig. 7. The red color represents the person detection results and the green color represents the helmet detection results

YOLOv5s-based person and helmet detection of the images shown in Fig. 8. The red color represents the person detection results and the green color represents the helmet detection results

YOLOv5s-based person and helmet detection of the images shown in Fig. 9. The red color represents the person detection results and the green color represents the helmet detection results

The detection results indicate that low-light image enhancement methods have varying degrees of impact on subsequent object detection. In the original low-light images, some people and helmets were not detected or were detected with low confidence. Retinex-Net had a negative impact on object detection due to severe distortion: precision, recall, and mAP after Retinex-Net enhancement were worse than on the original images. The images enhanced by SCI have a large number of undetected objects in the bright regions, resulting in a lower recall. This is due to the overexposure of bright regions and the dilution of object features. For MIRNetv2, insufficient enhancement of dark areas and overexposure of bright areas lead to a decrease in detection performance. EnlightenGAN improves detection performance, but its effectiveness is limited by insufficient light enhancement of dark regions, which leaves some hidden objects undetected. On the other hand, Zero-DCE, Retinexformer, and URetinex-Net consistently improve detection performance and significantly reduce the number of missed targets. This is because they significantly brighten dark areas without over-enhancing or severely distorting bright areas. Retinexformer exhibits less distortion in the object area than SCI and MIRNetv2, which explains its ability to maintain good detection performance. It is worth mentioning that our proposed method shows excellent detection performance and achieves the highest mAP among all compared methods.

Efficiency analysis

Table 2 shows the FLOPs, trainable parameters, and inference times of different low-illumination enhancement methods on an image of size 400 × 600 × 3. The mAP is the mean average precision on the coal mine underground personnel dataset at an IoU threshold of 0.5. SCI has the highest processing efficiency, followed by Zero-DCE. The efficiency of the proposed method is similar to that of SCI and Zero-DCE. Other methods, such as URetinex-Net, Retinexformer, and EnlightenGAN, achieve relatively high mean average precision, but their efficiency is too low to meet real-time detection requirements. Therefore, they are not suitable for personnel safety monitoring in underground coal mines. Our proposed method not only obtains the highest mean average precision, but also has a high processing speed.
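The IoU threshold in the mAP definition decides whether a predicted box counts as a correct detection. The sketch below shows that matching criterion only, assuming axis-aligned boxes given as `(x1, y1, x2, y2)`. The function names are hypothetical and the full mAP computation (confidence ranking, precision-recall integration, averaging over classes) is omitted.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes (x1, y1, x2, y2) with x1 < x2, y1 < y2."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return intersection / (area_a + area_b - intersection)


def is_true_positive(predicted_box, ground_truth_box, threshold=0.5):
    """A prediction matches a ground-truth box when their IoU reaches the threshold."""
    return iou(predicted_box, ground_truth_box) >= threshold


print(is_true_positive((0, 0, 2, 2), (0, 0, 2, 3)))
```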

Ablation study

PSNR and SSIM on the LOL-V1 dataset, the number of parameters, computation and inference time for processing 400 × 600 × 3 size image, and the detection performance on the coal mine underground personnel dataset.

We compare different illumination adaptive functions in the low illumination enhancement model. The results are shown in Table 3. Using the quadratic iterative function as the adaptive light mapping function, image quality and detection performance improve significantly when the iteration number is increased from 4 to 8, but only slightly when it is increased to 16. However, increasing the number of iterations results in a higher parameter count, increased computation, and longer inference time. Using the reciprocal function as the adaptive light mapping function avoids iterative computation, which reduces the computation and the number of parameters and achieves the best detection performance.

We explore the effect of the transformer-based global adjustment module on the low-light enhancement model. The results are shown in Table 4. After removing the global adjustment module, the mean average precision decreases by 3.4%. The main reason is that some objects in the under-enhanced dark areas remain hidden, while the features of objects in the over-enhanced bright areas are diluted. Additionally, localized over-enhancement of bright areas and under-enhancement of dark areas limit the image quality.

We explore the impact of the losses on image quality and detection performance (Table 5). Compared to the exposure control loss, the spatial consistency loss and the color constancy loss have a greater impact. The reason is that removing the spatial consistency loss or the color constancy loss results in severe distortion of the enhanced image, while removing the exposure control loss only limits the improvement in image brightness. For the feature similarity loss, focusing on the probability distribution of features weakens the influence of background information to some extent, which makes the model pay more attention to the target region and generate features that are easy to detect. By imposing similarity constraints on the target features during the enhancement process, the detection performance is improved by 2.2% in mAP.
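One way to read "focusing on the probability distribution of features" is sketched below: each feature map is turned into a probability distribution with a softmax, and the two distributions are compared with a KL divergence, in the spirit of knowledge distillation. This is an assumption made for illustration only. The exact formulation of the feature similarity loss, the feature extractor, and which pair of feature maps is compared are not specified in this section, and the names `feat_ref`, `feat_enh`, and `temperature` are hypothetical.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()


def feature_similarity_loss(feat_ref, feat_enh, temperature=1.0, eps=1e-12):
    """KL(p || q) between softmax distributions of two feature maps.

    Assumed form, not the paper's definition. feat_ref and feat_enh are
    arrays of the same shape, e.g. (channels, height, width).
    """
    p = softmax(np.asarray(feat_ref, dtype=np.float64).ravel() / temperature)
    q = softmax(np.asarray(feat_enh, dtype=np.float64).ravel() / temperature)
    return float(np.sum(p * (np.log(p + eps) - np.log(q + eps))))
```

Because the softmax is invariant to adding a constant to every feature value, a loss of this form ignores a uniform offset in the features and responds only to changes in their relative distribution.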

Conclusion

To improve the visualization effect and detection accuracy of underground personnel monitoring in coal mines, we propose a low-light image enhancement method based on zero-reference learning. The experimental results show that the brightness, contrast, and recognition rate of the images are significantly improved after enhancement by the proposed method. The original color of the image is preserved without texture distortion. Furthermore, the detection accuracy on coal mine underground images enhanced with the proposed method increases to 94.6%, which is higher than that of the other methods. In addition, the proposed method does not require manual adjustment of parameters, and it still performs well on images that contain both overexposed and poorly illuminated regions. The proposed method greatly improves the capability and efficiency of personnel safety monitoring in underground coal mines. However, the proposed method has some limitations. In particular, its ability is very limited under extreme light conditions in coal mines, which remains a very challenging problem. We will consider using a multimodal approach to further explore ways to solve this problem. In addition, the proposed method does not consider semantic information. In the future, we will introduce semantic information into the network design to distinguish different regions and make the boundaries of the enhancement results clearer.

References

Niu S (2014) Coal mine safety production situation and management strategy. Manag Eng 14:1838–5745

Solarz J, Gawlik-Kobylińska M, Ostant W, Maciejewski P (2022) Trends in energy security education with a focus on renewable and nonrenewable sources. Energies 15:1351

Fu G, Xie X, Jia Q, Li Z, Chen P, Ge Y (2020) The development history of accident causation models in the past 100 years: 24Model, a more modern accident causation model. Process Saf Environ 134:47–82

Wu Y, Fu G, Wu Z, Wang Y, Xie X, Han M, Lyu Q (2023) A popular systemic accident model in China: theory and applications of 24Model. Safety Sci 159:106013

Cheng L, Guo H, Lin H (2021) Evolutionary model of coal mine safety system based on multi-agent modeling. Process Saf Environ 147:1193–1200

Ibrahim H, Pik Kong N (2007) Brightness preserving dynamic histogram equalization for image contrast enhancement. IEEE Trans Consum Electr 53:1752–1758

Kim SE, Jeon JJ, Eom IK (2016) Image contrast enhancement using entropy scaling in wavelet domain. Signal Process 127:1–11

Lidong H, Wei Z, Jun W, Zebin S (2015) Combination of contrast limited adaptive histogram equalisation and discrete wavelet transform for image enhancement. IET Image Process 9:908–915

Łoza A, Bull DR, Hill PR, Achim AM (2013) Automatic contrast enhancement of low-light images based on local statistics of wavelet coefficients. Digit Signal Process 23:1856–1866

Li M, Liu J, Yang W, Sun X, Guo Z (2018) Structure-revealing low-light image enhancement via robust retinex model. IEEE Trans Image Process 27:2828–2841

Gu Z, Li F, Fang F, Zhang G (2020) A novel retinex-based fractional-order variational model for images with severely low light. IEEE Trans Image Process 29:3239–3253

Wei C, Wang W, Yang W, Liu J (2018) Deep Retinex Decomposition for Low-Light Enhancement. arXiv:1808.04560

Li C, Guo J, Porikli F, Pang Y (2018) LightenNet: a convolutional neural network for weakly illuminated image enhancement. Pattern Recogn Lett 104:15–22

Zhang Y, Zhang J, Guo X (2019) Kindling the darkness: a practical low-light image enhancer. In: Proceedings of the 27th ACM International Conference on Multimedia. ACM, Nice, France, pp. 1632–1640

Zhang Y, Guo X, Ma J, Liu W, Zhang J (2021) Beyond brightening low-light images. Int J Comput Vis 129:1013–1037

Cai Y, Bian H, Lin J, Wang H, Timofte R, Zhang Y (2023) Retinexformer: one-stage retinex-based transformer for low-light image enhancement. arXiv:2303.06705

Xu X, Wang R, Fu C-W, Jia J (2022) SNR-aware low-light image enhancement. In: 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, New Orleans, LA, USA, pp. 17693–17703

Zhang S, Meng N, Lam EY (2023) LRT: an efficient low-light restoration transformer for dark light field images. IEEE Trans Image Process 32:4314–4326

Jiang Y, Gong X, Liu D, Cheng Y, Fang C, Shen X, Yang J, Zhou P, Wang Z (2021) EnlightenGAN: deep light enhancement without paired supervision. IEEE Trans Image Process 30:2340–2349

Yu R, Liu W, Zhang Y, Qu Z, Zhao D, Zhang B (2018) DeepExposure: learning to expose photos with asynchronously reinforced adversarial learning. In: Advances in neural information processing systems 31 (NIPS 2018). Neural Information Processing Systems (nips), La Jolla

Cao W, Wang R, Fan M, Fu X, Wang Y, Guo Z, Fan F (2021) Froth image clustering with feature semi-supervision through selection and label information. Int J Mach Learn Cyb 12:2499–2516

Guo C, Li C, Guo J, Loy CC, Hou J, Kwong S, Cong R (2020) Zero-reference deep curve estimation for low-light image enhancement. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, Seattle, WA, USA, pp. 1777–1786

Li C, Guo C, Loy CC (2022) Learning to enhance low-light image via zero-reference deep curve estimation. IEEE Trans Pattern Anal 44:4225–4238

Dai L, Zhang X, Gardoni P, Lu H, Liu X, Królczyk G, Li Z (2023) A new machine vision detection method for identifying and screening out various large foreign objects on coal belt conveyor lines. Complex Intell Syst 9:5221–5234

Guo X, Liu X, Gardoni P, Glowacz A, Królczyk G, Incecik A, Li Z (2023) Machine vision based damage detection for conveyor belt safety using fusion knowledge distillation. Alex Eng J 71:161–172

Ye T, Zheng Z, Li Y, Zhang X, Deng X, Ouyang Y, Zhao Z, Gao X (2023) An adaptive focused target feature fusion network for detection of foreign bodies in coal flow. Int J Mach Learn Cyb 14:2777–2791

Xu P, Zhou Z, Geng Z (2022) Safety monitoring method of moving target in underground coal mine based on computer vision processing. Sci Rep-UK 12:17899

Liu Z, Lin Y, Cao Y, Hu H, Wei Y, Zhang Z, Lin S, Guo B (2021) Swin transformer: hierarchical vision transformer using shifted windows. In: 2021 IEEE/CVF International Conference on Computer Vision (ICCV). IEEE, Montreal, QC, Canada, pp. 9992–10002

Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, Dehghani M, Minderer M, Heigold G, Gelly S, Uszkoreit J, Houlsby N (2021) An image is worth 16x16 words: transformers for image recognition at scale. arXiv:2010.11929v2

Cui Z, Li K, Gu L, Su S, Gao P, Jiang Z, Qiao Y, Harada T (2022) You only need 90K parameters to adapt light: a light weight transformer for image enhancement and exposure correction. arXiv:2205.14871

Liu W, Ren G, Yu R, Guo S, Zhu J, Zhang L (2022) Image-adaptive YOLO for object detection in adverse weather conditions. AAAI 36:1792–1800

Hinton G, Vinyals O, Dean J (2015) Distilling the knowledge in a neural network. arXiv:1503.02531

Goodfellow IJ, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y (2020) Generative adversarial networks. Commun ACM 63:139–144

Cai J, Gu S, Zhang L (2018) Learning a deep single image contrast enhancer from multi-exposure images. IEEE Trans Image Process 27:2049–2062

Bychkovsky V, Paris S, Chan E, Durand F (2011) Learning photographic global tonal adjustment with a Database of Input/Output Image Pairs. In: 2011 IEEE conference on computer vision and pattern recognition (CVPR). IEEE, New York, pp. 97–104

Wu W, Weng J, Zhang P, Wang X, Yang W, Jiang J (2022) URetinex-net: retinex-based deep unfolding network for low-light image enhancement. In: 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, New Orleans, LA, USA, pp. 5891–5900

Zamir SW, Arora A, Khan S, Hayat M, Khan FS, Yang M-H, Shao L (2023) Learning enriched features for fast image restoration and enhancement. IEEE Trans Pattern Anal 45:1934–1948

Ma L, Ma T, Liu R, Fan X, Luo Z (2022) Toward fast, flexible, and robust low-light image enhancement. arXiv:2204.10137

Mittal A, Soundararajan R, Bovik AC (2013) Making a “Completely Blind” image quality analyzer. IEEE Signal Process Lett 20:209–212

Mittal A, Moorthy AK, Bovik AC (2012) No-Reference image quality assessment in the spatial domain. IEEE Trans Image Process 21:4695–4708

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Grant No. 52274160, 52074305).

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, W., Wang, S., Wu, J. et al. A low-light image enhancement method for personnel safety monitoring in underground coal mines. Complex Intell. Syst. 10, 4019–4032 (2024). https://doi.org/10.1007/s40747-024-01387-2

DOI: https://doi.org/10.1007/s40747-024-01387-2