Abstract

Background noises are usually treated as redundant or even harmful to voice conversion. Therefore, when converting noisy speech, a pretrained speech separation module is usually deployed to estimate clean speech prior to the conversion. However, this can lead to speech distortion due to the mismatch between the separation module and the conversion module. In this paper, a noise-robust voice conversion model is proposed, in which a user can freely choose to retain or remove the background sounds. Firstly, a speech separation module with a dual-decoder structure is proposed, where two decoders decode the denoised speech and the background sounds, respectively. A bridge module is used to capture the interactions between the denoised speech and the background sounds in parallel layers through information exchange. Subsequently, a voice conversion module with multiple encoders is used to convert the estimated clean speech from the speech separation module. Finally, the speech separation and voice conversion modules are jointly trained using a loss function combining cycle loss and mutual information loss, aiming to improve the decoupling among speech content, pitch, and speaker identity. Experimental results show that the proposed model obtains significant improvements in both subjective and objective evaluation metrics compared with the existing baselines. The speech naturalness and speaker similarity of the converted speech reach mean opinion scores of 3.47 and 3.43, respectively.


Introduction

Voice conversion (VC) is a digital signal processing technology that analyzes and reconstructs acoustic features [1]-[4]. It makes the converted speech sound like a target speaker while keeping the speech content unchanged. Voice conversion has a wide range of applications in personalized speech synthesis [5], film and television dubbing, speaker identity anonymization [6], and data augmentation. With the continuous development of deep learning, voice conversion has improved significantly in both speech naturalness and similarity to the target speaker’s voice. For example, voice conversion methods based on generative adversarial networks [7, 8] have extended the good performance of voice conversion to non-parallel datasets [9, 10]. Meanwhile, voice conversion frameworks based on encoder–decoders [11, 12], which combine vector quantization [13, 14] and instance normalization [15], have been investigated extensively to decouple speech content from speaker identity and have improved the generalization ability of voice conversion models. However, these methods perform well only when the speech of both the source and target speakers is clean. Ubiquitous ambient noise in real-world scenarios severely degrades the quality of the converted speech. Therefore, many approaches have been explored to remove background sounds from the input speech before voice conversion.

Some studies have shown that pre-trained noise-robust automatic speech recognition models can extract speech content from noisy inputs and thereby alleviate the negative impact of background sounds [16, 17]. Another choice is to preprocess noisy speech with a denoising module, typically an advanced speech enhancement method [18, 19], and then feed the denoised speech into the downstream voice conversion task [20]. However, processing the two stages separately inevitably leads to speech distortions and thus reduces the quality of the converted speech. As an alternative to removing background sounds, Xie et al. proposed to use multitask learning to preserve them [21]. Specifically, this method involves two tasks: one is to convert the input speech into the target speech, and the other is to reconstruct the background sounds. By simultaneously optimizing the training objectives of these two tasks, the model can convert speech while preserving the information of the background sounds. The work in [21] improves the speech separation (SS) task by considering phase information, but the model does not consider the relationships between background sounds and enhanced speech during training. Furthermore, in the other studies mentioned above, background sounds are discarded as noise, overlooking the fact that in certain application scenarios (such as audiobooks and dubbed movies), background sounds also contain valuable information.

To address the significant degradation in speech quality when converting noisy speech, and to allow background sounds to be preserved flexibly, a noise-robust voice conversion model is proposed that jointly trains speech separation and voice conversion, where a user can freely choose to retain or remove the background sounds. The model consists of an optimized speech separation module and a voice conversion module. Specifically, in the speech separation module, a dual-decoder speech separation method based on the Deep Complex Convolution Recurrent Network (DCCRN) [22] uses two decoders to separate speech and background sounds, respectively. A bridge module [23] is introduced to capture hidden information in the denoised speech and the noise through information exchange. The voice conversion module uses VQMIVC [24] as the backbone network and combines a cycle loss with the original mutual information loss to strengthen feature decoupling. A unified loss function is used to train the overall model, which alleviates the performance drop caused by speech distortions under separate training. Experimental results show that the proposed method can significantly improve the quality of voice conversion and can effectively preserve or remove background sounds in noisy environments.

The contributions of this paper are summarized as follows:

(1) A background-sound-controllable, noise-robust voice conversion method is proposed, which realizes flexible control of background sounds in noisy environments by jointly training a speech separation module (which here aims to separate background noise from clean speech) and a voice conversion module.

(2) A bridge module is introduced to capture the hidden information in the denoised speech and the background sounds, which reduces the coupling between them and provides high-quality speech for the subsequent voice conversion task.

(3) The cycle loss and the mutual information loss are combined to optimize the voice conversion module, which further improves the decoupling between speech content, speaker identity, and pitch, and improves the quality of the converted speech.

The organization of the remaining sections of this article is as follows: Sect. “Background-controllable voice conversion model” introduces the details of the proposed model in this article, as well as the configuration of the loss function. In Sect. “Experimental settings”, the experimental setup is described, including the dataset, the evaluation metrics, and the baseline methods. Section “Experimental results and analysis” analyses and summarizes the results of the comparative experiments and the ablation studies, with an additional visual analysis of audio examples. Finally, Sect. “Conclusion” presents the conclusion.

Background-controllable voice conversion model

The model consists of a speech separation module and a voice conversion module, as shown in Fig. 1. The noisy speech is first fed into the speech separation module to obtain the denoised speech and the background sounds. The denoised speech is then used as the input to the conversion module for subsequent voice conversion. Finally, depending on the application scenario, either clean converted speech or converted speech with preserved background sounds can be obtained. The remainder of this section introduces the details of each module.

Speech separation module

In the Deep Noise Suppression Challenge 2020 [25], DCCRN demonstrated state-of-the-art performance. The model has a simple structure and low computational complexity, and its complex convolutions capture the phase information in speech signals well. Therefore, in this paper, DCCRN is used as the backbone network of the speech separation module, based on which a dual-decoder speech separation model is proposed to promote the separation of speech and background sounds. As shown in Fig. 2, the speech separation model consists of a speech branch and a background sound branch. The noisy speech waveform is preprocessed to obtain a Mel spectrogram as the input of the network. The two branches share a complex encoder and two complex long short-term memory (LSTM) layers. Two independent complex decoders decode speech and background sounds, respectively, and a bridge module models implicit feature fusion between the two decoders. Finally, the estimated Mel spectrograms of the denoised speech and the background sounds are obtained and serve as inputs to the downstream voice conversion task. The details of the complex encoder, complex LSTM, and bridge module are introduced below.

Complex encoder

The complex encoder consists of complex 2D convolutional blocks, complex batch normalization layers, and complex parametric rectified linear unit (PReLU) [26] activation functions. Complex convolution can be seen as a convolution operation in the Fourier domain, where signals are represented as complex numbers with real and imaginary parts. By converting the input signal and the convolution kernel into complex representations, convolution operations can be transformed into dot-product operations, thereby improving computational efficiency. The schematic diagram of the calculation process is shown in Fig. 3.

Specifically, suppose that the complex convolution filter is \(Y = {Y_r} + j{Y_i}\), where the real matrices \({Y_r}\) and \({Y_i}\) are the real and imaginary parts of the complex convolution kernel. Similarly, the complex input matrix is defined as \(X = {X_r} + j{X_i}\). The complex convolution is then computed as follows:

\[X \otimes Y = ({X_r} * {Y_r} - {X_i} * {Y_i}) + j({X_r} * {Y_i} + {X_i} * {Y_r}) \tag{1}\]

where \( \otimes\) denotes complex convolution and \( *\) denotes real convolution.
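The decomposition of a complex convolution into four real convolutions can be checked numerically. The sketch below is only an illustration: it assumes 1-D sequences instead of the 2-D feature maps used in the model, and the helper names `conv1d` and `complex_conv1d` are hypothetical, not taken from the paper.

```python
def conv1d(x, k):
    """Full 1-D convolution of two sequences (real or complex values)."""
    out = [0] * (len(x) + len(k) - 1)
    for i, xi in enumerate(x):
        for j, kj in enumerate(k):
            out[i + j] += xi * kj
    return out


def complex_conv1d(x_r, x_i, y_r, y_i):
    """Complex convolution assembled from four real convolutions."""
    rr = conv1d(x_r, y_r)  # X_r * Y_r
    ii = conv1d(x_i, y_i)  # X_i * Y_i
    ri = conv1d(x_r, y_i)  # X_r * Y_i
    ir = conv1d(x_i, y_r)  # X_i * Y_r
    real = [a - b for a, b in zip(rr, ii)]
    imag = [a + b for a, b in zip(ri, ir)]
    return real, imag


# Toy input and kernel (illustrative values only).
x_r, x_i = [1.0, 2.0, 3.0], [0.5, -1.0, 2.0]
y_r, y_i = [1.0, -1.0], [2.0, 0.5]

real, imag = complex_conv1d(x_r, x_i, y_r, y_i)

# Reference: convolve directly using Python's built-in complex numbers.
x = [complex(a, b) for a, b in zip(x_r, x_i)]
y = [complex(a, b) for a, b in zip(y_r, y_i)]
reference = conv1d(x, y)
```

Comparing `real` and `imag` against `reference` element by element confirms that the two routes agree, which is why a complex layer can be implemented with ordinary real-valued convolution kernels.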

The complex batch normalization layer and the complex PReLU activation function follow the implementation in [27]. The complex PReLU activation function was originally designed to satisfy the Cauchy-Riemann Equation, so the activation function performs activation operations on the real and imaginary parts, respectively, which are calculated as shown in Eq. (2). The structure of the complex decoder module is basically the same as that of the complex encoder, so it will not be explained here.
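The split activation described above can be written compactly. The form below is a sketch of the usual split formulation, assuming a learnable negative slope \(a\) (a symbol introduced here for illustration); the exact expression of Eq. (2) should be taken from [27].

```latex
\[
\mathrm{CPReLU}(X) = \mathrm{PReLU}(X_r) + j\,\mathrm{PReLU}(X_i),
\qquad
\mathrm{PReLU}(x) = \max(0, x) + a\,\min(0, x)
\]
```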

Complex LSTM

Analogous to complex convolution, the complex LSTM replaces the real-valued LSTM operation with a complex-valued one. Given the complex input \(X = {X_r} + j{X_i}\), the complex LSTM operation is given as follows:

In Eq. (3), \(LST{M_r}\) and \(LST{M_i}\) represent the real and imaginary parts of the traditional LSTM, \({F_{rr}}\) is the output of \(LST{M_r}\) applied to \({X_r}\), and \({F_{out}}\) represents the feature output of a complex layer.
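For reference, the complex LSTM in the DCCRN paper [22] combines two real-valued LSTMs as sketched below. The intermediate names \({F_{ir}}\), \({F_{ri}}\), and \({F_{ii}}\) are assumed here by analogy with \({F_{rr}}\); the layout of Eq. (3) in this paper may differ.

```latex
\[
\begin{aligned}
F_{rr} &= LSTM_r(X_r), & F_{ir} &= LSTM_r(X_i),\\
F_{ri} &= LSTM_i(X_r), & F_{ii} &= LSTM_i(X_i),\\
F_{out} &= (F_{rr} - F_{ii}) + j\,(F_{ri} + F_{ir}).
\end{aligned}
\]
```

The sign pattern mirrors the complex multiplication rule used for complex convolution: the real output subtracts the imaginary-imaginary term, and the imaginary output sums the two cross terms.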

Bridge module

The bridge module integrates different types of features. It consists of two parts: an information exchange part and an information integration part. The information exchange part passes the feature representation of each branch to the other branch, so that features can be transmitted and shared between them. The information integration part combines the feature representations obtained after the exchange and generates the final prediction. The bridge module used in this paper has a structure similar to that of the complex encoder, except that the kernel size of the complex 2D convolutional layer is 1 × 1, as shown in Fig. 4. By adding a bridge module between the two branches, the denoised speech branch and the background sound branch can share the intermediate information of each layer, and the multi-layer interaction improves the accuracy of predicting speech and background sounds.
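A 1 × 1 convolution mixes channels independently at every time-frequency position, which makes it a natural tool for passing features between branches. The sketch below illustrates that idea under loud assumptions: it uses real-valued features instead of complex ones, merges the exchanged features by addition, and omits normalization and activation. None of these choices is confirmed by the paper, and the names `conv1x1` and `bridge_exchange` are hypothetical.

```python
def conv1x1(features, weights):
    """Apply a 1x1 convolution: mix channels independently at each position.

    features: list of channels, each a list of values (one per position).
    weights:  weights[o][c] maps input channel c to output channel o.
    """
    n_pos = len(features[0])
    return [
        [sum(w * ch[p] for w, ch in zip(row, features)) for p in range(n_pos)]
        for row in weights
    ]


def bridge_exchange(speech_feat, noise_feat, w_n2s, w_s2n):
    """Exchange features between the two branches (additive merge assumed)."""
    from_noise = conv1x1(noise_feat, w_n2s)    # noise -> speech branch
    from_speech = conv1x1(speech_feat, w_s2n)  # speech -> noise branch
    new_speech = [[a + b for a, b in zip(s, f)]
                  for s, f in zip(speech_feat, from_noise)]
    new_noise = [[a + b for a, b in zip(n, f)]
                 for n, f in zip(noise_feat, from_speech)]
    return new_speech, new_noise


# Two channels, three positions per branch (illustrative values only).
speech_feat = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
noise_feat = [[1.0, 0.0, 1.0], [2.0, 2.0, 2.0]]
w_n2s = [[0.5, 0.0], [0.0, 0.5]]  # pass half of each noise channel to speech
w_s2n = [[0.0, 0.0], [0.0, 0.0]]  # pass nothing from speech to noise

new_speech, new_noise = bridge_exchange(speech_feat, noise_feat, w_n2s, w_s2n)
```

In a trained model the weights would be learned, so each branch decides how much of the other branch's intermediate representation to use at every layer.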

Voice conversion module

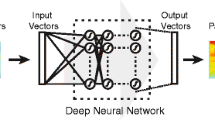

The voice conversion module uses VQMIVC as the backbone network, which includes three encoders and one decoder, as shown in Fig. 5. The original speech is preprocessed to obtain Mel spectrogram and log F0 (denoted by lf0 throughout the paper), which are inputs to the content encoder, pitch encoder, and speaker encoder to obtain content representation, pitch representation, and speaker embedding representation, respectively. Mutual information is used to measure the correlation between encoder outputs, and decoupling between content, pitch, and speaker identity is achieved by minimizing their mutual information. The decoder takes the output of the encoder as the input and generates the reconstructed Mel spectrogram. The reconstructed Mel spectrogram is used for the second round of encoding, as shown by the dashed line in Fig. 5.

In this paper, a cycle loss is introduced and combined with the mutual information loss to further improve feature decoupling. The idea of the cycle loss was proposed in CycleGAN-VC [28]. Specifically, that model converts the source speech into the target speech and then converts the result back to the style of the source speech. The cycle-consistency loss is the difference between the original input and the result of this round trip, and it is used to adjust the model parameters and improve the quality of voice conversion. In this paper, the reconstructed Mel spectrogram obtained from the first encoder–decoder round is fed into the encoder again. By calculating the cycle-consistency loss between the two reconstructed Mel spectrograms, information disentanglement is promoted.

Loss function

The loss function used to train the model consists of two parts: the loss for the speech separation module and the loss for the voice conversion module, as shown in Eq. (5).

The training loss for the speech separation module

The training loss of the speech separation module includes two parts: the loss of the speech branch and the loss of the noise branch. The speech branch calculates the L1 loss between the Mel spectrograms of the estimated speech and the clean speech, while the noise branch calculates the L1 loss between the Mel spectrograms of the estimated noise and the actual noise. The results of the two branches are then added together. The specific calculation process is shown in Eq. (6).

where \(C{D_E}\) and \(C{D_N}\) denote the speech branch decoder and the noise branch decoder, respectively, \({M_{t\_n}}\) denotes the noisy speech Mel spectrum, \({M_{t\_c}}\) denotes the clean speech Mel spectrum, and \(T\) denotes the number of utterances. The final value of \({L_{ss}}\) is the average of the losses on the \(T\) utterances.
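The two-branch L1 objective described above can be sketched in a few lines. This is a minimal illustration, assuming a mean (rather than summed) absolute error over Mel bins and frames; the function names and the toy spectrograms are hypothetical.

```python
def l1_loss(pred, target):
    """Mean absolute error between two Mel spectrograms (lists of frames)."""
    total, count = 0.0, 0
    for p_frame, t_frame in zip(pred, target):
        for p, t in zip(p_frame, t_frame):
            total += abs(p - t)
            count += 1
    return total / count


def separation_loss(est_speech, clean_speech, est_noise, true_noise):
    """Average over utterances of the speech-branch L1 plus noise-branch L1."""
    per_utterance = [
        l1_loss(es, cs) + l1_loss(en, tn)
        for es, cs, en, tn in zip(est_speech, clean_speech, est_noise, true_noise)
    ]
    return sum(per_utterance) / len(per_utterance)


# Two utterances (T = 2), each with 2 frames x 2 Mel bins (toy values).
est_speech = [[[1.0, 2.0], [3.0, 4.0]], [[1.0, 1.0], [1.0, 1.0]]]
clean_speech = [[[1.0, 2.0], [3.0, 5.0]], [[1.0, 1.0], [1.0, 1.0]]]
est_noise = [[[0.0, 0.0], [0.0, 0.0]], [[2.0, 2.0], [2.0, 2.0]]]
true_noise = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 4.0]]]

loss_ss = separation_loss(est_speech, clean_speech, est_noise, true_noise)
```

Because both branches contribute to one scalar, gradients from the noise target also shape the shared encoder and LSTM layers, which is the intended effect of adding the noise decoder.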

The training loss for the voice conversion module

The training loss of the voice conversion module consists of the vector quantization loss \({L_{VQ}}\), the contrastive predictive coding loss \({L_{CPC}}\), the mutual information loss \({L_{MI}}\), the reconstruction loss \({L_{REC}}\), and the cycle loss \({L_{cyc}}\). Here, \({L_{VQ}}\), \({L_{CPC}}\), \({L_{MI}}\), and \({L_{REC}}\) follow the definitions in VQMIVC; please refer to [24] for details. The cycle loss is shown in Eq. (7).

where \({M_t}^{\prime}\) denotes the predicted Mel spectrum obtained after the first round of coding and decoding operations, and \({\hat M_t}\) denotes the predicted Mel spectrum obtained finally by using the speech estimated in the first round as the input to the second round of coding and decoding operations.
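The two-round procedure behind the cycle loss can be sketched as follows. This is an illustration only: the L1 distance is an assumption, and `encode` and `decode` are toy placeholders standing in for the three encoders and the decoder of the conversion module.

```python
def l1_distance(a, b):
    """Mean absolute difference between two Mel spectrograms."""
    diffs = [abs(x - y) for fa, fb in zip(a, b) for x, y in zip(fa, fb)]
    return sum(diffs) / len(diffs)


def cycle_loss(mel, encode, decode):
    """Compare the first-round and second-round reconstructions."""
    first = decode(encode(mel))      # first-round reconstruction, M'_t
    second = decode(encode(first))   # second-round reconstruction, \hat{M}_t
    return l1_distance(first, second)


# Toy stand-ins for the real networks (hypothetical, for illustration).
def encode(mel):
    return [[0.5 * v for v in frame] for frame in mel]


def decode(code):
    return [[v + 1.0 for v in frame] for frame in code]


mel = [[2.0, 4.0]]
loss_cyc = cycle_loss(mel, encode, decode)
```

The loss is zero only when re-encoding the reconstruction reproduces it exactly, so minimizing it pushes the encoders to keep the information the decoder needs and nothing that drifts between rounds.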

The training loss of the voice conversion module is shown in Eq. (8).

where \(\alpha \geqslant 0\), \(\beta \geqslant 0\), and \(\gamma \geqslant 0\) are the weights of the objective function. In the experiments of this paper, these hyperparameters are set to \(\alpha = 10^{-2}\), \(\beta = 5\), and \(\gamma = 10\). The value of \(\alpha \) is consistent with the optimal value obtained experimentally in [24].

Experimental settings

Datasets

The proposed model was trained and tested on a mixture of the MUSDB18-train dataset [29] and the CSTR-VCTK dataset [30]. The MUSDB18-train dataset contains 100 complete music tracks of different genres, each with separate drum, bass, vocal, and other stems. The CSTR-VCTK dataset contains nearly 44 h of clean speech clips from 109 English speakers with different accents, each clip lasting 4–9 s. Audio segments are randomly extracted from the MUSDB18-train dataset as background sounds, cropped to the same length as the clean speech, and added to the CSTR-VCTK speech at signal-to-noise ratios (SNRs) of -5 dB, 0 dB, 5 dB, 10 dB, and 20 dB. The mixed speech clips are divided into training and testing sets with a ratio of 9:1, and 10% of the speech of each speaker in the training set is held out as the validation set during training. Since there is no overlap between the speakers in the training set and the testing set, the testing set can be used to verify the zero-shot conversion performance of the proposed model. In the acoustic feature extraction stage, all audio files are down-sampled to 16 kHz, and 80-dim Mel spectrograms and log F0 are extracted from the clean and noisy speech using Librosa, with a frame length of 25 ms and a frame shift of 10 ms. During the testing stage, two male and two female speakers are randomly selected, and each pair is converted in both directions. Forty utterances are selected for subjective and objective evaluation for each pair of speakers.
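Mixing a background sound into clean speech at a target SNR amounts to rescaling the background so that the power ratio matches the target. The sketch below shows one common way to do this, assuming the SNR is defined over the average power of the whole clip; the function names and sample values are illustrative and not taken from the paper.

```python
import math


def mix_at_snr(speech, noise, snr_db):
    """Scale `noise` so that speech power / noise power equals snr_db, then add."""
    p_speech = sum(s * s for s in speech) / len(speech)
    p_noise = sum(n * n for n in noise) / len(noise)
    gain = math.sqrt(p_speech / (p_noise * 10 ** (snr_db / 10)))
    scaled = [gain * n for n in noise]
    mixed = [s + n for s, n in zip(speech, scaled)]
    return mixed, scaled


def measure_snr_db(speech, noise):
    """SNR in dB between two equal-length signals."""
    return 10 * math.log10(sum(s * s for s in speech) / sum(n * n for n in noise))


# Toy waveforms of equal length (the background is assumed already cropped).
speech = [0.1, -0.2, 0.3, -0.4]
background = [0.05, 0.05, -0.1, 0.2]

achieved = {}
for target in (-5, 0, 5, 10, 20):
    mixed, scaled = mix_at_snr(speech, background, target)
    achieved[target] = measure_snr_db(speech, scaled)
```

Each entry of `achieved` matches its target up to floating-point error, since the gain is derived directly from the SNR definition.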

Training settings

The network of the speech separation module includes one encoder and two decoders, where the channel numbers of the encoder are set to {16, 32, 64, 128, 256}. Due to the use of skip connections, the channel numbers of the decoders are double those of the corresponding encoder layers. The encoder and decoders each consist of six complex convolutional modules, with a kernel size of [3, 3], a stride of [2, 1], and a padding of [1, 2]. The real and imaginary parts of the complex LSTM each have 1024 hidden units. The hyperparameters of the voice conversion module follow the VQMIVC model. The network is trained for a total of 500 epochs using the Adam optimizer with a batch size of 8; the learning rate increases from 1e-6 to 1e-3 over the first 15 epochs and is halved every 100 epochs after 200 epochs.
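The learning-rate schedule described above can be expressed as a function of the epoch index. The sketch below rests on two assumptions that the text does not settle: the warm-up is linear, and the first halving happens 100 epochs after epoch 200 (that is, at epoch 300). The function name and parameter names are hypothetical.

```python
def learning_rate(epoch, warmup_epochs=15, lr_start=1e-6, lr_peak=1e-3,
                  decay_start=200, decay_every=100):
    """Learning rate for a 0-based epoch index under the assumed schedule."""
    if epoch < warmup_epochs:
        # Linear warm-up from lr_start to lr_peak (assumed shape).
        return lr_start + (lr_peak - lr_start) * epoch / warmup_epochs
    if epoch < decay_start:
        return lr_peak
    # Halve once for every full `decay_every` epochs elapsed since decay_start.
    halvings = (epoch - decay_start) // decay_every
    return lr_peak * 0.5 ** halvings


schedule = [learning_rate(e) for e in range(500)]
```

Under these assumptions the rate stays at 1e-3 until epoch 299, drops to 5e-4 at epoch 300, and to 2.5e-4 at epoch 400. If the first halving is instead meant to occur at epoch 200, adding one to `halvings` gives that variant.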

Baselines

Six advanced models are selected as baselines in the experiments. The first four models, AutoVC, VQMIVC, VAE-CN-C, and SEGAN-VC, are trained on clean/noisy speech datasets and generate clean converted speech during the conversion phase; their results are compared with those of our model without background sounds. The latter two models, MULTI-TASK-VC and the upper bound model, are trained only on noisy speech datasets and generate converted speech with background sounds during the conversion phase; their results are compared with those of our model with retained background sounds. Each model and its specific parameter configuration are described as follows.

AutoVC [31]: This model is the first to implement zero-shot voice conversion by decoupling speech content and speaker identity through carefully designed information bottlenecks. In the experiments, we set the bottleneck dimension to 32, and the settings of the remaining parameters are the same as those in the original paper.

VQMIVC [24]: Mutual information is used as a correlation measure to improve the efficacy of decoupling speech contents, speaker identity and pitch. 256-dim Mel-spectrum and 1-dim LogF0 are extracted from clean speech as the inputs of the model.

VAE-CN-C [17]: This model is the best-performing one selected from the previous models [12]. Based on the AdaINVC model [32], the content encoder and the speaker encoder are modified and a domain adversarial training module is introduced, so that the model can perform noise-robust voice conversion. The inputs of the model are clean speech and its noisy counterpart, and the output is clean speech with the target speaker’s characteristics.

SEGAN-VC [33]: SEGAN is an end-to-end speech enhancement framework based on generative adversarial networks that processes speech in the waveform domain. In this baseline, the SEGAN model is trained in cascade with the VC module proposed in this paper.

MULTI-TASK-VC [21]: Yao et al. propose an end-to-end framework based on multi-task learning, which sequentially stacks a source separation module, a bottleneck feature extraction module, and a VC module. The source separation task is performed by a deep complex convolution recurrent network optimized with the power-law compressed phase-aware (PLCPA) loss and the asymmetric loss. A unified reconstruction loss is used to train the model to improve the quality of voice conversion.

Upper bound model: In this model, the well-performing VQMIVC model converts the clean speech of the source speaker, and the conversion result is superimposed with the original background sounds. The original background sounds are randomly chosen from the MUSDB18-train dataset and match the background noise added to the source speaker's speech.

Evaluation metrics

In this paper, the following subjective and objective metrics are used to evaluate the voice conversion methods.

Subjective evaluation metric

The Mean Opinion Score (MOS) [34] is used as the subjective evaluation metric to evaluate the naturalness and similarity of the converted speech. Speech naturalness mainly evaluates the fluency and completeness of the content of the converted speech, while similarity mainly compares the characteristics of the converted speech with the target speaker’s voice. The MOS score is rated on a five-point scale, with higher scores indicating higher speech quality and clearer speech content. In these experiments, ten test subjects with a certain background in speech signal processing and sensitivity to sound are selected to rate the experimental results using MOS. The resulting MOS score is a numerical value between 1 and 5, which is the average subjective evaluation of all test subjects for each group of experimental results.

Objective evaluation metric

Four objective evaluation indicators are selected: Mel Cepstral Distortion (MCD), Pearson Correlation Coefficient (PCC) [35], Perceptual Evaluation of Speech Quality (PESQ) [36], and Short-Time Objective Intelligibility (STOI) [37]. MCD is used to measure the difference between two acoustic feature sequences and calculate their similarity by comparing the distance between the two speech signals on Mel Frequency Cepstral Coefficients (MFCCs), with Euclidean distance being a common calculation method. Generally, the smaller the value of MCD, the higher the similarity between the two speech signals. The calculation formula is shown in Eq. (9).

where N denotes the dimension of the Mel spectrum, \({m_c}\) and \({m_t}\) denote the Mel coefficients of the converted speech and those of the target speech, respectively.
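As a concrete illustration of the MCD computation, the sketch below uses the standard definition, \(\frac{10}{\ln 10}\sqrt{2\sum_{n=1}^{N}(m_c^n - m_t^n)^2}\), averaged over frames. It assumes the two sequences are already time-aligned (for example by dynamic time warping) and does not decide whether the 0th coefficient is excluded, since the text does not say; the function name is hypothetical.

```python
import math


def mel_cepstral_distortion(converted, target):
    """Mean MCD in dB over time-aligned frames of Mel-cepstral coefficients."""
    const = 10.0 / math.log(10.0)
    per_frame = [
        const * math.sqrt(2.0 * sum((c - t) ** 2 for c, t in zip(c_frame, t_frame)))
        for c_frame, t_frame in zip(converted, target)
    ]
    return sum(per_frame) / len(per_frame)


# Toy example: identical sequences give 0 dB; a unit difference in one
# coefficient of a single frame gives (10 / ln 10) * sqrt(2) dB.
same = mel_cepstral_distortion([[0.3, -0.1]], [[0.3, -0.1]])
unit = mel_cepstral_distortion([[1.0, 0.0]], [[0.0, 0.0]])
```

Lower values indicate that the converted cepstra lie closer to the target cepstra.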

The Pearson correlation coefficient between the F0 of the source speech and the converted speech can effectively evaluate the changes in speech content and intonation. A higher F0-PCC indicates a higher consistency of F0 variation between the converted speech and the source speech. The PESQ algorithm calculates the speech quality score between the original speech sample and the sample processed in a certain way based on their differences. The PESQ score ranges from -0.5 to 4.5, and a higher PESQ value indicates better auditory quality of the tested speech.
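The F0-PCC described above can be computed with a few lines of code. The sketch below restricts the correlation to frames that are voiced in both contours (F0 greater than zero), which is a common convention but an assumption here; the function names and F0 values are illustrative.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def f0_pcc(f0_source, f0_converted):
    """Pearson correlation over frames that are voiced in both contours."""
    pairs = [(a, b) for a, b in zip(f0_source, f0_converted) if a > 0 and b > 0]
    xs, ys = zip(*pairs)
    return pearson(xs, ys)


# Toy contours in Hz; zeros mark unvoiced frames (assumed convention).
f0_source = [0.0, 100.0, 110.0, 120.0, 0.0, 130.0]
f0_converted = [0.0, 200.0, 220.0, 240.0, 250.0, 260.0]

pcc = f0_pcc(f0_source, f0_converted)
```

In this toy case the converted contour is exactly twice the source contour on the shared voiced frames, so the correlation is 1 even though the absolute pitch differs, which is the behavior wanted when the target speaker has a different pitch range.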

STOI is used to measure the degree to which speech signals remain clear under interferences. Compared with other evaluation indicators, STOI pays more attention to the characteristics of human hearing, and can better simulate the perception process of human auditory system to speech. The STOI score ranges from 0 to 1, and a higher score indicates higher clarity of speech.

The experimental results were subjectively evaluated by 30 test subjects, including 20 professionals engaged in speech-related research and 10 ordinary listeners. Statistical analysis was conducted on thousands of generated speech samples for each experiment. All subjective evaluations are reported with a 95% confidence interval. The objective metrics, namely MCD, F0-PCC, PESQ, and STOI, are averaged over the generated speech samples mentioned above.
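A MOS with a 95% confidence interval can be obtained from the raw ratings as sketched below. The example assumes a normal approximation (z = 1.96) and uses made-up ratings; the paper does not state which interval estimator was used, and the function name is hypothetical.

```python
import math
import statistics


def mos_with_ci(ratings, z=1.96):
    """Return the mean opinion score and the half-width of its 95% CI."""
    mean = statistics.mean(ratings)
    half_width = z * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mean, half_width


# Hypothetical ratings on the five-point scale from ten listeners.
ratings = [3, 4, 4, 3, 5, 3, 4, 4, 3, 4]
mos, half_width = mos_with_ci(ratings)
```

The result would be reported as the mean plus or minus the half-width. With small listener panels, a Student's t quantile in place of 1.96 gives a slightly wider and more conservative interval.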

Experimental results and analysis

Analysis of subjective evaluation results

In this group of experiments, except for the first two models trained on the clean speech dataset, other models are trained using noisy speech dataset with a signal-to-noise ratio of 5 dB. During conversion, the source speaker's speech is noisy with a signal-to-noise ratio of 5 dB, while the target speaker's speech is clean. Two male and two female speakers are selected in the experiments, and male–male, male–female, female–female, and female–male conversions are performed. 20 pairs of utterances are randomly selected from each group for subjective evaluation. The experimental result is the average value of the 20 pairs of speech results, as shown in Table 1.

“Ours_c” in Table 1 represents the model that uses the proposed method and the conversion result is clean speech, and the comparison objects are the first four groups of models in Table 1. “Ours_n” represents the model in which the converted results preserve the background sounds, and is compared with the last two models in the table. As shown in Table 1, the performance of the voice conversion model trained on a clean speech dataset (the first two models in the table) is significantly reduced due to the influence of noisy source speech. Since the SEGAN-VC model uses the same VC module as the one proposed in this paper, the experimental results indirectly reflect the superiority of the double-branch speech separation module proposed in this paper, which can effectively separate noise and speech. Compared with the advanced noise-robust voice conversion model, MULTI-TASK-VC, the proposed method in this paper improves the naturalness and similarity of the converted speech by 0.08 and 0.14, respectively, indicating that the cycle loss used in the conversion stage enhances the feature decoupling sufficiency. At the same time, the experimental results show that the quality of voice conversion between the same gender is better than that of cross-gender conversion.

Analysis of objective evaluation results

The relative performance of the proposed model and the six baseline models is evaluated using the objective metrics MCD, F0-PCC, PESQ, and STOI. In this group of objective evaluations, MCD and F0-PCC mainly measure the difference between the converted speech and the ground truth. The ground truth here is obtained by adding the same background sound as in the noisy source speaker's speech to the clean target speaker's speech, where the content of the two utterances is identical. PESQ and STOI are used to evaluate the speech separation stage by measuring the difference between the estimated speech and the clean speech. Since “Ours_c” and “Ours_n” use the same speech separation module, the two models have the same PESQ and STOI results. The experimental parameter settings and training data selection are the same as in Sect. “Analysis of subjective evaluation results”, and the objective evaluation results are shown in Table 2.

As shown in Table 2, the two models of the proposed framework are superior to the baseline models in both MCD and F0-PCC, objectively demonstrating the good voice conversion performance of the proposed method. The objective evaluation metrics of the “Ours_n” model are slightly worse than those of “Ours_c”, which is understandable because the added background sounds mask some details of the clean speech and thus degrade the metrics. In addition, compared with the MULTI-TASK-VC method, which also uses DCCRN as the backbone network of its speech separation module, the proposed model improves both PESQ and STOI. The experimental results demonstrate that, by introducing a bridge module, the proposed dual-decoder speech separation module effectively improves the separation accuracy between clean speech and background sounds and provides high-quality speech input for the downstream voice conversion task, thereby improving the overall performance of the model.

Ablation studies

To further verify the effectiveness of each component in the proposed framework, we have also conducted ablation experiments. Three sets of ablation experiments are set up to verify the performance of the model without using cycle consistency loss function (w/o \({L_{cyc}}\)), without using mutual information loss function (w/o \({L_{MI}}\)), and without using the noise branch of the speech separation module to separate noise (w/o \(C{D_N}\)), respectively. The remaining parts of experimental parameter settings are the same as those in Sect. “Analysis of subjective evaluation results”. The specific experimental results are shown in Table 3.

Experimental results show that, compared with the full model, all three ablated models perform worse on the subjective and objective evaluation metrics. Among them, the w/o \({L_{MI}}\) model achieves the lowest naturalness and similarity in the three groups of experiments, which indicates that the mutual information loss effectively promotes the decoupling between speech content, pitch, and speaker identity features. The use of the \({L_{cyc}}\) loss further encourages information decoupling, avoids speech distortion caused by information leakage, and improves the accuracy of the reconstructed speech. When the noise branch of the speech separation module is removed, PESQ, STOI, and other metrics deteriorate seriously, but the naturalness and similarity scores are slightly higher than those of w/o \({L_{MI}}\), indicating that the model pays more attention to the training of the conversion module after the noise branch is removed. The ablation experiments confirm the effectiveness of the proposed model for background-sound-controllable, noise-robust voice conversion.

Results with unseen noises

This section experimentally verifies the conversion performance of the proposed model under different signal-to-noise ratios and in unseen noise environments. The unseen noises are taken from the NOISEX-92 noise corpus [38]: factory1 noise, hfchannel noise, and pink noise are selected and randomly added to the clean speech, with the same SNR settings as for the seen noises. The experimental results are shown in Table 4.

This experiment is conducted in a scenario where the source speaker's speech is noisy and the target speaker's speech is clean. To facilitate comparison of the experimental results, the background noises are removed from the converted speech. Firstly, the model is evaluated in scenarios where the noises are seen in the training data. As the signal-to-noise ratio increases, both the naturalness and the MCD of the converted speech improve, since extracting clean speech from signals with a high signal-to-noise ratio is easier than from signals with a low one. Secondly, the performance of the model is evaluated in unseen noise scenarios. The results show that, at the same signal-to-noise ratio, the subjective and objective evaluation metrics of the converted speech in unseen noise scenarios are slightly worse than those in seen noise scenarios. However, compared with the baseline models in Sects. “Analysis of subjective evaluation results” and “Analysis of objective evaluation results”, the proposed model still demonstrates better performance.

Visualization

To demonstrate the performance of the speech separation module and the voice conversion module intuitively, this section uses speech waveforms and spectrograms to visually analyze speech examples from the two modules. The visualization results of speech examples for the speech separation module are shown in Fig. 6.

Figure 6A–E shows the waveforms and their corresponding spectrograms from male speakers, and Fig. 6F–J shows the waveforms and their corresponding spectrograms from female speakers. From top to bottom, they show the original noisy speech, the clean speech estimated by the dual-branch speech separation model, the ground truth clean speech, the estimated background noises, and the ground truth background noises, respectively. The visualization shows that both the estimated clean speech and the estimated background noises are very similar to the ground truth in both waveform and spectrogram. These results indicate that the proposed dual-branch speech separation module is effective in separating clean speech and background noises.
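A spectrogram of the kind described above can be computed with a short-time Fourier transform. The following minimal sketch uses only NumPy. The FFT size, hop length, and Hann window are assumptions chosen for illustration and are not the settings used to produce the paper's figures.

```python
import numpy as np

def log_spectrogram(wave, n_fft=512, hop=128):
    """Log-magnitude STFT in dB with shape (n_fft // 2 + 1, frames).

    Illustrative helper with assumed analysis parameters.
    """
    wave = np.asarray(wave, dtype=np.float64)
    # Zero-pad very short signals so that at least one frame exists.
    if len(wave) < n_fft:
        wave = np.pad(wave, (0, n_fft - len(wave)))
    window = np.hanning(n_fft)
    n_frames = 1 + (len(wave) - n_fft) // hop
    frames = np.stack(
        [wave[i * hop:i * hop + n_fft] * window for i in range(n_frames)]
    )
    magnitude = np.abs(np.fft.rfft(frames, axis=1))
    # A small constant avoids log(0); transpose to (frequency, time).
    return 20.0 * np.log10(magnitude + 1e-8).T
```

Applying the same function to the estimated and ground truth signals gives two arrays of identical shape, which can then be plotted side by side or compared numerically.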

Figure 7 shows the spectrograms of some speech examples for the voice conversion module. In the experiments, noises are added uniformly to the source speaker’s speech, and the SNR of the noisy speech is 5 dB. The target speaker’s speech is clean. The figure shows two voice conversion scenarios: male-to-female (the first row) and female-to-male (the second row). From left to right, the panels show the source speaker’s speech, the target speaker’s speech, the converted speech with the background noises removed, and the converted speech with the background noises retained. The regions marked by black boxes in the figure show that the proposed model performs well on the details of pitch conversion. In addition, a comparison of Fig. 7a and c shows that the proposed model keeps the speech content consistent before and after the conversion while converting the speaker identity. These results suggest that the combination of cycle consistency loss and mutual information loss used by the model improves the efficacy of the conversion and the quality of the converted speech.

Conclusion

This paper proposes a noise-robust voice conversion model with controllable background sound, which can flexibly retain or remove the background sound and obtain high-quality converted speech. The model includes an optimized speech separation module and a voice conversion module. The speech separation module uses a dual-decoder structure, in which two decoders decode the denoised speech and the noise, respectively. The bridge module is used to promote the information interaction between the denoised speech and the background sound, alleviate the gradient descent problem, and improve the accuracy of speech and background sound prediction. The voice conversion module adopts a multi-encoder structure and introduces the cycle loss, which is combined with the mutual information loss to train the encoders and improve the decoupling ability of the model. In addition, jointly training the speech separation module and the voice conversion module alleviates the speech distortion caused by the training mismatch between modules. The subjective and objective evaluation results show that the converted speech obtained by the proposed method has higher naturalness and speaker similarity than that of the baseline methods, and that the results are comparable with those of a voice conversion model trained on a clean dataset. The speech naturalness and speaker similarity of the converted speech are 3.47 and 3.43, respectively.

However, there are still limitations in our work. Currently, we are unable to achieve cross-language voice conversion because the feature representations extracted during model training are not expressive enough. Therefore, in future research, we will focus on exploring more expressive feature representations to achieve cross-language voice conversion, which will further improve the practicality of the model.

Data availability

Data will be made available on request.

References

Sisman B, Yamagishi J, King S, Li H (2021) An overview of voice conversion and its challenges: from statistical modeling to deep learning. IEEE/ACM Trans Audio Speech Lang Process 29:132–157

Singh A, Kaur N, Kukreja V (2022) Computational intelligence in processing of speech acoustics: a survey. Complex Intell Syst 8:2623–2661

Mohammadi SH, Kain A (2017) An overview of voice conversion systems. Speech Commun Int J 88: 65–82

Liu F-k, Wang H, Ke Y-x, Zheng C-s (2022) One-shot voice conversion using a combination of U2-Net and vector quantization. Appl Acoustics 99: 109014

Fahad M-S, Ranjan A, Yadav J, Deepak A (2021) A survey of speech emotion recognition in natural environment. Digital Signal Processing 110:102951

Zhang X, Zhang X, Sun M (2023) Imperceptible black-box waveform-level adversarial attack towards automatic speaker recognition. Complex Intell Syst 9:65–79

Ram SR, Kumar V M, Subramanian B, Bacanin N, Zivkovic M, Strumberger I (2020) Speech enhancement through improvised conditional generative adversarial networks. Microprocessors Microsyst 79: 103281

Phan H et al (2020) Improving GANs for Speech Enhancement. IEEE Signal Process Lett 27:1700–1704. https://doi.org/10.1109/LSP.2020.3025020

Wang C, Yu Y-B CycleGAN-VC-GP: Improved CycleGAN-based Non-parallel Voice Conversion. In: 2020 IEEE 20th International Conference on Communication Technology (ICCT), Nanning, China, 2020, 1281–1284, https://doi.org/10.1109/ICCT50939.2020.9295938.

Yu X, Mak B Non-parallel many-to-many voice conversion by knowledge transfer from a text-to-speech model. In: ICASSP 2021—2021 IEEE international conference on acoustics, speech and signal processing (ICASSP), Toronto, ON, Canada, 2021, 5924–5928, https://doi.org/10.1109/ICASSP39728.2021.9414757.

Chu M et al (2023) E-DGAN: an encoder-decoder generative adversarial network based method for pathological to normal voice conversion. IEEE J Biomed Health Inform 27(5):2489–2500. https://doi.org/10.1109/JBHI.2023.3239551

Kheddar H, Himeur Y, Al-Maadeed S, Amira A, Bensaali F (2023) Deep transfer learning for automatic speech recognition: towards better generalization. Knowl-Based Syst 277:110851

Kang X, Huang H, Hu Y, Huang Z (2021) Connectionist temporal classification loss for vector quantized variational autoencoder in zero-shot voice conversion. Digital Signal Processing 116:103110

Wu D-Y, Lee H-y (2020) One-Shot Voice Conversion by Vector Quantization. In: ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, pp 7734–7738, https://doi.org/10.1109/ICASSP40776.2020.9053854.

Chen M, Shi Y, Hain T Towards Low-resource stargan voice conversion using weight adaptive instance normalization. In: ICASSP 2021—2021 IEEE international conference on acoustics, speech and signal processing (ICASSP), Toronto, ON, Canada, 2021, pp. 5949–5953, https://doi.org/10.1109/ICASSP39728.2021.9415042.

Ronssin D, Cernak M (2021) AC-VC: Non-parallel low latency phonetic posteriorgrams based voice conversion. In: 2021 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Cartagena, Colombia, pp. 710-716, https://doi.org/10.1109/ASRU51503.2021.9688277

Du H, Xie L, Li H (2022) Noise-robust voice conversion with domain adversarial training. Neural Netw 48(4):74–84

Pandey A, Wang D (2019) A New Framework for CNN-Based Speech Enhancement in the Time Domain. IEEE/ACM Trans Audio Speech Lang Process 27(7):1179–1188. https://doi.org/10.1109/TASLP.2019.2913512

Koizumi Y, Yatabe K, Delcroix M, Masuyama Y, Takeuchi D (2020) Speech Enhancement Using Self-Adaptation and Multi-Head Self-Attention, In: ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, pp 181–185, https://doi.org/10.1109/ICASSP40776.2020.9053214.

Xie C, Wu Y-C, Tobing PL, Huang W-C, Toda T Noisy-to-Noisy Voice Conversion Framework with Denoising Model. In: 2021 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Tokyo, Japan, 2021, pp. 814-820

Yao J et al. Preserving background sound in noise-robust voice conversion via multi-task learning. In: ICASSP 2023–2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 2023, pp. 1–5, https://doi.org/10.1109/ICASSP49357.2023.10095960.

Hu Y, Liu Y, Lv S, Xing M, Zhang S, Fu Y, Wu J, Zhang B, Xie L DCCRN: Deep complex convolution recurrent network for phase-aware speech enhancement. In: INTERSPEECH 2020, 2020.

Chen B, Wang Y, Liu Z, Tang R, Guo W, Zheng H, Yao W, Zhang M, He X Enhancing explicit and implicit feature interactions via information sharing for parallel deep CTR models. The 30th ACM International Conference on Information and Knowledge Management, Virtual Event Queensland, Australia 2021, pp.3757–3766.

Wang D, Deng L, Yu TY, Chen X, Meng H (2021) VQMIVC: Vector Quantization and Mutual Information-Based Unsupervised Speech Representation Disentanglement for One-shot Voice Conversion. In: 2021 21th Annual Conference of the International Speech Communication Association (INTERSPEECH), Brno, Czechia, March, pp 1344–1348.

Reddy CK, Dubey H, Gopal V, Cutler R, Braun S, Gamper H, Aichner R, Srinivasan S (2021) ICASSP 2021 deep noise suppression challenge. In: 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, pp. 6623–6627.

Wu Y-H, Lin W-H, Huang S-H Low-power hardware implementation for parametric rectified linear unit function. 2020 IEEE International Conference on Consumer Electronics—Taiwan (ICCE-Taiwan), Taoyuan, Taiwan, 2020, pp. 1–2, https://doi.org/10.1109/ICCE-Taiwan49838.2020.9258135.

Trabelsi C, Bilaniuk O, Zhang Y, Serdyuk D, Subramanian S, Santos JF, Mehri S, Rostamzadeh N, Bengio Y, Pal CJ Deep complex networks. arXiv preprint arXiv:1705.09792, 2017.

Kaneko T, Kameoka H CycleGAN-VC: Non-parallel Voice Conversion Using Cycle-Consistent Adversarial Networks. In: 2018 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 2018, 2100–2104, https://doi.org/10.23919/EUSIPCO.2018.8553236

Rafii Z, Liutkus A, Stöter F-R, Mimilakis SI, Bittner R (2017) The MUSDB18 corpus for music separation

Veaux C, Yamagishi J, MacDonald K (2016) Superseded-CSTR VCTK Corpus: English multi-speaker corpus for CSTR voice cloning toolkit. University of Edinburgh, The Centre for Speech Technology Research (CSTR)

Qian K, Zhang Y, Chang S, Yang X, Hasegawa-Johnson M AutoVC: Zero-shot voice style transfer with only autoencoder loss. International Conference on Machine Learning (ICML 2019), Long Beach, California, June 2019, pp. 5210–5219.

Chou JC, Yeh CC, Lee HY One-shot voice conversion by separating speaker and content representations with instance normalization. In: Proc. 2019 20th Annual Conference of the International Speech Communication Association (INTERSPEECH), Graz, Austria, Sept. 2019, pp.664–668

Pascual S, Bonafonte A, Serrà J (2017) SEGAN: Speech Enhancement Generative Adversarial Network. In: Proc. 2017 18th Conference of the International Speech Communication Association (INTERSPEECH), Stockholm, Sweden, pp. 3642–3646.

Naderi B, Möller S Transformation of Mean Opinion Scores to Avoid Misleading of Ranked Based Statistical Techniques. In: 2020 Twelfth International Conference on Quality of Multimedia Experience (QoMEX), Athlone, Ireland, 2020, pp. 1–4, https://doi.org/10.1109/QoMEX48832.2020.9123078.

Polyak A, Wolf L (2019) Attention-based wavenet autoencoder for universal voice conversion. In: Proc. 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, pp. 6800–6804.

Rix A, Beerends J, Hollier M, Hekstra A Perceptual evaluation of speech quality (PESQ)-a new method for speech quality assessment of telephone networks and codecs. In: 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No.01CH37221), vol. 2. IEEE, 2001, pp. 749–752.

Taal CH, Hendriks RC, Heusdens R, Jensen J (2011) An algorithm for intelligibility prediction of time–frequency weighted noisy speech. IEEE Trans Audio Speech Lang Process 19(7):2125–2136

Varga A, Steeneken HJM (1993) Assessment for automatic speech recognition: II. noisex-92: a database and an experiment to study the effect of additive noise on speech recognition systems. Speech Commun 12(3):247–251

Acknowledgements

The work was supported by the Natural Science Foundation of China (62071484, 62371469) and the Natural Science Foundation of Jiangsu Province (BK20180080).

Contributions

LC: conceptualization, data curation, formal analysis, investigation, methodology, writing (original draft), software. XZ: funding acquisition, project administration, resources. YL: software, validation, answering reviewers' questions. MS: methodology, funding acquisition, writing (review and editing), supervision. WC: writing (review & editing), answering reviewers' questions.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, L., Zhang, X., Li, Y. et al. A noise-robust voice conversion method with controllable background sounds. Complex Intell. Syst. 10, 3981–3994 (2024). https://doi.org/10.1007/s40747-024-01375-6

DOI: https://doi.org/10.1007/s40747-024-01375-6