Abstract

Image deblurring is an essential problem in computer vision. Due to highly structured and special facial components (e.g. eyes), most general image deblurring methods and face deblurring methods failed to yield more explicit structure and facial details, resulting in too smooth, uncoordinated and distorted face structure. Considering the unique face texture and sufficient facial details, we present an effective face deblurring network by exploiting more regularized structure and enhanced texture information (RSETNet). We first incorporate the face parsing network with fine-tuning to obtain more accurate face structure, and we present the feature adaptive denormalization (FAD) to regularize the facial structure as a condition of auxiliary to generate more harmonious and undistorted face structure. Meanwhile, to improve the generated facial texture information, we propose a new Laplace depth-wise separable convolution (LDConv) and multi-patch discriminator. Compared with existing methods, our face deblurring method could restore face structure more accurately and with more facial details. Experiments on two public face datasets have demonstrated the effectiveness of our proposed methods in terms of qualitative and quantitative indicators.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Image deblurring is a classical problem in computer vision, which aims to restore clear images from blurred images. It has been continuously studied and has produced better results. Generally, it is divided into general image deblurring method and special image deblurring [13, 25]. Face deblurring is one of the most interesting subjects in various image deblurring tasks, such as face verification. In recent years, many researchers are devoted to eliminating face blur using deep learning-based methods [4, 6, 10, 11, 20, 28, 30], and also have gained some traction. However, in much-published research on the subject, some problems still exist, such as artifacts, insufficient contrast, and detectable flaws.

The failure of these methods mainly stems from neglecting the unique face structure and texture information [28, 29], which usually treat it as a general image debluring problem or simply introduce face semantic information. Owing to the success of deep learning, face deblurring methods based on it have been carried out extensively and showed promising performance. An overview of experimental results on the low-quality CelebA dataset with 25,000 blur kernels is shown in Fig. 2. It is analyzed several recent methods (include general image methods (e.g., DeblurGAN) and special face deblurring methods (e.g.,UMSN)). As we can see, several recent methods (DeblurGAN [10], DeblurGAN-V2 [11], DMPHN [30], UMSN [28], MPRNet [29], AirNet [12]) effectively removed the overall blur of the images, but failed in accurate facial structures and details. We can know that general image debluring methods can not directly apply on special structure face image. Specially, these methods fail to recover more facial details in highly structured regions or to generate more accurate facial features. For example, both general deblurring methods DeblurGAN [10] and DeblurGAN-V2 [11] produce more unharmonious and distorted face structures. The deblurred image, generated by DMPHN, is rather smooth but not sharp enough (see Fig. 2d). UMSN by Yasarla et al. yields low saturation and is not bright enough in the eyes (see Fig. 2e). In 2021, zamir et al. presented MPRNet [29] which also suffers from artifacts and insufficient contrast, especially in the eyes (see Fig. 2f). In 2022, AirNet [12] proposed by Li et al. suffers from a lack of facial detail and inaccurate regional structure of the eyes and mouth. To summarize, it is known that most SOTA methods perform well in terms of image texture but fail to generate accurate face regional structures and sufficient facial features when dealing with narrow edges or highly textured regions in the face. Therefore, how to maintain undistorted face structure and strengthen facial texture is still a challenging problem. Furthermore, Fig. 1 shows box plot [24] of SSIM on the CelebA-HQ dataset. we can know that our method has the best and the least variable SSIM response (i.e. smaller variances and fewer outliers). Moreover, in addition to 2D face deblurring, 3D face reconstruction approaches are viable for faces with low degrees of blur but not well for complex and ambiguous situations [3]. Thus, it is necessary exploited to overcome the drawbacks of existing models.

In this paper, we present a regularized structure and enhanced texture face deblurring network (RSETNet) to address the aforementioned issues. To be more specific, we first fine-tune the face parsing network to achieve more accurate contour structure of the blurred face and embed it into a generator as a facial prior for face restoration. Rather than considering the contour structure as prior or specific constraint, we design a new facial adaptive denormalization (FAD) as a supervision to regular facial structure and generate a more harmonious and undistorted face structure. Moreover, a Laplace depth-wise separable convolution (LDConv) is constructed to strengthen the clarity and details of facial texture, meanwhile, facial region is separated into back, hair and skin regions and with the corresponding multi-region reconstruction loss with greater weights on facial part to generate clear and natural facial texture. Furthermore, we introduce a multi-patch discriminator with different receptive fields to enhance deblurred facial image details. Figures 1 and 2 have illustrated that RSETNet is an effective deblurring network and achieves state-of-the-art deblurring performance. The main contributions of this paper are summarized as follows.

-

Facial adaptive denormalization (FAD) is proposed to incorporate face parsing with fine-tuning and produce more accurate face structure properties using denormalization.

-

Laplace depth-wise separable convolution (LDConv) is proposed to enhance facial texture information in shallow feature maps and produce sharp image.

-

We design a multi-region reconstruction loss with a higher weight on the facial region, which obtains more detailed facial texture.

The remainder of this paper is organized as follows. Section “Related works” presents preliminaries and the motivation. Our regularized structure and enhanced texture network is introduced in “Regularized structure and enhanced texture network”. In “Experiments”, experimental results from two public face datasets demonstrate the effectiveness of our method. Finally, our conclusions are presented in “Conclusion”.

Related works

In this section, we will look at two types of deblurring methods: general image deblurring methods and face deblurring methods. The former is generally superior for natural landscape pictures but, as previously stated, may fail to handle more complex structured images. The latter is designed specifically for face deblurring and works with highly structured images.

General image deblurring

General image deblurring methods are designed to deal with natural landscape images that are not highly structured. Its traditional image deblurring techniques frequently rely on unpromising assumptions [17, 26]. Recently, deep learning techniques, specifically GAN, have been successfully applied to image deblurring [10, 11, 23, 27, 30]. For example, Xu et al. [27] state that basic GAN is effective at capturing semantic information but cannot generate realistic high resolution images, to achieve this, they combine feature matching loss with multi-class GAN. Tao et al. [23] amplify the merit of various CNN structures and utilize a large receptive field to address two important and general issues in CNN-based deblurring systems. Inspired by spatial pyramid matching, Zhang et al. [30] present a deep hierarchical multi-patch network to deal with blurry images via a fine-to-coarse hierarchical representation. Furthermore, both DeblurGAN [10] and DeblurGAN-v2 [11], proposed by Kupyn et al., play an important role in image deblurring. Considering that the generated image would be too smooth due to the pixel level reconstruction loss (L1 or L2), they introduced perceptual loss for image deblurring. Although the methods described above have shown their superiority in general image deblurring, none of them can be directly applied to produce optimal results in face deblurring due to its particularities (e.g., highly structured eyes and mouth) (see Fig. 2b, c).

Face deblurring

Since the face’s geometric structure is regular but highly structured, directly applying general image deblurring techniques to face images results in rather smooth but not sharp enough. Thus, a unique face deblurring algorithm evolved. However, despite their superiority in terms of general image deblurring, facial structure and texture information has not been properly considered or utilized in existing face deblurring models. Moreover, studies [19, 22] state that after estimating the blur kernels, face images have fewer textures and edges, which makes it even difficult to restore the structure and texture of blurred facial features. As a result, there are numerous prior-based techniques that concentrate on the blur kernel or the latent picture to improve face deblurring results (e.g., semantic priors [19], face parsing [28]).

Specifically, Shen et al. [19] consider that facial images are highly structured and share several key semantic components (e.g., eyes and mouth), which provides the basis for semantic face deblurring. For example, Shen et al. [19] consider that face images are highly structured and share several key semantic components (e.g., eyes and mouths), which provides semantic face deblurring. They first extracted the global semantic priors (semantic labels) by a face parsing network, concatenated the semantic priors and the blurred image as input, and then imposed pixel-wise content loss and local structure losses to regularize the output within a two-scale deep CNN. Moreover, they imposed perceptual and adversarial losses to generate photo-realistic results in the second scale. However, as demonstrated in [28], it fails to reconstruct the eye and mouth regions since the significance of less important semantic regions has not been adjusted.

Moreover, Song et al. [22] think that restoring a high-resolution face image and deblurring simultaneously is difficult. They devised an efficient algorithm that utilizes the domain-specific knowledge of human faces to recover high-quality faces and employs facial component matching to restore facial details. Furthermore, to address the imbalance between several semantic classes, Rajeev Yasarla et al. [28] proposed an uncertainty guided multi-stream semantic networks (UMSN) which exploits semantic labels for facial image deblurring. It processes class-specific features independently by subnetworks with reconstruction a single semantic class and combine them to deblur the whole face image. During training they incorporated the confidence measure loss to guide the network towards challenging regions of the human face such as the eyes. However, it fails to restore sharp images with more facial details (see Fig. 2e). To optimally balance spatial details and high-level contextualized information while recovering images, Syed Waqas Zamir et al. [29] proposed a multi-stage architecture, called MPRNet, to progressively learns restoration functions for the degraded inputs, thereby breaking down the overall recovery process into more manageable steps. In the earlier stages, they employ an encoder-decoder for learning multi-scale contextual information, while they operates on the original image resolution to preserve fine spatial details in the last stage. Moreover, the supervised attention module (SAM) and the cross-stage feature fusion (CSFF) mechanism are plugged to enable progressive learning and help propagating multi-scale contextualized features from the earlier to later stages. However, this method leads to large artifacts (see Fig. 2f). Moreover, Zhang et al. [31] proposed a multi-scale progressive face-deblurring generative adversarial network in 2022 that requires no facial priors to generate more realistic multi-scale deblurring results through a single feed-forward process.

Furthermore, in addition to 2D face deblurring, 3D face reconstruction techniques have also produced promising results. Hu et al. suggested using 3D facial priors to guide face super-resolution [3]. They believe that the 3D coefficients are rich in hierarchical information, including face posture, illumination, texture, and information about identity and facial emotion. 2D landmark-based priors, which only pays attention to the distinct points of facial landmarks, may lead to the facial distortions and artifacts. Unfortunately, face deblurring based on 3D priors takes a lot of time and works well for faces with low degrees of blur but not so well for those with high levels. Therefore, this paper focuses on 2D deblurring method, and an approach to overcoming the previous problem is proposed in the following section.

Regularized structure and enhanced texture network

An overview of the proposed framework is shown in Fig. 3a. To restore sharp face images with more facial details, more regularized structure and enhanced texture information are utilized. It is consisted three parts: face parsing network, generator (mainly LDConv and FAD), and discriminator and works as follows. First, face structure is acquired by the face parsing network and regularized by FAD. Then, to enhance facial texture, an LDConv structure and multi-patch discriminator are constructed. The loss functions for these components are then described. Finally, the algorithm is listed.

Overview of the RSETNet architecture, it mainly consists of three modules: face parsing network, Generator and Multi-patch discriminator. Face parsing network aims to capture more face structure information by fine-tuning. Generator mainly includes ResBlock (see Fig. 4), Decoder block (D-block, see Fig. 5), LDconv and FAD block. We design LDconv to enhance texture information and generate more detailed features. FAD block is constructed to preferably reconstruct face structure information. The multi-patch discriminator is designed to generate more realistic images while greatly reducing the number of discriminator parameters

Face parsing

To obtain accurate face structure information (see \(P_t\) in Fig. 3), we feed blurred face images \(x_{b}\) into the parsing network and fine-tune it. Since five sense attributes of face segmentation are very unbalanced, we employ focal loss [14] in fine-tuning parsing network to face semantic segmentation. Formally,

where \(P_{t}^{'}\) denotes network prediction, \(P_{t}\) is the semantic label, and set the tunable focusing parameter \(\gamma =2.0\).

Generator

Our generator is composed of ResBlock, Decoder block (D-block), LDconv and FAD block. As shown in Fig. 3a, a series of ResBlocks makes up the encoder, which extracts both shallow and deep features. ResBlock consists of two residual modules, and through it, the feature map is downsampling by convolution with stride 2 (see Fig. 4). Decoder includes D-block, LDConv and FAD block. Unlike the original D-block, we first shrink the original m channels by 4 times and output it with n channels by up-sampling (see Fig. 5). This would drastically reduce the number of arguments. The details of ResBlock and D-block are as follows.

ResBlock: As shown in Fig. 4, it consists of two residual modules and each module includes convolution, spectral normalization (SN) and RELU activation functions. /2 denotes downsampling and reduces the feature map by 2 times. (m, n) denotes \((in_{channel}, out_{channel})\). The feature map is downsampling through a set of convolutional layers, and simultaneously is directly downsampling by another branch (see \(\textcircled {/2}\) branch), and finally is added by elements as the input of the second residual module. Similar operation is performed on the second residual module.

D-block: As shown in Fig. 5, it consists of three modules and each module includes convolution, batch normalization (BN), and RELU activation function (see Fig. 5). In Fig. 5, \(*2\) denotes an upsampling operation which enlarges the size of the feature graph by 2 times. m is the number of input channels in the feature map and the number of output channels is n. As shown in Fig. 5, first convolution reduces the number of channels of input feature map m into m/4, and after up-sampling by the second module, the last convolution layer enables the feature map to reach the target channel number n.

Laplace depth-wise separable convolution (LDConv) Liu et al. [15] pointed out that the shallow feature map from the encoder could represents the texture information of an image, while the deep feature map represents the structures. Similarly, we find that for decoder, its deep feature map does structural restoration, while its shallow feature map does texture restoration. Therefore, to enhance the texture information of the shallow feature map, Laplace operator (a type of differential operator) is introduced for edge sharpening. Formally, Eq. (2) shows how to calculate differential operator, which could enhance the texture information by combining surrounding pixels.

where the subscript (i, j) represents the location of pixels in the feature map f, and \(c \in \{1,\ldots ,n\}\) is the cth feature map channel. Since directly applying Laplace convolution on each channel of the feature map is inefficient, we adopt the mobilenet’s depth-wise separable convolution to solve that [2]. Formally,

where \(f_{c}\) is the cth channel of the feature map F, and \(W_{l}\) is the Laplace convolution kernel and \(W_{s}\) is a \(1\times 1\) convolution kernel. This generates grayscale mutation information and overwrites the original feature map, preserving both sharp and abrupt edges as well as background information. Thus, by convolution between F and \(W_s\) in LDConv (see Fig. 6), the texture information could be enhanced, and with it more detailed image.

Feature adaptive denormalization (FAD) block Some state-of-the-art methods [19, 21, 28] only employed face parsing as prior information to complete face deblurring without a suitable constraint on face structure. For face images, facial parts, skin, hair, and background have different mean and variance owning to their different attributes in representing information. However, batch normalization (BN), instance normalization (IN), and spectral normalization (SN), take the whole image as normalizing its uniform mean and variance without considering each face regions. To some extent, this results in the loss of various attributes of the face’s regions. Specifically, from Fig. 8 we can know that (a) original image can be divided into facial part, back part, hair part and skin part. Those parts have different mean and variance owning to their different attributes in representing information. If we use existing normalization methods taking whole image as normalizing, this would result in the loss of various attributes of the face’s regions. Inspired by the SPADE [18], we design the feature adaptive denormalization (FAD) Block to preferably restore face structure information. It as a supervision (\(P_t\)) provides adaptive effects to regular facial structure (F) and generate a more harmonious and undistorted face structure (see Fig. 3c).

Different from previous normalization methods or VAE sampling method, we first normalize the feature to the standard normal distribution with BN and use Face Parsing’s semantic segmentation labels (\(P_t\) divided into four regions: f, b, h, s (see Fig. 8)) to enable the network to learn the pixel-level mean and variance of each regions. To learn the distribution information of their respective regions, we regularize the generated face structure by the inverse normalization for making the normative constraints. Based on Pt as supervision, we can adaptively regular each face parts of F not as a whole image. As shown in Fig. 3c, we first feed the enhanced texture activation map F (3D tensor with \(C \times H \times W\) size) into a batch normalization (BN) to normalize F following normal distribution (see Eq. (4)), and then regularize facial structure by denormalization (see Eq. (6)), where C is the number of channels and \(H \times W\) denotes the spatial dimensions. This would make for generating more harmonious and undistorted face structure. Formally,

where \(\mu _{f}\) and \(\sigma _{f}^{2}\) are the mean and variance of F respectively, \(\gamma \) and \(\beta \) are the scale and shift, and small value \(\varepsilon \) is set for avoiding division by zero issue. To regularize face structure of \(F^{BN}\) according to face parsing \(P_t\) (see Fig. 3 (c)), its pixel-level mean \(\mu _{p}\) and variance \(\sigma _{p}^{2}\) are calculated by Eq. (5).

where \(W_{p}\) is a \(3 \times 3\) convolution kernel, and \(W_{\mu }\) and \(W_{\sigma }\) are convolution kernel to automatically learn its pixel-level mean and variance according to \(P_t\), respectively. That is to say, according to \(P_t\) (includes f, b, h, and s regions) as a supervision, Eq. (5) adaptively learns its pixel-level mean and variance of each regions. As shown in Fig. 3 (c), face parsing \(P_t\) is first subsampled to \(H \times W\) such that \(\mu _{p}\) and \(\sigma _{p}^{2}\) are with \(C\times H \times W\) size. Finally, each channel output \(F^{out}\) is obtained by feature adaptive denormalization:

where \(\otimes \) is multiplying by the elements. In this way, \(P_t\) as a supervision could regular facial structure (F), and facial information is restored structurally and prevent the damage of loss of facial information.

Multi-patch discriminator

Some studies illustrates that using multiple discriminators can produce better image but result in a large number of parameters for some GAN-based methods (e.g. GLCIC [5], FIFPNet [32], and LOAHNet [33]). To alleviate the problem and generate more realistic images, we introduce a multi-patch discriminator with different receptive fields and integrate one global discriminator to effectively supervise the generator (see Fig. 3a). The multi-patch discriminator consists of a series of SN Blocks which are composed of spectral normalization (SN) layers to stabilize discriminator. The details of SN block are shown in Fig. 7, which may increase the receptive field.

SN Block. It includes spectral normalization (SN), convolution and Relu activation functions. It consists of two branches in parallel. The kernel sizes of the first and second convolutional branch are \(4\times 4\) \(3\times 3\), respectively. In this way, the receptive field of SN block can be increased

Specifically, the last three SN blocks is followed by three \(3\times 3\) convolution to set their output feature maps with different patches \(8\times 8\), \(4\times 4\) and \(2\times 2\), respectively. The patch values of each position in different feature maps represent the perception results under different receptive fields. Finally, the output of last SN block is fed into the dense layer of the global discriminator to determine whether the whole image is real or fake. Both types of discriminators coexist and influence one another. The multi-patch discriminator obtains detailed information, while the global discriminator enhances the realistic and natural appearance of the generated image. Meanwhile, our multi-patch discriminator could greatly reduce the number of discriminator parameters so as to supervise the generator with multiple discriminators effectively.

Loss function

Multi-region reconstruction loss and adversarial loss are described in the following sections, and the loss of a face parsing network is mentioned in Eq. (1).

Multi-region reconstruction loss. Unlike some methods that directly use \(L_1\) loss to constraint the whole image, which may result in smooth results, our RSETNet focuses on face region reconstruction. Specially, to obtain precise face structure information from the blurred images, we first fine-turn the parsing network and divide the semantic face \(P_t\) into four regions: facial mask f, back mask b, hair mask h and skin mask s (see Fig. 8). To better restore these four regions, we construct four-region reconstruction loss (see Eq. (7)) to reconstruct face structure information, respectively. Formally,

where \(I_{g}\) is the generated face by the generator, \(I_ {t}\) is the target face, and N denotes the number of images in a batch, and \(i\in \{1, 2, \ldots , N\}\). The total reconstruction loss is shown in Eq. (8):

where set \(\lambda _{1}^\textrm{rec}=6\), \(\lambda _{2}^\textrm{rec}=10\), \(\lambda _{3}^\textrm{rec}=8\), \(\lambda _{4}^\textrm{rec}=12\) in our experiments. Importantly, the hyper-parameters determine how much attention the generator gives to different areas, for example, the face requires the most attention, while backgrounds are less important. Therefore, we give higher attention to face skin s and f and the lowest attention to the background. In this way, the multi-region reconstruction loss may compel the generator to concentrate on the facial structure generation and texture construction.

Adversarial loss. To supervise the generator, we integrate a global discriminator with a multi-patch discriminator. There are four outputs: three patch results at various scales and one global result. We employ the Relativistic adversarial loss [7, 8] as generator’s adversarial loss (see Eq. (9)):

where \(k\in \{1, 2, 3, 4\}\), \(D_{P_1}, \ldots ,D_{P_3}\) denote three patch discriminators with different receptive fields, and \(D_{P_4}\) denotes the global discriminator, \(sigmoid(\cdot )\) is sigmoid activation function and \(log(\cdot )\) is log function. Formally, the adversarial loss of generator can be defined as:

where \(\textrm{loss}_{g_k}^\textrm{adv}\) is from Eq. (10), we set \(\lambda _{4}^\textrm{adv}=1.0\), \(\lambda _{3}^\textrm{adv}=0.8\), \(\lambda _{2}^\textrm{adv}=0.4\), \(\lambda _{1}^\textrm{adv}=0.2\) in our experiments. According to the size of receptive field, we assign different degrees of attention. The larger the receptive field, the greater the weight.

Thus, the total loss of our generator is:

where set \(\lambda _1=120\) and \(\lambda _{2}=0.1\) in our experiments.

Similarly, our discriminator’s adversarial loss is composed of four components: three multi-patch discriminators and one global discriminator. Both generator and discriminator networks compete with each other to improve the accuracy of their generations. Formally, our adversarial loss of the discriminator can be defined as:

where \(k\in \{1, 2, 3, 4\}\) and \(D_{P_1},\ldots , D_{P_3}\) denote three patch discriminators, and \(D_{P_4}\) denotes the global discriminator. Thus, the adversarial loss of the entire discriminator can be written as:

where \(\textrm{loss}_{d}^\textrm{adv}\) and \(\textrm{loss}_{g}^\textrm{adv}\) share the same hyper-parameters. The whole algorithm is summarized in Algorithm 1.

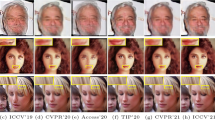

Qualitative comparisons on CelebA-HQ. a Blur image, b DeblurGAN (CVPR’18), c DeblurGAN-V2 (ICCV’19), d DMPHN (CVPR’19), e UMSN (TIP’20), f MPRNet (CVPR’21), g AirNet (CVPR’22), h RESTNet, i ground truth (GT). More results are shown in the Appendix B (see Fig. 15)

Qualitative comparisons on CelebA, a blur image, b DeblurGAN (CVPR’18), c DeblurGAN-V2 (ICCV’19), d DMPHN (CVPR’19), e UMSN (TIP’20), f MPRNet (CVPR’21), g RESTNet, h Ground truth (GT). More results are shown in the Appendix B (see Fig. 16)

Experiments

Experimental settings

Datasets. We have performed experiments on public face datasets to fully evaluate the validity of the proposed method. In the experiments, we use the following datasets: 160\(\times \)160 low-resolution CelebA [16] and 256\(\times \)256 high-resolution CelebA-HQ [9]. More concretely, the CelebA-HQ dataset is divided into 28, 000 training images, 1000 validation images and 1000 test images, respectively. Moreover, for CelebA-HQ dataset, We use a 25 blur kernel to blur the motion of the original image, with a 45 motion angle. Similarly, we divide CelebA into 162,770 training images, 19,867 validation images and 19,962 test images. Specially, we use the more complicated blur pattern to further evaluate our model on the CelebA. In detail, we use 3D camera trajectories [1] to produce 25,000 blur kernels with sizes ranging from \(13 \times 13\) to \(29 \times 29\). Furthermore, we generate millions of pairs of clean-blurry data using both \(160 \times 160\) image patches and convolution with 2500 blur kernels. In addition, we also apply Gaussian noise with \(\sigma =0.03\) to blurry images. Simultaneously, on the two clear datasets, the pre-trained face parsing network is used to construct the ground-truth of the semantic segmentation labels.

Training Details. We implement all the experiments in the Pytorch deep learning framework with an NVIDIA RTX 2080Ti GPU. In the experiment, we adopt a two-stage training strategy. In Stage-I, we utilize both focal loss and SGD optimizer with an initial learning rate of 0.02, \(momentum=0.9\), \(weight\_decay=0.0005\) to fine-tune the face parsing network. When the pre-trained face parsing network converges, we end Stage-I training. In Stage-II, we start to train the deblurring process. Furthermore, we employ the Adam optimizer with an initial learning rate of 0.0002, \(beta 1=0.5\), and \(beta 2=0.999\).

Experimental results

We compared our method (RSETNet) with five state-of-the-art methods: DeblurGAN (CVPR’18) [10], DeblurGAN-v2 (ICCV’19) [11], DMPHN (CVPR’19) [30], UMSN (TIP’20) [28], MPRNet (CVPR’21) [29], and AirNet (CVPR’22) [12]. Note that we selected their best performance results on both same datasets.

Qualitative comparisons on face parsing. The first row is blurry images. The second row is target images. The third row is the corresponding semantic masks obtained from the face parsing network on blurry faces without fine-tune. The fourth row is the corresponding semantic masks obtained from the face parsing network with fine-tuned blurry images. The fifth row is the corresponding semantic masks generated by the face parsing network with target images

Qualitative Comparisons. Figures 9 and 10 compare our approach to five state-of-the-art methods on both high-quality CelebA-HQ and low-quality CelebA datasets, respectively. Note that we selected their best performance results shown in Figs. 9 and 10. We can see that although DeblurGAN and DeblurGAN-V2 perform well on CelebA-HQ with a single type of blur kernel, they degrade quickly on CelebA with a more complex face blur. This demonstrates that DeblurGAN and DeblurGAN-v2 use feature matching to capture semantic information, but they do not consider pixel-level reconstruction. Therefore, it would result in the loss of face structure information and a poor performance on face detail reconstruction. DMPHN divides the image into multiple patches and employs MSE to complete face blur removal, which yields too smooth and unsharp images. UMSN completes the face deblurring using the semantic label of the face as prior information; it produces good results, but texture reconstruction is not meticulous. MPRNet achieves an optimal balance of spatial details and high-level contextualized information, but it also contains artifacts and lacks contrast. Both figures illustrate that our method achieve good performance than all the other methods, and generates clear and natural faces on both CelebA-HQ and CelebA. Specially, five SOTA methods fail to deblurring task when dealing with too blurry image (see the third row in Fig. 10). More results are shown in the Appendix B.

Furthermore, face parsing qualitative results is shown in Fig. 11. As can be observed from Fig. 11 that, when compared with the third row results without fine-tuning from blurry face, the fourth row results with fine-tuning from blurry face could achieve results similar to the fifth row results from the target images. This illustrates that our fine-tuning parsing network with focal loss can extract more accurate facial structure information from a blurred face.

Quantitative Comparisons. Four types of criteria is used to access the performance of various methods: (1) peak signal-to-noise ratio (PSNR); (2) Structural SIMilarity (SSIM); (3) \(L_1\) loss; and (4) Frechet inception distance (FID). PSNR based on the error between pixels measures the degree of image distortion. SSIM evaluates image quality in terms of brightness, contrast and structure. \(L_1\) evaluates images at the pixel level. FID comprehensively represents the distance between the Inception feature vectors of the generated image and the real image in the same domain.

Tables 1 and 2 show the qualitative results of different methods on the CelebA-HQ and CelebA datasets, respectively. we can observe that DeblurGAN and DeblurGAN-V2 achieve good results on the CelebA-HQ with a single-scale face deblurring kernel, but have little effect on the CelebA with a more complex face deblurring kernel. Our RSETNet achieves substantial improvement than all the other methods, in terms of PSNR, SSIM, \(L_1\) and FID.

The effect of different components in our model. a Blur face, b \(Without\; Parsing\), c \(Without\; LDConv\), d \(\text {RESETNet}\_L1\), e \(\text {RESETNet}\_DIS\), f RESTNET, g GT. More results are shown in the Appendix B (see Fig. 17)

To further study the effectiveness of these methods, we compared their performances from the point of view of statistics (box plot). The influence of different deblurring method are presented in Figs. 13 and 14 (see Appendix A). As can be observed from both tables and both figures, our method significantly advances the state-of-the-art by consistently achieving better PSNR, SSIM and \(L_1\) scores on all two datasets, i.e., the highest SSIM and PSNR and the lowest \(L_1\) with least variance change.

Ablation study

We further perform experiments to study the effect of the various part of our RSETNet. We investigate how the different combinations of our components affect our deblurring performance. We have performed five comparative experiments. As shown in Fig. 12, (a) blur face; (b) \(Without\; parsing\), which is without FAD and LDConv; (c) \(Without\; LDConv\), which is with FAD but without LDConv block; (d) \(\text {RSETNet}\_L_1\), which reconstructs the image as a whole rather than dividing it into multiple regions; (e) \(\text {RSETNet}\_DIS\), which only uses a common discriminator instead of multi-patch discriminator; (f) Our RSETNet; (g) ground truth (GT).

Box plot for face deblurring on the CelebA-HQ dataset. Comparing different SOTA methods, our RSETNet performs better than the state-of-the-art. See SSIM in Fig. 1

Qualitative and quantitative experimental results are shown in Fig. 12 and Table 3, respectively. Table 3 illustrates that (b) \(Without\; parsing\) (without FAD and LDConv) achieves the worst result in terms of PSNR, \(L_1\) loss and FID; (e) \(\text {RSETNet}\_DIS\) (without the multi-patch discriminator) is better in terms of PSNR, SSIM, and \(L_1\) loss but gets the lowest value in FID. Moreover, from Fig. 12, we know that (b) \(Without\; Parsing\) is worse at reconstructing structural information; (c) \(Without\; LDConv\) could enhance the structural information of face in face reconstruction than (b). Compared with RSETNet, (b) \(Without\; Parsing\) and (c) \(Without\; LDConv\), it is easy to known that LDConv could enhance the face texture information. Thus, combined with LDConv and FAD, our RSETNet was capable of generating high-quality face inpainting images with multi-patch discriminator and multi-region reconstruction loss. More qualitative results are shown in the Appendix B.

Conclusion

In this paper, we proposed an effective RSETNet network for Face deblurring. RSETNet achieves substantial improvement using more regularized structure and enhanced texture information. Our proposed FAD could regularize face structure and generate more harmonious and undistorted face structure and LDConv could enhance facial texture and produce more detailed facial texture. Furthermore, we designed a multi-region reconstruction loss and a multi-patch discriminator to supervise the network and restore high quality face images. Experiments show that our method has better performance than the state-of-the-art method. Furthermore, our method is easily adaptable to other deblurring tasks. To deblur an image with multiple faces, as with other face deblurring techniques, a combination of face detection techniques is required. We will concentrate on this issue in the future.

Data availability

The authors confirm that the data supporting the findings of this study are available within the articles (references [9] and [16]).

References

Boracchi G, Foi A (2012) Modeling the performance of image restoration from motion blur. IEEE Trans Image Process 21(8):3502–3517

Howard AG, Zhu M, Chen B, Kalenichenko D, Wang W, Weyand T, Andreetto M, Adam H (2017) Mobilenets: efficient convolutional neural networks for mobile vision applications. arXiv:1704.04861

Hu X, Ren W, LaMaster J, Cao X, Li X, Li Z, Menze B, Liu W (2020) Face super-resolution guided by 3d facial priors. In: European conference on computer vision. Springer, pp 763–780

Huang Y, Yao H, Zhao S, Zhang Y (2015) Towards more efficient and flexible face image deblurring using robust salient face landmark detection. Multimed Tools Appl 76(1):1–20

Iizuka S, Simo-Serra E, Ishikawa H (2017) Globally and locally consistent image completion. ACM Trans Graph 36(4):1–14

Jin M, Hirsch M, Favaro P (2018) Learning face deblurring fast and wide. In: 2018 IEEE conference on computer vision and pattern recognition workshops

Jolicoeur-Martineau A (2018) The relativistic discriminator: a key element missing from standard gan. arXiv:1807.00734

Jolicoeur-Martineau A (2019) On relativistic f-divergences. arXiv:1901.02474

Karras T, Aila T, Laine S, Lehtinen J (2017) Progressive growing of gans for improved quality, stability, and variation. arXiv:1710.10196

Kupyn O, Budzan V, Mykhailych M, Mishkin D, Matas J (2018) Deblurgan: blind motion deblurring using conditional adversarial networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 8183–8192

Kupyn O, Martyniuk T, Wu J, Wang Z (2019) Deblurgan-v2: deblurring (orders-of-magnitude) faster and better. In: Proceedings of the IEEE international conference on computer vision, pp 8878–8887

Li B, Liu X, Hu P, Wu Z, Lv J, Peng X (2022) All-in-one image restoration for unknown corruption. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 17452–17462

Li H, Zhang Z, Jiang T, Luo P, Feng H, Xu Z (2023) Real-world deep local motion deblurring. In: 2023 association for the advancement of artificial intelligence. IEEE, pp 1–12

Lin T-Y, Goyal P, Girshick R, He K, Dollár P (2017) Focal loss for dense object detection. In: Proceedings of the IEEE international conference on computer vision, pp 2980–2988

Liu H, Jiang B, Song Y, Huang W, Yang C (2020) Rethinking image inpainting via a mutual encoder-decoder with feature equalizations. arXiv:2007.06929

Liu Z, Luo P, Wang X, Tang X (2015) Deep learning face attributes in the wild. In: Proceedings of international conference on computer vision

Pan J, Hu Z, Su Z, Lee H-Y, Yang M-H (2016) Soft-segmentation guided object motion deblurring. In: Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, pp 459–468

Park T, Liu M-Y, Wang T-C, Zhu J-Y (2019) Semantic image synthesis with spatially-adaptive normalization. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2337–2346

Shen Z, Lai W-S, Xu T, Kautz J, Yang M-H (2018) Deep semantic face deblurring. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 8260–8269

Shen Z, Lai WS, Xu T, Kautz J, Yang MH (2020) Exploiting semantics for face image deblurring. Int J Comput Vis (IJCV) 128(8):1829–46

Shen Z, Lai W-S, Xu T, Kautz J, Yang M-H (2020) Exploiting semantics for face image deblurring. Int J Comput Vis

Song Y, Zhang J, Gong L, He S, Bao L, Pan J, Yang Q, Yang M-H (2019) Joint face hallucination and deblurring via structure generation and detail enhancement. Int J Comput Vis 127(6–7):785–800

Tao X, Gao H, Shen X, Wang J, Jia J (2018) Scale-recurrent network for deep image deblurring. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 8174–8182

Vardeman S (1984) Graphical methods for data analysis. J Qual Technol 16(3):177–178

Tang X, Zhao X, Liu J, Wang J, Miao Y, Zeng T (2023) Uncertainty-aware unsupervised image deblurring with deep residual prior. In: 2023 computer vision and pattern recognition. IEEE, pp 1–12

Xu L, Jia J (2010) Two-phase kernel estimation for robust motion deblurring. In: European conference on computer vision. Springer, pp 157–170

Xu X, Sun D, Pan J, Zhang Y, Pfister H, Yang M-H (2017) Learning to super-resolve blurry face and text images. In: Proceedings of the IEEE international conference on computer vision, pp 251–260

Yasarla R, Perazzi F, Patel VM (2020) Deblurring face images using uncertainty guided multi-stream semantic networks. IEEE Trans Image Process 29:6251–6263

Zamir SW, Arora A, Khan S, Hayat M, Khan FS, Yang M-H, Shao L (2021) Multi-stage progressive image restoration. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 14821–14831

Zhang H, Dai Y, Li H, Koniusz P (2019) Deep stacked hierarchical multi-patch network for image deblurring. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5978–5986

Zhang H, Shi C, Zhang X, Wu L, Li X, Peng J, Wu X, Lv J (2022) Multi-scale progressive blind face deblurring. Complex Intell Syst 9(2):1439–53

Zhang X, Shi C, Wang X, Wu X, Li X, Lv J, Mumtaz I (2021) Face inpainting based on gan by facial prediction and fusion as guidance information. Appl Soft Comput 111:107626

Zhou T, Ding C, Lin S, Wang X, Tao D (2020) Learning oracle attention for high-fidelity face completion. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7680–7689

Acknowledgements

This work was supported by the Sichuan Science and Technology program (Grant Nos. 2023ZHCG0018, 2023NSFSC0470, 2020JDTD0020, 2022YFG0026, 2021YFG0018, 23ZHSF0169) and National Natural Science Foundation of China (Grant No. 42130608).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix: A Box plot

To further study the effectiveness of these methods, we compared their performances from the point of view of statistics (box plot, see Figs. 13 and 14). As can be observed from both figures and Tables 1 and 2, our method achieves a mean PSNR of 29.21, a mean SSIM of 0.878 and a mean \(L_1\) value of 2.83 on CelebA-HQ dataset, and achieves a mean PSNR of 30.04, a mean SSIM of 0.941 (see Fig. 1) and a mean \(L_1\) value of 2.23 on CelebA dataset. Comparing variances by box plot (see Figs. 13 and 14), it is easy to see that our method has narrower boxes (smaller variances) and fewer outliers. This illustrates that our method has a significant effect on face deblurring with respect to both location and variation, and advances state-of-the-art by consistently achieving better PSNR, SSIM and \(L_1\) scores on all two datasets. Note that it is can compute the box plot of FID.

Appendix B: More experimental results

More qualitative comparisons on CelebA-HQ and CelebA dataset are illustrated (see Figs. 15 and 16). Figure 17 shows more ablation study results to illustrate the effect of different components in our model.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shi, C., Zhang, X., Li, X. et al. Face deblurring based on regularized structure and enhanced texture information. Complex Intell. Syst. 10, 1769–1786 (2024). https://doi.org/10.1007/s40747-023-01234-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-023-01234-w