Abstract

Constrained multi-objective optimization problems (CMOPs), which contain multiple constraints to be satisfied and multiple conflicting objectives to be optimized simultaneously, are widespread in the real world. The challenge in addressing CMOPs is therefore how to balance constraints and objectives. To address this issue, this paper proposes a novel dual-population constrained multi-objective evolutionary algorithm in which two populations with different roles are employed. Specifically, the main population considers both objectives and constraints to solve the original CMOP, while the auxiliary population optimizes only the objectives and ignores the constraints. In addition, a dynamic population size reducing mechanism is proposed to adjust the size of the auxiliary population, so as to reduce the consumption of computing resources in the later stage. Moreover, an independent external archive stores the feasible solutions found by the auxiliary population, so as to provide high-quality feasible solutions for the main population. Experimental results on 55 benchmark functions show that the proposed algorithm exhibits superior or at least competitive performance compared with other state-of-the-art algorithms.

Introduction

Many optimization problems in the real world usually contain multiple objective functions and complex constraints, which can be collectively referred to as constrained multi-objective optimization problems (CMOPs) [1,2,3]. Generally, CMOPs can be defined by the following formula [4]:
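
For a minimization problem, the standard form consistent with the symbols defined next can be written as follows; the counts p (inequality constraints) and q (all constraints) are index bounds assumed here:

\[
\begin{aligned}
\min \quad & F(\textbf{x}) = (f_{1}(\textbf{x}), f_{2}(\textbf{x}), \ldots , f_{m}(\textbf{x}))^{T} \\
\text{s.t.} \quad & g_{j}(\textbf{x}) \le 0, \quad j = 1, \ldots , p \\
& h_{j}(\textbf{x}) = 0, \quad j = p+1, \ldots , q \\
& \textbf{x} = (x_{1}, x_{2}, \ldots , x_{D})^{T} \in \Omega
\end{aligned}
\]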

where \(F(\textbf{x})\) is the objective vector to be optimized, consisting of m objectives; \(\textbf{x}\) is the D-dimensional decision vector in the decision space \(\Omega \); \(g_{j}(\textbf{x})\) stands for the jth inequality constraint; and \(h_{j}(\textbf{x})\) represents the \((j-p)^{\textrm{th}}\) equality constraint. The constraint violation of \(\textbf{x}\) on the jth constraint is usually defined as:
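
Assuming the p inequality constraints are indexed first and the equality constraints run from p+1 to q (index bounds assumed here), the usual definition is:

\[
CV_{j}(\textbf{x}) = \begin{cases} \max (0, g_{j}(\textbf{x})), & 1 \le j \le p \\ \max (0, |h_{j}(\textbf{x})| - \theta ), & p+1 \le j \le q \end{cases}
\]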

where \(\theta \) is a very small constant used to relax the boundary of equality constraints. The total constraint violation degree of \(\textbf{x}\) needs to consider all equality constraints and inequality constraints, so it is defined as:
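
With \(CV_{j}(\textbf{x})\) denoting the violation of \(\textbf{x}\) on the jth constraint and q the total number of constraints (both symbols assumed here), the total violation is commonly written as the sum of the per-constraint violations:

\[
CV(\textbf{x}) = \sum _{j=1}^{q} CV_{j}(\textbf{x})
\]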

Based on the above concepts, when \(CV(\textbf{x})\) is equal to zero, \(\textbf{x}\) is called a feasible solution; otherwise, \(\textbf{x}\) is called an infeasible solution.
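
The feasibility test can be illustrated with a minimal Python sketch. It assumes the common convention that inequality constraints are written as g(x) ≤ 0 and equality constraints as h(x) = 0; the function names and the default tolerance `theta = 1e-4` are illustrative choices, not values taken from the paper.

```python
def constraint_violation(g_values, h_values, theta=1e-4):
    """Total constraint violation CV(x) from already-evaluated constraints.

    g_values: values g_j(x) of the inequality constraints (g_j(x) <= 0 is satisfied).
    h_values: values h_j(x) of the equality constraints (h_j(x) = 0 is satisfied).
    theta:    small tolerance relaxing the equality constraints (assumed default).
    """
    inequality_part = sum(max(0.0, g) for g in g_values)
    equality_part = sum(max(0.0, abs(h) - theta) for h in h_values)
    return inequality_part + equality_part


def is_feasible(g_values, h_values, theta=1e-4):
    """A solution is feasible exactly when its total violation is zero."""
    return constraint_violation(g_values, h_values, theta) == 0.0
```

For example, a solution with g = (-1.0, 0.5) and h = (0.00005,) violates only the second inequality, so its total violation is 0.5 and it is infeasible.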

For two feasible solutions \(\mathbf {x_{1}}\) and \(\mathbf {x_{2}}\), \(\mathbf {x_{2}}\) is dominated by \(\mathbf {x_{1}}\) when the following conditions are met: \(F(\mathbf {x_{1}})\) is not worse than \(F(\mathbf {x_{2}})\) in any objective, and \(F(\mathbf {x_{1}})\) is better than \(F(\mathbf {x_{2}})\) in at least one objective [5]. Furthermore, if a feasible solution \(\mathbf {x^{*}}\) is not dominated by any other feasible solution, then \(\mathbf {x^{*}}\) is called a Pareto-optimal solution. All Pareto-optimal solutions constitute the feasible Pareto set (PS), and the mapping of the PS into the objective space forms the feasible/constrained Pareto front (CPF). The purpose of solving a CMOP is to find a set of solutions that is well distributed on the CPF. This is not a simple task because of the conflicting objectives and the multiple complex constraints.
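
The dominance relation above can be sketched in a few lines of Python. The sketch assumes minimization of every objective and represents each solution by its tuple of objective values; `dominates` and `non_dominated` are hypothetical helper names used only for illustration.

```python
def dominates(f1, f2):
    """True if objective vector f1 Pareto-dominates f2 (minimization assumed)."""
    not_worse = all(a <= b for a, b in zip(f1, f2))
    strictly_better = any(a < b for a, b in zip(f1, f2))
    return not_worse and strictly_better


def non_dominated(points):
    """Return the points that are not dominated by any other point in the list."""
    return [p for p in points
            if not any(dominates(q, p) for q in points if q is not p)]
```

Applied to the objective vectors (1, 3), (2, 2), (3, 1), (2, 3), and (3, 3), the filter keeps the first three, since (2, 3) and (3, 3) are both dominated by (2, 2).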

To handle the trade-offs among several conflicting objectives, numerous multi-objective evolutionary algorithms (MOEAs) have been proposed over the past few decades and have shown excellent performance in solving unconstrained multi-objective optimization problems. These MOEAs can be classified into three categories according to their selection mechanisms: dominance-based [6], decomposition-based [7], and indicator-based [8] methods. Of these, dominance-based methods have received a lot of attention because of their simplicity and ease of implementation. However, none of these methods can be directly used to solve CMOPs, since they cannot effectively handle constraints. To address this issue, constraint handling techniques (CHTs) are designed to compare feasible and infeasible solutions, and they are generally combined with MOEAs to form constrained MOEAs (CMOEAs) [9].

The way CHTs and MOEAs are combined is very important: different combinations produce different effects and greatly affect the performance of the algorithm. If the algorithm favors constraint satisfaction, the population will easily find feasible solutions but may become trapped in a locally feasible region; if the algorithm gives priority to optimizing the objectives, the diversity of the population will be enhanced, but the population may fail to find feasible solutions. Therefore, in order to balance objectives and constraints, researchers have turned to dual-population and two-stage mechanisms [10, 11]. Evidence suggests that both types of mechanism can balance objectives and constraints well. In two-stage methods, the population generally searches for the unconstrained Pareto front (UPF) in the first stage to exploit the information of the objectives, and gradually converges from the UPF to the CPF in the second stage. However, the UPF information is ignored in the second stage, so population diversity cannot be maintained well. Moreover, if too many computing resources are spent in the first stage, those left for the second stage will be insufficient, and the population will not converge to the true CPF. In dual-population mechanisms, the first (main) population considers both objectives and constraints; it is used to search for the CPF and to ensure the feasibility of the output population. The second (auxiliary) population explores the UPF without considering constraints, which helps the first population improve its diversity. However, if the UPF and CPF do not overlap, finding the UPF provides little help to the main population in the later stage, and the computing resources consumed by the second population in that stage are wasted. It is therefore necessary to consider the resource allocation for the auxiliary population.

Based on the above discussion, this paper designs a novel dual-population algorithm, named DPVAPS. The main contributions of this paper are as follows:

1. A dynamic population size reducing mechanism is proposed, so that the size of the auxiliary population gradually decreases during the evolution. When the UPF and CPF do not completely overlap, the proposed mechanism reduces the resource consumption of the auxiliary population in the later stage, so that more computing resources are allocated to the main population to search for the CPF.

2. An external archive is used to save the feasible solutions found by the auxiliary population. It provides additional, different feasible solutions for the main population, thereby increasing the diversity of the main population.

3. Systematic and comprehensive experiments are carried out on five test suites to verify the effectiveness of the proposed algorithm.

The rest of this paper is organized as follows. Existing CMOEAs are reviewed in “Literature review”. “The proposed algorithm” describes the proposed DPVAPS algorithm. The experimental results are given in “Experimental study”. The conclusion of the paper and future work are presented in “Conclusion”.

Literature review

In this section, the related work on CMOEAs is briefly introduced. Generally speaking, the CHTs and the internal mechanisms used by CMOEAs play a decisive role in the performance of the algorithms. From this perspective, CMOEAs can be divided into the following categories: (1) penalty function methods [12,13,14]; (2) methods that consider constraints and objectives separately [15,16,17]; (3) two-stage methods [18,19,20]; and (4) dual-population methods [21,22,23].

The penalty function method is one of the simplest CHTs. It applies a penalty coefficient to the constraint violation degree of an individual, thus transforming the constrained optimization problem into an unconstrained one, and MOEAs are then employed to solve the transformed problem. The penalty coefficient has a significant impact on the performance of the penalty function method. Penalty methods are classified as static or dynamic according to whether the penalty coefficient changes with the evolutionary generation. In the static method the weights of objectives and constraints are constant, which is detrimental to balancing them, and the performance of the static method drops dramatically once the problem becomes complex. Therefore, the dynamic method is very popular for solving CMOPs. Jan et al. [24] proposed an adaptive dynamic penalty function method based on a threshold and embedded it into the multiobjective evolutionary algorithm based on decomposition (MOEA/D) framework to solve CMOPs. Jiao et al. [25] designed a feasible-guiding strategy, in which a new fitness value is obtained from the objective functions modified by the constraint violation degree. In addition, Ma et al. [26] assigned two rankings to each individual, one based on the objective function values and the other based on the constraint domination principle (CDP). The two rankings are biased toward the objectives and the constraints, respectively, and the proportion of feasible solutions in the population is used to weight them. Although the dynamic method performs significantly better than the static method, it is very difficult to set appropriate change rules.

The methods that consider constraints and objectives separately mainly include CDP [27], stochastic ranking (SR) [28], and the \(\epsilon \) constrained method [29]. For CDP, the dominance relation between individual \(\textbf{x}\) and individual \(\textbf{y}\) is defined as follows:

(a) \(\textbf{x}\) is feasible and \(\textbf{y}\) is infeasible; then \(\textbf{x}\) dominates \(\textbf{y}\).

(b) Both \(\textbf{x}\) and \(\textbf{y}\) are feasible, and \(\textbf{x}\) has better objective function values than \(\textbf{y}\); then \(\textbf{x}\) dominates \(\textbf{y}\).

(c) Neither \(\textbf{x}\) nor \(\textbf{y}\) is feasible, and \(CV(\textbf{x})<CV(\textbf{y})\); then \(\textbf{x}\) dominates \(\textbf{y}\).
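
The three CDP rules can be sketched as a single comparison function. This is an illustrative Python sketch, not code from [27]: it assumes minimization, reads "better objective function values" in rule (b) as ordinary Pareto dominance, and uses hypothetical function names.

```python
def pareto_dominates(fx, fy):
    """Ordinary Pareto dominance on objective vectors (minimization assumed)."""
    return (all(a <= b for a, b in zip(fx, fy))
            and any(a < b for a, b in zip(fx, fy)))


def cdp_dominates(fx, cv_x, fy, cv_y):
    """True if x dominates y under the constraint domination principle.

    fx, fy:     objective vectors of x and y.
    cv_x, cv_y: total constraint violations (zero means feasible).
    """
    x_feasible, y_feasible = cv_x == 0, cv_y == 0
    if x_feasible and not y_feasible:      # rule (a)
        return True
    if x_feasible and y_feasible:          # rule (b)
        return pareto_dominates(fx, fy)
    if not x_feasible and not y_feasible:  # rule (c)
        return cv_x < cv_y
    return False                           # x infeasible, y feasible
```

Note that under these rules a feasible solution always beats an infeasible one regardless of objective values, which is the bias toward constraints discussed in the text.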

CDP is widely used by researchers since it is easy to implement and parameter-free. The most typical algorithm is NSGA-II-CDP [27], which helps the population find the feasible region quickly and improves the convergence of the population. However, CDP pays too much attention to the constraints; as a result, the population easily falls into a local optimum, especially on complex problems. To overcome this shortcoming, SR and the \(\epsilon \) constrained method are designed to take the information of the objectives into account. In SR, a probability parameter determines whether a comparison between individuals is based on CDP or on the objective functions. In this way, the information of the objectives is used to some extent and the diversity of the population is enhanced. However, improper parameter settings make it difficult for the population to converge. The \(\epsilon \) constrained method uses the parameter \(\epsilon \) to relax the constraints, and all individuals in the population whose constraint violation degree is less than \(\epsilon \) are regarded as feasible solutions. \(\epsilon \) gradually decreases during the evolution, and the information of the objectives is also used to guide the population. However, it is difficult to find an appropriate change rule, and setting the initial value of \(\epsilon \) is very challenging.

Two-stage methods divide the evolution of the population into two stages that perform different functions. Fan et al. [18] put forward the push and pull search (PPS) framework, whose first stage ignores all constraints and focuses on exploring the UPF. In the second stage, the \(\epsilon \) constrained method is employed to pull the population back from the UPF to the CPF. However, if the UPF is difficult for the population to find, too many computing resources are consumed in the first stage, leaving insufficient resources for the second stage, so that the complete CPF cannot be found. In [30], a two-phase framework, ToP, was proposed by Liu and Wang. The first phase transforms the CMOP into a constrained single-objective optimization problem by weighting all objectives, with the aim of finding feasible regions. In the second phase, a CMOEA is employed to explore the complete CPF. Nevertheless, the performance of the first phase is not satisfactory on problems with small or discrete feasible regions. Tian et al. [31] designed a two-stage CMOEA consisting of stage A and stage B. Stage A assigns the same priority to the objectives and the constraints, with the aim of finding all feasible regions. In stage B the constraints have a higher priority than the objectives, so as to drive the population to converge to the Pareto front. However, some valuable objective information is lost, since the priority of the objectives is never greater than that of the constraints. Liang et al. [32] explored the relationship between the UPF and the CPF in a learning stage, and then used this relationship to guide the population in an evolutionary stage. In general, the design of the two-stage mechanism is critical, and the transition condition between stages must be reasonable, since it greatly affects the performance of the algorithm in solving CMOPs. Moreover, current two-stage algorithms ignore the objective information in the second stage.

To solve the above issues, some dual-population algorithms have been proposed. In [22], a dual-population algorithm named CTAEA was proposed. It uses two archive populations: one archive considers both constraints and objectives, while the other considers only objectives. Information is exchanged between the two archives during both offspring generation and environmental selection. However, this strong correlation between the two populations slows down the search. To circumvent this disadvantage, Tian et al. [21] developed a co-evolutionary framework (CCMO) based on weak correlation, in which the first population mainly solves the original CMOP and the second population converges to the UPF by ignoring the constraints. The two populations produce offspring independently and exchange information only during environmental selection. Qiao et al. [33] suggested an evolutionary multitasking-based CMO framework (EMCMO). Compared with CCMO, EMCMO considers the types of problems and analyzes the information of different individuals. However, its performance is not satisfactory on problems whose UPF is far from the CPF. In [23], a dual-population algorithm named BiCo was proposed, which uses two populations to approximate the CPF from different directions. The above dual-population algorithms all use two populations to consider constraints and objectives respectively, and rely on information exchange to balance the two. However, when the UPF and CPF do not completely overlap, the second population offers less help to the first population in the later stage. If the auxiliary population and the main population are still given the same computing resources at that point, the resources wasted by the auxiliary population degrade the performance of the algorithm. Therefore, it is necessary to reduce the resource consumption of the second population, so that the main population can use more resources to find the CPF.

To solve the above issues in dual-population algorithms, this paper proposes a novel dual-population algorithm with a dynamic population size reducing mechanism, which saves the computing resources occupied by the auxiliary population in the later stage. A more detailed description is presented in the next section.

The proposed algorithm

The procedure of the proposed algorithm

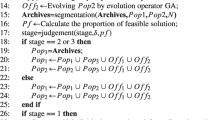

The overall framework and main procedure of the proposed algorithm are shown in Fig. 1 and Algorithm 1, respectively. First, the two populations are randomly initialized. \(POP_{1}\) is the main population and is mainly responsible for searching for the CPF. The CDP method, which has a strong ability to find feasible solutions, is employed by the main population to improve feasibility. The second population \(POP_{2}\) is the auxiliary population, which does not consider any constraint and adopts the fast non-dominated sorting method to push the population toward the UPF, so as to use infeasible solutions to expand the search. As shown in lines 6–9, each of the two populations uses a genetic algorithm (GA) to generate offspring. More specifically, tournament selection is used to select mating parents from the population, and simulated binary crossover (SBX) [34] and polynomial mutation (PM) [35] are employed to produce offspring from the selected mating parents. Information is exchanged between the two populations mainly by sharing offspring during environmental selection, as shown in lines 14–15. Since the auxiliary population ignores all constraints, its convergence and diversity are better than those of the main population. Therefore, the auxiliary population reaches the feasible region earlier than the main population and can provide more search directions to it. In this way, the diversity of the main population is improved.

As shown in line 15, the dynamic adjustment of the auxiliary population size is mainly realized by a dynamic coefficient \(\delta \), which decreases as the number of evolutionary generations increases. For a CMOP, if the UPF and CPF do not completely overlap, the information of the UPF in the later stage is not very helpful for the main population to search for the CPF. As the size of the auxiliary population decreases, the computing resources it occupies in the later stage also decrease. In this way, more computing resources can be allocated to the main population. As shown in lines 16–19, the external archive A is mainly used to store the feasible solutions found by the auxiliary population, so as to provide more information to the main population during environmental selection. When the size of A exceeds NP, A is truncated [21].

Dynamic population size reducing mechanism

The dynamic population size reducing mechanism is designed with the purpose of avoiding the waste of computing resources in the later evolution stage. During the evolution of the two populations, the infeasible regions will not hinder the evolution of the auxiliary population since it does not consider any constraints, so it will converge faster than the main population. At this point, the information of the auxiliary population will help the main population to cross those large infeasible regions and reach the optimal feasible region. Moreover, the auxiliary population will provide more evolutionary directions for the main population to increase its diversity. However, after the auxiliary population reaches the UPF, its offspring will also be generated in the vicinity of the UPF and no more beneficial information will be produced. Even if more computing resources are given to the auxiliary population at this time, the help to the main population will be very limited. In contrast, the main population requires more computational resources to explore the CPF. Therefore, a dynamic population size reducing mechanism is designed to avoid the waste of computing resources by the auxiliary population and to allocate more resources to the main population.

To illustrate the dynamic population size reducing mechanism, an example is provided in Fig. 2.

In this case, the auxiliary population can provide only very limited assistance to the main population in the later stage, because the information of the auxiliary population cannot help the main population improve its diversity on the CPF. If the same computing resources are still allocated to the two populations at this time, that is, if the auxiliary population keeps the same size as the main population, limited computing resources are wasted by the auxiliary population. Therefore, a dynamic coefficient \(\delta \) that changes the size of the auxiliary population is defined as follows:

where \(|Gen |\) and \(|Gen_{max} |\) represent the current generation and the maximum generation, respectively. \(\delta _{min}\) represents the minimum of \(\delta \). Figure 3 shows the changing rule of dynamic coefficient \(\delta \) with the continuous increase of evolutionary generation. Given that the initial size of the auxiliary population is 100 and the \(\delta _{min}\) is 0.1, then as \(\delta \) decreases from 1 to 0.1, the size of the auxiliary population will gradually decrease and become 10 in the last generation. In this way, more computing resources can be used by the main population in the later stage, thereby improving diversity of the main population on the CPF. It should be noted that we have verified that the algorithm can achieve the best performance when \(\delta _{min}\) is set to 0.1.
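
To make the worked numbers concrete, the following Python sketch computes the auxiliary population size per generation. The exact formula for \(\delta \) is not reproduced here, so the sketch assumes a simple linear decay from 1 to \(\delta _{min}\); this schedule, the rounding rule, and the function names are all assumptions for illustration and may differ from the curve in Fig. 3.

```python
def delta_linear(gen, gen_max, delta_min=0.1):
    """ASSUMED schedule: delta falls linearly from 1 (gen = 0) to delta_min (gen = gen_max)."""
    return 1.0 - (1.0 - delta_min) * gen / gen_max


def auxiliary_size(initial_size, gen, gen_max, delta_min=0.1):
    """Auxiliary population size at generation gen, rounded and kept at least 1."""
    return max(1, round(initial_size * delta_linear(gen, gen_max, delta_min)))


if __name__ == "__main__":
    # Initial size 100 and delta_min = 0.1, as in the example in the text.
    for gen in (0, 150, 300, 450, 600):
        print(gen, auxiliary_size(100, gen, 600))
```

Under this assumed schedule the size starts at 100 and ends at 10 in the last generation, matching the end points described above; only the shape of the decay in between is a guess.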

External archive

In an attempt to increase the diversity of main population, the external archive A is created. Figure 4 illustrates the importance of setting an archive.

Archive A saves the feasible solutions encountered by \(POP_{2}\) during the evolutionary process. Because the auxiliary population does not consider constraints, there is a high probability that high-quality feasible solutions in the auxiliary population are dominated by infeasible solutions and are therefore eliminated in the environmental selection stage. In addition, these feasible solutions are not exactly the same as the feasible solutions found by the main population. If they are preserved, they complement the main population by covering areas it has not searched, which is extremely helpful to the diversity of the main population. Most importantly, they help the main population achieve a better distribution on the CPF.
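
A minimal sketch of such an archive update is given below in Python. Each solution is represented as a hypothetical `(objectives, cv)` pair, where `cv` is the total constraint violation. The truncation rule used here (repeatedly dropping the member closest to its nearest neighbour in objective space) is only a stand-in chosen for illustration; it is an assumption and not necessarily the truncation procedure of [21].

```python
import math


def update_archive(archive, offspring, capacity):
    """Add feasible offspring to the archive and truncate it to `capacity` (>= 1).

    archive, offspring: lists of (objectives, cv) pairs.
    """
    # Only feasible solutions (zero total constraint violation) enter the archive.
    merged = archive + [s for s in offspring if s[1] == 0.0]

    # ASSUMED truncation: remove the most crowded member until the size fits.
    while len(merged) > capacity:
        def nearest_distance(i):
            return min(math.dist(merged[i][0], merged[j][0])
                       for j in range(len(merged)) if j != i)
        most_crowded = min(range(len(merged)), key=nearest_distance)
        merged.pop(most_crowded)
    return merged
```

A distance-based rule of this kind favours a spread-out archive, which fits the stated goal of supplying the main population with feasible solutions from regions it has not yet covered.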

Analysis for different evolution stages of DPVAPS

In this paper, the dual-population mechanism is used, and the size of the second population decreases as the number of generations increases. CCMO [21] also uses the dual-population mechanism, and both algorithms use NSGA-II as the basic optimizer. Their differences are compared here:

In CCMO, the purpose of the first population is to search for the CPF, while the second population mainly focuses on exploring the UPF. The size of the second population is always the same as that of the first population, so the two populations consume the same computing resources throughout the evolution. In addition, the second population lacks a mechanism to save feasible solutions, so the feasible solutions it finds are not fully utilized by the first population, resulting in a waste of knowledge. In DPVAPS, by contrast, the size of the second population decreases dynamically during evolution, so more computing resources are used by the main population. In addition, the feasible solutions found by the second population are saved to provide effective information for the main population and to help it search the CPF more completely. These are the two main differences between DPVAPS and CCMO.

In addition, to further study the efficiency of the proposed algorithm, the benchmark problem LIRCMOP8 is selected for comparative experiments. LIRCMOP8 has many large infeasible regions that block the evolution of the population; its UPF is located in the infeasible region, while the CPF lies on the boundary of the feasible region. The population distributions of NSGA-II, CCMO, and DPVAPS in the objective space in the early, middle, and later stages on LIRCMOP8 are shown in Fig. 5. In the early stage of evolution, the main populations of the three algorithms begin to converge toward the CPF, and some individuals have reached its vicinity. In addition, the auxiliary populations of CCMO and DPVAPS are more dispersed because they do not consider any constraints. In the middle stage of evolution, some individuals of NSGA-II reach the CPF, while the others are blocked by large infeasible regions and stay far away from the CPF. The main populations of CCMO and DPVAPS are almost all distributed around the CPF, and their auxiliary populations are all near the UPF. The main reason is that their auxiliary populations do not consider constraints, which helps the main populations cross large infeasible regions to reach the CPF. In the later stage of evolution, the population of NSGA-II is similar to that in the middle stage. Some individuals are distributed on the CPF, while those blocked by the infeasible regions still stagnate away from the CPF. This is because NSGA-II lacks a mechanism to help the population cross the infeasible regions, so individuals are easily hindered by them and cannot reach the optimal feasible region. In contrast, all individuals in the main populations of CCMO and DPVAPS reach the CPF. The CPF covered by CCMO is incomplete: its upper left corner is not found. The main population of DPVAPS, by comparison, is more evenly and completely distributed on the CPF. Both auxiliary populations have reached the UPF. However, the auxiliary population of DPVAPS has been reduced to 10 individuals, while the auxiliary population of CCMO is still the same size as its main population. In this way, more computing resources can be used by the main population of DPVAPS. Moreover, the external archive in DPVAPS saves the feasible solutions found by the auxiliary population and fills in the areas not searched by the main population. That is why the convergence and uniformity of the main population of DPVAPS are better than those of CCMO.

Experimental study

In this section, to test the performance of the proposed algorithm, it is compared with five state-of-the-art CMOEAs: NSGA-II [27], CCMO [21], BiCo [23], PPS [18], and ToP [30]. NSGA-II is one of the most classical CMOEAs and is often chosen as the optimizer of the main population in dual-population mechanisms; CCMO and BiCo are classical dual-population algorithms; and PPS and ToP are representative two-stage algorithms.

The experiments are carried out on five commonly used test sets, i.e., MW [36], LIRCMOP [37], DTLZ [22], CTP, and DASCMOP [38]. The specific settings of the number of objectives and dimensions are shown in Table 1. In addition, the population size NP is set to 100, the maximum number of evaluations is set to 60000, and each algorithm independently runs 30 times on each benchmark function. For the sake of fairness, the evolutionary strategies of all comparison algorithms are GA, and other parameters are the same as their original papers. The inverted generational distance (IGD) [39] and feasible rate (FR) are employed as indicators to measure the performance of the algorithms. IGD reflects the convergence and diversity of the algorithm. The smaller the IGD value of the algorithm is, the better its performance will be. FR highlights the ability of the algorithm to search for feasible solutions. The larger the value, the stronger the algorithm’s ability. All comparative experiments are performed on the PlatEMO [40] platform.
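
For reference, the IGD indicator can be sketched in plain Python as the mean distance from each reference point on the true front to its nearest obtained solution. This is the commonly used definition with Euclidean distance; the function name and the list-of-tuples representation are illustrative assumptions, and the experiments themselves rely on the PlatEMO implementation.

```python
import math


def igd(reference_front, obtained):
    """Inverted generational distance (smaller is better).

    reference_front: points sampled from the true Pareto front.
    obtained:        objective vectors of the solutions returned by an algorithm.
    """
    total = sum(min(math.dist(r, s) for s in obtained) for r in reference_front)
    return total / len(reference_front)
```

With reference points (0, 1) and (1, 0) and obtained solutions (0, 1) and (1, 1), the nearest distances are 0 and 1, so the IGD is 0.5. Because every reference point contributes, a solution set that converges well but misses part of the front is still penalized, which is why IGD reflects both convergence and diversity.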

Verification of the proposed mechanisms

The dynamic population size reducing mechanism and the external archive are the two main contributions of this paper. In order to verify the effectiveness of these two mechanisms, two variants, DPVAPS_1 and DPVAPS_2, are created as control groups for comparative experiments in this section, in which DPVAPS_1 means that only the dynamic population size reducing mechanism is employed without using external archive, and DPVAPS_2 means that only external archive is used. The specific average and standard deviation of IGD values are shown in Table 2, in which the best result is marked in bold. “+”, “−”, and “=” indicate that the variant is significantly better than, worse than, or comparable to the proposed algorithm, respectively.

As can be seen from Table 2, DPVAPS achieves better average IGD values than DPVAPS_1 on 19 of the 24 functions. Moreover, DPVAPS is significantly better than DPVAPS_1 on 14 benchmark problems, while it is outperformed on only 2 benchmark problems. In DPVAPS_1 the external archive is not employed, so only the feasible solutions in the main population are preserved. The disadvantage is that the information of the promising feasible solutions in the auxiliary population is ignored, and as a result the diversity of the main population is lost. Because the auxiliary population does not consider any constraint, feasible solutions are likely to be dominated by infeasible solutions and eliminated in the environmental selection stage, even though they may carry high-quality information for the main population. By using the feasible solutions in the auxiliary population, the main population gains more evolutionary directions, more feasible regions can be searched, and some CPF fragments that are not easy to find can also be explored. Figure 6 shows the populations of DPVAPS_1 in the early, middle, and later stages on benchmark problem LIRCMOP8. Compared with the main population of DPVAPS, that of DPVAPS_1 does not cover the CPF completely, which is caused by the loss of diversity; the external archive remedies this defect. Therefore, the performance of DPVAPS is superior to that of its variant without the external archive.

From Table 2, DPVAPS achieves better average IGD values than DPVAPS_2 on 22 of the 24 problems. In addition, according to the Wilcoxon rank-sum test, DPVAPS performs significantly better than its variant DPVAPS_2 on 15 functions and significantly worse on only 1 test problem. Compared with DPVAPS_2, the computational resources consumed by the auxiliary population of DPVAPS gradually decrease during the evolutionary process, so that more computational resources can be used by the main population. For some complex problems, if only half of the computational resources are available to the main population, the resources may be exhausted before the complete CPF is found. If the main population has enough computing resources, however, it can continue to approach the true CPF after reaching the optimal feasible region. Therefore, DPVAPS performs better than DPVAPS_2.

In summary, according to the above results and analysis, the two mechanisms in the proposed algorithm are very effective.

Parameter analysis

In the proposed algorithm, the size of the auxiliary population is controlled by the parameter \(\delta _{min}\). The value of \(\delta _{min}\) in the dynamic population size reducing mechanism is 0.1, which means that the size of the auxiliary population gradually decreases to 10 as the evolution proceeds. To verify the suitability of this value, several variants are created, namely DPVAPS_10, DPVAPS_20, DPVAPS_30, and DPVAPS_40, in which \(\delta _{min}\) is taken as 0.2, 0.3, 0.4, and 0.5, respectively, so that the size of the auxiliary population gradually decreases to 20, 30, 40, and 50. The experiments are conducted on two test sets, LIRCMOP and DTLZ, and the experimental results are shown in Table 3.

It can be seen from the experimental results that DPVAPS achieves the best average IGD on 15 of the 24 problems. Based on the Wilcoxon rank-sum test, DPVAPS is significantly better than its four variants on 9, 9, 7, and 11 problems, respectively, while it is surpassed by them on only 1, 0, 1, and 1 test problems. The reason is that when the size of the auxiliary population gradually decreases to 10, the main population receives more computing resources to search for the CPF. At the same time, the auxiliary population still helps the main population in the later stage, so it still needs some resources. In summary, setting \(\delta _{min}\) to 0.1 is appropriate.

Experimental results on MW and LIRCMOP problems

Table 4 shows the average and standard deviation of the IGD values of the comparison algorithms on the five test sets, where the best result is marked in bold. Note that if an algorithm cannot consistently find feasible solutions on a problem, only its feasibility rate (FR) is given, in the form “NAN (FR)”. The symbols “+”, “−”, and “=” indicate that the compared algorithm is significantly better than, worse than, or comparable to the proposed algorithm, respectively. As can be seen from Table 4, DPVAPS outperforms NSGA-II, CCMO, BiCo, PPS, and ToP on 24, 14, 16, 28, and 23 functions, respectively. In contrast, these algorithms outperform the proposed algorithm on only 0, 2, 3, 0, and 2 functions, respectively. In terms of FR, only CCMO and DPVAPS achieve a 100% feasibility rate on all problems, while the other algorithms cannot consistently find feasible solutions in 30 runs on some problems.
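The “+”, “−”, and “=” labels can be reproduced from the per-run IGD values with a rank-sum test. The following is a minimal sketch, not the authors' code: it uses the normal approximation of the Wilcoxon rank-sum test without tie correction, assumes a significance level of 0.05, and decides the direction of a significant difference from the sign of the z statistic. The function names are hypothetical, and the code returns an ASCII "-" for “−”.

```python
import math


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def rank_sum_test(a, b):
    """Wilcoxon rank-sum z statistic and two-sided p-value (normal approximation)."""
    n1, n2 = len(a), len(b)
    ranks = average_ranks(list(a) + list(b))
    w = sum(ranks[:n1])                      # rank sum of the first sample
    mean = n1 * (n1 + n2 + 1) / 2            # expected rank sum under H0
    std = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / std
    return z, math.erfc(abs(z) / math.sqrt(2))


def label_against_proposed(competitor_igd, proposed_igd, alpha=0.05):
    """Label the competitor as '+', '-' or '=' relative to the proposed algorithm.

    ASSUMPTION: smaller IGD is better, so a significantly lower rank sum
    for the competitor (z < 0) means the competitor is better.
    """
    z, p = rank_sum_test(competitor_igd, proposed_igd)
    if p >= alpha:
        return "="
    return "+" if z < 0 else "-"
```

Each argument is the list of IGD values from the independent runs of one algorithm on one problem, so the labelling is repeated once per problem and per compared algorithm.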

The MW and LIRCMOP test suites have small and discrete feasible regions, which demands strong diversity from an algorithm. NSGA-II does not outperform DPVAPS on any problem in these two test sets. This is because NSGA-II uses only CDP to handle the constraints: the population quickly finds one of the feasible regions and then converges to it instead of spreading to other regions, so its diversity cannot be guaranteed. CCMO and BiCo perform similarly to DPVAPS on some benchmark problems because all three use a dual-population mechanism, in which the auxiliary population provides beneficial information to the main population and helps it find more feasible areas. This also demonstrates the advantage of the dual-population mechanism. The auxiliary population of CCMO always consumes the same computational resources as the main population, so CCMO does not match the performance of DPVAPS on most problems. The auxiliary population of BiCo uses an angle-based selection mechanism to maintain its diversity, so BiCo performs well on some problems with small and multiple feasible regions, such as MW2, LIRCMOP1, and LIRCMOP3. On the whole, however, DPVAPS performs better than BiCo. PPS and ToP are two-stage algorithms. In PPS, the first stage aims to find the UPF and the second stage aims to find the CPF. On these complex problems, the population risks falling into a local optimum while searching for the UPF, which greatly degrades the second stage. In ToP, the first stage finds feasible regions and the second stage searches for the CPF. However, the first stage is likely to locate a local feasible region rather than the optimal one, so the optimal Pareto front cannot be found. In DPVAPS, the main population can be allocated more computing resources to search for the CPF because the resources consumed by the auxiliary population gradually decrease. In addition, the external archive increases the diversity of the main population, so the main population is distributed more evenly over the CPF.
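The behaviour attributed to CDP above follows directly from its comparison rule. As a minimal sketch, assuming minimization and a non-negative overall constraint violation per solution (the function names are hypothetical), the rule can be written as:

```python
def dominates(f_a, f_b) -> bool:
    """Pareto dominance for minimization: no worse everywhere, better somewhere."""
    return all(x <= y for x, y in zip(f_a, f_b)) and any(
        x < y for x, y in zip(f_a, f_b)
    )


def cdp_better(f_a, cv_a, f_b, cv_b) -> bool:
    """True if solution a is preferred over b under the constraint dominance principle.

    cv_a and cv_b are overall constraint violations (0 means feasible).
    """
    if cv_a == 0 and cv_b == 0:
        # Both feasible: fall back to ordinary Pareto dominance.
        return dominates(f_a, f_b)
    if cv_a == 0 or cv_b == 0:
        # Exactly one is feasible: the feasible one wins, whatever its objectives.
        return cv_a == 0
    # Both infeasible: the smaller violation wins.
    return cv_a < cv_b
```

For instance, `cdp_better((5, 5), 0.0, (1, 1), 0.3)` is true: a feasible solution is preferred over an infeasible one even when its objective values are much worse, which is why a population driven only by CDP tends to settle in the first feasible region it finds.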

To sum up, the experimental results on the MW and LIRCMOP benchmark sets show that the proposed algorithm performs better overall than the other algorithms.

Experimental results on DTLZ, CTP, and DASCMOP problems

The IGD values of DPVAPS and the five comparison algorithms on the DTLZ, CTP, and DASCMOP test sets are shown in Table 5. A result is highlighted in bold when the algorithm achieves the best average IGD value on that problem. It can be seen that DPVAPS achieves the best average IGD values on 22 of the 27 test problems. In addition, based on the rank-sum test, DPVAPS performs significantly better than NSGA-II, CCMO, BiCo, PPS, and ToP on 23, 14, 22, 26, and 26 problems, respectively, while these algorithms significantly outperform DPVAPS on only 2, 1, 0, 0, and 1 problems, respectively.
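For readers unfamiliar with the indicator, IGD is the mean distance from each point of a reference set sampled on the true CPF to its nearest obtained solution in objective space, so smaller values indicate better convergence and coverage. A minimal sketch of this standard definition follows; the function name and argument names are hypothetical, and how the reference set is sampled is left to the benchmark.

```python
import math


def igd(reference_front, obtained_set):
    """Inverted generational distance (smaller is better).

    reference_front: points sampled on the true constrained Pareto front.
    obtained_set: objective vectors of the feasible solutions found.
    """
    if not reference_front or not obtained_set:
        raise ValueError("both point sets must be non-empty")
    # For each reference point, take the distance to its closest obtained point.
    nearest = (
        min(math.dist(ref, sol) for sol in obtained_set) for ref in reference_front
    )
    return sum(nearest) / len(reference_front)
```

Because the average runs over the reference points, a population that covers only one fragment of a discrete CPF is penalized by every uncovered reference point, which is why IGD reflects diversity as well as convergence.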

The DTLZ test set has different constraint properties that can make the UPF feasible, partially feasible, or completely infeasible, so the problems differ in difficulty. Only CCMO and DPVAPS consistently find feasible solutions on all problems in 30 runs. Because the main population of DPVAPS can use more computing resources, it covers the CPF more completely. The CTP test problems have relatively few dimensions and large feasible regions, so they are relatively easy to solve, and all algorithms achieve a 100% feasibility rate on them. The DASCMOP test set has many constraints, which produce large infeasible blocks in the search space, so an algorithm must be able to cross infeasible regions. The auxiliary population of CCMO ignores the constraints and reaches the UPF. Furthermore, the information exchange in the environmental selection stage helps the main population cross the infeasible regions to reach the optimal feasible region, so CCMO performs well. The auxiliary population of BiCo transforms the constraints into an objective, which still carries the risk of falling into a local feasible region, and thus its results are worse than those of CCMO and DPVAPS. PPS and ToP are two-stage algorithms. Because the search engine of PPS is replaced by GA, the diversity of the population decreases and the population spends too many computational resources searching for the UPF in the first stage; it therefore lacks the resources to find the true CPF in the second stage. The auxiliary population of DPVAPS reaches the UPF quickly because it does not consider any constraint, so it helps the main population cross the infeasible region to reach the optimal feasible region. Moreover, the main population can use more computing resources, and the external archive helps it search more feasible regions, so DPVAPS shows excellent performance.

In conclusion, according to the results on the DTLZ, CTP, and DASCMOP test suites, DPVAPS performs better than the comparison algorithms on both simple and complex problems.

Statistical results

In this section, two statistical tests, the Wilcoxon test and the Friedman test, are carried out for all algorithms on all 55 problems. The experiments are conducted with the KEEL software [41]. Table 6 shows the Wilcoxon test results. All \(R^{+}\) values are greater than the corresponding \(R^{-}\) values, indicating that DPVAPS is superior to the comparison algorithms. Furthermore, the differences between DPVAPS and the comparison algorithms are significant at the level \(\alpha = 0.05\). The Friedman test results are shown in Fig. 7, where a smaller ranking value indicates better performance. The proposed algorithm has the smallest ranking, which indicates that it performs better than the other algorithms. In short, the statistical results also show that DPVAPS performs best among the compared algorithms.
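The ranking values used by the Friedman test are average ranks across problems. The sketch below shows how such rankings can be computed from a table of mean IGD values; it is an illustration only, not the KEEL implementation, and the function name and the input layout (a dictionary from algorithm name to a list of per-problem values) are assumptions.

```python
def friedman_average_ranks(scores):
    """Average rank of each algorithm over all problems (smaller is better).

    scores[name] is a list with one mean IGD value per problem; all lists
    are ASSUMED to have the same length and problem order.
    Tied values on a problem share the mean of the ranks they occupy.
    """
    names = list(scores)
    n_problems = len(scores[names[0]])
    totals = {name: 0.0 for name in names}
    for p in range(n_problems):
        row = [scores[name][p] for name in names]
        for name, value in zip(names, row):
            smaller = sum(v < value for v in row)
            equal = sum(v == value for v in row)  # includes the value itself
            totals[name] += smaller + (equal + 1) / 2
    return {name: totals[name] / n_problems for name in names}
```

With k algorithms, the average ranks always sum to k(k + 1)/2, so an algorithm that is best on every problem would obtain a ranking of exactly 1.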

Figure 8 shows the convergence curves of the average IGD values of DPVAPS and the comparison algorithms over 30 runs on test problems LIRCMOP5 and LIRCMOP12. LIRCMOP5 has large infeasible regions, which block the evolution of the population. LIRCMOP12 also has large infeasible regions, and its CPF is discrete. It can be seen from Fig. 8 that CCMO and DPVAPS achieve similar performance on LIRCMOP5, while DPVAPS converges fastest on LIRCMOP12. In short, DPVAPS converges as fast as or faster than the other algorithms on these problems.

Conclusion

This paper proposes a novel dual-population algorithm to solve CMOPs. The main population is responsible for searching for the CPF, and the auxiliary population is responsible for searching for the UPF. In addition, to reduce the waste of computing resources by the auxiliary population in the later stage, a dynamic population size reducing mechanism is designed to change the size of the auxiliary population. Furthermore, an external archive is used to store feasible solutions found by the auxiliary population, which provides new feasible solutions for the main population and thereby increases the diversity of the main population on the CPF. In the experimental stage, the effectiveness of the two mechanisms, the dynamic population size reducing mechanism and the external archive, is verified. The suitability of the parameter \(\delta _{min}\) used to control the population size is also investigated. Moreover, the experimental results on 55 test problems show that the proposed algorithm is promising for solving CMOPs. However, the developed algorithm still has some limitations: (1) the size of the auxiliary population decreases according to the same rule on all problems, so a population size reduction mechanism that adapts to the type of problem should be designed; (2) a movement mechanism for the auxiliary population could be designed so that it approaches the CPF from the infeasible side and explores the CPF together with the main population. In the future, DPVAPS will be further improved to address these limitations and applied to practical problems.

References

He C et al (2022) A self-organizing map approach for constrained multi-objective optimization problems. Complex Intell Syst 8:5355–5375

Qiao K et al (2022) Feature extraction for recommendation of constrained multi-objective evolutionary algorithms. IEEE Trans Evol Comput. https://doi.org/10.1109/TEVC.2022.3186667

Long W et al (2022) A constrained multi-objective optimization algorithm using an efficient global diversity strategy. Complex Intell Syst. https://doi.org/10.1007/s40747-022-00851-1

Chen Y et al (2022) Constraint multi-objective optimal design of hybrid renewable energy system considering load characteristics. Complex Intell Syst 8(2):803–817

Liang J et al (2022) A survey on evolutionary constrained multi-objective optimization. IEEE Trans Evol Comput. https://doi.org/10.1109/TEVC.2022.3155533

Du K-J, Li J-Y, Wang H, Zhang J (2022) Multi-objective multi-criteria evolutionary algorithm for multi-objective multi-task optimization. Complex Intell Syst. https://doi.org/10.1007/s40747-022-00650-8

Ishibuchi H, Nojima Y et al (2017) On the effect of normalization in MOEA/D for multi-objective and many-objective optimization. Complex Intell Syst 3(4):279–294

Sun Y, Yen GG, Yi Z (2018) IGD indicator-based evolutionary algorithm for many-objective optimization problems. IEEE Trans Evol Comput 23(2):173–187

Liang J, Ban X, Yu K, Qiao K, Qu B (2022) Constrained multiobjective differential evolution algorithm with infeasible-proportion control mechanism. Knowl-Based Syst 250:109105

Qiao K et al (2022) Dynamic auxiliary task-based evolutionary multitasking for constrained multi-objective optimization. IEEE Trans Evol Comput. https://doi.org/10.1109/TEVC.2022.3175065

Yu K, Liang J, Qu B, Yue C (2021) Purpose-directed two-phase multiobjective differential evolution for constrained multiobjective optimization. Swarm Evol Comput 60:100799

Lin C-H (2013) A rough penalty genetic algorithm for constrained optimization. Inf Sci 241:119–137

Tessema B, Yen GG (2009) An adaptive penalty formulation for constrained evolutionary optimization. IEEE Trans Syst Man Cybern Part A Syst Hum 39(3):565–578

Yu K, Liang J, Qu B, Luo Y, Yue C (2021) Dynamic selection preference-assisted constrained multiobjective differential evolution. IEEE Trans Syst Man Cybern Syst 52(5):2954–2965

Ning W et al (2017) Constrained multi-objective optimization using constrained non-dominated sorting combined with an improved hybrid multi-objective evolutionary algorithm. Eng Optim 49(10):1645–1664

Yang Y, Liu J, Tan S, Wang H (2019) A multi-objective differential evolutionary algorithm for constrained multi-objective optimization problems with low feasible ratio. Appl Soft Comput 80:42–56

Liu Z-Z, Wang Y, Wang B-C (2019) Indicator-based constrained multiobjective evolutionary algorithms. IEEE Trans Syst Man Cybern Syst 51(9):5414–5426

Fan Z et al (2019) Push and pull search for solving constrained multi-objective optimization problems. Swarm Evol Comput 44:665–679

Yu X, Lu Y (2018) A corner point-based algorithm to solve constrained multi-objective optimization problems. Appl Intell 48(9):3019–3037

Ming F et al (2021) A simple two-stage evolutionary algorithm for constrained multi-objective optimization. Knowl-Based Syst 228:107263

Tian Y, Zhang T, Xiao J, Zhang X, Jin Y (2020) A coevolutionary framework for constrained multiobjective optimization problems. IEEE Trans Evol Comput 25(1):102–116

Li K, Chen R, Fu G, Yao X (2018) Two-archive evolutionary algorithm for constrained multiobjective optimization. IEEE Trans Evol Comput 23(2):303–315

Liu Z-Z, Wang B-C, Tang K (2021) Handling constrained multiobjective optimization problems via bidirectional coevolution. IEEE Trans Cybern

Jan MA, Tairan N, Khanum RA (2013) Threshold based dynamic and adaptive penalty functions for constrained multiobjective optimization. In: IEEE, pp 49–54

Jiao L, Luo J, Shang R, Liu F (2014) A modified objective function method with feasible-guiding strategy to solve constrained multi-objective optimization problems. Appl Soft Comput 14:363–380

Ma Z, Wang Y, Song W (2019) A new fitness function with two rankings for evolutionary constrained multiobjective optimization. IEEE Trans Syst Man Cybern Syst

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6(2):182–197

Runarsson TP, Yao X (2000) Stochastic ranking for constrained evolutionary optimization. IEEE Trans Evol Comput 4(3):284–294

Takahama T, Sakai S (2010) Efficient constrained optimization by the \(\varepsilon \) constrained adaptive differential evolution. In: IEEE, pp 1–8

Liu Z-Z, Wang Y (2019) Handling constrained multiobjective optimization problems with constraints in both the decision and objective spaces. IEEE Trans Evol Comput 23(5):870–884

Tian Y et al (2021) Balancing objective optimization and constraint satisfaction in constrained evolutionary multiobjective optimization. IEEE Trans Cybern

Liang J et al (2022) Utilizing the relationship between unconstrained and constrained Pareto fronts for constrained multiobjective optimization. IEEE Trans Cybern. https://doi.org/10.1109/TCYB.2022.3163759

Qiao K et al (2022) An evolutionary multitasking optimization framework for constrained multiobjective optimization problems. IEEE Trans Evol Comput 26(2):263–277

Deb K, Agrawal RB et al (1995) Simulated binary crossover for continuous search space. Complex Syst 9(2):115–148

Deb K, Goyal M et al (1996) A combined genetic adaptive search (GeneAS) for engineering design. Comput Sci Informat 26:30–45

Ma Z, Wang Y (2019) Evolutionary constrained multiobjective optimization: test suite construction and performance comparisons. IEEE Trans Evol Comput 23(6):972–986

Fan Z et al (2019) An improved epsilon constraint-handling method in MOEA/D for CMOPs with large infeasible regions. Soft Comput 23(23):12491–12510

Fan Z et al (2020) Difficulty adjustable and scalable constrained multiobjective test problem toolkit. Evol Comput 28(3):339–378

Bosman PA, Thierens D (2003) The balance between proximity and diversity in multiobjective evolutionary algorithms. IEEE Trans Evol Comput 7(2):174–188

Tian Y, Cheng R, Zhang X, Jin Y (2017) PlatEMO: a MATLAB platform for evolutionary multi-objective optimization [educational forum]. IEEE Comput Intell Mag 12(4):73–87

Alcalá-Fdez J et al (2009) KEEL: a software tool to assess evolutionary algorithms for data mining problems. Soft Comput 13(3):307–318

Acknowledgements

This work was supported in part by the National Natural Science Fund for Outstanding Young Scholars of China (61922072), National Natural Science Foundation of China (62176238, 61806179, 61876169, 62106230, and 61976237), China Postdoctoral Science Foundation (2020M682347, 2021T140616, 2021M692920), Key Research and Development and Promotion Projects in Henan Province (192102210098), and Training Program of Young Backbone Teachers in Colleges and Universities in Henan Province (2020GGJS006).

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liang, J., Chen, Z., Wang, Y. et al. A dual-population constrained multi-objective evolutionary algorithm with variable auxiliary population size. Complex Intell. Syst. 9, 5907–5922 (2023). https://doi.org/10.1007/s40747-023-01042-2

DOI: https://doi.org/10.1007/s40747-023-01042-2