Abstract

Gene expression profile data have high-dimensionality with a small number of samples. These data characteristics lead to a long training time and low performance in predictive model construction. To address this issue, the paper proposes a feature selection algorithm using non-dominant feature-guide search. The algorithm adopts a filtering framework based on feature sorting and search strategy to overcome the problems of long training time and poor performance. First, the feature pre-selection is completed according to the calculated feature category correlation. Second, a multi-objective optimization feature selection model is constructed. Non-dominant features are defined according to the Pareto dominance theory. Combined with the bidirectional search strategy, the Pareto dominance features under the current category maximum relevance feature are removed one by one. Finally, the optimal feature subset with maximum correlation and minimum redundancy is obtained. Experimental results on six gene expression data sets show that the algorithm is much better than Fisher score, maximum information coefficient, composition of feature relevancy, mini-batch K-means normalized mutual information feature inclusion, and max-Relevance and Min-Redundancy algorithms. Compared to feature selection method based on maximum information coefficient and approximate Markov blanket, the algorithm not only has high computational efficiency but also can obtain better classification capabilities in a smaller dimension.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

With bio-information development, gene expression profile analysis has become an essential means of oncogene identification and plays a critical role in cancer classification and prediction. Microarray technology [1] uses many probes each time, and gene information involves many aspects, leading to the high-dimensionality of microarray data. At the same time, the sample preparation cost is high, and the process is complex, which leads to the small-sample size and the uneven distribution of sample categories. Therefore, gene expression profile data are typical high-dimensional small-sample data [2], which has strong feature redundancy and all the characteristics of high-dimensional small-sample data. This type of data is directly used to build predictive models, and it is easy to have problems such as long training time, low model performance, and overfitting. Feature selection is required to eliminate and mitigate dimensional disasters, improve model performance, reduce runtime, and extract beneficial information [3].

Literature [4] summarizes the popular feature selection methods broadly divided into the filter, wrapper, and embedded methods [5]. Wrappers select subsets of features from the initial feature collection, train learners such as support vector machine (SVM) classifier, and evaluate subsets based on the learner's performance. Wrapper performs well in classification, but it costs too much and risks overfitting. Embedded methods automatically select features during training. This kind of method, although short in computing time, relies too much on classifiers. Filtering methods can be scaled efficiently on high-dimensional datasets, regardless of classifiers, and are particularly widely used in high-dimensional data.

In high-dimensional data, the removal of redundancy is a hot topic of research [6]. Filtering methods for feature redundancy problems based on information metrics [7], such as maximum relevancy and minimum redundancy feature selection (mRMR) [8], fast correlation-based filter (FCBF) [9], markov blanket based feature selection algorithm [10], and related improvement algorithms promoted based on the above algorithms [11]. These methods are not suitable for high-dimensional data processing because of large computation and high time complexity.

The paper proposes a feature selection algorithm using a non-dominant features-guided search (NDFS) to solve the above problems. The main ideas of this method are as follows: (1) Based on the framework combining feature ranking and search strategy, the irrelevant, redundant features can be quickly filtered and screened. (2) Fisher score and cosine distance measure the class correlation and similarity of features. (3) The concept of non-dominant features is proposed, and a two-way search strategy is adopted in the process of non-dominant feature-guided search. (4) Finally, a feature subset with maximum correlation and minimum redundancy is selected to improve the performance of subsequent classification.

The rest of this paper is organized as follows. “Related work” discusses related work. “Preliminaries” presents some preliminaries for this work. A novel feature selection method is given in “Proposed architecture and methods”. “Experimental results and discussion” gives experimental results and discussion. “Conclusion” concludes the paper.

Related work

This section first briefly introduces the nature of the feature selection problem, and then discusses the existing mainstream feature selection approaches.

Feature selection problem

Mathematically, the feature selection problem can be expressed in the following way. Assuming a dataset S contains d features. The essence of the feature selection problem is to select relevant features among d features, to optimize the given classification performance index as much as possible. Given a dataset S = {f1, f2, f3,┉,fd}, the goal is to select the optimal subset of features from S. Select a subset D = {f1, f2, f3, ⋯, fn}, where n < d, f1, f2, f3,⋯,fn represent the features of the dataset.

Existing feature selection approaches

Feature selection plays a critical role in classification problems, especially for data sets that have many features [12]. These features need to be measured in two ways: correlation between features and class and redundancy between features. Combined with the corresponding search strategy, the final feature subset is obtained [13].

Early feature selection methods only consider selecting features more relevant to categories. Relevant features can be derived from label information. The ranking of features by scoring them based on relevancy criterion, represented by Relief [14], ReliefF [15], Fisher score [16] and Maximal Information Coefficient (MIC) [17]. The relief method and its multi-class extension, ReliefF, select features from instances that are separated from different classes. The algorithm randomly selects an instance from the data, then calculates the distance to find positive or negative samples of its nearest neighbor, and updates the weight of each feature. The Relief series algorithms operate efficiently with no restrictions on data types. Fisher score algorithm uses probability distance as the evaluation criterion of a feature. The distance between the same class of samples is small, and the distance between different classes of samples is large. The Fisher score algorithm is versatile, has low time complexity, and is particularly suitable for working with high-dimensional datasets. However, in feature selection, there are many redundancy features because these algorithms fail to consider the relationship between features and features.

Redundant features do not provide any additional information other than noise for the classification algorithm, so they should be removed. Typical algorithms include mRMR, correlation-based feature selection (CFS) [18], composition of feature relevancy (CFR) [19]. mRMR is based on mutual information, minimizing the correlation between features and mutual information, and maximizing the correlation between features and class labels. In addition, many literatures are modified based on mRMR method, such as using normalized mutual information [20] and various monotonic dependence measures to replace mutual information for feature selection. CFS is evaluated based on the predictive power of each feature in the subset and its correlation, and the subsets of individual features with strong predictive power and low correlation within the feature subset perform well. CFR calculates the relevance score by calculating union condition information for candidate characteristics for a given selected feature collection category. Feature redundancy is given by the joint information of candidate features, categories, and selected features.

The calculation of feature redundancy is highly complex on high-dimensional data. Scholars adopt a two-stage feature selection method to balance correlation and redundancy to improve efficiency [21]. The basic idea is to calculate the correlation between features and categories for sorting and then use the algorithm based on a search strategy to remove redundant features. The typical algorithm is FCBF proposed in 2004. Firstly, it calculates the symmetrical uncertainty (SU) of each feature and class and sorts it in descending order, removing features less than pre-set thresholds, i.e., irrelevant features. Secondly, this algorithm selects the feature with the largest SU of the feature and class in the current feature set. It calculates the SU of the remaining features and the current features and the SU of the remaining features and class one by one until all redundant features under the feature are removed. Feature Selection Method Based on maximum information coefficient and approximate markov blanket (FCBF-MIC) [22] still uses symmetric uncertainty to measure the correlation between features and categories in the first stage. In the second stage, an approximate Markov blanket is used to fuse the maximum information coefficient measurement standard to remove redundant features. Feature selection algorithm based on approximate Markov blanket is proposed and named as normal max-relevance and min-redundancy (nmRMR) [23] algorithm. Firstly, the features are sorted using the maximum correlation minimum redundancy criterion. Secondly, the irrelevant and redundant features are removed according to the approximate Markov blanket condition. Mini batch K-means normalized mutual information feature inclusion (KNFI) [24] is proposed in 2019, which combines filter and wrapper techniques. The algorithm uses normalized mutual information as a measure to sort the features after clustering by small-batch K-means, the sorting features of the first stage are added to the subset one by one.

The above method can select the relevant features and eliminate the redundant features. However, they still need to be improved in determining the optimal subset of features efficiently and improving the classification performance.

Preliminaries

Fisher score has been maturely applied to feature selection problems. Pareto dominance theory is mainly used to deal with multi-objective optimization problems and the essence of feature selection problems is a multi-objective problem. This section introduces Fisher score algorithm and Pareto dominance theory.

Fisher score algorithm based on probability distance standard

Fisher score is a correlation-based feature evaluation criterion based on probability distance, a practical feature selection method. In the Fisher score algorithm, intra-class dispersion Sw represents intra-class distance, and inter-class dispersion Sb represents inter-class distance. The class distinguishing ability of a feature is the ratio of Sb to Sw. The larger this value is, the stronger the category correlation of the feature is.

Assuming there is a binary classification problem. The positive sample is marked as 1, the negative sample is marked as 0, the number of positive samples is n1, the number of negative samples is n0, the total number of samples is n, and the number of features is m.

Sw, Sb of feature f are defined as for Eqs. (1) and (2).

The correlation between feature f and class calculated by Fisher score is defined as Eq. (3).

where \(\mu_{0}^{\left( f \right)}\) is the mean of feature f in the negative sample, \(\mu_{1}^{\left( f \right)}\) is the mean of feature f in the positive sample, and \(\mu^{\left( f \right)}\) is the mean of feature f in the overall sample. \(\sigma_{0}^{\left( f \right)}\) and \(\sigma_{1}^{\left( f \right)}\) are the variance of feature f in negative and positive samples.

It can be seen from formula (3) that the greater the inter-class dispersion of a feature, the smaller the intra-class dispersion, and the better the classification effect of the feature.

Pareto dominance theory

Multi-objective optimization generally involves maximizing or minimizing multiple objective functions. Generally, minimizing a multi-objective optimization function [25] can be described as a formula (4).

In formula (4), x is the decision variable \(j_{1} \left( x \right),j_{2} \left( x \right), \ldots ,j_{k} \left( x \right)\) represent k objective functions, and the objective is to minimize them. \(g_{i} \left( x \right),h_{j} \left( x \right)\) is the constraint condition of the problem.

In this minimized multi-objective optimization problem, for k objective components, given any two decision variables \(x_{a}\) and \(x_{b}\). If the following two conditions are true, then \(x_{a}\) dominates \(x_{b}\).[26].

-

\((1)\,\,\forall i \in 1,2, \ldots ,k,\quad j_{i} \left( {x_{a} } \right) \le j_{i} \left( {x_{b} } \right)\)

-

\((2)\,\,\exists i \in 1,2, \ldots ,k,\quad j_{i} \left( {x_{a} } \right) < j_{i} \left( {x_{b} } \right)\)

When the value of the objective function corresponding to the solution \(x_{a}\) is better than the value of the objective function corresponding to the solution \(x_{b}\), \(x_{a}\) is called strong Pareto dominating \(x_{b}\). When a solution \(x_{a}\), there is no other solution that can dominate it, then it is called non-dominated solution.

Proposed architecture and methods

In this paper, a feature selection using non-dominant features-guided search is proposed. The Fisher score algorithm, based on the probability distance standard, measures the category correlation of features and extracts a set of pre-selected features with high correlation. Cosine similarity is used to measure the similarity between features based on geometric distance measurement standards, and sample features with lower dimensions represent more information of samples. The Pareto dominance theory is introduced to calculate the non-dominant features for guided search. The feature subset with the most significant category correlation and the least redundancy between features is selected. Figure 1 shows the overall architecture of this method.

Cosine similarity measure

Cosine similarity [27] is a method to measure the similarity of two vectors. This paper introduces it to calculate the similarity between features. Assuming there are two features f1 and f2, where they represent any two features from a feature set, the cosine value of the two features can be used to measure the similarity between the two features. The cosine similarity of the two features f1 and f2 was calculated using Eq. (5).

In Formula (5), given f1 and f2 as the feature vectors, n as the total number of instances. \(f_{{1_{i} }}\) and \(f_{{2_{i} }}\) represent the values of f1 and f2 corresponding to the ith instance, respectively.

Therefore, the range of feature similarity is [−1, 1]. The closer the value is to 1, the more similar the two features are.

Pareto dominance feature

In the process of feature selection, not only the correlation between features and class but also the correlation between features should be considered. Features with high-class correlation may be similar in data distribution, so the similarity between features and features may be high. It means there may be redundancy between features. High redundancy can not improve the model’s performance and even make the performance of the model decline sharply, so it is necessary to remove redundancy. Thus, the feature selection process can regard it as a multi-objective optimization problem. The goal is to select features with the highest class correlation and the lowest feature substitutability. Inspired by the Pareto theory in the multi-objective issue, the concept of the Pareto dominance feature is defined

Assuming there are two features f1 and f2, if the category correlation of f1 is higher than that of f2, and the similarity between f1 and f2 is higher than the given threshold, then f1 dominance f2. Otherwise, f1 is a non-dominated feature of f2.

Definition 1

Non-dominance feature. For any two features, f1 and f2, the binary relationship \(>\), \(\ge\) and \(\sim\) are defined as for formula (6).

Where, given \( C\left( {f_{i} } \right) \) as the category correlation of feature fi. \(C\left( {f_{1} } \right) > C\left( {f_{2} } \right)\) represents that the category correlation of f1 is higher than that of f2.\( {\text{S}}\left( {f_{1} ,f_{2} } \right)\) represents feature similarity between f1 and f2.\(\mu\) is the given similarity threshold.

In the NDFS algorithm proposed in this paper, the category correlation of features is calculated by Fisher score, and the similarity between features is calculated by cosine similarity.

The algorithm procedure

The specific process of the algorithm is shown in Table 1.

Assuming the original dataset has n samples, m genes, and the feature set \(F = \left\{ {f_{1} ,f_{2} , \cdots ,f_{m} } \right\}\). Assuming the threshold set in Fisher score algorithm is \(\mu_{1}\), and the similarity threshold is \(\mu_{2}\) when eliminating redundant features based on the non-dominated theory. Proposed algorithm proceeds as follows.

Step 1: Fisher score of each feature is calculated using the Fisher score algorithm. The features with small class correlation are removed by thresholds \(\mu_{1}\) to obtain a subset F of the remaining features.

Step 2: Given an empty set S and selects the first feature \(f_{k}\) from the remaining feature subset F that gives the largest Fisher score between the feature and the class target Y.

The feature \(f_{k}\) is added to the selected key feature set S (i.e., \(S \leftarrow S \cup f_{k}\)) and then removed from the set F (i.e., \(F \leftarrow F\backslash f_{k}\)).

Step 3: Select the largest Fisher score feature \(f_{d}\) in the remaining feature subset, where the class correlation of feature \(f_{s}\) in the key feature subset is greater than that of feature \(f_{d}\) (i.e., \(C\left( {f_{s} } \right) > C\left( {f_{d} } \right)\)). The similarity between \(f_{s}\) and \(f_{d}\) is calculated. If \(S\left( {f_{s} ,f_{d} } \right) > \mu_{2}\), the feature \(f_{d}\) is dominated by \(f_{s}\), and the feature \(f_{d}\) is removed from the remaining set. If \(S\left( {f_{s} ,f_{d} } \right) \le \mu_{2}\), the feature \(f_{d}\) is not dominated by \(f_{s}\). If the key feature subset does not have the dominated feature \(f_{d}\), it is added to the key feature subset.

Step4: If the remaining set F is empty, terminate the algorithm. Otherwise, go to step 3. The final output is the key feature subset S containing non-dominated features, which is the feature subset with the maximum correlation and minimum redundancy.

Experimental results and discussion

This section uses the proposed algorithm to discuss the experimental results on six high-dimensional small-sample data sets. Section “Experimental data sets” introduces the experimental data sets in detail. Section "Experimental setup” gives the experimental setup. Section “Feature selection experiment” describes the feature selection process experiment of NDFS in detail. And the feature selection results of the proposed method are compared with 6 algorithms in Section “Experimental result analysis”.

Experimental data sets

To verify the effectiveness and applicability of this method in dealing with the feature selection problem of high-dimensional and small-sample gene data, this paper selects six public gene data sets. The HeadNeck data set is obtained from the GEO database [28], the two public data sets Colon data set and the Leukemia data set are obtained from Kaggle, the Lung dataset, and the 11_Tumors dataset are obtained from the website http://www.gemssystem.org/, and the LIHC dataset is from the cancer genome atlas (TCGA). These data have been widely cited by scholars at home and abroad and have certain standards. Table 2 summarizes the 6 public datasets used in this study. It contains 4 binary datasets and 2 multi-category datasets.

The Colon dataset consists of 62 samples collected from Colon cancer patients, including 40 tumor samples and 22 normal samples. The Leukemia dataset contains 72 case samples of 2 different leukemias, including acute myeloid (AML) and acute lymphoblastic leukemia (ALL). The 11_Tumors dataset contains 174 samples and genetic data of 11 common human cancer cases, including prostate cancer, bladder/urethral cancer transitional cell carcinoma and squamous cell carcinoma) invasive breast ductal carcinoma, rectal cancer, gastric adenocarcinoma, clear kidney cell carcinoma, liver cancer, ovarian serous papillary adenocarcinoma, pancreatic cancer and Lung adenocarcinoma and squamous cell carcinoma). The Lung dataset contains four different Lung tumors (139 cases of adenocarcinoma, 6 cases of small cell Lung cancer, 21 cases of squamous cell carcinoma, and 20 cases of Lung carcinoid) and 17 cases of normal Lung tissue. The HeadNeck dataset contains 55 samples with local recurrence and 50 samples without local recurrence. The LIHC dataset from TCGA includes 374 liver cancer samples and 50 paracancerous samples. The number of samples in these datasets ranges from 62 to 424, and the number of features ranges from 2000 to 54,869, all of which are high-dimensional small-sample data.

Experimental setup

To evaluate the classification performance of the selected feature set, SVM, decision tree (DT), random forest (RF), logic regression (LR), and multi-layer perception machine (MLP) are selected to construct prediction models. The parameter settings of each model are shown in Table 3. AUC values, Accuracy, F1-score, and ROC are used as evaluation indicators to evaluate the performance of different feature results and the constructed prediction model.

To avoid overfitting and improve data reusability, fivefold cross-validation is used in this experiment. The original data set is randomly divided into five equal parts. The proportion of positive and negative samples is consistent with the original data's proportion of positive and negative samples in each equal part. One sample is selected as the test set, and the other four samples are selected as the training set. Five models are finally obtained after five times of execution. The evaluation indexes of these five models are taken as the final prediction model’s evaluation results by calculating the average value.

For performance comparison, the following algorithms have been selected: Fisher score, MIC, FCBF-MIC, CFR, KNFI, and mRMR. Since the Fisher score, MIC, CFR, and mRMR algorithms can directly select a certain number of features, the number of features selected by these two methods is consistent with the number of features finally selected by NDFS. Since the number of features that FCBF-MIC, and KNFI eventually generate cannot be known in advance, there is no limit to the number of features that the method ultimately selects. FCBF-MIC is directly consistent with the NDFS during the pre-selection process.

Feature selection experiment

When using the NDFS algorithm for feature selection, two thresholds need to be determined. One is the Fisher score threshold \(\mu_{1}\) for pre-selection using the Fisher score algorithm, and the other is the similarity threshold \(\mu_{2}\) for removing redundant features based on Pareto dominant theory.

To determine the threshold \(\mu_{1}\), the original features are sorted according to the Fisher score value. For visual observation, the top 50, 100, 200, 300, 400, 500 features are selected to form a series of feature subsets. According to these feature subsets, SVM and logistic regression (LR) is used to construct the classification model. The average classification accuracy is used to evaluate the feature subset. Finally, the Fisher score value corresponding to the appropriate number of features is selected to determine the threshold of each data set. Finally, the corresponding Fisher score is selected based on the appropriate number of features to determine each dataset’s threshold \(\mu_{1}\).

Figure 2 shows the average classification accuracy corresponding to different features on the 6 datasets. It can be seen from Fig. 2a that for Colon dataset, when the number of features is about 400, the accuracy of the SVM and LR classifiers is relatively high. At this time, the corresponding fisher value is 0.0627, so the threshold \(\mu_{1}\) of Colon dataset is determined to be 0.06, and the final number of selected features is 414. It can be seen from Fig. 2b that for the Leukemia dataset, when the number of features is about 400, the accuracy of the SVM and LR classifiers is relatively high. Therefore, the threshold \(\mu_{1}\) corresponding to the Leukemia dataset is determined to be 0.2, and the number of selected features is 406. As can be seen from Fig. 2c, for the 11_Tumors dataset, when the number of features is about 300, the accuracy of the classifier is relatively high. Therefore, the threshold \(\mu_{1}\) corresponding to the 11_Tumors dataset is determined to be 1.13, and the number of selected features is 302. It can be seen from Fig. 2d that for the Lung dataset, when the number of features is about 300, the accuracy of the classifier is relatively high. Therefore, the threshold \(\mu_{1}\) corresponding to the Lung dataset is determined to be 1.17, and the number of selected features is 299. It can be seen from Fig. 2e that for the HeadNeck dataset, when the number of features is about 300, it performs best on the LR classifier. Although the accuracy of SVM classifier increase with the decrease of the number of features, the performance of the LR classifier decreases gradually. To avoid losing important features, the number of features is about 300. Therefore, the threshold \(\mu_{1}\) corresponding to the HeadNeck dataset is determined to be 0.05, and the number of selected features is 287. It can be seen from Fig. 2f that for LIHC dataset, when the number of features is about 100, the accuracy of the classifier is relatively high. Therefore, the threshold \(\mu_{1}\) corresponding to the LIHC dataset is determined to be 1.53, and the number of selected features is 99.

To sum up, when the NDFS algorithm uses Fisher score to pre-select features in the first stage, the corresponding thresholds and the number of pre-selected features in different datasets are shown in the following Table 4.

For the second stage, to remove redundant features based on similarity measurement and Pareto dominance theory, the similarity threshold \(\mu_{2}\) should be determined. In this experiment, cosine similarity was used to measure the similarity between features. Therefore, the closer the value is to 1, the stronger the redundancy between features, and features with high-class correlation are more dominant to other features. Once the similarity threshold has been determined, the Pareto dominance feature corresponding to the feature can be removed. The larger the threshold, the less the Pareto dominance of the feature. The smaller the threshold, the more the Pareto dominating features and the more the deleted features.

Different candidate feature subsets are selected according to threshold \(\mu_{1}\) in Table 4. Calculate the classification accuracy corresponding to different similarity thresholds under candidate feature subsets. After many experiments, the threshold \(\mu_{2} = 0.68\) is finally determined. At this point, the number of optimal subset features of each dataset is displayed in Table 5.

Therefore, the final number of features selected by this method was determined as follows: Colon-8, Leukemia-15, 11_Tumors-11, Lung-4, HeadNeck-109, and LIHC-3.

Experimental result analysis

In this section, the proposed algorithms are compared with the other six algorithms (Fisher score, MIC, FCBF-MIC, CFR, KNFI, and mRMR) on the six data sets of HeadNeck, Colon, and Leukemia. Different classifiers were used to construct prediction models and evaluate the performance of the feature subsets selected by NDFS.

Table 6 lists the number of features selected by the proposed method and the six comparison algorithms.. According to the experimental setup in Section “Experimental setup”, Fisher score, MIC, CFR and mRMR all obtained the same number of dimensional features as NDFS. NDFS first retains only features that are strongly related to the category and more discriminative, greatly reducing the feature dimension, and then performs surprise search based on non-dominated features to retain the best number of features. The second stage of FCBF-MIC uses the MIC between features and features and between features and classes to calculate the approximate Markov blanket. The number of deleted features meeting this condition is less than the number of redundant features abandoned by NDFS using non-dominated feature-guided search. Therefore, in all data sets, the final number of features selected by FCBF-MIC is much higher than that of NDFS.

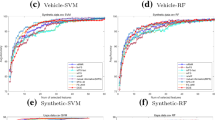

Tables 7, 8, and 9 show the classification accuracy, F1_score and AUC values of 6 datasets under 7 algorithms and 5 classifiers. Figure 3 shows the ROC curves of the proposed method and the other 6 methods under the SVM classifier. In the ROC curve, the ROC curve of the feature subset obtained by NDFS is closer to the upper left corner, and its performance is better. Compared with other six comparison algorithms, it can be easily explained why the proposed method performs better.

Fisher score, MIC only computes the relationship between features and categories, thus ignoring the relationship between features. NDFS can calculate between features based on removing irrelevant features, eliminating data redundancy, and completing the multi-objective calculation in feature selection. Therefore, compared with Fisher score and MIC algorithm, NDFS algorithm can remove redundant features. As far as the experimental results are concerned, the evaluation indexes of NDFS under the four classifiers in all data sets are superior to Fisher score and mic algorithms. The ACC of RF classifier is slightly lower than that of MIC in three data sets, but the generalization ability of NDFS in different learners is still better than these two algorithms on the whole.

Compared with FCBF-MIC, the classification results of NDFS are better than those of FCBF-MIC on Leukemia, HeadNeck, Colon and Lung datasets. The two algorithms have advantages and disadvantages in using different classifiers on 11_Tumors and LIHC data sets. FCBF-MIC uses SU for correlation analysis in the first stage and MIC for redundancy deletion in the second stage. There is no correlation between the two steps. NDFS can select non-dominated features through Fisher score value, and use two-way search strategy to make full use of the feature information of each calculation.

Compared with CFR, the classification effect of RF is better than that of NDFS only under the Leukemia dataset. However, under the other datasets, the NDFS has an obvious advantage. The mutual information and conditional mutual information used by CFR to calculate the relationship between features is too redundant and has low performance. CFR later used greedy searching strategy, but its final selected number threshold of features was not supported by sufficient solutions. NDFS calculates feature similarity in non-dominant features, and NDFS selects the final optimal subset based on the established similarity threshold.

When selecting features, KNFI focuses more on the influence of the current selected features on the performance accuracy of the classifier, ignoring the information of the features themselves. In the whole process of NDFS, not only the information of the feature itself is fully used, but also the classification information can be used as a reference for feature correlation threshold selection. The experimental results show that the classification effect of KNFI using DT is better than that of NDFS only under HeadNeck dataset, and NDFS can obtain a feature subset with better classification effect under other dataset classifiers.mRMR uses SVM on the HeadNeck dataset to achieve the best classification indicators, and some indicators on DT, LR, and MLP achieve the best results. F1 is also optimal under the DT classifier on the Lung dataset. But it lacks a competitive advantage under the rest of the datasets. mRMR uses mutual information to calculate feature information, which makes the feature far away and is still highly correlated with classification variables. NDFS calculates the feature similarity by considering the relationship between features to remove the disposable features, to generate a reliable feature subset.

Overall, the proposed algorithm uses NDFS in six datasets to select effective features for current problems. It has better performance in both binary and multi-classification tasks, and has good generalization ability on different classifiers.

To further compare the differences among the algorithms, Friedman nonparametric test was used to calculate, and the average ranking of the algorithms was used to judge whether there were significant differences between the algorithms. The average ranking of 7 algorithms in 6 datasets was calculated and compared with the f-distribution critical value with a confidence degree of 0.1 to obtain Table 10. It can be seen from Table 10 show that for five classifiers, \(T_{F}\) of classification accuracy and \(T_{F}\) of F1_score are both greater than the corresponding critical value, indicating significant differences between algorithms. Therefore, the proposed NDFS algorithm has good performance.

NDFS algorithm, in the best case, to be selected features are the key feature subset of the first feature dominating features. The time complexity is O(n). In the worst case, the unselected features are non-dominated features of the key feature subset, and the time complexity is O(n2). Since the NDFS algorithm can delete many irrelevant features first, it will reduce the Pareto dominance feature calculation scale and improve the overall speed of the method. Therefore, NDFS has relatively fast operating efficiency. The feature selection experiments on each data set were repeated 10 times, and then the mean was calculated as the estimation of the running time of feature selection. The final experimental results are shown in Table 11.

NDFS algorithm is a pre-selection based on Fisher score, which is higher than Fisher score and MIC algorithm in running time. However, the classification experiments show that the classification performance is poor, and the two algorithms eventually contain high redundancy features, so the proposed algorithm time is relatively acceptable. It can be seen from the table that compared with FCBF-MIC, CFR, and KNFI algorithms, NDFS has a lower running time on six datasets. Compared with mRMR algorithm, NDFS has lower running time on three datasets.

In summary, when the same number of feature subsets is selected, the classification performance of feature subsets selected by NDFS algorithm is better than those of Fisher score, MIC, CFR, and mRMR under most classifiers. NDFS selects important features and eliminates redundant features, which significantly preserves useful feature information. Compared with the FCBF-MIC and KNFI algorithm, NDFS adopts the distance-based measure more accurately than the probability value of information theory, so the performance of the selected feature subset is better.

Conclusion

In this paper, a feature selection using non-dominant features-guided search is proposed. Fisher score algorithm is used to measure the category correlation of features. Cosine similarity based on geometric distance standard is used to measure the similarity between features. Specifically, the algorithm combines the Pareto dominance theory to gradually remove the Pareto dominance feature (redundant feature) of the largest category correlation feature. A feature subset with maximum correlation and minimum redundancy is obtained.

This algorithm uses the fast and effective characteristics of the Fisher score algorithm to select related features. It makes up for the deficiency of Fisher score that does not consider the correlation between features. The proposed method is compared to six competing feature selection methods on six real-world data sets. This approach has better classification performance than Fisher score, MIC, CFR, and mRMR algorithms and does not only consider the category correlation or feature redundancy of features. Compared with the FCBF-MIC and KNFI algorithms, it can obtain the feature subset with better classification ability while accelerating the algorithm execution efficiency. In light of the above experimental results show that NDFS method outperforms other compared feature selection methods.

NDFS has been able to extract features with low redundancy and affecting gene category. It is also worth studying to analyze the genes that affect the disease from the selected genes. Further work will establish an interpretable association model of selected features and final results to provide a scientific basis for personalized diagnosis and treatment.

References

Ram PK, Kuila P (2019) Feature selection from microarray data: genetic algorithm based approach[J]. J Inform Optim Sci 40(8):1599–1610

Lim K, Li Z, Choi KP, Wong L (2015) A quantum leap in the reproducibility, precision, and sensitivity of gene expression profile analysis even when sample size is extremely small [J]. J Bioinform Computational Biol 13(4):1550018–1550018

Xue Y, Xue B, Zhang M (2019) Self-adaptive particle swarm optimization for large-scale feature selection in classification[J]. ACM Trans Knowl Discov from Data (TKDD) 13(5):1–27

Hambali MA, Oladele TO, Adewole KS (2020) Microarray cancer feature selection: review, challenges and research directions[J]. Int J Cogn Computing Eng 1(1):78–97

Rui Z, Feiping N et al (2019) Feature selection with multi-view data: a survey ScienceDirect[J]. Int J Inform Fusion 50:158–167

Manikandan G, Susi E, Abirami S (2019) Flexible-fuzzy mutual information based feature selection on high dimensional data[C]//2018 Tenth International Conference on Advanced Computing (ICoAC). IEEE

Nakariyakul S (2018) High-dimensional hybrid feature selection using interaction information-guided search[J]. Knowl-Based Syst 145(1):59–66

Peng H, Long F, Ding C (2005) Feature selection based on mutual information: criteria of max-dependency, max-relevance, and min-redundancy[J]. IEEE Trans Pattern Anal Mach Intell 27(8):1226–1238

Yu L, Liu H (2004) Eficient feature selection via analysis of relevance and redundancy[J]. J Mach Learn Res 5(12):1205–1224

Gao T, Ji Q (2016) Efficient Markov blanket discovery and its application[J]. IEEE Trans Cybern 47(5):1169–1179

Jo I, Lee S, Sejong Oh (2019) Improved measures of redundancy and relevance for mRMR feature selection[J]. Computers 8(2):42–42

Li S et al (2020) Feature selection for high dimensional data using weighted K-nearest neighbors and genetic algorithm. IEEE Access 8(8):139512–139528

Cai J, Luo J, Wang S et al (2018) Feature selection in machine learning: a new perspective[J]. Neurocomputing 300(26):70–79

Kira K, Rendell LA (1992) A practical approach to feature selection[J]. Proceedings of the Ninth International Workshop on Machine Learning (ML 1992), Aberdeen, Scotland, UK, July 1–3

Robnik-Šikonja M, Kononenko I (2003) Theoretical and empirical analysis of ReliefF and RReliefF[J]. Mach Learn, 53(1–2)

Gu Q, Li Z, Han J (2012) Generalized fisher score for feature selection[J]

Reshef DN, Reshef YA, Finucane HK et al (2011) Detecting novel associations in large data sets[J]. Science 334(6062):1518–1524

Hall MA (2000) Correlation-based feature selection for discrete and numeric class machine learning. 359−366

Gao W, Liang Hu, Zhang P, He J (2018) Feature selection considering the composition of feature relevancy[J]. Pattern Recogn Lett 112:70–74

Estevez PA, Tesmer M, Perez CA et al (2009) Normalized mutual information feature selection[J]. IEEE Trans Neural Netw 20(2):189–201

Javed K, Babri HA, Saeed M (2012) Feature selection based on class-dependent densities for high-dimensional binary data[J]. IEEE Trans Knowl Data Eng 24(3):465–477

Sun G, Song Z, Liu J et al (2017) Feature selection method based on maximum information coefficient and approximate markov blanket[J]. Acta Automatica Sinica (in Chinese) 43(05):795–805

Zhang L, Wang C et al (2018) A feature selection algorithm for maximum relevance minimum redundancy using approximate markov blanket[J]. J Xi’an Jiaotong Univ (in Chinese) 52(10):141–145

Thejas GS, Joshi SR, Iyengar SS et al (2019) Mini-batch normalized mutual information: a hybrid feature selection method[J]. IEEE Access 7(99):116875–116885

Xue B, Zhang MJ, Browne WN (2013) Particle swarm optimization for feature selection in classification: a multi-objective approach[J]. IEEE Trans Cybern 43(6):1656–1671

Zhou Yu, Kang J, Guo H (2020) Many-objective optimization of feature selection based on two-level particle cooperation[J]. Inf Sci 532(532):91–109

Saha S, Ghosh M, Ghosh S, Sen S, Singh PK, Geem ZW, Sarkar R (2020) Feature selection for facial emotion recognition using cosine similarity-based harmony search algorithm[J]. Appl Sci 10(8):2816

Walter V, Yin X, Wilkerson MD et al (2013) Molecular subtypes in head and neck cancer exhibit distinct patterns of chromosomal gain and loss of canonical cancer genes[J]. PLoS ONE 8(2):e56823

Funding

National Key R&D Program of China (Item NO: 2019YFC0121502), Project supported by the Special Fund of Shaanxi Key Laboratory of Network Data Analysis and Intelligent Processing.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pan, X., Sun, J., Yu, H. et al. Feature selection using non-dominant features-guided search for gene expression profile data. Complex Intell. Syst. 9, 6139–6153 (2023). https://doi.org/10.1007/s40747-023-01039-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40747-023-01039-x