Abstract

The traveling salesman problem (TSP) is an NP-hard problem. Many ant colony optimization (ACO) algorithms have been proposed to solve the TSP, but they commonly suffer from long convergence times and a tendency to fall into local optima. Building on the ACO algorithm, this study proposes a dynamic adaptive ACO algorithm (DAACO). DAACO diversifies the initialization of the ACO algorithm by dynamically determining the number of ants, which helps prevent the search from falling into local optima. DAACO also adopts a hybrid local selection strategy to improve the quality of the ants' solutions and reduce the optimization time. On 20 instances of the TSPLIB dataset, the DAACO algorithm obtains 19 optimal values, and its solutions for 10 instances are better than those of other algorithms. The experimental results on the TSPLIB dataset show that the DAACO algorithm has clear advantages in convergence time, solution quality, and average value relative to existing state-of-the-art ACO algorithms.

Introduction

The traveling salesman problem (TSP) is an NP-hard combinatorial optimization problem. At present, two types of algorithms are used to solve the TSP: exact algorithms and heuristic algorithms. As the calculation time of exact algorithms is too long, researchers have proposed various heuristic algorithms to solve the TSP. Examples include the genetic algorithm (GA), simulated annealing, the artificial bee colony (ABC) algorithm, the ant colony algorithm, and the bat algorithm. These algorithms involve multiple iterations, and their parameters require constant tuning to improve performance. The optimization strategy and the evaluation system are the keys to these algorithms.

Chen and Chien [1] proposed a genetic simulated annealing ant colony system with particle swarm optimization (PSO) techniques to solve the TSP. Experiments were performed on 25 instances obtained from TSPLIB. The experimental results showed that the proposed method is superior to the methods of Angeniol (1988), Somhom (1997), Masutti and Castro (2009), and Pasti and Castro (2006) in all aspects.

Ouaarab et al. [2] proposed an improved discrete cuckoo search (DCS) algorithm. The cuckoo search (CS) algorithm had already proved effective in solving continuous optimization problems. In the study, CS was extended and improved by rebuilding the CS population and introducing a new category of cuckoos so that it can solve combinatorial problems as well as continuous ones. The test results showed that DCS is better than some other heuristic algorithms.

Proposed in 2010, the bat algorithm [3] is a population-based metaheuristic algorithm inspired by the echolocation (biological sonar) of bats. Osaba et al. [4] proposed a discrete version of the bat algorithm to solve the well-known symmetric and asymmetric TSP. They also proposed an improvement to the basic structure of the classic bat algorithm.

Akhand et al. [5] proposed an effective variant of spider monkey optimization (SMO), called discrete SMO (DSMO), to solve the TSP. In DSMO, each spider monkey represents a TSP solution, and solutions are updated through operations based on the swap sequence (SS) and swap operator (SO). Relative to other well-known methods, DSMO is highly effective in solving the TSP.

The ABC algorithm is a swarm intelligence method. Khan and Maiti [6] improved this algorithm with multiple update rules and the K-opt operation to solve the TSP. Eight different rules were proposed to update a solution. A rule is selected at random from the rule set by roulette wheel selection, and the solution is updated by the employed bees or the onlooker bees. In the scout phase of the algorithm, the K-opt operation is applied to any stagnant solution a fixed number of times to seek possible improvements. Compared with existing algorithms, this algorithm is competitive in accuracy and consistency on standard TSP instances.

Choong et al. [7] adopted a hyper-heuristic method called the “modified choice function,” which automatically regulates the selection of the neighborhood search heuristics used by the bees. The Lin–Kernighan (LK) local search strategy was integrated to improve the performance of the proposed model. A performance comparison with other current algorithms further demonstrated the effectiveness of the proposed model.

The tree-seed algorithm (TSA) is a population-based iterative search algorithm. Cinar et al. [8] redesigned the basic TSA by integrating swap, shift, and symmetry transformation operators to solve permutation-coded optimization problems and thereby proposed the discrete TSA (DTSA). In the basic TSA, the solution update rule applies to decision variables defined in a continuous solution space. In the DTSA, this rule is replaced by the transformation operators so that the algorithm can solve the TSP.

Yousefikhoshbakht [9] proposed the MPSO algorithm. In the particle movement phase, the MPSO algorithm refers to the best solution of the current iteration and applies various local search algorithms to derive an improved solution. In addition, the algorithm introduces a method for moving the other particles toward the best particle. The results highlighted the efficiency of the MPSO algorithm, which achieved excellent solutions in most instances.

The algorithm proposed in the current study aims at improving the traditional ACO algorithm. ACO is a stochastic swarm intelligence metaheuristic that has been used to deal with many combinatorial optimization problems. Its characteristics include distributed computing, positive feedback, and ease of combination with other algorithms, such as GA and PSO, to improve global search capabilities and form various heuristic algorithms. ACO simulates the mechanism by which each ant senses the pheromones deposited by other ants and the colony thereby finds the shortest path. The pheromone concentration plays a vital role in guiding ant behavior, so the accuracy and effectiveness of the algorithm depend on how the pheromone is processed. On the one hand, the random probability selection in the ACO algorithm causes some major problems, such as long search time, slow convergence, and stagnation. On the other hand, the ACO algorithm has powerful discovery capabilities, produces excellent solutions, and is easy to implement. Many researchers have proposed improvements to the ACO algorithm to overcome its deficiencies.

In view of the above shortcomings, this study proposes a dynamic adaptive ant quantity ACO algorithm (DAACO). Two improvement strategies are added to DAACO: an initialization strategy based on the convex hull and K-means clustering, and a local search strategy based on neighbor pairs. The experimental results show that the proposed DAACO algorithm performs better than the latest ACO algorithms (DFACO and DEACO). The rest of this paper is organized as follows. The first section reviews the related work on the ACO algorithm and the TSP. The next section discusses how ACO solves the TSP. The third section introduces the formulation and architecture of the proposed dynamic adaptive ACO (DAACO) algorithm. Finally, the fourth section presents the experimental results and simulation analysis.

Related research

The traveling salesman problem (TSP) is one of the well-known problems in the field of mathematics. Consider a traveling merchant who wants to visit a set of cities and must choose the route to take. The route is constrained in that each city must be visited exactly once and the merchant must return to the starting city at the end. The goal is to find the route with the shortest total distance among all feasible routes.

The basic idea of using the ACO algorithm to solve the TSP is that a certain number of ants each start from a random node and repeatedly evaluate and select the next node on the basis of the heuristic information and the pheromone left by previous ants. When an ant has visited all the nodes, the order in which it visited them constitutes a solution of the TSP. The solution is improved over multiple iterations, and the pheromone is constantly updated during this learning process.

Ant colony algorithm

Dorigo and Gambardella [10] proposed an artificial ant colony algorithm that can solve the TSP. The ants in the artificial ant colony algorithm can use the pheromone deposited on the edge of the TSP graph to continuously generate short feasible routes. Computer simulation shows that the artificial ant colony algorithm can generate good solutions for symmetric and asymmetric instances of the TSP.

Dorigo and Gambardella [11] introduced the ant colony system (ACS), which is a distributed algorithm applied to the TSP. In the ACS, a group of agents called ants collaborate to find a good solution for the TSP. These ants use pheromones to cooperate. When constructing a solution, the pheromones are deposited on the edge of the TSP graph. The experiment results showed that the performance of the ACS is better than that of other natural heuristic algorithms. The study also compared ACS-3-opt (a version of ACS with a local search process) with some of the best performing algorithms to solve the symmetrical and asymmetrical TSP.

Elloumi et al. [12] proposed a novel method by introducing PSO, which was modified through the ACO algorithm to improve performance. Considering the completion time and optimal length, the authors verified the new hybrid method (PSO-ACO) by applying it to the TSP. The results showed that the proposed method is effective.

Escario et al. [13] proposed the ant colony extension (ACE), which is a novel algorithm that belongs to the general ACO framework. The two specific functions of the ACE are the task division between two types of ants and the implementation of the control strategy for each ant during the search process. The results of the ACE were compared with those of two well-known ACO algorithms, namely ACS and MMAS. In almost every instance of the TSP test, the ACE showed better performance than the ACS and MMAS.

Liu and Wang [14] proposed the minimum–maximum ant colony strategy (MPCSMACO). In the MPCSMACO algorithm, a variety of group strategies are introduced to realize information exchange and cooperation between various types of ant colonies. The chaotic search method, which uses the ergodicity, randomness, and regularity of the logistic mapping, overcomes the long search time, avoids falling into local extrema in the initial stage, and improves the accuracy of the later search. The min–max ant strategy in MPCSMACO is used to avoid local optima and stagnation. Ants with different probabilities search different areas according to pheromone concentration, thereby reducing the number of blind searches in the chaotic search method. The experimental results showed that the MPCSMACO algorithm has superior global search ability and convergence performance.

Duan and Yong [15] modified the pheromone update rule and adaptive adjustment strategy in the IMVPACO algorithm to effectively reflect the quality of the solution based on the pheromone increment. IMVPACO modifies the ant’s movement rules to adapt to large-scale problem solving, optimizes the path, and improves the search efficiency. Adopting the boundary symmetry mutation strategy improves not only the mutation efficiency but also the mutation quality. Simulation experiments showed that the proposed IMVPACO algorithm can obtain good results when searching for the optimal solution and has better global search capabilities and convergence performance than other traditional methods.

Lei and Wang [16] sought to improve the efficiency of search results, shorten the evolution time, and avoid the tendency of ACO to stagnate and fall into local optima when solving complex functions. In the proposed IWSMACO algorithm, an information weight factor is added to the path selection and pheromone adjustment mechanisms to dynamically adjust the path selection probability and randomly select behavior rules. The supervision mechanism adds a dynamic convergence criterion based on the supervision distance, adopts the optimal pheromone update strategy, and adaptively selects excellent ants to update the pheromone trails, which improves the solution quality of each iteration and effectively guides the learning of subsequent ants.

In the DPSEMACO algorithm proposed in 2016, Li and Wan [17] used the cooperation mechanism of biological communities to divide the ants into two subgroups, which evolved separately and exchanged information in time. A two-way dynamic adjustment strategy for the pheromone evaporation factor was used to change the path pheromone of the different subgroups, and a parallel strategy was also adopted; both help avoid falling into local optima. The DPSEMACO algorithm can expand the search space and improve the overall search performance by repeatedly changing the pheromone of each subpopulation and adaptively adjusting the evaporation factor. The experimental results showed that the proposed DPSEMACO algorithm is feasible and effective in solving the TSP and exhibits good global search ability and high convergence speed.

Liu [18] proposed the probability formula of the optimal path and the pheromone update formula by combining the traditional ant colony algorithm and the local search algorithm and then proposed an improved ant colony algorithm called the LSACA algorithm. In the experimental analysis, the comparison with the traditional algorithm proved the feasibility and effectiveness of the proposed algorithm.

Mavrovouniotis et al. [19] proposed a memetic ACO algorithm that integrates local search operators (called unstring and string) into ACO to solve the dynamic TSP (DTSP). The proposed memetic ACO algorithm is aimed at both the symmetric and the asymmetric DTSP. The experimental results showed that the proposed memetic algorithm is more effective than other state-of-the-art algorithms.

Deng et al. [20] introduced a GA and ant colony algorithm and proposed a genetic and ant colony adaptive collaborative optimization (MGACACO) algorithm to solve complex optimization problems. The proposed MGACACO algorithm combines the exploring capability of the GA and the random ability of the ACO algorithm. The experimental results showed that the proposed MGACACO algorithm can avoid falling into local extreme values and has relatively good search accuracy and fast convergence speed.

Gülcü et al. [21] proposed a parallel cooperative hybrid algorithm on the basis of ACO (PACO-3-opt). This method uses the 3-opt algorithm to avoid the local minima. PACO-3-opt has multiple populations and a master-slave paradigm. Each population runs ACO to generate solutions. After a predetermined number of iterations, each population first runs 3-opt to improve the solution and then shares the best path with other populations. This process is iterated until the termination condition is met. Therefore, it can reach the global optimum. The experimental results showed that PACO-3-opt is more effective and reliable than other algorithms.

Dahan et al. [22] embedded the 3-opt algorithm in the basic flying ACO (FACO) algorithm and proposed the dynamic FACO (DFACO) algorithm to improve solutions by reducing the impact of the stagnation problem. A population contains a combination of regular ants and flying ants. The modifications are designed to help the DFACO algorithm obtain satisfactory solutions in a short processing time and avoid falling into a local minimum. The experimental results showed that the DFACO algorithm achieves the best solution quality for most instances and superior results in terms of execution time.

Ebadinezhad [23] proposed the DEACO algorithm for dynamically adjusting ACO parameters, that is, dynamically adjusting the evaporation rate of pheromones. In this mechanism, the main idea is to select the first node (starting node) based on clustering to achieve the shortest path. In this way, DEACO can find the least costly/shortest path for each cluster. The experimental results showed that compared with traditional ACO, the DEACO method has faster convergence speed and higher search accuracy.

Compared with the original ant colony algorithm, current ant colony algorithms have been greatly improved and have become an effective method for solving the TSP. Existing algorithms are mainly based on the ACO algorithm and focus on two aspects of improvement. One aspect is to improve the ants' local search strategy, guide the ants toward the best direction, and speed up the convergence. The other aspect is to adaptively determine some of the parameters of the ACO algorithm and improve the generalization of the algorithm as a whole. Both aspects are important for enhancing the optimization capability of the ACO algorithm.

The current research mainly improves the traditional ACO algorithm from three directions:

1. A convex hull and the K-means algorithm are used to adaptively determine the number of ants in each graph and thereby improve the generalization performance of the algorithm;
2. The random placement strategy of ants is improved on the basis of K-means clustering to increase the diversity of ant optimization;
3. The mixed use of local search methods that search for one and two layers each time increases the reference information for local optimization and speeds up the convergence.

ACO-3-opt

The ACO-3-opt algorithm [24] is an improved algorithm based on the traditional ACO algorithm. Its main improvement strategy is to use the 3-opt algorithm to optimize the basic solution obtained by the ACO algorithm. The flow chart of the ACO-3-opt algorithm is shown in Fig. 1.

The basic process of the ACO-3-opt algorithm can be described as follows:

1. Initialize the global parameters of the ACO algorithm;
2. Initialize the iteration parameters;
3. Select a node that has not been visited and update the local pheromone. If all nodes have been visited, then go to step 4; otherwise, repeat step 3;
4. Use the 3-opt algorithm to optimize the TSP path, calculate the length of the TSP path, update the global pheromone, and record the best solution found over the iterations. If the maximum number of iterations has been reached, then go to step 5; otherwise, go to step 2;
5. Output the best solution found over all iterations.

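Ants start from a random initial node and repeatedly move to nodes that have not yet been visited. The traditional ACO algorithm uses roulette wheel selection to choose the next node by probability. Let i be the node where ant k is currently located, and let \(allowed_{k}\) be the set of nodes that ant k can still visit. The probability that ant k moves from node i to node j is given by Eq. 1:

\[ p_{ij}^{k} = \begin{cases} \dfrac{[\tau _{ij}]^{\alpha }[\eta _{ij}]^{\beta }}{\sum \nolimits _{s \in allowed_{k}} [\tau _{is}]^{\alpha }[\eta _{is}]^{\beta }}, &amp; j \in allowed_{k} \\ 0, &amp; \text{otherwise} \end{cases} \tag{1} \]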

where \(\alpha \) and \(\beta \) are empirical parameters representing the importance of the pheromone \(\tau _{ij}\) and heuristic value \(\eta _{ij}\), respectively. \(\tau _{ij}\) represents the pheromone on the path from node i to node j, and \(\eta _{ij}\) represents the heuristic value between node i and node j, which is given by Eq. 2:

\[ \eta _{ij} = \frac{1}{dis_{ij}} \tag{2} \]

where \(dis_{ij}\) is the Euclidean distance between node i and node j.
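
The roulette rule described above can be sketched in a few lines of Python. This is a minimal illustration, assuming that the selection weight of node j is \(\tau _{ij}^{\alpha }\eta _{ij}^{\beta }\) normalized over \(allowed_{k}\) and that \(\eta _{ij}\) is the reciprocal of the distance; the function names, the default values of `alpha` and `beta`, and the three-node distance matrix are hypothetical.

```python
import random

def transition_probabilities(i, allowed, tau, eta, alpha=1.0, beta=2.0):
    """Return {j: p_ij} over the allowed nodes (weights tau^alpha * eta^beta)."""
    weights = {j: (tau[i][j] ** alpha) * (eta[i][j] ** beta) for j in allowed}
    total = sum(weights.values())
    return {j: w / total for j, w in weights.items()}

def roulette_select(probs, rng):
    """Pick a node with probability proportional to its value in probs."""
    r = rng.random()
    cumulative = 0.0
    for j, p in probs.items():
        cumulative += p
        if r <= cumulative:
            return j
    return j  # guard against floating-point round-off in the cumulative sum

# Hypothetical 3-node instance with uniform pheromone.
dis = [[0, 1, 2], [1, 0, 4], [2, 4, 0]]
tau = [[1.0] * 3 for _ in range(3)]
eta = [[0.0 if a == b else 1.0 / dis[a][b] for b in range(3)] for a in range(3)]

probs = transition_probabilities(0, [1, 2], tau, eta)
next_node = roulette_select(probs, random.Random(0))
```

With uniform pheromone, the closer node 1 receives the larger share of the probability mass, which is the intended effect of the heuristic term.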

Whenever an ant selects a node, it updates the pheromone on the edge between the two nodes. \( L_{0} \) is the length of the initial path calculated using the greedy algorithm. The local pheromone update formula (ULP) is given by Eq. 3:

where \(\tau _{0}\) is the initial pheromone value and \(\rho \) is a number in (0,1) that can be understood as the evaporation rate of the pheromone.

The global pheromone update formula (UGP) is given by Eq. 4:

where \(L_{best}\) is the shortest length among all TSP paths found so far. As the ACO algorithm easily falls into local optima, some researchers have combined it with the 3-opt local optimization algorithm to improve the local optimization process. The 3-opt algorithm removes three edges from the current path, reconnects the resulting segments with three new edges, and keeps the new path if it is shorter.
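
The two pheromone updates can be illustrated with the forms used in the standard ant colony system (ACS). The sketch below assumes those textbook forms, which are consistent with the symbols \(\tau _{0}\), \(\rho \), and \(L_{best}\) defined here but are not taken verbatim from Eqs. 3 and 4: the local update is \(\tau _{ij} \leftarrow (1-\rho )\tau _{ij} + \rho \tau _{0}\), and the global update is \(\tau _{ij} \leftarrow (1-\rho )\tau _{ij} + \rho /L_{best}\) on the edges of the best tour. The function names and the symmetric treatment of edges are assumptions.

```python
def local_update(tau, i, j, rho, tau0):
    """ACS-style local update on edge (i, j), applied symmetrically (assumed form)."""
    tau[i][j] = (1.0 - rho) * tau[i][j] + rho * tau0
    tau[j][i] = tau[i][j]

def global_update(tau, best_tour, best_len, rho):
    """ACS-style global update: reinforce only the edges of the best tour (assumed form)."""
    n = len(best_tour)
    for k in range(n):
        i, j = best_tour[k], best_tour[(k + 1) % n]
        tau[i][j] = (1.0 - rho) * tau[i][j] + rho * (1.0 / best_len)
        tau[j][i] = tau[i][j]

# Hypothetical 3-node pheromone matrix.
tau = [[0.5] * 3 for _ in range(3)]
local_update(tau, 0, 1, rho=0.1, tau0=0.1)
after_local = tau[0][1]
global_update(tau, [0, 1, 2], best_len=10.0, rho=0.1)
```

The local update pulls a freshly used edge back toward \(\tau _{0}\), which discourages other ants in the same iteration from following it, whereas the global update rewards the edges of the best tour.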

The experiments show that adding the 3-opt local optimization algorithm to the known heuristic algorithm can improve the algorithm’s optimization ability and the quality of the solution.
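
To make the 3-opt move concrete, the following is a minimal first-improvement sketch, not the implementation used in the experiments. It assumes Euclidean coordinates, cuts the tour into segments A|B|C|D, and tries the seven possible reconnections of B and C. It recomputes the full tour length for each candidate, so it is only suitable for small illustrative instances; the function names and the four-point example are hypothetical.

```python
from itertools import combinations
from math import dist

def tour_length(tour, pts):
    """Length of the closed tour through pts in the given order."""
    return sum(dist(pts[tour[k]], pts[tour[(k + 1) % len(tour)]])
               for k in range(len(tour)))

def three_opt(tour, pts):
    """First-improvement 3-opt: repeat until no reconnection shortens the tour."""
    best = list(tour)
    best_len = tour_length(best, pts)
    improved = True
    while improved:
        improved = False
        for i, j, k in combinations(range(1, len(best) + 1), 3):
            a, b, c, d = best[:i], best[i:j], best[j:k], best[k:]
            candidates = (
                a + b[::-1] + c + d,
                a + b + c[::-1] + d,
                a + b[::-1] + c[::-1] + d,
                a + c + b + d,
                a + c + b[::-1] + d,
                a + c[::-1] + b + d,
                a + c[::-1] + b[::-1] + d,
            )
            for cand in candidates:
                cand_len = tour_length(cand, pts)
                if cand_len < best_len - 1e-12:
                    best, best_len = cand, cand_len
                    improved = True
                    break
            if improved:
                break  # restart the scan from the improved tour
    return best, best_len

# Hypothetical example: a unit square visited in a self-crossing order.
pts = [(0, 0), (1, 1), (1, 0), (0, 1)]
start = [0, 1, 2, 3]
best, best_len = three_opt(start, pts)
```

On this example the crossing tour is replaced by the perimeter of the square, which has length 4.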

Dynamic adaptive ant quantity ACO algorithm (DAACO)

Before introducing the DAACO algorithm, this section provides an overview of two improvement strategies used by the DAACO algorithm.

The use of the ACO algorithm to solve the TSP involves parameters such as the number of ants, pheromone evaporation rate, and number of neighbors. These parameters are generally set before the program runs. To identify a good combination of parameters, we need to perform several experiments. Prior to such experiments, we propose the strategy of ant dynamic neighbors and pheromone dynamic evaporation to establish a direction for the automation of the ACO algorithm. In the experiment, a dynamic ant quantity algorithm is proposed. The basis of the proposed algorithm is as follows:

1. The number of nodes in each graph varies. Thus, the number of ants that can be beneficial to one graph may not be beneficial to another graph. For example, if the number of ants is 40, then such quantity may be useful for a graph with 300 nodes, but it may not be suitable for a graph with 1,000 nodes. An increase in the number of nodes means an increase in complexity. Thus, more ants may be needed to meet this requirement;
2. A graph can form multiple node groups in the overall scope. Given the characteristics of different node groups, the number of nodes within different node groups varies. The conventional ant initial placement algorithm is based on global node initialization. Therefore, all ants used may be initialized and placed in a node group. Such initial placement is unstable.

On the basis of these points, this study proposes a new method. First, a convex hull is used to calculate the number of clusters K. The clustering algorithm then partitions the nodes into K node groups, the number of ants for each node group is calculated from the number of nodes in that group, and the ant counts of all node groups are summed to obtain the global number of ants. The number of ants in DAACO is thus determined by the number of nodes in the instance, so DAACO can adaptively set the number of ants according to the size of each instance. When the ants are initially placed, each node group receives its corresponding number of ants at random positions within the group.

In addition, the DAACO algorithm uses a new node selection strategy. The following section introduces the two improvement strategies of the DAACO algorithm.

Strategy 1: dynamic ant (DA)

The dynamic ant quantity strategy in this experiment is realized by the convex hull algorithm and K-means clustering algorithm. The main steps are as follows:

1. Calculate the convex hull area S of the nodes in the graph through the convex hull algorithm;
2. Use half of the median of the distances between nodes as the radius \(r_{0}\);
3. Calculate the classification number \(K=2 \times S/ (\pi \times r_{0} \times r_{0})\);
4. Classify the graph according to the classification number K, and calculate the number of ants \(n_{i} = {\log _{7}k_{i}} \times 2\) that should be set for each classification, where \(k_{i}\) is the number of nodes in classification i;
5. Finally, the overall number of ants is \(N = {\sum \nolimits _{i = 1}^{K}n_{i}}\).
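
The arithmetic in steps 3 to 5 can be sketched as follows. The text does not state how the non-integer values of K and \(n_{i}\) are rounded, so the rounding below (nearest integer for K, rounding up with a floor of one ant for \(n_{i}\)) is an assumption, as are the function names and the sample numbers.

```python
import math

def cluster_count(hull_area, r0):
    """K = 2 * S / (pi * r0^2); rounding to the nearest integer >= 1 is an assumption."""
    return max(1, round(2.0 * hull_area / (math.pi * r0 * r0)))

def ants_per_cluster(cluster_sizes):
    """n_i = 2 * log_7(k_i); rounding up with at least one ant is an assumption."""
    return [max(1, math.ceil(2.0 * math.log(k, 7))) for k in cluster_sizes]

# Hypothetical values: hull area 100, radius 2, clusters of 100, 400, and 20 nodes.
K = cluster_count(100.0, 2.0)
n_i = ants_per_cluster([100, 400, 20])
N = sum(n_i)
```

Because \(n_{i}\) grows with the logarithm of the cluster size, a cluster that is four times larger receives only a few more ants, so the total number of ants stays moderate even for large instances.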

The specific process of the convex hull algorithm and K-means algorithm is described in the next section.

Algorithm 1: convex hull algorithm

The convex hull algorithm [25, 26] used in this study is a conventional computational geometry method. It is used here to calculate the approximate area of the entire graph and then estimate the number of clusters K for the K-means algorithm. In a real vector space V, for a given set X, the intersection H of all convex sets containing X is called the convex hull of X. In this study, the convex hull algorithm estimates how many node groups a graph can be divided into, which facilitates the subsequent use of the K-means clustering algorithm.
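
A hull area of this kind can be computed with any standard method. The sketch below uses Andrew's monotone chain for the hull and the shoelace formula for the area; this particular choice of algorithm is an assumption, since the text only cites [25, 26], and the sample points are hypothetical.

```python
def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # z-component of (a - o) x (b - o); positive for a left turn
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def polygon_area(vertices):
    """Shoelace formula for the area of a simple polygon."""
    n = len(vertices)
    s = 0.0
    for k in range(n):
        x1, y1 = vertices[k]
        x2, y2 = vertices[(k + 1) % n]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0

# Hypothetical nodes: a 2 x 2 square plus one interior and one boundary point.
nodes = [(0, 0), (2, 0), (2, 2), (0, 2), (1, 1), (1, 0)]
hull = convex_hull(nodes)
S = polygon_area(hull)
```

The interior point and the collinear boundary point are discarded, so the hull is the square itself and S equals 4.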

Algorithm 2: clustering algorithm

The K-means clustering algorithm [27] is an iterative cluster analysis algorithm. It divides the data into K groups (K is calculated with the help of the convex hull algorithm) by randomly selecting K objects as the initial cluster centers, calculating the distance between each object and each cluster center, and assigning each object to the cluster center closest to it. A cluster center and the objects assigned to it represent a cluster. After the objects have been assigned, each cluster center is recalculated on the basis of the objects currently in the cluster. This process continues until a termination condition is met: either the sum-of-squared-errors cost changes by less than the allowable error over several consecutive iterations, or the specified number of iterations is reached. The cost is calculated as in Eq. 5:

\[ cost = \sum \nolimits _{i = 1}^{K} \sum \nolimits _{P \in C_{i}} \Vert P-u_{i}\Vert ^{2} \tag{5} \]

where K is the number of clusters, P is a node belonging to the cluster \(C_{i}\), and \(\Vert P-u_{i}\Vert \) is the Euclidean distance from the node P to the centroid \(u_{i}\) of the cluster \(C_{i}\).

The centroid \(u_{i}(x_{i},y_{i})\) is calculated as in Eq. 6:

\[ u_{i} = \frac{1}{\Vert C_{i}\Vert } \sum \nolimits _{P \in C_{i}} P \tag{6} \]

where \(\Vert C_{i}\Vert \) is the number of nodes in the cluster \(C_{i}\).

The calculation flow of the K-means algorithm is as follows:

1. Select K initial cluster centers;
2. Calculate the distance from each data object to the K cluster centers, and assign each data object to the cluster whose center is closest. When all the data objects have been assigned, K clusters are formed;
3. Recalculate the mean of the data objects of each cluster, and use the mean as the new cluster center;
4. If the number of iterations is reached or the cost error of multiple iterations is less than the preset error, then K-means ends; otherwise, return to step 2.
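
The four steps above correspond to the classic Lloyd iteration, which can be sketched in pure Python as follows. The initialization by random sampling of K nodes, the `seed`, `max_iter`, and `tol` parameters, the single-iteration stopping test, and the handling of empty clusters are assumptions made for the sake of a runnable example.

```python
import random
from math import dist

def kmeans(points, k, max_iter=100, tol=1e-9, seed=0):
    """Plain Lloyd's k-means; returns (centers, labels, cost)."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)          # step 1: K initial centers
    prev_cost = float("inf")
    labels, cost = [], prev_cost
    for _ in range(max_iter):
        # step 2: assign each point to its nearest center
        labels = [min(range(k), key=lambda c: dist(p, centers[c])) for p in points]
        # step 3: recompute each center as the mean of its members
        for c in range(k):
            members = [p for p, l in zip(points, labels) if l == c]
            if members:  # keep the old center if a cluster is empty
                centers[c] = tuple(sum(v) / len(members) for v in zip(*members))
        # sum of squared distances to the assigned centers
        cost = sum(dist(p, centers[l]) ** 2 for p, l in zip(points, labels))
        # step 4: stop when the cost no longer changes
        if abs(prev_cost - cost) <= tol:
            break
        prev_cost = cost
    return centers, labels, cost

# Hypothetical data: two well-separated groups of three nodes each.
data = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centers, labels, cost = kmeans(data, 2)
```

On this toy data the iteration separates the two groups, and each center ends at the mean of its three nodes.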

Fig. 2 An example of using ACO-3-opt to place the initial position of ants (the coordinates of points are from the fnl4461 instance of TSPLIB [28])

Fig. 3 An example of using DAACO to place the initial position of ants (the coordinates of points are from the fnl4461 instance of TSPLIB [28])

With Algorithm 1, the number of categories K can be calculated. The clustering algorithm can be used to calculate the number of nodes in each category (node cluster), calculate the number of ants that should be placed in different node clusters, and then realize the dynamic determination of the number of ants in each graph.

Figure 2 takes the fnl4461 instance (from TSPLIB [28]) as an example. When the number of ants is set conventionally and all ants are placed at random, the number of ants cannot be flexibly determined, and the placement of ants cannot cover the entire graph. This condition limits the diversity of solutions and affects their quality.

Figure 3 takes the data fnl4461 (from TSPLIB [28]) as an example. The convex hull algorithm is used to calculate the cluster number K of the graph as 12. Then, the K-means algorithm is used for classification. The number of ants that should be placed in each category is also calculated. The red triangle in Fig. 3 indicates where the ants are placed. The dynamic ant strategy based on the convex hull and K-means can flexibly calculate the number of ants in the graph while ensuring that the placement of the ants covers the entire graph as much as possible. Thus, it increases the diversity of the solution and ultimately improves the quality of the solution.

By comparing Figs. 2 and 3, we identify the following advantages of the placement in Fig. 3:

1. The number of ants is fully adapted to the scale of the graph, and thus, the diversity of ants during initialization can be enhanced;
2. According to advantage 1, the placement positions of the ants can evenly cover the entire graph, thereby increasing the diversity of the ants' optimization.
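
The cluster-based placement can be sketched as follows, given one cluster label per node. The sketch assumes that each cluster receives \(n_{i} = 2\log _{7}k_{i}\) ants rounded up, and that the ants of a cluster start on distinct nodes of that cluster; neither detail is specified in the text, and the function name and label vector are hypothetical.

```python
import math
import random

def place_ants(labels, rng):
    """Return start nodes: each cluster gets n_i randomly chosen member nodes."""
    clusters = {}
    for node, label in enumerate(labels):
        clusters.setdefault(label, []).append(node)
    starts = []
    for members in clusters.values():
        # n_i = 2 * log_7(k_i), rounded up, at least one ant (assumption)
        n_i = max(1, math.ceil(2.0 * math.log(len(members), 7)))
        # distinct start nodes within the cluster (assumption)
        starts.extend(rng.sample(members, min(n_i, len(members))))
    return starts

# Hypothetical labels: nodes 0-9 in cluster 0, nodes 10-39 in cluster 1.
labels = [0] * 10 + [1] * 30
starts = place_ants(labels, random.Random(42))
```

Every cluster contributes at least one start node, so no region of the graph is left without an ant, which is the property that Fig. 3 illustrates.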

Strategy 2: choose the best (CB)

The strategy of selecting the optimal node in this study uses two methods, namely selecting the optimal node (CBN) and selecting the optimal neighbor pair (CBNP). When the number of nodes in the TSP path is less than 70% of the total number of nodes, the CBN algorithm is used; otherwise, the CBNP algorithm is used.

Algorithm 1: choose the best node (CBN)

In a conventional method, ants select the next node with the most pheromone in the current node’s candidate set. In each iteration of this experiment, the candidate node set of each node is updated according to the pheromone situation, that is, M better nodes are selected from the \(M \times 2\) candidate nodes of each node to form a new candidate node set, and M is the size of the candidate node set. The selection formula is as Eq. 7:

Whenever the ant visits a node, it traverses the candidate node set for that node, selects the node with the largest pheromone among the unvisited nodes as the next choice, and iterates successively.

In formula (7), \(\tau _{ij}\) represents the pheromone between node i and node j, \(allowed_{k}\) represents the set of neighbor nodes that the current ant k can visit, \(City_{visited}\) is the number of nodes that have been visited so far, and n is the total number of nodes. When the number of nodes in the TSP path is less than 70% of the total number of nodes, the CBN algorithm is used; otherwise, the CBNP algorithm is used.

In terms of pheromone update rules, two types of pheromone updates are adopted in the experiment. One is a local update that adjusts the search direction of the ants in time, as in formula (3). The other is a global update that, in each iteration, guides the global search to converge in a better direction, as in formula (4). The following is the algorithm framework for selecting the optimal solution in the candidate node set.
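
Based on the symbols defined above, a selection rule consistent with the description can be written as follows. This is a reconstruction under that assumption, in which the ant at node i moves to the allowed node with the largest pheromone while fewer than 70% of the nodes have been visited; it is not a verbatim copy of formula (7).

```latex
j = \arg\max_{s \in allowed_{k}} \tau_{is},
\qquad \text{if } City_{visited} < 0.7\,n
```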
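
A minimal Python sketch of the CBN idea is given below; it is an illustration under stated assumptions rather than the framework of the original algorithm. It assumes that each node carries a pool of up to \(M \times 2\) candidate nodes, that the M candidates with the most pheromone are kept in each iteration, and that the caller falls back to another rule when every candidate has been visited. The function names and the sample pheromone values are hypothetical.

```python
def refresh_candidates(tau, pools, M):
    """For each node, keep the M candidates with the most pheromone from its pool."""
    return {
        i: sorted(pool, key=lambda j: tau[i][j], reverse=True)[:M]
        for i, pool in pools.items()
    }

def choose_best_node(i, tau, candidates, visited):
    """CBN: unvisited candidate of node i with the largest pheromone, or None."""
    options = [j for j in candidates[i] if j not in visited]
    if not options:
        return None  # the caller must fall back to another rule (assumption)
    return max(options, key=lambda j: tau[i][j])

# Hypothetical pheromone row for node 0 and its candidate pool.
tau = [[0.0, 0.2, 0.9, 0.5],
       [0.2, 0.0, 0.1, 0.3],
       [0.9, 0.1, 0.0, 0.4],
       [0.5, 0.3, 0.4, 0.0]]
pools = {0: [1, 2, 3]}
candidates = refresh_candidates(tau, pools, M=2)
first_choice = choose_best_node(0, tau, candidates, visited={0})
second_choice = choose_best_node(0, tau, candidates, visited={0, 2})
```

Restricting the scan to M candidates keeps each selection step at O(M) instead of O(n), which is where the time saving of the candidate set comes from.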

Algorithm 2: choose the best neighbor pair (CBNP)

Before describing the algorithm in this section, we clarify the deep search method. If we could search n layers at a time, then searching all possible n-layer permutations would be guaranteed to find the global optimal solution. However, the complexity of this method is O(n!); it degenerates into exhaustive search, and its running time is unacceptable. On the basis of this idea, this work designs a similar but cheaper method, which searches two layers at a time. This method provides more selection information than searching only one layer, although far less than searching n layers.

The above algorithm framework is a simple description of the two-layer search algorithm. However, the experiment revealed that its running time is relatively long. Although the results improve to some extent, the improvement does not justify the increased running time. Therefore, on the basis of the aforementioned algorithm model, an optimized algorithm is proposed.

This study puts forward the concept of a “neighbor pair”, which is based on the basic concept of neighbors. In the search process, the search is generally sped up by selecting the optimal neighbor node from the candidate node set of the current node before the next search step is performed. In this study, to search two steps at a time, node \(q_{1}\) is selected from the neighbor nodes of the current node p, and node \(q_{2}\) is selected from the neighbor nodes of \(q_{1}\). We define \(q_{1}q_{2}\) as a “neighbor pair” of p. We use the sum of the pheromone between p and \(q_{1}\) and the pheromone between \(q_{1}\) and \(q_{2}\) to evaluate the neighbor pair \(q_{1}q_{2}\) of node p. When a neighbor pair is selected in the search, the effect of searching two steps at once is achieved.

The algorithm for selecting the optimal solution in the neighbor pair is described as follows:

1. Preprocess the set of candidate nodes for each node;

2. Preprocess the “neighbor pair” for each node;

3. When traversing the “neighbor pairs” of each node, select the “neighbor pair” in which neither node has been visited and whose pheromone is the largest.
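The three steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the C++ implementation used in the experiments: the function names, the candidate-list size `k`, and the choice to exclude pairs that lead back to p are hypothetical, and the score is the sum of the two pheromone values described earlier in this section.

```python
def build_candidates(dist, k):
    """Step 1: for each node, keep its k nearest other nodes as candidates.
    (k is a hypothetical parameter; the source does not fix its value here.)"""
    n = len(dist)
    return [
        sorted((j for j in range(n) if j != i), key=lambda j: dist[i][j])[:k]
        for i in range(n)
    ]


def build_neighbor_pairs(candidates):
    """Step 2: for each node p, list the pairs (q1, q2) where q1 is a
    candidate of p and q2 is a candidate of q1. Pairs that lead straight
    back to p are dropped (an assumption of this sketch)."""
    return [
        [(q1, q2) for q1 in cand for q2 in candidates[q1] if q2 != p]
        for p, cand in enumerate(candidates)
    ]


def best_neighbor_pair(p, pairs, tau, visited):
    """Step 3: among the pairs of p whose two nodes are both unvisited,
    return the pair maximizing tau[p][q1] + tau[q1][q2], or None."""
    best, best_score = None, float("-inf")
    for q1, q2 in pairs[p]:
        if q1 in visited or q2 in visited:
            continue
        score = tau[p][q1] + tau[q1][q2]
        if score > best_score:
            best, best_score = (q1, q2), score
    return best


# Toy usage: four nodes on a line, with made-up pheromone values.
dist = [[0, 1, 2, 3], [1, 0, 1, 2], [2, 1, 0, 1], [3, 2, 1, 0]]
tau = [[0.0, 0.5, 0.9, 0.1],
       [0.5, 0.0, 0.2, 0.1],
       [0.9, 0.2, 0.0, 0.8],
       [0.1, 0.1, 0.8, 0.0]]
pairs = build_neighbor_pairs(build_candidates(dist, k=2))
choice = best_neighbor_pair(0, pairs, tau, visited={0})
```

Because steps 1 and 2 are computed once per instance, the per-step cost in this sketch is only the scan over the precomputed pairs of the current node.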

The improved two-layer search algorithm is given as Algorithm 2.

The selection formula is as Eq. 8:

With the improved two-layer search algorithm, the time spent on selection is significantly reduced. Because this selection algorithm is invoked only after a certain stage of the search, the total running time is not much different from that of the original method and may sometimes be greater. Nevertheless, the results are improved.

Dynamic adaptive ant quantity ACO algorithm

The DAACO algorithm uses the two strategies explained above. The following discussion introduces the algorithm ideas of DAACO.

The basic ideas of the DAACO algorithm are as follows:

1. In the parameter initialization stage, strategy 1 is used to dynamically determine the number of ants in each graph so that the number of ants can fully adapt to the scale of the graph;

2. In the ant initialization stage, on the basis of clustering, ants are randomly placed so that their positions can fully cover the entire graph and increase the diversity of solutions;

3. In the local optimization stage, strategy 2 is used to increase the amount of information for ants’ local optimization and to speed up the convergence;

4. After the optimization is over, the 3-opt local optimization algorithm is used to optimize the obtained solution and thereby generate the final solution.

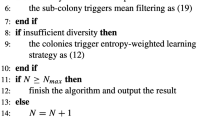

The DAACO algorithm framework is shown in Algorithm 3.

The DAACO algorithm is described as follows:

The algorithm first initializes the parameters \(\alpha ,\beta ,\rho ,M\) (line 1). At the initial stage of optimization, strategy 1 is used to improve the number and distribution of ants (line 2), and the pheromone values are initialized (line 3). Next, the neighbor information and the neighbor pair information around each node are initialized, and the ants are randomly placed on the basis of clustering (lines 5–7). In the optimization process, when \(q_{0}\) is greater than the random threshold, strategy 2 is used to select the next node; otherwise, the next node is selected probabilistically, so that the ants can quickly move in a better direction during the entire optimization process. The local pheromone is then updated (lines 9–16). To complete an iteration, the 3-opt method is used to optimize the solution (line 19), and the global pheromone is updated (line 20).
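The node selection step of the optimization process can be illustrated with the following Python sketch. It is a hedged example rather than the authors' code: `choose_next`, `pair_rule`, and `eta` are hypothetical names, `pair_rule` stands in for strategy 2 and is assumed to return the next node (or `None` when no neighbor pair is available), and the probabilistic branch assumes the usual ACO weighting \(\tau^{\alpha}\eta^{\beta}\).

```python
import random


def choose_next(p, unvisited, tau, eta, alpha, beta, q0, pair_rule, rng=random):
    """Pick the next node for an ant located at node p.

    A random threshold q is drawn; when q0 is greater than q, the
    neighbor-pair rule (strategy 2) decides. Otherwise, or when the rule
    returns None, the node is sampled with probability proportional to
    tau[p][j]**alpha * eta[p][j]**beta over the unvisited nodes.
    """
    if rng.random() < q0:
        choice = pair_rule(p, unvisited)
        if choice is not None:
            return choice
    nodes = sorted(unvisited)
    weights = [(tau[p][j] ** alpha) * (eta[p][j] ** beta) for j in nodes]
    return rng.choices(nodes, weights=weights, k=1)[0]


# Toy usage with made-up values: with q0 = 1 the pair rule always decides.
tau = [[0.0, 1.0, 0.5], [1.0, 0.0, 0.5], [0.5, 0.5, 0.0]]
eta = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
nxt = choose_next(0, {1, 2}, tau, eta, alpha=1.0, beta=2.0, q0=1.0,
                  pair_rule=lambda p, unvisited: 2)
```

A larger \(q_{0}\) makes the search more greedy, whereas a smaller \(q_{0}\) leaves more room for probabilistic exploration.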

Relative to ACO-3-opt, the DAACO algorithm has the following advantages:

1. On the basis of the convex hull and clustering algorithms, DAACO dynamically calculates the number of ants for each graph so that the ants are distributed over the graph as evenly as possible, which increases the diversity of the ant searches;

2. DAACO proposes the concept of the neighbor pair and mixes the selection strategies of searching neighbors and searching neighbor pairs to provide nodes with more heuristic information and increase the quality of the ants’ optimization.

Experimental results

This section presents the comparative experimental results and analysis of the proposed DAACO and some other algorithms on the basis of the TSP instance set.

The algorithms ACO-3-opt and DAACO are both written in C++. The operating environment is 64-bit Windows 10 with a 1.10 GHz CPU and 16 GB of RAM. The main parameter settings in these algorithms are shown in Table 1. Each instance runs 30 iterations, and each iteration is run 300 times.

The experiment uses instances from the TSP benchmark dataset TSPLIB. According to instance size, we adopt the following definitions: small-scale instances have a size of 1–500, medium-scale instances have a size of 501–1000, and large-scale instances have a size of 1001 or more. To facilitate the comparison with the latest research results, this study directly uses the data given in the literature.
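The size classes defined above can be written as a small helper. The function name `instance_scale` is hypothetical, and the instance size is assumed to be the number of cities, which TSPLIB instance names encode as their numeric suffix.

```python
def instance_scale(n_cities: int) -> str:
    """Map an instance size to the scale classes defined above."""
    if n_cities < 1:
        raise ValueError("an instance needs at least one city")
    if n_cities <= 500:
        return "small"
    if n_cities <= 1000:
        return "medium"
    return "large"


# Sizes taken from the numeric suffix of each TSPLIB instance name.
scales = {name: instance_scale(n)
          for name, n in [("lin318", 318), ("u574", 574),
                          ("d657", 657), ("d1655", 1655)]}
```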

Table 1 describes some of the parameters used in this study.

Strategy comparison

In the first stage, we present the comparison results of ACO-3-opt, the ACO-3-opt algorithm with strategy 1, the ACO-3-opt algorithm with strategy 2, and the proposed algorithm (DAACO). In small-scale instances, the results of these four algorithms are not much different. Thus, we select nine large-scale TSP instances to test the four algorithms. The data used in this experiment come from the TSPLIB library. For each instance, statistical average results are obtained in 30 simulation runs.

Table 2 shows the experimental results of the four algorithms. The error rate in Table 2 measures the gap between the best experimental result of the tested algorithm and the currently known optimal solution. The error rate is obtained according to Eq. 9:

The error rate is expressed relative to the optimal solution. BestSolution is the best solution obtained through the experiments, and OptimalSolution is the currently known optimal solution. Table 2 shows that for the nine TSP instances, the proposed DAACO achieves near-optimal solutions.
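As a hedged illustration of the error rate just described, the following Python sketch assumes the common relative-gap form, that is, the difference between BestSolution and OptimalSolution divided by OptimalSolution and expressed as a percentage. The function name and the tour lengths in the usage lines are hypothetical and are not taken from Table 2.

```python
def error_rate(best_solution: float, optimal_solution: float) -> float:
    """Relative gap of the best tour found to the known optimum, in percent.

    Assumed form: (BestSolution - OptimalSolution) / OptimalSolution * 100.
    """
    if optimal_solution <= 0:
        raise ValueError("optimal_solution must be positive")
    return (best_solution - optimal_solution) / optimal_solution * 100.0


# Hypothetical tour lengths, for illustration only.
gap_when_optimal = error_rate(1000.0, 1000.0)   # best equals the optimum
gap_when_longer = error_rate(1010.0, 1000.0)    # best is 1% longer
```

Under this definition, an error rate of zero means that the known optimal tour length was reached.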

Strategy 1 and Strategy 2 can obtain better solutions than the original ACO-3-opt algorithm. Moreover, DAACO can find the best results among all the algorithms. Relative to ACO-3-opt, DAACO shows a significantly improved optimization performance in the aforementioned instances. On the one hand, DAACO can optimize the initial distribution of ants to cover the entire graph as much as possible. On the other hand, the optimization strategy is modified and optimized during the DAACO algorithm’s search process.

Performance comparison

To fully illustrate the performance of the DAACO algorithm, we present some large-scale instances in Table 3 (i.e., Pr1002, U1060, Vm1084, Rl1304, Rl1323, Fl1577). Because the referenced comparative experiments do not report data for these instances, this study provides only the DAACO results for them.

The second phase of this research focuses on comparing the proposed algorithm with the two most recent algorithms, namely DFACO and DEACO (Table 3). We perform the comparison in terms of AverageSolution, execution time, and BestSolution in each run. Rows 1–14 of Table 3 are small-scale instances, rows 15–20 are medium-scale instances, and rows 21–26 are large-scale instances.

Table 3 shows that in many instances, the result of DAACO is better than those of the other two algorithms, as shown in boldface. DAACO obtained the optimal solution for 25 of the 26 instances, and its experimental performance was much better than that of DFACO and DEACO. Moreover, in many instances, the execution time of the proposed method is significantly less than that of the other two algorithms. Except for rat575, the results obtained by DAACO are satisfactory in terms of AverageSolution, BestSolution, and execution time. We find that DAACO performs better than DEACO and DFACO in medium-scale instances in terms of execution time and shortest path discovery. The reason behind this difference is the improvement of the number and placement of ants and the modification of the local selection strategy based on the classic ACO algorithm.

The third stage of this research compares the proposed algorithm (DAACO) with other recent algorithms, namely DCS, DEACO, and MGACACO. Table 4 indicates that the proposed algorithm fails to reach the OptimalSolution for some instances, such as lin318 and rat575; therefore, other aspects such as StandardDeviation (SD) and AverageSolution (Avg) are also considered in this comparison stage. In this experimental phase, 24 TSP instances are tested, with 30 independent simulation runs each. The results in Table 4 show that in terms of SD and Avg, DAACO’s results are the best, and it obtains the optimal solution for 20 instances.
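The per-instance statistics used in this comparison can be computed from the tour lengths of the independent runs, as in the following Python sketch. The function name `summarize_runs` and the sample values are hypothetical, and the use of the sample standard deviation (rather than the population form) is an assumption, since the text does not state which one is reported.

```python
from statistics import mean, stdev


def summarize_runs(tour_lengths):
    """Return the best, average (Avg), and standard deviation (SD) of the
    tour lengths obtained over independent runs of one instance."""
    if len(tour_lengths) < 2:
        raise ValueError("at least two runs are needed for a standard deviation")
    return {
        "best": min(tour_lengths),
        "avg": mean(tour_lengths),
        "sd": stdev(tour_lengths),  # sample SD (n - 1 in the denominator)
    }


# Hypothetical tour lengths from three runs, for illustration only.
summary = summarize_runs([10.0, 12.0, 14.0])
```

A small SD indicates that the runs converge to similar tour lengths, which is the sense in which stability is compared here.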

To facilitate the comparison of the proposed algorithm with the DFACO and DEACO algorithms, Figs. 4 and 5 use the data of 20 instances given in the literature.

Figure 4 shows the average execution time of the above algorithms over the 20 TSP instances in the literature. DAACO takes only 8.17% of the time of DFACO and 8.44% of the time of DEACO while achieving better performance. The comparison of the average values of BestSolutions is shown in Fig. 5. Both figures show that the proposed method outperforms the other two algorithms in terms of execution time and BestSolutions.

Figure 6 illustrates the average solution of four TSP instances, namely lin318 (Fig. 6a), u574 (Fig. 6b), d657 (Fig. 6c), and d1655 (Fig. 6d), obtained by comparing DAACO with the algorithms DCS, MGACACO, DEACO, and DFACO. According to the size definitions given earlier, lin318 is a small-scale instance, u574 and d657 are medium-scale instances, and d1655 is a large-scale instance. From the results of these four typical experiments and Table 4, we find that the average value obtained by the DAACO algorithm is better than those of the other algorithms. Together with the other experimental results, this indicates that the DAACO algorithm has a relatively wide range of adaptability.

Discussion

TSP is a classical combinatorial optimization problem. The effective solution of TSP is of great significance to the solution of other NP problems.

This research mainly studies the use of ACO to solve the TSP. To address the fixed parameters of ACO, this research proposes an improved initialization method that adaptively determines the number of ants. To address the tendency of ACO to fall into local optima, this research proposes an improved optimization strategy that greatly improves the ants’ optimization process. The experimental results show that DAACO has better solution performance than DFACO and DEACO. Specifically, DAACO takes less time, obtains better solutions, and has better stability.

Owing to the algorithm design and the dataset, our current research mainly focuses on solving the symmetric TSP and lacks research on the asymmetric TSP. Solving the asymmetric TSP is one of our future research directions.

Conclusion

The ACO algorithm is an optimization algorithm inspired by the behavior of ants. The pheromone model, which can generate probabilistic solutions, is one of the main mechanisms in the ACO metaheuristic. On the basis of the ant colony algorithm, this research proposes two improved strategies that together form the DAACO algorithm. The first is an initialization strategy based on the convex hull and K-means clustering, which enables the number of ants to adapt to instances of different scales and increases the diversity of initial solutions. The second is a local search strategy based on the neighbor pair, which optimizes the ants’ search mode, increases the heuristic information of the ants’ local search, and can effectively prevent the search process from falling into local optima. The DAACO algorithm has been tested on the TSPLIB dataset. Compared with heuristic algorithms proposed in recent years, DAACO shows good performance in terms of solution quality and efficiency.

Data availability

Some or all data, models, or code generated or used during the study are available from the corresponding author by request.

References

Chen S-M, Chien C-Y (2010) A new method for solving the traveling salesman problem based on the genetic simulated annealing ant colony system with particle swarm optimization techniques. In: Proceedings of the ninth international conference on machine learning and cybernetics, Qingdao, 11–14 July 2010, vol 5. IEEE, pp 2477–2482. https://doi.org/10.1109/ICMLC.2010.5580809

Ouaarab A, Ahiod B, Yang X-S (2014) Discrete cuckoo search algorithm for the travelling salesman problem. Neural Comput Appl 24(7):1659–1669. https://doi.org/10.1007/s00521-013-1402-2

Yang X-S (2010) A new metaheuristic bat-inspired algorithm. In: Nature inspired cooperative strategies for optimization (NICSO 2010). Springer, Berlin, pp 65–74. https://doi.org/10.1007/978-3-642-12538-6_6

Osaba E, Yang X-S, Diaz F, Lopez-Garcia P, Carballedo R (2016) An improved discrete bat algorithm for symmetric and asymmetric traveling salesman problems. Eng Appl Artif Intell 48:59–71. https://doi.org/10.1016/j.engappai.2015.10.006

Akhand M, Ayon SI, Shahriyar S, Siddique N, Adeli H (2020) Discrete spider monkey optimization for travelling salesman problem. Appl Soft Comput 86:105887. https://doi.org/10.1016/j.asoc.2019.105887

Khan I, Maiti MK (2019) A swap sequence based artificial bee colony algorithm for traveling salesman problem. Swarm Evol Comput 44:428–438. https://doi.org/10.1016/j.swevo.2018.05.006

Choong SS, Wong L-P, Lim CP (2019) An artificial bee colony algorithm with a modified choice function for the traveling salesman problem. Swarm Evol Comput 44:622–635. https://doi.org/10.1016/j.swevo.2018.08.004

Cinar AC, Korkmaz S, Kiran MS (2020) A discrete tree-seed algorithm for solving symmetric traveling salesman problem. Eng Sci Technol Int J 23(4):879–890. https://doi.org/10.1016/j.jestch.2019.11.005

Yousefikhoshbakht M (2021) Solving the traveling salesman problem: a modified metaheuristic algorithm. Complexity 2021:Article ID 6668345. https://doi.org/10.1155/2021/6668345

Dorigo M, Gambardella LM (1997) Ant colonies for the travelling salesman problem. Biosystems 43(2):73–81. https://doi.org/10.1016/S0303-2647(97)01708-5

Dorigo M, Gambardella LM (1997) Ant colony system: a cooperative learning approach to the traveling salesman problem. IEEE Trans Evol Comput 1(1):53–66. https://doi.org/10.1109/4235.585892

Elloumi W, El Abed H, Abraham A, Alimi AM (2014) A comparative study of the improvement of performance using a PSO modified by ACO applied to TSP. Appl Soft Comput 25:234–241. https://doi.org/10.1016/j.asoc.2014.09.031

Escario JB, Jimenez JF, Giron-Sierra JM (2015) Ant colony extended: experiments on the travelling salesman problem. Expert Syst Appl 42(1):390–410. https://doi.org/10.1016/j.eswa.2014.07.054

Liu Y, Wang X (2015) Study on an improved ACO algorithm based on multi-strategy in solving function problem. Int J Database Theory Appl 8(6):223–232. https://doi.org/10.14257/ijdta.2015.8.5.19

Duan P, Yong A (2016) Research on an improved ant colony optimization algorithm and its application. Int J Hybrid Inf Technol 9(4):223–234. https://doi.org/10.14257/ijhit.2016.9.4.20

Lei W, Wang F (2016) Research on an improved ant colony optimization algorithm for solving traveling salesmen problem. Int J Database Theory Appl 9(9):25–36. https://doi.org/10.14257/ijdta.2016.9.9.03

Li M, Wan Z (2016) Research on an improved ACO algorithm based on multi-strategy for solving TSP. Int J Hybrid Inf Technol 9(9):323–334. https://doi.org/10.14257/ijhit.2016.9.9.30

Liu Y (2016) Research on the algorithm optimization of improved ant colony algorithm-LSACA. Int J Signal Process Image Process Pattern Recogn 9(3):143–154. https://doi.org/10.14257/ijsip.2016.9.3.13

Mavrovouniotis M, Müller FM, Yang S (2017) Ant colony optimization with local search for dynamic traveling salesman problems. IEEE Trans Cybern 47(7):1743–1756. https://doi.org/10.1109/TCYB.2016.2556742

Deng W, Zhao H, Zou L, Li G, Yang X, Wu D (2017) A novel collaborative optimization algorithm in solving complex optimization problems. Soft Comput 21(15):4387–4398. https://doi.org/10.1007/s00500-016-2071-8

Gülcü Ş, Mahi M, Baykan ÖK, Kodaz H (2018) A parallel cooperative hybrid method based on ant colony optimization and 3-opt algorithm for solving traveling salesman problem. Soft Comput 22(5):1669–1685. https://doi.org/10.1007/s00500-016-2432-3

Dahan F, El Hindi K, Mathkour H, AlSalman H (2019) Dynamic flying ant colony optimization (DFACO) for solving the traveling salesman problem. Sensors 19(8):1837. https://doi.org/10.3390/s19081837

Ebadinezhad S (2020) DEACO: adopting dynamic evaporation strategy to enhance ACO algorithm for the traveling salesman problem. Eng Appl Artif Intell 92:103649. https://doi.org/10.1016/j.engappai.2020.103649

Tuani AF, Keedwell E, Collett M (2020) Heterogenous adaptive ant colony optimization with 3-opt local search for the travelling salesman problem. Appl Soft Comput 97:106720. https://doi.org/10.1016/j.asoc.2020.106720

Graham RL (1972) An efficient algorithm for determining the convex hull of a finite planar set. Inf Process Lett 1:132–133. https://doi.org/10.1016/0020-0190(72)90045-2

An PT, Huyen PTT, Le NT (2021) A modified Graham’s convex hull algorithm for finding the connected orthogonal convex hull of a finite planar point set. Appl Math Comput 397:125889. https://doi.org/10.1016/j.amc.2020.125889

Hartigan JA, Wong MA (1979) Algorithm AS 136: a k-means clustering algorithm. J R Stat Soc Ser C (Appl Stat) 28(1):100–108. https://doi.org/10.2307/2346830

Reinelt G (1991) TSPLIB—a traveling salesman problem library. ORSA J Comput 3(4):376–384. https://doi.org/10.1287/ijoc.3.4.376


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, H., Lee, A., Lee, W. et al. DAACO: adaptive dynamic quantity of ant ACO algorithm to solve the traveling salesman problem. Complex Intell. Syst. 9, 4317–4330 (2023). https://doi.org/10.1007/s40747-022-00949-6


DOI: https://doi.org/10.1007/s40747-022-00949-6