Abstract

Opposition-based differential evolution (ODE) is a well-known DE variant that employs opposition-based learning (OBL) to accelerate the convergence speed. However, the existing OBL variants are population-based, which causes many shortcomings. The value of the jumping rate is not self-adaptively adjusted, so the algorithm easily traps into local optima. The population-based OBL wastes fitness evaluations when the algorithm converges to sub-optimal. In this paper, we proposed a novel OBL called subpopulation-based OBL (SPOBL) with a self-adaptive parameter control strategy. In SPOBL, the jumping rate acts on the individual, and the subpopulation is selected according to the individual’s jumping rate. In the self-adaptive parameter control strategy, the surviving individual’s jumping rate in each iteration will participate in the self-adaptive process. A generalized Lehmer mean is introduced to achieve an equilibrium between exploration and exploitation. We used DE and advanced DE variants combined with SPOBL to verify performance. The results of performance are evaluated on the CEC 2017 and CEC 2020 test suites. The SPOBL shows better performance compared to other OBL variants in terms of benchmark functions as well as real-world constrained optimization problems.

Similar content being viewed by others

Introduction

Many challenging problems arise in real-world applications [1,2,3,4,5]. Single-objective optimization algorithms play an essential role in many fields. Although some real-world problems can be solved, they are limited by the lack of computational power, and it is crucial to design an efficient algorithm. Bio-inspired computation can easily solve these problems. It is mainly divided into two categories: Evolutionary Computation (EC) and Swarm Intelligence (SI) [6]. Differential Evolution (DE) is the most outstanding evolutionary algorithm.

DE is a simple yet powerful population-based evolutionary algorithm for real-parameter optimization problems in the continuous domain, proposed by Storn and Price [7]. It has the advantages of simplicity, effectiveness, stability, etc. [8], and is widely used in real-world optimization problems [9], engineering applications [10], pattern recognition [11], feature selection [12], machine learning and other fields [13]. DE and its variants are used to solve complex problems. The common issues of DE are premature convergence and stagnation. These issues are closely related to population diversity. If the population diversity is very small, this means that the DE converges very fast. Otherwise, if the population diversity is consistently big, DE will not converge to the global optimal. Hence, it is essential to find a trade-off between exploration and exploitation [14].

Since DE was proposed, many techniques have been combined with DE to improve its performance [9, 10]. In the past decade, among different kinds of DE variants, opposition-based DE (ODE) was one of the best [15]. ODE employed OBL on population initialization and generation jumping [16]. DE employed OBL to accelerate the convergence speed by comparing the fitness of a candidate to its opposite and choosing the fitter one. The mathematical proof of OBL was given in [17,18,19]. Different opposite solutions are always closer to the global optimal with higher probability than random solutions. The issue of OBL variants has received considerable critical attention. Since then, many researchers have designed OBL variants to enhance the performance of soft computing algorithms [20,21,22]. To save the number of fitness evaluations, Rahnamayan et al. proposed OBL with protective generation jumping (OBLPGJ) to stop the opposition if the opposite solution’s success rate drops to a fixed threshold [23]. Rahnamayan et al. proposed quasi-opposition DE (QODE) [24]. In QODE, the opposite point was mapped to an interval. QODE reduced the expected distance between the optimum and its opposite. Ergezer et al. proposed quasi-reflection ODE (QRODE), which was the most cost effective opposition method with the highest probability of being closer to the optimal [18]. Wang et al. proposed generalized opposition-based DE (GODE) and successfully applied it to large-scale optimization problems [25]. Xu et al. proposed current-optimum-ODE (COODE) based on the optimal of the current population [26]. Seif et al. proposed the opposition-based metaheuristic optimization algorithm (OBA), which introduced extended opposition and reflected extended opposition [27]. Most studies in OBL have only focused on the upper and lower bounds of the current search space. In [28], centroid opposition-based DE (CODE) was proposed, which computed opposition by involving the entire population. In [29], Gaussian distribution-based opposition was presented to solve the inverse problem of structural damage assessment. This opposition aimed to enhance the exploration ability during both the initialization and iteration process. Xu et al. proposed opposition-mutual learning using mutual learning to guarantee the trade-off between exploration and exploitation [30].

In the opposition, some opposite solutions are randomly mapped to the current search space [18, 24, 27], some are calculated according to the upper and lower bounds of the current search space [15, 25], some are calculated according to the optimal of the current population [26], and some are calculated based on entire population [28]. The above OBL variants are all population-based, which calculate opposite solutions by entire population information if the condition of jumping rate is satisfied. No previous study has investigated subpopulation-based opposition. The following drawbacks affect OBL variants: (1) As the OBL variant follows a population-based strategy, fitness evaluations can be wasted if opposite individuals no longer enter the next generation during the optimization process [31]. (2) Up to now, far too little attention has been paid to parameter control strategy in OBL community.

This paper proposes a subpopulation-based OBL (SPOBL) with a self-adaptive parameter control strategy that mitigates all the limitations. Instead of using a population-based opposition, we employed a subpopulation-based opposition to reduce the waste of fitness evaluations. We found that the self-adaptive strategy is introduced by assigning jumping rate to each individual, it is possible to select some individuals as a subpopulation to perform the opposition. In the self-adaptive strategy, the subpopulation size is controlled to balance between exploration and exploitation during the evolutionary process. A generalized Lehmer mean is proposed, and the subpopulation size is dynamically controlled by a new parameter p. We carried out experiments on the CEC 2017 and CEC 2020 test suites [32, 33]. The results of 29 benchmark functions and 3 real-world problems confirm the excellent performance of SPOBL compared to eight state-of-the-art OBL variants. The main contributions of this paper are as follows:

-

1.

A new OBL variant called SPOBL is proposed, which is competitive with eight state-of-the-art OBL variants.

-

2.

The novel SPOBL can be combined with any DE variant and shows superior performance.

-

3.

SPOBL not only performs well on benchmark functions, but also outperforms other algorithms in dealing with real-world optimization problems.

The rest of this paper is organized as follows. The next section reviews the canonical DE algorithm and OBL variants. The subsequent section introduces SPOBL and integrates it with DE. The experimental studies are shown next. Finally, the conclusions are given.

Preliminaries

Canonical DE algorithm

Population initialization

The search space optimized by a real function is \({[a, b]}^D\), where D is the dimension of the optimized function. a is the lower bound of search space. b is the upper bound of the search space. The population of DE is initialized as

where \(\mathrm{rand}(0,1)\) is a random number whose value is uniformly distributed from (0,1). The size of \(X^0\) is \(D\times NP\). NP is the population size. The population is expressed as \(X^G=\{\mathbf{x }_i^G|i=1,2,...,NP\}\), \(\mathbf{x }_i^G=\{x_{i,j}^G|j=1,2,... ,D\), \(x_{i,j}^G\in [a,b]\}\), G represents the number of iterations.

Mutation

After the population \(X^G=\{\mathbf{x }_i^G|i=1,2,...,NP\}\) is initialized. Mutation operator is performed on the target vector \(\mathbf{x }_i^G=\{x_{i,j}^G|j= 1,2,...,D\}\). DE/rand/1 mutation strategy is as follows:

DE/rand/1

where the indexes \(r_1\ne r_2\ne r_3 \ne i\) are numbers randomly selected from [1, NP]. F is a real positive control parameter scaling difference vector. The value of F is bigger, the diversity of the population is higher. The convergence speed is faster due to the value of F being smaller.

Crossover

To maintain the population diversity after mutation, the donor vector \(\mathbf{v }_i\) and the target vector \(\mathbf{x }_i\) are crossed under the probability of CR. The specific operations are as follows:

where \(CR\in [0,1]\) is the crossover rate, \(j_{\mathrm{rand}}\) is a random integer from [1, D]. The donor vector \(\mathbf{v }_i\) differs from its target vector \(\mathbf{x }_i\) due to the use of \(j_{\mathrm{rand}}\). CR controls the number of variables from \(\mathbf{v }_i\) to \(\mathbf{u }_i\), so the larger value of CR maintains the population diversity.

Selection

Selection is an approach after mutation and crossover. The trail vector \(\mathbf{u }_i\) (offspring) should make a one-to-one selection with their corresponding target vector \(\mathbf{x }_i\) (parent). The specific selection formula is as follows:

where fitness(.) denotes the fitness function to be minimized. Therefore, if the new trial vector produces a value equal to or less than the objective function, it will replace the current generation’s corresponding target vector.

Opposition-based DE

OBL was presented as a machine learning scheme for reinforcement learning [16]. In the past 15 years, various metaheuristic algorithms have been enhanced by OBL and its variants. The primary purpose behind OBL is to consider the fitness values between candidate and opposite candidate simultaneously to obtain a better approximation of the current best candidate [15, 19, 20].

Let \(P=(x_1,x_2,...,x_{D})\) be a point in D-dimensional space, where \(x_{j}\in [a_{j},b_{j}]\), \(j=1,2,...,D\). The opposite point \(\breve{P}=(\breve{x}_{1},\breve{x}_{2 },...,\breve{x}_{D})\) is defined by its segments.

Opposition-based population initialization

Due to a lack of prior knowledge, we can only generate the opposite population based on the initial population. The specific steps are as follows:

-

Use (1) to generate an initial population X.

-

Use (5) to generate the opposite population \(O_{i,j}=a_{j}+b_{j}-X_{i,j}, i=1,2,...,NP, j=1,2,...,D\), where \(O_{i,j}\) and \(X_{i,j}\) respectively represent the j th dimensional component of the i th vector of the opposite population and the original population.

-

Select the first NP individuals with the best fitness value from the \(\{X\cup O\}\) to form the initial population.

Opposition-based generation jumping

In the same way, OBL has been introduced into the evolutionary process. The population can quickly converge to the local optimum during the evolutionary process. The value of the jumping rate J is used to control the number of times the OBL is executed during the evolutionary process. After a population has completed mutation, crossover and selection, it is determined whether the population performs opposition based on the jumping rate. Unlike the population initialization, in generation jumping, the bound of the opposite individual changes with the iteration progress, and the opposite individual is calculated based on dynamic bound.

where \(L_{j}\) and \(U_{j}\) are the lower bound and upper bound of jth variable in the current population.

OBL variants

In [24], QOBL was proposed, quasi opposite point was introduced as a uniformly random point between the center point and the opposite point. The quasi-opposition \(\breve{x}_{j}{}^{qo}\) is defined as follows:

where \(\mathrm{rand}(x,y)\) is a uniformly number between x and y. \(M_j\) is mid-point of j-dimensional components, \(\breve{x}_j\) represents opposite point of j-dimensional components.

After quasi opposition proposed, Ergezer et al. [18] introduced QROBL. The quasi-reflection opposition \(\breve{x}_{j}{}^{qr}\) is defined as follows:

Similar to quasi-opposition and quasi-reflection opposition, Seif et al. designed two OBL variants [27]: extended opposition \(\breve{x}_{j}{}^{eo}\) and reflected extended opposition \(\breve{x}_{j}{}^{reo}\) are defined by

In [26], Xu et al. proposed the current optimum opposition (COOBL), which used the information of the current best individual.

where \({x}_{\mathrm{best},j}\) is the best solution of current population.

Generalized OBL was proposed by Wang et al. [25]. The GOBL is defined by:

where k is a random number from [0,1].

In [28], Rahnamayan et al. proposed centroid opposition (COBL), which employed the information of the entire population (centroid point). The COBL is defined by:

Proposed algorithm

First, in the evolutionary process, the generation of the opposite population depends on the jumping rate. All individuals generate corresponding opposite individuals if the random number is smaller than the jumping rate, which is called the population-based opposite strategy. However, population-based opposition reduces the utilization of the opposite population when the global optimal is approached. Therefore, the subpopulation-based opposite strategy is proposed in this paper. Moreover, there is no fixed parameter setting suitable for all problems or at different stages of evolution of the same problem. The self-adaptive parameter control strategy can solve this problem. For these reasons, the subpopulation-based OBL (SPOBL) with a self-adaptive parameter strategy is proposed. SPODE is the proposed algorithm, which is the combination of DE and SPOBL.

Motivations

There are two primary motivations in this paper: (1) previous OBL variants are population-based, and there is no research on subpopulation-based. (2) The research on parameter control of OBL is not deep enough, so parameter control of OBL is a crucial problem in the OBL community.

First, OBL and its variants aim to increase population diversity and thus accelerate the convergence of the algorithm. However, there has been a problem that the individual generated by the opposition cannot enter the next generation during the evolutionary process, so the opposition wastes fitness evaluations. Such individual is called insensitive individual, and this individual is commonly found in existing population-based OBL variants. To eliminate the influence of the insensitive individual on the algorithm, this paper proposes a subpopulation-based opposition framework from the individual’s perspective.

Figure 1 shows the definition of the insensitive individual, where x represents the original individual, and ox represents the opposite individual of x. f(x) and f(ox) represent the corresponding fitness values, respectively. It can be seen that when \(f(x)<f(ox)\), then x is the insensitive individual (only consider the problem of minimization). To eliminate the influence of insensitive individuals on the performance of the algorithm, a subpopulation-based opposition is proposed.

The subpopulation-based opposition selects the individuals to perform the opposition by their jumping rates. Hence, insensitive individuals still participate in opposition during the iteration, and the subpopulation-based opposition is also influenced by the individuals. Although population-based and subpopulation-based oppositions are persistently affected by insensitive individuals, the latter is less impacted than the former. It also inspired us to design another strategy to further eliminate this effect.

Besides, OBL is a mechanism that allows the algorithm to explore more search regions, which will increase the exploration capability of the algorithm. To balance between exploration and exploitation, we proposed a self-adaptive parameter control strategy. This strategy is used to select individuals suitable for the opposition and enhances the exploitation ability by controlling the subpopulation size. At the beginning stage of evolution, the subpopulation size is large because exploration is more important than exploitation. However, overemphasizing exploration and ignoring exploitation will make the population converge too slowly or even not converge. As the iteration progresses, the subpopulation size should become smaller to eliminate the influence of insensitive individuals and finally balance between exploration and exploitation.

Subpopulation opposition-based learning

Subpopulation strategy

In [34], the population was divided into multiple subpopulations according to fitness values. Then different mutation strategies were assigned to each subpopulation. The subpopulation in this paper is randomly selected according to probability. The opposite subpopulation and the original population are combined. Then select the first NP fittest individuals to enter the next generation. In the improved algorithm, the jumping rate \({\varvec{J}}\) is a vector and acts on individuals, while the jumping rate J of ODE is a number and acts on the population. The previous OBL variants were all population based, and the OBL variant in this paper is subpopulation based. The specific operation steps are as follows:

-

After the population \(X=\{\mathbf{x }_i|i=1,2,...,NP\}\) is initialized, the jumping rate vector \({\varvec{J}}=\{j_1, j_2,...,j_{NP}\}\) is generated using a Gaussian distribution with mean \(\mu _{J}\), where \(\mu _{J}\) represents the mean value of the \({\varvec{J}}\), and its initial value is set to 0.3 [15].

-

After the population undergoes mutation, crossover and selection, the subpopulation \(SX=\{\mathbf{x }_j|j=1,2,...,m<NP\}\) with size m is generated according to \({\varvec{J}}=\{j_{i}|i=1,2,...,NP\}\). The corresponding individual becomes a member of the subpopulation, if a random number is less than \(j_i\). The subpopulation size is related to \(\mu _{J}\). The relationship between the subpopulation size and \(\mu _{J}\) is \(mean(m)\approx \mu _{J}\cdot NP\), \(mean(\circ )\) is the arithmetic mean of \(\circ \).

-

Equation (13) performs on SX to generate the opposite subpopulation \(OSX=\{{\varvec{x}}_j|j=1,2,...,m\}\).

-

Select the first NP fittest individuals from the set \(\{X\cup OSX\}\) to enter the next generation and record the surviving opposite individuals’ jumping rates as \(S_{J}\).

The individuals of the subpopulation are randomly selected according to their jumping rates. Random selection can increase the population diversity and maintain search efficiency. In each iteration, every individual is matched with a jumping rate. When the individual ’s jumping rate is higher than a random number, this individual as the subpopulation member and participates in the opposition. \(\mu _{J}\) is the mean value of the entire population’s jumping rate. With the increase of \(\mu _{J}\), the subpopulation size also increases. It can be seen from the above that \(\mu _{J}\) is an important parameter that affects the subpopulation size. Thus, the generalized Lehmer mean controls the value of \(\mu _{J}\) in the self-adaptive strategy. In summary, the generalized Lehmer mean can control the subpopulation size during the evolutionary process. More details about self-adaptive parameter control strategy and generalized Lehmer mean are observed in the next subsection.

Self-adaptive parameter control strategy

As the iteration progresses, the individual jumping rate \(j_{i}\) is generated by Gaussian random number with the mean value of \(\mu _{J}\) and variance of 0.1 [35]:

\(S_{J}\) denotes the set of jumping rates that successfully enters the next generation of individuals from OSX. The initial value of \(\mu _{J}\) is 0.3 and is updated with the following formula as the iteration progresses [15, 22].

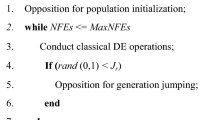

where \(\text {Lehmer}(S_{J},p)\) represents the generalized Lehmer mean of \(S_{J}\) with p as a parameter, the pseudo-code of SPOBL is provided in Algorithm 1. The corresponding explanation of the pseudo-code is shown in Fig. 2.

The core idea of Eq. (15) is: Individuals with good parameters are often easier to survive. It uses \(S_{J}\) to record the jumping rates that successfully enter the next generation of individuals. Then, \(S_{J}\) is employed to generate parameters more suitable for the evolutionary process. In [35], \(c=0.05\) usually performs best.

Generalized Lehmer mean

We can conclude from the previous analysis that the subpopulation size is related to \(\mu _{J}\), which determines the subpopulation diversity. The diversity will decrease [36] if the convergence speed is accelerated. To better balance between population diversity and convergence speed, the arithmetic mean and Lehmer mean were used to generate the crossover rate and scaling factor [35]. Compared to arithmetic mean, Lehmer mean provides a larger mean from the same group of data. To further extend the range of values of \(\mu _{J}\), we introduced the generalized Lehmer mean with the parameter p for controlling the value of \(\mu _{J}\) during the evolutionary process. Thus, the subpopulation size is dynamically controlled according to parameter p of the generalized Lehmer mean during the evolutionary process. The calculation formula of the generalized Lehmer mean is shown below:

Let \(n=2\), \(x_{1}\) and \(x_2\) are two positive numbers. In this case, partial derivative of generalized Lehmer mean of two positive numbers with respect to p is:

where Eq. (16) is a monotonically increasing function of p. With the increase of p, the value of generalized Lehmer mean obtained from the same set of data gradually increases. According to the previous analysis, the subpopulation size is controlled by controlling the value of p.

Let p is a real number, the value of L is an interesting problem when p tends to infinity. So Eq. (16) can also be expressed in the following form:

According to Eq. (18), the following conclusions can be drawn:

The value of \(\mu _J\) is a crucial problem in the evolutionary process, which affects the algorithm’s performance. In [15, 22], the recommended value of jumping rate was [0.1,0.4]. On the one hand, if the value of p is too large, it will result in the individual jumping rate with a value of 0.6, thus the fitness evaluations will be wasted. On the other hand, if the value of p is too small, that will cause the value of the individual jumping rate to be 0.1, and the effect of opposition is not significant. Thus, we designed a numerical test and considered four schemes for adapting \(\mu _J\) to gain the best performance. These four schemes are shown below.

-

1.

Positive: The value of p is 2, this scheme results in \(\mu _J\) rapidly grow.

-

2.

Neutral: The value of p is 1, this scheme causes \( \mu _J \) to remain almost unchanged.

-

3.

Negative: The value of p is 0.5, this scheme results in \(\mu _J\) rapidly decrease.

-

4.

Time varying: The \(\mu _{J}\) is linear decreases from 0.6 to 0.1.

The specific results are shown in Fig. 3. The horizontal axis indicates the number of iterations and the vertical axis represents the value of \(\mu _J\). We can see that when p equals 1, the value of \(\mu _J\) remains around 0.3 and the trend of \(\mu _J\) is parallel to the horizontal axis. However, when p is 0.5, the value of \(\mu _J\) rapidly decreases in the first 200 iterations, and after 200 iterations it also plateaus and the final value of \(\mu _J\) remains at 0.1. When the value of p is 1.5 and 2, the value of \(\mu _J\) also rapidly increases in the first 200 iterations. However, the speed of increment is different, and the larger the value of p, the faster \(\mu _J\) increases. When p equals 2, the final value of \(\mu _J\) falls around 0.6. Thus, the range of p is determined to be between 0.5 and 2 according to the results. The effect of the four schemes on the performance of SPODE has been further investigated in Sect. 4.2.4.

The generalized Lehmer mean can also be expressed as several common mean values. Without loss of generality, in this case, the generalized Lehmer mean is

from Eq. (21), it can be seen that generalized Lehmer mean is the harmonic mean, geometric mean, arithmetic mean and contraharmonic mean when \(p=0\), \(p=0.5\), \(p=1\) and \(p=2\), respectively.

Subpopulation opposition DE

The SPOBL is proposed by combining the subpopulation-based strategy and the self-adaptive parameter control mechanism with the generalized Lehmer mean. SPODE is a combination of DE and SPOBL. Unlike other ODE variants, SPODE applies the jumping rate to the individual. SPODE executes SPOBL in every iteration according to a predefined jumping rate. If a random number generated according to the uniform distribution is lower than or equal to the jumping rate, the individual corresponding to this jumping rate will be selected as a subpopulation and performs opposition. The entire pseudo-code of SPODE is presented in Algorithm 2.

Computational complexity of SPODE

Compared with classical DE, ODE variants require additional computations on the opposition. In each generation, all individuals in the subpopulation take into account the opposition. The computational complexity of SPOBL is \(O(D\times \mathrm{NP})\). The complexity of classical DE is \(O(G_\mathrm{max}\times D \times \mathrm{NP})\), and the total computational complexity of DE with SPOBL is \(O(G_\mathrm{max} \times [D \times \mathrm{NP}+ D \times \mathrm{NP}])\) which can be simplified to \(O(G_\mathrm{max}\times D \times \mathrm{NP})\). However, the main computational time of evolutionary algorithms is used to evaluate the fitness function. Moreover, the additional calculation cost due to diversity and opposition is negligible compared with fitness evaluations.

Experimental verification

Experimental setup

All the experiments were conducted on Windows 10 Pro-64 bit of a PC with Inter(R) Core(TM) i7-9700 CPU @ 3.0GHz. All the algorithms were implemented in the MATLAB 9.7 (R2019b) programming language.

Benchmark functions

We employed a set of 29 benchmark functions for explaining the performance of the proposed algorithm. The current well-known single objective optimization test suites contain CEC 2005, 2013, 2014, and 2017. We chose the CEC 2017 as the test suite for this experiment. In the CEC 2017, there are two unimodal functions (F1, F3), seven simple multimodal functions (F4–F10), ten expanded hybrid functions (F11-20), ten composition functions (F21–F30). Please refer to [33] for more details about CEC 2017.

Parameter setting

We compared the performance of SPOBL with eight state-of-the-art OBL variants, namely: (1) OBL [15], (2) QOBL [24], (3) QROBL [18], (4) GOBL [25], (5) COOBL [26], (6) EOBL [27], (7) REOBL [27] and (8) COBL [28]. To have a fair comparison, all algorithms in this section use DE/rand/1/bin. The values of F and CR are 0.5 and 0.9 [9]. The jumping rate of ODE, GODE, COODE and CODE is 0.3 [22, 37]. The jumping rate of QODE, QRODE, EODE and REODE is 0.05 [22, 37]. The initial value of \(\mu _J\) is 0.3. The dimensionality of the problems D is 30 and 50, and the corresponding population size NP is 150 and 250 [9, 10]. The max fitness evaluations is \(10^4\times D\). Every algorithm independently runs 30 times on each benchmark function [33].

Comparison metrics

The fitness error value (FEV) evaluates the performance of the test algorithm, which can be defined as follows.

where F(x) represents fitness function, \({\hat{x}}\) is the best solution of test algorithm, \(x^*\) is the global optimal of F(x).

Statistical test

To compare whether there is a significant difference in performance between the two algorithms, we used the pairwise comparison, which is the Wilcoxon rank-sum test with \(\alpha =0.05\) significance level. The null hypothesis is that the proposed algorithm and the corresponding algorithm are independent. When the null hypothesis is rejected, we used three symbols to denote the relationship between SPODE and the corresponding algorithm.

-

1.

\(+\): The performance of the proposed algorithm is significantly better than that of the corresponding algorithm.

-

2.

\(=\): The performance of the proposed algorithm is not statistically significant than that of the corresponding algorithm.

-

3.

−: The performance of the proposed algorithm is significantly worse than that of the corresponding algorithm.

In pairwise comparison, the family-wise error rate (FWER) loses control, such as the Wilcoxon test, should not be used to conduct various comparisons involving a set of algorithms [38]. Therefore, to determine whether the difference between multiple algorithms. We employ the Friedman test with Tukey–Kramer post hoc [39].

Experiment results

In this subsection, we designed six experiments to verify the performance of the proposed algorithm. In Sect. 4.2.1, we evaluated OBL variants and SPOBL with DE on the CEC 2017 at 30-D and 50-D. In Sect. 4.2.2, we analyzed the run-time complexity of OBL variants embedding on DE. In Sect. 4.2.3, we evaluated SPODE with different strategies to verify different algorithms’ influence. In Sect. 4.2.4, the comparison is performed between different p and time-varying. Finally, to illustrate the effectiveness of SPOBL on advanced DE variants, we calculated the efficiency of SPOBL embedding on LSHADE and jSO in Sects. 4.2.5 and 4.2.6 . The best results are highlighted in boldface.

Comparison for DE with OBL variants

In this part, the performance results on CEC 2017 at 30-D and 50-D are presented. Table 1 provides the results of mean and standard deviation and the Wilcoxon test. From the table, it can be seen that SPODE performs better than most ODE variants. For the unimodal functions (F1, F3), COODE and SPODE find the optimal on F1 and F3, respectively. Both SPODE and CODE converge to the local optimum on F1. This reveals that the exploitation ability of SPODE should be further studied. The performance of COODE on F1 and F3 rank first and second, which indicates that COODE has a high exploitation ability on the unimodal functions. For the multimodal functions (F4–F10), CODE outperforms the other algorithms on F5, F7, F8 and F10. SPODE only achieves the best performance on F6, ODE on F9 and COODE on F4. However, SPODE ranks second on F5, F7 and F8. It indicates that CODE has sufficient exploration ability on multimodal functions. SPODE is inspired by CODE, so SPODE is still very competitive in terms of multimodal functions. For hybrid functions (F11–F20), SPODE converges to global optimal on F11, F13, F14, F15 and F20. QRODE on F12 and F18. CODE on F16, F17 and F19. It demonstrates that SPODE has significant enhancement to performance due to the self-adaptive parameter control mechanism. For composition functions (F21–F30), SPODE has achieved superior results on F22, F23, F24 and F26. QODE has obtained the best performance on the F27, F28 and F30. QRODE on F25. CODE on F21 and F29. The composition functions have many local optima and many complex characteristics. Hence, the results denote that the proposed algorithm has a better balance between exploration and exploitation. The proposed mechanism facilitates better performance.

The Wilcoxon test results show that SPODE significantly finds more accurate solutions than ODE, QODE, QRODE, GODE, COODE, EODE and REODE on more than half of the functions. Compared with CODE, SPODE has significantly improved performance on 12 functions, but decreased performance on 4 functions. In other words, SPODE performs much better than other ODE variants. As shown in Table 2, the Friedman test results with Tukey–Kramer’s post hoc are provided. It can be seen from Table 2 that SPODE ranks first among all algorithms, and the outperformance over GODE and COODE is statistically significant. The top three algorithms are SPODE, ODE, and CODE. All in all, SPODE is better than all comparison algorithms in performance on CEC 2017 at 30-D.

Moreover, we analyzed the results of Table 1 based on the function’s properties. In unimodal functions (F1,F3), the performance gap of each algorithm is not significant. CODE dominates the best performance on hybrid functions. The performance of SPODE is better than other algorithms on hybrid functions (F11–F20) and composition functions (F21–F30). Table 1 shows that the proposed algorithm better balance between exploration and exploitation, and it can find better solutions than other algorithms when dealing with complex problems.

Furthermore, to clearly observe the convergence curves. We divided the comparison algorithms into two groups. The first group is based on the original OBL and contains QOBL, QROBL, EOBL and REOBL. The calculation of these OBL variants requires less information (only information about the current individual and the midpoint of the search space is needed). The second group includes OBL, COBL, COOBL and GOBL. In these OBL variants, more information is needed, such as the current best individual and centroid individual. Therefore, the second group is more complicated than that of the first group. The first and second groups are shown in Figs. 4 and 5, respectively.

In the first group, we can observe that SPODE achieves better solutions on F6, F8, F9, F13, F14, F15 and F19. SPODE also has the fastest convergence speed compared to ODE variants in the first group. The main reason is that the subpopulation strategy can select the proper individuals to participate in the opposition, and the first group ODE variants have not fully utilized the information in the search space, so SPODE demonstrates superior performance when compared with them. In the second group, a different situation is shown in Fig. 5. SPODE has the best solution on F6, F13, F14 and F15, and the final converged optimal of SPODE is not very different from other algorithms on these functions. However, SPODE still provides the fastest convergence speed. It reveals that the performance of the second group of ODE variants is better than that of the first group. Thus, SPODE has offered superior results than that of the eight ODE variants on CEC 2017 at 30-D.

Convergence curves of SPODE, QODE, QRODE, EODE and REODE on eight 30-D functions: Shifted and Rotated Lunacek Bi_Rastrigin Function, Shifted and Rotated Levy Function, Shifted and Rotated Schwefel’s Function, Hybrid Function 4 (N = 4), Hybrid Function 5 (N = 4), Hybrid Function 6 (N = 4), Hybrid Function 6 (N = 6) and Composition Function 10 (N = 3), respectively

Convergence curves of SPODE, ODE, COODE, CODE and GODE on eight 30-D functions: Shifted and Rotated Lunacek Bi_Rastrigin Function, Shifted and Rotated Levy Function, Shifted and Rotated Schwefel’s Function, Hybrid Function 4 (N = 4), Hybrid Function 5 (N = 4), Hybrid Function 6 (N = 4), Hybrid Function 6 (N = 6) and Composition Function 10 (N = 3), respectively

The performance results on CEC 2017 at 50-D are presented to compare the difference between 30-D and 50-D. The trends in Table 3 are similar to those in Table 1. For unimodal functions (F1, F3), SPODE ranks second and first on F1 and F3, respectively. COODE still ranks first on F1, but SPODE ranks second on F1. This means that SPODE remains a great performance with the dimensional increase. For multimodal functions (F4–F10), SPODE obtains the best results on F5 and F6. ODE on F9. COODE on F4. CODE on F7, F8 and F10. SPODE ranks second place on F7 and F8. There is only one function on which SPODE achieves the optimal on 30-D, but there are two functions on 50-D. It means that the exploration ability of SPODE is enhanced. For hybrid functions (F11–F20), it can be noted that SPODE has gotten the best performance on F11, F14, F16 and F19. ODE on F13 and F15. QRODE on F18. COODE on F12. CODE on F17 and F20. In these functions, since hybrid functions have characteristics of multimodal functions as well as unimodal functions, no single algorithm can have the best performance on all hybrid functions, but SPODE shows the performance on the functions compared to other algorithms. For composition functions (F21–F30), SPODE outperforms the other algorithms on F21, F23, F24, F26 and F30. QRODE on F22. REODE on F25, F27 and F28. CODE on F29. The obtained results show that the proposed algorithm is competitive and stable due to the equilibrium state between exploration and exploitation.

The Wilcoxon test results show that SPODE significantly finds more accurate solutions than ODE, QODE, QRODE, GODE, COODE, EODE, REODE, and CODE for more than half of the functions. Compared with CODE, SPODE has significantly improved performance on 16 functions, but decreased performance on 4 functions. Compared with ODE, SPODE has significantly improved performance on 18 functions, but decreased performance on 7 functions. In other words, SPODE performs much better than other ODE variants. As shown in Table 4, the Friedman test results with Tukey–Kramer’s post hoc are provided. It can be seen from Table 4 that SPODE ranks first among all algorithms, and the outperformance over QODE, GODE, EODE and REODE is statistically significant. The top three algorithms are SPODE, CODE and QRODE. In summary, SPODE outperforms all comparative algorithms on the CEC 2017 at 50-D. Moreover, we analyzed the results of Table 3 based on the function’s properties. Compared with the results at 30-D, SPODE finds more accurate solutions at 50-D. SPODE significantly exhibits better performance in approximately half of the functions. In summary, the proposed algorithm shows a potent competitive edge on the CEC 2017 test suite at 30-D and 50-D.

To further study the convergence behavior of ODE variants, Figs. 6 and 7 show convergence graphs for eight CEC 2017 functions at 50-D. In Fig. 6, we can observe that SPODE achieves better solutions on these six functions. Similar convergence behavior can also be obtained in Figs. 4 and 6. SPODE demonstrates superior performance on both 30-D and 50-D. A different situation is shown in Fig. 7. SPODE obtains the best solution on F6, F8, F14, F16, F19 and F30. Although SPODE is trapped in a local optimum on F9. SPODE still provides the fastest convergence speed. Thus, SPODE has offered superior results than that of the eight ODE variants on both 30-D and 50-D.

Run-time complexity

In this subsection, we used the widely known run-time complexity criterion to measure the efficiency of different algorithms [33]. In Tables 5 and 6, the run-time complexity results on the CEC 2017 at 30-D and 50-D are introduced. In Table 5, we can see that SPOBL slightly consumes more time than other OBL variants, and we can find a similar tendency on the CEC 2017 at 50-D in Table 6. SPODE has two main aspects that differ from other OBL variants in each iteration. First, the subpopulation strategy needs to select subpopulation based on the jumping rate, which is non-existent in other population-based OBL variants, so this strategy brings extra run-time. Moreover, the indexes of surviving individuals are calculated in the parameter control mechanism to guide the next generation of parameters, and this mechanism is executed in each iteration. As a result, SPOBL slightly consumes more run-time than other OBL variants, but the increased run-time is negligible compared to the performance improvement.

Convergence curves of SPODE, QODE, QRODE, EODE and REODE on eight 50-D functions: Shifted and Rotated Lunacek Bi_Rastrigin Function, Shifted and Rotated Levy Function, Shifted and Rotated Schwefel’s Function, Hybrid Function 4 (N = 4), Hybrid Function 5 (N = 4), Hybrid Function 6 (N = 4), Hybrid Function 6 (N = 6) and Composition Function 10 (N = 3), respectively

Convergence curves of SPODE, ODE, COODE, CODE and GODE on eight 50-D functions: Shifted and Rotated Lunacek Bi_Rastrigin Function, Shifted and Rotated Levy Function, Shifted and Rotated Schwefel’s Function, Hybrid Function 4 (N = 4), Hybrid Function 5 (N = 4), Hybrid Function 6 (N = 4), Hybrid Function 6 (N = 6) and Composition Function 10 (N = 3), respectively

Evaluation on the components in SPODE

The new OBL variant is proposed in this paper includes two strategies: subpopulation-based opposition and self-adaptive parameter control. In this part, to compare the influence of different strategies on the proposed algorithm. SPODEwosa represents SPODE without a self-adaptive control strategy. SPODEwosp denotes SPODE without the subpopulation-based opposition.

Table 7 provides the results of mean, standard deviation and Wilcoxon test of the algorithms. SPODE achieves higher accuracy for 18 functions, SPODEwosa for 5 functions and SPODEwosp for 6 functions. For unimodal functions (F1, F3), SPODE significantly enhances the performance than its competitors. For multimodal functions (F4–F10), SPODE achieves the best performance on half of the functions, and the dominance of SPODE’s performance on multimodal functions is not significant compared to other types of functions. This indicates that SPODE’s exploration ability is not enhanced despite its hybrid subpopulation and self-adaptive parameter strategies. For hybrid functions and composition functions (F11–F30), SPODE has achieved impressive performance improvements on these functions, except for F18 and F20. This means that SPODE has obtained a balance between exploration and exploitation on these functions. The average ranks of these algorithms on the 29 functions are 1.36, 2.16 and 2.36, respectively. It is clear that SPODEwosa performs much better than SPODEwosp.

From the results of the Wilcoxon test listed in Table 7, compared with SPODEwosa, SPODE has significantly improved performance for 21 functions. Compared with SPODEwosp, SPODE has significantly improved performance for 19 functions, but decreased performance for 6 functions. SPODEwosp statistically outperforms SPODE on multimodal, hybrid and composition functions (F4, F9, F18, F25, F27 and F28), which means that the self-adaptive parameter control strategy can achieve a good ratio between exploration and exploitation on complex functions. So, SPODE is significantly better than SPODEwosp and SPODEwosa.

Figure 8 shows the convergence curves of SPODE, SPODEwosa and SPODEwosp. From the curve of F6, SPODE finds the global optimal, but both SPODEwosa and SPODEwosp fall into the local optimum. From the other subfigures, similar trends can be observed on F8, F11 and F29. SPODE and SPODEwosa achieve a similar performance. SPODEwosp converges to the local optimum. Both SPODE and SPODEwosa converge faster in the early stage and achieve the global optimal in the later stage, but SPODE always converges a little more rapidly than SPODEwosp. Moreover, it reveals that the influence of the subpopulation-based strategy on the proposed algorithm is more significant than that of the self-adaptive parameter control strategy. The main reason is that the subpopulation-based strategy can reduce the redundancy of calculation when solving complex functions.

Setting of newly parameter

The jumping rate J is the most important parameter in the ODE and its variants. It affects the frequency of the opposition. ODE will degenerate to DE if \(J=0\). In the subpopulation-based strategy, \({\varvec{J}}\) acts on individuals, \(\mu _J\) represents the mean value of \({\varvec{J}}\). Moreover, the Gaussian distribution with \(\mu _J\) as the mean generates a jumping rate for each individual. Thus, \(\mu _J\) affects the subpopulation size according to each individual’s jumping rate. This paper proposes a self-adaptive formula for \(\mu _J\) to better adapt the algorithm during the evolutionary process. Furthermore, the arithmetic mean is extended to the generalized Lehmer mean in the Eq. (15). In the generalized Lehmer mean, the degree of this mean is expressed by the value of p. In this subsection, a proper setting of this new parameter is provided.

In Table 8, the results of SPODE with different p and time-varying strategies are introduced. SPODE with \(p=0.5\) significantly outperforms other strategies on 19, 22, 22 and 21 functions. The performance superiority of SPODE with \(p=0.5\) gradually becomes more significant as the value of p increases. Compared with the time-varying strategy, generalized Lehmer mean with different p has significantly improved the performance on this test suite. In the previous section, \(p=0.5\) indicates the value of \(\mu _{J}\) rapidly decreases, which means that subpopulation size gets smaller and smaller during the evolutionary process. When the evolution enters the later stage, the opposition will waste the fitness evaluations, so SPODE with a negative strategy (\(p=0.5\)) has a more significant advantage than other strategies.

The box plots of fitness error values of all strategies on six functions are depicted in Fig. 9 to show the distribution of SPOBL solutions under different strategies. These six functions are from different categories. All of them have the same trends that the performance superiority of SPODE gradually becomes more significant and stable as the value of p increases. From the figure, we can see SPOBL with \(p=0.5\) demonstrates the capability to find promising solutions.

Comparison for LSHADE with OBL variants

In the previous part, we combined SPOBL with DE to obtain SPODE. To further verify the performance of SPOBL with DE variants, we combined SPOBL with two state-of-the-art DE variant: LSHADE [40] and jSO [41], and compared the effects of OBL variants on the performance of LSHADE and jSO.

Since DE was proposed, many famous DE variants have been proposed. SHADE was proposed and won 3rd place in the CEC 2013 competition [42], and LSHADE won 1st place in the CEC 2014 competition [40]. SHADE, as a basic powerful DE variant, has been extensively studied by researchers, such as SPS-LSHADE-EIG [43], LSHADE-ND [44], iLSHADE [45], LSHADE-EpSin [46], jSO [41], L_covnSHADE [47], LSHADE-cnEpSin [48], and LSHADE-RSP [49]. The jSO is an improved version of the iLSHADE algorithm [45], mainly with a new weighted version of mutation strategy. The new mutation strategy is called DE/current-to-pBest-w/1. At the early stage of the evolutionary, the smaller F is used, while the higher F is used at the later stage. For illustration simplicity, in this section LSHADE-SPOBL and LSHADE-OBL represent the algorithm after embedding SPOBL and OBL into LSHADE.

In this part, the performance results of LSHADE with OBL variants on CEC 2017 at 30-D and 50-D are presented. Tables 9 and 11 provide the results of mean, std and Wilcoxon test of algorithms at 30-D and 50-D, respectively. The results of the Friedman test are presented on Tables 10 and 12. From the tables, the following have been observed.

-

1.

For the unimodal functions (F1, F3), all LSHADE-OBL variants achieve the best solution for all the functions. There is no significant difference in the performance of these algorithms on these functions.

-

2.

For the multimodal functions (F4–F10), LSHADE-SPOBL finds the best performance on 3 and 2 functions at 30-D and 50-D, respectively. LSHADE performs better than SPOBL on most functions, while the other LSHADE-OBL variants are dominated by LSHADE-SPOBL and the LSHADE on multimodal functions.

-

3.

For the hybrid functions (F11–F20), LSHADE-SPOBL keeps the outperformance and superiority of the performance on all functions, except on F16, F17 and F20. Moreover, the significance of the performance of LSHADE-SPOBL is enhanced as the dimensionality increases. LSHADE always surpasses SPOBL on F16, F17, and F20. It indicates that LSHADE-SPOBL weakens the performance of the LSHADE on these functions.

-

4.

For the composition functions (F21–F30), LSHADE-SPOBL obtains the best accuracy for 3 and 4 functions at 30-D and 50-D, respectively. LSHADE for 1 and 2 functions. On composition functions, the dominance of LSHADE-SPOBL as well as the LSHADE decreases. It means that no single algorithm can achieve the best performance on all functions.

From Table 9, it can be seen that LSHADE-SPOBL performs better than other LSHADE-OBL variants. To be precise, LSHADE-SPOBL significantly finds more accurate solutions than LSHADE-OBL, LSHADE-QOBL, LSHADE-QROBL, LSHADE-GOBL, LSHADE-COOBL, LSHADE-EOBL, LSHADE-REOBL, and LSHADE-COBL for more than half of the functions. Compared with LSHADE-COBL, LSHADE-SPOBL has significantly improved performance on 17 functions. Compared with LSHADE-GOBL, LSHADE-SPOBL has significantly improved performance on 23 functions. Compared with LSHADE-COOBL, LSHADE-SPOBL has significantly improved performance on 20 functions. Although there is no obvious difference between LSHADE-SPOBL and LSHADE on most functions, the performance of LSHADE-SPOBL is still significantly better than that of LSHADE on the 5 functions. In other words, LSHADE-SPOBL performs much better than other LSHADE-OBL variants.

As shown in Table 10, the Friedman test results with Tukey–Kramer’s post hoc are provided. It can be seen from Table 10 that LSHADE-SPOBL ranks first among all algorithms, and the outperformance over OBL, QOBL, GOBL, COOBL, EOBL, REOBL, and COBL is statistically significant. The top three algorithms are LSHADE-SPOBL, LSHADE, and LSHADE-QROBL. All in all, LSHADE-SPOBL is better than all comparison algorithms in performance on CEC 2017 at 30-D. In Table 12, a similar situation can be found at 50-D.

Moreover, we analyzed the results of Tables 9 and 11 based on the function’s properties. In the unimodal functions (F1, F3), the performance gap between each algorithm is not significant. However, the performance of LSHADE-SPOBL is better than other algorithms on hybrid functions (F11–F20) and composition functions (F21–F30). LSHADE assisted by SPOBL performs better than LSHADE on F13, F19, F22, F23, F24, F26 and F27. It reveals that LSHADE-SPOBL achieves a well-balanced state between exploration and exploitation, and finds the best solution than other algorithms when dealing with complex problems.

In this part, the performance results of jSO with different OBL variants on CEC 2017 at 30-D and 50-D are presented. Tables 13 and 15 provide the results of mean, standard deviation and Wilcoxon test of algorithms at 30-D and 50-D, respectively. From the tables, we can observe the following.

-

1.

For unimodal functions (F1, F3), most jSO-OBL variants provide similar performance on F1 and F3. jSO-SPOBL is slight better than jSO-OBL, jSO-GOBL and jSO-COOBL on F3 at 50-D. Thus, the scalability of jSO-SPOBL is enhanced by the dimensionality increases.

-

2.

For multimodal functions (F4-F10), jSO-SPOBL finds the best solution for 5 and 6 functions at 30-D and 50-D, respectively. jSO-SPOBL significantly exhibits better performance than other algorithms on the most functions. jSO-SPOBL outperforms the comparison algorithm in terms of both mean and std.

-

3.

For hybrid functions (F11–F20), jSO-SPOBL finds the best solution for 6 and 3 functions at 30-D and 50-D, respectively. jSO statistically performs better than jSO-SPOBL on F12, F18 and F19. jSO-SPOBL statistically surpasses jSO on F13, F16, F17 and F20. Although the number of best value of the mean values of jSO-SPOBL on these functions is not as many as that of jSO, it does not hurt the overall performance of jSO-SPOBL on these functions.

-

4.

For composition functions (F21–F30), jSO-SPOBL provides the best mean values for 5 and 2 functions at 30-D and 50-D, respectively. jSO-SPOBL is superior to jSO, jSO-OBL, jSO-QOBL, jSO-QROBL, jSO-GOBL, jSO-COOBL, jSO-EOBL, jSO-REOBL and jSO-COBL on 3, 5, 8, 7, 7, 8, 6, 7 and 5 functions at 30-D, respectively. In addition, similar results to LSHADE at 50-D are obtained in this section.

Some observations can be obtained from the Wilcoxon test results in Tables 13 and 15. jSO-SPOBL performs better than other jSO-OBL variants. To be precise, jSO-SPOBL significantly finds more accurate solutions than jSO-OBL, jSO-QOBL, jSO-QROBL, jSO-GOBL, jSO-COOBL, jSO-EOBL, jSO-REOBL, and jSO-COBL for more than half of the functions. Compared with jSO-QOBL, jSO-SPOBL has significantly improved performance on 22 functions. Compared with jSO-COOBL, jSO-SPOBL has significantly improved performance on 23 functions. Compared with jSO-GOBL, jSO-SPOBL has significantly improved performance on 21 functions. Although there is no obvious difference between jSO-SPOBL and jSO on most functions, the performance of jSO-SPOBL is still significantly better than that of jSO on the 4 problems. In other words, jSO-SPOBL performs much better than all other jSO-OBL variants.

As shown in Table 14, the results of the Friedman test with Tukey–Kramer’s post hoc are provided. It can be seen from Table 14 that jSO-SPOBL ranks first among all algorithms, and the outperformance over jSO-OBL variants is statistically significant. The top three algorithms are jSO-SPOBL, jSO, jSO-EOBL. In Table 16, it can be concluded that jSO-SPOBL still surpasses other jSO-OBL variants, except for jSO-QOBL, jSO-QROBL, jSO-EOBL and jSO-REOBL. jSO-SPOBL performs better than these four jSO-OBL variants on 22, 19, 18 and 20 functions at 30-D, respectively. jSO-SPOBL statistically finds the best performance of these four jSO-OBL variants on 12, 11, 13 and 11 functions at 50-D, respectively. However, this does not affect the significant performance of SPOBL among all OBL variants, which indicates that QOBL, QROBL, EOBL and REOBL embedding into jSO exhibit excellent performance with increasing dimensionality. Although SPOBL ranks first, the outperformance over jSO-OBL, jSO-GOBL, jSO-COOBL and jSO-COBL is statistically significant. The top three algorithms are jSO-SPOBL, jSO-QROBL and jSO-REOBL. Thus, jSO-SPOBL is better than all comparison algorithms in performance on CEC 2017 at 30-D and 50-D.

These improvements reveal that SPOBL benefits the performance of jSO and LSHADE. It can be explained by the following reasons. On the one hand, SPOBL employs a subpopulation strategy to select proper individuals to participate in the opposition, which can increase the probability of selecting individuals suitable for the opposition using the jumping rate of each individual during the evolutionary process. On the other hand, the self-adaptive parameter mechanism makes full use of the previous generation’s information, since the parameters of the surviving individuals in the previous iteration are very valuable experience for parameter control. Therefore, SPOBL has good scalability which adapts to different dimensional problems and portability which embeds into any DE variant.

Comparison for jSO with OBL variants

In this part, the performance results of jSO with different OBL variants on CEC 2017 at 30-D and 50-D are presented. Tables 13 and 15 provide the results of mean, standard deviation and Wilcoxon test of algorithms at 30-D and 50-D, respectively. From the tables, we can observe the following.

-

1.

For unimodal functions (F1, F3), most jSO-OBL variants provide similar performance on F1 and F3. jSO-SPOBL is slight better than jSO-OBL, jSO-GOBL and jSO-COOBL on F3 at 50-D. Thus, the scalability of jSO-SPOBL is enhanced by the dimensionality increases.

-

2.

For multimodal functions (F4-F10), jSO-SPOBL finds the best solution for 5 and 6 functions at 30-D and 50-D, respectively. jSO-SPOBL significantly exhibits better performance than other algorithms on the most functions. jSO-SPOBL outperforms the comparison algorithm in terms of both mean and std.

-

3.

For hybrid functions (F11–F20), jSO-SPOBL finds the best solution for 6 and 3 functions at 30-D and 50-D, respectively. jSO statistically performs better than jSO-SPOBL on F12, F18 and F19. jSO-SPOBL statistically surpasses jSO on F13, F16, F17 and F20. Although the number of best value of the mean values of jSO-SPOBL on these functions is not as many as that of jSO, it does not hurt the overall performance of jSO-SPOBL on these functions.

-

4.

For composition functions (F21–F30), jSO-SPOBL provides the best mean values for 5 and 2 functions at 30-D and 50-D, respectively. jSO-SPOBL is superior to jSO, jSO-OBL, jSO-QOBL, jSO-QROBL, jSO-GOBL, jSO-COOBL, jSO-EOBL, jSO-REOBL and jSO-COBL on 3, 5, 8, 7, 7, 8, 6, 7 and 5 functions at 30-D, respectively. In addition, similar results to LSHADE at 50-D are obtained in this section.

Some observations can be obtained from the Wilcoxon test results in Tables 13 and 15. jSO-SPOBL performs better than other jSO-OBL variants. To be precise, jSO-SPOBL significantly finds more accurate solutions than jSO-OBL, jSO-QOBL, jSO-QROBL, jSO-GOBL, jSO-COOBL, jSO-EOBL, jSO-REOBL, and jSO-COBL for more than half of the functions. Compared with jSO-QOBL, jSO-SPOBL has significantly improved performance on 22 functions. Compared with jSO-COOBL, jSO-SPOBL has significantly improved performance on 23 functions. Compared with jSO-GOBL, jSO-SPOBL has significantly improved performance on 21 functions. Although there is no obvious difference between jSO-SPOBL and jSO on most functions, the performance of jSO-SPOBL is still significantly better than that of jSO on the 4 problems. In other words, jSO-SPOBL performs much better than all other jSO-OBL variants.

As shown in Table 14, the results of the Friedman test with Tukey–Kramer’s post hoc are provided. It can be seen from Table 14 that jSO-SPOBL ranks first among all algorithms, and the outperformance over jSO-OBL variants is statistically significant. The top three algorithms are jSO-SPOBL, jSO, jSO-EOBL. In Table 16, it can be concluded that jSO-SPOBL still surpasses other jSO-OBL variants, except for jSO-QOBL, jSO-QROBL, jSO-EOBL and jSO-REOBL. jSO-SPOBL performs better than these four jSO-OBL variants on 22, 19, 18 and 20 functions at 30-D, respectively. jSO-SPOBL statistically finds the best performance of these four jSO-OBL variants on 12, 11, 13 and 11 functions at 50-D, respectively. However, this does not affect the significant performance of SPOBL among all OBL variants, which indicates that QOBL, QROBL, EOBL and REOBL embedding into jSO exhibit excellent performance with increasing dimensionality. Although SPOBL ranks first, the outperformance over jSO-OBL, jSO-GOBL, jSO-COOBL and jSO-COBL is statistically significant. The top three algorithms are jSO-SPOBL, jSO-QROBL and jSO-REOBL. Thus, jSO-SPOBL is better than all comparison algorithms in performance on CEC 2017 at 30-D and 50-D.

These improvements reveal that SPOBL benefits the performance of jSO and LSHADE. It can be explained by the following reasons. On the one hand, SPOBL employs a subpopulation strategy to select proper individuals to participate in the opposition, which can increase the probability of selecting individuals suitable for the opposition using the jumping rate of each individual during the evolutionary process. On the other hand, the self-adaptive parameter mechanism makes full use of the previous generation’s information, since the parameters of the surviving individuals in the previous iteration are very valuable experience for parameter control. Therefore, SPOBL has good scalability which adapts to different dimensional problems and portability which embeds into any DE variant.

Real-world constrained optimization problems

To validate the effectiveness of SPOBL, it is essential that the performance is evaluated on real-world optimization problems and is also compared against the popular existing algorithms. The CEC 2020 test suite contains non-linear and non-convex constrained optimization problems [32]. Moreover, the majority of problems of CEC 2020 originate from real-world applications.

In this subsection, SPOBL is compared with three up-to-date metaheuristics which are all published on the proceedings of CEC 2020 and GECCO 2020.

-

1.

SASS: Self-Adaptive Spherical Search Algorithm [50].

-

2.

sCMAgES: Modified Covariance Matrix Adaptation Evolution Strategy [51].

-

3.

COLSHADE: LSHADE for Constrained Optimization with Lévy Flight [52].

Among these three algorithms, SASS, sCMAgES and COLSHADE rank first, second and third, respectively. SASS is an excellent constraint optimization algorithm, and it is an interesting problem to embed SPOBL into SASS and observe the performance improvement. Besides, this paper discusses a new OBL, so there is not much discussion on constraint handling techniques. Since SPOBL is an OBL variant and is not an algorithm for solving constrained optimization problems, this section embeds SPOBL into SASS and the algorithm is denoted as SASS-SPOBL. In this subsection, the above-mentioned algorithms are run 25 times on each problem. The max fitness evaluations and performance evaluations are the same as [32]. The mean, std, median, best and worst values of fitness are shown in the following subsection.

Planetary gear train design optimization problem

This problem is concerned with the design of the gear train to determine the number of teeth on each gear to obtain a velocity ratio between the input and output shafts [53]. The main objective of this problem is to minimize the maximum error in the gear ratio. Six integer variables and 11 constraints are contained in this problem. More details of this problem can be found in [32].

In Table 17, the outcomes of planetary gear train design optimization problem are reported for all algorithms. It can be seen that the performance of SASS-SPOBL is better than other algorithms. The top three mean fitness values are 0.529216, 0.530809 and 0.541026, which are SASS-SPOBL, sCMAgES and COLSHADE. SASS-SPOBL can yield a significant minimum fitness value of 0.525769, which is lower than that of other algorithms. It means that SASS-SPOBL has a better performance than its competitors on this problem.

Four-stage gear box problem

This problem’s essential purpose is to minimize the gear box weight. The mixed-integer nonlinear programming formulation of this problem contains 22 design variables and 86 nonlinear constraints.

Table 18 shows the result of a four-stage gearbox problem. The best fitness values obtained by SASS-SPOBL and COLSHADE can reach 35.35923. Besides, the best and std values found by SASS-SPOBL are smaller than those obtained by other metaheuristics. It indicates that COLSHADE is the best algorithm for solving this problem. Besides, it also shows that SASS-SPOBL cannot significantly outperform the comparison algorithms in all problems due to the complexity of the real-world problems.

SOPWM for 13-level inverters

Synchronous optimal pulse-width modulation (SOP) is an up-and-coming technique for medium-voltage high-power drives. An optimization technique is used to minimize the harmonic distortion of the machine stator current while determining the switching angles. SOPWM can be expressed as a scaleable constrained optimization problem. For different levels of frequency inverters, the SOPWM problem is formulated by the following [32].

Minimize:

subject to:

with bounds:

Table 19 shows the best results by these algorithms. The four statistical values found by SASS-SPOBL are superior to the other algorithms, except for the best value. Moreover, the std value of SASS-SPOBL is smaller than that of the rest approaches, which means that SASS-SPOBL is more stable than other competitors. Therefore, the SASS-SPOBL is a very promising method to handle this problem.

Conclusions

In the OBL family, the opposition operator is very important. The opposition operator randomly maps the population to another location in the search space to increase the convergence speed of the algorithm. Many OBL variants are population based and can only ensure either exploration or exploitation. When they converge to the later stage, they will waste the fitness evaluations. Many strategies aim to reduce waste. However, the individual’s information on the opposition operator has a great influence on the effect of the operator, but there is no research concentrating on it. To achieve a better balance between exploration and exploitation, we proposed a novel OBL variant called SPOBL, which is subpopulation-based opposition. However, in the past years, many OBL variants have been proposed to enhance the performance of metaheuristic algorithms. The population-based opposition still has many disadvantages: (1) waste the fitness evaluations, and (2) select suitable parameters for OBL. The main idea of this paper is to overcome the above shortcomings. To reduce the calculation redundancy of fitness evaluations, we presented a subpopulation-based opposition instead of population-based opposition. Also, we applied a self-adaptive parameter control strategy with generalized Lehmer mean in SPOBL to balance between exploration and exploitation during the evolutionary process.

The performance of the proposed algorithm is verified on the CEC 2017 and CEC 2020 test suites. Eight state-of-the-art OBL variants are compared in experiments to illustrate the effectiveness of the proposed algorithm. The results confirm that SPOBL has a significant improvement compared with other OBL variants, but also show that SPOBL can be applied to any advanced DE variant to improve the corresponding algorithm’s performance.

Future research directions: (1) apply SPOBL to multi-objective EAs, (2) Apply SPOBL to other metaheuristic algorithms and compare their performance, (3) analyze the convergence behavior of subpopulation-based opposition and (4) design a novel OBL variant which aims to balance between exploration and exploitation.

References

Cheng R, He C, Jin Y, Yao X (2018) Model-based evolutionary algorithms: a short survey. Complex Intell Syst 4(4):283–292. https://doi.org/10.1007/s40747-018-0080-1

Huang C, Li Y, Yao X (2020) A survey of automatic parameter tuning methods for metaheuristics. IEEE Trans Evolut Comput 24(2):201–216. https://doi.org/10.1109/tevc.2019.2921598

Osaba E, Villar-Rodriguez E, Ser JD, Nebro AJ, Molina D, LaTorre A, Suganthan PN, Coello CAC, Herrera F (2021) A tutorial on the design, experimentation and application of metaheuristic algorithms to real-world optimization problems. Swarm Evolut Comput 64:100888. https://doi.org/10.1016/j.swevo.2021.100888

Wang L, Gao Y, Gao S, Yong X (2021) A new feature selection method based on a self-variant genetic algorithm applied to android malware detection. Symmetry 13(7):1290. https://doi.org/10.3390/sym13071290

Xu J, Jin Y, Du W (2021) A federated data-driven evolutionary algorithm for expensive multi-/many-objective optimization. Complex Intell Syst. https://doi.org/10.1007/s40747-021-00506-7

Ser JD, Osaba E, Molina D, Yang XS, Salcedo-Sanz S, Camacho D, Das S, Suganthan PN, Coello CAC, Herrera F (2019) Bio-inspired computation: where we stand and what’s next. Swarm Evolut Comput 48:220–250. https://doi.org/10.1016/j.swevo.2019.04.008

Storn R, Price K (1995) Differential evolution—a simple and efficient adaptive scheme for global optimization over continuous spaces. Tech. Rep. TR-95-012, International Computer Science, Berkeley, California

Storn R, Price K (1997) Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J Global Optim 11(4):341–359. https://doi.org/10.1023/a:1008202821328

Das S, Suganthan PN (2011) Differential evolution: a survey of the state-of-the-art. IEEE Trans Evoluti Comput 15(1):4–31. https://doi.org/10.1109/tevc.2010.2059031

Das S, Mullick SS, Suganthan P (2016) Recent advances in differential evolution—an updated survey. Swarm Evolut Comput 27:1–30. https://doi.org/10.1016/j.swevo.2016.01.004

Zhang Y, wei Gong D, zhi Gao X, Tian T, yan Sun X (2020) Binary differential evolution with self-learning for multi-objective feature selection. Inform Sci 507:67–85. https://doi.org/10.1016/j.ins.2019.08.040

Alswaitti M, Albughdadi M, Isa NAM (2019) Variance-based differential evolution algorithm with an optional crossover for data clustering. Appl Soft Comput 80:1–17. https://doi.org/10.1016/j.asoc.2019.03.013

Aladeemy M, Adwan L, Booth A, Khasawneh MT, Poranki S (2020) New feature selection methods based on opposition-based learning and self-adaptive cohort intelligence for predicting patient no-shows. Appl Soft Comput. https://doi.org/10.1016/j.asoc.2019.105866

Hussain A, Muhammad YS (2019) Trade-off between exploration and exploitation with genetic algorithm using a novel selection operator. Complex Intell Syst 6(1):1–14. https://doi.org/10.1007/s40747-019-0102-7

Rahnamayan S, Tizhoosh HR, Salama MMA (2008) Opposition-based differential evolution. IEEE Trans Evolut Comput 12(1):64–79. https://doi.org/10.1109/tevc.2007.894200

Tizhoosh H (2005) Opposition-based learning: a new scheme for machine intelligence. In: International Conference on Computational Intelligence for Modelling, Control and Automation and International Conference on Intelligent Agents, Web Technologies and Internet Commerce (CIMCA-IAWTIC’06). IEEE. https://doi.org/10.1109/cimca.2005.1631345

Ergezer M, Simon D (2014) Mathematical and experimental analyses of oppositional algorithms. IEEE Trans Cybern 44(11):2178–2189. https://doi.org/10.1109/tcyb.2014.2303117

Ergezer M, Simon D, Du D (2009) Oppositional biogeography-based optimization. In: 2009 IEEE International Conference on Systems, Man and Cybernetics. IEEE. https://doi.org/10.1109/icsmc.2009.5346043

Rahnamayan S, Wang GG, Ventresca M (2012) An intuitive distance-based explanation of opposition-based sampling. Appl Soft Comput 12(9):2828–2839. https://doi.org/10.1016/j.asoc.2012.03.034

Al-Qunaieer FS, Tizhoosh HR, Rahnamayan S (2010) Opposition based computing—a survey. In: The 2010 International Joint Conference on Neural Networks (IJCNN). IEEE. https://doi.org/10.1109/ijcnn.2010.5596906

Xu Q, Wang L, Wang N, Hei X, Zhao L (2014) A review of opposition-based learning from 2005 to 2012. Eng Appl Artif Intell 29:1–12. https://doi.org/10.1016/j.engappai.2013.12.004

Mahdavi S, Rahnamayan S, Deb K (2018) Opposition based learning: a literature review. Swarm Evolut Comput 39:1–23. https://doi.org/10.1016/j.swevo.2017.09.010

Esmailzadeh A, Rahnamayan S (2011) Opposition-based differential evolution with protective generation jumping. In: 2011 IEEE Symposium on Differential Evolution (SDE). IEEE. https://doi.org/10.1109/sde.2011.5952059

Rahnamayan S, Tizhoosh HR, Salama MM (2007) Quasi-oppositional differential evolution. In: 2007 IEEE Congress on Evolutionary Computation. IEEE. https://doi.org/10.1109/cec.2007.4424748

Wang H, Wu Z, Rahnamayan S (2010) Enhanced opposition-based differential evolution for solving high-dimensional continuous optimization problems. Soft Comput 15(11):2127–2140. https://doi.org/10.1007/s00500-010-0642-7

Xu, Wang L, He B, Wang N (2011) Modified opposition-based differential evolution for function optimization. J Comput Inform Syst 7(5):1582–1591

Seif Z, Ahmadi M (2015) An opposition-based algorithm for function optimization. Eng Appli Artif Intell 37:293–306. https://doi.org/10.1016/j.engappai.2014.09.009

Rahnamayan S, Jesuthasan J, Bourennani F, Salehinejad H, Naterer GF (2014) Computing opposition by involving entire population. In: 2014 IEEE Congress on Evolutionary Computation (CEC). IEEE. https://doi.org/10.1109/cec.2014.6900329

Alkayem NF, Shen L, Asteris PG, Sokol M, Xin Z, Cao M (2021) A new self-adaptive quasi-oppositional stochastic fractal search for the inverse problem of structural damage assessment. Alexand Eng J. https://doi.org/10.1016/j.aej.2021.06.094

Xu Y, Yang X, Yang Z, Li X, Wang P, Ding R, Liu W (2021) An enhanced differential evolution algorithm with a new oppositional-mutual learning strategy. Neurocomputing 435:162–175. https://doi.org/10.1016/j.neucom.2021.01.003

Choi TJ, Togelius J, Cheong YG (2021) A fast and efficient stochastic opposition-based learning for differential evolution in numerical optimization. Swarm Evolut Computat. https://doi.org/10.1016/j.swevo.2020.100768

Kumar A, Wu G, Ali MZ, Mallipeddi R, Suganthan PN, Das S (2020) A test-suite of non-convex constrained optimization problems from the real-world and some baseline results. Swarm Evolut Comput. https://doi.org/10.1016/j.swevo.2020.100693

Awad N, Ali M, JLBQ, Suganthan P (2016) Problem definitions and evalua- tion criteria for the cec 2017 special session and competition on single objective bound constrained real-parameter numerical optimization, technical report, ntu, singapore. Tech. rep

Cui L, Li G, Lin Q, Chen J, Lu N (2016) Adaptive differential evolution algorithm with novel mutation strategies in multiple sub-populations. Comput Oper Res 67:155–173. https://doi.org/10.1016/j.cor.2015.09.006

Zhang J, Sanderson A (2009) JADE: adaptive differential evolution with optional external archive. IEEE Trans Evol Computat 13(5):945–958. https://doi.org/10.1109/tevc.2009.2014613

Wang H, Wu Z, Rahnamayan S, Wang J (2010) Diversity analysis of opposition-based differential evolution—an experimental study. In: Advances in Computation and Intelligence, pp. 95–102. Springer Berlin Heidelberg. https://doi.org/10.1007/978-3-642-16493-4_10

Wang W, Wang H, Sun H, Rahnamayan S (2016) Using opposition-based learning to enhance differential evolution: A comparative study. In: 2016 IEEE Congress on Evolutionary Computation (CEC). IEEE. https://doi.org/10.1109/cec.2016.7743780

Derrac J, García S, Molina D, Herrera F (2011) A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evolut Comput 1(1):3–18. https://doi.org/10.1016/j.swevo.2011.02.002

García S, Fernández A, Luengo J, Herrera F (2010) Advanced nonparametric tests for multiple comparisons in the design of experiments in computational intelligence and data mining: experimental analysis of power. Inform Sci 180(10):2044–2064. https://doi.org/10.1016/j.ins.2009.12.010

Tanabe R, Fukunaga AS (2014) Improving the search performance of SHADE using linear population size reduction. In: 2014 IEEE Congress on Evolutionary Computation (CEC). IEEE. https://doi.org/10.1109/cec.2014.6900380

Brest J, Maucec MS, Boskovic B (2017) Single objective real-parameter optimization: Algorithm jSO. In: 2017 IEEE Congress on Evolutionary Computation (CEC). IEEE. https://doi.org/10.1109/cec.2017.7969456

Tanabe R, Fukunaga A (2013) Success-history based parameter adaptation for differential evolution. In: 2013 IEEE Congress on Evolutionary Computation. IEEE. https://doi.org/10.1109/cec.2013.6557555

Guo SM, Tsai JSH, Yang CC, Hsu PH (2015) A self-optimization approach for l-SHADE incorporated with eigenvector-based crossover and successful-parent-selecting framework on CEC 2015 benchmark set. In: 2015 IEEE Congress on Evolutionary Computation (CEC). IEEE. https://doi.org/10.1109/cec.2015.7256999

Sallam KM, Sarker RA, Essam DL, Elsayed SM (2015) Neurodynamic differential evolution algorithm and solving CEC2015 competition problems. In: 2015 IEEE Congress on Evolutionary Computation (CEC). IEEE . https://doi.org/10.1109/cec.2015.7257003

Brest J, Maucec MS, Boskovic B (2016) iL-SHADE: Improved l-SHADE algorithm for single objective real-parameter optimization. In: 2016 IEEE Congress on Evolutionary Computation (CEC). IEEE. https://doi.org/10.1109/cec.2016.7743922

Awad NH, Ali MZ, Suganthan PN, Reynolds RG (2016) An ensemble sinusoidal parameter adaptation incorporated with l-SHADE for solving CEC2014 benchmark problems. In: 2016 IEEE Congress on Evolutionary Computation (CEC). IEEE. https://doi.org/10.1109/cec.2016.7744163

Awad NH, Ali MZ, Suganthan PN, Reynolds RG, Shatnawi AM (2017) A novel differential crossover strategy based on covariance matrix learning with euclidean neighborhood for solving real-world problems. In: 2017 IEEE Congress on Evolutionary Computation (CEC). IEEE. https://doi.org/10.1109/cec.2017.7969337

Awad NH, Ali MZ, Suganthan PN (2017) Ensemble sinusoidal differential covariance matrix adaptation with euclidean neighborhood for solving CEC2017 benchmark problems. In: 2017 IEEE Congress on Evolutionary Computation (CEC). IEEE. https://doi.org/10.1109/cec.2017.7969336

Stanovov V, Akhmedova S, Semenkin E (2018) LSHADE algorithm with rank-based selective pressure strategy for solving CEC 2017 benchmark problems. In: 2018 IEEE Congress on Evolutionary Computation (CEC). IEEE. https://doi.org/10.1109/cec.2018.8477977

Kumar A, Das S, Zelinka I (2020) A self-adaptive spherical search algorithm for real-world constrained optimization problems. In: Proceedings of the 2020 Genetic and Evolutionary Computation Conference Companion. ACM. https://doi.org/10.1145/3377929.3398186

Kumar A, Das S, Zelinka I (2020) A modified covariance matrix adaptation evolution strategy for real-world constrained optimization problems. In: Proceedings of the 2020 Genetic and Evolutionary Computation Conference Companion. ACM. https://doi.org/10.1145/3377929.3398185

Gurrola-Ramos J, Hernandez-Aguirre A, Dalmau-Cedeno O (2020) COLSHADE for real-world single-objective constrained optimization problems. In: 2020 IEEE Congress on Evolutionary Computation (CEC). IEEE. https://doi.org/10.1109/cec48606.2020.9185583

Dukic M, Dobrosavljevic Z (1990) A method of a spread-spectrum radar polyphase code design. IEEE J Select Areas Commun 8(5):743–749. https://doi.org/10.1109/49.56381

Acknowledgements

The work was supported by the National Natural Science Foundation of China (Nos. 11961001 and 61561001), China. The Construction Project of First-class Subjects in Ningxia Higher Education (No. NXYLXK2017B09), China. The Major Proprietary Funded Project of North Minzu University (No. ZDZX201901), China. The Graduate Innovation Project of North Minzu University (No. YCX21152), China.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest