Abstract

Simple graph convolution (SGC) achieves classification accuracy competitive with graph convolutional networks (GCNs) on various tasks while being computationally more efficient and fitting fewer parameters. However, the width of SGC must stay narrow because SGC over-smooths at higher powers, which limits its ability to learn graph representations. Here, we propose AdjMix, a simple and attentional graph convolutional model that scales to a wider structure and captures more node feature information by simultaneously mixing adjacency matrices of different powers. We point out that the key factor behind over-smoothing is the mismatched weights of the adjacency matrices, and we design AdjMix to address the over-smoothing of SGC and GCNs by adjusting these weights to matching values. Experiments on the Pubmed, Citeseer, and Cora citation networks show that AdjMix improves over SGC by 2.4%, 2.2%, and 3.2%, respectively, while matching its parameter count and complexity, and that it outperforms the other baselines in classification accuracy, parameter count, and complexity.



Introduction

Motivated by the increasing proliferation of graph-structured data in real-world applications and the limited expressive power of convolutional neural networks (CNNs) on such data, graph convolutional networks (GCNs) [21] and their variants have attracted great attention. These models show promising performance in various fields, including citation networks [21, 38], social networks [7], traffic networks [13, 25], applied chemistry [29], and recommender systems [8, 44].

Each layer in GCNs essentially performs Laplacian smoothing [26]. Applying this operation a few times makes the features of nodes in the same connected component similar, which eases the classification task. As the operation is repeated, the node features become increasingly similar and eventually converge to a fixed value [26], which makes them indistinguishable. This is called the over-smoothing problem. A key factor of over-smoothing is the mismatched weights of the adjacency matrices of different powers. Therefore, over-smoothing can be tackled effectively by adjusting these weights to appropriate values.

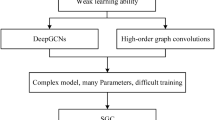

In addition to over-smoothing, GCNs may inherit excess complexity and superfluous computation [40]. To reduce this complexity, a recent surge of research interest has concentrated on simple graph convolution (SGC) [40]. By removing the nonlinearities between GCN layers and collapsing the weight matrices of consecutive layers into one, SGC achieves classification accuracy similar or even superior to that of GCNs in various application areas while being computationally more efficient and fitting fewer parameters. Nevertheless, SGC cannot adjust the weights of the adjacency matrices of different powers to matching values, so it risks over-smoothing at higher powers. Because of over-smoothing, the width of SGC (the power of its adjacency matrix) must stay small, which in turn limits the size of its receptive field. With a restricted receptive field, SGC has difficulty obtaining global information and propagating sufficient label information.

Here, we propose AdjMix, a novel model that learns to adjust the weights of adjacency matrices of different powers to matching values and captures information from more nodes and from the global graph structure in an end-to-end fashion (Fig. 1). AdjMix can be viewed as a graph analogue of Inception [36], which allows a convolutional model to enlarge its receptive field by mixing different kernel sizes. The main challenge in combining adjacency matrices of different powers into a single new adjacency matrix is that their weights are incompatible. To address this challenge, we need a model that learns how to adjust the weights to matching values.

Our contributions are threefold. (1) We provide new insights and point out that the key factor of over-smoothing is the mismatched weights of the adjacency matrices of different powers. (2) We propose AdjMix, which tackles over-smoothing by adjusting these weights to appropriate values. Because AdjMix is not limited by over-smoothing, it can obtain more global information by taking higher powers instead of increasing depth, and it scales to a wider structure. (3) Through an empirical assessment on node classification tasks, we show that AdjMix matches the complexity and parameter count of SGC while significantly outperforming SGC and other state-of-the-art methods in classification accuracy.

Related works

Spectral CNN [6] is the first successful attempt at extending CNNs to graphs for graph-based tasks. Defferrard et al. [10] propose a localized filter using Chebyshev polynomials to avoid the computationally expensive Laplacian eigendecomposition of [6]. Later, Kipf and Welling [21] propose graph convolutional networks (GCNs) by simplifying the Chebyshev polynomials based on a redefined propagation matrix. Li et al. [26] prove that each convolution of GCNs is a special form of Laplacian smoothing, which leads to over-smoothing when many graph convolution layers are stacked. GraphSAGE [14] samples and aggregates information from each node's neighborhood to generate node embeddings, overcoming the limitations of transductive learning. FastGCN [7] interprets graph convolution as integral transforms of embedding functions, which significantly reduces time and memory costs. Xu et al. [42] analyze the ability of graph neural networks (GNNs) to capture different graph structures and propose the graph isomorphism network (GIN), which is as powerful as the Weisfeiler–Lehman graph isomorphism test. Klicpera et al. [22] propose an improved propagation scheme based on personalized PageRank that leverages information from a large neighborhood. Li et al. [27] propose low-pass graph convolutional filters that inject graph relations into data features for semi-supervised learning. Veličković et al. [39] propose deep graph infomax (DGI), which learns graph embeddings based on mutual information for both transductive and inductive learning tasks. Combining DGI with the works of [9, 17, 18, 32, 33, 35] can make it more powerful. Several studies obtain multi-scale information via high-order propagation matrices [2, 3, 24, 29, 31].

A recent surge of research interest has focused on simpler, linear models that enable efficient computation for node and graph learning tasks. Thekumparampil et al. [37] propose a linear GCN model that removes all intermediate nonlinear activation functions to simplify computation and achieves performance comparable to GCNs and other state-of-the-art models. SGC [40], a simpler and more efficient variant of GCNs, removes the nonlinearities between GCN layers and collapses the weight matrices of consecutive layers, achieving performance comparable to GCNs on a variety of tasks.

Many studies show that graph attention models, which assign different edge weights based on node features, improve performance on graph learning tasks [19, 23, 37, 38, 45]. Nevertheless, the attention mechanisms in these models add significant complexity and parameters. We refer readers to [5, 41, 46] for a more comprehensive review.

Proposed method

Preliminaries

We follow [3, 21] to introduce the graph notation and problem definition. We represent an undirected graph \({\mathcal {G}}\) with n vertices and e edges as \((\varepsilon ,\nu ,A,X)\), where \(\varepsilon \) and \(\nu \) are the edge set and vertex set, respectively, \(X\in {\mathbb {R}}^{n\times c_{0}}\) is the node feature matrix, assuming each node has \(c_{0}\) features, and \(A\in \{0,1\}^{n\times n}\) is the adjacency matrix whose entry \(a_{ij}\) gives the edge weight between vertices i and j. Edge weights indicate the connection strength between vertices (nodes).

Graph convolutional networks. We follow [21] to introduce the convolution of GCNs, which is defined as:

\[H^{(k+1)}=\text {ReLU}\left(SH^{(k)}\theta ^{(k)}\right) \tag{1}\]

where S is the normalized adjacency matrix with added self-loops, \(S={\tilde{D}}^{-\frac{1}{2}}{\tilde{A}}{\tilde{D}}^{-\frac{1}{2}}\). Here \({\tilde{A}}=A+I\), \(I\in {\mathbb {R}}^{n\times n}\) is the identity matrix, and \({\tilde{D}}\) is the degree matrix of \({\tilde{A}}\), with \({\tilde{D}}_{ii}=\sum \nolimits _{j}{\tilde{A}}_{ij}\). \(H^{(k)}\) and \(\theta ^{(k)}\) denote the input node representations and the learned weight matrix at layer k. In particular, \(H^{(k)}=X\) when \(k=1\), i.e., the feature matrix is the input to the first GCN layer. We use the ReLU activation function, \(\text {ReLU}(x)={\text {max}}(0, x)\), as the pointwise nonlinearity.

Similar to CNNs, GCNs learn graph representations by stacking multiple layers. Stacking the convolutional layer twice gives the popular two-layer GCNs:

\[Y=\text {softmax}\left(S\,\text {ReLU}\left(SX\theta ^{(1)}\right)\theta ^{(2)}\right) \tag{2}\]

where \(\theta ^{(1)}\) and \(\theta ^{(2)}\) are different weight matrices. We apply a softmax classifier to predict node labels for node classification, where softmax is applied row-wise, \(\text {softmax}(z)_{i}=\frac{\exp (z_{i})}{\sum \nolimits _{j}\exp (z_{j})}\).
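The two-layer GCN described above can be sketched in a few lines of NumPy. This is a minimal, untrained forward pass under stated assumptions: the toy path graph, the layer sizes, and the helper names (`normalize_with_self_loops`, `two_layer_gcn`) are hypothetical, and random matrices stand in for the learned weights \(\theta ^{(1)}\) and \(\theta ^{(2)}\).

```python
import numpy as np


def normalize_with_self_loops(A):
    """S = D~^{-1/2} (A + I) D~^{-1/2}, the GCN propagation matrix."""
    A_tilde = A + np.eye(A.shape[0])
    d_tilde = A_tilde.sum(axis=1)                 # D~_ii = sum_j A~_ij
    d_inv_sqrt = 1.0 / np.sqrt(d_tilde)
    return d_inv_sqrt[:, None] * A_tilde * d_inv_sqrt[None, :]


def relu(x):
    return np.maximum(0.0, x)


def softmax(z):
    """Row-wise softmax; subtracting the row maximum only improves numerical stability."""
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def two_layer_gcn(A, X, theta1, theta2):
    S = normalize_with_self_loops(A)
    hidden = relu(S @ X @ theta1)                 # first GCN layer
    return softmax(S @ hidden @ theta2)           # second layer + softmax classifier


# Hypothetical toy input: a 4-node path graph, 3 features, 5 hidden units, 2 classes.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
rng = np.random.default_rng(0)
X = rng.normal(size=(4, 3))
Y = two_layer_gcn(A, X, rng.normal(size=(3, 5)), rng.normal(size=(5, 2)))
print(Y.round(3))                                 # one class distribution per node
```

Each row of `Y` is a probability distribution over the two classes; in a real model the weights would be trained with the cross-entropy loss of [21].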

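Simple graph convolution. By repeatedly stacking GCN layers, we write k-layer GCNs in general form as:

\[Y=\text {softmax}\left(S\,\text {ReLU}\left(\cdots S\,\text {ReLU}\left(SX\theta ^{(1)}\right)\theta ^{(2)}\cdots \right)\theta ^{(k)}\right) \tag{3}\]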

Wu et al. [40] show that the nonlinearity between GCN layers is not critical for classification tasks. Therefore, we remove the ReLU functions between the layers of k-layer GCNs, which become:

\[Y=\text {softmax}\left(S\cdots SSX\theta ^{(1)}\theta ^{(2)}\cdots \theta ^{(k)}\right) \tag{4}\]

To simplify notation and computation, we collapse the repeated multiplication by S into the single matrix \(S^{k}\) and reparameterize the weights into a single matrix \(\theta \) via \(\theta =\theta ^{(1)}\theta ^{(2)}\cdots \theta ^{(k)}\). The k-layer GCNs then take the simple form:

\[Y=\text {softmax}\left(S^{k}X\theta \right) \tag{5}\]

We refer to this simplified model as simple graph convolution (SGC) [40]. SGC removes the excess complexity of GCNs, yielding a linear model whose performance is competitive with that of GCNs.
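Because S multiplies from the left and the weight matrices multiply from the right, removing the ReLUs lets matrix associativity collapse k linear layers into one SGC layer. The sketch below checks this numerically; the 5-node path graph, the layer sizes, and the random weights are hypothetical choices for illustration.

```python
import numpy as np


def normalize_with_self_loops(A):
    """S = D~^{-1/2} (A + I) D~^{-1/2}."""
    A_tilde = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_tilde.sum(axis=1))
    return d_inv_sqrt[:, None] * A_tilde * d_inv_sqrt[None, :]


n, k = 5, 3
A = np.zeros((n, n))
for i in range(n - 1):                            # hypothetical path graph 0-1-2-3-4
    A[i, i + 1] = A[i + 1, i] = 1.0
S = normalize_with_self_loops(A)

rng = np.random.default_rng(1)
X = rng.normal(size=(n, 4))
thetas = [rng.normal(size=(4, 6)), rng.normal(size=(6, 6)), rng.normal(size=(6, 3))]

# k linear GCN layers (ReLU removed): H <- S H theta^(i)
H_linear = X
for theta_i in thetas:
    H_linear = S @ H_linear @ theta_i

# SGC form: S^k X theta, with theta = theta^(1) theta^(2) ... theta^(k)
theta = np.linalg.multi_dot(thetas)
H_sgc = np.linalg.matrix_power(S, k) @ X @ theta

print(np.allclose(H_linear, H_sgc))               # prints True
```

In practice \(S^{k}X\) contains no weights, so it can be computed once before training; only the single matrix \(\theta \) is learned.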

SGC encounters bottleneck

In traditional CNNs, increasing the depth (stacking layers) enlarges the receptive field of internal features [15]. In SGC, we enlarge the receptive field by increasing k in Eq. (5): Eq. (5) shows that SGC captures feature information from neighboring nodes up to k hops away in the graph. Since \(S={\tilde{D}}^{-\frac{1}{2}}{\tilde{A}}{\tilde{D}}^{-\frac{1}{2}}\) and \({\tilde{A}}=A+I\), we have:

\[S^{k}=\left({\tilde{D}}^{-\frac{1}{2}}(A+I){\tilde{D}}^{-\frac{1}{2}}\right)^{k} \tag{6}\]

To analyze Eqs. (5) and (6) intuitively, we use the graph in Fig. 2 as a numerical example. In Fig. 2, \(A=\begin{bmatrix} 0 & 1 & 0 & 0 & 0 & 1 \\ 1 & 0 & 1 & 0 & 1 & 0 \\ 0 & 1 & 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 & 1 & 0 \\ 0 & 1 & 0 & 1 & 0 & 1 \\ 1 & 0 & 0 & 0 & 1 & 0 \end{bmatrix}\), \(A+I=\begin{bmatrix} 1 & 1 & 0 & 0 & 0 & 1 \\ 1 & 1 & 1 & 0 & 1 & 0 \\ 0 & 1 & 1 & 1 & 0 & 0 \\ 0 & 0 & 1 & 1 & 1 & 0 \\ 0 & 1 & 0 & 1 & 1 & 1 \\ 1 & 0 & 0 & 0 & 1 & 1 \end{bmatrix}\), and \({\tilde{D}}=\begin{bmatrix} 3 & 0 & 0 & 0 & 0 & 0 \\ 0 & 4 & 0 & 0 & 0 & 0 \\ 0 & 0 & 3 & 0 & 0 & 0 \\ 0 & 0 & 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 & 4 & 0 \\ 0 & 0 & 0 & 0 & 0 & 3 \end{bmatrix}\). Then:

We can observe how different k values affect the weights between nodes at various distances (the entries of the various powers of S). As k increases, the weights between distant nodes grow from 0, while the weights between nearby nodes gradually decrease. When \(k\ge 20\), all weights tend to fixed values, which makes the node features indistinguishable. Moreover, the weights are already relatively close when \(k\ge 3\), which implies that SGC may suffer from over-smoothing when \(k\ge 3\). Because of over-smoothing, SGC has difficulty capturing the features of many nodes and exploring the global graph structure.
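The following sketch reproduces this numerical experiment for the graph in Fig. 2 (nodes 1 to 6 mapped to indices 0 to 5); the printed k values are an illustrative choice. It also compares a high power of S with the rank-one limit \(uu^{\top }\), where u is proportional to \(\sqrt{{\tilde{d}}}\) (the square roots of the diagonal of \({\tilde{D}}\)). That limit is a standard property of the symmetrically normalized matrix of a connected graph with self-loops, added here as background rather than taken from the original analysis.

```python
import numpy as np

# Adjacency matrix of the six-node graph in Fig. 2.
A = np.array([[0, 1, 0, 0, 0, 1],
              [1, 0, 1, 0, 1, 0],
              [0, 1, 0, 1, 0, 0],
              [0, 0, 1, 0, 1, 0],
              [0, 1, 0, 1, 0, 1],
              [1, 0, 0, 0, 1, 0]], dtype=float)

A_tilde = A + np.eye(6)
d_tilde = A_tilde.sum(axis=1)                     # diagonal of D~: 3, 4, 3, 3, 4, 3
S = A_tilde / np.sqrt(np.outer(d_tilde, d_tilde)) # D~^{-1/2} (A + I) D~^{-1/2}

for k in (1, 2, 3, 5, 10, 20):
    row = np.linalg.matrix_power(S, k)[0]         # weights from node 1 to every node
    print(f"k={k:2d}:", np.round(row, 3))

# Limit of S^k: u u^T with u proportional to sqrt(d~). Every row of the limit is a
# multiple of the same vector, so the propagated node features become indistinguishable.
u = np.sqrt(d_tilde) / np.linalg.norm(np.sqrt(d_tilde))
S_limit = np.outer(u, u)
print("max |S^200 - u u^T| =", np.abs(np.linalg.matrix_power(S, 200) - S_limit).max())
```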

The overall architecture

SGC improves its learning ability by enlarging its receptive field. The analysis in Sect. 3.2 shows that SGC cannot enlarge the receptive field further because it over-smooths for larger k (\(k\ge 3\)), which hurts classification performance on graph learning tasks. Expanding Eq. (6) shows that \(S^{k}\) contains a series of weighted adjacency matrices of different powers. We hypothesize that these weights do not match and that the mismatched weights are the key factor of over-smoothing. Hence, over-smoothing can be tackled effectively by adjusting the weights to matching values. Motivated by this analysis, we adjust the weights by constructing a new adjacency matrix, on which we build the AdjMix architecture (Fig. 1). In AdjMix, we first construct an adjacency matrix with an attention mechanism to adjust the weights. We then design a simple and attentional graph convolution model that captures feature information from neighboring nodes up to k hops away. Finally, we apply a softmax classifier to classify each node.

Simple and attentional graph convolution layer

In our simple and attentional graph convolution layer, node representations are updated in four steps, i.e. multi-scale adjacency matrix with attention mechanism, multi-scale feature propagation, multi-scale linear transformation, and nonlinear activation. We now introduce each step in detail.

Multi-scale adjacency matrix with attention mechanism. In GCNs, the adjacency matrix A encodes edge weights only between nodes that are one hop apart. Information propagation over the graph is therefore inadequate, because A cannot express edge weights between nodes that are k hops (\(k>1\)) apart. To address this limitation, we mix various powers of the normalized adjacency matrix into a single multi-scale adjacency matrix with attention mechanism:

\[\check{S}_{\text {adj}}=\alpha _{0}S_{\text {adj}}^{0}+\alpha _{1}S_{\text {adj}}^{1}+\cdots +\alpha _{k}S_{\text {adj}}^{k}\]

where \(\check{S}_{\text {adj}}\) is the multi-scale adjacency matrix with attention mechanism, and \(S_{\text {adj}}^{1}\) is the normalized adjacency matrix without added self-loops, with \(S_{\text {adj}}^{1}=S_{\text {adj}}=D^{-\frac{1}{2}}AD^{-\frac{1}{2}}\). Here \(S_{\text {adj}}^{k}\) is the k-th power of \(S_{\text {adj}}\), and D is the degree matrix of A, with \(D_{ii}=\sum _{j}A_{ij}\). We include each node's own feature via \(S_{\text {adj}}^{0}=I\). In addition, we use the various powers of \(S_{\text {adj}}^{1}\) (such as \(S_{\text {adj}}^{k}\)) to obtain feature information from nodes up to k hops away in the graph. By applying a series of attention scores \(\alpha _{0},\alpha _{1},\ldots ,\alpha _{k}\), we can flexibly adjust the weights of these powers of \(S_{\text {adj}}\) to avoid over-smoothing.
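A minimal NumPy sketch of the multi-scale adjacency matrix, assuming a dense matrix and a graph without isolated nodes (the normalization divides by every degree). The helper names are hypothetical. As an example, it uses the graph in Fig. 2 with the attention scores \(\alpha _{0}=1.6\), \(\alpha _{1}=1.3\), \(\alpha _{2}=1.12\), \(\alpha _{3}=0.85\), and \(\alpha _{4}=0.8\) that the experimental section reports for this graph.

```python
import numpy as np


def normalize_without_self_loops(A):
    """S_adj = D^{-1/2} A D^{-1/2}; assumes every node has at least one edge."""
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    return d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]


def multi_scale_adjacency(A, alphas):
    """check(S)_adj = sum_{i=0}^{k} alphas[i] * S_adj^i, with S_adj^0 = I and k = len(alphas) - 1."""
    S_adj = normalize_without_self_loops(A)
    power = np.eye(A.shape[0])                    # S_adj^0 = I keeps each node's own feature
    S_check = alphas[0] * power
    for alpha in alphas[1:]:
        power = power @ S_adj                     # S_adj^i = S_adj^{i-1} S_adj
        S_check = S_check + alpha * power
    return S_check


# Graph of Fig. 2 and the attention scores used for its k = 4 illustration.
A = np.array([[0, 1, 0, 0, 0, 1],
              [1, 0, 1, 0, 1, 0],
              [0, 1, 0, 1, 0, 0],
              [0, 0, 1, 0, 1, 0],
              [0, 1, 0, 1, 0, 1],
              [1, 0, 0, 0, 1, 0]], dtype=float)
alphas = [1.6, 1.3, 1.12, 0.85, 0.8]
S_check = multi_scale_adjacency(A, alphas)
print(np.round(S_check, 3))
```

Accumulating the powers incrementally avoids calling a matrix-power routine once per term; for large sparse graphs one would rather propagate features directly than form the dense matrix.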

Theorem 1

Constructing the multi-scale adjacency matrix with attention mechanism is a permutation-invariant operation.

Proof

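Let \(S_{\text {adj}}\in {\mathbb {R}}^{n\times n}\) (n nodes); then \(S_{\text {adj}}^{0},S_{\text {adj}}^{1},\ldots ,S_{\text {adj}}^{k}\in {\mathbb {R}}^{n\times n}\) and \(\check{S}_{\text {adj}}=\alpha _{0}S_{\text {adj}}^{0}+\alpha _{1}S_{\text {adj}}^{1}+\cdots +\alpha _{k}S_{\text {adj}}^{k}\in {\mathbb {R}}^{n\times n}\). Let P be any \(n\times n\) permutation matrix, so that relabeling the nodes turns A into \(PAP^{\top }\). The degree vector is permuted in the same way, so the degree matrix becomes \(PDP^{\top }\); using \(P^{\top }P=I\), \(S_{\text {adj}}\) becomes \(PS_{\text {adj}}P^{\top }\), and every power becomes \((PS_{\text {adj}}P^{\top })^{i}=PS_{\text {adj}}^{i}P^{\top }\), including \(S_{\text {adj}}^{0}=I=PIP^{\top }\). Since the weighted sum is taken element-wise, \(\check{S}_{\text {adj}}\) becomes \(P\check{S}_{\text {adj}}P^{\top }\). Relabeling the nodes therefore only reorders the rows and columns of \(\check{S}_{\text {adj}}\) consistently, and the weight assigned to each pair of nodes does not depend on the node ordering.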
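The permutation argument can also be checked numerically. In this sketch (hypothetical helper names, the graph of Fig. 2, a random relabeling), the multi-scale adjacency matrix of \(PAP^{\top }\) is compared with \(P\check{S}_{\text {adj}}P^{\top }\).

```python
import numpy as np


def normalize_without_self_loops(A):
    """S_adj = D^{-1/2} A D^{-1/2}; assumes no isolated nodes."""
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    return d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]


def multi_scale_adjacency(A, alphas):
    """sum_i alphas[i] * S_adj^i for i = 0..k."""
    S_adj = normalize_without_self_loops(A)
    power = np.eye(A.shape[0])
    S_check = alphas[0] * power
    for alpha in alphas[1:]:
        power = power @ S_adj
        S_check = S_check + alpha * power
    return S_check


A = np.array([[0, 1, 0, 0, 0, 1],
              [1, 0, 1, 0, 1, 0],
              [0, 1, 0, 1, 0, 0],
              [0, 0, 1, 0, 1, 0],
              [0, 1, 0, 1, 0, 1],
              [1, 0, 0, 0, 1, 0]], dtype=float)
alphas = [1.6, 1.3, 1.12, 0.85, 0.8]

rng = np.random.default_rng(0)
P = np.eye(6)[rng.permutation(6)]                 # random permutation matrix
built_from_relabeled_graph = multi_scale_adjacency(P @ A @ P.T, alphas)
relabeled_result = P @ multi_scale_adjacency(A, alphas) @ P.T
print(np.allclose(built_from_relabeled_graph, relabeled_result))  # prints True
```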

Multi-scale feature propagation. To aggregate features from neighborhoods of different sizes, we design a multi-scale feature propagation step, which distinguishes our convolution from those of GCNs and SGC. The node feature matrix X is propagated over neighboring nodes at different scales as in Eq. (15):

\[{\bar{H}}=\check{S}_{\text {adj}}X \tag{15}\]

Intuitively, this step captures multi-scale feature information while enlarging the receptive field. Furthermore, the attention scores let us adjust the contributions of node features at different scales to improve performance.
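Because \(\check{S}_{\text {adj}}X=\sum _{i}\alpha _{i}S_{\text {adj}}^{i}X\), \({\bar{H}}\) can be computed without ever forming the powers \(S_{\text {adj}}^{i}\): each term is the previous term multiplied by \(S_{\text {adj}}\), so only \(n\times c_{0}\) matrices are stored. The sketch below illustrates this idea with dense NumPy arrays; it is an assumption about one way to implement the step, not the authors' code, and the feature matrix is random. With a sparse \(S_{\text {adj}}\), each multiplication costs time proportional to its number of non-zero entries times \(c_{0}\).

```python
import numpy as np


def normalize_without_self_loops(A):
    """S_adj = D^{-1/2} A D^{-1/2}; assumes no isolated nodes."""
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    return d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]


def propagate(S_adj, X, alphas):
    """H_bar = sum_i alphas[i] * S_adj^i X, without forming any S_adj^i explicitly."""
    term = X                                      # S_adj^0 X
    H_bar = alphas[0] * term
    for alpha in alphas[1:]:
        term = S_adj @ term                       # S_adj^i X = S_adj (S_adj^{i-1} X)
        H_bar = H_bar + alpha * term
    return H_bar


A = np.array([[0, 1, 0, 0, 0, 1],
              [1, 0, 1, 0, 1, 0],
              [0, 1, 0, 1, 0, 0],
              [0, 0, 1, 0, 1, 0],
              [0, 1, 0, 1, 0, 1],
              [1, 0, 0, 0, 1, 0]], dtype=float)
S_adj = normalize_without_self_loops(A)
X = np.random.default_rng(3).normal(size=(6, 4))  # hypothetical node features, c0 = 4
alphas = [1.6, 1.3, 1.12, 0.85, 0.8]
H_bar = propagate(S_adj, X, alphas)
```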

Multi-scale linear transformation and nonlinear activation. After multi-scale feature propagation, the rest of our convolutional layer is identical to a layer of a multi-layer perceptron (MLP): a learned weight matrix W performs the multi-scale linear transformation, and a nonlinear activation function such as ReLU is applied to produce the updated feature representation H:

\[H=\text {ReLU}\left({\bar{H}}W\right) \tag{16}\]

Based on Eqs. (15) and (16), our simple and attentional graph convolution is defined as:

\[H=\text {ReLU}\left(\check{S}_{\text {adj}}XW\right) \tag{17}\]

Our algorithm is summarized in Algorithm 1. As described in Eq. (17), our convolution model learns the features of nodes up to k hops away and the global graph structure by aggregating neighborhood information. In GCNs, the convolution cannot capture node features from neighbors at different distances, whereas our convolution explores interactions with neighboring nodes up to k hops away. In SGC, the convolution cannot adjust the contributions of node features from different neighbors, whereas our convolution model considers and adjusts these contributions through a series of attention scores. In addition, over-smoothing keeps the receptive fields of GCNs and SGC narrow, which limits their expressive power. We address over-smoothing by adjusting the weights through the multi-scale adjacency matrix with attention mechanism, so our model improves expressive power and learns the global graph topology. However, compared with baselines such as GCNs and SGC, AdjMix needs k (the highest power of \(S_{\text {adj}}\)) to be set separately for each dataset to achieve its best performance.

Output layer

In the output layer, following Kipf and Welling [21], we predict node labels with a \(\text {softmax}\) classifier and adopt the loss function of GCNs. The class prediction \(Y_{\text {AdjMix}}\) of the AdjMix model takes the following form:

\[Y_{\text {AdjMix}}=\text {softmax}\left(\text {ReLU}\left(\check{S}_{\text {adj}}XW\right)\right)\]

Complexity analysis and parameters comparison

As in SGC, \({\bar{H}}\) can be regarded as a fixed feature extraction, because computing \({\bar{H}} = \check{S}_{\text {adj}}X\) requires no trainable weights. Precomputing \({\bar{H}}\) once makes the proposed model very efficient. Let \(A\in {\mathbb {R}}^{n\times n}\), \(X\in {\mathbb {R}}^{n\times c_{0}}\), and \(W\in {\mathbb {R}}^{c_{0}\times c_{1}}\) (\(c_{1}\) filters). As introduced in Sect. 3.4, \(\check{S}_{\text {adj}}\in {\mathbb {R}}^{n\times n}\), \({\bar{H}}\in {\mathbb {R}}^{n\times c_{0}}\), and \(Y_{\text {AdjMix}} = \text {softmax}(\text {ReLU}({\bar{H}}W))\in {\mathbb {R}}^{n\times c_{1}}\), where \(c_{1}\) is the number of classes. Because \(\check{S}_{\text {adj}}\) is usually a sparse matrix with m non-zero entries, the time complexity and parameter count of \(\text {AdjMix}\) are \( O (m\times c_{0}\times c_{1}) \) and \( O (c_{0}\times c_{1}) \), respectively. Compared with GCNs, \(\text {AdjMix}\) therefore has lower complexity and fewer parameters.
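The sketch below separates the one-off precomputation from training: once \({\bar{H}}\) is available, every forward pass only evaluates \(\text {softmax}(\text {ReLU}({\bar{H}}W))\), and the only trainable parameters are the \(c_{0}\times c_{1}\) entries of W. The loss follows the GCN convention of cross-entropy over the labeled nodes; here it is averaged rather than summed, which only rescales it. The sizes, labels, training split, and helper names are hypothetical, a random matrix stands in for \({\bar{H}}\), and no training loop is shown.

```python
import numpy as np


def relu(x):
    return np.maximum(0.0, x)


def softmax(z):
    """Row-wise softmax with the usual max-shift for numerical stability."""
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def adjmix_predict(H_bar, W):
    """Y_AdjMix = softmax(ReLU(H_bar W)), where H_bar = check(S)_adj X was precomputed."""
    return softmax(relu(H_bar @ W))


def labeled_cross_entropy(Y_pred, Y_onehot, labeled_idx):
    """Cross-entropy over the labeled nodes only (averaged, not summed)."""
    log_p = np.log(Y_pred[labeled_idx] + 1e-12)   # small constant avoids log(0)
    return -np.mean(np.sum(Y_onehot[labeled_idx] * log_p, axis=1))


n, c0, c1 = 6, 4, 3                               # hypothetical: 6 nodes, 4 features, 3 classes
rng = np.random.default_rng(4)
H_bar = rng.normal(size=(n, c0))                  # stands in for the precomputed check(S)_adj X
W = rng.normal(scale=0.1, size=(c0, c1))          # the model's only trainable parameters
labels = np.array([0, 2, 1, 0, 1, 2])
Y_onehot = np.eye(c1)[labels]
labeled_idx = np.array([0, 1, 2])                 # hypothetical training split

Y_pred = adjmix_predict(H_bar, W)
loss = labeled_cross_entropy(Y_pred, Y_onehot, labeled_idx)
print("parameters:", W.size, "loss:", round(float(loss), 4))
```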

Experiments

We evaluate the benefits of AdjMix against a number of state-of-the-art models, with the goal of answering the following research questions:

Q1 Can AdjMix avoid over-smoothing when capturing feature information from distant nodes?

Q2 How does AdjMix compare to the state-of-the-art models on semi-supervised node classification tasks?

Q3 How does AdjMix compare to GCNs and other models in terms of the complexity and parameters on all datasets?

Datasets. To evaluate the effectiveness of AdjMix, we conduct experiments on citation network datasets chosen from benchmarks commonly used on semi-supervised node learning tasks. We use the datasets on public splits [21, 43] of Pubmed, Citeseer, and Cora [34], which are summarized in Table 1.

Model configurations. We implement our model in TensorFlow [1] and optimize it with Adam [20]. We report the hyperparameters that achieve the best performance on each dataset. On Cora, we set the learning rate to 0.0003 and dropout to 0.95 to improve stability and test accuracy. On the other datasets, we set the learning rate to 0.01 and dropout to 0 because the results are already very stable. In addition, we use L2 regularization factors of 0.005, 0.000825, and 0.0004 and train for 240, 1,500, and 48,000 epochs on Pubmed, Citeseer, and Cora, respectively. We select the highest power k of the normalized adjacency matrix \(S_{\text {adj}}\) according to prediction performance, setting k to 21, 4, and 8 on Pubmed, Citeseer, and Cora, respectively. We tune the attention scores \(\alpha _{0}\), \(\alpha _{1}\), \(\cdots \), \(\alpha _{k}\) to suitable values. On Pubmed, \(\alpha _{0} =\) 2.7, \(\alpha _{1} =\) 1.5, \(\alpha _{2} =\) 1.05, \(\alpha _{3} =\) 0.85, \(\alpha _{4} =\) 0.85, \(\alpha _{5} =\) 0.8, \(\alpha _{6} =\) 0.75, \(\alpha _{7} =\) 0.7, \(\alpha _{12} =\) 0.6, \(\alpha _{13} =\) 0.6, \(\alpha _{14} =\) 0.55, \(\alpha _{15} =\) 0.55, \(\alpha _{16} =\) 0.55, \(\alpha _{17} =\) 0.5, \(\alpha _{18} =\) 0.5, \(\alpha _{19} =\) 0.5, \(\alpha _{20} =\) 0.45, \(\alpha _{21} =\) 0.45, and all other \(\alpha =\) 0.65; on Citeseer, \(\alpha _{0} =\) 1.6, \(\alpha _{1} =\) 1.3, \(\alpha _{2} =\) 1.12, \(\alpha _{3} =\) 0.85, and \(\alpha _{4} =\) 0.8; and on Cora, \(\alpha _{0} =\) 1.76, \(\alpha _{1} =\) 1.65, \(\alpha _{2} =\) 1.155, \(\alpha _{3} =\) 0.935, \(\alpha _{4} =\) 0.935, \(\alpha _{5} =\) 0.88, \(\alpha _{6} =\) 0.825, \(\alpha _{7} =\) 0.77, and \(\alpha _{8} =\) 0.715. The other parameters of the proposed model are the same as in GCNs.
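For reference, the reported per-dataset settings can be collected into a plain Python configuration. The dictionary keys (`lr`, `dropout`, `l2`, `epochs`, `k`, `alphas`) are hypothetical names chosen for this sketch; the values are those listed above, and on Pubmed the unlisted scores \(\alpha _{8}\) to \(\alpha _{11}\) take the stated default of 0.65.

```python
# Pubmed: explicitly listed attention scores; every other alpha_i (i <= k) defaults to 0.65.
PUBMED_LISTED = {0: 2.7, 1: 1.5, 2: 1.05, 3: 0.85, 4: 0.85, 5: 0.8, 6: 0.75, 7: 0.7,
                 12: 0.6, 13: 0.6, 14: 0.55, 15: 0.55, 16: 0.55, 17: 0.5, 18: 0.5,
                 19: 0.5, 20: 0.45, 21: 0.45}

CONFIG = {
    "pubmed": {"lr": 0.01, "dropout": 0.0, "l2": 0.005, "epochs": 240, "k": 21,
               "alphas": [PUBMED_LISTED.get(i, 0.65) for i in range(22)]},
    "citeseer": {"lr": 0.01, "dropout": 0.0, "l2": 0.000825, "epochs": 1500, "k": 4,
                 "alphas": [1.6, 1.3, 1.12, 0.85, 0.8]},
    "cora": {"lr": 0.0003, "dropout": 0.95, "l2": 0.0004, "epochs": 48000, "k": 8,
             "alphas": [1.76, 1.65, 1.155, 0.935, 0.935, 0.88, 0.825, 0.77, 0.715]},
}

for name, cfg in CONFIG.items():
    # One attention score per power S_adj^0, ..., S_adj^k.
    assert len(cfg["alphas"]) == cfg["k"] + 1, name
```

Writing the scores as a list indexed by power makes the implicit defaults explicit and lets a consistency check catch a mismatch between k and the number of scores.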

Baselines and experimental setting

In our experiments, we compare AdjMix with various state-of-the-art baselines, including Planetoid [43], gated graph neural networks (GGNN) [28], graph convolutional networks for fingerprints (GCN-FP) [11], message passing neural networks (MPNN) [12], diffusion-convolutional neural networks (DCNN) [4], graph attention networks (GAT) [38], graph sample and aggregate (GraphSAGE) [14], graph convolutional networks (GCNs) [21], adaptive Lanczos network (AdaLNet) [29], Lanczos network (LNet) [29], graph isomorphism network (GIN) [42], deep graph infomax (DGI) [39], learned MixHop (MixHop-learn) [3], and simple graph convolution (SGC) [40].

Our multi-scale adjacency matrix with powers up to four for the graph in Fig. 2. These results are computed from \(\check{S}_{\text {adj}}=\alpha _{0}S_{\text {adj}}^{0}+\alpha _{1}S_{\text {adj}}^{1}+\alpha _{2}S_{\text {adj}}^{2}+\alpha _{3}S_{\text {adj}}^{3}+\alpha _{4}S_{\text {adj}}^{4}\), with \(\alpha _{0}=\) 1.6, \(\alpha _{1}=\) 1.3, \(\alpha _{2}=\) 1.12, \(\alpha _{3}=\) 0.85, and \(\alpha _{4}=\) 0.8.

Analysis of over-smoothing

To address Q1, we conduct several numerical experiments, shown in Fig. 3. In Fig. 3c–e, as the power of \(S_{\text {adj}}\) increases, the weights remain distinguishable while features from more nodes are captured. Fig. 3f, g shows that increasing the power of \(S_{\text {adj}}\) further makes the weights closer and the node features less distinguishable, which leads to over-smoothing. As described in Sect. 3.3, a main factor of over-smoothing is the mismatched weights, so we propose the multi-scale adjacency matrix to adjust these weights to appropriate values. The weights of the proposed multi-scale adjacency matrix remain distinguishable; that is, the features of different neighboring nodes remain distinguishable. This implies that our model can effectively avoid over-smoothing when capturing feature information from distant neighboring nodes. Therefore, by increasing the power of the adjacency matrix, our model can enlarge the receptive field and improve its expressive power for learning graph representations.

Results for node classification

Table 2 compares AdjMix with the state-of-the-art node classification baselines in terms of classification accuracy and stability on the citation networks. These results provide a positive answer to Q2. AdjMix achieves the highest classification accuracy and stability among all approaches on Pubmed, Citeseer, and Cora, improving over GCNs [21] by 2.3%, 4.6%, and 2.6%, over AdaLNet [29] by 3.4%, 7.0%, and 4.0%, and over SGC [40] by 2.4%, 2.2%, and 3.2%, respectively. AdjMix achieves stability similar to that of SGC [40] while significantly improving classification results, and it outperforms the other baselines by a large margin in terms of stability. Interestingly, our simplified variant, AdjMix-2, outperforms SGC [40] by a large margin on all datasets, because AdjMix-2 can adjust the weights of the adjacency matrices of different powers to matching values. These results demonstrate the effectiveness of adjusting the weights.

Influence of model width (the highest power of \(S_{\text {adj}}\), i.e., k) and of the node's own feature. AdjMix without AS denotes our AdjMix model without the attention scores \(\alpha _{0}\), \(\alpha _{1}\), \(\cdots \), \(\alpha _{k}\), so that \(\check{S}_{\text {adj}}=S_{\text {adj}}^{0}+S_{\text {adj}}^{1}+\cdots +S_{\text {adj}}^{k}\). AdjMix without AS and \(S_{\text {adj}}^{0}\) denotes our AdjMix model without the attention scores and without \(S_{\text {adj}}^{0}\), so that \(\check{S}_{\text {adj}}=S_{\text {adj}}^{1}+S_{\text {adj}}^{2}+\cdots +S_{\text {adj}}^{k}\).

Figure 4 shows the influence of model width and of the node's own feature on all datasets. For both AdjMix without AS and AdjMix without AS and \(S_{\text {adj}}^{0}\), the overall accuracy improves as the model becomes wider (as k increases) up to widths of 21, 4, and 8 on Pubmed, Citeseer, and Cora, respectively. Increasing the width further decreases accuracy, because wider models may mix features from different clusters. AdjMix without AS outperforms AdjMix without AS and \(S_{\text {adj}}^{0}\) at different widths on all datasets, which demonstrates the importance of the node's own feature in the model design. These comparisons identify the best model width and show that a node's own features improve performance.
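The two ablation variants differ only in their coefficients, which the following sketch makes explicit. The variant strings and helper names are hypothetical, and the graph of Fig. 2 with k = 4 is used only as an example. With every \(\alpha _{i}=1\) and the \(i=0\) term either kept or dropped, the two matrices differ exactly by the identity, i.e., by each node's own feature.

```python
import numpy as np


def normalize_without_self_loops(A):
    """S_adj = D^{-1/2} A D^{-1/2}; assumes no isolated nodes."""
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    return d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]


def multi_scale_adjacency(A, alphas):
    """sum_i alphas[i] * S_adj^i for i = 0..k."""
    S_adj = normalize_without_self_loops(A)
    power = np.eye(A.shape[0])
    S_check = alphas[0] * power
    for alpha in alphas[1:]:
        power = power @ S_adj
        S_check = S_check + alpha * power
    return S_check


def ablation_alphas(k, variant):
    """Coefficients alpha_0..alpha_k for the two ablation variants."""
    if variant == "without_AS":                   # S^0 + S^1 + ... + S^k
        return [1.0] * (k + 1)
    if variant == "without_AS_and_S0":            # S^1 + ... + S^k
        return [0.0] + [1.0] * k
    raise ValueError(f"unknown variant: {variant}")


A = np.array([[0, 1, 0, 0, 0, 1],
              [1, 0, 1, 0, 1, 0],
              [0, 1, 0, 1, 0, 0],
              [0, 0, 1, 0, 1, 0],
              [0, 1, 0, 1, 0, 1],
              [1, 0, 0, 0, 1, 0]], dtype=float)
k = 4
S_no_as = multi_scale_adjacency(A, ablation_alphas(k, "without_AS"))
S_no_as_no_self = multi_scale_adjacency(A, ablation_alphas(k, "without_AS_and_S0"))
print(np.allclose(S_no_as - S_no_as_no_self, np.eye(6)))  # prints True
```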

Analysis of parameters and complexity

As explained by He and Sun [16], the actual running time is sensitive to hardware and implementation. Following Liu et al. [30], we therefore report the theoretical time complexity rather than the actual running time. To answer Q3, we compare our methods with the state-of-the-art node classification baselines in terms of parameters and complexity on the citation networks. Following the analysis in Sect. 3.6, we list the parameters and complexity in Table 3. In terms of parameters and complexity, the proposed models match SGC [40] and perform better than the other baselines. These results show the benefit of a one-layer design.

Based on Tables 2 and 3, we conclude that the proposed models outperform SGC [40] by a large margin while keeping the same parameter count and complexity, and that they achieve state-of-the-art accuracy, parameter count, and complexity compared with the other baselines. These results show the significant advantages of the proposed models.

Conclusion

In this paper, we propose AdjMix, a simple and attentional graph convolutional network architecture for semi-supervised node learning tasks. AdjMix adjusts the weights of the adjacency matrices of different powers to address the over-smoothing of SGC and GCNs, and thus captures more node feature information with a wider model. By precomputing the fixed feature extraction \({\bar{H}}\), AdjMix matches SGC in parameters and complexity. Experiments on node classification benchmarks show its superiority in capturing node features and the entire graph structure. In future work, we plan to design a learned network that automatically adjusts these weights to matching values and to apply the proposed model to more graph learning tasks. In particular, we will study how to apply the proposed model to directed graphs.

References

Abadi M, Agarwal A, Barham P, Brevdo E (2016) TensorFlow: large-scale machine learning on heterogeneous distributed systems. arXiv preprint. arXiv:1603.04467

Abu-El-Haija S, Kapoor A, Perozzi B, Lee J (2019) N-GCN: multi-scale graph convolution for semi-supervised node classification. In: UAI, pp 1–9

Abu-El-Haija S, Perozzi B, Kapoor A, Harutyunyan H, Alipourfard N, Lerman K, Steeg G, Galstyan A (2019) MixHop: higher-order graph convolution architectures via sparsified neighborhood mixing. In: ICML, pp 21–29

Atwood J, Towsley D (2016) Diffusion-convolutional neural networks. In: NIPS, pp 1993–2001

Battaglia P, Hamrick J, Bapst V (2018) Relational inductive biases, deep learning, and graph networks. arXiv preprint. arXiv:1806.01261

Bruna J, Zaremba W, Szlam A, LeCun Y (2014) Spectral networks and locally connected networks on graphs. In: ICLR, pp 1–14

Chen J, Ma T, Xiao C (2018) FastGCN: fast learning with graph convolutional networks via importance sampling. In: ICLR, pp 1–15

Chen L, Wu L, Hong R, Zhang K, Wang M (2020) Revisiting graph based collaborative filtering: a linear residual graph convolutional network approach. In: AAAI, pp 27–34

Chiang H, Chen M, Huang Y (2019) Wavelet-based EEG processing for epilepsy detection using fuzzy entropy and associative petri net. IEEE Access 7:103255–103262

Defferrard M, Bresson X, Vandergheynst P (2016) Convolutional neural networks on graphs with fast localized spectral filtering. In: NIPS, pp 3844–3852

Duvenaud D, Maclaurin D, Aguilera-Iparraguirre J, Gómez-Bombarelli R, Hirzel T (2015) Convolutional networks on graphs for learning molecular fingerprints. In: NIPS, pp 2224–2232

Gilmer J, Schoenholz S, Riley P, Vinyals O, Dahl G (2017) Neural message passing for quantum chemistry. In: ICML, pp 1263–1272

Guo S, Lin Y, Feng N, Song C, Wan H (2019) Attention based spatial-temporal graph convolutional networks for traffic flow forecasting. In: AAAI, pp 922–929

Hamilton W, Ying Z, Leskovec J (2017) Inductive representation learning on large graphs. In: NIPS, pp 1024–1034

Hariharan B, Arbeláez P, Girshick R, Malik J (2015) Hypercolumns for object segmentation and fine-grained localization. In: CVPR, pp 447–456

He K, Sun J (2015) Convolutional neural networks at constrained time cost. In: CVPR, pp 5353–5360

Islas M, Rubio J, Muñiz S, Ochoa G, Pacheco J, Meda-Campaña J, Mujica-Vargas D, Aguilar-Ibañez C, Gutierrez G, Zacarias A (2021) A fuzzy logic model for hourly electrical power demand modeling. Electronics 10(4):448

de Jesús Rubio J, Lughofer E, Pieper J, Cruz P, Martinez D, Ochoa G, Islas M, Garcia E (2021) Adapting h-infinity controller for the desired reference tracking of the sphere position in the maglev process. Inf Sci 569:669–686

Kampffmeyer M, Chen Y, Liang X, Wang H, Zhang Y, Xing E (2019) Rethinking knowledge graph propagation for zero-shot learning. In: CVPR, pp 11487–11496

Kingma D, Ba J (2015) Adam: a method for stochastic optimization. In: ICLR, pp 1–15

Kipf T, Welling M (2017) Semi-supervised classification with graph convolutional networks. In: ICLR, pp 1–14

Klicpera J, Bojchevski A, Günnemann S (2019) Predict then propagate: graph neural networks meet personalized PageRank. In: ICLR, pp 1–15

Lee J, Rossi R, Kim S, Ahmed N, Koh E (2019) Attention models in graphs: a survey. ACM Trans Knowl Discov Data (TKDD) 13(6):1–25

Lei F, Liu X, Dai Q, Ling B, Zhao H, Liu Y (2020) Hybrid low-order and higher-order graph convolutional networks. Comput Intell Neurosci 2020:3283890

Li J, Han Z, Cheng H, Su J, Wang P, Zhang J, Pan L (2019) Predicting path failure in time-evolving graphs. In: KDD, pp 1279–1289

Li Q, Han Z, Wu X (2018) Deeper insights into graph convolutional networks for semi-supervised learning. In: AAAI, pp 3538–3545

Li Q, Wu X, Liu H, Zhang X, Guan Z (2019) Label efficient semi-supervised learning via graph filtering. In: CVPR, pp 9582–9591

Li Y, Tarlow D, Brockschmidt M, Zemel R (2016) Gated graph sequence neural networks. In: ICLR, pp 1–20

Liao R, Zhao Z, Urtasun R, Zemel R (2019) LanczosNet: multi-scale deep graph convolutional networks. In: ICLR, pp 1–18

Liu X, Xia G, Lei F, Zhang Y, Chang S (2021) Higher-order graph convolutional networks with multi-scale neighborhood pooling for semi-supervised node classification. IEEE Access 9:31268–31275

Luan S, Zhao M, Chang X, Precup D (2019) Break the ceiling: stronger multi-scale deep graph convolutional networks. In: NIPS, pp 10943–10953

Meda-Campaña J (2018) On the estimation and control of nonlinear systems with parametric uncertainties and noisy outputs. IEEE Access 6:31968–31973

de Jesús Rubio J (2021) Stability analysis of the modified Levenberg–Marquardt algorithm for the artificial neural network training. IEEE Trans Neural Netw Learn Syst 32(8):3510–3524

Sen P, Namata G, Bilgic M, Getoor L, Galligher B, Eliassi-Rad T (2008) Collective classification in network data. AI Mag 29(3):93–106

Soriano L, Zamora E, Vazquez-Nicolas J, Hernández G, Madrigal J, Balderas D (2020) PD control compensation based on a cascade neural network applied to a robot manipulator. Front Neurorobot 14:577749

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. In: CVPR, pp 1–9

Thekumparampil K, Wang C, Oh S, Li L (2018) Attention-based graph neural network for semi-supervised learning. arXiv preprint. arXiv:1803.03735

Veličković P, Cucurull G, Casanova A, Romero A, Liò P, Bengio Y (2018) Graph attention networks. In: ICLR, pp 1–12

Veličković P, Fedus W, Hamilton W, Liò P, Bengio Y, Hjelm R (2019) Deep graph infomax. In: ICLR, pp 1–19

Wu F, Zhang T, Souza J, Fifty C, Yu T, Weinberger K (2019) Simplifying graph convolutional networks. In: ICML, pp 6861–6871

Wu Z, Pan S, Chen F, Long G, Zhang C, Yu P (2020) A comprehensive survey on graph neural networks. IEEE Trans Neural Netw Learn Syst 32(1):4–24

Xu K, Hu W, Leskovec J, Jegelka S (2019) How powerful are graph neural networks? In: ICLR, pp 1–17

Yang Z, Cohen W, Salakhutdinov R (2016) Revisiting semi-supervised learning with graph embeddings. In: ICML, pp 40–48

Yu W, Qin Z (2020) Graph convolutional network for recommendation with low-pass collaborative filters. In: ICML, pp 10936–10945

Zhang J, Shi X, Xie J, Ma H, King I, Yeung D (2018) GaAN: gated attention networks for learning on large and spatiotemporal graphs. In: UAI, pp 339–349

Zhou J, Cui G, Zhang Z, Yang C, Liu Z, Wang L, Li C, Sun M (2018) Graph neural networks: a review of methods and applications. arXiv preprint. arXiv:1812.08434

Acknowledgements

This work was partly supported by the Guangdong Provincial Key Laboratory of Intellectual Property and Big Data (2018B030322016), the National Natural Science Foundation of China (U1701266), the Special Projects for Key Fields in Higher Education of Guangdong, China (2020ZDZX3077, 2021ZDZX1042), the Science and Technology Project of Guangzhou City (202002020035), the Characteristic Innovation Projects of Ordinary Universities in Guangdong Province, China (2020KTSCX212, 2019KTSCX245), and the Projects of South China Institute of Software Engineering of Guangzhou University (ky202012).

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, X., Lei, F., Xia, G. et al. AdjMix: simplifying and attending graph convolutional networks. Complex Intell. Syst. 8, 1005–1014 (2022). https://doi.org/10.1007/s40747-021-00567-8
