Abstract

In recent years, deep learning has led to impressive results in many fields. In this paper, we introduce a multiscale artificial neural network for high-dimensional nonlinear maps based on the idea of hierarchical nested bases in the fast multipole method and the \(\mathcal {H}^2\)-matrices. This approach allows us to efficiently approximate discretized nonlinear maps arising from partial differential equations or integral equations. It also naturally extends our recent work based on the generalization of hierarchical matrices (Fan et al. arXiv:1807.01883), but with a reduced number of parameters. In particular, the number of parameters of the neural network grows linearly with the dimension of the parameter space of the discretized PDE. We demonstrate the properties of the architecture by approximating the solution maps of nonlinear Schrödinger equation, the radiative transfer equation and the Kohn–Sham map.

Similar content being viewed by others

References

Abadi, M., Barham, P., Chen, J., Chen, Z., Davis, A., Dean, J., Devin, M., Ghemawat, S., Irving, G., Isard, M., et al.: Tensorflow: A system for large-scale machine learning. In: OSDI, vol. 16, pp. 265–283. USENIX Association (2016)

Anglin, J.R., Ketterle, W.: Bose–Einstein condensation of atomic gases. Nature 416(6877), 211 (2002)

Araya-Polo, M., Jennings, J., Adler, A., Dahlke, T.: Deep-learning tomography. Lead. Edge 37(1), 58–66 (2018)

Badrinarayanan, V., Kendall, A., Cipolla, R.: SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39, 2481–2495 (2017)

Bao, W., Du, Q.: Computing the ground state solution of Bose–Einstein condensates by a normalized gradient flow. SIAM J. Sci. Comput. 25(5), 1674–1697 (2004)

Beck, C., E, W., Jentzen, A.: Machine learning approximation algorithms for high-dimensional fully nonlinear partial differential equations and second-order backward stochastic differential equations. arXiv:1709.05963 (2017)

Berg, J., Nyström, K.: A unified deep artificial neural network approach to partial differential equations in complex geometries. arXiv:1711.06464 (2017)

Börm, S., Grasedyck, L., Hackbusch, W.: Introduction to hierarchical matrices with applications. Eng. Anal. Bound. Elem. 27(5), 405–422 (2003)

Bruna, J., Mallat, S.: Invariant scattering convolution networks. IEEE Trans. Pattern Anal. Mach. Intell. 35(8), 1872–1886 (2013)

Chan, S., Elsheikh, A.H.: A machine learning approach for efficient uncertainty quantification using multiscale methods. J. Comput. Phys. 354, 493–511 (2018)

Chaudhari, P., Oberman, A., Osher, S., Soatto, S., Carlier, G.: Partial differential equations for training deep neural networks. In: 2017 51st Asilomar Conference on Signals, Systems, and Computers, pp. 1627–1631 (2017)

Chen, L.C., Papandreou, G., Kokkinos, I., Murphy, K., Yuille, A.L.: DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 40(4), 834–848 (2018)

Chollet, F., et al.: Keras. https://keras.io (2015). Accessed April 30, 2018

Cohen, N., Sharir, O., Shashua, A.: On the expressive power of deep learning: a tensor analysis. arXiv:1509.05009 (2018)

E, W., Han, J., Jentzen, A.: Deep learning-based numerical methods for high-dimensional parabolic partial differential equations and backward stochastic differential equations. Commun. Math. Stat. 5(4), 349–380 (2017)

Fan, Y., An, J., Ying, L.: Fast algorithms for integral formulations of steady-state radiative transfer equation. J. Comput. Phys. 380, 191–211 (2019)

Fan, Y., Lin, L., Ying, L., Zepeda-Núñez, L.: A multiscale neural network based on hierarchical matrices. arXiv:1807.01883 (2018)

Goodfellow, I., Bengio, Y., Courville, A.: Deep Learning. MIT Press, Cambridge (2016)

Greengard, L., Rokhlin, V.: A fast algorithm for particle simulations. J. Comput. Phys. 73(2), 325–348 (1987)

Hackbusch, W.: A sparse matrix arithmetic based on \(\cal{H}\)-matrices. Part I: introduction to \(\cal{H}\)-matrices. Computing 62(2), 89–108 (1999)

Hackbusch, W., Khoromskij, B.N.: A sparse \(\cal{H}\)-matrix arithmetic: general complexity estimates. J. Comput. Appl. Math. 125(1–2), 479–501 (2000)

Hackbusch, W., Khoromskij, B.N., Sauter, S.: On \(\cal{H}^2\)-Matrices. Lectures on Applied Mathematics. Springer, Berlin (2000)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Hinton, G., Deng, L., Yu, D., Dahl, G.E., Mohamed, Ar, Jaitly, N., Senior, A., Vanhoucke, V., Nguyen, P., Sainath, T.N., Kingsbury, B.: Deep neural networks for acoustic modeling in speech recognition: the shared views of four research groups. IEEE Signal Process. Mag. 29(6), 82–97 (2012)

Hohenberg, P., Kohn, W.: Inhomogeneous electron gas. Phys. Rev. 136(3B), B864 (1964)

Hornik, K.: Approximation capabilities of multilayer feedforward networks. Neural Netw. 4(2), 251–257 (1991)

Khoo, Y., Lu, J., Ying, L.: Solving parametric PDE problems with artificial neural networks. arXiv:1707.03351 (2017)

Khrulkov, V., Novikov, A., Oseledets, I.: Expressive power of recurrent neural networks. arXiv:1711.00811 (2018)

Klose, A.D., Netz, U., Beuthan, J., Hielscher, A.H.: Optical tomography using the time-independent equation of radiative transfer—part 1: forward model. J. Quant. Spectrosc. Radiat. Transf. 72(5), 691–713 (2002)

Koch, R., Becker, R.: Evaluation of quadrature schemes for the discrete ordinates method. J. Quant. Spectrosc. Radiat. Transf. 84(4), 423–435 (2004)

Kohn, W., Sham, L.J.: Self-consistent equations including exchange and correlation effects. Phys. Rev. 140(4A), A1133 (1965)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. In: Proceedings of the 25th International Conference on Neural Information Processing Systems—Volume 1, NIPS’12, pp. 1097–1105, USA, Curran Associates Inc (2012)

LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521, 436–444 (2015)

Leung, M.K.K., Xiong, H.Y., Lee, L.J., Frey, B.J.: Deep learning of the tissue-regulated splicing code. Bioinformatics 30(12), i121–i129 (2014)

Li, Y., Cheng, X., Lu, J.: Butterfly-Net: Optimal function representation based on convolutional neural networks. arXiv:1805.07451 (2018)

Lin, L., Lu, J., Ying, L.: Fast construction of hierarchical matrix representation from matrix–vector multiplication. J. Comput. Phys. 230(10), 4071–4087 (2011)

Litjens, G., Kooi, T., Bejnordi, B.E., Setio, A.A.A., Ciompi, F., Ghafoorian, M., van der Laak, J.A.W.M., van Ginneken, B., Sánchez, C.I.: A survey on deep learning in medical image analysis. Med. Image Anal. 42, 60–88 (2017)

Ma, J., Sheridan, R.P., Liaw, A., Dahl, G.E., Svetnik, V.: Deep neural nets as a method for quantitative structure–activity relationships. J. Chem. Inf. Model. 55(2), 263–274 (2015)

Marshak, A., Davis, A.: 3D Radiative Transfer in Cloudy Atmospheres. Springer, Berlin (2005)

Mhaskar, H., Liao, Q., Poggio, T.: Learning functions: when is deep better than shallow. arXiv:1603.00988 (2018)

Paschalis, P., Giokaris, N.D., Karabarbounis, A., Loudos, G., Maintas, D., Papanicolas, C., Spanoudaki, V., Tsoumpas, C., Stiliaris, E.: Tomographic image reconstruction using artificial neural networks. Nucl. Instrum. Methods Phys. Res. Sect. A Accel. Spectrom. Detect. Assoc. Equip. 527(1), 211–215 (2004). (Proceedings of the 2nd International Conference on Imaging Technologies in Biomedical Sciences)

Pitaevskii, L.: Vortex lines in an imperfect Bose gas. Sov. Phys. JETP 13(2), 451–454 (1961)

Pomraning, G.C.: The Equations of Radiation Hydrodynamics. Courier Corporation, Chelmsford (1973)

Raissi, M., Karniadakis, G.E.: Hidden physics models: machine learning of nonlinear partial differential equations. J. Comput. Phys. 357, 125–141 (2018)

Ren, K., Zhang, R., Zhong, Y.: A fast algorithm for radiative transport in isotropic media. arXiv:1610.00835 (2016)

Ronneberger, O., Fischer, P., Brox, T.: U-Net: Convolutional networks for biomedical image segmentation. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, pp. 234–241. Springer International Publishing, Cham (2015)

Rudd, K., Muro, G.D., Ferrari, S.: A constrained backpropagation approach for the adaptive solution of partial differential equations. IEEE Trans. Neural Netw. Learn. Syst. 25(3), 571–584 (2014)

Sarikaya, R., Hinton, G.E., Deoras, A.: Application of deep belief networks for natural language understanding. IEEE/ACM Trans. Audio Speech Lang. Process. 22(4), 778–784 (2014)

Schmidhuber, J.: Deep learning in neural networks: an overview. Neural Netw. 61, 85–117 (2015)

Silver, D., Huang, A., Maddison, C.J., Guez, L.S.A., Driessche, G.V.D., Schrittwieser, J., Antonoglou, I., Panneershelvam, V., Lanctot, M.: Mastering the game of Go with deep neural networks and tree search. Nature 529(7587), 484–489 (2016)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-sacle image recognition. Computing Research Repository (CoRR). arXiv:1409.1556 (2014)

Socher, R., Bengio, Y., Manning, C.D.: Deep learning for NLP (without magic). In: The 50th Annual Meeting of the Association for Computational Linguistics, Tutorial Abstracts, vol. 5 (2012)

Spiliopoulos, K., Sirignano, J.: DGM: A deep learning algorithm for solving partial differential equations. arXiv:1708.07469 (2018)

Sutskever, I., Vinyals, O., Le, Q.V.: Sequence to sequence learning with neural networks. In: Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q. (eds.) Advances in Neural Information Processing Systems, vol. 27, pp. 3104–3112. Curran Associates, Inc., New York (2014)

Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., Rabinovich, A.: Going deeper with convolutions. Computing Research Repository (CoRR). arXiv:1409.4842 (2014)

Timothy, D.: Incorporating Nesterov momentum into Adam. http://cs229.stanford.edu/proj2015/054_report.pdf (2015)

Trefethen, L.: Spectral Methods in MATLAB. Society for Industrial and Applied Mathematics, Philadelphia (2000)

Tyrtyshnikov, E.: Mosaic-skeleton approximations. Calcolo 33(1–2), 47–57 (1998). (1996. Toeplitz matrices: structures, algorithms and applications (Cortona, 1996))

Ulyanov, D., Vedaldi, A., Lempitsky, V.: Deep image prior. arXiv:1711.10925 (2018)

Wang, T., Wu, D.J., Coates, A., Ng, A.Y.: End-to-end text recognition with convolutional neural networks. In: Pattern Recognition (ICPR), 2012 21st International Conference on Pattern Recognition (ICPR2012), pp. 3304–3308 (2012)

Wang, Y., Siu, C.W., Chung, E.T., Efendiev, Y., Wang, M.: Deep multiscale model learning. arXiv:1806.04830 (2018)

Xiong, H.Y., et al.: The human splicing code reveals new insights into the genetic determinants of disease. Science 347(6218), 1254806 (2015)

Zeiler, M.D., Fergus, R.: Visualizing and understanding convolutional networks. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) Computer Vision—ECCV 2014. Lecture Notes in Computer Science, vol. 8689, pp. 818–833. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10590-1_53

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

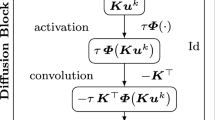

Appendix: Comparing MNN-\(\mathcal {H}^2\) with CNN

Appendix: Comparing MNN-\(\mathcal {H}^2\) with CNN

In this appendix, by comparing MNN-\(\mathcal {H}^2\) with the classical convolutional neural networks (CNN), we show that multiscale neural networks not only reduce the number of parameters, but also improve the accuracy. Since the RTE example is not translation invariant, we perform the comparison using NLSE and Kohn–Sham map.

NLSE with inhomogeneous background potential Here we study the one-dimensional NLSE using the setup from Sect. 4.1.1 for different number of Gaussians in the potential V (4.2). The training and test errors for MNN-\(\mathcal {H}^2\) and CNN are presented in Fig. 20. The channel number, layer number and window size of CNN are optimally tuned based on the training error. The figure demonstrates that MNN-\(\mathcal {H}^2\) has fewer parameters and gives a better approximation to the NLSE.

Kohn–Sham map For the Kohn–Sham map, we consider the one-dimensional setting in (4.16) with varying number of Gaussian wells. The width of the Gaussian well is set to be 6. In this case, the average size of the band gap is 0.01, and the electron density at point x can depend sensitively on the value of the potential at a point y that is far away. Figure 21 presents the training and test errors of MNN-\(\mathcal {H}^2\) and CNN, where MNN-\(\mathcal {H}^2\) outperforms a regular CNN with a comparable number of parameters.

Rights and permissions

About this article

Cite this article

Fan, Y., Feliu-Fabà, J., Lin, L. et al. A multiscale neural network based on hierarchical nested bases. Res Math Sci 6, 21 (2019). https://doi.org/10.1007/s40687-019-0183-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s40687-019-0183-3