Abstract

This comprehensive review paper aims to provide an in-depth analysis of the most recent developments in the applications of artificial intelligence (AI) techniques, with an emphasis on their critical role in the demand side of power distribution systems. This paper offers a meticulous examination of various AI models and a pragmatic guide to aid in selecting the suitable techniques for three areas: load forecasting, anomaly detection, and demand response in real-world applications. In the realm of load forecasting, the paper presents a thorough guide for choosing the most fitting machine learning and deep learning models, inclusive of reinforcement learning, in conjunction with the application of hybrid models and learning optimization strategies. This selection process is informed by the properties of load data and the specific scenarios that necessitate forecasting. Concerning anomaly detection, this paper provides an overview of the merits and limitations of disparate learning methods, fostering a discussion on the optimization strategies that can be harnessed to navigate the issue of imbalanced data, a prevalent concern in power system anomaly detection. As for demand response, we delve into the utilization of AI techniques, examining both incentive-based and price-based demand response schemes. We take into account various control targets, input sources, and applications that pertain to their use and effectiveness. In conclusion, this review paper is structured to offer useful insights into the selection and design of AI techniques focusing on the demand-side applications of future energy systems. It provides guidance and future directions for the development of sustainable energy systems, aiming to serve as a cornerstone for ongoing research within this swiftly evolving field.


1 Introduction

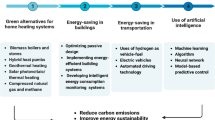

The urgent challenge facing our society is the decarbonization of the energy system to mitigate the impact of climate change and achieve a net-zero carbon future. Climate change is affecting over 25,000 species, pushing them towards extinction, as reported in numerous studies [1,2,3]. Additionally, traditional energy sources, such as oil and gas, are expected to be depleted by 2050 [2], while the demand for energy continues to increase. In fact, non-OECD economic growth is expected to increase energy demand by over 30% by 2050 [4].

In this context, it is crucial to increase the use of renewable energy sources (RESs) in future energy systems to meet the rising energy demand and decarbonize the energy sector. Figure 1 illustrates historical global power consumption from 1800 to 2019 [5], showing that the share of energy provided by RESs is increasing rapidly, although it remains relatively small [6]. According to the International Energy Agency’s Electricity Market Report 2023, RESs, together with nuclear power, are expected to meet on average more than 90% of the increase in global demand through 2025 [7].

Although RESs are becoming more prevalent, integrating them directly into current power grids designed for dispatching conventional power generation presents two critical challenges [8,9,10].

- RESs exhibit intermittency, which makes their generation difficult to predict and complicates managing the associated risks of power imbalances and blackouts.
- Current energy systems and markets lack adequate mechanisms for integrating renewable energy at the scale required to meet the emission targets of major decarbonization efforts.

Artificial intelligence (AI) has successfully solved many real-world problems in computer vision and natural language processing, and it shows promise for addressing energy challenges. AI can enable a comprehensive framework for effective power system control and management, energy market pricing, and policy recommendations [11,12,13,14,15]. Machine learning (ML) and deep learning (DL) models have been widely employed to optimize energy efficiency, conversion, distribution, and decarbonization in smart grids, showcasing the potential of data-driven approaches in this field [10, 11, 13,14,15]. These models provide timely feedback, enabling efficient two-way communication between the grid and customers and significantly enhancing the security, reliability, and efficiency of the system [4, 16,17,18]. With AI, a smart grid can optimize renewable resource utilization, balance electricity production and consumption, improve grid reliability, and ensure security [19]. Smart grid applications have grown rapidly in recent years and continue to gain market share [13]. Figure 2 illustrates a typical smart grid structure.

1.1 AI Techniques on Demand Side

The demand side, or consumption side, is one of the crucial parts of future smart energy systems. It is expected to facilitate low-carbon and net-zero development as energy consumption increases and consumers are empowered by AI techniques [20]. Various AI-based technologies have been applied to enable smarter power consumption. For instance, neural network-based AI methods have been widely employed to predict future power consumption in smart grids, significantly improving power dispatch, load scheduling, and market management [20, 21]. Data-driven classification methods have also been used for electrical load anomaly detection, primarily to ensure the safe operation of power systems [22]. The scope of anomaly detection research ranges from large-scale industrial power system monitoring to smart buildings and ordinary residential houses. By the type of detection activity, anomaly detection can also be divided into system security detection and non-invasive monitoring of residents’ health status [20, 22, 23]. Previous works on demand response have proposed methods to manage resources efficiently and provide feedback to the energy market [24].

Given the critical role of the consumption side in power systems, numerous reviews have recently summarized the applications of AI-based strategies in demand-side management. For instance, Raza et al. reviewed applications of load forecasting [25]. The paper discussed the classification of load forecasting, the impact of surrounding environments on predictive results, and the performance of different artificial neural network (ANN)-based forecasting approaches. It identified key parameters that affect the accuracy of ANN-based forecast models, such as model architecture, input combination, activation functions, and the network’s training algorithm. The review highlighted the potential of AI techniques for effective load forecasting in realizing smart grids and smart buildings, providing valuable insights into the importance of accurate load forecasting for efficient energy management and better power system planning. However, the review primarily focused on short-term load forecasting techniques, which may limit its applicability to long-term planning and decision-making. Additionally, it did not provide a comparative analysis of different forecasting techniques or of their relative strengths and limitations in real-world applications. Tanveer et al. provided a comprehensive overview of data-driven and large-scale-based approaches for forecasting building energy demand [26]. The review discussed the importance of energy consumption models for energy management and conservation in buildings, categorizing building energy simulation methods into four classes. However, the review did not examine specific examples of how these AI schemes have been applied in real-world situations. Khan et al. reviewed related work on load forecasting, dynamic pricing, and demand-side management (DSM) [27].
The review discussed the role of load forecasting in the planning and operation of power systems and how future smart grids will utilize load forecasting and dynamic pricing-based techniques for effective DSM. It also provided a comparative study of forecasting techniques and discussed the challenges of load forecasting and DSM, covering various techniques such as appliance scheduling, dynamic pricing schemes, and optimization of energy consumption to manage energy on the consumer side. Nevertheless, the review did not provide a detailed analysis of the effectiveness or practical implementation issues of these techniques. Additionally, the paper primarily focused on residential energy management systems and may not be directly applicable to other types of smart grid applications.

Himeur et al. presented a survey of AI-enhanced anomaly detection approaches on the consumption side [28]. The review provided a comprehensive taxonomy that classifies existing algorithms by the modules and parameters they adopt. It also presented a critical analysis of the state of the art, exploring current difficulties, limitations, and market barriers associated with developing and implementing anomaly detection systems. The review focused on anomaly detection frameworks for building energy consumption, but it did not comprehensively review frameworks for other types of energy consumption, such as industrial or transportation. Antonopoulos et al. conducted a review summarizing the applications of ML methods to power consumption [29]. They discussed over 160 related papers, 40 companies and commercial initiatives, and 21 large-scale projects. The review provides insights into the potential of these technologies to improve energy efficiency and sustainability. It discusses the AI techniques used in this field, their advantages and drawbacks, and their real-world applications, and it highlights challenges and opportunities for future research. However, the work may overlook some real-world challenges and limitations of implementing AI and ML on the demand side. For example, it may not fully consider the cost-effectiveness of these technologies, the availability of the data and infrastructure required to implement them, or the potential ethical and social implications of their use. It may also not fully address the practical challenges of integrating AI with existing energy systems and policies. Wang et al. presented an application-oriented review based on smart meter data [30].
The authors begin with a literature review of smart meter data analytics on the demand side, focusing on the newest developments, particularly over the past five years. They then provide a well-designed categorization of smart meter data analytics applications from the perspectives of load analysis, load forecasting, load management, and more. The paper also discusses open questions for future research, including big data issues, new ML technologies, new business models, the transition of energy systems, and data privacy and security. One major contribution of this paper is its comprehensive overview of current research in smart meter data analytics. The authors provide a detailed taxonomy of smart meter data analytics applications and discuss the techniques and methodologies adopted or developed for each application. They also identify key research trends, such as big data issues and novel ML technologies. However, as with the previous reviews, although the study discusses some practical challenges facing the implementation of smart meter data analytics in the power industry (such as data privacy and security), it provides little information on how these challenges can be addressed in practice.

1.2 The Motivation of Our Study

Despite the growing application of AI techniques in power systems, a significant gap remains between theoretical algorithms and their practical implementation. Prior works have evaluated the effectiveness of AI in enhancing performance across diverse applications such as load forecasting, but they often fail to provide a comprehensive guide to selecting, optimizing, and constructing ML models that meet the specific requirements of energy systems. Fig. 3 illustrates the three crucial components on the demand side of power systems: load forecasting, anomaly detection, and demand response. Each of these components follows a data-driven approach, which begins with data processing to collect input data from various sources such as power distribution systems, previous prediction/detection outputs, markets, and historical datasets. These data are then managed, structured, and in some cases subjected to further processing such as feature extraction and normalization before being used to train the AI model selected for a specific scenario.
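As a minimal sketch of the normalization and feature-extraction steps described above, the Python snippet below scales a load series to [0, 1] with min-max normalization (bounds computed on the training split only) and turns it into lagged input/target pairs. The load values, the choice of three lags, and the function names are illustrative assumptions, not part of any reviewed method.

```python
import numpy as np

def min_max_fit(x_train):
    """Return (min, max) computed on the training split only, to avoid leaking test statistics."""
    return float(np.min(x_train)), float(np.max(x_train))

def min_max_transform(x, lo, hi):
    """Scale values with training-set bounds; a constant training series maps to 0."""
    x = np.asarray(x, dtype=float)
    span = hi - lo
    if span == 0:
        return np.zeros_like(x)
    return (x - lo) / span

def make_lag_features(series, n_lags):
    """Build a supervised dataset: each row holds the previous n_lags values,
    and the target is the value that follows them."""
    series = np.asarray(series, dtype=float)
    X = np.array([series[t - n_lags:t] for t in range(n_lags, len(series))])
    y = series[n_lags:]
    return X, y

# Hypothetical hourly load readings (kW), for illustration only.
load = np.array([10.0, 12.0, 15.0, 14.0, 11.0, 9.0, 10.0, 13.0])
train, test = load[:6], load[6:]
lo, hi = min_max_fit(train)
train_scaled = min_max_transform(train, lo, hi)
X, y = make_lag_features(train_scaled, n_lags=3)
```

Because the scaling bounds come from the training split alone, test values scaled with them may fall slightly outside [0, 1]; that is the intended behavior, since the model must not see test-set statistics.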

From a systemic viewpoint, load forecasting and anomaly detection are intertwined tasks: they output, respectively, estimated future power usage and real-time diagnosis results for power systems. These tasks are not isolated but mutually dependent. The results of load forecasting and anomaly detection provide critical references for demand response, which in turn affects the energy market, the supply and demand sides, and the environment, ultimately influencing future forecasting and detection results. This interconnectedness underscores the importance of considering the interactions among components when designing data-driven approaches for energy systems. Thus, a review is needed that not only considers the effects of each component but also provides practical insights into their interplay. By synthesizing previous research across these domains, our study offers a holistic and strategic perspective on future sustainable development. While emphasizing the importance of each research area, our study bridges these domains and underscores the need to address real-world application challenges, ultimately providing a comprehensive overview of the demand side of power systems. Our contributions focus on providing practical insights for evaluating, selecting, and optimizing ML and DL models in each component, as well as offering a holistic view for better understanding and meeting the requirements of energy systems. Practical issues such as sensor and input noise in energy systems, data labeling errors and costs, the resilience of existing energy infrastructure, data imbalance, data availability, and operational constraints are analyzed to support better application of different ML/DL models, as these issues can affect the implementation of AI methods in power distribution systems [22, 31,32,33].

Figure 4 shows the hierarchical structure of each component. In this review, we summarize previous efforts and discuss the current state of the art in load forecasting, anomaly detection, and demand response using AI techniques. In the load forecasting domain, we summarize previous efforts according to the AI technologies used, discuss promising optimization schemes from an implementation perspective, and compare the advantages and limitations of the reviewed prediction methods for different applications. Next, we review anomaly detection approaches that identify abnormal load patterns and consumption behaviors, ensuring the security of power grids and reducing unnecessary power usage and CO2 emissions. We provide a holistic summary of promising optimization schemes for addressing data imbalance and discuss the associated challenges and trade-offs of these anomaly detection approaches. Finally, we introduce advanced demand response strategies that comprehensively assess demand-side power usage and facilitate interaction between the system and consumers. Demand response helps manage consumption to reduce cost, waste, and risk, ensuring the balance between power generation and consumption and the reliability of future power systems. Our review aims to provide a roadmap for researchers and practitioners to better understand the capabilities of AI techniques in enhancing power consumption on the demand side. By examining the features and challenges of each field and discussing optimization strategies, this work has the potential to drive innovation and inform the development of practical solutions that can benefit the power industry and society as a whole. The remainder of the paper is organized as follows: Sect. 2 summarizes load forecasting developments, Sect. 3 reviews anomaly detection, Sect. 4 explores demand response, and Sect. 5 concludes our work.

2 Load Forecasting

Load forecasting plays an essential role in energy dispatch and grid operations for energy suppliers and system operators. Effective and accurate load forecasting can improve system reliability, load scheduling, and energy utilization while reducing operating costs and risks. Because RESs are vulnerable to environmental factors, the ability to estimate future power consumption is especially important for improving the distribution efficiency of RESs [34,35,36,37,38].

Load forecasting methods are typically categorized by application scenario into short-term, medium-term, and long-term forecasting [34,35,36,37,38,39,40].

Short-term forecasting typically refers to predictions up to 72 hours ahead and is essential for operational planning, such as unit commitment and economic dispatch. Medium-term forecasting, covering periods from one week to one year, is often used for maintenance scheduling and fuel reserve management. Long-term forecasting, used for periods exceeding one year, supports strategic planning, such as capacity expansion and infrastructure development [19, 40, 41]. Although there is no universally accepted classification by predictive horizon, different forecasting scenarios present distinct challenges and advantages, necessitating different modeling strategies [34,35,36].

Another way to classify load forecasting methods is by the data-driven techniques employed. In general, these forecasting approaches can be categorized as ML-based and DL-based, each presenting distinct advantages and limitations [42]. In our work, we strive to offer a comprehensive analysis of data-driven, model-based load forecasting, illustrating the features, benefits, and limitations of each approach in real-world applications from an AI perspective. To this end, we classify previous efforts into three categories: ML-based, DL-based, and statistical learning-based. Because there are no universally accepted definitions of statistical learning and ML, the classification of forecasting methods can be somewhat subjective. For clarity and a more meaningful analysis, we define the classifications as shown in Table 1. Figure 5 visualizes the different classifications of load forecasting approaches, indicating that ML, DL, and statistical learning-based approaches can be employed across diverse scenarios according to their respective strengths and suitability.

2.1 Statistical Learning-Based Load Forecasting

Statistical learning-based forecasting using regression models is simple to implement and interpret. Energy consumption is often correlated with exogenous factors and historical consumption, so a regression model can be fitted to these data to predict future loads. Linear regression is one of the most classical and popular methods used in load forecasting [46]. For example, Fan et al. compared linear and nonlinear techniques and found that each has its own best feature set for model development [47]. The study showed that linear models may perform better when raw features are used, whereas nonlinear methods can achieve satisfactory performance when the feature set is well processed. Ming et al. and Syed et al. explored improved linear models for load forecasting [48, 49]. Compared with typical nonlinear models, these improved linear regression models are much more computationally efficient, and the simplicity of linear methods makes them useful over different time scales [50].
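To make the regression idea concrete, here is a minimal ordinary-least-squares sketch in Python (NumPy) that fits load as a linear function of temperature and the previous hour's load. The synthetic data, the coefficients used to generate them, and the helper names are assumptions for illustration; the reviewed studies use their own feature sets and improved variants.

```python
import numpy as np

def fit_linear_load_model(temperature, lagged_load, load):
    """Fit load ≈ b0 + b1*temperature + b2*lagged_load by ordinary least squares."""
    X = np.column_stack([np.ones(len(load)), temperature, lagged_load])
    coef, *_ = np.linalg.lstsq(X, load, rcond=None)
    return coef

def predict_linear_load(coef, temperature, lagged_load):
    """Apply the fitted coefficients to new exogenous and lagged inputs."""
    X = np.column_stack([np.ones(len(temperature)), temperature, lagged_load])
    return X @ coef

# Synthetic data generated from a known linear rule plus noise, so the fit can be checked.
rng = np.random.default_rng(0)
temperature = rng.uniform(0, 30, size=200)
lagged_load = rng.uniform(50, 100, size=200)
load = 5.0 + 0.8 * temperature + 0.6 * lagged_load + rng.normal(0, 0.5, size=200)

coef = fit_linear_load_model(temperature, lagged_load, load)
```

The fitted coefficients should land close to the generating values (5.0, 0.8, 0.6); with real data, the same interpretability lets an analyst read each coefficient as the marginal effect of its input on load.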

Unlike general regression-based methods that correlate various information with energy consumption data, an autoregression-based method works on the data points of a time series and relates the future values of a variable only to its past values. Thus, in autoregression models, historical load is the only factor that affects future consumption [23]. One of the most widely used autoregression models is the autoregressive integrated moving average (ARIMA) model, which handles non-stationary time series by differencing. Many studies have aimed to improve the standard ARIMA. Lee et al. developed an improved ARIMA-based short-term load forecasting model [51] that outperformed back-propagation neural networks (BPNN). To overcome the limitations of ARIMA, it is commonly combined with a nonlinear model to yield a hybrid load predictor. Zou et al. developed an ARIMA-based hybrid short-term load predictor [52] in which a BPNN is used to reduce the residual error of ARIMA. The test results demonstrate that the hybrid framework outperforms the original ARIMA. Wang et al. made a similar effort to improve the accuracy of real-time ARIMA forecasting, incorporating an artificial neural network (ANN) model that dynamically learns the forecasting errors of ARIMA [23]. The approach uses online learning: the final prediction combines the ARIMA prediction with an error estimate provided by the ANN model. Comparative results demonstrated that this approach outperforms the single ARIMA model in terms of accuracy.
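The hybrid idea of correcting an autoregressive forecast with a learned error model can be sketched as follows. For brevity, this hedged Python example fits a plain AR(p) model by least squares instead of a full ARIMA, and it substitutes a normalized least-mean-squares (NLMS) linear error predictor, updated online, for the ANN used in the cited works. The synthetic daily-cycle data and all names are illustrative assumptions.

```python
import numpy as np

def fit_ar(series, p):
    """Least-squares AR(p) fit: y_t ≈ c + a_1*y_{t-1} + ... + a_p*y_{t-p}."""
    y = np.asarray(series, dtype=float)
    n = len(y)
    lag_cols = [y[p - i:n - i] for i in range(1, p + 1)]  # column i holds y_{t-i}
    X = np.column_stack([np.ones(n - p)] + lag_cols)
    coef, *_ = np.linalg.lstsq(X, y[p:], rcond=None)
    return coef

def ar_predict_next(coef, history):
    """One-step-ahead AR forecast from the most recent p observations."""
    p = len(coef) - 1
    lags = np.asarray(history[-1:-p - 1:-1], dtype=float)  # y_{t-1}, ..., y_{t-p}
    return float(coef[0] + coef[1:] @ lags)

class OnlineErrorCorrector:
    """Predicts the next AR residual from the last k residuals with an NLMS update.
    A simple stand-in (an assumption) for the ANN error model of the cited hybrids."""
    def __init__(self, k, mu=0.1, eps=1e-6):
        self.k, self.mu, self.eps = k, mu, eps
        self.w = np.zeros(k)
        self.residuals = []

    def _recent(self):
        return np.array(self.residuals[-self.k:])

    def predict(self):
        if len(self.residuals) < self.k:
            return 0.0  # no correction until enough residuals have been observed
        return float(self.w @ self._recent())

    def update(self, residual):
        if len(self.residuals) >= self.k:
            x = self._recent()
            err = residual - self.w @ x
            self.w += self.mu * err * x / (self.eps + x @ x)  # normalized LMS step
        self.residuals.append(residual)

def hybrid_rolling_forecast(train, test, p=2, k=3):
    coef = fit_ar(train, p)  # AR model fitted once on the training split
    history = list(train)
    corrector = OnlineErrorCorrector(k)
    forecasts = []
    for actual in test:
        base = ar_predict_next(coef, history)
        forecasts.append(base + corrector.predict())  # AR forecast plus estimated error
        corrector.update(actual - base)  # learn from the AR residual once the actual value arrives
        history.append(actual)
    return np.array(forecasts)

# Synthetic hourly load with a 24-hour cycle plus noise (illustrative only).
t = np.arange(300)
load = 50 + 10 * np.sin(2 * np.pi * t / 24) + np.random.default_rng(1).normal(0, 1, 300)
forecasts = hybrid_rolling_forecast(load[:240], load[240:])
```

The normalized update keeps the online error model stable regardless of the residuals' scale, which is one reason NLMS is a convenient placeholder here; swapping in a small neural network would follow the same predict-then-update loop.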

While linear models can be effective, they are not well suited to highly nonlinear data and may require hybrid approaches to improve performance. Addressing this limitation calls for more effective nonlinear models. Fan et al. developed a data mining-based one-day-ahead building load predictor and compared it with multiple linear regression (MLR), support vector regression (SVR), multi-layer perceptron (MLP), binary tree, multivariate adaptive regression spline, and k-nearest neighbor (kNN) methods [53]. They found that although MLR requires the least computational time of all the models, it does not perform well because processes in smart buildings are usually nonlinear and complex [53]. Khorsheed et al. developed a nonlinear model for long-term peak load forecasting and demonstrated that it is more accurate than a linear model [54]. Fan et al. developed two load forecasting models to manage and optimize building cooling and compared them with several models, including MLR, autoregression, and BPNN [55, 56]. They found that MLR accurately predicts the on-site cooling load while requiring minimal training data, simple hardware, and low computational complexity. Owing to the straightforward structure and fast computation of statistical models, statistical learning-based methods are widely applied in load forecasting for smart grids. Both linear and nonlinear models play important roles in different forecasting scenarios [57]. Yildiz et al. [50] introduced principal component analysis, sensitivity analysis, and stepwise regression to further enhance the accuracy of regression methods. Regression models are also widely employed to monitor energy consumption, measure and verify energy efficiency [58,59,60], and identify operation and maintenance problems [60,61,62].
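To illustrate why a purely linear model can struggle with nonlinear load behavior, the hedged sketch below compares a straight-line fit with a simple k-nearest-neighbor (kNN) regressor on synthetic data in which load rises both below and above a comfort temperature (heating and cooling). The U-shaped data-generating rule, the 18 °C balance point, and k = 5 are assumptions chosen only for illustration, not results from the cited studies.

```python
import numpy as np

def knn_regress(x_train, y_train, x_query, k=5):
    """Predict each query point as the mean target of its k nearest training points (1-D inputs)."""
    x_train = np.asarray(x_train, dtype=float)
    preds = []
    for xq in np.atleast_1d(x_query):
        nearest = np.argsort(np.abs(x_train - xq))[:k]
        preds.append(y_train[nearest].mean())
    return np.array(preds)

def linear_regress(x_train, y_train, x_query):
    """Straight-line least-squares fit (np.polyfit returns slope first for deg=1)."""
    slope, intercept = np.polyfit(x_train, y_train, deg=1)
    return slope * np.asarray(x_query) + intercept

# Synthetic U-shaped relation: load rises for heating below ~18 °C and for cooling above it.
rng = np.random.default_rng(42)
temp = rng.uniform(-5, 40, size=300)
load = 40 + 0.1 * (temp - 18) ** 2 + rng.normal(0, 1, size=300)

x_tr, y_tr, x_te, y_te = temp[:200], load[:200], temp[200:], load[200:]
mse_linear = np.mean((linear_regress(x_tr, y_tr, x_te) - y_te) ** 2)
mse_knn = np.mean((knn_regress(x_tr, y_tr, x_te) - y_te) ** 2)
```

On this data the line cannot bend to follow the U shape, so its test error is far larger than that of kNN; the trade-off is that kNN must store and search the whole training set at prediction time.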

2.2 Machine Learning-Based Load Forecasting

Recently, neural network-based methods have gained significant attention and are increasingly being applied for load forecasting [63]. Classical ML models are relatively simple, readily interpretable, and computationally efficient compared to DL models.

2.2.1 Artificial Neural Network (ANN)-Based Load Forecasting

Among various ML-based load predictors, artificial neural networks (ANNs) can extract features from data to perform accurate regression, which makes them widely used in load forecasting [63,64,65,66,67,68,69]. Amber et al. proposed a building electric load forecasting method that incorporates various environmental data and compared it with other methods such as genetic algorithms, support vector machines (SVM), and deep neural networks (DNN) [69]. The results indicated that ANN outperforms all the other methods while maintaining a reasonable level of complexity. Furthermore, the comparison between DNN and ANN revealed that DNN might perform better when the training dataset is limited. Solyali proposed an ML-based load forecasting method [63] and compared different models for both long- and short-term predictions. The models included SVM, MLR, a neuro-fuzzy inference system, and an ANN. The results indicated that SVM is the best-performing model for long-term forecasting, while ANN performed better for short-term forecasting.
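As a hedged sketch of an ANN-based short-term load predictor, the Python code below trains a one-hidden-layer tanh network (an MLP) with full-batch gradient descent to map the previous three hourly loads to the next one. The layer size, learning rate, epoch count, and the synthetic min-max-scaled data are illustrative assumptions; the cited studies use their own architectures and inputs, including environmental variables.

```python
import numpy as np

def train_mlp(X, y, hidden=16, lr=0.1, epochs=3000, seed=0):
    """Full-batch gradient descent on half the mean squared error for a 1-hidden-layer tanh network."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W1 = rng.normal(0, 1 / np.sqrt(d), size=(d, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0, 1 / np.sqrt(hidden), size=hidden)
    b2 = 0.0
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)  # hidden activations, shape (n, hidden)
        pred = H @ W2 + b2        # linear output unit
        err = pred - y
        # Backpropagation through the output layer and the tanh hidden layer.
        grad_W2 = H.T @ err / n
        grad_b2 = err.mean()
        dH = np.outer(err, W2) * (1 - H ** 2)
        grad_W1 = X.T @ dH / n
        grad_b1 = dH.mean(axis=0)
        W1 -= lr * grad_W1
        b1 -= lr * grad_b1
        W2 -= lr * grad_W2
        b2 -= lr * grad_b2
    return W1, b1, W2, b2

def mlp_predict(params, X):
    W1, b1, W2, b2 = params
    return np.tanh(X @ W1 + b1) @ W2 + b2

# Synthetic hourly load with a daily cycle, min-max scaled to [0, 1].
t = np.arange(240)
load = 50 + 10 * np.sin(2 * np.pi * t / 24) + np.random.default_rng(3).normal(0, 0.5, 240)
scaled = (load - load.min()) / (load.max() - load.min())
X = np.column_stack([scaled[2:-1], scaled[1:-2], scaled[:-3]])  # lags 1, 2, 3
y = scaled[3:]

params = train_mlp(X, y)
mse = np.mean((mlp_predict(params, X) - y) ** 2)
```

Scaling the inputs to [0, 1] keeps the tanh units out of saturation at initialization, which is one practical reason the normalization step precedes ANN training.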

Some studies have also focused on enhancing the prediction accuracy of ANN by selecting model inputs and features. For instance, Ding et al. and Patel et al. developed load prediction methods by integrating ANNs and k-nearest neighbors (kNN) [64, 70]. These methods considered the effects of environmental factors and used kNN to cluster input variables. They demonstrated that training ANN on the processed data can lead to better outcomes. Ding et al. explored the effect of input variables on the cooling load prediction accuracy of an office building [64]. The authors used two ML models, ANN and SVM, for prediction. The clustering method was utilized to optimize the selection of input variables for 1-hour-ahead cooling load prediction. The comparison aimed to enhance the prediction performance of ML-based predictors.

2.2.2 Other Machine Learning-Based Load Forecasting

In addition to ANNs, other ML models, such as extreme gradient boosting (XGBoost), have been used for load forecasting in smart grids. Al-Rakhami et al. developed an XGBoost-based load forecasting model for residential buildings and showed that XGBoost can effectively address overfitting in load forecasting [70]; a comparison with other forecasting approaches demonstrated this advantage. Vantuch et al. studied the computational complexity of prediction and training, showing that random forest regression (RFR) and XGBoost have the lowest complexity, followed by ANN and SVR. The flexible neural tree was the most computationally expensive model, and its predictions were less accurate than those of RFR and XGBoost [66]. Wang et al. proposed a load forecasting framework that employs an XGBoost-based one-step-ahead forecaster with quantile regression [71]. The XGBoost-based quantile regression model generates a prediction interval for the next step, and the strategy dynamically tracks recent prediction results to adjust the parameters of the quantile regression model and optimize the final results. Notably, the approach was compared with various ML/DL models, and the comparison showed that the proposed framework was the most accurate, providing reliable real-time results. Zhang et al. developed cloud-hosted load predictors based on improved grey wolf optimization and an extreme learning machine [72]. The improved grey wolf optimization is used to tune the parameters of the extreme learning machine, and a comparison between the initial extreme learning machine and the model with the selected optimal parameters validates the effectiveness of this approach. Fan et al.
developed a data mining-based ensemble load forecasting method for one-day-ahead forecasting [53]. Using a process of model training and feature selection and elimination, the method employs eight different ML models to improve the feasibility of the proposed ensemble learning approach. The study concludes that the proposed comprehensive framework outperforms each of the eight individual models and achieves better forecasting results.
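Wang et al.’s framework above relies on quantile regression to produce prediction intervals. The sketch below illustrates only that ingredient: it fits linear 10% and 90% quantile lines by subgradient descent on the pinball loss, a deliberately simpler model substituted for XGBoost. The synthetic data, learning rate, and iteration count are assumptions for illustration.

```python
import numpy as np

def pinball_loss(y, q_pred, tau):
    """Quantile (pinball) loss: residuals above the prediction weigh tau, below weigh 1 - tau."""
    r = y - q_pred
    return float(np.mean(np.maximum(tau * r, (tau - 1) * r)))

def fit_linear_quantile(x, y, tau, lr=0.05, epochs=5000):
    """Fit q_tau(x) = a + b*x by subgradient descent on the pinball loss."""
    a, b = float(np.median(y)), 0.0
    for _ in range(epochs):
        r = y - (a + b * x)
        g = np.where(r > 0, -tau, 1 - tau)  # subgradient of the loss w.r.t. the prediction
        a -= lr * g.mean()
        b -= lr * (g * x).mean()
    return a, b

# Synthetic data: load grows with a centered driver x, plus Gaussian noise.
rng = np.random.default_rng(5)
x = rng.uniform(-1, 1, size=500)
y = 10 + 5 * x + rng.normal(0, 1, size=500)

a_lo, b_lo = fit_linear_quantile(x, y, tau=0.1)
a_hi, b_hi = fit_linear_quantile(x, y, tau=0.9)
lower, upper = a_lo + b_lo * x, a_hi + b_hi * x
coverage = np.mean((y >= lower) & (y <= upper))  # share of points inside the 10-90% band
```

At convergence, roughly 90% of observations fall below the upper line and 10% below the lower one, so the band covers about 80% of the data; gradient-boosted models such as XGBoost optimize the same pinball objective with a more flexible function class.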

2.2.3 Recurrent Neural Network (RNN)-Based Load Forecasting

Among various DL methods, recurrent neural networks (RNNs) are commonly used for load forecasting with sequential data. Long short-term memory (LSTM) is a typical RNN-based method widely employed for load forecasting [34, 38, 73,74,75,76,77,78]. Song et al. and Kumari et al. recently developed two different LSTM-based load predictors [76, 77, 79]. Song et al. verified the accuracy of the LSTM-based forecasting approach on two real-world datasets, using echo state networks as a comparison model. Kumari et al. tested the robustness of LSTM for time-series forecasting on different datasets. Both LSTM-based approaches performed well in load forecasting. Zhang et al. studied a hybrid forecasting approach that integrates Fiber Bragg Grating sensors with LSTM to forecast electrical load [79]. Compared with BPNN, the method reduced network complexity, saved running time, and improved forecasting accuracy. Bouktif et al. developed an advanced LSTM-based load forecasting algorithm [73] that uses a genetic algorithm (GA) to obtain the optimal lag and number of layers, providing guidelines for optimizing LSTM.
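To show what an LSTM computes at each time step, the hedged NumPy sketch below implements the standard LSTM cell equations (input, forget, and output gates plus a candidate cell update) and runs them over a short load sequence. The weights are random and untrained, and the gate ordering and hidden size are arbitrary assumptions; a trained forecaster would add a readout layer mapping the final hidden state to the predicted load.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step. W: (4H, D), U: (4H, H), b: (4H,);
    gate blocks are stacked in the (assumed) order input, forget, candidate, output."""
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much old cell state to keep
    g = np.tanh(z[2 * H:3 * H])  # candidate cell update
    o = sigmoid(z[3 * H:4 * H])  # output gate
    c = f * c_prev + i * g       # additive cell-state update
    h = o * np.tanh(c)           # hidden state passed to the next step
    return h, c

def lstm_encode(sequence, W, U, b, hidden_size):
    """Run the cell over a sequence of scalar inputs and return the final hidden state."""
    h, c = np.zeros(hidden_size), np.zeros(hidden_size)
    for x_t in sequence:
        h, c = lstm_step(np.atleast_1d(float(x_t)), h, c, W, U, b)
    return h

hidden = 8
rng = np.random.default_rng(0)
W = rng.normal(0, 0.5, size=(4 * hidden, 1))
U = rng.normal(0, 0.5, size=(4 * hidden, hidden))
b = np.zeros(4 * hidden)
scaled_load = 0.5 + 0.5 * np.sin(2 * np.pi * np.arange(24) / 24)  # one synthetic day in [0, 1]
h_final = lstm_encode(scaled_load, W, U, b, hidden)
```

The final hidden state summarizes the whole day in a fixed-size vector, which is why LSTMs are a natural fit for sequential load data of varying length.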

Although RNNs are commonly used for time series forecasting, the vanishing gradient problem inherent in their training restricts their applications. As a result, many authors have attempted to improve RNNs by building hybrid models [34, 74]. For example, Zhang et al. and Cenek et al. developed LSTM/ANN-based predictors [38, 77]. Zhang et al. trained an LSTM to extract features from time-series data and used an ANN to model the relationship between these features and the load [38]. Cenek et al. developed a grid load predictor that considers environmental factors [77]: an LSTM predicts future weather variables, which an ANN then uses to forecast the load. Both studies found that the hybrid methods outperformed individual ANN and LSTM models in load forecasting.
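The vanishing-gradient issue can be seen in a scalar, vanilla (non-gated) RNN, h_t = tanh(w·h_{t-1} + u·x_t): by the chain rule, the sensitivity of h_t to the initial state is a product of factors w·(1 - h_t²), so it shrinks geometrically whenever |w| < 1. The hedged sketch below computes this product; the weights and the input sequence are arbitrary assumptions.

```python
import numpy as np

def gradient_through_time(w, u, inputs, h0=0.0):
    """Return |dh_t/dh_0| for t = 1..T in the scalar RNN h_t = tanh(w*h_{t-1} + u*x_t)."""
    h, grad, grads = h0, 1.0, []
    for x in inputs:
        h = np.tanh(w * h + u * x)
        grad *= w * (1.0 - h ** 2)  # dh_t/dh_{t-1} = w * tanh'(pre-activation) = w * (1 - h_t^2)
        grads.append(abs(grad))
    return np.array(grads)

grads = gradient_through_time(w=0.9, u=1.0, inputs=np.sin(np.arange(50) / 4.0))
```

After 50 steps the sensitivity is below 0.9^50 ≈ 0.005, so early inputs barely influence parameter updates; the gated, additive cell-state update of LSTM was designed to ease exactly this problem.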

2.2.4 Convolutional Neural Network (CNN)-Based Load Forecasting

Convolutional neural networks (CNNs) are also widely used in load forecasting [80]. CNNs apply a linear operation called convolution in at least one layer of the network to learn representations and extract features from time series data [81]. The output layer is typically placed after the fully connected layers and resembles a standard ANN layer.
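A CNN layer applied to a load series slides a small kernel along the time axis. The hedged NumPy sketch below implements a "valid" 1-D convolution in the cross-correlation form used by deep learning frameworks (the kernel is not flipped), followed by a ReLU. The hand-picked difference kernel, which responds to rising load, is an assumption for illustration; in a trained CNN the kernel weights are learned.

```python
import numpy as np

def conv1d_valid(series, kernel, bias=0.0):
    """'Valid' 1-D convolution as used in CNN layers (cross-correlation: the kernel is not flipped)."""
    series = np.asarray(series, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    k = len(kernel)
    return np.array([series[t:t + k] @ kernel + bias for t in range(len(series) - k + 1)])

def relu(z):
    return np.maximum(z, 0.0)

load = [10, 12, 15, 14, 11, 9]
# Difference kernel: output is load[t+2] - load[t], positive only when load is rising.
rising = relu(conv1d_valid(load, kernel=[-1.0, 0.0, 1.0]))
print(rising)  # → [5. 2. 0. 0.]
```

Because the same kernel is reused at every position, a convolutional layer detects a local pattern (here, a rise over two hours) wherever it occurs in the series with very few parameters.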

Kuo et al. and Amarasinghe et al. developed CNN-based load predictors [35, 82]. Kuo et al. compared CNN with several benchmark models, including SVM, decision tree, MLP, and LSTM, and found that CNN outperformed all of them [35]. However, Amarasinghe et al. found that CNN did not provide a clear advantage over other models, and LSTM performed better in their comparison [82]. This difference may be due to the complexity and diversity of the building-level power consumption data in their test dataset: as a sequential model, LSTM is better equipped than CNN to learn features from such time series data [82]. Dong et al. developed a hybrid load forecasting method that combines CNN with K-means clustering [83]. By applying K-means clustering to the data before training the CNN, they achieved better predictive accuracy than other models such as SVR, neural network, linear regression + K-means, SVR + K-means, and neural network + K-means. Aurangzeb et al. presented another CNN-based hybrid short-term load predictor that uses a novel pyramidal CNN model to reduce the computational complexity of load forecasting [81]; the method demonstrated improved forecasting accuracy. Alhussein developed a CNN-LSTM-based load forecasting method that enhanced forecasting accuracy and outperformed baseline models [84]. While CNN-based hybrid predictors generally improve predictive accuracy, their training costs may be higher than those of other machine learning-based load forecasting approaches and should be taken into account.
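The cluster-then-train idea behind hybrids such as Dong et al.’s can be sketched by first grouping daily load profiles with K-means and then fitting a separate forecaster per cluster. The hedged NumPy example below implements only the clustering step (Lloyd's algorithm with a simple deterministic farthest-point initialization, chosen here for reproducibility instead of the common k-means++ seeding); the synthetic weekday and weekend profiles are assumptions for illustration.

```python
import numpy as np

def kmeans(X, k, n_iter=50):
    """Lloyd's algorithm with farthest-point initialization; returns (labels, centroids)."""
    X = np.asarray(X, dtype=float)
    centroids = [X[0]]
    for _ in range(1, k):
        # Next seed: the point farthest from all seeds chosen so far.
        d = np.min([np.linalg.norm(X - c, axis=1) for c in centroids], axis=0)
        centroids.append(X[d.argmax()])
    centroids = np.array(centroids)
    for _ in range(n_iter):
        # Assignment step: nearest centroid by Euclidean distance.
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: move each centroid to the mean of its members (keep it if the cluster is empty).
        for j in range(k):
            if np.any(labels == j):
                centroids[j] = X[labels == j].mean(axis=0)
    return labels, centroids

hours = np.arange(24)
rng = np.random.default_rng(7)
weekday = 40 + 30 * np.exp(-((hours - 13) ** 2) / 18.0)  # hypothetical midday-peaking profile
weekend = 35 + 5 * np.sin(2 * np.pi * hours / 24)         # hypothetical flatter profile
profiles = np.vstack([weekday + rng.normal(0, 1, (10, 24)),
                      weekend + rng.normal(0, 1, (10, 24))])
labels, centroids = kmeans(profiles, k=2)
```

Each cluster's profiles could then train its own forecaster (for example, a CNN), which is the part this sketch omits; the benefit is that each model only has to learn one consumption regime.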

2.3 Optimization Strategies for Improving Learning-based Load Forecasting

Regardless of the model employed, forecasting performance is affected by factors arising in real-world applications, such as the predictive horizon, feature extraction, and available computational resources. This section discusses several recent strategies for improving performance [42].

2.3.1 Forecasting Methods Under Different Horizons

Different models perform differently on short- and long-term load forecasting. It is essential to choose the most suitable model for various forecasting horizons to maximize prediction accuracy and performance [42]. In this work, we point out that:

-

For short-term load forecasting, it is essential to use models that can effectively capture the changes and variations in time-series load data. Moreover, the running time of these models is also a crucial consideration, as real-time predictions are often required [42, 67, 84].

-

For long-term load forecasting, the models should be robust against the impact of data noise and overfitting or underfitting issues. These models need to have a good generalization capability to be able to forecast load accurately for longer periods [42, 66, 67].

In a study of building thermal load forecasting, Wang et al. comprehensively discussed twelve forecasting models, including LSTM and XGBoost [84]. Their test results indicated that heuristic load forecasting methods are recommended for projects with limited budgets and resources. LSTM proved robust against input uncertainty and is recommended for short-term forecasting. For long-term forecasting, XGBoost was found to be more accurate, and training it with the predicted results enhanced the robustness of the model. Yang et al. developed a multi-step-ahead load predictor using an autoencoder neural network with a pre-recurrent feature layer [85]. This method performed satisfactorily when the forecasting horizon was less than 3 hours. Vantuc et al. compared different ML-based approaches under forecasting horizons ranging from one hour to one week [66]. A comparison of ANN, SVR, RFR, XGBoost, and a flexible neural tree showed that ANN provides the highest stability as the forecasting horizon changes, which is consistent with the findings of Sangrody et al. [67]. Because the prediction horizon has a significant impact on results, Wang et al. proposed a load forecasting framework that combines an XGBoost-based one-step-ahead forecaster with an LSTM-based one-day-ahead predictor [86]. The framework evaluates the LSTM-based one-day-ahead forecast step by step. If the forecast is deemed inaccurate, the framework automatically switches to the XGBoost-based one-step-ahead model to improve prediction accuracy.
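The switching logic of the framework in [86] can be sketched as follows. This is a hypothetical, minimal version. A fixed error tolerance `tol` serves as the accuracy check, a persistence rule stands in for the XGBoost one-step model, and the day-ahead forecasts are supplied as plain numbers rather than produced by an LSTM. The original framework's evaluation criterion may differ.

```python
import numpy as np


def switching_forecast(history, actual, day_ahead, one_step, tol):
    """Use the day-ahead forecast by default, but fall back to a one-step-ahead
    model whenever the day-ahead forecast has just missed by more than `tol`.

    history:   load observed before the forecast day
    actual:    loads that become available hour by hour during the day
    day_ahead: forecasts for the whole day, issued before it starts
    one_step:  callable mapping the observations so far to the next-hour forecast
    tol:       error tolerance in load units (a hypothetical switching rule)
    """
    observed = list(history)
    used, source = [], []
    trust_day_ahead = True
    for h, y in enumerate(actual):
        if trust_day_ahead:
            used.append(day_ahead[h])
            source.append("day_ahead")
        else:
            used.append(one_step(observed))
            source.append("one_step")
        # Once the true load for hour h arrives, re-evaluate the day-ahead model.
        trust_day_ahead = abs(day_ahead[h] - y) <= tol
        observed.append(y)
    return np.array(used), source


def persistence(observed):
    """Stand-in one-step model: predict the last observed value."""
    return observed[-1]


used, source = switching_forecast(
    history=[50.0],
    actual=[52.0, 60.0, 70.0, 71.0],
    day_ahead=[51.0, 52.0, 53.0, 70.0],
    one_step=persistence,
    tol=5.0,
)
```

Here the day-ahead forecast misses hour 2 by 8 kW. The sketch therefore serves the next hours from the one-step model until the day-ahead forecast is back within tolerance.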

2.3.2 Decomposition-Based Feature Extraction

Data decomposition is another widely used strategy for enhancing the performance of load forecasting algorithms. A non-stationary series, like electrical load, is characterized by statistical properties that vary over time. Therefore, decomposing a non-stationary time series signal into a set of intrinsic mode functions and a residue can facilitate learning [87].

Empirical mode decomposition (EMD) is an unsupervised, data-driven decomposition method commonly used for non-stationary time-series data. Qiu et al. and Bedi et al. developed EMD-based load forecasting methods and compared their results with popular DL models such as DNN and LSTM [2, 88]. Both hybrid methods outperformed the single DL models, demonstrating the effectiveness of EMD-based decomposition in improving forecasting performance. Fan et al. employed another decomposition method, seasonal-trend decomposition based on Loess (STL), to enhance load forecasting [89]. After STL decomposition, the subsequences became more regular and easier to learn. This led to better performance than LSTM and LSTM-XGBoost models. Park et al. addressed complex multi-user load prediction by developing a load predictor based on characteristic load decomposition [90]. In this predictor, the aggregated load measured at one node is divided using the characteristic load decomposition method to improve forecasting performance.
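The sketch below illustrates decomposition-based feature extraction with a classical additive decomposition: a centered moving-average trend, per-phase seasonal means, and a residual. It is a deliberately simpler stand-in for STL or EMD, not an implementation of either. It assumes an odd seasonal period, such as daily load with a weekly (7-day) cycle, and uses synthetic data.

```python
import numpy as np


def additive_decompose(y: np.ndarray, period: int):
    """Split y into trend + seasonal + residual (classical additive method).

    Assumes an odd `period`, so the centered moving-average window is symmetric.
    Trend values within period // 2 samples of either end are left as NaN.
    """
    if period % 2 == 0:
        raise ValueError("this sketch assumes an odd period")
    half = period // 2
    trend = np.full(len(y), np.nan)
    for t in range(half, len(y) - half):
        trend[t] = y[t - half:t + half + 1].mean()
    detrended = y - trend
    # Average the detrended values at each phase of the cycle, then center them.
    phase_means = np.array([np.nanmean(detrended[p::period]) for p in range(period)])
    phase_means -= phase_means.mean()
    seasonal = np.tile(phase_means, len(y) // period + 1)[:len(y)]
    residual = y - trend - seasonal
    return trend, seasonal, residual


# Synthetic daily load: linear growth plus a zero-mean weekly pattern (5 weeks).
pattern = np.array([3.0, 1.0, 0.0, -1.0, -2.0, -2.0, 1.0])
y = 100.0 + 0.5 * np.arange(35) + np.tile(pattern, 5)
trend, seasonal, residual = additive_decompose(y, period=7)
```

A decomposition-based forecaster would typically learn each component separately and recombine the component forecasts.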

These studies demonstrate that decomposition-based feature extraction methods can be effective in enhancing the learning performance of load forecasting models. Decomposition provides an alternative way to extract features from time-series data. However, it is important to consider the training cost, efficiency, and flexibility of these methods to determine their practicality for real-world load forecasting applications. Further research is needed to investigate these aspects.

2.3.3 Attention Mechanism for Learning-Based Forecasting Approaches

The attention mechanism, derived from cognitive attention and developed for sequence-to-sequence learning, has proven to be a powerful tool for improving DL-based models [91]. Attention lets a model focus on the most relevant relationships between inputs and outputs during training. This can improve both interpretability and learning performance.
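The following minimal NumPy sketch makes the weighting idea concrete. It computes scaled dot-product attention, the Transformer-style variant, over the encoded states of past time steps. The cited load forecasting works may use other scoring functions, such as additive attention. The keys, values, and query below are hypothetical placeholders for encoder and decoder states.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


def scaled_dot_product_attention(query, keys, values):
    """query: (d,), keys: (T, d), values: (T, d_v).

    Returns the context vector (d_v,) and the weights (T,) that indicate how
    strongly each of the T input time steps contributes to the output.
    """
    scores = keys @ query / np.sqrt(keys.shape[1])
    weights = softmax(scores)
    return weights @ values, weights


# Hypothetical encoder states for four past time steps and one decoder query.
keys = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]])
values = np.array([[10.0], [20.0], [30.0], [40.0]])
query = np.array([2.0, 0.5])

context, weights = scaled_dot_product_attention(query, keys, values)
```

Inspecting `weights` is what gives attention part of its interpretability. In this example, the third time step receives the largest weight because its key is most aligned with the query.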

In recent studies, attention mechanisms have been applied to load forecasting models with promising results. For example, Li et al. proposed an RNN-based load predictor with attention and demonstrated its effectiveness through comparative results [92]. Similarly, Jin et al. designed an attention-based encoder-decoder network with Bayesian optimization for load prediction and found that the attention-enhanced approach consistently provided more accurate results [93]. Wang et al. developed a bi-LSTM-based predictor with attention and rolling update for short-term load forecasting [94]. The rolling update refreshes the training dataset during real-time forecasting, and attention assigns influence weights to the different input variables. A bi-LSTM then produces the prediction. A standard LSTM processes a sequence in one direction and therefore only uses past context. A bi-LSTM, in contrast, processes the input sequence in both the forward and backward directions, so each position is informed by both earlier and later elements of the input window. According to their test results, the bi-LSTM model boosted by attention and rolling update achieved more accurate forecasts than the traditional bi-LSTM model. Wu et al. reported another short-term forecasting method that combines attention with CNN, LSTM, and bi-LSTM [95]. The input dataset includes temperature, cooling load, and gas consumption information for the past five days. An attention-based CNN extracts features from the input, and LSTM and bi-LSTM layers are then combined to forecast the load for the next hour. The results indicate that the proposed method is more accurate than single LSTM, bi-LSTM, BPNN, RFR, and SVR models. It also outperforms the hybrid CNN-bi-LSTM and CNN-LSTM models. Sehovac et al. proposed a load forecasting method combining a sequence-to-sequence RNN with attention [96].
The structure consists of an encoder and a decoder, and longer sequences make decoding more difficult, so attention is introduced to prioritize parts of the input sequence. Compared with the other attention-empowered studies, the proposed method with attention was more accurate. The same work also notes that forecasting accuracy decreases as the forecasting horizon increases and that longer input sequences do not always increase accuracy.

As these works demonstrate, the attention mechanism is an effective strategy for improving predictor performance. However, incorporating attention into a model may lengthen training. This can limit its practicality, especially in short-term load forecasting, where predictions are often needed in real time. Nonetheless, the potential gains in interpretability and prediction accuracy make attention a promising research direction for learning-based forecasting approaches.

2.3.4 Reinforcement Learning (RL)-Empowered Load Forecasting Schemes

Reinforcement learning (RL) is a subset of ML in which an agent learns to make decisions by interacting with the environment and receiving rewards or penalties based on its actions [97]. The agent senses the environment and chooses actions that influence it, seeking a policy that maximizes the accumulated reward [97]. ML/DL-based load forecasting models rely solely on training data. RL models, by contrast, can adjust their predictions dynamically based on new inputs, which can yield more accurate and reliable load forecasts, even in the presence of unforeseen events [98,99,100].

There are two main categories of RL methods: policy-based and value-based [98]. In policy-based RL, such as policy gradient methods, the agent directly learns and updates the policy that maps states to actions. It repeats the process of “selecting the initial policy, finding the value function, and finding the new policy from the value function” until the optimal policy is found. To improve the forecastability of the entire electrical load, Xie et al. proposed an RL-based data sampling control approach that interacts with smart meter data [101]. The approach can be implemented both offline and online by interacting with real-time data from each household. Test results show that it outperforms competing algorithms and delivers superior predictive performance.

In value-based RL, such as Q-learning and Deep Q-Network (DQN), the agent focuses on the action-value function and derives the policy from it. It repeats the process of “selecting the initial value function, choosing the best action in the state, finding the new value function” until the optimal value function is found. Feng and Zhang proposed a dynamic predictive model selection mechanism based on Q-learning to enhance the accuracy of multi-model load forecasting [98]. The approach dynamically selects the most accurate forecasting output from multiple ML/DL models based on real-time observations. Experiments using two years of data show that it improves accuracy by approximately 50% compared with any single ML/DL method alone. Dabbaghjamanesh et al. presented a Q-learning-based load forecasting algorithm for hybrid electric vehicle (EV) charging [102]. The algorithm uses both an ANN and an RNN to forecast the load simultaneously, and a Q-learning agent determines which result to use as the final output. The algorithm was tested in three scenarios with different EV charging characteristics. The comparison results demonstrate that the Q-learning-based approach consistently predicts EV charging load with greater accuracy and flexibility than the ANN and RNN methods. Park et al. proposed a novel approach to improve the accuracy of short-term load forecasting using a DQN-based similar day selection model [100]. The model dynamically selects the most suitable training data, significantly boosting the performance of the ML model. In extensive experiments on real-world load and meteorological data from Korea, it outperformed existing models in accuracy. As these works demonstrate, RL technologies have been widely employed in load forecasting with remarkable results.
However, most of these advances currently use RL primarily to dynamically select the appropriate model, training data, sampling rate, and other related parameters. The primary prediction models still rely on ML and DL techniques. This is why RL-empowered strategies are discussed in Section 2.3 as optimization strategies rather than as standalone forecasting models. In line with the OpenAI framework, more promising RL-based prediction approaches are expected to be developed in the future.
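The model-selection idea described above (e.g., Feng and Zhang [98]) can be illustrated with tabular Q-learning. The minimal NumPy sketch below rests on hypothetical assumptions:

- The state is the index of the model that was most accurate at the previous step.
- The action is the model whose forecast is used now.
- The reward is the negative absolute error of that forecast.

With the default `gamma=0.0`, the update reduces to a contextual-bandit estimate. The cited works may define states and rewards differently.

```python
import numpy as np


def q_learning_model_selection(forecasts, actual, alpha=0.1, gamma=0.0, epsilon=0.1, seed=0):
    """Learn which of M candidate forecasters to trust at each step.

    forecasts: array (T, M) of predictions from M models; actual: array (T,).
    State  = index of the model that was most accurate at the previous step.
    Action = index of the model whose forecast is used at the current step.
    Reward = negative absolute error of the chosen forecast.
    """
    rng = np.random.default_rng(seed)
    T, M = forecasts.shape
    Q = np.zeros((M, M))
    state = 0
    chosen = np.empty(T, dtype=int)
    for t in range(T):
        # Epsilon-greedy choice, made before the true load at step t is known.
        if rng.random() < epsilon:
            action = int(rng.integers(M))
        else:
            action = int(np.argmax(Q[state]))
        chosen[t] = action
        # The true load arrives: score every model and update Q.
        errors = np.abs(forecasts[t] - actual[t])
        reward = -errors[action]
        next_state = int(np.argmin(errors))
        Q[state, action] += alpha * (reward + gamma * Q[next_state].max() - Q[state, action])
        state = next_state
    return Q, chosen


# Hypothetical data: model 0 is biased by +5, model 1 by only +0.1.
t = np.arange(500)
actual = 100 + 10 * np.sin(2 * np.pi * t / 24)
forecasts = np.column_stack([actual + 5.0, actual + 0.1])
Q, chosen = q_learning_model_selection(forecasts, actual)
```

As in the works surveyed above, RL here does not forecast the load itself. It only decides which underlying forecaster to trust.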

2.4 Discussion of Forecasting Methods

Since this review discusses each strategy with an eye on real-world applications, it is critical to point out the following:

-

It is difficult, if not impossible, to identify the “most accurate” or “fastest” approach [65, 71, 86]. Evaluations of prediction results reveal different trade-offs, depending on which performance criteria the chosen metrics prioritize [86].

For example, a variety of evaluation metrics have been proposed for load forecasting results. These include mean absolute error (MAE), root mean square error (RMSE), mean absolute percentage error (MAPE), symmetric mean absolute percentage error (SMAPE), R², and Theil’s inequality coefficient (TIC) [27, 103, 104]. MAPE has been found to offer a multi-dimensional perspective on predictive performance. However, it can sometimes misrepresent the results, especially when the length of the data series is not specified [65].

-

Various prediction horizons require different considerations to trade-off accuracy, flexibility, and reliability.

As mentioned before, load forecasting methods can be categorized by application scenario into short-, medium-, and long-term forecasting [34,35,36,37,38,39,40]. Short-term forecasting generally covers horizons of up to 72 hours. It can help compensate for time-lagged measurements and directly supports system operation [37, 38, 40]. However, its implementation is often limited by sensing noise, computation speed, human behavior, and over- or under-training problems [37]. Medium-term forecasting covers horizons from one week to several months or up to one year, while long-term forecasting covers horizons longer than one year [40]. These longer horizons matter more for long-term planning, economic growth, policy adjustment, system capacity determination, and maintenance, but they suffer from limited prediction accuracy [37, 40, 105]. Each forecasting approach has its own application scenarios and advantages. However, a long-standing problem in load forecasting is that no consensus classification criteria based on the predictive horizon have yet emerged [34,35,36]. For example, some previous work further divided the short term into very short term and short term [35]. That work defined very short-term as less than one day, short-term as between one day and one week, medium-term as less than one year, and long-term as longer than one year. To facilitate the discussion of each strategy's applications, this work classifies predictive horizons into short-term (less than one day) and long-term (more than one day) [34,35,36]. Table 2 summarizes the features of each forecasting approach.
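The evaluation metrics listed in this section can be computed as in the minimal NumPy sketch below, which uses hypothetical data. Several definitions of SMAPE circulate in the literature, so the variant chosen here, noted in the comments, is an assumption. For TIC, the sketch uses Theil's U1 form.

```python
import numpy as np


def forecast_metrics(y_true, y_pred):
    """Common point-forecast error metrics (MAPE and SMAPE in percent)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    rmse = np.sqrt(np.mean(err ** 2))
    return {
        "MAE": np.mean(np.abs(err)),
        "RMSE": rmse,
        # MAPE is undefined when any actual value is zero and inflates near zero.
        "MAPE": 100 * np.mean(np.abs(err / y_true)),
        # SMAPE variant using the mean of |y| and |y_hat| as the denominator.
        "SMAPE": 100 * np.mean(2 * np.abs(err) / (np.abs(y_true) + np.abs(y_pred))),
        "R2": 1 - np.sum(err ** 2) / np.sum((y_true - y_true.mean()) ** 2),
        # Theil's inequality coefficient (U1 form), bounded between 0 and 1.
        "TIC": rmse / (np.sqrt(np.mean(y_true ** 2)) + np.sqrt(np.mean(y_pred ** 2))),
    }


# Hypothetical actual and forecast loads (kW).
m = forecast_metrics([100, 200, 300, 400], [110, 190, 330, 400])
```

Reporting several of these metrics side by side, rather than a single score, reflects the point made above that no one metric captures every performance criterion.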

Table 2 compares the advantages and limitations of DL-based, ML-based, and statistical learning-based methods and guides the selection of load forecasting models. A statistical learning-based approach is recommended for forecasting scenarios that require easy implementation, low computational cost, and real-time processing, regardless of the prediction horizon. However, depending on the features of the target data, additional optimization may be needed to produce accurate forecasts [46, 53, 56]. ML-based methods are usually recommended for long-term prediction. They generally require longer training times than statistical learning-based methods, but they still offer a reasonable trade-off between computational complexity and prediction accuracy [54, 56].

DL-based methods are recommended for short-term prediction when accuracy is the priority. They can automatically capture more data features and yield more accurate predictions [81]. The interpretability of DL has been questioned, but recent research on explainable AI and attention mechanisms could improve the interpretability of DL-based load forecasting methods [38]. At the same time, DL methods generally require more training data than ML approaches, which can be another limitation [69]. It is also worth noting that electrical load data over long periods are complex, so the features captured by DL-based methods can be less relevant and thus less effective for prediction [84]. To improve the application of DL approaches in power systems, some hybrid models use DL-based methods to extract data features and ML-based methods for prediction. These hybrids have also achieved satisfactory results [38].

However, these observations may not always hold and may vary with the data and implementation. For instance, Hosein et al. found that DL-based methods often require longer computation times than other models, yet they can still be preferable, even when trained for only a few epochs [36]. Additionally, RL offers a promising strategy for improving the performance of ML/DL-based forecasting approaches [106]. For load forecasting with large-scale or multi-feature training datasets, RL can serve as a step-by-step feature extraction mechanism that dynamically improves forecasting accuracy [100].

3 Anomaly Detection

Electricity providers aim to meet the electricity demand while facing both technical and non-technical electricity losses. Technical losses result from the physical limitations of a power system, such as resistance, while non-technical losses arise from electricity theft and leakage, leading to high costs and potential security breaches. It is essential to avoid non-technical losses. The differences between technical and non-technical losses are presented in Table 3, as reported in [107].

Anomaly detection is a crucial aspect of smart grids, particularly on the demand side. To identify abnormal power usage effectively, various data science techniques are used to train anomaly detection algorithms [108]. Depending on the feature extraction method used, candidate data can be classified either as “general anomalies” or into different anomaly types [109]. Anomaly detection can provide feedback on energy consumption for problem diagnosis, which benefits energy suppliers and ecosystems [23, 110,111,112]. Electrical load anomaly detection methods can be categorized into two main groups: regression model-based and classification model-based [22]. Figure 6 illustrates the detection mechanism of each strategy.

3.1 Regression-Model-Based Anomaly Detection

Regression model-based anomaly detection relies on the principles of forecasting. A forecasting model is fitted to historical data and used to predict the load for future time slots. The predictions are then compared with the realized data, and large deviations between the two are flagged as anomalies. Zhang et al. used a linear regression model to fit and predict electrical load, considering the effects of environmental factors on residential energy consumption [68]. The linear regression model's prediction serves as a baseline, and any realized data points that deviate substantially from the baseline are identified as anomalous. From an application perspective, however, there are two concerns. First, individual differences in temperature sensitivity can restrict the applicability of this approach. Second, residential load series are complex, so linear regression may capture only some of the essential features, resulting in false detections. Chou et al. and Hollingsworth et al. developed hybrid prediction model-based anomaly detectors that capture the nonlinearity in electrical load series [113, 114]. Both studies employed ARIMA to model the linear component of the load. They then incorporated nonlinear ML models, such as ANN and LSTM, to compensate for ARIMA's residual errors. Consistent with the forecasting models discussed earlier, the hybrid models characterize load time series better. However, although hybrid methods can improve load forecasting performance, they do not guarantee perfect anomaly detection. First, even a hybrid model may not forecast future load accurately enough. Second, because predictions are imperfect, the two-sigma rule may not detect all anomalous data effectively. This rule flags observations that deviate from the prediction by more than two standard deviations.
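The baseline-and-threshold procedure described above can be sketched with an ordinary least-squares fit of load on temperature. Readings whose residual exceeds two standard deviations of the training residuals are flagged. The data are synthetic, and the single temperature regressor is an assumption; Zhang et al. [68] may use different environmental factors.

```python
import numpy as np


def design(temp):
    """Design matrix [1, temperature] for a linear load-temperature baseline."""
    return np.column_stack([np.ones(len(temp)), temp])


def fit_baseline(temp, load):
    """Least-squares fit; also returns the std of the training residuals."""
    beta, *_ = np.linalg.lstsq(design(temp), load, rcond=None)
    residual_sd = np.std(load - design(temp) @ beta)
    return beta, residual_sd


def two_sigma_flags(temp, load, beta, residual_sd):
    """Flag readings that deviate from the regression baseline by > 2 sigma."""
    return np.abs(load - design(temp) @ beta) > 2 * residual_sd


# Hypothetical history: load rises linearly with temperature, plus noise.
rng = np.random.default_rng(0)
hist_temp = rng.uniform(10, 30, size=200)
hist_load = 5.0 + 2.0 * hist_temp + rng.normal(0.0, 1.0, size=200)
beta, sd = fit_baseline(hist_temp, hist_load)

# New readings: the second is far above its baseline (about 45 kW expected).
new_temp = np.array([15.0, 20.0, 25.0])
new_load = np.array([35.2, 60.0, 54.8])
flags = two_sigma_flags(new_temp, new_load, beta, sd)
```

Both concerns raised above show up directly in this sketch. A household whose true temperature response is nonlinear would inflate `sd` and mask anomalies, and any forecast error widens the gap the two-sigma rule must tolerate.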

To address unsatisfactory detection, Luo et al. developed a dynamic anomaly detector [115]. Instead of applying a fixed or preset threshold to the difference between predictions and real data, it employs an active adaptive threshold. This dynamic detection rule enables the design to adapt to time-varying anomalies. However, the detector still depends heavily on the forecast results. To further improve anomaly detection, Fenza et al. developed a drift-aware method for detecting anomalies in smart grids [116]. An LSTM extracts historical data features and forecasts the load. The detection algorithm then calculates the trend in the prediction error to detect consumption anomalies. Wang et al., Xu et al., and Cui et al. have recently proposed other novel approaches to improve regression-based detection [20, 23, 117, 118].
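An adaptive threshold of the kind described for [115] can be sketched as a rolling rule over prediction errors. In this hypothetical version, the threshold at each step is the mean plus `k` standard deviations of the most recent errors judged normal, so the threshold tracks slowly changing behavior. The window size, `k`, and the rule itself are illustrative assumptions, not the cited method.

```python
from collections import deque

import numpy as np


def adaptive_threshold_flags(errors, window=24, k=3.0, min_history=5):
    """Flag prediction errors that exceed mean + k * std of recent normal errors."""
    recent = deque(maxlen=window)
    flags = []
    for e in errors:
        if len(recent) >= min_history:
            hist = np.asarray(recent)
            is_anomaly = e > hist.mean() + k * hist.std()
        else:
            is_anomaly = False  # not enough history to set a threshold yet
        flags.append(bool(is_anomaly))
        if not is_anomaly:
            recent.append(e)  # only normal errors update the threshold
    return np.array(flags)


# Hypothetical absolute forecast errors (kW); the spike at index 6 is anomalous.
errors = [1.0, 1.2, 0.9, 1.1, 1.0, 1.05, 8.0, 1.0, 0.95]
flags = adaptive_threshold_flags(errors)
```

Excluding flagged points from the window keeps a single large anomaly from inflating the threshold. The detector still inherits any systematic bias of the underlying forecast.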

Wang et al. proposed three improved regression model-based detection methods to overcome the intrinsic limitations of regression-based approaches [20, 23, 71]. The Bayesian information criterion is employed to avoid over- or under-fitting during real-time prediction and to improve predictive performance. An independent detection mechanism then compares the estimated next-step load with the observed real-time load to screen out anomalies. The test results show that the hybrid approach outperformed the alternative ML- and DL-based approaches considered, in terms of both prediction and anomaly detection. Wang et al. also proposed a novel prediction result-based detection strategy that outperforms traditional ML/DL-based detection methods and reduces training costs [71]. Using the estimated next-step load as a reference, the approach dynamically evaluates real-time power consumption and determines whether it is consistent with historical power usage habits. The method offers accurate anomaly detection without relying on labeled data, which is beneficial when labeled data are not readily available. Cui et al. also used the outputs of predictive models as baselines for anomaly detection [117]. However, rather than simply comparing observations against the predictions, they employed a supervised learning-based classifier to perform the detection, improving accuracy and enhancing forecasting robustness. Xu et al. developed an RNN-based predictor using quantile regression and Z-scores to detect anomalies [118]. These new approaches employ ML to improve prediction and detection within the regression-based framework. However, as the prediction and detection structures become more complex, the simplicity of regression-based detection is compromised.

3.2 Classification Model-Based Anomaly Detection

In addition to regression model-based anomaly detectors, classification model-based anomaly detectors have also been widely used [23]. Classification-based anomaly detectors can be further divided into supervised learning-based, unsupervised learning-based, and semi-supervised learning-based methods.

3.2.1 Supervised Learning-Based Methods

Various supervised learning-based models are used for anomaly detection, among which SVM has gained popularity due to its good performance in large-scale systems [119]. Since each kernel is non-parametric and operates locally, the same functional form need not apply to all data, which reduces computational costs [119, 120]. Nagi et al., Jokar et al., and Depuru et al. designed different SVM-based anomaly detectors for smart grids [120,121,122]. Nagi et al. used a feature selection/extraction function to process raw data and trained an SVM to determine data correctness, resulting in improved detection accuracy [120]. Jokar et al. developed an SVM-based anomaly detector to identify electricity theft in a grid with advanced metering infrastructure [122]. They employed K-means to cluster the training data into groups, with the number of clusters determined by the silhouette coefficient. The clustered datasets were then used to train an SVM to identify abnormal samples, yielding higher anomaly detection accuracy. Depuru et al. developed an SVM-based electricity theft detection algorithm that combines SVM with a rule engine to divide customers into genuine electricity users and thieves [121]. The classification results of the original SVM seem suboptimal, but the hybrid SVM methods effectively detected smart grid anomalies.

Supervised learning-based methods such as SVM have shown good performance in large-scale systems owing to their ability to handle high-dimensional data and nonlinear relationships (Ozay et al. [119]). However, the performance of these models can vary with system size and the class distribution of the data. Pinceti et al. [123] compared the effectiveness of KNN, SVM, and replicator neural networks in detecting anomalies in real-world data and found that KNN performed well. However, their test data suffered from class imbalance, which may have contributed to the superior performance of this simple nearest-neighbor method. In contrast, Ozay et al. [119] tested SVM and KNN on systems of various sizes. They found that KNN outperformed SVM in small systems but performed worse in large ones due to its sensitivity to class imbalance. Hidden Markov models have also been widely used in anomaly detection. Makonin et al. [124] proposed an improved non-intrusive load monitoring method using a novel variant of the Viterbi algorithm, the sparse Viterbi algorithm, which can efficiently handle large sparse matrices. The proposed method disaggregated a model with many super-states while preserving between-load dependencies in real time, leading to better load monitoring.
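A supervised KNN classifier and its sensitivity to class imbalance can be illustrated in a few lines of NumPy. The two-dimensional features and labels below are hypothetical, for instance normalized daily mean and peak consumption, with label 1 marking an anomaly.

```python
import numpy as np


def knn_predict(X_train, y_train, X_test, k=3):
    """Binary KNN: label each test point by majority vote of its k nearest
    training points (Euclidean distance)."""
    d = np.linalg.norm(X_test[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    votes = y_train[nearest]  # (n_test, k) labels of the nearest neighbors
    return (votes.mean(axis=1) > 0.5).astype(int)


# Five normal profiles (label 0) and three anomalous ones (label 1).
X_train = np.array([[1.0, 2.0], [1.1, 2.1], [0.9, 1.9], [1.0, 2.2], [1.2, 2.0],
                    [3.0, 6.0], [3.2, 6.1], [2.9, 5.8]])
y_train = np.array([0, 0, 0, 0, 0, 1, 1, 1])
X_test = np.array([[1.05, 2.05], [3.1, 6.0]])

pred_k3 = knn_predict(X_train, y_train, X_test, k=3)
# When k exceeds twice the minority count, the majority class outvotes
# the anomalies even for a test point sitting among them.
pred_k7 = knn_predict(X_train, y_train, X_test, k=7)
```

With `k=3`, the second test point is correctly flagged. With `k=7`, the same point is labeled normal, which is one concrete way the imbalance sensitivity noted above can arise.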

DL-based anomaly detectors are often reported to achieve more accurate detection than ML-based methods. Devlin et al. developed a feed-forward neural network-based load monitor that used raw meter data for time-series decomposition and detection. It detected anomalies with an average precision of 76.3% [125]. To examine the advantages of DL-based methods, Buzau et al. and He et al. developed two novel electrical load anomaly detectors [126, 127]. Buzau et al. used an LSTM and MLP-based classifier that outperformed other methods, including SVM, LR, XGBoost tree, MLP, and CNN. He et al. developed a conditional deep belief network-based electricity theft detector that can detect anomalies in real time and outperforms ANN- and SVM-based detectors [127]. Beyond detection accuracy, another essential aspect of evaluating detection methods is robustness [128]. A robust model should consistently provide accurate results under different circumstances [129]. In this regard, Rolnick et al. suggested that DL-based supervised learning methods are more robust to label noise than ML-based supervised classification methods [128]. Attention mechanisms have also been introduced to improve the performance of DL methods in anomaly detection. Javed et al. proposed an anomaly detection strategy that combines an attention mechanism with an LSTM-based CNN [130]. It identifies erroneous and anomalous readings caused by errors or attacks in Connected-and-Automated Vehicles. According to the test results, the proposed approach significantly improved the detection rate compared with alternative methods based on the Kalman filter and the CNN-Kalman filter.

3.2.2 Unsupervised Learning-Based Methods

Supervised learning methods can be effective in anomaly detection, but their reliance on high-quality labeled data can be expensive and limit their practicality [22]. To address this challenge, unsupervised learning methods offer a promising alternative that does not require labeled data and can be more cost-effective [131]. One commonly used approach is the autoencoder, which compresses input data into latent variables through an encoder, then reconstructs the data using a decoder [132, 133]. Anomaly scores are calculated for each observation, and the ones exceeding a threshold are classified as anomalies.
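The encode, reconstruct, and threshold procedure can be sketched without a deep learning framework by using a linear autoencoder. With squared reconstruction loss, its optimal solution spans the same subspace as principal component analysis, so the top singular vectors can serve as encoder and decoder weights. The daily profiles, the single latent dimension, and the 99th-percentile threshold are illustrative assumptions. Practical detectors typically use nonlinear, trained autoencoders.

```python
import numpy as np


def fit_linear_autoencoder(X: np.ndarray, n_latent: int):
    """Return (mean, W): W's columns are the top principal directions, which a
    linear autoencoder with squared loss spans at its optimum."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_latent].T  # W has shape (n_features, n_latent)


def reconstruction_error(X: np.ndarray, mean: np.ndarray, W: np.ndarray) -> np.ndarray:
    Z = (X - mean) @ W        # encoder: compress to latent variables
    X_hat = Z @ W.T + mean    # decoder: reconstruct the input
    return np.linalg.norm(X - X_hat, axis=1)  # anomaly score per observation


# Hypothetical training set: 100 daily 24-hour profiles sharing one shape.
rng = np.random.default_rng(0)
hours = np.arange(24)
base = 1.0 + 0.5 * np.sin(2 * np.pi * (hours - 6) / 24)
train = rng.uniform(0.8, 1.2, size=(100, 1)) * base + rng.normal(0.0, 0.01, size=(100, 24))

mean, W = fit_linear_autoencoder(train, n_latent=1)
threshold = np.percentile(reconstruction_error(train, mean, W), 99)

odd_day = base.copy()
odd_day[2] += 1.0  # hypothetical unexpected consumption at 2 a.m.
scores = reconstruction_error(np.vstack([base, odd_day]), mean, W)
flags = scores > threshold
```

No labels are used at any point. The threshold comes solely from the distribution of reconstruction errors on the training profiles.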

Several studies have demonstrated the effectiveness of unsupervised learning-based anomaly detection. Fan et al. proposed a method that combined spectral density estimation with decision tree classification [131]. After feature extraction, an autoencoder is used to calculate anomaly scores, and observations with scores above a preset threshold are identified as anomalies. Zheng et al. developed an autoencoder-based model that used maximum likelihood estimation to detect anomalies [111]. The model estimates the mean and covariance of the reconstruction error vectors and detects anomalies with reference to the resulting distribution of reconstruction errors.

Other studies have used unsupervised learning methods for specific applications. For instance, Zhao et al. used graph signal processing to process power consumption data for low-rate load monitoring purposes [134]. Meanwhile, Hussain et al. developed an electricity theft detection method that employed statistical feature extraction, robust principal component analysis, and outlier removal clustering [111].

Unsupervised learning-based anomaly detection methods can achieve accurate results at low labeling cost, but they have some limitations. These include low computational efficiency on large datasets, sensitivity to feature extraction, a lack of ground-truth data for evaluating results, and weak interpretability [31, 131, 135, 136]. Nonetheless, they offer a cost-effective alternative to supervised methods, and their effectiveness has been demonstrated in various applications.

3.2.3 Semi-Supervised Learning-Based Methods

Semi-supervised classification is a type of ML that combines both supervised and unsupervised learning methods. It aims to reduce the labeling cost of the supervised classification and improve the interpretability of the unsupervised classification. This approach is particularly useful when external information is scarce [137]. In a training dataset, some data are labeled, and the semi-supervised method can identify unlabeled classes associated with labeled patterns to determine whether the unlabeled data belong to such clusters [138, 139].

SVM is one of the most commonly used machine learning models in semi-supervised learning-based load detectors. Yan et al. developed a semi-supervised SVM-based anomaly detector to detect faults in air handling units [140]. During semi-supervised learning, the classifier iteratively inserts new test samples and compares its classification accuracy with a preset confidence threshold. If the accuracy exceeds the threshold, the samples are added and the training set grows. Wang et al. proposed a hybrid semi-supervised learning framework that employs SVM and K-means to achieve detection at low labeling cost [22]. The method first uses K-means for data preprocessing, and an SVM-based classifier then identifies the resulting patterns. Finally, the cross-entropy loss function is used to evaluate the SVM's classification results. The loss is calculated before and after new samples are introduced into the dataset. Similar to Yan et al.’s work [140], if the classification accuracy exceeds a threshold, the test data are adopted into the training pool.
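The iterative rule described above can be sketched as a self-training loop, in which new samples join the training pool only when the classifier is sufficiently confident. To keep the example dependency-free, a nearest-centroid classifier with softmax confidence replaces the SVM. The 0.9 confidence threshold and the data are hypothetical. The cited works compare accuracy or cross-entropy loss against their own thresholds.

```python
import numpy as np


def centroids(X, y, n_classes):
    return np.array([X[y == c].mean(axis=0) for c in range(n_classes)])


def predict_with_confidence(C, X):
    """Nearest-centroid labels plus a softmax-over-negative-distance confidence."""
    d = np.linalg.norm(X[:, None, :] - C[None, :, :], axis=2)
    logits = -d - (-d).max(axis=1, keepdims=True)
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)
    return p.argmax(axis=1), p.max(axis=1)


def self_train(X_lab, y_lab, X_unlab, n_classes, threshold=0.9, max_iter=10):
    """Repeatedly pseudo-label unlabeled samples and keep only confident ones."""
    pool = X_unlab.copy()
    for _ in range(max_iter):
        if len(pool) == 0:
            break
        pred, conf = predict_with_confidence(centroids(X_lab, y_lab, n_classes), pool)
        accept = conf >= threshold
        if not accept.any():
            break  # nothing is confident enough; stop growing the training pool
        X_lab = np.vstack([X_lab, pool[accept]])
        y_lab = np.concatenate([y_lab, pred[accept]])
        pool = pool[~accept]
    return X_lab, y_lab, pool


# Hypothetical features: two labeled samples per class plus three unlabeled ones.
X_lab = np.array([[0.0, 0.0], [0.2, 0.1], [5.0, 5.0], [5.1, 4.9]])
y_lab = np.array([0, 0, 1, 1])
X_unlab = np.array([[0.1, 0.3], [4.8, 5.2], [2.5, 2.5]])
X_new, y_new, remaining = self_train(X_lab, y_lab, X_unlab, n_classes=2)
```

The ambiguous point midway between the classes stays unlabeled. This guards against the performance degradation that admitting uncertain samples can cause.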

Iwayemi et al. developed a semi-supervised learning-based residential appliance annotator [135]. It creates two-dimensional feature vectors consisting of the dynamic time warping distance and the step change in an appliance's power consumption during an event. The Mahalanobis distance between these feature vectors is used to identify the boundaries of appliance groups. After all unlabeled data have been labeled, the semi-supervised learning algorithm completes the training. These hybrid models are shown to achieve a good trade-off between model performance and labeling costs.

DL methods can further enhance semi-supervised anomaly detection. Lu et al. developed a semi-supervised autoencoder-based load anomaly detector [141]. Their semi-supervised autoencoder generative model consists of an encoder, a decoder, a discriminator, and a classifier; the encoder and decoder are connected as an autoencoder that captures the features of the data. Yang et al. designed a temporal convolutional network (TCN)-based semi-supervised load monitoring method, evaluated its classification loss, and compared it with machine learning- and deep learning-based methods [142]. They found the TCN-based method to be practical and applicable to real-time detection tasks compared with alternative semi-supervised detection approaches.
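The core building block of a TCN is a causal, dilated one-dimensional convolution: the output at time t depends only on inputs at t, t - d, t - 2d, and so on, so the model never looks into the future, and stacking layers with growing dilation widens the receptive field exponentially. The sketch below shows only that building block in plain Python; it omits the residual connections, normalization, learned weights, and semi-supervised loss of a real TCN, and it is not Yang et al.'s architecture [142]. The kernels and dilations are arbitrary illustrative values.

```python
def causal_dilated_conv1d(x, kernel, dilation=1):
    """y[t] = sum_i kernel[i] * x[t - i*dilation]; inputs before t = 0 count as zero."""
    out = []
    for t in range(len(x)):
        acc = 0.0
        for i, w in enumerate(kernel):
            idx = t - i * dilation
            if idx >= 0:  # causal: only current and past samples are used
                acc += w * x[idx]
        out.append(acc)
    return out


def relu(values):
    return [max(0.0, v) for v in values]


def tcn_stack(x, kernels, dilations):
    """Simplified stack of causal dilated convolutions with ReLU (no residuals)."""
    h = list(x)
    for kernel, dilation in zip(kernels, dilations):
        h = relu(causal_dilated_conv1d(h, kernel, dilation))
    return h


def receptive_field(kernel_size, dilations):
    """Number of past samples (including the current one) that reach one output."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)
```

With a kernel size of 2 and dilations 1, 2, 4, 8, four layers already see 16 consecutive load readings, which is why TCNs can cover long histories cheaply.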

In addition to its relatively low labeling cost, semi-supervised learning is robust to data sparsity, which reduces the impact of data imbalance on classification [119]. In real-world applications, however, semi-supervised learning has some limitations. It relies on accurate prior knowledge of the relationship between the structures of labeled and unlabeled data [137, 140, 143], which limits its applicability. Furthermore, introducing new unlabeled samples into the training pool may degrade performance [144].

3.2.4 Data Imbalance and Optimization Strategies

Data imbalance is prevalent in real-world power consumption datasets: abnormal events occur relatively rarely, so abnormal data are sparsely distributed [122, 131]. This sparsity poses a challenge for neural network-based models, which are a fundamental component of many DL approaches [145]. Neural networks learn the features of each class by optimizing the weights and activations of each node during training [146], and this learning implicitly assumes that the training data are evenly distributed among all classes [131, 145, 146]. When a class is underrepresented, a neural network-based model may therefore perform suboptimally, particularly on that class.
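A toy calculation illustrates why an unweighted loss favours the majority class. Suppose, hypothetically, that 1% of samples are anomalous and the model can only output one constant anomaly probability p for every sample (a deliberate simplification, not a neural network). Minimizing the mean binary cross-entropy over p lands at the anomaly fraction, 0.01, so thresholding at 0.5 labels everything normal: accuracy is 99% while not a single anomaly is detected.

```python
import math


def mean_bce_constant(labels, p):
    """Mean binary cross-entropy when the model outputs probability p for every sample."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    return -(n_pos * math.log(p) + n_neg * math.log(1.0 - p)) / len(labels)


def best_constant_probability(labels, steps=1000):
    """Grid-search the constant output that minimizes the unweighted loss."""
    grid = [i / steps for i in range(1, steps)]  # open interval (0, 1)
    return min(grid, key=lambda p: mean_bce_constant(labels, p))


labels = [0] * 990 + [1] * 10  # hypothetical dataset: 1% anomalies
p_star = best_constant_probability(labels)  # equals the anomaly fraction, 0.01
predictions = [1 if p_star >= 0.5 else 0 for _ in labels]
accuracy = sum(y == yhat for y, yhat in zip(labels, predictions)) / len(labels)
anomaly_recall = sum(y == 1 and yhat == 1 for y, yhat in zip(labels, predictions)) / sum(labels)
```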

Figure 7 illustrates a conceptual view of imbalanced classification, where grey nodes represent training data in Class 0 (the majority class), and red nodes represent Class 1 (the minority class). The ideal classification result is shown in Figure 7a. However, due to the significant difference in the number of data samples in the two classes, the neural network often fails to learn the features of Class 1, resulting in the classification result shown in Figure 7b. To address this issue, several strategies have been proposed to optimize the performance of imbalanced classification models.

a. Abnormal data generation

To address data imbalance, Jokar et al. developed an SVM-based anomaly detector that identifies electricity theft in a grid [122]. The method generates “fake data”, i.e., abnormal power consumption data, from benign data. Specifically, n samples are randomly selected from the benign dataset to form a new benign dataset x of similar size. Because electricity theft typically reduces the billed power consumption, the corresponding theft dataset y can be represented as y = a · x, where a is a preset parameter in [0, 1]. The choice of learning method applied to the augmented dataset does not matter, as the training procedure is identical. A limitation of this method, however, is that the distributions of all malicious samples are assumed to be consistent with those of the benign samples, which may not hold in all cases.
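The augmentation rule described above (theft data obtained by scaling benign profiles by a factor a in [0, 1]) can be sketched as follows. This is a minimal illustration assuming consumption profiles are lists of readings and a single fixed scaling factor; the function names are hypothetical, and the sketch covers only the scaling rule described in the text.

```python
import random


def generate_theft_samples(benign, n, a, seed=None):
    """Draw n benign profiles (x) and scale them by a to mimic under-reported usage (y)."""
    if not 0.0 <= a <= 1.0:
        raise ValueError("a must lie in [0, 1]")
    rng = random.Random(seed)
    x = rng.sample(benign, n)  # n profiles drawn without replacement
    y = [[a * v for v in profile] for profile in x]
    return x, y


def build_balanced_training_set(benign, n, a, seed=None):
    """Label sampled benign profiles 0 and their scaled copies 1, giving equal class sizes."""
    x, y = generate_theft_samples(benign, n, a, seed)
    return [(p, 0) for p in x] + [(p, 1) for p in y]
```

Any classifier can then be trained on the output of `build_balanced_training_set`; the sketch makes visible the limitation noted above, since every synthetic theft profile has exactly the shape of a benign one.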

This method has also been used in Wang et al.’s work [23]. Given the imbalanced nature of real-world power consumption datasets, they added such “fake data” to the training set to balance it, and their test results show that the model trained on the augmented data effectively screens out anomalies. Note, however, that this method requires prior knowledge of the types of anomalies to be detected and may not be applicable when the anomaly types are unknown or when new types emerge.

b. One-class classification

One-class classification is a special case of supervised learning-based detection that applies a binary rule: data are classified as abnormal if they cannot be assigned to the benign class [109, 117]. This approach has been shown to save labeling costs and improve the efficiency of supervised detectors [147]. Several studies have compared the detection performance of one-class and multi-class classification under training data imbalance. Nguyen et al. and Fu et al. showed that one-class methods perform better in such cases [148, 149], whereas Jokar et al. found the opposite: their one-class SVM failed to show promising results compared with multi-class classification [122]. There is therefore no clear-cut conclusion as to which method is better, although several studies demonstrate that one-class classification is promising for reducing labeling costs and addressing data imbalance.
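The one-class idea, fitting a model to benign data only and flagging whatever falls outside it, can be sketched without an SVM. The detector below is a hypothetical, deliberately simple stand-in for a one-class SVM: it standardizes each feature using benign statistics, scores samples by their distance from the benign mean, and places the decision boundary at an assumed quantile (95%) of the benign training scores.

```python
import math


class CentroidOneClassDetector:
    """Hypothetical minimal one-class detector trained on benign samples only."""

    def __init__(self, quantile=0.95):
        self.quantile = quantile

    def fit(self, benign):
        dims, n = len(benign[0]), len(benign)
        self.mean = [sum(p[i] for p in benign) / n for i in range(dims)]
        # Per-feature standard deviation; fall back to 1.0 for constant features.
        self.std = [
            math.sqrt(sum((p[i] - self.mean[i]) ** 2 for p in benign) / n) or 1.0
            for i in range(dims)
        ]
        scores = sorted(self._score(p) for p in benign)
        idx = min(len(scores) - 1, int(self.quantile * len(scores)))
        self.threshold = scores[idx]  # boundary of the benign region
        return self

    def _score(self, x):
        """Standardized Euclidean distance from the benign mean."""
        return math.sqrt(sum(((x[i] - self.mean[i]) / self.std[i]) ** 2 for i in range(len(x))))

    def predict(self, x):
        """1 = abnormal (outside the benign boundary), 0 = benign."""
        return 1 if self._score(x) > self.threshold else 0
```

No abnormal labels are needed for training, which is the labeling-cost advantage noted above; the price is that the boundary depends entirely on how representative the benign data are.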

c. Class-weight-based optimization

To further address the data imbalance problem, various optimization strategies have been proposed. One such strategy is the two-step optimization approach, which assigns optimal class weights during learning. This approach has been used in many works to improve the performance of classification models against data imbalance [150, 151].

The two-step optimization approach involves two main steps. In the first step, a data survey is conducted to determine which class is the minority class and the proportion of the minority class. In the second step, a proper class weight is set for the minority class, and the classification model can weigh the class more heavily during the training phase. This way, the impact of data imbalance on training can be reduced, and the model can be optimized for better performance [145, 151].
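The two steps can be sketched as follows. The weighting rule n / (k · count_c), where n is the number of samples and k the number of classes, is one common choice (it is the rule behind scikit-learn's `class_weight="balanced"` option); the cited works may set their weights differently. The weighted binary cross-entropy shows how the weights enter the training loss.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """Step 1: survey class frequencies. Step 2: weight each class by n / (k * count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}


def weighted_bce(y_true, p_pred, weights, eps=1e-12):
    """Mean binary cross-entropy with each sample's loss scaled by its class weight."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
        total += weights[y] * loss
    return total / len(y_true)
```

With 90 normal and 10 abnormal samples, the weights are about 0.56 and 5.0, so each class contributes the same total weight (50) to the loss, and the minority class can no longer be ignored at no cost.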

Although the two-step optimization approach can effectively address the data imbalance problem, it is important to note that the optimization process can lead to extra training time and computational costs. Therefore, the trade-off between the improved performance and increased training time and computational costs should be carefully considered when applying this approach.

d. Over- or under-sampling

This approach addresses imbalanced data through data sampling techniques [152]. By randomly over-sampling the minority class or under-sampling the majority class, the number of samples in each class can be controlled so that models are trained on a more balanced dataset [153]. Although this approach has not yet been applied to smart grid anomaly detection, Huan et al. previously proposed an under-sampling-based approach for screening abnormal traffic in network management [154]. They set the number of clusters in the normal class equal to the number of abnormal samples and retained the samples closest to each cluster center, thereby under-sampling the majority class. The approach uses clustering to avoid the issues associated with oversampling and provides a novel way of effectively selecting samples from the majority class.
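Both sampling strategies can be sketched in plain Python. `random_oversample` duplicates randomly chosen minority samples, and `cluster_undersample` follows the cluster-based idea attributed to Huan et al. [154]: cluster the majority class into as many clusters as there are minority samples and keep the sample nearest each cluster center. The small k-means routine, its fixed iteration count, and all names are illustrative assumptions rather than the authors' code.

```python
import math
import random


def random_oversample(minority, target_size, seed=None):
    """Duplicate randomly chosen minority samples until `target_size` is reached."""
    rng = random.Random(seed)
    extra = [rng.choice(minority) for _ in range(target_size - len(minority))]
    return list(minority) + extra


def _assign(points, centers):
    """Assign each point to its nearest center."""
    clusters = [[] for _ in centers]
    for p in points:
        j = min(range(len(centers)), key=lambda c: math.dist(p, centers[c]))
        clusters[j].append(p)
    return clusters


def kmeans(points, k, iters=20, seed=None):
    """Plain Lloyd's k-means with random initial centers drawn from the data."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    for _ in range(iters):
        clusters = _assign(points, centers)
        centers = [
            tuple(sum(v) / len(cl) for v in zip(*cl)) if cl else centers[j]
            for j, cl in enumerate(clusters)
        ]
    return centers, _assign(points, centers)


def cluster_undersample(majority, n_minority, seed=None):
    """Keep one representative (the sample nearest the center) per majority cluster."""
    centers, clusters = kmeans(majority, n_minority, seed=seed)
    kept = []
    for center, cl in zip(centers, clusters):
        if cl:  # an empty cluster contributes no sample
            kept.append(min(cl, key=lambda p: math.dist(p, center)))
    return kept
```

If a cluster ends up empty, no sample is kept for it, so the reduced majority class can be slightly smaller than the requested size.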

3.3 Discussion of Anomaly Detection Methods

We have presented many anomaly detection methods in the previous sections. Each comes with its unique advantages and limitations, making them suitable for different scenarios. To assist in selecting the appropriate anomaly detection method for smart grid implementation, we summarize the advantages and limitations of these methods in Table 4.

As with load forecasting, various evaluation metrics have been proposed for assessing the performance of anomaly detection methods [155]. Because anomalies are rare in real-world datasets, however, there is ongoing debate about the most appropriate evaluation metric. Some studies suggest that the G-mean score provides a more balanced interpretation of classification performance, while others argue that the Matthews correlation coefficient (MCC) is more suitable [156]. The choice of metric depends on the specific application and the priorities of the stakeholders involved [65], so singling out a “best” metric for anomaly detection is impractical [155].
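For reference, both metrics can be computed from the binary confusion matrix, with anomalies as the positive class: G-mean = sqrt(TPR × TNR), and MCC = (TP·TN - FP·FN) / sqrt((TP+FP)(TP+FN)(TN+FP)(TN+FN)). The sketch below implements these standard definitions; returning 0 when a denominator vanishes is a common convention, assumed here.

```python
import math


def confusion(y_true, y_pred):
    """Counts (TP, TN, FP, FN) with 1 = anomaly and 0 = normal."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def g_mean(tp, tn, fp, fn):
    """Geometric mean of sensitivity (TPR) and specificity (TNR)."""
    tpr = tp / (tp + fn) if tp + fn else 0.0
    tnr = tn / (tn + fp) if tn + fp else 0.0
    return math.sqrt(tpr * tnr)


def mcc(tp, tn, fp, fn):
    """Matthews correlation coefficient; 0 by convention when undefined."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0
```

For a hypothetical detector that finds 8 of 10 anomalies and raises 5 false alarms among 90 normal samples, G-mean ≈ 0.87 and MCC ≈ 0.66, whereas a detector that labels everything normal reaches 90% accuracy but scores 0 on both metrics.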

In this work, we aim to evaluate the performance of each detection strategy from the perspectives of both ML/DL and real-world implementation.

The regression-based approach is useful because it provides both load forecasting and anomaly detection within a single framework. However, although it saves training data and has a simple detection mechanism, its detection accuracy is limited: it lacks an independent mechanism for determining abnormal data, so its decisions rely heavily on the prediction outcomes [23]. Moreover, it may not be suitable for complex power systems with unpredictable loads.
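The sketch below shows the basic regression-based scheme: forecast each point, compute the residual, and flag residuals that are unusually large. The moving-average forecaster and the median/MAD threshold (k = 3) are simple stand-ins chosen for illustration, not the forecasting models used in the cited works.

```python
import statistics


def moving_average_forecast(series, window):
    """One-step-ahead forecasts for t = window..len-1 from the previous `window` values."""
    return [statistics.fmean(series[t - window:t]) for t in range(window, len(series))]


def residual_anomalies(series, window=3, k=3.0):
    """Flag t where |residual - median| > k * 1.4826 * MAD (a robust z-score rule)."""
    times = range(window, len(series))
    forecasts = moving_average_forecast(series, window)
    residuals = [series[t] - f for t, f in zip(times, forecasts)]
    med = statistics.median(residuals)
    mad = statistics.median(abs(r - med) for r in residuals)
    scale = 1.4826 * mad if mad > 0 else 1e-9  # guard against a zero MAD
    return [t for t, r in zip(times, residuals) if abs(r - med) > k * scale]
```

On the sample series `[10, 10, 11, 10, 10, 11, 10, 30, 10, 11, 10, 10]`, the spike at index 7 is flagged, but because the spike also distorts the next three forecasts, indices 8 to 10 are flagged as well. This small example shows how the detector's output depends on forecast quality.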

Classification-based detection strategies offer a promising approach to identifying anomalies effectively, although each has its own limitations. The supervised learning-based method, for instance, can provide high detection accuracy but is limited by its data labeling cost [22]. Additionally, defining an anomaly against the natural variability of daily electricity usage can be challenging, which makes labeling quality a potential issue. Customer privacy must also be taken into account, since labeling requires a preliminary exploration of customer data.

Unsupervised learning-based approaches provide a labeling-free scheme and are robust to input noise [157, 158]. However, their applications are limited due to their high computational cost and weak interpretability [31, 131, 135, 136]. The semi-supervised learning approach strikes a balance between supervised and unsupervised learning approaches. However, it is important to note that the underlying assumption of semi-supervised learning is that all classes are separated clearly, which may make this approach unsuitable for some unknown datasets [137, 140, 143]. Additionally, as with supervised learning-based strategies, customer privacy issues are also involved in this approach.

Hyperdimensional computing (HDC) is a novel data-driven classification strategy recently introduced to the energy domain [155]. By mapping data into a high-dimensional space, HDC can learn the features of each class more efficiently. HDC is also hardware-friendly and can be implemented on a wide range of platforms, including low-power and embedded devices. Wang et al. proposed an HDC-based anomaly detection method, the first attempt to introduce this approach in the energy domain [155]. They compared it with various approaches, including ML models such as SVM, KNN, and AND, DL models such as LSTM and DNN, and class-weight-optimized variants of these detectors. Their results show that the HDC-based method not only provides accurate detection results but also reduces model optimization and pre-training costs. More importantly, it is efficient to train, saving considerable time when training on large-scale datasets. However, the HDC-based approach relies on supervised learning, so high data labeling costs may limit its application in some cases.
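To illustrate the mechanism, the following sketch implements a generic record-based HDC classifier: each feature gets a random bipolar identifier hypervector, each quantized value gets a level hypervector (neighbouring levels are similar), the two are bound by elementwise multiplication, the bound vectors are bundled by majority vote, and class prototypes are sums of training encodings compared by cosine similarity. The dimensionality (2,000), the number of levels, and the feature range [0, 1] are assumptions, and this is not the encoding used by Wang et al. [155].

```python
import math
import random


def random_hv(rng, d):
    """Random bipolar (+1/-1) hypervector."""
    return [rng.choice((-1, 1)) for _ in range(d)]


def bind(a, b):
    """Binding: elementwise product of two bipolar hypervectors."""
    return [x * y for x, y in zip(a, b)]


def bundle(hvs):
    """Bundling: elementwise majority vote; ties resolve to +1."""
    return [1 if s >= 0 else -1 for s in map(sum, zip(*hvs))]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class HDCClassifier:
    def __init__(self, n_features, n_levels=10, lo=0.0, hi=1.0, d=2000, seed=0):
        rng = random.Random(seed)
        self.lo, self.hi, self.n_levels = lo, hi, n_levels
        self.ids = [random_hv(rng, d) for _ in range(n_features)]
        # Level hypervectors: each level flips a fresh block of components, so
        # neighbouring levels are similar and the two extremes differ in about d/2 positions.
        order = list(range(d))
        rng.shuffle(order)
        flips = d // (2 * (n_levels - 1))
        self.levels = [random_hv(rng, d)]
        for i in range(1, n_levels):
            nxt = list(self.levels[-1])
            for idx in order[(i - 1) * flips:i * flips]:
                nxt[idx] = -nxt[idx]
            self.levels.append(nxt)
        self.prototypes = {}

    def _level(self, v):
        v = min(max(v, self.lo), self.hi)
        return round((v - self.lo) / (self.hi - self.lo) * (self.n_levels - 1))

    def encode(self, x):
        return bundle([bind(self.ids[i], self.levels[self._level(v)]) for i, v in enumerate(x)])

    def fit(self, X, y):
        # Each class prototype is the (non-binarized) sum of its training encodings.
        sums = {}
        for xi, yi in zip(X, y):
            h = self.encode(xi)
            acc = sums.setdefault(yi, [0] * len(h))
            for j, hj in enumerate(h):
                acc[j] += hj
        self.prototypes = sums
        return self

    def predict(self, x):
        h = self.encode(x)
        return max(self.prototypes, key=lambda c: cosine(h, self.prototypes[c]))
```

Training is a single pass that adds encodings together, with no gradient descent, which is consistent with the training-time advantage described above.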

4 Demand Response

Demand response has gained significant attention and has become an essential component of smart grids [159]. It is an effective way to manage demand by reducing system peak loads, enhancing system reliability, and delaying system upgrades [160, 161]. AI and data science technologies have provided an affordable solution for demand response, which has further propelled the development of power grids [159]. By learning from human power usage behavior, demand response can efficiently control the use of individual appliances, minimize user discomfort, increase the utilization of renewable energy, and reduce energy costs [162].

Demand response can be categorized into two types, incentive-based and price-based, as shown in Figure 8 [8, 160, 161, 163, 164, 165]. In incentive-based demand response, users receive incentives for adjusting their load profiles or allowing some control over their appliances. Depending on the planning period, this type includes direct load control, interruptible service, demand bidding, capacity services, emergency protection, and ancillary service markets [8]. Price-based demand response, on the other hand, varies electricity prices over time (e.g., hourly) to reflect the balance or mismatch between supply and demand, so that users can respond to prices by adjusting their load. This type includes critical-peak pricing, time-of-use pricing, real-time pricing, and peak load reduction credits [8, 166, 167].
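As a toy illustration of how a user might respond to a time-of-use price signal, the sketch below finds the cheapest start hour for a shiftable appliance. The tariff values, the 2 kW load, and the 3-hour run time are hypothetical, and the search does not wrap past midnight.

```python
def cheapest_start(prices, duration):
    """Return (start_hour, summed_price) for the cheapest contiguous run of `duration` hours."""
    if not 0 < duration <= len(prices):
        raise ValueError("duration must be between 1 and len(prices)")
    window_costs = [sum(prices[s:s + duration]) for s in range(len(prices) - duration + 1)]
    best = min(range(len(window_costs)), key=window_costs.__getitem__)
    return best, window_costs[best]


# Hypothetical time-of-use tariff ($/kWh) for hours 0-23:
# off-peak 0-6, mid-peak 7-16, peak 17-21, off-peak 22-23.
TOU_PRICES = [0.10] * 7 + [0.15] * 10 + [0.30] * 5 + [0.10] * 2

POWER_KW = 2.0  # hypothetical shiftable load
RUN_HOURS = 3

start, price_sum = cheapest_start(TOU_PRICES, RUN_HOURS)
cost = POWER_KW * price_sum  # energy cost in $ for the chosen window
```

Under this tariff, starting the appliance at hour 0 costs about $0.60, compared with about $1.80 if it ran during the 18:00 to 21:00 peak.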

Demand response plays an essential role in improving energy efficiency, increasing the utilization of renewable energy sources, and reducing emissions on the power consumption side [163, 168].

4.1 AI for Incentive-Based Demand Response