Abstract

Common theories of multiattribute preferential choice predict that people choose options that have on average better attribute values than alternative options. However, following an alternative memory-based view on preferences people might sometimes prefer options that are more similar to memorized options that were experienced positively in the past. In two incentivized preferential choice experiments (N = 32, N = 28), we empirically compare these theoretical accounts, finding support for the memory-based value theory. Computational modeling using predictive model comparison showed that only a few participants could be described by a model that uses sums of subjectively weighted attribute values when experience was available. Most participants’ choices resembled the predictions of the memory-based model, according to which preferences are based on the similarity between novel and old memorized options. Further, people whose experience consisted of direct sensory exposure, like tasting a portion of food, were also those with higher likelihoods of a memory-based process, compared to people whose exposure was indirect. These results highlight the central role of memory and experience in preferential choices and add to the growing evidence for memory and similarity-based processes in the domain of human preferences.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Imagine a person selecting a lightweight but expensive pair of running shoes. How do they arrive at this preference? A common psychological theory of preferences is multi-attribute value or multi-attribute utility theory (e.g. Keeney and Raiffa 1976). It explains preferences by a trade-off between attribute values; for example, a lighter weight of a shoe justifies a higher price (e.g. Dyer and Sarin, 1979; Keeney and Raiffa 1976; Krantz and Tversky 1971). People are assumed to have different preferences because of inter-individual differences in the importance of attributes (if the shoes’ weight is unimportant, it will not justify a high price) and the utility given to different attribute values. This account of human preferences is highly prominent and generally well supported (e.g. Galotti 1999; Ikemi 2005; Poortinga et al. 2003; Van Oel and Van den Berkhof 2013; Wu et al. 1988). We will empirically test this attribute-based approach against an alternative memory-based view on human preferences.

Memory-based approaches represent another family of multiattribute preference theories, which need not be inconsistent with multi-attribute value theory (Payne, Bettman, Schkade, Schwarz, and Gregory 1999; Slovic 1995). Memory-based approaches include attribute value updating with experience (Müller-Trede et al. 2015; range frequency theory, Parducci 1965; options as information theory, Sher and McKenzie 2014) or extrapolating from the evaluations or from value anchors in memory (Barkan et al. 2016). These memory-based approaches stress that experience and memory will systematically affect preferential evaluations. The present work focuses on memory-based preference theories that explain preferences based on a combination of the memorized attribute values and the subjective overall evaluation of previous options in memory (Gilboa and Schmeidler 1995, 1997, 2001; Gonzalez et al. 2003; Scheibehenne et al. 2015). These memory-based value theories suggest that people use a current option’s attribute values in combination with the attribute values and evaluation of options in memory and make a choice based on similarity. People should, for instance, prefer a new lightweight shoe over a heavyweight shoe after they have had good experiences with lightweight shoes, because the new shoe’s attribute values are similar to the experienced attribute values. Conversely, if people had memorized bad experiences with lightweight shoes, they would dislike a lightweight new shoe. The preferences regarding a new option that is very similar to a previous option’s attribute values should resemble the previous option’s good or bad subjective evaluation. Conversely, if new options are dissimilar to previously experienced options, the subjective evaluations of the options in memory should have little influence on the preference for the new option. This theory, which we will label memory-based value theory of multiattribute preferences, suggests that preferences are a function of the similarity to and the experienced subjective evaluations of memorized previous options.

The present article aims to test one instantiation of a memory-based value theory against the classic multi-attribute value theory. In the classic multi-attribute value theory, preferences are a function of the current option’s attribute values. In memory-based value theory, preferences depend on the attribute values and the overall evaluation of memorized options compared to the current option’s attribute values. The classic multi-attribute value theory, the associated psychological processes, and the elicitation of the subjective importance weights (Fischer 1995) have been studied extensively in psychology, marketing, and consumer research (for a review in marketing, Van Ittersum et al. 2007). However, comparably little attention has been devoted to the family of memory-based value theories (as already remarked by Weber and Johnson 2011). Therefore, we report two empirical tests of one specific instantiation of memory-based value theory in different consumer domains.

Similarity as a fundamental cognitive mechanism of decision-making

The similarity to memorized options, which is a key component of the memory-based value theory of preferences that we investigate, has received support across many psychological domains. Most of this work focusses on inferences, meaning situations in which decision makers receive external feedback, such as learning skills (Gonzalez et al. 2003), learning judgments (Juslin et al. 2008), or learning categorizations (Medin and Schaffer 1978; Nosofsky 1992; Nosofsky et al. 2014; Smith 2014). For example, the well-supported instance-based learning theory posits that the similarity to past action-situation pairs determines the next actions’ perceived utility (Gonzalez et al. 2003). Despite the empirical support for similarity-based processes in inferences, in the domain of preferences, in which due to the subjective nature of the decision no external feedback about performance exists, the application of similarity-based theories seems underrepresented compared to their prominent role in the domain of inferences.

Mechanisms underlying memory-based and multi-attribute value theory

The psychological mechanisms in multi-attribute value theory differ from those in memory-based value theory (see Table 1). One difference concerns the role of current versus memorized information. In multi-attribute value theory, the current options’ attribute values are what drives preferences without necessarily accessing experiences from memory. By contrast, in memory-based value theory, previously experienced options influence preferences. As such, memory-based value theory is path-dependent, meaning that if two people have experienced different options, their preference for the same new option may differ; and the theory consequently allows that the order in which options are experienced can change preferences for subsequent options. Memory-based value theory also requires the processing of not only the attribute values but also of the overall evaluation of memorized options, such as “how did I like past running shoes and how lightweight were these shoes?” In multi-attribute value theory, by contrast, people need to access the subjective importance of attributes “how important is the weight of running shoes?” Lastly, in memory-based value theory, the information is integrated into a psychological similarity by computing distances, whereas in multi-attribute value theory, information is integrated by a linear additive summation.

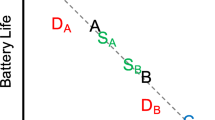

Memory-based and multi-attribute value theory’s predictions differ regarding attribute interactions and the complexity of the cognitive process. When preferences are an interaction-free linear function of attributes such as in Fig. 1a, both theories can explain such preferences (Fig. 1a, small panel “MAV” and “MEM”, the formal models used for the prediction are described in the next section). Multi-attribute value theory traditionally does not describe interactions between attributes (Keeney and Raiffa 1993; Keeny and Raiffa 1976). Memory-based value theory describes such interaction-free preferences if people have seen all options, and this principle extends beyond the shown example with two attributes. However, when the true preferences are not interaction-free, as in Fig. 1b, memory-based and multi-attribute value theory’s predictions differ unless the latter theory’s complexity is increased by accounting for interactions. In Fig. 1b, options are preferred for which either both attributes take low or both take high values, and the options with intermediate attribute values are less preferred. The classic multi-attribute value theory cannot explain these preferences unless explicit attribute interactions are added. Therefore, when fitted to the data, it predicts similar preferences across all attribute value combinations (Fig. 1b, small panel “MAV”). Memory-based value theory, by contrast, can explain these preferences well, given the decision-maker has experienced the attribute combinations (Fig. 1b, small panel “MEM”). This is because the memory-based theory determines preferences from the similarity between an option and the other options’ overall subjective evaluation, that is the preference. A memory-based value theory can thus explain many preferential patterns that multi-attribute value theory explains and above this can describe additional data patterns in the presence of attribute interactions without increasing the theory’s complexity.

Illustration one difference between memory-based theory value and multi-attribute value theory. MAV = multi-attribute value, MEM = memory-based value. Big panels show simulated preferences, small panels show model predictions of subjective values. Points are options in a two-attribute space; color shade represents preference strength. A Interaction-free preferences assuming equal weighting and corresponding predictions. B Preferences with interactions and corresponding predictions. In the case with interactions, the MAV model cannot represent the true preferences, unless the model complexity is increased by adding interaction terms. For the formal model description, please see the main text (below)

In the current work, we empirically compare memory-based value theory to multi-attribute value theory. We will use existing cognitive model implementations of the theories and test them in a preferential domain, adding to the growing evidence for memory’s central role in preference formation (e.g., Gluth et al. 2015; Mechera-Ostrovsky and Gluth 2018; Sher and McKenzie 2014). To this end, we conducted two multi-attribute choice experiments and used computational modeling to assess the theories’ predictive validity. The experimental task design reported here goes beyond earlier work (Bettman and Zins 1977; Scheibehenne et al. 2015), because several past experimental designs fully controlled the options’ attribute values but needed to use hypothetical rather than real choice options without the possibility to incentivize participants (e.g. Gershman et al. 2017; Sher and McKenzie 2014). Other designs used real consumer products with incentives, but without fully controlling the options’ attributes (e.g. Gluth et al. 2015). Both of the experimental designs used in our tests have the advantage of providing full control of the attribute values and simultaneously using real products that allow incentivizing participants.

Empirical evidence for similarity-based processes in the preferential domain

There are some results supporting similarity to memorized options as a cognitive mechanism in preferential choices. Early exploratory results have found indicative evidence for similarity to previous choices in consumer decisions (Bettman and Zins 1977). Huber (1975) received mixed results when formally testing a multi-attribute value theory against a specific version of a memory-based preference theory, testing if the psychological similarity to a prototypical previous experience influences preferences for new options. Scheibehenne et al. (2015) have reported support for memory-similarity processes in consumer choice. Participants were trained in an environment in which the true relationship between wine attributes and prices was similarity-based, and most participants inferred prices for new wines from the similarity between the new and past wines in memory. However, since participants received external feedback about the actual wine prices in this study, the study design resembled an inferential learning task rather than a pure preferential task. Overall, these empirical results indicate that similarity to options in memory constitutes a promising cognitive process underlying not only inferential but also preferential choices.

Cognitive models instantiating memory-based and multi-attribute value theory

The next paragraphs present the computational cognitive preference models that we used to formalize the theoretical accounts. Both these models are deliberately not new models, rather we focus on comparing two established models in the domain of preferences.

Multi-attribute value model

The multi-attribute value model we use is the classic additive multi-attribute value model without interactions (Keeny and Raiffa 1976), which calculates a subjective value for an object i characterized by attributes ai by

where aid denotes the dth attribute value of the ith object. The weights wd are free parameters, interpreted as subjective importance of attributes, which allow to model individual heterogeneity in the attribute value trade-offs; further, b is a free parameter mathematically corresponding to the intercept in a regression, which is interpreted as individual evaluative bias toward objects.

For decisions between purchasing an object i or keeping m dollars, the model additionally calculates a subjective value of money by

where mi is the monetary loss if buying object i and wm is a free model parameter, that we do not interpret here (because it mainly rescales the monetary range to fit the attribute range). In this case, the decision-maker’s net value of an option i equals the subjective value difference between the object and its price, vi – vmi. In case of a choice between an option and a monetary amount (as implemented in the experiment), the choice probability of choosing the option oi over the monetary amount mi can be determined with a logit choice rule: \(\Pr \left({ \text{prefer } o_{i} } \right) = \frac{1}{{1 + \exp \left( { v_{i} - v_{{m_{i} }} } \right)}}.\)

Memory-based value model

We implemented memory-based value theory using a classic multiple-cue judgment model which is commonly used to explain inferential choice (Juslin et al. 2008; Scheibehenne et al. 2015) and extends Nosofsky’s (1986) exemplar model suggested by the generalized context model (GCM). The model assumes that the similarity to options in memory determines preferences. Psychological similarity is computed by a similarity function, and the similarity sie between an object i and an object e in memory is given by the inversely scaled distance between the objects’ attribute values:

Where aid and aed are the attribute values of the dth attribute of objects i and e, respectively. Parameters, wd and \(\lambda\), are free model parameters, with \(0\le {w}_{d}\le 1; {\sum }_{d}{w}_{d}=1,\) and \(\lambda\) > 0. The parameter wd is interpreted as the proportion of attention to the dth attribute, and \(\lambda\) is the discriminability between objects. These parameters allow to model inter-individual differences. The model here uses a city-block (Manhattan) distance metric (other metrics are possible, Goldstone and Son 2005), which is the recommended metric for separable attributes as we will use (Nosofsky 1992). The distance is rescaled to a similarity measure by an exponential decay function that allows to model individual discriminability of objects in psychological space.

The subjective overall evaluation of option i is a weighted sum of the memorized qualities of the previous options, weighted by the standardized similarity between the current and previously seen options:

where sie is the similarity between the current object i and a past object e, and ve is the quality value of an object in memory, e indexes the options experienced until and excluding the current trial.

When responses are binary (select the option or the money), we use softmax choice rule (Sutton and Barto 1998), which is the equivalent of the logit choice rule in the multi-attribute value model, to arrive at the predicted probability to prefer an option over the monetary value: \(\Pr\left( {\text{prefer } o_{i} } \right) = \frac{1}{{1 + \exp \left( { - \tau \left( {v_{i} - m_{i} } \right)} \right)}} ,\) where mi is the price of option i, the parameter \(\tau\) is a temperature parameter and a free model parameter (\(\tau >0)\) with higher values leading to more random choice.

Relation of the memory-based preference model to other models

Our implementation of the memory-based model resembles Juslin’s (2003) extension of Nosofsky’s (1986) model (GCM) with an added softmax choice rule. Our model also relates to instance-based learning (IBL, Gonzalez et al. 2003) and the microeconomic case-based decision theory (case-based decision theory, Gilboa and Schmeidler 1995). The latter has been formally studied but not yet empirically tested (Gilboa and Schmeidler 1995, 1997, 2001; Gilboa et al. 2002). The GCM and IBL share with our model that psychological similarity to options in memory forms the basis of evaluations (Gonzalez et al. 2003; Juslin et al. 2008; Medin and Schaffer 1978; Nosofsky 1986). Unlike these models, our model does not rely on external feedback but uses the decision-maker’s subjective past estimations. Thus, our model relies on an internal evaluation criterion.

Experiment 1: preferences between writing pens

The goal of Experiment 1 was to empirically test the memory-based value theory against multi-attribute value theory. We used a multi-attribute preferential choice task about writing pens, described by four binary attributes. Figure 3 shows the materials. In an initial phase, participants formed subjective preferences for half of the available pens, after which they indicated preferences for eight old and eight new pens with novel attribute value combinations.

Methods

Participants

In total, 33 participants recruited from the University of Basel subject pool completed an online study, one was excluded (for evaluating the eight pens in the BDM auction with only two distinct values), leaving a final sample of N = 32; 29 females and 3 males (91% and 9%, respectively), mean age 25 years (Mdn = 22, SD = 6, range 19–50 years), mean remuneration was 2.6 CHF (Mdn = 2.7, SD = 0.8, range 1.2–3.9 CHF; n = 18 received a pen, see below for the incentives), the study was approved by the institutional review board of the Department of Psychology of the University of Basel.

Task

An incentivized multi-attribute choice task was used, as illustrated in Fig. 2. The task was to choose a writing pen shown image on the screen which had four attributes, see Fig. 3. Participants initially saw only 8 of the 16 pen attribute combinations (stimulus 1–8 in Table 2). For each of the 8 pens, participants first stated their willingness to pay as a value between 0.00 and 4.00 with two-digit precision, which was incentivized using a Becker–Degroot–Marschak auction, hereafter BDM auctionFootnote 1 (for details, see Becker et al. 1964); which was repeated once; the first BDM auction served as familiarization. The elicitation of the willingness to pay allowed us to, by design, account for inter-individual differences in how much the participants generally valued pens to avoid assuming that everybody liked pens equally.

Task in the Experiments. The structure of the stimuli in the learning and test phase is shown in Table 2, stimuli are shown in Fig. 4. In the experiment, instructions were phrased in German and have been translated for this manuscript, the translation is not verbatim. BDM auction = Becker-Degroot-Marschak Auction (see text and footnote 1)

Experimental Materials in Experiment 1. A Gray images at the center show the layout of the pen presented on the computer screen, which was overlaid with the four attribute values with attribute 1 = tint color (blue/black), attribute 2 = ornament color (yellow/orange), attribute 3 = case color (silver/black), and attribute 4 = case shape (cap/no cap). B Actual pens that were payed out

After the BDM evaluation, participants completed a two-alternative choice task in which they repeatedly chose between one of the eight pens they had already seen and a monetary value. The monetary value was the individual’s BDM value, because it adapts the choice task to individual differences in liking pens. Participants made the choices for 8 pens in random order, repeated for 10 blocks (yielding 80 choices). Each block contained the same set of choice pairs in a different order. The pens were repeated to allow for valid estimations of the decision models. Participants could choose freely between the pen or money; no feedback was given, and the task was incentivized (see below). Our design involved choosing between a pen and money, rather than between two pens, to keep the task relatively short.Footnote 2 The learning phase served to stabilize preferences, accounting for the fact that BDM values represent true subjective values imperfectly (Noussair et al. 2004), and different response formats (pricing or choice) can produce inconsistent preferences (Lichtenstein and Slovic 1971). Because we model choices in our analysis, this design kept response modalities for old (learning) and novel (test) options identical.

Next and without announcement, participants entered a test phase. The task did not change, participants selected between a pen and money, but they also saw 8 new pens with new attribute combinations. Participants decided between pens and the BDM value, and for the pens they had not seen before the average BDM value of the evaluation phase for the other pens was presented as the second monetary option. In within-block randomized order, choices were repeated for all pens for 5 blocks (80 trials in total).

The task was designed such that every value of each attribute appeared during the learning phase, but not all possible attribute value combinations were shown, ensuring that all participants experienced the attribute values and to avoid that differences in experienced attribute ranges would influence the new attributes’ perception (Sher and McKenzie 2014). Further, participants were familiarized with the attribute value ranges before the evaluation phase. Table 2 shows the attribute value combinations during learning and test, with values 0 and 1 representing the pens’ two attribute values. Attributes were binary to simplify the task. The mean similarity between the options in the test phase and the learning phase was kept relatively equal (see Supplement A, Table s1); additionally, the design ensured that each new option had a high similarity to some old options and had a low similarity to other old options.

Materials and procedure

Figure 3 shows the material: writing pens differing in tint color, case color, the color of an ornament, and case shape, resulting in 16 possible attribute combinations. The pens were manufactured for this experiment (to incentivize participants) such that pens differed in only the four attributes and no other aspects. After the experiment, one random trial of the BDM auction or the choice phase was realized as bonus payment in form of a real pen or money. BDM trials were paid according to the BDM mechanism (see footnote 1), choice trials were paid according to the participant’s choice. The instructions informed participants about the bonus payment procedure.

Results

The analyses were conducted in the statistical environment R v3.6.0 (R Core Team 2019), the cognitive models were estimated using the glm native R function and the cognitive models package v1.0.2 (Jarecki & Seitz, 2019). The analysis code is available at https://osf.io/bn53z/.

Descriptive similarity analysis

Qualitative tests of predictions from memory-based value theory will be reported first followed by cognitive modeling results. If preferences involve similarity to memorized options, then the new options that a person likes should be more similar to the old options in memory that the person has liked compared to the disliked old options in memory. Conversely, new options that a person disliked should be more similar to old disliked options than old liked options in memory. The theory thus predicts that the similarity between new and old pens determines how much a person likes the new pens. To test these hypotheses, we defined the old pens in memory as pens seen during the learning phase and defined liking a pen as choosing it more often than the pen chosen with median frequencyFootnote 3 (disliking otherwise) at the individual level, because participants had their own preferences.Footnote 4 Similarity was measured as Manhattan (city-block) distanceFootnote 5 between each new pen in the test phase and the old learning-phase pens, averaged across the old pens. We tested if the liked new pens were more similar to the liked old pens than to the disliked old pens, which was supported: Fig. 4a shows that the pens that were liked in both the learning and test phase were more similar to each other (similarity M = 2.20), compared to the pens that were liked in the learning but disliked in the test phase (M = 1.53). These effects are based on the new pens; the mean similarity difference was MΔ = 0.67 and significantly greater than zero, 95% CI [0.53, 0.81], t(31) = 9.94, p < .001, Cohen’s d = 1.757. Considering the similarity to the disliked old pens, the opposite pattern emerges, as hypothesized: Pens that were disliked during both the test and learning phases were more similar to each other (M = 2.02) compared to pens disliked during the learning but liked during the test phase (M = 1.54), with a significant difference, MΔ −0.47, 95% CI [–0.66, –0.29], t(31) = –5.20, p < .001, Cohen’s d = 0.920. These analyses used only the new pens, the effects from computing the similarity between learning phase and test phase using old and new pens are even stronger; see Supplement B, Table s3.

Experiment 1: A Similarities between pens during learning and test given liking the pens. Similarity of pens interacts with the liking/disliking of the old/new pens. Triangles show the mean similarity to pens that were liked during learning, circles are mean similarities to pens that were disliked during learning; similarities were first averaged across the respective pens in the learning phase; analysis based on novel options shown in the test phase. B Choice proportions correlate positively (negatively) with similarity to the most (least) liked learning options. Pr(Choice) = relative choice frequency for each option during test trials; analysis based on old and new options in the test phase. Count = frequency of options and participants at this point

Memory-based value theory further suggests that when new pens are more similar to an old pen that a person preferred very much, the person should choose the new pens more often. Conversely, the more similar a new pen is to a consistently disliked old pen, the less it should be chosen. Figure 4b shows that the relative pen choice frequency during the test phase—i.e., preferring pens over money—correlates positively with the test-phase pens’ similarity to the most-liked past pen from the learning phase. Conversely, choice frequencies correlate negatively with similarity to the least-liked past pen, at the aggregate level. Most-liked (least-liked) pens were defined as the pen with the maximum (minimum) choice frequencies during learning, and similarity was defined as described above. If multiple pens were most-liked during learning, the mean similarity between pens in the test set and the most-liked learning set pens was used. The resulting data pattern does not change when computing correlations for each different pen separately, suggesting that the correlation is not an aggregation artifact (Supplement B, Figs. 1 and 2). Participants were 3.4 times more likely to select the pen over money if the pen increased in similarity to the pens they liked most in the learning phase, which was reliable in a generalized mixed logit (GLM) regression taking the repeated measures into account (random participant intercept and correlated slope, pooled across pens), \(O{R}_{sim(most)}\) = 3.45, b = 1.24, 95%CI [0.94,1.57], p < .001. Participants were less likely to prefer a pen over money when the pen increased in similarity to the least-liked pen from the learning phase, \(O{R}_{sim\left(least\right)}\) = 0.23, b = –1.48, 95%CI [–1.98,– 1.04], p < .001. GLMs were fit in R by maximum likelihood with the lme4 package version 1.1 (Bates et al. 2015), here and below. These analyses are based on the new and old pens shown in the test phase.

Because in certain cases, the predictions of memory-based value theory resemble those of multi-attribute value theory, the just-shown qualitative findings could also be the result of a decision process following multi-attribute value theory. To directly pinpoint the two theories’ processes against each other, computational model comparisons were employed.

Model-based analysis

Modeling Procedure

The models were compared to a baseline random choice model with constant Pr(prefer oi) = 0.50 for all trials. Sensible models are expected to outperform the baseline model. An additional simplified version of the multi-attribute value model was tested to control for effects of overfitting, because the multi-attribute value model might overfit the data and therefore perform badly in predicting choices for an hold-out sample (Dawes 1979; Geman et al. 1992). The additional model was constructed by constraining all parameters of the multi-attribute value model to unit weights (+ 1 or −1), with the sign being based on the sign of the estimated weights from the unconstrained multi-attribute value model.Footnote 6 We call this model the unit-weights multi-attribute value model (hereafter MAV-UNI).

The memory-based model’s attention weight parameters were restricted to equal-weighting (see also Persson and Rieskamp 2009), which means that only its memory-similarity mechanism, rather than an attention mechanism, is left to account for data. The memory-based model requires as input the subjective overall evaluations of objects in memory (ve in Eq. 4), which is a latent value that we approximated by increasing the BDM value for a chosen learning-phase pen decreasing the BDM value for rejected pens.Footnote 7 All free model parameters (ws in the multi-attribute value model; \(\lambda , \tau\) in the memory-based model) were estimated at the individual level to the data from the first 80 trials in which only 8 of the 16 pens appeared using maximum likelihood.Footnote 8

Parameter estimates

Table 3 shows summary statistics of the resulting estimated parameter values after classifying participants as best described by each model. The most important attribute, for the participants best described by a multi-attribute value theory, was the color of the pen.

The models’ parameters were fixed to each participants’ maximum-likelihood estimates to generate the model predictions for the hold-out data in the last 80 trials (the data not used for parameter estimation). Predictive model comparisons were used to account for differences in model complexity, since a priori it is not clear which model is more complex because although the multi-attribute-value model has more parameter, the memory-based model uses the trial order as input.

Model comparison at the aggregate level

The mean log likelihoods of the data given the models, aggregated over participants and trials, showed that the memory-based model (MEM) predicted participants’ test-phase choices best. The rank order of log likelihoods was MEM > MAV > RAND > MAV-UNI with respective mean log likelihoods equal to –40, –55, –80, –114 (log likelihood values of 0 indicate perfect predictions). The multi-attribute value (MAV) model and the equal-weights version of it (MAV-UNI) were even outperformed by the random model, in the aggregate. This is, in part, driven by the sensitivity of log likelihood to outliers since some individuals are particularly poorly predicted by the MAV model (Fig. 5a shows four outliers). When changing the scoring rule to a less outlier-sensitive one, the random choice model performs worst in the aggregate, as expected: scored by predictive accuracy (meaning proportion correct predictions with an arg max choice rule with Pr > 0.50 = 1, Pr < 0.50 = 0, Pr = 0.50 = 0.50), the models are ordered as MEM > MAV > MAV-UNI > RAND, with respective mean accuracies of 78%, 76%, 53%, and 50% predicted choices. Figure 5a and b also show that compared to the memory-based model, the multi-attribute value model with equal weights performs poorly when measured by mean-squared error, indicating that although the multi-attribute value model seems to correctly capture the direction of preferences, it mostly fails to predict the degree of preference strengths. Taken together, at the aggregate level, the memory-based model performs better than the additive multi-attribute value models. Because the aggregate level analysis averages out individual heterogeneity, we report an individual strategy classification next.

Experiment 1: Model comparison on the aggregate and individual levels. Panels A and B show the models’ goodness of fit (higher values denote better fit); dots represent means across all participants. Panel C shows that 19 of 32 participants are best predicted by the MEM model and displays the corresponding evidence strength per individual participants below (1 = strong evidence). MEM = memory-based model, MAV = multi-attribute value model, MAV-UNI = MAV with unit weights, RAND = baseline random choice model

Model comparison at the individual level

Individuals were classified as described by a model based on the log likelihood of their responses in the last 80 trials given the model predictions. The results shown in Fig. 5c reveal that the memory-based value model predicts more than half of the participants best (n = 18 of 32, or 56%), followed by the multi-attribute value model (n = 11; 38%). The unit-weights and random model predicted two participants best (6%). Figure 5c also shows that the relative evidence strength (Wagenmakers and Farrell 2004) for the models is strong or very strong for most participants. Taken together, the individual-level analysis corroborates the aggregate-level results, suggesting that similarity to evaluations from memory play a vital role in preference formation for the majority of participants. The results also show that a substantial proportion of participants follow a decision process as explained by the multi-attribute value model, a finding that was not transparent at the aggregate level.

Illustration of model differences

The differences in model predictions will be briefly illustrated next. A situation where memory-based and multi-attribute value construction differ strongly is when one attribute seems most relevant for preferences given previous experience. Figure 6 illustrates a participant (S7, Exp. 1) who during the 80 learning trials prefers options for which the second attribute value is 1, mostly preferring options x1xx over x0xx. Multi-attribute value theory explains this behavior by a high importance of a value of 1 on the second attribute, as shown in the MAV importance weight estimates in the bottom-right of Fig. 6. Accordingly, multi-attribute value theory predicts the participant to like the novel option “0110,” but the participant never choses this option in the test trials. The memory-based value theory can capture this behavior. It compares the novel option “0110” to the learning-phase options and realizes that the novel option is rather dissimilar to the liked old options.

Illustration of a case where memory-based preference theory outperforms multi-attribute value-based preference theory. Shown are observed choices (dots) and model predictions (bars) for participant S7 in Experiment 1. MEM = Memory-based value model, MAV = Multi-attribute value model. The lower-right panel, MAV weights, shows that, based on the learning data, the MAV model assumes that the most important attribute is the second attribute being “1” (bars to the left denote importance of attribute value 0, bars to the right denote importance of value 1). In the test phase (right panel), the MAV model predicts a too high preference for the new attribute combination 0110 (see also text)

Extrapolation analysis

Next, we analyze why the additive multi-attribute value model failed to predict choices compared to the memory-based model. We investigate, how well a memory-based versus a multi-attribute-based process extrapolates to preferences for in the test phase. We selected the trials in which the models made very different predictions about the eight novel options that appeared in the test phase, across participants. Figure 7 compares the preference strength predicted by the models to the mean observed choice proportion for each novel option in the test phase. The figure shows that when the memory-based (MEM) model predicts higher preference strength compared to the multi-attribute value-based models (MAV), the MAV model underestimates the observed preference (left panel).

Experiment 1: Model predictions and observed preferences during the test phase. Grey color and L = previously encountered learning-phase attribute combinations denoted as old options from the learning phase, black color and T = new test-phase attribute combinations. MEM = Memory-based model, MAV = Multi-attribute value model; left panel: MEM predicts higher preference strength than MAV, middle panel: MEM and MAV predict roughly equal preference strengths, right panel: MEM predicts lower preference strength then MAV. Observed: mean choice proportion. Count: number of unique individuals per bin. Error bars = standard errors. Note that, the error bars are large because the plot is aggregating over all participants, those well described by the MEM and the MAV model

When the MEM model predicts a lower preference strength than MAV, the MAV model tends to overestimate the observed preferences for the pens. Only in the trials where MAV and MEM make roughly equal predictions does the MAV model capture observed choices well (middle panel). These results indicate that a multi-attribute value preference model fails in extrapolation because this process exaggerates preferences in the wrong direction, on average, compared to the memory-based model.

Temporal change analyses

Temporal changes in preferences offer a different way to compare assumptions underlying multi-attribute value theory and memory-based value theory. Because the classic multi-attribute-based theory suggests that decision-makers’ importance weights do not change over time, it assumes stable preferences unless one adds an additional importance learning process that learns importance weights to the model, which is possible but not part of the original model. By contrast, memory-based value theory can suggest that preferences change in a systematic way because decision-makers’ values and preferences develop dynamically with the incoming experience.

Figure 8a and b illustrate the preference development according to the models across the experimental trials 1 through 80. The figure also highlights two participants’ preferences; one participant with stable preferences and one with changes across the encounters of the pens. Note that memory-based value theory can model changing and stable preferences (e.g., Fig. 8a, stimulus “1011”). Figure 8c shows that participants changed their preferences by 17 percentage points, on average; where preference change was measured as the change in choices between the first half and the second half of the trials (M = 0.18, Mdn = 0.18, SD = 0.07, range = 0.05–0.32), which is greater than zero, 95% CI [0.15, 0.20], t(31) = 14.97, p < .001, d = 2.64. As robustness check, we excluded the first block—in which participants may explore the task-and re-tested for preference changes between trials 9–48 and 49–80; the preference changes remained (M = 0.19, 95% CI [0.16, 0.22], t(31) = 14.29, p < .001, d = 2.52). This means that many participants’ preference changed over time, which is not in line with the assumption of preference stability in multi-attribute value theory. Also, an extension of the multi-attribute value model that includes attribute interactions cannot model the observed changes over time.Footnote 9 Thus, it appears as if the mulit-attribute value model needs to be extended with a dynamic learning component that allows to explain changes of the importance of attributes.

Illustration of changes of preferences across the learning phase. MEM = memory-based value model; MAV = multi-attribute value-based. The top row (A, B) illustrates the temporal dynamics of the preferences by two participants and the predicted preferences by the models, separately by stimulus in the learning phase; observed data are shown as logistic regression fit; A Shows a participant with stable preferences; B Shows a participant with changing preferences. Bottom panel C Shows the size of changes in preferences between early and late learning trials by participant (see also text); orange dots are means; boxplots show the variability in change for the 8 pens; the dashed line is the grand mean. All values are positive because the absolute change is shown

To assess the models’ performance with respect to the preference changes over time, we compared the observed and predicted preference changes by the MAV and MEM model (given their estimated parameters). To this end, we calculated the just-described measure of preference change based on the predictions by the MEM model and separately for the MAV model, the latter of which predicts zero change. The performance was scored by the individual mean-squared error (MSE) between the predicted change and the observed change. It turned out that the MEM model outperformed the MAV model for n = 17 participants, whereas the MAV model scored better for n = 15 participants; the mean MSE was MSEMEM = 5.8% (SD = 3%), and MSEMAV = 6.5% (SD = 3.8%; median: MSEMEM = 5.3%, and MSEMAV = 6.0%).

Discussion

Experiment 1 used a preferential choice task to compare memory-based to additive multi-attribute value theories. The results of the first experiment suggest that memory and similarity to past experiences may be determinants of preferential choices. Both at the aggregate and individual level, a model with a memory-based comparison process performed at least as well as the standard additive interaction-free multi-attribute valuation model in predicting preferential choices; however, a substantial minority of participants was best described by models representing multi-attribute theories of preferential choices. This shows heterogeneity in human preference formation.

Experiment 2: tasting cereal bars

The pens in Experiment 1 were presented visually. Although visual presentation is common in situations such as internet purchases, many real-world preferential choices are more experiential. That is, individuals can touch, taste, or smell the goods before a purchase in situations such as food tastings, in clothes stores, or by test-driving cars. To avoid limiting our results’ generalizability to visual presentation formats, we extended the experimental paradigm by adding experience. Further, we adjusted the presentation on the screen to control for bottom-up visual saliency effects and slightly modified the learning phase to account for individual differences in the time needed to form preferences (please see the procedure section).

Methods

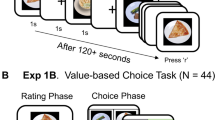

Experiment 2 used the same statistical task structure and design as Experiment 1, with the main difference that now, participants evaluated food (cereal bars) that they had tasted before their evaluation.

Participants

In total, 30 participants recruited from the University of Basel subject pool completed a laboratory study, two were excluded (for zero choice variance and technical issues), leaving a final sample of N = 28; 26 females and 2 males (93% and 7%, respectively), mean age 23 years (Mdn = 22, SD = 7, range 18–57 years), mean remuneration was 1.1 CHF (Mdn = 1.0, SD = 0.8, range 0.0–2.9 CHF; n = 14 received a cereal bar), data were collected at the University of Basel, the study was approved by institutional review board of the University of Basel.

Procedure

The experimental procedure resembled the procedure in Experiment 1 except that in Experiment 2, the real cereal bars were given to participants for a taste test (Fig. 8a); then, the cereal bars were shown on the computer as images of the individual ingredients (Fig. 8b). Initially, participants evaluated 8 of the 16 available cereal bars in a repeated BDM auction after tasting each bar; the bars’ order was randomized. The auction was repeated twice, and the first auction served as familiarization. BDM values could range from 0.00 to 3.00, with two-digit precision. Then, participants were chosen repeatedly between the eight options and the respective BDM value in a binary choice format to develop preference relations through repeated exposure. Experiment 2 differed from Experiment 1 in that whereas learning ended after 80 trials in Experiment 1, Experiment 2 used a relative stopping criterion. Learning ended when participants had consistently chosen either the monetary value or the cereal bar for one option for 4 consecutive trials, or after a maximum of 80 trials; this relative criterion allowed different participants to form preferences at their individual speeds (19 of 28 participants used the maximum of 80 trials, see Supplement B). After the preference learning phase, participants entered a second phase without announcement in which they decided for a total of 80 trials between a monetary value and all available 16 cereal bars including 8 previously unseen cereal bars, which were presented in 5 blocks, in random order within blocks. This was followed by demographics questions.

Materials

The stimuli had four binary attribute value combinations as in Experiment 1 (Table 2) but in Experiment 2, cereal bars were used. There were 16 cereal bars that contained up to four ingredients (cinnamon, plum, almond, and chia seeds). Cereal bars could, for example, be a plain cereal bar (attribute combination 0000), or contain plums (attribute combination 0100), or chia and plum and almonds (attribute combination 1110).

Figure 9c shows two example bars. We had a manufacturer produce these cereal bars to incentivize choices. The ingredients were selected because the ingredient profile was not typical for cereal bars in Switzerland, where the data were collected, but each individual ingredient had a high retail popularity in Switzerland at the time of the study (see Supplement C for details).

Experimental Materials in Experiment 2. Panel a: Samples of cereal bars that participants tasted during the experiment; the presentation order was randomized across participants. Panel b: Gray images at the center show the layout of the option presented on the screen, which was overlaid with the respective attribute values. Panel c: Actual cereal bars

Experiment 2 was experiential. Participants tried a small cube of each of the eight cereal bars that were shown in the BDM auction (approximately 1-cm/0.40-inch side length). The cereal bars were tasted in random order. After tasting a piece, participants entered their BDM values for the corresponding cereal bar on the screen, on which the cereal bars were represented as pictographs, shown in Fig. 9 (details can be found in Supplement D).

The incentives were identical to those in Experiment 1 and additoinally participants for whom the payout consisted of a cereal bar were asked to eat another cube of the bar to strengthen the incentive scheme.

Results

We first present descriptive results concerning the influence of similarity on preferences, followed by the computational modeling results.

Evaluations

During the BDM auction based on the cereal bars’ tasting, the median participant evaluated the bar containing chia, plum, and cinnamon the highest (Mdn = 2.00, M = 1.88, SD = 0.94 range 0.00–3.00, where 3 is the maximum value). The second-most-valued cereal bar contained plum and cinnamon (Mdn = 1.90, M = 1.68, SD = 0.71, range 0.30–2.45), and the least-valued cereal bar contained chia and plum (Mdn = 0.90, M = 1.11, SD = 0.98, range 0.00–2.70).

Descriptive similarity analysis

We first present tests of the qualitative ideas behind memory-based value theory, which holds that similarity and subjective quality of objects in memory determine preferences for new options. Accordingly, the preferences for a new cereal bar are based on the similarity to previous cereal bars in memory, such that liked new bars should be more similar to the bars that a person previously liked than to previously disliked cereal bars. Conversely, disliked new cereal bars should be more similar to previously disliked cereal bars (liked and disliked were defined as in Experiment 1). As shown in Fig. 10a, the options that were liked in both the learning and test phases were more similar to each other (M = 2.11), compared to options that were liked in the learning but disliked in the test phase (M = 1.51), with a mean difference of 0.59, significantly greater than zero, 95% CI [0.38, 0.81], t(25) = 5.71, p < .001, Cohen’s d = 1.119. Considering the similarity to the disliked learning options, the pattern reversed. Options that were disliked during both the test and learning phase were more similar to each other (M = 2.00) compared to options that were disliked during learning but liked during test (M = 1.63), with a significant difference, M = 0.37, 95% CI [–0.56, –0.19], t(25) = –4.13, p < .001, Cohen’s d = 0.810. These analyses used data for the new test-phase options (results for old and new test-phase options are stronger, see Supplement B, Table S3).

Qualitative Results in Experiment 2: A Similarities between options at test and learning. Dots represent means. Shown are the similarities between novel options at test and old options at learning, grouped by liking the test options and liking learning options, averaged across learning options; analysis based on novel options shown in the test phase. B Choice proportions. Relative choice frequencies for the cereal bar correlate positively (negatively) with the similarities to the most-liked (least-liked) options during learning; analysis based on old and new options shown in the test phase. Count = frequency of options and participants at this point

Moreover, memory-based value theory supposes that preferential choices should be associated with the similarity to and overall evaluation of options in memory. Figure 10b illustrates a stronger preference for cereal bars with increasing similarity to those bars that were much liked during learning. It also shows less preference for bars more similar to those bars that were disliked during learning. Both increase and decrease were reliable in a generalized mixed logit (GLM) regression taking the repeated measures into account (random participant intercept and correlated slope, pooled across cereal bars); for the choice proportion increase, the odds ratio was \(O{R}_{sim(most)}\) = 10, b = 2.34, 95%CI [1.49, 3.28], p < .001; choice proportion decreases by similarity to least-liked option \({OR}_{sim(least)}\) = 0.05, b = –2.90, 95%CI [–4.11, -1.78], p < .001 (b refers to unstandardized coefficients). Analyses are based on the old and new test-phase options. These qualitative results are in line with the basic ideas underlying a memory-based value theory.

Model-based analysis

The model fitting and comparison methodology resembled the one in Experiment 1.

Parameter estimates

Table 4 shows summary statistics of the estimated parameter values for Experiment 2.

Model comparison at the aggregate level

The mean log likelihood aggregated over participants revealed that the memory-based model (MEM) predicted the data in the test phase best. The multi-attribute value model (MAV) was outperformed by the random choice model, on average; the order of the average model fits equaled MAV < MAV-UNI < RAND < MEM with respective mean log likelihoods equal to –350, –113, –111, –65 (values of 0 indicate perfect predictive fit). Again, the MAV models’ poor performance is driven by some individuals deviating strongly from the MAV model, as Fig. 11 shows. When changing to predictive accuracy as a scoring rule (which is less outlier-sensitive), the random model performed worst, as expected: RAND < MAV-UNI < MAV < MEM with respective predictive accuracies of 50%, 61%, 79%, and 81%, accuracy being the proportion of correctly predicted choices by the different models. Thus, at the aggregate level, preferences are best described based on a memory-based value model. Figure 11 shows that based on log likelihoods, the memory-based model outperforms the multi-attribute value model and also outperforms the equal-weight multi-attribute value model and is slightly better than the multi-attribute value model with fitted weights based on mean-squared error. The results corroborate the results of Experiment 1, showing that memory-based value theory describes preferences better than weighted value trade-offs at the aggregate level. Nevertheless, the ranges of the model fit indicate considerable heterogeneity in model performance across participants.

Experiment 2: Models comparison on the aggregate and individual level. Panels a and b show the goodness of fit; dots represent means across all participants. Panel c shows that 25 of 28 participants are predicted best by the memory-based model and display the corresponding relative evidence strength for the models; MEM = memory-based model, MAV = multi-attribute value model, MAV-UNI: MAV model with unit weights, RAND = random choice

Model comparison at the individual level

Figure 11 shows the individual-level model comparison (right panel). The results reveal that the memory-based value model predicted the majority of participants best (24, 86%), the unit-weights multi-attribute value model predicted no participant best, the additive multi-attribute value model predicted 3 participants best, and the random-choice model predicted one participant best. These proportions favor the memory-based model stronger than the findings in Experiment 1 (where 56% were best predicted by the similarity model). Figure 11 displays that, similar to Experiment 1, the individual strategy classifications were characterized mostly by strong or very strong evidence for the respective models. In sum, the individual level model comparisons presented here extend the results obtained from Experiment 1, (Table 4) suggesting that a memory-based value formation matters in preferential choices and more so if first-hand experience is available to decision-makers.

Model extrapolation analysis

The last analysis reports to which degree a memory- versus a multi-attribute-based value model extrapolates correctly from participants’ experience to their preferences about novel options. We focused on the trials in which models made different predictions for preferences about the test phase’s novel cereal bars.

Figure 12 shows the predictive performance of all models for the novel options in the test phase. As in Experiment 1, the multi-attribute value model (MAV) under-predicts the observed preferences in cases where the memory-based model (MEM) predicts higher preference strength compared to MAV (left panel). When MEM predicts higher preference strengths than MAV, the latter model over-predicts the preference strength for the cereal bars compared to the money. In the trials where MAV and MEM make similar predictions (maximally 20 percentage points difference in predictions), all models capture the observed choices rather well (middle panel). These results corroborate our findings from Experiment 1, that a multi-attribute value preference model tended to over-predict the strength of individuals’ preferences in the wrong direction (on average) compared to the similarity-based model.

Experiment 2: Model predictions and observed preferences in the test phase. Shown are the proportion of cereal bar choice and model predictions. On the x-axis, L marks attribute combinations that were experienced in the learning phase, T marks new test-phase attribute combinations. MEM: Memory-based model, MAV: Multi-attribute value model. The left panel shows trials where MEM predicts higher preference strength than MAV, the middle panel trials where MEM and MAV predict about equal preference strengths, the right panel trials where MEM predicts lower preference strength then MAV. Observed: mean choices of the option over the presented money value. Count: number of unique individuals per bin. Error bars denote standard errors

Temporal change analyses

The multi-attribute-value model and the underlying theory assume that preferences remain stable across time, because the importance weights in multi-attribute value theory are assumed to be stable. To test for changes in preferences, we first computed the preferential change measured for Experiment 1 (preference change between late and early learning trials). The results show that preferences change over time, on average by 13 percentage points. A change-point analysis using fisher’s exact test revealed significant overall changes in preferences over time p = .001, M = 0.13 percent, 95% CI [0.10, 0.16], t(27) = 8.43, p < .001, Cohen’s d = 1.59. We repeated the test for temporal changes without the first choice block, which we presume to be the most noisy, and still found reliable preference changes, M = 0.12, 95% CI [0.08, 0.16], t(27) = 5.51, p < .001, d = 1.04. When comparing the preference change predicted by the memory-based model (MEM) and multi-attribute-value model (MAV predicts no change) to the observed preference change, the MEM model outperformed the MAV model for 19 participants, and the MAV model scored better for 9 participants as measured by mean-squared error. However, collapsing across participants, the grand mean MSE was not different, MSEMEM = 6.5% (SD = 5.2%), MSEMAV = 6.5% (SD = 5.4%), the medians were MSEMEM = 5.3%, MSEMAV = 6.0%.

Discussion

The results from Experiment 2 suggest that memory-based comparison processes are important in human preference formation. These results add to the evidence from previous empirical findings for the role of similarity to memorized experiences in preferential choice (Scheibehenne et al. 2015). In the task where participants were exposed to direct experience with a product, the preferences of the majority of respondents were better predicted by a similarity-based process involving a comparison of novel options to previously experienced options than by the standard multi-attribute value process, which involves weighting and integrating the novel options’ attributes.

General discussion

The present study empirically examined the role of memory-based processes in preferential choices. Preferential choices are among the most frequent choices in daily lives in the western world, with the number of food- and beverage-related choices amounting to 190 per day (Wansink and Sobal 2007). Understanding preferences is therefore of practical relevance. Traditionally, preferential choices under certainty have been viewed as a rule-based combination of the subjective importance of options’ attributes (e.g. Keeney and von Winterfeldt 2007). Numerous studies in consumer choice and marketing have focused on this theoretical paradigm to investigate preference formation. A different view, which has received much less empirical attention, is that many preferential choice situations involve a memory-based cognitive process that relies on past experiences rather than the consideration of the current option only. According to this memory-based value theory, decision-makers utilize their previous experiences from memory and compare the current option to their previous experiences to form a subjective value of the current option. We tested this theoretical account in incentivized discrete preferential choice tasks.

The results of two experiments (N = 32, N = 28) showed that, qualitatively, the similarity of current options to previous options and the subjective quality of the previous options matter for preferring a new option. In both experiments, the memory-based value theory accounted better for preferential choices than the traditional additive multi-attribute value theory, and the responses of the more than half of participants favored the memory-based value model. These results add to the evidence for memory-based processes in subjective value formation, at least for the investigated consumer domains. The two experiments differed in that Experiment 1 presented options visually, whereas in Experiment 2, participants could experience the subjective quality of the options hands-on.

These findings extend previous results in several ways. First, we go beyond exploratory results (Bettman and Zins 1977) where indicative evidence suggested a similarity-to-previous-choices strategy in consumer decisions. Second, we use a preferential task without feedback, which goes beyond Scheibehenne et al. (2015), who exposed participants to feedback. In addition, our task was fully incentivized. The experiments reported here combine the benefits of a highly controlled environment with four binary attributes-typically found in studies of judgment or categorization-with a task that asks for purely subjective evaluations and let participants sample goods, which is characteristic of preference tasks.

For the current model of memory-based preferences, the results suggest that experienced value and experienced attributes contribute to the cognitive process of preference formation. These results contribute to the psychology of preference formation by providing evidence in favor of one memory-based preference model; this model uses a specific similarity metric (Manhattan) and has constrained weights (equal) and involves no memory decay. These assumptions can be relaxed in future work to examine the generalizability of the underlying theory that similarity to memorized options and memorized experiences’ overall value determine preferences.

Comparison of the memory-based theory with related theories

The memory-based value formation theory tested here is not the only model proposing that similarity to memory matters in preferential choice, but our empirical findings demonstrate support for the underlying hypothesized mechanism. The goal of this article was not to present an entirely novel model but rather to rigorously examine if the cognitive principles of memory and similarity can describe human preferences. Other modeling approaches that relate to the model that we tested here include the economic case-based decision theory, which combines similarity to previous utilities in an action-selection model. This model, to date, has not yet been tested empirically and differs from our model in that it includes action similarity and utility functions (Gilboa and Schmeidler 1995; Gilboa et al. 2002). Similarly, instance-based learning and exemplar-based learning models share the fundamental assumptions about similarity-weighted categorization and judgments (Gonzalez et al. 2003; Juslin et al. 2008; Medin and Schaffer 1978; Nosofsky 1986). The formal model tested here is very close to those approaches. Specifically, exemplar models differ from our model in that they assume discrete categorical responses, whereas our model is an instance of Juslin et al.’s judgment model combined with a softmax choice rule based on a comparison between the value judgment of the options. Furthermore, our model assumes no external feedback but evaluates options based only on the decision-maker’s subjective estimations of the available options. Therefore, it relies on an internal instead of an external evaluation criterion.

Scope and limitations of memory-based preference formation

Although our findings indicate that a memory-similarity process matters in human preferences, we do not claim that such processes underlie all preferential choices. Memory-based preferential value formation can be utilized in situations where substantial past experience exists, which we believe to be true for many everyday consumer choice situations. Where preferential choices are characterized by less experience, decision-makers may rely on either a multi-attribute value process or on second-hand experience, possibly brought to them by media, peers, or institutions. Thus, even in preferential domains characterized by little first-hand experience, a memory-similarity theory can be applied using third-party sources. We suspect, however, that there is a gradual shift in the preferential process, such that decision-makers with scant or no experience rely primarily on the importance of attributes and multi-attribute integration, but as they accumulate experience in a domain, the preference formation process shifts toward a more memory-based subjective value formation. More research is needed to clarify the dynamics of the cognitive processes underlying preference construction when gathering experience about the options. Such dynamic models have been successfully used in classification (Bourne et al. 2006; Smith and Minda 1998). In sum, we believe that in everyday preferential choice situations such as basic consumer choices, memory-based strategies play a major role. However, decision-makers do not always have experience in a domain-consider rare choices like buying a house; and in these cases, multi-attribute integration or a different non-experiential strategy may be critical.

Linear models with interactions

The basic multi-attribute value model that we have tested here involves no attribute interactions. This is not to argue that human cognition cannot perform attribute interactions, but multi-attribute value theory has predominately been formulated as interaction-free in most consumer research, for instance, in the conjoint measurement literature that estimates part-worth utilities (e.g. Ares et al. 2010; Heide and Olsen 2017; Molin et al. 1996) and in the experimental judgment and decision-making literature on preferences, where the respective model is called WADD (Dieckmann, Dippold, and Dietrich 2009; Sher and McKenzie 2014). Therefore, the current experimental task was not specifically designed to pit a multi-attribute value model with interactions against a memory-based value model. From a computational complexity perspective, it is important to note that a multi-attribute value model that assumes that people account for all possible interactions may not scale well in real-world preferential choice situations which involve more than a small number of attributes, because the number of interaction terms grows exponentially with the number of features. Regarding our experimental data, we explored the performance of a multi-attribute value model with interaction terms and the results suggests that it does not substantially outperform the memory-based model (Supplement B, Table S4). However, further research is needed to specifically design a task that allows to investigate the role of interactions in multi-attribute approaches compared to memory-based approaches to preferential decision-making.

Extensions of the model

The memory model we tested is closely related to Juslin et al.’s (2003) exemplar-based multiple-cue judgment learning model (see also Hoffmann et al. 2014), which is based on Nosofsky’s (1986) general context model. These models have been extended in several ways to accommodate various behavioral patterns; below, we outline several extensions relevant for the preferential domain. Note that, these extensions are not technically necessary to model the current experimental findings; rather, the extensions showcase the possibilities gained from taking an exemplar-memory view on preferential choice. One interesting extension concerns memory decay; for instance, Speekenbrink and Shanks (2010) used decay model learning in a changing environment. We believe that in many real-world situations, the quality of options can change systematically over time, and to predict the effect of such changes, the model tested here can be extended by a memory-decay parameter. A further extension, which has been studied in exemplar-based categorization models, is the use of a different similarity function (Ashby and Maddox1993; Jäkel et al. 2008, for exemplar-based categorization models). Instead of the city-block metric used here, a Euclidean metric may be used if the attributes are continuous (Ashby and Maddox 1993). Since man preferential situations involve continuous attributes, this extension is worthwhile considering. Another extension concerns modeling prior preference. Many categorization models fit a response bias parameter to account for prior differences in preferences for an option over another. Furthermore, it is possible to combine the memory-based and multi-attribute-based preference formation theories (as recently in Albrecht et al. 2020). One possible combination involves allowing for subjective attribute importance and use the importance to weight in the calculation of the similarity between the present and previous options. In this way, preferences are based on both a trade-off between attributes and a similarity-to-memory comparison.

Directions for future research

Our findings provide several directions for future research, one concerns memory retrieval, another one concerns the influence of cueing. A yet-to-be-tested prediction from a memory-based view on preferences is that memory context influences the evaluation of current options. In other words, retrieval of an especially positive or negative memory of an option, possibly even cued by the current option, is predicted to bias the subsequent subjective evaluation of the current option. This pattern cannot be predicted by the traditional versions of multi-attribute value theories of preference formation, where the stored importance weights of the attributes in a particular preferential domain should not change by being reminded of a past experience.

Along the same line as the cued-memory effect, another effect that memory-based value theory can predict is order effects. If, as speculated above, more recent experiences show more decisive influence than more distant experiences, then it is expected that, especially in domains with limited experience, the subjective evaluation of novel objects depends not only on the amount but also on the order of the previous experience.

A different future direction concerns the cognitive underpinnings of memory-based value theory. Memory-based value theory differs from the classic multi-attribute value theory regarding cognitive processing in several ways. In multi-attribute value theory, individuals need to acquire, store, and retrieve each attribute’s subjective importance in the domain of choice. In memory-based value theory, individuals need to store and process experiences. Cognitive systems retrieve previous choices from memory and process the similarity of the current option to the liked and disliked objects from the past. By contrast, in multi-attribute value theory, cognitive systems require importance weights, where retrieving weights need not be explicit, and individuals are usually unable to accurately verbalize the importance of the weights that they use (Wu et al. 1988). Further, multi-attribute value theory necessitates integrating the weights in a rule-based manner, for example, by weighting and adding. By contrast, memory-based value theory requires the mind to carry out implicit similarity comparisons to memory content. Evidence suggests that compared to similarity comparisons to previous experience, weighting and integration processes utilize different memory components (Hoffmann et al. 2014). Lastly, a model that combines multi-attribute importance with memory-based retrieval of preferential evaluations would be an interesting avenue for future work.

Conclusion

The current findings add to the growing evidence for the empirical validity of memory-based subjective value formation in a preferential domain, rendering processes that involve memory a viable alternative to classic multi-attribute value theories. The results reveal that a memory-based preference formation model describes preferential choices better than the standard (interaction-free) weighting and integration of subjective importance of attributes. What is key is that the memory-based value formation view specifies the process of how past experiences influence the preference for new options. We show that similarity to experiences in memory matters for preference construction and that similarity to content in memory matters more in situations in which individuals can gain first-hand experience with a choice option rather than only visually assess options.

Code availability

Notes

A BDM auction is an incentive-compatible value elicitation method. Respondents are asked to provide their true evaluation of a good and are incentivized as follows. At the end of the experiment, a random value is drawn from a uniform distribution over the range of admissible valuations. Participants receive the good if their subjective evaluation exceeds the randomly drawn value, otherwise they receive the value in cash. Thus, participants have an incentive to provide their true subjective value for the good.

We wanted 10 repetitions per stimulus during learning and 5 repetitions per stimulus during test. The use of pairs of pens would have required to use more combinations and would have led to 280 learning trials (28 pairs × 10), and 600 test trials (120 pairs × 5), i.e., 880 trials. Using choices between a pen and a monetary value reduces this to 240 trials (80 learning and 160 test trials). The resulting task represents considering buying a pen or keeping the money to spend on something else (see also incentives in the text).

A sanity check was performed for this definition of “liking,” examining if liking reflects merely the monetary amounts with which pens were paired or if it reflects the pen’s attributes. If liking only reflects the monetary amounts, participants should prefer higher monetary amounts no matter which pen was shown. However, it turned out that the pens were chosen more if paired with higher monetary amounts (positive individual-level correlation of pen choice probability and money value, Mdn ρ = .80, M = .68, SD = 0.36). Thus, the definition of “liking” reflects more than disliking small monetary amounts. Moreover, apparently the BDM elicitation used to determine each pen’s subjective monetary value correlated with preferences but did not produce perfect indifference prices (similar also Noussair et al. 2004). Thus, our subsequent analyses will rely on choice frequencies rather than on BDM values.

For one participant in Experiment 1, we had to use \(\ge\) median (rather than > median) due to a skewed choice distribution to ensure that at least one pen was liked and disliked in the test and learning phases; for one participant in Experiment 2, we had to do the same.

For example, the similarity between option t in the test set and the liked option in the learning set is based on the city-block distance dist as follows: \(dis{t}_{t,liked}= \frac{1}{8}\sum_{i=1}^{8}dist\left({x}_{i,learn\_liked}, {x}_{t}\right)\) where \({x}_{i,learn\_liked}\) represents the ith option in the learning set that was liked, xt represents the attributes of the test set option t. The city-block distance dist was then rescaled to yield a similarity metric: \(si{m}_{t,liked = }4-dis{t}_{t,liked}\).

The weights in the equal-weights multi-attribute value model were set to w = –1 if W* < 0 and +1 if w* = 0, and to 0 if w* = 0, where w* is each participants’ best-fitting estimate of the weight, rounded to the fourth digit, in the full multi-attribute value model that was previously described.

The by-participant subjective value of object e was \({{v}_{e}= m}_{e}+\frac{1}{2}\mathrm{r}\left(m\right)\) if object e was preferred and \({m}_{e}-\frac{1}{2}\mathrm{r}\left(m\right)\, \mathrm{if}\, \mathrm{object } \, e\, \mathrm{was\, rejected}\) me is the BDM value of option e shown during learning, and r(m) is the range (max (m) —min (m)) min (m) – max (m) of a participant’s BDM values.

Multi-attribute value model parameters (b, ws and m) were fit by the glm procedure in R using a linear regression procedure with a logit link. Memory-based value model parameters (λ, τ) were fit with a grid-and-maximum-likelihood procedure where the four best parameter sets resulting from a regular grid search provided starting values for repeated maximum likelihood optimization through nonlinear optimization with augmented Lagrange method using the Rsolnp R package version 1.16 (Ghalanos and Theussl 2015). For the latter model, data from trial 1–3 were not used in parameter estimation since the parameter estimate of the choice rule parameter \(\tau\) is estimated more precisely when excluding very early learning trials. We checked for the parameter estimates’ robustness when including the initial trials, and the parameter estimates did not change substantially. Predictions were rounded to 3-digit precision.

The learning phase was not a full latin square design because it did not present every attribute combination (half of the attribute combinations were withheld as test objects in the predictive model comparison); therefore, it was not possible to estimate a MAV model with interaction terms and include it in the predictive model comparison analyses above.

References

Albrecht R, Hoffmann JA, Pleskac TJ, Rieskamp J, von Helversen B (2020) Competitive retrieval strategy causes multimodal response distributions in multiple-cue judgments. J Exp Psychol Learn Mem Cogn 46:1064–1090. https://doi.org/10.1037/xlm0000772