Abstract

Designing and constructing pedagogical tests that contain items (i.e. questions) which measure various types of skills for different levels of students equitably is a challenging task. Teachers and item writers alike need to ensure that the quality of assessment materials is consistent, if student evaluations are to be objective and effective. Assessment quality and validity are therefore heavily reliant on the quality of the items included in the test. Moreover, the notion of difficulty is an essential factor that can determine the overall quality of the items and the resulting tests.Thus, item difficulty prediction is extremely important in any pedagogical learning environment. Although difficulty is traditionally estimated either by experts or through pre-testing, such methods are criticised for being costly, time-consuming, subjective and difficult to scale, and consequently, the use of automatic approaches as proxies for these traditional methods is gaining more and more traction. In this paper, we provide a comprehensive and systematic review of methods for the priori prediction of question difficulty. The aims of this review are to: 1) provide an overview of the research community regarding the publication landscape; 2) explore the use of automatic, text-based prediction models; 3) summarise influential difficulty features; and 4) examine the performance of the prediction models. Supervised machine learning prediction models were found to be mostly used to overcome the limitations of traditional item calibration methods. Moreover, linguistic features were found to play a major role in the determination of item difficulty levels, and several syntactic and semantic features were explored by researchers in this area to explain the difficulty of pedagogical assessments. Based on these findings, a number of challenges to the item difficulty prediction community are posed, including the need for a publicly available repository of standardised data-sets and further investigation into alternative feature elicitation and prediction models.

Similar content being viewed by others

Introduction

The pedagogical assessment of students is a fundamental component of any educational or learning environment. Assessments should reflect the teaching objectives and measure the student’s level of knowledge or skill against some defined level of attainment required for them to pass a course. Thus, designing and constructing tests that contain items (i.e. questions)Footnote 1 which measure the various types of skills of different levels of students in an equitable way is a challenging task. Teachers and item writers alike must ensure the consistent quality of assessment materials and maintain fairness if they are to provide an objective and effective evaluation of the assessed students. As the quality and validity of an assessment are heavily reliant on the quality of its items, significant effort and resources have been devoted to item analysis tasks over recent years.

The notion of difficulty is an essential factor that can determine the overall quality of items and tests. In particular, item difficulty estimation—also referred to as “item calibration”—refers to the estimation of the skill or knowledge level needed by students to answer an item or question (Franzen, 2011). Item difficulty predictive modelling is therefore an interdisciplinary field that encompasses psychometrics, educational psychology, linguistics and more recently, artificial intelligence (AI). The former of these three fields provides well-established theoretical frameworks of cognitive processes involved in assessments, which can then be represented, characterised and evaluated using a variety of powerful data-driven computational models available in AI. Automating the process of item calibration is crucial if it is to become more objective and scalable; essential qualities when being included in traditional paper-based testing instruments and intelligent learning environments such as Computerised Adaptive Testing (CAT), Intelligent Tutoring Systems (ITSs) and Automatic Question Generators (AQG). In adaptive testing, the a priori estimation of difficulty should be determined in order to present test-takers with questions that are designed to evaluate the student at the appropriate knowledge level with respect to difficulty, in a process known as item sequencing (i.e. the process of tailoring the order of questions according to the student’s knowledge level). Difficulty estimation can also help to understand the current knowledge level of students, which can be used to build and tailor the appropriate student modeling components in ITSs. Furthermore, difficulty prediction models can be used to evaluate automatically generated questions, which are typically constructed in massive numbers, to: 1) detect non-functional questions (i.e. questions of an inappropriate level of difficulty, such as being either too easy or too difficult); 2) eliminate implausible distractors; or 3) generate difficulty-aware questions (Gao et al., 2018; Yeung et al., 2019). With regard to paper-based examinations, the development of an innovative means to predict difficulty can facilitate objective, cost-effective item calibration for item writers and standardised test organisations alike.

Traditional methods for obtaining an a priori estimation of difficulty have primarily relied on two methods (Choi & Moon, 2020; Rust & Golombok, 2014): i) pre-testing; and ii) the use of experts’ judgement. However, such approaches are frequently criticised in the literature for being costly, time-consuming, subjective and difficult to scale (Benedetto et al., 2020b; Hsu et al., 2018; Loukina et al., 2016). More recently, a variety of alternative methods have been considered as a means to overcome these limitations, including data-driven approaches that rely on the generation of a symbolic or sub-symbolic predictive model. Thus, there has been a clear distinction between earlier studies that utilised human-based methods based on experts’ perceptions of difficulty or educational taxonomy (i.e. expert-driven) approaches, and the emergence of a more recent trend corresponding to the use of machine-driven approaches, where statistical and data-driven models have been employed in an attempt to automate the process of difficulty estimation. In this paper, we introduce a classification that distinguishes and characterises these two approaches to item difficulty prediction, as illustrated in Fig. 1.

Essentially, expert-driven approaches are those that are based on establishing an expert consensus that exploits their domain knowledge and experience and uncovers meaningful information from the data (Ling et al., 2008). These approaches are qualitative in nature and mostly rely on pre-defined features of difficulty found in educational taxonomies, or difficulty perceptions of educational experts. This contrasts with automatic techniques for extracting new information from the data (i.e. machine-driven approaches) which focus on quantifying the concept of difficulty by employing statistical or data-driven prediction models to enhance scalability and minimise human intervention. Although these methods might not necessarily reflect an objective reality, automatic difficulty prediction frameworks provide a mechanism by which an estimation will be consistent, as a result of exploiting an algorithmic process. This is especially true when compared to heuristic approaches (such as experts’ judgments) which have been proven to be highly inconsistent as a means of item calibration (Conejo et al., 2014; Pérez et al., 2012).

As a further attempt to minimise the effect of external sources of difficulty that can increase subjectivity, we focus on difficulty sources that are intrinsic to the questions (i.e. text-based). In other words, we do not consider models that base their estimation on external factors such as the learners’ level of knowledge or their performance. Despite being undeniably important factors that can affect difficulty, providing objective criteria to measure such sources is very challenging. The fundamental rationale behind this study is therefore to explore the use of automatic methods that perform a priori question difficulty estimation of textual questions, and in particular, to understand the potential of differentArtificial Intelligence methods (i.e. Machine Learning and Natural Language Processing (NLP)) to model the task of item difficulty prediction at a linguistic level.

To understand both the opportunities and challenges for research into item difficulty prediction, a systematic and comprehensive overview has been conducted that investigates how different automatic approaches have been implemented, as well as characterising their individual merits and weaknesses. The findings of this review should accommodate future advances in an emerging field that is still developing rapidly. The characterisation of this interdisciplinary research area should also provide researchers in this, and other related areas, with a comprehensive reference of a variety of automatic difficulty prediction approaches that can be used to inform decisions about current knowledge gaps, limitations and concerns, as well as to suggest directions for future research. Indeed, the interdisciplinarity of the subject brings together a variety of methods and techniques that are employed from different fields to address a common task from different perspectives. Furthermore, synthesising the different approaches employed in the studies surveyed here should help enhance the quality and comparability of future research by highlighting both the commonalities and differences between these studies.

The primary aim of this review is therefore to provide a comprehensive overview of the current automatic predictive models of item difficulty, through the investigation of the ways that certain item features can affect their inherent difficulty level, as well as exploring the ways in which computational models are currently used to predict difficulty in an automated manner. Thus, the systematic review addresses the following objectives:

-

1.

Provide an overview of the field of automatic approaches to text-based item difficulty prediction with regard to the following statistics:

-

Rate of publication ("Publication Trends" section)

-

Publication venues ("Publication Trends" section)

-

-

2.

Characterise the automatic approaches currently applied to question difficulty prediction, by addressing the following questions:

-

What tasks are involved in the difficulty prediction models? ("Key Tasks for Predicting Item Difficulty" section)

-

What are the most common data-driven approaches? ("Key Tasks for Predicting Item Difficulty" section)

-

What are the most investigated domains? ("Domains and Item Types" section)

-

What are the most investigated item types? ("Domains and Item Types" section)

-

-

3.

Summarise different types of features that were found to influence question difficulty:

-

What are the most common features used? ("Linguistic Difficulty Features" section)

-

What type of features are typically extracted from items? ("Linguistic Difficulty Features" section)

-

-

4.

Examine the performance of automatic difficulty prediction models:

-

What are the types of evaluation methods? ("Evaluation Approaches for Item Difficulty Prediction" section)

-

What types of metrics, measurements are considered? ("Evaluation Metrics" section)

-

-

5.

Identify the challenges involved in developing a comparative study:

-

What are the sources of data-sets being used? ("Evaluation Approaches for Item Difficulty Prediction" section)

-

How do difficulty prediction models perform? ("Comparative Performance of Prediction Models" section)

-

The systematic review methodology adopted by this study is presented in the "Review Methodology / Protocol" section, and the findings, structured using the set of objectives listed above are then presented in the "Findings from the Systematic Review" section. A reflection based on these findings appears in the "Discussion and Future Challenges" section, where we discuss challenges and opportunities for future work, and we frame our systematic review in the context of studies that also discussed recent approaches to question difficulty estimation from text. Finally, we draw conclusions in the "Conclusion" section.

Review Methodology / Protocol

For this study, a review protocol was developed, based on the guidelines given by Keele (2007), which details the methods that were used to conduct the review. Such a protocol is essential as it reduces subjectivity and bias within the study, as well as facilitating reproducibility. Therefore, our protocol consists of a sequence of stages starting with the development of the review protocol itself, and ending with the reporting of the final outcomes. These stages are characterised in more detail as follows:

-

Search Strategy:

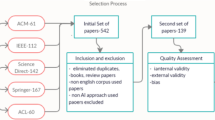

The search process was conducted manually using the following publication archives: IEEE,Footnote 2 ACM Digital Library,Footnote 3 ScienceDirect,Footnote 4 Springer,Footnote 5 and Elsevier.Footnote 6 Furthermore, general-purpose and academic-focused search engines such as Google Search and Google Scholar were also included to identify relevant publications. These archives and search engines were used to identify the first collection of relevant papers (start set). Then, additional publications were included in the search by performing backward and forward snowballingFootnote 7 (Wohlin, 2014); where the reference list of, and the citation to, each paper inthe start set were examined. The citations to the paper being examined are studied using the ‘cited by’ option in Google Scholar. This recursive process was performed for each paper in the start set and the subsequently identified papers.

-

Search Queries:

The field of question difficulty estimation is an interdisciplinary one. Relevant fields such as educational assessment, psychology and computer science all use different, yet synonymous terms to address the same task. Therefore, in order to identify keywords that reflect the most common terminologies mentioned in the previous literature, different combinations of search terms were assembled. The aim here is to maximise the identification of all of the relevant publications, and to address the properties of each database in terms of the available types of operators. As a result, the following combinations of keywords and operators were used: Item difficulty prediction, Item difficulty estimation, Item difficulty modelling, Difficulty modelling, (item OR question) AND difficulty AND (estimation OR prediction OR modelling)

-

Study Selection:

Three phases were followed as part of the study selection process in this review (Fig. 2): Identification, Screening and Eligibility. During the identification stage, 148 papers were identified through screening of the above-mentioned publication archives. Further publications were identified using other sources such as general Google search (4 papers), Google Scholar (22 papers), and snowballing (16 papers). Finally, an additional 13 papers were included based on the suggestions of the anonymous reviewers of this paper. The bibliographic information and abstracts of each publication were initially held in a reference manager. The titles and abstracts were then fully screened to exclude clearly ineligible publications. The screening process excluded a total of 73 papers were excluded on the basis of relevance with respect to the titles (n=44) and abstracts (n=29). For example, during this phase, we excluded papers that performed difficulty prediction of questions in question-answering communities. If any uncertainty was encountered during this phase, inclusivity was prioritised by including the publications for further eligibility assessment. It is only then that the full text of the remaining publications was systematically examined, leaving a total of 55 unique studies for the review itself. An extensive list of inclusion and exclusion criteria (listed below) was utilised to conduct the screening and eligibility phases of this process.

-

Inclusion/exclusion criteria:

All of the publications that were included in the study focus on automatic approaches for difficulty prediction, without imposing any constraints on the publication year, publication type, domain or item type. However, a small number of publications have been excluded where they violate one or more of the following criteria:

-

The publication is not written in English.

-

The full text of the publication is not available.

-

The proposed difficulty model is not evaluated.

-

We exclude publications that predict difficulty based on approaches that are heuristic or that utilise educational taxonomies.

-

The difficulty prediction framework does not employ machine learning or NLP approaches. The rationale here is to focus on the application of AI techniques for the task of difficulty prediction.

-

The publication estimates difficulty after administrating the test. We only focus on methods which offer a priori prediction of difficulty in order to overcome the limitations of traditional prediction methods.

-

The items are not textual (i.e. they contain images, graphs or formulas). We exclude these types of items as they require different analytical techniques compared to those used for textual items.

-

The publication does not address assessment items. For example, we exclude studies that predict the difficulty of questions in question-answering communities such as Stack Overflow,Footnote 8 as this type of question differs completely from assessment questions with respect to their purpose and structure.

-

The difficulty features are not intrinsically extracted from items. By this, we mean that we only focus on difficulty features that are derived from items’ structure, hence, we exclude features which are based on students’ performance (e.g. response time) or, for example, wearable sensors.

-

The publication focuses on item classification based on features other than difficulty. For example, we exclude publications that classify items based on question type.

-

-

Quality Assessment:

The quality assessment process was conducted after reading the full text and filling in a pre-defined data extraction form for each study. A simple scoring technique was used to evaluate the reporting quality, rigour and credibility of the selected studies. All papers were evaluated against a set of 8 quality criteria that were adapted from the quality assessment checklist suggested in Keele (2007). Table 1 describes the quality criteria applied, and the resultsof the quality assessment process are presented in Table 2. Three responses are used for scoring the criteria: yes, no and partially. The last response is used when the criterion is not fully met. Furthermore, a paper is scored ineligible if it received more than 4 “no” responses.

Table 1 Quality assessment criteria Table 2 Quality assessment of publications included in the study using Table 1 criteria (Yes/No/Partially) In what follows, we elaborate on the scoring process that we adhered to when deciding if a paper satisfied a certain criterion.

-

Q1: The first criterion requires the presence of an explicit statement that describes the aims, objectives or research questions of the study.

-

Q2: For a study to score ‘yes’ in this criterion, we check if the authors include important contextual aspects that help the reader to understand the purpose behind developing the difficulty model, for example, the type of assessment that was considered (formative or summative), or the learning environment of which the model was designed for (traditional classroom or an eLearning platform). Another aspect of being more articulate is being explicit about the investigated domain and the type of questions targeted in the study.

-

Q3: This question establishes whether the research questions were answered and explicitly stated in the findings.

-

Q4: Here, we consider the description of the overall approach (i.e. plan) that was followed in the study by the authors to answer the research questions.

-

Q5: In this criterion, we consider the methods and procedures that were used to collect and analyse the data. For instance, we check if the characteristics of the participants are reported in terms of their number, selection process and the reportingof their demographic data.

-

Q6: For a study to score ‘yes’ in this criterion, it must describe the techniques and processes that were used to clean, transform and model the collected data.

-

Q7: This question requires that the evaluation metrics used to measure difficulty and validate the efficiency of the proposed approach are described, defined or presented as mathematical formulas.

-

Q8: Is there a clear statement of findings that relate to the aims of the study? When a study provides a sufficient and comprehensive description of the findings of the study, it receives a score of ‘yes’ in this criterion, otherwise a score of ‘no’ is given.

-

-

Data Extraction:

A specific form was designed for the data extraction process which was primarily directed by the objectives of this review. The form included: title, year of publication, feature extraction methods, prediction method, domain, item type, number of items, data source, evaluation type, number of participants, observed difficulty measurement, difficulty features, results, publication type, publication venue and quality score. Table 12 in the Appendix summarises the most important data extracted from each of the selected studies.

Findings from the Systematic Review

The following sections present the analysis and synthesis of the findings from the studies surveyed. We structure our discussion around the questions proposed in the "Introduction" section.

Publication Trends

In this section, we provide an overview of the wider research community of question difficulty prediction with regard to publication trends, popular venues and active research groups. These aspects are crucial in understanding how the research area has evolved throughout the years, supported by technological advancements in NLP and other relevant tools.

Regarding the provenance of the different publications, conference proceedings were found to be the most common publication venue for the papers considered in this study (with a total of 28 conference papers, or 53% of the publications in the study), with journals being the next significant venue (16 journal papers, or 30%). Other types of publication venues included workshop papers, technical reports and papers archived in pre-print repositories such as the arXivFootnote 9 open access repository. The most popular publication venues identified in this review are technically (as opposed to pedagogically) oriented due to the fact that the scope of the review specifically focuses on data-driven approaches for item difficulty prediction. More than 70% of the venues published research on technical topics such as Artificial Intelligence (AAAI, IJCAI, ICTAI, AI Review, etc.) and Knowledge Engineering (Journal of Web Semantics, Semantic Web Journal, Journal of Knowledge Engineering, CIKM, KCAP, etc.), or in Computational Linguistics and Information Retrieval (COLING, CICLing, SIGIR, etc.) with several other venues being at the intersection of Pedagogical Research and Technology (IJAIED, AIED, LAK, etc.).

This finding further emphasises the interdisciplinarity of research into question difficulty prediction, in that it is a discipline that combines technical, pedagogical, psychological and linguistic perspectives. This interdisciplinarity is also apparent when examining the provenance of different authors working in this field (i.e. with respect to the departments that authors are affiliated with). We found that the majority of researchers (64%) were affiliated with computer science departments; whereas19% of researchers were affiliated with pedagogically oriented departments such as the departments of education and educational psychology. The remaining authors were positioned in language departments such as linguistics and computational linguistics(17%), as listed in Table 3. A number of research groups (n = 8) have contributed to publishing almost half of the publications (n = 23).

The reason for this could be due to the fact that the field of automatic difficulty prediction is still relatively new, and as such, there is a small number of highly active groups that are responsible for a significant number of the studies.

There have been broadly two waves of research activity (Fig. 3), with the first emerging in the mid-1990s, whereby the use of artificial neural networks appeared in some studies. These techniques -at that time- represented a novel approach for exploring non-linear relationships between item parameters and difficulty. Previous research had tothis point only employed statistical approaches, which explains the relationships in a linear manner (Boldt, 1998; Boldt & Freedle, 1996; Fei et al., 2003; Perkins et al., 1995).

The second wave of studies started in 2010 as researchers began to explore different data-driven approaches to this problem, such as the use of rule-based expert systems, support vector machines (SVM) and Naïve Bayesian models (Beinborn et al., 2015; Hutzler et al., 2014; Perikos et al., 2011). There was a steep increase in publications between 2013 and 2014 that then plateaued and was broadly stable until near the end of the decade, where there was a slight rise (in particular, during 2017, 2019 and 2020), indicating the growing relevance of the field in the research community. This observed increase could be attributed to the fact that other closely relevant research communities (which incorporate an item difficulty model as a component) were also thriving during these years. One such community was the Automatic Question Generation (AQG) field which was primarily interested in utilising AI approaches to automatically generate questions. According to the most recent review, the number of publications in the field of AQG peaked during the years 2014 to 2018 (Kurdi et al., 2021). In AQG, difficulty prediction is considered an essential evaluation metric to validate the quality and functionality of the generated items. In Faizan and Lohmann (2018); Gao et al. (2018); Khodeir et al. (2018); Seyler et al. (2017), various difficulty estimation frameworks were proposed to generate questions of desirable difficulty levels. Moreover, the possible influence of other related research areas such as Computerised Adaptive Testing (CAT) and Intelligent Tutoring Systems (ITSs) cannot be ruled out. These research areas also attempt to improve adaptivity and personalisation of their systems by incorporating a difficulty estimation model as a crucial component to adapt exercises to the students’ skill/ knowledge level. For example, a bank of difficulty-labelled items was generated in Settles et al. (2020) to be used with a CAT system to ensure that the questions were administered to students in a personalised manner. In contrast, a number of difficulty estimation frameworks were proposed to be implemented within ITSs (Hutzler et al., 2014; Khodeir et al., 2018; Perikos et al., 2016).

Key Tasks for Predicting Item Difficulty

There are four key architectural components that difficulty prediction models usually have in common which represent the following four fundamental tasks: 1) Ground truth labelling, 2) pre-processing, 3) feature extraction and 4) prediction. These components are discussed in more detail in the following sub-sections.

Ground Truth Labelling

The first step in any question difficulty prediction process is to label the ground truth difficulty for each question. Ground truth labels are typically obtained using one of two possible approaches: 1) psychometric testing theories, or 2) manual labeling (see Table 4).

The first approach refers to the use of common psychometric theories; namely Classical Test Theory (CTT) (Hambleton & Jones, 1993) and Item Response Theory (IRT) (Baker & Kim, 2017) to calculate the difficulty score statistically. The proportion correct statistic (CTT) and the Rasch model (IRT) were utilised in 34% and 26% of the studies respectively. These theories enable item writers to predict the test’s outcome by analysing specific parameters related to the items, and the performance of students taking the tests (due to the difficulty of the item itself or the academic ability of the students). In order to define difficulty using psychometric theories, an appropriate dataset consisting of a number of questions with their corresponding student performance results must first be obtained. In this type of labelling, difficulty is defined as a continuous value within a pre-defined range. In the case of CTT, difficulty was calculated using the p-value,Footnote 10 which represents difficulty as a value in the range [0;1]. However, continuous values in various ranges (depending on the study design) were produced using IRT.

In the second approach, domain experts were asked to rate items using a categorical scale representing different difficulty levels based on their experience. Therefore, the difficulty format is presented as discrete values representing the various difficulty levels. Of the papers considered, 34% favoured the use of experts’ opinions to calculate the observed difficulty scores. Despite the possible subjectivity of this approach, it is still considered a good indicator of item quality in general, and in particular, item difficulty.

Other approaches for observed difficulty measurement include psychometric models such as Delta (a model based on CTT) or the use of automatic labeling where question-answering systems are used to label answered questions as “easy and unanswered onesas “difficult. One paper (Felice & Buttery, 2019) used a well-known language standard (i.e. CEFR levels) to indicate ground truth difficulty.

Pre-processing

Various surface-level features have been examined to explore how different syntactic structures of questions affect difficulty. Standard NLP techniques were used to perform basic textual analysis to i) pre-process item text or ii) extract syntactic/lexical features.

The text pre-processing step is therefore fundamental in most NLP-related tasks, and corresponds to the transformation of raw textual data into smaller, more defined components by removing the unnecessary textual elements such as punctuation and adverbs. Item text pre-processing typically includes the use of common NLP techniques such as stemming, lowercasing, stopword removal, chunking, Part of Speech (POS) tagging and lemmatisation (Beinborn et al., 2014; C. Lin et al., 2015; Sano, 2015; Susanti et al., 2017; Xue et al., 2020). Additionally, NLP parsers were used to extract syntactic/lexical features of questions by analysing their constituent words/sentences. For example, the Stanford NLP Parser (Manning et al., 2014) was used by the work of Yaneva et al. (2019) to extract syntactic features such as the count of negation, noun phrases and the average length of sentences and noun phrases, etc., which proved to be amongst the most effective predictors of difficulty.

Feature Extraction

The feature extraction methods that were utilised in the papers featured within this study can be categorised according to the level of understanding required to extract the textual features. There was a crucial distinction between syntactic and semantic levels of understanding. The syntactic level of understanding focuses on surface-level features of the input, such as word count or word length. The extraction of this type of superficial features is fairly simple, as it only requires the use of deterministic tools such as readability and complexity measures or traditional language models such as basic NLP parsers. In contrast, the semantic level is characterised by a deeper level of understanding, and focuses on the semantic representation of the input. State-of-the-art neural language models were used to compute features for this level. In the following subsections, we will discuss the various feature extraction methods that were employed in the studies examined, based onthe level of understating that they target. Table 5 summarises the most common feature extraction methods found in the literature.

Syntax-level Feature Extraction

When investigating sources of difficulty in textual questions, textual complexity plays an important role. The basic intuition here is that more textually complex questions require students to have more advanced language proficiency skills in order to read, understand and answer questions correctly. Determining the linguistic complexity of the question’s string is an intuitive difficulty measure that was utilised in a number of studies and is considered one of the basic tasks in NLP (Benedetto et al., 2020a, 2020b; Susanti et al., 2017; Yaneva et al., 2019). For this purpose, readability measures were commonly used to produce descriptive statistics that quantify how difficult a text (in our case, a question) is to read. The Flesch Reading Ease (Flesch, 1948) and the Flesch-Kincaid readability score (Kincaid et al., 1975) are two sophisticated readability measures that measure surface lexical features such as word length and sentence length; for example, if used within the context of a question, a high score in the Flesch Reading Ease test would indicate that the question is easier to read than one with a low score. Thus, the underlying intuition here is that a question’s readability level correlates with its level of difficulty. Benedetto et al. (2020a) used these readability indexes (in combination with other features) to measure text complexity of questions by counting features such as the number of words and average word length. However, on their own, the readability measures did not perform as well as other linguistic features such as TF-IDF. This result was consistent with the findings reported in Yaneva et al. (2019) which stated that the Flesch readability measures were rather weak predictors of itemdifficulty, demonstrating that easy and difficult questions cannot be distinguished through surface readability metrics. Likewise, Susanti et al. (2017) explored the use of readability indexes to measure the readability of reading passages of English language vocabulary questions, with the aim of examining its relationship with question difficulty. It was found that reading passage difficulty had the least influence on question difficulty.

For the same purpose of measuring the textual complexity of questions, some authors utilised corpus analysis software as a feature extraction method. These software were mainly used by linguists to find certain patterns in text. For example, the WordSmith software package (Scott, 2008) was used in the work by Pandarova et al. (2019) to measure the complexity of reading passages in reading comprehension questions by extracting features such as word length or the number of sentences/ clauses found in a question. A text complexity prediction system named TextEvaluator (Sheehan et al., 2013) was used in the work by Loukina et al. (2016) to generate multiple textual complexity features (e.g. the frequency of academicwords and the frequency of concessive and adversative conjuncts). This fully automated system generates features based on vocabulary lists and various NLP techniques such as tagging and automated parsing.

Term-frequency-inverse document frequency (TF-IDF) is a numerical measure that is commonly used in text mining and information retrieval to count the occurrences of certain words or n-grams to demonstrate how important that word is to a document in a corpus (Salton & McGill, 1986). It was mainly used in the context of item difficulty estimation to extract linguistic features; more specifically, to examine the relationship between difficulty and the important words that appear in the question. In the work by Benedetto et al. (2020b), TF-IDF was used to produce arrays of features from the input text (i.e. question and distractors) which were then used as an input for a regression module that was developed to estimate the difficulty of newly generated multiple choice questions (MCQs). Their model was able to predict difficulty with accuracy (RMSE = 0.807). The authors used the same measure in a following study, in combination with other measures, and were able to improve the accuracy of their model (RMSE = 0.753). In a separate study, Lin et al. (2015) investigated how TF-IDF could be used with RDF graphs (linked data), in contrast to simply being used with textual data. Their proposed hybrid measure called TF-IDF (DL), was used within the feature engineering process to initially transform named graphs into vectors, and subsequently measure the semantic similarity between these vectors using text-based cosine similarity measures. This feature was later used to control the difficulty of questions generated from RDF resources.

Given the simplicity of computing surface-level features, some studies (Hoshino & Nakagawa, 2010; Yaneva et al., 2019, 2020) have utilised publicly available basic NLP parsers to compute simple features (e.g. average sentence lengthand negation count), such as the Stanford NLP parser (Manning et al., 2014).

Semantic-level Feature Extraction

The semantic similarity of different textual elements found within questions (e.g. the stem, distractors, reading passage, etc.) was amongst the most investigated features in the difficulty estimation literature. This level of understanding goes beyond syntactic or lexical features and requires the extraction of semantic representations of the input. Within the field of NLP, semantic similarity is the process of measuring the relationship between texts or documents using a defined metric. To achieve this, textual items must be expressed numerically by transforming them into feature vectors that encode the meaning of words in a way that groups together words that are similar in meaning within the vector space, in a process known as word embedding. Alternatively, structured semantic models such as ontologies can be employed to measure semantic similarity between concepts that are present in the text of the question. In this subsection, we discuss the most common feature extraction methods that were used to extract semantic features. These include ontology-based similarity measures, neural language models (e.g. LSTM), and two types of word embedding: traditional (i.e. static) word embedding and contextualised word embedding. The different types of semantic-based difficulty features used by the studies in this review are discussed further in the “Semantic-based Features” section.

Rich semantic data models such as ontologies have been used to represent and support the extraction of semantic features. Ontologies have been frequently used in a number of recent studies because they provide an effective mechanism for explicitly representing a certain domain of knowledge in the form of concepts, which are connected through semantic relations (i.e. predicates). Therefore, textual mining of ontological components (i.e. concepts, predicates and instances) can help identify semantic sources of difficulty. The work of Alsubait et al. (2013) illustrates how to extract semantic features from ontologies. A similarity-based theory for controlling the difficulty of ontology-based auto-generated MCQs where concept similarity was used to select question distractors. According to their theory, distractors which contain concepts that are semantically similar tothe concept of the key increase the difficulty of MCQs. To illustrate this intuition, for distractors to be semantically similar to the key in an MCQ: 1) they should not have a sub-class relationship with the concept of the stem; and 2) their similarity to the key should exceed a certain threshold.

Recent studies have started to recognise the importance of context in the task of question difficulty prediction (as is the case with most NLP tasks). Thus, in the very recent literature, studies started to focus on context-aware embeddings suchas word2vec, ELMo and BERT. These techniques were utilised as an attempt to overcome the limitations of previous context-agnostic feature extraction models.

Textual analysis of reading comprehension questions was performed by Huang et al. (2017) through the use of word embeddings that were trained on a large-scale corpus using word2vec (Mikolov et al., 2013a, b). This process was carried out to allow the proposed difficulty prediction model to learn the textual features of a question from a semantic perspective. Similarly, word2vec was used by Hsu et al. (2018) to transform textual elements of the question into semantic vectors. The cosine similarity metric was then utilised to calculate the semantic similarity between the text of item elements; such that the semantic similarity was expressed through the distance between the vector representations of words. Word2vec-trained word embeddings were also used by Yaneva et al. (2019) to predict the difficulty of MCQs in high-stakes medical exams, in addition to other linguistic and psycholinguistic features. They demonstrated that word embeddings had the highest predictive power when conducting an ablation study to understand the contribution of each set of features.

Long short-term memory (LSTM) is a deep learning model commonly used in NLP. It is one of the neural language models that utilises the context of the question to improve the accuracy of prediction. It was used by Cheng et al. (2019) as a part of anend-to-end neural network to extract the question semantic structure. Bidirectional LSTM (BiLSTM) was employed by Bi et al. (2021); Qiu et al. (2019) and Gao et al. (2018) to encode the question text into a contextualised representation. For example, a difficulty prediction model for MCQs in medical exams was proposed in Qiu et al. (2019) that leveraged BiLSTM to compute the semantic representations for all question components (stem, options and medical text). After that, two types of difficulties were encoded using two different modules: a confusion difficulty module and a recall difficulty module. The final prediction was thus generated based on the aggregation of these two types of difficulties. The model was then compared to a number of end-to-end difficulty prediction models including SVM + TF-IDF, TACNN (Huang et al., 2017) after applying some changes, and two variants of the same model. It was found that the proposed model significantly outperformed all baselines with all metrics. However, LSTM or BiLSTM are not the best neural language models to capture the true meaning of words based on their context. This is mainly because they learn right-to-left and left-to-right contexts separately. Furthermore, this sequential computation of LSTM significantly increases the time needed for the neural net to learn.

The more recent literature (since 2019) has started experimenting with state-of-the-art contextualised word embedding techniques such as ELMo (Embeddings from Language Model) (Peters et al., 2017) and BERT (Bidirectional Encoder Representations fromTransformers) (Devlin et al., 2018). Unlike traditional word embedding techniques (e.g. word2vec and GLoVe), pre-trained contextual models deal with different prospects of words based on their usage in context. In this sense, they also address some of the limitations of LSTM. The context of the words is captured faster and more accurately since the learning process is conducted from both directions simultaneously. The success of these context-sensitive encoders for many NLP tasks has brought a great deal of interest in how these models can perform on the task of difficulty estimation.

In Xue et al. (2020), a transfer learning-based model using ELMo was proposed with an additional encoding layer based on Bi-LSTM to produce embeddings from the question textual elements (stem and options). The model was pre-trained on the task of response time prediction, to improve the accuracy of difficulty level predictions. It was found that transfer learning was in fact effective and item stem represented the most useful source of difficulty (RMSE = 23.32). In Yaneva et al. (2019, 2020), the authors model three types of difficulty features: 1) embeddings; 2) linguistic features (e.g. lexical and syntactic features); and 3) information retrieval features. They experiment with two types of embeddings, word2vecand ELMo, pre-trained on a task-specific corpus (MEDLINE abstracts).Footnote 11 The linguistic features were extracted using the Stanford NLP parser and readability measures. The combination of all featuresreported the best results (RMSE = 22.45 compared to ZeroR 23.65). As a result of an ablation study that was carried out to examine the effect of each set of features, they found that embeddings (word2ved and ELMo) and linguistic features were the strongest predictors of difficulty with comparable performance.

Other recent papers have started to apply transformer-based models to compute embeddings of the questions’ textual components. The first attempt to use BERT was in the work by Yeung et al. (2019) where the language models’ bi-directional contextual representation was utilised to generate distractors of controlled difficulty. The generation was based on the similarity of the context of the carrier sentence to distractors in a gap-filling MCQ. This was achieved by initially masking the target word (i.e. answer) in each carrier sentence, and then selecting the candidate words that were most highly ranked by BERT for the masked word according to their relative ranking in BERT. This allowed them to measure the semantic similarity of the context ofthe carrier sentence to the generated distractors. The authors compared their model to word embeddings trained by Skipgram (Mikolov et al., 2013a, b) by calculating cosine similarity between the distractor candidates and the answer. The results show that BERT outperforms the semantic similarity baseline in terms of correlation with human judgment.

A multi-task BERT (MTBERT) was used for the task of question difficulty prediction in Zhou & Tao (2020). The pre-trained BERT model was further pre-trained on an additional corpus, to then be fine-tuned for predicting the difficulty of programming problems. The proposed model achieved an accuracy of 67% over two neural network baselines; namely, basic BERT and BiLSTM. Additional pre-training for the transformers was also carried out in Benedetto et al. (2021) while using BERT and DistilBERT to predict the difficulty of MCQs. A dataset covering the same topics that were assessed by the questions was used for additional task-specific training. The transformers were evaluated against two existing models that leveraged TF-IDF (Benedetto et al., 2020b) and ELMo, and were found to produce better performance. In Loginova et al. (2021), the authors explored the possibility of modelling difficulty prediction of MCQs by using the uncertainty of question-answering models as a measure of difficulty. In other words, it was argued that machine-perceived difficulty correlates with human-perceived difficulty. For QA, they experimented with three transformers; namely, BERT, DistilBERT and XLNet, to produce raw softmax scores that were then converted into a unique value, which was subsequently used to represent the difficulty score for the question. This model was evaluated against three ELMo-based baselines targeting different similarity-based features (question and the passage, the answer and distractors and the answer and the passage). It was found that their model performed as well as other baselines, except for the comprehension questions, which were reported to be best predicted by the ELMo-based model.

Prediction

In spite of their diversity, all of the studies we considered had addressed the task of question difficulty estimation as a supervised problem, whereby a dataset containing questions labelled with difficulty levels (i.e. ground truth) was used to train a model to predict the difficulty level of new questions. A smaller number of studies (n = 10) did not necessarily use machine learning to train a prediction model, but rather employed NLP techniques to automatically extract the difficulty features, prior to performing a correlation analysis to compare them to the real (i.e. ground truth) difficulty labels. The overwhelming dominance of supervised learning techniques is likely due to the characteristics of the task itself. First, the majority of studiesconsidered the problem of question difficulty prediction as a multi-class classification task whereby the question can be classified as easy or difficult (i.e. binary classification) or easy, moderate, or difficult (i.e. multi-classification). This problem can be intuitively modeled using supervised learning methods where the categories are pre-defined, and the model is designed to capture them. Second, given the sensitive nature of the type of datasets required in this field (educational questions), the lack of availability of a large training data set hinders the application of unsupervised feature learning methods. Although the use of large training data sets is beneficial for any ML-based framework, supervised learning techniques tend to not require as large a data set as those required by unsupervised learning approaches. Furthermore, due to the inherent subjectivity of difficulty (in that it is often affected by previous knowledge or individual differences), there is high trust and general acceptance in the educational community of the difficulty scores produced by educational experts or calculated based on students’ performance (see “Evaluation Approaches for Item Difficulty Prediction” section). Supervised learning methods allow us to include the human perspective during the feature engineering process which results in models that are better aligned with humans’ perceptions of difficulty.

Input

The main type of input used by the different studies we examined were strings representing the question’s text. Different studies utilised different components of the question as input, depending on the objective of the study. For example, studies that examined the difficulty of MCQs typically extracted the features from the stem, answer, and distractors (Alsubait et al., 2016). The reading passage was typically added in reading comprehension MCQs as an additional source of difficulty (Huang et al., 2017). To process the question text efficiently, most studies transformed the question textual components into a feature vector using an automatic feature extraction method. Only four studies conducted a manual coding of features as the sole feature extraction method (Aryadoust, 2013; Perkins et al., 1995). The choice of manual feature extraction in older studies could be attributed to the simplicity of NLP techniques at the time.

In addition to the question’s textual components, some studies have used other types of textual input such as word lists (Yaneva et al., 2020). For example, Susanti et al. (2017) used JACET8000; a word list containing 8000 words labeled with difficulty levels for Japanese students learning English as a second language. Non-textual inputs were also employed in some studies (n = 7). For example, structured input such as ontologies and knowledge graphs were used as additional input for thepurpose of capturing semantic-level features from the questions (Faizan & Lohmann, 2018; Kurdi et al., 2021; Vinu & Kumar, 2020).

Output

The output of the difficulty models was either a continuous or categorical value depending on the way the task was modeled (i.e. regression or classification). The majority of studies produced continuous difficulty values (67%). The choice ofthe output format was also affected by the way ground truth difficulty was calculated. In models which produced difficulty scores (i.e. continuous values), ground truth labels were calculated using psychometric models such as IRT. Meanwhile, the categorical output was typically produced to be compared to difficulty labels obtained by asking educational experts to rate the difficulty level of questions. The cardinality of the difficulty classifications (categories) typically ranged between 2 (easy/difficult) to 5 (very easy, easy, medium, difficult, very difficult).

Learning Algorithms

Among the different machine learning methods, neural networks were the most commonly used type of supervised learning algorithm used for difficulty estimation (45%). Various types of neural networks were utilised depending on the paper’s objectives, such as Convolutional Neural Networks (CNN), Attention-based Neural Networks or Fully Connected Neural Networks (FCNN). In these studies, difficulty was equally modeled as a regression and a classification task. Neural network-based approaches were applied to various domains such as language, computer science and medicine. They were some of the first data-driven methods used in the item difficulty prediction literature. Perkins et al. (1995) utiliseda three-layer backpropagation ANN to predict the difficulty of reading comprehension items taken from a TOEFL test. The aim was to explore an unconventional approach that could outperform existing statistical approaches. Neural networks were praised for providing the means to explore non-linear relationships between variables, compared to statistical methods which assume a linear one. Moreover, the capabilities of neural networks to self-learn and adapt with minimal error rates are amongst the most frequently cited motivations for utilising neural networks for item prediction in the literature (Aryadoust, 2013; Perkins et al., 1995).

Older studies that leveraged a neural network architecture typically employed surface-level measures to automatically extract features or relied on manual feature extraction. For example, in the works of Aryadoust (2013) and Perkins et al. (1995), the manual feature extraction of syntactic features was performed. The result of this manual coding was then used to train a neural network model to predict the difficulty based on syntactic features such as word count and question type. Though these studies are somewhat outdated in several respects, their comparative perspective is still very informative for understanding the gradual growth of this research community. In contrast, recent neural network-based approaches (since 2017) have started using neural language models such as word embedding for the feature extraction component of the model. For instance, a pre-trained BERT was used in Zhou and Tao (2020) to predict the difficulty of programming problems. BERT was additionally trained on a task-specific corpus to compute word vectors from the programming problems which were then fed into an attention-based neural network classifier. A CNN was employed by Huang et al. (2017) to predict the difficulty of 30,000 reading comprehension MCQs collected from a standard English test. The question elements (i.e. the stem, options and the reading passage) were analysed for each question. Sentence representations werethen extracted from the item components using a CNN-based architecture. Finally, the difficulty level was determined by aggregating the semantic representation of all items’ components. This approach accurately predicted the difficulty of reading comprehension questions, outperforming that of the domain experts.

Other popular ML methods were Random Forest (RF) and Support Vector Machine (SVM) (14% and 17% respectively), as illustrated in Fig. 4. What is interesting is that all the studies that selected RF as a prediction model conducted an algorithm selection experiment to choose the algorithm that performs better on the question difficulty prediction task. It was consistently found across these studies that the RF regressor outperformed other ML algorithms such as Linear regression, SVM, Gaussian processes, fully connected neural networks and Decision Trees (Benedetto et al., 2020a; Xu et al., 2022; Yaneva et al., 2020).

In Yaneva et al. (2019, 2020), a Random Forest regressor was used to predict the difficulty of MCQs in high-stakes medical tests based on syntactic and semantic features. The syntax-level features were produced using the Stanford NLP parser in combination with readability measures. Meanwhile, the semantic-level features (word embedding) were captured using word2vec and ELMo. When compared to students’ performance, the proposed approach outperformed the baselines (ZeroR and simplified variants of the same model) with RMSE (22.45).

Similarly, SVM models were also trained using syntactic and semantic features. Beinborn et al. (2014, 2015) trained an SVM model to predict the difficulty of c-tests and cloze-tests (also sometimes referred to as gap-fillexercises) using syntactic features produced using a text classification toolkit (DKPro Core). Meanwhile, word embeddings trained using word2vec was few into an SVM classifier in Hsu et al. (2018) to predict the difficulty of MCQs in the social studies domain.

Statistics-based learning using regression was used in seven studies. The majority of these utilised simple feature extraction approaches, where the focus was specifically on features such as word/sentence length, word count, clause type or paragraph length (Pandarova et al., 2019; Settles et al., 2020; Trace et al., 2017).

Domains and Item Types

The majority of papers on difficulty prediction are domain-specific (as illustrated in Table 6). Language learning was found to be the most frequently investigated domain (55%), followed by Computer Science (15%) and Medicine (11%). Domain-independent (i.e. generic) studies accounted for 19% of publications. Other domains were in the minority, including mathematics and social studies. With regard to the different types of item formats that were investigated, Multiple Choice Questions (MCQs) were the most common. They constitute an important form of assessment questions that require the learner to select a correct answer (i.e. key) from a set of false options (i.e. distractors). Other question types such as gap-filling items and factual items were also common (Table 7). Furthermore, domain-specific questions that could not be categorised into the previous item types (e.g. programming problems) were studied.

Language Assessment

The popularity of the language learning and medical domains could be explained in part by: 1) the existence of several Standardised Test organisations that offer international and national language proficiency tests (e.g. TOEFL or IELTS); and 2) medical licensing examinations which require a massive number of frequently updated items. Difficulty estimation is considered a fundamental process in these types of tests as it ensures fairness and the comparability of ‘high-stakes’ formal examinations, which are used to inform important decisions with respect to certification and employment.

Given the nature of language assessment, in that the language proficiency of learners is typically evaluated, syntactic features were frequently examined in studies that focused on language learning questions. Earlier studies (i.e. since 2013) have mainly investigated how syntax-level linguistic features such as word or sentence length could affect question difficulty. For this purpose, publicly available textual analysis tools such as readability measures and corpus analysis software were employed. It was not until 2017 that researchers started to exploit the questions’ textual elements at the semantic level for question difficulty prediction in the language domain. Sophisticated language models such as word embeddings and Transformers have started to be applied for learning semantic features (Bi et al., 2021; Gao et al., 2018; He et al., 2021; Huang et al., 2017; Lin et al., 2019; Loginova et al., 2021; Susanti et al., 2020, 2017; Yeung et al., 2019).

As a result of the popularity of the language domain, various types of items have been examined, including reading comprehension (RC), cloze tests, c-tests and grammar questions (Table 8). The most investigated item type in language assessment were reading comprehension items in the form of: i) MCQs (Bi et al., 2021; Boldt & Freedle, 1996; He et al., 2021; Hutzler et al., 2014; Lin et al., 2019; Loginova et al., 2021; Perkins et al., 1995; Sano, 2015; Vinu & Kumar, 2017), ii) fill-in-the-gap (Choi & Moon, 2020) or iii) factual questions (Gao et al., 2018). Early examples of research considering the difficulty of reading comprehension questions include Boldt and Freedle (1996), Boldt (1998) and Perkins et al. (1995). The interest in investigating this type of question was consistent over the last decade until very recently (Bi et al., 2021; He et al., 2021; Loginova et al., 2021). Considering the unique structure of RC questions, multiple components of the question were typically studied, including the reading passage, the stem, and distractors (in the case of MCQs). Different language models were used to extract linguistic features of RC questions both on the syntactic and semantic levels. Earlier studies employed manual coding practices and corpus analysis tools to extract features (Boldt & Freedle, 1996; Hutzler et al., 2014; Perkins et al., 1995; Sano, 2015), while later studies benefitted from advancements in the NLP field and leveraged advanced methods such as neural language models (Bi et al., 2021; He et al., 2021; Huang et al., 2017; LLin et al., 2019; Loginova et al., 2021).

Cloze- and c-tests also received considerable attention in studies that focused on predicting the difficulty of questions in the language domain. These are two widely used types of reduced redundancy testing for language assessment. Both question formats result in a gap-filling question with different characteristics: full words are deleted from text in cloze tests; while only the second half of the word is deleted in c-tests. Difficulty prediction of this type of question targets the textual passage, the gap or both as sources of difficulty. In Hou et al. (2019), the complexity of the passage of cloze questions is examined based on two features: 1) mean token length; and 2) mean sentence length. A linear regression model was used to predict the difficulty based on these features, and the resulting analysis found a positive correlation between the predicted difficulty and students’ performance. The work of Beinborn et al. (2014, 2015) used a text classification toolkit to examine the difficulty of cloze and c-tests at both the passage and gap levels. A set of 70 linguistic features including the reading complexity of the passage and the difficulty of the target word were used to train an SVM model. A positive correlationwas reported between the ground truth difficulty and the predicted difficulty. None of the studies that examined cloze or c-tests employed advanced neural language models for the feature extraction of the proposed difficulty prediction model.

Other aspects of language assessment, such as grammar, vocabulary and listening comprehension, were also investigated using various questions formats such as closest in meaning (CIM) vocabulary and gap-filling grammar questions (Yeung et al., 2019). Simple and complex feature extraction methods were used in a series of studies by the same research group to predict the difficulty of CIM questions (Susanti et al., 2016, 2020, 2017). In the first two studies, the authors used readability measures and cosine similarity to extract the features for the CIM questions. However, in their latest paper (Susanti et al., 2020), they employed word embeddings (using GloVe) instead of cosine similarity to measure the similarity between the correct answer and distractors. They found that the GloVe-based word embeddings yielded a more accurate prediction of difficulty compared to other similarity measures.

Medicine

In contrast to the language domain, papers that investigated the domain of Medicine did not consider the difficulty of the language of the question, but rather focused on measuring the difficulty of the question’s domain-related content. For example, Kurdi et al. (2021) used the relation strength between medical concepts (e.g. symptoms or medical history) to measure the difficulty of medical case-based questions. Furthermore, Qiu et al. (2019) studied the effect of the level of similarity between questions and related medical documents on difficulty. The most popular question format in the domain of medicine was MCQs. This interest in MCQs is driven by the ability to explore different sources of difficulty, through the analysis of the relationship between item elements such as item stem, distractors and correct response. Indeed, various studies found a positive correlation between difficulty and the semantic similarity between: i) distractors (Alsubait et al., 2016); ii) the stem and distractors (Hsu et al., 2018; Settles et al., 2020); and iii) the stem and the correct answer (Susanti et al., 2017). Furthermore, MCQs are easier and faster to grade, which in turn can help provide students with prompt feedback. In studies that investigated medical questions, syntactic features were typically disregarded, and instead they mostly focused on extracting semantic level features using state-of-the-art language models such as word2vec, ELMo, LSTM or BiLSTM.

Computer Science

Programming problems represented the most studied question type in the domain of Computer Science. Given the unique structure of these questions, sources of difficulty were also unique and could only be applied to this type of questions. In Grivokostopoulou et al. (2015, 2017), search algorithm exercises were investigated for possible sources of difficulty. Exercise-specific features such as the number of nodes, the average children that the node has, the depth of thetree and the solution length were examined. The proposed model’s predictions were compared to those produced by experts and showed an average accuracy of 85%.

In a recent study, a difficulty prediction model for SQL problems was proposed (SQL-DP) (Xu et al., 2022). Both the problem stem and answer were used as sources of difficulty. In a similar way to many other difficulty prediction models, the stem textsemantic features were obtained using word2vec. For the answer, the authors used TBCNN (Mou et al., 2016), a framework that parses code into an Abstract Syntax Tree (AST) to capture the tree structure information of the code. These features were then used as input to a Random Forests model, and used to predict the difficulty of new SQL problems. It was found that SQL-DP consistently outperformed other similar frameworks.

Domain-independent Studies

Domain-independent studies accounted for the second largest number of studies (almost 19% of publications in this area). In contrast to many of the domain-specific studies, the main rationale for domain-generic studies was to explore the possibility ofproducing a generalisable difficulty prediction framework that could be applied to other domains. What is interesting about the approaches utilised in domain-independent studies is that almost all papers developed difficulty estimation models based on ontological features. Indeed, eight out of nine domain-independent studies proposed to control difficulty using semantic features that were extracted using ontologies or knowledge bases (e.g. Wikidata). Furthermore, these papers only estimated the difficulty of automatically generated questions, suggesting that semantic models such as the use of ontologies and knowledge bases can provide an effective means to model generic difficulty frameworks, that can be generalised across various domains. One limitation of these approaches is that the questions investigated are governed by the way they were generated (i.e. ontology-based question generation), and thus they were only used to estimate the difficulty of automatically generated questions from ontologies. This raises the question of whether these types of features can be also applied to human-authored questions, something worthy of further exploration. For example, future studies could use semantic annotation techniques such as entity linking or named entity recognition to link the concepts in human-authored questions to their counterparts in a knowledge base in order to apply semantic difficulty features.

Evaluation Approaches for Item Difficulty Prediction

Data Sources

As this systematic review focuses on the use of data-driven approaches for item difficulty prediction, each study therefore utilises one or more data-sets in building (or training) the predictive model of item difficulty, and evaluating the efficacy of the model itself. Such data-sets therefore should be labelled with students’ performance, either for use in the model generation, or to compare with the model output.

However, when examining the literature on item difficulty prediction, there was a dearth of publicly available data-sets of items that were labelled with such difficulty scores. This could explain why the evaluation of predictive models in this area is dominated by private data-sets (which are not available for use by other studies). Only 6 of the 55 featured studies have utilised a publicly available dataset. Meanwhile, the majority of studies (90%) used private datasets that were obtained using one or more of the following sources:

-

Collected from standardised tests;

-

Automatically generated;

-

Collected from relevant textbooks;

-

Collected from an online learning platform/ website;

-

Created by experts;

-

Collected from a university- or school-level course.

Figure 5 illustrates the different categories of data sources used in the context of item difficulty estimation.

Almost 27% of papers use items that are collected from known standardised tests, where the examination participants’ real performance results are also collected. This allows researchers to obtain a large number of test results from the performance of real participants; for example, Hsu et al. (2018) collected their data from a national standardised entrance examination labelled with the performance of 270,000 participants that took the exam. Interestingly, those studies that collected data from such tests also focus on the medical and language domains. This correlation supports our previous observation regarding the dominance of these domains in the literature under investigation ("Domains and Item Types" section).

The abundance of publications in the field of Automatic Question Generation (AQG) in the last few years may have also contributed to the rise in the number of publications in the area of automatic difficulty prediction research. This justification explains the fact that the second most used type of data is automatically generated items (about 27%). As opposed to traditional human-authored items, this new type of item has a number of differences that are related to its complexity level and the type of features investigated. They are normally less complicated than human-authored questions in terms of their structure and cognitive level. However, the majority of automatically generated questions have a simple structure that only addresses the first level of Bloom’s taxonomy; i.e. recall (Leo et al., 2019). With regards to difficulty features, the semantic similarity between the item components has frequently been investigated; moreover, such questions are governed by the way they were generated. For example, items that were generated from domain ontologies usually explore features which are driven from ontologies such as the strength of relation between predicates or the level of closeness of instances. Despite the ability of automatic generators to generate massive numbers of items, studies utilising these sources employed relatively small data-sets with an average of only 159 items.

In 16% of the studies considered, the questions were collected from online learning platforms or domain-related websites. For example, 1657 programming problems were collected from LeetCodeFootnote 12 and were labelled with the number of submitted solutions and the passing rate of the problem.

Domain-specific textbooks and preparation books were also used as data sources (14%). This type of data source was used in the language domain due to the availability of various preparation books which contain items that were used to train students on how to pass language proficiency tests. The remaining data sources included items that were hand-crafted by domain experts to address the specific objectives of the study (9%) and items collected from school- or university-level assessments (7%).

Focusing on studies that employed publicly available data-sets (n = 6), question corpora from the fields of machine reading comprehension and question answering (QA) systems were used to estimate the difficulty of reading comprehension questions (Bi et al., 2021; Gao et al., 2018; Loginova et al., 2021). The Stanford Question Answering Dataset (SQuAD) (Rajpurkar et al., 2016) contains 150 K questions in the form of paragraph-answer pairs extracted from Wikipedia articles. It was used by Bi et al. (2021) and Gao et al. (2018) for training and evaluating their difficulty prediction frameworks on the task of calibrating reading comprehension questions. Loginova et al. (2021) used the RACE dataset (Lai et al., 2017) which consists of 25,000 passages in English from school reading comprehension exams associated with 4 MCQs for each passage. Compared to SQuAD, this dataset requires advance reasoning skills to answer the questions. Given that the questions contained in these benchmarks are not annotated with difficulty labels, various techniques were used to label the questions with difficulty levels including crowdsourcing, employing QA systems to answer the questions or asking experts to manually annotate the questions after sampling a smaller subset. HotpotQA (Yang et al., 2018), which is another QA benchmark, was the only dataset that was manually annotated with complexity levels.

Evaluation Methods

When evaluating the performance of the difficulty prediction model, comparisons are typically made with respect to a baseline of the observed difficulty obtained from one or more of the following sources: 1) details of the students’ real performance; 2) difficulty labels provided by domain experts; or 3) a comparison with some other baseline (Table 9). The two most frequently applied evaluation method in the studies surveyed is through students performance and expert reviews (52% and 34% of studies for each method respectively). The first method is carried out by making a comparison with student performance by either utilising real or mock examination performance. This approach has been lauded for providing additional empirical evidence of difficulty as questions are typically validated through real-life or experimental testing environments with real student cohorts. However, it does require more time and effort than other approaches to administer the items to an appropriate sample of students and later calculate the observed difficulty scores using traditional measures such as IRT. Also, considerable effort is required to maintain ethical considerations with respect to such studies that include human participants. The average number of student participants in the studies reviewed here was around 719 students per study. Interestingly, those studies which collected data from standardised tests typically employed a larger student population than those that used other data sources, although this is arguably due to the fact that such tests constantly attract massive numbers of examinees.

The other commonly applied method is that of presenting items to a group of domain experts and obtaining their judgment on each item’s difficulty based on their experience. Of all of the publications considered, 34% compared their systems’ predictions with experts’ judgments. On average, three experts were recruited per paper, and each expert would typically be a subject teacher or item writer. These experts would be responsible for judging the difficulty of the items, based on the pre-defined features which might include syntactic, semantic or cognitive features. However, most studies did not provide the criteria by which experts rate the difficulty level. Despite the possible bias of this approach, it is nonetheless the primary evaluation method used by education institutions to validate and filter items besides pre-testing (Huang et al., 2017). Therefore, expert judgment is still considered an important indicator of difficulty level, despite the fact that several studies raised questions regarding the consistency and reliability of such baselines resulting from an approach which favours human intuition (Conejo et al., 2014; Thorndike, 1982; Wauters et al., 2012).

The third common evaluation method compared the proposed model to one or multiple baselines (14% of the studies reviewed here). This evaluation approach was always employed in combination with one of the two previously discussed evaluation methods. Three types of baselines were found to be used for performance comparison: 1) comparison with an existing difficulty prediction model; 2) comparison with another feature extraction technique; or 3) comparison with one or more variants of the same model.