Abstract

In this paper, I argue that the fields of artificial intelligence (AI) and education have been deeply intertwined since the early days of AI. Specifically, I show that many of the early pioneers of AI were cognitive scientists who also made pioneering and impactful contributions to the field of education. These researchers saw AI as a tool for thinking about human learning and used their understanding of how people learn to further AI. Furthermore, I trace two distinct approaches to thinking about cognition and learning that pervade the early histories of AI and education. Despite their differences, researchers from both strands were united in their quest to simultaneously understand and improve human and machine cognition. Today, this perspective is prevalent in neither AI nor the learning sciences. I conclude with some thoughts on how the artificial intelligence in education and learning sciences communities might reinvigorate this lost perspective.

Before we embark on the substance of this essay, it is worthwhile to clarify a potential source of confusion. For many, AI is identified as a narrowly focused field directed toward the goal of programming computers in such a fashion that they acquire the appearance of intelligence. Thus it may seem paradoxical that researchers in the field have anything to say about the structure of human language or related issues in education. However, the above description is misleading. It correctly delineates the major methodology of the science, that is, the use of computers to build precise models of cognitive theories. But it mistakenly identifies this as the only purpose of the field. Although there is much practical good that can come of more intelligent machines, the fundamental theoretical goal of the discipline is understanding intelligent processes independent of their particular physical realization. (Goldstein & Papert, 1977, pp. 84–85)

Over the past few decades, there have been numerous advances in applying artificial intelligence (AI) to educational problems. As such, when people think of the intersection of artificial intelligence and education, what likely comes to mind are the applications of AI to enhancing education (e.g., intelligent tutoring systems, automated essay scoring, and learning analytics). Indeed, this is the focus of the International Artificial Intelligence in Education Society: “It promotes rigorous research and development of interactive and adaptive learning environments for learners of all ages, across all domains” (International Artificial Intelligence in Education Society, n.d.). In this paper, I show that historically, artificial intelligence and education have been intertwined in more principled and mutually reinforcing ways than thinking of education as just another application area of artificial intelligence would suggest.

My goal is by no means to present a complete history of the field of artificial intelligence or the field of education research. I also do not intend to provide a detailed history of the field of artificial intelligence in education (AIED). Rather, my goal is to present a narrative of how the two fields of artificial intelligence and education have had intertwined histories since the 1960s, and how important figures in the development of artificial intelligence also played a significant role in the history of education research. I primarily focus on some of the leading researchers in the early history of AI (1950s-1970s) in the United States and (to a lesser extent) the United Kingdom.

The focus on early pioneers is meant to show that the very development of the field was intertwined with education research. This means that I do not focus on many important leaders of the field of AIED, as they are not typically recognized as major figures in the early history of AI at large; however, the history I speak to does intersect with the development of AIED, as described below. It also means this history focuses primarily on White male researchers. This is largely an artifact of who the active researchers were in the field of AI (and in academic research more broadly) at the time. It is important to acknowledge that many of these researchers worked with women and non-White researchers who may not be as well recognized today, and that the diversity of researchers working in these areas has naturally increased over the years. Moreover, most of the early work in “sanctioned” AI history was happening in the US and the UK, although there were likely AI pioneers elsewhere in the world. Whether researchers in other countries were also exploring the mutual interplay between AI and education in the early days of AI would be an interesting question for further research.

Although glimpses of this story are told in the histories of individual fields, to my knowledge, the intertwined histories of these two fields have never been fully documented. For example, in his insightful chapter, “A Short History of the Learning Sciences,” Lee (2017) highlights that the early days of the learning sciences had roots in artificial intelligence and cognitive science:

The so-called “cognitive revolution” led to interdisciplinary work among researchers to build new models of human knowledge. The models would enable advances in the development of artificial intelligence technologies, meaning that problem solving, text comprehension, and natural language processing figured prominently. The concern in the artificial intelligence community was on the workings of the human mind, not immediately on issues of training or education.

It is easy to read the above as suggesting that AI provided tools that were later applied by others to educational problems. While it is true to some extent that “the concern in the artificial intelligence community was…not immediately on issues of training or education,” the narrative I present below suggests that many AI pioneers were committed to advancing education. In another chapter, also called “A Short History of the Learning Sciences,” Hoadley (2018)—who, as an undergraduate, worked with AI pioneer Seymour Papert—makes only brief mention of how the birth of computing, AI, and cognitive science were among the many seeds of the learning sciences. Moreover, Pea (2016), in his “Prehistory of the Learning Sciences,” focused on specific people and events that led to the formation of the learning sciences, but did not explicitly mention the role that artificial intelligence played at all, aside from passing mentions of the Artificial Intelligence in Education community. In her seminal history of education research, Lagemann (2002) dedicates a few pages to the rise of cognitive science and, as such, mentions some of the pioneers discussed in this paper (mainly Simon and Newell), but she does not explicitly connect these figures to education research.

Histories of artificial intelligence fare no better. Nilsson’s (2009) 700-page book on the history of AI only makes a couple of passing remarks about how education intersected with that history. Pamela McCorduck’s humanistic account of the history of AI mostly only discusses education in the context of Papert’s work with Logo in a chapter called “Applied Artificial Intelligence” (McCorduck, 2004). Interestingly enough, even Howard Gardner, a prominent education researcher, makes almost no mention of education in his book on the history of cognitive science (Gardner, 1987).

The learning sciences and artificial intelligence are both fairly new fields, having only emerged a few decades ago. Therefore, much of the history presented in this paper is still held in the individual and collective memories of people who either played a role in this history or witnessed its unfolding. As such, it might seem odd that someone who was not alive for most of this history should be the one to write it. Nonetheless, perhaps the story will be slightly less biased if it comes from someone who was not involved in it and who had to reconstruct it from primary sources. Indeed, much of what is narrated here might be “obvious” to earlier generations of researchers in artificial intelligence or education, and as such, these researchers might face expert blind spots (Nathan et al., 2001) in constructing this narrative. If my own experience as a novice at the intersection of these two fields is any indication, this rich history is not obvious to newcomers. As time passes, if this history goes unwritten and untaught, I think what is obvious to current experts may be lost to the next generation of researchers in the fields of artificial intelligence, education, and AIED.

To construct this historical narrative, I used a combination of publications from the key figures involved, unpublished grey literature, historical sources, and archival material, especially from the Herbert Simon and Allen Newell Digital Collections. The paper alternates between sections focused on specific AI pioneers—describing their work in both AI and education—and sections focused on the formation of fields or subdivisions within fields that are relevant to this history. The sections on AI pioneers begin by describing their overall approach to AI research and end by discussing their direct and indirect contributions to education. The sections on fields discuss broader trends in the histories of AI and education that move beyond the specific pioneers. The majority of the paper spans work covering the 1950s-1990s. In the final section, I discuss where the relevant fields have headed since the 1990s, how the ethos present in earlier days of AI and the learning sciences has seemingly disappeared, and what we might do about that.

Overall, the historical narrative presented in this paper arrives at two overarching claims:

1. Early artificial intelligence pioneers were cognitive scientists who were united in the broad goal of understanding thinking and learning in machines and humans, and as such were also invested in research on education. The point is not just that they were cognitive scientists whose work had implications for education, but rather that these researchers were also at times directly involved in education research and had a significant impact on the course of education research. In this sense, such researchers differ from most AI researchers and most learning scientists today.

2. There were largely two different (and, at times, opposing) approaches, which manifested in various ways in both the history of AI and the history of education research.

I also made the second claim in another article (Doroudi, 2020), where I argued that there is a “bias-variance tradeoff” (a concept drawn from machine learning) between different approaches in education research. That article drew on similar examples from the histories of AI and education to make this point. However, the present paper puts such claims in a broader historical context and more clearly describes how the “two camps” have evolved over time. Moreover, juxtaposing the two overarching claims yields a picture in which early researchers who took different approaches to AI and education were nonetheless united despite their differences. The hope is that understanding and charting these historical trends can help make sense of and possibly repair ongoing fault lines in the learning sciences today, and perhaps reinvigorate this lost perspective of synergistically thinking about AI and education.

Simon and Newell: From Logic Theorist to LISP Tutor

In 1956, a workshop was held at Dartmouth College by the name of “Dartmouth Summer Research Project on Artificial Intelligence.” This event, organized by John McCarthy, along with Marvin Minsky, Nathaniel Rochester, and Claude Shannon, is widely regarded as the origin of artificial intelligence and the event that gave the field its name. Gardner (1987) singles out four of the workshop attendees—Herbert Simon, Allen Newell, Minsky, and McCarthy—as the “Dartmouth Tetrad” for their subsequent work in defining the field of artificial intelligence. After the formation of the American Association for Artificial Intelligence in 1979, Newell, Minsky, and McCarthy would all serve as three of its first five presidents.

The story I present here will begin with the work of three of the Dartmouth Tetrad (Simon, Newell, and Minsky), along with their colleagues and students. In this section, I begin by briefly describing the early pioneering work of Simon and Newell in the fields of artificial intelligence and psychology, and then discuss their contributions to education and how these related to their work in AI.

An Information-Processing Approach to AI

While the Dartmouth Workshop was a seminal event in the formation of AI, the first AI programs were being developed prior to the workshop. In 1955, Simon and Newell, professors at Carnegie Institute of Technology (now Carnegie Mellon University), along with J. C. Shaw, created the Logic Theorist, a program capable of proving logical theorems from Russell and Whitehead’s Principia Mathematica (a foundational text in mathematical logic) by manipulating “symbol structures” (Nilsson, 2009). Simon and Newell presented this program at the Dartmouth Workshop. Shortly thereafter, in a paper titled “Elements of a Theory of Human Problem Solving,” Newell et al. (1958) describe the Logic Theorist and its links to human problem solving:

The program of LT was not fashioned directly as a theory of human behavior; it was constructed in order to get a program that would prove theorems in logic. To be sure, in constructing it the authors were guided by a firm belief that a practicable program could be constructed only if it used many of the processes that humans use. (p. 154)

In this paper, the authors laid the foundations of information-processing psychology. In a follow-up paper, “Human Problem Solving: The State of the Theory in 1970,” Simon and Newell (1971) describe the theory of information-processing psychology and the strategy they had pursued in developing it over the preceding 15 years. The first three steps of their strategy culminate in the development of an artificial intelligence program like the Logic Theorist:

3. Discover and define a program, written in the language of information processes, that is capable of solving some class of problems that humans find difficult. Use whatever evidence is available to incorporate in the program processes that resemble those used by humans. (Do not admit processes, like very rapid arithmetic, that humans are known to be incapable of; p. 146)

But this was not the final destination; the next step in Newell and Simon’s strategy was to actually collect human data:

4. If the first three steps are successful, obtain data, as detailed as possible, on human behavior in solving the same problems as those tackled by the program. Search for the similarities and differences between the behavior of program and human subject. Modify the program to achieve a better approximation to the human behavior. (p. 146)

The fourth step of their procedure was carried out with extensive “think-alouds” of experts solving a variety of problem-solving tasks, such as cryptarithmetic, logic, chess, and algebra word problems. They followed what Ericsson and Simon (1980) would later formalize as the think-aloud protocol, which has since become a popular method for eliciting insights into human behavior in the social sciences, including education research.

Much of the theory articulated in their paper was about how experts solve problems, but how does a human learn to solve problems? Simon and Newell (1971) postulated a theory for how people might come to develop a means of solving problems in terms of what they called production systems:

In a production system, each routine has a bipartite form, consisting of a condition and an action. The condition defines some test or set of tests to be performed on the knowledge state...If the test is satisfied, the action is executed; if the test is not satisfied, no action is taken, and control is transferred to some other production. (p. 156)

Learning then becomes a matter of gradually accumulating the various production rules necessary to solve a problem. The development and analysis of production systems subsequently became an important part of information-processing psychology (Newell, 1973).
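To make the condition-action idea concrete, the following is a minimal Python sketch of a production system. It is not Simon and Newell's own formalism or code: the equation-solving domain (a*x + b = c), the hypothetical rule names (subtract-constant, divide-coefficient, read-answer), the first-match policy for choosing which production fires, and the treatment of learning as simply appending a missing rule are all illustrative assumptions.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Callable, Dict, List

# Hypothetical knowledge state: coefficients of a*x + b = c, plus "x" once solved.
State = Dict[str, Fraction]


@dataclass
class Production:
    """A condition-action pair (the 'bipartite form' of a production)."""
    name: str
    condition: Callable[[State], bool]  # test performed on the knowledge state
    action: Callable[[State], None]     # executed only if the test is satisfied


def run(productions: List[Production], state: State, max_cycles: int = 20) -> List[str]:
    """Repeatedly fire the first production whose condition holds; halt when none does."""
    fired: List[str] = []
    for _ in range(max_cycles):
        for production in productions:
            if production.condition(state):
                production.action(state)
                fired.append(production.name)
                break  # begin the next cycle from the top of the list
        else:
            break  # no condition was satisfied, so the system halts
    return fired


def subtract_constant(s: State) -> None:
    s["c"] -= s["b"]  # a*x + b = c  becomes  a*x = c - b
    s["b"] = Fraction(0)


def divide_coefficient(s: State) -> None:
    s["c"] /= s["a"]  # a*x = c  becomes  x = c / a
    s["a"] = Fraction(1)


def read_answer(s: State) -> None:
    s["x"] = s["c"]


SUBTRACT = Production("subtract-constant",
                      lambda s: "x" not in s and s["b"] != 0, subtract_constant)
DIVIDE = Production("divide-coefficient",
                    lambda s: "x" not in s and s["b"] == 0 and s["a"] != 1, divide_coefficient)
READ = Production("read-answer",
                  lambda s: "x" not in s and s["b"] == 0 and s["a"] == 1, read_answer)


def equation(a: int, b: int, c: int) -> State:
    return {"a": Fraction(a), "b": Fraction(b), "c": Fraction(c)}


# A hypothetical novice who has not yet acquired the division rule gets stuck...
novice = [SUBTRACT, READ]
# ...and "learning" is modeled, very crudely, as adding the missing production.
learner = novice + [DIVIDE]

stuck = equation(3, 4, 10)
print(run(novice, stuck), "x" in stuck)    # ['subtract-constant'] False
solved = equation(3, 4, 10)
print(run(learner, solved), solved["x"])   # ['subtract-constant', 'divide-coefficient', 'read-answer'] 2
```

Real production-system architectures resolve conflicts among several matching rules and acquire new rules in far more elaborate ways; the sketch only illustrates the condition-action form and the idea that a learner's competence grows as the necessary productions accumulate.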

Overall, this 1971 paper describes a program of research that simultaneously defined information-processing psychology, a major branch of cognitive psychology, and the symbolic approach to artificial intelligence that dominated the early days of the field. But this work has also shaped the development of educational theory and educational technology to the present day. At the end of their paper, Simon and Newell (1971) include a short section on “The Practice of Education,” which offers insight into how they conceived of their work’s impact on education. They framed that impact in terms of the need to develop a science of education:

The professions always live in an uneasy relation with the basic sciences that should nourish and be nourished by them. It is really only within the present century that medicine can be said to rest solidly on the foundation of deep knowledge in the biological sciences, or the practice of engineering on modern physics and chemistry. Perhaps we should plead the recency of the dependence in those fields in mitigation of the scandal of psychology’s meager contribution to education. (p. 158)

Simon and Newell (1971) then go on to explain how information-processing psychology could answer this call to improve educational practice:

The theory of problem solving described here gives us a new basis for attacking the psychology of education and the learning process. It allows us to describe in detail the information and programs that the skilled performer possesses, and to show how they permit him to perform successfully. But the greatest opportunities for bringing the theory to bear upon the practice of education will come as we move from a theory that explains the structure of human problem-solving programs to a theory that explains how these programs develop in the face of task requirements—the kind of theory we have been discussing in the previous sections of this article [i.e., production systems]. (p. 158)

However, Simon and Newell did not just leave it to others to apply information-processing psychology to advance education; they tried to directly advance education themselves.

Forgotten Pioneers in Education

In 1967, Newell and his student James Moore began developing an intelligent tutoring system, Merlin, which was fittingly meant to teach graduate-level artificial intelligence (Moore & Newell, 1974). However, for Moore and Newell (1974), this was a much bigger undertaking than simply creating a tutoring system; they were trying to create a system that could understand:

The task was to make it easy to construct and play with simple, laboratory-sized instances of artificial intelligence programs. Because of our direct interest in artificial intelligence, the effort transmuted into one of building a program that would understand artificial intelligence—that would be able to explain and run programs, ask and answer questions about them, and so on, at some reasonable level. The intent was to tackle a real domain of knowledge as the area for constructing a system that understood. (pp. 201–202)

In 1970, at a workshop on education and computing, Newell gave an invited talk entitled “What are the Intellectual Operations required for a Meaningful Teaching Agent?” Referring to his work on Merlin, Newell (1970) outlined 12 aspects of intelligence that he and Moore had found needed to be embodied in a meaningful teaching agent. Newell noted that there were two routes to automating intelligent operations in a computer: (1) automating that which is currently easy “for immediate payoff, at the price of finding that the important operations have been left untouched,” or (2) identifying “the essential intellectual operations involved” and automating those “at the price of unknown and indefinite delays in application.” Newell had opted for the second approach.

According to Laird and Rosenbloom (1992), “Merlin contained many new ideas before they became popular in mainstream AI, such as attached procedures, general mapping, indefinite context dependence, and automatic compilation” (p. 31). However, even after six or so years of work, Merlin apparently never became a working tutoring system, and its various parts were never put together into a coherent whole. As Laird and Rosenbloom (1992) recount,

Even with all its innovations, by the end of the project, Newell regarded Merlin as a failure. It was a practical failure because it never worked well enough to be useful (possibly because of its ambitious goals), and it was a scientific failure because it had no impact on the rest of the field. Part of the scientific failure can be attributed to Newell’s belief that it was not appropriate to publish articles on incomplete systems. Many of the ideas in Merlin could have been published in the late sixties, but Newell held on, waiting until these ideas could be embedded within a complete running system that did it all. (p. 31)

In the end, Newell had to pay the price of “indefinite delays in application.” Merlin is virtually undocumented in histories of intelligent tutoring systems (see, e.g., Nwana, 1990; Sleeman & Brown, 1982). The first intelligent tutoring system, SCHOLAR, was created by Jaime R. Carbonell in 1970. Had Newell taken the “immediate payoff” route to automation, he might have been credited with creating the first intelligent tutoring system.

In 1966, slightly before Newell began working on Merlin, Simon (1967) coined the term “learning engineering” (Willcox et al., 2016) in an address titled “The Job of a College President”:

The learning engineers would have several responsibilities. The most important is that, working in collaboration with members of the faculty whose interest they can excite, they design and redesign learning experiences in particular disciplines. (p. 77)

Simon remained interested in systematic efforts to improve university education and helped found the Center for Innovation in Learning at CMU in 1994 (Reif & Simon, 1994; Simon, 1992a, 1995). The center was dedicated to cross-campus research in education, including supporting a PhD program in instructional science (Hayes, 1996). Although at least some of Simon’s interest in this area stemmed from his passion for teaching as a university professor, his interest in the educational implications of cognitive science played a role as well. Indeed, the effort to form the Center for Innovation in Learning seemingly started in 1992 with a memo from Simon to the vice provost for education with the subject “Proposal for an initiative on cognitive theory in instruction” (Simon, 1992b). The concept of learning engineering seemingly gained widespread interest only in the 2010s, with the formation of campuswide learning engineering initiatives, including the Simon Initiative at CMU (named in honor of Herbert Simon), and the broader formation of the learning engineering research community: a group of researchers and practitioners, with backgrounds in fields such as educational technology, instructional design, educational data mining, learning analytics, and the learning sciences, who are interested in improving the design of learning environments in data-informed ways.

In 1975, Simon applied for and received a grant from the Alfred P. Sloan Foundation to conduct a large-scale study with other researchers at CMU on “Educational Implications of Information-Processing Psychology,” effectively elaborating on the ideas first suggested by Simon and Newell (1971). This grant had several thrusts, including teaching problem solving in a course at CMU and developing “computer-generated problems for individually-paced courses.” The longer-term objective for the latter thrust was that it “should also be extendable into a tutoring system that can diagnose students’ specific difficulties with problems and provide appropriate hints, as well as produce the answer,” a vision that would later be largely realized in the large body of tutoring systems coming out of Carnegie Mellon, as described below. Newell had already embarked on some of this work. In 1971, Newell created a method for automatically generating questions in an artificial intelligence course. Ramani and Newell (1973) subsequently wrote a paper on the automated generation of computer programming problems. Although they submitted the paper to the recently formed journal Instructional Science, it was never published. The work conducted under the grant, while of relevance to education, was mostly conducted under the auspices of psychology (e.g., studying children’s thinking).

Later, Zhu and Simon (1987) tested teaching several algebra and geometry tasks using only worked examples or problem-solving exercises and showed that both could be effective ways of learning these tasks compared with traditional lecture-style instruction. They also showed, using think-aloud protocols, that students effectively learned several production rules for an algebra factoring task. Finally, they showed that an example-based curriculum for three years of algebra and geometry in Chinese middle schools was seemingly as effective as traditional instruction and allowed students to learn the material in two years instead of three. Zhu and Simon (1987) constructed and sequenced their examples by postulating the underlying production rules; their claim, therefore, is that carefully constructed examples based on how experts solve problems can be an efficient form of instruction. This is one of the earliest studies comparing worked examples with problem-solving tasks and lecture-based instruction, and probably the earliest large-scale field experiment on the benefits of worked examples (Sweller, 1994). The use of worked examples was one of six evidence-based recommendations in the What Works Clearinghouse Practice Guide “Organizing Instruction and Study to Improve Student Learning,” which explicitly cited Zhu and Simon (1987) as one piece of evidence.

John R. Anderson joined Newell and Simon at Carnegie Mellon in 1978; he was interested in developing a cognitive architecture that could precisely and accurately simulate human cognition (American Psychological Association, 1995). He developed the ACT theory (standing for Adaptive Control of Thought) of human cognition, which has since evolved into ACT-R. After Anderson published his 1983 monograph “The Architecture of Cognition,” his ACT theory seemed complete; needing a way to improve it, he tried to break the theory by using it to create intelligent tutoring systems (American Psychological Association, 1995):

The basic idea was to build into the computer a model of how ACT would solve a cognitive task like generating proofs in geometry. The tutor used ACT’s theory of skill acquisition to get the student to emulate the model. As Anderson remembers the proposal in 1983, it seemed preposterous that ACT could be right about anything so complex. It seemed certain that the enterprise would come crashing down and from the ruins a better theory would arise. However, this effort to develop cognitive tutors has been remarkably successful. While the research program had some theoretically interesting difficulties, it is often cited as the most successful intelligent tutoring effort and is making a significant impact on mathematics achievement in a number of schools in the city of Pittsburgh. It is starting to develop a life of its own and is growing substantially independent of Anderson’s involvement.

Indeed, this effort led to an extensive body of work on intelligent tutoring systems at Carnegie Mellon and influenced research on such systems worldwide. As a result of these endeavors, in 1998, Carnegie Mellon researchers, including Anderson and his colleagues Kenneth Koedinger and Steve Ritter, founded Carnegie Learning Inc., which develops Cognitive Tutors for algebra and other subjects that are still being used by over half a million students per year in classrooms across the United States (Bhattacharjee, 2009). While Newell’s pioneering work on intelligent tutoring never saw the light of day, Anderson’s became very influential.

From the above, it is clear that Simon, Newell, and Anderson made several contributions to the field of education, but their impact on the field goes far beyond these direct contributions. In the 1950s, the predominant learning theory in education was behaviorism; due to the work of Simon, Newell, and their colleagues, information-processing psychology (or cognitivism) offered an alternative paradigm, which became mainstream in education in the 1970s. In the 1990s, Anderson and Simon, along with Lynne Reder, wrote a sequence of articles in educational venues that sought to dismiss new educational theories gaining popularity at the time, namely situated learning and constructivism, by marshaling evidence from information-processing psychology (Anderson et al., 1996, 1998, 1999). One of these articles, “Situated Learning and Education” (Anderson et al., 1996), was published in Educational Researcher, one of the most prominent journals in the field of educational research, and led to a seminal debate between Anderson, Reder, and Simon on the one hand and James Greeno on the other; Greeno had moved from being a proponent of information-processing psychology to being an influential advocate for the situative perspective (Anderson et al., 1997, 2000; Greeno, 1997). According to Google Scholar, Anderson et al. (1996) is currently the 25th most cited article in Educational Researcher. The ninth most cited article in the journal is one of many that tried to make sense of this debate: “On Two Metaphors for Learning and the Dangers of Choosing Just One” (Sfard, 1998). It is important to note that Anderson, Reder, and Simon were not proposing an alternative to trendy theories of learning (situativism and constructivism); rather, they were defending the paradigm that had predominated in educational research on learning after the heyday of behaviorism.

It should by now be clear that over the span of several decades, Simon, Newell, and Anderson simultaneously made direct contributions to education (largely as applications of their pioneering work in psychology) and helped shape the landscape of theories of learning and cognition in education. But beyond that, they were committed to reminding the education community that information-processing psychology provided the science that education needed to succeed. In their paper critiquing radical constructivism, Anderson et al. (1999) made a call for bringing information-processing psychology to bear on education research, similar to the call that Simon and Newell (1971) had made earlier, but with seemingly greater concern about the “antiscience” state of education research:

Education has failed to show steady progress because it has shifted back and forth among simplistic positions such as the associationist and rationalist philosophies. Modern cognitive psychology provides a basis for genuine progress by careful scientific analysis that identifies those aspects of theoretical positions that contribute to student learning and those that do not. Radical constructivism serves as the current exemplar of simplistic extremism, and certain of its devotees exhibit an antiscience bias that, should it prevail, would destroy any hope for progress in education. (p. 231)

But the proponents of these “antiscience” positions (radical constructivism and situativism) were no strangers to cognitive science; many of them had originally come from the information-processing tradition and from artificial intelligence itself. They turned away from it because, to them, it lacked something. So what was the science of Simon and Newell lacking?

The Situative Perspective as a Reaction to AI

If 1956 saw the birth of cognitive science and artificial intelligence, we might say that 1987 saw the birth of situativism, which emerged to address what its proponents saw as limitations of the information-processing approach (which also became known as cognitivism). In the 1980s, several researchers from a variety of fields independently developed related ideas about how cognition and learning are necessarily context-dependent, and how failing to take the situation into account can lead to gross oversimplifications. Lauren Resnick, the president of the American Educational Research Association in 1987, gave her presidential address on the topic of “Learning in School and Out” (Resnick, 1987), which synthesized work emerging from a variety of disciplines showing that learning in out-of-school contexts differs widely from in-school learning. In the same year, James Greeno and John Seely Brown founded the Institute for Research on Learning (IRL) in Palo Alto, California. This organization brought together many of the researchers who were thinking about the situated nature of cognition and learning, and it was highly influential in the turn that such research took over the next few years. Situativism is not really one unified theory but a conglomerate of particular theories developed in different fields. Given the different focus of each field, the terms “situated cognition,” “situated action,” or “situated learning” are often used. However, Greeno (1997) suggested that such terms are misleading, because “all learning and cognition is situated by assumption” (p. 16), and advocated for the term “situative perspective” instead.

The situative perspective is also related to and influenced by much earlier sociocultural theories drawing on the work of Vygotsky and other Russian psychologists, which gained attention in the US in the 1980s through the work of Michael Cole and others. It is also related to a number of overlapping theories that all emerged around the same time in reaction to cognitivism, such as distributed cognition (Hutchins et al., 1990; Salomon, 1993), extended mind (Clark & Chalmers, 1998), and embodied cognition (Johnson, 1989; Varela et al., 1991).

To those who are familiar with situativism, it is perhaps abundantly clear that it arose in reaction to the limitations of cognitivism as a theory of how people learn. What I suspect is less clear is the extent to which it arose in reaction to the broader field of artificial intelligence, and the extent to which AI influenced the thinking of the pioneers of situativism. Indeed, many of the early proponents of the situative perspective came from within the AI tradition itself but had seen limitations to the traditional AI approach. John Seely Brown and Allan Collins, who wrote one of the early papers advocating for situated learning (Brown et al., 1989; the second most cited paper published in Educational Researcher), had worked on some of the earliest intelligent tutoring systems (Brown et al., 1975a, b; Carbonell & Collins, 1973). Brown et al. (1975a) explicitly proposed a tutoring system rooted in production rules. Moreover, Brown in particular conducted core AI research on various topics as well (Brown, 1973; De Kleer & Brown, 1984; Lenat & Brown, 1984). Etienne Wenger, who coined the concept of “communities of practice” with Jean Lave, initially wanted to write his dissertation “in the context of trying to understand the role that artificial intelligence could play in supporting learning in situ,” but it “became clear fairly early on that the field of artificial intelligence as it was conceived of was too narrow for such an enterprise,” since “the traditions of information-processing theories and cognitive psychology did address questions about learning but did so in a way that seemed too out of context to be useful” (Wenger, 1990, p. 3). Lave and Wenger (1991) wrote the second most cited book in the field of education, and Wenger’s (1999) book on communities of practice is the third most cited book in education (Green, 2016).

Moreover, despite engaging in a debate with Anderson, Reder, and Simon, James Greeno acknowledged that he “had the valuable privileges of co-authoring papers with Anderson and with Simon and of serving as a co-chair of Reder’s dissertation committee” (Greeno, 1997, p. 5). Outside of education, another important pioneer of situated cognition, Terry Winograd, was a student of Seymour Papert and Marvin Minsky (whom we will discuss shortly) and made very important contributions to the early history of artificial intelligence. Two exceptions to this trend are Jean Lave and Lucy Suchman, who were anthropologists by training, but even they were operating in collaboration with AI researchers. For example, Suchman (1984) acknowledged John Seely Brown as having the greatest influence on her dissertation, which subsequently became an influential book in human-computer interaction and the learning sciences.

Thus, situativism clearly arose in reaction to the limitations of AI, but did AI have any further influence on the direction of situativist researchers? The majority of research in this tradition gravitated towards methods of deep qualitative inquiry, such as ethnography, to understand learning in situ, but some of the very pioneers of situativist theories still advocated for the use of computational methods to enhance our understanding of learning, a point I will return to in the final section of this paper. However, these computational methods did not gain much traction as researchers turned more and more towards qualitative methods for understanding learning in context. Much of the work in the learning sciences today is rooted in situativist theories of learning, but the origins of such theories as reactions to artificial intelligence would not be apparent without taking a historical look at the field.

Different Approaches to AI: Symbolic vs. Non-symbolic and Neat vs. Scruffy

While situativism arose in reaction to AI, it was not part of AI per se. Even AI researchers who adopted a situativist perspective gravitated towards other fields, such as anthropology and human-computer interaction, to conduct their work. However, within the field of artificial intelligence itself, there were also competing approaches that challenged the one taken by Simon, Newell, and their colleagues. I will now give a very high-level exposition of different approaches to AI research, in order to set the stage for how a competing approach resulted in a different line of inquiry in education as well.

The early days of AI, from the 1950s to the 1980s, were dominated by what is often called symbolic AI or good old-fashioned AI (GOFAI) (Haugeland, 1989), which is embodied in the work of Simon, Newell, and those influenced by their work. This approach stands in stark contrast to a competing approach that has taken a number of forms throughout the history of artificial intelligence but may be broadly characterized as non-symbolic or subsymbolic AI. The current dominant paradigm in AI is a type of non-symbolic AI: machine learning. Within machine learning, an increasingly popular approach is deep learning, which is rooted in an early approach called connectionism. Connectionism, which involves simulating learning via artificial neural networks, actually first emerged in the 1940s (McCulloch & Pitts, 1943), and so it predates the birth of AI, but it was not taken seriously in the early days of AI by researchers who supported symbolic AI (Nilsson, 2009; Olazaran, 1996). However, neural networks made their way back into mainstream AI after algorithmic advances, such as the popularization of the backpropagation algorithm in the 1980s, and they currently dominate the field of AI.

If connectionism and machine learning are the antithesis to symbolic AI, then what was the analogous antithesis to information-processing approaches to education? This is where the story gets a little complicated. As we have already seen, the pushback to information-processing psychology came from situativism and radical constructivism. But these theories share no immediately obvious relationship with neural networks. Interestingly, some connections have been drawn between connectionism and situative and constructivist theories (Doroudi, 2020; Quartz, 1999; Shapiro & Spaulding, 2021; Winograd, 2006), but these connections have not had practical import on approaches in education. However, there was another competitor to symbolic AI, which I believe is often obscured by the distinction between symbolic and connectionist approaches. To understand this other approach, we need to examine a different dichotomy in the history of AI: neats vs. scruffies.

The distinction was first introduced by Roger Schank in the 1970s (Abelson, 1981; Nilsson, 2009; Schank, 1983). According to Abelson (1981), “The primary concern of the neat is that things should be orderly and predictable while the scruffy seeks the rough-and-tumble of life as it comes.” Neats are researchers that take a more precise scientific approach that favors mathematically elegant solutions, whereas scruffies are researchers that take a more ad hoc and intuition-driven engineering approach. According to Kolodner (2002), who was Roger Schank’s student,

While neats focused on the way isolated components of cognition worked, scruffies hoped to uncover the interactions between those components. Neats believed that understanding each of the components would provide us with what we needed to see how they fit together into a working system of cognition. Scruffies believed that no component of our cognitive systems was isolated, but rather, because each depends so much on the workings of the others, their interactions were the key to understanding cognition. (p. 141)

Kolodner (2002) specifically refers to Simon, Newell, and Anderson as “quintessential neats,” and Schank, Minsky, and Papert as “quintessential scruffies” in AI. Extending the definitions to education, situativist and constructivist education researchers fall largely on the scruffy side of the spectrum. Therefore, to better understand the parallels in AI and education that rejected the information-processing perspective we must now turn to the founders of AI on the scruffy side (Minsky and Papert, and in a later section, Schank).

Papert and Minsky: From Lattice Theory to Logo Turtles

As mentioned earlier, Marvin Minsky was one of the Dartmouth Tetrad. Seymour Papert was not present at the Dartmouth Conference, but joined the AI movement early on when he moved to the Massachusetts Institute of Technology (MIT) in 1964, and formed the AI Laboratory with Minsky. I believe it is common to regard Minsky as one of the founders of AI and Papert as a seminal figure in educational technology. However, this is an oversimplification; Minsky and Papert both played important roles in the field of AI and in the field of education. They coauthored Perceptrons: An Introduction to Computational Geometry, an important technical book in the history of AI. Minsky’s book The Society of Mind was originally a collaboration with Papert (Minsky, 1988). Moreover, Papert acknowledges in one of his seminal books on education, Mindstorms, that “Marvin Minsky was the most important person in my intellectual life during the growth of the ideas in this book” (Papert, 1980). A recently published book edited by Cynthia Solomon, Inventive Minds: Marvin Minsky on Education, collects six essays that Minsky has written about education (Minsky, 2019). Furthermore, Minsky and Papert were both associate editors of the Journal of the Learning Sciences when it formed in 1991 (Journal of the Learning Sciences, 1991).

Minsky and Papert’s 1969 book Perceptrons played an important role in devaluing research on connectionism in the 1970s. According to Olazaran (1996), the book did not completely end all connectionist research, but it led to the institutionalization and legitimization of symbolic AI as the mainstream. While this may very well be true, I think it obscures Minsky and Papert’s actual positions in AI research by suggesting they were proponents of symbolic AI. Indeed, Olazaran (1996) claims they were symbolic AI researchers. Perhaps their role in “shutting down” perceptron research was seen as so large that other researchers were naturally inclined to situate them in the symbolic camp. Indeed, Newell (1969) wrote a very positive review of Perceptrons, reinforcing the idea that he was in the same camp as the authors. Moreover, Simon and Newell never, to my knowledge, entered into any public disputes or debates with Papert and Minsky over their approaches to AI. Perhaps they regarded each other with respect as early proponents of a new field who valued mathematical rigor and shared some common “foes”: connectionism and philosophical critiques of AI (Dreyfus, 1965; Papert, 1968). But in fact, their approaches were sharply different in both AI and education. This can be seen by taking a closer look at the work of Papert and Minsky; we will begin with their approach to AI research, followed by an exposition of Papert’s contributions to education (which, as outlined above, were developed in collaboration with Minsky).

A Piagetian Approach to AI

To understand the difference in approach, a bit of background on Papert is needed. Papert obtained two PhDs in mathematics in the 1950s, both on the topic of lattices. In 1958, he moved to Geneva, where he spent the next several years working with the famous psychologist and genetic epistemologist Jean Piaget, the founder of constructivism as a psychological theory. Piaget’s influence shaped Papert’s approach to both AI research and education: “If Piaget had not intervened in my life I would now be a ‘real mathematician’ instead of being whatever it is that I have become” (Papert, 1980, p. 215). In 1964, Papert moved to MIT to work with Minsky on artificial intelligence. Papert (1980) explains why he moved from studying children with Piaget to studying AI at MIT:

Two worlds could hardly be more different. But I made the transition because I believed that my new world of machines could provide a perspective that might lead to solutions to problems that had eluded us in the old world of children. Looking back I see that the cross-fertilization has brought benefits in both directions. For several years now Marvin Minsky and I have been working on a general theory of intelligence (called “The Society Theory of Mind”) which has emerged from a strategy of thinking simultaneously about how children do and how computers might think. (p. 208)

Minsky and Papert’s early approach to AI is well encapsulated in a 1972 progress report on their recently formed MIT AI Laboratory. After mentioning a number of projects that they were undertaking, Minsky and Papert (1972) describe their general approach:

These subjects were all closely related. The natural language project was intertwined with the commonsense meaning and reasoning study, in turn essential to the other areas, including machine vision. Our main experimental subject worlds, namely the “blocks world” robotics environment and the children’s story environment, are better suited to these studies than are the puzzle, game, and theorem-proving environments that became traditional in the early years of AI research. Our evolution of theories of Intelligence has become closely bound to the study of development of intelligence in children, so the educational methodology project is symbiotic with the other studies, both in refining older theories and in stimulating new ones; we hope this project will develop into a center like that of Piaget in Geneva.

Like Simon and Newell, Minsky and Papert were interested in studying both machine and human cognition, but some of the key differences between their approaches are apparent in the quote above. Minsky and Papert were interested in a wider range of AI tasks, like commonsense reasoning, natural language processing, robotics, and computer vision, all of which are prominent areas of AI today. Moreover, they were interested in children, not experts. Relatedly, they emphasized learning and development (hence the focus on children) over performance, which is markedly different from Newell and Simon’s emphasis on the study of performance. Indeed, according to Newell and Simon (1972),

If performance is not well understood, it is somewhat premature to study learning. Nevertheless, we pay a price for the omission of learning, for we might otherwise draw inferences about the performance system from the fact that the system must be capable of modification through learning. It is our judgment that in the present state of the art, the study of performance must be given precedence, even if the strategy is not costless. (p. 8)

Minsky (1977) later provided justification for this choice to focus on development as follows:

Minds are complex, intricate systems that evolve through elaborate developmental processes. To describe one, even at a single moment of that history, must be very difficult. On the surface, one might suppose it even harder to describe its whole developmental history. Shouldn't we content ourselves with trying to describe just the “final performance?” We think just the contrary. Only a good theory of the principles of the mind’s development can yield a manageable theory of how it finally comes to work. (p. 1085)

Later in their report, Minsky and Papert (1972) explicitly state limitations of research on “Automatic Theorem Provers” (without making explicit mention of Newell and Simon) such as the lack of emphasis on “a highly organized structure of especially appropriate facts, models, analogies, planning mechanisms, self-discipline procedures, etc.” as well as the lack of heuristics in solving proofs (e.g., mathematical insights used in solving the proof that are not part of the proof itself). They then use this to motivate the need for what they call “microworlds”:

We are dependent on having simple but highly developed models of many phenomena. Each model—or “microworld” as we shall call it—is very schematic…we talk about a fairyland in which things are so simplified that almost every statement about them would be literally false if asserted about the real world. Nevertheless, we feel they are so important that we plan to assign a large portion of our effort to developing a collection of these microworlds and finding how to embed their suggestive and predictive powers in larger systems without being misled by their incompatibility with literal truth. We see this problem—of using schematic heuristic knowledge—as a central problem in Artificial Intelligence.

Indeed, confining AI programs to tackling problems in microworlds or “toy problems” (another phrase attributed to Papert; Nilsson, 2009) became an important approach at MIT, and it remains one in AI more generally to this day. But as the quote above indicates, Papert and Minsky’s goal was to learn how to combine microworlds to develop intelligence that is meaningful in the real world. This is indicative of their general approach to AI: they were interested in building up models of intelligence in a bottom-up fashion. Rather than positing one grand “unified theory of cognition” (Newell, 1994), they realized that the mind must consist of many small interacting components, and that how minds organize many pieces of localized knowledge matters more than universal, general problem-solving strategies. It is the interaction of all these pieces that makes up intelligence and gives rise to learning.

This naturally leads to the question: how does the mind represent knowledge? Knowledge representation is a fundamental concern of AI (and an important but understudied concern in education as well). Minsky (1974) wrote one of the seminal papers on knowledge representation describing a representation he called “frames”:

Here is the essence of the theory: When one encounters a new situation (or makes a substantial change in one’s view of the present problem) one selects from memory a structure called a Frame. This is a remembered framework to be adapted to fit reality by changing details as necessary.

A frame is a data-structure for representing a stereotyped situation, like being in a certain kind of living room, or going to a child’s birthday party. Attached to each frame are several kinds of information. Some of this information is about how to use the frame. Some is about what one can expect to happen next. Some is about what to do if these expectations are not confirmed.

Frames allow one to navigate unforeseen situations in terms of situations one has seen before. This means that early on, one might make mistakes by extrapolating from a default version of a frame, but as a situation becomes clearer, one can customize the frame (by filling in certain “terminals” or “slots”) to meet the needs of the situation. Importantly, frames were meant to be relevant to a variety of areas of artificial intelligence, including computer vision, language processing, and memory (Goldstein & Papert, 1977).
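To make the slot-and-default idea concrete, here is a minimal Python sketch (Python 3.9 or later). The `Frame` class, its method names, and the birthday-party slots are hypothetical illustrations rather than Minsky's own notation; the sketch keeps only default expectations and their replacement by observations, and leaves out the procedural information and links between frames that Minsky (1974) attached to them.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    """A toy frame: a stereotyped situation whose slots start out with default values."""

    name: str
    defaults: dict[str, str]  # slot -> default assumption
    filled: dict[str, str] = field(default_factory=dict)  # slot -> observed value

    def fill(self, slot: str, value: str) -> None:
        # An observation replaces the default for this slot ("filling a terminal").
        if slot not in self.defaults:
            raise KeyError(f"{self.name!r} has no slot {slot!r}")
        self.filled[slot] = value

    def expect(self, slot: str) -> str:
        # Prefer what has been observed; otherwise fall back on the stereotype.
        return self.filled.get(slot, self.defaults[slot])

    def unconfirmed(self) -> list[str]:
        # Slots still resting on default assumptions (where mistakes are likely).
        return [slot for slot in self.defaults if slot not in self.filled]


party = Frame("child's birthday party", {"food": "cake", "activity": "games", "gift": "toy"})
print(party.expect("food"))  # cake  (default expectation)
party.fill("food", "pizza")  # the situation turns out differently
print(party.expect("food"))  # pizza
print(party.unconfirmed())   # ['activity', 'gift']
```

The early "mistake" in the text corresponds to acting on `expect` before a slot is filled; `unconfirmed` lists exactly the expectations that are still guesses.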

Frames became one component of Minsky and Papert’s broader bottom-up approach to artificial intelligence, outlined in Minsky’s famous book The Society of Mind, which, as mentioned earlier, was jointly developed with Papert. As the title suggests, Minsky (1988) describes the mind as a society of agents:

I’ll call Society of Mind this scheme in which each mind is made of many smaller processes. These we’ll call agents. Each mental agent by itself can only do some simple thing that needs no mind or thought at all. Yet when we join these agents in societies—in certain very special ways—this leads to true intelligence. (p. 17)

Ironically, this approach shares some commonalities with connectionism. Both posit a bottom-up process that gives rise to learning. Like Minsky’s agents, each individual neuron is not sophisticated; it is the connections among many neurons that make it possible to learn complex tasks. Indeed, in a chapter that “grew out of many long hours of conversation with Seymour Papert” (p. 249), Turkle (1991) classified both connectionism and the society of mind theory as part of “Emergent AI,” which arose as a “romantic” response to traditional information-processing AI. But there is a clear difference: each agent in Minsky’s society still performs an identifiable function of its own, and there are several distinct kinds of agents designed to play conceptually different roles. In their prologue to the second edition of Perceptrons, Minsky and Papert (1988) claim that “the marvelous powers of the brain emerge not from any single, uniformly structured connectionist network but from the highly evolved arrangements of smaller, specialized networks which are interconnected in very specific ways” (p. xxiii). When discussing the often dichotomized “poles of connectionist learning and symbolic reasoning,” Minsky and Papert (1988) further acknowledge that “it never makes any sense to choose either of those two views as one’s only model of the mind. Both are partial and manifestly useful views of a reality of which science is still far from a comprehensive understanding” (p. xxiii).

Papert: The Educational Thinker and Tinkerer

In tandem with developing this work in AI, Papert made critical advances in educational technology and educational theory. In 1966, Papert—along with Wallace Feurzeig, Cynthia Solomon, and Daniel Bobrow—conceived of the Logo programming language to introduce programming to kids (Solomon et al., 2020). (Bobrow was a student of Minsky’s and a prominent AI researcher in his own right who became president of AAAI in 1989.) According to Papert (1980), his goal was to design a language that would “have the power of professional programming languages, but [he] also wanted it to have easy entry routes for nonmathematical beginners.” Logo was originally a nongraphical programming language designed “for playing with words and sentences” (Solomon et al., 2020, p. 33), but early on Papert saw the power of adding a graphical component where children write programs to move a “turtle” (either a triangle on the screen or a physical robot connected to the computer) that traces geometric patterns (Papert, 1980).
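Logo itself is not reproduced here, but the following Python sketch gives a rough sense of what a turtle program computes. It models only the turtle's state (position and heading) and draws nothing; `Turtle`, `repeat_steps`, and the chosen side lengths and angles are hypothetical. It also records how far the turtle has turned in total, because a path that brings the turtle back to its starting position and heading always turns through a whole multiple of 360 degrees, the sort of process-oriented geometric fact that turtle programs let children discover by experiment.

```python
import math


class Turtle:
    """A display-free stand-in for a Logo turtle: just a position and a heading."""

    def __init__(self) -> None:
        self.x, self.y = 0.0, 0.0
        self.heading = 90.0       # degrees, counterclockwise from east; 90 = facing "up"
        self.total_turning = 0.0  # sum of all RIGHT turns, in degrees

    def forward(self, distance: float) -> None:
        # Move along the current heading (a real turtle would draw this segment).
        rad = math.radians(self.heading)
        self.x += distance * math.cos(rad)
        self.y += distance * math.sin(rad)

    def right(self, angle: float) -> None:
        # Turn clockwise by `angle` degrees, relative to the current heading.
        self.heading = (self.heading - angle) % 360
        self.total_turning += angle


def repeat_steps(t: Turtle, times: int, side: float, angle: float) -> None:
    """Analogue of Logo's REPEAT times [FORWARD side RIGHT angle]."""
    for _ in range(times):
        t.forward(side)
        t.right(angle)


square = Turtle()
repeat_steps(square, 4, 100, 90)   # Logo: REPEAT 4 [FORWARD 100 RIGHT 90]
star = Turtle()
repeat_steps(star, 5, 100, 144)    # Logo: REPEAT 5 [FORWARD 100 RIGHT 144]
print(square.total_turning, star.total_turning)  # 360.0 720.0
```

Both shapes end where they started, yet the star turns twice as far as the square; a child who notices this is reasoning about the process that draws a figure rather than about a static definition of it.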

In 1980, Papert published his seminal book, Mindstorms: Children, Computers, and Powerful Ideas, which described how he envisioned computers (through Logo-like programs) bringing about educational change. Papert took the idea of a “microworld” that he and Minsky had earlier used in AI and repurposed it as a core part of his educational theory. In fact, I believe those familiar with the concept of a microworld in Papert’s educational thought would likely not realize the AI origins of this concept, as he does not seem to explicitly link the two; to Papert, it was a natural extension. A microworld in Logo is “a little world, a little slice of reality. It’s strictly limited, completely defined by the turtle and the ways it can be made to move and draw” (Papert, 1987b). The fact that these microworlds were not completely accurate renditions of reality was not a disadvantage, but rather a testament to the power of the approach:

So, we design microworlds that exemplify not only the “correct” Newtonian ideas, but many others as well: the historically and psychologically important Aristotelian ones, the more complex Einsteinian ones, and even a “generalized law-of-motion world” that acts as a framework for an infinite variety of laws of motion that individuals can invent for themselves. Thus learners can progress from Aristotle to Newton and even to Einstein via as many intermediate worlds as they wish. (p. 125)
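A toy version of such a "generalized law-of-motion world" can be sketched in a few lines of Python, assuming a single object moving along a line in discrete time steps. The names (`aristotelian`, `newtonian`, `run`) and the simplifications (unit resistance in the Aristotelian rule, unit mass in the Newtonian one) are illustrative assumptions, not Papert's implementation, and only two of the worlds mentioned in the quote are modeled.

```python
from typing import Callable

# A "law of motion" maps (current velocity, applied force, time step) to a new velocity.
Law = Callable[[float, float, float], float]


def aristotelian(velocity: float, force: float, dt: float) -> float:
    # Motion needs a mover: speed tracks the push (unit resistance assumed),
    # so the object stops as soon as the push stops.
    return force


def newtonian(velocity: float, force: float, dt: float) -> float:
    # Force changes velocity (F = m * a with unit mass assumed);
    # with no force, the object keeps moving at constant speed.
    return velocity + force * dt


def run(law: Law, pushes: list[float], dt: float = 1.0) -> list[float]:
    """Apply one force per time step under `law`; return the position after each step."""
    position, velocity = 0.0, 0.0
    positions = []
    for force in pushes:
        velocity = law(velocity, force, dt)
        position += velocity * dt
        positions.append(position)
    return positions


pushes = [1.0, 1.0, 0.0, 0.0]  # push for two steps, then let go
print(run(aristotelian, pushes))  # [1.0, 2.0, 2.0, 2.0]
print(run(newtonian, pushes))     # [1.0, 3.0, 5.0, 7.0]
```

Because a law is just a function, a learner could swap in a rule of their own invention (say, one with friction) without changing `run`, which is roughly the spirit of the open-ended world described in the quote.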

To Papert, this would not confuse students but would rather help them understand central concepts like motion in more intuitive ways (Papert, 1980). On this view, many students (including the MIT undergraduates studied by Papert’s colleague Andrea diSessa) struggle with the concept of motion precisely because of the way they learn the underlying mathematics and physics; they do not get the intuition they would otherwise gain from experimenting with microworlds:

And I’m going to suggest that in a very general way, not only in the computer context but probably in all important learning, an essential and central mechanism is to confine yourself to a little piece of reality that is simple enough to understand. It’s by looking at little slices of reality at a time that you learn to understand the greater complexities of the whole world, the macroworld. (Papert, 1987b, p. 81)

Clearly this is a drastically different conception of learning from the one traditional information-processing psychology espouses. Here, learning is not an expert transmitting certain rules to a student, but rather the student picking up “little nuggets of knowledge” while experimenting with and discovering a world for themselves (Papert, 1987b). Moreover, not every child is expected to learn the same things; each child can learn something that interests them: “No two people follow the same path of learnings, discoveries, and revelations. You learn in the deepest way when something happens that makes you fall in love with a particular piece of knowledge” (Papert, 1987b, p. 82).

Logo thus became more than a tool for children to learn programming; it was also a tool to help children learn about and experience various subjects, including geometry, physics, and art. However, Logo did not teach these subjects as an intelligent tutoring system would; it allowed students to discover the powerful ideas in these domains (with guidance from a teacher and peers). As described by Abelson and diSessa (1986), two of Papert’s colleagues, “The abundance of the phenomena students can investigate on their own with the aid of computer models shows that computers can foster a style of education where ‘learning through discovery’ becomes more than just a well-intentioned phrase” (p. xiii). Moreover, as Abelson and diSessa (1986) explain, turtle geometry not only changes the way students interact with the content but also changes the nature of the geometric knowledge that students engage with:

Besides altering the form of a student's encounter with mathematics, we wish to emphasize the role of computation in changing the nature of the content that is taught under the rubric of mathematics….Most important in this endeavor is the expression of mathematical concepts in terms of constructive, process-oriented formulations, which can often be more assimilable and more in tune with intuitive modes of thought than the axiomatic-deductive formalisms in which these concepts are usually couched. As a consequence we are able to help students attain a working knowledge of concepts such as topological invariance and intrinsic curvature, which are usually reserved for much more advanced courses. (p. xiv)

This approach contrasts sharply with the proof-based geometry tutoring systems being developed by Anderson, Koedinger, and colleagues around the same time (Anderson et al., 1985; Koedinger & Anderson, 1990).

But what does Papert’s educational philosophy have to do with AI? In Mindstorms, Papert (1980) has a chapter titled “Logo’s Roots: Piaget and AI.” For Papert, Piaget provided the learning theory and epistemology that underpinned his endeavor, but AI allowed Papert to interpret Piaget in a richer way using computational metaphors: “The aim of AI is to give concrete form to ideas about thinking that previously might have seemed abstract, even metaphysical” (Papert, 1980, pp. 157–158). In a sense, his use of AI is similar to that of Newell and Simon: better understanding human intelligence by creating artificial intelligence. However, as we have already seen, his approach was quite different:

In artificial intelligence, researchers use computational models to gain insight into human psychology as well as reflect on human psychology as a source of ideas about how to make mechanisms emulate human intelligence. This enterprise strikes many as illogical: Even when the performance looks identical, is there any reason to think that underlying processes are the same? Others find it illicit: The line between man and machine is seen as immutable by both theology and mythology. There is a fear that we will dehumanize what is essentially human by inappropriate analogies between our “judgments” and those computer “calculations.” I take these objections very seriously, but feel that they are based on a view of artificial intelligence that is more reductionist [than] anything I myself am interested in. (Papert, 1980, p. 164, emphasis added)

Papert (1980) then gives a particular example of how AI has influenced his and Minsky’s thinking about how people learn: how a society of agents can give rise to Piagetian conservation. Conservation, in Piaget’s sense, is the understanding that a quantity stays the same even when its form changes (e.g., the amount of a liquid is unchanged when it is poured into a container of a different shape); children younger than about seven generally do not yet grasp it. Papert and Minsky argue that this phenomenon could begin to be explained by a set of four simple-minded agents and their interactions (Minsky, 1988; Papert, 1980). Unlike Simon and Newell, Papert and Minsky did not actually believe they had found the exact cognitive mechanisms that explain this phenomenon; rather, they had found insights into a process that could resemble it:

This model is absurdly oversimplified in suggesting that even so simple a piece of a child’s thinking (such as this conservation) can be understood in terms of interactions of four agents. Dozens or hundreds are needed to account for the complexity of the real process. But, despite its simplicity, the model accurately conveys some of the principles of the theory: in particular, that the components of the system are more like people than they are like propositions and their interactions are more like social interactions than like the operations of mathematical logic. (Papert, 1980, pp. 168–169)

This insight in turn presumably led Papert to recognize the kinds of educational experiences that students need in order to develop their “society of mind,” and thus the kinds of experiences that Logo-like microworlds would need to support. Moreover, according to Papert (1980):

While psychologists use ideas from AI to build formal, scientific theories about mental processes, children use the same ideas in a more informal and personal way to think about themselves. And obviously I believe this to be a good thing in that the ability to articulate the processes of thinking enables us to improve them. (p. 158).

Therefore, Logo provides an environment for children to articulate and think about their own thinking (just as the programming language Lisp allowed AI researchers to concretize their theories and models). Logo did not use AI directly, but it was designed to embody a theory of learning that was influenced by Papert and Minsky’s kind of AI.

Minsky and Papert’s approach to simultaneously studying AI and education was exemplified in a press release describing a 1970 symposium called “Teaching Children Thinking,” hosted by the AI Laboratory (Minsky & Papert, 1970).Footnote 6 The symposium was held before Minsky and Papert published their first AI progress report, and the press release pronounced:

The meeting is the first public sign of a shift in emphasis of the program of research in the Artificial Intelligence Laboratory. In the past the principle goals have been connected with machines. These goals will not be dropped, but work on human intelligence and on education will be expanded to have equal attention....plans are being developed to create a program in graduate study in which students will be given a comprehensive exposure to all aspects of the study of thinking. This includes studying developmental psychology in the tradition of Piaget, machine intelligence, educational methods, philosophy, linguistics, and topics of mathematics that are considered to be relevant to a firm understanding of these subjects. (Minsky & Papert, 1970)

The press release then goes on to state that “current lines of educational innovation go in exactly the wrong direction” (Minsky & Papert, 1970). It claimed that “The mere mention of the ‘new math’ throws them into a rage. So do most trends in the psychology of learning and in programmed instruction” (Minsky & Papert, 1970). Perhaps ironically, the symposium had a panel discussion led by Marvin Minsky, with Allen Newell and Patrick Suppes as two of the three panelists. Newell was working on Merlin at the time, and Suppes was pioneering efforts in computer-assisted instruction, much of which consisted of teaching elementary school students elementary logic and new math. One wonders how much rage was present in the panel discussion!

In the twenty-first century, Logo has not fundamentally changed education in K-12 schools. However, Papert did not see Logo as the solution, but rather as a model “that will contribute to the essentially social process of constructing the education of the future” (Papert, 1980, p. 182). In a sense, Logo and Papert’s legacy have succeeded in this regard. Many children’s programming languages that have gained popularity in recent years were either directly or indirectly inspired by Logo. Scratch, the popular block-based programming language for kids, was developed by Papert’s student Mitchel Resnick. Lego’s popular robotics kit, Lego Mindstorms, was inspired by Papert and named after his book. However, Logo was about more than just computer science education; to reiterate, it could help students learn about topics such as geometry, physics, art, and perhaps most importantly, their own thinking.

Moreover, Papert has had an immense impact on educational theory. His theory of constructionism took Piaget’s constructivism and augmented it with the idea that a student’s constructions are best supported by having objects (whether real or digital) to build and tinker with. This has been a source of inspiration for the modern-day maker movement (Stager, 2013). Many of Papert’s students and colleagues who worked on Logo were or are leading figures in the learning sciences and educational technology.Footnote 7 In addition, one of Papert’s students, Terry Winograd, made important contributions to AI before becoming one of the foremost advocates for situated cognition, as mentioned earlier. In fact, it appears that the seeds of situated learning and embodied cognition existed in Papert’s writings before the movement took off in the late 1980s (Papert, 1976, 1980). For example, Papert (1980) describes the power of objects like gears (his childhood obsession) and the Logo turtle in learning, by connecting the body and the mind:

The gear can be used to illustrate many powerful “advanced” mathematical ideas, such as groups or relative motion. But it does more than this. As well as connecting with the formal knowledge of mathematics, it also connects with the “body knowledge,” the sensorimotor schemata of a child. You can be the gear, you can understand how it turns by projecting yourself into its place and turning with it. It is this double relationship—both abstract and sensory—that gives the gear the power to carry powerful mathematics into the mind. (p. viii)

Beyond this legacy in educational technology and the learning sciences, Papert, who was an anti-apartheid activist in his youth in South Africa, should also be recognized as an education revolutionary, visionary, and critic who sought to fundamentally change the nature of schools. This places him in the ranks of Paulo Freire, Ivan Illich, and Neil Postman. Indeed, discussions with Freire influenced Papert’s thinking in The Children’s Machine: Rethinking School in the Age of the Computer, which Freire in turn called “a thoughtful book that is important for educators and parents and essential to the future of their children” (Papert, 1993, back cover).Footnote 8 However, unlike many technologists and entrepreneurs who want to “disrupt” education, Papert did not take a technocentric approach; in fact, he coined the term “technocentric” to critique such an approach, as he recognized that technology was secondary to “the most important components of educational situations—people and cultures” (Papert, 1987a, p. 23).

The Intertwined History of AI and Education in the UK

The narrative described so far is predominantly centered on the history of artificial intelligence and education in the United States. While the Dartmouth Tetrad are renowned for their pioneering contributions to AI, early AI research was also happening in the United Kingdom. In this section, I briefly show that many aspects of the intertwined history of AI and education in the US were paralleled in the work of AI pioneers based in the UK.

Donald Michie, who had worked with Alan Turing and others as a Bletchley Park codebreaker in World War II, was one of the earliest AI researchers in the UK (Nilsson, 2009). In 1960, he created the Matchbox Educable Noughts and Crosses Engine (MENACE), an arrangement of 304 matchboxes and some glass beads that, when properly operated by a human, could learn to play the game of noughts and crosses (or tic-tac-toe; Nilsson, 2009). In 1965, Michie established the UK’s first AI laboratory, the Experimental Programming Unit at the University of Edinburgh, which became the Department of Machine Intelligence and Perception a year later. In 1970, the UK’s Social Science Research Council (SSRC) awarded a $10,000 grant to Michie “for a study of computer assisted instruction with young children” (Annett, 1976).

In 1972, the SSRC awarded Jim Howe, one of Michie’s colleagues and a founding member of the Department of Machine Intelligence, a $15,000 grant to investigate “An Intelligent Teaching System” (Annett, 1976). Howe would receive several other grants from the SSRC over the next few years in the area of educational computing, including one on “Learning through LOGO” (Annett, 1976). Learning mathematics through Logo programming became a large project in Howe’s group, and several influential researchers in what would become the AIED community were part of that project, including Timothy O’Shea, Benedict du Boulay (who completed his PhD under Howe’s supervision), and Sylvia Weir (who joined Papert’s lab in 1978). This work included a focus on using Logo to help students with various disabilities (e.g., physical disabilities, dyslexia, and autism) learn basic communication skills (Howe, 1978). From 1977 to 1996, Jim Howe was the head of the Department of Artificial Intelligence, which evolved out of the Department of Machine Intelligence and Perception.

Michie and Howe continued to pursue educational technology research throughout their careers. For example, Michie and Bain (1989) argued that advancing machine learning was necessary for creating machines that can teach:

It is our view that the inability of computers to learn has been a principal cause of their inability to teach. It is becoming apparent from the emerging science of Machine Learning that the development of a theoretical basis for learning must be rooted in formalisms sufficiently powerful for the expression of human-type concepts. (p. 20)

That same year, Michie et al. (1989) also published a paper called “Learning by Teaching,” which advanced a relatively unexplored idea of using AI to support learning by having the student teach the computer using examples, rather than vice versa. In 1994, Michie, along with his wife and fellow AI researcher, Jean Hayes Michie, founded the Human-Computer Learning Foundation, a charity dedicated to enhancing education by designing software where “human and computer agents incrementally learn from each other through mutual interaction” (Human-Computer Learning Foundation, n.d.).

These UK-based leaders in AI were not merely engaged in cutting-edge applications of educational technology; like Simon, Newell, Papert, and Minsky, they pursued education as an extension of their investigation of cognition and learning in both humans and machines. According to Annett (1976),

the real significance of the Edinburgh work lies in its AI orientation. When the results of this project are available we may be able to reach some preliminary conclusions on the future viability of knowledge-based teaching systems, but complex problems are involved which will not be solved on the basis of these projects alone. If they are solved in technical and educational terms the question of cost still remains and even a sanguine estimate suggests it will be considerable. Nevertheless the investigation of technical and educational feasibility seems a reasonable aim not just in case implementation may be possible in the long term, but because of the light which could be thrown on some of the basic issues of the nature of “knowledge” and “understanding”. This [work]…is breaking new ground in ways of conceiving the nature of the teaching/learning process. (p. 11)

As Howe (1978) described it,

the learner is viewed as a builder of mental models, erecting for each new domain a knowledge structure that can be brought to bear to solve problems in that domain. Recent research in artificial intelligence suggests that building computer programs is a powerful means of characterising and testing our understanding of cognitive tasks (see, for example, Newell & Simon, 1972; Lindsay and Norman, 1972; Howe, 1975; Longuet-Higgins, 1976). An implication of the AI approach is that teaching children to build and use computer programs to explicate and test their thinking about problems should be a valuable educational activity.

A report written by a working party expressed a similar view. The working party included Christopher Longuet-Higgins, one of the founders of the Department of Machine Intelligence and Perception at Edinburgh, who coined the name “cognitive science” for the emerging field (Longuet-Higgins, 1973). According to the report,

advances of our understanding of our own thought processes are also critical for improvements in education and training….Computer aided instruction is already useful, but the realization of its full potential must depend on further advances in our understanding of human cognition and on our ability to write programs that make computers function in an intelligent way. (cited in Annett, 1976, p. 4)

Other researchers shared this intertwined approach to studying AI and education. For example, Gordon Pask was a leading cybernetician who, as early as the 1950s, was designing analog computing machines that could adaptively teach students; he was also doing what would aptly be called AI research at this time (but because his work fell under the field of cybernetics, it is typically omitted from the “sanctioned” history of AI). Pask (1972) tried to clarify the distinction between AI (or “computation science”) as a conceptual tool for reasoning about how people think and learn, and “computer techniques” as the infrastructure that enables computer-assisted instruction (CAI):

Computation science deals with relational networks and processes that may represent concepts; with the structure of knowledge and the activity of real and artificial minds. Computation science lies in (even is) the kernel of CAI; it lends stature to the subject and bridges the interdisciplinary gap, between philosophy, education, psychology and the mathematical theory of organisations. Computer techniques, in contrast, bear the same relation to CAI as instrument making to physics or reagent manufacture to chemistry. (p. 236)

The idea of fostering interdisciplinary research that bridged AI and education was also endorsed in a 1973 SSRC Educational Research Board report, where “It was proposed that ‘learning science’, a field involving education, cognitive psychology and artificial intelligence, should be supported…and probably in the form of a long term interdisciplinary research unit” (Annett, 1976, p. 4). The term “learning science” anticipated a field that would emerge in the US nearly 20 years later. Learning science did not take off as a new field in the UK in the 1970s, but a decade later, the seeds of another new field were being sown in the UK, and that is where we turn our attention next.

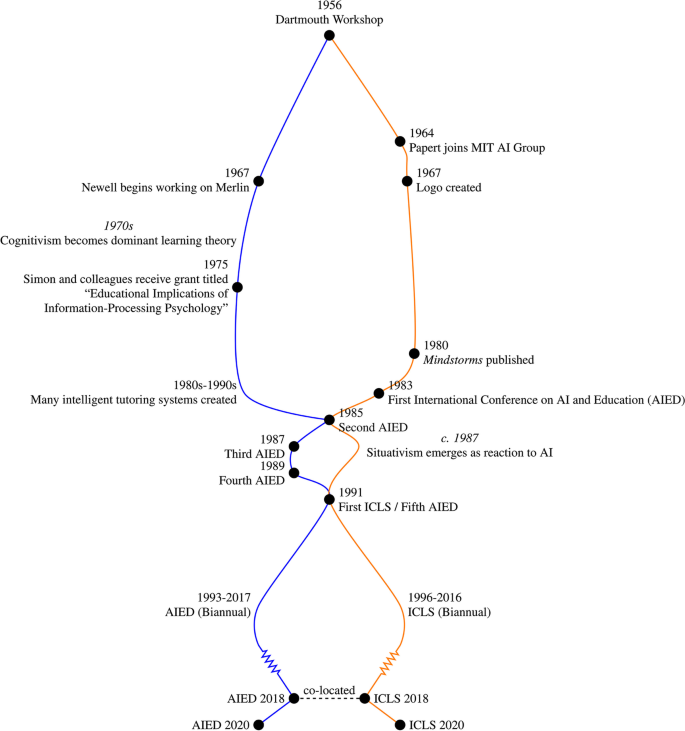

Artificial Intelligence in Education: The Field