Abstract

Aharonov’s weak value is a physical quantity obtainable by weak measurement, which admits amplification through state selections. The amplification is expected to be useful for precision measurement, but it is achieved at the expense of statistical deterioration due to the selections, which raises the question of whether it can provide a means truly superior to the conventional measurement. We approach this problem by taking measurement uncertainty into account, and present a probabilistic evaluation of its impact on the final result of weak measurement. By examining the significance condition for detecting the coupling \(g\), we show that the trade-off relation between the amplification effect and the statistical deterioration does permit a finite range of usable amplification where the superiority is ensured. Apart from the Gaussian state models employed for demonstration, our argument is mathematically rigorous and general; it is free from approximation and valid for arbitrary observables \(A\) and couplings \(g\).

1 Introduction

The novel physical quantity in quantum mechanics called the weak value, proposed earlier by Aharonov and co-workers [1, 2], has been attracting much attention in recent years (for a review, see, e.g., [3, 4]). One of the reasons for this renewed interest is that, unlike the standard physical value given by one of the eigenvalues of an observable \(A\), the weak value takes a definite value for any \(A\) and hence may be considered meaningful simultaneously even for a set of non-commuting observables. This inspired a new insight for understanding counter-intuitive phenomena, such as the three-box paradox [5] and Hardy’s paradox [6]. The other, arguably stronger, motive for the interest comes from the realisation that the weak value can be amplified by adjusting the process of its relevant measurement, weak measurement. Specifically, by properly choosing the initial and the final state (pre- and postselection) of the process, the weak value can be made arbitrarily large, and this may be utilised for precision measurement. In fact, it has been reported that a significant amplification was achieved to observe successfully the spin Hall effect of light [7], which is too subtle to see by the conventional measurement. A similar amplification has also been shown to be available for detecting ultrasensitive beam deflection in a Sagnac interferometer [8]. Given this situation, there has been intensive theoretical activity on the applicational merit of the weak measurement technique, of which we mention two major approaches here.

One of them concerns the question of signal amplification and its limit: does a limit on amplification exist, and if so, why? This was addressed recently in [9], which extended the treatment of [10] to the full order of the coupling \(g\) between the system and the measurement device, where the amplification is analysed based on the average shift of the meter device. For a special case in which the observable \(A\) fulfils the condition \(A^2 = \mathrm {Id}\) and the meter wave functions are assumed to be Gaussian, it has been shown that the amplification rate, as well as the signal-to-noise ratio, has an upper limit [11, 12]. No such limit arises if the meter state can be tuned precisely according to the weak value and the coupling [13].

The other approach is based on estimation theory, and addresses the question whether the technique of postselection can be statistically superior to the conventional one, both in the case where an ideal ‘noiseless’ experiment can be performed [15], and in the presence of certain types of statistical ‘noise’ [16–18]. Utilising the standard estimation theory [19–21], they examine the feasibility of improving parameter estimation of the coupling constant \(g\) by weak measurement. By comparing the quantum Fisher information of the whole system before and after the postselection, or its classical counterpart of the probability measure of the measured outcomes under such ‘noise’ before and after the postselection, it is demonstrated that the latter weighted by the survival rate is never greater than the former, concluding that in general the technique of postselection statistically deteriorates the quality of estimation.

We here provide a novel approach to the theoretical analysis on the merit of weak value amplification technique in view of measurement uncertainty, in which the significance of measurement can be examined explicitly. There, the concept of ‘measurement uncertainty’ [22, 23] is taken into consideration in a manner faithful to its original philosophy, which is to acknowledge the existence of unavoidable elements of uncertainty inherent to all measurements, including those which cannot be dealt with by the usual estimation theory, either in principle or in practice. The importance of this concept has been well recognised by experimental physicists who undertake precision measurement, and the theoretical analysis of weak measurement concerning the limit of signal amplification may also be implicitly motivated by its existence, but it has been mostly overlooked or inappropriately treated in the prevalent theoretical literature, with the aforementioned analysis based on estimation theory being no exception.

In this paper, the probabilistic evaluation of its impact on the final result of the measurement will be shown to be separable into instrumental, quantum, and nonlinear components. The merit of utilising weak measurement, or in general the technique of postselection, is then understood as the practice of taking advantage of the trade-off relation between reduced instrumental uncertainty due to its signal amplification effect, and statistical deterioration (increase in the quantum and nonlinear components) caused by the decrease in survival rate. This consolidates the aforementioned two approaches, which concern themselves with either the signal amplification effect or the statistical gain, and consequently explains our intuition in a unified manner. A numerical demonstration with Gaussian wave functions follows the qualitative argument, and the significance condition for the successful affirmation of the existence of a physical effect, which is one of the concerns shared by many weak measurement experiments including those mentioned in the beginning of this introduction, is shown to lead to the appearance of a finite range of useful weak value amplification. Apart from the Gaussian state analysis, our treatment is general; it is valid for an arbitrary-dimensional system with arbitrary observables \(A\) for the whole range of couplings \(g\), and no approximation is used throughout.

This paper is organised as follows. After recalling the process of weak measurement and the basic properties of the weak value in Sect. 2, we provide a brief review of the concept of measurement uncertainty in Sect. 3, along with an argument on probabilistically evaluating its impact on the final result of the measurement. The argument is then applied in Sect. 4 to both the conventional and weak measurements to compute their total uncertainties, and to derive the condition of significant measurement for the detection of the coupling constant \(g\). The validity of our argument is demonstrated numerically in Sect. 5 for weak measurement of an observable with two-point spectrum under Gaussian wave functions for the meter. Section 6 is devoted to the summary and conclusion.

2 Weak value and weak measurement

We begin by recalling the process of the conventional indirect measurement scheme for obtaining the expectation value, and consecutively that of the weak measurement for obtaining the weak value. In order to avoid unnecessary complication and to provide ease in readability, the following mathematical facts are stated in a laxer way than one finds in the mathematical literature, although they can be verified rigorously. Those who are interested in their exact statements with full proofs are referred to our previous work [24].

2.1 Preparation

Let \(\mathcal {H}, \, \mathcal {K}\) be Hilbert spaces associated to the system of interest and that of the meter device, respectively. We wish to find the value of an observable \(A\) of the system represented by a self-adjoint operator on \(\mathcal {H}\). This is done through the employment of observables \(Q\), \(P\) of the meter device, which are represented by self-adjoint operators on \(\mathcal {K} \) satisfying the canonical commutation relation \([Q, P] = i\hbar \) (we put \(\hbar = 1\) hereafter for brevity). The measurement is assumed to be of von Neumann type, in which the evolution of the composite system \(\mathcal {H} \otimes \mathcal {K}\) is described by the unitary operator \(e^{-igA \otimes P}\) with a coupling parameter \(g \in [0, \infty )\). Prior to the interaction, the measured system shall be prepared in some state \(|\phi _{i}\rangle \in \mathcal {H}\). Along with this preselection, the measuring device is also prepared in a state \(|\psi \rangle \in \mathcal {K}\). The state of the composite system evolves after the interaction into \(|\omega (g)\rangle := e^{-igA \otimes P} |\phi _{i} \otimes \psi \rangle \).

2.2 Conventional measurement

To obtain the (conventional) expectation value of \(A\) on \(|\phi _{i}\rangle \), we measure the observable \(X = Q\) of the measuring device and examine its shift in the expectation value \(\mathrm {Exp}_{X}(\psi ) := \langle \psi |X|\psi \rangle / \Vert \psi \Vert ^{2}\) before and after the interaction. Imposing certain mathematical conditions on \(|\phi _{i}\rangle \) and \(|\psi \rangle \) and defining the shift by

\[ \mathrm {\Delta }^{c}_{X}(g) := \mathrm {Exp}_{\mathrm {Id} \otimes X}(\omega (g)) - \mathrm {Exp}_{X}(\psi ), \tag{1} \]

one verifies

\[ \mathrm {\Delta }^{c}_{Q}(g) = g \cdot \mathrm {Exp}_{A}(\phi _{i}). \tag{2} \]

In addition, we have \(\mathrm {\Delta }^c_P(g) = 0\) for the case \(X=P\).
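Both statements follow from a short Heisenberg-picture computation, sketched here for convenience. It uses only \([Q, P] = i\) and the product form of the initial state, and assumes for simplicity that \(|\phi _{i}\rangle \) and \(|\psi \rangle \) are normalised:

\[
\begin{aligned}
e^{igA \otimes P}\,(\mathrm {Id} \otimes Q)\,e^{-igA \otimes P} &= \mathrm {Id} \otimes Q + g\, A \otimes \mathrm {Id}, \\
e^{igA \otimes P}\,(\mathrm {Id} \otimes P)\,e^{-igA \otimes P} &= \mathrm {Id} \otimes P, \\
\langle \omega (g) | \mathrm {Id} \otimes Q | \omega (g) \rangle - \langle \psi | Q | \psi \rangle &= g\, \langle \phi _{i} | A | \phi _{i} \rangle .
\end{aligned}
\]

The first line holds because \(ig\,[A \otimes P, \mathrm {Id} \otimes Q] = g\, A \otimes \mathrm {Id}\) commutes with \(A \otimes P\), so the commutator series terminates after one term. The shift in \(Q\) is therefore exactly linear in \(g\), while \(P\) is left untouched.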

2.3 Weak measurement

Now, to obtain the weak value of \(A\), we resort to the weak measurement, which can be seen as an extension of the conventional measurement described above. Namely, we choose a state \(|\phi _{f}\rangle \in \mathcal {H}\) of the system, and perform a projective measurement on the composite state \(|\omega (g)\rangle \). Outcomes that result in \(|\phi _{f}\rangle \) are kept, and the others are discarded. After this postselection, the composite state will be disentangled into \(|\phi _{f} \otimes \psi (g)\rangle \), where \(|\psi (g)\rangle := \langle \phi _{f}| \omega (g) \rangle \). We intend to extract information on the triplet \((A, |\phi _{i}\rangle , |\phi _{f}\rangle )\) from the above measurement, and to this end we choose an observable \(X = Q\) or \(P\) of the measuring device and examine its shift between the two selections:

\[ \mathrm {\Delta }^{w}_{X}(g) := \mathrm {Exp}_{X}(\psi (g)) - \mathrm {Exp}_{X}(\psi ). \tag{3} \]

Under certain mathematical conditions on \(|\phi _{i}\rangle \) and \(|\psi \rangle \), both of the functions \(g \mapsto \mathrm {\Delta }^{w}_{X}(g)\) are proven to be differentiable and well defined over an open subset of \([0, \infty )\). In particular, the functions \(\mathrm {\Delta }^{w}_{X}(g)\) are defined at \(g=0\) if and only if \(\langle \phi _{f} | \phi _{i}\rangle \ne 0\), in which case the derivatives at \(g=0\) read

with a constant,

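defined by the initial state \(|\psi \rangle \) of the meter, where \(\{Q, P\} := { QP} + { PQ}\) is the anticommutator. We also have

\[ \frac{d \mathrm {\Delta }^{w}_{P}}{dg}(0) = 2\, \mathrm {Var}_{P}(\psi ) \cdot \mathrm {Im} A_{w}, \tag{6} \]

where \(\mathrm {Var}_{X}(\psi ) := \mathrm {Exp}_{X^{2}}(\psi ) - \left( \mathrm {Exp}_{X}(\psi )\right) ^{2}\) is the variance of \(X\) on the state \(|\psi \rangle \), and

\[ A_{w} := \frac{\langle \phi _{f} | A | \phi _{i} \rangle }{\langle \phi _{f} | \phi _{i} \rangle } \tag{7} \]

is a complex-valued quantity called the weak value.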

From the weak measurement described above, the real and imaginary parts of \(A_{w}\) are obtained in the weakest limit \(g \rightarrow 0\) of the interaction, which is in accordance with the term ‘weak value’ assigned to \(A_w\). Incidentally, by imposing stricter mathematical conditions on the preselected state \(|\phi _{i}\rangle \), higher order differentiability of the shifts can also be ensured, and this may lead to the notion of ‘higher order weak values’, whose formulae are obtained analogously.

2.4 Remarks

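Before proceeding to the main topic of this paper, we mention that, for any orthonormal basis \(\mathcal {B}\) of the system \(\mathcal {H}\), the shifts of the weak and conventional measurements are related to each other by the following equality:

\[ \sum _{\phi _{f} \in \mathcal {B}} r(\phi _{i} \rightarrow \phi _{f})\, \mathrm {\Delta }^{w}_{X}(g) = \mathrm {\Delta }^{c}_{X}(g), \tag{8} \]

where, for normalised \(|\phi _{i}\rangle \), \(|\phi _{f}\rangle \) and \(|\psi \rangle \),

\[ r(\phi _{i} \rightarrow \phi _{f}) := \Vert \psi (g) \Vert ^{2} \tag{9} \]

is the survival rate of the postselection process in weak measurement, which tends to \(\left| \langle \phi _{f} | \phi _{i}\rangle \right| ^{2}\) for \(g \rightarrow 0\). In this respect, the well-known relation

\[ \sum _{\phi _{f} \in \mathcal {B}} \left| \langle \phi _{f} | \phi _{i}\rangle \right| ^{2} A_{w} = \mathrm {Exp}_{A}(\phi _{i}) \tag{10} \]

between the weak and expectation value can be accounted for as a special case of the relation (8), which states that the effect of postselections disappears completely when it is averaged over with their corresponding survival rates.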

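We also note that the weak value \(A_{w} \) can take any complex value by an appropriate choice of states in the two selections for a nontrivial observable \(A\). Indeed, observing that

\[ A |\phi _{i}\rangle = \mathrm {Exp}_{A}(\phi _{i})\, |\phi _{i}\rangle + \sqrt{\mathrm {Var}_{A}(\phi _{i})}\, |\chi \rangle \tag{11} \]

holds for any normalised \(|\phi _{i}\rangle \) with \(|\chi \rangle \) being a normalised vector orthogonal to \(|\phi _{i}\rangle \), one can choose the postselection as \(|\phi _{f}\rangle = c\, |\phi _{i}\rangle + |\chi \rangle \) with \(0 \ne c \in \mathbb {C}\) to find

\[ A_{w} = \mathrm {Exp}_{A}(\phi _{i}) + \frac{\sqrt{\mathrm {Var}_{A}(\phi _{i})}}{c^{*}}. \tag{12} \]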

Clearly, one can change freely the value of \(A_{w} \) by choosing \(c\) in \(|\phi _{f}\rangle \) appropriately, unless \(|\phi _{i}\rangle \) happens to be an eigenstate of \(A\) for which \(\mathrm {Var}_{A}(\phi _{i}) = 0\). This is a remarkable property of the weak value and can be contrasted to the conventional spectrum and expectation value which are always real-valued, and bounded when \(A\) is bounded.
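The construction above can be checked numerically. The following is a minimal sketch, assuming finite-dimensional vectors stored as NumPy arrays; the helper names `weak_value` and `postselection_for` and the target value are illustrative and not taken from the original work.

```python
import numpy as np

def weak_value(A, phi_i, phi_f):
    """Weak value <phi_f|A|phi_i> / <phi_f|phi_i> (np.vdot conjugates its first argument)."""
    return np.vdot(phi_f, A @ phi_i) / np.vdot(phi_f, phi_i)

def postselection_for(A, phi_i, target):
    """Return an unnormalised |phi_f> = c|phi_i> + |chi> whose weak value equals `target`."""
    phi_i = phi_i / np.linalg.norm(phi_i)
    mean = np.vdot(phi_i, A @ phi_i).real       # Exp_A(phi_i) for self-adjoint A
    residual = A @ phi_i - mean * phi_i         # equals sqrt(Var_A(phi_i)) * |chi>
    sigma = np.linalg.norm(residual)            # sqrt(Var_A(phi_i))
    if np.isclose(sigma, 0.0):
        raise ValueError("phi_i is an eigenstate of A; the weak value cannot be tuned")
    if np.isclose(target, mean):
        raise ValueError("target equals Exp_A(phi_i); no finite c achieves it")
    chi = residual / sigma
    c = np.conj(sigma / (target - mean))        # solves A_w = Exp_A + sqrt(Var_A) / conj(c)
    return c * phi_i + chi

# Illustrative choice: spin component S_z = sigma_z / 2, preselected along x.
S_z = np.diag([0.5, -0.5])
phi_i = np.array([1.0, 1.0]) / np.sqrt(2)
phi_f = postselection_for(S_z, phi_i, 25 + 3j)
print(weak_value(S_z, phi_i, phi_f))
```

The printed weak value lies far outside the spectrum \(\{-1/2, 1/2\}\) of the observable, at the cost of a small overlap \(\langle \phi _{f} | \phi _{i}\rangle \) once \(|\phi _{f}\rangle \) is normalised.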

3 Measurement under uncertainty

In the previous section, we have reviewed the measurement scheme of both the expectation and the weak value of an observable on a quantum system. We now return to the main topic of this paper, which addresses the question of how we can understand the merit of weak value amplification technique, which initially seems to lead to a conflicting intuition between improved signal-to-noise ratio through amplification effect and statistical deterioration caused by postselection. The key to its unified apprehension is an appropriate understanding and modelling of the general concept of measurement uncertainty and its proper evaluation.

3.1 Measurement uncertainty

It is an inescapable fact that all experiments are affected by technical imperfection in one way or another, and consequently one of the most basic and important tasks in experimental physics is to properly evaluate its effect on the results obtained. For this purpose, a modern and appropriate treatment of this unavoidable imperfection has been outlined in [22, 23] under the concept of measurement uncertainty, whose use is widely encouraged in the literature of contemporary metrology in place of the more conventional terms ‘error’ or ‘noise’. One of the important aspects of the concept is the observation that, fundamentally, we can never have perfect knowledge of every technical imperfection in actual measurements, and consequently we may never know what the intended ‘true’ value is. Hence it is advocated that the concept of measurement uncertainty quantify the level of doubt about the quantity obtained by measurement, rather than the level of error, which is defined as the difference between the obtained quantity and the true value; the latter definition of the imperfection requires knowledge of what we cannot know in the first place.

As a simple example, consider the situation where a high-precision optical measurement is performed inside a laboratory located in an urban area. Since the optical system can never be completely isolated from the environment, the measured outcomes are affected by numerous factors: seismic contribution from the earth and the traffic, electromagnetic contribution from wireless communication, intractable defect on the instrument itself and so on, not forgetting the human factors on the experimenter side. Regardless of every effort made by the experimenter, the sources of uncertainty cannot be completely tracked down and eliminated, nor can their behaviour be fully understood even statistically, either in principle or in regard of cost-effectiveness. Inevitably, there remains some level of doubt on the final result obtained, which typically originates from a complicated combination of a vast range of partially unknown technical imperfection.

The treatment of the technical imperfection in our work is deeply inspired by this philosophy. In view of this, to integrate the measurement uncertainty into quantum measurement in a manner faithful to its original idea, it is vital for us to incorporate ‘uncertainness’ in some form other than what is usually done in conventional works based on the standard statistical analysis. One example where this problem occurs is the modelling of the imperfection of the measurement device that disperses the ‘ideal’ outcomes into the actual measured outcomes, even though the behaviour of the former, fundamentally speaking, can never be known perfectly. Many traditional treatments [16–18] model this by a certain map from the probability measure of the ideal measurement to that of the actual outcome, which implicitly assumes that the experimenter has perfect knowledge of the behaviour of the dispersion, and hence stands in the position of an omniscient ‘daemon’. This is, of course, a situation far from likely in actual experimental settings. In this respect, it is desirable to develop a general framework for modelling the measurement uncertainty and evaluating its impact on the result obtained in quantum metrology, but for our present purpose of capturing the essence of the merit of weak value amplification in the presence of measurement uncertainty, the following simple model called the \(\delta \)-uncertainty bar model shall suffice.

The \(\delta \)-uncertainty bar model assumes a global interval of halfwidth \(\delta \ge 0\) within which the intended ideal outcomes and the experimental outcomes always lie. This is a simple model that can represent partial knowledge of the behaviour of technical imperfection in experiments, which occur typically when one has incomplete knowledge of the behaviour of dispersion of the measured outcomes caused either by the defect of the measuring device or by remaining unknown effects of external environmental conditions. Note that even if one assumes the existence of an ideal probability measure of the ideal outcomes, the expected measured outcomes under this incomplete description of the dispersion may not necessarily result in a probability measure. Now, in evaluating the impact of the uncertainty, we resort to a simple computational trick as demonstrated in the next paragraph.

3.2 Evaluation of uncertainty

Suppose that we are to measure a value \(F(\phi )\) when the system is in the state \(|\phi \rangle \in \mathcal {H}\). Usually, this is done by measuring an appropriate observable \(X\) of the system (or POVM in general) with the spectral decomposition

\[ X = \int _{\mathbb {R}} x \, dE_{X}(x), \tag{13} \]

where \(E_{X}\) is the spectral measure of \(X\), which is the general notation for the familiar \(X = \sum _{i} a_i |a_i\rangle \langle a_i|\), and is valid including the case where the spectrum of \(X\) is continuous. Specifying a quantum state \(|\phi \rangle \) results in a probability measure \(\Vert E_{X}(\cdot ) \phi \Vert ^{2}\), by which the probabilistic behaviour of the ideal measurement outcomes is described. Let \(\{ \tilde{x}_{1}, \ldots , \tilde{x}_{N} \}\) be the measured outcomes of \(X\) under measurement uncertainty. It is then of interest to find the best estimator \(f\) that minimises its expected deviation from the intended value,

\[ \left| F(\phi ) - \frac{1}{N} \sum _{n=1}^{N} f(\tilde{x}_{n}) \right| , \tag{14} \]

under a finite number \(N\) of measurements.

Now, the \(\delta \)-uncertainty bar model implies the existence of a sequence of ideal outcomes \(x_{n} \in [\tilde{x}_{n} - \delta _{X}, \tilde{x}_{n} + \delta _{X}]\) for each measured outcome \(\tilde{x}_{n}\) under uncertainty. A sequence of triangle inequalities yields

where

and

being naturally the expected average of the ideal outcomes.

Since we know nothing of the behaviour of the dispersion \(x_{n} \rightarrow \tilde{x}_{n}\) except that their difference is bounded by \(\delta _X\), the upper bound

of the first component of the inequality (15) ought to be evaluated. The proper method of its evaluation for general \(f\) is beyond the scope of this paper, and we shall focus on the case where \(f\) is linear, i.e. \(f(x) = ax + b\) for some \(a, b \in \mathbb {R}\). For that case, we readily have the following evaluation:

\[ \upsilon ^{\delta _{X}}_{f(X)} = |a|\, \delta _{X}. \]
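Explicitly, for linear \(f(x) = ax + b\) the offset \(b\) cancels and the bound reduces to a single application of the triangle inequality. The following sketch assumes only \(|\tilde{x}_{n} - x_{n}| \le \delta _{X}\) for every \(n\), as in the \(\delta \)-uncertainty bar model:

\[
\left| \frac{1}{N} \sum _{n=1}^{N} f(\tilde{x}_{n}) - \frac{1}{N} \sum _{n=1}^{N} f(x_{n}) \right|
= \frac{|a|}{N} \left| \sum _{n=1}^{N} (\tilde{x}_{n} - x_{n}) \right|
\le \frac{|a|}{N} \sum _{n=1}^{N} |\tilde{x}_{n} - x_{n}|
\le |a|\, \delta _{X} .
\]

The value \(|a|\, \delta _{X}\) is attained when every outcome is displaced by \(\delta _{X}\) in the same direction, so it cannot be improved without further knowledge of the dispersion. In particular, this component does not decrease with the number \(N\) of measurements; it can only be reduced through the slope \(a\) of the estimator.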

The evaluation of the second component \(\kappa ^{N}_{f(X)}(\phi )\) of the inequality (15), which arises from the finiteness of the number \(N\) of repeated measurements actually performed, admits the familiar statistical treatment based on the quantum state description. Since the ideal outcomes \(x_{n}\) are intrinsically probabilistic, we learn from a concentration inequality that the deviation almost certainly vanishes in the limit \(N \rightarrow \infty \) (law of large numbers). In particular, Chebyshev’s inequality [25] yields a convenient probabilistic estimation, which states that the probability of obtaining \(\kappa ^{N}_{f(X)}(\phi )\) to be less than a value \(\kappa \) is bounded as

\[ \mathrm {Prob}\left[ \kappa ^{N}_{f(X)}(\phi ) < \kappa \right] \ge 1 - \frac{\mathrm {Var}_{f(X)}(\phi )}{N \kappa ^{2}}. \tag{22} \]

If one demands that the lower bound (the r.h.s. of (22)) be a desired value \(0 < \eta < 1\), then by solving for \(\kappa \) in terms of \(\eta \), one obtains the desired probabilistic estimation of the deviation \(\kappa ^{N}_{f(X)}(\phi )\) as

\[ \kappa ^{N}_{f(X)}(\eta ; \phi ) := \sqrt{\frac{\mathrm {Var}_{f(X)}(\phi )}{N (1 - \eta )}}, \tag{23} \]

which is specified by the probability \(\eta \).
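As a minimal sketch of this step, the function below evaluates the Chebyshev radius obtained by setting the lower bound \(1 - \mathrm {Var}/(N\kappa ^{2})\) equal to \(\eta \) and solving for \(\kappa \). The function name and the sample numbers are illustrative assumptions.

```python
import math

def quantum_uncertainty(variance, n_samples, eta):
    """Chebyshev radius kappa such that P(|sample mean - mean| < kappa) >= eta.

    Obtained by solving 1 - variance / (n_samples * kappa**2) = eta for kappa.
    """
    if not 0.0 < eta < 1.0:
        raise ValueError("eta must lie strictly between 0 and 1")
    if n_samples < 1 or variance < 0.0:
        raise ValueError("need n_samples >= 1 and variance >= 0")
    return math.sqrt(variance / (n_samples * (1.0 - eta)))

# Illustrative numbers: unit variance, 10^4 repetitions, 95 % assurance.
kappa = quantum_uncertainty(1.0, 10_000, 0.95)
print(kappa)
```

The radius shrinks as \(1/\sqrt{N}\) and diverges as \(\eta \rightarrow 1\). Chebyshev’s inequality is distribution-free and therefore conservative; it is used here because it requires only the variance.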

Combining the above arguments, by defining the total uncertainty of the measurement as

we see that the deviation (14) of the estimate of \(F(\phi )\) by the estimator \(f\), with a finite number \(N\) of measurements under the \(\delta \)-uncertainty bar model, is expected to be bounded by

with probability greater than \(\eta \). Note that the set of ideal outcomes \(\{x_{n}\}\) assumed in the above argument is for auxiliary purpose only, and does not appear in the final results. For an intuitive physical understanding of each contribution of uncertainties in (24), we call in this paper (at the cost of neutrality) the first component \(\upsilon ^{\delta _{X}}_{f(X)}\) the instrumental uncertainty, the second component \(\kappa ^{N}_{f(X)}(\eta ; \phi )\) the quantum uncertainty, and the last component \(\beta _{f(X)}(\phi )\) the bias of the estimator, respectively. The choice of the estimator \(f\), unlike in the usual arguments in estimation theory, results in a trade-off relation among the three components of the total uncertainty.

4 Uncertainty of measurements and merit of weak value amplification

The question of whether the weak measurement technique may improve measurement in any sense is admittedly an extremely broad and complicated problem. In this section, we address this question in view of uncertainty, by considering a particular situation to which many recent experiments utilising weak measurement relate [7, 8], that is, the measurement of an extremely small interaction \(g\) in the presence of a relatively large measurement uncertainty. We shall demonstrate that this indeed leads to a positive answer.

We consider the measurement settings discussed in Sect. 2, and assume for the sake of simplicity that only the measurement of \(Q\) on the quantum state after the interaction is affected by uncertainty. We shall compute the total uncertainty in each setting of conventional and weak measurement in the following two paragraphs, and give a discussion by comparing the results in the last paragraph.

4.1 Uncertainty of conventional measurement

For the measurement of \(g\) in the conventional case, we choose an unbiased estimator \(f(x) = (x - \mathrm {Exp}_{Q}(\psi ))/ \mathrm {Exp}_{A}(\phi _{i})\) of \(g\) based on the relation (2) to obtain the total uncertainty

of the conventional measurement. Note that we have used the formula

for the \(Q\) variance of the meter on the entangled state \(|\omega (g)\rangle \).

4.2 Uncertainty of weak measurement

Without loss of generality, we consider only the case of the measurement in \(Q\), and put \(\mathrm {Im}A_{w} = 0\) for brevity. Based on the Eq. (4), which is in turn equivalent to

we choose a (in general) biased estimator \(f(x) = (x - \mathrm {Exp}_{Q}(\psi )) / \mathrm {Re}A_{w}\), (\(\mathrm {Re}A_w \ne 0\)) of \(g\) for the weak measurement case. Although this is not the optimal estimator, this is what is actually used in the experiments cited above, and hence is sufficient for the purpose of demonstration.

Now, in evaluating the uncertainty, since the process of obtaining \(N^{\prime }\) out of \(N\) outcomes generally depends on the postselection in relation to the preselection, we must also take the survival rate (9) into account. To discuss the uncertainties along this more realistic line, note that the probability that \(N^{\prime }\) out of \(N\) survive is given by the binomial distribution:

\[ \mathrm {Bi}\left[ N^{\prime }; N, r\right] := \binom{N}{N^{\prime }}\, r^{N^{\prime }} (1 - r)^{N - N^{\prime }}, \tag{29} \]

with \(r\) given by the survival rate (9). To each of these \(N^{\prime }\) outcomes, inequality (15) holds with the lower bound of the probability (22). Thus, the average probability that the measurement yields outcomes within the quantum uncertainty \(\kappa \) is given by the sum over all possible \(N^{\prime }\),

To ensure the overall uncertainty level by some \(0 < \eta < 1 - \mathrm {Bi}\left[ 0; N,r(\phi _{i}\rightarrow \phi _{f})\right] \), we may put \(\eta = {H}^{N}_{f(Q)}(\kappa )\). This relation can be solved for \(\kappa \) to obtain the inverse

since each term in the sum (30) is a continuous and strictly monotonically increasing function in \(\kappa \). From this, the uncertainty of estimating \(g\) by weak measurement is given by

The third term (bias) in (32) is due to the nonlinearity of the shift with respect to \(g\), which cannot be ignored for nonvanishing \(g\) in realistic settings, while the choice of the estimator is linear.
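The effect of the survival rate on the quantum uncertainty can be sketched numerically. The code below assumes that the averaged probability is the binomial-weighted sum of the Chebyshev lower bounds \(1 - \mathrm {Var}/(N^{\prime }\kappa ^{2})\) over \(N^{\prime } = 1, \ldots , N\), with the variance treated as a given number; this form, the function names, and the sample values are assumptions made for illustration. Under this assumption the relation can be inverted in closed form.

```python
import math

def binom_pmf(k, n, r):
    """Bi[k; n, r]: probability that k of n prepared pairs survive postselection."""
    return math.comb(n, k) * r**k * (1.0 - r) ** (n - k)

def averaged_bound(kappa, variance, n, r):
    """ASSUMED form of H(kappa): the Chebyshev lower bound 1 - variance/(k*kappa^2),
    averaged over the binomial weights of k = 1..n survivors."""
    return sum(binom_pmf(k, n, r) * (1.0 - variance / (k * kappa**2))
               for k in range(1, n + 1))

def postselected_kappa(eta, variance, n, r):
    """Solve averaged_bound(kappa) = eta for kappa (closed form for the assumed H)."""
    p_none = binom_pmf(0, n, r)                 # chance that nothing survives
    if not 0.0 < eta < 1.0 - p_none:
        raise ValueError("need 0 < eta < 1 - Bi[0; N, r]")
    mean_inverse = sum(binom_pmf(k, n, r) / k for k in range(1, n + 1))
    return math.sqrt(variance * mean_inverse / (1.0 - p_none - eta))

# Illustrative numbers only (not taken from the source).
N, r, variance, eta = 200, 0.05, 1.0, 0.9
kappa_post = postselected_kappa(eta, variance, N, r)
kappa_conv = math.sqrt(variance / (N * (1.0 - eta)))   # all N outcomes kept
print(kappa_post, kappa_conv)
```

For equal variance, the postselected radius is never smaller than the one obtained when all \(N\) outcomes are kept, and it diverges as \(\eta \) approaches \(1 - \mathrm {Bi}[0; N, r]\). The sketch isolates this counting effect only; the direct float evaluation of the binomial weights is adequate for moderate \(N\) and would need log-space arithmetic for very large \(N\).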

4.3 Merit of weak value amplification

By comparing (26) and (32), one immediately observes that the contribution of the instrumental uncertainty can be made much smaller in the case of weak measurement by amplification of the weak value. To be more specific, suppose that \(A\) is a bounded operator, as in the situation of the aforementioned experiments where \(A\) has only a two-point spectrum. Since the numerical range \(W(A) := \{\langle \phi |A| \phi \rangle : \Vert \phi \Vert = 1 \}\) is bounded for a bounded operator \(A\), there exists a limit to the extent to which the instrumental uncertainty can be suppressed. In contrast, recall that the weak value can take an arbitrary complex value by an appropriate choice of the pair of initial and final states. Hence, by amplifying the real part \(\mathrm {Re}A_{w}\) of the weak value outside of \(W(A)\), the impact of the instrumental uncertainty can in principle be reduced at will.

However, this improvement can be attained only at the price of statistical deterioration. This is a consequence of the fact that, since amplification of the weak value in general results in smaller survival rates, the quantum uncertainty of weak measurement, in which the postselection process is involved, tends to be much larger than that of the conventional case, especially when one requires higher probability \(\eta \) assurance. Indeed, since it is always possible that none out of \(N\) pairs of prepared states remains by postselection, the quantum uncertainty (31) can be defined only for \(0<\eta <1 - \mathrm {Bi}\left[ 0; N,r(\phi _{i}\rightarrow \phi _{f})\right] \), in contrast to the conventional measurement case, where the choice \(0 < \eta < 1\) is possible. Hence, we see that the quantum uncertainty (31) diverges to infinity as \(\eta \rightarrow 1 - \mathrm {Bi}\left[ 0; N,r(\phi _{i}\rightarrow \phi _{f})\right] \), while its counterpart for the conventional measurement case remains finite. Even in the ideal limit \(N \rightarrow \infty \) where the quantum uncertainty vanishes, there still remains a bias due to the nonlinearity of the shift.

From the above observations, one sees that the essence of the weak value amplification technique lies in the trade-off relation between reduced instrumental uncertainty due to its signal amplification effect, and statistical deterioration caused by decrease in survival rate. In particular, the technique may turn out to be effective in the situation when the measurand \(g\) is much smaller compared to \(\delta _{Q}\), and there are an ample number of pairs of quantum states available in the measurement to suppress the quantum uncertainty. This is indeed the situation shared by many recent experiments performed on optical systems.

We now demonstrate this by a simple example, which in turn will be followed by a numerical demonstration in the next section. Suppose, for the sake of simplicity, that one probes the very existence of a physical effect by the measurement of the coupling constant \(g\) (as in the experimental verification of SHEL [7]). One may conclude that the effect exists with confidence \(\eta \) if one successfully distinguishes the unknown coupling \(g\) from \(g=0\), under the significance condition given by

where \(\epsilon ^{\delta _{Q}, N}_{f(Q)}(\eta )\) is either (26) or (32) for the respective measurement scheme. For the conventional measurement case, we see from (26) that in the case of a relatively large measurement uncertainty \(\delta _{Q}\) compared to the small coupling \(g\) and the maximum available expectation value \(\mathrm {Exp}_{A}(\phi _{i}) \in W(A)\), i.e.

for all \(\mathrm {Exp}_{A}(\phi _{i}) \in W(A)\), the significance condition (33) is broken. However, the condition can be maintained for the weak measurement through the amplification of \(\mathrm {Re}A_{w}\), as we shall see in the following numerical demonstration.

5 Numerical demonstration

For illustration, an analytically computable model is now in order. To ease our analysis, we assume that \(A\) has a finite point spectrum, i.e.,

\[ A = \sum _{n=1}^{N} \lambda _{n} E_{\{\lambda _{n}\}}, \tag{35} \]

with each \(\lambda _{n}\) being an eigenvalue of \(A\) and \(E_{\{\lambda _{n}\}}\) the projection on its accompanying eigenspace.

5.1 Meter state after postselection

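Under the setting above, the von Neumann type interaction can be calculated as

\[ e^{-igA \otimes P} = \sum _{n=1}^{N} E_{\{\lambda _{n}\}} \otimes e^{-ig\lambda _{n} P}, \tag{36} \]

which in turn leads to

\[ |\psi (g)\rangle = \sum _{n=1}^{N} \langle \phi _{f} | E_{\{\lambda _{n}\}} | \phi _{i}\rangle \, e^{-ig\lambda _{n} P} |\psi \rangle . \tag{37} \]

As a byproduct of this result, one finds from (37) that, for fixed \(g\) and \(|\psi \rangle \), the final state \(| \psi (g) \rangle \) of the meter after the postselection always lies in the subspace spanned by the finite number \(N\) of vectors

\[ e^{-ig\lambda _{n} P} |\psi \rangle , \quad n = 1, \ldots , N, \]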

which is independent of the choice of the pre- and postselections. As an immediate corollary of this fact, one sees that, for an observable \(A\) with a finite point spectrum, the shifts \(\mathrm {\Delta }^{w}_{X}(g)\), (\(X = Q, P\)) are always bounded irrespective of the choice of pre- and postselections under the fixed \(g\) and the meter state \(|\psi \rangle \).

5.2 Observable with two-point spectrum

Now, to simplify the matter further, we consider the model in which \(A\) has a discrete spectrum consisting of two distinct values \(\{\lambda _{1}, \lambda _{2}\}\), and the initial state \(|\psi \rangle \) of the meter \(\mathcal {K} = L^{2}(\mathbb {R})\) is given by the normalised Gaussian wave function

\[ \psi (x) = \left( \pi d^{2} \right) ^{-1/4} \exp \left( -\frac{x^{2}}{2d^{2}} \right) \]

centred at \(x = 0\) with width \(d > 0\). Despite its simplicity, this model is sufficiently general in the sense that it covers the settings adopted in recent experiments of weak measurement [7, 8]. We also note that the condition \(A^{2} = \mathrm {Id}\), under which the previous works [9, 11, 12] performed a full order calculation of the shift, is in fact a special case of our setting (\(\{\lambda _{1}, \lambda _{2}\} = \{ -1, 1\}\)).

Identifying the usual position and momentum operators on \(L^{2}(\mathbb {R})\) with \(Q=\hat{x}\) and \(P=\hat{p}\), and using the shorthand, \(\Lambda _{m} := (\lambda _{1} + \lambda _{2})/{2}\) and \(\Lambda _{r} := (\lambda _{2} - \lambda _{1})/{2}\), we find

where \(A_{r} := A_{w} - \Lambda _{m}\), and

is a parameter corresponding to the amplification rate. One then verifies that the above functions are of class \(C^{\infty }\) with respect to \(g\), and the ratios \({\mathrm {\Delta }^{w}_{Q}(g)}/{g}\) and \({\mathrm {\Delta }^{w}_{P}(g)}/{(g/d^{2})}\) indeed tend to \(\mathrm {Re} A_{w}\) and \(\mathrm {Im} A_{w}\) in the weak limit \(g \rightarrow 0\), respectively (cf. (4) and (6)). At this point, we observe that both ratios depend on \(g\) and \(d\) only through the combination \(g/d\). Thus, instead of considering the weak limit \(g \rightarrow 0\), one may equally consider the broad limit of the width \(d \rightarrow \infty \) to obtain the weak value. In other words, the aim of weak measurement, namely obtaining the weak value, can be achieved with a coupling \(g\) which is not weak at all. This confirms the anticipation [14] that the weak value may be observed with non-small \(g\), i.e. by ‘non-weak’ measurement.
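The weak limit and the \(g/d\) scaling can be checked numerically with a short sketch. It does not reproduce the closed-form expressions of this section. Instead it assumes an interaction of the form \(e^{-igA\otimes P}\) and a Gaussian meter \(\psi (x) \propto \exp (-x^{2}/2d^{2})\), so that the postselected meter state is a superposition of Gaussians displaced by \(g\lambda _{n}\). The function names and the particular pre- and postselected states (chosen so that the weak value of \(S_{z}\) equals 10) are hypothetical.

```python
import math

def weak_value(lams, pre, post):
    """A_w = <post|A|pre> / <post|pre>, with A diagonal in the chosen basis."""
    num = sum(l * complex(f).conjugate() * i for l, f, i in zip(lams, post, pre))
    den = sum(complex(f).conjugate() * i for f, i in zip(post, pre))
    return num / den

def meter_shift(lams, pre, post, g, d):
    """Mean meter position after postselection (the initial mean is 0).

    Assumption: U = exp(-i g A (x) P) and psi(x) ~ exp(-x^2 / (2 d^2)), so the
    postselected meter state is sum_n c_n psi(x - g*lam_n) with
    c_n = conj(post_n) * pre_n. State normalisation cancels in the ratio.
    """
    c = [complex(f).conjugate() * complex(i) for f, i in zip(post, pre)]
    num = 0j
    den = 0j
    for lm, cm in zip(lams, c):
        for ln, cn in zip(lams, c):
            # Overlap of two Gaussians displaced by g*lm and g*ln.
            overlap = math.exp(-(g * (lm - ln)) ** 2 / (4 * d ** 2))
            weight = cm.conjugate() * cn * overlap
            num += weight * g * (lm + ln) / 2  # matrix element of x
            den += weight                      # matrix element of the identity
    return (num / den).real

lams = [-0.5, 0.5]      # spectrum of S_z = sigma_z / 2
pre = [1.0, 1.0]        # hypothetical preselected state (unnormalised)
post = [-19.0, 21.0]    # hypothetical postselected state (unnormalised)
a_w = weak_value(lams, pre, post)

ratio_weak = meter_shift(lams, pre, post, g=1e-3, d=1.0) / 1e-3   # small g
ratio_broad = meter_shift(lams, pre, post, g=1.0, d=100.0) / 1.0  # large d
ratio_a = meter_shift(lams, pre, post, g=0.5, d=1.0) / 0.5        # g/d = 1/2
ratio_b = meter_shift(lams, pre, post, g=1.0, d=2.0) / 1.0        # g/d = 1/2
```

In this sketch `ratio_weak` and `ratio_broad` both come out close to the weak value, although the second uses a coupling of order one, while `ratio_a` and `ratio_b` coincide because they share the same \(g/d\) and fall well below the weak value once \(g/d\) is no longer small.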

5.3 Uncertainty and the significance condition

We now return to the main purpose of this section, namely, the numerical demonstration of the validity of the significance condition (33). The total uncertainties for this model for both \(X=\hat{x}, \hat{p}\) can be analytically obtained based on (32) and its counterpart for measuring \(\mathrm {Im}A_{w}\). For this purpose, we need the survival rate and the variances, which are found to be

and

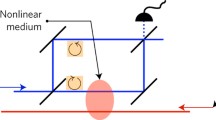

For demonstration, we consider only the case \(X=\hat{x}\) without loss of generality. We choose the spin component \(S_z = \sigma _z/2\) along the z-axis for the observable \(A\) of interest, and set \(g = 1/50\) and \(\delta _{Q} = 1/2\), so that the significance condition (33) for the conventional measurement is always broken (\(|g| \cdot \Vert S_z \Vert = 1/100 \ll 1/2 = \delta _{Q}\)). On the other hand, for the weak measurement, one finds that, with a proper choice of measurement setups, this condition can indeed be fulfilled as shown in Fig. 1.
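The arithmetic behind this choice of parameters can be spelled out in a few lines. The sketch below compares only the size of the signal with \(\delta _{Q}\) in the linear-response regime. It ignores the quantum uncertainty and bias terms that also enter the full condition (33), so the threshold `min_weak_value` is a rough orientation rather than the criterion used in the text, and the variable names are hypothetical.

```python
from fractions import Fraction

g = Fraction(1, 50)          # coupling to be detected
norm_Sz = Fraction(1, 2)     # operator norm of S_z = sigma_z / 2
delta_Q = Fraction(1, 2)     # instrumental uncertainty of the meter position

# Largest shift available without postselection: |g| * ||S_z||.
conventional_signal = abs(g) * norm_Sz

# Value of Re A_w at which the linear-response shift |g| * Re A_w equals delta_Q.
min_weak_value = delta_Q / abs(g)

# Linear-response shift for the amplified weak value Re (S_z)_w ~ 100.
amplified_signal = abs(g) * 100

print(conventional_signal, min_weak_value, amplified_signal)
```

The conventional signal of 1/100 lies far below \(\delta _{Q} = 1/2\), whereas an amplified weak value of about 100 lifts the linear-response shift to 2, above \(\delta _{Q}\).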

Fig. 1 Ratio of the total uncertainty \(\epsilon ^{\delta , N}_{f(Q)}(\eta )\) in measuring the coupling constant \(g\) to its absolute value \(|g|\). By amplifying the real part of the weak value of the spin \(S_{z} = \sigma _z/2\) out of its numerical range \([-1/2, 1/2]\) up to \(\mathrm {Re}(S_{z})_{w} \approx 100\), the significance condition (33) is attained with confidence \(\eta =0.95\). Parameters: \(\delta _{Q} = 1/2\), \(N_{0}= 10^{7}\), \(g=1/50\) and \(d = 4\).

From the figure, one sees a clear trade-off relation between the instrumental uncertainty and the combination of quantum uncertainty and nonlinearity (bias). This confirms on a quantitative basis the qualitative description given in Sect. 4 that the merit of weak value amplification can arise if one can exploit the trade-off relation between reduced instrumental uncertainty due to its signal amplification effect, and the statistical deterioration (increased quantum uncertainty and bias) caused by the decrease in survival rate.
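The statistical cost of amplification can be illustrated with a rough estimate of how many of the \(N_{0} = 10^{7}\) prepared pairs survive the postselection. The sketch below is a weak-limit approximation, in which the survival rate is taken to be \(|\langle \phi _{f}|\phi _{i}\rangle |^{2}\), and it assumes a specific real preselected state \((1,1)/\sqrt{2}\) in the \(S_{z}\) eigenbasis. Both are assumptions made here for illustration, and the helper `post_state_for` is hypothetical.

```python
import math

def post_state_for(W):
    """Real, normalised postselected state giving weak value W of S_z
    for the preselected state (1, 1)/sqrt(2) in the basis (+1/2, -1/2)."""
    s = math.sqrt(2.0 / (4.0 * W * W + 1.0))
    return (s * (1 + 2 * W) / 2, s * (1 - 2 * W) / 2)

pre = (1 / math.sqrt(2), 1 / math.sqrt(2))
N0 = 10**7  # number of prepared pairs, as in Fig. 1

rows = []
for W in (0.5, 10, 100, 1000):
    f = post_state_for(W)
    overlap = f[0] * pre[0] + f[1] * pre[1]
    # Weak value of S_z = diag(+1/2, -1/2) for these real states.
    weak_value = (0.5 * f[0] * pre[0] - 0.5 * f[1] * pre[1]) / overlap
    survival = overlap ** 2  # weak-limit survival rate
    rows.append((W, weak_value, survival, N0 * survival))

for W, a_w, rate, n_surviving in rows:
    print(f"Re A_w = {a_w:8.2f}  survival = {rate:.3e}  pairs = {n_surviving:.1f}")
```

For this choice of states the survival rate falls off as \(1/(4W^{2}+1)\), so each tenfold gain in amplification costs roughly a hundredfold loss in surviving pairs, which is the deterioration that competes with the reduced instrumental uncertainty.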

6 Summary and conclusion

The purpose of this paper was to present a novel theoretical analysis of the applicational merit of weak value amplification in which uncontrollable imperfection of measurement is taken into account, and thereby to show that the merit indeed appears in cases where the imperfection meets a certain trade-off condition with other sources of uncertainty.

In treating the technical imperfection, by which all experiments are unavoidably affected, we have adopted the concept of measurement uncertainty, which derives from the recognition of those sources of dispersion whose behaviour is intrinsically unknown to us. This leads us to the observation that, in particular, we cannot resort to traditional statistical measures, and the \(\delta \)-uncertainty bar employed in this paper is an attempt to model that type of uncertainty. The subsequent arguments have thus been constructed carefully so as not to assume full knowledge of the statistical behaviour of the dispersed measurement outcomes, except for those arising purely from quantum origins (shot noise). By means of a small computational trick, we have obtained a probabilistic evaluation of the impact of technical imperfection on the measurement result, and demonstrated that it can be separated into three components, namely, the instrumental uncertainty, quantum uncertainty and bias.

Based on the above analysis, we have evaluated the total uncertainties for both the conventional and the weak measurement of the coupling \(g\). Comparing the results (26) and (32), we see that there emerges a trade-off relation between the signal amplification effect and the statistical loss. These respectively result in reduced instrumental uncertainty and statistical deterioration, which explains well our intuitive understanding of weak measurement in a unified and rigorous manner. The technique is then shown to be particularly effective when the measurand \(g\) is much smaller than the existing measurement uncertainty while an ample number of pairs of quantum states is available for the measurement, which is indeed the situation shared by many successful experiments using the weak measurement technique. A numerical demonstration was performed to confirm this trade-off relation, showing that there indeed exists a finite range of usable amplification by weak measurement.

The technique of weak measurement may have a wide range of potential applicability, one example being the possibility of improving the detector precision for gravitational waves, for which discussions are already under way [26–28]. Naturally, the problem posed in Sect. 5 is qualitatively different from that of the gravitational wave detector, and hence the model and arguments ought to be appropriately modified in analysing each particular situation. Nevertheless, the existence of uncontrollable uncertainty is universal in all experiments, and when such uncertainty becomes an essential obstacle to successful measurement, as in gravitational wave detection, the postselection technique may well be worth looking into as a way to overcome the difficulty. In this regard, we hope that the general theoretical formulation and the results obtained here will be usable as a basis to discern when precision measurement by weak measurement can be meaningful, and if so, to evaluate the uncertainty in the final outcome according to the modern notion of measurement uncertainty.

References

Aharonov, Y., Bergmann, P.G., Lebowitz, J.L.: Time symmetry in the quantum process of measurement. Phys. Rev. 134, B1410 (1964)

Aharonov, Y., Albert, D.Z., Vaidman, L.: How the result of a measurement of a component of the spin of a spin-1/2 particle can turn out to be 100. Phys. Rev. Lett. 60, 1351 (1988)

Aharonov, Y., Popescu, S., Tollaksen, J.: A time-symmetric formulation of quantum mechanics. Phys. Today 63(11), 27–32 (2010)

Kofman, A.G., Ashhab, S., Nori, F.: Nonperturbative theory of weak pre- and post-selected measurements. Phys. Rep. 520, 43 (2012)

Aharonov, Y., Vaidman, L.: Complete description of a quantum system at a given time. J. Phys. A Math. Gen. 24, 2315 (1991)

Yokota, K., Yamamoto, T., Koashi, M., Imoto, N.: Direct observation of Hardy’s paradox by joint weak measurement with an entangled photon pair. New J. Phys. 11, 033011 (2009)

Hosten, O., Kwiat, P.: Observation of the spin Hall effect of light via weak measurements. Science 319, 787 (2008)

Dixon, P.B., Starling, D.J., Jordan, A.N., Howell, J.C.: Ultrasensitive beam deflection measurement via interferometric weak value amplification. Phys. Rev. Lett. 102, 173601 (2009)

Wu, S., Li, Y.: Weak measurements beyond the Aharonov-Albert-Vaidman formalism. Phys. Rev. A 83, 052106 (2011)

Jozsa, R.: Complex weak values in quantum measurement. Phys. Rev. A 76, 044103 (2007)

Koike, T., Tanaka, S.: Limits on amplification by Aharonov-Albert-Vaidman weak measurement. Phys. Rev. A 84, 062106 (2011)

Nakamura, K., Nishizawa, A., Fujimoto, M.K.: Evaluation of weak measurements to all orders. Phys. Rev. A 85, 012113 (2012)

Susa, Y., Shikano, Y., Hosoya, A.: Optimal probe wave function of weak-value amplification. Phys. Rev. A 85, 052110 (2012)

Aharonov, Y., Rohrlich, D.: Quantum Paradoxes: Quantum Theory for the Perplexed. Wiley-VCH, Weinheim (2005)

Tanaka, S., Yamamoto, N.: Information amplification via postselection: a parameter-estimation perspective. Phys. Rev. A 88, 042116 (2013)

Knee, G.C., Briggs, G.A.D., Benjamin, S.C., Gauger, E.M.: Quantum sensors based on weak-value amplification cannot overcome decoherence. Phys. Rev. A 87, 012115 (2013)

Knee, G.C., Gauger, E.M.: When amplification with weak values fails to suppress technical noise. Phys. Rev. X 4, 011032 (2014)

Ferrie, C., Combes, J.: Weak value amplification is suboptimal for estimation and detection. Phys. Rev. Lett. 112, 040406 (2014)

Fisher, R.: Theory of statistical estimation. Math. Proc. Cambridge Philos. Soc. 22, 700 (1925)

Cramér, H.: Mathematical Methods of Statistics. Princeton University Press, Princeton (1946)

Lehmann, E., Casella, G.: Theory of Point Estimation. Springer Verlag, Berlin (1983)

Joint Committee for Guides in Metrology: Guide to the expression of uncertainty in measurement, JCGM 100 (2008)

Joint Committee for Guides in Metrology: International vocabulary of metrology – Basic and general concepts and associated terms, JCGM 200 (2008)

Lee, J., Tsutsui, I.: Uncertainty of weak measurement and merit of amplification. arXiv:1305.2721 (2013)

Tchebichef, P.: Des valeurs moyennes. J. Math. Pures Appl. 2(12), 177 (1867)

Nakamura, K., Nishizawa, A., Fujimoto, M.: Annual Report of the National Astronomical Observatory of Japan, vol. 14, p. 31. http://www.nao.ac.jp/contents/about-naoj/reports/annualreport/en/2011/e_web_all.pdf (FY2011)

Puentes, G., Hermosa, N., Torres, J.P.: Weak measurements with orbital-angular-momentum pointer states. Phys. Rev. Lett. 109, 040401 (2012)

Strübi, G., Bruder, C.: Measuring ultrasmall time delays of light by joint weak measurements. Phys. Rev. Lett. 110, 083605 (2013)

Acknowledgments

We thank Prof. A. Hosoya for helpful discussions. This work was supported by JSPS Grant-in-Aid for Scientific Research (C), No. 25400423.


Cite this article

Lee, J., Tsutsui, I. Merit of amplification by weak measurement in view of measurement uncertainty. Quantum Stud.: Math. Found. 1, 65–78 (2014). https://doi.org/10.1007/s40509-014-0002-x
