Abstract

A meta-analysis of single-subject research was conducted examining the effectiveness of computer-assisted interventions (CAI) for teaching a wide range of skills to students with autism spectrum disorders (ASD) within the school-based context. Intervention effects were measured by computing improvement rate difference (IRD), which is a simple approach to visual analysis that correlates well with both parametric and non-parametric effect size measures. Overall, results suggest that CAI may be a promising approach for teaching skills to students with ASD. However, several concerns make this conclusion tenuous. Recommendations for future research are discussed.

Similar content being viewed by others

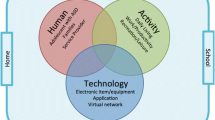

As a group, individuals with autism spectrum disorders (ASD) demonstrate relatively strong skills in responding to visual media (Quill 1997; Wetherby and Prizant 2000). This affinity for visual materials underscores the success of interventions that use picture-based cues to help students with ASD organize daily events and activities, communicate more effectively, imitate appropriate behaviors, and/or acquire both academic and functional skills. In fact, strategies that incorporate visual presentation and that allow for repeated imitation of skills and/or behaviors currently are considered best practice for educating individuals with ASD (National Research Council; NRC 2001). Due to the increasing number of students with ASD, there is a need to develop and systematically validate new and innovative visual-based interventions. One such method that builds upon the visual learning strengths of students with ASD and can be adapted to fit within a variety of educational contexts is the use of computers.

Traditionally, the term computer has been used to refer to personal desktop and laptop devices that most people use. While the personal computer is still the most popular type of device, advancing technology has broadened our view of what a computer looks like. Today, computers represent any electronic device that accepts, processes, stores, and/or outputs data at high speeds. As such, computers have come to represent an array of gadgets beyond desktop and laptop computers and now include mobile devices like smartphones (e.g., Android and iPhones) and tablets (e.g., iPad and Kindle). Beyond how computers may be defined, they have become a ubiquitous part of the global world and their use will continue to grow in the future.

The use of computers for enhancing the academic, behavioral, and social outcomes of students with ASD is a relatively new area of research, but one that has great potential. Many parents report their children’s fascination with and propensity for learning from visually based media such as computers (Nally et al. 2000). In addition, researchers have identified that individuals with ASD not only demonstrate significant skill acquisition when taught via computers, but also have a preference for instruction delivered through such devices (e.g., Bernard-Opitz et al. 2001; Moore et al. 2000; Shane and Albert 2008). Given such preferences, the use of computer-assisted interventions (CAI) emerges as an ideal method for teaching students with ASD for several reasons. First, students with ASD often find the world confusing and unpredictable, and have difficulty dealing with change. As such, educational practices should make every effort to describe expectations, provide routines, and present immediate and consistent consequences for responding (Iovannone et al. 2003). Computers not only provide a predictable learning environment for the student with ASD, but also produce consistent responses in a manner that likely will maintain interest and, possibly, increase motivation. Second, viewing of instruction through electronic media may allow individuals with ASD to focus their attention on relevant stimuli (Charlop-Christy and Daneshvar 2003; Shipley-Benamou et al. 2002). Because students with ASD have difficulty screening out unnecessary sensory information (Quill 1997), focusing on a computer, where only necessary information is presented, may maximize their attention. Third, the use of computers likely creates an environment for learning that appears to individuals with ASD as less threatening (Sansosti et al. 2010). That is, computers are free from social demands and likely can be viewed repeatedly by the student without fatigue.

Recently, there has been a proliferation of single-subject research investigating the utility of school-based CAI for students with ASD. For example, CAI for students with ASD have been demonstrated to increase: (a) object labeling and vocabulary acquisition (e.g., Coleman-Martin et al. 2005; Massaro and Bosseler 2006), (b) correct letter sequences and spelling (e.g., Kinney et al. 2003; Schlosser and Blischak 2004), (c) reading skills (e.g., Mechling et al. 2007), (d) appropriate classroom behaviors (e.g., Mechling et al. 2006; Whalen et al. 2006), and (e) social skills (e.g., Simpson et al. 2004; Sansosti and Powell-Smith 2008). From the information available, it appears that computers can be harnessed to teach a wide variety of skills to children with ASD.

With the proliferation of research in this area, several recent reviews have attempted to summarize the extant single-subject research examining the effectiveness of CAI. For example, Pennington (2010) provided a descriptive review of CAI research conducted between 1997 and 2008. Specifically, this review examined the effectiveness of CAI for teaching skills related to literacy. Overall, Pennington concluded that computer-based interventions have promise for teaching academic skills to students with ASD. Wainer and Ingersoll (2011) also conducted a descriptive review of research focused on the use of interactive multimedia for teaching individuals with ASD language content and pragmatics, emotional recognition, and social skills. In their review of the extant literature, interactive multimedia programs were found to be both engaging and beneficial for ASD learners due to their known strengths in the area of visual processing and determined to have promise as an educational approach. More recently, Ramdoss et al. (2011a, b) conducted two systematic reviews on the effects of CAI for improving literacy skills (e.g., reading and sentence construction) and improving vocal and non-vocal communications, respectively. Taken together, the results of these separate reviews provide initial insight regarding the efficacy of CAI for students with ASD. In fact, each of the reviews suggests that CAI is a promising approach for supporting the needs of students with ASD. However, none of these reviews provided a metric that measured the overall magnitude of effect of CAI for students with ASD.

Need for Quantitative Synthesis of Single-Subject Research

Today, school-based practitioners are faced with ever-increasing demands to identify and utilize evidence-based practices (Reichow et al. 2008; Simpson 2008). Such demands began with the mandates set forth by the No Child Left Behind Act (NCLB 2001) and were extended further by the Individuals with Disabilities Education Improvement Act, which required educators to select appropriate instruction strategies that are “…based on peer-reviewed research to the extent practical…” (IDEIA 2004, 20 U.S.C. 1414 §614, p. 118). More recent demands have called for the calculation of effect sizes and confidence intervals to establish those strategies that promote the greatest amount of expected change (e.g., What Works Clearinghouse, see http://ies.ed.gov/ncee/wwc/; Whitehurst 2004). Therefore, failure to provide a statistical summary indicating the amount of behavior change runs contrary to contemporary practice and leaves practitioners to rely on the conclusions drawn by the studies’ authors. In an era when empirically validated approaches are routinely demanded within school contexts, it is crucial to differentiate between promising and evidence-based practices (Yell and Drasgow 2000).

Given the increase in single-subject research investigating the use of computers to teach students with ASD in recent years combined with the demand for more stringent design and analysis of research conducted within school-based contexts, determination of whether or not CAI represents an evidence-based approach is warranted. First, such determination should be based on specific criteria for evaluating single-subject research studies that are considered to be of high quality. As part of their work with the Council for Exceptional Children, Division of Research, Quality Indicator Task Force, Horner et al. (2005) provided a set of guidelines for determining when single-subject research documents a practice as evidence-based. These guidelines assert that (a) both the strategy and context in which the strategy is used have been clearly defined; (b) the efficacy of the approach has been documented in at least five published studies in peer-reviewed journals; (c) the research has been conducted across three different geographical locations and includes at least 20 total participants; (d) the strategy was implemented with fidelity; (e) social validity (i.e., acceptability of the intervention) has been measured; and (f) results demonstrate experimental control through the use of multiple baseline, reversal, and/or alternating treatment designs. Second, efforts should be taken to systematically summarize the extant single-subject literature by employing some form of effect size metric. Traditionally, single-subject research has been interpreted by visual inspection of graphed data. Through visual inspection, a large treatment effect is indicated by a stark contrast in the levels of data between the baseline and intervention phase(s). However, such analysis can be subjective and often fails to demonstrate the impact of an intervention when only small change is indicated.

Over the years, calculation of the percentage of non-overlapping data (PND) has been suggested as an alternative for systematically synthesizing single-subject research studies (Scruggs and Mastropieri 1998, 2001) as it provides a method for quantifying outcomes objectively and can be calculated on any type of single-subject research design (Parker et al. 2007). PND, a non-parametric approach to summarizing research, determines the magnitude of behavior change from baseline to treatment phase by calculating the proportion of non-overlapping data between those phases. Specifically, the PND is calculated by counting the number of data points in the treatment condition(s) that do not overlap with the highest (or lowest if appropriate) baseline data point, dividing by the total number of treatment data points, and multiplying by 100 (to calculate a percentage). The higher the percentage obtained, the stronger the intervention effectiveness. Although PND is the oldest and most widely known non-parametric method for analyzing single-subject data, it has been criticized because it is confounded by floor or ceiling data points (Wolery et al. 2008) and by variability in data trends (Ma 2006). Due to such limitations, other indices of non-overlapping data such as the percentage of data exceeding the median (PEM; Ma 2006), the percentage of all non-overlapping data (PAND; Parker et al. 2007), and the pairwise data overlap squared (PDO²; Wolery et al. 2008) have been promoted. Despite efforts to provide accurate synthesis of single-subject research, each of these iterations also has been criticized for not being sensitive enough to detect the important characteristics of trends and variability within time-series data (Wolery et al. 2008), as well as for not permitting the calculation of confidence intervals (Parker et al. 2009).
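The PND calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not code from any of the cited studies: the function name `pnd`, the `increase_is_improvement` flag, and the sample data are all hypothetical. The example also shows the ceiling-datum sensitivity noted by critics, since a single high baseline point halves the result.

```python
def pnd(baseline, intervention, increase_is_improvement=True):
    """Percentage of non-overlapping data (PND) between two phases.

    Counts treatment points beyond the most extreme baseline point,
    divides by the number of treatment points, and multiplies by 100.
    """
    if not baseline or not intervention:
        raise ValueError("both phases need at least one data point")
    if increase_is_improvement:
        extreme = max(baseline)  # highest baseline point
        non_overlapping = sum(1 for y in intervention if y > extreme)
    else:
        extreme = min(baseline)  # lowest baseline point
        non_overlapping = sum(1 for y in intervention if y < extreme)
    return 100.0 * non_overlapping / len(intervention)


# Hypothetical data: one baseline outlier (7) acts as a ceiling datum.
baseline = [2, 3, 2, 3, 7]
intervention = [5, 6, 6, 8, 9, 8, 7, 9]

print(pnd(baseline, intervention))       # 50.0
print(pnd(baseline[:-1], intervention))  # 100.0 once the outlier is dropped
```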

Parker et al. (2009) and Parker et al. (2014) suggest using the improvement rate difference (IRD) to supplement visual inspection of graphs and for calculating effect size of single-case research. IRD has been used for decades in the medical field (referred to as “risk reduction” or “risk difference”) to describe the absolute change in risk that is attributable to an experimental intervention. This metric is valued within the medical community due to its ease of interpretation, as well as the fact that it does not require specific data assumptions for confidence intervals to be calculated (Altman 1999). IRD represents the difference between two proportions (baseline and intervention). More specifically, it is the difference in improvement rates between baseline and intervention phases (Higgins and Green 2009; Parker et al. 2009). By knowing the absolute difference in improvement, practitioners can determine the effect of an intervention and whether the change in behavior is worth repeating. To calculate IRD, a minimum number of data points are removed from either baseline or intervention phases to eliminate all overlap. Data points removed from the baseline phase are considered “improved,” meaning they overlap with the intervention. Data points removed from the intervention phase are considered “not improved,” meaning they overlap with the baseline. The proportion of data points “improved” in baseline is then subtracted from the proportion of data points “improved” in the intervention phase ($IR_I - IR_B = IRD$). The maximum IRD score is 1.00 or 100 % (all intervention data exceed baseline). An IRD of 0.70 to 1.0 indicates a large effect size, 0.50 to 0.70 a moderate effect size, and less than 0.50 a small or questionable effect size (Parker et al. 2009). An IRD of 0.50 indicates that half of the scores between baseline and treatment phase were overlapping, so there is only chance-level improvement.

One distinct advantage of IRD is that it affords the ability to calculate confidence intervals. Practitioners can interpret the width of a confidence interval as the precision of the approach (large intervals indicate that the IRD is not trustworthy, whereas narrow intervals indicate more precision). In addition to this practical advantage, Parker et al. (2009) found that IRD correlated well with the R² and Kruskal–Wallis W effect sizes (0.86) and with PND (0.83). To date, research utilizing IRD calculations has been embraced more within the biosciences. However, there is growing support for its use within educational research due to the demands for research to include stronger designs and effect size calculations with confidence intervals (Higgins and Green 2009; Whitehurst 2004). IRD has been utilized in a variety of single-case research meta-analyses (e.g., Ganz et al. 2012a, b; Miller and Lee 2013; Vannest et al. 2010).
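The IRD steps described above can be illustrated with a short Python sketch. It rests on several assumptions that are not taken from the source: higher scores count as improvement, tied values across phases count as overlapping, and when more than one minimal removal set exists the sketch simply keeps the first one found in ascending order of the cut value (published procedures may resolve such ties differently). The function names, the boundary handling in `classify_ird`, and the sample data are hypothetical.

```python
import math


def improvement_rate_difference(baseline, intervention):
    """IRD for one baseline/intervention contrast (higher = better).

    Searches for the cut value that removes the fewest points so that
    every remaining baseline point is strictly below every remaining
    intervention point (ties are treated as overlap).
    """
    if not baseline or not intervention:
        raise ValueError("both phases need at least one data point")
    candidates = sorted(set(baseline) | set(intervention)) + [math.inf]
    best = None
    for cut in candidates:
        removed_b = sum(1 for y in baseline if y >= cut)     # "improved" baseline points
        removed_i = sum(1 for y in intervention if y < cut)  # "not improved" intervention points
        if best is None or removed_b + removed_i < sum(best):
            best = (removed_b, removed_i)  # assumption: first minimal cut wins
    removed_b, removed_i = best
    ir_b = removed_b / len(baseline)
    ir_i = (len(intervention) - removed_i) / len(intervention)
    return ir_i - ir_b


def classify_ird(ird):
    """Benchmarks reported by Parker et al. (2009); boundary handling assumed."""
    if ird >= 0.70:
        return "large"
    if ird >= 0.50:
        return "moderate"
    return "small or questionable"


# Hypothetical data: removing the single baseline point 7 eliminates all overlap.
baseline = [2, 3, 2, 3, 7]
intervention = [5, 6, 6, 8, 9, 8, 7, 9]
ird = improvement_rate_difference(baseline, intervention)
print(round(ird, 2), classify_ird(ird))  # 0.8 large
```

In this example one of five baseline points is removed (IR_B = 0.20) and no intervention points are removed (IR_I = 1.00), giving IRD = 0.80.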

Purpose

The purpose of this study was to provide a quantitative meta-analysis of existing single-subject research studies that have investigated the use of school-based CAI for children with ASD. As stated previously, there is an increased demand for educators to implement evidence-based practices within schools. While there have been a myriad of single-subject studies demonstrating the effectiveness of CAI, it is necessary to evaluate the extant literature using a common metric such as effect size via a meta-analysis (Kavale 2001). A synthesis of the available single-subject research would add substantial information to our existing knowledge regarding the efficacy of CAI for children and adolescents with ASD within school-based contexts and would provide practitioners with much needed information necessary for educational decision-making. To this end, a meta-analysis was conducted of single-subject studies that included the use of CAI for students with ASD. Thus, this investigation was interested in primarily answering the following question: Are school-based CAI effective for students with ASD? Specifically, this meta-analysis determines the overall impact of computer-based technologies for teaching students with ASD using the improvement rate difference (IRD). In addition, this meta-analysis provides information as to whether computer-assisted interventions can be considered an evidence-based practice as outlined in Horner et al. (2005).

Method

Identification of Studies

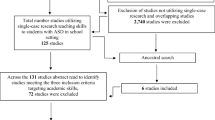

Studies included in this meta-analysis were located by conducting a search of journal articles published from 1995 to 2013 using PsycINFO and EBSCO databases. Multiple searches were conducted using a combination of the following descriptors: autism, autistic disorder, autism spectrum disorder, high functioning autism (HFA), Asperger’s syndrome (AS), pervasive developmental disorder (PDD), computers, computer-assisted instruction, and computer-assisted learning. An ancestral search of studies using the reference lists of each study located through PsycINFO or EBSCO also was conducted in an effort to locate additional studies that did not appear in the online searches. In addition, manual searches of the journals Journal of Autism and Developmental Disorders, Focus on Autism and Other Developmental Disabilities, Journal of Positive Behavior Interventions, and Research in Autism Spectrum Disorders were conducted to identify references that were not located through electronic search. In total, 76 studies were located (69 empirical studies and 7 case studies) that examined the use of computers or computer-assisted instruction for teaching children and adolescents with ASD.

Following the initial location of articles, the authors reviewed each study to determine inclusion eligibility based on the following criteria. First, the study was conducted between 1995 and 2013 and was published in a peer-reviewed journal. Second, participants in the study were diagnosed/identified with an ASD (i.e., autism, AS, and PDD). In a handful of studies, participants without ASD were included in the study. In these instances, only data for the individuals with ASD were analyzed as part of the meta-analysis. Third, the study employed a single-subject research design that demonstrated experimental control (e.g., multiple baseline and reversal design). Non-experimental AB designs and non-empirical case studies were excluded from the analysis because such approaches do not provide sufficient information to rule out the influence of a host of confounding variables (Kazdin 1982), making it difficult to determine the natural course that the behavior(s) would have taken had no intervention occurred (Risley and Wolf 1972). Fourth, the study presented data in graphical displays that depicted individual data points to allow for calculation of the IRD. Studies that incorporated dichotomous dependent variables (e.g., correct/incorrect), or that had fewer than three probes in a multiple-probe design, were excluded from the analysis because they did not permit appropriate calculation of the IRD. In addition, studies that employed group designs were excluded in order to provide a uniform metric of treatment effectiveness and because of the difficulty combining group effect size measures with IRD analysis. Fifth, the study utilized outcome measures targeting academic, behavior, and/or social skills.

Out of the 76 original studies found, 48 were excluded for the following reasons: the use of group designs (n = 13); the inclusion of participants whose primary diagnoses were not ASD (n = 3); the inclusion of participants who were adults or who were not attending school (n = 2); the use of AB designs, case studies, or other designs that provided only descriptive interpretations of findings (n = 11); the article was an unpublished doctoral dissertation (n = 2); and insufficient data and/or graphical displays to permit the calculation of IRD (n = 17). As a result, a total of 28 studies met the multiple inclusion criteria and were included in the quantitative analysis.
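As a quick check of the study-selection arithmetic, the exclusion counts reported above can be tallied as follows. The dictionary labels are shorthand introduced here for illustration; only the counts come from the text.

```python
# Counts taken from the text; labels are shorthand introduced for this sketch.
located = 76
excluded = {
    "group design": 13,
    "primary diagnosis not ASD": 3,
    "adults or not attending school": 2,
    "AB design, case study, or descriptive only": 11,
    "unpublished doctoral dissertation": 2,
    "insufficient data or graphs for IRD": 17,
}

total_excluded = sum(excluded.values())
included = located - total_excluded

print(total_excluded, included)  # 48 28
```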

Coding of Studies

Each of the 28 studies included in the meta-analysis was summarized and information coded for further analysis. Specifically, a summary table was prepared that provided information regarding: (a) participant characteristics, including number, diagnosis, and age; (b) setting characteristics describing the location where the intervention was implemented; (c) type of research design; (d) description of the target skill(s) or dependent variable(s); (e) intervention (independent variable) description, including type/format of computer-assisted strategy utilized and the length of the intervention; and (f) confirmation of whether the study measured inter-observer reliability, treatment integrity, and/or social validity (see Table 1 for a descriptive analysis of the included studies). The nature of the coding system utilized allowed for assessment of whether or not CAI met the criteria as an evidence-based practice using the guidelines set forth by Horner et al. (2005).

Reliability of Coding

To establish inter-rater reliability for the coding procedure, the first two authors independently rated all studies using the categories mentioned above and compared their results. An agreement was recorded when both raters indicated any of the study features as being the same, and a disagreement was recorded when only one rater coded a specific study feature. Inter-rater reliability was established as a percent agreement between both raters and was calculated by dividing the total number of agreements by the total number of agreements plus disagreements and multiplying by 100. Inter-rater reliability for coding of study features was 97 % (range 94–100 %). Cohen’s kappa (κ) also was calculated. This measure of reliability (used for qualitative items) is more conservative and adjusts for chance agreement (Suen and Ary 1989). The kappa calculation was 0.92.
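The two reliability statistics described above (percent agreement and Cohen's kappa) can be sketched as follows. This is an illustrative implementation under simple assumptions: each rater assigns exactly one categorical code per item, and the example codes are invented rather than drawn from the study's data.

```python
import math
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Agreements / (agreements + disagreements) * 100."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same non-empty set of items")
    agreements = sum(1 for a, b in zip(rater_a, rater_b) if a == b)
    return 100.0 * agreements / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement: (p_o - p_e) / (1 - p_e)."""
    n = len(rater_a)
    p_o = percent_agreement(rater_a, rater_b) / 100.0
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Expected chance agreement from each rater's marginal proportions.
    p_e = sum((counts_a[c] / n) * (counts_b[c] / n) for c in counts_a)
    if math.isclose(p_e, 1.0):
        return math.nan  # kappa is undefined when chance agreement is total
    return (p_o - p_e) / (1.0 - p_e)


# Hypothetical codes for ten study features (e.g., "was social validity measured?").
rater_a = ["yes", "yes", "no", "yes", "no", "no", "yes", "yes", "no", "yes"]
rater_b = ["yes", "yes", "no", "yes", "no", "yes", "yes", "yes", "no", "yes"]

print(percent_agreement(rater_a, rater_b))       # 90.0
print(round(cohens_kappa(rater_a, rater_b), 2))  # 0.78
```

The gap between 90 % raw agreement and a kappa of about 0.78 in this toy example shows why kappa is described as the more conservative measure.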

Data Extraction

In order to ensure that data utilized within the meta-analysis were accurate, graphs from each of the 28 published studies were digitized using GraphClick software (Arizona Software 2008). By creating digitized graphs, we were able to recreate the original data points. Specifically, we were able to pinpoint and recreate X-axis and Y-axis data values digitally and transfer these values into an Excel spreadsheet (see Parker et al. 2009, for a more detailed description of the process). This process allowed us to generate graphs that were not crowded (i.e., difficult to view).

Effect Size Calculation

Using the digitized graphs, two raters calculated IRD scores for every participant for baseline and treatment contrasts, excluding generalization and maintenance data points. Specifically, IRD was calculated by determining the percent of improved data points in the treatment phase divided by the total number of data points while eliminating any overlapping data points between baseline and treatment conditions. To ensure accuracy of calculations, the two raters independently computed IRD scores for each of the studies and then compared their calculations. Initially, these two raters calculated their overall reliability for eight randomly selected studies included in the meta-analysis (30 % of the studies). For these first eight studies, inter-rater reliability was 83 %. While this initial reliability statistic was slightly lower than expected, the two raters met to resolve discrepancies through further inspection of data for each of the eight studies. It was discovered that the two raters differed on how to calculate scores that tied across phases (e.g., one data point of 20 % during baseline and one point of 20 % during intervention). For this study, such data points were considered as overlapping. Following this discovery, both raters independently recalculated scores for the first eight studies, resulting in an inter-rater reliability of 95 %. IRD scores then were calculated independently for the remaining studies. In addition to the two raters’ calculations, one independent reviewer (an advanced graduate student trained in calculating IRD) coded eight randomly selected studies. Overall, the independent reviewer demonstrated 100 % inter-rater agreement with the two raters.

Additionally, procedures were utilized in order to create confidence intervals (CI) for each of the IRD calculations. The current data were analyzed using NCSS Statistical Software (REF). Specifically, we conducted a test of two proportions with the option to include exact 84 % CIs based on bootstrap for IRD calculation. The 84 % CI was selected for judging the precision of IRD scores for the following reasons. First, an 84 % confidence limit is liberal enough to permit clinical decision-making (e.g., altering interventions) when such decisions are not high stakes (Ganz et al. 2012a, b). Second, using the 84 % CI is equivalent to making an inference test of differences at the p = 0.05 level (Schenker and Gentleman 2001; Payton et al. 2003).
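The rationale for the 84 % level can be illustrated numerically. The sketch below is not the NCSS procedure used in the study; it relies on a normal approximation and assumes two independent estimates with equal standard errors, and the function name is hypothetical. Under those assumptions, two confidence intervals that just touch correspond to a z statistic for the difference of √2 times the interval's critical value.

```python
from math import sqrt
from statistics import NormalDist


def implied_two_sided_p(ci_level):
    """Two-sided p-value implied when two CIs of this level just touch.

    Assumes independent, normally distributed estimates with equal
    standard errors (an illustrative simplification).
    """
    std_normal = NormalDist()
    z_ci = std_normal.inv_cdf(1 - (1 - ci_level) / 2)  # critical value of each CI
    z_diff = sqrt(2) * z_ci                            # z statistic for the difference
    return 2 * (1 - std_normal.cdf(z_diff))


print(round(implied_two_sided_p(0.84), 3))  # close to 0.05
print(round(implied_two_sided_p(0.95), 3))  # far stricter than 0.05
```

Under these assumptions, non-overlap of two 84 % intervals approximates a test at roughly the 0.05 level, whereas non-overlap of two 95 % intervals would be considerably more conservative.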

Results

Study Characteristics

The 28 studies selected for inclusion in this meta-analysis were published between 1995 and 2013 and measured the effects of computer-assisted interventions on a total of 93 participants with ASD. These studies appeared in a total of 13 journals, with approximately half of them (46 %) published in either the Journal of Autism and Developmental Disorders or Focus on Autism and Other Developmental Disabilities. All of the studies included in the meta-analysis employed single-subject research designs. Among these, 46 % used a multiple baseline design (n = 13), 25 % used a multiple probe design (n = 7), 14 % used an ABAB reversal design (n = 4), 7 % used a changing conditions design (n = 2), and 7 % used an alternating treatment design (n = 2). Inter-observer reliability was reported in 26 of the 28 studies (93 %), and intervention fidelity was measured in 21 studies (75 %). However, social validity was measured in only ten studies (36 %). A summary of the participants, research design, target skills, strategies utilized, and reliability/validity is presented in Table 1.

Participant and Intervention Characteristics

The 93 participants ranged in age from 3 years, 2 months to 18 years (mean age = 9 years, 5 months; SD = 3.53). Most of the study participants were boys (n = 77, 83 %) and elementary school age (6–11 years; n = 56, 60 %). There was a relatively equal representation of preschool (3–5 years; n = 16, 17 %), middle school (12–14 years; n = 15, 16 %), and high school (16–20 years; n = 6, 6 %) students in the overall sample. All of the studies analyzed included participants who had a diagnosis of autism (n = 86, 92 %), Asperger syndrome (n = 3, 3 %), or were categorized as ASD with no specification (n = 4, 4 %).

Each of the studies included in the meta-analysis targeted various characteristics of social, behavioral, and academic difficulties in children with ASD and were conducted within school-based settings. Specifically, seven of the studies targeted social behaviors (Bernard-Opitz et al. 2001; Cheng and Ye 2010; Hetzroni and Tannous 2004; Murdock et al. 2013; Sansosti and Powell-Smith 2008; Simpson et al. 2004; Whalen et al. 2006), nine studies targeted behavioral skills (Ayres et al. 2009; Bereznak et al. 2012; Cihak et al. 2010; Flores et al. 2012; Hagiwara and Smith-Myles 1999; Mancil et al. 2009; Mechling et al. 2006, 2009; Soares et al. 2009), and 12 studies targeted academic skills (Bosseler and Massaro 2003; Coleman-Martin et al. 2005; Ganz et al. 2014; Hetzroni and Shalem 2005; Pennington 2010; Schlosser and Blischak 2004; Simpson and Keen 2010; Smith 2013; Smith-Myles et al. 2007; Soares et al. 2009; Yaw et al. 2011). The computer-assisted interventions implemented within the studies ranged in length from 3 to 30 sessions. Most of the studies employed interventions that were of medium length or 11–20 sessions long (n = 16, 57 %), while brief (1–10 sessions; n = 4, 14 %) and long interventions (over 20 sessions; n = 8, 29 %) were less common.

Overall (Omnibus) Effects of CAI

Data from this study yielded 151 separate effect sizes from a total of 28 studies. Total mean IRD for all studies included within the meta-analysis was 0.61 CI84 [0.48, 0.74], indicating a moderate effect. That is, CAI intervention data showed a 61 % improvement rate from baseline to intervention phases on a range of outcomes, and we are reasonably certain the range of improvement is within 48 to 74 %. There was significant variation across studies, contributing to the lower average IRD calculation. Figure 1 illustrates the IRD and 84 % CIs for each CAI intervention and by individual study.

Variation in Effects by Targeted Outcomes

Differences in IRD scores also were examined relative to type of intervention. That is, separate IRD analyses were calculated across the three categories of dependent variables: academic skills, behavioral skills, and social skills. Analysis of academic skill outcomes yielded 73 separate effect sizes from a total of 13 studies. The total mean IRD value for interventions targeting academic skills was 0.66 CI84 [0.63, 0.69; moderate effect]. For studies targeting behavioral skills, a total of 35 separate effect sizes from seven studies yielded a mean IRD of 0.44 CI84 [0.38, 0.49; small effect]. Similarly, analysis of social skills outcomes yielded 43 separate effect sizes from a total of eight studies with a total mean IRD calculation of 0.29 CI84 [0.24, 0.33; small effect]. Taken together, results of analysis by type of intervention suggest that CAI may be more effective when targeting academic skills. Results from behavioral and social skills variables should be considered preliminary due to low numbers of studies evaluating these outcomes combined with the high level of variability of findings from individual studies (see Fig. 1). Figure 2 illustrates the overall IRD and 84 % CIs for targeted outcomes.

Variation in Effects by Age

Additional IRD calculations were conducted to examine differences across age levels of students (preschool, elementary, middle, and high). For preschool-aged students, 18 separate effect sizes from four studies yielded an IRD score of 0.43 CI84 [0.36, 0.49; small effect]. Elementary-aged students had 70 separate effect sizes from 11 studies. The mean IRD for elementary-aged students was 0.41 CI84 [0.37, 0.45; small effect]. For middle-school-aged students, 38 separate effect sizes from nine studies yielded an IRD of 0.39 CI84 [0.34, 0.44; small effect]. High-school-aged students had 24 separate effect sizes from four studies and yielded an overall IRD of 0.64 CI84 [0.57, 0.70; moderate effect]. Most of the studies included in this meta-analysis examined the effects of CAI with elementary-aged students (n = 11), and only a handful of studies examined preschool (n = 4) and high school (n = 4) populations. As such, the calculations should be interpreted with caution. Figure 3 illustrates overall IRD and 84 % CIs by age.

Discussion

Descriptively, the extant literature appears to comply with the guidelines offered by Horner et al. (2005) for determining if a practice is evidence-based. Specifically, the results of CAI were synthesized across 28 peer-reviewed studies conducted by 25 primary researchers across 15 different geographical locations (11 states and 4 countries), and cumulatively included 93 participants. In addition, all of the studies included in this analysis demonstrated experimental control, the majority of studies (n = 26; 93 %) provided a measure of inter-observer agreement, and most (n = 21; 75 %) measured treatment integrity. Unfortunately, only ten studies (36 %) assessed social validity. Despite the failure of the majority of studies to collect data on intervention acceptability, the extant literature appears to comply with the guidelines offered by Horner et al., permitting CAI to be considered an evidence-based approach.

Despite the alignment with the aforementioned features, results of IRD calculations suggest that CAI demonstrates only a moderate impact to students with ASD. As such, we provide a less than enthusiastic endorsement of CAI and posit that such interventions possess the potential to impact students with ASD positively, but likely are impacted by a host of additional factors that account for effects. Such a tempered consideration largely is due to the possibility of additional factors/variables (i.e., participant and intervention characteristics) that may account for effects. First, mean IRD calculations were highly variable (ranging from −0.05 to 1.00). Although many of the studies included within this meta-analysis demonstrated effective results, a handful of studies (e.g., Bernard-Opitz et al. 2001; Hetzroni and Tannous 2004; Mancil, et al. 2009; Murdock et al. 2013) demonstrated questionable outcomes despite having a good experimental control. Such variability suggests that CAI may be effective for some students and not for others. Given this information, it is possible that CAI is suited to participants with certain characteristics (i.e., increased language and cognitive functioning; ability to understand basic social behaviors). It is worthy to note that while all students in the studies reviewed were identified as being on the autism spectrum, quantification of the degree or severity of autism-related symptoms and academic, behavioral, and social skills difficulties rarely was provided. Likewise, the manner in which computer technologies were used in the studies varied greatly and may have influenced the results. In some studies, computers were used as an augment to other forms of instruction. In other studies, participants were taught skills through independent interactions with computers. It is likely that certain individuals were better able to use computers independently and others needed a greater level of support. 
Again, specific characteristics of participants may be an important variable impacting the effectiveness of CAI. As such, the claim that CAI is an effective strategy should be tempered until future research provides more conclusive findings based on thorough analysis of multiple participant and intervention variables.
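To make the IRD values discussed above more concrete, the following is a minimal sketch of how an improvement rate difference might be computed for a single baseline/treatment comparison. It follows the commonly described procedure of removing the fewest data points needed to eliminate overlap between phases; it is not the code used in this meta-analysis. The function name `improvement_rate_difference`, the assumption that higher scores indicate improvement, and the rule of taking the first minimal solution when several exist are all illustrative choices.

```python
from typing import Sequence


def improvement_rate_difference(
    baseline: Sequence[float], treatment: Sequence[float]
) -> float:
    """Sketch of IRD for a behavior where higher scores mean improvement.

    Assumption: when several minimal-removal solutions exist, the one
    with the lowest cut point is used (an arbitrary, illustrative rule).
    """
    if not baseline or not treatment:
        raise ValueError("both phases need at least one data point")

    # Candidate cut points: every observed value, plus +inf
    # (which corresponds to removing every treatment point).
    cuts = sorted(set(baseline) | set(treatment)) + [float("inf")]

    best = None  # (total_removed, removed_baseline, removed_treatment)
    for cut in cuts:
        # Baseline points at or above the cut overlap with treatment.
        removed_b = sum(1 for x in baseline if x >= cut)
        # Treatment points below the cut overlap with baseline.
        removed_t = sum(1 for x in treatment if x < cut)
        candidate = (removed_b + removed_t, removed_b, removed_t)
        if best is None or candidate[0] < best[0]:
            best = candidate

    _, removed_b, removed_t = best
    # Improvement rate in treatment: share of points that were not removed.
    rate_treatment = (len(treatment) - removed_t) / len(treatment)
    # Improvement rate in baseline: share of points that had to be removed.
    rate_baseline = removed_b / len(baseline)
    return rate_treatment - rate_baseline


# Hypothetical data: one baseline point (7) overlaps the treatment phase.
print(improvement_rate_difference([2, 3, 7, 3], [5, 6, 7, 6, 8]))  # 0.75
```

In this hypothetical series, removing the single overlapping baseline point eliminates all overlap, so the treatment improvement rate is 5/5 and the baseline improvement rate is 1/4, giving an IRD of 0.75. A study-level mean IRD would average such values across participants or phase contrasts.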

Second, results of this analysis indicate that outcomes of CAI were mixed depending on the type of skill targeted by the intervention. That is, CAI appears to be more effective for teaching academic skills to students with ASD than for improving behavioral and/or social skills. The fact that CAI demonstrated limited effectiveness for teaching behavioral and social skills may not be surprising given the highly variable nature of behavioral and social interactions. It may be that students learned the skills through CAI but failed to apply them within real-world contexts. Regardless, this finding suggests that CAI does not demonstrate the same outcomes across different domains of skill acquisition and/or improvement. As a result, more conclusive evidence is needed to make a full determination of whether or not CAI is an evidence-based modality for all levels of instruction.

Third, much of the research combines CAI with the use of additional intervention strategies (i.e., self-monitoring and consequent strategies). As such, it becomes difficult to ascertain which element (e.g., prompting, reinforcement, or CAI) is the critical component of the intervention, or whether a combination of approaches has the greatest effect. While there is no clear evidence in the present analysis of a difference between studies that used CAI alone and those that employed other strategies, several confounding variables were evident. The confounding of CAI with other strategies is a problem that should be overcome. Specifically, research is needed examining the extent to which CAI individually contributes to outcomes.

From the preceding discussion, it is apparent that the available evidence suggests CAI is a promising strategy for supporting skill acquisition of students with ASD in school-based contexts. However, it is unclear from the present analysis whether CAI is an evidence-based strategy. In a prior descriptive review, Pennington (2010) suggested that CAI may have promise as an effective literacy intervention for students with autism. The results of our analysis support Pennington’s claim and contribute added knowledge regarding the effectiveness of CAI for promoting behavioral and social skills in children with ASD. Overall, the results of this study provide data suggesting that CAI has noteworthy potential for improving the academic, behavioral, and social outcomes of students with ASD, but is not yet an evidence-based strategy.

Limitations of Current Analysis

The results of this meta-analysis should be viewed as preliminary due to several limitations. First, application of rigorous inclusion criteria resulted in a limited sample size. Although 28 studies are sufficient, such a limited number of studies precludes a thorough analysis of some of the variables of interest (e.g., specific diagnoses, cognitive and language levels, setting characteristics, and intervention features). Second, interpretation of the results was limited by the method of analysis. That is, the analysis was based on a subset of studies that yielded IRD data. Third, interpretation of the results was limited further by the degree of variation in design and implementation of CAI. While some of the research reviewed demonstrated clear examples of well-controlled studies, much of the extant literature combines CAI with the use of additional intervention strategies (i.e., self-monitoring and consequent strategies). This raises the possibility that additional design characteristics may be important to the success of the intervention. Fourth, all studies located and included within this analysis were conducted within school-based contexts. Therefore, claims that CAI is an effective approach outside of school-based contexts cannot be made.

Future Research Recommendations

Several recommendations for future research examining the effectiveness of CAI for students with ASD emerged from this meta-analysis. First, future studies should be more methodologically robust and should include methods for data collection beyond the intervention phase. Specifically, future research should include extended data collection on maintenance and generalization of skills, social validity of the intervention, and treatment fidelity. The inclusion of such elements of data collection and subsequent analysis would allow for more definitive claims of the efficacy of CAI. Second, future studies must provide more detailed descriptions of participants. Of particular importance is information pertaining to cognitive and language ability, severity of deficits, specific (and confirmed) diagnosis of participants on the autism spectrum (e.g., severity), and setting characteristics. More adequate participant descriptions would make it easier to determine whether participant-related variables moderate the effect of CAI, in addition to allowing the creation of profiles of “responders” and “non-responders” to interventions. Third, the present meta-analysis included CAI within school-based contexts only. Future research should utilize similar methodology to examine the outcomes of computer-assisted interventions in other settings, such as clinic-based settings and home/community environments. Fourth, future research should further examine the overall effectiveness of CAI with ASD populations using more rigorous methods of data analysis (e.g., hierarchical linear modeling) that make it possible to examine moderating variables.

References

References marked with an asterisk denote studies included in the meta-analysis.

Altman, D. G. (1999). Practical statistics for medical research. Bristol: Chapman & Hall.

Arizona Software (2008). GraphClick for Mac [Graph digitizing software]. Phoenix, AZ: Author.

*Ayres, K. M., Maguire, A., & McClimon, D. (2009). Acquisition and generalization of chained task taught with computer based video instruction to children with autism. Education and Training in Developmental Disabilities, 44, 493–508.

*Bereznak, S., Ayres, K. M., Mechling, L. C., & Alexander, J. L. (2012). Video self-prompting and mobile technology to increase daily living and vocational independence for students with autism spectrum disorders. Journal of Developmental and Physical Disabilities, 24, 269–285.

*Bernard-Opitz, V., Sriram, N., & Nakhoda-Sapuan, S. (2001). Enhancing social problem solving in children with autism and normal children through computer-assisted instruction. Journal of Autism and Developmental Disorders, 31, 377–398.

*Bosseler, A., & Massaro, D. W. (2003). Development and evaluation of a computer-animated tutor for vocabulary and language learning in children with autism. Journal of Autism and Developmental Disorders, 33, 653–672.

*Burton, C. E., Anderson, D. H., Prater, M. A., & Dyches, T. T. (2013). Video self-modeling on an iPad to teach functional math skills to adolescents with autism and intellectual disability. Focus on Autism and Other Developmental Disabilities, 28, 67–77.

Charlop-Christy, M. J., & Daneshvar, S. (2003). Using video modeling to teach perspective taking to children with autism. Journal of Positive Behavior Interventions, 5, 12–21.

*Cheng, Y., & Ye, J. (2010). Exploring the social competence of students with autism spectrum conditions in a collaborative virtual learning environment: The pilot study. Computers and Education, 54, 1068–1077.

*Cihak, D., Fahrenkrog, C., Ayres, K. M., & Smith, C. (2010). The use of video modeling via a video iPod and a system of least prompts to improve transitional behaviors for students with autism spectrum disorders in the general education classroom. Journal of Positive Behavior Interventions, 12, 103–115.

*Coleman-Martin, M. B., Heller, K. W., Cihak, D. F., & Irvine, K. L. (2005). Using computer-assisted instruction and the nonverbal reading approach to teach word identification. Focus on Autism and Other Developmental Disabilities, 20, 80–90.

*Flores, M., Musgrove, K., Renner, S., Hinton, V., Strozier, S., Franklin, S., & Hil, D. (2012). A comparison of communication using the Apple iPad and a picture-based system. Augmentative and Alternative Communication, 28, 74–84.

*Ganz, J. B., Boles, M. B., Goodwyn, F. D., & Flores, M. M. (2014). Efficacy of handheld electronic visual supports to enhance vocabulary in children with ASD. Focus on Autism and Other Developmental Disabilities, 29, 3–12.

Ganz, J. B., Davis, J. L., Lund, E. M., Goodwyn, F. D., & Simpson, R. L. (2012a). Meta-analysis of PECS with individuals with ASD: Investigation of targeted versus non-targeted outcomes, participant characteristics, and implementation phase. Research in Developmental Disabilities, 33, 406–418.

Ganz, J. B., Earles-Vollrath, T. L., Heath, A. K., Parker, R. I., Rispoli, M. J., & Duran, J. B. (2012b). A meta-analysis of single case research studies on aided augmentative and alternative communication systems with individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 42, 60–74.

*Hagiwara, T., & Myles, B. S. (1999). A multimedia social story intervention: Teaching skills to children with autism. Focus on Autism and Other Developmental Disabilities, 14, 82–95.

*Hetzroni, O. E., & Shalem, U. (2005). From logos to orthographic symbols: A multilevel fading computer program for teaching nonverbal children with autism. Focus on Autism and Other Developmental Disabilities, 24, 201–212.

*Hetzroni, O. E., & Tannous, J. (2004). Effects of a computer-based intervention program on the communicative functions of children with autism. Journal of Autism and Developmental Disorders, 34, 95–113.

Higgins, J. P. T., & Green, S. (2009). Cochrane Handbook for Systematic Reviews of Interventions. The Cochrane Collaboration. Available from www.cochrane-handbook.org.

Horner, R. H., Carr, E. G., Halle, J., McGee, G., Odom, A., & Wolery, M. (2005). The use of single subject research to identify evidence-based practice in special education. Exceptional Children, 71, 165–179.

Individuals with Disabilities Education Improvement Act of 2004, 20 U.S.C.§ 614 et seq.

Iovannone, R., Dunlap, G., Huber, H., & Kincaid, D. (2003). Effective educational practices for students with autism spectrum disorders. Focus on Autism and Other Developmental Disabilities, 18, 150–165.

*Jowett, E. L., Moore, D. W., & Anderson, A. (2012). Using an iPad-based video modeling package to teach numeracy skills to a child with an autism spectrum disorder. Developmental Neurorehabilitation, 15, 304–312.

Kavale, K. A. (2001). Meta-analysis: A primer. Exceptionality, 9, 177–183.

Kazdin, A. E. (1982). Single-case experimental designs: Methods for clinical and applied settings. New York: Oxford University Press.

Kinney, E. M., Vedora, J., & Stromer, R. (2003). Computer-presented video models to teach generative spelling to a child with an autism spectrum disorder. Journal of Positive Behavior Interventions, 5, 22–29.

Ma, H. (2009). Effectiveness of intervention on the behavior of individuals with autism: a meta-analysis using percentage of data points exceeding the median of baseline phase (PEM). Behavior Modification, 33, 339–359.

*Mancil, R. G., Haydon, T., & Whitby, P. (2009). Differentiated effects of paper and computer-assisted Social Stories™ on inappropriate behavior in children with autism. Focus on Autism and Other Developmental Disabilities, 24, 205–215.

Massaro, D. W., & Bosseler, A. (2006). The importance of the face in a computer-animated tutor for vocabulary learning by children with autism. Autism, 10, 495–510.

*Mechling, L. C., Gast, D. L., & Cronin, B. A. (2006). The effects of presenting high-preference items, paired with choice, via computer-based video programming on task completion of students with autism. Focus on Autism and Other Developmental Disabilities, 21, 7–13.

Mechling, L. C., Gast, D. L., & Krupa, K. (2007). Impact of SMART board technology: an investigation of sight word reading and observational learning. Journal of Autism and Developmental Disorders, 37, 1869–1882.

*Mechling, L. C., Gast, D. L., & Seid, N. H. (2009). Using a personal digital assistant to increase independent task completion by students with autism spectrum disorders. Journal of Autism and Developmental Disorders, 39, 1420–1434.

Miller, F. G., & Lee, D. L. (2013). Do functional behavioral assessments improve intervention effectiveness for students diagnosed with ADHD? A single-subject meta-analysis. Journal of Behavioral Education, 22, 253–282.

Moore, D., McGrath, P., & Thorpe, J. (2000). Computer-aided learning for people with autism: a framework for research and development. Innovations in Education and Training International, 37, 218–228.

*Murdock, L. C., Ganz, J., & Crittendon, J. (2013). Use of an iPad play story to increase play dialogue of preschoolers with autism spectrum disorders. Journal of Autism and Developmental Disorders, 43, 2174–2189.

Nally, B., Houlton, B., & Ralph, S. (2000). The management of television and video by parents of children with autism. Autism, 4, 331–337.

National Research Council. (2001). Educating children with autism. Washington, DC: National Academy Press.

No Child Left Behind Act of 2001, 20 U.S.C.§ 6301 et seq.

Parker, R. I., Hagan-Burke, S., & Vannest, K. J. (2007). Percent of all non-overlapping data (PAND): An alternative to PND. The Journal of Special Education, 40, 194–204.

Parker, R. I., Vannest, K. J., & Brown, L. (2009). The improvement rate difference for single-case research. Exceptional Children, 75, 135–150.

Parker, R. I., Vannest, K. J., & Davis, J. L. (2014). Non-overlap analysis for single-case research. In T. R. Kratochwill & J. R. Levin (Eds.), Single-case intervention Research: Methodological and statistical advances (pp. 127–151). Washington, D.C.: American Psychological Association.

Payton, M. E., Greenstone, M. H., & Schenker, N. (2003). Overlapping confidence intervals or standard error intervals: What do they mean in terms of statistical significance? Journal of Insect Science, 3, 1–6.

*Pennington, R. C. (2010). Computer-assisted instruction for teaching academic skills to students with autism spectrum disorders: A review of literature. Focus on Autism and Other Developmental Disabilities, 25, 239–248.

Pennington, R. C., Stenhoff, D. M., Gibson, J., & Ballou, K. (2012). Using simultaneous prompting to teach computer-based story writing to a student with autism. Education and Treatment of Children, 35, 389–406.

Quill, K. (1997). Instructional considerations for young children with autism: the rationale for visually cued instruction. Journal of Autism and Developmental Disorders, 27, 697–714.

Ramdoss, S., Mulloy, A., Lang, R., O’Reilly, M., Sigafoos, J., Lancioni, G., Didden, R., & Zein, F. E. (2011a). Use of computer-based interventions to improve literacy skills in students with autism spectrum disorders: a systematic review. Research in Autism Spectrum Disorders, 5, 1306–1318.

Ramdoss, S., Lang, R., Mulloy, A., Franco, J., O’Reilly, M., Didden, R., & Lancioni, G. (2011b). Use of computer-based interventions to teach communication skills to children with autism spectrum disorders: a systematic review. Journal of Behavioral Education, 20, 55–76.

Reichow, B., Volkmar, F. R., & Cicchetti, D. V. (2008). Development of the evaluative methods for evaluating and determining evidence-based practices in autism. Journal of Autism and Developmental Disorders, 38, 1311–1319.

Risley, T. R., & Wolf, M. M. (1972). Strategies for analyzing behavioral change over time. In J. Nesselroade & H. Reese (Eds.), Life-span developmental psychology: Methodological issues (pp. 175–183). New York: Academic.

*Sansosti, F. J., & Powell-Smith, K. A. (2008). Using computer-presented social stories and video models to increase the social communication skills of children with higher-functioning autism spectrum disorders. Journal of Positive Behavior Interventions, 10, 162–178.

Sansosti, F. J., Powell-Smith, K. A., & Cowan, R. J. (2010). High functioning autism/Asperger syndrome in schools: Assessment and Intervention. New York: Guilford.

Schenker, N., & Gentleman, J. F. (2001). On judging the significance of differences by examining overlap between confidence intervals. The American Statistician, 55, 182–186.

*Schlosser, R. W., & Blischak, D. M. (2004). Effects of speech and print feedback on spelling in children with autism. Journal of Speech, Language, and Hearing Research, 47, 848–862.

Scruggs, T. E., & Mastropieri, M. A. (1998). Summarizing single-subject research: Issues and applications. Behavior Modification, 22, 221–242.

Scruggs, T. E., & Mastropieri, M. A. (2001). How to summarize single-participant research: Ideas and applications. Exceptionality, 9, 227–244.

Shane, H. C., & Albert, P. D. (2008). Electronic screen media for persons with autism spectrum disorders: Results of a survey. Journal of Autism and Developmental Disorders, 38, 1499–1508.

Shipley-Benamou, R., Lutzker, J. R., & Taubman, M. (2002). Teaching daily living skills to children with autism through instructional video modeling. Journal of Positive Behavior Interventions, 4, 165–175.

*Simpson, K., & Keen, D. (2010). Teaching young children with autism graphic symbols embedded within an interactive song. Journal of Developmental and Physical Disabilities, 22, 165–177.

*Simpson, A., Langone, J., & Ayres, K. M. (2004). Embedded video and computer based instruction to improve social skills for students with autism. Education and Training in Developmental Disabilities, 39, 240–252.

Simpson, R. L. (2008). Children and youth with autism spectrum disorders: the search for effective methods. Focus on Exceptional Children, 40, 1–14.

*Smith, B. R., Spooner, F., & Wood, C. L. (2013). Using embedded computer-assisted explicit instruction to teach science to students with autism spectrum disorder. Research in Autism Spectrum Disorders, 7, 433–443.

*Smith-Myles, B., Ferguson, H., & Hagiwara, T. (2007). Using a personal digital assistant to improve the recording of homework assignments by an adolescent with Asperger Syndrome. Focus on Autism and Other Developmental Disabilities, 22, 96–99.

*Soares, D. A., Vannest, K. J., & Harrison, J. (2009). Computer aided self-monitoring to increase academic production and reduce self-injurious behavior in a child with autism. Behavioral Interventions, 24, 171–183.

Suen, H. K., & Ary, D. (1989). Analyzing quantitative behavior observation data. Hillsdale: Erlbaum.

Vannest, K. J., Davis, J. L., Davis, C. R., Mason, B. A., & Burke, M. D. (2010). Effective intervention for behavior with a daily behavior report card: a meta-analysis. School Psychology Review, 39, 654–672.

Wainer, A. L., & Ingersoll, B. R. (2011). The use of innovative computer technology for teaching social communication to individuals with autism spectrum disorders. Research in Autism Spectrum Disorders, 5, 96–107.

Wetherby, A., & Prizant, B. (2000). Autism spectrum disorders: a transactional developmental perspective. Baltimore: Brookes.

*Whalen, C., Liden, L., Ingersoll, B., Dallaire, E., & Liden, S. (2006). Positive behavioral changes associated with the use of computer-assisted instruction for young children. Journal of Speech and Language Pathology and Applied Behavior Analysis, 1, 11–26.

Whitehurst, G. (2004). Wisdom of the head, not the heart. Distinguished Public Policy Lecture at Northwestern University, Institute for Policy Research. Evanston, IL.

Wolery, M., Busick, M., Reichow, B., & Barton, E. E. (2008). Comparison of overlap methods for quantitatively synthesizing single subject data. The Journal of Special Education, 44, 18–28.

*Yaw, J. S., Skinner, C. H., Parkhurst, J., Taylor, C. M., Booher, J., & Chambers, K. (2011). Extending research on a computer-based sight-word reading intervention to a student with autism. Journal of Behavioral Education, 20, 44–54.

Yell, M. L., & Drasgow, E. (2000). Litigating a free appropriate public education: the Lovaas hearings and cases. The Journal of Special Education, 33, 205–214.

Cite this article

Sansosti, F.J., Doolan, M.L., Remaklus, B. et al. Computer-Assisted Interventions for Students with Autism Spectrum Disorders within School-Based Contexts: A Quantitative Meta-Analysis of Single-Subject Research. Rev J Autism Dev Disord 2, 128–140 (2015). https://doi.org/10.1007/s40489-014-0042-5

DOI: https://doi.org/10.1007/s40489-014-0042-5